- 1、下载文档前请自行甄别文档内容的完整性,平台不提供额外的编辑、内容补充、找答案等附加服务。

- 2、"仅部分预览"的文档,不可在线预览部分如存在完整性等问题,可反馈申请退款(可完整预览的文档不适用该条件!)。

- 3、如文档侵犯您的权益,请联系客服反馈,我们会尽快为您处理(人工客服工作时间:9:00-18:30)。

N

ξi ≥ 0, for all i

C : tradeoff between complexity of the machine

and the number of nonseparable points

24

Dual Problem

Given the training sample {( xi , di )}iN 1 , find the Lagrange multipliers {αi }iN 1 that = = maximize the objective function 1 N N Q (α ) = ∑αi − ∑∑αiα j di d j xiT x j 2 i =1 j =1 i =1 subject to the constraints (1) ∑αi di = 0

wT w = (∑ α i di xi )T (∑ α i di xi ) = ∑∑ α iα j di d j xiT x j

i =1 i =1

N

N

N

N

N

i =1 j =1

N

α i di wT xi = ∑ α i di (∑ α j d j x j )T xi = ∑∑ α i diα j d j xiT x j ∑

⇒

Φ (w ',α ) ≤ Φ (w ',α ' ) ≤ Φ (w,α ' )

why we find a saddle point

Theorem:

( w' , α ' ) is a saddle point of if

Φ ( w, α ) = f ( w ) − ∑ α i g i ( w )

i =1 N

N

∑α d

i i=1

N

i

=0 for i = 1, 2,..., N

(2)α i ≥ 0

H (i, j )

20

Some discussions

1. Q(α ) depends only on the input patterns in the form of a set of dot products, {xiT x j }(N, j ) =1 i 2. support vectors determine the hyperplane

w = ∑ α i di xi

i =1

N

∑α d

i =1 i

N

i

=0

18

Solve the dual problem (ctd.)

N 1 T J ( w , b, α ) = w w − ∑ α i [di ( w T xi + b) − 1] 2 i =1

∑α d

i =1 i

N

i

=0

N N N 1 T = w w − ∑ α i di wT xi − b∑ α i di + ∑ α i 2 i =1 i =1 i =1

N 1 T Φ ( w, α ) = J ( w , b, α ) = w w − ∑ α i [di ( w T xi + b) − 1] 2 i =1

dual function:

Q(α ) = min J ( w, b, α )

w ,b

∂J ( w, b, α ) =0 ∂w ∂J ( w, b, α ) =0 ∂b

α ≥0 α ≥0

i =1 N

min L( w)

w

min max Φ ( w, α )

w

α ≥0

Q(α ) = min Φ ( w, α )

w

max Q(α )

α ≥0

max min Φ ( w, α )

α ≥0

w

we prefer to solve the dual problem!

17

Solve the dual problem

T

N i =1

(P)

for d = +1 i for d = −1 i

di ( w xi + b) ≥ 1 for i = 1, 2,..., N

gi (w)=di ( w xi + b) − 1 ≥ 0 for i = 1, 2,..., N 10

T

Φ ( w, α )

Lagrange function

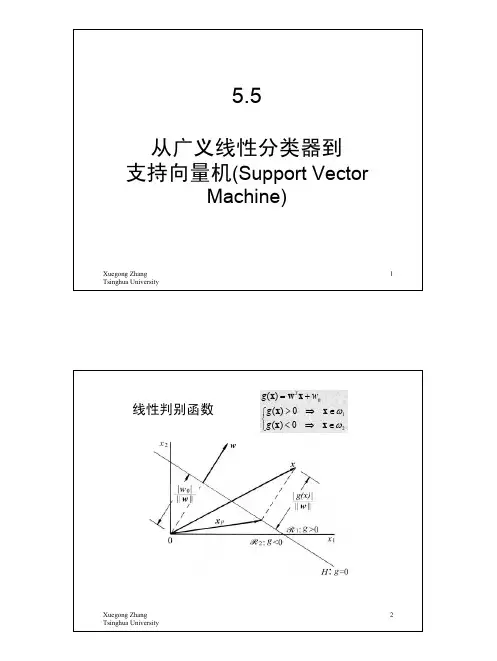

wo Decomposition of x: x = x p + r wo

T g ( x) = wo x + bo = r wo

⇒

g ( x) r= wo

7

Linear classfication

Training sample set T = {(xi , d i )}iN 1 =

⎧ d i = + 1, positive patterns ⎨ ⎩ d i = − 1, negative patterns

α

w w

α

holds if and only if there exists a pair ( w' , α ' ) satisfies the saddle-point condition for Φ Proof: (omitted)

“Stephen G.Nash & Ariela Sofer Linear and Nonlinear Programming” pp468

' ' ' i =1 i =1 ' i ' i =1 N N N

let α = 0

∑ α gi ( w ) ≤ 0

i =1 ' i '

N

N

α i' gi ( w' ) = 0 ∑

i =1

N

consider the second inequality

f ( w ) ≤ f ( w) − ∑ α i' gi ( w)

' ' i =1 ' i ' i =1

N

N

let

' ' α1 = α1' + 1, α 2 = α 2 ,...α N = α N

g i ( w' ) ≥ 0

14

w

'

is a feasible solution of (P)

why we find a saddle point (ctd.)

f ( w ) − ∑ α i gi (w ) ≤ f ( w ) − ∑ α gi (w ) ≤ f ( w) − ∑ α i' gi (w)

Margin of separation

2 ρ = 2r = wo

9

Optimization problem

Training sample set T = {(xi , d i )}

1 T min f ( w ) = w w 2

subject to ⎧ wT x + b ≥ +1 ⎨ T i ⎩ w x + b ≤ −1 i

max min Φ ( w, α ) = Φ ( w' , α ' ) = min max Φ ( w, α )

α

w w

α

16

Dual Problem

primal function primal problem dual function dual problem

L( w) = max Φ ( w, α ) = max[ f ( w) − ∑ α i gi ( w)]

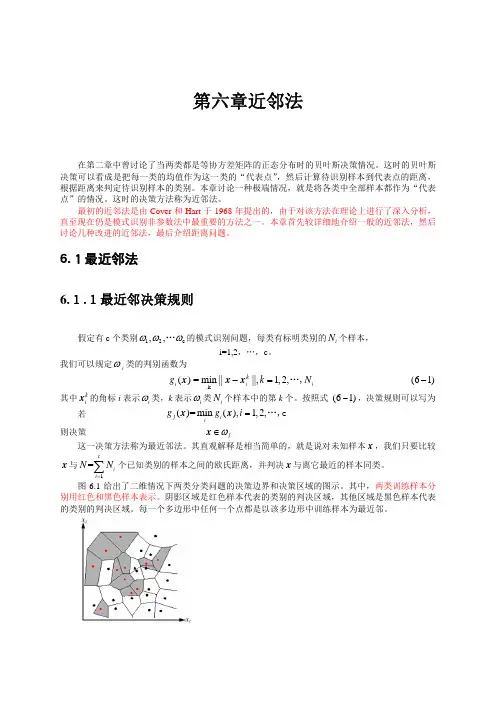

Optimal Separating hyperplane

3

Optimal Hyperplane

4

Linear classfication

Training sample set T = {(xi , d i )}iN 1 =

⎧ d i = + 1, positive patterns ⎨ ⎩ d i = − 1, negative patterns

i =1 i =1 j =1 i =1 j =1

19

N

N

N

Dual Problem

We may now state the dual problem:

Given the training sample {( xi , di )}iN 1 , find the Lagrange multipliers {α i }iN 1 that = = maximize the objective function 1 N N Q (α ) = ∑ α i − ∑∑ α iα j di d j xiT x j 2 i =1 j =1 i =1 subject to the constraints (1)

' i =1

gi ( w) ≥ 0 if w is feasible

f ( w' ) ≤ f ( w)

w

'

is optimal solution of (P)

15

Strong Duality

Strong Duality: the condition

max min Φ ( w, α ) = min max Φ ( w, α )

Support Vector Machine

张长水 清华大学自动化系

1

Outline

Linearly separable patterns Linearly non-separable patterns Nonlinear case Some examples

2

Linearly separable case

∑ α g (w) = 0

i =1 i i

_ _ T _