并行计算(中科大讲义)

- 格式:ppt

- 大小:8.40 MB

- 文档页数:617

GPU Architecture in detail and PerformanceOptimization (Part II)Bin ZHOU USTCAutumn, 20131 © 2012, NVIDIAAnnouncements •The Following Classes:–11/23 Review and Project Review–11/30 Final Exam + Project–12/07 12/14 Project–12/21 Project Defense–12/28 Or after that Course Close. •Project Source Code + Report + PPT •Important Time: Due to: 2013/12/18 24:00Contents•Last lecture Review + Continue•Optimization + Kepler New Things•Tools for Project3 © 2012, NVIDIAOptimizationMake the best or most effective use of asituation or resourceLast Lecture•General guideline•Occupancy Optimization•Warp branch divergence•Global memory access•Shared memory accessOutline•General guideline II•CPU-GPU Interaction Optimization•Kepler in detailTools•Winscp–Copy files from/to remote servers•Notepad++–Edit source files (with keyword highlighting)GENERAL GUIDELINE II8 © 2012, NVIDIAKernel Optimization WorkflowFind LimiterCompare topeak GB/s Memory optimization Compare topeak inst/sInstructionoptimizationConfigurationoptimizationMemory boundInstructionboundLatencybound Done!<< <<~ ~General Optimization Strategies: Measurement•Find out the limiting factor in kernel performance –Memory bandwidth bound (memory optimization)–Instruction throughput bound (instruction optimization) –Latency bound (configuration optimization)•Measure effective memory/instruction throughputMemory Optimization•If the code is memory-bound and effective memory throughput is much lower than the peak•Purpose: access only data that are absolutely necessary•Major techniques–Improve access pattern to reduce wasted transactions–Reduce redundant access: read-only cache, shared memoryInstruction Optimization•If you find out the code is instruction bound–Compute-intensive algorithm can easily become memory-bound if not careful enough–Typically, worry about instruction optimization after memory and execution configuration optimizations•Purpose: reduce instruction count–Use less instructions to get the same job done•Major techniques–Use high throughput instructions (ex. wider load)–Reduce wasted instructions: branch divergence, reduce replay (conflict), etc.Latency Optimization•When the code is latency bound–Both the memory and instruction throughputs are far from the peak•Latency hiding: switching threads–A thread blocks when one of the operands isn’t ready•Purpose: have enough warps to hide latency•Major techniques: increase active warps, increase ILPCPU-GPU INTERACTION14 © 2012, NVIDIAMinimize CPU-GPU data transferHost<->device data transfer has much lower bandwidth than global memory access.16 GB/s (PCIe x16 Gen3) vs 250 GB/s & 3.95 Tinst/s (GK110)Minimize transferIntermediate data can be allocated, operated, de-allocated directly on GPU Sometimes it’s even better to recompute on GPUMove CPU codes to GPU that do not have performance gains if it can reduce data transferGroup transferOne large transfer much better than many small onesOverlap memory transfer with computationPCI Bus 1.Copy input data from CPU memory to GPUmemoryPCI Bus 1.Copy input data from CPU memory to GPUmemory2.Load GPU code and execute itPCI Bus 1.Copy input data from CPU memory to GPUmemory2.Load GPU code and execute it3.Copy results from GPU memory to CPUmemory•T total=T HtoD+T Exec+T DtoH •More Overlap?HtoD Exec DtoH Stream 1HD1 HD2 E1 E2 DH1 DH2 Stream 2cudaStreamCreate(&stream1);cudaMemcpyAsync(dst1, src1, size, cudaMemcpyHostToDevice, stream1);kernel<<<grid, block, 0, stream1>>>(…);cudaMemcpyAsync(dst1, src1, size, stream1);cudaStreamSynchronize(stream1);cudaStreamCreate(&stream1);cudaStreamCreate(&stream2);cudaMemcpyAsync(dst1, src1, size, cudaMemcpyHostToDevice, stream1); cudaMemcpyAsync(dst2, src2, size, cudaMemcpyHostToDevice,stream2);kernel<<<grid, block, 0, stream1>>>(…);kernel<<<grid, block, 0, stream2>>>(…);cudaMemcpyAsync(dst1, src1, size, cudaMemcpyDeviceToHost, stream1); cudaMemcpyAsync(dst2, src2, size, cudaMemcpyDeviceToHost, stream2);cudaStreamSynchronize(stream1);cudaStreamSynchronize(stream2);KEPLER IN DETAIL23 © 2012, NVIDIAKepler•NVIDIA Kepler–1.31 tflops double precision–3.95 tflops single precision–250 gb/sec memorybandwidth–2,688 Functional Units(cores)•~= #1 on Top500 in 1997- KeplerKepler GK110 SMX vs Fermi SM3x perfPower goes down!New ISA Encoding: 255 Registers per Thread•Fermi limit: 63 registers per thread–A common Fermi performance limiter–Leads to excessive spilling•Kepler : Up to 255 registers per thread–Especially helpful for FP64 appsHyper-Q•Feature of Kepler K20 GPUs to increase application throughput by enabling work to be scheduled onto the GPU in parallel •Two ways to take advantage–CUDA Streams – now they really are concurrent –CUDA Proxy for MPI – concurrent CUDA MPIprocesses on one GPUBetter Concurrency SupportWork Distributor32 active gridsStream Queue Mgmt C B AR Q PZ Y XGrid Management UnitPending & Suspended Grids 1000s of pending gridsSMX SMX SMX SMXSM SM SM SM Work Distributor16 active gridsStream Queue MgmtC B AZ Y XR Q PCUDAGeneratedWorkFermiKepler GK110Fermi ConcurrencyFermi allows 16-way concurrency –Up to 16 grids can run at once–But CUDA streams multiplex into a single queue –Overlap only at stream edges P<<<>>> ;Q<<<>>> ;R<<<>>> A<<<>>> ; B<<<>>> ;C<<<>>> X<<<>>> ;Y<<<>>> ; Z<<<>>> Stream 1Stream 2Stream 3Hardware Work QueueA--B--C P--Q--R X--Y--ZKepler Improved ConcurrencyP<<<>>> ; Q<<<>>> ; R<<<>>>A <<<>>>;B <<<>>>;C<<<>>>X <<<>>>;Y <<<>>>; Z<<<>>>Stream 1Stream 2Stream 3Multiple Hardware Work QueuesA--B--CP--Q--R X--Y--ZKepler allows 32-way concurrencyOne work queue per stream Concurrency at full-stream level No inter-stream dependenciesCPU ProcessesShared GPUE FDCBACPU ProcessesShared GPUE FDCBACPU ProcessesShared GPUE FDCBACPU ProcessesShared GPUE FDCBACPU ProcessesShared GPUE FDCBACPU ProcessesShared GPUE FDCBACPU ProcessesShared GPUE FDCBAHyper-Q: Simultaneous MultiprocessE FDCBACPU ProcessesShared GPUCUDA ProxyClient – Server Software SystemWithout Hyper-QTime100500 G P U U t i l i z a t i o n % A B C D E FWith Hyper-Q Time 10050 0 G P U U t i l i z a t i o n % A A ABB BC CC D DDE E EF F FWhat is Dynamic Parallelism?The ability to launch new kernels from the GPU –Dynamically - based on run-time data–Simultaneously - from multiple threads at once–Independently - each thread can launch a different gridCPU GPU CPU GPU Fermi: Only CPU can generate GPU work Kepler: GPU can generate work for itselfCPU GPU CPU GPUWhat Does It Mean?Autonomous, Dynamic Parallelism GPU as Co-ProcessorNew Types of Algorithms•Recursive Parallel Algorithms like Quick sort •Adaptive Mesh Algorithms like Mandelbrot CUDA TodayCUDA on KeplerComputational Powerallocated to regions of interestGPU Familiar Programming Model__global__ void B(float *data) {do_stuff(data);X <<< ... >>> (data);Y <<< ... >>> (data);Z <<< ... >>> (data);cudaDeviceSynchronize();do_more_stuff(data);}ABCXYZ CPUint main() {float *data;setup(data);A <<< ... >>> (data);B <<< ... >>> (data);C <<< ... >>> (data);cudaDeviceSynchronize(); return 0;}__device__ float buf[1024]; __global__ void cnp(float *data){int tid = threadIdx.x;if(tid % 2)buf[tid/2] = data[tid]+data[tid+1];__syncthreads();if(tid == 0) {launch<<< 128, 256 >>>(buf); cudaDeviceSynchronize(); }__syncthreads();cudaMemcpyAsync(data, buf, 1024); cudaDeviceSynchronize();}Code Example Launch is per-threadand asynchronous__device__ float buf[1024]; __global__ void cnp(float *data) { int tid = threadIdx.x; if(tid % 2) buf[tid/2] = data[tid]+data[tid+1]; __syncthreads(); if(tid == 0) { launch<<< 128, 256 >>>(buf); cudaDeviceSynchronize();}__syncthreads();cudaMemcpyAsync(data, buf, 1024); cudaDeviceSynchronize();}Code Example Launch is per-threadand asynchronousCUDA primitives are per-blocklaunched kernels and CUDA objects like streams are visible to all threads in athread blockcannot be passed to child kernel__device__ float buf[1024]; __global__ void cnp(float *data) { int tid = threadIdx.x; if(tid % 2) buf[tid/2] = data[tid]+data[tid+1];__syncthreads();if(tid == 0) {launch<<< 128, 256 >>>(buf); cudaDeviceSynchronize();}__syncthreads();cudaMemcpyAsync(data, buf, 1024); cudaDeviceSynchronize();} Code Example Launch is per-threadand asynchronousCUDA primitives are per-blockSync includes all launches by any thread in the block__device__ float buf[1024]; __global__ void cnp(float *data) { int tid = threadIdx.x; if(tid % 2) buf[tid/2] = data[tid]+data[tid+1]; __syncthreads(); if(tid == 0) { launch<<< 128, 256 >>>(buf); cudaDeviceSynchronize(); } __syncthreads();cudaMemcpyAsync(data, buf, 1024); cudaDeviceSynchronize();}Code Example Launch is per-threadand asynchronousCUDA primitives are per-blockSync includes all launchesby any thread in the blockcudaDeviceSynchronize() does not imply syncthreads()__device__ float buf[1024]; __global__ void cnp(float *data){int tid = threadIdx.x;if(tid % 2)buf[tid/2] = data[tid]+data[tid+1];__syncthreads();if(tid == 0) {launch<<< 128, 256 >>>(buf); cudaDeviceSynchronize();}__syncthreads();cudaMemcpyAsync(data, buf, 1024); cudaDeviceSynchronize();}Code Example Launch implies membar(child sees parent state at time of launch)__device__ float buf[1024]; __global__ void cnp(float *data) { int tid = threadIdx.x; if(tid % 2)buf[tid/2] = data[tid]+data[tid+1];__syncthreads();if(tid == 0) {launch<<< 128, 256 >>>(buf); cudaDeviceSynchronize(); }__syncthreads();cudaMemcpyAsync(data, buf, 1024); cudaDeviceSynchronize(); } Code Example Launch implies membar(child sees parent state at time of launch) Sync implies invalidate(parent sees child writes after sync)。

燕山大学课程讲义并行计算导论授课人:郭栋梁学时:32学时其中实验课:8学时三级项目:16学时第1章引言1.1概述单处理器计算机即将成为过时的概念.我们需要考虑如下因素来着手改进提高计算机的性能:(1)单纯依靠单处理器很难提升现有计算机的性能.即使有一个性能十分强大的单处理器,其功耗也让人无法接受.想要提升计算机的性能,更加可行的方法是同时使用多个简单处理器,它所能达到的性能可能是现有单处理器计算机性能的几千倍。

(2)观察结果显示,除非使用并行处理技术,一个程序在一台型号更新的单处理器计算机上的运行速度,可能比在旧的计算机上的运行速度更慢。

能依照给定算法检测出程序中的并行结构的编程工具还有待开发。

此算法需要能够检测出变ja之间的依赖关系是否规则;而且不管这些依赖是否规则,此算法都能在保证程序正确性的前提下,通过将程序中的一些子任务并行化来加速程序的执行。

(3)提升未来的计算机性能的关键就在于并行程序的开发,这涉及各个层面的工作:算法、程序开发、操作系统、编译器及硬件设备。

(4)并行计算除了要考虑到参与并行计算的处理器的数量,还应该考虑处理器与处理器、处理器与内存之间的通信。

最终计算性能的提升既依赖于算法能够提升的空间,更依赖于处理器执行算法的效率。

而通信性能的提升则依赖于处理器对数据的供应和提取的速度。

(5)内存系统的速度始终比处理器慢,而且由于一次只能进行单个字的读写操作,内存系统的带宽也有限制。

(6)内存系统的速度始终比处理器慢,而且由于一次只能进行单个字的读写操作,内存系统的带宽也有限制。

本书内容主要涉及并行算法与为了实现这些算法而设计的硬件结构。

硬件和软件是相互影响的,任何软件的最终运行环境是由处理器组成的底层硬件设备和相应的操作系统组成.我们在本章开始的部分会介绍一些概念,之后再来讨论为了实现这些概念有哪些方法和限制.1.2自动并行编程对于算法在软件中的实现过程我们都很熟悉。

在编程并不需要了解目标计算机系统的具体细节,因为编译器会处理这些细节.但是在编程和调试时依旧沿用着在单一央处理器(CPU)上顺序处理的模式.从另一方面讲,为了实现并行算法,硬件和软件之间的相互联系需要比我们想象的更加密切。

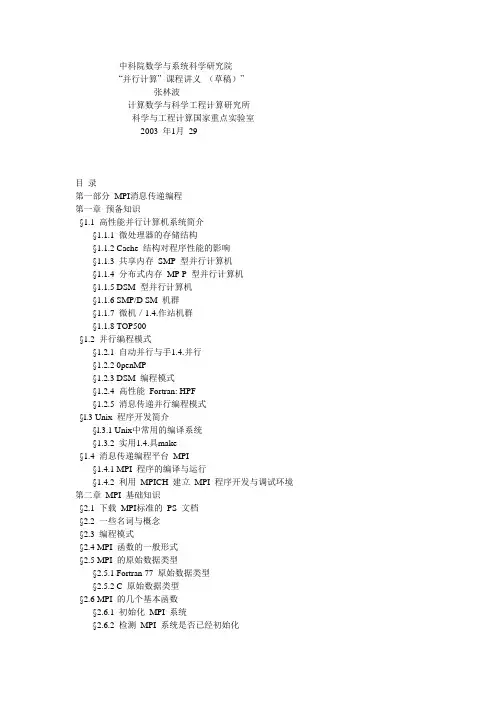

中科院数学与系统科学研究院“并行计算” 课程讲义(草稿)”张林波计算数学与科学工程计算研究所科学与工程计算国家重点实验室2003 年1月29目录第一部分MPI消息传递编程第一章预备知识§1.1 高性能并行计算机系统简介§1.1.1 微处理器的存储结构§1.1.2 Cache 结构对程序性能的影响§1.1.3 共享内存SMP 型并行计算机§1.1.4 分布式内存MP P 型并行计算机§1.1.5 DSM 型并行计算机§1.1.6 SMP/D SM 机群§1.1.7 微机/1.4.作站机群§1.1.8 TOP500§1.2 并行编程模式§1.2.1 自动并行与手1.4.并行§1.2.2 0penMP§1.2.3 DSM 编程模式§1.2.4 高性能Fortran: HPF§1.2.5 消息传递并行编程模式§l.3 Unix 程序开发简介§l.3.1 Unix中常用的编译系统§1.3.2 实用1.4.具make§1.4 消息传递编程平台MPI§1.4.1 MPI 程序的编译与运行§1.4.2 利用MPICH 建立MPI 程序开发与调试环境第二章MPI 基础知识§2.1 下载MPI标准的PS 文档§2.2 一些名词与概念§2.3 编程模式§2.4 MPI 函数的一般形式§2.5 MPI 的原始数据类型§2.5.1 Fortran 77 原始数据类型§2.5.2 C 原始数据类型§2.6 MPI 的几个基本函数§2.6.1 初始化MPI 系统§2.6.2 检测MPI 系统是否已经初始化§2.6.3 得到通信器的进程数及进程在通信器中的序号§2.6.4 退出MPI 系统§2.6.5 异常终止MPI 程序的执行§2.6.6 查询处理器名称§2.6.7 莸取墙上时间及时钟精度§2.7 MPI 程序的基本结构§2.7.1 Fortran 77 程序§2.7.2 C 程序第三章点对点通信§3.1 标准阻塞型点对点通信函数§3.1.1 标准阻塞发送§3.1.2 阻塞接收§3.1.3 阻塞型消息传递实例§3.1.4 其它一些阻塞型消息传递函数§3.2 消息发送模式§3.2.1 阻塞型缓冲模式消息发送函数§3.3 阻塞型与非阻塞型函数§3.4 非阻塞型点对点通信函数§3.4.1 非阻塞发送§3.4.2 非阻塞接收§3.4.3 通信请求的完成与检测§3.4.4 通信请求的释放§3.5 消息探测与通信请求的取消§3.5.1 消息探测§3.5.2 通信请求的取消§3.6 点对点通信函数汇总§3.7 持久通信请求§3.7.1 创建持久消息发送请求§3.7.2 创建持久消息接收请求§3.7.3 开始基于持久通信请求的通信§3.7.4 持久通信请求的完成与释放第四章数据类型§4.1 与数据类型有关的一些定义§4.1.1 数据类型定义§4.1.2 数据类型的大小§4.1.3 数据类型的下界、上界与域§4.1.4 MPI_LB 和MPI_UB§4.1.5 数据类型查询函数§4.2 数据类型创建函数§4.2.1 MPI_Type_contiguous§4.2.2 MPI_Type_vector§4.2.3 MPI_Type_hvector§4.2.4 MPI_Type_indexed§4.2.5 MPI_Type_hindexed§4.2.6 MPI_Type_struct§4.2.7 地址函数MPI_Address§4.3 数据类型的使用§4.3.1 数据类型的提交§4.3.2 数据类型的释放§4.3.3 MPI_Get_elements§4.4 数据的打包与拆包§4.4.1 数据打包§4.4.2 数据拆包§4.4.3 得到打包后的数据大小§4.5 MPI l.l 中位移与数据大小的限制第五章聚含通信(Collective Communications)§5.1 障碍同步§5.2 广播§5.3 数据收集§5.3.1 收集相同长度数据块MPI_Gather§5.3.2 收集不同长度数据块MPI_Gatherv§5.3.3 全收集MPI_Allgather§5.3.4 不同长度数据块的全收集MPI_Allgatherv §5.4 数据散发§5.4.1 散发相同长度数据块MPI_Scatter§5.4.2 散发不同长度数据块MPI_Scatterv§5.5 全部进程对全部进程的数据散发收集§5.5.1 相同数据长度的全收集散发MPI_Alltoall§5.5.2 不同数据长度的全收集散发MPI_Alltoallv §5.6 归约§5.6.1 归约函数MPI_Reduce§5.6.2 全归约MPI_Allreduce§5.6.3 归约散发MPI_Reduce_scatter§5.6.4 前缀归约MPI_Scan§5.6.5 归约与前缀归约中用户自定义的运算§5.7 两个程序实例§5.7.1 π值计算§5.7.2 Jacobi 迭代求解二维Poisson 方程第六章进程组与通信器§6.1 基本概念§6.1.1 进程组§6.1.2 上下文(Context)§6.1.3 域内通信器(Intracommunicator)§6.1.4 域间通信器(Intercommunicator)§6.2 进程组操作函数§6.2.1 查询进程组大小和进程在组中的序号§6.2.2 两个进程组间进程序号的映射§6.2.3 比较两个进程组§6.2.4 进程组的创建与释放§6.3 域内通信器操作函数§6.3.1 比较两个通信器§6.3.2 通信器的创建与释放§6.4 通信器的附加属性(Caching)§6.5 域间通信器(Intercommunicator)§6.6 进程拓扑结构§6.6.1 迪卡尔拓扑结构§6.6.2 一般拓扑结构§6.6.3 底层支持函数第七章文件输入输出§7.1 基本术语§7.2 基本文件操作§7.2.1 打开MPI 文件§7.2.2 关闭MPI 文件§7.2.3 删除文件§7.2.4 设定文件长度§7.2.5 为文件预留空间§7.2.6 查询文件长度§7.3 查询文件参数§7.3.1 查询打开文件的进程组§7.3.2 查询文件访问模式§7.4 设定文件视窗§7.4.1 文件中的数据表示格式§7.4.2 可移植数据类型§7.4.3 查询数据类型相应于文件数据表示格式的域§7.5 文件读写操作§7.5.1 使用显式位移的阻塞型文件读写§7.5.2 使用独立文件指针的阻塞型文件读写§7.5.3 使用共享文件指针的阻塞型文件读写§7.5.4 非阻塞型文件读写函数§7.5.5 分裂型文件读写函数§7.6 文件指针操作§7.6.1 独立文件指针操作§7.6.2 共享文件指针操作§7.6.3 文件位移在文件中的绝对地址§7.7 不同进程对同一文件读写操作的相容性§7.7.1 设定文件访问的原子性§7.7.2 查询atomicity 的当前值§7.7.3 文件读写与存储设备间的同步§7.8 子数组数据类型创建函数本讲义仅供课程学员及其他感兴趣者个人参考用,尚处于逐步修改完善的过程中,许多内容代表的是作者的个人观点。

Performance Optimization Process•Use appropriate performance metric for each kernel –For example, Gflops/s don’t make sense for a bandwidth-bound kernel •Determine what limits kernel performance–Memory throughput–Instruction throughput–Latency–Combination of the above•Address the limiters in the order of importance–Determine how close to the HW limits the resource is being used–Analyze for possible inefficiencies–Apply optimizations•Often these will just fall out from how HW operatesPresentation Outline•Identifying performance limiters•Analyzing and optimizing :–Memory-bound kernels–Instruction (math) bound kernels–Kernels with poor latency hiding–Register spilling•For each:–Brief background–How to analyze–How to judge whether particular issue is problematic–How to optimize–Some cases studies based on “real-life” application kernels •Most information is for Fermi GPUsNotes on profiler•Most counters are reported per Streaming Multiprocessor (SM)–Not entire GPU–Exceptions: L2 and DRAM counters• A single run can collect a few counters–Multiple runs are needed when profiling more counters•Done automatically by the Visual Profiler•Have to be done manually using command-line profiler•Counter values may not be exactly the same for repeated runs –Threadblocks and warps are scheduled at run-time–So, “two counters being equal” usually means “two counters within a small delta”•See the profiler documentation for more informationIdentifying Performance LimitersLimited by Bandwidth or Arithmetic?•Perfect instructions:bytes ratio for Fermi C2050:–~4.5 : 1 with ECC on–~3.6 : 1 with ECC off–These assume fp32 instructions, throughput for other instructions varies •Algorithmic analysis:–Rough estimate of arithmetic to bytes ratio•Code likely uses more instructions and bytes than algorithm analysis suggests:–Instructions for loop control, pointer math, etc.–Address pattern may result in more memory fetches–T wo ways to investigate:•Use the profiler (quick, but approximate)•Use source code modification (more accurate, more work intensive)Analysis with Profiler•Profiler counters:–instructions_issued, instructions_executed•Both incremented by 1per warp•“issued” includes replays, “executed” does not–gld_request, gst_request•Incremented by1 per warp for each load/store instruction•Instruction may be counted if it is “predicated out”–l1_global_load_miss, l1_global_load_hit, global_store_transaction•Incremented by 1per L1 line(line is 128B)–uncached_global_load_transaction•Incremented by1 per group of 1, 2, 3, or 4 transactions•Better to look at L2_read_request counter (incremented by 1 per 32 bytes, per GPU)•Compare:–32 * instructions_issued/* 32 = warp size */–128B * (global_store_transaction+ l1_global_load_miss)A Note on Counting Global Memory Accesses•Load/store instruction count can be lower than the number of actual memory transactions–Address pattern, different word sizes•Counting requests from L1 to the rest of the memory system makes the most sense–Caching-loads: count L1 misses–Non-caching loads and stores: count L2 read requests•Note that L2 counters are for the entire chip, L1 counters are per SM•Some shortcuts, assuming “coalesced” address patterns:–One 32-bit access instruction-> one 128-byte transaction per warp–One 64-bit access instruction-> two 128-byte transactions per warp–One 128-bit access instruction-> four 128-byte transactions per warpAnalysis with Modified Source Code•Time memory-only and math-only versions of the kernel –Easier for codes that don’t have data-dependent control-flow oraddressing–Gives you good estimates for:•Time spent accessing memory•Time spent in executing instructions•Comparing the times for modified kernels–Helps decide whether the kernel is mem or math bound–Shows how well memory operations are overlapped with arithmetic •Compare the sum of mem-only and math-only times to full-kernel timetimeMemory-boundGood mem-mathoverlap: latency not aproblem(assuming memorythroughput is not lowcompared to HW theory)mem mathfull mem math fullMath-boundGood mem-mathoverlap: latency not aproblem(assuming instructionthroughput is not lowcompared to HW theory)Memory-boundGood mem-mathoverlap: latency not aproblem(assuming memorythroughput is not lowcompared to HW theory) timemem mathfull mem mathfull mem math full Math-boundGood mem-mathoverlap: latency not aproblem(assuming instructionthroughput is not lowMemory-boundGood mem-math overlap: latency not a problem(assuming memory throughput is not low compared to HW theory)BalancedGood mem-math overlap: latency not a problem(assuming memory/instr throughput is not low compared to HW theory)timemem mathfull mem mathfull mem mathfull mem math fullMemory and latency boundPoor mem-math overlap:latency is a problem Math-boundGood mem-mathoverlap: latency not aproblem(assuming instructionthroughput is not lowMemory-boundGood mem-math overlap: latency not a problem(assuming memory throughput is not low compared to HW theory)BalancedGood mem-math overlap: latency not a problem(assuming memory/instr throughput is not low compared to HW theory)timeSource Modification•Memory-only:–Remove as much arithmetic as possible•Without changing access pattern•Use the profiler to verify that load/store instruction count is the same •Store-only:–Also remove the loads•Math-only:–Remove global memory accesses–Need to trick the compiler:•Compiler throws away all code that it detects as not contributing to stores•Put stores inside conditionals that always evaluate to false–Condition should depend on the value about to be stored (prevents other optimizations)–Condition outcome should not be known to the compilerSource Modification for Math-only __global__ void fwd_3D( ..., int flag){...value = temp + coeff* vsq; if( 1 == value * flag )g_output[out_idx] = value; }If you compare only the flag, the compiler may move the computation into the conditional as wellSource Modification and Occupancy •Removing pieces of code is likely to affect register count–This could increase occupancy, skewing the results–See slide 23 to see how that could affect throughput •Make sure to keep the same occupancy–Check the occupancy with profiler before modifications –After modifications, if necessary add shared memory to match the unmodified kernel’s occupancykernel<<< grid, block, smem,...>>>(...)•Analysis:–Instr:byte ratio = ~2.66•32*18,194,139 / 128*1,708,032–Good overlap between math and mem:•2.12 ms of math-only time (13%) are not overlapped with mem–App memory throughput: 62 GB/s•HW theory is 114 GB/s , so we’re off•Conclusion:–Code is memory-bound –Latency could be an issue too–Optimizations should focus on memorythroughput first•math contributes very little to total time (2.12 out of 35.39ms)•3DFD of the wave equation, fp32•Time (ms):–Full-kernel:35.39–Mem-only:33.27–Math-only:16.25•Instructions issued:–Full-kernel:18,194,139–Mem-only:7,497,296–Math-only:16,839,792•Memory access transactions:–Full-kernel:1,708,032–Mem-only:1,708,032–Math-only:•Analysis:–Instr:byte ratio = ~2.66•32*18,194,139 / 128*1,708,032–Good overlap between math and mem:•2.12 ms of math-only time (13%) are not overlapped with mem–App memory throughput: 62 GB/s•HW theory is 114 GB/s , so we’re off•Conclusion:–Code is memory-bound –Latency could be an issue too–Optimizations should focus on memorythroughput first•math contributes very little to total time (2.12out of 35.39ms )•3DFD of the wave equation, fp32•Time (ms):–Full-kernel:35.39–Mem-only:33.27–Math-only:16.25•Instructions issued:–Full-kernel:18,194,139–Mem-only:7,497,296–Math-only:16,839,792•Memory access transactions:–Full-kernel:1,708,032–Mem-only:1,708,032–Math-only:Summary: Limiter Analysis•Rough algorithmic analysis:–How many bytes needed, how many instructions •Profiler analysis:–Instruction count, memory request/transaction count •Analysis with source modification:–Memory-only version of the kernel–Math-only version of the kernel–Examine how these times relate and overlapOptimizations for Global MemoryMemory Throughput Analysis•Throughput: from application point of view–From app point of view:count bytes requested by the application –From HW point of view:count bytes moved by the hardware–The two can be different•Scattered/misaligned pattern: not all transaction bytes are utilized•Broadcast: the same small transaction serves many requests•Two aspects to analyze for performance impact:–Addressing pattern–Number of concurrent accesses in flightMemory Throughput Analysis•Determining that access pattern is problematic:–Profiler counters: access instruction count is significantly smaller thantransaction count•gld_request< ( l1_global_load_miss+ l1_global_load_hit) * ( word_size/ 4B )•gst_request< 4 * l2_write_requests* ( word_size/ 4B )•Make sure to adjust the transaction counters for word size (see slide 8)–App throughput is much smaller than HW throughput•Use profiler to get HW throughput•Determining that the number of concurrent accesses is insufficient:–Throughput from HW point of view is much lower than theoreticalConcurrent Accesses and Performance•Increment a 64M element array–T wo accesses per thread (load then store, but they are dependent)•Thus, each warp (32 threads) has one outstanding transaction at a time•Tesla C2050, ECC on, theoretical bandwidth: ~120 GB/sSeveral independent smalleraccesses have the same effectas one larger one.For example:Four 32-bit ~= one 128-bitOptimization: Address Pattern•Coalesce the address pattern–128-byte lines for caching loads–32-byte segments for non-caching loads, stores– A warp’s address pattern is converted to transactions•Coalesce to maximize utilization of bus transactions•Refer to CUDA Programming Guide / Best Practices Guide / Fundamental Opt. talk •Try using non-caching loads–Smaller transactions (32B instead of 128B)•more efficient for scattered or partially-filled patterns•Try fetching data from texture–Smaller transactions and different caching–Cache not polluted by other gmem loadsOptimizing Access Concurrency•Have enough concurrent accesses to saturate the bus –Need (mem_latency)x(bandwidth) bytes in flight (Little’s law)–Fermi C2050 global memory:•400-800cycle latency, 1.15 GHz clock, 144 GB/s bandwidth, 14 SMs•Need 30-50128-byte transactions in flight per SM•Ways to increase concurrent accesses:–Increase occupancy•Adjust threadblock dimensions–T o maximize occupancy at given register and smem requirements•Reduce register count (-maxrregcount option, or __launch_bounds__)–Modify code to process several elements per threadCase Study: Access Pattern 1•Same 3DFD code as in the previous study•Using caching loads (compiler default):–Memory throughput: 62 /74 GB/s for app / hw–Different enough to be interesting•Loads are coalesced:–gld_request== ( l1_global_load_miss + l1_global_load_hit )•There are halo loads that use only 4threads out of 32–For these transactions only 16bytes out of 128are useful•Solution: try non-caching loads ( -Xptxas–dlcm=cg compiler option)–Performance increase of 7%•Not bad for just trying a compiler flag, no code change–Memory throughput: 66 /67 GB/s for app / hwCase Study: Accesses in Flight•Continuing with the FD code–Throughput from both app and hw point of view is 66-67 GB/s–Now 30.84out of 33.71 ms are due to mem–1024concurrent threads per SM•Due to register count (24 per thread)•Simple copy kernel reaches ~80% of achievable mem throughput at this thread count •Solution: increase accesses per thread–Modified code so that each thread is responsible for 2output points •Doubles the load and store count per thread, saves some indexing math•Doubles the tile size -> reduces bandwidth spent on halos–Further 25% increase in performance•App and HW throughputs are now 82and 84 GB/s, respectively•Kernel from climate simulation code–Mostly fp64 (so, at least 2transactions per mem access)•Profiler results:–gld_request:72,704–l1_global_load_hit:439,072–l1_global_load_miss:724,192•Analysis:–L1 hit rate: 37.7%–16 transactions per load instruction•Indicates bad access pattern(2are expected due to 64-bit words)•Of the 16, 10 miss in L1 and contribute to mem bus traffic•So, we fetch 5x more bytes than needed by the app•Looking closer at the access pattern:–Each thread linearly traverses a contiguous memory region–Expecting CPU-like L1 caching•Remember what I said about coding for L1 and L2•(Fundamental Optimizations, slide 11)–One of the worst access patterns for GPUs•Solution:–Transposed the code so that each warp accesses a contiguous memory region–2.17transactions per load instruction–This and some other changes improved performance by 3xSummary: Memory Analysis and Optimization•Analyze:–Access pattern:•Compare counts of access instructions and transactions•Compare throughput from app and hw point of view–Number of accesses in flight•Look at occupancy and independent accesses per thread•Compare achieved throughput to theoretical throughput–Also to simple memcpy throughput at the same occupancy•Optimizations:–Coalesce address patterns per warp (nothing new here), consider texture–Process more words per thread (if insufficient accesses in flight to saturate bus)–Try the 4 combinations of L1 size and load type (caching and non-caching)–Consider compressionOptimizations for Instruction ThroughputPossible Limiting Factors•Raw instruction throughput–Know the kernel instruction mix–fp32, fp64, int, mem, transcendentals, etc. have different throughputs •Refer to the CUDA Programming Guide / Best Practices Guide–Can examine assembly, if needed:•Can look at PTX (virtual assembly), though it’s not the final optimized code•Can look at post-optimization machine assembly for GT200 (Fermi version coming later)•Instruction serialization–Occurs when threads in a warp issue the same instruction in sequence •As opposed to the entire warp issuing the instruction at once•Think of it as “replaying” the same instruction for different threads in a warp –Some causes:•Shared memory bank conflicts•Constant memory bank conflictsInstruction Throughput: Analysis•Profiler counters (both incremented by 1 per warp):–instructions executed:counts instructions encoutered during execution –instructions issued:also includes additional issues due to serialization –Difference between the two: issues that happened due to serialization,instr cache misses, etc.•Will rarely be 0, cause for concern only if it’s a significant percentage ofinstructions issued•Compare achieved throughput to HW capabilities–Peak instruction throughput is documented in the Programming Guide –Profiler also reports throughput:•GT200: as a fraction of theoretical peak for fp32 instructions•Fermi: as IPC (instructions per clock)Instruction Throughput: Optimization•Use intrinsics where possible ( __sin(), __sincos(),__exp(), etc.)–Available for a number of math.h functions–2-3 bits lower precision, much higher throughput•Refer to the CUDA Programming Guide for details–Often a single instruction, whereas a non-intrinsic is a SW sequence •Additional compiler flags that also help (select GT200-level precision):–-ftz=true: flush denormals to 0–-prec-div=false: faster fp division instruction sequence (some precision loss) –-prec-sqrt=false: faster fp sqrt instruction sequence (some precision loss)•Make sure you do fp64 arithmetic only where you mean it:–fp64 throughput is lower than fp32–fp literals without an “f” suffix ( 34.7 ) are interpreted as fp64 per C standardSerialization: Profiler Analysis•Serialization is significant if–instructions_issued is significantly higher than instructions_executed •Warp divergence–Profiler counters: divergent_branch, branch–Compare the two to see what percentage diverges•However, this only counts the branches, not the rest of serialized instructions •SMEM bank conflicts–Profiler counters:•l1_shared_bank_conflict: incremented by 1per warp for each replay–double counts for 64-bit accesses•shared_load, shared_store: incremented by 1per warp per instruction –Bank conflicts are significant if both are true:•instruction throughput affects performance•l1_shared_bank_conflict is significant compared to instructions_issuedSerialization: Analysis with Modified Code •Modify kernel code to assess performance improvement if serialization were removed–Helps decide whether optimizations are worth pursuing •Shared memory bank conflicts:–Change indexing to be either broadcasts or just threadIdx.x–Should also declare smem variables as volatile•Prevents compiler from “caching” values in registers•Warp divergence:–change the condition to always take the same path–Time both paths to see what each costsSerialization: Optimization•Shared memory bank conflicts:–Pad SMEM arrays•For example, when a warp accesses a 2D array’s column•See CUDA Best Practices Guide, Transpose SDK whitepaper –Rearrange data in SMEM•Warp serialization:–Try grouping threads that take the same path•Rearrange the data, pre-process the data•Rearrange how threads index data (may affect memory perf)Case Study: SMEM Bank Conflicts• A different climate simulation code kernel, fp64•Profiler values:–Instructions:•Executed / issued:2,406,426/ 2,756,140•Difference:349,714(12.7% of instructions issued were “replays”)–GMEM:•Total load and store transactions:170,263•Instr:byte ratio: 4–suggests that instructions are a significant limiter (especially since there is a lot of fp64 math)–SMEM:•Load / store:421,785/ 95,172•Bank conflict:674,856(really 337,428because of double-counting for fp64)–This means a total of 854,385SMEM access instructions, (421,785+95,172+337,428), 39% replays •Solution:–Pad shared memory array: performance increased by 15%•replayed instructions reduced down to 1%Instruction Throughput: Summary•Analyze:–Check achieved instruction throughput–Compare to HW peak (but must take instruction mix intoconsideration)–Check percentage of instructions due to serialization •Optimizations:–Intrinsics, compiler options for expensive operations–Group threads that are likely to follow same execution path –Avoid SMEM bank conflicts (pad, rearrange data)Optimizations for LatencyLatency: Analysis•Suspect if:–Neither memory nor instruction throughput rates are close to HW theoretical rates–Poor overlap between mem and math•Full-kernel time is significantly larger than max{mem-only, math-only}•Two possible causes:–Insufficient concurrent threads per multiprocessor to hide latency•Occupancy too low•T oo few threads in kernel launch to load the GPU–elapsed time doesn’t change if problem size is increased (and with it the number of blocks/threads)–T oo few concurrent threadblocks per SM when using __syncthreads()•__syncthreads() can prevent overlap between math and mem within the same threadblockMath-only time Memory-only time Full-kernel time, one large threadblock per SM Kernel where most math cannot be executed until all data is loaded by the threadblockMath-only time Memory-only time Full-kernel time, two threadblocks per SM (each half the size of one large one)Full-kernel time, one large threadblock per SM Kernel where most math cannot be executed until all data is loaded by the threadblockLatency: Optimization•Insufficient threads or workload:–Increase the level of parallelism (more threads)–If occupancy is already high but latency is not being hidden:•Process several output elements per thread –gives more independent memory and arithmetic instructions (which get pipelined)•Barriers:–Can assess impact on perf by commenting out __syncthreads()•Incorrect result, but gives upper bound on improvement–Try running several smaller threadblocks•Think of it as “pipelining” blocks•In some cases that costs extra bandwidth due to halos•Check out Vasily Volkov’s talk 2238 at GTC 2010 for a detailed treatment:–“Better Performance at Lower Latency”Register SpillingRegister Spilling•Compiler “spills” registers to local memory when register limit is exceeded –Fermi HW limit is 63 registers per thread–Spills also possible when register limit is programmer-specified•Common when trying to achieve certain occupancy with -maxrregcount compiler flag or __launch_bounds__ in source–lmem is like gmem, except that writes are cached in L1•lmem load hit in L1-> no bus traffic•lmem load miss in L1-> bus traffic (128 bytes per miss)–Compiler flag –Xptxas–v gives the register and lmem usage per thread •Potential impact on performance–Additional bandwidth pressure if evicted from L1–Additional instructions–Not always a problem, easy to investigate with quick profiler analysisRegister Spilling: Analysis•Profiler counters:l1_local_load_hit, l1_local_load_miss •Impact on instruction count:–Compare to total instructions issued•Impact on memory throughput:–Misses add 128 bytes per warp–Compare 2*l1_local_load_miss count to gmem access count(stores + loads)•Multiply lmem load misses by 2: missed line must have been evicted ->store across bus•Comparing with caching loads: count only gmem misses in L1•Comparing with non-caching loads: count all loadsOptimization for Register Spilling•Try increasing the limit of registers per thread–Use a higher limit in –maxrregcount, or lower thread count for __launch_bounds__–Will likely decrease occupancy, potentially making gmem accesses lessefficient–However, may still be an overall win –fewer total bytes being accessed in gmem•Non-caching loads for gmem–potentially fewer contentions with spilled registers in L1•Increase L1 size to 48KB–default is 16KB L1 / 48KB smemRegister Spilling: Case Study•FD kernel, (3D-cross stencil)–fp32, so all gmem accesses are 4-byte words •Need higher occupancy to saturate memory bandwidth –Coalesced, non-caching loads•one gmem request = 128 bytes•all gmem loads result in bus traffic–Larger threadblocks mean lower gmem pressure •Halos (ghost cells) are smaller as a percentage•Aiming to have 1024concurrent threads per SM –Means no more than 32 registers per thread–Compiled with –maxrregcount=32•10th order in space kernel (31-point stencil)–32 registers per thread : 68 bytes of lmem per thread : upto1024 threads per SM•Profiled counters:–l1_local_load_miss= 36inst_issued= 8,308,582–l1_local_load_hit= 70,956gld_request= 595,200–local_store= 64,800gst_request= 128,000•Conclusion: spilling is not a problem in this case–The ratio of gmem to lmem bus traffic is approx 8,444 : 1 (hardly any bus traffic is due to spills)•L1 contains most of the spills (99.9% hit rate for lmem loads)–Only 1.6% of all instructions are due to spills•Comparison:–42 registers per thread : no spilling : upto768 threads per SM•Single 512-thread block per SM : 24% perf decrease•Three 256-thread blocks per SM : 7% perf decrease•12th order in space kernel (37-point stencil)–32 registers per thread : 80 bytes of lmem per thread : upto1024 threads per SM •Profiled counters:–l1_local_load_miss= 376,889inst_issued= 10,154,216–l1_local_load_hit= 36,931gld_request= 550,656–local_store= 71,176gst_request= 115,200•Conclusion: spilling is a problem in this case–The ratio of gmem to lmem bus traffic is approx 7 : 6 (53% of bus traffic is due to spilling)•L1 does not contain the spills (8.9% hit rate for lmem loads)–Only 4.1% of all instructions are due to spills•Solution: increase register limit per thread–42 registers per thread : no spilling : upto768 threads per SM–Single 512-thread block per SM : 13%perf increase–Three 256-thread blocks per SM : 37%perf increase。