基于Apache ShardingSphere打造分布式数据库

- 格式:pptx

- 大小:1.54 MB

- 文档页数:38

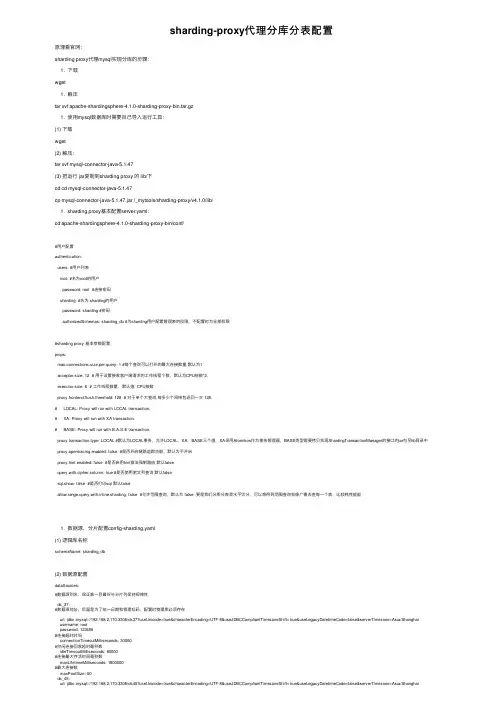

sharding-proxy代理分库分表配置原理看官⽹:sharding proxy代理mysql实现分库的步骤:1. 下载wget1. 解压tar xvf apache-shardingsphere-4.1.0-sharding-proxy-bin.tar.gz1. 使⽤mysql数据库时需要⾃⼰导⼊运⾏⼯具:(1) 下载wget(2) 解压:tar xvf mysql-connector-java-5.1.47(3) 把运⾏ jar复制到sharding proxy 的 lib/下cd cd mysql-connector-java-5.1.47cp mysql-connector-java-5.1.47.jar /_mytools/sharding-proxy/v4.1.0/lib/1. sharding proxy基本配置server.yaml:cd apache-shardingsphere-4.1.0-sharding-proxy-bin/conf/#⽤户配置authentication:users: #⽤户列表root: #名为root的⽤户password: root #连接密码sharding: #名为 sharding的⽤户password: sharding #密码authorizedSchemas: sharding_db #为sharding⽤户配置管理表的权限,不配置时为全部权限#sharding proxy 基本参数配置props:max.connections.size.per.query: 1 #每个查询可以打开的最⼤连接数量,默认为1acceptor.size: 12 # ⽤于设置接收客户端请求的⼯作线程个数,默认为CPU核数*2.executor.size: 6 # ⼯作线程数量,默认值: CPU核数proxy.frontend.flush.threshold: 128 # 对于单个⼤查询,每多少个⽹络包返回⼀次 128.# LOCAL: Proxy will run with LOCAL transaction.# XA: Proxy will run with XA transaction.# BASE: Proxy will run with B.A.S.E transaction.proxy.transaction.type: LOCAL #默认为LOCAL事务,允许LOCAL,XA,BASE三个值,XA采⽤Atomikos作为事务管理器,BASE类型需要拷贝实现ShardingTransactionManager的接⼝的jar包⾄lib⽬录中 proxy.opentracing.enabled: false #是否开启链路追踪功能,默认为不开启proxy.hint.enabled: false #是否启⽤hint算法强制路由默认falsequery.with.cipher.column: true #是否使⽤密⽂列查询默认falsesql.show: false #是否打印sql 默认falseallow.range.query.with.inline.sharding: false #允许范围查询,默认为 false ,要是我们分库分表是⽔平切分,可以想得到范围查询会像⼴播去查每⼀个表,⽐较耗性能能1. 数据源、分⽚配置config-sharding.yaml(1) 逻辑库名称schemaName: sharding_db(2) 数据源配置dataSources:#数据源别名,保证唯⼀且最好与分⽚列保持规律性,ds_37:#数据源地址,后⾯是为了统⼀⽇期和管理乱码,配置时物理库必须存在url: jdbc:mysql://192.168.2.170:3306/ds37?useUnicode=true&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=Asia/Shanghaiusername: rootpassword: 123456#连接超时时间connectionTimeoutMilliseconds: 30000#空闲连接回收超时毫秒数idleTimeoutMilliseconds: 60000#连接最⼤存活时间毫秒数maxLifetimeMilliseconds: 1800000#最⼤连接数maxPoolSize: 50ds_45:url: jdbc:mysql://192.168.2.170:3306/ds45?useUnicode=true&characterEncoding=UTF-8&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=Asia/Shanghaiusername: rootpassword: 123456connectionTimeoutMilliseconds: 30000idleTimeoutMilliseconds: 60000maxLifetimeMilliseconds: 1800000maxPoolSize: 50(3) 分⽚规则shardingRule:tables: #数据分⽚规则,可配置多个seal_data: #逻辑库中的逻辑表名actualDataNodes: ds_${['37','45']}.seal_data #规则节点,逻辑数据源+逻辑表名⽀持inline表达式。

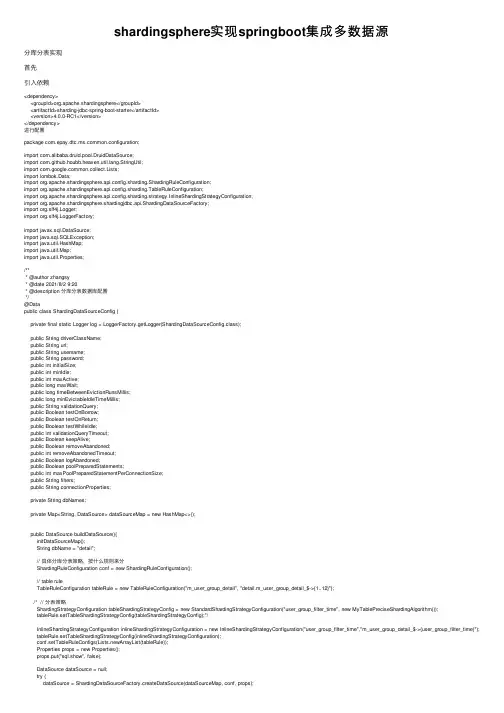

springboot:shardingsphere多数据源,⽀持未分表的数据源(shard。

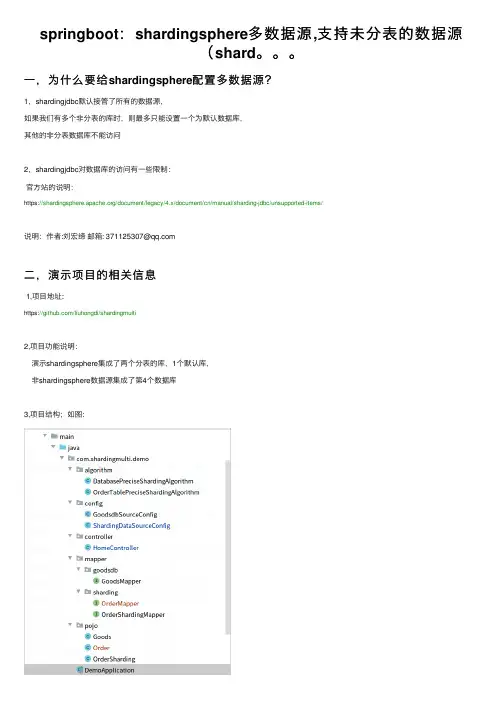

⼀,为什么要给shardingsphere配置多数据源?1,shardingjdbc默认接管了所有的数据源,如果我们有多个⾮分表的库时,则最多只能设置⼀个为默认数据库,其他的⾮分表数据库不能访问2,shardingjdbc对数据库的访问有⼀些限制:官⽅站的说明:https:///document/legacy/4.x/document/cn/manual/sharding-jdbc/unsupported-items/说明:作者:刘宏缔邮箱: 371125307@⼆,演⽰项⽬的相关信息1,项⽬地址:https:///liuhongdi/shardingmulti2,项⽬功能说明:演⽰shardingsphere集成了两个分表的库,1个默认库,⾮shardingsphere数据源集成了第4个数据库3,项⽬结构;如图:4,数据库结构:三,配置⽂件说明1,pom.xml<dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-web</artifactId><exclusions><exclusion><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-logging</artifactId></exclusion></exclusions></dependency><!--sharding jdbc begin--><dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-core</artifactId><version>4.1.1</version></dependency><!--sharding jdbc end--><!--mybatis begin--><dependency><groupId>org.mybatis.spring.boot</groupId><artifactId>mybatis-spring-boot-starter</artifactId><version>2.1.3</version></dependency><!--mybatis end--><!--druid begin--><dependency><groupId>com.alibaba</groupId><artifactId>druid-spring-boot-starter</artifactId><version>1.1.23</version></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-log4j2</artifactId></dependency><dependency><groupId>com.lmax</groupId><artifactId>disruptor</artifactId><version>3.4.2</version></dependency><!--druid end--><!--mysql begin--><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId><scope>runtime</scope></dependency><!--mysql end--><!--pagehelper begin--><dependency><groupId>com.github.pagehelper</groupId><artifactId>pagehelper-spring-boot-starter</artifactId><version>1.3.0</version></dependency><!--pagehelper end--><!--thymeleaf begin--><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-thymeleaf</artifactId></dependency><!--thymeleaf end-->2,application.properties#errorserver.error.include-stacktrace=always#error.springframework.web=trace#name = dynamic# goodsdb数据源基本配置ername = rootspring.datasource.druid.goodsdb.password = lhddemospring.datasource.druid.goodsdb.driver-class-name = com.mysql.cj.jdbc.Driverspring.datasource.druid.goodsdb.url = jdbc:mysql://127.0.0.1:3306/store?serverTimezone=UTC spring.datasource.druid.goodsdb.type = com.alibaba.druid.pool.DruidDataSourcespring.datasource.druid.goodsdb.initialSize = 5spring.datasource.druid.goodsdb.minIdle = 5spring.datasource.druid.goodsdb.maxActive = 20spring.datasource.druid.goodsdb.maxWait = 60000spring.datasource.druid.goodsdb.timeBetweenEvictionRunsMillis = 60000spring.datasource.druid.goodsdb.minEvictableIdleTimeMillis = 300000spring.datasource.druid.goodsdb.validationQuery = SELECT 1 FROM DUALspring.datasource.druid.goodsdb.testWhileIdle = truespring.datasource.druid.goodsdb.testOnBorrow = falsespring.datasource.druid.goodsdb.testOnReturn = falsespring.datasource.druid.goodsdb.poolPreparedStatements = true# 配置监控统计拦截的filters,去掉后监控界⾯sql⽆法统计,'wall'⽤于防⽕墙spring.datasource.druid.maxPoolPreparedStatementPerConnectionSize = 20eGlobalDataSourceStat = truespring.datasource.druid.connectionProperties = druid.stat.mergeSql=true;druid.stat.slowSqlMillis=500 #druid sql firewall monitorspring.datasource.druid.filter.wall.enabled=true#druid sql monitorspring.datasource.druid.filter.stat.enabled=truespring.datasource.druid.filter.stat.log-slow-sql=truespring.datasource.druid.filter.stat.slow-sql-millis=10000spring.datasource.druid.filter.stat.merge-sql=true#druid uri monitorspring.datasource.druid.web-stat-filter.enabled=truespring.datasource.druid.web-stat-filter.url-pattern=/*spring.datasource.druid.web-stat-filter.exclusions=*.js,*.gif,*.jpg,*.bmp,*.png,*.css,*.ico,/druid/*#druid session monitorspring.datasource.druid.web-stat-filter.session-stat-enable=truespring.datasource.druid.web-stat-filter.profile-enable=true#druid spring monitorspring.datasource.druid.aop-patterns=com.druid.*#monintor,druid login user configspring.datasource.druid.stat-view-servlet.enabled=truespring.datasource.druid.stat-view-servlet.login-username=rootspring.datasource.druid.stat-view-servlet.login-password=root# IP⽩名单 (没有配置或者为空,则允许所有访问)spring.datasource.druid.stat-view-servlet.allow = 127.0.0.1,192.168.163.1# IP⿊名单 (存在共同时,deny优先于allow)spring.datasource.druid.stat-view-servlet.deny = 192.168.10.1#mybatismybatis.mapper-locations=classpath:/mapper/sharding/*Mapper.xml#mybatis.type-aliases-package=com.example.demo.mappermybatis.configuration.log-impl=org.apache.ibatis.logging.stdout.StdOutImpl#loglogging.config = classpath:log4j2.xml#shardingsphere第⼀个分表数据源spring.datasource.druid.saleorder01.type=com.alibaba.druid.pool.DruidDataSourcespring.datasource.druid.saleorder01.driverClassName=com.mysql.cj.jdbc.Driverspring.datasource.druid.saleorder01.url=jdbc:mysql://127.0.0.1:3306/saleorder01?characterEncoding=utf-8ername=rootspring.datasource.druid.saleorder01.password=lhddemo#shardingsphere第⼆个分表数据源spring.datasource.druid.saleorder02.type=com.alibaba.druid.pool.DruidDataSourcespring.datasource.druid.saleorder02.driverClassName=com.mysql.cj.jdbc.Driverspring.datasource.druid.saleorder02.url=jdbc:mysql://127.0.0.1:3306/saleorder02?characterEncoding=utf-8ername=rootspring.datasource.druid.saleorder02.password=lhddemo#shardingsphere第三个数据源,⾮分表,作为默认库访问spring.datasource.druid.orderdb.type=com.alibaba.druid.pool.DruidDataSourcespring.datasource.druid.orderdb.driverClassName=com.mysql.cj.jdbc.Driverspring.datasource.druid.orderdb.url=jdbc:mysql://127.0.0.1:3306/orderdb?characterEncoding=utf-8ername=rootspring.datasource.druid.orderdb.password=lhddemo3,各数据表的建表语句:我们创建两个库:saleorder01,saleorder02然后在各个库内各创建两个数据表:saleorder01库包括t_order_1,t_order_2saleorder02库包括t_order_3,t_order_4建表的sql:CREATE TABLE `t_order_4` (`orderId` bigint(11) unsigned NOT NULL AUTO_INCREMENT COMMENT 'id',`goodsName` varchar(250) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL DEFAULT'' COMMENT 'name', PRIMARY KEY (`orderId`)) ENGINE=InnoDB AUTO_INCREMENT=0DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci COMMENT='order4' goodsdb的数据表:CREATE TABLE `goods` (`goodsId` bigint(11) unsigned NOT NULL AUTO_INCREMENT COMMENT 'id',`goodsName` varchar(500) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL DEFAULT'' COMMENT 'name', `subject` varchar(200) NOT NULL DEFAULT'' COMMENT '标题',`price` decimal(15,2) NOT NULL DEFAULT'0.00' COMMENT '价格',`stock` int(11) NOT NULL DEFAULT'0' COMMENT 'stock',PRIMARY KEY (`goodsId`)) ENGINE=InnoDB AUTO_INCREMENT=0DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci COMMENT='商品表'插⼊数据:INSERT INTO `goods` (`goodsId`, `goodsName`, `subject`, `price`, `stock`) VALUES(3, '100分电动⽛刷', '好⽤到让你爱上刷⽛', '59.00', 96);orderdb的数据表:CREATE TABLE `orderinfo` (`orderId` bigint(11) unsigned NOT NULL AUTO_INCREMENT COMMENT 'id',`orderSn` varchar(100) NOT NULL DEFAULT'' COMMENT '编号',`orderTime` timestamp NOT NULL DEFAULT'1971-01-01 00:00:01' COMMENT '下单时间',`orderStatus` tinyint(4) NOT NULL DEFAULT'0' COMMENT '状态:0,未⽀付,1,已⽀付,2,已发货,3,已退货,4,已过期',`userId` int(12) NOT NULL DEFAULT'0' COMMENT '⽤户id',`price` decimal(10,0) NOT NULL DEFAULT'0' COMMENT '价格',`addressId` int(12) NOT NULL DEFAULT'0' COMMENT '地址',PRIMARY KEY (`orderId`),UNIQUE KEY `orderSn` (`orderSn`)) ENGINE=InnoDB AUTO_INCREMENT=0DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci COMMENT='订单表'插⼊数据:INSERT INTO `orderinfo` (`orderId`, `orderSn`, `orderTime`, `orderStatus`, `userId`, `price`, `addressId`) VALUES(77, '20200814171411660', '2020-08-14 09:14:12', 0, 8, '100', 0);四,java代码说明:1, DatabasePreciseShardingAlgorithm.java@Componentpublic class DatabasePreciseShardingAlgorithm implements PreciseShardingAlgorithm<Long> {@Overridepublic String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Long> shardingValue) {Long curValue = shardingValue.getValue();String curBase = "";if (curValue > 0 && curValue<=200) {curBase = "saleorder01";} else {curBase = "saleorder02";}return curBase;}}数据库分库算法2,OrderTablePreciseShardingAlgorithm.java@Componentpublic class OrderTablePreciseShardingAlgorithm implements PreciseShardingAlgorithm<Long> {@Overridepublic String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Long> shardingValue) {Long curValue = shardingValue.getValue();String curTable = "";if (curValue > 0 && curValue<=100) {curTable = "t_order_1";} else if (curValue > 100 && curValue<=200) {curTable = "t_order_2";} else if (curValue > 200 && curValue<=300) {curTable = "t_order_3";} else {curTable = "t_order_4";}return curTable;}}数据库分表算法3,GoodsdbSourceConfig.java@Configuration@MapperScan(basePackages = "com.shardingmulti.demo.mapper.goodsdb", sqlSessionTemplateRef = "goodsdbSqlSessionTemplate") public class GoodsdbSourceConfig {@Bean@Primary@ConfigurationProperties("spring.datasource.druid.goodsdb")public DataSource goodsdbDataSource() {return DruidDataSourceBuilder.create().build();}@Bean@Primarypublic SqlSessionFactory goodsdbSqlSessionFactory(@Qualifier("goodsdbDataSource") DataSource dataSource) throws Exception { SqlSessionFactoryBean bean = new SqlSessionFactoryBean();bean.setDataSource(dataSource);bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath:mapper/goodsdb/*.xml"));return bean.getObject();}@Bean@Primarypublic DataSourceTransactionManager goodsdbTransactionManager(@Qualifier("goodsdbDataSource") DataSource dataSource) {return new DataSourceTransactionManager(dataSource);}@Bean@Primarypublic SqlSessionTemplate goodsdbSqlSessionTemplate(@Qualifier("goodsdbSqlSessionFactory") SqlSessionFactory sqlSessionFactory) throws Exception { return new SqlSessionTemplate(sqlSessionFactory);}}配置goodsdb数据源,注意因为要使⽤mybatis,所以指明了mapper⽂件所在的包,和xml⽂件所在的路径因为这个数据源不是由shardingjdbc所管理,所以要注意两个数据源的mapper程序和xml⽂件要隔离开分别放在 mapper/goodsdb 和 mapper/sharding4,ShardingDataSourceConfig.java@Configuration@MapperScan(basePackages = "com.shardingmulti.demo.mapper.sharding", sqlSessionFactoryRef = "shardingSqlSessionFactory")public class ShardingDataSourceConfig {//分表算法@Resourceprivate OrderTablePreciseShardingAlgorithm orderTablePreciseShardingAlgorithm;//分库算法@Resourceprivate DatabasePreciseShardingAlgorithm databasePreciseShardingAlgorithm;//第⼀个订单库@Bean(name = "saleorder01")@ConfigurationProperties(prefix = "spring.datasource.druid.saleorder01")public DataSource saleorder01(){return DruidDataSourceBuilder.create().build();}//第⼆个订单库@Bean(name = "saleorder02")@ConfigurationProperties(prefix = "spring.datasource.druid.saleorder02")public DataSource saleorder02(){return DruidDataSourceBuilder.create().build();}//第三个库,订单统计库,做为默认@Bean(name = "orderdb")@ConfigurationProperties(prefix = "spring.datasource.druid.orderdb")public DataSource orderdb(){return DruidDataSourceBuilder.create().build();}//创建数据源,需要把分库的库都传递进去@Bean("dataSource")public DataSource dataSource(@Qualifier("saleorder01") DataSource saleorder01,@Qualifier("saleorder02") DataSource saleorder02,@Qualifier("orderdb") DataSource orderdb) throws SQLException {// 配置真实数据源Map<String, DataSource> dataSourceMap = new HashMap<String, DataSource>();dataSourceMap.put("orderdb", orderdb);dataSourceMap.put("saleorder01", saleorder01);dataSourceMap.put("saleorder02", saleorder02);ShardingRuleConfiguration shardingRuleConfig = new ShardingRuleConfiguration();shardingRuleConfig.setDefaultDataSourceName("orderdb");//如果有多个数据表需要分表,依次添加到这⾥shardingRuleConfig.getTableRuleConfigs().add(getOrderTableRuleConfiguration());Properties p = new Properties();p.setProperty("sql.show", Boolean.TRUE.toString());// 获取数据源对象DataSource dataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap, shardingRuleConfig,p);return dataSource;}// 创建SessionFactory@Bean(name = "shardingSqlSessionFactory")public SqlSessionFactory shardingSqlSessionFactory(@Qualifier("dataSource") DataSource dataSource) throws Exception {SqlSessionFactoryBean bean = new SqlSessionFactoryBean();bean.setDataSource(dataSource);bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath:mapper/sharding/*.xml"));return bean.getObject();}// 创建事务管理器@Bean("shardingTransactionManger")public DataSourceTransactionManager shardingTransactionManger(@Qualifier("dataSource") DataSource dataSource) {return new DataSourceTransactionManager(dataSource);}// 创建SqlSessionTemplate@Bean(name = "shardingSqlSessionTemplate")public SqlSessionTemplate shardingSqlSessionTemplate(@Qualifier("shardingSqlSessionFactory") SqlSessionFactory sqlSessionFactory) { return new SqlSessionTemplate(sqlSessionFactory);}//订单表的分表规则配置private TableRuleConfiguration getOrderTableRuleConfiguration() {TableRuleConfiguration result = new TableRuleConfiguration("t_order","saleorder01.t_order_$->{1..2},saleorder02.t_order_$->{3..4}");result.setDatabaseShardingStrategyConfig(new StandardShardingStrategyConfiguration("orderId",databasePreciseShardingAlgorithm)); result.setTableShardingStrategyConfig(new StandardShardingStrategyConfiguration("orderId",orderTablePreciseShardingAlgorithm));return result;}//分页@Bean(name="pageHelper")public PageHelper getPageHelper() {PageHelper pageHelper = new PageHelper();Properties properties = new Properties();properties.setProperty("reasonable", "true");properties.setProperty("supportMethodsArguments", "true");properties.setProperty("returnPageInfo", "true");properties.setProperty("params", "count=countSql");pageHelper.setProperties(properties);return pageHelper;}}shardingjdbc的数据源,创建时要使⽤ShardingDataSourceFactory它负责连接3个库:两个分表库:saleorder01,saleorder02, ⼀个⾮分表库:orderdb注意shardingjdbc所管理的数据源中,只能有⼀个⾮分表的库,⽽且要设置为默认库,否则不能正常访问5,HomeController.java@Controller@RequestMapping("/home")public class HomeController {@Resourceprivate GoodsMapper goodsMapper;@Resourceprivate OrderMapper orderMapper;@Resourceprivate OrderShardingMapper orderShardingMapper;//商品详情参数:商品id@GetMapping("/goodsinfo")@ResponseBody@DS("goodsdb")public Goods goodsInfo(@RequestParam(value="goodsid",required = true,defaultValue = "0") Long goodsId) {Goods goods = goodsMapper.selectOneGoods(goodsId);return goods;}//订单统计库,参数:订单id@GetMapping("/orderinfo")@ResponseBodypublic Order orderInfo(@RequestParam(value="orderid",required = true,defaultValue = "0") Long orderId) {Order order = orderMapper.selectOneOrder(orderId);return order;}//两个分表库中的订单列表@GetMapping("/orderlist")public String list(Model model, @RequestParam(value="currentPage",required = false,defaultValue = "1") Integer currentPage){ PageHelper.startPage(currentPage, 5);List<OrderSharding> orderList = orderShardingMapper.selectAllOrder();model.addAttribute("orderlist",orderList);PageInfo<OrderSharding> pageInfo = new PageInfo<>(orderList);model.addAttribute("pageInfo", pageInfo);System.out.println("------------------------size:"+orderList.size());return "order/list";}}实现到各个数据源的访问6,OrderShardingMapper.xml<?xml version="1.0" encoding="UTF-8" ?><!DOCTYPE mapper PUBLIC "-////DTD Mapper 3.0//EN" "/dtd/mybatis-3-mapper.dtd" ><mapper namespace="com.shardingmulti.demo.mapper.sharding.OrderShardingMapper"><select id="selectAllOrder" resultType="com.shardingmulti.demo.pojo.OrderSharding">select * from t_order order by orderId desc</select></mapper>7,OrderMapper.xml<?xml version="1.0" encoding="UTF-8" ?><!DOCTYPE mapperPUBLIC "-////DTD Mapper 3.0//EN""/dtd/mybatis-3-mapper.dtd"><mapper namespace="com.shardingmulti.demo.mapper.sharding.OrderMapper"><select id="selectOneOrder" parameterType="long" resultType="com.shardingmulti.demo.pojo.Order">select * from orderinfo where orderId=#{orderId}</select></mapper>8,GoodsMapper.xml<?xml version="1.0" encoding="UTF-8" ?><!DOCTYPE mapperPUBLIC "-////DTD Mapper 3.0//EN""/dtd/mybatis-3-mapper.dtd"><mapper namespace="com.shardingmulti.demo.mapper.goodsdb.GoodsMapper"><select id="selectOneGoods" parameterType="long" resultType="com.shardingmulti.demo.pojo.Goods">select * from goods where goodsId=#{goodsId}</select></mapper>五,测试效果1,访问goodsdbhttp://127.0.0.1:8080/home/goodsinfo?goodsid=3返回:2,访问orderdbhttp://127.0.0.1:8080/home/orderinfo?orderid=77返回:3,访问分表库:http://127.0.0.1:8080/home/orderlist/返回:4,从druid的监控页⾯查看创建的连接: http://127.0.0.1:8080/druid可以看到连接有共4个数据源:六,查看spring boot版本: . ____ _ __ _ _/\\ / ___'_ __ _ _(_)_ __ __ _ \ \ \ \( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \\\/ ___)| |_)| | | | | || (_| | ) ) ) )' |____| .__|_| |_|_| |_\__, | / / / /=========|_|==============|___/=/_/_/_/ :: Spring Boot :: (v2.3.3.RELEASE)。

ShardingSpherejdbc集成多数据源的实现步骤1. 引入ShardingSphere的依赖:在项目的pom.xml文件中添加ShardingSphere的相关依赖。

具体的依赖配置可以参考ShardingSphere的官方文档。

2.配置数据源:在项目的配置文件中配置数据源的相关信息。

可以配置多个数据源,并指定每个数据源的驱动类、连接地址、用户名、密码等信息。

3.配置分片规则:如果需要对数据进行分片,需要配置对应的分片规则。

分片规则定义了数据如何进行拆分和路由。

可以根据具体的需求选择合适的分片算法和键值。

4. 配置数据源和分片规则的映射关系:将每个数据源和对应的分片规则进行映射。

可以使用ShardingSphere提供的配置方式,也可以通过编程方式进行配置。

5. 编写DAO层代码:在DAO层编写数据库操作的代码。

可以使用ShardingSphere提供的API,也可以使用原生的JDBC API。

在编写代码时,需要指定使用哪个数据源进行操作。

6. 配置事务管理:如果需要使用事务,需要配置对应的事务管理器。

可以使用Spring框架提供的事务管理器,也可以使用其他第三方的事务管理器。

7.启动应用程序:启动应用程序,并进行测试。

可以通过执行数据库操作的代码,验证多数据源的集成是否成功。

总结起来,使用ShardingSphere集成多数据源的步骤包括:引入依赖、配置数据源、配置分片规则、配置数据源和分片规则的映射关系、编写DAO层代码、配置事务管理、启动应用程序。

通过这些步骤,可以实现多数据源的集成,并能够对数据进行分片和路由操作。

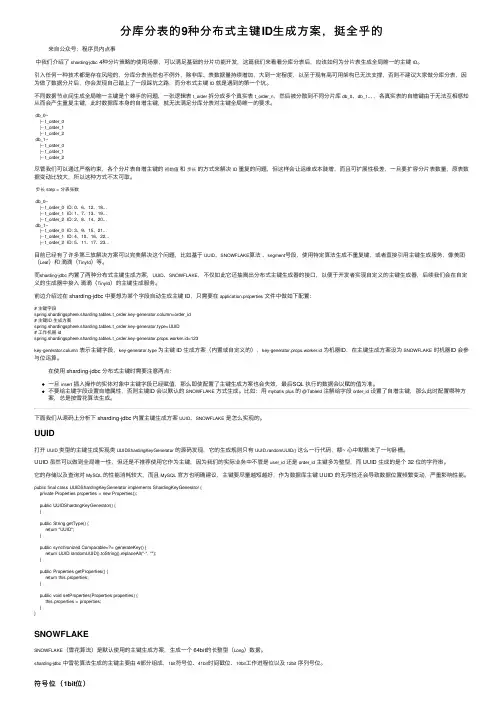

分库分表的9种分布式主键ID⽣成⽅案,挺全乎的来⾃公众号:程序员内点事中我们介绍了sharding-jdbc 4种分⽚策略的使⽤场景,可以满⾜基础的分⽚功能开发,这篇我们来看看分库分表后,应该如何为分⽚表⽣成全局唯⼀的主键ID。

引⼊任何⼀种技术都是存在风险的,分库分表当然也不例外,除⾮库、表数据量持续增加,⼤到⼀定程度,以⾄于现有⾼可⽤架构已⽆法⽀撑,否则不建议⼤家做分库分表,因为做了数据分⽚后,你会发现⾃⼰踏上了⼀段踩坑之路,⽽分布式主键ID就是遇到的第⼀个坑。

不同数据节点间⽣成全局唯⼀主键是个棘⼿的问题,⼀张逻辑表t_order拆分成多个真实表t_order_n,然后被分散到不同分⽚库db_0、db_1... ,各真实表的⾃增键由于⽆法互相感知从⽽会产⽣重复主键,此时数据库本⾝的⾃增主键,就⽆法满⾜分库分表对主键全局唯⼀的要求。

db_0--|-- t_order_0|-- t_order_1|-- t_order_2db_1--|-- t_order_0|-- t_order_1|-- t_order_2尽管我们可以通过严格约束,各个分⽚表⾃增主键的初始值和步长的⽅式来解决ID重复的问题,但这样会让运维成本陡增,⽽且可扩展性极差,⼀旦要扩容分⽚表数量,原表数据变动⽐较⼤,所以这种⽅式不太可取。

步长 step = 分表张数db_0--|-- t_order_0 ID: 0、6、12、18...|-- t_order_1 ID: 1、7、13、19...|-- t_order_2 ID: 2、8、14、20...db_1--|-- t_order_0 ID: 3、9、15、21...|-- t_order_1 ID: 4、10、16、22...|-- t_order_2 ID: 5、11、17、23...⽬前已经有了许多第三放解决⽅案可以完美解决这个问题,⽐如基于UUID、SNOWFLAKE算法、segment号段,使⽤特定算法⽣成不重复键,或者直接引⽤主键⽣成服务,像美团(Leaf)和滴滴(TinyId)等。

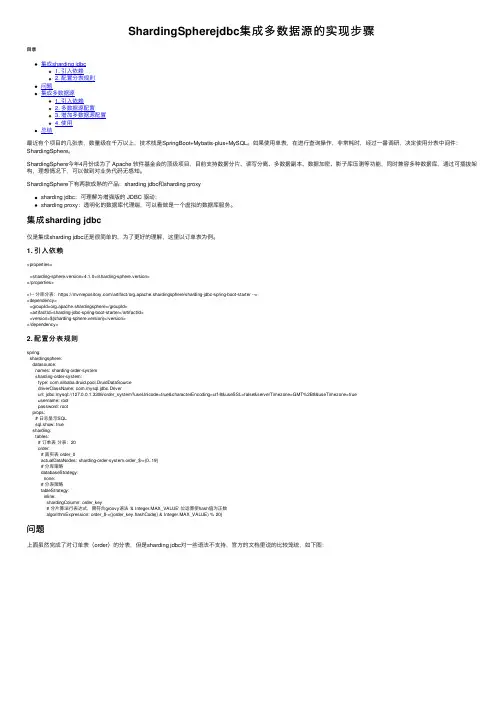

ShardingSpherejdbc集成多数据源的实现步骤⽬录集成sharding jdbc1. 引⼊依赖2. 配置分表规则问题集成多数据源1. 引⼊依赖2. 多数据源配置3. 增加多数据源配置4. 使⽤总结最近有个项⽬的⼏张表,数量级在千万以上,技术栈是SpringBoot+Mybatis-plus+MySQL。

如果使⽤单表,在进⾏查询操作,⾮常耗时,经过⼀番调研,决定使⽤分表中间件:ShardingSphere。

ShardingSphere今年4⽉份成为了 Apache 软件基⾦会的顶级项⽬,⽬前⽀持数据分⽚、读写分离、多数据副本、数据加密、影⼦库压测等功能,同时兼容多种数据库,通过可插拔架构,理想情况下,可以做到对业务代码⽆感知。

ShardingSphere下有两款成熟的产品:sharding jdbc和sharding proxysharding jdbc:可理解为增强版的 JDBC 驱动;sharding proxy:透明化的数据库代理端,可以看做是⼀个虚拟的数据库服务。

集成sharding jdbc仅是集成sharding jdbc还是很简单的,为了更好的理解,这⾥以订单表为例。

1. 引⼊依赖<properties><sharding-sphere.version>4.1.0</sharding-sphere.version></properties><!-- 分库分表:https:///artifact/org.apache.shardingsphere/sharding-jdbc-spring-boot-starter --><dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId><version>${sharding-sphere.version}</version></dependency>2. 配置分表规则spring:shardingsphere:datasource:names: sharding-order-systemsharding-order-system:type: com.alibaba.druid.pool.DruidDataSourcedriverClassName: com.mysql.jdbc.Driverurl: jdbc:mysql://127.0.0.1:3306/order_system?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=GMT%2B8&useTimezone=trueusername: rootpassword: rootprops:# ⽇志显⽰SQLsql.show: truesharding:tables:# 订单表分表:20order:# 真实表 order_0actualDataNodes: sharding-order-system.order_$->{0..19}# 分库策略databaseStrategy:none:# 分表策略tableStrategy:inline:shardingColumn: order_key# 分⽚算法⾏表达式,需符合groovy语法 '& Integer.MAX_VALUE' 位运算使hash值为正数algorithmExpression: order_$->{(order_key.hashCode() & Integer.MAX_VALUE) % 20}问题上⾯虽然完成了对订单表(order)的分表,但是sharding jdbc对⼀些语法不⽀持,官⽅的⽂档⾥说的⽐较笼统,如下图:像insert into ... select这些语法是不⽀持的,**⽽且对于没有涉及到分表的语句,也有同样的限制。

shardingsphere 原理

ShardingSphere 是一个开源的分布式数据库中间件解决方案。

它基于分布式数据库技术,旨在简化分布式数据库架构的开发和维护工作,使用户可以根据业务需要自行选择分片策略。

分布式数据库有三个组件组成:分片、路由和管理。

其中,分片是将大容量数据集拆分成小块被称为分片,以减轻单台服务器的负载压力;路由用于将业务请求转发至正确的分片;管理则是对分布式数据库进行注册、任务管理、故障排查等。

ShardingSphere 针对上述三个组件开发了三个子系统:Sharding-JDBC、Sharding-Proxy和Sharding-Sidecar,每个子系统都负责其对应的功能:

1. Sharding-JDBC 主要用于配置JDBC 连接和实现分片策略;

2. Sharding-Proxy 是一个数据库代理,用于将客户端的请求路由到正确的应用服务器,并实现读写分离;

3. Sharding-Sidecar 是一个容器管理层,负责管理和维护分片,分片注册、拆分、数据同步等管理任务。

此外,ShardingSphere 还提供了以上三个子系统的统一入口——ShardingSphere Console,用于管理和监控分布式数据库。

如何使用MySQL进行数据分片与分布式部署引言:随着互联网应用的不断发展和用户量的不断增加,传统的单一MySQL数据库架构已经无法满足高并发读写的需求。

为了解决这个问题,分片技术和分布式部署成为了一种常用的解决方案。

本文将介绍如何使用MySQL进行数据分片与分布式部署,帮助读者更好地理解和应用这些技术。

一、什么是数据分片数据分片是将大数据集合切分为较小的数据片段,分布存储在不同的数据库节点上。

每个数据片段又称为一个分片,不同的分片可以存储在不同的数据库服务器上。

通过数据分片,实现了数据的横向扩展,提高了系统的并发读写能力。

1.1 分片策略在进行数据分片之前,需要选择合适的分片策略。

常用的分片策略有以下几种:1.按照主键范围进行分片:将主键按照一定规则进行切分,例如按照主键范围进行分片,每个分片存储一定范围内的主键数据。

2.按照哈希值进行分片:将主键进行哈希计算,根据哈希值进行分片。

哈希算法要求分片的数据分布均匀,避免出现热点数据集中在某个分片的情况。

3.按照业务属性进行分片:根据业务属性对数据进行分类,并将相同属性的数据存储在同一个分片中。

这种分片策略适用于需要频繁进行数据查询的场景。

1.2 分片键与非分片键在进行数据分片时,需要将数据根据某个字段进行切分为不同的分片。

这个字段被称为分片键。

除了分片键之外的字段称为非分片键。

分片键应该具备以下属性:1.唯一性:每个分片键的取值必须唯一,避免数据在分片间重复存储。

2.分布均匀:分片键的取值应该分布均匀,避免数据倾斜导致某个分片的负载过重。

二、分布式部署分布式部署是将数据分片部署在不同的数据库节点上,通过分片和节点间的协作来完成数据的读写操作。

在分布式部署中,常用的架构有主从复制架构、主主复制架构和多主架构。

2.1 主从复制架构主从复制架构是最常见的分布式部署方式之一。

其中,一个节点(主节点)负责处理写操作,而其他一或多个节点(从节点)负责处理读操作。

shardingsphere通过注解实现分库ShardingSphere 是一款非常流行的分布式数据库中间件,它提供了灵活且强大的数据库分库分表功能。

ShardingSphere 的分库功能可以通过注解实现,使得开发人员可以在代码层面轻松地指定数据分库的逻辑。

ShardingSphere 的注解实现分库功能主要基于 Java 语言的注解功能。

因此,在使用 ShardingSphere 进行分库之前,首先需要配置相关的依赖项。

首先,在 Maven 中添加 ShardingSphere 的相关依赖项:```xml<dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId> <version>5.0.0-alpha</version></dependency>```接下来,在 Spring Boot 中配置 ShardingSphere 的数据源和分库规则。

在配置文件中添加以下内容:```yaml#数据库连接配置#分库规则配置上述配置中,我们定义了两个数据源 ds0 和 ds1,分别对应了两个物理数据库。

接着,我们定义了分库规则,使用了内联分片算法,根据user_id 的奇偶性将数据分到不同的数据源中。

如上所示,当 user_id 为偶数时,数据将存储到 ds0 数据源中;当 user_id 为奇数时,数据将存储到 ds1 数据源中。

```javapublic class UserInfoprivate Integer id;private Integer userId;//...``````javapublic class UserRepositoryprivate EntityManager entityManager;public int save(UserInfo userInfo)entityManager.persist(userInfo);entityManager.flush(;return userInfo.getId(;}```通过上述的配置和代码,我们就可以实现数据库的分库功能。

shardingsphere实现springboot集成多数据源分库分表实现⾸先引⼊依赖<dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId><version>4.0.0-RC1</version></dependency>进⾏配置package mon.configuration;import com.alibaba.druid.pool.DruidDataSource;import ng.StringUtil;import mon.collect.Lists;import lombok.Data;import org.apache.shardingsphere.api.config.sharding.ShardingRuleConfiguration;import org.apache.shardingsphere.api.config.sharding.TableRuleConfiguration;import org.apache.shardingsphere.api.config.sharding.strategy.InlineShardingStrategyConfiguration;import org.apache.shardingsphere.shardingjdbc.api.ShardingDataSourceFactory;import org.slf4j.Logger;import org.slf4j.LoggerFactory;import javax.sql.DataSource;import java.sql.SQLException;import java.util.HashMap;import java.util.Map;import java.util.Properties;/*** @author zhangsy* @date 2021/8/2 9:20* @description 分库分表数据库配置*/@Datapublic class ShardingDataSourceConfig {private final static Logger log = LoggerFactory.getLogger(ShardingDataSourceConfig.class);public String driverClassName;public String url;public String username;public String password;public int initialSize;public int minIdle;public int maxActive;public long maxWait;public long timeBetweenEvictionRunsMillis;public long minEvictableIdleTimeMillis;public String validationQuery;public Boolean testOnBorrow;public Boolean testOnReturn;public Boolean testWhileIdle;public int validationQueryTimeout;public Boolean keepAlive;public Boolean removeAbandoned;public int removeAbandonedTimeout;public Boolean logAbandoned;public Boolean poolPreparedStatements;public int maxPoolPreparedStatementPerConnectionSize;public String filters;public String connectionProperties;private String dbNames;private Map<String, DataSource> dataSourceMap = new HashMap<>();public DataSource buildDataSource(){initDataSourceMap();String dbName = "detail";// 具体分库分表策略,按什么规则来分ShardingRuleConfiguration conf = new ShardingRuleConfiguration();// table ruleTableRuleConfiguration tableRule = new TableRuleConfiguration("m_user_group_detail", "detail.m_user_group_detail_$->{1..12}");/* // 分表策略ShardingStrategyConfiguration tableShardingStrategyConfig = new StandardShardingStrategyConfiguration("user_group_filter_time", new MyTablePreciseShardingAlgorithm());tableRule.setTableShardingStrategyConfig(tableShardingStrategyConfig);*/InlineShardingStrategyConfiguration inlineShardingStrategyConfiguration = new InlineShardingStrategyConfiguration("user_group_filter_time","m_user_group_detail_$->{user_group_filter_time}"); tableRule.setTableShardingStrategyConfig(inlineShardingStrategyConfiguration);conf.setTableRuleConfigs(Lists.newArrayList(tableRule));Properties props = new Properties();props.put("sql.show", false);DataSource dataSource = null;try {dataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap, conf, props);} catch (SQLException e) {log.error("数据源初始化失败", e);}return dataSource;}// @Beanpublic void initDataSourceMap() {dbNames = "detail";for (String name : dbNames.split(",")) {DruidDataSource druidDataSource = new DruidDataSource();druidDataSource.setDriverClassName(driverClassName);druidDataSource.setUrl(url);druidDataSource.setUsername(username);druidDataSource.setPassword(password);if(initialSize > 0){druidDataSource.setInitialSize(initialSize);}if(minIdle > 0){druidDataSource.setMinIdle(minIdle);}if(maxActive > 0){druidDataSource.setMaxActive(maxActive);}if(maxWait > 0){druidDataSource.setMaxWait(maxWait);}if(timeBetweenEvictionRunsMillis > 0){druidDataSource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);}if(minEvictableIdleTimeMillis > 0){druidDataSource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);}if(validationQueryTimeout > 0){druidDataSource.setValidationQueryTimeout(validationQueryTimeout);}if(removeAbandonedTimeout > 0){druidDataSource.setRemoveAbandonedTimeout(removeAbandonedTimeout);}if(maxPoolPreparedStatementPerConnectionSize > 0){druidDataSource.setMaxPoolPreparedStatementPerConnectionSize(maxPoolPreparedStatementPerConnectionSize); }if(StringUtil.isNotBlank(validationQuery)){druidDataSource.setValidationQuery(validationQuery);}if(StringUtil.isNotBlank(connectionProperties)){druidDataSource.setConnectionProperties(connectionProperties);}if(testOnReturn != null){druidDataSource.setTestOnReturn(testOnReturn);}if(testWhileIdle != null){druidDataSource.setTestWhileIdle(testWhileIdle);}if(keepAlive != null){druidDataSource.setKeepAlive(keepAlive);}if(removeAbandoned != null){druidDataSource.setRemoveAbandoned(removeAbandoned);}if(logAbandoned != null){druidDataSource.setLogAbandoned(logAbandoned);}if(poolPreparedStatements != null){druidDataSource.setPoolPreparedStatements(poolPreparedStatements);}try {if(StringUtil.isNotBlank(filters)){druidDataSource.setFilters(filters);}dataSourceMap.put(name, druidDataSource);} catch (SQLException e) {e.printStackTrace();}}}}ymal配置s = detailspring.shardingsphere.datasource.detail.type = com.alibaba.druid.pool.DruidDataSourcespring.shardingsphere.datasource.detail.driverClassName = com.mysql.cj.jdbc.Driverspring.shardingsphere.datasource.detail.url = jdbc:mysql://localhost:3306/****?useSSL=false&useUnicode=true&characterEncoding=utf8&serverTimezone=Asia/Shanghaiername = rootspring.shardingsphere.datasource.detail.password = rootspring.shardingsphere.datasource.detail.filters = stat,slf4j,wallspring.shardingsphere.datasource.detail.initialSize = 5spring.shardingsphere.datasource.detail.keepAlive = truespring.shardingsphere.datasource.detail.maxActive = 10spring.shardingsphere.datasource.detail.maxWait = 10000spring.shardingsphere.datasource.detail.minEvictableIdleTimeMillis = 300000spring.shardingsphere.datasource.detail.minIdle = 5spring.shardingsphere.datasource.detail.removeAbandoned = truespring.shardingsphere.datasource.detail.removeAbandonedTimeout = 80spring.shardingsphere.datasource.detail.testOnBorrow = truespring.shardingsphere.datasource.detail.testOnReturn = falsespring.shardingsphere.datasource.detail.testWhileIdle = truespring.shardingsphere.datasource.detail.timeBetweenEvictionRunsMillis = 60000spring.shardingsphere.datasource.detail.validationQuery = SELECT 1spring.shardingsphere.datasource.detail.validationQueryTimeout = 1spring.shardingsphere.sharding.tables.m_user_group_detail.logic-table = detail.m_user_group_detailspring.shardingsphere.sharding.tables.m_user_group_detail.actual-data-nodes = detail.m_user_group_detail_0$->{1..9},detail.m_user_group_detail_$->{10..12} spring.shardingsphere.sharding.tables.m_user_group_detail.table-strategy.inline.sharding-column = user_group_filter_timespring.shardingsphere.sharding.tables.m_user_group_detail.table-strategy.inline.algorithm-expression = m_user_group_detail_$->{user_group_filter_time} spring.shardingsphere.sharding.default-data-source-name = detailspring.shardingsphere.sharding.binding-tables = m_user_group_detailspring.shardingsphere.props.sql.show = trueconfig配置package com.epay.dtc.ms.cust.provider.configuration.mysql.detail;import mon.configuration.ShardingDataSourceConfig;import org.apache.ibatis.session.SqlSessionFactory;import org.mybatis.spring.SqlSessionFactoryBean;import org.mybatis.spring.SqlSessionTemplate;import org.mybatis.spring.annotation.MapperScan;import org.springframework.beans.factory.annotation.Qualifier;import org.springframework.boot.context.properties.ConfigurationProperties;import org.springframework.context.annotation.Bean;import org.springframework.core.io.support.PathMatchingResourcePatternResolver;import ponent;import javax.sql.DataSource;/*** @author zh* @date 2021/07/06 09:48* @description*/@Component@MapperScan(basePackages = "mon.mapper.mysql.detail", sqlSessionFactoryRef = "detailSqlSessionFactory")@ConfigurationProperties(prefix = "spring.shardingsphere.datasource.detail")public class DetailDataSourceConfig extends ShardingDataSourceConfig {private String dbNames;@Bean("detailDataSource")public DataSource getDetailDataSource(){return buildDataSource();}@Bean("detailSqlSessionFactory")public SqlSessionFactory detailSqlSessionFactory(@Qualifier("detailDataSource") DataSource dataSource) throws Exception {SqlSessionFactoryBean bean = new SqlSessionFactoryBean();bean.setDataSource(dataSource);bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath*:mapper/mysql/detail/*.xml"));return bean.getObject();}@Bean("detailSqlSessionTemplate")public SqlSessionTemplate detailSqlSessionTemplate(@Qualifier("detailSqlSessionFactory") SqlSessionFactory sqlSessionFactory){return new SqlSessionTemplate(sqlSessionFactory);}}。

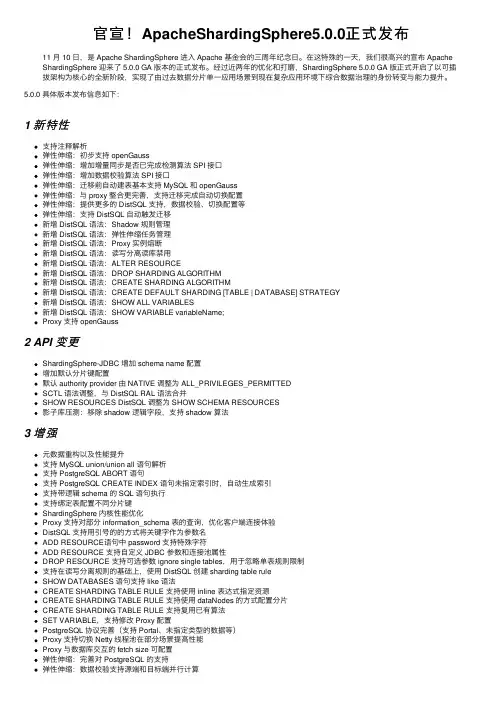

官宣!ApacheShardingSphere5.0.0正式发布11 ⽉ 10 ⽇,是 Apache ShardingSphere 进⼊ Apache 基⾦会的三周年纪念⽇。

在这特殊的⼀天,我们很⾼兴的宣布 ApacheShardingSphere 迎来了 5.0.0 GA 版本的正式发布。

经过近两年的优化和打磨,ShardingSphere 5.0.0 GA 版正式开启了以可插拔架构为核⼼的全新阶段,实现了由过去数据分⽚单⼀应⽤场景到现在复杂应⽤环境下综合数据治理的⾝份转变与能⼒提升。

5.0.0 具体版本发布信息如下:1 新特性⽀持注释解析弹性伸缩:初步⽀持 openGauss弹性伸缩:增加增量同步是否已完成检测算法 SPI 接⼝弹性伸缩:增加数据校验算法 SPI 接⼝弹性伸缩:迁移前⾃动建表基本⽀持 MySQL 和 openGauss弹性伸缩:与 proxy 整合更完善,⽀持迁移完成⾃动切换配置弹性伸缩:提供更多的 DistSQL ⽀持,数据校验、切换配置等弹性伸缩:⽀持 DistSQL ⾃动触发迁移新增 DistSQL 语法:Shadow 规则管理新增 DistSQL 语法:弹性伸缩任务管理新增 DistSQL 语法:Proxy 实例熔断新增 DistSQL 语法:读写分离读库禁⽤新增 DistSQL 语法:ALTER RESOURCE新增 DistSQL 语法:DROP SHARDING ALGORITHM新增 DistSQL 语法:CREATE SHARDING ALGORITHM新增 DistSQL 语法:CREATE DEFAULT SHARDING [TABLE | DATABASE] STRATEGY新增 DistSQL 语法:SHOW ALL VARIABLES新增 DistSQL 语法:SHOW VARIABLE variableName;Proxy ⽀持 openGauss2 API 变更ShardingSphere-JDBC 增加 schema name 配置增加默认分⽚键配置默认 authority provider 由 NATIVE 调整为 ALL_PRIVILEGES_PERMITTEDSCTL 语法调整,与 DistSQL RAL 语法合并SHOW RESOURCES DistSQL 调整为 SHOW SCHEMA RESOURCES影⼦库压测:移除 shadow 逻辑字段,⽀持 shadow 算法3 增强元数据重构以及性能提升⽀持 MySQL union/union all 语句解析⽀持 PostgreSQL ABORT 语句⽀持 PostgreSQL CREATE INDEX 语句未指定索引时,⾃动⽣成索引⽀持带逻辑 schema 的 SQL 语句执⾏⽀持绑定表配置不同分⽚键ShardingSphere 内核性能优化Proxy ⽀持对部分 information_schema 表的查询,优化客户端连接体验DistSQL ⽀持⽤引号的的⽅式将关键字作为参数名ADD RESOURCE语句中 password ⽀持特殊字符ADD RESOURCE ⽀持⾃定义 JDBC 参数和连接池属性DROP RESOURCE ⽀持可选参数 ignore single tables,⽤于忽略单表规则限制⽀持在读写分离规则的基础上,使⽤ DistSQL 创建 sharding table ruleSHOW DATABASES 语句⽀持 like 语法CREATE SHARDING TABLE RULE ⽀持使⽤ inline 表达式指定资源CREATE SHARDING TABLE RULE ⽀持使⽤ dataNodes 的⽅式配置分⽚CREATE SHARDING TABLE RULE ⽀持复⽤已有算法SET VARIABLE,⽀持修改 Proxy 配置PostgreSQL 协议完善(⽀持 Portal、未指定类型的数据等)Proxy ⽀持切换 Netty 线程池在部分场景提⾼性能Proxy 与数据库交互的 fetch size 可配置弹性伸缩:完善对 PostgreSQL 的⽀持弹性伸缩:数据校验⽀持源端和⽬标端并⾏计算4 重构重构 SingleTable 功能以⽀持 Encrypt 多数据源重构 examples,调整模块结构调整注册中⼼节点持久化数据结构弹性伸缩:重构增量同步是否已完成检测算法默认实现弹性伸缩:重构数据校验算法默认实现弹性伸缩:移除 HTTP API 和独⽴打包影⼦库压测:去除 DML 重写流程影⼦库压测:⽀持 DDL 路由5 漏洞修复修复 INTERVAL 分⽚算法问题修复 SHOW INDEX FROM TABLE FROM TABLE 语句改写异常修复 Encrypt 多表关联改写异常修复⼦查询 index out of range 异常修复 Oracle 分页异常修复 Sharding 场景下未配置 KeyGenerateStrategy 时改写异常修复 Oracle ⽅⾔⼤⼩写导致 Federation 执⾏引擎异常修复 Sharding/Encrypt 整合使⽤时改写异常修复 Oracle 元数据加载异常的问题修复 SHOW RESOURCES 语句⽆法展⽰⾃定义属性的问题修复 SQL 执⾏异常不抛出的问题修复 Etcd ⽆法发送节点新增事件修复 PostgreSQL Proxy 查询结果可能丢失包含 null 值的数据⾏的问题修复 PostgreSQL 元数据列顺序可能错乱的问题修复 Proxy 字符编码可能不正确的问题下载链接:更新⽇志:项⽬地址:6 社区建设Apache ShardingSphere 5.0.0 版本的发布离不开社区⽤户的⽀持和贡献,期间共有 168 位 Contributor 提交了 4468 个 PR,助⼒ShardingSphere 5.0.0 版本的全⼒发布,感谢社区伙伴们的⼤⼒⽀持。

SpringCloud下结合shardingSphere进⾏分库分表(实现Sharding。

通过ShardingAlgorithm的实现,可以进⼀步发现分⽚策略的灵活和强⼤;可以实现⼀致性hash算法、按时间分⽚算法、以及mod算法等;更进⼀步,可以对同⼀个表按业务需求实现不同的分⽚算法,⽐如原来按年分⽚的业务表,⽐如随着业务量的扩展,需要提⾼分⽚频率,可是⼜不想进⾏⼤量历史数据迁移,可以在某⼀时刻开始按⽉或者按⽇分⽚;当然前提是要维护⼀个相对复杂的分⽚算法;下⾯展⽰⼀个⾃定义分⽚算法原型,留作业务扩展;业务模型和上⼀篇的inline表达式⼀样,下⾯进⾏核⼼代码说明:1)核⼼pom⽂件内容<dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId><scope>runtime</scope></dependency><dependency><groupId>com.alibaba</groupId><artifactId>druid-spring-boot-starter</artifactId><version>1.1.10</version></dependency><dependency><groupId>io.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId><version>3.1.0</version></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-data-jpa</artifactId></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-web</artifactId></dependency>2)核⼼yml内容:sharding:jdbc:datasource:names: master0,master0salve0,master0slave1,master1,master1slave0,master1slave1master0:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://localhost:3306/mcspcsharding0?useUnicode=true&character_set_server=utf8mb4&useSSL=false&serverTimezone=GMT%2B8username: rootpassword: rootmaster0salve0:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://localhost:3306/mcspcsharding0s0?useUnicode=true&character_set_server=utf8mb4&useSSL=false&serverTimezone=GMT%2B8username: rootpassword: rootmaster0slave1:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://localhost:3306/mcspcsharding0s1?useUnicode=true&character_set_server=utf8mb4&useSSL=false&serverTimezone=GMT%2B8username: rootpassword: rootmaster1:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://localhost:3306/mcspcsharding1?useUnicode=true&character_set_server=utf8mb4&useSSL=false&serverTimezone=GMT%2B8username: rootpassword: rootmaster1slave0:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://localhost:3306/mcspcsharding1s0?useUnicode=true&character_set_server=utf8mb4&useSSL=false&serverTimezone=GMT%2B8username: rootpassword: rootmaster1slave1:type: com.alibaba.druid.pool.DruidDataSourceurl: jdbc:mysql://localhost:3306/mcspcsharding1s1?useUnicode=true&character_set_server=utf8mb4&useSSL=false&serverTimezone=GMT%2B8username: rootpassword: rootconfig:sharding:tables:mc_member:actual-nodes: mcspcsharding$->{0..1}.mc_member$->{0..1}database-strategy:standard:sharding-column: genderprecise-algorithm-class-name: com.chong.mcspcshardingdbtable.sharding.DbShardingAlgorithmtable-strategy:complex:sharding-columns: idalgorithm-class-name: com.chong.mcspcshardingdbtable.sharding.MemberTblComplexKeyShardingbinding-tables: mc_member # 多个时逗号隔开broadcast-tables: mc_mastermaster-slave-rules:ms0:master-data-source-name: master0slave-data-source-names: master0salve0,master0slave1ms1:master-data-source-name: master1slave-data-source-names: master1slave0,master1slave1props:sql:show: true3)database数据源的sharding算法,实现了PreciseShardingAlgorithmpackage com.chong.mcspcshardingdbtable.sharding;import io.shardingsphere.api.algorithm.sharding.PreciseShardingValue;import io.shardingsphere.api.algorithm.sharding.standard.PreciseShardingAlgorithm;import ponent;import java.util.Collection;@Componentpublic class DbShardingAlgorithm implements PreciseShardingAlgorithm<Integer> {@Overridepublic String doSharding(Collection<String> collection, PreciseShardingValue<Integer> preciseShardingValue) { Integer index = preciseShardingValue.getValue() % 2;for (String dataSourceName : collection) {if (dataSourceName.endsWith(index + "")) {return dataSourceName;}}throw new UnsupportedOperationException();}}4)table的sharding算法,实现了ComplexKeysShardingAlgorithmpackage com.chong.mcspcshardingdbtable.sharding;import mon.collect.Range;import io.shardingsphere.api.algorithm.sharding.ListShardingValue;import io.shardingsphere.api.algorithm.sharding.PreciseShardingValue;import io.shardingsphere.api.algorithm.sharding.RangeShardingValue;import io.shardingsphere.api.algorithm.sharding.ShardingValue;import plexKeysShardingAlgorithm;import ponent;import java.util.ArrayList;import java.util.Collection;import java.util.List;/*** 通过复合分⽚键进⾏演⽰,覆盖Precise,Range,List三种类型的ShardingValue。

在多数据源中对部分数据表使⽤shardingsphere进⾏分库分表背景近期在项⽬中需要使⽤多数据源,其中有⼀些表的数据量⽐较⼤,需要对其进⾏分库分表;⽽其他数据表数据量⽐较正常,单表就可以。

项⽬中可能使⽤其他组的数据源数据,因此需要多数据源⽀持。

经过调研多数据源配置⽐较⽅便。

在该项⽬中分库分表的策略⽐较简单,仅根据⼀个字段分就可以,因此分库分表⽅案选⽤⽐较流⾏⽅便易⽤的 sharding-jdbc需要实现的⽬标是根据学⽣姓名字段 student_name 进⾏分表,但是不需要分库。

数据表是student_hist0 - student_hist9引⼊ sharding-jdbc maven 依赖<dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-core</artifactId><version>4.1.1</version></dependency>数据源配置⽂件spring:application:name: student-service-providerjackson:date-format: yyyy-MM-dd HH:mm:sstime-zone: GMT+8defaultPropertyInclusion: non_nulldeserialization:FAIL_ON_UNKNOWN_PROPERTIES: false#对返回的时间进⾏格式化datasource:hikari:student:url: jdbc:mysql://127.0.0.1:3306/student_service?allowMultiQueries=true&useUnicode=true&characterEncoding=UTF-8&useSSL=false&useTimezone=true&serverTimezone=GMT%2 username: rootpassword: root123log1:url: jdbc:mysql://127.0.0.1:3306/log1?allowMultiQueries=true&useUnicode=true&characterEncoding=UTF-8&useSSL=falseusername: rootpassword: root123log2:url: jdbc:mysql://127.0.0.1:3306/log2?allowMultiQueries=true&useUnicode=true&characterEncoding=UTF-8&useSSL=falseusername: rootpassword: root123配置多数据源代码DataSourceProperties 数据源import com.zaxxer.hikari.HikariDataSource;import lombok.Data;import org.springframework.boot.context.properties.ConfigurationProperties;import org.springframework.context.annotation.Configuration;@Data@Configuration@ConfigurationProperties(prefix = "spring.datasource.hikari")public class DataSourceProperties {private HikariDataSource student;private HikariDataSource log1;private HikariDataSource log2;}DynamicDataSource 动态数据源import org.springframework.jdbc.datasource.lookup.AbstractRoutingDataSource;public class DynamicDataSource extends AbstractRoutingDataSource {/*当前据源名称*/private static final ThreadLocal<String> dataSourceContextHolder = new ThreadLocal<>();/*设置数据源名称*/public static void setDataSourceName(String dataSourceName) {dataSourceContextHolder.set(dataSourceName);}/*获取据源名称*/public static String getDataSourceName() {return dataSourceContextHolder.get();}/*清除当数据源名称*/public static void clearDataSource() {dataSourceContextHolder.remove();}@Overrideprotected Object determineCurrentLookupKey() {return getDataSourceName();}}MultiDataSource 多数据源标记import ng.annotation.ElementType;import ng.annotation.Retention;import ng.annotation.RetentionPolicy;import ng.annotation.Target;@Retention(RetentionPolicy.RUNTIME)@Target({ElementType.METHOD})public @interface MultiDataSource {String value() default DataSourceConfig.DEFAULT_DATASOURCE_NAME;}重点来了,因为是根据表的某⼀个字段进⾏分表,该字段是⼀个字符串类型,因此需要想根据字符串的⼀致性hash码算出在哪张表上。

Java基于ShardingSphere实现分库分表的实例详解⽬录⼀、简介⼆、项⽬使⽤1、引⼊依赖2、数据库3、实体类4、mapper5、yml配置6、测试类7、数据⼀、简介 Apache ShardingSphere 是⼀套开源的分布式数据库解决⽅案组成的⽣态圈,它由 JDBC、Proxy 和 Sidecar(规划中)这 3 款既能够独⽴部署,⼜⽀持混合部署配合使⽤的产品组成。

它们均提供标准化的数据⽔平扩展、分布式事务和分布式治理等功能,可适⽤于如 Java 同构、异构语⾔、云原⽣等各种多样化的应⽤场景。

Apache ShardingSphere 旨在充分合理地在分布式的场景下利⽤关系型数据库的计算和存储能⼒,⽽并⾮实现⼀个全新的关系型数据库。

关系型数据库当今依然占有巨⼤市场份额,是企业核⼼系统的基⽯,未来也难于撼动,我们更加注重在原有基础上提供增量,⽽⾮颠覆。

Apache ShardingSphere 5.x 版本开始致⼒于可插拔架构,项⽬的功能组件能够灵活的以可插拔的⽅式进⾏扩展。

⽬前,数据分⽚、读写分离、数据加密、影⼦库压测等功能,以及 MySQL、PostgreSQL、SQLServer、Oracle 等 SQL 与协议的⽀持,均通过插件的⽅式织⼊项⽬。

开发者能够像使⽤积⽊⼀样定制属于⾃⼰的独特系统。

Apache ShardingSphere ⽬前已提供数⼗个 SPI 作为系统的扩展点,仍在不断增加中。

ShardingSphere 已于2020年4⽉16⽇成为 Apache 软件基⾦会的顶级项⽬。

⼆、项⽬使⽤1、引⼊依赖<dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId><version>4.0.0-RC2</version></dependency>2、数据库3、实体类@Datapublic class User {private Integer id;private String name;private Integer age;}4、mapper这⾥⽤的Mybatis-plus 3.4版本。

shardingsphere整合分表操作shardingsphere整合分表操作项⽬整合1.添加依赖<!-- 数据库分库分表的依赖配置 start--><dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId><version>4.1.1</version></dependency><!-- MYSQL数据库的依赖配置 end-->2.添加分表配置#spring配置spring:#分库分表shardingsphere:datasource:names: ds0#客户数据库ds0:driver-class-name: com.mysql.jdbc.Driver#druid数据库连接池配置 urlurl: jdbc:mysql://10.249.2.163:3306/agentwapcustomers?useUnicode=true&characterEncoding=UTF-8&useSSL=false#HiKariCP数据库连接池配置 jdbc-url# jdbc-url: jdbc:mysql://10.249.2.163:3306/agentwapcustomers?useUnicode=true&characterEncoding=UTF-8&useSSL=false password: M@EeO3m2type: com.alibaba.druid.pool.DruidDataSourceusername: rootsharding:tables:dw_cust_info:actual-data-nodes: ds0.dw_cust_info$->{0..1}key-generator:column: idtype: SNOWFLAKEtable-strategy:inline:algorithm-expression: dw_cust_info$->{id % 2}sharding-column: id#使⽤⾃定义类进⾏分表策略# standard:# sharding-column: create_date# precise-algorithm-class-name: com.allianity.utils.ShardingAlgorithm3.去除⾃动配置@SpringBootApplication(exclude = {DataSourceAutoConfiguration.class,DruidDataSourceAutoConfigure.class})注意点:如果使⽤了PageHelper分页功能需要排除分页⾃动配置PageHelperAutoConfiguration.class@SpringBootApplication(exclude = {DruidDataSourceAutoConfigure.class,DataSourceAutoConfiguration.class, PageHelperAutoConfiguration.class})结果演⽰。

Java中使⽤Shardingsphere-JDBC进⾏分库分表的使⽤⽅法(精简)1.pom.xml中添加依赖<dependency><groupId>org.apache.shardingsphere</groupId><artifactId>sharding-jdbc-spring-boot-starter</artifactId><version>4.1.1</version></dependency><!--相关基础依赖--><dependency><groupId>com.alibaba</groupId><artifactId>druid</artifactId><version>1.1.10</version></dependency><dependency><groupId>mysql</groupId><artifactId>mysql-connector-java</artifactId></dependency><dependency><groupId>com.baomidou</groupId><artifactId>mybatis-plus-boot-starter</artifactId><version>3.3.1</version></dependency>2. application.yml中添加相关配2.1 不分库分表的基础连接配置 spring: shardingsphere: datasource: names: s1 s1: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://localhost:3306/mydb?serverTimezone=GMT%2B8 username: root password: root props: sql: #是否打印sql show: true*注意:这样配的话,跟操作单库单表的数据源配置效果⼀样2.2 不分库但分表的连接配置 spring: shardingsphere: datasource: names: s1 s1: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://localhost:3306/mydb?serverTimezone=GMT%2B8 username: root password: root props: sql: show: true sharding: #分表 tables: #参与分表的表名(虚拟表名) test: #分⼏个表,这⾥是两个,test_1和test_2 actual-data-nodes: s1.test_$->{1..2} key-generator: #主键 #主键填值,雪花 type: SNOWFLAKE table-strategy: #主键分表路由策略 inline: sharding-column: tid #这⾥采⽤偶数键值去test_1表,奇数键值去test_2表 algorithm-expression: test_$->{tid%2+1}2.3 分库分表的连接配置 spring: shardingsphere: datasource: names: s1,s2 s1: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://localhost:3306/mydb1?serverTimezone=GMT%2B8 username: root password: root s2: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://localhost:3307/mydb2?serverTimezone=GMT%2B8 username: root password: root props: sql: show: true sharding: #分表 tables: #参与分表的表名(虚拟表名) test: #分库分表关键 actual-data-nodes: s$->{1..2}.test_$->{1..2} key-generator: #主键 column: tid #主键填值,雪花 type: SNOWFLAKE table-strategy: #主键分表路由策略 inline: sharding-column: tid #这⾥采⽤偶数键值去test_1表,奇数键值去test_2表 algorithm-expression: test_$->{tid%2+1} data-strategy: #主键分库路由策略 inline: sharding-column: tid #这⾥采⽤偶数键值去s1库,奇数键值去s2库 algorithm-expression: s_$->{tid%2+1}2.3 读写分离(mysql实现配置了主从架构)分库分表的连接配置 spring: shardingsphere: datasource: names: m1,s1 m1: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://localhost:3306/mydb?serverTimezone=GMT%2B8 username: root password: root s1: type: com.alibaba.druid.pool.DruidDataSource driver-class-name: com.mysql.cj.jdbc.Driver url: jdbc:mysql://localhost:3307/mydb?serverTimezone=GMT%2B8 password: root props: sql: show: true sharding: #分表 tables: #参与分表的表名(虚拟表名) test: #分库分表关键 actual-data-nodes: ms0.test_$->{1..2} key-generator: #主键 column: tid #主键填值,雪花 type: SNOWFLAKE table-strategy: #主键分表路由策略 inline: sharding-column: tid #这⾥采⽤偶数键值去test_1表,奇数键值去test_2表 algorithm-expression: test_$->{tid%2+1} #主从规则 master-slave-rules: #规则⼀,名字随意,我这⾥⽤ms0表⽰ ms0: master-data-source-name: m0 #这⾥可以看出从库可以配多个 slave-data-source-names[0]: s03.分库分表路由策略 shardingsphere为我们提供了以下⼏种策略类型: inline(内联),standard(标准),complex(复杂),hint(强制)3.1 inline#分⽚策略(test是我们的逻辑表,这⾥对应实际表test_1和test_2)spring.shardingsphere.sharding.tables.test.table-strategy.inline.sharding-column=tidspring.shardingsphere.sharding.tables.test.table-strategy.inline.algorithm-expression=test_$->{tid%2+1} #分库策略spring.shardingsphere.sharding.tables.test.database-strategy.inline.sharding-column=tidspring.shardingsphere.sharding.tables.test.database-strategy.inline.algorithm-expression=s$->{tid%2+1} *注:只⽀持分⽚主键的精确路由,不⽀持范围查询、模糊查询、联表查询。