信息论课后习题答案

- 格式:doc

- 大小:41.00 KB

- 文档页数:2

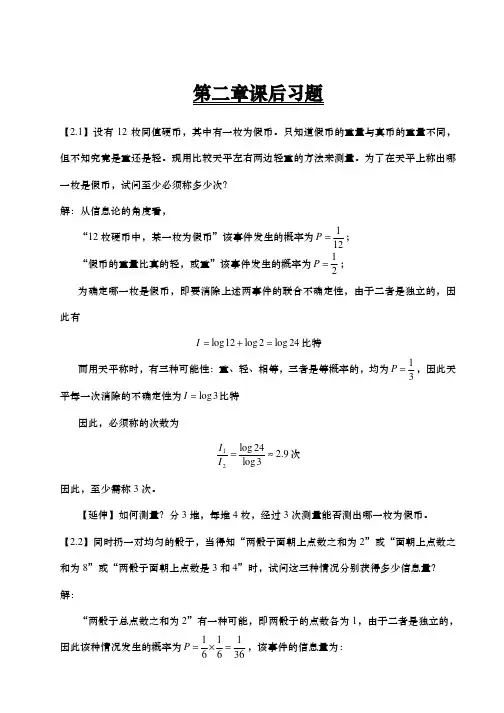

【】设有 12 枚同值硬币,其中有一枚为假币。

只知道假币的重量与真币的重量不同,但不知究竟是重还是轻。

现用比较天平左右两边轻重的方法来测量。

为了在天平上称出哪一枚是假币,试问至少必须称多少次?解:从信息论的角度看,“12 枚硬币中,某一枚为假币”该事件发生的概率为P 1;12“假币的重量比真的轻,或重”该事件发生的概率为P 1;2为确定哪一枚是假币,即要消除上述两事件的联合不确定性,由于二者是独立的,因此有I log12log2log 24 比特而用天平称时,有三种可能性:重、轻、相等,三者是等概率的,均为P 1 ,因此天3平每一次消除的不确定性为Ilog 3 比特因此,必须称的次数为I1log24I2log3次因此,至少需称 3 次。

【延伸】如何测量?分 3 堆,每堆 4 枚,经过 3 次测量能否测出哪一枚为假币。

【】同时扔一对均匀的骰子,当得知“两骰子面朝上点数之和为 2”或“面朝上点数之和为 8”或“两骰子面朝上点数是 3 和 4”时,试问这三种情况分别获得多少信息量?解:“两骰子总点数之和为 2”有一种可能,即两骰子的点数各为 1,由于二者是独立的,因此该种情况发生的概率为P1 16 61,该事件的信息量为:36I log 36比特“两骰子总点数之和为 8”共有如下可能:2 和 6、3 和 5、4 和 4、5 和 3、6 和2,概率为P 1 1 56 65,因此该事件的信息量为:36I log365比特“两骰子面朝上点数是 3 和 4”的可能性有两种:3 和 4、4 和 3,概率为P因此该事件的信息量为:1 121,6 6 18I log18比特【】如果你在不知道今天是星期几的情况下问你的朋友“明天星期几?”则答案中含有多少信息量?如果你在已知今天是星期四的情况下提出同样的问题,则答案中你能获得多少信息量(假设已知星期一至星期日的顺序)?解:如果不知今天星期几时问的话,答案可能有七种可能性,每一种都是等概率的,均为P 1,因此此时从答案中获得的信息量为7I log 7比特而当已知今天星期几时问同样的问题,其可能性只有一种,即发生的概率为1,此时获得的信息量为0 比特。

信息论第3章课后习题答案信息论是一门研究信息传输、存储和处理的学科。

它的核心理论是香农信息论,由克劳德·香农于1948年提出。

信息论的应用范围广泛,涵盖了通信、数据压缩、密码学等领域。

在信息论的学习过程中,课后习题是巩固知识、检验理解的重要环节。

本文将对信息论第3章的课后习题进行解答,帮助读者更好地理解和掌握信息论的基本概念和方法。

1. 证明:对于任意两个随机变量X和Y,有H(X,Y)≤H(X)+H(Y)。

首先,根据联合熵的定义,有H(X,Y)=-∑p(x,y)log2p(x,y)。

而熵的定义为H(X)=-∑p(x)log2p(x)和H(Y)=-∑p(y)log2p(y)。

我们可以将联合熵表示为H(X,Y)=-∑p(x,y)log2(p(x)p(y))。

根据对数的性质,log2(p(x)p(y))=log2p(x)+log2p(y)。

将其代入联合熵的表达式中,得到H(X,Y)=-∑p(x,y)(log2p(x)+log2p(y))。

再根据概率的乘法规则,p(x,y)=p(x)p(y)。

将其代入上式中,得到H(X,Y)=-∑p(x,y)(log2p(x)+log2p(y))=-∑p(x,y)log2p(x)-∑p(x,y)log2p(y)。

根据熵的定义,可以将上式分解为H(X,Y)=H(X)+H(Y)。

因此,对于任意两个随机变量X和Y,有H(X,Y)≤H(X)+H(Y)。

2. 证明:对于一个随机变量X,有H(X)≥0。

根据熵的定义,可以得到H(X)=-∑p(x)log2p(x)。

由于概率p(x)是非负的,而log2p(x)的取值范围是负无穷到0之间,所以-p(x)log2p(x)的取值范围是非负的。

因此,对于任意一个随机变量X,H(X)≥0。

3. 证明:对于一个随机变量X,当且仅当X是一个确定性变量时,H(X)=0。

当X是一个确定性变量时,即X只能取一个确定的值,概率分布为p(x)=1。

本答案是英文原版的配套答案,与翻译的中文版课本题序不太一样但内容一样。

翻译的中文版增加了题量。

2.2、Entropy of functions. Let X be a random variable taking on a finite number of values. What is the (general) inequality relationship of ()H X and ()H Y if(a) 2X Y =?(b) cos Y X =?Solution: Let ()y g x =. Then():()()x y g x p y p x ==∑.Consider any set of x ’s that map onto a single y . For this set()():():()()log ()log ()log ()x y g x x y g x p x p x p x p y p y p y ==≤=∑∑,Since log is a monotone increasing function and ():()()()x y g x p x p x p y =≤=∑.Extending this argument to the entire range of X (and Y ), we obtain():()()log ()()log ()x y x g x H X p x p x p x p x =-=-∑∑∑()log ()()yp y p y H Y ≥-=∑,with equality iff g if one-to-one with probability one.(a) 2X Y = is one-to-one and hence the entropy, which is just a function of the probabilities does not change, i.e., ()()H X H Y =.(b) cos Y X =is not necessarily one-to-one. Hence all that we can say is that ()()H X H Y ≥, which equality if cosine is one-to-one on the range of X .2.16. Example of joint entropy. Let (,)p x y be given byFind(a) ()H X ,()H Y .(b) (|)H X Y ,(|)H Y X . (c) (,)H X Y(d) ()(|)H Y H Y X -.(e) (;)I X Y(f) Draw a Venn diagram for the quantities in (a) through (e).Solution:Fig. 1 Venn diagram(a) 231()log log30.918 bits=()323H X H Y =+=.(b) 12(|)(|0)(|1)0.667 bits (/)33H X Y H X Y H X Y H Y X ==+===((,)(|)()p x y p x y p y =)((|)(,)()H X Y H X Y H Y =-)(c) 1(,)3log3 1.585 bits 3H X Y =⨯=(d) ()(|)0.251 bits H Y H Y X -=(e)(;)()(|)0.251 bits=-=I X Y H Y H Y X(f)See Figure 1.2.29 Inequalities. Let X,Y and Z be joint random variables. Prove the following inequalities and find conditions for equality.(a) )ZHYXH≥X(Z()|,|(b) )ZIYXI≥X((Z);,;(c) )XYXHZ≤Z-H-XYH),(,)(((X,,)H(d) )XYIZIZII+-XZY≥Y(););(|;;(Z|)(XSolution:(a)Using the chain rule for conditional entropy,HZYXHZXH+XH≥XYZ=),(|(Z)(||,()|)With equality iff 0YH,that is, when Y is a function of X andXZ,|(=)Z.(b)Using the chain rule for mutual information,ZIXXIZYX+=,I≥IYZ(|;)X);)(,;;(Z)(With equality iff 0ZYI, that is, when Y and Z areX)|;(=conditionally independent given X.(c)Using first the chain rule for entropy and then definition of conditionalmutual information,XZHYHIXZYX==-H-XHYYZ)()(;Z)|,|),|(X(,,)(XHXZH-Z≤,=,)()()(X|HWith equality iff 0ZYI, that is, when Y and Z areX(=|;)conditionally independent given X .(d) Using the chain rule for mutual information,);()|;();,();()|;(Z X I X Y Z I Z Y X I Y Z I Y Z X I +==+And therefore this inequality is actually an equality in all cases.4.5 Entropy rates of Markov chains.(a) Find the entropy rate of the two-state Markov chain with transition matrix⎥⎦⎤⎢⎣⎡--=1010010111p p p p P (b) What values of 01p ,10p maximize the rate of part (a)?(c) Find the entropy rate of the two-state Markov chain with transition matrix⎥⎦⎤⎢⎣⎡-=0 1 1p p P(d) Find the maximum value of the entropy rate of the Markov chain of part (c). We expect that the maximizing value of p should be less than 2/1, since the 0 state permits more information to be generated than the 1 state.Solution:(a) T he stationary distribution is easily calculated.10010*********,p p p p p p +=+=ππ Therefore the entropy rate is10011001011010101012)()()()()|(p p p H p p H p p H p H X X H ++=+=ππ(b) T he entropy rate is at most 1 bit because the process has only two states. This rate can be achieved if( and only if) 2/11001==p p , in which case the process is actually i.i.d. with2/1)1Pr()0Pr(====i i X X .(c) A s a special case of the general two-state Markov chain, the entropy rate is1)()1()()|(1012+=+=p p H H p H X X H ππ.(d) B y straightforward calculus, we find that the maximum value of)(χH of part (c) occurs for 382.02/)53(=-=p . The maximum value isbits 694.0)215()1()(=-=-=H p H p H (wrong!)5.4 Huffman coding. Consider the random variable⎪⎪⎭⎫ ⎝⎛=0.02 0.03 0.04 0.04 0.12 0.26 49.0 7654321x x x x x x x X (a) Find a binary Huffman code for X .(b) Find the expected codelength for this encoding.(c) Find a ternary Huffman code for X .Solution:(a) The Huffman tree for this distribution is(b)The expected length of the codewords for the binary Huffman code is 2.02 bits.( ∑⨯=)()(i p l X E )(c) The ternary Huffman tree is5.9 Optimal code lengths that require one bit above entropy. The source coding theorem shows that the optimal code for a random variable X has an expected length less than 1)(+X H . Given an example of a random variable for which the expected length of the optimal code is close to 1)(+X H , i.e., for any 0>ε, construct a distribution for which the optimal code has ε-+>1)(X H L .Solution: there is a trivial example that requires almost 1 bit above its entropy. Let X be a binary random variable with probability of 1=X close to 1. Then entropy of X is close to 0, but the length of its optimal code is 1 bit, which is almost 1 bit above its entropy.5.25 Shannon code. Consider the following method for generating a code for a random variable X which takes on m values {}m ,,2,1 with probabilities m p p p ,,21. Assume that the probabilities are ordered so thatm p p p ≥≥≥ 21. Define ∑-==11i k i i p F , the sum of the probabilities of allsymbols less than i . Then the codeword for i is the number ]1,0[∈i Frounded off to i l bits, where ⎥⎥⎤⎢⎢⎡=i i p l 1log . (a) Show that the code constructed by this process is prefix-free and the average length satisfies 1)()(+<≤X H L X H .(b) Construct the code for the probability distribution (0.5, 0.25, 0.125, 0.125).Solution:(a) Since ⎥⎥⎤⎢⎢⎡=i i p l 1log , we have 11log 1log +<≤i i i p l pWhich implies that 1)()(+<=≤∑X H l p L X H i i .By the choice of i l , we have )1(22---<≤ii l i l p . Thus j F , i j > differs from j F by at least il -2, and will therefore differ from i F is at least one place in the first i l bits of the binary expansion of i F . Thus thecodeword for j F , i j >, which has length i j l l ≥, differs from thecodeword for i F at least once in the first i l places. Thus no codewordis a prefix of any other codeword.(b) We build the following table3.5 AEP. Let ,,21X X be independent identically distributed random variables drawn according to theprobability mass function {}m x x p ,2,1),(∈. Thus ∏==n i i n x p x x x p 121)(),,,( . We know that)(),,,(log 121X H X X X p n n →- in probability. Let ∏==n i i n x q x x x q 121)(),,,( , where q is another probability mass function on {}m ,2,1.(a) Evaluate ),,,(log 1lim 21n X X X q n-, where ,,21X X are i.i.d. ~ )(x p . Solution: Since the n X X X ,,,21 are i.i.d., so are )(1X q ,)(2X q ,…,)(n X q ,and hence we can apply the strong law of large numbers to obtain∑-=-)(log 1lim ),,,(log 1lim 21i n X q n X X X q n 1..))((log p w X q E -=∑-=)(log )(x q x p∑∑-=)(log )()()(log )(x p x p x q x p x p )()||(p H q p D +=8.1 Preprocessing the output. One is given a communication channel withtransition probabilities )|(x y p and channel capacity );(max )(Y X I C x p =.A helpful statistician preprocesses the output by forming )(_Y g Y =. He claims that this will strictly improve the capacity.(a) Show that he is wrong.(b) Under what condition does he not strictly decrease the capacity? Solution:(a) The statistician calculates )(_Y g Y =. Since _Y Y X →→ forms a Markov chain, we can apply the data processing inequality. Hence for every distribution on x ,);();(_Y X I Y X I ≥. Let )(_x p be the distribution on x that maximizes );(_Y X I . Then__)()()(_)()()();(max );();();(max __C Y X I Y X I Y X I Y X I C x p x p x p x p x p x p ==≥≥===.Thus, the statistician is wrong and processing the output does not increase capacity.(b) We have equality in the above sequence of inequalities only if we have equality in data processing inequality, i.e., for the distribution that maximizes );(_Y X I , we have Y Y X →→_forming a Markov chain.8.3 An addition noise channel. Find the channel capacity of the following discrete memoryless channel:Where {}{}21Pr 0Pr ====a Z Z . The alphabet for x is {}1,0=X . Assume that Z is independent of X . Observe that the channel capacity depends on the value of a . Solution: A sum channel.Z X Y += {}1,0∈X , {}a Z ,0∈We have to distinguish various cases depending on the values of a .0=a In this case, X Y =,and 1);(max =Y X I . Hence the capacity is 1 bitper transmission.1,0≠≠a In this case, Y has four possible values a a +1,,1,0. KnowingY ,we know the X which was sent, and hence 0)|(=Y X H . Hence thecapacity is also 1 bit per transmission.1=a In this case Y has three possible output values, 0,1,2, the channel isidentical to the binary erasure channel, with 21=f . The capacity of this channel is 211=-f bit per transmission.1-=a This is similar to the case when 1=a and the capacity is also 1/2 bit per transmission.8.5 Channel capacity. Consider the discrete memoryless channel)11 (mod Z X Y +=, where ⎪⎪⎭⎫ ⎝⎛=1/3 1/3, 1/3,3 2,,1Z and {}10,,1,0 ∈X . Assume thatZ is independent of X .(a) Find the capacity.(b) What is the maximizing )(*x p ?Solution: The capacity of the channel is );(max )(Y X I C x p =)()()|()()|()();(Z H Y H X Z H Y H X Y H Y H Y X I -=-=-=bits 311log)(log );(=-≤Z H y Y X I , which is obtained when Y has an uniform distribution, which occurs when X has an uniform distribution.(a)The capacity of the channel is bits 311log /transmission.(b) The capacity is achieved by an uniform distribution on the inputs.10,,1,0for 111)( ===i i X p 8.12 Time-varying channels. Consider a time-varying discrete memoryless channel. Let n Y Y Y ,,21 be conditionally independent givenn X X X ,,21 , with conditional distribution given by ∏==ni i i i x y p x y p 1)|()|(.Let ),,(21n X X X X =, ),,(21n Y Y Y Y =. Find );(max )(Y X I x p . Solution:∑∑∑∑∑=====--≤-≤-=-=-=-=ni i n i i i n i i ni i i ni i i n p h X Y H Y H X Y H Y H X Y Y Y H Y H X Y Y Y H Y H X Y H Y H Y X I 111111121))(1()|()()|()(),,|()()|,,()()|()();(With equlity ifnX X X ,,21 is chosen i.i.d. Hence∑=-=ni i x p p h Y X I 1)())(1();(max .10.2 A channel with two independent looks at Y . Let 1Y and 2Y be conditionally independent and conditionally identically distributed givenX .(a) Show );();(2),;(21121Y Y I Y X I Y Y X I -=. (b) Conclude that the capacity of the channelX(Y1,Y2)is less than twice the capacity of the channelXY1Solution:(a) )|,(),(),;(212121X Y Y H Y Y H Y Y X I -=)|()|();()()(212121X Y H X Y H Y Y I Y H Y H ---+=);();(2);();();(2112121Y Y I Y X I Y Y I Y X I Y X I -=-+=(b) The capacity of the single look channel 1Y X → is );(max 1)(1Y X I C x p =.Thecapacityof the channel ),(21Y Y X →is11)(211)(21)(22);(2max );();(2max ),;(max C Y X I Y Y I Y X I Y Y X I C x p x p x p =≤-==10.3 The two-look Gaussian channel. Consider the ordinary Shannon Gaussian channel with two correlated looks at X , i.e., ),(21Y Y Y =, where2211Z X Y Z X Y +=+= with a power constraint P on X , and ),0(~),(221K N Z Z ,where⎥⎦⎤⎢⎣⎡=N N N N K ρρ. Find the capacity C for (a) 1=ρ (b) 0=ρ (c) 1-=ρSolution:It is clear that the two input distribution that maximizes the capacity is),0(~P N X . Evaluating the mutual information for this distribution,),(),()|,(),()|,(),(),;(max 212121212121212Z Z h Y Y h X Z Z h Y Y h X Y Y h Y Y h Y Y X I C -=-=-==Nowsince⎪⎪⎭⎫⎝⎛⎥⎦⎤⎢⎣⎡N N N N N Z Z ,0~),(21ρρ,wehave)1()2log(21)2log(21),(222221ρππ-==N e Kz e Z Z h.Since11Z X Y +=, and22Z X Y +=, wehave ⎪⎪⎭⎫⎝⎛⎥⎦⎤⎢⎣⎡++++N N P N N P N Y Y P P ,0~),(21ρρ, And ))1(2)1(()2log(21)2log(21),(222221ρρππ-+-==PN N e K e Y Y h Y .Hence⎪⎪⎭⎫⎝⎛++=-=)1(21log 21),(),(21212ρN P Z Z h Y Y h C(a) 1=ρ.In this case, ⎪⎭⎫⎝⎛+=N P C 1log 21, which is the capacity of a single look channel.(b) 0=ρ. In this case, ⎪⎭⎫⎝⎛+=N P C 21log 21, which corresponds to using twice the power in a single look. The capacity is the same as the capacity of the channel )(21Y Y X +→.(c) 1-=ρ. In this case, ∞=C , which is not surprising since if we add1Y and 2Y , we can recover X exactly.10.4 Parallel channels and waterfilling. Consider a pair of parallel Gaussianchannels,i.e.,⎪⎪⎭⎫⎝⎛+⎪⎪⎭⎫ ⎝⎛=⎪⎪⎭⎫ ⎝⎛212121Z Z X X Y Y , where⎪⎪⎭⎫ ⎝⎛⎥⎥⎦⎤⎢⎢⎣⎡⎪⎪⎭⎫ ⎝⎛222121 00 ,0~σσN Z Z , And there is a power constraint P X X E 2)(2221≤+. Assume that 2221σσ>. At what power does the channel stop behaving like a single channel with noise variance 22σ, and begin behaving like a pair of channels? Solution: We will put all the signal power into the channel with less noise until the total power of noise+signal in that channel equals the noise power in the other channel. After that, we will split anyadditional power evenly between the two channels. Thus the combined channel begins to behave like a pair of parallel channels when the signal power is equal to the difference of the two noise powers, i.e., when 22212σσ-=P .。

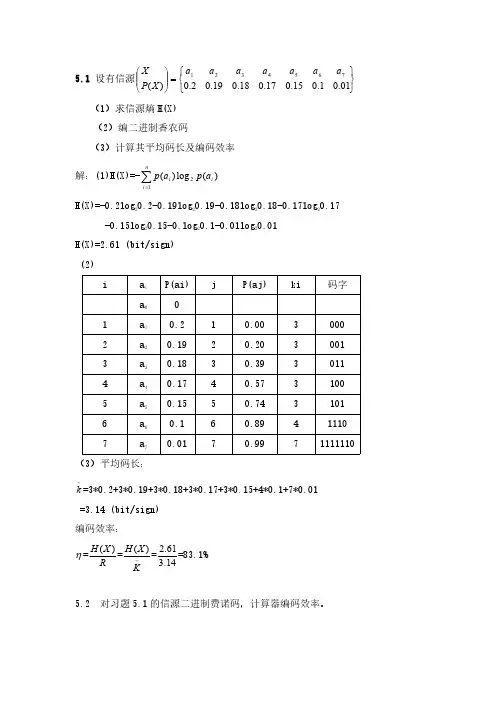

5.1设有信源⎭⎬⎫⎩⎨⎧=⎪⎪⎭⎫ ⎝⎛01.01.015.017.018.019.02.0)(7654321a a a a a a a X P X (1)求信源熵H(X)(2)编二进制香农码(3)计算其平均码长及编码效率解:(1)H(X)=-)(log )(21i ni i a p a p ∑=H(X)=-0.2log 20.2-0.19log 20.19-0.18log 20.18-0.17log 20.17-0.15log 20.15-0.log 20.1-0.01log 20.01H(X)=2.61(bit/sign)(2)ia i P(ai)jP(aj)ki码字a 001a 10.210.0030002a 20.1920.2030013a 30.1830.3930114a 40.1740.5731005a 50.1550.7431016a 60.160.89411107a 70.0170.9971111110(3)平均码长:-k =3*0.2+3*0.19+3*0.18+3*0.17+3*0.15+4*0.1+7*0.01=3.14(bit/sign)编码效率:η=R X H )(=-KX H )(=14.361.2=83.1%5.2对习题5.1的信源二进制费诺码,计算器编码效率。

⎭⎬⎫⎩⎨⎧=⎪⎪⎭⎫ ⎝⎛0.01 0.1 0.15 0.17 0.18 0.19 2.0 )(7654321a a a a a a a X P X 解:Xi)(i X P 编码码字ik 1X 0.2000022X 0.191001033X 0.18101134X 0.17101025X 0.151011036X 0.110111047X 0.01111114%2.9574.2609.2)()(74.2 01.0.041.0415.0317.0218.0319.032.02 )(/bit 609.2)(1.5=====⨯+⨯+⨯+⨯+⨯+⨯+⨯===∑KX H R X H X p k K sign X H ii i η已知由5.3、对信源⎭⎬⎫⎩⎨⎧=⎪⎪⎭⎫ ⎝⎛01.01.015.017.018.019.02.0)(7654321x x x x x x x X P X 编二进制和三进制赫夫曼码,计算各自的平均码长和编码效率。

信息论基础第二版习题答案信息论是一门研究信息传输和处理的学科,它的基础理论是信息论。

信息论的基本概念和原理被广泛应用于通信、数据压缩、密码学等领域。

而《信息论基础》是信息论领域的经典教材之一,它的第二版是对第一版的修订和扩充。

本文将为读者提供《信息论基础第二版》中部分习题的答案,帮助读者更好地理解信息论的基本概念和原理。

第一章:信息论基础1.1 信息的定义和度量习题1:假设有一个事件发生的概率为p,其信息量定义为I(p) = -log(p)。

求当p=0.5时,事件的信息量。

答案:将p=0.5代入公式,得到I(0.5) = -log(0.5) = 1。

习题2:假设有两个互斥事件A和B,其概率分别为p和1-p,求事件A和B 同时发生的信息量。

答案:事件A和B同时发生的概率为p(1-p),根据信息量定义,其信息量为I(p(1-p)) = -log(p(1-p))。

1.2 信息熵和条件熵习题1:假设有一个二进制信源,产生0和1的概率分别为p和1-p,求该信源的信息熵。

答案:根据信息熵的定义,信源的信息熵为H = -plog(p) - (1-p)log(1-p)。

习题2:假设有两个独立的二进制信源A和B,产生0和1的概率分别为p和1-p,求两个信源同时发生时的联合熵。

答案:由于A和B是独立的,所以联合熵等于两个信源的信息熵之和,即H(A,B) = H(A) + H(B) = -plog(p) - (1-p)log(1-p) - plog(p) - (1-p)log(1-p)。

第二章:信道容量2.1 信道的基本概念习题1:假设有一个二进制对称信道,其错误概率为p,求该信道的信道容量。

答案:对于二进制对称信道,其信道容量为C = 1 - H(p),其中H(p)为错误概率为p时的信道容量。

习题2:假设有一个高斯信道,信道的信噪比为S/N,求该信道的信道容量。

答案:对于高斯信道,其信道容量为C = 0.5log(1 + S/N)。

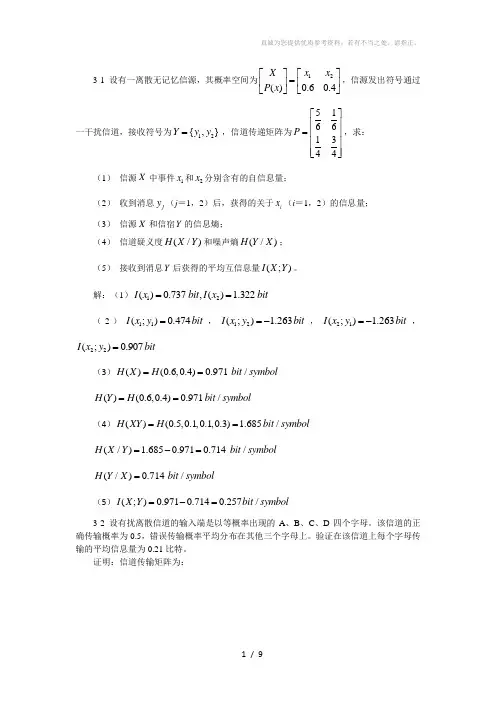

3-1 设有一离散无记忆信源,其概率空间为12()0.60.4X x x P x ⎡⎤⎡⎤=⎢⎥⎢⎥⎣⎦⎣⎦,信源发出符号通过一干扰信道,接收符号为12{,}Y y y =,信道传递矩阵为51661344P ⎡⎤⎢⎥=⎢⎥⎢⎥⎢⎥⎣⎦,求: (1) 信源X 中事件1x 和2x 分别含有的自信息量;(2) 收到消息j y (j =1,2)后,获得的关于i x (i =1,2)的信息量; (3) 信源X 和信宿Y 的信息熵;(4) 信道疑义度(/)H X Y 和噪声熵(/)H Y X ; (5) 接收到消息Y 后获得的平均互信息量(;)I X Y 。

解:(1)12()0.737,() 1.322I x bit I x bit ==(2)11(;)0.474I x y bit =,12(;) 1.263I x y bit =-,21(;) 1.263I x y bit =-,22(;)0.907I x y bit =(3)()(0.6,0.4)0.971/H X H bit symbol ==()(0.6,0.4)0.971/H Y H bit symbol ==(4)()(0.5,0.1,0.1,0.3) 1.685/H XY H bit symbol ==(/) 1.6850.9710.714/H X Y bit symbol =-= (/)0.714/H Y X bit symbol =(5)(;)0.9710.7140.257/I X Y bit symbol =-=3-2 设有扰离散信道的输入端是以等概率出现的A 、B 、C 、D 四个字母。

该信道的正确传输概率为0.5,错误传输概率平均分布在其他三个字母上。

验证在该信道上每个字母传输的平均信息量为0.21比特。

证明:信道传输矩阵为:11112666111162661111662611116662P ⎡⎤⎢⎥⎢⎥⎢⎥⎢⎥=⎢⎥⎢⎥⎢⎥⎢⎥⎢⎥⎣⎦,信源信宿概率分布为:1111()(){,,,}4444P X P Y ==, H(Y/X)=1.79(bit/符号),I(X;Y)=H(Y)- H(Y/X)=2-1.79=0.21(bit/符号)3-3 已知信源X 包含两种消息:12,x x ,且12()() 1/2P x P x ==,信道是有扰的,信宿收到的消息集合Y 包含12,y y 。

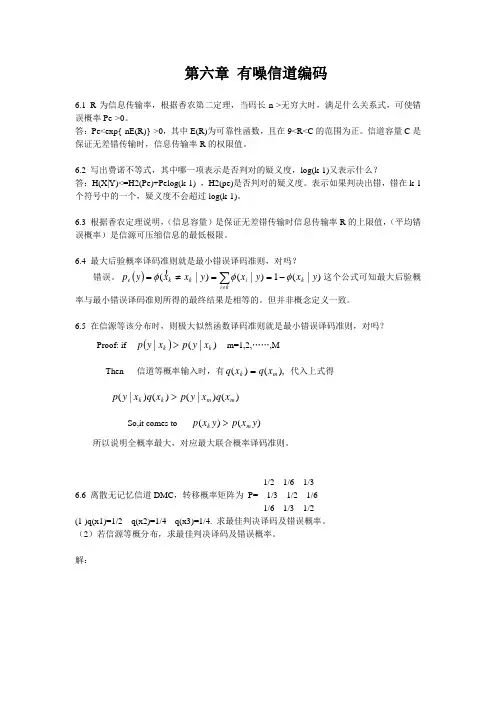

第六章 有噪信道编码6.1 R 为信息传输率,根据香农第二定理,当码长n->无穷大时,满足什么关系式,可使错误概率Pe->0。

答:Pe<exp{-nE(R)}->0,其中E(R)为可靠性函数,且在9<R<C 的范围为正。

信道容量C 是保证无差错传输时,信息传输率R 的权限值。

6.2 写出费诺不等式,其中哪一项表示是否判对的疑义度,log(k-1)又表示什么?答:H(X|Y)<=H2(Pe)+Pelog(k-1) ,H2(pe)是否判对的疑义度。

表示如果判决出错,错在k-1个符号中的一个,疑义度不会超过log(k-1)。

6.3 根据香农定理说明,(信息容量)是保证无差错传输时信息传输率R 的上限值,(平均错误概率)是信源可压缩信息的最低极限。

6.4 最大后验概率译码准则就是最小错误译码准则,对吗?错误。

()∑≠-==≠=k i k i k k e y x y xy x x y p )|(1)|()|(φφφ 这个公式可知最大后验概率与最小错误译码准则所得的最终结果是相等的。

但并非概念定义一致。

6.5 在信源等该分布时,则极大似然函数译码准则就是最小错误译码准则,对吗? Proof: if ())|(|k k x y p x y p > m=1,2,……,MThen 信道等概率输入时,有),()(m k x q x q = 代入上式得)()|()()|(m m k k x q x y p x q x y p >So,it comes to )()(y x p y x p m k >所以说明全概率最大,对应最大联合概率译码准则。

1/2 1/6 1/36.6 离散无记忆信道DMC ,转移概率矩阵为 P= 1/3 1/2 1/61/6 1/3 1/2(1 )q(x1)=1/2 q(x2)=1/4 q(x3)=1/4. 求最佳判决译码及错误概率。

(2)若信源等概分布,求最佳判决译码及错误概率。

第二章习题参考答案2-1解:同时掷两个正常的骰子,这两个事件是相互独立的,所以两骰子面朝上点数的状态共有6×6=36种,其中任一状态的分布都是等概的,出现的概率为1/36。

(1)设“3和5同时出现”为事件A ,则A 的发生有两种情况:甲3乙5,甲5乙3。

因此事件A 发生的概率为p(A)=(1/36)*2=1/18 故事件A 的自信息量为I(A)=-log 2p(A)=log 218=4.17 bit(2)设“两个1同时出现”为事件B ,则B 的发生只有一种情况:甲1乙1。

因此事件B 发生的概率为p(B)=1/36 故事件B 的自信息量为I(B)=-log 2p(B)=log 236=5.17 bit (3) 两个点数的排列如下:因为各种组合无序,所以共有21种组合: 其中11,22,33,44,55,66的概率是3616161=⨯ 其他15个组合的概率是18161612=⨯⨯symbol bit x p x p X H ii i / 337.4181log 18115361log 3616)(log )()(=⎪⎭⎫ ⎝⎛⨯+⨯-=-=∑(4) 参考上面的两个点数的排列,可以得出两个点数求和的概率分布:sym bolbit x p x p X H X P X ii i / 274.3 61log 61365log 365291log 912121log 1212181log 1812361log 3612 )(log )()(36112181111211091936586173656915121418133612)(=⎪⎭⎫ ⎝⎛+⨯+⨯+⨯+⨯+⨯-=-=⎪⎭⎪⎬⎫⎪⎩⎪⎨⎧=⎥⎦⎤⎢⎣⎡∑(5)“两个点数中至少有一个是1”的组合数共有11种。

bitx p x I x p i i i 710.13611log )(log )(3611116161)(=-=-==⨯⨯=2-2解:(1)红色球x 1和白色球x 2的概率分布为⎥⎥⎦⎤⎢⎢⎣⎡=⎥⎦⎤⎢⎣⎡2121)(21x x x p X i 比特 12log *21*2)(log )()(2212==-=∑=i i i x p x p X H(2)红色球x 1和白色球x 2的概率分布为⎥⎥⎦⎤⎢⎢⎣⎡=⎥⎦⎤⎢⎣⎡100110099)(21x x x p X i 比特 08.0100log *100199100log *10099)(log )()(22212=+=-=∑=i i i x p x p X H (3)四种球的概率分布为⎥⎥⎦⎤⎢⎢⎣⎡=⎥⎦⎤⎢⎣⎡41414141)(4321x x x x x p X i ,42211()()log ()4**log 4 2 4i i i H X p x p x ==-==∑比特2-5解:骰子一共有六面,某一骰子扔得某一点数面朝上的概率是相等的,均为1/6。

2.2 居住某地区的女孩子有25%是大学生,在女大学生中有75%是身高160厘米以上的,而女孩子中身高160厘米以上的占总数的一半。

假如我们得知“身高160厘米以上的某女孩是大学生”的消息,问获得多少信息量? 解:设随机变量X 代表女孩子学历 X x 1(是大学生) x 2(不是大学)P(X) 0.250.75设随机变量Y 代表女孩子身高Y y 1(身高>160cm ) y 2(身高<160cm ) P(Y) 0.50.5已知:在女大学生中有75%是身高160厘米以上的 即:bit x y p 75.0)/(11=求:身高160厘米以上的某女孩是大学生的信息量即:b i ty p x y p x p y x p y x I 415.15.075.025.0log )()/()(log )/(log )/(11111111=⨯-=-=-= 2.4 设离散无记忆信源⎭⎬⎫⎩⎨⎧=====⎥⎦⎤⎢⎣⎡8/14/1324/18/310)(4321x x x x X P X ,其发出的信息为( 02120130213001203210110321010021032011223210),求(1) 此消息的自信息量是多少?(2) 此消息中平均每符号携带的信息量是多少? 解:(1) 此消息总共有14个0、13个1、12个2、6个3,因此此消息发出的概率是:62514814183⎪⎭⎫ ⎝⎛⨯⎪⎭⎫ ⎝⎛⨯⎪⎭⎫ ⎝⎛=p 此消息的信息量是:bit p I 811.87log =-=(2) 此消息中平均每符号携带的信息量是:b i t n I 951.145/811.87/==2.9 设有一个信源,它产生0,1序列的信息。

它在任意时间而且不论以前发生过什么符号,均按P(0) = 0.4,P(1) = 0.6的概率发出符号。

(1) 试问这个信源是否是平稳的? (2) 试计算H(X 2), H(X 3/X 1X 2)及H ∞;(3) 试计算H(X 4)并写出X 4信源中可能有的所有符号。

3.1 设二元对称信道的传递矩阵为⎥⎥⎥⎦⎤⎢⎢⎢⎣⎡32313132(1) 若P(0) = 3/4, P(1) = 1/4,求H(X), H(X/Y), H(Y/X)和I(X;Y); (2) 求该信道的信道容量及其达到信道容量时的输入概率分布;解: 1)symbolbit Y X H X H Y X I symbol bit X Y H Y H X H Y X H X Y H Y H Y X H X H Y X I symbol bit y p Y H x y p x p x y p x p y x p y x p y p x y p x p x y p x p y x p y x p y p symbolbit x y p x y p x p X Y H symbolbit x p X H jj iji j i j i i i / 062.0749.0811.0)/()();(/ 749.0918.0980.0811.0)/()()()/()/()()/()();(/ 980.0)4167.0log 4167.05833.0log 5833.0()()(4167.032413143)/()()/()()()()(5833.031413243)/()()/()()()()(/ 918.0 10log )32lg 324131lg 314131lg 314332lg 3243( )/(log )/()()/(/ 811.0)41log 4143log 43()()(222221212221221211112111222=-==-==+-=+-=-=-==⨯+⨯-=-==⨯+⨯=+=+==⨯+⨯=+=+==⨯⨯+⨯+⨯+⨯-=-==⨯+⨯-=-=∑∑∑∑2)2221122max (;)log log 2(lg lg )log 100.082 /3333mi C I X Y m H bit symbol==-=++⨯=其最佳输入分布为1()2i p x =3-2某信源发送端有2个符号,i x ,i =1,2;()i p x a =,每秒发出一个符号。

1. 有一个马尔可夫信源,已知p(x 1|x 1)=2/3,p(x 2|x 1)=1/3,p(x 1|x 2)=1,p(x 2|x 2)=0,试画出该信源的香农线图,并求出信源熵。

解:该信源的香农线图为: 1/3○○2/3(x 1) 1 (x 2)在计算信源熵之前,先用转移概率求稳定状态下二个状态x 1和 x 2的概率)(1x p 和)(2x p 立方程:)()()(1111x p x x p x p =+)()(221x p x x p=)()(2132x p x p + )()()(1122x p x x p x p =+)()(222x p x x p=)(0)(2131x p x p + )()(21x p x p +=1 得431)(=x p 412)(=x p 马尔可夫信源熵H = ∑∑-IJi j i jix x p x xp x p )(log )()( 得 H=0.689bit/符号2.设有一个无记忆信源发出符号A 和B ,已知4341)(.)(==B p A p 。

求: ①计算该信源熵;②设该信源改为发出二重符号序列消息的信源,采用费诺编码方法,求其平均信息传输速率; ③又设该信源改为发三重序列消息的信源,采用霍夫曼编码方法,求其平均信息传输速率。

解:①∑-=Xiix p x p X H )(log )()( =0.812 bit/符号②发出二重符号序列消息的信源,发出四种消息的概率分别为1614141)(=⨯=AA p 1634341)(=⨯=AB p 1634143)(=⨯=BA p 1694343)(=⨯=BB p 用费诺编码方法 代码组 b iBB 0 1 BA 10 2 AB 110 3 AA 111 3 无记忆信源 624.1)(2)(2==X H X H bit/双符号 平均代码组长度 2B =1.687 bit/双符号BX H R )(22==0.963 bit/码元时间③三重符号序列消息有8个,它们的概率分别为641)(=AAA p 643)(=AAB p 643)(=BAA p 643)(=ABA p 649)(=BBA p 649)(=BAB p 649)(=ABB p 6427)(=BBB p用霍夫曼编码方法 代码组 b i BBB 6427 0 0 1 BBA 649 0 )(6419 1 110 3 BAB 649 1 )(6418)(644 1 101 3 ABB 649 0 0 100 3AAB 643 1 )(646 1 11111 5 BAA 643 0 1 11110 5ABA 643 1 )(6440 11101 5AAA 6410 11100 5)(3)(3X H X H ==2.436 bit/三重符号序列 3B =2.469码元/三重符号序列3R =BX H )(3=0.987 bit/码元时间 3.已知符号集合{ 321,,x x x }为无限离散消息集合,它们的出现概率分别为 211)(=x p ,412)(=x p 813)(=x p ···i i x p 21)(=···求: ① 用香农编码方法写出各个符号消息的码字(代码组); ② 计算码字的平均信息传输速率; ③ 计算信源编码效率。

信息论习题集一、名词解释(每词2分)(25道)1、“本体论”的信息(P3)2、“认识论”信息(P3)3、离散信源(11)4、自信息量(12)5、离散平稳无记忆信源(49)6、马尔可夫信源(58)7、信源冗余度 (66)8、连续信源 (68)9、信道容量 (95)10、强对称信道 (99) 11、对称信道 (101-102)12、多符号离散信道(109)13、连续信道 (124) 14、平均失真度 (136) 15、实验信道 (138) 16、率失真函数 (139) 17、信息价值率 (163) 18、游程序列 (181) 19、游程变换 (181) 20、L-D 编码(184)、 21、冗余变换 (184) 22、BSC 信道 (189) 23、码的最小距离 (193)24、线性分组码 (195) 25、循环码 (213) 二、填空(每空1分)(100道)1、 在认识论层次上研究信息的时候,必须同时考虑到形式、含义和效用 三个方面的因素。

2、 1948年,美国数学家 香农 发表了题为“通信的数学理论”的长篇论文,从而创立了信息论。

3、 按照信息的性质,可以把信息分成语法信息、语义信息和语用信息 。

4、 按照信息的地位,可以把信息分成 客观信息和主观信息 。

5、 人们研究信息论的目的是为了高效、可靠、安全 地交换和利用各种各样的信息。

6、 信息的可度量性 是建立信息论的基础。

7、 统计度量 是信息度量最常用的方法。

8、 熵是香农信息论最基本最重要的概念。

9、 事物的不确定度是用时间统计发生 概率的对数 来描述的。

10、单符号离散信源一般用随机变量描述,而多符号离散信源一般用 随机矢量 描述。

11、一个随机事件发生某一结果后所带来的信息量称为自信息量,定义为 其发生概率对数的负值。

12、自信息量的单位一般有 比特、奈特和哈特 。

13、必然事件的自信息是 0 。

14、不可能事件的自信息量是 ∞ 。

第六章 有噪信道编码

6.1 R 为信息传输率,根据香农第二定理,当码长n->无穷大时,满足什么关系式,可使错误概率Pe->0。

答:Pe<exp{-nE(R)}->0,其中E(R)为可靠性函数,且在9<R<C 的范围为正。

信道容量C 是保证无差错传输时,信息传输率R 的权限值。

6.2 写出费诺不等式,其中哪一项表示是否判对的疑义度,log(k-1)又表示什么?

答:H(X|Y)<=H2(Pe)+Pelog(k-1) ,H2(pe)是否判对的疑义度。

表示如果判决出错,错在k-1个符号中的一个,疑义度不会超过log(k-1)。

6.3 根据香农定理说明,(信息容量)是保证无差错传输时信息传输率R 的上限值,(平均错误概率)是信源可压缩信息的最低极限。

6.4 最大后验概率译码准则就是最小错误译码准则,对吗?

错误。

()∑≠-==≠=k i k i k k e y x y x

y x x y p )|(1)|()|(φφφ 这个公式可知最大后验概

率与最小错误译码准则所得的最终结果是相等的。

但并非概念定义一致。

6.5 在信源等该分布时,则极大似然函数译码准则就是最小错误译码准则,对吗? Proof: if ())|(|k k x y p x y p > m=1,2,……,M

Then 信道等概率输入时,有),()(m k x q x q = 代入上式得

)()|()()|(m m k k x q x y p x q x y p >

So,it comes to )()(y x p y x p m k >

所以说明全概率最大,对应最大联合概率译码准则。

1/2 1/6 1/3

6.6 离散无记忆信道DMC ,转移概率矩阵为 P= 1/3 1/2 1/6

1/6 1/3 1/2

(1 )q(x1)=1/2 q(x2)=1/4 q(x3)=1/4. 求最佳判决译码及错误概率。

(2)若信源等概分布,求最佳判决译码及错误概率。

解:

(1)首先求联合概率矩阵111412611164121111264XY P ⎡⎤⎢⎥⎢⎥⎢⎥=⎢⎥⎢⎥⎢⎥⎢⎥⎣⎦

最大后验概率准则即最小错误概率准则,也等同于最大联合概率准则,因此,从联合概率矩阵的每一列中选联合概率最大的发送符号作为译码输出,因此将

112233,,y x y x y x →→→ 此时正确译码概率为11223313()()()344

c p p x y p x y p x y =++=⨯= 错误概率为114

e c p p =-= (2)当信源等概率分布时,极大似然准则等价于最大后验概率准则,因此从信道矩阵的每一列中取转移概率最大的一个发送符号作为相应接收符号的译码输出,即是最佳译码方案,因此将112233,,y x y x y x →→→ 此时正确译码概率为112233111()()()(3)322c p p x y p x y p x y =++=⨯⨯= 错误概率为112

e c p p =-=。