信息论英文课后部分习题答案

- 格式:doc

- 大小:1.13 MB

- 文档页数:16

⋅ 第二章课后习题【2.1】设有 12枚同值硬币,其中有一枚为假币。

只知道假币的重量与真币的重量不同,但不知究竟是重还是轻。

现用比较天平左右两边轻重的方法来测量。

为了在天平上称出哪一枚是假币,试问至少必须称多少次?解:从信息论的角度看,“12枚硬币中,某一枚为假币”该事件发生的概率为 P = 112 ; “假币的重量比真的轻,或重”该事件发生的概率为 P = 1 2; 为确定哪一枚是假币,即要消除上述两事件的联合不确定性,由于二者是独立的,因此有I = log12 + log 2 = log 24比特而用天平称时,有三种可能性:重、轻、相等,三者是等概率的,均为 P = 平每一次消除的不确定性为 I = log 3比特因此,必须称的次数为13,因此天I 1 I 2log 24 log 3 H 2.9次因此,至少需称 3次。

【延伸】如何测量?分 3堆,每堆 4枚,经过 3次测量能否测出哪一枚为假币。

【2.2】同时扔一对均匀的骰子,当得知“两骰子面朝上点数之和为 2”或“面朝上点数之和为 8”或“两骰子面朝上点数是 3和 4”时,试问这三种情况分别获得多少信息量?解:“两骰子总点数之和为 2”有一种可能,即两骰子的点数各为 1,由于二者是独立的,因此该种情况发生的概率为 P =1 1 6 6 136,该事件的信息量为:⋅ ⋅ 5 =⋅ ⋅ 2 =I = log 36 H 5.17比特“两骰子总点数之和为 8”共有如下可能:2和 6、3和 5、4和 4、5和 3、6和 2,概率为 P =1 1 6 6 536 ,因此该事件的信息量为:36 I = logH 2.85比特 5“两骰子面朝上点数是 3和 4”的可能性有两种:3和 4、4和 3,概率为P = 1 1 6 6 118, 因此该事件的信息量为:I = log18 H 4.17比特【2.3】如果你在不知道今天是星期几的情况下问你的朋友“明天星期几?”则答案中含有多少信息量?如果你在已知今天是星期四的情况下提出同样的问题,则答案中你能获得多少信息量(假设已知星期一至星期日的顺序)?解:如果不知今天星期几时问的话,答案可能有七种可能性,每一种都是等概率的,均为P = 17,因此此时从答案中获得的信息量为I = log 7 = 2.807比特而当已知今天星期几时问同样的问题,其可能性只有一种,即发生的概率为 1,此时获得的信息量为 0比特。

2-1 同时掷两个正常的骰子,也就是各面呈现的概率都是1/6,求: (1)“3和5同时出现” 这事件的自信息量。

(2)“两个1同时出现” 这事件的自信息量。

(3)两个点数的各种组合(无序对)的熵或平均信息量。

(4)两个点数之和(即2,3,…,12构成的子集)的熵。

(5)两个点数中至少有一个是1的自信息。

解:(1)设X 为‘3和5同时出现’这一事件,则P (X )=1/18,因此 17.418log)(log)(22==-=x p X I (比特)(2)设‘两个1同时出现’这一事件为X ,则P (X )=1/36,因此 17.536log)(log)(22==-=x p X I (比特)(3 ) “两个相同点数出现”这一事件的概率为1/36,其他事件的概率为1/18,则 337.418log181536log366)(22=+=X H (比特/组合)(4)222222111111()[log 36log 18()log 12()log 936181836181811136111()log ]2()log 6 3.44(/)1818365181818H X =++++++++⨯+++=比特两个点数之和(5)两个点数至少有一个为1的概率为P (X )= 11/36 71.13611log)(2=-=X I (比特)2-6设有一离散无记忆信源,其概率空间为⎪⎪⎭⎫⎝⎛=====⎪⎪⎭⎫⎝⎛8/134/124/118/304321x x x x PX该信源发出的信息符号序列为(202 120 130 213 001 203 210 110 321 010 021 032 011 223 210),求:(1) 此信息的自信息量是多少?(2) 在此信息中平均每个符号携带的信息量是多少? 解:(1)由无记忆性,可得序列)(比特/18.87)3(6)2(12)1(13)0(14=+++=I I I I(2)符号)(比特/91.145/==I H 2-9在一个袋中放有5个黑球、10个白球,以摸一个球为一次实验,摸出的球不再放进去。

第六章 有噪信道编码6.1 R 为信息传输率,根据香农第二定理,当码长n->无穷大时,满足什么关系式,可使错误概率Pe->0。

答:Pe<exp{-nE(R)}->0,其中E(R)为可靠性函数,且在9<R<C 的范围为正。

信道容量C 是保证无差错传输时,信息传输率R 的权限值。

6.2 写出费诺不等式,其中哪一项表示是否判对的疑义度,log(k-1)又表示什么?答:H(X|Y)<=H2(Pe)+Pelog(k-1) ,H2(pe)是否判对的疑义度。

表示如果判决出错,错在k-1个符号中的一个,疑义度不会超过log(k-1)。

6.3 根据香农定理说明,(信息容量)是保证无差错传输时信息传输率R 的上限值,(平均错误概率)是信源可压缩信息的最低极限。

6.4 最大后验概率译码准则就是最小错误译码准则,对吗?错误。

()∑≠-==≠=k i k i k k e y x y xy x x y p )|(1)|()|(φφφ 这个公式可知最大后验概率与最小错误译码准则所得的最终结果是相等的。

但并非概念定义一致。

6.5 在信源等该分布时,则极大似然函数译码准则就是最小错误译码准则,对吗? Proof: if ())|(|k k x y p x y p > m=1,2,……,MThen 信道等概率输入时,有),()(m k x q x q = 代入上式得)()|()()|(m m k k x q x y p x q x y p >So,it comes to )()(y x p y x p m k >所以说明全概率最大,对应最大联合概率译码准则。

1/2 1/6 1/36.6 离散无记忆信道DMC ,转移概率矩阵为 P= 1/3 1/2 1/61/6 1/3 1/2(1 )q(x1)=1/2 q(x2)=1/4 q(x3)=1/4. 求最佳判决译码及错误概率。

(2)若信源等概分布,求最佳判决译码及错误概率。

第一单元元件及定律A.课文译文电阻器、电容器和电感器在电子电路中,电阻器、电容器和电感器是非常重要的元件。

电阻器和电阻电阻器是二端口元件。

电阻是阻止电流流动,更确切地说,是阻止电荷流动的能力。

在国际单位制中,电阻用欧姆来度量。

希腊字母Ω是欧姆的标准符号。

较大的电阻一般用千欧和兆欧来表示。

模拟这种特性常用的电路元件是电阻器。

图1.1表示电阻器的电路符号,R表示电阻器的电阻值。

图1.1 电阻器的电路符号为了进行电路分析,我们必须在电阻器中指明电流和电压的参考方向。

如果我们选择关联参考方向,那么电压和电流之间的关系是:v=iR (1.1)这里v是电压,其单位是伏特, i是电流,其单位是安培,R是电阻,其单位是欧姆。

如果选择非关联参考方向,我们必须写成:v=-iR (1.2)用在公式(1.1)和(1.2)中的代数式就是著名的欧姆定律。

欧姆定律表示了电压作为电流的函数。

然而,要表示电流是电压的函数也是非常方便的。

欧姆定律是电阻两端的电压和电流间的代数关系。

电容器和电容电能可以存储在电场中,存储电能的装置叫电容器。

电容器存储电能的能力叫做电容。

图1.2表示电容器的电路符号。

电容的电路参数用字母C 表示,用法拉来度量。

因为法拉是相当大的电容量,实际上电容值通常位于皮法和微法之间。

图1.2 电容器的电路符号当电压随时间变化时,电荷的位移也随时间变化,引起了众所周知的位移电流。

在终端,位移电流和传导电流没有区别。

当电流参考方向和电压参考方向是关联参考方向时,电流正比于电容两端电压随时间的变化率的数学表达式为: dt dv Ci (1.3)这里 i 的单位是安培,C 的单位是法拉,v 的单位是伏特, t 的单位是秒。

电感器和电感众所周知,电感是电子电路中的模块之一。

所有的线圈都有电感。

电感是抵抗流过线圈电流的任何变化的性质。

电感用字母L 表示,其单位是亨利。

图1.3表示一个电感器。

图1.3 电感器的电路符号当电流和电压的参考方向关联时,有 dtdi Lv (1.4)这里v 的单位是伏特,L 的单位是亨利,i 的单位是安培,t 的单位是秒。

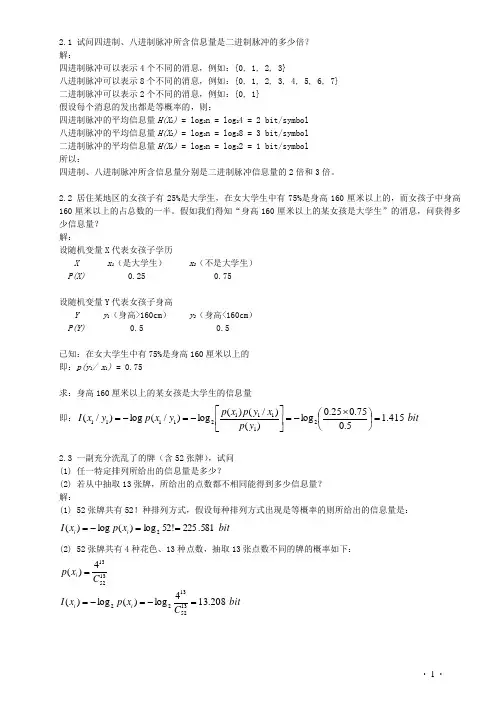

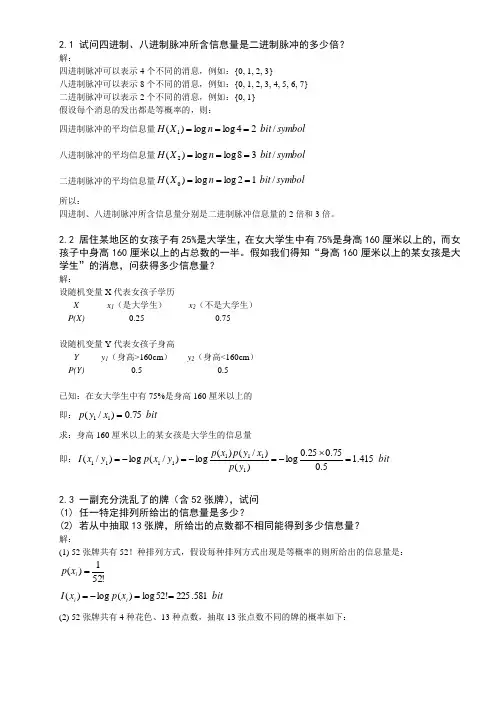

· 1 ·2.1 试问四进制、八进制脉冲所含信息量是二进制脉冲的多少倍? 解:四进制脉冲可以表示4个不同的消息,例如:{0, 1, 2, 3}八进制脉冲可以表示8个不同的消息,例如:{0, 1, 2, 3, 4, 5, 6, 7} 二进制脉冲可以表示2个不同的消息,例如:{0, 1} 假设每个消息的发出都是等概率的,则:四进制脉冲的平均信息量H(X 1) = log 2n = log 24 = 2 bit/symbol 八进制脉冲的平均信息量H(X 2) = log 2n = log 28 = 3 bit/symbol 二进制脉冲的平均信息量H(X 0) = log 2n = log 22 = 1 bit/symbol 所以:四进制、八进制脉冲所含信息量分别是二进制脉冲信息量的2倍和3倍。

2.2 居住某地区的女孩子有25%是大学生,在女大学生中有75%是身高160厘米以上的,而女孩子中身高160厘米以上的占总数的一半。

假如我们得知“身高160厘米以上的某女孩是大学生”的消息,问获得多少信息量? 解:设随机变量X 代表女孩子学历X x 1(是大学生) x 2(不是大学生) P(X) 0.25 0.75设随机变量Y 代表女孩子身高Y y 1(身高>160cm ) y 2(身高<160cm ) P(Y) 0.5 0.5已知:在女大学生中有75%是身高160厘米以上的 即:p(y 1/ x 1) = 0.75求:身高160厘米以上的某女孩是大学生的信息量 即:bit y p x y p x p y x p y x I 415.15.075.025.0log )()/()(log )/(log )/(2111121111=⎪⎭⎫⎝⎛⨯-=⎥⎦⎤⎢⎣⎡-=-=2.3 一副充分洗乱了的牌(含52张牌),试问 (1) 任一特定排列所给出的信息量是多少?(2) 若从中抽取13张牌,所给出的点数都不相同能得到多少信息量? 解:(1) 52张牌共有52!种排列方式,假设每种排列方式出现是等概率的则所给出的信息量是:bit x p x I i i 581.225!52log )(log )(2==-=(2) 52张牌共有4种花色、13种点数,抽取13张点数不同的牌的概率如下:bit C x p x I C x p i i i 208.134log )(log )(4)(13521322135213=-=-==· 2 ·2.4 设离散无记忆信源⎭⎬⎫⎩⎨⎧=====⎥⎦⎤⎢⎣⎡8/14/1324/18/310)(4321x x x x X P X ,其发出的信息为(202120130213001203210110321010021032011223210),求(1) 此消息的自信息量是多少?(2) 此消息中平均每符号携带的信息量是多少? 解:(1) 此消息总共有14个0、13个1、12个2、6个3,因此此消息发出的概率是:62514814183⎪⎭⎫ ⎝⎛⨯⎪⎭⎫ ⎝⎛⨯⎪⎭⎫ ⎝⎛=p此消息的信息量是:bit p I 811.87log 2=-=(2) 此消息中平均每符号携带的信息量是:bit n I 951.145/811.87/==2.5 从大量统计资料知道,男性中红绿色盲的发病率为7%,女性发病率为0.5%,如果你问一位男士:“你是否是色盲?”他的回答可能是“是”,可能是“否”,问这两个回答中各含多少信息量,平均每个回答中含有多少信息量?如果问一位女士,则答案中含有的平均自信息量是多少? 解: 男士:sym bolbit x p x p X H bitx p x I x p bit x p x I x p i i i N N N Y Y Y / 366.0)93.0log 93.007.0log 07.0()(log )()( 105.093.0log )(log )(%93)( 837.307.0log )(log )(%7)(22222222=+-=-==-=-===-=-==∑女士:symbol bit x p x p X H ii i / 045.0)995.0log 995.0005.0log 005.0()(log )()(2222=+-=-=∑2.6 设信源⎭⎬⎫⎩⎨⎧=⎥⎦⎤⎢⎣⎡17.016.017.018.019.02.0)(654321x x x x x x X P X ,求这个信源的熵,并解释为什么H(X) >log6不满足信源熵的极值性。

信息论与编码学习辅导及习题详解Information Theory and Coding Learning Coaching and Exercise ExplanationInformation Theory and Coding are two closely related scientific disciplines for signal processing, data communication, and computer networks. These two studies are often used together in applications, making the learning of the concepts of Information Theory and Coding important. This essay will guide through the basic knowledge of these two fields, provide coaching on learning Information Theory and Coding, and go through exercise explanations.I. Information TheoryA. DefinitionInformation Theory is a branch of applied mathematics and electrical engineering dealing with the quantification, storage, and communication of information. It was developed in 1948 by Claude Shannon. The fundamental problem of communication is to convey a message from a sender to a receiver without any errors. Information Theory is the study of how information is transmitted over a communication medium, and how the communication medium affects the transmission process.B. Basic ConceptsSome basic concepts of Information Theory are entropy, noise, channel capacity, coding, and error control. Entropy is a measure of uncertainty, and is used to help determine the amount of information contained in a signal.Noise is any disturbances that have an impact on the transmission of information. Channel capacity is the maximum amount of data that can be transmitted through a communication channel. Coding is the process of translating the message from the source into a form that can be understood by the destination. Error control is the process of detecting, identifying, and correcting any errors that occur during transmission.II. CodingA. DefinitionCoding is a branch of mathematics and computer science dealing with the efficient representation of data. It was developed in the late 1950s and early 1960s. Coding is used in a variety of applications, including data storage, image processing, digital signal processing, and communications. Coding techniques can greatly reduce the amount of data that needs to be stored and transmitted.B. Basic ConceptsThe main concepts of Coding are coding, signaling, modulation, coding rate, coding efficiency, and entropy. Coding is the process of transforming the message from the source into a form that can be understood by the destination. Signaling is the process of conveying information through a medium over a communication link. Modulation is the process of varying some aspect of a signal in order to transmit information. The coding rate is the number of bits required to encode one message. The coding efficiency is the ratio between the actual number of bits used to encode the message, and the total number of bits used. Entropy is a measure of the amount of information contained in a signal.III. Learning CoachingA. FundamentalsThe best way to learn the fundamentals of Information Theory and Coding is to start by familiarizing oneself with the core concepts such as entropy, noise, channel capacity, coding, and error control. Taking a college course in Information Theory and Coding is also beneficial. Alternatively, reading textbooks and studying reference material is a viable option.B. PracticePracticing the concepts of Information Theory and Coding is essential to mastering them. It is important to try to understand the material rather than memorize it. Doing practice problems is the best way to learn and build an understanding of the material.IV. Exercise ExplanationA. Information TheoryFor the Information Theory part of this exercise, the main goal is to determine the maximum rate at which data can be transmitted through a given communication channel. To do this, one needs to first calculate the entropy of the signal to determine the amount of information contained in it. Then, the channel capacity needs to be calculated by taking the s ignal’s entropy, the noise of the channel, and the coding rate into consideration.B. CodingFor the Coding part of this exercise, the main goal is to encode a message into a format that can be understood by the destination. To do this, one needsto first select an appropriate coding technique. Then, the information needs to be encoded using this technique. Finally, the encoded message needs to be transmitted through a communication link.In conclusion, Information Theory and Coding are two important scientific fields for signal processing, data communication, and computer networks. This essay has guided through the basics of these two fields, provided coaching on learning Information Theory and Coding, and gone through exercise explanations. Therefore, it is essential for one to understand the fundamentals of Information Theory and Coding and practice the concepts in order to gain mastery in these fields.。

3.1 设二元对称信道的传递矩阵为⎥⎥⎥⎦⎤⎢⎢⎢⎣⎡32313132(1) 若P(0) = 3/4, P(1) = 1/4,求H(X), H(X/Y), H(Y/X)和I(X;Y); (2) 求该信道的信道容量及其达到信道容量时的输入概率分布;解: 1)symbolbit Y X H X H Y X I symbol bit X Y H Y H X H Y X H X Y H Y H Y X H X H Y X I symbol bit y p Y H x y p x p x y p x p y x p y x p y p x y p x p x y p x p y x p y x p y p symbolbit x y p x y p x p X Y H symbolbit x p X H jj iji j i j i i i / 062.0749.0811.0)/()();(/ 749.0918.0980.0811.0)/()()()/()/()()/()();(/ 980.0)4167.0log 4167.05833.0log 5833.0()()(4167.032413143)/()()/()()()()(5833.031413243)/()()/()()()()(/ 918.0 10log )32lg 324131lg 314131lg 314332lg 3243( )/(log )/()()/(/ 811.0)41log 4143log 43()()(222221212221221211112111222=-==-==+-=+-=-=-==⨯+⨯-=-==⨯+⨯=+=+==⨯+⨯=+=+==⨯⨯+⨯+⨯+⨯-=-==⨯+⨯-=-=∑∑∑∑2)2221122max (;)log log 2(lg lg )log 100.082 /3333mi C I X Y m H bit symbol==-=++⨯=其最佳输入分布为1()2i p x =3-2某信源发送端有2个符号,i x ,i =1,2;()i p x a =,每秒发出一个符号。

U n i t1E x e r c i s e s(1)T r a n s l a t e t h e f o l l o w i n g s e n t e n c e s i n t o C h i n e s e.1.As with series resonance, the greater the resistance in the circuitthe lower the Q and, accordingly, the flatter and broader theresonance curve of either line current or circuit impedance.对于串联谐振,电路中的电阻愈大Q值就愈低,相应地线路电流或电路阻抗的谐振曲线也就愈平、愈宽。

2.A wire carrying a current looks exactly the same and weighsexactly the same as it does when it is not carrying a current.一根带电的导线其外表与重量都与不带电导线完全一样。

3.Click mouse on the waveform and drag it to change the pulserepetition rate, or directly enter a new value of the period in theprovided dialogue box, while keeping the pulse width unchanged.在波形上点击鼠标并拖动来改变脉冲重复频率,或者在提供的对话框中直接输入新的周期值,而保持脉冲宽度不变。

4.Electronics is the science and the technology of the passage ofcharged particles in a gas, in a vacuum, or in a semiconductor.Please note that particle motion confined within a metal only is not considered electronics.电子学是一门有关带电粒子在气体、真空或半导体中运动的科学技术。

参考答案( Communication English )第一章电子通信导论1.将表1-1译成中文。

表1-1 通信大事纪年代事件公元前3000年埃及人发明象形文字公元800年借鉴印度, 阿拉伯人使用我们的现行数制1440年约翰.戈登贝尔发明可移动的金属记录带1752年贝莱明.富兰克林的风筝证明了雷闪是电1827年欧姆发表欧姆定律( I = E / R)1834年 C.F.高斯和E.H.韦伯发明电磁电报1838年W.F.库克和C.维特斯通发明电报1844年S.F.B.莫尔斯演示巴尔的摩和华盛顿的电报线路1850年G.R.基尔赫夫发表基尔赫夫电路定律1858年铺设第一条越洋电缆, 并于26天后举办博览会1864年J.C.麦克斯威尔预言电磁辐射1871年电报工程师协会在伦敦成立1876年 A.G.贝尔发明电话并获专利1883年 A.爱迪生发现真空管中的电子流1884年美国电气工程师协会(AIEE)成立1887年H.赫兹证明麦克斯威的理论1900年G.马可尼传送第一个越洋无线电信号1905年R.芬森登利用无线电传送语音和音乐1906年L.弗雷斯特真空三极管放大器1915年贝尔系统完成美国大陆电话线路1918年 E.H.阿尔莫斯通发明超外差接收机电路1920年第一个定时无线广播J.R.卡尔松将取样用于广播1926年美国演示电视1927年H.布兰克在贝尔实验室发明负反馈放大器1931年电传打字机投入运营1933年 E.H.阿尔莫斯通发明调频1935年R.A.沃特森-瓦特发明第一个实用雷达1936年英国广播公司(BBC)开办第一个电视广播1937年 A.雷弗斯提出脉冲编码调制(PCM)1941年J.V.阿当拉索夫在依俄华州立大学发明计算机1945年ENIAC电子数字计算机研发于宾夕伐尼亚大学1947年布雷登、巴登和肖克利在贝尔实验室研制晶体管S.O.莱斯在贝尔实验室研究噪声的统计表征1948年 C.E.香农发表他的信息理论1950年时分多路用于电话1953年美国提出NTSC彩色电视1957年苏联发射第一个地球卫星Sputnik I1958年 A.L.肖洛和C.H.托莱斯发表激光原理仙童公司的R.诺依斯生第一硅集成电路1961年美国开始立体声调频广播1962年第一个有源卫星, Telstar I , 实现美国与欧洲的电视中继1963年贝尔系统推出按键式电话电气与电子工程师协会(IEEE)成立1963~66年研究纠错码和自适应均衡1964年电子电话交换系统(No.1ESS)投入运营1965年笫一个商用通信卫星,Early Bird,发射1968年开发电缆电视系统1971年Intel公司研制第一个单片微处理器—40041972年摩托罗拉向美国联邦通信委员会(FCC)演示蜂窝式电话1976年推出个人计算机1979年64-kb随机存取存储器标志着进入VLSI时代1980年贝尔系统研发FT3光纤通信, Philips和Sony研发光碟(CD) 1984年苹果公司研发Macintosh计算机1985年传真机普及1989年摩托罗拉推出袖珍移动电话1990~现在用微处理器进行数字信号处理的时代, 数字示波器, 数字调谐接收机, 扩频系统, ISDN, HDTV, 数字卫星系统2.将表1-2译成中文。

U n i t1E x e r c i s e s(1)T r a n s l a t e t h e f o l l o w i n g s e n t e n c e s i n t o C h i n e s e.1.As with series resonance, the greater the resistance in the circuit the lowerthe Q and, accordingly, the flatter and broader the resonance curve ofeither line current or circuit impedance.对于串联谐振,电路中的电阻愈大Q值就愈低,相应地线路电流或电路阻抗的谐振曲线也就愈平、愈宽。

2.A wire carrying a current looks exactly the same and weighs exactly thesame as it does when it is not carrying a current.一根带电的导线其外表与重量都与不带电导线完全一样。

3.Click mouse on the waveform and drag it to change the pulse repetitionrate, or directly enter a new value of the period in the provided dialogue box, while keeping the pulse width unchanged.在波形上点击鼠标并拖动来改变脉冲重复频率,或者在提供的对话框中直接输入新的周期值,而保持脉冲宽度不变。

4.Electronics is the science and the technology of the passage of chargedparticles in a gas, in a vacuum, or in a semiconductor. Please note thatparticle motion confined within a metal only is not consideredelectronics.电子学是一门有关带电粒子在气体、真空或半导体中运动的科学技术。

•乌养案(< ommunicatioii English ) I «« l.l ifKtt・2 <144 1.2 if tA I X.J MH sin ■文•亢・I."■QC*纸件阳芦个・・■的冈眞*:•仏g 中赳a的・asa低■的■ j纽依电的倦•斂也«iittrj4<t>-f ! rfA I «««««・侧・.U »!••(!* X4U •IMXWHzmi号・:7此话白It■的Lliflb滋口布舟于*/U 2MHr仙讳運眾・t«»:用P橫积欣4何从知5«皿血的出活JUH詈建为454MfcH/ 口说蠶・2進不栓(址含« ftOM*人f K'^的殆号・MAiftt ^/xmMMR.Uft4id电血■酸*运他0时・能"乂处«用11 t叫用倩n论配■宜・n■峻旳仿・WE・ oftweuA^%时ixr^fl的■«・怜进的I9X»<F.W;J:电也丈9$的mo律明Hintey)m出了制宣.忡於时仙QU思幕EZ叙的关条.心^乂疋冷MLmWM 刚151M W41 真・rti*/.ciawt>*etew<M/>^■"負xm・ati:x;A«j.ciAw«tir比,桑檢帯■:和伶・H 曲的tlftiAfck.綁・侑・毎$用0—4礼》<件遇的信—侑■ 巣怜絵时刚tMMnuc久逮过•址伶堪鸽佶・■住現比初受化.• g说心Hgg弓•■余•住怡论时刖片伶送它诙蘿■的讯■人•伶逵诂金斥■nt -1 MH/ n««.・tz 用・・«19需配・2omu枷■而(Mt广・m«UB.Rl*■鳖平$钿&的•■・IW 俅JC.E4HU也■貝尔电话实的W的於贝尔*“荻术•玄t逐灰伦文.屹14 了u・・隹做•上为/ T2M・AWCMM机期S*歎H/L'MUXC■比Mlt 粉.林“血旳U i»tti®ii(;*f;.Wlt^ toiKkMMBt.il I 2AH/.HP;的W 眾比兔G 息專E为f・X9U和.・上暹列阿加鍛尺处上过251H/ 26 Wp» ff9fi 於也見町Ifc的.ftM、Jt用』制■绘.r Md 2 26<^i?nar>M J个itwmua.HI & r«wwwm^n号加“芋件s ■娥・4.将卜址匀f—a©攵・(l> A B ml«f infomtuon towct ptvitvn RBCMMO知*c<M>n»4 ca 4 c^minncn. wbika 气MfiiMnobcM twvr praiam a wi of ^OAibk WM^r*(2> Tic bcaco<i"fn< 9wcr m incunl China ww a cvmrwucwiom 巧wa(3> Sho* tha tkc entropy B < nunnam *he« b probebilrty of Mndit^ 9 brnary 丨B equal10 the prvb<Mx> •( gdin. a b«ur)•・H> lnfor*AiK« it a of hou id inbimauM an be uvi^cnd .g^h a ixmniirMcwiacm In 4gi»c»i pen ml uf iimf(5> The ・Wrr Ik tandu tAf) and l>c tonga the tnx M w»$mi$«on. the wwc infimraaoc*cin be cemed the gem5 A M*<V I W fftUoumti(l>Sowwcl XCorac deveioped iW (irudcctroa* ccmmunKMiom vywlom in 1M3*⑵(3> He mod<(4> Hanky't ・•tmet 4MI■ .Wcr •© bandwidth aad Uc ioaser ik vmsmiMKMuse. the ncrc mbofnuuon tui coa becce>c>cd duoufh Ac tyign The Shttaaoa't icmula «> / utecre /^S MMUXJMI2T tunhukMHy' .V .V-vgMM^om pwo ougfiig(5>la)VtF. (MMF. (clSHFe-«I WWittHSbMHa ・\(l> rWw ant Co. wnpertMH tnl^rrMiMin wn:<«: —d.m«MK. ,(tue«仇andgnfwief ■tUi ⑵TWw weoewv* M<c« frodictaen. «n4 pmermi(3>Tkh>»d»»d*o040«>3l(K41/(4) A SUIM I ddlcn fhMB a s^ec^ ■ tfut ns speanim occupies J much * 3ba»4 of frcq^cacc* ihM nuy cuaid ¥ 3 tbovi I SMb(5) Ibf iynMMiv . ■ edrviMon, m ■ «<Ma>n wMr the «unc 柯*Q. M ■(inmlf. «w tlill(6> I B bbci<*iutw TV. on' a Ivminvicc vjpui 15 needed. Houewr. in cokr TV Acre arc <hctca lamanoBcc M^aoi and a p« of chroairuncc Mgnils.(?>¥«•(N) Nepto* »*€ 4itt hu arc OIIOIOI Foe the oM |MM>. «I cwt ^r1i> bii ef I tkovld be cnM. to lh» lhe lott) numto »T I»11 5. whch nodd(9) LotaAat oompresssoa ope ram b? the redundsnt ■fcmalicci coaumed n thedon M attM Le«iy comtmtnoa mvKn the bti W mtormnon ■ a cMtrolled mamer L OM> I;M|WCWIMI m«> ftfieaw t (AIM■OB ikM AUMM I I I C with lotUcu anohMh(IO! There arc gr <*<ma opcriiiovn roi©-frup<»cy mapping p9><chct»romt»c modchng qum/abon «nd codiot. jnd frane^ed啊2. WU ・U itlTRBM个人itwiupaea为鼻们口维〜矯车诃i姒的滤*.僅们用计口机血发电/ ■rr鱼鼻贞•奧* •'心是的斗utn用人;“,*心4交議城ASCHM行•吗.A$CHI£HS片发的XJJNWIOII伯的■钱亡的第-介了斤弘康/力7o ・ i 的诚创$式・uv*ar^p<tt*Vb<i»«:ia)m (b~y iMW';J. 2 -l»个的m・ Xi八了符壑"斡协好•人斯丫・■礼■報左捕呂.勿如宣・$. %•・«&♦umm ・w i.m「.n. HUfcAOrtfmWTBif^MflfAi Wl»l t ・ jy /f<1. 皿g 妣■弟"氏円总稍这厂松0位啄为也監位.■沖的* C比©"为一金“澈59如Ci)・咼図枝验・ f XIM啲I的■【山佬■: ffMMtt. ■为Att.Mtt. Mtilfl*用Hits. I Af »MI*W・审代・位拓*** .・情为1•刚匕oi«^iftw)tfnv^mr个比歸初啓么.也・个人w 当ASCH*败实乂上肋frr袖E・・w. MS・•卡■♦■am位*个■■个・i W«?ihttUAIUa7HM・ MIM2・2所稹・・ NIVft K»m I. UR»V电1•的a<>・ AW 2-2 'K n': 0 ««I IjtTEtn也分別林矿中号丘电權.h*>好約文4dl饗先仔* RH次tyMftiid4nf,:«>!Cii.-M4aiii^Ai. UHM.血比皿“利MUH・卩何*"Num次KWV■"休1I1R1I・IHF矫和卅步的從介终斜辑产住的倍幼合.秋盘nfIHMH调Rzfcm)以空沿仁追FT■占匕计im卢纶的ewn咤科6臥4宅钓的4乍占* 宜斜•♦他W・个Mi-ffll2WmU0ttRri£flH** ««*«发竹.u<t>i从节“斥・m夬itZEfrihk別•的・・妙从计«r氛的々握忘揍仇弃厦的的克爼e我定发MMJ・怖比Z F.»ttifi Q?fewM?a«i>rxa(;:.賞珂u住说&的・H外.我"«忖射«1从耳地加■診恨恢鼻•卜UlMfW・・ MiKMmf转实用的方状.1人他%«1处”皆•伦・D“・《K・ AiR^«a actw的分別IK行區细w乐・■*!:・<r曲片渺式的・再1从*:① JUBUZf•它玄n右冃則K中的疋兪口U."以。

信息英语参考答案信息英语参考答案随着全球化和科技的迅速发展,信息英语成为了一个不可或缺的技能。

无论是在学术领域还是职场上,掌握信息英语都能为我们带来更多的机会和竞争力。

然而,对于许多人来说,信息英语仍然是一个挑战。

在这篇文章中,我们将探讨一些信息英语的参考答案,帮助读者更好地理解和应用这一技能。

首先,信息英语的参考答案之一是积累词汇。

词汇是语言的基础,掌握更多的词汇将使我们更容易理解和表达信息。

建议读者通过阅读英文文章、书籍和报纸来扩大词汇量。

此外,使用词汇卡片或手机应用程序进行词汇记忆也是一个有效的方法。

记住,词汇的积累是一个长期的过程,需要持续的努力和实践。

其次,信息英语的参考答案之二是提高阅读理解能力。

阅读是获取信息的主要途径之一,因此,我们需要提高自己的阅读理解能力。

建议读者选择适合自己水平的英文材料,并尝试进行有针对性的阅读练习。

在阅读过程中,可以使用词典或在线翻译工具来帮助理解生词和句子。

此外,阅读时要注意上下文和语境,以便更好地理解文章的意思。

第三,信息英语的参考答案之三是培养听力技巧。

在信息时代,我们经常需要通过听力获取信息。

为了提高听力技巧,建议读者多听英语广播、音频课程和英语电影等。

同时,可以使用字幕来帮助理解和记忆。

此外,注意听力材料的语速和语调,并尝试模仿和跟读,以提高自己的语音和语调。

第四,信息英语的参考答案之四是加强写作能力。

写作是表达自己观点和思想的重要方式之一。

为了提高写作能力,建议读者多写英文作文、日记或博客。

可以请教老师或擅长英语写作的朋友进行修改和建议。

此外,阅读优秀的英文文章和写作范例也是提高写作能力的有效方法。

最后,信息英语的参考答案之五是创造语言环境。

语言是一种社交工具,只有在实际应用中才能真正掌握。

建议读者寻找英语角、参加英语俱乐部或与外国朋友交流,创造一个英语环境。

通过与他人交流,我们可以提高口语表达能力,并且学习到更多地道的表达方式。

综上所述,信息英语是一项重要的技能,对于我们的学术和职业发展都至关重要。

本答案是英文原版的配套答案,与翻译的中文版课本题序不太一样但内容一样。

翻译的中文版增加了题量。

2.2、Entropy of functions. Let X be a random variable taking on a finite number of values. What is the (general) inequality relationship of ()H X and ()H Y if(a) 2X Y =?(b) cos Y X =?Solution: Let ()y g x =. Then():()()x y g x p y p x ==∑.Consider any set of x ’s that map onto a single y . For this set()():():()()log ()log ()log ()x y g x x y g x p x p x p x p y p y p y ==≤=∑∑,Since log is a monotone increasing function and ():()()()x y g x p x p x p y =≤=∑.Extending this argument to the entire range of X (and Y ), we obtain():()()log ()()log ()x y x g x H X p x p x p x p x =-=-∑∑∑()log ()()yp y p y H Y ≥-=∑,with equality iff g if one-to-one with probability one.(a) 2X Y = is one-to-one and hence the entropy, which is just a function of the probabilities does not change, i.e., ()()H X H Y =.(b) cos Y X =is not necessarily one-to-one. Hence all that we can say is that ()()H X H Y ≥, which equality if cosine is one-to-one on the range of X .2.16. Example of joint entropy. Let (,)p x y be given byFind(a) ()H X ,()H Y .(b) (|)H X Y ,(|)H Y X . (c) (,)H X Y(d) ()(|)H Y H Y X -.(e) (;)I X Y(f) Draw a Venn diagram for the quantities in (a) through (e).Solution:Fig. 1 Venn diagram(a) 231()log log30.918 bits=()323H X H Y =+=.(b) 12(|)(|0)(|1)0.667 bits (/)33H X Y H X Y H X Y H Y X ==+===((,)(|)()p x y p x y p y =)((|)(,)()H X Y H X Y H Y =-)(c) 1(,)3log3 1.585 bits 3H X Y =⨯=(d) ()(|)0.251 bits H Y H Y X -=(e)(;)()(|)0.251 bits=-=I X Y H Y H Y X(f)See Figure 1.2.29 Inequalities. Let X,Y and Z be joint random variables. Prove the following inequalities and find conditions for equality.(a) )ZHYXH≥X(Z()|,|(b) )ZIYXI≥X((Z);,;(c) )XYXHZ≤Z-H-XYH),(,)(((X,,)H(d) )XYIZIZII+-XZY≥Y(););(|;;(Z|)(XSolution:(a)Using the chain rule for conditional entropy,HZYXHZXH+XH≥XYZ=),(|(Z)(||,()|)With equality iff 0YH,that is, when Y is a function of X andXZ,|(=)Z.(b)Using the chain rule for mutual information,ZIXXIZYX+=,I≥IYZ(|;)X);)(,;;(Z)(With equality iff 0ZYI, that is, when Y and Z areX)|;(=conditionally independent given X.(c)Using first the chain rule for entropy and then definition of conditionalmutual information,XZHYHIXZYX==-H-XHYYZ)()(;Z)|,|),|(X(,,)(XHXZH-Z≤,=,)()()(X|HWith equality iff 0ZYI, that is, when Y and Z areX(=|;)conditionally independent given X .(d) Using the chain rule for mutual information,);()|;();,();()|;(Z X I X Y Z I Z Y X I Y Z I Y Z X I +==+And therefore this inequality is actually an equality in all cases.4.5 Entropy rates of Markov chains.(a) Find the entropy rate of the two-state Markov chain with transition matrix⎥⎦⎤⎢⎣⎡--=1010010111p p p p P (b) What values of 01p ,10p maximize the rate of part (a)?(c) Find the entropy rate of the two-state Markov chain with transition matrix⎥⎦⎤⎢⎣⎡-=0 1 1p p P(d) Find the maximum value of the entropy rate of the Markov chain of part (c). We expect that the maximizing value of p should be less than 2/1, since the 0 state permits more information to be generated than the 1 state.Solution:(a) T he stationary distribution is easily calculated.10010*********,p p p p p p +=+=ππ Therefore the entropy rate is10011001011010101012)()()()()|(p p p H p p H p p H p H X X H ++=+=ππ(b) T he entropy rate is at most 1 bit because the process has only two states. This rate can be achieved if( and only if) 2/11001==p p , in which case the process is actually i.i.d. with2/1)1Pr()0Pr(====i i X X .(c) A s a special case of the general two-state Markov chain, the entropy rate is1)()1()()|(1012+=+=p p H H p H X X H ππ.(d) B y straightforward calculus, we find that the maximum value of)(χH of part (c) occurs for 382.02/)53(=-=p . The maximum value isbits 694.0)215()1()(=-=-=H p H p H (wrong!)5.4 Huffman coding. Consider the random variable⎪⎪⎭⎫ ⎝⎛=0.02 0.03 0.04 0.04 0.12 0.26 49.0 7654321x x x x x x x X (a) Find a binary Huffman code for X .(b) Find the expected codelength for this encoding.(c) Find a ternary Huffman code for X .Solution:(a) The Huffman tree for this distribution is(b)The expected length of the codewords for the binary Huffman code is 2.02 bits.( ∑⨯=)()(i p l X E )(c) The ternary Huffman tree is5.9 Optimal code lengths that require one bit above entropy. The source coding theorem shows that the optimal code for a random variable X has an expected length less than 1)(+X H . Given an example of a random variable for which the expected length of the optimal code is close to 1)(+X H , i.e., for any 0>ε, construct a distribution for which the optimal code has ε-+>1)(X H L .Solution: there is a trivial example that requires almost 1 bit above its entropy. Let X be a binary random variable with probability of 1=X close to 1. Then entropy of X is close to 0, but the length of its optimal code is 1 bit, which is almost 1 bit above its entropy.5.25 Shannon code. Consider the following method for generating a code for a random variable X which takes on m values {}m ,,2,1 with probabilities m p p p ,,21. Assume that the probabilities are ordered so thatm p p p ≥≥≥ 21. Define ∑-==11i k i i p F , the sum of the probabilities of allsymbols less than i . Then the codeword for i is the number ]1,0[∈i Frounded off to i l bits, where ⎥⎥⎤⎢⎢⎡=i i p l 1log . (a) Show that the code constructed by this process is prefix-free and the average length satisfies 1)()(+<≤X H L X H .(b) Construct the code for the probability distribution (0.5, 0.25, 0.125, 0.125).Solution:(a) Since ⎥⎥⎤⎢⎢⎡=i i p l 1log , we have 11log 1log +<≤i i i p l pWhich implies that 1)()(+<=≤∑X H l p L X H i i .By the choice of i l , we have )1(22---<≤ii l i l p . Thus j F , i j > differs from j F by at least il -2, and will therefore differ from i F is at least one place in the first i l bits of the binary expansion of i F . Thus thecodeword for j F , i j >, which has length i j l l ≥, differs from thecodeword for i F at least once in the first i l places. Thus no codewordis a prefix of any other codeword.(b) We build the following table3.5 AEP. Let ,,21X X be independent identically distributed random variables drawn according to theprobability mass function {}m x x p ,2,1),(∈. Thus ∏==n i i n x p x x x p 121)(),,,( . We know that)(),,,(log 121X H X X X p n n →- in probability. Let ∏==n i i n x q x x x q 121)(),,,( , where q is another probability mass function on {}m ,2,1.(a) Evaluate ),,,(log 1lim 21n X X X q n-, where ,,21X X are i.i.d. ~ )(x p . Solution: Since the n X X X ,,,21 are i.i.d., so are )(1X q ,)(2X q ,…,)(n X q ,and hence we can apply the strong law of large numbers to obtain∑-=-)(log 1lim ),,,(log 1lim 21i n X q n X X X q n 1..))((log p w X q E -=∑-=)(log )(x q x p∑∑-=)(log )()()(log )(x p x p x q x p x p )()||(p H q p D +=8.1 Preprocessing the output. One is given a communication channel withtransition probabilities )|(x y p and channel capacity );(max )(Y X I C x p =.A helpful statistician preprocesses the output by forming )(_Y g Y =. He claims that this will strictly improve the capacity.(a) Show that he is wrong.(b) Under what condition does he not strictly decrease the capacity? Solution:(a) The statistician calculates )(_Y g Y =. Since _Y Y X →→ forms a Markov chain, we can apply the data processing inequality. Hence for every distribution on x ,);();(_Y X I Y X I ≥. Let )(_x p be the distribution on x that maximizes );(_Y X I . Then__)()()(_)()()();(max );();();(max __C Y X I Y X I Y X I Y X I C x p x p x p x p x p x p ==≥≥===.Thus, the statistician is wrong and processing the output does not increase capacity.(b) We have equality in the above sequence of inequalities only if we have equality in data processing inequality, i.e., for the distribution that maximizes );(_Y X I , we have Y Y X →→_forming a Markov chain.8.3 An addition noise channel. Find the channel capacity of the following discrete memoryless channel:Where {}{}21Pr 0Pr ====a Z Z . The alphabet for x is {}1,0=X . Assume that Z is independent of X . Observe that the channel capacity depends on the value of a . Solution: A sum channel.Z X Y += {}1,0∈X , {}a Z ,0∈We have to distinguish various cases depending on the values of a .0=a In this case, X Y =,and 1);(max =Y X I . Hence the capacity is 1 bitper transmission.1,0≠≠a In this case, Y has four possible values a a +1,,1,0. KnowingY ,we know the X which was sent, and hence 0)|(=Y X H . Hence thecapacity is also 1 bit per transmission.1=a In this case Y has three possible output values, 0,1,2, the channel isidentical to the binary erasure channel, with 21=f . The capacity of this channel is 211=-f bit per transmission.1-=a This is similar to the case when 1=a and the capacity is also 1/2 bit per transmission.8.5 Channel capacity. Consider the discrete memoryless channel)11 (mod Z X Y +=, where ⎪⎪⎭⎫ ⎝⎛=1/3 1/3, 1/3,3 2,,1Z and {}10,,1,0 ∈X . Assume thatZ is independent of X .(a) Find the capacity.(b) What is the maximizing )(*x p ?Solution: The capacity of the channel is );(max )(Y X I C x p =)()()|()()|()();(Z H Y H X Z H Y H X Y H Y H Y X I -=-=-=bits 311log)(log );(=-≤Z H y Y X I , which is obtained when Y has an uniform distribution, which occurs when X has an uniform distribution.(a)The capacity of the channel is bits 311log /transmission.(b) The capacity is achieved by an uniform distribution on the inputs.10,,1,0for 111)( ===i i X p 8.12 Time-varying channels. Consider a time-varying discrete memoryless channel. Let n Y Y Y ,,21 be conditionally independent givenn X X X ,,21 , with conditional distribution given by ∏==ni i i i x y p x y p 1)|()|(.Let ),,(21n X X X X =, ),,(21n Y Y Y Y =. Find );(max )(Y X I x p . Solution:∑∑∑∑∑=====--≤-≤-=-=-=-=ni i n i i i n i i ni i i ni i i n p h X Y H Y H X Y H Y H X Y Y Y H Y H X Y Y Y H Y H X Y H Y H Y X I 111111121))(1()|()()|()(),,|()()|,,()()|()();(With equlity ifnX X X ,,21 is chosen i.i.d. Hence∑=-=ni i x p p h Y X I 1)())(1();(max .10.2 A channel with two independent looks at Y . Let 1Y and 2Y be conditionally independent and conditionally identically distributed givenX .(a) Show );();(2),;(21121Y Y I Y X I Y Y X I -=. (b) Conclude that the capacity of the channelX(Y1,Y2)is less than twice the capacity of the channelXY1Solution:(a) )|,(),(),;(212121X Y Y H Y Y H Y Y X I -=)|()|();()()(212121X Y H X Y H Y Y I Y H Y H ---+=);();(2);();();(2112121Y Y I Y X I Y Y I Y X I Y X I -=-+=(b) The capacity of the single look channel 1Y X → is );(max 1)(1Y X I C x p =.Thecapacityof the channel ),(21Y Y X →is11)(211)(21)(22);(2max );();(2max ),;(max C Y X I Y Y I Y X I Y Y X I C x p x p x p =≤-==10.3 The two-look Gaussian channel. Consider the ordinary Shannon Gaussian channel with two correlated looks at X , i.e., ),(21Y Y Y =, where2211Z X Y Z X Y +=+= with a power constraint P on X , and ),0(~),(221K N Z Z ,where⎥⎦⎤⎢⎣⎡=N N N N K ρρ. Find the capacity C for (a) 1=ρ (b) 0=ρ (c) 1-=ρSolution:It is clear that the two input distribution that maximizes the capacity is),0(~P N X . Evaluating the mutual information for this distribution,),(),()|,(),()|,(),(),;(max 212121212121212Z Z h Y Y h X Z Z h Y Y h X Y Y h Y Y h Y Y X I C -=-=-==Nowsince⎪⎪⎭⎫⎝⎛⎥⎦⎤⎢⎣⎡N N N N N Z Z ,0~),(21ρρ,wehave)1()2log(21)2log(21),(222221ρππ-==N e Kz e Z Z h.Since11Z X Y +=, and22Z X Y +=, wehave ⎪⎪⎭⎫⎝⎛⎥⎦⎤⎢⎣⎡++++N N P N N P N Y Y P P ,0~),(21ρρ, And ))1(2)1(()2log(21)2log(21),(222221ρρππ-+-==PN N e K e Y Y h Y .Hence⎪⎪⎭⎫⎝⎛++=-=)1(21log 21),(),(21212ρN P Z Z h Y Y h C(a) 1=ρ.In this case, ⎪⎭⎫⎝⎛+=N P C 1log 21, which is the capacity of a single look channel.(b) 0=ρ. In this case, ⎪⎭⎫⎝⎛+=N P C 21log 21, which corresponds to using twice the power in a single look. The capacity is the same as the capacity of the channel )(21Y Y X +→.(c) 1-=ρ. In this case, ∞=C , which is not surprising since if we add1Y and 2Y , we can recover X exactly.10.4 Parallel channels and waterfilling. Consider a pair of parallel Gaussianchannels,i.e.,⎪⎪⎭⎫⎝⎛+⎪⎪⎭⎫ ⎝⎛=⎪⎪⎭⎫ ⎝⎛212121Z Z X X Y Y , where⎪⎪⎭⎫ ⎝⎛⎥⎥⎦⎤⎢⎢⎣⎡⎪⎪⎭⎫ ⎝⎛222121 00 ,0~σσN Z Z , And there is a power constraint P X X E 2)(2221≤+. Assume that 2221σσ>. At what power does the channel stop behaving like a single channel with noise variance 22σ, and begin behaving like a pair of channels? Solution: We will put all the signal power into the channel with less noise until the total power of noise+signal in that channel equals the noise power in the other channel. After that, we will split anyadditional power evenly between the two channels. Thus the combined channel begins to behave like a pair of parallel channels when the signal power is equal to the difference of the two noise powers, i.e., when 22212σσ-=P .。