Artificial Neural Networks, Chapter 2


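2-1 Why is the squared loss function unsuitable for classification problems?

A loss function is a non-negative real-valued function that quantifies the discrepancy between the model's prediction and the true label.

We normally optimize the parameters by minimizing the loss function. If the function we construct is discontinuous, or piecewise constant with a derivative of 0, it gives no useful signal for evaluating or improving the model.

Intuitively, for a given classification problem the squared-error loss is bounded above (even when every label is wrong, the loss is a finite value), whereas cross-entropy can use the whole non-negative range to reflect how far the optimization has progressed.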
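
The boundedness argument can be illustrated with a small sketch. It assumes a one-hot target and, as a simplification, looks only at the component of the true class; the function names are chosen here for illustration and do not come from the text.

```python
import math


def squared_error(p_true: float) -> float:
    """Squared error between the one-hot target (1.0) and the probability
    assigned to the true class. It can never exceed 1."""
    return (1.0 - p_true) ** 2


def cross_entropy(p_true: float) -> float:
    """Cross-entropy for a one-hot target: -log of the probability assigned
    to the true class. It grows without bound as p_true approaches 0."""
    return -math.log(p_true)


# As the prediction gets worse, the squared error saturates near 1
# while the cross-entropy keeps increasing.
for p in (0.9, 0.5, 0.1, 1e-3, 1e-6):
    print(f"p_true={p:<8g} squared={squared_error(p):.6f} "
          f"cross_entropy={cross_entropy(p):.4f}")
```

In this sketch the squared error barely distinguishes a prediction of 0.001 from one of 0.000001, while the cross-entropy still separates them clearly.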

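Fundamentally, the squared error and the cross-entropy mean different things.

From a probabilistic point of view, the squared loss assumes that the model output follows a Gaussian distribution whose mean is the predicted value, and the loss is the negative log-likelihood of the true value under this predictive distribution. The softmax (cross-entropy) loss is the negative log-likelihood of the true label.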
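
The probabilistic reading can be written out as a short derivation. The notation is assumed here for illustration: $f(x;\theta)$ is the model's prediction, $\sigma^2$ is a fixed noise variance, and $z_k$ are the scores (logits) fed into the softmax.

$$p(y \mid x) = \mathcal{N}\!\left(y;\, f(x;\theta),\, \sigma^{2}\right) \;\Rightarrow\; -\log p(y \mid x) = \frac{1}{2\sigma^{2}}\left(y - f(x;\theta)\right)^{2} + \frac{1}{2}\log\!\left(2\pi\sigma^{2}\right)$$

$$p(y = c \mid x) = \frac{e^{z_c}}{\sum_{k=1}^{C} e^{z_k}} \;\Rightarrow\; -\log p(y = c \mid x) = -z_c + \log \sum_{k=1}^{C} e^{z_k}$$

With $\sigma$ held fixed, minimizing the first expression is the same as minimizing the squared error, which is only natural when $y$ is a continuous quantity. The second expression is the cross-entropy loss for a one-hot label.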

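In binary classification $y \in \{+1, -1\}$; in $C$-class classification $y \in \{1, 2, 3, \cdots, C\}$.

The output of a classification problem is therefore a discrete value.

Labels in a classification problem have no notion of continuity.

The distance between labels has no practical meaning either, so the squared difference between the prediction vector and the label vector does not reflect how well the classification problem has been optimized.

For example, with classes 1, 2 and 3 and a true class of 1, misclassifying the sample as 2 or as 3 should be equally wrong. Yet with the loss $\frac{1}{2}(y - \hat{y})^{2}$, predicting 2 gives a loss of 1/2 while predicting 3 gives a loss of 2. The same outcome (a misclassification) receives different values, which misleads parameter optimization.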
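
The numbers in this example can be checked with a short sketch. The half squared loss follows the example above; the probability vectors passed to the cross-entropy are made-up values chosen only to represent "confidently predicts class 2" and "confidently predicts class 3".

```python
import math


def half_squared_loss(y_true: int, y_pred: int) -> float:
    """Loss 1/2 * (y - y_hat)^2 applied directly to integer class labels."""
    return 0.5 * (y_true - y_pred) ** 2


def cross_entropy(y_true: int, probs) -> float:
    """Cross-entropy for a one-hot label; classes are numbered from 1."""
    return -math.log(probs[y_true - 1])


# True class is 1. Squared loss on labels treats "3" as four times worse than "2".
loss_pred_2 = half_squared_loss(1, 2)
loss_pred_3 = half_squared_loss(1, 3)
print(loss_pred_2, loss_pred_3)

# Hypothetical predicted distributions: both put probability 0.1 on the true class.
wrong_as_2 = [0.1, 0.8, 0.1]
wrong_as_3 = [0.1, 0.1, 0.8]
ce_2 = cross_entropy(1, wrong_as_2)
ce_3 = cross_entropy(1, wrong_as_3)
print(ce_2 == ce_3)  # both mistakes are penalized equally
```

Cross-entropy depends only on the probability assigned to the true class, so it does not impose an artificial ordering on the class labels.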

For classification problems we therefore generally use the cross-entropy loss function for evaluation.

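2-2 In linear regression, suppose each sample $(x^{(n)}, y^{(n)})$ is assigned a weight $r^{(n)}$, so that the empirical risk function is

$$R(w) = \frac{1}{2}\sum_{n=1}^{N} r^{(n)}\left(y^{(n)} - w^{T}x^{(n)}\right)^{2}.$$

Compute the optimal parameter $w^{*}$ and analyze the role of the weights $r^{(n)}$.

Answer: The task is to find the optimal parameter $w^{*}$, that is, the point where the derivative is 0. First collect the weights in a diagonal matrix $P = \mathrm{diag}\left(r^{(1)}, \dots, r^{(N)}\right)$, and write $x$, $y$ and $w$ in vector (matrix) form as $X = [x^{(1)}, \dots, x^{(N)}]$ (one sample per column), $Y = [y^{(1)}, \dots, y^{(N)}]^{T}$ and $\Omega$. The risk then becomes

$$R(\Omega) = \frac{1}{2}\left(Y - X^{T}\Omega\right)^{T} P \left(Y - X^{T}\Omega\right).$$

Taking the derivative and setting it to zero:

$$\frac{\partial R}{\partial \Omega} = -XP\left(Y - X^{T}\Omega\right) = 0,$$

which gives, when $XPX^{T}$ is invertible,

$$\Omega^{*} = \left(XPX^{T}\right)^{-1}XPY.$$

Compare this with the solution without $P$:

$$\Omega_{\text{without } P} = \left(XX^{T}\right)^{-1}XY.$$

Put simply, $r^{(n)}$ attaches a weight to each sample, and samples with larger weights have a greater influence on the optimal value $\Omega^{*}$.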
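
A minimal numerical sketch of the closed-form solution $\Omega^{*} = (XPX^{T})^{-1}XPY$ is given below. It assumes each input is $x^{(n)} = [1, t_n]^{T}$ (a bias term plus one scalar feature), so $XPX^{T}$ is a 2×2 matrix that can be inverted by hand. The function name and the data are made up for illustration.

```python
def weighted_least_squares(ts, ys, rs):
    """Solve (X P X^T) w = X P Y for x^(n) = [1, t_n]; returns (bias, slope).

    ts: scalar features, ys: targets, rs: non-negative sample weights.
    """
    # Entries of the symmetric 2x2 matrix X P X^T = sum_n r_n x_n x_n^T.
    a00 = sum(rs)
    a01 = sum(r * t for r, t in zip(rs, ts))
    a11 = sum(r * t * t for r, t in zip(rs, ts))
    # Entries of the vector X P Y = sum_n r_n y_n x_n.
    b0 = sum(r * y for r, y in zip(rs, ys))
    b1 = sum(r * t * y for r, t, y in zip(rs, ts, ys))
    det = a00 * a11 - a01 * a01
    if det == 0:
        raise ValueError("X P X^T is singular; the solution is not unique")
    # Apply the explicit inverse of a 2x2 matrix.
    bias = (a11 * b0 - a01 * b1) / det
    slope = (a00 * b1 - a01 * b0) / det
    return bias, slope


# Three points on the line y = t plus one outlier at t = 3.
ts = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 1.0, 2.0, 10.0]

uniform = weighted_least_squares(ts, ys, [1.0, 1.0, 1.0, 1.0])
downweighted = weighted_least_squares(ts, ys, [1.0, 1.0, 1.0, 0.0])
print("equal weights:       ", uniform)
print("outlier weight = 0.0:", downweighted)
```

With equal weights the outlier pulls the fitted line away from $y = t$. Setting its weight to 0 removes its influence entirely, which matches the interpretation that $r^{(n)}$ controls how much each sample contributes to $\Omega^{*}$.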

Fundamentals of Computational Intelligence: Course Syllabus

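Course overview

Artificial intelligence has become a new focus of international competition. It is widely used in image recognition, speech translation, behavior analysis and other areas. New products such as intelligent robots, unmanned stores, machine translation, shared cars and autonomous driving have attracted great attention, and its applications in urban planning, intelligent transportation and related fields are also distinctive.

With the rise of a new round of technological revolution and industrial transformation, the evolution of network infrastructure, the formation of big data, innovations in theory and algorithms, and growth in computing power, the new generation of artificial intelligence is creating new markets and new opportunities and is comprehensively reshaping the development models and landscape of traditional industries.

The purpose of this course is to give students an understanding of the fundamentals of computational intelligence and a command of its main algorithms, including neural networks, fuzzy computing and evolutionary algorithms.

Through this course, students can learn and apply up-to-date knowledge and techniques in areas such as deep learning.

The neural network part mainly covers different neural network architectures, the determination of network weights, and learning algorithms. It focuses on neural networks represented by the multilayer perceptron and its BP learning algorithm, radial basis function networks, and the application of neural networks to classification and function fitting. The fuzzy logic and fuzzy control part mainly covers basic knowledge of fuzzy sets, membership functions, fuzzy logic, fuzzy inference and fuzzy control. The evolutionary computation part mainly covers genetic algorithms, ant colony algorithms, particle swarm algorithms and so on.

Teaching objectives

Students should understand the fundamentals of computational intelligence, master its main algorithms such as neural networks, fuzzy computing and evolutionary algorithms, and build a sound knowledge base for subsequent courses.

1. Master the basic theory, methods and techniques of computational intelligence, including fundamentals of artificial neural networks, BP neural networks and their design, self-organizing neural networks and their design, feedback neural networks, an introduction to fuzzy logic and its applications, fuzzy sets and membership functions, fuzzy pattern recognition, fuzzy cluster analysis, fuzzy inference, fuzzy control theory, basic concepts of evolutionary algorithms, and the genetic algorithm and particle swarm algorithm among evolutionary algorithms.

2. Receive basic training in using computational intelligence to analyze problems, carry out research and design solutions in modern traffic engineering, and gain some practice in using modern tools.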

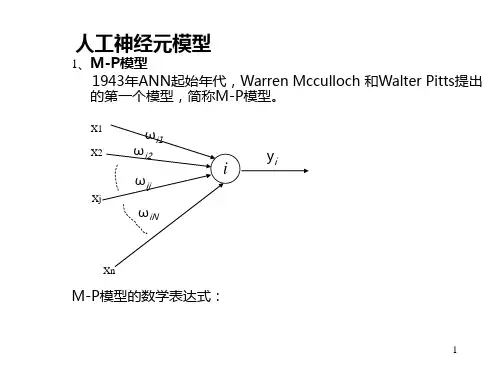

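Machine learning paper

Title: Artificial Neural Network Models and Simulation

School: School of Electronic Engineering

Major: Circuits and Systems

Name:

Student ID:

Abstract

Artificial neural networks (ANN) are generally regarded as a highly sophisticated, biologically inspired analytical technique that can fit extremely complex nonlinear functions.

It is a rapidly developing technology with a very wide range of applications, and it has achieved broad success in artificial intelligence, automatic control, pattern recognition and many other fields.

ANN is an important machine learning tool.

This paper first briefly reviews some relevant knowledge about biological neural networks and, on that basis, introduces artificial neural networks.

It then outlines the history and current state of ANN and summarizes its characteristics.

The second part explains the models and structures of several landmark ANNs, such as the perceptron, linear neural networks, BP networks and feedback networks, gives simple application examples for each, and uses the powerful simulation software MATLAB to simulate and analyze their performance.

The paper closes with a summary and some of the author's reflections.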

ABSTRACT

Artificial neural network (ANN) is commonly known as a biologically inspired, highly sophisticated analytical technique, capable of capturing highly complex non-linear functions. ANN is a rapidly developing and widely applied technique, and it has been applied successfully in domains such as artificial intelligence, automatic control, pattern recognition and so on. In addition, ANN is a significant means of machine learning. In this paper, the author first presents some basic knowledge of biological neural networks, on which the introduction of artificial neural networks is based. Then the author briefly describes the history and present state of ANN and summarizes the characteristics of ANN. In the second part of the paper, the models and structures of the ANNs that mark ANN's development are emphasized, such as the perceptron, linear neural network, BP neural network, recurrent network and so on, and some examples based on those networks are illustrated. In addition, the author simulates the performance of the ANNs with a powerful software package, MATLAB. Finally, the author puts forward the conclusions of this paper and his thoughts.

Contents

Chapter 1 Neural Networks

1.1 Biological neural networks

1.2 Artificial neural networks

1.2.1 Origin of artificial neural networks

1.2.2 Development of artificial neural networks

1.2.3 Current state of artificial neural networks

1.3 Characteristics of artificial neural networks

Chapter 2 Artificial Neural Network Models and Simulation

2.1 Artificial neuron modeling

2.1.1 Basic structure of an artificial neuron

2.1.2 Activation functions

2.2 Perceptron

2.2.1 Perceptron model

2.2.2 Perceptron network design example

2.3 Linear neural networks

2.3.1 Linear neural network model

2.3.2 Linear neural network design example

2.4 BP networks

2.4.1 BP network model

2.4.2 BP network design example

2.5 Radial basis function networks

2.5.1 Radial basis function network model

2.5.2 Radial basis function network design example

2.6 Competitive networks

2.6.1 Competitive network model

2.6.2 Competitive network design example

2.7 Feedback networks

2.7.1 Elman network

2.7.2 Hopfield network

Chapter 3 Summary

References

Chapter 1 Neural Networks

Artificial neural networks (ANN) form an interdisciplinary field that grew out of abstracting biological neural networks and building simplified models with the combined use of information processing techniques and mathematical tools.

Chapter 1 test

1. [True/False] (1 point) Synapse is the place where neurons connect in function. It is composed of presynaptic membrane, synaptic space and postsynaptic membrane. ( ) A. True B. False

2. [Single choice] (1 point) Biological neurons can be divided into sensory neurons, motor neurons and ( ) according to their functions. A. multipolar neurons B. interneurons C. Pseudounipolar neural networks D. bipolar neurons

3. [True/False] (1 point) Neurons and glial cells are the two major parts of the nervous system. ( ) A. False B. True

4. [True/False] (1 point) Neurons are highly polarized cells, which are mainly composed of two parts: the cell body and the synapse. ( ) A. True B. False

5. [True/False] (1 point) The human brain is an important part of the nervous system, which contains more than 86 billion neurons. It is the central information processing organization of human beings. ( ) A. True B. False

Chapter 2 test

1. [Single choice] (1 point) In 1989, Mead, the father of VLSI, published his monograph "( )", in which a genetic neural network model based on evolutionary system theory was proposed. A. Analog VLSI and Neural Systems B. Journal Neural Networks C. Learning Machines D. Perceptrons: An Introduction to Computational Geometry

2. [True/False] (1 point) In 1989, Yann Lecun proposed convolutional neural network and applied it to image processing, which should be the earliest application field of deep learning algorithm. ( ) A. True B. False

3. [True/False] (1 point) In 1954, Eccles, a neurophysiologist at the University of Melbourne, summarized the principle of Dale, a British physiologist, that "each neuron secretes only one kind of transmitter". ( ) A. False B. True

4. [True/False] (1 point) In 1972, Professor Kohonen of Finland proposed a self-organizing feature map (SOFM) neural network model. ( ) A. True B. False

5. [True/False] (1 point) Prediction and evaluation is an activity of scientific calculation and evaluation of some characteristics and development status of things or events in the future according to the known information of objective objects. ( ) A. True B. False

Chapter 3 test

1. [True/False] (1 point) The function of transfer function in neurons is to get a new mapping output of summer according to the specified function relationship, and then completes the training of artificial neural network. ( ) A. True B. False

2. [True/False] (1 point) The determinant changes sign when two rows (or two columns) are exchanged. The value of determinant is zero when two rows (or two columns) are same. ( ) A. False B. True

3. [True/False] (1 point) There are two kinds of phenomena in the objective world. The first is the phenomenon that will happen under certain conditions, which is called inevitable phenomenon. The second kind is the phenomenon that may or may not happen under certain conditions, which is called random phenomenon. ( ) A. False B. True

4. [True/False] (1 point) Logarithmic S-type transfer function, namely Sigmoid function, is also called S-shaped growth curve in biology. ( ) A. True B. False

5. [True/False] (1 point) Rectified linear unit (ReLU), similar to the slope function in mathematics, is the most commonly used transfer function of artificial neural network. ( ) A. True B. False

Chapter 4 test

1. [Single choice] (1 point) The perceptron learning algorithm is driven by misclassification, so the stochastic gradient descent method is used to optimize the loss function. ( ) A. misclassification B. maximum C. minimum D. correct

2. [True/False] (1 point) Perceptron is a single-layer neural network, or neuron, which is the smallest unit of neural network. ( ) A. True B. False

3. [True/False] (1 point) When the perceptron is learning, each sample will be input into the neuron as a stimulus. The input signal is the feature of each sample, and the expected output is the category of the sample. When the output is different from the category, we can adjust the synaptic weight and bias value until the output of each sample is the same as the category. ( ) A. True B. False

4. [True/False] (1 point) If the symmetric hard limit function is selected for the transfer function, the output can be expressed as […]. If the inner product of the row vector and the input vector in the weight matrix is greater than or equal to -b, the output is 1, otherwise the output is -1. ( ) A. False B. True

5. [True/False] (1 point) The basic idea of perceptron learning algorithm is to input samples into the network step by step, and adjust the weight matrix of the network according to the difference between the output result and the ideal output, that is to solve the optimization problem of loss function L(w,b). ( ) A. True B. False

Chapter 5 test

1. [Single choice] (1 point) The output of BP neural network is ( ) of neural network. A. the input of the last layer B. the output of the second layer C. the output of the last layer D. the input of the second layer

2. [True/False] (1 point) BP neural network has become one of the most representative algorithms in the field of artificial intelligence. It has been widely used in signal processing, pattern recognition, machine control (expert system, data compression) and other fields. ( ) A. False B. True

3. [True/False] (1 point) In 1974, Paul Werbos of the natural science foundation of the United States first proposed the use of error back propagation algorithm to train artificial neural networks in his doctoral dissertation of Harvard University, and deeply analyzed the possibility of applying it to neural networks, effectively solving the XOR loop problem that single sensor cannot handle. ( ) A. False B. True

4. [True/False] (1 point) In the standard BP neural network algorithm and momentum BP algorithm, the learning rate is a constant that remains constant throughout the training process, and the performance of the learning algorithm is very sensitive to the selection of the learning rate. ( ) A. True B. False

5. [True/False] (1 point) L-M algorithm is mainly proposed for super large scale neural network, and it is very effective in practical application. ( ) A. True B. False

Chapter 6 test

1. [True/False] (1 point) RBF neural network is a novel and effective feedforward neural network, which has the best local approximation and global optimal performance. ( ) A. True B. False

2. [True/False] (1 point) At present, RBF neural network has been successfully applied in nonlinear function approximation, time series analysis, data classification, pattern recognition, information processing, image processing, system modeling, control and fault diagnosis. ( ) A. True B. False

3. [True/False] (1 point) The basic idea of RBF neural network is to use radial basis function as the "basis" of hidden layer hidden unit to form hidden layer space, and hidden layer transforms input vector. The input data transformation of low dimensional space is mapped into high-dimensional space, so that the problem of linear separability in low-dimensional space can be realized in high-dimensional space. ( ) A. True B. False

4. [True/False] (1 point) For the learning algorithm of RBF neural network, the key problem is to determine the center parameters of the output layer node reasonably. ( ) A. True B. False

5. [True/False] (1 point) The method of selecting the center of RBF neural network by self-organizing learning is to select the center of RBF neural network by k-means clustering method, which belongs to supervised learning method. ( ) A. True B. False

Chapter 7 test

1. [True/False] (1 point) In terms of algorithm, ADALINE neural network adopts W-H learning rule, also known as the least mean square (LMS) algorithm. It is developed from the perceptron algorithm, and its convergence speed and accuracy have been greatly improved. ( ) A. True B. False

2. [True/False] (1 point) ADALINE neural network has simple structure and multi-layer structure. It is flexible in practical application and widely used in signal processing, system identification, pattern recognition and intelligent control. ( ) A. False B. True

3. [True/False] (1 point) When there are multiple ADALINE in the network, the adaptive linear neural network is also called Madaline which means many Adaline neural networks. ( ) A. True B. False

4. [True/False] (1 point) The algorithm used in single-layer ADALINE network is LMS algorithm, which is similar to the algorithm of perceptron, and also belongs to supervised learning algorithm. ( ) A. True B. False

5. [True/False] (1 point) In practical application, the inverse of the correlation matrix and the correlation coefficient are not easy to obtain, so the approximate steepest descent method is needed in the algorithm design. The core idea is that the actual mean square error of the network is replaced by the mean square error of the k-th iteration. ( ) A. False B. True

Chapter 8 test

1. [Single choice] (1 point) Hopfield neural network is a kind of neural network which combines storage system and binary system. It not only provides a model to simulate human memory, but also guarantees the convergence to ( ). A. local minimum B. minimum C. local maximum D. maximum

2. [True/False] (1 point) At present, researchers have successfully applied Hopfield neural network to solve the traveling salesman problem (TSP), which is the most representative of optimization combinatorial problems. ( ) A. False B. True

3. [True/False] (1 point) In 1982, American scientist John Joseph Hopfield put forward a kind of feedback neural network "Hopfield neural network" in his paper Neural Networks and Physical Systems with Emergent Collective Computational Abilities. ( ) A. False B. True

4. [True/False] (1 point) Under the excitation of input x, DHNN enters a dynamic change process, until the state of each neuron is no longer changed, it reaches a stable state. This process is equivalent to the process of network learning and memory, and the final output of the network is the value of each neuron in the stable state. ( ) A. True B. False

5. [True/False] (1 point) The order in which neurons adjust their states is not unique. It can be considered that a certain order can be specified or selected randomly. The process of neuron state adjustment includes three situations: from 0 to 1, and 1 to 0 and unchanged. ( ) A. True B. False

Chapter 9 test

1. [True/False] (1 point) Compared with GPU, CPU has higher processing speed, and has significant advantages in processing repetitive tasks. ( ) A. False B. True

2. [True/False] (1 point) At present, DCNN has become one of the core algorithms in the field of image recognition, but it is unstable when there is a small amount of learning data. ( ) A. True B. False

3. [True/False] (1 point) In the field of target detection and classification, the task of the last layer of neural network is to classify. ( ) A. False B. True

4. [True/False] (1 point) In AlexNet, there are 650000 neurons with more than 600000 parameters distributed in five convolution layers and three fully connected layers and Softmax layers with 1000 categories. ( ) A. False B. True

5. [True/False] (1 point) VGGNet is composed of two parts: the convolution layer and the full connection layer, which can be regarded as the deepened version of AlexNet. ( ) A. False B. True

Chapter 10 test

1. [Single choice] (1 point) The essence of the optimization process of D and G is to find the ( ). A. maximum B. local maxima C. minimax D. minimum

2. [True/False] (1 point) In the artificial neural network, the quality of modeling will directly affect the performance of the generative model, but a small amount of prior knowledge is needed for the actual case modeling. ( ) A. True B. False

3. [True/False] (1 point) A GAN mainly includes a generator G and a discriminator D. ( ) A. True B. False

4. [True/False] (1 point) Because the generative adversarial network does not need to distinguish the lower bound and approximate inference, it avoids the partition function calculation problem caused by the traditional repeated application of Markov chain learning mechanism, and improves the network efficiency. ( ) A. True B. False

5. [True/False] (1 point) From the perspective of artificial intelligence, GAN uses neural network to guide neural network, and the idea is very strange. ( ) A. True B. False

Chapter 11 test

1. [True/False] (1 point) The characteristic of Elman neural network is that the output of the hidden layer is delayed and stored by the feedback layer, and the feedback is connected to the input of the hidden layer, which has the function of information storage. ( ) A. False B. True

2. [True/False] (1 point) In Elman network, the transfer function of feedback layer is nonlinear function, and the transfer function of output layer is linear function. ( ) A. True B. False

3. [True/False] (1 point) The feedback layer is used to memorize the output value of the previous time of the hidden layer unit and return it to the input. Therefore, Elman neural network has dynamic memory function. ( ) A. True B. False

4. [True/False] (1 point) The neurons in the hidden layer of Elman network adopt the tangent S-type transfer function, while the output layer adopts the linear transfer function. If there are enough neurons in the feedback layer, the combination of these transfer functions can make Elman neural network approach any function with arbitrary precision in finite time. ( ) A. False B. True

5. [True/False] (1 point) Elman neural network is a kind of dynamic recurrent network, which can be divided into full feedback and partial feedback. In the partial recurrent network, the feedforward connection weight can be modified, and the feedback connection is composed of a group of feedback units, and the connection weight cannot be modified. ( ) A. True B. False

Chapter 12 test

1. [Single choice] (1 point) The loss function of AdaBoost algorithm is ( ). A. exponential function B. linear function C. logarithmic function D. nonlinear function

2. [True/False] (1 point) Boosting algorithm is the general name of a class of algorithms. Their common ground is to construct a strong classifier by using a group of weak classifiers. Weak classifier mainly refers to the classifier whose prediction accuracy is not high and far below the ideal classification effect. Strong classifier mainly refers to the classifier with high prediction accuracy. ( ) A. True B. False

3. [True/False] (1 point) Among the many improved boosting algorithms, the most successful one is the AdaBoost (adaptive boosting) algorithm proposed by Yoav Freund of University of California San Diego and Robert Schapire of Princeton University in 1996. ( ) A. False B. True

4. [True/False] (1 point) The most basic property of AdaBoost is that it reduces the training error continuously in the learning process, that is, the classification error rate on the training data set until each weak classifier is combined into the final ideal classifier. ( ) A. False B. True

5. [True/False] (1 point) The main purpose of adding regularization term into the formula of calculating strong classifier is to prevent the overfitting of AdaBoost algorithm, which is usually called step size in algorithm. ( ) A. False B. True

Chapter 13 test

1. [Single choice] (1 point) The core layer of SOFM neural network is ( ). A. hidden layer B. input layer C. competition layer D. output layer

2. [Single choice] (1 point) In order to divide the input patterns into several classes, the distance between input pattern vectors should be measured according to the similarity. ( ) are usually used. A. Sine method B. Cosine method C. Euclidean distance method D. Euclidean distance method and cosine method

3. [True/False] (1 point) SOFM neural networks are different from other artificial neural networks in that they adopt competitive learning rather than backward propagation error correction learning method similar to gradient descent, and in a sense, they use neighborhood functions to preserve topological properties of input space. ( ) A. True B. False

4. [True/False] (1 point) For SOFM neural network, the competitive transfer function (CTF) response is 0 for the winning neurons, and 1 for other neurons. ( ) A. False B. True

5. [True/False] (1 point) When the input pattern to the network does not belong to any pattern in the network training samples, SOFM neural network can only classify it into the closest mode. ( ) A. False B. True

Chapter 14 test

1. [Single choice] (1 point) The neural network toolbox contains ( ) module libraries. A. five B. three C. six D. four

2. [Single choice] (1 point) The "netprod" in the network input module can be used for ( ). A. dot division B. addition or subtraction C. dot multiplication or dot division D. dot multiplication

3. [True/False] (1 point) The "dotrod" in the weight setting module is a normal dot product weight function. ( ) A. False B. True

4. [True/False] (1 point) The mathematical model of single neuron is y=f(wx+b). ( ) A. False B. True

5. [True/False] (1 point) The neuron model can be divided into three parts: input module, transfer function and output module. ( ) A. False B. True

Chapter 15 test

1. [True/False] (1 point) In large-scale system software design, we need to consider the logical structure and physical structure of software architecture. ( ) A. False B. True

2. [True/False] (1 point) The menu property bar has "label" and "tag". The label is equivalent to the tag value of the menu item, and the tag is the name of the menu display. ( ) A. False B. True

3. [True/False] (1 point) It is necessary to determine the structure and parameters of the neural network, including the number of hidden layers, the number of neurons in the hidden layer and the training function. ( ) A. False B. True

4. [True/False] (1 point) The description of the property "tooltipstring" is the prompt that appears when the mouse is over the object. ( ) A. True B. False

5. [True/False] (1 point) The description of the property "string" is: the text displayed on the object. ( ) A. False B. True

Chapter 16 test

1. [Single choice] (1 point) The description of the parameter "validator" of the wx.TextCtrl class is: the ( ). A. style of control B. validator of control C. size of control D. position of control

2. [Single choice] (1 point) The description of the parameter "defaultDir" of class wx.FileDialog is: ( ). A. save the file B. default path C. open the file D. default file name

3. [True/False] (1 point) In the design of artificial neural network software based on wxPython, creating GUI means building a framework in which various controls can be added to complete the design of software functions. ( ) A. False B. True

4. [True/False] (1 point) When the window event occurs, the main event loop will respond and assign the appropriate event handler to the window event. ( ) A. False B. True

5. [True/False] (1 point) From the user's point of view, the wxPython program is idle for a large part of the time, but when the user or the internal action of the system causes the event, and then the event will drive the wxPython program to produce the corresponding action. ( ) A. True B. False