Teaching Outline: English Technical Vocabulary for Face Recognition


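[English Lesson Plan] Facial Features: Eyes, Nose, and Mouth

Introduction: People have long been fascinated by facial features.

Among them, the eyes, nose, and mouth are regarded as the most important and most basic.

These features not only give the face its beauty; to some extent they also reflect a person's character, health, cultural background, and regional origin.

This lesson plan therefore focuses on facial features and their individual characteristics, with the aim of improving students' spoken English, broadening their horizons, and adding to their knowledge.

Teaching Objectives:
1. Understand the basic components of the face, including the eyes, nose, and mouth.

2. Learn to describe different facial features and to infer a person's age, gender, and cultural customs from them.

3. Improve students' spoken English through listening, speaking, reading, and writing activities.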

Teaching Procedures:

Step 1. The teacher introduces the topic and guides students to explore different facial features
T: Good morning, students. Let's start today's class by talking about someone's face. Could you tell me what facial features you can distinguish when you look at someone's face?
(Students answer: eyes, nose, mouth, chin, etc.)
T: Very good. So, as you all know, the eyes, nose, and mouth are the most important features that make up the face. Next, we will learn more about these features.

Step 2. Describing the eyes and eye-related customs in different cultures
T: Let's start with the first feature, the eyes. Eyes are the window to the soul. They can convey emotions, express moods, and even reflect one's personality. Now, let's take a closer look at the structure of the eye and learn some vocabulary about eyes.
(Present some relevant pictures, for example, the structure of the eye, the eyelid, the eyelash, the iris, the pupil, etc.)
T: Great. Now that we know what the eye is made of, let's talk about the different cultural standards of eye beauty. For example, in some Asian countries, big eyes are considered beautiful, whereas in some European countries, almond-shaped eyes are more popular. Do you know any other cultural standards for eyes?
(Students raise their hands to answer; the teacher organizes and summarizes the answers.)
T: Since different countries have different cultural standards for eyes, do you think these standards affect people's lives and emotions? Please share your thoughts.

Step 3. Describing the nose and nose-related customs in different cultures
T: Good job, students. Now, let's move on to another important feature, the nose. Though the nose is not the most noticeable feature, it plays a vital role in overall facial appearance.
(Present some relevant pictures, such as the nasal bridge, the nostril, the nose tip, etc.)
T: Now we have learned some vocabulary about the nose. Next, let's talk about the different cultural standards of nose beauty. For example, in many African countries, a broad and flat nose is considered attractive, while in some European countries, a small and pointed nose is a sign of beauty. Do you know any other cultural standards for noses?
(Students raise their hands to answer; the teacher organizes and summarizes the answers.)
T: Since different countries have different cultural standards for noses, do you think these standards affect people's lives and emotions? Please share your thoughts.

Step 4. Describing the mouth and mouth-related customs in different cultures
T: Well done, students. Now, let's move on to the last important feature, the mouth. The mouth not only affects a person's facial appearance but also plays a significant role in communication.
(Present some relevant pictures, such as the lips, the teeth, the cheeks, etc.)
T: Now we have learned some vocabulary about the mouth. Next, let's talk about the different cultural standards of lip beauty. For example, in some African countries, a large, full-lipped mouth is considered attractive, while in some Asian countries, a small and delicate mouth is more popular. Do you know any other cultural standards for lips?
(Students raise their hands to answer; the teacher organizes and summarizes the answers.)
T: Since different countries have different cultural standards for lips, do you think these standards affect people's lives and emotions? Please share your thoughts.

Step 5. Summarizing the lesson and strengthening students' spoken English
T: Great job today, students! We have learned a lot about facial features and cultural differences. Now, let's summarize today's class. What have we learned today? Please share your opinions.
(Students answer; the teacher corrects mistakes and offers feedback.)
T: As we have learned, facial features not only reflect our looks but also influence our identity, culture, and social status. Also, learning the cultural standards of beauty in different countries will help us understand different cultures and customs better.
T: OK. That's all for today's class. I hope you learned a lot and had fun. Tonight's homework is to describe, in English, the face and facial features of the person you admire most. Have a great day, everyone!

English Version: The Knowledge Behind Face Recognition Technology

Face recognition is an advanced technology that has changed the way we interact with digital systems. It draws on a range of concepts and technologies, from computer vision and artificial intelligence to biometric analysis. This article looks at the knowledge and principles behind face recognition.

At its core, face recognition relies heavily on computer vision, a field that deals with the analysis and understanding of digital images and videos. Computer vision algorithms are trained to detect and identify patterns, such as edges, shapes, and textures, within these digital representations. In the context of face recognition, these algorithms are specifically designed to recognize and extract features from human faces.

One of the most critical components of face recognition is feature extraction. This process involves identifying distinctive characteristics of a face, such as the shape of the eyes, nose, or mouth, and encoding them into a numerical representation. These features are then used to create an identifier for each individual, known as a faceprint.

A faceprint is then compared against a database of pre-existing faceprints to find a match. The comparison is carried out by algorithms that calculate the similarity between two faceprints according to one or more metrics. If a match is found, the system can identify the individual associated with that faceprint.

Artificial intelligence (AI) plays a crucial role in face recognition by enabling the system to learn and improve over time. Machine learning algorithms, such as deep neural networks, are trained on large datasets of faces to recognize patterns and make accurate identifications. As more training data becomes available, these algorithms generally become more accurate and reliable.

Biometric analysis is another important aspect of face recognition. Biometrics refers to the measurement and analysis of physical characteristics for identification purposes. In face recognition, biometric analysis involves measuring and comparing facial features to verify the identity of an individual.

In conclusion, face recognition technology draws upon a wide array of knowledge and technologies, including computer vision, artificial intelligence, and biometric analysis. Together, these components make face recognition a powerful tool for identification and verification in various applications.

Chinese Version: The Knowledge Behind Face Recognition

Face recognition is a revolutionary technology that has fundamentally changed the way we interact with digital systems.
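As a rough illustration of the faceprint comparison described in the English version above, the sketch below matches a probe faceprint against a small database. The article does not name a metric, so cosine similarity is an assumption here, as are the function names `cosine_similarity` and `identify`, the threshold of 0.8, and the three-element toy vectors (real faceprints are far longer).

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between two faceprint vectors (1.0 = same direction)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def identify(probe, database, threshold=0.8):
    """Return (name, score) of the best match, or (None, score) if below threshold.

    `database` maps a person's name to a stored faceprint; the threshold value
    is an illustrative assumption, not a recommended setting.
    """
    best_name, best_score = None, -1.0
    for name, faceprint in database.items():
        score = cosine_similarity(probe, faceprint)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


# Toy database with made-up faceprints.
database = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}
match, score = identify([0.85, 0.15, 0.35], database)
print(match, round(score, 3))
```

Returning `None` when the best score falls below the threshold is what lets such a system reject a face that is not in the database instead of always reporting the nearest entry.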

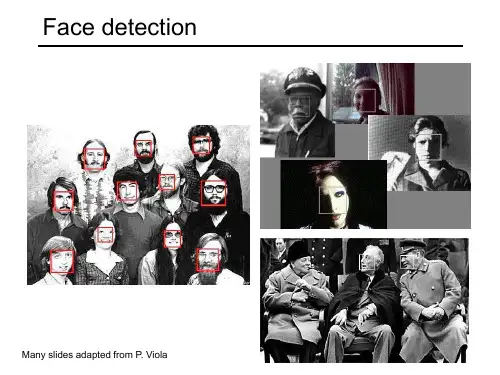

Source information
Title: Face Recognition Techniques: A Survey
Author: V. Vijayakumari
Source: World Journal of Computer Application and Technology, 2013, 1(2): 41-50
Length: 3,186 English words (17,705 characters); 5,317 Chinese characters

Original text

Face Recognition Techniques: A Survey

Abstract
The face is the index of the mind. It is a complex multidimensional structure and needs a good computing technique for recognition. When an automatic system is used for face recognition, computers are easily confused by changes in illumination, variations in pose, and changes in the angle of the face. Numerous techniques are being used for security and authentication purposes, including in detective agencies and the military. This survey presents the existing methods in automatic face recognition and suggests ways to further improve performance.

Keywords: Face Recognition, Illumination, Authentication, Security

1. Introduction
Developed in the 1960s, the first semi-automated system for face recognition required the administrator to locate features (such as eyes, ears, nose, and mouth) on the photographs before it calculated distances and ratios to a common reference point, which were then compared to reference data. In the 1970s, Goldstein, Harmon, and Lesk used 21 specific subjective markers such as hair color and lip thickness to automate the recognition. The problem with both of these early solutions was that the measurements and locations were manually computed. The face recognition problem can be divided into two main stages: face verification (or authentication), and face identification (or recognition). The detection stage is the first stage; it includes identifying and locating a face in an image.
The recognition stage is the second stage; it includes feature extraction, where the information important for discrimination is saved, and matching, where the recognition result is given with the aid of a face database.

2. Methods

2.1. Geometric Feature Based Methods
The geometric feature based approaches are the earliest approaches to face recognition and detection. In these systems, the significant facial features are detected, and the distances among them, as well as other geometric characteristics, are combined in a feature vector that is used to represent the face. To recognize a face, first the feature vectors of the test image and of the images in the database are obtained. Second, a similarity measure between these vectors, most often a minimum distance criterion, is used to determine the identity of the face. As pointed out by Brunelli and Poggio, the template based approaches outperform the early geometric feature based approaches.

2.2. Template Based Methods
The template based approaches represent the most popular technique used to recognize and detect faces. Unlike the geometric feature based approaches, the template based approaches use a feature vector that represents the entire face template rather than only the most significant facial features.

2.3. Correlation Based Methods
Correlation based methods for face detection are based on the computation of the normalized cross correlation coefficient Cn. The first step in these methods is to determine the location of the significant facial features such as eyes, nose, or mouth. The importance of robust facial feature detection for both detection and recognition has resulted in the development of a variety of facial feature detection algorithms. The facial feature detection method proposed by Brunelli and Poggio uses a set of templates to detect the position of the eyes in an image, by looking for the maximum absolute values of the normalized correlation coefficient of these templates at each point in the test image.
To cope with scale variations, a set of templates at different scales was used. The problems associated with scale variations can be significantly reduced by using hierarchical correlation. For face recognition, the templates corresponding to the significant facial features of the test image are compared in turn with the corresponding templates of all of the images in the database, returning a vector of matching scores computed through normalized cross correlation. The similarity scores of different features are integrated to obtain a global score that is used for recognition. Other similar methods that use correlation or higher order statistics demonstrated the accuracy of these methods but also their complexity.

Beymer extended the correlation based approach to a view based approach for recognizing faces under varying orientation, including rotations with respect to the axis perpendicular to the image plane (rotations in image depth). To handle rotations out of the image plane, templates from different views were used. After the pose is determined, the task of recognition is reduced to the classical correlation method, in which the facial feature templates are matched to the corresponding templates of the appropriate view based models using the cross correlation coefficient. However, this approach is computationally very expensive, and it is sensitive to lighting conditions.

2.4. Matching Pursuit Based Methods
Phillips introduced a template based face detection and recognition system that uses a matching pursuit filter to obtain the face vector. The matching pursuit algorithm applied to an image iteratively selects, from a dictionary of basis functions, the best decomposition of the image by minimizing the residue of the image in all iterations. The algorithm described by Phillips constructs the best decomposition of a set of images by iteratively optimizing a cost function, which is determined from the residues of the individual images.
The dictionary of basis functions used by the author consists of two dimensional wavelets, which give a better image representation than the PCA (Principal Component Analysis) and LDA (Linear Discriminant Analysis) based techniques, where the images were stored as vectors. For recognition, the cost function is a measure of distances between faces and is maximized at each iteration. For detection, the goal is to find a filter that clusters similar templates together (around the mean, for example), and the cost function is minimized in each iteration. The feature represents the average value of the projection of the templates on the selected basis.

2.5. Singular Value Decomposition Based Methods
The face recognition methods in this section use the general result stated by the singular value decomposition theorem. Z. Hong revealed the importance of using the Singular Value Decomposition (SVD) method for human face recognition by providing several important properties of the singular values (SV) vector, which include: the stability of the SV vector to small perturbations caused by stochastic variation in the intensity image, the proportional variation of the SV vector with the pixel intensities, and the invariance of the SV feature vector to rotation, translation, and mirror transformation. These properties of the SV vector provide the theoretical basis for using singular values as image features. In addition, it has been shown that compressing the original SV vector into a low dimensional space by means of various mathematical transforms leads to higher recognition performance. Among the various dimensionality reducing transformations, the Linear Discriminant Transform is the most popular one.

2.6. The Dynamic Link Matching Methods
The above template based matching methods use a Euclidean distance to identify a face in a gallery or to detect a face against a background. A more flexible distance measure that accounts for common facial transformations is the dynamic link introduced by Lades et al.
In this approach, a rectangular grid is centered on all faces in the gallery. The feature vector is calculated based on Gabor type wavelets, computed at all points of the grid. A new face is identified if the cost function, which is a weighted sum of two terms, is minimized. The first term in the cost function is small when the distance between feature vectors is small, and the second term is small when the relative distance between the grid points in the test and the gallery image is preserved. It is the second term of this cost function that gives the "elasticity" of this matching measure. While the grid of the image remains rectangular, the grid that is "best fit" over the test image is stretched, under certain constraints, until the minimum of the cost function is achieved. The minimum value of the cost function is then used to identify the unknown face.

2.7. Illumination Invariant Processing Methods
The problem considered here is that of determining functions of an image of an object that are insensitive to illumination changes. An object with Lambertian reflection has no discriminative functions that are invariant to illumination. This result leads the authors to adopt a probabilistic approach in which they analytically determine a probability distribution for the image gradient as a function of the surface's geometry and reflectance. Their distribution reveals that the direction of the image gradient is insensitive to changes in illumination direction. They verify this empirically by constructing a distribution for the image gradient from more than twenty million samples of gradients in a database of 1,280 images of twenty inanimate objects taken under varying lighting conditions. Using this distribution, they develop an illumination insensitive measure of image comparison and test it on the problem of face recognition.
In another method, the authors consider the set of images of an object under variable illumination, including multiple, extended light sources, shadows, and color. They prove that the set of n-pixel monochrome images of a convex object with a Lambertian reflectance function, illuminated by an arbitrary number of point light sources at infinity, forms a convex polyhedral cone in R^n, and that the dimension of this illumination cone equals the number of distinct surface normals. Furthermore, the illumination cone can be constructed from as few as three images. In addition, the set of n-pixel images of an object of any shape and with a more general reflectance function, seen under all possible illumination conditions, still forms a convex cone in R^n. These results immediately suggest certain approaches to object recognition. Throughout, they present results demonstrating the illumination cone representation.

2.8. Support Vector Machine Approach
Face recognition is a K class problem, where K is the number of known individuals, whereas support vector machines (SVMs) are a binary classification method. By reformulating the face recognition problem and reinterpreting the output of the SVM classifier, the authors developed an SVM-based face recognition algorithm. The face recognition problem is formulated as a problem in difference space, which models dissimilarities between two facial images. In difference space, face recognition becomes a two class problem. The classes are: dissimilarities between faces of the same person, and dissimilarities between faces of different people. By modifying the interpretation of the decision surface generated by the SVM, the authors obtained a similarity metric between faces that is learned from examples of differences between faces. The SVM-based algorithm is compared with a principal component analysis (PCA) based algorithm on a difficult set of images from the FERET database. Performance was measured for both verification and identification scenarios.
The identification performance for SVM is 77-78% versus 54% for PCA. For verification, the equal error rate is 7% for SVM and 13% for PCA.

2.9. Karhunen-Loeve Expansion Based Methods

2.9.1. Eigenface Approach
In this approach, face recognition is treated as an intrinsically two dimensional recognition problem. The system works by projecting face images onto a feature space that represents the significant variations among known faces. These significant features are called eigenfaces; they are the eigenvectors of the set of faces. The goal is to develop a computational model of face recognition that is fast, reasonably simple, and accurate in constrained environments. The eigenface approach is motivated by information theory.

2.9.2. Recognition Using Eigenfeatures
While the classical eigenface method uses the KLT (Karhunen-Loeve Transform) coefficients of the template corresponding to the whole face image, Pentland et al. introduce a face detection and recognition system that uses the KLT coefficients of the templates corresponding to the significant facial features, such as eyes, nose, and mouth. For each of the facial features, a feature space is built by selecting the most significant "eigenfeatures", which are the eigenvectors corresponding to the largest eigenvalues of the feature correlation matrix. The significant facial features were detected using the distance from the feature space and selecting the closest match. The similarity scores between the templates of the test image and the templates of the images in the training set were integrated in a cumulative score that measures the distance between the test image and the training images.
The method was extended to the detection of features under different viewing geometries by using either a view-based eigenspace or a parametric eigenspace.

2.10. Feature Based Methods

2.10.1. Kernel Direct Discriminant Analysis Algorithm
The kernel machine-based discriminant analysis method deals with the nonlinearity of the distribution of face patterns. This method also effectively solves the so-called "small sample size" (SSS) problem, which exists in most face recognition (FR) tasks. The new algorithm has been tested, in terms of classification error rate, on the multiview UMIST face database. Results indicate that the proposed methodology is able to achieve excellent performance with only a very small set of features, and its error rate is approximately 34% and 48% of those of two other commonly used kernel FR approaches, kernel-PCA (KPCA) and Generalized Discriminant Analysis (GDA), respectively.

2.10.2. Features Extracted from Walshlet Pyramid
A novel Walshlet Pyramid based face recognition technique uses the image feature set extracted from Walshlets applied to the image at various levels of decomposition. Here the image features are extracted by applying the Walshlet Pyramid to the gray plane (the average of red, green, and blue). The proposed technique is tested on two image databases of 100 images each. The results show that Walshlet level-4 outperforms other Walshlets and the Walsh Transform, because the higher level Walshlets give very coarse color-texture features, while the lower level Walshlets represent very fine color-texture features that are less useful for differentiating the images in face recognition.

2.10.3. Hybrid Color and Frequency Features Approach
This work presents a novel hybrid Color and Frequency Features (CFF) method for face recognition.
The CFF method, which applies an Enhanced Fisher Model (EFM), extracts the complementary frequency features in a new hybrid color space for improving face recognition performance. The new color space, the RIQ color space, which combines the component image R of the RGB color space and the chromatic components I and Q of the YIQ color space, displays prominent capability for improving face recognition performance due to the complementary characteristics of its component images. The EFM then extracts the complementary features from the real part, the imaginary part, and the magnitude of the R image in the frequency domain. The complementary features are then fused by means of concatenation at the feature level to derive similarity scores for classification. The complementary feature extraction and feature level fusion procedure applies to the I and Q component images as well. Experiments on the Face Recognition Grand Challenge (FRGC) show that (i) the hybrid color space improves face recognition performance significantly, and (ii) the complementary color and frequency features further improve face recognition performance.

2.10.4. Multilevel Block Truncation Coding Approach
The Multilevel Block Truncation Coding approach to face recognition uses all four levels of Multilevel Block Truncation Coding for feature vector extraction, resulting in four variations of the proposed face recognition technique. The experiments were conducted on two different face databases. The first is "Face Database", which has 1000 face images, and the second is "Our Own Database", which has 1600 face images. To measure the performance of the algorithm, the False Acceptance Rate (FAR) and Genuine Acceptance Rate (GAR) parameters have been used.
The experimental results have shown that BTC (Block Truncation Coding) Level 4 gives better accuracy than the other BTC levels, at the cost of increased feature vector size.

2.11. Neural Network Based Algorithms
Templates have also been used as input to Neural Network (NN) based systems. Lawrence et al. proposed a hybrid neural network approach that combines local image sampling, a self organizing map (SOM), and a convolutional neural network. The SOM provides a set of features that forms a more compact and robust representation of the image samples. These features are then fed into the convolutional neural network. This architecture provides partial invariance to translation, rotation, scale, and face deformation. Along with this, the author introduced an efficient probabilistic decision based neural network (PDBNN) for face detection and recognition. The feature vector used consists of intensity and edge values obtained from the facial region of the down sampled image in the training set. The facial region contains the eyes and nose, but excludes the hair and mouth. Two PDBNNs were trained with these feature vectors, one used for face detection and the other for face recognition.

2.12. Model Based Methods

2.12.1. Hidden Markov Model Based Approach
In this approach, the author utilizes the fact that the most significant facial features of a frontal face, which include hair, forehead, eyes, nose, and mouth, occur in a natural order from top to bottom, even if the image undergoes small rotations in the image plane or in the plane perpendicular to the image plane. A one dimensional HMM (Hidden Markov Model) is used for modeling the image, where the observation vectors are obtained from DCT or KLT coefficients.
Given c face images for each subject of the training set, the goal of the training stage is to optimize the parameters of the Hidden Markov Model to best describe the observations, in the sense of maximizing the probability of the observations given the model. Recognition is carried out by matching the test image against each of the trained models. To do this, the image is converted to an observation sequence, and then model likelihoods are computed for each face model. The model with the highest likelihood reveals the identity of the unknown face.

2.12.2. The Volumetric Frequency Representation of Face Model
A face model that incorporates both the three dimensional (3D) face structure and its two dimensional representation (face images) is explained. This model, which is a volumetric (3D) frequency representation (VFR) of the face, is constructed using a range image of a human head. Making use of an extension of the Projection Slice Theorem, the Fourier transform of any face image corresponds to a slice in the face VFR. For both pose estimation and face recognition, a face image is indexed in the 3D VFR based on correlation matching in a four dimensional Fourier space, parameterized over the elevation, azimuth, rotation in the image plane, and the scale of faces.

3. Conclusion
This paper discusses the different approaches that have been employed in automatic face recognition. In the geometrical based methods, the geometrical features are selected and the significant facial features are detected. The correlation based approach needs the face template rather than the significant facial features. Singular value vectors and the properties of the SV vector provide the theoretical basis for using singular values as image features. The Karhunen-Loeve expansion works by projecting the face images onto a space that represents the significant variations among the known faces. Eigenvalues and eigenvectors are involved in extracting the features in KLT.
Neural network based approaches are more efficient when they contain no more than a few hundred weights. The Hidden Markov Model optimizes the parameters to best describe the observations, in the sense of maximizing the probability of the observations given the model. Some methods use the features for classification, and a few methods use the distance measure from the nodal points. The drawbacks of the methods are also discussed, based on the performance of the algorithms used in the approaches. This survey should therefore give some idea of the existing methods for automatic face recognition.

Chinese translation

Face Recognition Techniques: A Survey

Abstract
The face is the index of the mind.
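The eigenface approach of Section 2.9.1 is described in the survey only in words, so a minimal sketch may help make the projection step concrete. It is an illustration under stated assumptions, not the surveyed authors' implementation: the eigenvectors are obtained from an SVD of the mean-centered image matrix (equivalent to diagonalizing the covariance matrix), matching uses nearest Euclidean distance in the projected space, and the function names and the synthetic 8x8 "faces" are invented for the example.

```python
import numpy as np


def fit_eigenfaces(images, num_components):
    """Return the mean face and the top principal directions (eigenfaces).

    `images` has shape (n_images, n_pixels), one flattened image per row.
    """
    X = np.asarray(images, dtype=float)
    mean_face = X.mean(axis=0)
    centered = X - mean_face
    # Rows of vt are eigenvectors of the covariance matrix of the centered
    # data, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:num_components]


def project(image, mean_face, eigenfaces):
    """Coefficients of one image in the eigenface basis."""
    return eigenfaces @ (np.asarray(image, dtype=float) - mean_face)


def nearest_face(probe, gallery_weights, mean_face, eigenfaces):
    """Index of the gallery image whose coefficients are closest to the probe's."""
    w = project(probe, mean_face, eigenfaces)
    distances = np.linalg.norm(gallery_weights - w, axis=1)
    return int(np.argmin(distances))


# Synthetic stand-in data: five random 8x8 "faces", flattened to 64 pixels.
rng = np.random.default_rng(0)
gallery = rng.random((5, 64))
mean_face, eigenfaces = fit_eigenfaces(gallery, num_components=4)
gallery_weights = np.array([project(g, mean_face, eigenfaces) for g in gallery])

# A slightly noisy copy of gallery image 2 should be matched back to index 2.
probe = gallery[2] + 0.01 * rng.standard_normal(64)
index = nearest_face(probe, gallery_weights, mean_face, eigenfaces)
print(index)
```

With five gallery images the centered data has rank four, so four components retain all of the variation among the known faces; a real system would keep far fewer components than images.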

Gallery set: 参考图像集
Probe set (= test set): 测试图像集
Face rendering
Facial landmark detection: 人脸特征点检测
3D Morphable Model: 3D形变模型
AAM (Active Appearance Model): 主动外观模型
Aging modeling: 老化建模
Aging simulation: 老化模拟
Analysis by synthesis: 综合分析
Aperture stop: 孔径光阑
Appearance feature: 表观特征
Baseline: 基准系统
Benchmarking: 确定基准
Bidirectional relighting: 双向重光照
Camera calibration: 摄像机标定(校正)
Cascade of classifiers: 级联分类器
Face detection: 人脸检测
Facial expression: 面部表情
Depth of field: 景深
Edgelet: 小边特征
Eigen light-fields: 本征光场
Eigenface: 特征脸
Exposure time: 曝光时间
Expression editing: 表情编辑
Expression mapping: 表情映射
Partial Expression Ratio Image (PERI): 局部表情比率图
Extrapersonal variations: 类间变化
Eye localization: 眼睛定位
Face image acquisition: 人脸图像获取
Face aging: 人脸老化
Face alignment: 人脸对齐
Face categorization: 人脸分类
Frontal faces: 正面人脸
Face identification: 人脸识别
Face recognition vendor test: 人脸识别供应商测试
Face tracking: 人脸跟踪
Facial action coding system: 面部动作编码系统
Facial aging: 面部老化
Facial animation parameters: 脸部动画参数
Facial expression analysis: 人脸表情分析
Facial landmark: 面部特征点
Facial definition parameters: 人脸定义参数
Field of view: 视场
Focal length: 焦距
Geometric warping: 几何扭曲
Street view: 街景
Head pose estimation: 头部姿态估计
Harmonic reflectances: 谐波反射
Horizontal scaling: 水平伸缩
Identification rate: 识别率
Illumination cone: 光照锥
Inverse rendering: 逆向绘制技术
Iterative closest point: 迭代最近点
Lambertian model: 朗伯模型
Light-field: 光场
Local binary patterns: 局部二值模式
Mechanical vibration: 机械振动
Multi-view videos: 多视点视频
Band selection: 波段选择
Capture systems: 获取系统
Frontal lighting: 正面光照
Open-set identification: 开集识别
Operating point: 操作点
Person detection: 行人检测
Person tracking: 行人跟踪
Photometric stereo: 光度立体技术
Pixellation: 像素化
Pose correction: 姿态校正
Privacy concern: 隐私关注
Privacy policies: 隐私策略
Profile extraction: 轮廓提取
Rigid transformation: 刚体变换
Sequential importance sampling: 序贯重要性抽样
Skin reflectance model: 皮肤反射模型
Specular reflectance: 镜面反射
Stereo baseline: 立体基线
Super-resolution: 超分辨率
Facial side-view: 面部侧视图
Texture mapping: 纹理映射
Texture pattern: 纹理模式

Rama Chellappa PhD plan: 1. Complete the earlier work on statistical modeling of fingerprint minutiae.

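Basic Face Recognition Terms

The field of face recognition has a set of basic terms and concepts. Some of the most common are listed below:

1. Face Detection: Face detection uses algorithms to automatically locate face regions in an image or video.

It is the first step in face recognition and determines whether a face is present in the image.

2. Face Alignment: Face alignment normalizes and adjusts a detected face within the image so that the face region has a consistent position, size, and orientation for the subsequent feature extraction and comparison.

3. Feature Extraction: Feature extraction derives, from a face image or face region, the key features that help to recognize and characterize the face.

Commonly used features include color, texture, shape, and local features.

4. Feature Vector: A feature vector is the numerical representation produced by feature extraction. It expresses the facial features in vector form for use in face matching and recognition.

5. Face Matching: Face matching compares two or more face images or sets of face features to determine whether they belong to the same person or how similar they are.

Matching can use similarity measures such as Euclidean distance or cosine similarity.

6. Face Recognition: Face recognition compares an input face image against the faces in a known database and then determines the person's identity or label.

It can be used for applications such as face authentication, identity verification, and identification.

7. Face Database: A face database is a collection of face images, face features, and annotations, used to build and train face recognition systems.

It usually contains face data for many people.

8. Liveness Detection: Liveness detection is a technique introduced to prevent attacks that use forgeries such as photographs, videos, or masks.

It analyzes features such as facial movement, texture, and illumination to judge whether the face in an image or video belongs to a real, live person.

These are some of the basic terms and concepts in face recognition. They form the foundation of the technology and are used to implement face detection, alignment, feature extraction, matching, and recognition.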
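Of the terms above, face alignment is perhaps the easiest to make concrete. The sketch below is one simple way to do it, offered as an assumption, not as the method any particular system uses: it computes the rotation, uniform scale, and translation that move two detected eye centers onto fixed target positions. The function names, the target coordinates, and the sample eye positions are all hypothetical. Points are treated as complex numbers so that the transform is `z -> a*z + b`.

```python
import numpy as np


def eye_alignment_matrix(left_eye, right_eye,
                         target_left=(30.0, 40.0), target_right=(70.0, 40.0)):
    """2x3 similarity transform mapping the detected eyes onto the target eyes.

    The two eye positions must differ, otherwise the scale is undefined.
    The target positions are arbitrary illustrative values.
    """
    s1, s2 = complex(*left_eye), complex(*right_eye)
    d1, d2 = complex(*target_left), complex(*target_right)
    a = (d2 - d1) / (s2 - s1)   # combined rotation and uniform scale
    b = d1 - a * s1             # translation
    return np.array([[a.real, -a.imag, b.real],
                     [a.imag,  a.real, b.imag]])


def apply_transform(matrix, point):
    """Apply the 2x3 transform to a single (x, y) point."""
    x, y = point
    return matrix @ np.array([x, y, 1.0])


# Hypothetical detected eye centers in a tilted face.
M = eye_alignment_matrix((120.0, 95.0), (180.0, 115.0))
print(apply_transform(M, (120.0, 95.0)))   # lands on the left-eye target
print(apply_transform(M, (180.0, 115.0)))  # lands on the right-eye target
```

The same 2x3 matrix could then be handed to an image-warping routine so that every face reaches the feature extractor with its eyes in the same place.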

English answer: FaceSussion User Guide

FaceSussion is a tool for face recognition and facial expression analysis, designed to help users recognize faces and analyze facial expressions quickly and accurately.

The technology can be applied in areas such as face recognition access control systems and facial expression analysis applications, which helps improve public security and management efficiency.

Using FaceSussion can better support national security, social order, and public administration policy, and provide the public with safer and more convenient services.

The user registers for FaceSussion and obtains an API key, then integrates and initializes the tool by following the steps in the official documentation.

Document information

Title: Face Recognition Techniques: A Survey
Author: V. Vijayakumari
Source: World Journal of Computer Application and Technology, 2013, 1(2): 41-50
Word count: 3,186 English words, 17,705 characters; 5,317 Chinese characters

Original text (English)

Face Recognition Techniques: A Survey

Abstract

The face is the index of the mind. It is a complex multidimensional structure and needs a good computing technique for recognition. When an automatic system is used for face recognition, computers are easily confused by changes in illumination, variation in pose, and changes in the angle of the face. Numerous techniques are used for security and authentication purposes, including in detective agencies and the military. This survey presents the existing methods in automatic face recognition and outlines ways to further improve performance.

Keywords: Face Recognition, Illumination, Authentication, Security

1. Introduction

Developed in the 1960s, the first semi-automated system for face recognition required the administrator to locate features (such as eyes, ears, nose, and mouth) on the photographs before it calculated distances and ratios to a common reference point, which were then compared to reference data. In the 1970s, Goldstein, Harmon, and Lesk used 21 specific subjective markers such as hair color and lip thickness to automate the recognition. The problem with both of these early solutions was that the measurements and locations were manually computed. The face recognition problem can be divided into two main stages: face verification (or authentication), and face identification (or recognition). The detection stage is the first stage; it includes identifying and locating a face in an image.
The recognition stage is the second stage; it includes feature extraction, where the information important for discrimination is saved, and matching, where the recognition result is produced with the aid of a face database.

2. Methods

2.1. Geometric Feature Based Methods

The geometric feature based approaches are the earliest approaches to face recognition and detection. In these systems, the significant facial features are detected, and the distances among them, as well as other geometric characteristics, are combined in a feature vector that is used to represent the face. To recognize a face, first the feature vectors of the test image and of the images in the database are obtained. Second, a similarity measure between these vectors, most often a minimum distance criterion, is used to determine the identity of the face. As pointed out by Brunelli and Poggio, the template based approaches outperform the early geometric feature based approaches.

2.2. Template Based Methods

The template based approaches represent the most popular technique used to recognize and detect faces. Unlike the geometric feature based approaches, the template based approaches use a feature vector that represents the entire face template rather than only the most significant facial features.

2.3. Correlation Based Methods

Correlation based methods for face detection are based on the computation of the normalized cross correlation coefficient Cn. The first step in these methods is to determine the location of the significant facial features such as the eyes, nose, or mouth. The importance of robust facial feature detection for both detection and recognition has resulted in the development of a variety of facial feature detection algorithms. The facial feature detection method proposed by Brunelli and Poggio uses a set of templates to detect the position of the eyes in an image, by looking for the maximum absolute values of the normalized correlation coefficient of these templates at each point in the test image.
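The template search described above can be illustrated with a minimal Python sketch. It is not the Brunelli and Poggio implementation: the function names `normalized_cross_correlation` and `best_match` are hypothetical, the search is a brute-force scan at a single scale, and it keeps the largest signed coefficient rather than the largest absolute value, which is a simplification.

```python
import numpy as np

def normalized_cross_correlation(patch, template):
    """Return the normalized correlation coefficient of two equal-sized arrays."""
    p = patch.astype(float) - patch.mean()
    t = template.astype(float) - template.mean()
    denom = np.sqrt((p * p).sum() * (t * t).sum())
    if denom == 0.0:
        return 0.0  # flat patch or template: coefficient undefined, treated as 0
    return float((p * t).sum() / denom)

def best_match(image, template):
    """Slide the template over the image; return (row, col, score) of the peak."""
    th, tw = template.shape
    ih, iw = image.shape
    best = (0, 0, -1.0)
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            score = normalized_cross_correlation(image[r:r + th, c:c + tw], template)
            if score > best[2]:
                best = (r, c, score)
    return best
```

Because both the patch and the template are mean-centered and scaled by their energies, a uniform brightness gain or offset applied to the image does not move the peak, which is one reason this coefficient is preferred over a raw dot product.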
To cope with scale variations, a set of templates at different scales was used. The problems associated with scale variations can be significantly reduced by using hierarchical correlation. For face recognition, the templates corresponding to the significant facial features of the test image are compared in turn with the corresponding templates of all of the images in the database, returning a vector of matching scores computed through normalized cross correlation. The similarity scores of the different features are integrated to obtain a global score that is used for recognition. Other similar methods that use correlation or higher-order statistics confirmed the accuracy of these methods but also their complexity.

Beymer extended the correlation based approach to a view based approach for recognizing faces under varying orientation, including rotations with respect to the axis perpendicular to the image plane (rotations in image depth). To handle rotations out of the image plane, templates from different views were used. After the pose is determined, the task of recognition is reduced to the classical correlation method, in which the facial feature templates are matched to the corresponding templates of the appropriate view based models using the cross correlation coefficient. However, this approach is computationally very expensive, and it is sensitive to lighting conditions.

2.4. Matching Pursuit Based Methods

Phillips introduced a template based face detection and recognition system that uses a matching pursuit filter to obtain the face vector. The matching pursuit algorithm applied to an image iteratively selects from a dictionary of basis functions the best decomposition of the image by minimizing the residue of the image in all iterations. The algorithm described by Phillips constructs the best decomposition of a set of images by iteratively optimizing a cost function, which is determined from the residues of the individual images.
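The greedy selection step can be sketched as follows. This is the generic single-signal matching pursuit, not the set-of-images cost function described above; the function name `matching_pursuit` is hypothetical, and the dictionary columns are assumed to be unit-norm atoms.

```python
import numpy as np

def matching_pursuit(signal, dictionary, n_iter):
    """Greedily decompose `signal` over the unit-norm columns of `dictionary`.

    Returns the coefficient vector and the final residual.
    """
    residual = signal.astype(float).copy()
    coeffs = np.zeros(dictionary.shape[1])
    for _ in range(n_iter):
        # Project the current residual onto every atom.
        projections = dictionary.T @ residual
        # Pick the atom that explains the most residual energy.
        k = int(np.argmax(np.abs(projections)))
        coeffs[k] += projections[k]
        # Remove that atom's contribution from the residual.
        residual -= projections[k] * dictionary[:, k]
    return coeffs, residual
```

With unit-norm atoms, each iteration reduces the squared residual norm by the square of the selected projection, so the residual energy never increases from one iteration to the next.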
The dictionary of basis functions used by the author consists of two dimensional wavelets, which give a better image representation than the PCA (Principal Component Analysis) and LDA (Linear Discriminant Analysis) based techniques, where the images are stored as vectors. For recognition, the cost function is a measure of the distances between faces and is maximized at each iteration. For detection, the goal is to find a filter that clusters similar templates together (around the mean, for example), and the cost function is minimized at each iteration. The feature represents the average value of the projection of the templates on the selected basis.

2.5. Singular Value Decomposition Based Methods

The face recognition methods in this section use the general result stated by the singular value decomposition theorem. Z. Hong showed the importance of the Singular Value Decomposition (SVD) method for human face recognition by establishing several important properties of the singular value (SV) vector: the stability of the SV vector under small perturbations caused by stochastic variation in the intensity image, the proportional variation of the SV vector with the pixel intensities, and the invariance of the SV feature vector to rotation, translation and mirror transformation. These properties provide the theoretical basis for using singular values as image features. In addition, it has been shown that compressing the original SV vector into a low dimensional space by means of various mathematical transforms leads to higher recognition performance. Among the various dimensionality reducing transformations, the Linear Discriminant Transform is the most popular one.

2.6. The Dynamic Link Matching Methods

The above template based matching methods use a Euclidean distance to identify a face in a gallery or to detect a face against a background. A more flexible distance measure that accounts for common facial transformations is the dynamic link introduced by Lades et al.
In this approach, a rectangular grid is centered on each face in the gallery. The feature vector is calculated from Gabor type wavelets, computed at all points of the grid. A new face is identified when the cost function, which is a weighted sum of two terms, is minimized. The first term in the cost function is small when the distance between feature vectors is small, and the second term is small when the relative distances between the grid points in the test and the gallery image are preserved. It is the second term of this cost function that gives the “elasticity” of this matching measure. While the grid of the gallery image remains rectangular, the grid that is “best fit” over the test image is stretched, under certain constraints, until the minimum of the cost function is achieved. The minimum value of the cost function is then used to identify the unknown face.

2.7. Illumination Invariant Processing Methods

The problem of determining functions of an image of an object that are insensitive to illumination changes is considered. An object with Lambertian reflectance has no discriminative functions that are invariant to illumination. This result leads the authors to adopt a probabilistic approach in which they analytically determine a probability distribution for the image gradient as a function of the surface's geometry and reflectance. Their distribution reveals that the direction of the image gradient is insensitive to changes in illumination direction. They verify this empirically by constructing a distribution for the image gradient from more than twenty million samples of gradients in a database of 1,280 images of twenty inanimate objects taken under varying lighting conditions. Using this distribution, they develop an illumination insensitive measure of image comparison and test it on the problem of face recognition.
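As a toy illustration of why gradient direction is an attractive cue, the sketch below compares gradient directions of two images. It covers only the simplest case, a global change of gain and offset, and is not the probabilistic measure described above; the function names `gradient_direction` and `direction_distance` and the random test images are assumptions made for the example.

```python
import numpy as np

def gradient_direction(image):
    """Per-pixel direction of the image gradient, in radians."""
    gy, gx = np.gradient(image.astype(float))  # derivatives along rows, columns
    return np.arctan2(gy, gx)

def direction_distance(img_a, img_b):
    """Mean absolute difference between gradient directions, wrapped to [0, pi]."""
    d = gradient_direction(img_a) - gradient_direction(img_b)
    d = np.abs(np.arctan2(np.sin(d), np.cos(d)))
    return float(d.mean())

rng = np.random.default_rng(1)
face = rng.random((32, 32))       # synthetic stand-in for a face image
brighter = 2.5 * face + 0.2       # same scene, different gain and offset
other = rng.random((32, 32))      # unrelated image

same = direction_distance(face, brighter)
diff = direction_distance(face, other)
```

Scaling the intensities scales both gradient components by the same positive factor, so the direction is unchanged and `same` is close to zero, while two unrelated images give a distance close to that of random directions.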
In another method, they consider only the set of images of an object under variable illumination, including multiple, extended light sources, shadows, and color. They prove that the set of n-pixel monochrome images of a convex object with a Lambertian reflectance function, illuminated by an arbitrary number of point light sources at infinity, forms a convex polyhedral cone in $\mathbb{R}^n$ and that the dimension of this illumination cone equals the number of distinct surface normals. Furthermore, the illumination cone can be constructed from as few as three images. In addition, the set of n-pixel images of an object of any shape and with a more general reflectance function, seen under all possible illumination conditions, still forms a convex cone in $\mathbb{R}^n$. These results immediately suggest certain approaches to object recognition. Throughout, they present results demonstrating the illumination cone representation.

2.8. Support Vector Machine Approach

Face recognition is a K class problem, where K is the number of known individuals, whereas support vector machines (SVMs) are a binary classification method. By reformulating the face recognition problem and reinterpreting the output of the SVM classifier, the authors developed an SVM-based face recognition algorithm. The face recognition problem is formulated as a problem in difference space, which models dissimilarities between two facial images. In difference space, face recognition becomes a two class problem. The classes are dissimilarities between faces of the same person and dissimilarities between faces of different people. By modifying the interpretation of the decision surface generated by the SVM, the authors obtained a similarity metric between faces that is learned from examples of differences between faces. The SVM-based algorithm is compared with a principal component analysis (PCA) based algorithm on a difficult set of images from the FERET database. Performance was measured for both verification and identification scenarios.
The identification performance for SVM is 77-78% versus 54% for PCA. For verification, the equal error rate is 7% for SVM and 13% for PCA.

2.9. Karhunen-Loeve Expansion Based Methods

2.9.1. Eigen Face Approach

In this approach, the face recognition problem is treated as an intrinsically two dimensional recognition problem. The system works by projecting face images onto a feature space that spans the significant variations among known faces. The significant features are known as eigenfaces; they are the eigenvectors of the set of faces. The goal is to develop a computational model of face recognition that is fast, reasonably simple and accurate in constrained environments. The eigenface approach is motivated by information theory.

2.9.2. Recognition Using Eigen Features

While the classical eigenface method uses the KLT (Karhunen-Loeve Transform) coefficients of the template corresponding to the whole face image, Pentland et al. introduced a face detection and recognition system that uses the KLT coefficients of the templates corresponding to the significant facial features such as the eyes, nose and mouth. For each of the facial features, a feature space is built by selecting the most significant “eigenfeatures”, which are the eigenvectors corresponding to the largest eigenvalues of the feature's correlation matrix. The significant facial features were detected using the distance from the feature space and selecting the closest match. The similarity scores between the templates of the test image and the templates of the images in the training set were integrated into a cumulative score that measures the distance between the test image and the training images.
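The projection onto an eigenspace and the distance from feature space used above can be sketched as follows. This is a minimal sketch on synthetic vectors, not the system of Pentland et al.: the helper names (`fit_eigenspace`, `project`, `distance_from_feature_space`, `identify`), the 64-pixel "images" and the nearest-neighbour rule are all assumptions made for illustration.

```python
import numpy as np

def fit_eigenspace(train, n_components):
    """train: (n_images, n_pixels). Returns the mean image and the leading
    eigenvectors of the covariance matrix as rows of `basis`."""
    mean = train.mean(axis=0)
    centered = train - mean
    # Right singular vectors of the centered data are the covariance
    # eigenvectors, ordered by decreasing eigenvalue.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]

def project(x, mean, basis):
    """KLT coefficients of x in the eigenspace."""
    return basis @ (x - mean)

def distance_from_feature_space(x, mean, basis):
    """Norm of the part of x that the eigenspace cannot reconstruct."""
    centered = x - mean
    reconstruction = basis.T @ (basis @ centered)
    return float(np.linalg.norm(centered - reconstruction))

def identify(x, gallery_coeffs, labels, mean, basis):
    """Label of the gallery image whose coefficients are closest to those of x."""
    w = project(x, mean, basis)
    dists = np.linalg.norm(gallery_coeffs - w, axis=1)
    return labels[int(np.argmin(dists))]

# Three synthetic "identities", four noisy training images each.
rng = np.random.default_rng(2)
prototypes = rng.random((3, 64))
labels = [i for i in range(3) for _ in range(4)]
train = np.array([prototypes[i] + 0.05 * rng.standard_normal(64) for i in labels])

mean, basis = fit_eigenspace(train, n_components=5)
gallery_coeffs = (train - mean) @ basis.T

probe = prototypes[1] + 0.05 * rng.standard_normal(64)   # new image of identity 1
non_face = rng.random(64)                                 # unrelated pattern
predicted = identify(probe, gallery_coeffs, labels, mean, basis)
```

A small distance from feature space suggests that a pattern resembles the training class, which is how it can serve for detection, while the coefficients inside the space are what get compared for recognition.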
The method was extended to the detection of features under different viewing geometries by using either a view-based eigenspace or a parametric eigenspace.

2.10. Feature Based Methods

2.10.1. Kernel Direct Discriminant Analysis Algorithm

The kernel machine-based discriminant analysis method deals with the nonlinearity of the face patterns’ distribution. This method also effectively solves the so-called “small sample size” (SSS) problem, which exists in most face recognition (FR) tasks. The algorithm has been tested, in terms of classification error rate, on the multiview UMIST face database. Results indicate that the methodology achieves excellent performance with only a very small set of features, and that its error rate is approximately 34% and 48% of those of two other commonly used kernel FR approaches, kernel PCA (KPCA) and Generalized Discriminant Analysis (GDA), respectively.

2.10.2. Features Extracted from Walshlet Pyramid

A Walshlet Pyramid based face recognition technique uses the image feature set extracted from Walshlets applied to the image at various levels of decomposition. Here the image features are extracted by applying the Walshlet Pyramid to the gray plane (the average of red, green and blue). The technique was tested on two image databases of 100 images each. The results show that Walshlet level-4 outperforms the other Walshlets and the Walsh Transform, because the higher level Walshlets give very coarse color-texture features, while the lower level Walshlets represent very fine color-texture features, which are less useful for differentiating the images in face recognition.

2.10.3. Hybrid Color and Frequency Features Approach

A novel hybrid Color and Frequency Features (CFF) method has also been presented for face recognition.
The CFF method, which applies an Enhanced Fisher Model (EFM), extracts the complementary frequency features in a new hybrid color space to improve face recognition performance. The new color space, the RIQ color space, combines the component image R of the RGB color space with the chromatic components I and Q of the YIQ color space; it improves face recognition performance because of the complementary characteristics of its component images. The EFM then extracts the complementary features from the real part, the imaginary part, and the magnitude of the R image in the frequency domain. The complementary features are then fused by concatenation at the feature level to derive similarity scores for classification. The complementary feature extraction and feature level fusion procedure applies to the I and Q component images as well. Experiments on the Face Recognition Grand Challenge (FRGC) show that i) the hybrid color space improves face recognition performance significantly, and ii) the complementary color and frequency features further improve face recognition performance.

2.10.4. Multilevel Block Truncation Coding Approach

The Multilevel Block Truncation Coding approach to face recognition uses all four levels of Multilevel Block Truncation Coding for feature vector extraction, resulting in four variations of the proposed face recognition technique. The experiments were conducted on two different face databases. The first is Face Database, which has 1000 face images, and the second is “Our Own Database”, which has 1600 face images. To measure the performance of the algorithm, the False Acceptance Rate (FAR) and Genuine Acceptance Rate (GAR) have been used.
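The two measures can be computed from lists of match scores as in the sketch below. The scores and the threshold are made-up values for illustration, the function name `far_gar` is hypothetical, and it is assumed that scores are similarities, so that a comparison is accepted when its score reaches the threshold.

```python
def far_gar(genuine_scores, impostor_scores, threshold):
    """Return (FAR, GAR) at the given threshold.

    FAR: fraction of impostor comparisons that are wrongly accepted.
    GAR: fraction of genuine comparisons that are correctly accepted."""
    accepted_impostor = sum(s >= threshold for s in impostor_scores)
    accepted_genuine = sum(s >= threshold for s in genuine_scores)
    far = accepted_impostor / len(impostor_scores)
    gar = accepted_genuine / len(genuine_scores)
    return far, gar

# Hypothetical similarity scores.
genuine = [0.91, 0.85, 0.78, 0.66, 0.95]    # same-person comparisons
impostor = [0.30, 0.52, 0.71, 0.15, 0.44]   # different-person comparisons
far, gar = far_gar(genuine, impostor, threshold=0.70)
```

Raising the threshold lowers both rates, so the two values are normally reported together or traced over a range of thresholds.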
The experimental results have shown that BTC (Block Truncation Coding) Level 4 gives better accuracy than the other BTC levels, at the cost of an increased feature vector size.

2.11. Neural Network Based Algorithms

Templates have also been used as input to Neural Network (NN) based systems. Lawrence et al. proposed a hybrid neural network approach that combines local image sampling, a self organizing map (SOM) and a convolutional neural network. The SOM provides a set of features that form a more compact and robust representation of the image samples. These features are then fed into the convolutional neural network. This architecture provides partial invariance to translation, rotation, scale and face deformation. In addition, an efficient probabilistic decision based neural network (PDBNN) has been introduced for face detection and recognition. The feature vector consists of intensity and edge values obtained from the facial region of the down sampled image in the training set. The facial region contains the eyes and nose, but excludes the hair and mouth. Two PDBNNs were trained with these feature vectors, one used for face detection and the other for face recognition.

2.12. Model Based Methods

2.12.1. Hidden Markov Model Based Approach

In this approach, the author uses the fact that the most significant facial features of a frontal face, which include the hair, forehead, eyes, nose and mouth, occur in a natural order from top to bottom even if the image undergoes small rotations in the image plane and/or in the plane perpendicular to the image plane. A one dimensional HMM (Hidden Markov Model) is used for modeling the image, where the observation vectors are obtained from DCT or KLT coefficients.
Given c face images for each subject of the training set, the goal of the training stage is to optimize the parameters of the Hidden Markov Model to best describe the observations, in the sense of maximizing the probability of the observations given the model. Recognition is carried out by matching the test image against each of the trained models. To do this, the image is converted to an observation sequence and the model likelihoods are computed for each face model. The model with the highest likelihood reveals the identity of the unknown face.

2.12.2. The Volumetric Frequency Representation of Face Model

A face model that incorporates both the three dimensional (3D) face structure and its two dimensional representation (face images) is explained. This model, which is a volumetric (3D) frequency representation (VFR) of the face, is constructed using a range image of a human head. Making use of an extension of the Projection Slice Theorem, the Fourier transform of any face image corresponds to a slice in the face VFR. For both pose estimation and face recognition, a face image is indexed in the 3D VFR based on correlation matching in a four dimensional Fourier space, parameterized over the elevation, azimuth, rotation in the image plane and the scale of faces.

3. Conclusion

This paper discusses the different approaches that have been employed in automatic face recognition. In the geometric feature based methods, the geometric features are selected and the significant facial features are detected. The correlation based approach needs the face template rather than the significant facial features. The properties of the SV vector provide the theoretical basis for using singular values as image features. The Karhunen-Loeve expansion works by projecting the face images onto a feature space that represents the significant variations among the known faces. Eigenvalues and eigenvectors are involved in extracting the features in KLT.
Neural network based approaches are more efficient when the network contains no more than a few hundred weights. The Hidden Markov Model optimizes its parameters to best describe the observations, in the sense of maximizing the probability of the observations given the model. Some methods use the features for classification, and a few methods use a distance measure from the nodal points. The drawbacks of the methods are also discussed, based on the performance of the algorithms used in the approaches. This should give some idea of the existing methods for automatic face recognition.

Chinese translation

A Survey of Face Recognition Technology

Abstract

The face is the index of the mind.

Explain in English based on the content of the article: Facial recognition technology, a kind of biometric technology, is becoming increasingly popular.