[Computer] A Lucene-Based Chinese Dictionary Word Segmentation Module

- Format: ppt

- Size: 590.02 KB

- Pages: 28

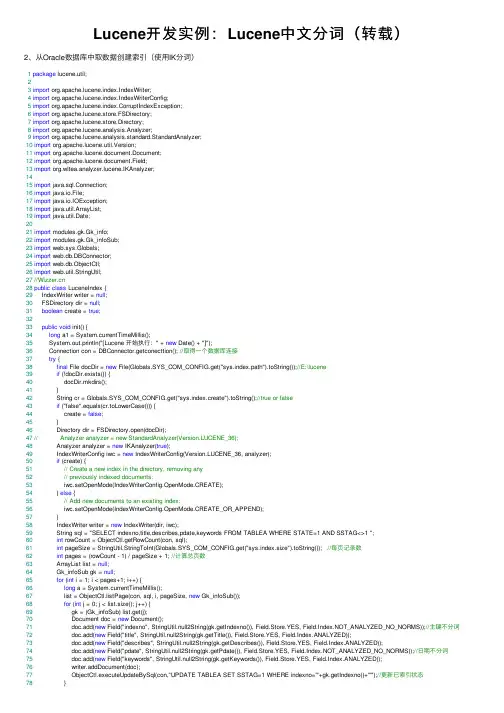

Lucene development example: Lucene Chinese word segmentation (reposted)

2. Building an index from data in an Oracle database (using the IK analyzer)

```java
package lucene.util;

import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.CorruptIndexException;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.store.Directory;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.util.Version;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.wltea.analyzer.lucene.IKAnalyzer;

import java.sql.Connection;
import java.io.File;
import java.io.IOException;
import java.util.ArrayList;
import java.util.Date;

import modules.gk.Gk_info;
import modules.gk.Gk_infoSub;
import web.sys.Globals;
import web.db.DBConnector;
import web.db.ObjectCtl;
import web.util.StringUtil;

public class LuceneIndex {
    IndexWriter writer = null;
    FSDirectory dir = null;
    boolean create = true;

    public void init() {
        long a1 = System.currentTimeMillis();
        System.out.println("[Lucene started: " + new Date() + "]");
        Connection con = DBConnector.getconecttion(); // obtain a database connection
        try {
            final File docDir = new File(Globals.SYS_COM_CONFIG.get("sys.index.path").toString()); // e.g. E:\lucene
            if (!docDir.exists()) {
                docDir.mkdirs();
            }
            String cr = Globals.SYS_COM_CONFIG.get("sys.index.create").toString(); // "true" or "false"
            if ("false".equals(cr.toLowerCase())) {
                create = false;
            }
            // Assign the fields: the original declared new locals here, which shadowed
            // the fields and left the cleanup in the finally block with nothing to close.
            dir = FSDirectory.open(docDir);
            // Analyzer analyzer = new StandardAnalyzer(Version.LUCENE_36);
            Analyzer analyzer = new IKAnalyzer(true);
            IndexWriterConfig iwc = new IndexWriterConfig(Version.LUCENE_36, analyzer);
            if (create) {
                // Create a new index in the directory, removing any
                // previously indexed documents:
                iwc.setOpenMode(IndexWriterConfig.OpenMode.CREATE);
            } else {
                // Add new documents to an existing index:
                iwc.setOpenMode(IndexWriterConfig.OpenMode.CREATE_OR_APPEND);
            }
            writer = new IndexWriter(dir, iwc);
            String sql = "SELECT indexno,title,describes,pdate,keywords FROM TABLEA WHERE STATE=1 AND SSTAG<>1 ";
            int rowCount = ObjectCtl.getRowCount(con, sql);
            int pageSize = StringUtil.StringToInt(Globals.SYS_COM_CONFIG.get("sys.index.size").toString()); // rows per page
            int pages = (rowCount - 1) / pageSize + 1; // total number of pages
            ArrayList list = null;
            Gk_infoSub gk = null;
            for (int i = 1; i < pages + 1; i++) {
                long a = System.currentTimeMillis();
                list = ObjectCtl.listPage(con, sql, i, pageSize, new Gk_infoSub());
                for (int j = 0; j < list.size(); j++) {
                    gk = (Gk_infoSub) list.get(j);
                    Document doc = new Document();
                    // primary key: stored, not analyzed
                    doc.add(new Field("indexno", StringUtil.null2String(gk.getIndexno()), Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                    doc.add(new Field("title", StringUtil.null2String(gk.getTitle()), Field.Store.YES, Field.Index.ANALYZED));
                    doc.add(new Field("describes", StringUtil.null2String(gk.getDescribes()), Field.Store.YES, Field.Index.ANALYZED));
                    // date: stored, not analyzed
                    doc.add(new Field("pdate", StringUtil.null2String(gk.getPdate()), Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                    doc.add(new Field("keywords", StringUtil.null2String(gk.getKeywords()), Field.Store.YES, Field.Index.ANALYZED));
                    writer.addDocument(doc);
                    // mark the row as indexed
                    ObjectCtl.executeUpdateBySql(con, "UPDATE TABLEA SET SSTAG=1 WHERE indexno='" + gk.getIndexno() + "'");
                }
                long b = System.currentTimeMillis();
                long c = b - a;
                System.out.println("[Lucene " + rowCount + " rows, " + pages + " pages, page " + i + " took " + c + " ms]");
            }
            writer.commit();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            DBConnector.freecon(con); // release the database connection
            try {
                if (writer != null) {
                    writer.close();
                }
            } catch (CorruptIndexException e) {
                e.printStackTrace();
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                try {
                    if (dir != null && IndexWriter.isLocked(dir)) {
                        IndexWriter.unlock(dir); // remember to release the lock
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
        long b1 = System.currentTimeMillis();
        long c1 = b1 - a1;
        System.out.println("[Lucene finished in " + c1 + " ms at " + new Date() + "]");
    }
}
```

3. Single-field query and multi-field paged query with highlighting

```java
package lucene.util;

import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.store.Directory;
import org.apache.lucene.search.*;
import org.apache.lucene.search.highlight.SimpleHTMLFormatter;
import org.apache.lucene.search.highlight.Highlighter;
import org.apache.lucene.search.highlight.SimpleFragmenter;
import org.apache.lucene.search.highlight.QueryScorer;
import org.apache.lucene.queryParser.QueryParser;
import org.apache.lucene.queryParser.MultiFieldQueryParser;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.KeywordAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.Term;
import org.apache.lucene.util.Version;
import modules.gk.Gk_infoSub;

import java.util.ArrayList;
import java.io.File;
import java.io.StringReader;
import java.lang.reflect.Constructor;

import web.util.StringUtil;
import web.sys.Globals;
import org.wltea.analyzer.lucene.IKAnalyzer;

public class LuceneQuery {
    private static String indexPath; // directory that holds the index
    private int rowCount;            // number of hits
    private int pages;               // total number of pages
    private int currentPage;         // current page
    private int pageSize;            // rows per page

    public LuceneQuery() {
        indexPath = Globals.SYS_COM_CONFIG.get("sys.index.path").toString();
    }

    public int getRowCount() { return rowCount; }

    public int getPages() { return pages; }

    public int getPageSize() { return pageSize; }

    public int getCurrentPage() { return currentPage; }

    /**
     * Queries the index on a single field (title).
     */
    public ArrayList queryIndexTitle(String keyWord, int curpage, int pageSize) {
        ArrayList list = new ArrayList();
        try {
            if (curpage <= 0) {
                curpage = 1;
            }
            if (pageSize <= 0) {
                pageSize = 20;
            }
            this.pageSize = pageSize;
            this.currentPage = curpage;
            int start = (curpage - 1) * pageSize;
            Directory dir = FSDirectory.open(new File(indexPath));
            IndexReader reader = IndexReader.open(dir);
            IndexSearcher searcher = new IndexSearcher(reader);
            Analyzer analyzer = new IKAnalyzer(true);
            QueryParser queryParser = new QueryParser(Version.LUCENE_36, "title", analyzer);
            queryParser.setDefaultOperator(QueryParser.AND_OPERATOR);
            Query query = queryParser.parse(keyWord);
            int hm = start + pageSize;
            TopScoreDocCollector res = TopScoreDocCollector.create(hm, false);
            searcher.search(query, res);

            SimpleHTMLFormatter simpleHTMLFormatter = new SimpleHTMLFormatter("<span style='color:red'>", "</span>");
            Highlighter highlighter = new Highlighter(simpleHTMLFormatter, new QueryScorer(query));
            this.rowCount = res.getTotalHits();
            this.pages = (rowCount - 1) / pageSize + 1; // total number of pages
            TopDocs tds = res.topDocs(start, pageSize);
            ScoreDoc[] sd = tds.scoreDocs;
            for (int i = 0; i < sd.length; i++) {
                Document hitDoc = reader.document(sd[i].doc);
                list.add(createObj(hitDoc, analyzer, highlighter));
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return list;
    }

    /**
     * Queries the index on several fields (title, describes, keywords).
     */
    public ArrayList queryIndexFields(String allkeyword, String onekeyword, String nokeyword, int curpage, int pageSize) {
        ArrayList list = new ArrayList();
        try {
            if (curpage <= 0) {
                curpage = 1;
            }
            if (pageSize <= 0) {
                pageSize = 20;
            }
            this.pageSize = pageSize;
            this.currentPage = curpage;
            int start = (curpage - 1) * pageSize;
            Directory dir = FSDirectory.open(new File(indexPath));
            IndexReader reader = IndexReader.open(dir);
            IndexSearcher searcher = new IndexSearcher(reader);
            BooleanQuery bQuery = new BooleanQuery(); // combined query
            String[] fields = {"title", "describes", "keywords"};
            // SHOULD per field: a hit in any one of the three fields is enough.
            BooleanClause.Occur[] flags = {BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD, BooleanClause.Occur.SHOULD};
            if (!"".equals(allkeyword)) { // must contain the whole keyword (not split into words)
                KeywordAnalyzer analyzer = new KeywordAnalyzer();
                Query query = MultiFieldQueryParser.parse(Version.LUCENE_36, allkeyword, fields, flags, analyzer);
                bQuery.add(query, BooleanClause.Occur.MUST); // AND
            }
            if (!"".equals(onekeyword)) { // must contain any of the segmented keywords
                Analyzer analyzer = new IKAnalyzer(true);
                Query query = MultiFieldQueryParser.parse(Version.LUCENE_36, onekeyword, fields, flags, analyzer);
                bQuery.add(query, BooleanClause.Occur.MUST); // AND
            }
            if (!"".equals(nokeyword)) { // excluded keywords
                Analyzer analyzer = new IKAnalyzer(true);
                Query query = MultiFieldQueryParser.parse(Version.LUCENE_36, nokeyword, fields, flags, analyzer);
                bQuery.add(query, BooleanClause.Occur.MUST_NOT); // NOT
            }
            int hm = start + pageSize;
            TopScoreDocCollector res = TopScoreDocCollector.create(hm, false);
            searcher.search(bQuery, res);
            SimpleHTMLFormatter simpleHTMLFormatter = new SimpleHTMLFormatter("<span style='color:red'>", "</span>");
            Highlighter highlighter = new Highlighter(simpleHTMLFormatter, new QueryScorer(bQuery));
            this.rowCount = res.getTotalHits();
            this.pages = (rowCount - 1) / pageSize + 1; // total number of pages
            System.out.println("rowCount:" + rowCount);
            TopDocs tds = res.topDocs(start, pageSize);
            ScoreDoc[] sd = tds.scoreDocs;
            Analyzer analyzer = new IKAnalyzer();
            for (int i = 0; i < sd.length; i++) {
                Document hitDoc = reader.document(sd[i].doc);
                list.add(createObj(hitDoc, analyzer, highlighter));
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return list;
    }

    /**
     * Returns the highlighted form of text for the given field, or text itself
     * when it is empty or the highlighter finds no match.
     */
    private static String highlight(String field, String text, Analyzer analyzer, Highlighter highlighter) throws Exception {
        if ("".equals(text)) {
            return text;
        }
        highlighter.setTextFragmenter(new SimpleFragmenter(text.length()));
        TokenStream tk = analyzer.tokenStream(field, new StringReader(text));
        String htext = StringUtil.null2String(highlighter.getBestFragment(tk, text));
        return "".equals(htext) ? text : htext;
    }

    /**
     * Builds the result object (with highlighting).
     */
    private synchronized static Object createObj(Document doc, Analyzer analyzer, Highlighter highlighter) {
        Gk_infoSub gk = new Gk_infoSub();
        try {
            if (doc != null) {
                gk.setIndexno(StringUtil.null2String(doc.get("indexno")));
                gk.setPdate(StringUtil.null2String(doc.get("pdate")));
                gk.setTitle(highlight("title", StringUtil.null2String(doc.get("title")), analyzer, highlighter));
                gk.setKeywords(highlight("keywords", StringUtil.null2String(doc.get("keywords")), analyzer, highlighter));
                // The original passed "keywords" as the field name here; "describes" is the field being highlighted.
                gk.setDescribes(highlight("describes", StringUtil.null2String(doc.get("describes")), analyzer, highlighter));
            }
            return gk;
        } catch (Exception e) {
            e.printStackTrace();
            return null;
        }
    }

    /**
     * Builds the result object (no highlighting).
     */
    private synchronized static Object createObj(Document doc) {
        Gk_infoSub gk = new Gk_infoSub();
        try {
            if (doc != null) {
                gk.setIndexno(StringUtil.null2String(doc.get("indexno")));
                gk.setPdate(StringUtil.null2String(doc.get("pdate")));
                gk.setTitle(StringUtil.null2String(doc.get("title")));
                gk.setKeywords(StringUtil.null2String(doc.get("keywords")));
                gk.setDescribes(StringUtil.null2String(doc.get("describes")));
            }
            return gk;
        } catch (Exception e) {
            e.printStackTrace();
            return null;
        }
    }
}
```

Single-field query:

```java
long a = System.currentTimeMillis();
try {
    int curpage = StringUtil.StringToInt(StringUtil.null2String(form.get("curpage")));
    int pagesize = StringUtil.StringToInt(StringUtil.null2String(form.get("pagesize")));
    String title = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("title")));
    LuceneQuery lu = new LuceneQuery();
    form.addResult("list", lu.queryIndexTitle(title, curpage, pagesize));
    form.addResult("curPage", lu.getCurrentPage());
    form.addResult("pageSize", lu.getPageSize());
    form.addResult("rowCount", lu.getRowCount());
    form.addResult("pageCount", lu.getPages());
} catch (Exception e) {
    e.printStackTrace();
}
long b = System.currentTimeMillis();
long c = b - a;
System.out.println("[Search took " + c + " ms]");
```

Multi-field query:

```java
long a = System.currentTimeMillis();
try {
    int curpage = StringUtil.StringToInt(StringUtil.null2String(form.get("curpage")));
    int pagesize = StringUtil.StringToInt(StringUtil.null2String(form.get("pagesize")));
    String allkeyword = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("allkeyword")));
    String onekeyword = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("onekeyword")));
    String nokeyword = StringUtil.replaceLuceneStr(StringUtil.null2String(form.get("nokeyword")));
    LuceneQuery lu = new LuceneQuery();
    form.addResult("list", lu.queryIndexFields(allkeyword, onekeyword, nokeyword, curpage, pagesize));
    form.addResult("curPage", lu.getCurrentPage());
    form.addResult("pageSize", lu.getPageSize());
    form.addResult("rowCount", lu.getRowCount());
    form.addResult("pageCount", lu.getPages());
} catch (Exception e) {
    e.printStackTrace();
}
long b = System.currentTimeMillis();
long c = b - a;
System.out.println("[Advanced search took " + c + " ms]");
```

4. Lucene wildcard query

```java
BooleanQuery bQuery = new BooleanQuery(); // combined query
if (!"".equals(title)) {
    WildcardQuery w1 = new WildcardQuery(new Term("title", title + "*"));
    bQuery.add(w1, BooleanClause.Occur.MUST); // AND
}
int hm = start + pageSize;
TopScoreDocCollector res = TopScoreDocCollector.create(hm, false);
searcher.search(bQuery, res);
```

5. Lucene nested query

This implements the SQL condition: (unitid like 'unitid%' and idml like 'id2%') or (tounitid like 'unitid%' and tomlid like 'id2%' and tostate=1)

```java
BooleanQuery bQuery = new BooleanQuery();
BooleanQuery b1 = new BooleanQuery();
WildcardQuery w1 = new WildcardQuery(new Term("unitid", unitid + "*"));
WildcardQuery w2 = new WildcardQuery(new Term("idml", id2 + "*"));
b1.add(w1, BooleanClause.Occur.MUST); // AND
b1.add(w2, BooleanClause.Occur.MUST); // AND
bQuery.add(b1, BooleanClause.Occur.SHOULD); // OR
BooleanQuery b2 = new BooleanQuery();
WildcardQuery w3 = new WildcardQuery(new Term("tounitid", unitid + "*"));
WildcardQuery w4 = new WildcardQuery(new Term("tomlid", id2 + "*"));
WildcardQuery w5 = new WildcardQuery(new Term("tostate", "1"));
b2.add(w3, BooleanClause.Occur.MUST); // AND
b2.add(w4, BooleanClause.Occur.MUST); // AND
b2.add(w5, BooleanClause.Occur.MUST); // AND
bQuery.add(b2, BooleanClause.Occur.SHOULD); // OR
```

6. Sorting by date first, then paginating

```java
int hm = start + pageSize;
Sort sort = new Sort(new SortField("pdate", SortField.STRING, true));
TopScoreDocCollector res = TopScoreDocCollector.create(pageSize, false);
searcher.search(bQuery, res); // first search: only used to count the hits
this.rowCount = res.getTotalHits();
this.pages = (rowCount - 1) / pageSize + 1; // total number of pages
TopDocs tds = searcher.search(bQuery, rowCount, sort); // second search, replaces res.topDocs(start, pageSize)
ScoreDoc[] sd = tds.scoreDocs;
System.out.println("rowCount:" + rowCount);
int i = 0;
for (ScoreDoc scoreDoc : sd) {
    i++;
    if (i <= start) { // the original tested i < start, which also returned the last hit of the previous page
        continue;
    }
    if (i > hm) {
        break;
    }
    Document doc = searcher.doc(scoreDoc.doc);
    list.add(createObj(doc));
}
```

This approach is inefficient. The usual practice is to sort when the index is built and then use the paging method, rather than running the query twice as shown here.
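The paging arithmetic used throughout the queries above can be isolated into a small helper. The following is a minimal sketch using only the Java standard library; the class name `PageMath` and its methods are hypothetical and not part of the original code. Unlike the original formula `(rowCount - 1) / pageSize + 1`, which yields 1 page for zero hits because Java integer division truncates toward zero, this sketch returns 0 pages in that case. It assumes `pageSize` is positive.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper illustrating the page arithmetic; not part of the original code.
public class PageMath {

    /** Total number of pages for rowCount hits; zero hits yields zero pages. */
    public static int pageCount(int rowCount, int pageSize) {
        return rowCount <= 0 ? 0 : (rowCount - 1) / pageSize + 1;
    }

    /** Returns the hits on the 1-based page curPage of an already sorted list. */
    public static <T> List<T> page(List<T> sortedHits, int curPage, int pageSize) {
        int start = (Math.max(curPage, 1) - 1) * pageSize; // index of the first hit on the page
        if (start >= sortedHits.size()) {
            return new ArrayList<>();
        }
        int end = Math.min(start + pageSize, sortedHits.size()); // exclusive upper bound
        return new ArrayList<>(sortedHits.subList(start, end));
    }

    public static void main(String[] args) {
        List<Integer> hits = new ArrayList<>();
        for (int i = 1; i <= 45; i++) {
            hits.add(i);
        }
        System.out.println(pageCount(hits.size(), 20)); // 3
        System.out.println(page(hits, 3, 20));          // [41, 42, 43, 44, 45]
    }
}
```

Using a half-open range `[start, end)` avoids the off-by-one risk of counting hits by hand inside the loop.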

Design and Implementation of a Lucene-Based Chinese Word Segmenter

Peng Huanfeng (彭焕峰)

[Abstract] The Chinese analyzers bundled with Lucene segment text poorly. After analyzing existing segmentation dictionary mechanisms, this paper designs a segmenter based on an all-hash, whole-word binary search algorithm and integrates it into Lucene. The algorithm hashes whole words, which reduces the number of dictionary entry comparisons and improves segmentation efficiency.

The segmenter's dictionary file is easy to maintain and can be customized for different applications, which improves retrieval efficiency.

[Journal] Microcomputer & Its Applications (《微型机与应用》) [Year (Volume), Issue] 2011 (030) 018 [Total pages] 3 (P62-64) [Keywords] Lucene; hash; whole-word binary search; maximum matching [Author] Peng Huanfeng [Affiliation] School of Computer Engineering, Nanjing Institute of Technology, Nanjing, Jiangsu 211167 [Language] Chinese [CLC number] TP391.1

The development of information technology has produced massive amounts of electronic data. Demands on information retrieval keep rising, search engine technology has developed rapidly, and it is being applied in more and more fields.

Chinese word segmentation in Java

IK Analyzer is an open-source word segmentation framework built on Lucene.

Download path: /so/search/s.do?q=IKAnalyzer2012.jar&t=doc&o=&s=all&l=null

The project needs these jars: IKAnalyzer2012.jar and lucene-core-3.6.0.jar.

There are two ways to implement it.

Approach 1 (labelled "lucene" in the source, although the code calls IK's own IKSegmenter directly):

```java
import java.io.IOException;
import java.io.StringReader;
import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

public class Fenci1 {
    public static void main(String[] args) throws IOException {
        String text = "你好,我的世界!";
        StringReader sr = new StringReader(text);
        IKSegmenter ik = new IKSegmenter(sr, true); // true selects smart segmentation
        Lexeme lex = null;
        while ((lex = ik.next()) != null) {
            System.out.print(lex.getLexemeText() + ",");
        }
    }
}
```

Approach 2 (labelled "IK Analyzer" in the source; the code goes through the Lucene Analyzer API):

```java
import java.io.IOException;
import java.io.StringReader;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.wltea.analyzer.lucene.IKAnalyzer;

public class Fenci {
    public static void main(String[] args) throws IOException {
        String text = "你好,我的世界!";
        // create the analyzer
        Analyzer anal = new IKAnalyzer(true);
        StringReader reader = new StringReader(text);
        // segment the text
        TokenStream ts = anal.tokenStream("", reader);
        CharTermAttribute term = ts.getAttribute(CharTermAttribute.class);
        // iterate over the tokens
        while (ts.incrementToken()) {
            System.out.print(term.toString() + ",");
        }
        reader.close();
        System.out.println();
    }
}
```

Output after running: 你好,我,的,世界,

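Lucene-Based Chinese Text Word Segmentation

Wang Jiming (王继明); Yang Guolin (杨国林)

[Journal] Journal of Inner Mongolia University of Technology (Natural Science Edition) (《内蒙古工业大学学报(自然科学版)》)

[Year (Volume), Issue] 2007 (026) 003

[Abstract] Chinese text word segmentation is an important branch of text mining and is still developing in China. Lucene, an open-source Apache Jakarta project, is an excellent Java-based text retrieval toolkit that is already widely used abroad. Its support for Chinese word segmentation, however, is not ideal. Adding good Chinese word segmentation to Lucene would greatly promote its adoption and application in China.

[Total pages] 4 (P185-188)

[Authors] Wang Jiming; Yang Guolin

[Affiliation] College of Information Engineering, Inner Mongolia University of Technology, Hohhot 010051; College of Information Engineering, Inner Mongolia University of Technology, Hohhot 010051

[Language] Chinese

[CLC number] TP311.11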


Design and Implementation of a Custom Lucene-Based Chinese Word Segmenter

Wang Tong (王桐); Wang Yunting (王韵婷)

[Journal] Computer Knowledge and Technology (《电脑知识与技术》) [Year (Volume), Issue] 2014 (000) 002

[Abstract] This paper designs a custom Chinese analyzer based on the complex form of the maximum matching algorithm (MMSeg_Complex). The analyzer applies four disambiguation rules and supports a user-defined lexicon, custom synonyms, and stop words. It can be integrated into Lucene easily, which effectively improves Lucene's handling of Chinese.

Experiments show that its segmentation performance is greatly improved compared with the Chinese analyzers bundled with Lucene, and an efficient Chinese full-text retrieval system was built on top of it.

[Total pages] 4 (P430-433) [Authors] Wang Tong; Wang Yunting [Affiliation] College of Information and Communication Engineering, Harbin Engineering University, Harbin, Heilongjiang 150001; College of Information and Communication Engineering, Harbin Engineering University, Harbin, Heilongjiang 150001 [Language] Chinese [CLC number] TP393

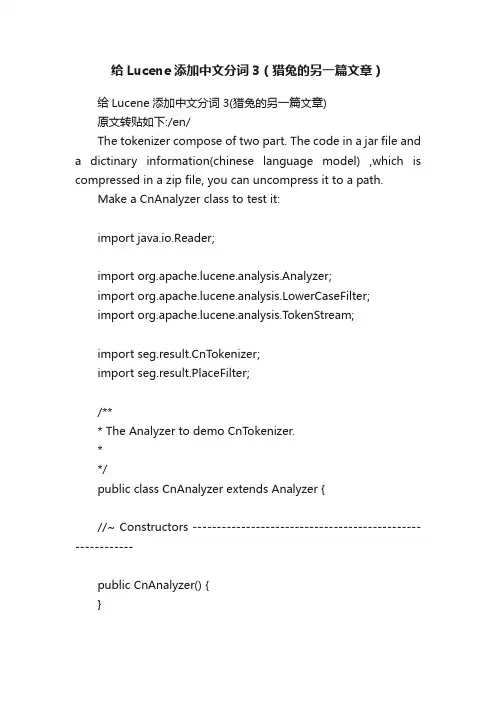

Adding Chinese word segmentation to Lucene, part 3 (another article by Lietu)

The original text is reposted below: /en/

The tokenizer is composed of two parts: the code, in a jar file, and the dictionary information (a Chinese language model), which is compressed in a zip file that you can uncompress to a path.

Make a CnAnalyzer class to test it:

```java
import java.io.Reader;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.LowerCaseFilter;
import org.apache.lucene.analysis.TokenStream;
// The import of CnTokenizer is truncated in the source ("import Tokenizer;").
import seg.result.PlaceFilter;

/**
 * The Analyzer to demo CnTokenizer.
 */
public class CnAnalyzer extends Analyzer {
    //~ Constructors -----------------------------------------------------------
    public CnAnalyzer() {
    }

    //~ Methods ----------------------------------------------------------------
    /**
     * get token stream from input
     *
     * @param fieldName lucene field name
     * @param reader input reader
     *
     * @return TokenStream
     */
    public final TokenStream tokenStream(String fieldName, Reader reader) {
        TokenStream result = new CnTokenizer(reader);
        result = new LowerCaseFilter(result);
        // a place-name filter is added as well
        result = new PlaceFilter(result);
        return result;
    }
}
```

Use a test class to test CnAnalyzer (the excerpt is cut off after the sample sentence):

```java
public static void testCnAnalyzer() throws Exception {
    long startTime;
    long endTime;
    StringReader input;
    CnTokenizer.makeTag = false;
    String sentence = "其中包括兴安至全州、桂林至兴安、全州至黄沙河、阳朔至平乐、桂林至阳朔、桂林市国道过境线灵川至三塘段、平乐至钟山、桂林至三江高速公路。";
    // (the source excerpt ends here)
```

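Thesis proposal: Chinese Word Segmentation and Its Application in Lucene-Based Full-Text Retrieval

I. Research background

With the rapid development of search engine technology, full-text retrieval has become one of the mainstream techniques in information retrieval, and it is especially efficient for large volumes of text.

Chinese word segmentation is a crucial step in full-text retrieval: Chinese text has to be divided into words or phrases according to some set of rules before it can be searched and processed further.

II. Purpose and significance

Chinese word segmentation is a core problem in Chinese information processing and text mining. Its accuracy and efficiency largely determine the quality and speed of text processing.

This thesis therefore studies and analyzes Chinese word segmentation techniques and explores their application in Lucene-based full-text retrieval, with the goal of improving the accuracy and efficiency of Chinese text retrieval.

III. Research content

1. Study and analysis of Chinese word segmentation techniques. The thesis examines the basic concepts, traditional methods, and current techniques of Chinese word segmentation in detail, including rule-based, dictionary-based, and statistical methods, as well as the deep learning approaches that have emerged in recent years.

2. Design and implementation of a Lucene-based full-text retrieval system. Taking a Lucene-based system as the example, the thesis designs and implements a full-text retrieval system and, combined with Chinese word segmentation, explores how to achieve accurate segmentation and fast retrieval of Chinese text within it.

The core techniques covered are index construction, query processing, and result ranking.

IV. Research methods

The thesis uses literature review, theoretical analysis, case analysis, and hands-on practice: it collects and analyzes the literature on Chinese word segmentation, reasons about the design and implementation of the retrieval system, and validates the theoretical analysis through practice.

V. Expected results

1. Theoretical results: a detailed account of the characteristics, strengths, and weaknesses of Chinese word segmentation techniques, and an analysis of their application in full-text retrieval and of development trends.

2. Practical results: a Lucene-based full-text retrieval system that uses Chinese word segmentation to achieve accurate segmentation and fast retrieval of Chinese text.

3. Economic benefit: more accurate and efficient Chinese text retrieval, giving companies and organizations faster and more precise text search and thereby improving productivity and economic returns.

VI. Thesis structure

The thesis has five parts: introduction; study and analysis of Chinese word segmentation techniques; design and implementation of the Lucene-based full-text retrieval system; analysis of experimental results; and conclusions and outlook.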

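Principles and Implementation of Chinese Word Segmentation in Search Engines

Chinese text, unlike English, has no boundaries between words. Word segmentation is therefore the first problem that a search engine specialized in Chinese has to face: the words must be cut out by a program.

I. Chinese word segmentation in Lucene

Lucene commonly handles Chinese in one of three ways, illustrated here with the phrase "咬死猎人的狗":

Single characters: 【咬】【死】【猎】【人】【的】【狗】
Bigram overlap: 【咬死】【死猎】【猎人】【人的】【的狗】
Word segmentation: 【咬】【死】【猎人】【的】【狗】

Lucene's StandardTokenizer uses the single-character approach, and CJKTokenizer uses the bigram approach.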
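The single-character and bigram schemes above can be sketched with plain Java. This is only an illustration under stated assumptions: the class `NGramDemo` and its `ngrams` method are hypothetical, and Lucene's real tokenizers also handle Latin text, digits, offsets, and inputs shorter than the n-gram size, which this sketch does not.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of unigram and bigram splitting; not Lucene's implementation.
public class NGramDemo {

    /** Splits text into overlapping n-grams of code points (n = 1 gives single characters). */
    public static List<String> ngrams(String text, int n) {
        int[] codePoints = text.codePoints().toArray();
        List<String> out = new ArrayList<>();
        for (int i = 0; i + n <= codePoints.length; i++) {
            out.add(new String(codePoints, i, n));
        }
        return out;
    }

    public static void main(String[] args) {
        String text = "咬死猎人的狗";
        System.out.println(ngrams(text, 1)); // [咬, 死, 猎, 人, 的, 狗]
        System.out.println(ngrams(text, 2)); // [咬死, 死猎, 猎人, 人的, 的狗]
    }
}
```

Bigram overlap needs no dictionary, at the cost of indexing pairs such as 死猎 that are not words.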

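1. How Lucene splits text

The language processing part of Lucene lives in the org.apache.lucene.analysis package. The TokenStream class does the basic tokenizing work, and the Analyzer class wraps TokenStream and is responsible for the whole analysis process: it receives a complete piece of text and extracts the meaningful words.

Application code usually does not call the analysis classes directly; Lucene calls them internally:

(1) At indexing time, when addDocument(doc) is called, Lucene uses the Analyzer internally to process every field that needs to be indexed, as shown in Figure 1.

Figure 1: How Lucene processes text to be indexed

```java
IndexWriter index = new IndexWriter(indexDirectory,
        new CnAnalyzer(), // the analyzer that provides word segmentation
        !incremental,
        IndexWriter.MaxFieldLength.UNLIMITED);
```

(2) At search time, when QueryParser.parse(queryText) is called to parse the query string, QueryParser calls the Analyzer to split the query string. For wildcard and similar queries, however, the Analyzer is not called.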

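Lucene Chinese word segmentation

What is Chinese word segmentation

English is written in words separated by spaces. Chinese is written in characters, and the characters of a sentence only express a meaning once they are read together.

For example, the English sentence "I am a student" is "我是一个学生" in Chinese.

A computer can easily tell from the spaces that "student" is a word, but it cannot easily tell that the two characters "学" and "生" together form one word.

Cutting a sequence of Chinese characters into meaningful words is called Chinese word segmentation; some people also call it word cutting.

For "我是一个学生", the segmentation result is: 我 是 一个 学生.

Chinese word segmentation techniques

Existing segmentation techniques fall into three classes: segmentation based on string matching, segmentation based on understanding, and segmentation based on statistics. This article uses string matching, which is also called mechanical segmentation.

It matches the Chinese character string to be analyzed against the entries of a "sufficiently large" lexicon according to some strategy.

If a string is found in the lexicon, the match succeeds and a word has been recognized.

By scan direction, string matching methods divide into forward and reverse matching. By which length is preferred, they divide into maximum (longest) and minimum (shortest) matching. By whether they are combined with part-of-speech tagging, they divide into pure segmentation and combined segmentation and tagging.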
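To make the effect of scan direction concrete, here is a minimal sketch of reverse maximum matching in plain Java. The class `ReverseMaxMatch`, the tiny dictionary, and the `maxLen` parameter are assumptions made for illustration; they do not come from the article. The sketch works on `char` indices, so it assumes text within the Basic Multilingual Plane, and it emits unknown characters as single-character tokens.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedList;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of reverse (right-to-left) maximum matching.
public class ReverseMaxMatch {

    /** Segments text from right to left, always taking the longest dictionary word that ends at the cursor. */
    public static List<String> segment(String text, Set<String> dict, int maxLen) {
        LinkedList<String> out = new LinkedList<>();
        int end = text.length();
        while (end > 0) {
            int len = Math.min(maxLen, end);
            // Shrink the candidate from the left until it is a dictionary word or a single character.
            while (len > 1 && !dict.contains(text.substring(end - len, end))) {
                len--;
            }
            out.addFirst(text.substring(end - len, end));
            end -= len;
        }
        return out;
    }

    public static void main(String[] args) {
        Set<String> dict = new HashSet<>(Arrays.asList("研究", "研究生", "生命", "起源"));
        System.out.println(segment("研究生命的起源", dict, 3)); // [研究, 生命, 的, 起源]
    }
}
```

With the same dictionary, a forward scan would take 研究生 first and leave 命 stranded, which is why the two directions can disagree on ambiguous strings.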

Commonly used mechanical segmentation methods are forward maximum matching (scanning left to right) and reverse maximum matching (scanning right to left).

Implementing the analyzer

This Lucene analyzer, which implements forward maximum matching, consists of two classes, CJKAnalyzer and CJKTokenizer. Their source code follows:

```java
package org.solol.analysis;

import java.io.Reader;
import java.util.Set;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.StopFilter;
import org.apache.lucene.analysis.TokenStream;

/**
 * @author solo L
 */
public class CJKAnalyzer extends Analyzer { // extends Analyzer, as Lucene requires
    public final static String[] STOP_WORDS = {};
    private Set stopTable;

    public CJKAnalyzer() {
        stopTable = StopFilter.makeStopSet(STOP_WORDS);
    }

    @Override
    public TokenStream tokenStream(String fieldName, Reader reader) {
        return new StopFilter(new CJKTokenizer(reader), stopTable);
    }
}
```

```java
package org.solol.analysis;

import java.io.BufferedReader;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.Reader;
import java.util.TreeMap;
import org.apache.lucene.analysis.Token;
import org.apache.lucene.analysis.Tokenizer;

/**
 * @author solo L
 */
public class CJKTokenizer extends Tokenizer {
    // this TreeMap caches the lexicon
    private static TreeMap simWords = null;
    private static final int IO_BUFFER_SIZE = 256;
    private int bufferIndex = 0;
    private int dataLen = 0;
    private final char[] ioBuffer = new char[IO_BUFFER_SIZE];
    private String tokenType = "word";

    public CJKTokenizer(Reader input) {
        this.input = input;
    }

    // this is the key part of a Lucene tokenizer implementation
    public Token next() throws IOException {
        loadWords();
        StringBuffer currentWord = new StringBuffer();
        while (true) {
            char c;
            Character.UnicodeBlock ub;
            if (bufferIndex >= dataLen) {
                dataLen = input.read(ioBuffer);
                bufferIndex = 0;
            }
            if (dataLen == -1) {
                if (currentWord.length() == 0) {
                    return null;
                } else {
                    break;
                }
            } else {
                c = ioBuffer[bufferIndex++];
                ub = Character.UnicodeBlock.of(c);
            }
            // As this condition shows, only CJK_UNIFIED_IDEOGRAPHS are handled,
            // so all other characters, such as Latin letters and digits, are dropped.
            // This is a limitation of this tokenizer; you are welcome to improve on it
            // and to send your improvements back to me.
            if (Character.isLetter(c) && ub == Character.UnicodeBlock.CJK_UNIFIED_IDEOGRAPHS) {
                tokenType = "double";
                if (currentWord.length() == 0) {
                    currentWord.append(c);
                } else {
                    // forward maximum matching: extend the word while the result is still in the lexicon
                    String temp = (currentWord.toString() + c).intern();
                    if (simWords.containsKey(temp)) {
                        currentWord.append(c);
                    } else {
                        bufferIndex--;
                        break;
                    }
                }
            }
        }
        Token token = new Token(currentWord.toString(), bufferIndex - currentWord.length(), bufferIndex, tokenType);
        currentWord.setLength(0);
        return token;
    }

    // Loads the lexicon. Understanding this logic and why it is written this way is
    // necessary to understand how forward maximum matching is implemented here:
    // the prefixes of 3- and 4-character words are stored too (value "2"), so that
    // next() can keep extending a word one character at a time.
    public void loadWords() {
        if (simWords != null) return;
        simWords = new TreeMap();
        try {
            InputStream words = new FileInputStream("simchinese.txt");
            BufferedReader in = new BufferedReader(new InputStreamReader(words, "UTF-8"));
            String word = null;
            while ((word = in.readLine()) != null) {
                // lines containing # are skipped, which allows comments in the lexicon
                if ((word.indexOf("#") == -1) && (word.length() < 5)) {
                    simWords.put(word.intern(), "1");
                    if (word.length() == 3) {
                        if (!simWords.containsKey(word.substring(0, 2).intern())) {
                            simWords.put(word.substring(0, 2).intern(), "2");
                        }
                    }
                    if (word.length() == 4) {
                        if (!simWords.containsKey(word.substring(0, 2).intern())) {
                            simWords.put(word.substring(0, 2).intern(), "2");
                        }
                        if (!simWords.containsKey(word.substring(0, 3).intern())) {
                            simWords.put(word.substring(0, 3).intern(), "2");
                        }
                    }
                }
            }
            in.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

Segmentation results

Here is a passage I picked at random from a news story of the day: 此外,巴黎市政府所在地和巴黎两座体育场会挂出写有相同话语的巨幅标语,这两座体育场还安装了巨大屏幕,以方便巴黎市民和游客观看决赛。

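Integrating the IK analyzer with Lucene and extending its dictionary

This article describes how to integrate IKAnalyzer into Lucene.

1 Environment

System: Windows 10
Lucene version: 7.3.0 https:///
JDK: 1.8

2 About the IKAnalyzer integration

The IK analyzer was first developed for use with Lucene, mainly to segment Chinese, and later grew into a standalone segmentation component. It currently supports Lucene only up to version 4.0, so using it with a later version requires a small amount of integration work.

The integration is needed because the parameters of Analyzer's createComponents method changed after Lucene 4.0.

In the Lucene support classes shipped in the IKAnalyzer package, we can see that in versions after 4.0 this method takes only one parameter, fieldName, and no longer takes the second parameter, the input stream.

The classes therefore have to be modified before use.

3 Maven dependencies

```xml
<!-- Lucene core module -->
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-core</artifactId>
    <version>7.3.0</version>
</dependency>
<!-- ikanalyzer Chinese analyzer -->
<dependency>
    <groupId>com.janeluo</groupId>
    <artifactId>ikanalyzer</artifactId>
    <version>2012_u6</version>
    <exclusions>
        <exclusion>
            <groupId>org.apache.lucene</groupId>
            <artifactId>lucene-core</artifactId>
        </exclusion>
        <exclusion>
            <groupId>org.apache.lucene</groupId>
            <artifactId>lucene-queryparser</artifactId>
        </exclusion>
        <exclusion>
            <groupId>org.apache.lucene</groupId>
            <artifactId>lucene-analyzers-common</artifactId>
        </exclusion>
    </exclusions>
</dependency>
```

4 Rewriting the IKAnalyzer class

We name the new class IKAnalyzer4Lucene7 and simply copy the entire content of the IKAnalyzer class from the IK package into it.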

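Chinese word segmentation methods in Lucene

Lucene is an open-source full-text search engine library that supports Chinese word segmentation.

Chinese word segmentation is the process of cutting Chinese text into individual words according to some set of rules, and it is one of the core steps of Chinese text processing.

Dictionary-based analyzers for Lucene commonly implement segmentation with an algorithm called forward maximum matching (Maximum Matching).

Forward maximum matching is a dictionary-based algorithm. Its basic idea is to scan the text from left to right, find the longest matching word, and cut it out.

The steps are as follows:

1. Build the dictionary: a Chinese dictionary containing commonly used words is needed first. It can be created by hand or generated by an automatic segmentation algorithm.
2. Forward matching: scan the text from left to right and match against the words in the dictionary. When a word matches, cut it out and move the pointer to the next position to continue matching.
3. Longest match: during matching, choose the longest matching word. This avoids cutting one word into several pieces and improves accuracy.
4. Multiple candidates: if several dictionary words match at the same position, choose the longest of them. This avoids cutting a long word into several short words and improves accuracy.
5. Post-processing: special cases such as out-of-vocabulary words (words that do not appear in the dictionary) and ambiguous words (words with more than one reading) can be handled afterwards, for example by using statistical or contextual information.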
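Steps 2 to 4 above can be sketched in a few lines of plain Java. This is a minimal illustration under stated assumptions, not Lucene's implementation: the class `ForwardMaxMatch`, the sample dictionary, and the `maxLen` cap on word length are hypothetical. Characters that match no dictionary word are emitted as single-character tokens, and the sketch indexes by `char`, so it assumes text within the Basic Multilingual Plane.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of forward (left-to-right) maximum matching.
public class ForwardMaxMatch {

    /** Segments text from left to right, always taking the longest dictionary word that starts at the cursor. */
    public static List<String> segment(String text, Set<String> dict, int maxLen) {
        List<String> out = new ArrayList<>();
        int pos = 0;
        while (pos < text.length()) {
            int len = Math.min(maxLen, text.length() - pos);
            // Try the longest candidate first, shrinking until a dictionary word or a single character remains.
            while (len > 1 && !dict.contains(text.substring(pos, pos + len))) {
                len--;
            }
            out.add(text.substring(pos, pos + len));
            pos += len;
        }
        return out;
    }

    public static void main(String[] args) {
        Set<String> dict = new HashSet<>(Arrays.asList("一个", "学生", "我", "是"));
        System.out.println(segment("我是一个学生", dict, 4)); // [我, 是, 一个, 学生]
    }
}
```

Capping candidates at `maxLen` keeps the inner loop bounded by the longest dictionary word instead of the length of the whole text.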

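An analyzer built on the algorithm above gives Lucene the ability to segment Chinese text.

To segment Chinese with Lucene, call the relevant API as follows:

1. Create an analyzer: first create a Chinese analyzer, for example the SmartChineseAnalyzer that ships with Lucene (note that SmartChineseAnalyzer itself relies on a hidden Markov model rather than on pure dictionary matching).
2. Segment: pass the Chinese text to the analyzer's tokenizing method to obtain the result. The result is a list of words containing all the words in the text.
3. Process the result: the segmentation result can be post-processed, for example by removing stop words (frequent words that carry little meaning) or by running statistics on the words.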
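The post-processing in step 3 can be sketched as follows. The class `TokenPostProcess` and its method are hypothetical names for illustration; the input is assumed to be a token list already produced by whichever analyzer is in use, so the sketch needs only the Java standard library.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of stop-word removal followed by term counting.
public class TokenPostProcess {

    /** Counts how often each token occurs, skipping stop words; keys keep first-seen order. */
    public static Map<String, Integer> countWithoutStopWords(List<String> tokens, Set<String> stopWords) {
        Map<String, Integer> freq = new LinkedHashMap<>();
        for (String token : tokens) {
            if (stopWords.contains(token)) {
                continue; // drop frequent words that carry little meaning
            }
            freq.merge(token, 1, Integer::sum);
        }
        return freq;
    }

    public static void main(String[] args) {
        List<String> tokens = Arrays.asList("我", "的", "世界", "的", "世界");
        Set<String> stopWords = new HashSet<>(Arrays.asList("的"));
        System.out.println(countWithoutStopWords(tokens, stopWords)); // {我=1, 世界=2}
    }
}
```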

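Using Lucene's Chinese word segmentation in this way makes it possible to segment Chinese text effectively and improves the results of Chinese text processing.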