Chapter 3: Neural Network Control and Applications (Fundamentals)

- Format: ppt

- Size: 716.50 KB

- Pages: 10

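Principles and Applications of Neural Networks

As technology advances, enthusiasm for artificial intelligence keeps growing.

Artificial intelligence covers many families of algorithms; neural networks are among the most fundamental and most widely applied.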

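I. Principles of Neural Networks

A neural network is a computational model that imitates the neurons of the human brain; it consists of a large number of neurons and the connections between them.

Each neuron has its own inputs and output. Each input is scaled by a "weight" that sets how strongly it influences the neuron; the neuron sums the weighted inputs and passes the sum through an output function to produce its output.

The input function can take different forms; in the usual case, the weighted sum of the inputs is fed into an activation function, whose value is the neuron's output.

Many activation functions exist; common ones include the sigmoid, ReLU, and tanh functions.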
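The weighted-sum-then-activation step described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `neuron_output`, the optional `bias` argument, and the sample numbers are assumptions introduced here and do not come from the original text.

```python
import math


def sigmoid(z):
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear unit: passes positive values, clips negatives to 0."""
    return max(0.0, z)


def neuron_output(inputs, weights, activation, bias=0.0):
    """Weighted sum of the inputs, then the activation function.

    `bias` is an assumption added for illustration; the text above
    only mentions the weighted sum.
    """
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Hypothetical inputs and weights; the weighted sum is 0.5 - 0.5 - 1.0 = -1.0
x = [1.0, 2.0, -1.0]
w = [0.5, -0.25, 1.0]

print(neuron_output(x, w, relu))       # 0.0, because ReLU clips -1.0 to 0
print(neuron_output(x, w, sigmoid))    # a value in (0, 1)
print(neuron_output(x, w, math.tanh))  # a value in (-1, 1)
```

The same weighted sum gives three different outputs depending on the activation chosen, which is why the choice of activation function matters.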

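Connection weights are a central concept in neural networks: they determine the strength and direction of the signal passed between neurons.

Training typically uses the backpropagation algorithm: the connection weights are adjusted repeatedly on the training data in search of weights that minimize the loss function.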
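To make the training loop concrete, here is a small sketch of backpropagation with NumPy on the XOR problem. Everything specific in it is an assumption chosen for illustration: the network size (2 inputs, 4 hidden units, 1 output), the mean-squared-error loss, the learning rate, and the function name `train_xor`. The original text does not prescribe any of these.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(steps=5000, lr=0.5, hidden=4, seed=0):
    """Train a tiny 2-hidden-1 network on XOR; return the loss per step."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Connection weights (random start) and biases (zero start)
    W1 = rng.normal(0.0, 1.0, size=(2, hidden))
    b1 = np.zeros((1, hidden))
    W2 = rng.normal(0.0, 1.0, size=(hidden, 1))
    b2 = np.zeros((1, 1))

    losses = []
    for _ in range(steps):
        # Forward pass
        h = sigmoid(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)
        losses.append(float(np.mean((out - y) ** 2)))

        # Backward pass: chain rule from the loss back to each weight
        d_out = 2.0 * (out - y) / len(X) * out * (1.0 - out)
        d_h = (d_out @ W2.T) * h * (1.0 - h)

        # Gradient-descent update of weights and biases
        W2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0, keepdims=True)
        W1 -= lr * (X.T @ d_h)
        b1 -= lr * d_h.sum(axis=0, keepdims=True)
    return losses


losses = train_xor()
print("first loss:", losses[0])
print("last loss: ", losses[-1])
```

The loss is recorded before each update, so comparing the first and last entries shows whether the weight adjustments actually reduced the error on the training data.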

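II. Applications of Neural Networks

Neural networks are used widely; the most prominent applications are the following.

1. Image recognition

Neural networks can recognize images, a capability that matters greatly in computer vision and human-computer interaction.

To learn to recognize images, a network is fed training data, its predictions are compared with the expected results to measure the error, and its weights are adjusted to raise prediction accuracy.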

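2. Natural language processing

Neural networks can be trained on natural language processing tasks such as speech recognition, machine translation, and sentiment analysis.

In these tasks, a network can learn the syntax, semantics, and contextual information of natural language, which improves the accuracy and speed of language processing.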

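3. Recommender systems

Neural networks can use a user's behavior history and other information to recommend products, videos, and news that match the user's interests.

Here, a network can pick out the patterns and signals hidden in user behavior and so provide more precise recommendations.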

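4. Game AI

Neural networks can make decisions in games, control characters automatically, and provide game-intelligence services.

In these applications, neural networks are paired with evolutionary learning algorithms to improve a character's judgment and reaction speed, which in turn helps players win.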

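III. The Future of Neural Networks

Neural networks have produced outstanding results in many fields.

As hardware keeps improving and datasets keep growing, the outlook for neural networks is bright.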

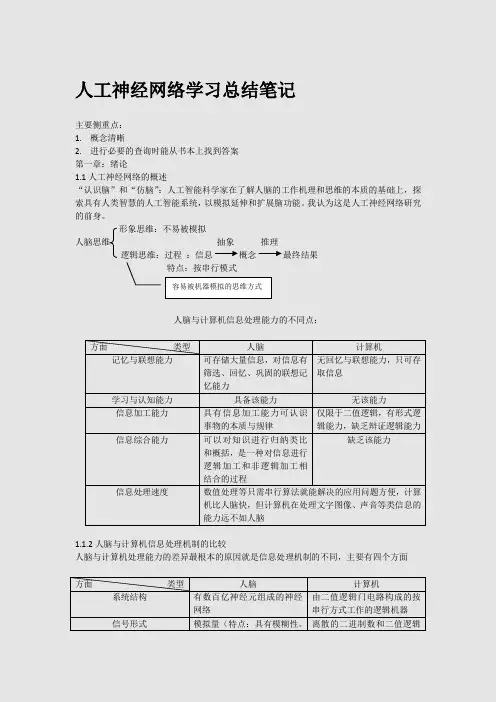

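Study Notes on Artificial Neural Networks

Main focus: 1. clear concepts; 2. being able to find the answer in the textbook when something needs to be looked up.

Chapter 1: Introduction

1.1 Overview of artificial neural networks

"Understanding the brain" and "imitating the brain": building on an understanding of how the human brain works and of the nature of thought, AI scientists explore artificial intelligence systems with human-like intelligence, in order to simulate, extend, and expand the functions of the brain.

In my view, this is the precursor of artificial neural network research.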

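Human thinking:

- Imagery thinking: not easily simulated.
- Abstract reasoning (logical thinking): process: information → concept → final result; characteristic: proceeds in serial mode.

Differences between the human brain and the computer in information-processing capability:

| Aspect | Human brain | Computer |
|---|---|---|
| Memory and association | Stores large amounts of information; associative memory that can filter, recall, and consolidate information | No recall or association; can only store and retrieve information |
| Learning and cognition | Has this capability | Lacks this capability |
| Information processing | Can process information and grasp the essence and laws of things | Limited to binary logic; capable of formal logic but lacks dialectical logic |
| Information synthesis | Can generalize, draw analogies, and summarize knowledge, combining logical and non-logical processing of information | Lacks this capability |

Information-processing speed: for numerical problems that a serial algorithm can solve, the computer is faster than the brain; at handling text, images, sound, and similar information, the computer falls far short of the brain.

1.1.2 Comparison of information-processing mechanisms in the human brain and the computer

The most fundamental reason for the difference in capability between brain and computer is their different information-processing mechanisms, which differ in four main respects:

| Aspect | Human brain | Computer |
|---|---|---|
| System structure | A neural network made up of tens of billions of neurons | A logic machine built from binary logic gate circuits that works serially |
| Signal form | Two forms: analog quantities (characteristically fuzzy, hard for machines to simulate) and pulses | Discrete binary numbers and binary logic, a mode of thinking that machines simulate easily |
| Information storage | Information is distributed across the whole system; what is stored is associative | |
| Information-processing mechanism | A highly parallel, nonlinear information-processing system (in structure, in information storage, and in the running of information processing) | A limited, centralized, serial processing mechanism |

1.1.3 The concept of the artificial neural network: building on a basic understanding of the brain's neural network, one abstracts that network mathematically from the standpoint of information processing and constructs a simplified model, which is called an artificial neural network. It is a simplification, abstraction, and simulation of the human brain: an information-processing system intended to imitate the brain's structure and functions.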

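Another definition: a computing system formed by a very large number of very simple processing units connected to one another in some fashion; when information is input from outside, the system processes it through its dynamic response.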

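Neural Networks & Application

Chapter 3: Perceptron Neural Networks

- Single-layer perceptron
- Multilayer perceptron
- The basic BP algorithm
- Improvements to the standard BP algorithm
- Design fundamentals for BP-based multilayer perceptrons
- Applications and design examples of BP-based multilayer perceptrons
- Courseware download: :8080/aiwebdrive/wdshare/getsh are.do?action=exhibition&theParam=liangjing@zzu.e

3.1 Single-layer perceptron

- In 1958, the American psychologist Frank Rosenblatt proposed a neural network with a single layer of computing units, called the Perceptron.

- Perceptron research was the first to put forward the ideas of self-organization and self-learning. Moreover, for the problems a perceptron can solve there is a convergent algorithm whose convergence can be proved rigorously, so the perceptron gave neural network research an important push.

- The single-layer perceptron is very simple in both structure and function, so it is rarely used for practical problems. It is nonetheless important in neural network research: it is the basis for studying other networks, and it is relatively easy to learn and understand, which makes it a good starting point for learning about neural networks.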

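3.1.1 The perceptron model

Single-layer perceptron: a perceptron with only one layer of processing units. The input layer (sensing layer) receives the input vector, and the output layer (processing layer) produces the output vector:

$$X = (x_1, x_2, \ldots, x_n)^T$$

$$O = (o_1, o_2, \ldots, o_m)^T$$

$$W_j = (w_{1j}, w_{2j}, \ldots, w_{nj})^T, \quad j = 1, 2, \ldots, m$$

For any node $j$ in the processing layer, its net input $net'_j$ is the weighted sum of the inputs from the input-layer nodes:

$$net'_j = \sum_{i=1}^{n} w_{ij} x_i$$

The transfer function of a discrete single-layer perceptron is usually the sign function:

$$o_j = \operatorname{sgn}(net'_j - T_j) = \operatorname{sgn}\left(\sum_{i=0}^{n} w_{ij} x_i\right) = \operatorname{sgn}(W_j^T X)$$

Here $T_j$ is the threshold of node $j$. The sum that starts at $i = 0$ absorbs the threshold into the weights (for example with $x_0 = -1$ and $w_{0j} = T_j$), in which case $X$ and $W_j$ are understood to include the $i = 0$ component.

3.1.2 Functions of the perceptron

- A perceptron with a single computing node is an M-P neuron model that uses the sign transfer function; it is also called a sign unit.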
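The forward computation of the single-layer perceptron (net input, threshold, sign function) can be sketched as follows. The names `sgn` and `perceptron_forward`, the convention that `sgn(0)` returns +1, and the example weights and thresholds are assumptions made for illustration; the slides do not specify them.

```python
def sgn(v):
    """Sign function; returning +1 at exactly 0 is an assumed convention."""
    return 1 if v >= 0 else -1


def perceptron_forward(x, W, T):
    """Outputs o_j = sgn(sum_i w_ij * x_i - T_j) for every output node j.

    x: list of n inputs
    W: n-by-m nested list, W[i][j] is the weight from input i to node j
    T: list of m thresholds
    """
    outputs = []
    for j in range(len(T)):
        net = sum(W[i][j] * x[i] for i in range(len(x)))  # net input of node j
        outputs.append(sgn(net - T[j]))
    return outputs


# Hypothetical example: 2 inputs, 2 output nodes
x = [1.0, 0.0]
W = [[1.0, -1.0],
     [1.0, 1.0]]
T = [0.5, 0.5]

# Node 0: net = 1.0, 1.0 - 0.5 > 0, so output +1
# Node 1: net = -1.0, -1.0 - 0.5 < 0, so output -1
print(perceptron_forward(x, W, T))  # [1, -1]
```

Each output node draws its own separating line (or hyperplane) in the input space; the sign of `net - T[j]` says on which side of that boundary the input falls.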

Artificial Neural Networks and Applications: Zhihuishu (智慧树知到) end-of-chapter answers, second half of 2023, Chang'an University

Chapter 1 Test

1. Synapse is the place where neurons connect in function. It is composed of presynaptic membrane, synaptic space and postsynaptic membrane. ( )
A: True  B: False
Answer: True

2. Biological neurons can be divided into sensory neurons, motor neurons and ( ) according to their functions.
A: multipolar neurons  B: interneurons  C: pseudo unipolar neural networks  D: bipolar neurons
Answer: interneurons

3. Neurons and glial cells are the two major parts of the nervous system. ( )
A: False  B: True
Answer: True

4. Neurons are highly polarized cells, which are mainly composed of two parts: the cell body and the synapse. ( )
A: False  B: True
Answer: True

5. The human brain is an important part of the nervous system, which contains more than 86 billion neurons. It is the central information processing organization of human beings. ( )
A: True  B: False
Answer: True

Chapter 2 Test

1. In 1989, Mead, the father of VLSI, published his monograph "( )", in which a genetic neural network model based on evolutionary system theory was proposed.
A: Learning Machines  B: Journal Neural Networks  C: Analog VLSI and Neural Systems  D: Perceptrons: An Introduction to Computational Geometry
Answer: Analog VLSI and Neural Systems

2. In 1989, Yann LeCun proposed the convolutional neural network and applied it to image processing, which should be the earliest application field of deep learning algorithms. ( )
A: True  B: False
Answer: True

3. In 1954, Eccles, a neurophysiologist at the University of Melbourne, summarized the principle of Dale, a British physiologist, that "each neuron secretes only one kind of transmitter". ( )
A: False  B: True
Answer: True

4. In 1972, Professor Kohonen of Finland proposed a self-organizing feature map (SOFM) neural network model. ( )
A: True  B: False
Answer: True

5. Prediction and evaluation is an activity of scientific calculation and evaluation of some characteristics and development status of things or events in the future according to the known information of objective objects. ( )
A: True  B: False
Answer: True

Chapter 3 Test

1. The function of the transfer function in neurons is to get a new mapping output of the summer according to the specified function relationship, and then complete the training of the artificial neural network. ( )
A: True  B: False
Answer: True

2. The determinant changes sign when two rows (or two columns) are exchanged. The value of the determinant is zero when two rows (or two columns) are the same. ( )
A: True  B: False
Answer: True

3. There are two kinds of phenomena in the objective world. The first is the phenomenon that will happen under certain conditions, which is called an inevitable phenomenon. The second is the phenomenon that may or may not happen under certain conditions, which is called a random phenomenon. ( )
A: False  B: True
Answer: True

4. The logarithmic S-type transfer function, namely the Sigmoid function, is also called the S-shaped growth curve in biology. ( )
A: False  B: True
Answer: True

5. The rectified linear unit (ReLU), similar to the slope function in mathematics, is the most commonly used transfer function of artificial neural networks. ( )
A: False  B: True
Answer: True

Chapter 4 Test

1. The perceptron learning algorithm is driven by misclassification, so the stochastic gradient descent method is used to optimize the loss function. ( )
A: misclassification  B: maximum  C: minimum  D: correct
Answer: misclassification

2. Perceptron is a single-layer neural network, or neuron, which is the smallest unit of a neural network. ( )
A: False  B: True
Answer: True

3. When the perceptron is learning, each sample will be input into the neuron as a stimulus. The input signal is the feature of each sample, and the expected output is the category of the sample. When the output is different from the category, we can adjust the synaptic weight and bias value until the output of each sample is the same as the category. ( )
A: True  B: False
Answer: True

4. If the symmetric hard limit function is selected for the transfer function, the output can be expressed as […]. If the inner product of the row vector and the input vector in the weight matrix is greater than or equal to -b, the output is 1, otherwise the output is -1. ( )
A: False  B: True
Answer: True

5. The basic idea of the perceptron learning algorithm is to input samples into the network step by step, and adjust the weight matrix of the network according to the difference between the output result and the ideal output, that is, to solve the optimization problem of the loss function L(w,b). ( )
A: False  B: True
Answer: True

Chapter 5 Test

1. The output of a BP neural network is ( ) of the neural network.
A: the output of the last layer  B: the input of the last layer  C: the output of the second layer  D: the input of the second layer
Answer: the output of the last layer

2. The BP neural network has become one of the most representative algorithms in the field of artificial intelligence. It has been widely used in signal processing, pattern recognition, machine control (expert system, data compression) and other fields. ( )
A: True  B: False
Answer: True

3. In 1974, Paul Werbos of the natural science foundation of the United States first proposed the use of the error back propagation algorithm to train artificial neural networks in his doctoral dissertation at Harvard University, and deeply analyzed the possibility of applying it to neural networks, effectively solving the XOR loop problem that a single sensor cannot handle. ( )
A: True  B: False
Answer: True

4. In the standard BP neural network algorithm and the momentum BP algorithm, the learning rate is a constant that remains constant throughout the training process, and the performance of the learning algorithm is very sensitive to the selection of the learning rate. ( )
Answer: True

5. The L-M algorithm is mainly proposed for super large scale neural networks, and it is very effective in practical application. ( )
A: True  B: False
Answer: False

Chapter 6 Test

1. The RBF neural network is a novel and effective feedforward neural network, which has the best local approximation and global optimal performance. ( )
A: True  B: False
Answer: True

2. At present, the RBF neural network has been successfully applied in nonlinear function approximation, time series analysis, data classification, pattern recognition, information processing, image processing, system modeling, control and fault diagnosis. ( )
A: True  B: False
Answer: True

3. The basic idea of the RBF neural network is to use the radial basis function as the "basis" of the hidden layer hidden unit to form the hidden layer space, and the hidden layer transforms the input vector. The input data transformation of low dimensional space is mapped into high-dimensional space, so that the problem of linear separability in low-dimensional space can be realized in high-dimensional space. ( )
Answer: True

4. For the learning algorithm of the RBF neural network, the key problem is to determine the center parameters of the output layer node reasonably. ( )
A: True  B: False
Answer: False

5. The method of selecting the center of the RBF neural network by self-organizing learning is to select the center of the RBF neural network by the k-means clustering method, which belongs to supervised learning methods. ( )
A: False  B: True
Answer: False

Chapter 7 Test

1. In terms of algorithm, the ADALINE neural network adopts the W-H learning rule, also known as the least mean square (LMS) algorithm. It is developed from the perceptron algorithm, and its convergence speed and accuracy have been greatly improved. ( )
A: False  B: True
Answer: True

2. The ADALINE neural network has simple structure and multi-layer structure. It is flexible in practical application and widely used in signal processing, system identification, pattern recognition and intelligent control. ( )
A: True  B: False
Answer: True

3. When there are multiple ADALINE in the network, the adaptive linear neural network is also called Madaline, which means many Adaline neural networks. ( )
A: True  B: False
Answer: True

4. The algorithm used in the single-layer ADALINE network is the LMS algorithm, which is similar to the algorithm of the perceptron, and also belongs to supervised learning algorithms. ( )
A: True  B: False
Answer: True

5. In practical application, the inverse of the correlation matrix and the correlation coefficient are not easy to obtain, so the approximate steepest descent method is needed in the algorithm design. The core idea is that the actual mean square error of the network is replaced by the mean square error of the k-th iteration. ( )
A: False  B: True
Answer: True

Chapter 8 Test

1. The Hopfield neural network is a kind of neural network which combines storage system and binary system. It not only provides a model to simulate human memory, but also guarantees the convergence to ( ).
A: local minimum  B: local maximum  C: minimum  D: maximum
Answer: local minimum

2. At present, researchers have successfully applied the Hopfield neural network to solve the traveling salesman problem (TSP), which is the most representative of optimization combinatorial problems. ( )
A: False  B: True
Answer: True

3. In 1982, American scientist John Joseph Hopfield put forward a kind of feedback neural network, the "Hopfield neural network", in his paper Neural Networks and Physical Systems with Emergent Collective Computational Abilities. ( )
A: True  B: False
Answer: True

4. Under the excitation of input x, DHNN enters a dynamic change process, until the state of each neuron is no longer changed and it reaches a stable state. This process is equivalent to the process of network learning and memory, and the final output of the network is the value of each neuron in the stable state. ( )
A: False  B: True
Answer: True

5. The order in which neurons adjust their states is not unique. It can be considered that a certain order can be specified or selected randomly. The process of neuron state adjustment includes three situations: from 0 to 1, from 1 to 0, and unchanged. ( )
A: False  B: True
Answer: True

Chapter 9 Test

1. Compared with GPU, CPU has higher processing speed, and has significant advantages in processing repetitive tasks. ( )
A: True  B: False
Answer: False

2. At present, DCNN has become one of the core algorithms in the field of image recognition, but it is unstable when there is a small amount of learning data. ( )
A: True  B: False
Answer: False

3. In the field of target detection and classification, the task of the last layer of the neural network is to classify. ( )
A: True  B: False
Answer: True

4. In AlexNet, there are 650000 neurons with more than 600000 parameters distributed in five convolution layers and three fully connected layers and Softmax layers with 1000 categories. ( )
A: True  B: False
Answer: False

5. VGGNet is composed of two parts: the convolution layer and the full connection layer, which can be regarded as the deepened version of AlexNet. ( )
A: False  B: True
Answer: True

Chapter 10 Test

1. The essence of the optimization process of D and G is to find the ( ).
A: maximum  B: minimax  C: local maxima  D: minimum
Answer: minimax

2. In the artificial neural network, the quality of modeling will directly affect the performance of the generative model, but a small amount of prior knowledge is needed for the actual case modeling. ( )
A: True  B: False
Answer: False

3. A GAN mainly includes a generator G and a discriminator D. ( )
A: True  B: False
Answer: True

4. Because the generative adversarial network does not need to distinguish the lower bound and approximate inference, it avoids the partition function calculation problem caused by the traditional repeated application of the Markov chain learning mechanism, and improves the network efficiency. ( )
A: True  B: False
Answer: True

5. From the perspective of artificial intelligence, GAN uses a neural network to guide a neural network, and the idea is very strange. ( )
A: True  B: False
Answer: True

Chapter 11 Test

1. The characteristic of the Elman neural network is that the output of the hidden layer is delayed and stored by the feedback layer, and the feedback is connected to the input of the hidden layer, which has the function of information storage. ( )
A: True  B: False
Answer: True

2. In the Elman network, the transfer function of the feedback layer is a nonlinear function, and the transfer function of the output layer is a linear function. ( )
A: True  B: False
Answer: True

3. The feedback layer is used to memorize the output value of the previous time of the hidden layer unit and return it to the input. Therefore, the Elman neural network has a dynamic memory function. ( )
A: True  B: False
Answer: True

4. The neurons in the hidden layer of the Elman network adopt the tangent S-type transfer function, while the output layer adopts the linear transfer function. If there are enough neurons in the feedback layer, the combination of these transfer functions can make the Elman neural network approach any function with arbitrary precision in finite time. ( )
A: True  B: False
Answer: True

5. The Elman neural network is a kind of dynamic recurrent network, which can be divided into full feedback and partial feedback. In the partial recurrent network, the feedforward connection weight can be modified, and the feedback connection is composed of a group of feedback units, and the connection weight cannot be modified. ( )
A: False  B: True
Answer: True

Chapter 12 Test

1. The loss function of the AdaBoost algorithm is ( ).
A: exponential function  B: nonlinear function  C: linear function  D: logarithmic function
Answer: exponential function

2. Boosting algorithm is the general name of a class of algorithms. Their common ground is to construct a strong classifier by using a group of weak classifiers. Weak classifier mainly refers to the classifier whose prediction accuracy is not high and far below the ideal classification effect. Strong classifier mainly refers to the classifier with high prediction accuracy. ( )
A: False  B: True
Answer: True

3. Among the many improved boosting algorithms, the most successful one is the AdaBoost (adaptive boosting) algorithm proposed by Yoav Freund of University of California San Diego and Robert Schapire of Princeton University in 1996. ( )
A: False  B: True
Answer: True

4. The most basic property of AdaBoost is that it reduces the training error continuously in the learning process, that is, the classification error rate on the training data set, until each weak classifier is combined into the final ideal classifier. ( )
A: False  B: True
Answer: True

5. The main purpose of adding a regularization term into the formula for calculating the strong classifier is to prevent the overfitting of the AdaBoost algorithm, which is usually called step size in the algorithm. ( )
A: False  B: True
Answer: True

Chapter 13 Test

1. The core layer of the SOFM neural network is ( ).
A: input layer  B: hidden layer  C: output layer  D: competition layer
Answer: competition layer

2. In order to divide the input patterns into several classes, the distance between input pattern vectors should be measured according to the similarity. ( ) are usually used.
A: Euclidean distance method  B: Cosine method  C: Sine method  D: Euclidean distance method and cosine method
Answer: Euclidean distance method and cosine method

3. SOFM neural networks are different from other artificial neural networks in that they adopt competitive learning rather than a backward propagation error correction learning method similar to gradient descent, and in a sense, they use neighborhood functions to preserve topological properties of the input space. ( )
A: True  B: False
Answer: True

4. For the SOFM neural network, the competitive transfer function (CTF) response is 0 for the winning neurons, and 1 for other neurons. ( )
A: False  B: True
Answer: False

5. When the input pattern to the network does not belong to any pattern in the network training samples, the SOFM neural network can only classify it into the closest mode. ( )
A: True  B: False
Answer: True

Chapter 14 Test

1. The neural network toolbox contains ( ) module libraries.
A: three  B: six  C: five  D: four
Answer: five

2. The "netprod" in the network input module can be used for ( ).
A: dot multiplication  B: dot division  C: addition or subtraction  D: dot multiplication or dot division
Answer: dot multiplication or dot division

3. The "dotrod" in the weight setting module is a normal dot product weight function. ( )
A: False  B: True
Answer: False

4. The mathematical model of a single neuron is y=f(wx+b). ( )
A: False  B: True
Answer: True

5. The neuron model can be divided into three parts: input module, transfer function and output module. ( )
A: True  B: False
Answer: True

Chapter 15 Test

1. In large-scale system software design, we need to consider the logical structure and physical structure of the software architecture. ( )
A: True  B: False
Answer: True

2. The menu property bar has "label" and "tag". The label is equivalent to the tag value of the menu item, and the tag is the name of the menu display. ( )
A: True  B: False
Answer: False

3. It is necessary to determine the structure and parameters of the neural network, including the number of hidden layers, the number of neurons in the hidden layer and the training function. ( )
A: True  B: False
Answer: True

4. The description of the property "tooltipstring" is the prompt that appears when the mouse is over the object. ( )
A: True  B: False
Answer: True

5. The description of the property "string" is: the text displayed on the object. ( )
A: False  B: True
Answer: True

Chapter 16 Test

1. The description of the parameter "validator" of the wx.TextCtrl class is: the ( ).
A: size of control  B: style of control  C: validator of control  D: position of control
Answer: validator of control

2. The description of the parameter "defaultDir" of class wx.FileDialog is: ( ).
A: open the file  B: default file name  C: default path  D: save the file
Answer: default path

3. In the design of artificial neural network software based on wxPython, creating a GUI means building a framework in which various controls can be added to complete the design of software functions. ( )
A: True  B: False
Answer: True

4. When the window event occurs, the main event loop will respond and assign the appropriate event handler to the window event. ( )
A: True  B: False
Answer: True

5. From the user's point of view, the wxPython program is idle for a large part of the time, but when the user or the internal action of the system causes an event, the event will drive the wxPython program to produce the corresponding action. ( )
A: True  B: False
Answer: True
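Question 3 of the Chapter 4 test describes perceptron learning: present each sample, compare the output with the sample's category, and adjust the weights and bias whenever they differ. A minimal sketch of that procedure follows. The function names, the learning rate, the epoch cap, and the AND-gate data with labels in {-1, +1} are assumptions chosen for illustration, not part of the quiz.

```python
def predict(w, b, x):
    """Symmetric hard limit: +1 if w.x + b >= 0, otherwise -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Misclassification-driven updates until every sample is classified."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(samples, labels):
            if predict(w, b, x) != t:
                # Move the decision boundary toward the misclassified sample
                w = [wi + lr * t * xi for wi, xi in zip(w, x)]
                b += lr * t
                errors += 1
        if errors == 0:  # a full pass with no mistakes: training is done
            break
    return w, b


# Hypothetical data: logical AND with labels in {-1, +1}
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [-1, -1, -1, 1]

w, b = train_perceptron(samples, labels)
print([predict(w, b, x) for x in samples])  # [-1, -1, -1, 1]
```

Because AND is linearly separable, the updates stop after a finite number of passes; on data that is not linearly separable (XOR, for example) the loop would only end at `max_epochs`.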

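An Essential AI Fundamentals Tutorial for Computer Beginners

Chapter 1: Overview of Artificial Intelligence

Artificial intelligence (AI) is a newer field of information technology that researches and develops the theories, methods, techniques, and application systems used to simulate, extend, and expand human intelligence.

This chapter introduces the definition of AI, its history, and its application areas, to give readers an overall picture of the field.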

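Chapter 2: Machine Learning

Machine learning is an important branch of AI: a machine learns from data and improves its performance, with the goal of predicting accurately on unseen data.

This chapter introduces the basic concepts, categories, algorithms, and application examples of machine learning, including supervised learning, unsupervised learning, and reinforcement learning, to help readers understand its basic principles and how it is applied.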

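Chapter 3: Neural Networks

A neural network is a mathematical model that imitates the structure and function of the human nervous system, and it is one of the core technologies for realizing AI.

This chapter introduces the basic principles, structure, and training methods of neural networks, including feedforward, convolutional, and recurrent neural networks, along with case studies of deep learning in image recognition, natural language processing, and other fields.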

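Chapter 4: Natural Language Processing

Natural language processing (NLP) is a field at the intersection of AI, linguistics, and computer science that studies how to make machines understand, process, and generate human language.

This chapter introduces the basic concepts, techniques, and applications of NLP, including lexical analysis, syntactic analysis, information extraction, and machine translation, along with recent research progress in areas such as intelligent customer service and intelligent translation.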

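Chapter 5: Computer Vision

Computer vision studies how to give computers capabilities similar to the human visual system, so that they can understand and interpret images and video.

This chapter introduces the basic concepts, algorithms, and applications of computer vision, including image feature extraction, object detection and recognition, and image segmentation and understanding, along with concrete case studies in areas such as autonomous driving and intelligent surveillance.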

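Chapter 6: Recommender Systems

A recommender system is a technical system that analyzes a user's behavior history and interests in order to recommend relevant information, products, or services.

This chapter introduces the basic principles, algorithms, and applications of recommender systems, including content-based recommendation, collaborative filtering, and deep-learning-based recommendation, along with real-world case studies in e-commerce, social media, and other areas.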