普林斯顿大学博弈论讲义10

- 格式:pdf

- 大小:118.79 KB

- 文档页数:5

博弈论概要1.研究背景及意义在现实生活中,人们的利益冲突与一致具有普遍性,因此,几乎所有的决策问题都可以认为是博弈。

博弈论在政治学、经济学等许多领域都有着广泛的应用。

在经济学中博弈论作为一种重要的分析方法已渗透到几乎所有的领域,每一领域的最新进展都应用了博弈论,博弈论已经成为主流经济学的一部分,对经济学理论与方法正产生越来越重要的影响。

虽然博弈论是数学的一个分支,但其应用范围十分广泛,在经济学、管理学、社会学、政治学、法律学、军事学等领域都有许多成功运用博弈论的案例。

早在1994年,提出博弈均衡理论的纳什博士与他的伙伴哈尔萨尼教授、泽尔滕教授就共同分享了当年的诺贝尔经济学奖和93万美元的奖金。

2005年,瑞典皇家科学院再次把诺贝尔经济学奖颁给了有着以色列、美国双重国籍的罗伯特·奥曼和美国人托马斯·谢林,以表彰他们在博弈论领域作出的贡献。

纳什的贡献是在1944年与奥斯卡·摩根斯特恩合著了《博弈论与经济行为》一书,标志着现代系统博弈理论的的初步形成。

而谢林和奥曼两位博弈论先驱在政治理论、社会学甚至生物学等方面成功运用到了博弈学理论。

奥曼用数学分析为博弈论列出了精确的公式,谢林则是想通过实践来展示博弈论在社会各个领域的实际意义。

他们两位利用博弈论对商业谈判、种族隔离、武器控制等领域进行了实际分析,谢林教授认为博弈论运用的重要领域应该包括核威慑和武器控制,同时还可以研究种族关系、有组织犯罪、雇员关系乃至自我管理等方面。

2.博弈论相关概念与发展史综述2.1博弈论的概念2.1.1博弈论的定义博弈论(Game Theory,又称对策论)研究决策主体的行为在发生直接的相互作用时,人们如何进行决策以及这种决策的均衡问题。

博弈论是研究理性的决策者之间冲突与合作的理论。

在博弈论分析中,一定场合中的每个对弈者在决定采取何种行动时都策略地、有目的地行事,他考虑到他的决策行为对其他人的可能影响,以及其他人的行为对他的可能影响,通过选择最佳行动计划,来寻求收益或效用的最大化。

Principal-Agent Models&Incentive Compatibility/Participation Constraints Suppose Pat manages a large computer software company and Allen is a talented program designer.Pat would like to hire Allen to develop a new software package.If Allen works for Pat,Allen must choose whether or not to expend high effort or low effort on the job.At the end of the work cycle,Pat will learn whether the project is successful or not.A successful project yields revenue of6for thefirm,whereas the revenue is2if the project is unsuccessful.Success depends on Allen’s high effort as well as on a random factor.Specif-ically,if Allen expends high effort,then success will be achieved with probability 1/2;if Allen expends low effort,then the project is unsuccessful for sure.As-sume that,to epxend high effort,Allen must endure a personal cost of1.The parties can write a contract that specifies compensationfor Allen conditional on whether the project is successful(but it cannot be conditioned directly on Allen’s effort).Assume that Allen values money acording to the utility func-tion v A(x)=x a.Assume0<a<1.Finally,suppose Pat’s utility function is v P(x)=x.Imagine that the games interact as depicted above.At the beginning of the game,Pat offers Allen a wage and bonus package.The wage w is to be paid regardless of the project outcome,whereas the bonus b would be paid only if the project is successful.Then Allen decides whether or not to accept the contract. If he declines(N)the game ends,with Pat getting0and Allen obtaining utility of1(corresponding to his outside opportunities).If Allen accepts the contract(Y),then he decides whether to exert high(H) or low(L)effort.Low effort leads to an unsuccessful project,whereby Pat gets revenue of2minus the wage w and Allen gets his utility of the wage,w a. High effort leads to a chance node,where nature picks whether the project is successful(with probability1/2)or not(probability1/2).An unsuccessful project implies the same payoffs as with low effort,except that,in this case, Allen also pays his effort cost of1.A successful project raises Pat’s revenue to 6and trigers the bonus b paid to Allen in addition to the wage.Calculating the expected payoffs from the chance node,we can rewrite the game as shown above.To solve the game and learn about the interaction of risk and incentives, we use backward induction.Start by observing that Pat would certainly like Allen to exert high effort.In fact,it is inefficient for Allen to expend low effort, because Pat can compensate Allen for exerting high effort by increasing his pay by1(offsetting Allen’s effort cost).By doing so,Pat’s expected revenue increases by2.Another way of looking at this is that if Allen’s effort were verifiable,the parties would write a contract that induces high effort.Given that high effort is desired,we must ask whether there is a contract that induces it.Can Patfind a wage and bonus package that motivates Allen to exert high effort and Gives Pat a large payoff?What can be accomplished w/out a bonus?b=0implies Allen has no incentive to exert high effort;he gets w a when he chooses L and less(w−1)a,when he chooses H.The best that Pat can do without a bonus is to offer w=1,which Allen is willing to accept(he1will accept no less,given his outside option).Thus,the best no-bonus contract (w=1and b=0)yields the payoffvector(1,1).Next,consider a bonus contract,designed to induce high effort.In order for him to be motivated to exert high effort,Allen’s expected payofffrom H must be at least as great as is his payofffrom L:1 2(w+b−1)+12(w−1)a≥w a.In principal-agent models,this kind of inequality is usally called the effort constraint or incentive compatibility constraint.In addition to the effort con-straint,the contract must give Allen an expected payoffat least as great as the value of his outside opportunity:1 2(w+b−1)+12(w−1)a≥1Theorists call this participation constraint.Assuming she wants to motivate high effort,Pat will offer Allen the bonus contract satisfying the two inequalities above.2。

普林斯顿大学的博弈论习题如果给你2个师的兵力,有你当“司令”,任务是攻克“敌方”占据的城市。

通往城市的道路只有甲乙两条,而敌方的守备力量是3个师。

规定:双方的兵力只能证实调动,当你发起进攻的时候,你的兵力超过敌方,你就获胜;你的兵力比敌方的守备兵力少或相当时,你就失败。

那么你将如何制定攻城方案?运用博弈论来加以分析,就可以模拟这场战斗的方案设置,每一方获胜的概率都是50%。

级谁赢谁输的可能性是一半对一半。

假设敌方有三个师,不妨在甲乙两条道路上。

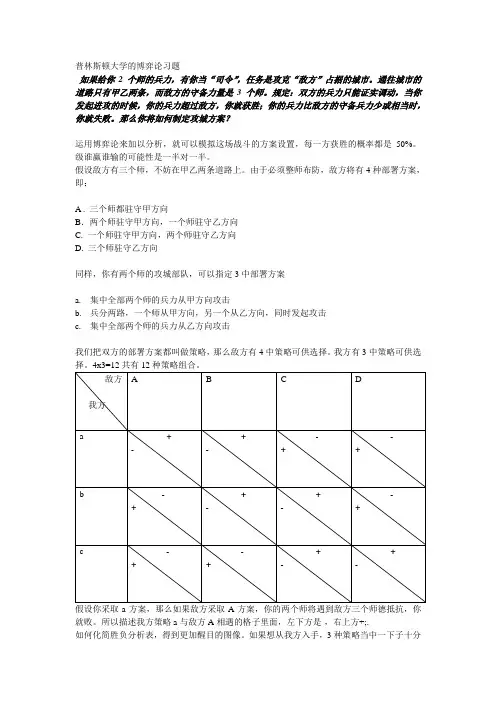

由于必须整师布防,敌方将有4种部署方案,即:A . 三个师都驻守甲方向B.两个师驻守甲方向,一个师驻守乙方向C. 一个师驻守甲方向,两个师驻守乙方向D. 三个师驻守乙方向同样,你有两个师的攻城部队,可以指定3中部署方案a.集中全部两个师的兵力从甲方向攻击b.兵分两路,一个师从甲方向,另一个从乙方向,同时发起攻击c.集中全部两个师的兵力从乙方向攻击我们把双方的部署方案都叫做策略,那么敌方有4中策略可供选择。

我方有3中策略可供选择。

4x3=12共有12种策略组合。

假设你采取a方案,那么如果敌方采取A方案,你的两个师将遇到敌方三个师德抵抗,你就败。

所以描述我方策略a与敌方A相遇的格子里面,左下方是-,右上方+;.如何化简胜负分析表,得到更加醒目的图像。

如果想从我方入手,3种策略当中一下子十分不错优劣的,a与b,b与c,a与c之间,无法区分。

于是我们先从敌方入手,尝试站在敌军的立场,比较4种不同策略。

先比较策略A&B。

如果我方采取策略a,那么敌方采取策略A&B都会赢。

如果我方采取策略b,敌方采取策略A会输,采取策略B敌方会赢。

如果我方采取策略c,敌方采取策略A或策略B都会输。

可见站在敌方的立场上,策略B比策略A好。

比较敌方的策略A&B,我们知道B是敌方的优势策略,A是敌方的劣势策略。

同样,比较敌方的策略C和策略D,我们知道C是敌方的优势策略,D是敌方的劣势策略。

博弈论博弈论(Game Theory),亦名“对策论”、“赛局理论”,属应用数学的一个分支,博弈论已经成为经济学的标准分析工具之一。

目前在生物学、经济学、国际关系、计算机科学、政治学、军事战略和其他很多学科都有广泛的应用。

博弈论主要研究公式化了的激励结构间的相互作用。

是研究具有斗争或竞争性质现象的数学理论和方法。

也是运筹学的一个重要学科。

博弈论考虑游戏中的个体的预测行为和实际行为,并研究它们的优化策略。

生物学家使用博弈理论来理解和预测进化论的某些结果。

参见:行为生态学(behavioral ecology)。

约翰·冯·诺依曼博弈论是二人在平等的对局中各自利用对方的策略变换自己的对抗策略,达到取胜的目的。

博弈论思想古已有之,中国古代的《孙子兵法》就不仅是一部军事著作,而且算是最早的一部博弈论著作。

博弈论最初主要研究象棋、桥牌、赌博中的胜负问题,人们对博弈局势的把握只停留在经验上,没有向理论化发展。

博弈论考虑游戏中的个体的预测行为和实际行为,并研究它们的优化策略。

近代对于博弈论的研究,开始于策墨洛(Zermelo),波雷尔(Borel)及冯·诺伊曼(von Neumann)。

1928年,冯·诺依曼证明了博弈论的基本原理,从而宣告了博弈论的正式诞生。

1944年,冯·诺依曼和摩根斯坦共著的划时代巨著《博弈论与经济行为》将二人博弈推广到n人博弈结构并将博弈论系统的应用于经济领域,从而奠定了这一学科的基础和理论体系。

1950~1951年,约翰·福布斯·纳什(John Forbes Nash Jr)利用不动点定理证明了均衡点的存在,为博弈论的一般化奠定了坚实的策墨洛(Zermelo)基础。

纳什的开创性论文《n人博弈的均衡点》(1950),《非合作博弈》(1951)等等,给出了纳什均衡的概念和均衡存在定理。

此外,塞尔顿、哈桑尼的研究也对博弈论发展起到推动作用。

osborne博弈论Osborne博弈论,是指由美国普林斯顿大学的经济学家David Osborne提出的一种博弈理论。

这个理论将博弈理论与数学思维相结合,被广泛应用于政经领域和国际贸易中,被认为是一种新兴的博弈理论。

1. 博弈的基本概念和分类博弈基本概念:参与者、策略、收益等。

博弈分类:合作和非合作博弈,完全信息和不完全信息博弈,非零和博弈、零和博弈、有限博弈、无限博弈等。

2. 囚徒困境模型囚徒困境模型是指一个犯罪案件的两个嫌犯分别面临决策,要么供认罪行获得从轻处理,要么保持沉默但承受较长的牢狱之灾。

如果双方都选择供认,则两个人都会受到重刑的惩罚。

如果他们都选择保持沉默,则只会受到轻判。

这个案例说明传统博弈理论中的纳什均衡并不是最优选择。

3. 博弈理论的意义博弈理论在经济学、市场竞争和商业竞争中得到了广泛应用。

它可以通过分析各种策略的结果,在竞争中寻求最优解。

同时,它可以通过确定各方的决策,对实际交易进行预测。

他还可以帮助政府和高科技公司分析合法性以及找到最佳的竞争策略。

4. Osborne博弈理论Osborne博弈理论是基于传统博弈理论发展而来的。

它通过概率性和动态性的思考,使得博弈理论更加现实和适用于复杂的市场环境中。

Osborne博弈理论最大的特点是可以对对手的策略进行预测。

在市场竞争中,它可以帮助企业决策和制定营销策略。

5. 存在性和唯一性问题Osborne博弈理论存在唯一性问题,即是否存在且仅存在一个纳什均衡点。

这个问题目前仍没有被完全解决,因为现实的博弈环境非常复杂。

Osborne博弈理论也面临着精度问题,因为实践过程中,策略和收益的估计都具有不确定性。

6. 发展趋势Osborne博弈理论目前仍在发展中,针对唯一性问题和精度问题,学者们正在寻求更加精确和适用的博弈理论。

目前,Osborne博弈理论正逐渐应用于政治、外交和著作领域,这一趋势将在未来得到进一步发展。

综上所述,Osborne博弈理论在实际应用中发挥重要作用,尤其是对于复杂的市场竞争环境。

Eco514—Game TheoryLecture10:Extensive Games with(Almost)PerfectInformationMarciano SiniscalchiOctober19,1999IntroductionBeginning with this lecture,we focus our attention on dynamic games.The majority of games of economic interest feature some dynamic component,and most often payoffuncertainty as well.The analysis of extensive games is challenging in several ways.At the most basic level, describing the possible sequences of events(choices)which define a particular game form is not problematic per se;yet,different formal definitions have been proposed,each with its pros and cons.Representing the players’information as the play unfolds is nontrivial:to some extent, research on this topic may still be said to be in progress.The focus of this course will be on solution concepts;in this area,subtle and unexpected difficulties arise,even in simple games.The very representation of players’beliefs as the play unfolds is problematic,at least in games with three or more players.There has been afierce debate on the“right”notion of rationality for extensive games,but no consensus seems to have emerged among theorists.We shall investigate these issues in due course.Today we begin by analyzing a particu-larly simple class of games,characterized by a natural multistage structure.I should point out that,perhaps partly due to its simplicity,this class encompasses the vast majority of extensive games of economic interest,especially if one allows for payoffuncertainty.We shall return to this point in the next lecture.Games with Perfect InformationFollowing OR,we begin with the simplest possible extensive-form game.The basic idea is as follows:play proceeds in stages,and at each stage one(and only one)player chooses an1action.Sequences of actions are called histories;some histories are terminal,i.e.no furtheractions are taken,and players receive their payoffs.Moreover,at each stage every playergets to observe all previous actions.Definition1An extensive-form game with perfect information is a tupleΓ=(N,A,H,P,Z,U)where:N is a set of players;A is a set of actions;H is a collection offinite and countable sequences of elements from A,such that:(i)∅∈H;(ii)(a1,...,a k)∈H implies(a1,...,a )∈H for all <k;(iii)If h=(a1,...,a k,...)and(a1,...,a k)∈H for all k≥1,then h∈H.Z is the set of terminal histories:that is,(a1,...,a k)∈Z iff(a1,...,a k)∈H and(a1,...,a k,a)∈H for all a∈A.Also let X=H\Z.All infinite histories are terminal.P:X→N is the player function,associating with each non-terminal history h∈X theplayer P(h)on the move after history h.U=(U i)i∈N:Z→R is the payofffunction,associating a vector of payoffs to everyterminal history.I differ from OR in two respects:first,Ifind it useful to specify the set of actions inthe definition of an extensive-form game.Second,at the expense of some(but not much!) generality,I represent preferences among terminal nodes by means of a vN-M utility function.Interpreting Definition1A few comments on formal aspects are in order.First,actions are best thought of as movelabels;what really defines the game is the set H of sequences.If one wishes,one can think ofA as a product set(i.e.every player gets her own set of move labels),but this is inessential.Histories encode all possible partial and complete plays of the gameΓ.Indeed,it isprecisely by spelling out what the possible plays are that we fully describe the game under consideration!Thus,consider the following game:N={1,2};A={a1,d1,a2,d2,A,D};H={∅,(d1),(a1),(a1,D),(a1, thus,Z={(d1),(a1,D),(a1,A,d2),(a1,A,a2)}and X={∅,(a1),(a1,A),};finally,P(∅)=P((a1,A))=1,P(a1)=2,and U((d1))=(2,2),U((a1,D))=(1,1),U((a1,A,d1))=(0,0),U((a1,A,a2))=(3,3).ThenΓ=(N,A,H,Z,P,U)is the game in Figure1.The empty history is always an element of H,and denotes the initial point of the game.Part(ii)in the definition of H says that every sub-history of a history h is itself a history inits own right.Part(iii)is a“limit”definition of infinite histories.Note that infinite historiesare logically required to be terminal.A key assumption is that,whenever a history h occurs,all players(in particular,PlayerP(h))get to observe it.23,3r 12,2d 1a 1r 2D A 1,1r 1d 2a 20,0Figure 1:A perfect-information gameStrategies and normal form(s)Definition 1is arguably a “natural”way of describing a dynamic game—and one that is at least implicit in most applications of the theory.According to our formulations,actions are the primitive objects of choice.However,the notion of a strategy ,i.e.a history-contingent plan,is also relevant:Definition 2Fix an extensive-form game with perfect information Γ.For every history h ∈X ,let A (h )={a ∈A :(h,a )∈H }be the set of actions available at h .Then,for every player i ∈N ,a strategy is a function s i :P −1(i )→A such that,for every h such that P (h )=i ,s i (h )∈A (h ).Denote by S i and S the set of strategies of Player i and the set of all strategy profiles.Armed with this definition (to which we shall need to return momentarily)we are ready to extend the notion of Nash equilibrium to extensive games.Definition 3Fix an extensive-form game Γwith perfect information.The outcome function O is a map O :S →Z defined by∀h =(a 1,...,a k )∈Z, <k :a +1=s P ((a 1,...,a ))((a 1,...,a ))The normal form of the game Γis G Γ=(N,(S i ,u i )i ∈N ),where u i (s )=U i (O (s )).The outcome function simply traces out the history generated by a strategy profile.The normal-form payofffunction u i is then derived from U i and O in the natural way.Finally:Definition 4Fix an extensive-form game Γwith perfect information.A pure-strategy Nash equilibrium of Γis a profile of strategies s ∈S which constitutes a Nash equilibrium of its normal form G Γ;a mixed-strategy Nash equilibrium of Γis a Nash equilibrium of the mixed extension of G Γ.3Thus,in the game of Figure1,both(a1a2,A)and(d1d2,D)are Nash equilibria.Observe that a strategy indicates choices even at histories which previous choices dictated by the same strategy prevent from obtaining.In the game of Figure1,for instance,d1a1is a strategy of Player1,although the history(a1,A)cannot obtain if Player1chooses d1at∅.It stands to reason that d2in the strategy d1d2cannot really be a description of Player 1’s action—she will never really play d2!We shall return to this point in the next lecture.For the time being,let us provisionally say that d2in the context of the equilibrium(d1d2,D)represents only Player2’s beliefs about Player1’s action in the counterfactual event that she chooses a1at∅,and Player2follows it with A.The key observation here is that this belief is crucial in sustaining(d1d2,D)as a Nash equilibrium.Games with observable actions and chance movesThe beauty of the OR notation becomes manifest once one adds the possibility that more than one player might choose an action simultaneously at a given history.The resulting game is no longer one of perfect information,because there is some degree of strategic uncertainty. Yet,we maintain the assumption that histories are observable:that is,every player on the move at a history h observes all previous actions and action profiles which comprise h.The OR definition is a bit vague,so let me provide a rigorous,inductive one.I also add the possibility of chance moves,i.e.exogenous uncertainty.Definition5An extensive-form game with observable actions and chance moves is a tuple Γ=(N,A,H,P,Z,U,f c)where:N is a set of players;Chance,denoted by c,is regarded as an additional player,so c∈N.A is a set of actionsH is a set of sequences whose elements are points in i∈J A for some A⊂N∪{c};Z and X are as in Definition1;P is the player correspondence P:X⇒N∪{c}U:Z→R N as in Definition1;H satisfies the conditions in Definition1.Moreover,for every k≥1,(a1,...,a k)∈H implies that(a1,...,a k−1)∈H and a k∈ i∈P((a1,...,a k−1))A.For every i∈N∪{c},let A i(h)={a i∈A:∃a−i∈ j∈P(h)\{i}A s.t.(h,(a i,a−i))∈H}. Then f c:{h:c∈P(h)}→∆(A)indicates the probability of each chance move,and f c(h)(A i(h))=1for all h such that c∈P(h).The definition is apparently complicated,but the underlying construction is rather nat-ural:at each stage,we allow more than one player(including Chance)to pick an action;the4chosen profile then becomes publicly observable.We quite simply replace individual actions with action profiles in the definition of a history,and adapt the notation accordingly. Remark0.1Let A(h)={a∈ i∈P(h)A:(h,a)∈H}.Then A(h)= i∈P(h)A i(h).The definition of a strategy needs minimal modifications:Definition6Fix an extensive-form gameΓwith observable actions and chance moves. Then,for every player i∈N∪{c},a strategy is a function s i:{h:i∈P(h)}→A such that,for every h such that i∈P(h),s i(h)∈A i(h).Denote by S i and S the set of strategies of Player i and the set of all strategy profiles.In the absence of chance moves,Definition4applies verbatim to the new setting.You can think about how to generalize it with chance moves(we do not really wish to treat Chance as an additional player in a normal-form game,so we need to redefine the payofffunctions in the natural way).Finally,the definition of Nash equilibrium requires no change.For those of you who are used to the traditional,tree-based definition of an extensive game,note that you need to use information sets in order to describe games without perfect information,but with observable actions.That is,you need to use the full expressive power of the tree-based notation in order to describe what is a slight and rather natural extension of perfect-information games.1Most games of economic interest are games with observable actions,albeit possibly with payoffuncertainty;hence,the OR notation is sufficient to deal with most applied problems (payoffuncertainty is easily added to the basic framework,as we shall see).1On the other hand,the OR notation is equivalent to the standard one for games with perfect information: just call histories“nodes”,actions“arcs”,terminal histories“leaves”and∅“root”.5。