Clustering Large Categorical Data Set via Genetic Algorithms Algoritmi genetici per il ragg

- 格式:pdf

- 大小:99.11 KB

- 文档页数:4

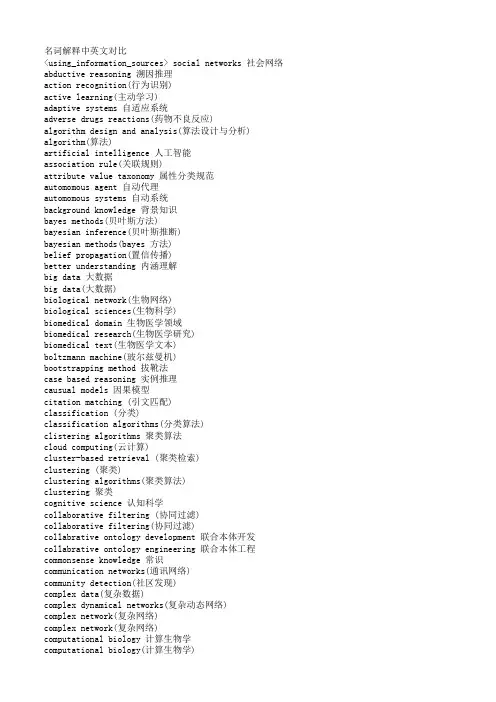

名词解释中英文对比<using_information_sources> social networks 社会网络abductive reasoning 溯因推理action recognition(行为识别)active learning(主动学习)adaptive systems 自适应系统adverse drugs reactions(药物不良反应)algorithm design and analysis(算法设计与分析) algorithm(算法)artificial intelligence 人工智能association rule(关联规则)attribute value taxonomy 属性分类规范automomous agent 自动代理automomous systems 自动系统background knowledge 背景知识bayes methods(贝叶斯方法)bayesian inference(贝叶斯推断)bayesian methods(bayes 方法)belief propagation(置信传播)better understanding 内涵理解big data 大数据big data(大数据)biological network(生物网络)biological sciences(生物科学)biomedical domain 生物医学领域biomedical research(生物医学研究)biomedical text(生物医学文本)boltzmann machine(玻尔兹曼机)bootstrapping method 拔靴法case based reasoning 实例推理causual models 因果模型citation matching (引文匹配)classification (分类)classification algorithms(分类算法)clistering algorithms 聚类算法cloud computing(云计算)cluster-based retrieval (聚类检索)clustering (聚类)clustering algorithms(聚类算法)clustering 聚类cognitive science 认知科学collaborative filtering (协同过滤)collaborative filtering(协同过滤)collabrative ontology development 联合本体开发collabrative ontology engineering 联合本体工程commonsense knowledge 常识communication networks(通讯网络)community detection(社区发现)complex data(复杂数据)complex dynamical networks(复杂动态网络)complex network(复杂网络)complex network(复杂网络)computational biology 计算生物学computational biology(计算生物学)computational complexity(计算复杂性) computational intelligence 智能计算computational modeling(计算模型)computer animation(计算机动画)computer networks(计算机网络)computer science 计算机科学concept clustering 概念聚类concept formation 概念形成concept learning 概念学习concept map 概念图concept model 概念模型concept modelling 概念模型conceptual model 概念模型conditional random field(条件随机场模型) conjunctive quries 合取查询constrained least squares (约束最小二乘) convex programming(凸规划)convolutional neural networks(卷积神经网络) customer relationship management(客户关系管理) data analysis(数据分析)data analysis(数据分析)data center(数据中心)data clustering (数据聚类)data compression(数据压缩)data envelopment analysis (数据包络分析)data fusion 数据融合data generation(数据生成)data handling(数据处理)data hierarchy (数据层次)data integration(数据整合)data integrity 数据完整性data intensive computing(数据密集型计算)data management 数据管理data management(数据管理)data management(数据管理)data miningdata mining 数据挖掘data model 数据模型data models(数据模型)data partitioning 数据划分data point(数据点)data privacy(数据隐私)data security(数据安全)data stream(数据流)data streams(数据流)data structure( 数据结构)data structure(数据结构)data visualisation(数据可视化)data visualization 数据可视化data visualization(数据可视化)data warehouse(数据仓库)data warehouses(数据仓库)data warehousing(数据仓库)database management systems(数据库管理系统)database management(数据库管理)date interlinking 日期互联date linking 日期链接Decision analysis(决策分析)decision maker 决策者decision making (决策)decision models 决策模型decision models 决策模型decision rule 决策规则decision support system 决策支持系统decision support systems (决策支持系统) decision tree(决策树)decission tree 决策树deep belief network(深度信念网络)deep learning(深度学习)defult reasoning 默认推理density estimation(密度估计)design methodology 设计方法论dimension reduction(降维) dimensionality reduction(降维)directed graph(有向图)disaster management 灾害管理disastrous event(灾难性事件)discovery(知识发现)dissimilarity (相异性)distributed databases 分布式数据库distributed databases(分布式数据库) distributed query 分布式查询document clustering (文档聚类)domain experts 领域专家domain knowledge 领域知识domain specific language 领域专用语言dynamic databases(动态数据库)dynamic logic 动态逻辑dynamic network(动态网络)dynamic system(动态系统)earth mover's distance(EMD 距离) education 教育efficient algorithm(有效算法)electric commerce 电子商务electronic health records(电子健康档案) entity disambiguation 实体消歧entity recognition 实体识别entity recognition(实体识别)entity resolution 实体解析event detection 事件检测event detection(事件检测)event extraction 事件抽取event identificaton 事件识别exhaustive indexing 完整索引expert system 专家系统expert systems(专家系统)explanation based learning 解释学习factor graph(因子图)feature extraction 特征提取feature extraction(特征提取)feature extraction(特征提取)feature selection (特征选择)feature selection 特征选择feature selection(特征选择)feature space 特征空间first order logic 一阶逻辑formal logic 形式逻辑formal meaning prepresentation 形式意义表示formal semantics 形式语义formal specification 形式描述frame based system 框为本的系统frequent itemsets(频繁项目集)frequent pattern(频繁模式)fuzzy clustering (模糊聚类)fuzzy clustering (模糊聚类)fuzzy clustering (模糊聚类)fuzzy data mining(模糊数据挖掘)fuzzy logic 模糊逻辑fuzzy set theory(模糊集合论)fuzzy set(模糊集)fuzzy sets 模糊集合fuzzy systems 模糊系统gaussian processes(高斯过程)gene expression data 基因表达数据gene expression(基因表达)generative model(生成模型)generative model(生成模型)genetic algorithm 遗传算法genome wide association study(全基因组关联分析) graph classification(图分类)graph classification(图分类)graph clustering(图聚类)graph data(图数据)graph data(图形数据)graph database 图数据库graph database(图数据库)graph mining(图挖掘)graph mining(图挖掘)graph partitioning 图划分graph query 图查询graph structure(图结构)graph theory(图论)graph theory(图论)graph theory(图论)graph theroy 图论graph visualization(图形可视化)graphical user interface 图形用户界面graphical user interfaces(图形用户界面)health care 卫生保健health care(卫生保健)heterogeneous data source 异构数据源heterogeneous data(异构数据)heterogeneous database 异构数据库heterogeneous information network(异构信息网络) heterogeneous network(异构网络)heterogenous ontology 异构本体heuristic rule 启发式规则hidden markov model(隐马尔可夫模型)hidden markov model(隐马尔可夫模型)hidden markov models(隐马尔可夫模型) hierarchical clustering (层次聚类) homogeneous network(同构网络)human centered computing 人机交互技术human computer interaction 人机交互human interaction 人机交互human robot interaction 人机交互image classification(图像分类)image clustering (图像聚类)image mining( 图像挖掘)image reconstruction(图像重建)image retrieval (图像检索)image segmentation(图像分割)inconsistent ontology 本体不一致incremental learning(增量学习)inductive learning (归纳学习)inference mechanisms 推理机制inference mechanisms(推理机制)inference rule 推理规则information cascades(信息追随)information diffusion(信息扩散)information extraction 信息提取information filtering(信息过滤)information filtering(信息过滤)information integration(信息集成)information network analysis(信息网络分析) information network mining(信息网络挖掘) information network(信息网络)information processing 信息处理information processing 信息处理information resource management (信息资源管理) information retrieval models(信息检索模型) information retrieval 信息检索information retrieval(信息检索)information retrieval(信息检索)information science 情报科学information sources 信息源information system( 信息系统)information system(信息系统)information technology(信息技术)information visualization(信息可视化)instance matching 实例匹配intelligent assistant 智能辅助intelligent systems 智能系统interaction network(交互网络)interactive visualization(交互式可视化)kernel function(核函数)kernel operator (核算子)keyword search(关键字检索)knowledege reuse 知识再利用knowledgeknowledgeknowledge acquisitionknowledge base 知识库knowledge based system 知识系统knowledge building 知识建构knowledge capture 知识获取knowledge construction 知识建构knowledge discovery(知识发现)knowledge extraction 知识提取knowledge fusion 知识融合knowledge integrationknowledge management systems 知识管理系统knowledge management 知识管理knowledge management(知识管理)knowledge model 知识模型knowledge reasoningknowledge representationknowledge representation(知识表达) knowledge sharing 知识共享knowledge storageknowledge technology 知识技术knowledge verification 知识验证language model(语言模型)language modeling approach(语言模型方法) large graph(大图)large graph(大图)learning(无监督学习)life science 生命科学linear programming(线性规划)link analysis (链接分析)link prediction(链接预测)link prediction(链接预测)link prediction(链接预测)linked data(关联数据)location based service(基于位置的服务) loclation based services(基于位置的服务) logic programming 逻辑编程logical implication 逻辑蕴涵logistic regression(logistic 回归)machine learning 机器学习machine translation(机器翻译)management system(管理系统)management( 知识管理)manifold learning(流形学习)markov chains 马尔可夫链markov processes(马尔可夫过程)matching function 匹配函数matrix decomposition(矩阵分解)matrix decomposition(矩阵分解)maximum likelihood estimation(最大似然估计)medical research(医学研究)mixture of gaussians(混合高斯模型)mobile computing(移动计算)multi agnet systems 多智能体系统multiagent systems 多智能体系统multimedia 多媒体natural language processing 自然语言处理natural language processing(自然语言处理) nearest neighbor (近邻)network analysis( 网络分析)network analysis(网络分析)network analysis(网络分析)network formation(组网)network structure(网络结构)network theory(网络理论)network topology(网络拓扑)network visualization(网络可视化)neural network(神经网络)neural networks (神经网络)neural networks(神经网络)nonlinear dynamics(非线性动力学)nonmonotonic reasoning 非单调推理nonnegative matrix factorization (非负矩阵分解) nonnegative matrix factorization(非负矩阵分解) object detection(目标检测)object oriented 面向对象object recognition(目标识别)object recognition(目标识别)online community(网络社区)online social network(在线社交网络)online social networks(在线社交网络)ontology alignment 本体映射ontology development 本体开发ontology engineering 本体工程ontology evolution 本体演化ontology extraction 本体抽取ontology interoperablity 互用性本体ontology language 本体语言ontology mapping 本体映射ontology matching 本体匹配ontology versioning 本体版本ontology 本体论open government data 政府公开数据opinion analysis(舆情分析)opinion mining(意见挖掘)opinion mining(意见挖掘)outlier detection(孤立点检测)parallel processing(并行处理)patient care(病人医疗护理)pattern classification(模式分类)pattern matching(模式匹配)pattern mining(模式挖掘)pattern recognition 模式识别pattern recognition(模式识别)pattern recognition(模式识别)personal data(个人数据)prediction algorithms(预测算法)predictive model 预测模型predictive models(预测模型)privacy preservation(隐私保护)probabilistic logic(概率逻辑)probabilistic logic(概率逻辑)probabilistic model(概率模型)probabilistic model(概率模型)probability distribution(概率分布)probability distribution(概率分布)project management(项目管理)pruning technique(修剪技术)quality management 质量管理query expansion(查询扩展)query language 查询语言query language(查询语言)query processing(查询处理)query rewrite 查询重写question answering system 问答系统random forest(随机森林)random graph(随机图)random processes(随机过程)random walk(随机游走)range query(范围查询)RDF database 资源描述框架数据库RDF query 资源描述框架查询RDF repository 资源描述框架存储库RDF storge 资源描述框架存储real time(实时)recommender system(推荐系统)recommender system(推荐系统)recommender systems 推荐系统recommender systems(推荐系统)record linkage 记录链接recurrent neural network(递归神经网络) regression(回归)reinforcement learning 强化学习reinforcement learning(强化学习)relation extraction 关系抽取relational database 关系数据库relational learning 关系学习relevance feedback (相关反馈)resource description framework 资源描述框架restricted boltzmann machines(受限玻尔兹曼机) retrieval models(检索模型)rough set theroy 粗糙集理论rough set 粗糙集rule based system 基于规则系统rule based 基于规则rule induction (规则归纳)rule learning (规则学习)rule learning 规则学习schema mapping 模式映射schema matching 模式匹配scientific domain 科学域search problems(搜索问题)semantic (web) technology 语义技术semantic analysis 语义分析semantic annotation 语义标注semantic computing 语义计算semantic integration 语义集成semantic interpretation 语义解释semantic model 语义模型semantic network 语义网络semantic relatedness 语义相关性semantic relation learning 语义关系学习semantic search 语义检索semantic similarity 语义相似度semantic similarity(语义相似度)semantic web rule language 语义网规则语言semantic web 语义网semantic web(语义网)semantic workflow 语义工作流semi supervised learning(半监督学习)sensor data(传感器数据)sensor networks(传感器网络)sentiment analysis(情感分析)sentiment analysis(情感分析)sequential pattern(序列模式)service oriented architecture 面向服务的体系结构shortest path(最短路径)similar kernel function(相似核函数)similarity measure(相似性度量)similarity relationship (相似关系)similarity search(相似搜索)similarity(相似性)situation aware 情境感知social behavior(社交行为)social influence(社会影响)social interaction(社交互动)social interaction(社交互动)social learning(社会学习)social life networks(社交生活网络)social machine 社交机器social media(社交媒体)social media(社交媒体)social media(社交媒体)social network analysis 社会网络分析social network analysis(社交网络分析)social network(社交网络)social network(社交网络)social science(社会科学)social tagging system(社交标签系统)social tagging(社交标签)social web(社交网页)sparse coding(稀疏编码)sparse matrices(稀疏矩阵)sparse representation(稀疏表示)spatial database(空间数据库)spatial reasoning 空间推理statistical analysis(统计分析)statistical model 统计模型string matching(串匹配)structural risk minimization (结构风险最小化) structured data 结构化数据subgraph matching 子图匹配subspace clustering(子空间聚类)supervised learning( 有support vector machine 支持向量机support vector machines(支持向量机)system dynamics(系统动力学)tag recommendation(标签推荐)taxonmy induction 感应规范temporal logic 时态逻辑temporal reasoning 时序推理text analysis(文本分析)text anaylsis 文本分析text classification (文本分类)text data(文本数据)text mining technique(文本挖掘技术)text mining 文本挖掘text mining(文本挖掘)text summarization(文本摘要)thesaurus alignment 同义对齐time frequency analysis(时频分析)time series analysis( 时time series data(时间序列数据)time series data(时间序列数据)time series(时间序列)topic model(主题模型)topic modeling(主题模型)transfer learning 迁移学习triple store 三元组存储uncertainty reasoning 不精确推理undirected graph(无向图)unified modeling language 统一建模语言unsupervisedupper bound(上界)user behavior(用户行为)user generated content(用户生成内容)utility mining(效用挖掘)visual analytics(可视化分析)visual content(视觉内容)visual representation(视觉表征)visualisation(可视化)visualization technique(可视化技术) visualization tool(可视化工具)web 2.0(网络2.0)web forum(web 论坛)web mining(网络挖掘)web of data 数据网web ontology lanuage 网络本体语言web pages(web 页面)web resource 网络资源web science 万维科学web search (网络检索)web usage mining(web 使用挖掘)wireless networks 无线网络world knowledge 世界知识world wide web 万维网world wide web(万维网)xml database 可扩展标志语言数据库附录 2 Data Mining 知识图谱(共包含二级节点15 个,三级节点93 个)间序列分析)监督学习)领域 二级分类 三级分类。

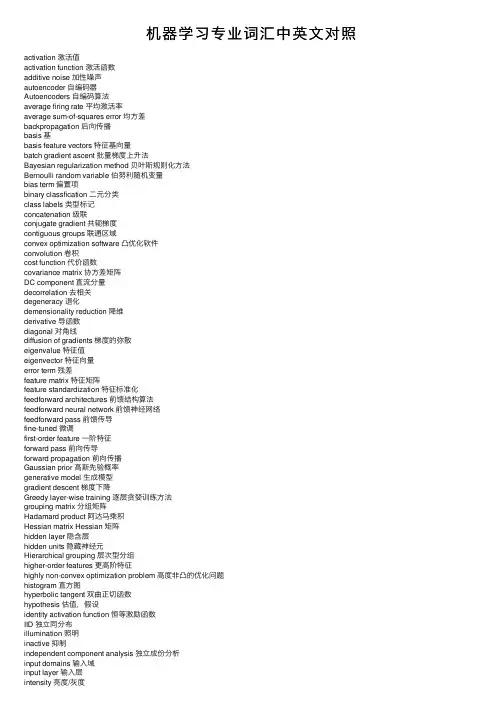

机器学习专业词汇中英⽂对照activation 激活值activation function 激活函数additive noise 加性噪声autoencoder ⾃编码器Autoencoders ⾃编码算法average firing rate 平均激活率average sum-of-squares error 均⽅差backpropagation 后向传播basis 基basis feature vectors 特征基向量batch gradient ascent 批量梯度上升法Bayesian regularization method 贝叶斯规则化⽅法Bernoulli random variable 伯努利随机变量bias term 偏置项binary classfication ⼆元分类class labels 类型标记concatenation 级联conjugate gradient 共轭梯度contiguous groups 联通区域convex optimization software 凸优化软件convolution 卷积cost function 代价函数covariance matrix 协⽅差矩阵DC component 直流分量decorrelation 去相关degeneracy 退化demensionality reduction 降维derivative 导函数diagonal 对⾓线diffusion of gradients 梯度的弥散eigenvalue 特征值eigenvector 特征向量error term 残差feature matrix 特征矩阵feature standardization 特征标准化feedforward architectures 前馈结构算法feedforward neural network 前馈神经⽹络feedforward pass 前馈传导fine-tuned 微调first-order feature ⼀阶特征forward pass 前向传导forward propagation 前向传播Gaussian prior ⾼斯先验概率generative model ⽣成模型gradient descent 梯度下降Greedy layer-wise training 逐层贪婪训练⽅法grouping matrix 分组矩阵Hadamard product 阿达马乘积Hessian matrix Hessian 矩阵hidden layer 隐含层hidden units 隐藏神经元Hierarchical grouping 层次型分组higher-order features 更⾼阶特征highly non-convex optimization problem ⾼度⾮凸的优化问题histogram 直⽅图hyperbolic tangent 双曲正切函数hypothesis 估值,假设identity activation function 恒等激励函数IID 独⽴同分布illumination 照明inactive 抑制independent component analysis 独⽴成份分析input domains 输⼊域input layer 输⼊层intensity 亮度/灰度intercept term 截距KL divergence 相对熵KL divergence KL分散度k-Means K-均值learning rate 学习速率least squares 最⼩⼆乘法linear correspondence 线性响应linear superposition 线性叠加line-search algorithm 线搜索算法local mean subtraction 局部均值消减local optima 局部最优解logistic regression 逻辑回归loss function 损失函数low-pass filtering 低通滤波magnitude 幅值MAP 极⼤后验估计maximum likelihood estimation 极⼤似然估计mean 平均值MFCC Mel 倒频系数multi-class classification 多元分类neural networks 神经⽹络neuron 神经元Newton’s method ⽜顿法non-convex function ⾮凸函数non-linear feature ⾮线性特征norm 范式norm bounded 有界范数norm constrained 范数约束normalization 归⼀化numerical roundoff errors 数值舍⼊误差numerically checking 数值检验numerically reliable 数值计算上稳定object detection 物体检测objective function ⽬标函数off-by-one error 缺位错误orthogonalization 正交化output layer 输出层overall cost function 总体代价函数over-complete basis 超完备基over-fitting 过拟合parts of objects ⽬标的部件part-whole decompostion 部分-整体分解PCA 主元分析penalty term 惩罚因⼦per-example mean subtraction 逐样本均值消减pooling 池化pretrain 预训练principal components analysis 主成份分析quadratic constraints ⼆次约束RBMs 受限Boltzman机reconstruction based models 基于重构的模型reconstruction cost 重建代价reconstruction term 重构项redundant 冗余reflection matrix 反射矩阵regularization 正则化regularization term 正则化项rescaling 缩放robust 鲁棒性run ⾏程second-order feature ⼆阶特征sigmoid activation function S型激励函数significant digits 有效数字singular value 奇异值singular vector 奇异向量smoothed L1 penalty 平滑的L1范数惩罚Smoothed topographic L1 sparsity penalty 平滑地形L1稀疏惩罚函数smoothing 平滑Softmax Regresson Softmax回归sorted in decreasing order 降序排列source features 源特征sparse autoencoder 消减归⼀化Sparsity 稀疏性sparsity parameter 稀疏性参数sparsity penalty 稀疏惩罚square function 平⽅函数squared-error ⽅差stationary 平稳性(不变性)stationary stochastic process 平稳随机过程step-size 步长值supervised learning 监督学习symmetric positive semi-definite matrix 对称半正定矩阵symmetry breaking 对称失效tanh function 双曲正切函数the average activation 平均活跃度the derivative checking method 梯度验证⽅法the empirical distribution 经验分布函数the energy function 能量函数the Lagrange dual 拉格朗⽇对偶函数the log likelihood 对数似然函数the pixel intensity value 像素灰度值the rate of convergence 收敛速度topographic cost term 拓扑代价项topographic ordered 拓扑秩序transformation 变换translation invariant 平移不变性trivial answer 平凡解under-complete basis 不完备基unrolling 组合扩展unsupervised learning ⽆监督学习variance ⽅差vecotrized implementation 向量化实现vectorization ⽮量化visual cortex 视觉⽪层weight decay 权重衰减weighted average 加权平均值whitening ⽩化zero-mean 均值为零Letter AAccumulated error backpropagation 累积误差逆传播Activation Function 激活函数Adaptive Resonance Theory/ART ⾃适应谐振理论Addictive model 加性学习Adversarial Networks 对抗⽹络Affine Layer 仿射层Affinity matrix 亲和矩阵Agent 代理 / 智能体Algorithm 算法Alpha-beta pruning α-β剪枝Anomaly detection 异常检测Approximation 近似Area Under ROC Curve/AUC Roc 曲线下⾯积Artificial General Intelligence/AGI 通⽤⼈⼯智能Artificial Intelligence/AI ⼈⼯智能Association analysis 关联分析Attention mechanism 注意⼒机制Attribute conditional independence assumption 属性条件独⽴性假设Attribute space 属性空间Attribute value 属性值Autoencoder ⾃编码器Automatic speech recognition ⾃动语⾳识别Automatic summarization ⾃动摘要Average gradient 平均梯度Average-Pooling 平均池化Letter BBackpropagation Through Time 通过时间的反向传播Backpropagation/BP 反向传播Base learner 基学习器Base learning algorithm 基学习算法Batch Normalization/BN 批量归⼀化Bayes decision rule 贝叶斯判定准则Bayes Model Averaging/BMA 贝叶斯模型平均Bayes optimal classifier 贝叶斯最优分类器Bayesian decision theory 贝叶斯决策论Bayesian network 贝叶斯⽹络Between-class scatter matrix 类间散度矩阵Bias 偏置 / 偏差Bias-variance decomposition 偏差-⽅差分解Bias-Variance Dilemma 偏差 – ⽅差困境Bi-directional Long-Short Term Memory/Bi-LSTM 双向长短期记忆Binary classification ⼆分类Binomial test ⼆项检验Bi-partition ⼆分法Boltzmann machine 玻尔兹曼机Bootstrap sampling ⾃助采样法/可重复采样/有放回采样Bootstrapping ⾃助法Break-Event Point/BEP 平衡点Letter CCalibration 校准Cascade-Correlation 级联相关Categorical attribute 离散属性Class-conditional probability 类条件概率Classification and regression tree/CART 分类与回归树Classifier 分类器Class-imbalance 类别不平衡Closed -form 闭式Cluster 簇/类/集群Cluster analysis 聚类分析Clustering 聚类Clustering ensemble 聚类集成Co-adapting 共适应Coding matrix 编码矩阵COLT 国际学习理论会议Committee-based learning 基于委员会的学习Competitive learning 竞争型学习Component learner 组件学习器Comprehensibility 可解释性Computation Cost 计算成本Computational Linguistics 计算语⾔学Computer vision 计算机视觉Concept drift 概念漂移Concept Learning System /CLS 概念学习系统Conditional entropy 条件熵Conditional mutual information 条件互信息Conditional Probability Table/CPT 条件概率表Conditional random field/CRF 条件随机场Conditional risk 条件风险Confidence 置信度Confusion matrix 混淆矩阵Connection weight 连接权Connectionism 连结主义Consistency ⼀致性/相合性Contingency table 列联表Continuous attribute 连续属性Convergence 收敛Conversational agent 会话智能体Convex quadratic programming 凸⼆次规划Convexity 凸性Convolutional neural network/CNN 卷积神经⽹络Co-occurrence 同现Correlation coefficient 相关系数Cosine similarity 余弦相似度Cost curve 成本曲线Cost Function 成本函数Cost matrix 成本矩阵Cost-sensitive 成本敏感Cross entropy 交叉熵Cross validation 交叉验证Crowdsourcing 众包Curse of dimensionality 维数灾难Cut point 截断点Cutting plane algorithm 割平⾯法Letter DData mining 数据挖掘Data set 数据集Decision Boundary 决策边界Decision stump 决策树桩Decision tree 决策树/判定树Deduction 演绎Deep Belief Network 深度信念⽹络Deep Convolutional Generative Adversarial Network/DCGAN 深度卷积⽣成对抗⽹络Deep learning 深度学习Deep neural network/DNN 深度神经⽹络Deep Q-Learning 深度 Q 学习Deep Q-Network 深度 Q ⽹络Density estimation 密度估计Density-based clustering 密度聚类Differentiable neural computer 可微分神经计算机Dimensionality reduction algorithm 降维算法Directed edge 有向边Disagreement measure 不合度量Discriminative model 判别模型Discriminator 判别器Distance measure 距离度量Distance metric learning 距离度量学习Distribution 分布Divergence 散度Diversity measure 多样性度量/差异性度量Domain adaption 领域⾃适应Downsampling 下采样D-separation (Directed separation)有向分离Dual problem 对偶问题Dummy node 哑结点Dynamic Fusion 动态融合Dynamic programming 动态规划Letter EEigenvalue decomposition 特征值分解Embedding 嵌⼊Emotional analysis 情绪分析Empirical conditional entropy 经验条件熵Empirical entropy 经验熵Empirical error 经验误差Empirical risk 经验风险End-to-End 端到端Energy-based model 基于能量的模型Ensemble learning 集成学习Ensemble pruning 集成修剪Error Correcting Output Codes/ECOC 纠错输出码Error rate 错误率Error-ambiguity decomposition 误差-分歧分解Euclidean distance 欧⽒距离Evolutionary computation 演化计算Expectation-Maximization 期望最⼤化Expected loss 期望损失Exploding Gradient Problem 梯度爆炸问题Exponential loss function 指数损失函数Extreme Learning Machine/ELM 超限学习机Letter FFactorization 因⼦分解False negative 假负类False positive 假正类False Positive Rate/FPR 假正例率Feature engineering 特征⼯程Feature selection 特征选择Feature vector 特征向量Featured Learning 特征学习Feedforward Neural Networks/FNN 前馈神经⽹络Fine-tuning 微调Flipping output 翻转法Fluctuation 震荡Forward stagewise algorithm 前向分步算法Frequentist 频率主义学派Full-rank matrix 满秩矩阵Functional neuron 功能神经元Letter GGain ratio 增益率Game theory 博弈论Gaussian kernel function ⾼斯核函数Gaussian Mixture Model ⾼斯混合模型General Problem Solving 通⽤问题求解Generalization 泛化Generalization error 泛化误差Generalization error bound 泛化误差上界Generalized Lagrange function ⼴义拉格朗⽇函数Generalized linear model ⼴义线性模型Generalized Rayleigh quotient ⼴义瑞利商Generative Adversarial Networks/GAN ⽣成对抗⽹络Generative Model ⽣成模型Generator ⽣成器Genetic Algorithm/GA 遗传算法Gibbs sampling 吉布斯采样Gini index 基尼指数Global minimum 全局最⼩Global Optimization 全局优化Gradient boosting 梯度提升Gradient Descent 梯度下降Graph theory 图论Ground-truth 真相/真实Letter HHard margin 硬间隔Hard voting 硬投票Harmonic mean 调和平均Hesse matrix 海塞矩阵Hidden dynamic model 隐动态模型Hidden layer 隐藏层Hidden Markov Model/HMM 隐马尔可夫模型Hierarchical clustering 层次聚类Hilbert space 希尔伯特空间Hinge loss function 合页损失函数Hold-out 留出法Homogeneous 同质Hybrid computing 混合计算Hyperparameter 超参数Hypothesis 假设Hypothesis test 假设验证Letter IICML 国际机器学习会议Improved iterative scaling/IIS 改进的迭代尺度法Incremental learning 增量学习Independent and identically distributed/i.i.d. 独⽴同分布Independent Component Analysis/ICA 独⽴成分分析Indicator function 指⽰函数Individual learner 个体学习器Induction 归纳Inductive bias 归纳偏好Inductive learning 归纳学习Inductive Logic Programming/ILP 归纳逻辑程序设计Information entropy 信息熵Information gain 信息增益Input layer 输⼊层Insensitive loss 不敏感损失Inter-cluster similarity 簇间相似度International Conference for Machine Learning/ICML 国际机器学习⼤会Intra-cluster similarity 簇内相似度Intrinsic value 固有值Isometric Mapping/Isomap 等度量映射Isotonic regression 等分回归Iterative Dichotomiser 迭代⼆分器Letter KKernel method 核⽅法Kernel trick 核技巧Kernelized Linear Discriminant Analysis/KLDA 核线性判别分析K-fold cross validation k 折交叉验证/k 倍交叉验证K-Means Clustering K – 均值聚类K-Nearest Neighbours Algorithm/KNN K近邻算法Knowledge base 知识库Knowledge Representation 知识表征Letter LLabel space 标记空间Lagrange duality 拉格朗⽇对偶性Lagrange multiplier 拉格朗⽇乘⼦Laplace smoothing 拉普拉斯平滑Laplacian correction 拉普拉斯修正Latent Dirichlet Allocation 隐狄利克雷分布Latent semantic analysis 潜在语义分析Latent variable 隐变量Lazy learning 懒惰学习Learner 学习器Learning by analogy 类⽐学习Learning rate 学习率Learning Vector Quantization/LVQ 学习向量量化Least squares regression tree 最⼩⼆乘回归树Leave-One-Out/LOO 留⼀法linear chain conditional random field 线性链条件随机场Linear Discriminant Analysis/LDA 线性判别分析Linear model 线性模型Linear Regression 线性回归Link function 联系函数Local Markov property 局部马尔可夫性Local minimum 局部最⼩Log likelihood 对数似然Log odds/logit 对数⼏率Logistic Regression Logistic 回归Log-likelihood 对数似然Log-linear regression 对数线性回归Long-Short Term Memory/LSTM 长短期记忆Loss function 损失函数Letter MMachine translation/MT 机器翻译Macron-P 宏查准率Macron-R 宏查全率Majority voting 绝对多数投票法Manifold assumption 流形假设Manifold learning 流形学习Margin theory 间隔理论Marginal distribution 边际分布Marginal independence 边际独⽴性Marginalization 边际化Markov Chain Monte Carlo/MCMC 马尔可夫链蒙特卡罗⽅法Markov Random Field 马尔可夫随机场Maximal clique 最⼤团Maximum Likelihood Estimation/MLE 极⼤似然估计/极⼤似然法Maximum margin 最⼤间隔Maximum weighted spanning tree 最⼤带权⽣成树Max-Pooling 最⼤池化Mean squared error 均⽅误差Meta-learner 元学习器Metric learning 度量学习Micro-P 微查准率Micro-R 微查全率Minimal Description Length/MDL 最⼩描述长度Minimax game 极⼩极⼤博弈Misclassification cost 误分类成本Mixture of experts 混合专家Momentum 动量Moral graph 道德图/端正图Multi-class classification 多分类Multi-document summarization 多⽂档摘要Multi-layer feedforward neural networks 多层前馈神经⽹络Multilayer Perceptron/MLP 多层感知器Multimodal learning 多模态学习Multiple Dimensional Scaling 多维缩放Multiple linear regression 多元线性回归Multi-response Linear Regression /MLR 多响应线性回归Mutual information 互信息Letter NNaive bayes 朴素贝叶斯Naive Bayes Classifier 朴素贝叶斯分类器Named entity recognition 命名实体识别Nash equilibrium 纳什均衡Natural language generation/NLG ⾃然语⾔⽣成Natural language processing ⾃然语⾔处理Negative class 负类Negative correlation 负相关法Negative Log Likelihood 负对数似然Neighbourhood Component Analysis/NCA 近邻成分分析Neural Machine Translation 神经机器翻译Neural Turing Machine 神经图灵机Newton method ⽜顿法NIPS 国际神经信息处理系统会议No Free Lunch Theorem/NFL 没有免费的午餐定理Noise-contrastive estimation 噪⾳对⽐估计Nominal attribute 列名属性Non-convex optimization ⾮凸优化Nonlinear model ⾮线性模型Non-metric distance ⾮度量距离Non-negative matrix factorization ⾮负矩阵分解Non-ordinal attribute ⽆序属性Non-Saturating Game ⾮饱和博弈Norm 范数Normalization 归⼀化Nuclear norm 核范数Numerical attribute 数值属性Letter OObjective function ⽬标函数Oblique decision tree 斜决策树Occam’s razor 奥卡姆剃⼑Odds ⼏率Off-Policy 离策略One shot learning ⼀次性学习One-Dependent Estimator/ODE 独依赖估计On-Policy 在策略Ordinal attribute 有序属性Out-of-bag estimate 包外估计Output layer 输出层Output smearing 输出调制法Overfitting 过拟合/过配Oversampling 过采样Letter PPaired t-test 成对 t 检验Pairwise 成对型Pairwise Markov property 成对马尔可夫性Parameter 参数Parameter estimation 参数估计Parameter tuning 调参Parse tree 解析树Particle Swarm Optimization/PSO 粒⼦群优化算法Part-of-speech tagging 词性标注Perceptron 感知机Performance measure 性能度量Plug and Play Generative Network 即插即⽤⽣成⽹络Plurality voting 相对多数投票法Polarity detection 极性检测Polynomial kernel function 多项式核函数Pooling 池化Positive class 正类Positive definite matrix 正定矩阵Post-hoc test 后续检验Post-pruning 后剪枝potential function 势函数Precision 查准率/准确率Prepruning 预剪枝Principal component analysis/PCA 主成分分析Principle of multiple explanations 多释原则Prior 先验Probability Graphical Model 概率图模型Proximal Gradient Descent/PGD 近端梯度下降Pruning 剪枝Pseudo-label 伪标记Letter QQuantized Neural Network 量⼦化神经⽹络Quantum computer 量⼦计算机Quantum Computing 量⼦计算Quasi Newton method 拟⽜顿法Letter RRadial Basis Function/RBF 径向基函数Random Forest Algorithm 随机森林算法Random walk 随机漫步Recall 查全率/召回率Receiver Operating Characteristic/ROC 受试者⼯作特征Rectified Linear Unit/ReLU 线性修正单元Recurrent Neural Network 循环神经⽹络Recursive neural network 递归神经⽹络Reference model 参考模型Regression 回归Regularization 正则化Reinforcement learning/RL 强化学习Representation learning 表征学习Representer theorem 表⽰定理reproducing kernel Hilbert space/RKHS 再⽣核希尔伯特空间Re-sampling 重采样法Rescaling 再缩放Residual Mapping 残差映射Residual Network 残差⽹络Restricted Boltzmann Machine/RBM 受限玻尔兹曼机Restricted Isometry Property/RIP 限定等距性Re-weighting 重赋权法Robustness 稳健性/鲁棒性Root node 根结点Rule Engine 规则引擎Rule learning 规则学习Letter SSaddle point 鞍点Sample space 样本空间Sampling 采样Score function 评分函数Self-Driving ⾃动驾驶Self-Organizing Map/SOM ⾃组织映射Semi-naive Bayes classifiers 半朴素贝叶斯分类器Semi-Supervised Learning 半监督学习semi-Supervised Support Vector Machine 半监督⽀持向量机Sentiment analysis 情感分析Separating hyperplane 分离超平⾯Sigmoid function Sigmoid 函数Similarity measure 相似度度量Simulated annealing 模拟退⽕Simultaneous localization and mapping 同步定位与地图构建Singular Value Decomposition 奇异值分解Slack variables 松弛变量Smoothing 平滑Soft margin 软间隔Soft margin maximization 软间隔最⼤化Soft voting 软投票Sparse representation 稀疏表征Sparsity 稀疏性Specialization 特化Spectral Clustering 谱聚类Speech Recognition 语⾳识别Splitting variable 切分变量Squashing function 挤压函数Stability-plasticity dilemma 可塑性-稳定性困境Statistical learning 统计学习Status feature function 状态特征函Stochastic gradient descent 随机梯度下降Stratified sampling 分层采样Structural risk 结构风险Structural risk minimization/SRM 结构风险最⼩化Subspace ⼦空间Supervised learning 监督学习/有导师学习support vector expansion ⽀持向量展式Support Vector Machine/SVM ⽀持向量机Surrogat loss 替代损失Surrogate function 替代函数Symbolic learning 符号学习Symbolism 符号主义Synset 同义词集Letter TT-Distribution Stochastic Neighbour Embedding/t-SNE T – 分布随机近邻嵌⼊Tensor 张量Tensor Processing Units/TPU 张量处理单元The least square method 最⼩⼆乘法Threshold 阈值Threshold logic unit 阈值逻辑单元Threshold-moving 阈值移动Time Step 时间步骤Tokenization 标记化Training error 训练误差Training instance 训练⽰例/训练例Transductive learning 直推学习Transfer learning 迁移学习Treebank 树库Tria-by-error 试错法True negative 真负类True positive 真正类True Positive Rate/TPR 真正例率Turing Machine 图灵机Twice-learning ⼆次学习Letter UUnderfitting ⽋拟合/⽋配Undersampling ⽋采样Understandability 可理解性Unequal cost ⾮均等代价Unit-step function 单位阶跃函数Univariate decision tree 单变量决策树Unsupervised learning ⽆监督学习/⽆导师学习Unsupervised layer-wise training ⽆监督逐层训练Upsampling 上采样Letter VVanishing Gradient Problem 梯度消失问题Variational inference 变分推断VC Theory VC维理论Version space 版本空间Viterbi algorithm 维特⽐算法Von Neumann architecture 冯 · 诺伊曼架构Letter WWasserstein GAN/WGAN Wasserstein⽣成对抗⽹络Weak learner 弱学习器Weight 权重Weight sharing 权共享Weighted voting 加权投票法Within-class scatter matrix 类内散度矩阵Word embedding 词嵌⼊Word sense disambiguation 词义消歧Letter ZZero-data learning 零数据学习Zero-shot learning 零次学习Aapproximations近似值arbitrary随意的affine仿射的arbitrary任意的amino acid氨基酸amenable经得起检验的axiom公理,原则abstract提取architecture架构,体系结构;建造业absolute绝对的arsenal军⽕库assignment分配algebra线性代数asymptotically⽆症状的appropriate恰当的Bbias偏差brevity简短,简洁;短暂broader⼴泛briefly简短的batch批量Cconvergence 收敛,集中到⼀点convex凸的contours轮廓constraint约束constant常理commercial商务的complementarity补充coordinate ascent同等级上升clipping剪下物;剪报;修剪component分量;部件continuous连续的covariance协⽅差canonical正规的,正则的concave⾮凸的corresponds相符合;相当;通信corollary推论concrete具体的事物,实在的东西cross validation交叉验证correlation相互关系convention约定cluster⼀簇centroids 质⼼,形⼼converge收敛computationally计算(机)的calculus计算Dderive获得,取得dual⼆元的duality⼆元性;⼆象性;对偶性derivation求导;得到;起源denote预⽰,表⽰,是…的标志;意味着,[逻]指称divergence 散度;发散性dimension尺度,规格;维数dot⼩圆点distortion变形density概率密度函数discrete离散的discriminative有识别能⼒的diagonal对⾓dispersion分散,散开determinant决定因素disjoint不相交的Eencounter遇到ellipses椭圆equality等式extra额外的empirical经验;观察ennmerate例举,计数exceed超过,越出expectation期望efficient⽣效的endow赋予explicitly清楚的exponential family指数家族equivalently等价的Ffeasible可⾏的forary初次尝试finite有限的,限定的forgo摒弃,放弃fliter过滤frequentist最常发⽣的forward search前向式搜索formalize使定形Ggeneralized归纳的generalization概括,归纳;普遍化;判断(根据不⾜)guarantee保证;抵押品generate形成,产⽣geometric margins⼏何边界gap裂⼝generative⽣产的;有⽣产⼒的Hheuristic启发式的;启发法;启发程序hone怀恋;磨hyperplane超平⾯Linitial最初的implement执⾏intuitive凭直觉获知的incremental增加的intercept截距intuitious直觉instantiation例⼦indicator指⽰物,指⽰器interative重复的,迭代的integral积分identical相等的;完全相同的indicate表⽰,指出invariance不变性,恒定性impose把…强加于intermediate中间的interpretation解释,翻译Jjoint distribution联合概率Llieu替代logarithmic对数的,⽤对数表⽰的latent潜在的Leave-one-out cross validation留⼀法交叉验证Mmagnitude巨⼤mapping绘图,制图;映射matrix矩阵mutual相互的,共同的monotonically单调的minor较⼩的,次要的multinomial多项的multi-class classification⼆分类问题Nnasty讨厌的notation标志,注释naïve朴素的Oobtain得到oscillate摆动optimization problem最优化问题objective function⽬标函数optimal最理想的orthogonal(⽮量,矩阵等)正交的orientation⽅向ordinary普通的occasionally偶然的Ppartial derivative偏导数property性质proportional成⽐例的primal原始的,最初的permit允许pseudocode伪代码permissible可允许的polynomial多项式preliminary预备precision精度perturbation 不安,扰乱poist假定,设想positive semi-definite半正定的parentheses圆括号posterior probability后验概率plementarity补充pictorially图像的parameterize确定…的参数poisson distribution柏松分布pertinent相关的Qquadratic⼆次的quantity量,数量;分量query疑问的Rregularization使系统化;调整reoptimize重新优化restrict限制;限定;约束reminiscent回忆往事的;提醒的;使⼈联想…的(of)remark注意random variable随机变量respect考虑respectively各⾃的;分别的redundant过多的;冗余的Ssusceptible敏感的stochastic可能的;随机的symmetric对称的sophisticated复杂的spurious假的;伪造的subtract减去;减法器simultaneously同时发⽣地;同步地suffice满⾜scarce稀有的,难得的split分解,分离subset⼦集statistic统计量successive iteratious连续的迭代scale标度sort of有⼏分的squares平⽅Ttrajectory轨迹temporarily暂时的terminology专⽤名词tolerance容忍;公差thumb翻阅threshold阈,临界theorem定理tangent正弦Uunit-length vector单位向量Vvalid有效的,正确的variance⽅差variable变量;变元vocabulary词汇valued经估价的;宝贵的Wwrapper包装分类:。

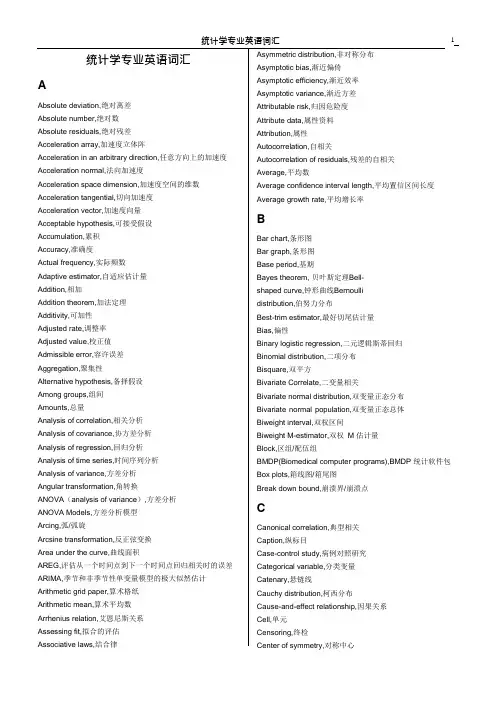

机器学习与人工智能领域中常用的英语词汇1.General Concepts (基础概念)•Artificial Intelligence (AI) - 人工智能1)Artificial Intelligence (AI) - 人工智能2)Machine Learning (ML) - 机器学习3)Deep Learning (DL) - 深度学习4)Neural Network - 神经网络5)Natural Language Processing (NLP) - 自然语言处理6)Computer Vision - 计算机视觉7)Robotics - 机器人技术8)Speech Recognition - 语音识别9)Expert Systems - 专家系统10)Knowledge Representation - 知识表示11)Pattern Recognition - 模式识别12)Cognitive Computing - 认知计算13)Autonomous Systems - 自主系统14)Human-Machine Interaction - 人机交互15)Intelligent Agents - 智能代理16)Machine Translation - 机器翻译17)Swarm Intelligence - 群体智能18)Genetic Algorithms - 遗传算法19)Fuzzy Logic - 模糊逻辑20)Reinforcement Learning - 强化学习•Machine Learning (ML) - 机器学习1)Machine Learning (ML) - 机器学习2)Artificial Neural Network - 人工神经网络3)Deep Learning - 深度学习4)Supervised Learning - 有监督学习5)Unsupervised Learning - 无监督学习6)Reinforcement Learning - 强化学习7)Semi-Supervised Learning - 半监督学习8)Training Data - 训练数据9)Test Data - 测试数据10)Validation Data - 验证数据11)Feature - 特征12)Label - 标签13)Model - 模型14)Algorithm - 算法15)Regression - 回归16)Classification - 分类17)Clustering - 聚类18)Dimensionality Reduction - 降维19)Overfitting - 过拟合20)Underfitting - 欠拟合•Deep Learning (DL) - 深度学习1)Deep Learning - 深度学习2)Neural Network - 神经网络3)Artificial Neural Network (ANN) - 人工神经网络4)Convolutional Neural Network (CNN) - 卷积神经网络5)Recurrent Neural Network (RNN) - 循环神经网络6)Long Short-Term Memory (LSTM) - 长短期记忆网络7)Gated Recurrent Unit (GRU) - 门控循环单元8)Autoencoder - 自编码器9)Generative Adversarial Network (GAN) - 生成对抗网络10)Transfer Learning - 迁移学习11)Pre-trained Model - 预训练模型12)Fine-tuning - 微调13)Feature Extraction - 特征提取14)Activation Function - 激活函数15)Loss Function - 损失函数16)Gradient Descent - 梯度下降17)Backpropagation - 反向传播18)Epoch - 训练周期19)Batch Size - 批量大小20)Dropout - 丢弃法•Neural Network - 神经网络1)Neural Network - 神经网络2)Artificial Neural Network (ANN) - 人工神经网络3)Deep Neural Network (DNN) - 深度神经网络4)Convolutional Neural Network (CNN) - 卷积神经网络5)Recurrent Neural Network (RNN) - 循环神经网络6)Long Short-Term Memory (LSTM) - 长短期记忆网络7)Gated Recurrent Unit (GRU) - 门控循环单元8)Feedforward Neural Network - 前馈神经网络9)Multi-layer Perceptron (MLP) - 多层感知器10)Radial Basis Function Network (RBFN) - 径向基函数网络11)Hopfield Network - 霍普菲尔德网络12)Boltzmann Machine - 玻尔兹曼机13)Autoencoder - 自编码器14)Spiking Neural Network (SNN) - 脉冲神经网络15)Self-organizing Map (SOM) - 自组织映射16)Restricted Boltzmann Machine (RBM) - 受限玻尔兹曼机17)Hebbian Learning - 海比安学习18)Competitive Learning - 竞争学习19)Neuroevolutionary - 神经进化20)Neuron - 神经元•Algorithm - 算法1)Algorithm - 算法2)Supervised Learning Algorithm - 有监督学习算法3)Unsupervised Learning Algorithm - 无监督学习算法4)Reinforcement Learning Algorithm - 强化学习算法5)Classification Algorithm - 分类算法6)Regression Algorithm - 回归算法7)Clustering Algorithm - 聚类算法8)Dimensionality Reduction Algorithm - 降维算法9)Decision Tree Algorithm - 决策树算法10)Random Forest Algorithm - 随机森林算法11)Support Vector Machine (SVM) Algorithm - 支持向量机算法12)K-Nearest Neighbors (KNN) Algorithm - K近邻算法13)Naive Bayes Algorithm - 朴素贝叶斯算法14)Gradient Descent Algorithm - 梯度下降算法15)Genetic Algorithm - 遗传算法16)Neural Network Algorithm - 神经网络算法17)Deep Learning Algorithm - 深度学习算法18)Ensemble Learning Algorithm - 集成学习算法19)Reinforcement Learning Algorithm - 强化学习算法20)Metaheuristic Algorithm - 元启发式算法•Model - 模型1)Model - 模型2)Machine Learning Model - 机器学习模型3)Artificial Intelligence Model - 人工智能模型4)Predictive Model - 预测模型5)Classification Model - 分类模型6)Regression Model - 回归模型7)Generative Model - 生成模型8)Discriminative Model - 判别模型9)Probabilistic Model - 概率模型10)Statistical Model - 统计模型11)Neural Network Model - 神经网络模型12)Deep Learning Model - 深度学习模型13)Ensemble Model - 集成模型14)Reinforcement Learning Model - 强化学习模型15)Support Vector Machine (SVM) Model - 支持向量机模型16)Decision Tree Model - 决策树模型17)Random Forest Model - 随机森林模型18)Naive Bayes Model - 朴素贝叶斯模型19)Autoencoder Model - 自编码器模型20)Convolutional Neural Network (CNN) Model - 卷积神经网络模型•Dataset - 数据集1)Dataset - 数据集2)Training Dataset - 训练数据集3)Test Dataset - 测试数据集4)Validation Dataset - 验证数据集5)Balanced Dataset - 平衡数据集6)Imbalanced Dataset - 不平衡数据集7)Synthetic Dataset - 合成数据集8)Benchmark Dataset - 基准数据集9)Open Dataset - 开放数据集10)Labeled Dataset - 标记数据集11)Unlabeled Dataset - 未标记数据集12)Semi-Supervised Dataset - 半监督数据集13)Multiclass Dataset - 多分类数据集14)Feature Set - 特征集15)Data Augmentation - 数据增强16)Data Preprocessing - 数据预处理17)Missing Data - 缺失数据18)Outlier Detection - 异常值检测19)Data Imputation - 数据插补20)Metadata - 元数据•Training - 训练1)Training - 训练2)Training Data - 训练数据3)Training Phase - 训练阶段4)Training Set - 训练集5)Training Examples - 训练样本6)Training Instance - 训练实例7)Training Algorithm - 训练算法8)Training Model - 训练模型9)Training Process - 训练过程10)Training Loss - 训练损失11)Training Epoch - 训练周期12)Training Batch - 训练批次13)Online Training - 在线训练14)Offline Training - 离线训练15)Continuous Training - 连续训练16)Transfer Learning - 迁移学习17)Fine-Tuning - 微调18)Curriculum Learning - 课程学习19)Self-Supervised Learning - 自监督学习20)Active Learning - 主动学习•Testing - 测试1)Testing - 测试2)Test Data - 测试数据3)Test Set - 测试集4)Test Examples - 测试样本5)Test Instance - 测试实例6)Test Phase - 测试阶段7)Test Accuracy - 测试准确率8)Test Loss - 测试损失9)Test Error - 测试错误10)Test Metrics - 测试指标11)Test Suite - 测试套件12)Test Case - 测试用例13)Test Coverage - 测试覆盖率14)Cross-Validation - 交叉验证15)Holdout Validation - 留出验证16)K-Fold Cross-Validation - K折交叉验证17)Stratified Cross-Validation - 分层交叉验证18)Test Driven Development (TDD) - 测试驱动开发19)A/B Testing - A/B 测试20)Model Evaluation - 模型评估•Validation - 验证1)Validation - 验证2)Validation Data - 验证数据3)Validation Set - 验证集4)Validation Examples - 验证样本5)Validation Instance - 验证实例6)Validation Phase - 验证阶段7)Validation Accuracy - 验证准确率8)Validation Loss - 验证损失9)Validation Error - 验证错误10)Validation Metrics - 验证指标11)Cross-Validation - 交叉验证12)Holdout Validation - 留出验证13)K-Fold Cross-Validation - K折交叉验证14)Stratified Cross-Validation - 分层交叉验证15)Leave-One-Out Cross-Validation - 留一法交叉验证16)Validation Curve - 验证曲线17)Hyperparameter Validation - 超参数验证18)Model Validation - 模型验证19)Early Stopping - 提前停止20)Validation Strategy - 验证策略•Supervised Learning - 有监督学习1)Supervised Learning - 有监督学习2)Label - 标签3)Feature - 特征4)Target - 目标5)Training Labels - 训练标签6)Training Features - 训练特征7)Training Targets - 训练目标8)Training Examples - 训练样本9)Training Instance - 训练实例10)Regression - 回归11)Classification - 分类12)Predictor - 预测器13)Regression Model - 回归模型14)Classifier - 分类器15)Decision Tree - 决策树16)Support Vector Machine (SVM) - 支持向量机17)Neural Network - 神经网络18)Feature Engineering - 特征工程19)Model Evaluation - 模型评估20)Overfitting - 过拟合21)Underfitting - 欠拟合22)Bias-Variance Tradeoff - 偏差-方差权衡•Unsupervised Learning - 无监督学习1)Unsupervised Learning - 无监督学习2)Clustering - 聚类3)Dimensionality Reduction - 降维4)Anomaly Detection - 异常检测5)Association Rule Learning - 关联规则学习6)Feature Extraction - 特征提取7)Feature Selection - 特征选择8)K-Means - K均值9)Hierarchical Clustering - 层次聚类10)Density-Based Clustering - 基于密度的聚类11)Principal Component Analysis (PCA) - 主成分分析12)Independent Component Analysis (ICA) - 独立成分分析13)T-distributed Stochastic Neighbor Embedding (t-SNE) - t分布随机邻居嵌入14)Gaussian Mixture Model (GMM) - 高斯混合模型15)Self-Organizing Maps (SOM) - 自组织映射16)Autoencoder - 自动编码器17)Latent Variable - 潜变量18)Data Preprocessing - 数据预处理19)Outlier Detection - 异常值检测20)Clustering Algorithm - 聚类算法•Reinforcement Learning - 强化学习1)Reinforcement Learning - 强化学习2)Agent - 代理3)Environment - 环境4)State - 状态5)Action - 动作6)Reward - 奖励7)Policy - 策略8)Value Function - 值函数9)Q-Learning - Q学习10)Deep Q-Network (DQN) - 深度Q网络11)Policy Gradient - 策略梯度12)Actor-Critic - 演员-评论家13)Exploration - 探索14)Exploitation - 开发15)Temporal Difference (TD) - 时间差分16)Markov Decision Process (MDP) - 马尔可夫决策过程17)State-Action-Reward-State-Action (SARSA) - 状态-动作-奖励-状态-动作18)Policy Iteration - 策略迭代19)Value Iteration - 值迭代20)Monte Carlo Methods - 蒙特卡洛方法•Semi-Supervised Learning - 半监督学习1)Semi-Supervised Learning - 半监督学习2)Labeled Data - 有标签数据3)Unlabeled Data - 无标签数据4)Label Propagation - 标签传播5)Self-Training - 自训练6)Co-Training - 协同训练7)Transudative Learning - 传导学习8)Inductive Learning - 归纳学习9)Manifold Regularization - 流形正则化10)Graph-based Methods - 基于图的方法11)Cluster Assumption - 聚类假设12)Low-Density Separation - 低密度分离13)Semi-Supervised Support Vector Machines (S3VM) - 半监督支持向量机14)Expectation-Maximization (EM) - 期望最大化15)Co-EM - 协同期望最大化16)Entropy-Regularized EM - 熵正则化EM17)Mean Teacher - 平均教师18)Virtual Adversarial Training - 虚拟对抗训练19)Tri-training - 三重训练20)Mix Match - 混合匹配•Feature - 特征1)Feature - 特征2)Feature Engineering - 特征工程3)Feature Extraction - 特征提取4)Feature Selection - 特征选择5)Input Features - 输入特征6)Output Features - 输出特征7)Feature Vector - 特征向量8)Feature Space - 特征空间9)Feature Representation - 特征表示10)Feature Transformation - 特征转换11)Feature Importance - 特征重要性12)Feature Scaling - 特征缩放13)Feature Normalization - 特征归一化14)Feature Encoding - 特征编码15)Feature Fusion - 特征融合16)Feature Dimensionality Reduction - 特征维度减少17)Continuous Feature - 连续特征18)Categorical Feature - 分类特征19)Nominal Feature - 名义特征20)Ordinal Feature - 有序特征•Label - 标签1)Label - 标签2)Labeling - 标注3)Ground Truth - 地面真值4)Class Label - 类别标签5)Target Variable - 目标变量6)Labeling Scheme - 标注方案7)Multi-class Labeling - 多类别标注8)Binary Labeling - 二分类标注9)Label Noise - 标签噪声10)Labeling Error - 标注错误11)Label Propagation - 标签传播12)Unlabeled Data - 无标签数据13)Labeled Data - 有标签数据14)Semi-supervised Learning - 半监督学习15)Active Learning - 主动学习16)Weakly Supervised Learning - 弱监督学习17)Noisy Label Learning - 噪声标签学习18)Self-training - 自训练19)Crowdsourcing Labeling - 众包标注20)Label Smoothing - 标签平滑化•Prediction - 预测1)Prediction - 预测2)Forecasting - 预测3)Regression - 回归4)Classification - 分类5)Time Series Prediction - 时间序列预测6)Forecast Accuracy - 预测准确性7)Predictive Modeling - 预测建模8)Predictive Analytics - 预测分析9)Forecasting Method - 预测方法10)Predictive Performance - 预测性能11)Predictive Power - 预测能力12)Prediction Error - 预测误差13)Prediction Interval - 预测区间14)Prediction Model - 预测模型15)Predictive Uncertainty - 预测不确定性16)Forecast Horizon - 预测时间跨度17)Predictive Maintenance - 预测性维护18)Predictive Policing - 预测式警务19)Predictive Healthcare - 预测性医疗20)Predictive Maintenance - 预测性维护•Classification - 分类1)Classification - 分类2)Classifier - 分类器3)Class - 类别4)Classify - 对数据进行分类5)Class Label - 类别标签6)Binary Classification - 二元分类7)Multiclass Classification - 多类分类8)Class Probability - 类别概率9)Decision Boundary - 决策边界10)Decision Tree - 决策树11)Support Vector Machine (SVM) - 支持向量机12)K-Nearest Neighbors (KNN) - K最近邻算法13)Naive Bayes - 朴素贝叶斯14)Logistic Regression - 逻辑回归15)Random Forest - 随机森林16)Neural Network - 神经网络17)SoftMax Function - SoftMax函数18)One-vs-All (One-vs-Rest) - 一对多(一对剩余)19)Ensemble Learning - 集成学习20)Confusion Matrix - 混淆矩阵•Regression - 回归1)Regression Analysis - 回归分析2)Linear Regression - 线性回归3)Multiple Regression - 多元回归4)Polynomial Regression - 多项式回归5)Logistic Regression - 逻辑回归6)Ridge Regression - 岭回归7)Lasso Regression - Lasso回归8)Elastic Net Regression - 弹性网络回归9)Regression Coefficients - 回归系数10)Residuals - 残差11)Ordinary Least Squares (OLS) - 普通最小二乘法12)Ridge Regression Coefficient - 岭回归系数13)Lasso Regression Coefficient - Lasso回归系数14)Elastic Net Regression Coefficient - 弹性网络回归系数15)Regression Line - 回归线16)Prediction Error - 预测误差17)Regression Model - 回归模型18)Nonlinear Regression - 非线性回归19)Generalized Linear Models (GLM) - 广义线性模型20)Coefficient of Determination (R-squared) - 决定系数21)F-test - F检验22)Homoscedasticity - 同方差性23)Heteroscedasticity - 异方差性24)Autocorrelation - 自相关25)Multicollinearity - 多重共线性26)Outliers - 异常值27)Cross-validation - 交叉验证28)Feature Selection - 特征选择29)Feature Engineering - 特征工程30)Regularization - 正则化2.Neural Networks and Deep Learning (神经网络与深度学习)•Convolutional Neural Network (CNN) - 卷积神经网络1)Convolutional Neural Network (CNN) - 卷积神经网络2)Convolution Layer - 卷积层3)Feature Map - 特征图4)Convolution Operation - 卷积操作5)Stride - 步幅6)Padding - 填充7)Pooling Layer - 池化层8)Max Pooling - 最大池化9)Average Pooling - 平均池化10)Fully Connected Layer - 全连接层11)Activation Function - 激活函数12)Rectified Linear Unit (ReLU) - 线性修正单元13)Dropout - 随机失活14)Batch Normalization - 批量归一化15)Transfer Learning - 迁移学习16)Fine-Tuning - 微调17)Image Classification - 图像分类18)Object Detection - 物体检测19)Semantic Segmentation - 语义分割20)Instance Segmentation - 实例分割21)Generative Adversarial Network (GAN) - 生成对抗网络22)Image Generation - 图像生成23)Style Transfer - 风格迁移24)Convolutional Autoencoder - 卷积自编码器25)Recurrent Neural Network (RNN) - 循环神经网络•Recurrent Neural Network (RNN) - 循环神经网络1)Recurrent Neural Network (RNN) - 循环神经网络2)Long Short-Term Memory (LSTM) - 长短期记忆网络3)Gated Recurrent Unit (GRU) - 门控循环单元4)Sequence Modeling - 序列建模5)Time Series Prediction - 时间序列预测6)Natural Language Processing (NLP) - 自然语言处理7)Text Generation - 文本生成8)Sentiment Analysis - 情感分析9)Named Entity Recognition (NER) - 命名实体识别10)Part-of-Speech Tagging (POS Tagging) - 词性标注11)Sequence-to-Sequence (Seq2Seq) - 序列到序列12)Attention Mechanism - 注意力机制13)Encoder-Decoder Architecture - 编码器-解码器架构14)Bidirectional RNN - 双向循环神经网络15)Teacher Forcing - 强制教师法16)Backpropagation Through Time (BPTT) - 通过时间的反向传播17)Vanishing Gradient Problem - 梯度消失问题18)Exploding Gradient Problem - 梯度爆炸问题19)Language Modeling - 语言建模20)Speech Recognition - 语音识别•Long Short-Term Memory (LSTM) - 长短期记忆网络1)Long Short-Term Memory (LSTM) - 长短期记忆网络2)Cell State - 细胞状态3)Hidden State - 隐藏状态4)Forget Gate - 遗忘门5)Input Gate - 输入门6)Output Gate - 输出门7)Peephole Connections - 窥视孔连接8)Gated Recurrent Unit (GRU) - 门控循环单元9)Vanishing Gradient Problem - 梯度消失问题10)Exploding Gradient Problem - 梯度爆炸问题11)Sequence Modeling - 序列建模12)Time Series Prediction - 时间序列预测13)Natural Language Processing (NLP) - 自然语言处理14)Text Generation - 文本生成15)Sentiment Analysis - 情感分析16)Named Entity Recognition (NER) - 命名实体识别17)Part-of-Speech Tagging (POS Tagging) - 词性标注18)Attention Mechanism - 注意力机制19)Encoder-Decoder Architecture - 编码器-解码器架构20)Bidirectional LSTM - 双向长短期记忆网络•Attention Mechanism - 注意力机制1)Attention Mechanism - 注意力机制2)Self-Attention - 自注意力3)Multi-Head Attention - 多头注意力4)Transformer - 变换器5)Query - 查询6)Key - 键7)Value - 值8)Query-Value Attention - 查询-值注意力9)Dot-Product Attention - 点积注意力10)Scaled Dot-Product Attention - 缩放点积注意力11)Additive Attention - 加性注意力12)Context Vector - 上下文向量13)Attention Score - 注意力分数14)SoftMax Function - SoftMax函数15)Attention Weight - 注意力权重16)Global Attention - 全局注意力17)Local Attention - 局部注意力18)Positional Encoding - 位置编码19)Encoder-Decoder Attention - 编码器-解码器注意力20)Cross-Modal Attention - 跨模态注意力•Generative Adversarial Network (GAN) - 生成对抗网络1)Generative Adversarial Network (GAN) - 生成对抗网络2)Generator - 生成器3)Discriminator - 判别器4)Adversarial Training - 对抗训练5)Minimax Game - 极小极大博弈6)Nash Equilibrium - 纳什均衡7)Mode Collapse - 模式崩溃8)Training Stability - 训练稳定性9)Loss Function - 损失函数10)Discriminative Loss - 判别损失11)Generative Loss - 生成损失12)Wasserstein GAN (WGAN) - Wasserstein GAN(WGAN)13)Deep Convolutional GAN (DCGAN) - 深度卷积生成对抗网络(DCGAN)14)Conditional GAN (c GAN) - 条件生成对抗网络(c GAN)15)Style GAN - 风格生成对抗网络16)Cycle GAN - 循环生成对抗网络17)Progressive Growing GAN (PGGAN) - 渐进式增长生成对抗网络(PGGAN)18)Self-Attention GAN (SAGAN) - 自注意力生成对抗网络(SAGAN)19)Big GAN - 大规模生成对抗网络20)Adversarial Examples - 对抗样本•Encoder-Decoder - 编码器-解码器1)Encoder-Decoder Architecture - 编码器-解码器架构2)Encoder - 编码器3)Decoder - 解码器4)Sequence-to-Sequence Model (Seq2Seq) - 序列到序列模型5)State Vector - 状态向量6)Context Vector - 上下文向量7)Hidden State - 隐藏状态8)Attention Mechanism - 注意力机制9)Teacher Forcing - 强制教师法10)Beam Search - 束搜索11)Recurrent Neural Network (RNN) - 循环神经网络12)Long Short-Term Memory (LSTM) - 长短期记忆网络13)Gated Recurrent Unit (GRU) - 门控循环单元14)Bidirectional Encoder - 双向编码器15)Greedy Decoding - 贪婪解码16)Masking - 遮盖17)Dropout - 随机失活18)Embedding Layer - 嵌入层19)Cross-Entropy Loss - 交叉熵损失20)Tokenization - 令牌化•Transfer Learning - 迁移学习1)Transfer Learning - 迁移学习2)Source Domain - 源领域3)Target Domain - 目标领域4)Fine-Tuning - 微调5)Domain Adaptation - 领域自适应6)Pre-Trained Model - 预训练模型7)Feature Extraction - 特征提取8)Knowledge Transfer - 知识迁移9)Unsupervised Domain Adaptation - 无监督领域自适应10)Semi-Supervised Domain Adaptation - 半监督领域自适应11)Multi-Task Learning - 多任务学习12)Data Augmentation - 数据增强13)Task Transfer - 任务迁移14)Model Agnostic Meta-Learning (MAML) - 与模型无关的元学习(MAML)15)One-Shot Learning - 单样本学习16)Zero-Shot Learning - 零样本学习17)Few-Shot Learning - 少样本学习18)Knowledge Distillation - 知识蒸馏19)Representation Learning - 表征学习20)Adversarial Transfer Learning - 对抗迁移学习•Pre-trained Models - 预训练模型1)Pre-trained Model - 预训练模型2)Transfer Learning - 迁移学习3)Fine-Tuning - 微调4)Knowledge Transfer - 知识迁移5)Domain Adaptation - 领域自适应6)Feature Extraction - 特征提取7)Representation Learning - 表征学习8)Language Model - 语言模型9)Bidirectional Encoder Representations from Transformers (BERT) - 双向编码器结构转换器10)Generative Pre-trained Transformer (GPT) - 生成式预训练转换器11)Transformer-based Models - 基于转换器的模型12)Masked Language Model (MLM) - 掩蔽语言模型13)Cloze Task - 填空任务14)Tokenization - 令牌化15)Word Embeddings - 词嵌入16)Sentence Embeddings - 句子嵌入17)Contextual Embeddings - 上下文嵌入18)Self-Supervised Learning - 自监督学习19)Large-Scale Pre-trained Models - 大规模预训练模型•Loss Function - 损失函数1)Loss Function - 损失函数2)Mean Squared Error (MSE) - 均方误差3)Mean Absolute Error (MAE) - 平均绝对误差4)Cross-Entropy Loss - 交叉熵损失5)Binary Cross-Entropy Loss - 二元交叉熵损失6)Categorical Cross-Entropy Loss - 分类交叉熵损失7)Hinge Loss - 合页损失8)Huber Loss - Huber损失9)Wasserstein Distance - Wasserstein距离10)Triplet Loss - 三元组损失11)Contrastive Loss - 对比损失12)Dice Loss - Dice损失13)Focal Loss - 焦点损失14)GAN Loss - GAN损失15)Adversarial Loss - 对抗损失16)L1 Loss - L1损失17)L2 Loss - L2损失18)Huber Loss - Huber损失19)Quantile Loss - 分位数损失•Activation Function - 激活函数1)Activation Function - 激活函数2)Sigmoid Function - Sigmoid函数3)Hyperbolic Tangent Function (Tanh) - 双曲正切函数4)Rectified Linear Unit (Re LU) - 矩形线性单元5)Parametric Re LU (P Re LU) - 参数化Re LU6)Exponential Linear Unit (ELU) - 指数线性单元7)Swish Function - Swish函数8)Softplus Function - Soft plus函数9)Softmax Function - SoftMax函数10)Hard Tanh Function - 硬双曲正切函数11)Softsign Function - Softsign函数12)GELU (Gaussian Error Linear Unit) - GELU(高斯误差线性单元)13)Mish Function - Mish函数14)CELU (Continuous Exponential Linear Unit) - CELU(连续指数线性单元)15)Bent Identity Function - 弯曲恒等函数16)Gaussian Error Linear Units (GELUs) - 高斯误差线性单元17)Adaptive Piecewise Linear (APL) - 自适应分段线性函数18)Radial Basis Function (RBF) - 径向基函数•Backpropagation - 反向传播1)Backpropagation - 反向传播2)Gradient Descent - 梯度下降3)Partial Derivative - 偏导数4)Chain Rule - 链式法则5)Forward Pass - 前向传播6)Backward Pass - 反向传播7)Computational Graph - 计算图8)Neural Network - 神经网络9)Loss Function - 损失函数10)Gradient Calculation - 梯度计算11)Weight Update - 权重更新12)Activation Function - 激活函数13)Optimizer - 优化器14)Learning Rate - 学习率15)Mini-Batch Gradient Descent - 小批量梯度下降16)Stochastic Gradient Descent (SGD) - 随机梯度下降17)Batch Gradient Descent - 批量梯度下降18)Momentum - 动量19)Adam Optimizer - Adam优化器20)Learning Rate Decay - 学习率衰减•Gradient Descent - 梯度下降1)Gradient Descent - 梯度下降2)Stochastic Gradient Descent (SGD) - 随机梯度下降3)Mini-Batch Gradient Descent - 小批量梯度下降4)Batch Gradient Descent - 批量梯度下降5)Learning Rate - 学习率6)Momentum - 动量7)Adaptive Moment Estimation (Adam) - 自适应矩估计8)RMSprop - 均方根传播9)Learning Rate Schedule - 学习率调度10)Convergence - 收敛11)Divergence - 发散12)Adagrad - 自适应学习速率方法13)Adadelta - 自适应增量学习率方法14)Adamax - 自适应矩估计的扩展版本15)Nadam - Nesterov Accelerated Adaptive Moment Estimation16)Learning Rate Decay - 学习率衰减17)Step Size - 步长18)Conjugate Gradient Descent - 共轭梯度下降19)Line Search - 线搜索20)Newton's Method - 牛顿法•Learning Rate - 学习率1)Learning Rate - 学习率2)Adaptive Learning Rate - 自适应学习率3)Learning Rate Decay - 学习率衰减4)Initial Learning Rate - 初始学习率5)Step Size - 步长6)Momentum - 动量7)Exponential Decay - 指数衰减8)Annealing - 退火9)Cyclical Learning Rate - 循环学习率10)Learning Rate Schedule - 学习率调度11)Warm-up - 预热12)Learning Rate Policy - 学习率策略13)Learning Rate Annealing - 学习率退火14)Cosine Annealing - 余弦退火15)Gradient Clipping - 梯度裁剪16)Adapting Learning Rate - 适应学习率17)Learning Rate Multiplier - 学习率倍增器18)Learning Rate Reduction - 学习率降低19)Learning Rate Update - 学习率更新20)Scheduled Learning Rate - 定期学习率•Batch Size - 批量大小1)Batch Size - 批量大小2)Mini-Batch - 小批量3)Batch Gradient Descent - 批量梯度下降4)Stochastic Gradient Descent (SGD) - 随机梯度下降5)Mini-Batch Gradient Descent - 小批量梯度下降6)Online Learning - 在线学习7)Full-Batch - 全批量8)Data Batch - 数据批次9)Training Batch - 训练批次10)Batch Normalization - 批量归一化11)Batch-wise Optimization - 批量优化12)Batch Processing - 批量处理13)Batch Sampling - 批量采样14)Adaptive Batch Size - 自适应批量大小15)Batch Splitting - 批量分割16)Dynamic Batch Size - 动态批量大小17)Fixed Batch Size - 固定批量大小18)Batch-wise Inference - 批量推理19)Batch-wise Training - 批量训练20)Batch Shuffling - 批量洗牌•Epoch - 训练周期1)Training Epoch - 训练周期2)Epoch Size - 周期大小3)Early Stopping - 提前停止4)Validation Set - 验证集5)Training Set - 训练集6)Test Set - 测试集7)Overfitting - 过拟合8)Underfitting - 欠拟合9)Model Evaluation - 模型评估10)Model Selection - 模型选择11)Hyperparameter Tuning - 超参数调优12)Cross-Validation - 交叉验证13)K-fold Cross-Validation - K折交叉验证14)Stratified Cross-Validation - 分层交叉验证15)Leave-One-Out Cross-Validation (LOOCV) - 留一法交叉验证16)Grid Search - 网格搜索17)Random Search - 随机搜索18)Model Complexity - 模型复杂度19)Learning Curve - 学习曲线20)Convergence - 收敛3.Machine Learning Techniques and Algorithms (机器学习技术与算法)•Decision Tree - 决策树1)Decision Tree - 决策树2)Node - 节点3)Root Node - 根节点4)Leaf Node - 叶节点5)Internal Node - 内部节点6)Splitting Criterion - 分裂准则7)Gini Impurity - 基尼不纯度8)Entropy - 熵9)Information Gain - 信息增益10)Gain Ratio - 增益率11)Pruning - 剪枝12)Recursive Partitioning - 递归分割13)CART (Classification and Regression Trees) - 分类回归树14)ID3 (Iterative Dichotomiser 3) - 迭代二叉树315)C4.5 (successor of ID3) - C4.5(ID3的后继者)16)C5.0 (successor of C4.5) - C5.0(C4.5的后继者)17)Split Point - 分裂点18)Decision Boundary - 决策边界19)Pruned Tree - 剪枝后的树20)Decision Tree Ensemble - 决策树集成•Random Forest - 随机森林1)Random Forest - 随机森林2)Ensemble Learning - 集成学习3)Bootstrap Sampling - 自助采样4)Bagging (Bootstrap Aggregating) - 装袋法5)Out-of-Bag (OOB) Error - 袋外误差6)Feature Subset - 特征子集7)Decision Tree - 决策树8)Base Estimator - 基础估计器9)Tree Depth - 树深度10)Randomization - 随机化11)Majority Voting - 多数投票12)Feature Importance - 特征重要性13)OOB Score - 袋外得分14)Forest Size - 森林大小15)Max Features - 最大特征数16)Min Samples Split - 最小分裂样本数17)Min Samples Leaf - 最小叶节点样本数18)Gini Impurity - 基尼不纯度19)Entropy - 熵20)Variable Importance - 变量重要性•Support Vector Machine (SVM) - 支持向量机1)Support Vector Machine (SVM) - 支持向量机2)Hyperplane - 超平面3)Kernel Trick - 核技巧4)Kernel Function - 核函数5)Margin - 间隔6)Support Vectors - 支持向量7)Decision Boundary - 决策边界8)Maximum Margin Classifier - 最大间隔分类器9)Soft Margin Classifier - 软间隔分类器10) C Parameter - C参数11)Radial Basis Function (RBF) Kernel - 径向基函数核12)Polynomial Kernel - 多项式核13)Linear Kernel - 线性核14)Quadratic Kernel - 二次核15)Gaussian Kernel - 高斯核16)Regularization - 正则化17)Dual Problem - 对偶问题18)Primal Problem - 原始问题19)Kernelized SVM - 核化支持向量机20)Multiclass SVM - 多类支持向量机•K-Nearest Neighbors (KNN) - K-最近邻1)K-Nearest Neighbors (KNN) - K-最近邻2)Nearest Neighbor - 最近邻3)Distance Metric - 距离度量4)Euclidean Distance - 欧氏距离5)Manhattan Distance - 曼哈顿距离6)Minkowski Distance - 闵可夫斯基距离7)Cosine Similarity - 余弦相似度8)K Value - K值9)Majority Voting - 多数投票10)Weighted KNN - 加权KNN11)Radius Neighbors - 半径邻居12)Ball Tree - 球树13)KD Tree - KD树14)Locality-Sensitive Hashing (LSH) - 局部敏感哈希15)Curse of Dimensionality - 维度灾难16)Class Label - 类标签17)Training Set - 训练集18)Test Set - 测试集19)Validation Set - 验证集20)Cross-Validation - 交叉验证•Naive Bayes - 朴素贝叶斯1)Naive Bayes - 朴素贝叶斯2)Bayes' Theorem - 贝叶斯定理3)Prior Probability - 先验概率4)Posterior Probability - 后验概率5)Likelihood - 似然6)Class Conditional Probability - 类条件概率7)Feature Independence Assumption - 特征独立假设8)Multinomial Naive Bayes - 多项式朴素贝叶斯9)Gaussian Naive Bayes - 高斯朴素贝叶斯10)Bernoulli Naive Bayes - 伯努利朴素贝叶斯11)Laplace Smoothing - 拉普拉斯平滑12)Add-One Smoothing - 加一平滑13)Maximum A Posteriori (MAP) - 最大后验概率14)Maximum Likelihood Estimation (MLE) - 最大似然估计15)Classification - 分类16)Feature Vectors - 特征向量17)Training Set - 训练集18)Test Set - 测试集19)Class Label - 类标签20)Confusion Matrix - 混淆矩阵•Clustering - 聚类1)Clustering - 聚类2)Centroid - 质心3)Cluster Analysis - 聚类分析4)Partitioning Clustering - 划分式聚类5)Hierarchical Clustering - 层次聚类6)Density-Based Clustering - 基于密度的聚类7)K-Means Clustering - K均值聚类8)K-Medoids Clustering - K中心点聚类9)DBSCAN (Density-Based Spatial Clustering of Applications with Noise) - 基于密度的空间聚类算法10)Agglomerative Clustering - 聚合式聚类11)Dendrogram - 系统树图12)Silhouette Score - 轮廓系数13)Elbow Method - 肘部法则14)Clustering Validation - 聚类验证15)Intra-cluster Distance - 类内距离16)Inter-cluster Distance - 类间距离17)Cluster Cohesion - 类内连贯性18)Cluster Separation - 类间分离度19)Cluster Assignment - 聚类分配20)Cluster Label - 聚类标签•K-Means - K-均值1)K-Means - K-均值2)Centroid - 质心3)Cluster - 聚类4)Cluster Center - 聚类中心5)Cluster Assignment - 聚类分配6)Cluster Analysis - 聚类分析7)K Value - K值8)Elbow Method - 肘部法则9)Inertia - 惯性10)Silhouette Score - 轮廓系数11)Convergence - 收敛12)Initialization - 初始化13)Euclidean Distance - 欧氏距离14)Manhattan Distance - 曼哈顿距离15)Distance Metric - 距离度量16)Cluster Radius - 聚类半径17)Within-Cluster Variation - 类内变异18)Cluster Quality - 聚类质量19)Clustering Algorithm - 聚类算法20)Clustering Validation - 聚类验证•Dimensionality Reduction - 降维1)Dimensionality Reduction - 降维2)Feature Extraction - 特征提取3)Feature Selection - 特征选择4)Principal Component Analysis (PCA) - 主成分分析5)Singular Value Decomposition (SVD) - 奇异值分解6)Linear Discriminant Analysis (LDA) - 线性判别分析7)t-Distributed Stochastic Neighbor Embedding (t-SNE) - t-分布随机邻域嵌入8)Autoencoder - 自编码器9)Manifold Learning - 流形学习10)Locally Linear Embedding (LLE) - 局部线性嵌入11)Isomap - 等度量映射12)Uniform Manifold Approximation and Projection (UMAP) - 均匀流形逼近与投影13)Kernel PCA - 核主成分分析14)Non-negative Matrix Factorization (NMF) - 非负矩阵分解15)Independent Component Analysis (ICA) - 独立成分分析16)Variational Autoencoder (VAE) - 变分自编码器17)Sparse Coding - 稀疏编码18)Random Projection - 随机投影19)Neighborhood Preserving Embedding (NPE) - 保持邻域结构的嵌入20)Curvilinear Component Analysis (CCA) - 曲线成分分析•Principal Component Analysis (PCA) - 主成分分析1)Principal Component Analysis (PCA) - 主成分分析2)Eigenvector - 特征向量3)Eigenvalue - 特征值4)Covariance Matrix - 协方差矩阵。

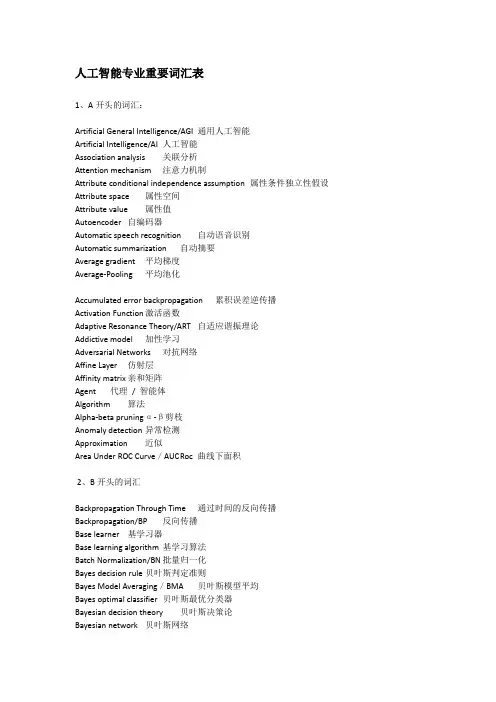

人工智能专业重要词汇表1、A开头的词汇:Artificial General Intelligence/AGI通用人工智能Artificial Intelligence/AI人工智能Association analysis关联分析Attention mechanism注意力机制Attribute conditional independence assumption属性条件独立性假设Attribute space属性空间Attribute value属性值Autoencoder自编码器Automatic speech recognition自动语音识别Automatic summarization自动摘要Average gradient平均梯度Average-Pooling平均池化Accumulated error backpropagation累积误差逆传播Activation Function激活函数Adaptive Resonance Theory/ART自适应谐振理论Addictive model加性学习Adversarial Networks对抗网络Affine Layer仿射层Affinity matrix亲和矩阵Agent代理/ 智能体Algorithm算法Alpha-beta pruningα-β剪枝Anomaly detection异常检测Approximation近似Area Under ROC Curve/AUC R oc 曲线下面积2、B开头的词汇Backpropagation Through Time通过时间的反向传播Backpropagation/BP反向传播Base learner基学习器Base learning algorithm基学习算法Batch Normalization/BN批量归一化Bayes decision rule贝叶斯判定准则Bayes Model Averaging/BMA贝叶斯模型平均Bayes optimal classifier贝叶斯最优分类器Bayesian decision theory贝叶斯决策论Bayesian network贝叶斯网络Between-class scatter matrix类间散度矩阵Bias偏置/ 偏差Bias-variance decomposition偏差-方差分解Bias-Variance Dilemma偏差–方差困境Bi-directional Long-Short Term Memory/Bi-LSTM双向长短期记忆Binary classification二分类Binomial test二项检验Bi-partition二分法Boltzmann machine玻尔兹曼机Bootstrap sampling自助采样法/可重复采样/有放回采样Bootstrapping自助法Break-Event Point/BEP平衡点3、C开头的词汇Calibration校准Cascade-Correlation级联相关Categorical attribute离散属性Class-conditional probability类条件概率Classification and regression tree/CART分类与回归树Classifier分类器Class-imbalance类别不平衡Closed -form闭式Cluster簇/类/集群Cluster analysis聚类分析Clustering聚类Clustering ensemble聚类集成Co-adapting共适应Coding matrix编码矩阵COLT国际学习理论会议Committee-based learning基于委员会的学习Competitive learning竞争型学习Component learner组件学习器Comprehensibility可解释性Computation Cost计算成本Computational Linguistics计算语言学Computer vision计算机视觉Concept drift概念漂移Concept Learning System /CLS概念学习系统Conditional entropy条件熵Conditional mutual information条件互信息Conditional Probability Table/CPT条件概率表Conditional random field/CRF条件随机场Conditional risk条件风险Confidence置信度Confusion matrix混淆矩阵Connection weight连接权Connectionism连结主义Consistency一致性/相合性Contingency table列联表Continuous attribute连续属性Convergence收敛Conversational agent会话智能体Convex quadratic programming凸二次规划Convexity凸性Convolutional neural network/CNN卷积神经网络Co-occurrence同现Correlation coefficient相关系数Cosine similarity余弦相似度Cost curve成本曲线Cost Function成本函数Cost matrix成本矩阵Cost-sensitive成本敏感Cross entropy交叉熵Cross validation交叉验证Crowdsourcing众包Curse of dimensionality维数灾难Cut point截断点Cutting plane algorithm割平面法4、D开头的词汇Data mining数据挖掘Data set数据集Decision Boundary决策边界Decision stump决策树桩Decision tree决策树/判定树Deduction演绎Deep Belief Network深度信念网络Deep Convolutional Generative Adversarial Network/DCGAN深度卷积生成对抗网络Deep learning深度学习Deep neural network/DNN深度神经网络Deep Q-Learning深度Q 学习Deep Q-Network深度Q 网络Density estimation密度估计Density-based clustering密度聚类Differentiable neural computer可微分神经计算机Dimensionality reduction algorithm降维算法Directed edge有向边Disagreement measure不合度量Discriminative model判别模型Discriminator判别器Distance measure距离度量Distance metric learning距离度量学习Distribution分布Divergence散度Diversity measure多样性度量/差异性度量Domain adaption领域自适应Downsampling下采样D-separation (Directed separation)有向分离Dual problem对偶问题Dummy node哑结点Dynamic Fusion动态融合Dynamic programming动态规划5、E开头的词汇Eigenvalue decomposition特征值分解Embedding嵌入Emotional analysis情绪分析Empirical conditional entropy经验条件熵Empirical entropy经验熵Empirical error经验误差Empirical risk经验风险End-to-End端到端Energy-based model基于能量的模型Ensemble learning集成学习Ensemble pruning集成修剪Error Correcting Output Codes/ECOC纠错输出码Error rate错误率Error-ambiguity decomposition误差-分歧分解Euclidean distance欧氏距离Evolutionary computation演化计算Expectation-Maximization期望最大化Expected loss期望损失Exploding Gradient Problem梯度爆炸问题Exponential loss function指数损失函数Extreme Learning Machine/ELM超限学习机6、F开头的词汇Factorization因子分解False negative假负类False positive假正类False Positive Rate/FPR假正例率Feature engineering特征工程Feature selection特征选择Feature vector特征向量Featured Learning特征学习Feedforward Neural Networks/FNN前馈神经网络Fine-tuning微调Flipping output翻转法Fluctuation震荡Forward stagewise algorithm前向分步算法Frequentist频率主义学派Full-rank matrix满秩矩阵Functional neuron功能神经元7、G开头的词汇Gain ratio增益率Game theory博弈论Gaussian kernel function高斯核函数Gaussian Mixture Model高斯混合模型General Problem Solving通用问题求解Generalization泛化Generalization error泛化误差Generalization error bound泛化误差上界Generalized Lagrange function广义拉格朗日函数Generalized linear model广义线性模型Generalized Rayleigh quotient广义瑞利商Generative Adversarial Networks/GAN生成对抗网络Generative Model生成模型Generator生成器Genetic Algorithm/GA遗传算法Gibbs sampling吉布斯采样Gini index基尼指数Global minimum全局最小Global Optimization全局优化Gradient boosting梯度提升Gradient Descent梯度下降Graph theory图论Ground-truth真相/真实8、H开头的词汇Hard margin硬间隔Hard voting硬投票Harmonic mean调和平均Hesse matrix海塞矩阵Hidden dynamic model隐动态模型Hidden layer隐藏层Hidden Markov Model/HMM隐马尔可夫模型Hierarchical clustering层次聚类Hilbert space希尔伯特空间Hinge loss function合页损失函数Hold-out留出法Homogeneous同质Hybrid computing混合计算Hyperparameter超参数Hypothesis假设Hypothesis test假设验证9、I开头的词汇ICML国际机器学习会议Improved iterative scaling/IIS改进的迭代尺度法Incremental learning增量学习Independent and identically distributed/i.i.d.独立同分布Independent Component Analysis/ICA独立成分分析Indicator function指示函数Individual learner个体学习器Induction归纳Inductive bias归纳偏好Inductive learning归纳学习Inductive Logic Programming/ILP归纳逻辑程序设计Information entropy信息熵Information gain信息增益Input layer输入层Insensitive loss不敏感损失Inter-cluster similarity簇间相似度International Conference for Machine Learning/ICML国际机器学习大会Intra-cluster similarity簇内相似度Intrinsic value固有值Isometric Mapping/Isomap等度量映射Isotonic regression等分回归Iterative Dichotomiser迭代二分器10、K开头的词汇Kernel method核方法Kernel trick核技巧Kernelized Linear Discriminant Analysis/KLDA核线性判别分析K-fold cross validation k 折交叉验证/k 倍交叉验证K-Means Clustering K –均值聚类K-Nearest Neighbours Algorithm/KNN K近邻算法Knowledge base知识库Knowledge Representation知识表征11、L开头的词汇Label space标记空间Lagrange duality拉格朗日对偶性Lagrange multiplier拉格朗日乘子Laplace smoothing拉普拉斯平滑Laplacian correction拉普拉斯修正Latent Dirichlet Allocation隐狄利克雷分布Latent semantic analysis潜在语义分析Latent variable隐变量Lazy learning懒惰学习Learner学习器Learning by analogy类比学习Learning rate学习率Learning Vector Quantization/LVQ学习向量量化Least squares regression tree最小二乘回归树Leave-One-Out/LOO留一法linear chain conditional random field线性链条件随机场Linear Discriminant Analysis/LDA线性判别分析Linear model线性模型Linear Regression线性回归Link function联系函数Local Markov property局部马尔可夫性Local minimum局部最小Log likelihood对数似然Log odds/logit对数几率Logistic Regression Logistic 回归Log-likelihood对数似然Log-linear regression对数线性回归Long-Short Term Memory/LSTM长短期记忆Loss function损失函数12、M开头的词汇Machine translation/MT机器翻译Macron-P宏查准率Macron-R宏查全率Majority voting绝对多数投票法Manifold assumption流形假设Manifold learning流形学习Margin theory间隔理论Marginal distribution边际分布Marginal independence边际独立性Marginalization边际化Markov Chain Monte Carlo/MCMC马尔可夫链蒙特卡罗方法Markov Random Field马尔可夫随机场Maximal clique最大团Maximum Likelihood Estimation/MLE极大似然估计/极大似然法Maximum margin最大间隔Maximum weighted spanning tree最大带权生成树Max-Pooling最大池化Mean squared error均方误差Meta-learner元学习器Metric learning度量学习Micro-P微查准率Micro-R微查全率Minimal Description Length/MDL最小描述长度Minimax game极小极大博弈Misclassification cost误分类成本Mixture of experts混合专家Momentum动量Moral graph道德图/端正图Multi-class classification多分类Multi-document summarization多文档摘要Multi-layer feedforward neural networks多层前馈神经网络Multilayer Perceptron/MLP多层感知器Multimodal learning多模态学习Multiple Dimensional Scaling多维缩放Multiple linear regression多元线性回归Multi-response Linear Regression /MLR多响应线性回归Mutual information互信息13、N开头的词汇Naive bayes朴素贝叶斯Naive Bayes Classifier朴素贝叶斯分类器Named entity recognition命名实体识别Nash equilibrium纳什均衡Natural language generation/NLG自然语言生成Natural language processing自然语言处理Negative class负类Negative correlation负相关法Negative Log Likelihood负对数似然Neighbourhood Component Analysis/NCA近邻成分分析Neural Machine Translation神经机器翻译Neural Turing Machine神经图灵机Newton method牛顿法NIPS国际神经信息处理系统会议No Free Lunch Theorem/NFL没有免费的午餐定理Noise-contrastive estimation噪音对比估计Nominal attribute列名属性Non-convex optimization非凸优化Nonlinear model非线性模型Non-metric distance非度量距离Non-negative matrix factorization非负矩阵分解Non-ordinal attribute无序属性Non-Saturating Game非饱和博弈Norm范数Normalization归一化Nuclear norm核范数Numerical attribute数值属性14、O开头的词汇Objective function目标函数Oblique decision tree斜决策树Occam’s razor奥卡姆剃刀Odds几率Off-Policy离策略One shot learning一次性学习One-Dependent Estimator/ODE独依赖估计On-Policy在策略Ordinal attribute有序属性Out-of-bag estimate包外估计Output layer输出层Output smearing输出调制法Overfitting过拟合/过配Oversampling过采样15、P开头的词汇Paired t-test成对t 检验Pairwise成对型Pairwise Markov property成对马尔可夫性Parameter参数Parameter estimation参数估计Parameter tuning调参Parse tree解析树Particle Swarm Optimization/PSO粒子群优化算法Part-of-speech tagging词性标注Perceptron感知机Performance measure性能度量Plug and Play Generative Network即插即用生成网络Plurality voting相对多数投票法Polarity detection极性检测Polynomial kernel function多项式核函数Pooling池化Positive class正类Positive definite matrix正定矩阵Post-hoc test后续检验Post-pruning后剪枝potential function势函数Precision查准率/准确率Prepruning预剪枝Principal component analysis/PCA主成分分析Principle of multiple explanations多释原则Prior先验Probability Graphical Model概率图模型Proximal Gradient Descent/PGD近端梯度下降Pruning剪枝Pseudo-label伪标记16、Q开头的词汇Quantized Neural Network量子化神经网络Quantum computer量子计算机Quantum Computing量子计算Quasi Newton method拟牛顿法17、R开头的词汇Radial Basis Function/RBF径向基函数Random Forest Algorithm随机森林算法Random walk随机漫步Recall查全率/召回率Receiver Operating Characteristic/ROC受试者工作特征Rectified Linear Unit/ReLU线性修正单元Recurrent Neural Network循环神经网络Recursive neural network递归神经网络Reference model参考模型Regression回归Regularization正则化Reinforcement learning/RL强化学习Representation learning表征学习Representer theorem表示定理reproducing kernel Hilbert space/RKHS再生核希尔伯特空间Re-sampling重采样法Rescaling再缩放Residual Mapping残差映射Residual Network残差网络Restricted Boltzmann Machine/RBM受限玻尔兹曼机Restricted Isometry Property/RIP限定等距性Re-weighting重赋权法Robustness稳健性/鲁棒性Root node根结点Rule Engine规则引擎Rule learning规则学习18、S开头的词汇Saddle point鞍点Sample space样本空间Sampling采样Score function评分函数Self-Driving自动驾驶Self-Organizing Map/SOM自组织映射Semi-naive Bayes classifiers半朴素贝叶斯分类器Semi-Supervised Learning半监督学习semi-Supervised Support Vector Machine半监督支持向量机Sentiment analysis情感分析Separating hyperplane分离超平面Sigmoid function Sigmoid 函数Similarity measure相似度度量Simulated annealing模拟退火Simultaneous localization and mapping同步定位与地图构建Singular Value Decomposition奇异值分解Slack variables松弛变量Smoothing平滑Soft margin软间隔Soft margin maximization软间隔最大化Soft voting软投票Sparse representation稀疏表征Sparsity稀疏性Specialization特化Spectral Clustering谱聚类Speech Recognition语音识别Splitting variable切分变量Squashing function挤压函数Stability-plasticity dilemma可塑性-稳定性困境Statistical learning统计学习Status feature function状态特征函Stochastic gradient descent随机梯度下降Stratified sampling分层采样Structural risk结构风险Structural risk minimization/SRM结构风险最小化Subspace子空间Supervised learning监督学习/有导师学习support vector expansion支持向量展式Support Vector Machine/SVM支持向量机Surrogat loss替代损失Surrogate function替代函数Symbolic learning符号学习Symbolism符号主义Synset同义词集19、T开头的词汇T-Distribution Stochastic Neighbour Embedding/t-SNE T–分布随机近邻嵌入Tensor张量Tensor Processing Units/TPU张量处理单元The least square method最小二乘法Threshold阈值Threshold logic unit阈值逻辑单元Threshold-moving阈值移动Time Step时间步骤Tokenization标记化Training error训练误差Training instance训练示例/训练例Transductive learning直推学习Transfer learning迁移学习Treebank树库Tria-by-error试错法True negative真负类True positive真正类True Positive Rate/TPR真正例率Turing Machine图灵机Twice-learning二次学习20、U开头的词汇Underfitting欠拟合/欠配Undersampling欠采样Understandability可理解性Unequal cost非均等代价Unit-step function单位阶跃函数Univariate decision tree单变量决策树Unsupervised learning无监督学习/无导师学习Unsupervised layer-wise training无监督逐层训练Upsampling上采样21、V开头的词汇Vanishing Gradient Problem梯度消失问题Variational inference变分推断VC Theory VC维理论Version space版本空间Viterbi algorithm维特比算法Von Neumann architecture冯·诺伊曼架构22、W开头的词汇Wasserstein GAN/WGAN Wasserstein生成对抗网络Weak learner弱学习器Weight权重Weight sharing权共享Weighted voting加权投票法Within-class scatter matrix类内散度矩阵Word embedding词嵌入Word sense disambiguation词义消歧23、Z开头的词汇Zero-data learning零数据学习Zero-shot learning零次学习。