数据结构与算法分析 第2章

- 格式:ppt

- 大小:363.50 KB

- 文档页数:32

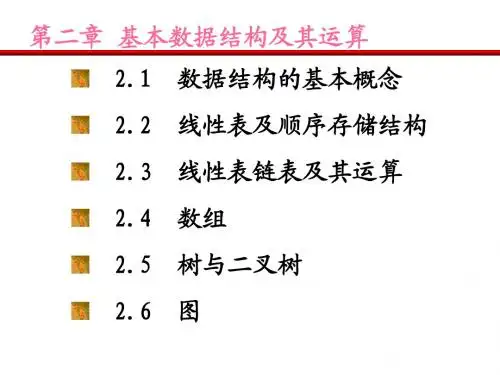

数据结构的课程设计目的一、课程目标知识目标:1. 掌握数据结构的基本概念,包括线性结构(如数组、链表、栈、队列)和非线性结构(如树、图等)的特点与应用。

2. 学会分析不同数据结构在存储和处理数据时的效率,理解时间复杂度和空间复杂度的概念。

3. 掌握常见数据结构的具体实现方法,并能够运用到实际编程中。

技能目标:1. 培养学生运用数据结构解决实际问题的能力,能够根据问题的特点选择合适的数据结构进行优化。

2. 提高学生的编程实践能力,使其能够熟练编写与数据结构相关的程序代码,并进行调试与优化。

3. 培养学生独立思考和团队协作的能力,通过项目实践和课堂讨论,提高问题分析、解决方案的设计与实现能力。

情感态度价值观目标:1. 培养学生对数据结构学科的兴趣,激发学生的学习热情和主动探究精神。

2. 培养学生严谨、细致、踏实的学术态度,使其认识到数据结构在计算机科学与软件开发领域的重要性。

3. 培养学生具备良好的团队合作精神,学会倾听、沟通、表达与协作,提高人际交往能力。

课程性质:本课程为计算机科学与技术及相关专业的基础课程,旨在培养学生的数据结构知识和编程技能,提高学生解决实际问题的能力。

学生特点:学生已具备一定的编程基础,具有一定的逻辑思维能力和问题解决能力,但可能对数据结构的应用和实现方法了解不足。

教学要求:结合学生特点,课程设计应注重理论与实践相结合,以案例驱动、项目导向的教学方法,引导学生掌握数据结构知识,提高编程实践能力。

同时,注重培养学生的情感态度价值观,使其在学习过程中形成积极的学习态度和良好的团队协作精神。

通过具体的学习成果评估,确保课程目标的达成。

二、教学内容1. 线性结构:- 数组:数组的概念、存储方式、应用场景。

- 链表:单链表、双向链表、循环链表的概念及实现。

- 栈与队列:栈的概念、应用场景、实现方法;队列的概念、应用场景、实现方法。

2. 非线性结构:- 树:树的概念、二叉树、二叉查找树、平衡树(如AVL树)、堆的概念及其应用。

《数据结构与算法分析》(C++第二版)【美】Clifford A.Shaffer著课后习题答案二5Binary Trees5.1 Consider a non-full binary tree. By definition, this tree must have some internalnode X with only one non-empty child. If we modify the tree to removeX, replacing it with its child, the modified tree will have a higher fraction ofnon-empty nodes since one non-empty node and one empty node have been removed.5.2 Use as the base case the tree of one leaf node. The number of degree-2 nodesis 0, and the number of leaves is 1. Thus, the theorem holds.For the induction hypothesis, assume the theorem is true for any tree withn − 1 nodes.For the induction step, consider a tree T with n nodes. Remove from the treeany leaf node, and call the resulting tree T. By the induction hypothesis, Thas one more leaf node than it has nodes of degree 2.Now, restore the leaf node that was removed to form T. There are twopossible cases.(1) If this leaf node is the only child of its parent in T, then the number ofnodes of degree 2 has not changed, nor has the number of leaf nodes. Thus,the theorem holds.(2) If this leaf node is the child of a node in T with degree 2, then that nodehas degree 1 in T. Thus, by restoring the leaf node we are adding one newleaf node and one new node of degree 2. Thus, the theorem holds.By mathematical induction, the theorem is correct.32335.3 Base Case: For the tree of one leaf node, I = 0, E = 0, n = 0, so thetheorem holds.Induction Hypothesis: The theorem holds for the full binary tree containingn internal nodes.Induction Step: Take an arbitrary tree (call it T) of n internal nodes. Selectsome internal node x from T that has two leaves, and remove those twoleaves. Call the resulting tree T’. Tree T’ is full and has n−1 internal nodes,so by the Induction Hypothesis E = I + 2(n − 1).Call the depth of node x as d. Restore the two children of x, each at leveld+1. We have nowadded d to I since x is now once again an internal node.We have now added 2(d + 1) − d = d + 2 to E since we added the two leafnodes, but lost the contribution of x to E. Thus, if before the addition we had E = I + 2(n − 1) (by the induction hypothesis), then after the addition we have E + d = I + d + 2 + 2(n − 1) or E = I + 2n which is correct. Thus,by the principle of mathematical induction, the theorem is correct.5.4 (a) template <class Elem>void inorder(BinNode<Elem>* subroot) {if (subroot == NULL) return; // Empty, do nothingpreorder(subroot->left());visit(subroot); // Perform desired actionpreorder(subroot->right());}(b) template <class Elem>void postorder(BinNode<Elem>* subroot) {if (subroot == NULL) return; // Empty, do nothingpreorder(subroot->left());preorder(subroot->right());visit(subroot); // Perform desired action}5.5 The key is to search both subtrees, as necessary.template <class Key, class Elem, class KEComp>bool search(BinNode<Elem>* subroot, Key K);if (subroot == NULL) return false;if (subroot->value() == K) return true;if (search(subroot->right())) return true;return search(subroot->left());}34 Chap. 5 Binary Trees5.6 The key is to use a queue to store subtrees to be processed.template <class Elem>void level(BinNode<Elem>* subroot) {AQueue<BinNode<Elem>*> Q;Q.enqueue(subroot);while(!Q.isEmpty()) {BinNode<Elem>* temp;Q.dequeue(temp);if(temp != NULL) {Print(temp);Q.enqueue(temp->left());Q.enqueue(temp->right());}}}5.7 template <class Elem>int height(BinNode<Elem>* subroot) {if (subroot == NULL) return 0; // Empty subtreereturn 1 + max(height(subroot->left()),height(subroot->right()));}5.8 template <class Elem>int count(BinNode<Elem>* subroot) {if (subroot == NULL) return 0; // Empty subtreeif (subroot->isLeaf()) return 1; // A leafreturn 1 + count(subroot->left()) +count(subroot->right());}5.9 (a) Since every node stores 4 bytes of data and 12 bytes of pointers, the overhead fraction is 12/16 = 75%.(b) Since every node stores 16 bytes of data and 8 bytes of pointers, the overhead fraction is 8/24 ≈ 33%.(c) Leaf nodes store 8 bytes of data and 4 bytes of pointers; internal nodesstore 8 bytes of data and 12 bytes of pointers. Since the nodes havedifferent sizes, the total space needed for internal nodes is not the sameas for leaf nodes. Students must be careful to do the calculation correctly,taking the weighting into account. The correct formula looks asfollows, given that there are x internal nodes and x leaf nodes.4x + 12x12x + 20x= 16/32 = 50%.(d) Leaf nodes store 4 bytes of data; internal nodes store 4 bytes of pointers. The formula looks as follows, given that there are x internal nodes and35x leaf nodes:4x4x + 4x= 4/8 = 50%.5.10 If equal valued nodes were allowed to appear in either subtree, then during a search for all nodes of a given value, whenever we encounter a node of that value the search would be required to search in both directions.5.11 This tree is identical to the tree of Figure 5.20(a), except that a node with value 5 will be added as the right child of the node with value 2.5.12 This tree is identical to the tree of Figure 5.20(b), except that the value 24 replaces the value 7, and the leaf node that originally contained 24 is removed from the tree.5.13 template <class Key, class Elem, class KEComp>int smallcount(BinNode<Elem>* root, Key K);if (root == NULL) return 0;if (KEComp.gt(root->value(), K))return smallcount(root->leftchild(), K);elsereturn smallcount(root->leftchild(), K) +smallcount(root->rightchild(), K) + 1;5.14 template <class Key, class Elem, class KEComp>void printRange(BinNode<Elem>* root, int low,int high) {if (root == NULL) return;if (KEComp.lt(high, root->val()) // all to leftprintRange(root->left(), low, high);else if (KEComp.gt(low, root->val())) // all to rightprintRange(root->right(), low, high);else { // Must process both childrenprintRange(root->left(), low, high);PRINT(root->value());printRange(root->right(), low, high);}}5.15 The minimum number of elements is contained in the heap with a single node at depth h − 1, for a total of 2h−1 nodes.The maximum number of elements is contained in the heap that has completely filled up level h − 1, for a total of 2h − 1 nodes.5.16 The largest element could be at any leaf node.5.17 The corresponding array will be in the following order (equivalent to level order for the heap):12 9 10 5 4 1 8 7 3 236 Chap. 5 Binary Trees5.18 (a) The array will take on the following order:6 5 3 4 2 1The value 7 will be at the end of the array.(b) The array will take on the following order:7 4 6 3 2 1The value 5 will be at the end of the array.5.19 // Min-heap classtemplate <class Elem, class Comp> class minheap {private:Elem* Heap; // Pointer to the heap arrayint size; // Maximum size of the heapint n; // # of elements now in the heapvoid siftdown(int); // Put element in correct placepublic:minheap(Elem* h, int num, int max) // Constructor{ Heap = h; n = num; size = max; buildHeap(); }int heapsize() const // Return current size{ return n; }bool isLeaf(int pos) const // TRUE if pos a leaf{ return (pos >= n/2) && (pos < n); }int leftchild(int pos) const{ return 2*pos + 1; } // Return leftchild posint rightchild(int pos) const{ return 2*pos + 2; } // Return rightchild posint parent(int pos) const // Return parent position { return (pos-1)/2; }bool insert(const Elem&); // Insert value into heap bool removemin(Elem&); // Remove maximum value bool remove(int, Elem&); // Remove from given pos void buildHeap() // Heapify contents{ for (int i=n/2-1; i>=0; i--) siftdown(i); }};template <class Elem, class Comp>void minheap<Elem, Comp>::siftdown(int pos) { while (!isLeaf(pos)) { // Stop if pos is a leafint j = leftchild(pos); int rc = rightchild(pos);if ((rc < n) && Comp::gt(Heap[j], Heap[rc]))j = rc; // Set j to lesser child’s valueif (!Comp::gt(Heap[pos], Heap[j])) return; // Done37swap(Heap, pos, j);pos = j; // Move down}}template <class Elem, class Comp>bool minheap<Elem, Comp>::insert(const Elem& val) { if (n >= size) return false; // Heap is fullint curr = n++;Heap[curr] = val; // Start at end of heap// Now sift up until curr’s parent < currwhile ((curr!=0) &&(Comp::lt(Heap[curr], Heap[parent(curr)]))) {swap(Heap, curr, parent(curr));curr = parent(curr);}return true;}template <class Elem, class Comp>bool minheap<Elem, Comp>::removemin(Elem& it) { if (n == 0) return false; // Heap is emptyswap(Heap, 0, --n); // Swap max with last valueif (n != 0) siftdown(0); // Siftdown new root valit = Heap[n]; // Return deleted valuereturn true;}38 Chap. 5 Binary Trees// Remove value at specified positiontemplate <class Elem, class Comp>bool minheap<Elem, Comp>::remove(int pos, Elem& it) {if ((pos < 0) || (pos >= n)) return false; // Bad posswap(Heap, pos, --n); // Swap with last valuewhile ((pos != 0) &&(Comp::lt(Heap[pos], Heap[parent(pos)])))swap(Heap, pos, parent(pos)); // Push up if largesiftdown(pos); // Push down if small keyit = Heap[n];return true;}5.20 Note that this summation is similar to Equation 2.5. To solve the summation requires the shifting technique from Chapter 14, so this problem may be too advanced for many students at this time. Note that 2f(n) − f(n) = f(n),but also that:2f(n) − f(n) = n(24+48+616+ ··· +2(log n − 1)n) −n(14+28+316+ ··· +log n − 1n)logn−1i=112i− log n − 1n)= n(1 − 1n− log n − 1n)= n − log n.5.21 Here are the final codes, rather than a picture.l 00h 010i 011e 1000f 1001j 101d 11000a 1100100b 1100101c 110011g 1101k 11139The average code length is 3.234455.22 The set of sixteen characters with equal weight will create a Huffman coding tree that is complete with 16 leaf nodes all at depth 4. Thus, the average code length will be 4 bits. This is identical to the fixed length code. Thus, in this situation, the Huffman coding tree saves no space (and costs no space).5.23 (a) By the prefix property, there can be no character with codes 0, 00, or 001x where “x” stands for any binary string.(b) There must be at least one code with each form 1x, 01x, 000x where“x” could be any binary string (including the empty string).5.24 (a) Q and Z are at level 5, so any string of length n containing only Q’s and Z’s requires 5n bits.(b) O and E are at level 2, so any string of length n containing only O’s and E’s requires 2n bits.(c) The weighted average is5 ∗ 5 + 10 ∗ 4 + 35 ∗ 3 + 50 ∗ 2100bits per character5.25 This is a straightforward modification.// Build a Huffman tree from minheap h1template <class Elem>HuffTree<Elem>*buildHuff(minheap<HuffTree<Elem>*,HHCompare<Elem> >* hl) {HuffTree<Elem> *temp1, *temp2, *temp3;while(h1->heapsize() > 1) { // While at least 2 itemshl->removemin(temp1); // Pull first two treeshl->removemin(temp2); // off the heaptemp3 = new HuffTree<Elem>(temp1, temp2);hl->insert(temp3); // Put the new tree back on listdelete temp1; // Must delete the remnantsdelete temp2; // of the trees we created}return temp3;}6General Trees6.1 The following algorithm is linear on the size of the two trees. // Return TRUE iff t1 and t2 are roots of identical// general treestemplate <class Elem>bool Compare(GTNode<Elem>* t1, GTNode<Elem>* t2) { GTNode<Elem> *c1, *c2;if (((t1 == NULL) && (t2 != NULL)) ||((t2 == NULL) && (t1 != NULL)))return false;if ((t1 == NULL) && (t2 == NULL)) return true;if (t1->val() != t2->val()) return false;c1 = t1->leftmost_child();c2 = t2->leftmost_child();while(!((c1 == NULL) && (c2 == NULL))) {if (!Compare(c1, c2)) return false;if (c1 != NULL) c1 = c1->right_sibling();if (c2 != NULL) c2 = c2->right_sibling();}}6.2 The following algorithm is Θ(n2).// Return true iff t1 and t2 are roots of identical// binary treestemplate <class Elem>bool Compare2(BinNode<Elem>* t1, BinNode<Elem* t2) { BinNode<Elem> *c1, *c2;if (((t1 == NULL) && (t2 != NULL)) ||((t2 == NULL) && (t1 != NULL)))return false;if ((t1 == NULL) && (t2 == NULL)) return true;4041if (t1->val() != t2->val()) return false;if (Compare2(t1->leftchild(), t2->leftchild())if (Compare2(t1->rightchild(), t2->rightchild())return true;if (Compare2(t1->leftchild(), t2->rightchild())if (Compare2(t1->rightchild(), t2->leftchild))return true;return false;}6.3 template <class Elem> // Print, postorder traversalvoid postprint(GTNode<Elem>* subroot) {for (GTNode<Elem>* temp = subroot->leftmost_child();temp != NULL; temp = temp->right_sibling())postprint(temp);if (subroot->isLeaf()) cout << "Leaf: ";else cout << "Internal: ";cout << subroot->value() << "\n";}6.4 template <class Elem> // Count the number of nodesint gencount(GTNode<Elem>* subroot) {if (subroot == NULL) return 0int count = 1;GTNode<Elem>* temp = rt->leftmost_child();while (temp != NULL) {count += gencount(temp);temp = temp->right_sibling();}return count;}6.5 The Weighted Union Rule requires that when two parent-pointer trees are merged, the smaller one’s root becomes a child of the larger one’s root. Thus, we need to keep track of the number of nodes in a tree. To do so, modify the node array to store an integer value with each node. Initially, each node isin its own tree, so the weights for each node begin as 1. Whenever we wishto merge two trees, check the weights of the roots to determine which has more nodes. Then, add to the weight of the final root the weight of the new subtree.6.60 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15-1 0 0 0 0 0 0 6 0 0 0 9 0 0 12 06.7 The resulting tree should have the following structure:42 Chap. 6 General TreesNode 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15Parent 4 4 4 4 -1 4 4 0 0 4 9 9 9 12 9 -16.8 For eight nodes labeled 0 through 7, use the following series of equivalences: (0, 1) (2, 3) (4, 5) (6, 7) (4 6) (0, 2) (4 0)This requires checking fourteen parent pointers (two for each equivalence),but none are actually followed since these are all roots. It is possible todouble the number of parent pointers checked by choosing direct children ofroots in each case.6.9 For the “lists of Children” representation, every node stores a data value and a pointer to its list of children. Further, every child (every node except the root)has a record associated with it containing an index and a pointer. Indicatingthe size of the data value as D, the size of a pointer as P and the size of anindex as I, the overhead fraction is3P + ID + 3P + I.For the “Left Child/Right Sibling” representation, every node stores three pointers and a data value, for an overhead fraction of3PD + 3P.The first linked representation of Section 6.3.3 stores with each node a datavalue and a size field (denoted by S). Each child (every node except the root)also has a pointer pointing to it. The overhead fraction is thusS + PD + S + Pmaking it quite efficient.The second linked representation of Section 6.3.3 stores with each node adata value and a pointer to the list of children. Each child (every node exceptthe root) has two additional pointers associated with it to indicate its placeon the parent’s linked list. Thus, the overhead fraction is3PD + 3P.6.10 template <class Elem>BinNode<Elem>* convert(GTNode<Elem>* genroot) {if (genroot == NULL) return NULL;43GTNode<Elem>* gtemp = genroot->leftmost_child();btemp = new BinNode(genroot->val(), convert(gtemp),convert(genroot->right_sibling()));}6.11 • Parent(r) = (r − 1)/k if 0 < r < n.• Ith child(r) = kr + I if kr +I < n.• Left sibling(r) = r − 1 if r mod k = 1 0 < r < n.• Right sibling(r) = r + 1 if r mod k = 0 and r + 1 < n.6.12 (a) The overhead fraction is4(k + 1)4 + 4(k + 1).(b) The overhead fraction is4k16 + 4k.(c) The overhead fraction is4(k + 2)16 + 4(k + 2).(d) The overhead fraction is2k2k + 4.6.13 Base Case: The number of leaves in a non-empty tree of 0 internal nodes is (K − 1)0 + 1 = 1. Thus, the theorem is correct in the base case.Induction Hypothesis: Assume that the theorem is correct for any full Karytree containing n internal nodes.Induction Step: Add K children to an arbitrary leaf node of the tree withn internal nodes. This new tree now has 1 more internal node, and K − 1more leaf nodes, so theorem still holds. Thus, the theorem is correct, by the principle of Mathematical Induction.6.14 (a) CA/BG///FEDD///H/I//(b) CA/BG/FED/H/I6.15 X|P-----| | |C Q R---| |V M44 Chap. 6 General Trees6.16 (a) // Use a helper function with a pass-by-reference// variable to indicate current position in the// node list.template <class Elem>BinNode<Elem>* convert(char* inlist) {int curr = 0;return converthelp(inlist, curr);}// As converthelp processes the node list, curr is// incremented appropriately.template <class Elem>BinNode<Elem>* converthelp(char* inlist,int& curr) {if (inlist[curr] == ’/’) {curr++;return NULL;}BinNode<Elem>* temp = new BinNode(inlist[curr++], NULL, NULL);temp->left = converthelp(inlist, curr);temp->right = converthelp(inlist, curr);return temp;}(b) // Use a helper function with a pass-by-reference // variable to indicate current position in the// node list.template <class Elem>BinNode<Elem>* convert(char* inlist) {int curr = 0;return converthelp(inlist, curr);}// As converthelp processes the node list, curr is// incremented appropriately.template <class Elem>BinNode<Elem>* converthelp(char* inlist,int& curr) {if (inlist[curr] == ’/’) {curr++;return NULL;}BinNode<Elem>* temp =new BinNode<Elem>(inlist[curr++], NULL, NULL);if (inlist[curr] == ’\’’) return temp;45curr++ // Eat the internal node mark.temp->left = converthelp(inlist, curr);temp->right = converthelp(inlist, curr);return temp;}(c) // Use a helper function with a pass-by-reference// variable to indicate current position in the// node list.template <class Elem>GTNode<Elem>* convert(char* inlist) {int curr = 0;return converthelp(inlist, curr);}// As converthelp processes the node list, curr is// incremented appropriately.template <class Elem>GTNode<Elem>* converthelp(char* inlist,int& curr) {if (inlist[curr] == ’)’) {curr++;return NULL;}GTNode<Elem>* temp =new GTNode<Elem>(inlist[curr++]);if (curr == ’)’) {temp->insert_first(NULL);return temp;}temp->insert_first(converthelp(inlist, curr));while (curr != ’)’)temp->insert_next(converthelp(inlist, curr));curr++;return temp;}6.17 The Huffman tree is a full binary tree. To decode, we do not need to know the weights of nodes, only the letter values stored in the leaf nodes. Thus, we can use a coding much like that of Equation 6.2, storing only a bit mark for internal nodes, and a bit mark and letter value for leaf nodes.7Internal Sorting7.1 Base Case: For the list of one element, the double loop is not executed and the list is not processed. Thus, the list of one element remains unaltered and is sorted.Induction Hypothesis: Assume that the list of n elements is sorted correctlyby Insertion Sort.Induction Step: The list of n + 1 elements is processed by first sorting thetop n elements. By the induction hypothesis, this is done correctly. The final pass of the outer for loop will process the last element (call it X). This isdone by the inner for loop, which moves X up the list until a value smallerthan that of X is encountered. At this point, X has been properly insertedinto the sorted list, leaving the entire collection of n + 1 elements correctly sorted. Thus, by the principle of Mathematical Induction, the theorem is correct.7.2 void StackSort(AStack<int>& IN) {AStack<int> Temp1, Temp2;while (!IN.isEmpty()) // Transfer to another stackTemp1.push(IN.pop());IN.push(Temp1.pop()); // Put back one elementwhile (!Temp1.isEmpty()) { // Process rest of elemswhile (IN.top() > Temp1.top()) // Find elem’s placeTemp2.push(IN.pop());IN.push(Temp1.pop()); // Put the element inwhile (!Temp2.isEmpty()) // Put the rest backIN.push(Temp2.pop());}}46477.3 The revised algorithm will work correctly, and its asymptotic complexity will remain Θ(n2). However, it will do about twice as many comparisons, since it will compare adjacent elements within the portion of the list already knownto be sorted. These additional comparisons are unproductive.7.4 While binary search will find the proper place to locate the next element, it will still be necessary to move the intervening elements down one position in the array. This requires the same number of operations as a sequential search. However, it does reduce the number of element/element comparisons, and may be somewhat faster by a constant factor since shifting several elements may be more efficient than an equal number of swap operations.7.5 (a) template <class Elem, class Comp>void selsort(Elem A[], int n) { // Selection Sortfor (int i=0; i<n-1; i++) { // Select i’th recordint lowindex = i; // Remember its indexfor (int j=n-1; j>i; j--) // Find least valueif (Comp::lt(A[j], A[lowindex]))lowindex = j; // Put it in placeif (i != lowindex) // Add check for exerciseswap(A, i, lowindex);}}(b) There is unlikely to be much improvement; more likely the algorithmwill slow down. This is because the time spent checking (n times) isunlikely to save enough swaps to make up.(c) Try it and see!7.6 • Insertion Sort is stable. A swap is done only if the lower element’svalue is LESS.• Bubble Sort is stable. A swap is done only if the lower element’s valueis LESS.• Selection Sort is NOT stable. The new low value is set only if it isactually less than the previous one, but the direction of the search isfrom the bottom of the array. The algorithm will be stable if “less than”in the check becomes “less than or equal to” for selecting the low key position.• Shell Sort is NOT stable. The sublist sorts are done independently, andit is quite possible to swap an element in one sublist ahead of its equalvalue in another sublist. Once they are in the same sublist, they willretain this (incorrect) relationship.• Quick-sort is NOT stable. After selecting the pivot, it is swapped withthe last element. This action can easily put equal records out of place.48 Chap. 7 Internal Sorting• Conceptually (in particular, the linked list version) Mergesort is stable.The array implementations are NOT stable, since, given that the sublistsare stable, the merge operation will pick the element from the lower listbefore the upper list if they are equal. This is easily modified to replace“less than” with “less than or equal to.”• Heapsort is NOT stable. Elements in separate sides of the heap are processed independently, and could easily become out of relative order.• Binsort is stable. Equal values that come later are appended to the list.• Radix Sort is stable. While the processing is from bottom to top, thebins are also filled from bottom to top, preserving relative order.7.7 In the worst case, the stack can store n records. This can be cut to log n in the worst case by putting the larger partition on FIRST, followed by the smaller. Thus, the smaller will be processed first, cutting the size of the next stacked partition by at least half.7.8 Here is how I derived a permutation that will give the desired (worst-case) behavior:a b c 0 d e f g First, put 0 in pivot index (0+7/2),assign labels to the other positionsa b c g d e f 0 First swap0 b c g d e f a End of first partition pass0 b c g 1 e f a Set d = 1, it is in pivot index (1+7/2)0 b c g a e f 1 First swap0 1 c g a e f b End of partition pass0 1 c g 2 e f b Set a = 2, it is in pivot index (2+7/2)0 1 c g b e f 2 First swap0 1 2 g b e f c End of partition pass0 1 2 g b 3 f c Set e = 3, it is in pivot index (3+7/2)0 1 2 g b c f 3 First swap0 1 2 3 b c f g End of partition pass0 1 2 3 b 4 f g Set c = 4, it is in pivot index (4+7/2)0 1 2 3 b g f 4 First swap0 1 2 3 4 g f b End of partition pass0 1 2 3 4 g 5 b Set f = 5, it is in pivot index (5+7/2)0 1 2 3 4 g b 5 First swap0 1 2 3 4 5 b g End of partition pass0 1 2 3 4 5 6 g Set b = 6, it is in pivot index (6+7/2)0 1 2 3 4 5 g 6 First swap0 1 2 3 4 5 6 g End of parition pass0 1 2 3 4 5 6 7 Set g = 7.Plugging the variable assignments into the original permutation yields:492 6 4 0 13 5 77.9 (a) Each call to qsort costs Θ(i log i). Thus, the total cost isni=1i log i = Θ(n2 log n).(b) Each call to qsort costs Θ(n log n) for length(L) = n, so the totalcost is Θ(n2 log n).7.10 All that we need to do is redefine the comparison test to use strcmp. The quicksort algorithm itself need not change. This is the advantage of paramerizing the comparator.7.11 For n = 1000, n2 = 1, 000, 000, n1.5 = 1000 ∗√1000 ≈ 32, 000, andn log n ≈ 10, 000. So, the constant factor for Shellsort can be anything less than about 32 times that of Insertion Sort for Shellsort to be faster. The constant factor for Shellsort can be anything less than about 100 times thatof Insertion Sort for Quicksort to be faster.7.12 (a) The worst case occurs when all of the sublists are of size 1, except for one list of size i − k + 1. If this happens on each call to SPLITk, thenthe total cost of the algorithm will be Θ(n2).(b) In the average case, the lists are split into k sublists of roughly equal length. Thus, the total cost is Θ(n logk n).7.13 (This question comes from Rawlins.) Assume that all nuts and all bolts havea partner. We use two arrays N[1..n] and B[1..n] to represent nuts and bolts. Algorithm 1Using merge-sort to solve this problem.First, split the input into n/2 sub-lists such that each sub-list contains twonuts and two bolts. Then sort each sub-lists. We could well come up with apair of nuts that are both smaller than either of a pair of bolts. In that case,all you can know is something like:N1, N2。

数据结构与算法分析课后习题答案【篇一:《数据结构与算法》课后习题答案】>2.3.2 判断题2.顺序存储的线性表可以按序号随机存取。

(√)4.线性表中的元素可以是各种各样的,但同一线性表中的数据元素具有相同的特性,因此属于同一数据对象。

(√)6.在线性表的链式存储结构中,逻辑上相邻的元素在物理位置上不一定相邻。

(√)8.在线性表的顺序存储结构中,插入和删除时移动元素的个数与该元素的位置有关。

(√)9.线性表的链式存储结构是用一组任意的存储单元来存储线性表中数据元素的。

(√)2.3.3 算法设计题1.设线性表存放在向量a[arrsize]的前elenum个分量中,且递增有序。

试写一算法,将x 插入到线性表的适当位置上,以保持线性表的有序性,并且分析算法的时间复杂度。

【提示】直接用题目中所给定的数据结构(顺序存储的思想是用物理上的相邻表示逻辑上的相邻,不一定将向量和表示线性表长度的变量封装成一个结构体),因为是顺序存储,分配的存储空间是固定大小的,所以首先确定是否还有存储空间,若有,则根据原线性表中元素的有序性,来确定插入元素的插入位置,后面的元素为它让出位置,(也可以从高下标端开始一边比较,一边移位)然后插入x ,最后修改表示表长的变量。

int insert (datatype a[],int *elenum,datatype x) /*设elenum为表的最大下标*/ {if (*elenum==arrsize-1) return 0; /*表已满,无法插入*/else {i=*elenum;while (i=0 a[i]x)/*边找位置边移动*/{a[i+1]=a[i];i--;}a[i+1]=x;/*找到的位置是插入位的下一位*/ (*elenum)++;return 1;/*插入成功*/}}时间复杂度为o(n)。

2.已知一顺序表a,其元素值非递减有序排列,编写一个算法删除顺序表中多余的值相同的元素。

第二章习题1. 描述以下三个概念的区别:头指针,头结点,首元素结点。

2. 填空:(1)在顺序表中插入或删除一个元素,需要平均移动元素,具体移动的元素个数与有关。

(2)在顺序表中,逻辑上相邻的元素,其物理位置相邻。

在单链表中,逻辑上相邻的元素,其物理位置相邻。

(3)在带头结点的非空单链表中,头结点的存储位置由指示,首元素结点的存储位置由指示,除首元素结点外,其它任一元素结点的存储位置由指示。

3.已知L是无表头结点的单链表,且P结点既不是首元素结点,也不是尾元素结点。

按要求从下列语句中选择合适的语句序列。

a. 在P结点后插入S结点的语句序列是:。

b. 在P结点前插入S结点的语句序列是:。

c. 在表首插入S结点的语句序列是:。

d. 在表尾插入S结点的语句序列是:。

供选择的语句有:(1)P->next=S;(2)P->next= P->next->next;(3)P->next= S->next;(4)S->next= P->next;(5)S->next= L;(6)S->next= NULL;(7)Q= P;(8)while(P->next!=Q) P=P->next;(9)while(P->next!=NULL) P=P->next;(10)P= Q;(11)P= L;(12)L= S;(13)L= P;4. 设线性表存于a(1:arrsize)的前elenum个分量中且递增有序。

试写一算法,将X插入到线性表的适当位置上,以保持线性表的有序性。

5. 写一算法,从顺序表中删除自第i个元素开始的k个元素。

6. 已知线性表中的元素(整数)以值递增有序排列,并以单链表作存储结构。

试写一高效算法,删除表中所有大于mink且小于maxk的元素(若表中存在这样的元素),分析你的算法的时间复杂度(注意:mink和maxk是给定的两个参变量,它们的值为任意的整数)。

第2章线性表典型例题解析一、选择题1.线性表是具有n个(n≥0)的有限序列。

A.表元素B.字符C.数据元素D.数据项【分析】线性表是具有相同数据类型的n(n≥0)个数据元素的有限序列,通常记为(a1,a2,…,a n),其中n为表长,n=0时称为空表。

【答案】C2.顺序存储结构的优点是。

A.存储密度大B.插入运算方便C.删除运算方便D.可方便地用于各种逻辑结构的存储表示【分析】顺序存储结构是采用一组地址连续的存储单元来依次存放数据元素,数据元素的逻辑顺序和物理次序一致。

因此,其存储密度大。

【答案】A3.带头结点的单链表head为空的判断条件是。

A.head==NULL B.head->next==NULLC.head->next==head D.head!=NULL【分析】链表为空时,头结点的指针域为空。

【答案】B4.若某线性表中最常用的操作是在最后一个元素之后插入一个元素和删除第一个元素,则采用存储方式最节省运算时间。

A.单链表B.仅有头指针的单循环链表C.双链表D.仅有尾指针的单循环链表【分析】根据题意要求,该线性表的存储应能够很方便地找到线性表的第一个元素和最后一个元素,A和B都能很方便地通过头指针找到线性表的第一个元素,却要经过所有元素才能找到最后一个元素;选项C双链表若存为双向循环链表,则能很方便地找到线性表的第一个元素和最后一个元素,但存储效率要低些,插入和删除操作也略微复杂;选项D可通过尾指针直接找到线性表的最后一个元素,通过线性表的最后一个元素的循环指针就能很方便地找到第一个元素。

【答案】D5.若某线性表最常用的操作是存取任一指定序号的元素和在最后进行插入和删除运算,则利用存储方式最节省时间。

A.顺序表B.双链表C.带头结点的双循环链表D.单循环链表【分析】某线性表最常用的操作是存取任一指定序号的元素和在最后进行插入和删除运算。

因此不需要移动线性表种元素的位置。

根据题意要求,该线性表的存储应能够很方便地找到线性表的任一指定序号的元素和最后一个元素,顺序表是由地址连续的向量实现的,因此具有按序号随机访问的特点。

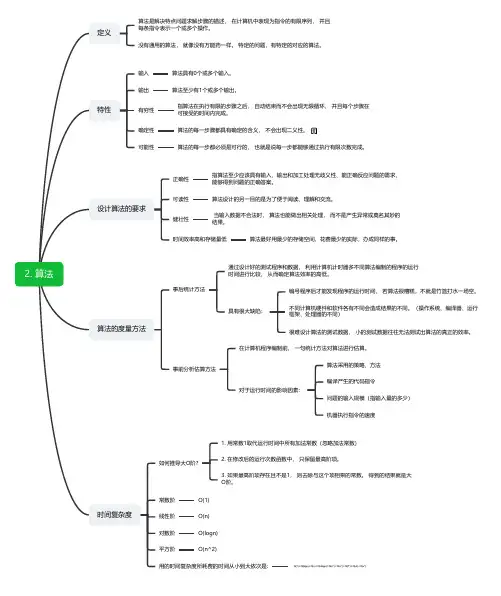

2. 算法定义算法是解决特点问题求解步骤的描述, 在计算机中表现为指令的有限序列, 并且每条指令表示一个或多个操作。

没有通用的算法, 就像没有万能药一样。

特定的问题,有特定的对应的算法。

特性输入算法具有0个或多个输入。

输出算法至少有1个或多个输出。

有穷性指算法在执行有限的步骤之后, 自动结束而不会出现无限循环, 并且每个步骤在可接受的时间内完成。

确定性算法的每一步骤都具有确定的含义, 不会出现二义性。

可能性算法的每一步都必须是可行的, 也就是说每一步都能够通过执行有限次数完成。

设计算法的要求正确性指算法至少应该具有输入、输出和加工处理无歧义性、能正确反应问题的需求、能够得到问题的正确答案。

可读性算法设计的另一目的是为了便于阅读、理解和交流。

健壮性当输入数据不合法时, 算法也能做出相关处理, 而不是产生异常或莫名其妙的结果。

时间效率高和存储量低算法最好用最少的存储空间,花费最少的实际,办成同样的事。

算法的度量方法事后统计方法通过设计好的测试程序和数据, 利用计算机计时器多不同算法编制的程序的运行时间进行比较, 从而确定算法效率的高低。

具有很大缺陷:编号程序后才能发现程序的运行时间, 若算法很糟糕,不就是竹篮打水一场空。

不同计算机硬件和软件各有不同会造成结果的不同。

(操作系统、编译器、运行框架、处理器的不同)很难设计算法的测试数据, 小的测试数据往往无法测试出算法的真正的效率。

事前分析估算方法在计算机程序编制前, 一句统计方法对算法进行估算。

对于运行时间的影响因素:算法采用的策略、方法编译产生的代码指令问题的输入规模(指输入量的多少)机器执行指令的速度时间复杂度如何推导大O阶?1. 用常数1取代运行时间中所有加法常数(忽略加法常数)2. 在修改后的运行次数函数中, 只保留最高阶项。

3. 如果最高阶项存在且不是1, 则去除与这个项相乘的常数。

得到的结果就是大O阶。

常数阶O(1)线性阶O(n)对数阶O(logn)平方阶O(n^2)用的时间复杂度所耗费的时间从小到大依次是:。

Chapter 2:Algorithm Analysis2.12/N ,37,√ N ,N ,N log log N ,N log N ,N log (N 2),N log 2N ,N 1.5,N 2,N 2log N ,N 3,2N / 2,2N .N log N and N log (N 2)grow at the same rate.2.2(a)True.(b)False.A counterexample is T 1(N ) = 2N ,T 2(N ) = N ,and f (N ) = N .(c)False.A counterexample is T 1(N ) = N 2,T 2(N ) = N ,and f (N ) = N 2.(d)False.The same counterexample as in part (c)applies.2.3We claim that N log N is the slower growing function.To see this,suppose otherwise.Then,N ε/ √ log N would grow slower than log N .Taking logs of both sides,we find that,under this assumption,ε/ √ log N log N grows slower than log log N .But the first expres-sion simplifies to ε√ logN .If L = log N ,then we are claiming that ε√ L grows slower than log L ,or equivalently,that ε2L grows slower than log 2 L .But we know that log 2 L = ο (L ),so the original assumption is false,proving the claim.2.4Clearly,log k 1N = ο(log k 2N )if k 1 < k 2,so we need to worry only about positive integers.The claim is clearly true for k = 0and k = 1.Suppose it is true for k < i .Then,by L’Hospital’s rule,N →∞lim N log i N ______ = N →∞lim i N log i −1N _______The second limit is zero by the inductive hypothesis,proving the claim.2.5Let f (N ) = 1when N is even,and N when N is odd.Likewise,let g (N ) = 1when N is odd,and N when N is even.Then the ratio f (N ) / g (N )oscillates between 0and ∞.2.6For all these programs,the following analysis will agree with a simulation:(I)The running time is O (N ).(II)The running time is O (N 2).(III)The running time is O (N 3).(IV)The running time is O (N 2).(V) j can be as large as i 2,which could be as large as N 2.k can be as large as j ,which is N 2.The running time is thus proportional to N .N 2.N 2,which is O (N 5).(VI)The if statement is executed at most N 3times,by previous arguments,but it is true only O (N 2)times (because it is true exactly i times for each i ).Thus the innermost loop is only executed O (N 2)times.Each time through,it takes O ( j 2) = O (N 2)time,for a total of O (N 4).This is an example where multiplying loop sizes can occasionally give an overesti-mate.2.7(a)It should be clear that all algorithms generate only legal permutations.The first two algorithms have tests to guarantee no duplicates;the third algorithm works by shuffling an array that initially has no duplicates,so none can occur.It is also clear that the first two algorithms are completely random,and that each permutation is equally likely.The third algorithm,due to R.Floyd,is not as obvious;the correctness can be proved by induction.SeeJ.Bentley,"Programming Pearls,"Communications of the ACM 30(1987),754-757.Note that if the second line of algorithm 3is replaced with the statementSwap(A[i],A[RandInt(0,N-1)]);then not all permutations are equally likely.To see this,notice that for N = 3,there are 27equally likely ways of performing the three swaps,depending on the three random integers.Since there are only 6permutations,and 6does not evenly divide27,each permutation cannot possibly be equally represented.(b)For the first algorithm,the time to decide if a random number to be placed in A [i ]has not been used earlier is O (i ).The expected number of random numbers that need to be tried is N / (N − i ).This is obtained as follows:i of the N numbers would be duplicates.Thus the probability of success is (N − i ) / N .Thus the expected number of independent trials is N / (N − i ).The time bound is thusi =0ΣN −1N −i Ni ____ < i =0ΣN −1N −i N 2____ < N 2i =0ΣN −1N −i 1____ < N 2j =1ΣN j 1__ = O (N 2log N )The second algorithm saves a factor of i for each random number,and thus reduces the time bound to O (N log N )on average.The third algorithm is clearly linear.(c,d)The running times should agree with the preceding analysis if the machine has enough memory.If not,the third algorithm will not seem linear because of a drastic increase for large N .(e)The worst-case running time of algorithms I and II cannot be bounded because there is always a finite probability that the program will not terminate by some given time T .The algorithm does,however,terminate with probability 1.The worst-case running time of the third algorithm is linear -its running time does not depend on the sequence of random numbers.2.8Algorithm 1would take about 5days for N = 10,000,14.2years for N = 100,000and 140centuries for N = 1,000,000.Algorithm 2would take about 3hours for N = 100,000and about 2weeks for N = 1,000,000.Algorithm 3would use 1⁄12minutes for N = 1,000,000.These calculations assume a machine with enough memory to hold the array.Algorithm 4solves a problem of size 1,000,000in 3seconds.2.9(a)O (N 2).(b)O (N log N ).2.10(c)The algorithm is linear.2.11Use a variation of binary search to get an O (log N )solution (assuming the array is preread).2.13(a)Test to see if N is an odd number (or 2)and is not divisible by 3,5,7,...,√N .(b)O (√ N ),assuming that all divisions count for one unit of time.(c)B = O (log N ).(d)O (2B / 2).(e)If a 20-bit number can be tested in time T ,then a 40-bit number would require about T 2time.(f)B is the better measure because it more accurately represents the size of the input.2.14The running time is proportional to N times the sum of the reciprocals of the primes lessthan N .This is O (N log log N ).See Knuth,Volume2,page394.2.15Compute X 2,X 4,X 8,X 10,X 20,X 40,X 60,and X 62.2.16Maintain an array PowersOfX that can befilled in a for loop.The array will contain X ,X 2,X 4,up to X 2 log N .The binary representation of N (which can be obtained by testing even or odd and then dividing by2,until all bits are examined)can be used to multiply the appropriate entries of the array.2.17For N =0or N =1,the number of multiplies is zero.If b (N )is the number of ones in thebinary representation of N ,then if N >1,the number of multiplies used islog N + b (N )−12.18(a)A .(b)B .(c)The information given is not sufficient to determine an answer.We have only worst-case bounds.(d)Yes.2.19(a)Recursion is unnecessary if there are two or fewer elements.(b)One way to do this is to note that if thefirst N −1elements have a majority,then the lastelement cannot change this.Otherwise,the last element could be a majority.Thus if N is odd,ignore the last element.Run the algorithm as before.If no majority element emerges, then return the N th element as a candidate.(c)The running time is O (N ),and satisfies T (N )= T (N / 2)+ O (N ).(d)One copy of the original needs to be saved.After this,the B array,and indeed the recur-sion can be avoided by placing each B i in the A array.The difference is that the original recursive strategy implies that O (log N )arrays are used;this guarantees only two copies. 2.20Otherwise,we could perform operations in parallel by cleverly encoding several integersinto one.For instance,if A=001,B=101,C=111,D=100,we could add A and B at the same time as C and D by adding00A00C+00B00D.We could extend this to add N pairs of numbers at once in unit cost.2.22No.If Low =1,High =2,then Mid =1,and the recursive call does not make progress. 2.24No.As in Exercise2.22,no progress is made.。

数据结构与算法知到章节测试答案智慧树2023年最新中国民用航空飞行学院绪论单元测试1.本课程中需要掌握数据结构的基本概念、基本原理和基本方法。

参考答案:对2.在本课程的学习中还需要掌握算法基本的时间复杂度与空间复杂度的分析方法,能够设计出求解问题的高效算法。

参考答案:对第一章测试1.算法的时间复杂度取决于()。

参考答案:问题的规模2.算法的计算量的大小称为算法的()。

参考答案:复杂度3.算法的时间复杂度与()有关。

参考答案:问题规模4.以下关于数据结构的说法中正确的是()。

参考答案:数据结构的逻辑结构独立于其存储结构5.数据结构研究的内容是()。

参考答案:包括以上三个方面第二章测试1.线性表是具有n个()的有限序列。

参考答案:数据元素2.单链表又称为线性链表,在单链表上实施插入和删除操作()。

参考答案:不需移动结点,只需改变结点指针3.单链表中,增加一个头结点的目的是()。

参考答案:方便运算的实现4.单链表中,要将指针q指向的新结点插入到指针p指向的单链表结点之后,下面的操作序列中()是正确的。

参考答案:q->next=p->next; p->next=q;5.链表不具有的特点是()。

参考答案:可随机访问任一元素第三章测试1.循环队列存储在A[0..m]中,则入队时的操作是()。

参考答案:rear=(rear+1)%(m+1)2.关于循环队列,以下()的说法正确。

参考答案:循环队列不会产生假溢出3.如果循环队列用大小为m的数组表示,队头位置为front、队列元素个数为size,那么队尾元素位置rear为()。

参考答案:(front+size-1)%m4.若顺序栈的栈顶指针指向栈顶元素位置,则压入新元素时,应()。

参考答案:先移动栈顶指针5.设栈S和队列Q的初始状态均为空,元素{1, 2, 3, 4, 5, 6, 7}依次进入栈S。

若每个元素出栈后立即进入队列Q,且7个元素出队的顺序是{2, 5, 6, 4, 7, 3, 1},则栈S的容量至少是:参考答案:46.栈操作数据的原则是()。

数据结构第二章习题第2章线性表一、单项选择题1.线性表就是具备n个_________的非常有限序列。

a.表元素b.字符c.数据元素d.数据项2.线性表是_________。

a.一个非常有限序列,可以为空b.一个非常有限序列,不可以为空c.一个无穷序列,可以为空d.一个无穷序列,不可以为空3.线性表使用链表存储时,其地址_________。

a.必须是连续的b.一定是不连续的c.部分地址必须是连续的d.连续与否均可以4.链表不具备的特点是_________。

a.可以随机出访任一结点b.填入删掉不须要移动元素c.不必事先估算存储空间d.所须要空间与其长度成正比5.设立线性表存有n个元素,以下操作方式中,_________在顺序单上同时实现比在链表上同时实现效率更高。

a.输出第i(1≤i≤n)个元素值b.互换第1个元素与第2个元素的值c.顺序输入这n个元素的值d.输出与给定值x相等的元素在线性表中的序号6.设立线性表中存有2n个元素,以下操作方式中,_________在单链单上同时实现Obrero在顺序单上同时实现效率更高。

a.删掉选定的元素b.在最后一个元素的后面插入一个新元素c.顺序输出前k个元素d.互换第i个元素和第2n-i-1个元素的值(i=0,1…,n-1)7.如果最常用的操作是取第i个结点及其前驱,则采用_________存储方式最节省时间。

a.单链表中b.双链表中c.单循环链表d.顺序表8.与单链表相比,双链表的优点之一是_________。

a.填入和删掉操作方式更直观b.可以展开随机出访c.可以省略字段指针或表尾指针d.出访前后相连结点更有效率9.率先垂范结点的单链表中l为觑的认定条件就是_________。

a.l==nullb.l->next==nullc.l->next==ld.l!=null10.在一个具备n个结点的有序单链表填入一个崭新结点并仍然维持有序的时间复杂度就是_________。

第二章线性表:习题习题一、选择题1.L是线性表,已知Length(L)的值是5,经运算?Delete(L,2)后,length(L)的值是( c)。

A.5B.0C.4D.62.线性表中,只有一个直接前驱和一个直接后继的是( )。

A.首元素 B.尾元素C.中间的元素 D.所有的元素+3.带头结点的单链表为空的判定条件是( )。

A. head= =NULLB. head->next= =NULLC. head->next=headD. head!=NULL4.不带头结点的单链表head为空的判定条件是( )。

A. head=NULLB. head->next =NULLC.head->next=headD. head!=NULL5.非空的循环单链表head的尾结点P满足()。

A. p->next = =NULLB. p=NULLC. p->next==headD. p= =head6.线性表中各元素之间的关系是( c)关系。

A.层次B.网状C.有序 D.集合7.在循环链表的一个结点中有()个指针。

A.1B.2 C. 0 D. 38.在单链表的一个结点中有()个指针。

A.1B.2 C. 0 D. 39.在双链表的一个结点中有()个指针。

A.1B.2 C. 0 D. 310.在一个单链表中,若删除p所指结点的后继结点,则执行()。

A.p->next= p->next ->next;B. p=p->next; p->next=p->next->next;C. p->next= p->next;D. p=p->next->next;11.指针P指向循环链表L的首元素的条件是()。

A.P= =L B. P->next= =L C. L->next=P D. P-> next= =NU LL12. 在一个单链表中,若在p所指结点之后插入s所指结点,则执行()。

数据结构与算法分析课后习题答案第一章:基本概念一、题目:什么是数据结构与算法?数据结构是指数据在计算机中存储和组织的方式,如栈、队列、链表、树等;而算法是一系列解决问题的清晰规范的指令步骤。

数据结构和算法是计算机科学的核心内容。

二、题目:数据结构的分类有哪些?数据结构可以分为以下几类:1. 线性结构:包括线性表、栈、队列等,数据元素之间存在一对一的关系。

2. 树形结构:包括二叉树、AVL树、B树等,数据元素之间存在一对多的关系。

3. 图形结构:包括有向图、无向图等,数据元素之间存在多对多的关系。

4. 文件结构:包括顺序文件、索引文件等,是硬件和软件相结合的数据组织形式。

第二章:算法分析一、题目:什么是时间复杂度?时间复杂度是描述算法执行时间与问题规模之间的增长关系,通常用大O记法表示。

例如,O(n)表示算法的执行时间与问题规模n成正比,O(n^2)表示算法的执行时间与问题规模n的平方成正比。

二、题目:主定理是什么?主定理(Master Theorem)是用于估计分治算法时间复杂度的定理。

它的公式为:T(n) = a * T(n/b) + f(n)其中,a是子问题的个数,n/b是每个子问题的规模,f(n)表示将一个问题分解成子问题和合并子问题的所需时间。

根据主定理的不同情况,可以得到算法的时间复杂度的上界。

第三章:基本数据结构一、题目:什么是数组?数组是一种线性数据结构,它由一系列具有相同数据类型的元素组成,通过索引访问。

数组具有随机访问、连续存储等特点,但插入和删除元素的效率较低。

二、题目:栈和队列有什么区别?栈和队列都是线性数据结构,栈的特点是“先进后出”,即最后压入栈的元素最先弹出;而队列的特点是“先进先出”,即最先入队列的元素最先出队列。

第四章:高级数据结构一、题目:什么是二叉树?二叉树是一种特殊的树形结构,每个节点最多有两个子节点。

二叉树具有左子树、右子树的区分,常见的有完全二叉树、平衡二叉树等。

第二章线性表2.1描述以下三个概念的区别:头指针,头结点,首元结点(第一个元素结点)。

并说明头指针和头结点的作用。

答:头指针是一个指针变量,里面存放的是链表中首结点的地址,并以此来标识一个链表。

如链表H,链表L等,表示链表中第一个结点的地址存放在H、L中。

头结点是附加在第一个元素结点之前的一个结点,头指针指向头结点。

当该链表表示一个非空的线性表时,头结点的指针域指向第一个元素结点,为空表时,该指针域为空。

开始结点指第一个元素结点。

头指针的作用是用来惟一标识一个单链表。

头结点的作用有两个:一是使得对空表和非空表的处理得以统一。

二是使得在链表的第一个位置上的操作和在其他位置上的操作一致,无需特殊处理。

2.2填空题1、在顺序表中插入或删除一个元素,需要平均移动(表中一半)元素,具体移动的元素个数与(表长和该元素在表中的位置)有关。

2、顺序表中逻辑上相邻的元素的物理位置(必定)相邻。

单链表中逻辑上相邻的元素的物理位置(不一定)相邻。

3、在单链表中,除了首元结点外,任一结点的存储位置由(其直接前驱结点的链域的值)指示。

4、在单链表中设置头结点的作用是(插入和删除元素不必进行特殊处理)。

2.3何时选用顺序表、何时选用链表作为线性表的存储结构为宜?答:在实际应用中,应根据具体问题的要求和性质来选择顺序表或链表作为线性表的存储结构,通常有以下几方面的考虑:1.基于空间的考虑。

当要求存储的线性表长度变化不大,易于事先确定其大小时,为了节约存储空间,宜采用顺序表;反之,当线性表长度变化大,难以估计其存储规模时,采用动态链表作为存储结构为好。

2.基于时间的考虑。

若线性表的操作主要是进行查找,很少做插入和删除操作时,采用顺序表做存储结构为宜;反之,若需要对线性表进行频繁地插入或删除等的操作时,宜采用链表做存储结构。

并且,若链表的插入和删除主要发生在表的首尾两端,则采用尾指针表示的单循环链表为宜。

2.10 Status DeleteK(SqList &a,int i,int k)//删除线性表a中第i个元素起的k个元素{if(i<1||k<0||i+k-1>a.length) return INFEASIBLE;for(count=1;i+count-1<=a.length-k;count++) //注意循环结束的条件a.elem[i+count-1]=a.elem[i+count+k-1];a.length-=k;return OK;}//DeleteK2.11设顺序表中的数据元素递增有序,试写一算法,将X插入到顺序表的适当位置上,以保持该表的有序性。

数据结构和算法分析java课后答案【篇一:java程序设计各章习题及其答案】>1、 java程序是由什么组成的?一个程序中必须有public类吗?java源文件的命名规则是怎样的?答:一个java源程序是由若干个类组成。

一个java程序不一定需要有public类:如果源文件中有多个类时,则只能有一个类是public类;如果源文件中只有一个类,则不将该类写成public也将默认它为主类。

源文件命名时要求源文件主名应和主类(即用public修饰的类)的类名相同,扩展名为.java。

如果没有定义public类,则可以任何一个类名为主文件名,当然这是不主张的,因为它将无法进行被继承使用。

另外,对applet小使用程序来说,其主类必须为public,否则虽然在一些编译编译平台下可以通过(在bluej下无法通过)但运行时无法显示结果。

2、怎样区分使用程序和小使用程序?使用程序的主类和小使用程序的主类必须用public修饰吗?答:java application是完整的程序,需要独立的解释器来解释运行;而java applet则是嵌在html编写的web页面中的非独立运行程序,由web浏览器内部包含的java解释器来解释运行。

在源程序代码中两者的主要区别是:任何一个java application使用程序必须有且只有一个main方法,它是整个程序的入口方法;任何一个applet小使用程序要求程序中有且必须有一个类是系统类applet的子类,即该类头部分以extends applet结尾。

使用程序的主类当源文件中只有一个类时不必用public修饰,但当有多于一个类时则主类必须用public修饰。

小使用程序的主类在任何时候都需要用public来修饰。

3、开发和运行java程序需要经过哪些主要步骤和过程?答:主要有三个步骤(1)、用文字编辑器notepad(或在jcreator,gel, bulej,eclipse, jbuilder等)编写源文件;(2)、使用java编译器(如javac.exe)将.java源文件编译成字节码文件.class;(3)、运行java程序:对使用程序应通过java解释器(如java.exe)来运行,而对小使用程序应通过支持java标准的浏览器(如microsoft explorer)来解释运行。