数据挖掘任务实例——KDDCUP

- 格式:ppt

- 大小:604.50 KB

- 文档页数:19

ACM SIGKDD数据挖掘及知识发现会议1清华大学计算机系王建勇1、KDD概况ACM SIGKDD国际会议(简称KDD)是由ACM的数据挖掘及知识发现专委会[1]主办的数据挖掘研究领域的顶级年会。

它为来自学术界、企业界和政府部门的研究人员和数据挖掘从业者进行学术交流和展示研究成果提供了一个理想场所,并涵盖了特邀主题演讲(keynote presentations)、论文口头报告(oral paper presentations)、论文展板展示(poster sessions)、研讨会(workshops)、短期课程(tutorials)、专题讨论会(panels)、展览(exhibits)、系统演示(demonstrations)、KDD CUP赛事以及多个奖项的颁发等众多内容。

由于KDD的交叉学科性和广泛应用性,其影响力越来越大,吸引了来自统计、机器学习、数据库、万维网、生物信息学、多媒体、自然语言处理、人机交互、社会网络计算、高性能计算及大数据挖掘等众多领域的专家、学者。

KDD可以追溯到从1989年开始组织的一系列关于知识发现及数据挖掘(KDD)的研讨会。

自1995年以来,KDD已经以大会的形式连续举办了17届,论文的投稿量和参会人数呈现出逐年增加的趋势。

2011年的KDD会议(即第17届KDD 年会)共收到提交的研究论文(Research paper)714篇和应用论文(Industrial and Government paper)73篇,参会人数也达到1070人。

下面我们将就会议的内容、历年论文投稿及接收情况以及设置的奖项情况进行综合介绍。

此外,由于第18届KDD年会将于2012年8月12日至16日在北京举办,我们还将简单介绍一下KDD’12[4]的有关情况。

2、会议内容自1995年召开第1届KDD年会以来,KDD的会议内容日趋丰富且变的相对稳定。

其核心内容是以论文报告和展版(poster)的形式进行数据挖掘同行之间的学术交流和成果展示。

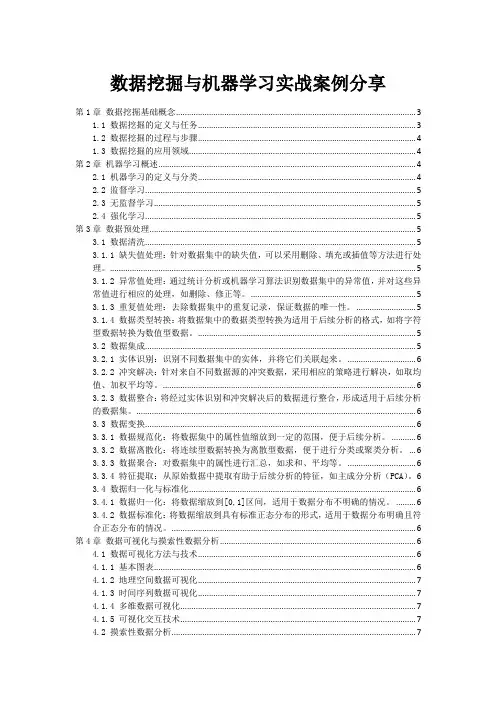

数据挖掘与机器学习实战案例分享第1章数据挖掘基础概念 (3)1.1 数据挖掘的定义与任务 (3)1.2 数据挖掘的过程与步骤 (4)1.3 数据挖掘的应用领域 (4)第2章机器学习概述 (4)2.1 机器学习的定义与分类 (4)2.2 监督学习 (5)2.3 无监督学习 (5)2.4 强化学习 (5)第3章数据预处理 (5)3.1 数据清洗 (5)3.1.1 缺失值处理:针对数据集中的缺失值,可以采用删除、填充或插值等方法进行处理。

(5)3.1.2 异常值处理:通过统计分析或机器学习算法识别数据集中的异常值,并对这些异常值进行相应的处理,如删除、修正等。

(5)3.1.3 重复值处理:去除数据集中的重复记录,保证数据的唯一性。

(5)3.1.4 数据类型转换:将数据集中的数据类型转换为适用于后续分析的格式,如将字符型数据转换为数值型数据。

(5)3.2 数据集成 (5)3.2.1 实体识别:识别不同数据集中的实体,并将它们关联起来。

(6)3.2.2 冲突解决:针对来自不同数据源的冲突数据,采用相应的策略进行解决,如取均值、加权平均等。

(6)3.2.3 数据整合:将经过实体识别和冲突解决后的数据进行整合,形成适用于后续分析的数据集。

(6)3.3 数据变换 (6)3.3.1 数据规范化:将数据集中的属性值缩放到一定的范围,便于后续分析。

(6)3.3.2 数据离散化:将连续型数据转换为离散型数据,便于进行分类或聚类分析。

(6)3.3.3 数据聚合:对数据集中的属性进行汇总,如求和、平均等。

(6)3.3.4 特征提取:从原始数据中提取有助于后续分析的特征,如主成分分析(PCA)。

63.4 数据归一化与标准化 (6)3.4.1 数据归一化:将数据缩放到[0,1]区间,适用于数据分布不明确的情况。

(6)3.4.2 数据标准化:将数据缩放到具有标准正态分布的形式,适用于数据分布明确且符合正态分布的情况。

KDD CUP 2009英文关键词:Customer Relationship Management (CRM), marketing databases, French Telecom company Orange, propensity of customers,中文关键词:客户关系管理(CRM)、营销数据库,法国电信公司橙、倾向的客户,数据格式:TEXT数据介绍:Customer Relationship Management (CRM) is a key element of modern marketing strategies. The KDD Cup 2009 offers the opportunity to work on large marketing databases from the French Telecom company Orange to predict the propensity of customers to switch provider (churn), buy new products or services (appetency), or buy upgrades or add-ons proposed to them to make the sale more profitable (up-selling).The most practical way, in a CRM system, to build knowledge on customer is to produce scores. A score (the output of a model) is anevaluation for all instances of a target variable to explain (i.e. churn, appetency or up-selling). Tools which produce scores allow to project, on a given population, quantifiable information. The score is computed using input variables which describe instances. Scores are then used by the information system (IS), for example, to personalize the customer relationship. An industrial customer analysis platform able to build prediction models with a very large number of input variables has been developed by Orange Labs. This platform implements several processing methods for instances and variables selection, prediction and indexation based on an efficient model combined with variable selection regularization and model averaging method. The main characteristic of this platform is its ability to scale on very large datasets with hundreds of thousands of instances and thousands of variables. The rapid and robust detection of the variables that have most contributed to the output prediction can be a key factor in a marketing application.The challenge is to beat the in-house system developed by Orange Labs. It is an opportunity to prove that you can deal with a very large database, including heterogeneous noisy data (numerical and categorical variables), and unbalanced class distributions. Time efficiency is often a crucial point. Therefore part of the competition will be time-constrained to test the ability of the participants to deliver solutions quickly.[以下内容来由机器自动翻译]客户关系管理(CRM) 是现代营销战略的一个关键要素。

KDD Cup 1997 Datasets(1997年KDD杯数据集)数据摘要:This is the data set used for The First International Knowledge Discovery and Data Mining Tools Competition, which was held in conjunction with KDD-97 The Third International Conference on Knowledge Discovery and Data Mining.中文关键词:KDD杯,知识发现,数据挖掘,数据集,英文关键词:KDD Cup,Knowledge Discovery,Data Mining,Datasets,数据格式:TEXT数据用途:Data Mining数据详细介绍:KDD Cup 1997 DatasetsAbstractThis is the data set used for The First International Knowledge Discovery and Data Mining Tools Competition, which was held in conjunction with KDD-97 The Third International Conference on Knowledge Discovery and Data Mining.Usage NotesThe KDD-CUP-97 data set and the accompanying documentation are now available for general use with the following restrictions:1. The users of the data must notify Ismail Parsa (iparsa@) and KenHowes (khowes@) in the event they produce results, visuals or tables, etc. from the data and send a note that includes a summary of the final result.2. The authors of published and/or unpublished articles that use the KDD-Cup-97data set must also notify the individuals listed above and send a copy of their published and/or unpublished work.3. If you intend to use this data set for training or educational purposes, you must notreveal the name of the sponsor PVA (Paralyzed Veterans of America) to the trainees or students. You are allowed to say "a national veterans organization"...Information files∙readme. This list, listing the files in the FTP server and their contents.∙instruct.txt . General instructions for the competition.∙cup98doc.txt. This file, an overview and pointer to more detailed information about the competition.∙cup98dic.txt. Data dictionary to accompany the analysis data set.∙cup98que.txt. KDD-CUP questionnaire. PARTICIPANTS ARE REQUIRED TO FILL-OUT THE QUESTIONNAIRE and turn in with the results.∙valtargt.readme. Describes the valtargt.txt file.Data files∙cup98lrn.zip PKZIP compressed raw LEARNING data set. (36.5M; 117.2M uncompressed)∙cup98val.zip PKZIP compressed raw VALIDATION data set. (36.8M; 117.9M uncompressed)∙cup98lrn.txt.Z UNIX COMPRESSed raw LEARNING data set. (36.6M; 117.2M uncompressed)∙cup98val.txt.Z UNIX COMPRESSed raw VALIDATION data set. (36.9M; 117.9M uncompressed)∙valtargt.txt. This file contains the target fields that were left out of the validation data set that was sent to the KDD CUP 97 participants. (1.1M)数据预览:点此下载完整数据集。

KDD Cup 1997 Datasets(1997年KDD杯数据集)数据摘要:This is the data set used for The First International Knowledge Discovery and Data Mining Tools Competition, which was held in conjunction with KDD-97 The Third International Conference on Knowledge Discovery and Data Mining.中文关键词:KDD杯,知识发现,数据挖掘,数据集,英文关键词:KDD Cup,Knowledge Discovery,Data Mining,Datasets,数据格式:TEXT数据用途:Data Mining数据详细介绍:KDD Cup 1997 DatasetsAbstractThis is the data set used for The First International Knowledge Discovery and Data Mining Tools Competition, which was held in conjunction with KDD-97 The Third International Conference on Knowledge Discovery and Data Mining.Usage NotesThe KDD-CUP-97 data set and the accompanying documentation are now available for general use with the following restrictions:1. The users of the data must notify Ismail Parsa (iparsa@) and KenHowes (khowes@) in the event they produce results, visuals or tables, etc. from the data and send a note that includes a summary of the final result.2. The authors of published and/or unpublished articles that use the KDD-Cup-97data set must also notify the individuals listed above and send a copy of their published and/or unpublished work.3. If you intend to use this data set for training or educational purposes, you must notreveal the name of the sponsor PVA (Paralyzed Veterans of America) to the trainees or students. You are allowed to say "a national veterans organization"...Information files∙readme. This list, listing the files in the FTP server and their contents.∙instruct.txt . General instructions for the competition.∙cup98doc.txt. This file, an overview and pointer to more detailed information about the competition.∙cup98dic.txt. Data dictionary to accompany the analysis data set.∙cup98que.txt. KDD-CUP questionnaire. PARTICIPANTS ARE REQUIRED TO FILL-OUT THE QUESTIONNAIRE and turn in with the results.∙valtargt.readme. Describes the valtargt.txt file.Data files∙cup98lrn.zip PKZIP compressed raw LEARNING data set. (36.5M; 117.2M uncompressed)∙cup98val.zip PKZIP compressed raw VALIDATION data set. (36.8M; 117.9M uncompressed)∙cup98lrn.txt.Z UNIX COMPRESSed raw LEARNING data set. (36.6M; 117.2M uncompressed)∙cup98val.txt.Z UNIX COMPRESSed raw VALIDATION data set. (36.9M; 117.9M uncompressed)∙valtargt.txt. This file contains the target fields that were left out of the validation data set that was sent to the KDD CUP 97 participants. (1.1M)数据预览:点此下载完整数据集。

xx大学信息科学与工程学院《数据挖掘》实验报告实验序号:实验项目名称:KDD2015成为标签内容,标签内容包括两列:第一列是用户的注册id,第二列是0或者1,0表示用户继续学习,1表示退学了。

而7月22日的之前的所有日期的将作为特征提取的数据来源。

2. 以后,时间点将依次向后推迟7天,与第一步相同,在这个时间点之后的10天的数据作为标签来源,之前的所有的日期的数据作为特征提取的数据。

每一周的数据作为一个训练集的一个组成部分。

3.特征提取时,提取了12个标签:①.source-event pairs counted:用户在该课程的操作(按照“来源-事件”对来计数,这里的“来源-事件”举个例子来说就是browser-access,server-discussion,下同)数量,按周来划分,前一周,前两周,直到最后一周,举个例子来说明这个例子就是某用户在某个星期利用客户端操作了某件事的次数。

②. courses by user counted:用户有行为的课程数量,前一周、前两周、前三周、前四周的。

③. course population counted:有多少人选择了这门课。

④. course dropout counted:有多少人在上课期间放弃了这门课。

⑤. ratio of user ops on all courses:用户在该课程的操作数量占他在所有课程的操作数量的比例,前一周、前两周、前三周、前四周的,以及更以前的时间。

⑥. ratio of courses ops of all users:用户在该课程的操作数量占所有用户在该课程的操作数量的比例,前一周、前两周、前三周、前四周的,以及更以前的时间。

⑦. dropout ratio of courses:课程的放弃率。

⑧. days from course first update:这门课程的材料首次发布距今几天了。

⑨. d ays from course last update:这门课程的材料最后一次发布距今几天了。

ACM SIGKDD数据挖掘及知识发现会议1清华大学计算机系王建勇1、KDD概况ACM SIGKDD国际会议(简称KDD)是由ACM的数据挖掘及知识发现专委会[1]主办的数据挖掘研究领域的顶级年会。

它为来自学术界、企业界和政府部门的研究人员和数据挖掘从业者进行学术交流和展示研究成果提供了一个理想场所,并涵盖了特邀主题演讲(keynote presentations)、论文口头报告(oral paper presentations)、论文展板展示(poster sessions)、研讨会(workshops)、短期课程(tutorials)、专题讨论会(panels)、展览(exhibits)、系统演示(demonstrations)、KDD CUP赛事以及多个奖项的颁发等众多内容。

由于KDD的交叉学科性和广泛应用性,其影响力越来越大,吸引了来自统计、机器学习、数据库、万维网、生物信息学、多媒体、自然语言处理、人机交互、社会网络计算、高性能计算及大数据挖掘等众多领域的专家、学者。

KDD可以追溯到从1989年开始组织的一系列关于知识发现及数据挖掘(KDD)的研讨会。

自1995年以来,KDD已经以大会的形式连续举办了17届,论文的投稿量和参会人数呈现出逐年增加的趋势。

2011年的KDD会议(即第17届KDD 年会)共收到提交的研究论文(Research paper)714篇和应用论文(Industrial and Government paper)73篇,参会人数也达到1070人。

下面我们将就会议的内容、历年论文投稿及接收情况以及设置的奖项情况进行综合介绍。

此外,由于第18届KDD年会将于2012年8月12日至16日在北京举办,我们还将简单介绍一下KDD’12[4]的有关情况。

2、会议内容自1995年召开第1届KDD年会以来,KDD的会议内容日趋丰富且变的相对稳定。

其核心内容是以论文报告和展版(poster)的形式进行数据挖掘同行之间的学术交流和成果展示。

第1篇一、实验背景随着大数据时代的到来,数据挖掘技术逐渐成为各个行业的重要工具。

数据挖掘是指从大量数据中提取有价值的信息和知识的过程。

本实验旨在通过数据挖掘技术,对某个具体领域的数据进行挖掘,分析数据中的规律和趋势,为相关决策提供支持。

二、实验目标1. 熟悉数据挖掘的基本流程,包括数据预处理、特征选择、模型选择、模型训练和模型评估等步骤。

2. 掌握常用的数据挖掘算法,如决策树、支持向量机、聚类、关联规则等。

3. 应用数据挖掘技术解决实际问题,提高数据分析和处理能力。

4. 实验结束后,提交一份完整的实验报告,包括实验过程、结果分析及总结。

三、实验环境1. 操作系统:Windows 102. 编程语言:Python3. 数据挖掘库:pandas、numpy、scikit-learn、matplotlib四、实验数据本实验选取了某电商平台用户购买行为数据作为实验数据。

数据包括用户ID、商品ID、购买时间、价格、商品类别、用户年龄、性别、职业等。

五、实验步骤1. 数据预处理(1)数据清洗:剔除缺失值、异常值等无效数据。

(2)数据转换:将分类变量转换为数值变量,如年龄、性别等。

(3)数据归一化:将不同特征的范围统一到相同的尺度,便于模型训练。

2. 特征选择(1)相关性分析:计算特征之间的相关系数,剔除冗余特征。

(2)信息增益:根据特征的信息增益选择特征。

3. 模型选择(1)决策树:采用CART决策树算法。

(2)支持向量机:采用线性核函数。

(3)聚类:采用K-Means算法。

(4)关联规则:采用Apriori算法。

4. 模型训练使用训练集对各个模型进行训练。

5. 模型评估使用测试集对各个模型进行评估,比较不同模型的性能。

六、实验结果与分析1. 数据预处理经过数据清洗,剔除缺失值和异常值后,剩余数据量为10000条。

2. 特征选择通过相关性分析和信息增益,选取以下特征:用户ID、商品ID、购买时间、价格、商品类别、用户年龄、性别、职业。

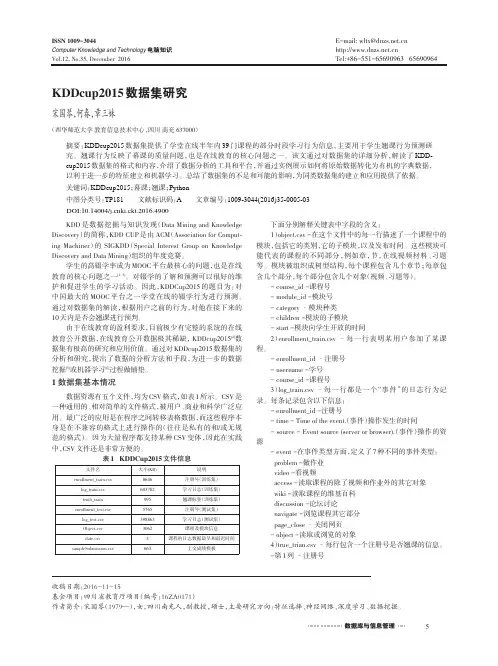

数据库与信息管理本栏目责任编辑:王力KDDcup2015数据集研究宋国琴,何春,章三妹(西华师范大学教育信息技术中心,四川南充637000)摘要:KDDcup2015数据集提供了学堂在线半年内39门课程的部分时段学习行为信息,主要用于学生翘课行为预测研究。

翘课行为反映了幕课的质量问题,也是在线教育的核心问题之一。

该文通过对数据集的详细分析,解读了KDD-cup2015数据集的格式和内容,介绍了数据分析的工具和平台,并通过实例展示如何将原始数据转化为有机的字典数据,以利于进一步的特征建立和机器学习。

总结了数据集的不足和可能的影响,为同类数据集的建立和应用提供了依据。

关键词:KDDcup2015;幕课;翘课;Python 中图分类号:TP181文献标识码:A文章编号:1009-3044(2016)35-0005-03KDD 是数据挖掘与知识发现(Data Mining and Knowledge Discovery )的简称,KDD CUP 是由ACM (Association for Comput⁃ing Machiner )的SIGKDD (Special Interest Group on Knowledge Discovery and Data Mining )组织的年度竞赛。

学生的高辍学率成为MOOC 平台最核心的问题,也是在线教育的核心问题之一[1-3]。

对辍学的了解和预测可以很好的维护和促进学生的学习活动。

因此,KDDCup2015的题目为:对中国最大的MOOC 平台之一学堂在线的辍学行为进行预测。

通过对数据集的解读,根据用户之前的行为,对他在接下来的10天内是否会翘课进行预判。

由于在线教育的盈利要求,目前极少有完整的系统的在线教育公开数据,在线教育公开数据极其稀缺,KDDcup2015[4]数据集有极高的研究和应用价值。

通过对KDDcup2015数据集的分析和研究,提出了数据的分析方法和手段,为进一步的数据挖掘[5]或机器学习[6]过程做铺垫。

KDD CUP 2004英文关键词:KDD CUP 2004,performance criteria,bioinformatics,quantum physics,中文关键词:KDD 杯2004 年业绩标准、生物信息学、量子物理,数据格式:TEXT数据介绍:This year's competition focuses on data-mining for a variety of performance criteria such as Accuracy, Squared Error, Cross Entropy, and ROC Area. As described on this WWW-site, there are two main tasks based on two datasets from the areas of bioinformatics and quantum physics.The file you downloaded is a TAR archive that is compressed with GZIP. Most decompression programs (e.g. winzip) can decompress these formats. If you run into problems, send us email. The archive shouldcontain four files:phy_train.dat: Training data for the quantum physics task (50,000 train cases)phy_test.dat: Test data for the quantum physics task (100,000 test cases) bio_train.dat: Training data for the protein homology task (145,751 lines) bio_test.dat: Test data for the protein homology task (139,658 lines)The file formats for the two tasks are as follows.Format of the Quantum Physics DatasetEach line in the training and the test file describes one example. The structure of each line is as follows:The first element of each line is an EXAMPLE ID that uniquely describes the example. You will need this EXAMPLE ID when you submit results. The second element is the class of the example. Positive examples are denoted by 1, negative examples by 0. Test examples have a "?" in this position. This is a balanced problem so the target values are roughly half 0's and 1's.All following elements are feature values. There are 78 feature values in each line.Missing values: columns 22,23,24 and 46,47,48 use a value of "999" to denote "not available", and columns 31 and 57 use "9999" to denote "not available". These are the column numbers in the data tables starting with 1 for the first column (the case ID numbers). If you remove the first twocolumns (the case ID numbers and the targets), and start numbering the columns at the first attribute, these are attributes 20,21,22, and 44,45,46, and 29 and 55, respectively. You may treat missing values any way you want, including coding them as a unique value, imputing missing values, using learning methods that can handle missing values, ignoring these attributes, etc.The elements in each line are separated by whitespace.Format of the Protein Homology DatasetEach line in the training and the test file describes one example. The structure of each line is as follows:The first element of each line is a BLOCK ID that denotes to which native sequence this example belongs. There is a unique BLOCK ID for each native sequence. BLOCK IDs are integers running from 1 to 303 (one for each native sequence, i.e. for each query). BLOCK IDs were assigned before the blocks were split into the train and test sets, so they do not run consecutively in either file.The second element of each line is an EXAMPLE ID that uniquely describes the example. You will need this EXAMPLE ID and the BLOCK ID when you submit results.The third element is the class of the example. Proteins that are homologous to the native sequence are denoted by 1, non-homologous proteins (i.e. decoys) by 0. Test examples have a "?" in this position.All following elements are feature values. There are 74 feature values in each line. The features describe the match (e.g. the score of a sequence alignment) between the native protein sequence and the sequence that is tested for homology.There are no missing values (that we know of) in the protein data.To give an example, the first line in bio_train.dat looks like this:279 261532 0 52.00 32.69 ... -0.350 0.26 0.76279 is the BLOCK ID. 261532 is the EXAMPLE ID. The "0" in the third column is the target value. This indicates that this protein is not homologous to the native sequence (it is a decoy). If this protein was homologous the target would be "1". Columns 4-77 are the input attributes.The elements in each line are separated by whitespace.[以下内容来由机器自动翻译]今年的比赛重点的性能标准精度、平方错误、交叉熵和中华民国区等各种数据挖掘。

Data Mining on the Purchase Behaviors of the Electronic Commerce Customers 作者: 吕晓玲[1] 吴喜之[2]

作者机构: [1]香港城市大学管理科学系,香港 [2]中国人民大学统计学院,北京100872

出版物刊名: 统计与信息论坛

页码: 29-32页

主题词: 电子商务 网络购物 KDD Cup 2000数据 数据挖掘 购物篮模型 预测模型

摘要:电子商务已经成为越来越多的消费者购物的一个重要途径,分析网络购物客户的个人特征及其购物行为,对商业的成功有着至关重要的作用。

然而电子商务还是一个崭新的商业领域,很多的业界人士仍忙于技术方面的考虑,却很少分析客户的网络购买行为。

而使用真实网络购物KDD Cup 2000数据,分析Gazelle.com公司客户的个人特征和网络购物行为,并应用数据挖掘的购物篮模型对各商品之间的关联性进行分析,才能更确切地预测模型预测客户的忠诚度。

KDD CUP 2006英文关键词:KDD Cup 2006,medical data mining,Computer-AidedDetection,clinical problem,中文关键词:KDD 杯2006 年,医疗数据挖掘,计算机辅助检测、临床问题,数据格式:TEXT数据介绍:This year's KDD Cup challenge problem is drawn from the domain of medical data mining. The tasks are a series of Computer-Aided Detection problems revolving around the clinical problem of identifying pulmonary embolisms from three-dimensional computed tomography data. This challenging domain is characterized by:Multiple instance learningNon-IID examplesNonlinear cost functionsSkewed class distributionsNoisy class labelsSparse dataFor this task, a total of 69 cases were collected and provided to an expert chest radiologist, who reviewed each case and marked the PEs. The cases were randomly divided into training and test sets. The training set includes 38 positive cases and 8 negative cases, while the test set contains the remaining 23 cases. The test group is sequestered and will only be used to evaluate the performance of the final system.KDDPETrain.txtKDDPEfeatureNames.txtKDDPETest.txtKDDPETestLabel.txtNote: It turns out that patients numbers 3111 and 3126 in the test data duplicate patients 3103 and 3115, respectively, in the training data. Therefore, 3111 and 3126 were dropped from the final evaluation for the competition. These patients should be discarded from testing for any future comparisons to the KDD Cup results. The filedrop_duplicate_patients_mask.dat, in the KDD Cup archive file, can be used to identify the excluded rows in the KDDPETest.txt file.More data descriptions are provided in this PDF file.Data FormatThe training data will consist of a single data file plus a file containingfield (feature) names. Each line of the file represents a single candidate and comprises a number of whitespace-separated fields:Field 0: Patient ID (unique integer per patient)Field 1: Label - 0 for "negative" - this candidate is not a PE; >0 for "positive" - this candidate is a PEField 2+: Additional featuresThe testing data will be in the same format data file, except that Field 1 (label) will be "-1" denoting "unknown".[以下内容来由机器自动翻译]今年的KDD 杯挑战问题是来自医疗数据挖掘中的域。

ACM SIGKDD数据挖掘及知识发现会议1清华大学计算机系王建勇1、KDD概况ACM SIGKDD国际会议(简称KDD)是由ACM的数据挖掘及知识发现专委会[1]主办的数据挖掘研究领域的顶级年会。

它为来自学术界、企业界和政府部门的研究人员和数据挖掘从业者进行学术交流和展示研究成果提供了一个理想场所,并涵盖了特邀主题演讲(keynote presentations)、论文口头报告(oral paper presentations)、论文展板展示(poster sessions)、研讨会(workshops)、短期课程(tutorials)、专题讨论会(panels)、展览(exhibits)、系统演示(demonstrations)、KDD CUP赛事以及多个奖项的颁发等众多内容。

由于KDD的交叉学科性和广泛应用性,其影响力越来越大,吸引了来自统计、机器学习、数据库、万维网、生物信息学、多媒体、自然语言处理、人机交互、社会网络计算、高性能计算及大数据挖掘等众多领域的专家、学者。

KDD可以追溯到从1989年开始组织的一系列关于知识发现及数据挖掘(KDD)的研讨会。

自1995年以来,KDD已经以大会的形式连续举办了17届,论文的投稿量和参会人数呈现出逐年增加的趋势。

2011年的KDD会议(即第17届KDD 年会)共收到提交的研究论文(Research paper)714篇和应用论文(Industrial and Government paper)73篇,参会人数也达到1070人。

下面我们将就会议的内容、历年论文投稿及接收情况以及设置的奖项情况进行综合介绍。

此外,由于第18届KDD年会将于2012年8月12日至16日在北京举办,我们还将简单介绍一下KDD’12[4]的有关情况。

2、会议内容自1995年召开第1届KDD年会以来,KDD的会议内容日趋丰富且变的相对稳定。

其核心内容是以论文报告和展版(poster)的形式进行数据挖掘同行之间的学术交流和成果展示。

KDD Cup 2003 Datasets(2003年KDD数据集)数据摘要:The e-print arXiv, initiated in Aug 1991, has become the primary mode of research communication in multiple fields of physics, and some related disciplines. It currently contains over 225,000 full text articles and is growing at a rate of 40,000 new submissions per year. It provides nearly comprehensive coverage of large areas of physics, and serves as an on-line seminar system for those areas. It serves 10 million requests per month, including tens of thousands of search queries per day. Its collections are a unique resource for algorithmic experiments and model building. Usage data has been collected since 1991, including Web usage logs beginning in 1993. On average, the full text of each paper was downloaded over 300 times since 1996, and some were downloaded tens of thousands of times.中文关键词:KDD杯,数据集,研究交流,物理,文章,算法与实验,建模,英文关键词:KDD Cup,Datasets,researchcommunication,physics,articles,algorithmic experiments,model building,数据格式:TEXT数据用途:Social Network AnalysisInformation ProcessingClassification数据详细介绍:KDD Cup 2003 DatasetsNewsSept 5, 2003: Presentation slides from the KDD conference are now available.August 20, 2003: Scores for the winners of Tasks 1-3 have been posted.August 19, 2003: Solutions for Task 1-3 have been posted.August 18, 2003: Results for Task 1 have been posted.August 15, 2003: Results for Tasks 2, 3, and 4 have been posted. The winners for Task 1 will be announced by August 18.IntroductionWelcome to KDD Cup 2003, a knowledge discovery and data mining competition held in conjunction with the Ninth Annual ACM SIGKDD Conference. This year's competition focuses on problems motivated by network mining and the analysis of usage logs. Complex networks have emerged as a central theme in data mining applications, appearing in domains that range from communication networks and the Web, to biological interaction networks, to social networks and homeland security. At the same time, thedifficulty in obtaining complete and accurate representations of large networks has been an obstacle to research in this area.This KDD Cup is based on a very large archive of research papers that provides an unusually comprehensive snapshot of a particular social network in action; in addition to the full text of research papers, it includes both explicit citation structure and (partial) data on the downloading of papers by users. It provides a framework for testing general network and usage mining techniques, which will be explored via four varied and interesting task. Each task is a separate competition with its own specific goals.The first task involves predicting the future; contestants predict how many citations each paper will receive during the three months leading up to the KDD 2003 conference. For the second task, contestants must build a citation graph of a large subset of the archive from only the LaTex sources. In the third task, each paper's popularity will be estimated based on partial download logs. And the last task is open! Given the large amount of data, contestants can devise their own questions and the most interesting result is the winner.Data DescriptionThe e-print arXiv, initiated in Aug 1991, has become the primary mode of research communication in multiple fields of physics, and some related disciplines. It currently contains over 225,000 full text articles and is growing at a rate of 40,000 new submissions per year. It provides nearly comprehensive coverage of large areas of physics, and serves as an on-line seminar system for those areas. It serves 10 million requests per month, including tens of thousands of search queries per day. Its collections are a unique resource for algorithmic experiments and model building. Usage data has been collected since 1991, including Web usage logs beginning in 1993. On average, the full text of each paper was downloaded over 300 times since 1996, and some were downloaded tens of thousands of times.The Stanford Linear Accelerator Center SPIRES-HEP database has been comprehensively cataloguing the High Energy Particle Physics (HEP) literature onlinesince 1974, and indexes more than 500,000 high-energy physics related articles including their full citation tree.数据预览:点此下载完整数据集。