Constraint Satisfaction Problems with Order-Sorted Domains


Constraints Are Also a Form of Happiness (1000-word essay)

English answer:

Constraints are indeed a form of happiness. At first glance, it may seem contradictory to associate constraints with happiness. After all, constraints imply limitations and restrictions, which may hinder one's freedom and autonomy. However, upon closer examination, it becomes clear that constraints can actually contribute to a sense of contentment and fulfillment.

Constraints provide structure and guidance in our lives. They set boundaries and establish rules, which help us navigate through the complexities of life. Without constraints, we would be lost and overwhelmed by the infinite possibilities and choices available to us. Constraints act as a compass, guiding us towards what is important and valuable.

Moreover, constraints foster discipline and self-control. By adhering to constraints, we develop the ability to resist temptations and make responsible decisions. This self-discipline brings a sense of achievement and pride, leading to a deeper satisfaction and happiness. It allows us to overcome obstacles and achieve our goals, knowing that we have exercised restraint and stayed on the right path.

Constraints also encourage creativity and innovation. When faced with limitations, we are forced to think outside the box and find alternative solutions. Constraints push us to explore new ideas and approaches, leading to breakthroughs and advancements. The challenges posed by constraints stimulate our minds and ignite our creativity, ultimately leading to a greater sense of accomplishment and happiness.

In addition, constraints promote gratitude and appreciation. When we are aware of the limitations imposed on us, we learn to value what we have and cherish the opportunities available to us. Constraints remind us of the privileges and blessings we enjoy, fostering a sense of gratitude and contentment. This gratitude enhances our overall well-being and contributes to our happiness.

In conclusion, constraints are not necessarily detrimental to happiness. On the contrary, they can be a source of contentment and fulfillment. Constraints provide structure, foster discipline, encourage creativity, and promote gratitude. Embracing and navigating within constraints can lead to a deeper sense of happiness and satisfaction in life.

Chinese answer:

Constraints are indeed a form of happiness.

EUROGRAPHICS Workshop on ... (2004), pp. 1–10
M.-P. Cani and M. Slater (Guest Editors)

Can Machines Interpret Line Drawings?

P. A. C. Varley (1), R. R. Martin (2) and H. Suzuki (1)
(1) Department of Fine Digital Engineering, The University of Tokyo, Tokyo, Japan
(2) School of Computer Science, Cardiff University, Cardiff, Wales, UK

Keywords: Sketching, Line Drawing Interpretation, Engineering Design, Conceptual Design

1. Introduction

Can computers interpret line drawings of engineering objects? In principle, they cannot: any line drawing is the 2D representation of an infinite number of possible 3D objects.

Fortunately, a counter-argument suggests that computers should be able to interpret line drawings. Human engineers use line drawings to communicate shape in the clear expectation that the recipient will interpret the drawing in the way the originator intended. It is believed [Lip98, Var03a] that human interpretation of line drawings is a skill which can be learned. If such skills could be translated into algorithms, computers could understand line drawings.

There are good reasons why we want computers to interpret line drawings. Studies such as Jenkins [Jen92] have shown that it is common practice for design engineers to sketch ideas on paper before entering them into a CAD package. Clearly, time and effort could be saved if a computer could interpret the engineer's initial concept drawings as solid models. Furthermore, if this conversion could be done within a second or two, it would give helpful feedback, further enhancing the designer's creativity [Gri97].

The key problem is to produce a model of the 3D object the engineer would regard as the most reasonable interpretation of the 2D drawing, and to do so quickly. While there are infinitely many objects which could result in drawings corresponding to e.g. Figures 1 and 2, in practice, an engineer would be in little doubt as to which was the correct interpretation. For this reason, the problem is as much heuristic as geometric: it is not merely to find a geometrically-realisable solid which corresponds to the drawing, but to find the one which corresponds to the engineer's expectations.

[Figure 1: Trihedral Drawing [Yan85]]
[Figure 2: Non-Trihedral Drawing [Yan85]]

We suggest the following fully automatic approach, requiring no user intervention; our implementation verifies its utility for many drawings of polyhedral objects. (In a companion paper [VTMS04], we summarise an approach for interpreting certain drawings of curved objects with minimal user intervention.)

• Convert the engineer's original freehand sketch to a line drawing. This is described in Section 3.
• Determine the frontal geometry of the object. The three most crucial aspects of this are:
  – Label the lines in the drawing as convex, concave, or occluding. See Section 4.
  – Determine which pairs of lines in the drawing are intended to be parallel in 3D. See Section 5.
  – Inflate the drawing to 2½D [...]

[...] In a frontal geometry, everything visible in the natural line drawing is given a position in 3D space, but the occluded part of the object, not visible from the chosen viewpoint, is not present.

A polyhedron is trihedral if three faces meet at each vertex. It is extended trihedral [PLVT98] if three planes meet at each vertex (there may be four or more faces if some are coplanar). It is tetrahedral if no more than four faces meet at any vertex. It is a normalon if all edges and face normals are aligned with one of three main perpendicular axes.

Junctions of different shapes are identified by letter: junctions where two lines meet are L-junctions, junctions of three lines may be T-junctions, W-junctions or Y-junctions, and junctions of four lines may be K-junctions, M-junctions or X-junctions. Vertex shapes follow a similar convention: for example, when all four edges of a K-vertex are visible, the drawing has four lines meeting at a K-junction.

When reconstructing an object from a drawing, we take the correct object
to be the one which a human would decide to be the most plausible interpretation of the drawing.

3. Convert Sketch to Line Drawing

For drawings of polyhedral objects, we believe it to be most convenient for the designer to input straight lines directly, and our own prototype system, RIBALD, includes such an interface. However, it could be argued that freehand sketching is more "intuitive", corresponding to a familiar interface: pen and paper. Several systems exist which are capable of converting freehand sketches into natural line drawings; see e.g. [ZHH96], [Mit99], [SS01].

4. Which Lines are Convex/Concave?

Line labelling is the process of determining whether each line in the drawing represents a convex, a concave, or an occluding edge. For drawings of trihedral objects with no hole loops, the line labelling problem was essentially solved by Huffman [Huf71] and Clowes [Clo70], who elaborated the catalogue of valid trihedral junction labels. This turns line labelling into a discrete constraint satisfaction problem with 1-node constraints that each junction must have a label in the catalogue and 2-node constraints that each line must have the same label throughout its length. The Clowes-Huffman catalogue for L-, W- and Y-junctions is shown in Figure 3; + indicates a convex edge, − indicates a concave edge, and an arrow indicates an occluding edge with the occluding face on the right-hand side of the arrow. In trihedral objects, T-junctions (see Figure 4) are always occluding.

[Figure 3: Clowes-Huffman Catalogue]
[Figure 4: Occluding T-Junctions]

For trihedral objects, algorithms for Clowes-Huffman line labelling, e.g. those of Waltz [Wal72] and Kanatani [Kan90], although theoretically taking O(2^n) time, are usually O(n) in practice [PT94]. It is believed that the time taken is more a function of the number of legal labellings than of the algorithm, and for trihedral objects there is often only a single legal labelling. For example, Figure 1 has only one valid labelling if the trihedral (Clowes-Huffman) catalogue is used.

Extending line labelling algorithms to non-trihedral normalons is fairly straightforward [PLVT98]. The additional legal junction labels are those shown in Figure 5. Note, however, that a new problem has been introduced: the new T-junctions are not occluding.

[Figure 5: Extended Trihedral Junctions]

Extension to the 4-hedral general case is less straightforward. The catalogue of 4-hedral junction labels is much larger [VM03]; for example, Figure 6 shows just the possibilities for W-junctions. Because the 4-hedral catalogue is no longer sparse, there are often many valid labellings for each drawing. Non-trihedral line labelling using the previously mentioned algorithms is now O(2^n) in practice as well as in theory, and thus too slow. Furthermore, choosing the best labelling from the valid ones is not straightforward either, although there are heuristics which can help (see [VM03]).

[Figure 6: 4-Hedral W-Junctions]

Instead, an alternative labelling method is to use a relaxation method. Label probabilities are maintained for each line and each junction; these probabilities are iteratively updated. If a probability falls to 0, that label is removed; if a probability reaches 1, that label is chosen and all other labels are removed. In practice, this method is much faster: labels which are possible but very unlikely are removed quickly by relaxation, whereas they are not removed at all by combinatorial algorithms. However, relaxation methods are less reliable (the heuristics developed for choosing between valid labellings when using combinatorial methods are reasonably effective). In tests we performed on 535 line drawings [Var03b], combinatorial labelling labelled 428 entirely correctly, whereas relaxation labelling only labelled 388 entirely correctly.

The most serious problem with either approach is that in treating line labelling as a discrete constraint
satisfaction problem, the geometry of the drawing is not taken into account, e.g. the two drawings in Figure 7 are labelled the same. The problems created by ignoring geometry become much worse in drawings with several non-trihedral junctions (see [VSM04]), and for these, other methods are required.

[Figure 7: Same Topology]

A new approach to labelling outlined in that paper and subsequently developed further [VMS04] makes use of an idea previously proposed for inflation [LB90]:

• Assign relative i, j, k coordinates to each junction by assuming that distances along the 2D axes in Figure 8 correspond to 3D distances along spatial i, j, k axes.
• Rotate the object from i, j, k to x, y, z space, where the latter correspond to the 2D x, y axes and z is perpendicular to the plane of the drawing.
• Find the 3D equation for each planar region using vertex x, y, z coordinates.
• For each line, determine from the equations of the two faces which meet the line whether it is convex, concave or occluding (if there is only one face, the line is occluding).

[Figure 8: Three Perpendicular Axes in 2D (axis labels +i, −i, +j, −j, +k, −k)]

On its own, this method does not work well: e.g. it is difficult to specify a threshold distance d between two faces such that a distance greater than d corresponds to a step, and hence an occluding line, while if the distance is less than d the planes meet and the line is convex or concave. However, using the predictions made by this method as input to a relaxation labelling algorithm provides far better results than using arbitrary initialisation in the same algorithm.

This idea can be combined with many of the ideas in Section 6 when producing a provisional geometry. Various variants of the idea have been considered (see [VMS04]), particularly with reference to how the i, j, k axes are identified in a 2D drawing, without as yet any firm conclusions as to which is best overall. Another strength is that the idea uses the relaxation labeller to reject invalid labellings while collating predictions made by other approaches. This architecture allows additional approaches to labelling, such as Clowes-Huffman labelling for trihedral objects, to make a contribution in those cases where they are useful [VMS04].

Even so, the current state-of-the-art only labels approximately 90% of non-boundary edges correctly in a representative sample of drawings of engineering objects [VMS04].

Note that any approach which uses catalogue-based labelling can only label those drawings whose vertices are in a catalogue. It seems unlikely that 7-hedral and 8-hedral extended K-type vertices of the type found in Figure 9 will be catalogued in the near future. In view of this, one must question whether line labelling is needed. Humans are skilled at interpreting line drawings, and introspection tells us that line labelling is not always a part of this process; it may even be that humans interpret the drawing first, and then (if necessary) determine which lines are convex, concave and occluding from the resulting mental model.

[Figure 9: Uncatalogued Vertices]

Our investigations indicate that line labelling is needed, at least at present. We are investigating interpreting line drawings without labelling, based on identifying aspects of drawings which humans are known or believed to see quickly, such as line parallelism [LS96], cubic corners [Per68] and major axis alignment [LB90]. Current results are disappointing. Better frontal geometry can be obtained if junction labels are available. More importantly, the frontal geometry obtained without labelling is topologically unsatisfactory. Distinguishing occluding from non-occluding T-junctions without labelling information is unreliable, and as a result, determination of hidden topology (Section 8) is unlikely to be successful.

5. Which Lines are Parallel?

Determining which lines in a drawing are intended to be parallel in 3D is surprisingly difficult. It is, for example, obvious to a human which
lines in the two drawings in Figure 10 are intended to be parallel and which are not, but determining this algorithmically presents problems.

[Figure 10: Which Lines are Parallel?]

Sugihara [Sug86] attempted to define this problem away by using a strict definition of the general position rule: the user must choose a viewpoint such that if lines are parallel in 2D, the corresponding edges in 3D must be parallel. This makes no allowance for the small drawing errors which inevitably arise in a practical system.

Grimstead's "bucketing" approach [Gri97], grouping lines with similar orientations, works well for many drawings, but fails for both drawings in Figure 10. Our own "bundling" approach [Var03a], although somewhat more reliable, fares no better with these two drawings. The basic idea used in bundling is that edges are parallel if they look parallel unless it can be deduced from other information that they cannot be parallel. The latter is problematic for two reasons. Firstly, if 'other information' means labelling, identification of parallel lines must occur after labelling, limiting the system organisation for computing frontal geometry. Secondly, to cover increasingly rare exceptional cases, we must add extra, ever more complex, rules for deducing which lines may or may not be parallel. This is tedious and rapidly reaches the point of diminishing returns. For example, a rule which can deduce that the accidental coincidence in Figure 11 should not result in parallel lines would be both complicated to implement and of no use in many other cases.

[Figure 11: Accidental Coincidence]

Furthermore, there are also cases where it is far from clear even to humans which edges should be parallel in 3D (edges A, B, C and D in Figure 12 are a case in point).

[Figure 12: Which Edges Should Be Parallel? (edges labelled A, B, C, D)]

In view of these problems, more recent approaches to frontal geometry (e.g. [VMS04]) simply ignore the possibility that some lines which appear parallel in 2D cannot in fact be parallel in 3D. Initially, it is assumed that they are parallel; this information is then re-checked after inflation.

6. Inflation to 2½D

Inflation is performed by assigning z-coordinates (depth coordinates) to each vertex, producing a frontal geometry. The approach taken here is the simplest: we use compliance functions [LS96] to generate equations linear in vertex depth coordinates, and solve the resulting linear least squares problem. Many compliance functions can be translated into linear equations in z-coordinates. Of these, the most useful are:

Cubic Corners [Per68], sometimes called corner orthogonality, assumes that a W-junction or Y-junction corresponds to a vertex at which three orthogonal faces meet. See Figure 13: the linear equation relates depth coordinates z_V and z_A to angles F and G. Nakajima [Nak99] reports successful creation of frontal geometry solely by using a compliance function similar to corner orthogonality, albeit with a limited set of test drawings in which orthogonality predominates.
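The least-squares formulation described above can be illustrated with a minimal sketch. It assumes each compliance function has already been reduced to one linear equation in the vertex depths; the function names (`solve_linear`, `inflate`), the equation format and the numbers are hypothetical and are not taken from RIBALD. The sketch forms the normal equations and solves them by Gaussian elimination, using only the Python standard library.

```python
def solve_linear(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(matrix)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            # No unique answer: the equations do not pin down every depth.
            raise ValueError("depth equations are under-constrained")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - known) / aug[i][i]
    return x


def inflate(num_vertices, equations):
    """Least-squares vertex depths from equations sum(c_v * z_v) = rhs.

    equations is a list of (coeffs, rhs) pairs, where coeffs maps a vertex
    index to its coefficient (a hypothetical format chosen for this sketch).
    """
    # Accumulate the normal equations (A^T A) z = A^T b row by row.
    ata = [[0.0] * num_vertices for _ in range(num_vertices)]
    atb = [0.0] * num_vertices
    for coeffs, rhs in equations:
        for i, ci in coeffs.items():
            atb[i] += ci * rhs
            for j, cj in coeffs.items():
                ata[i][j] += ci * cj
    return solve_linear(ata, atb)


# Made-up example: three vertices and four slightly inconsistent equations,
# as might arise from small drawing errors.
equations = [
    ({0: 1.0}, 0.0),            # pin vertex 0 at depth 0
    ({1: 1.0, 0: -1.0}, 1.0),   # z1 - z0 = 1.0
    ({2: 1.0, 1: -1.0}, 0.5),   # z2 - z1 = 0.5
    ({2: 1.0, 0: -1.0}, 1.8),   # z2 - z0 = 1.8 (conflicts with the two above)
]
depths = inflate(3, equations)
print(depths)
```

In this example the three relative-depth equations disagree by 0.3 in total, and the least-squares solution spreads that disagreement evenly, leaving a residual of magnitude 0.1 on each. A practical system would presumably also weight equations by how much each compliance function is trusted; that refinement is omitted here.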
Line Parallelism uses two edges assumed to be parallel in 3D. The linear equation relates the four z-coordinates of the vertices at either end of the two edges. Line parallelism is not, by itself, inflationary: there is a trivial solution (z = 0 for all vertices).

[Figure 13: Cubic Corners (vertices labelled V, A, B, C; angles labelled E, F, G)]

Vertex Coplanarity uses four vertices assumed to be coplanar. The linear equation relating their z-coordinates is easily obtained from 2D geometry. Vertex coplanarity is also not, by itself, inflationary, having the trivial solution z = 0 for all vertices. General use of four-vertex coplanarity is not recommended (Lipson [Lip98] notes that if three vertices on a face are collinear, four-vertex coplanarity does not guarantee a planar face). However, it is invaluable for cases like those in Figure 14, to link vertices on inner and outer face loops: without it the linear system of depth equations would be disjoint, with infinitely many solutions.

[Figure 14: Coplanar Vertices]

Lipson and Shpitalni [LS96] list the above and several other compliance functions; we have devised the following.

Junction-Label Pairs [Var03a] assumes that pairs of junctions with identified labels have the same depth implications they would have in the simplest possible drawing containing such a pair. An equation is generated relating the vertex depths at each end of the line based on the junction labels of those vertices. For example, see Figure 15: this pair of junction labels can be found in an isometric drawing of a cube, and the implication is that the Y-junction is nearer to the viewer than the W-junction, with the ratio of 2D line length to depth change being √[...]

[Figure 15: Junction Label Pair]
[Figure 16: Incorrect Inflation?]

[...] paper. Although it occasionally fails to determine correctly which end of a line should be nearer the viewer, such failures arise in cases like the one in Figure 16 where a human would also have difficulty. The only systematic case where using a linear system of compliance functions fails is for Platonic and Archimedean solids, but a known special-case method (Marill's MSDA [Mar91]) is successful for these.

In order to make the frontal geometry process more robust when the input information (especially line labelling) is incorrect, we have experimented with two approaches to inflation which do without some or all of this information.

The first is the 'preliminary inflation' described in Section 4: find the i, j, k axes in the drawing, inflate the object in i, j, k space, then determine the transformation between i, j, k and x, y, z spaces. This requires parallel line information (to group lines along the i, j, k axes). Where such information is misleading, the quality of inflation is unreliable, but this is not always a problem, e.g. the left-hand drawing in Figure 10 is labelled correctly despite incorrect parallel line information. Once (i) a drawing has been labelled correctly and (ii) there is reason to suppose that the parallel line information is unreliable, better-established inflation methods can be used
to refine the frontal geometry. However, the right-hand drawing is one of those which is not labelled correctly, precisely because of the misleading parallel line information.

A second promising approach, needing further work, attempts to emulate what is known or hypothesised about human perception of line drawings. It allocates merit figures to possible facts about the drawing; these, and the geometry which they imply, are iteratively refined using relaxation:

• Face-vertex coplanarity corresponds to the supposition that vertices lie in the plane of faces. We have already noted the difficulty of distinguishing occluding from non-occluding T-junctions; to do so, we must at some time decide which vertices do lie in the plane of a face.
• Corner orthogonality, which was described earlier. At least one inflationary compliance function is required, and this one has been found reliable. Although limited in principle, corner orthogonality is particularly useful in practice as cubic corners are common in engineering objects.
• Major axis alignment is the idea described above of using i, j, k axes. This is also an inflationary compliance function. It is newer than corner orthogonality, and for this reason considered less reliable. However, unlike corner orthogonality (which can fail entirely in some circumstances), major axis alignment does always inflate a drawing, if not always entirely correctly.
• Line parallelism is useful for producing 'tidy' output (e.g. to make lines terminating in occluding T-junctions parallel in 3D to other lines with similar 2D orientation). However, the main reason for its inclusion here is that it also produces belief values for pairs of lines being parallel as a secondary output, solving the problem in Section 5.
• Through lines correspond to the requirement that a continuous line intercepted by a T-junction or K-junction corresponds to a single continuous edge of the object.

A third, simpler, approach assumes that numerically correct geometry is not required at this early stage of processing, and identifying relative depths of neighbouring vertices is sufficient. Schweikardt and Gross's [SG00] work, although limited to objects which can be labelled using the Clowes-Huffman catalogue, and not extending well to non-normalons, suggests another possible way forward.

7. Classification and Symmetry

Ideally, one method should work for all drawings of polyhedral objects; identification of special cases should not be necessary. However, the state-of-the-art is well short of this ideal: in practice it is useful to identify certain frequent properties of drawings and objects. Identification of planes of mirror symmetry is particularly useful. Knowledge of such a symmetry can help both to construct hidden topology (Section 8) and to beautify the resulting geometry (Section 9). Identification of centres of rotational symmetry is less useful [Var03a], but similar methods could be applied.

The technique adopted is straightforward: for each possible bisector of each face, create a candidate plane of mirror symmetry, attempt to propagate the mirror symmetry across the entire visible part of the object, and assess the results using the criteria of (i) to what extent the propagation attempt succeeded, (ii) whether there is anything not visible which should be visible if the plane of mirror symmetry were a genuine property of the object, and (iii) how well the frontal geometry corresponds to the predicted mirror symmetry.

Classification of commonly-occurring types of objects (examples include extrusions, normalons, and trihedral objects) is also useful [Var03a], as will be seen in Section 8.

One useful combination of symmetry and classification is quite common in engineering practice (e.g. see Figures 1 and 2): a semi-normalon (where many, but not all, edges and face normals are aligned with the major axes) also having a dominant plane of mirror symmetry aligned with one of the object's major axes [Var03a]. The notable advantage of this classification is that during
beautification (Section 9) it provides constraints on the non-axis-aligned edges and faces.

We recommend that symmetry detection and classification should be performed after creation of the frontal geometry. Detecting candidate symmetries without line labels is unreliable, and assessing candidate symmetries clearly benefits from the 3D information provided by inflation. The issue is less clear for classification. Some classifications (e.g. whether the object is a normalon) can be done directly from the drawing, without creating the frontal geometry first. However, others cannot, so for simplicity it is preferable to classify the object after creating its frontal geometry.

8. Determine Hidden Topology

Once the frontal geometry has been determined, the next stage of processing is to add the hidden topology. The method is essentially that presented in [VSMM00]: firstly, add extra edges to complete the wireframe, and then add faces to the wireframe to complete the object, as follows:

While the wireframe is incomplete:
• Project hypothesised edges from each incomplete vertex along the appropriate axes
• Eliminate any edges which would be visible at their points of origin
• Find locations where the remaining edges intersect, assigning merit figures according to how certain it is that edges intersect at this location (e.g. an edge intersecting only one other edge has a higher merit figure than an edge which has potential intersections with two or more other edges)
• Reduce the merit for any locations which would be visible (these must be considered, as drawing errors are possible)
• Choose the location at which the merit is greatest
• Add a vertex at this location, and the hypothesised edges meeting at this location, to the known object topology

The process of completing the wireframe topology varies in difficulty according to the type of object drawn. We illustrate two special-case object classes, extrusions and normalons, and the general case. In some cases (e.g. if the object is symmetrical or includes a recognised feature), more than one vertex can be added in one iteration, as described in [Var03a]. Such cases increase both the speed and reliability of the process of completing the wireframe.

Completing the topology of extrusions from a known front end cap is straightforward. Figure 17 shows a drawing and the corresponding completed extrusion wireframe.

[Figure 17: Extrusion]

Knowing that the object is a normalon simplifies reconstruction of the wireframe, since when hypothesised edges are projected along axes, there is usually only one possibility from any particular incomplete vertex. Figure 18 shows a drawing of a normalon and the corresponding completed wireframe.

[Figure 18: Normalon [Yan85]]

Similarly, if the object is trihedral, there can be at most one new edge from each incomplete vertex, simplifying reconstruction of the correct wireframe. Figure 19 shows a drawing of a trihedral object and the corresponding completed wireframe.

[Figure 19: Trihedral Object [Yan85]]

However, in the general case, where the object is neither a normalon nor trihedral, there is the significant difference that hypothesised edges may be projected in any direction parallel to an existing edge. Even after eliminating edges which would be visible, there may be several possibilities at any given incomplete vertex. The large number of possible options rapidly becomes confusing and it is easy to choose an incorrect crossing-point at an early stage. Although such errors can sometimes be rectified by backtracking, the more common result is a valid but unwanted wireframe. Only very simple drawings can be processed reliably. Figure 20 shows a general-case object and the corresponding completed wireframe; this represents the limit of the current state of the art.

[Figure 20: General Case Object]

One particular problem, a specific consequence of the approach of completing the wireframe before faces, is that there is no assurance that the local environments of either end of a new edge match. It may happen that a sector around a new edge is solid at one end and empty at the other. This is perhaps the single most frequent cause of failure at present, and is especially problematic in that the resulting completed wireframe can appear correct. We aim to investigate faster and more reliable ways of determining the correct hidden topology of an object, starting with approaches aimed at correcting this conflicting local environment problem.

Adding additional faces to the completed wireframe topology for which the frontal geometry is already known is straightforward. We use repeated applications of Dijkstra's Algorithm [Dij59] to find the best loop of unallocated half-edges for each added face, where the merit for a loop of half-edges is based both on the number of half-edges required (the fewer, the better) and their geometry (the closer to coplanar, the better). We have not known this approach to fail when the input is a valid wireframe (which, as noted above, is not always the case).

9. Beautification of Solid Models

As can be seen from the Figures in the previous Section, even when topologically correct, the solid models produced may have (possibly large) geometric imperfections. They require 'beautification'. More formally, given a topologically-correct object and certain symmetry and regularity hypotheses, we wish to translate these hypotheses into constraints, and update the object geometry so that it maximises some merit function based on the quantity and quality of constraints enforced.

In order to make this problem more tractable, we decompose it into determination of face normals and determination of face distances from the origin; once faces are known, vertex coordinates may be determined by intersection. The rationale for this partitioning [KY01] is that changing face normals can destroy satisfied distance constraints, but changing face distances cannot destroy satisfied normal constraints.

However, there are theoretical doubts about this sub-division, related to the resolvable representation problem [Sug99] of finding a 'resolution sequence' in which information can be fixed while guaranteeing that no previous information is contradicted. For example, determining face equations first, and calculating vertex coordinates from them, is a satisfactory resolution sequence for many polyhedra, including all trihedral polyhedra. Similarly, fixing vertex coordinates and calculating face planes from them is a satisfactory resolution sequence for deltahedra and triangulated mesh models. Sugihara [Sug99] proved that:

• all genus 0 solids have resolution sequences (although if the solid is neither trihedral nor a deltahedron, finding the resolution sequence might not be straightforward);
• (by counterexample) genus non-zero solids do not necessarily have resolution sequences.

Thus, there are two resolvable representation issues:

• finding a resolution sequence for those solids which have a non-trivial resolution sequence;
• producing a consistent geometry for those solids which do not have a resolution sequence.

Currently, neither problem has been solved satisfactorily.
Thus, although there are objects which have resolution sequences, but for which determining face normals, and then face distances, and finally vertex coordinates, is not a satisfactory resolution sequence, the frequency of occurrence of such objects has yet to be determined. If low, the pragmatic advantages of such an approach are perhaps more important than its theoretical inadequacy.

Our overall beautification algorithm is [Var03a]:

• Make initial estimates of face normals
• Use any object classification to restrict face normals
• Identify constraints on face normals
• Adjust face normals to match constraints
• Make initial estimates of face distances
• Identify constraints on face distances
• Adjust face distances to match constraints
• Obtain vertex locations by intersecting planes in threes
• Detect vertex/face failures and adjust faces to correct them

We use numerical methods for constraint processing, as this seems to be the approach which holds most promise. Alternatives, although unfashionable for various reasons, may become more viable as the state of the art develops: see Lipson et al [LKS03] for a discussion.

In addition to the resolvable representation problem, there is a further theoretical doubt about this approach. When attempting to satisfy additional constraints, it is necessary to know how many degrees of freedom are left in the system once previous, already-accepted, constraints are enforced.
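The step "obtain vertex locations by intersecting planes in threes" in the algorithm listed above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes each face is stored as a normal vector n and a distance d with n · x = d, and the names `det3` and `vertex_from_planes` are hypothetical. The three plane equations are solved by Cramer's rule using only the Python standard library.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def vertex_from_planes(plane_a, plane_b, plane_c, eps=1e-9):
    """Intersect three planes, each given as (normal, distance), n . x = d.

    The sign convention for the distance is an assumption of this sketch.
    """
    normals = [list(p[0]) for p in (plane_a, plane_b, plane_c)]
    dists = [p[1] for p in (plane_a, plane_b, plane_c)]
    denom = det3(normals)
    if abs(denom) < eps:
        # Two planes are parallel, or all three share a common line.
        raise ValueError("planes do not meet in a single point")
    point = []
    for axis in range(3):
        # Cramer's rule: replace one column of the normal matrix by d.
        m = [row[:] for row in normals]
        for row in range(3):
            m[row][axis] = dists[row]
        point.append(det3(m) / denom)
    return tuple(point)


# A corner where the faces x = 0 and y = 0 meet a sloping face x + y + z = 2.
corner = vertex_from_planes(((1, 0, 0), 0), ((0, 1, 0), 0), ((1, 1, 1), 2))
print(corner)
```

For a trihedral vertex this gives a unique position. Where four or more faces meet at a vertex, different triples of faces can give different positions, which is one way the vertex/face failures mentioned in the last step of the algorithm could arise.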
This apparently-simple problem appears to have no fully-reliable solution. One solution proposed by Li [LHS01] perturbs the variables slightly and detects which constraints have been violated. However, this is slow, and not necessarily theoretically sound either (e.g. a constraint relating face distances A and B may allow them to move together, but not independently of one another).

Two differing approaches have been tried to the problem of finding whether or not a geometry exists which satisfies a new constraint while continuing to satisfy previously-accepted constraints.

The first encodes constraint satisfaction as an error function (the lower the value, the better-satisfied the constraint), and face normals and/or face distances as variables, using a downhill optimiser to minimise the error function [CCG99, LMM02, Var03a]. Such algorithms use a 'greedy' approach, in which the constraint with the highest figure of merit is always accepted and enforced, and then for each other constraint, in descending order of merit: if the

submitted to EUROGRAPHICS Workshop on (2004)

ISSN 1000-9825, CODEN RUXUEW
Journal of Software, Vol.17, No.10, October 2006, pp.2029−2039
DOI: 10.1360/jos172029
E-mail: jos@
Tel/Fax: +86-10-62562563
© 2006 by Journal of Software. All rights reserved.

Research and Development of Distributed Constraint Satisfaction Problems∗

WANG Qin-Hui (王秦辉), CHEN En-Hong+ (陈恩红), WANG Xu-Fa (王煦法)
(Department of Computer Science and Technology, University of Science and Technology of China, Hefei 230027, China)
+ Corresponding author: Phn: +86-551-3602824, Fax: +86-551-3603388, E-mail: cheneh@

Wang QH, Chen EH, Wang XF. Research and development of distributed constraint satisfaction problems. Journal of Software, 2006,17(10):2029−2039. /1000-9825/17/2029.htm

Abstract: With the rapid development and wide application of Internet technology, many problems in artificial intelligence, such as scheduling, planning and resource allocation, are now naturally distributed and turn into multi-agent system problems. Accordingly, standard constraint satisfaction problems turn into distributed constraint satisfaction problems, which have become a general framework for solving multi-agent systems. This paper first briefly introduces the basic concepts of distributed CSPs, and then summarizes the basic and the improved algorithms. Their efficiency and performance are analyzed, and typical applications of distributed CSPs in recent years are discussed. Finally, this paper presents extensions of the basic formalization and the research trends in this area.
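Recent related work indicates that future work will focus on theoretical research that provides a solid foundation for practical problems.

Key words: constraint satisfaction; distributed AI; multi-agent system; search; asynchronous
CLC number: TP301    Document code: A

∗ Supported by the National Natural Science Foundation of China under Grant No.60573077; the Program for New Century Excellent Talents in University of China under Grant No.NCET-05-0549
Received 2006-03-09; Accepted 2006-05-08

Since Montanari first proposed constraint satisfaction problems (CSPs) in the context of image processing in 1974 [1], constraint satisfaction has been widely applied as an important solution method to many problems in artificial intelligence and other areas of computer science [2]. From classic problems such as n-queens and graph coloring to large applications such as scheduling, planning and resource allocation, all can be formalized and solved as CSPs. Because of this broad applicability, CSPs have been studied in depth in theory, experiment and application, and have become one of the most successful problem-solving paradigms in artificial intelligence. Related results have long been a prominent topic in the journal Artificial Intelligence, which has devoted several special issues to them. Many researchers in China also work on CSPs; the main work covers the theory, design and application of constraint programming [3,4], constraint inductive logic programming [5,6], and the solving of CSPs [7−9].

A CSP consists of a set of variables, the domains of those variables, and the constraints among the variables. The goal is to find one or more assignments to the variables that satisfy all constraints. Two basic ideas, backtracking search and constraint consistency checking, together with the various heuristics introduced into them, make up the many CSP solving algorithms.

With the development of hardware and network technology, distributed computing environments have been adopted quickly and widely, and more and more artificial intelligence problems arise in distributed settings. Distributed AI has therefore become a very important research area, especially for distributed problems in which artificial autonomous agents must coordinate with one another. In a multi-agent system (MAS), for example, agents in the same environment are usually subject to some constraints; a distributed AI application that seeks a combination of actions for the agents satisfying the constraints among them can be regarded as a distributed constraint satisfaction problem (distributed CSP).

A distributed CSP is a CSP in which the variables and the constraints among them are distributed among different autonomous agents. Each agent controls one or more variables and tries to determine their values. Constraints generally exist both within an agent and between agents, and the assignment must satisfy all of them. Because different variables and constraints are controlled by different agents, gathering the variables and constraints of all agents into a single agent and then applying a traditional centralized algorithm is often inadequate or impossible, for the following reasons [10]:

(1) Cost of creating centralized control. A CSP such as a sensor network is likely to be naturally distributed over a set of peer agents. Centralizing such a problem requires adding extra elements that do not appear in the original structure.

(2) 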
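Cost of information transfer. In many cases constraints are produced by complex decision processes that are internal to the agents and cannot be centralized. A centralized algorithm must bear the cost of transferring information in order to obtain these constraints.

(3) Privacy and security. In settings such as e-commerce, the constraints between agents are often strategic information that must not be revealed to competitors, or even to a central controller. Privacy can then be protected well only by a distributed method.

(4) Robustness to failure. In centralized solving, a failure can be fatal; in a distributed method, the failure of one agent is not fatal, and the other agents can find a solution while ignoring the failed agent. These issues arise, for example, in sensor networks and web-based applications, where participants may leave while constraint solving is in progress.

These reasons show that problems in such distributed environments need more effective solution methods. As research on cooperative distributed problems in artificial intelligence deepened, Yokoo et al. proposed the framework of distributed CSPs and corresponding algorithms in Ref. [11]. As a new technique, it is particularly suited to representing and solving large, hard combinatorial problems, and distributed CSPs have therefore become an active research topic in artificial intelligence.

Building on the survey of distributed CSPs in Ref. [12], this paper presents not only the problem formalization and a series of solving algorithms, but also recent extensions of the basic formalization and applications in multi-agent systems. Section 1 defines distributed CSPs. Section 2 details a series of algorithms for solving them, such as asynchronous backtracking, asynchronous weak-commitment search and the distributed breakout algorithm. Section 3 presents applications. Finally, the paper summarizes extensions and related work, such as open constraint satisfaction, distributed partial constraint satisfaction, and privacy and security.

1 Distributed Constraint Satisfaction

1.1 Constraint satisfaction problems

A CSP is the problem of finding, within given domains, an assignment to all variables that satisfies the constraints among them. It consists of variables, the domains of the variables, and the constraints among the variables.

Definition 1 (constraint satisfaction problem). A CSP can be formalized as a constraint network [13], defined by a set of variables, a set of domains (one per variable) and a set of constraints among the variables, written as a triple $(V, D, C)$, where:
$V$ is the set of variables $\{v_1, \ldots, v_n\}$;
$D$ is the set of domains of all variables, $D = \{D_1, \ldots, D_n\}$, where $D_i$ is the finite domain of all possible values of $v_i$;
$C$ is the set of constraints among the variables, $C = \{C_1, \ldots, C_m\}$, where each constraint consists of a subset $\{v_i, \ldots, v_j\}$ of $V$ and a relation $R \subseteq D_i \times \cdots \times D_j$.

Constraint satisfaction is an effective problem-solving method: it seeks, for each variable, a value in its domain such that all constraints are satisfied.

Definition 2 (solution of a constraint satisfaction problem). A solution of a CSP is an assignment to all variables of the problem that violates no constraint, that is, an assignment $S(v_1, \ldots, v_n) = \{d_1 \in D_1, \ldots, d_n \in D_n\}$ such that for every $C_r \in C$, $S(v_{ri}, \ldots, v_{rj}) = \{d_{ri}, \ldots, d_{rj}\} \in R_r$.

For example, n-queens is a typical CSP: place n queens on an n×n board so that no row, column or diagonal contains more than one queen. Fig.1 shows the 4-queens problem and the corresponding CSP.

[Fig.1 4 queens constraint satisfaction problem, panels (a)-(d)]

Fig.1(a) shows the four queens Q1~Q4 to be placed on a 4×4 board; these are the variables. Fig.1(b) shows that no two queens may share a row, column or diagonal; these are the constraints. Fig.1(c) is the corresponding constraint network. Fig.1(d) is a solution of the problem.

1.2 Distributed constraint satisfaction problems

A distributed CSP is a CSP in which the variables and constraints are distributed among different autonomous agents. Building on the definition of a CSP, it can be defined as follows:

Definition 3 (distributed constraint satisfaction problem). There are n agents $A_1, A_2, \ldots, A_n$ and m variables $v_1, v_2, \ldots, v_m$ with domains $D_1, D_2, \ldots, D_m$; the constraints among the variables are still denoted $C$. Each agent has one or more variables, and each variable $v_j$ belongs to one agent $A_i$, written $belongs(v_j, A_i)$. The constraints are distributed within agents or between agents; when $A_l$ knows constraint $C_k$, we write $Known(C_k, A_l)$.

Constraints within an agent are called local constraints, and constraints between agents are called global constraints. Local constraints can be handled by the agent's own computation; global constraints require not only computation but also communication between agents, so the following communication model is assumed:

Assumption 1. Agents communicate by passing messages; an agent can send a message to another agent if and only if it knows the address of that agent.

Assumption 2. The delay in delivering a message is random but finite; between any pair of agents, messages are received in the order in which they were sent.

Assumption 3. 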
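Each agent knows only part of the information about the whole problem.

Agents in distributed constraint satisfaction differ slightly from agents in a multi-agent system (MAS) [14]. The former are computational entities that follow a cooperation mechanism when making decisions; the latter decide autonomously whether to follow a particular cooperation mechanism, and can communicate with other agents by exchanging structured, semantically meaningful messages. These differences are ignored when an MAS is formalized and solved as a distributed CSP.

Each agent is responsible for some variables and determines their values; because constraints also exist between agents, the assignments must satisfy those constraints. A solution of a distributed CSP is formally defined as follows:

Definition 4 (solution of a distributed constraint satisfaction problem). A distributed CSP is solved if and only if the following holds: for every $A_i$ and every $v_j$ with $belongs(v_j, A_i)$, where $v_j$ is assigned $d_j \in D_j$, every constraint $C_k$ with $Known(C_k, A_l)$ for any $A_l$ is satisfied. In other words, the assignment to all variables satisfies all constraints within and between agents.

Fig.2 is an example of a distributed CSP, a distributed graph coloring problem. Each node variable held by an agent is assigned one of three colors (black, white or grey) so that connected nodes have different colors. Each agent in the figure has three variables, and an edge between two variables denotes a constraint between them. Constraints exist both within agents and between agents.

[Fig.2 Example of distributed constraint satisfaction problem, panels (a)-(c)]

Fig.2(a) shows the constraint network of the problem; Fig.2(b) is an initial state with randomly assigned colors; Fig.2(c) is a solution.

1.3 Difference between distributed constraint satisfaction and parallel/distributed computing

Distributed CSPs look very similar to parallel/distributed methods for solving CSPs [15,16], but the two are fundamentally different. Parallel/distributed methods are applied to CSP solving in order to improve efficiency: for a given CSP, any suitable parallel/distributed computer architecture can be chosen to divide and conquer the problem. In distributed constraint satisfaction, the variables, constraints and related information are distributed among autonomous agents from the moment the problem is given, so the research question is how to obtain a solution effectively in this inherent setting. For example, a large n-queens problem can be solved faster using distributed parallel computing. The corresponding distributed n-queens problem is one in which many agents each own different numbers of queens and reach a solution jointly through their own decisions and communication with one another.

2 Algorithms for Solving Distributed Constraint Satisfaction Problems

When distributed CSPs were first proposed, Yokoo also proposed the asynchronous backtracking algorithm in Ref. [11]. In recent years other algorithms have been studied further, notably asynchronous weak-commitment search [17,18] and the distributed breakout algorithm [19]. These algorithms are essentially distributed extensions of traditional CSP algorithms. Because constraints also exist between agents, however, the need for inter-agent communication is the biggest difference from the traditional algorithms. Distributed constraint satisfaction algorithms communicate two basic kinds of messages, ok? and nogood [11].

Definition 5 (ok? message). An ok? message is a message by which an agent sends its current assignment to neighboring agents.

Definition 6 (nogood message). 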
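A nogood message conveys a new constraint that is generated when a constraint conflict occurs.

In Ref. [12], all algorithms are described under the following assumptions: (1) each agent controls exactly one variable; (2) all constraints are binary; (3) each agent knows all constraints relevant to its variable. The same identifier $v_i$ can therefore denote both an agent and its variable, and constraints are represented by directed edges in the constraint network, each pointing from the agent that sends messages to the agent that receives them. Assumptions (2) and (3) extend naturally to the general case.

The following subsections present the backtracking-based asynchronous backtracking algorithm, the iterative-improvement-based distributed breakout algorithm, and the hybrid asynchronous weak-commitment algorithm.

2.1 Asynchronous backtracking (AB)

Asynchronous backtracking is derived from the backtracking algorithm for CSPs; the difference is that it is distributed and asynchronous. Each agent has a priority that is defined in advance, generally by the alphabetical order of agent identifiers (in ascending or descending order). Besides sending and receiving ok? and nogood messages, each agent maintains an agent_view that records the current assignments of other agents. When an agent receives an ok? message, it checks whether its own assignment satisfies the constraints with the current assignments of higher-priority agents; if the constraints are violated, it changes its assignment. If no value in its domain is consistent with the assignments of the higher-priority agents, it generates a new constraint (a nogood) and sends the nogood to a higher-priority agent, which can then change its own assignment.

Note that if agents keep changing their assignments without reaching a stable state, they are in an infinite processing loop. Such a loop can arise when one agent's assignment causes other agents to change their assignments and eventually affects the agent itself. To prevent this, the algorithm defines a priority order over agents by the alphabetical order of their identifiers, and ok? messages are sent only from higher-priority to lower-priority agents. When a nogood is generated, it is likewise the lowest-priority agent in the nogood that receives the nogood message. In addition, agents act concurrently and asynchronously, and communicate by passing messages, so an agent_view may contain obsolete information. Each agent therefore has to generate new nogoods for communication, and the recipient of a new nogood must check whether the nogood is consistent with its own agent_view.

Because the highest-priority agent never falls into an infinite processing loop, Ref. [18] proves by induction that the algorithm is complete: if a solution exists, it will be found; if no solution exists, the algorithm terminates rather than looping forever.

Many recent works improve on asynchronous backtracking. Ref. [20] extends it with dynamic reordering of agents; Ref. [21] introduces consistency maintenance; Ref. [22] proposes a distributed backtracking algorithm that does not store nogood messages. Compared with basic asynchronous backtracking, these algorithms differ only in how they store nogoods, and all of them need to add communication links between unconnected agents to detect obsolete messages. Ref. [23] proposes a new asynchronous backtracking algorithm that avoids dynamically adding new constraints between initially unconnected agents, so that information is not passed to agents that do not need it, which improves efficiency. From another angle, Ref. [24] shows how value aggregation can reduce message congestion and how arc-consistency maintenance can make asynchronous distributed solving more effective.

2.2 Asynchronous weak-commitment search (AWS)

The limitation of asynchronous backtracking is that the priority order of agents is defined in advance and is static. If a high-priority agent chooses its value badly, lower-priority agents must search exhaustively to revise the bad assignment. Asynchronous weak-commitment search [17,18] rests on two basic ideas: the min-conflict heuristic is introduced to reduce the risk of bad assignments; and the priority order of agents can change dynamically, so that a bad assignment can be corrected without exhaustive search. Under the min-conflict heuristic, an agent chooses from its domain the value that conflicts least with the assignments of other agents. To let the priority order change dynamically, each agent is given a priority value, a non-negative integer. An agent with a larger priority value has higher priority; ties are broken by the alphabetical order of identifiers. Initially all priority values are 0. When an agent's assignment conflicts with the constraints, its priority value becomes the largest priority value among its neighbors plus 1.

Compared with asynchronous backtracking, asynchronous weak-commitment search differs as follows:

(1) 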
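In asynchronous backtracking, each agent sends its assignment only to the lower-priority agents it shares constraints with; in asynchronous weak-commitment search, each agent sends its assignment to all agents it shares constraints with, regardless of priority;
(2) ok? messages carry not only the agent's current assignment but also its priority value;
(3) if the current assignment is inconsistent with the agent_view, the agent changes its assignment using the min-conflict heuristic;
(4) if the agent cannot find an assignment consistent with its agent_view, it sends nogood messages to other agents and changes its own priority value. If it cannot generate a new nogood, it leaves its priority value unchanged and waits for the next message.

Step (4) is needed to guarantee completeness. A priority value changes only when a new nogood is generated, and the number of possible nogoods is finite, so priority values cannot change forever. After some point they become stable, and from then on the process is the same as asynchronous backtracking, so the algorithm is complete. To guarantee completeness, an agent must record all nogoods known so far; in practice the number of recorded nogoods can be limited, for example by keeping only a fixed number of the most recent ones.

As assumed earlier, each agent in these algorithms holds a single variable. For problems in which agents hold several variables, two approaches exist: each agent first finds all solutions of its local problem and the problem is then re-formalized as a distributed CSP; or each agent creates a virtual agent for each local variable and simulates the actions of these agents. For large problems, neither approach is efficient or scalable. Ref. [25] extends asynchronous weak-commitment search to handle multiple local variables by using variable ordering, an algorithm called Multi-AWS. An agent changes the values of its variables in order; when a variable has no value that satisfies all constraints involving higher-priority variables, the priority value of that variable is increased. This is repeated, and once all local variables of an agent satisfy the constraints with higher-priority variables, the agent sends value-change messages to the related agents.

2.3 Distributed breakout (DB)

The hill-climbing search strategy used in CSP algorithms such as min-conflict backtracking sometimes traps the solving process in a local minimum. A local minimum is a state in which some constraints are violated, but the number of violations cannot be reduced by changing the value of any single variable. The breakout algorithm proposed in Ref. [26] is a method for escaping local minima. It defines a weight for each constraint and uses the sum of the weights of all violated constraints as an evaluation value. When the search is trapped in a local minimum, the breakout algorithm increases the weights of the constraints violated in the current state, so that the evaluation value of the current state becomes higher than that of neighboring states; the search thus escapes the local minimum and starts anew. Building on this, Ref. [19] realizes distributed breakout through the following two steps:

(1) The evaluation value is always guaranteed to improve step by step: neighboring agents exchange their possible improvements of the evaluation value, and only the agent that can improve it the most has the right to change its value. Two agents that are not neighbors may change their values at the same time;

(2) Instead of detecting whether the agents as a whole are trapped in a local minimum, each agent detects whether it is in a quasi-local-minimum, a condition that is weaker than a local minimum and can be detected through local communication.

Definition 7 (agent $A_i$ is in a quasi-local-minimum). The assignment of $A_i$ violates some constraints, and the possible improvement of $A_i$ and of all neighbors of $A_i$ is 0.

In the distributed breakout algorithm, neighboring agents exchange two kinds of messages: ok? and improve.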
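An improve message communicates the possible improvement of the evaluation value. Throughout the process an agent alternates between two states, wait_ok? and wait_improve. The distributed breakout algorithm may fall into an infinite loop, so its completeness cannot be guaranteed.

In extending distributed breakout, Ref. [27] not only proposes the Multi-DB algorithm for agents with multiple local variables, but also introduces two kinds of randomization into it: Multi-DB+, which uses random break, and Multi-DB++, which adds random walk to Multi-DB+. These algorithms scale better than other asynchronous methods, but sometimes perform worse.

2.4 Comparison of the algorithms

The basic algorithms for solving distributed CSPs presented above each have their own characteristics, applicability, strengths and limitations. Table 1 gives a qualitative comparison of these algorithms with respect to completeness and support for multiple local variables.

Table 1 Comparison of algorithms for solving distributed CSPs

Algorithm               AB    AWS   Multi-AWS   DB    Multi-DB
Completeness            Yes   Yes   Yes         No    No
Multi local variables   No    No    Yes         No    Yes

Ref. [12] compares the performance of basic asynchronous backtracking (AB), asynchronous weak-commitment search (AWS) and distributed breakout (DB). Efficiency is evaluated by discrete event simulation, in which each agent maintains its own simulated clock; whenever an agent executes one computation cycle, its time advances by one simulated time unit. One cycle consists of reading all messages, performing local computation and sending messages. A message sent at time $t$ is assumed to be available to its recipient at time $t+1$. Performance is analyzed by the number of cycles needed to solve the problem.

Table 2 shows results on distributed graph coloring problems, where the number of agents (variables) is n = 60, 90 and 120, the number of constraints is m = n×2, and the number of colors is 3. Ten different problems are generated, each is run with 10 different initial assignments, and the number of cycles is limited to 1000, after which the algorithm is terminated. The table lists the average number of cycles and the ratio of successful runs. AWS is clearly better than AB, because AWS can revise a bad assignment without exhaustive search.

Tables 3 and 4 compare AWS with DB. The number of agents (variables) is n = 90, 120 and 150, the number of constraints is m = n×2 and m = n×2.7 respectively, and the number of colors is still 3. When m = n×2 the constraints between agents can be regarded as relatively sparse, while m = n×2.7 is regarded as the critical region in which a phase transition occurs [28].

Table 2 Comparison between AB and AWS

Algorithm              n=60    n=90    n=120
AB    Ratio (%)        13      0       0
AB    Cycles           917.4   -       -
AWS   Ratio (%)        100     100     100
AWS   Cycles           59.4    70.1    106.4

Table 3 Comparison between DB and AWS (m=n×2)

Algorithm              n=90    n=120   n=150
DB    Ratio (%)        100     100     100
DB    Cycles           150.8   210.1   278.8
AWS   Ratio (%)        100     100     100
AWS   Cycles           70.1    106.4   159.2

Table 4 Comparison between DB and AWS (m=n×2.7)

Algorithm              n=90    n=120   n=150
DB    Ratio (%)        100     100     100
DB    Cycles           517.1   866.4   1175.5
AWS   Ratio (%)        97      65      29
AWS   Cycles           1869.6  6428.4  8249.5

Tables 3 and 4 show that DB is better than AWS when the problem is critically hard, whereas AWS is better than DB in the general case. In DB, each mode (wait_ok? or wait_improve) takes one cycle, so each agent can change its assignment at most once every two cycles; in AWS, each agent can change its assignment in every cycle.

As noted above, many recent works improve or extend these basic algorithms, each with its own performance and efficiency characteristics. The experiments in Ref. [20] show that ABTR (ABT with asynchronous reordering), which reorders agents dynamically, has better average performance than AB, which indicates that the extra heuristic messages needed for dynamic reordering can in fact improve efficiency. The experiments in Ref. [24] show that the value-aggregating AAS (asynchronous aggregation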
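search) algorithm performs slightly better. Although not using value aggregation works better when searching for the first solution, AAS always outperforms algorithms without value aggregation when no solution exists, because the whole search space must then be explored and AAS can shorten the message sequences, that is, reduce the number of stored nogood messages. Furthermore, the MHDC (maintaining hierarchical distributed consistency) algorithm, which uses bound-consistency maintenance, improves considerably on the overall experimental performance of AAS.

Using graph coloring experiments, Ref. [25] compares Multi-AWS, AWS-AP (agent priority) and Single-AWS. When agents have multiple variables, Multi-AWS is better than the other two algorithms in both the number of cycles and the number of consistency checks, and its advantage over AWS-AP and Single-AWS grows as the number of agents or variables increases. The reason is that, to handle agents with multiple variables, AWS-AP and Single-AWS need extra virtual agents to distribute the local variables, which increases communication between agents and lowers performance.

Ref. [27] first compares Multi-DB with Multi-AWS. As the number of variables grows, Multi-DB becomes increasingly more efficient than Multi-AWS, in many cases by at least one order of magnitude, but its success rate is lower. The reason is that the determinism inherent in the search of Multi-DB deprives the algorithm of randomness. From the fact that Multi-DB++ is more efficient than Multi-DB in the experiments, one can conclude that stagnation in multi-agent search can be avoided by adding random walk.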

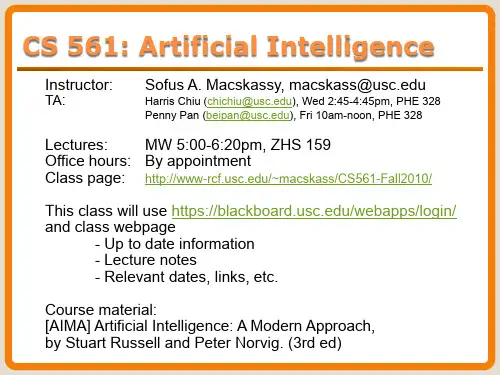

CS188 Introduction to Artificial Intelligence, Spring 2019, Note 5

These lecture notes are heavily based on notes originally written by Nikhil Sharma.

Constraint Satisfaction Problems

In the previous note, we learned how to find optimal solutions to search problems, a type of planning problem. Now, we'll learn about solving a related class of problems, constraint satisfaction problems (CSPs). Unlike search problems, CSPs are a type of identification problem, problems in which we must simply identify whether a state is a goal state or not, with no regard to how we arrive at that goal. CSPs are defined by three factors:

1. Variables - CSPs possess a set of N variables $X_1, \ldots, X_N$ that can each take on a single value from some defined set of values.
2. Domain - A set $\{x_1, \ldots, x_d\}$ representing all possible values that a CSP variable can take on.
3. Constraints - Constraints define restrictions on the values of variables, potentially with regard to other variables.

Consider the N-queens identification problem: given an N×N chessboard, can we find a configuration in which to place N queens on the board such that no two queens attack each other?

We can formulate this problem as a CSP as follows:

1. Variables - $X_{ij}$, with $0 \le i, j < N$. Each $X_{ij}$ represents a grid position on our N×N chessboard, with i and j specifying the row and column number respectively.
2. Domain - $\{0, 1\}$. Each $X_{ij}$ can take on either the value 0 or 1, a boolean value representing the existence of a queen at position (i, j) on the board.
3. Constraints -
• $\forall i, j, k$ with $j \ne k$: $(X_{ij}, X_{ik}) \in \{(0,0), (0,1), (1,0)\}$. This constraint states that if two variables have the same value for i, only one of them can take on a value of 1. This effectively encapsulates the condition that no two queens can be in the same row.
• $\forall i, j, k$ with $i \ne k$: $(X_{ij}, X_{kj}) \in \{(0,0), (0,1), (1,0)\}$. Almost identically to the previous constraint, this constraint states that if two variables have the same value for j, only one of them can take on a value of 1, encapsulating the condition that no two queens can be in the same column.
• $\forall i, j, k$ with $k \ne 0$: $(X_{ij}, X_{i+k,j+k}) \in \{(0,0), (0,1), (1,0)\}$. With similar reasoning as above, we can see that this constraint and the next represent the conditions that no two queens can be in the same major or minor diagonals, respectively.
• $\forall i, j, k$ with $k \ne 0$: $(X_{ij}, X_{i+k,j-k}) \in \{(0,0), (0,1), (1,0)\}$.
• $\sum_{i,j} X_{ij} = N$. This constraint states that we must have exactly N grid positions marked with a 1, and all others marked with a 0, capturing the requirement that there are exactly N queens on the board.

Constraint satisfaction problems are NP-hard, which loosely means that there exists no known algorithm for finding solutions to them in polynomial time. Given a problem with N variables with domain of size $O(d)$ for each variable, there are $O(d^N)$ possible assignments, exponential in the number of variables. We can often get around this caveat by formulating CSPs as search problems, defining states as partial assignments (variable assignments to CSPs where some variables have been assigned values while others have not). Correspondingly, the successor function for a CSP state outputs all states with one new variable assigned, and the goal test verifies all variables are assigned and all constraints are satisfied in the state it's testing.
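The search formulation just described can be sketched in a few lines of Python for N-queens. This is only an illustrative sketch: the names `VARIABLES`, `attacks`, `consistent`, `successors` and `is_goal` are hypothetical and do not come from the notes, and partial assignments are represented as plain dictionaries mapping a grid cell to 0 or 1.

```python
from itertools import combinations

N = 4  # board size; kept small so the example runs instantly

# Variables are grid cells (i, j); the domain of each variable is {0, 1}.
VARIABLES = [(i, j) for i in range(N) for j in range(N)]
DOMAIN = (0, 1)


def attacks(a, b):
    """True if cells a and b share a row, a column, or a diagonal."""
    (i, j), (k, l) = a, b
    return i == k or j == l or abs(i - k) == abs(j - l)


def consistent(assignment):
    """Check the pairwise (row/column/diagonal) constraints on assigned cells."""
    queens = [cell for cell, value in assignment.items() if value == 1]
    return all(not attacks(a, b) for a, b in combinations(queens, 2))


def successors(assignment):
    """All partial assignments that have exactly one more variable assigned."""
    return [
        {**assignment, var: value}
        for var in VARIABLES
        if var not in assignment
        for value in DOMAIN
    ]


def is_goal(assignment):
    """Goal test: every variable assigned, no attacks, exactly N queens."""
    return (
        len(assignment) == len(VARIABLES)
        and consistent(assignment)
        and sum(assignment.values()) == N
    )
```

Note that `successors` deliberately mirrors the description above and branches on every unassigned variable; fixing a variable ordering, as backtracking search does, avoids generating the same assignment in many different orders.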
Constraint satisfaction problems tend to have significantly more structure than traditional search problems, and we can exploit this structure by combining the above formulation with appropriate heuristics to home in on solutions in a feasible amount of time.

Constraint Graphs

Let's introduce a second CSP example: map coloring. Map coloring solves the problem where we're given a set of colors and must color a map such that no two adjacent states or regions have the same color.

Constraint satisfaction problems are often represented as constraint graphs, where nodes represent variables and edges represent constraints between them. There are many different types of constraints, and each is handled slightly differently:

• Unary Constraints - Unary constraints involve a single variable in the CSP. They are not represented in constraint graphs, instead simply being used to prune the domain of the variable they constrain when necessary.
• Binary Constraints - Binary constraints involve two variables. They're represented in constraint graphs as traditional graph edges.
• Higher-order Constraints - Constraints involving three or more variables can also be represented with edges in a CSP graph; they just look slightly unconventional.

Consider map coloring the map of Australia:

The constraints in this problem are simply that no two adjacent states can be the same color. As a result, by drawing an edge between every pair of states that are adjacent to one another, we can generate the constraint graph for the map coloring of Australia as follows:

The value of constraint graphs is that we can use them to extract valuable information about the structure of the CSPs we are solving. By analyzing the graph of a CSP, we can determine things about it like whether it's sparsely or densely connected/constrained and whether or not it's tree-structured. We'll cover this more in depth as we discuss solving constraint satisfaction problems in more detail.

Solving Constraint Satisfaction Problems

Constraint satisfaction
problems are traditionally solved using a search algorithm known as backtracking search. Backtracking search is an optimization on depth-first search used specifically for the problem of constraint satisfaction, with improvements coming from two main principles:

1. Fix an ordering for variables, and select values for variables in this order. Because assignments are commutative (e.g. assigning WA = Red, NT = Green is identical to NT = Green, WA = Red), this is valid.
2. When selecting values for a variable, only select values that don't conflict with any previously assigned values. If no such values exist, backtrack and return to the previous variable, changing its value.

The pseudocode for how recursive backtracking works is presented below:

For a visualization of how this process works, consider the partial search trees for both depth-first search and backtracking search in map coloring:

Note how DFS regretfully colors everything red before ever realizing the need for change, and even then doesn't move too far in the right direction towards a solution. On the other hand, backtracking search only assigns a value to a variable if that value violates no constraints, leading to significantly less backtracking.
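The two principles of backtracking search can be illustrated with a minimal Python sketch for map coloring. This is not the notes' own pseudocode: the function name `backtrack`, the adjacency table `NEIGHBORS` and the color list are assumptions made for the example, with adjacency restricted to the six mainland regions named in these notes.

```python
# Assumed adjacency between the regions used in the map-coloring example.
NEIGHBORS = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q", "NSW", "V"],
    "Q": ["NT", "SA", "NSW"],
    "NSW": ["Q", "SA", "V"],
    "V": ["SA", "NSW"],
}
COLORS = ["red", "green", "blue"]


def backtrack(assignment, order):
    """Return a complete consistent coloring, or None if none exists."""
    if len(assignment) == len(order):
        return assignment
    # Principle 1: variables are assigned in a fixed order.
    var = order[len(assignment)]
    for color in COLORS:
        # Principle 2: only try values that conflict with nothing assigned so far.
        if all(assignment.get(n) != color for n in NEIGHBORS[var]):
            assignment[var] = color
            result = backtrack(assignment, order)
            if result is not None:
                return result
            del assignment[var]  # undo and try the next value
    return None  # no value works: the caller must change an earlier variable


solution = backtrack({}, list(NEIGHBORS))
```

Returning `None` from a call is what "backtracking" means here: control returns to the previous variable, which then tries its next value.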
Though backtracking search is a vast improvement over the brute-forcing of depth-first search, we can get more gains in speed still with further improvements through filtering, variable/value ordering, and structural exploitation.

Filtering

The first improvement to CSP performance we'll consider is filtering, which checks if we can prune the domains of unassigned variables ahead of time by removing values we know will result in backtracking. A naïve method for filtering is forward checking: whenever a value is assigned to a variable $X_i$, it prunes from the domains of unassigned variables that share a constraint with $X_i$ any values that would violate the constraint if assigned. Whenever a new variable is assigned, we can run forward checking and prune the domains of unassigned variables adjacent to the newly assigned variable in the constraint graph. Consider our map coloring example, with unassigned variables and their potential values:

Note how as we assign WA = red and then Q = green, the domains for NT, NSW, and SA (states adjacent to WA, Q, or both) decrease in size as values are eliminated.

The idea of forward checking can be generalized into the principle of arc consistency. For arc consistency, we interpret each undirected edge of the constraint graph for a CSP as two directed edges pointing in opposite directions. Each of these directed edges is called an arc. The arc consistency algorithm works as follows:

• Begin by storing all arcs in the constraint graph for the CSP in a queue Q.
• Iteratively remove arcs from Q and enforce the condition that in each removed arc $X_i \to X_j$, for every remaining value v for the tail variable $X_i$, there is at least one remaining value w for the head variable $X_j$ such that $X_i = v$, $X_j = w$ does not violate any constraints. If some value v for $X_i$ would not work with any of the remaining values for $X_j$, we remove v from the set of possible values for $X_i$.
• If at least one value is removed for $X_i$ when enforcing arc consistency for an arc $X_i \to X_j$, add arcs of the form $X_k \to X_i$
to Q, for all unassigned variables $X_k$. If an arc $X_k \to X_i$ is already in Q during this step, it doesn't need to be added again.
• Continue until Q is empty, or the domain of some variable is empty and triggers a backtrack.

The arc consistency algorithm is typically not the most intuitive, so let's walk through a quick example with map coloring:

We begin by adding all arcs between unassigned variables sharing a constraint to a queue Q, which gives us

Q = [SA → V, V → SA, SA → NSW, NSW → SA, SA → NT, NT → SA, V → NSW, NSW → V]

For our first arc, SA → V, we see that for every value in the domain of SA, {blue}, there is at least one value in the domain of V, {red, green, blue}, that violates no constraints, and so no values need to be pruned from SA's domain. However, for our next arc V → SA, if we set V = blue we see that SA will have no remaining values that violate no constraints, and so we prune blue from V's domain.

Because we pruned a value from the domain of V, we need to enqueue all arcs with V at the head: SA → V, NSW → V. Since NSW → V is already in Q, we only need to add SA → V, leaving us with our updated queue

Q = [SA → NSW, NSW → SA, SA → NT, NT → SA, V → NSW, NSW → V, SA → V]

We can continue this process until we eventually remove the arc SA → NT from Q. Enforcing arc consistency on this arc removes blue from SA's domain, leaving it empty and triggering a backtrack. Note that the arc NSW → SA appears before SA → NT in Q and that enforcing consistency on this arc removes blue from the domain of NSW.

Arc consistency is typically implemented with the AC-3 algorithm (Arc Consistency Algorithm #3), for which the pseudocode is as follows:

The AC-3 algorithm has a worst case time complexity of $O(ed^3)$, where e is the number of arcs (directed edges) and d is the size of the largest domain. Overall, arc consistency is a more holistic domain pruning technique than forward checking and leads to fewer backtracks, but requires running significantly more computation in order to enforce. Accordingly, it's important to take into account this tradeoff when deciding which filtering
technique to implement for the CSP you're attempting to solve.

As an interesting parting note about consistency, arc consistency is a subset of a more generalized notion of consistency known as k-consistency, which when enforced guarantees that for any set of k nodes in the CSP, a consistent assignment to any subset of k−1 nodes guarantees that the k-th node will have at least one consistent value. This idea can be further extended through the idea of strong k-consistency. A graph that is strong k-consistent possesses the property that any subset of k nodes is not only k-consistent but also k−1, k−2, ..., 1 consistent as well. Not surprisingly, imposing a higher degree of consistency on a CSP is more expensive to compute. Under this generalized definition for consistency, we can see that arc consistency is equivalent to 2-consistency.

Ordering

We've delineated that when solving a CSP, we fix some ordering for both the variables and values involved. In practice, it's often much more effective to compute the next variable and corresponding value "on the fly" with two broad principles, minimum remaining values and least constraining value:

• Minimum Remaining Values (MRV) - When selecting which variable to assign next, using an MRV policy chooses whichever unassigned variable has the fewest valid remaining values (the most constrained variable). This is intuitive in the sense that the most constrained variable is most likely to run out of possible values and result in backtracking if left unassigned, and so it's best to assign a value to it sooner than later.
• Least Constraining Value (LCV) - Similarly, when selecting which value to assign next, a good policy to implement is to select the value that prunes the fewest values from the domains of the remaining unassigned variables. Notably, this requires additional computation (e.g. rerunning arc consistency/forward checking or other filtering methods for each value to find the LCV), but can still yield speed gains depending on usage.

Structure

A final class of
improvements to solving constraint satisfaction problems are those that exploit their structure. In particular, if we're trying to solve a tree-structured CSP (one that has no loops in its constraint graph), we can reduce the runtime for finding a solution from $O(d^N)$ all the way to $O(nd^2)$, linear in the number of variables. This can be done with the tree-structured CSP algorithm, outlined below:

• First, pick an arbitrary node in the constraint graph for the CSP to serve as the root of the tree (it doesn't matter which one because basic graph theory tells us any node of a tree can serve as a root).
• Convert all undirected edges in the tree to directed edges that point away from the root. Then linearize (or topologically sort) the resulting directed acyclic graph. In simple terms, this just means order the nodes of the graph such that all edges point rightwards. Noting that we select node A to be our root and direct all edges to point away from A, this process results in the following conversion for the CSP presented below:
• Perform a backwards pass of arc consistency. Iterating from i = n down to i = 2, enforce arc consistency for all arcs $Parent(X_i) \to X_i$. For the linearized CSP from above, this domain pruning will eliminate a few values, leaving us with the following:
• Finally, perform a forward assignment. Starting from $X_1$ and going to $X_n$, assign each $X_i$ a value consistent with that of its parent. Because we've enforced arc consistency on all of these arcs, no matter what value we select for any node, we know that its children will each have at least one consistent value. Hence, this iterative assignment guarantees a correct solution, a fact which can be proven inductively without difficulty.

The tree-structured algorithm can be extended to CSPs that are reasonably close to being tree-structured with cutset conditioning. Cutset conditioning involves first finding the smallest subset of variables in a constraint graph such that their removal results in a tree (such a subset is known as a cutset for
the graph). For example, in our map coloring example, South Australia (SA) is the smallest possible cutset:

Once the smallest cutset is found, we assign all variables in it and prune the domains of all neighboring nodes. What's left is a tree-structured CSP, which we can solve with the tree-structured CSP algorithm from above! The initial assignment to a cutset of size c may leave the resulting tree-structured CSP(s) with no valid solution after pruning, so we may still need to backtrack up to $d^c$ times. Since removal of the cutset leaves us with a tree-structured CSP with (n−c) variables, we know this can be solved (or determined that no solution exists) in $O((n-c)d^2)$. Hence, the runtime of cutset conditioning on a general CSP is $O(d^c (n-c) d^2)$, very good for small c.

Local Search

As a final topic of interest, backtracking search is not the only algorithm that exists for solving constraint satisfaction problems. Another widely used algorithm is local search, for which the idea is childishly simple but remarkably useful. Local search works by iterative improvement: start with some random assignment of values, then iteratively select a random conflicted variable and reassign its value to the one that violates the fewest constraints, until no more constraint violations exist (a policy known as the min-conflicts heuristic).
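As a hedged illustration of the min-conflicts policy, here is a small Python sketch for N-queens. It assumes a different encoding from the boolean grid used earlier in these notes: one variable per column whose value is the row of that column's queen, so the column constraint holds by construction. The function names, the `max_steps` cutoff and the fixed `seed` are assumptions made for the example.

```python
import random


def conflicts(rows, col, row):
    """Number of other queens that would attack a queen placed at (row, col)."""
    return sum(
        1
        for c, r in enumerate(rows)
        if c != col and (r == row or abs(r - row) == abs(c - col))
    )


def min_conflicts(n, max_steps=10_000, seed=0):
    """Return a list rows[c] = row of the queen in column c, or None on failure."""
    rng = random.Random(seed)
    # Start from a random complete assignment.
    rows = [rng.randrange(n) for _ in range(n)]
    for _ in range(max_steps):
        conflicted = [c for c in range(n) if conflicts(rows, c, rows[c]) > 0]
        if not conflicted:
            return rows  # no constraint is violated
        # Pick a random conflicted variable ...
        col = rng.choice(conflicted)
        # ... and move it to a value with the fewest conflicts (ties broken randomly).
        counts = [conflicts(rows, col, r) for r in range(n)]
        best = min(counts)
        rows[col] = rng.choice([r for r in range(n) if counts[r] == best])
    return None  # local search is incomplete: it may give up without an answer
```

The `None` return value reflects that local search gives no guarantee: a run can stall even when a solution exists, so callers typically restart with a different seed.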
Under such a policy, constraint satisfaction problems like N-queens become both very time efficient and space efficient to solve. For example, in the following example with 4 queens, we arrive at a solution after only 2 iterations:

… for N-queens with arbitrarily large N, but also for any randomly generated CSP! However, despite these advantages, local search is both incomplete and suboptimal and so won't necessarily converge to an optimal solution. Additionally, there is a critical ratio around which using local search becomes extremely expensive:

Summary

It's important to remember that constraint satisfaction problems in general do not have an efficient algorithm which solves them in polynomial time with respect to the number of variables involved. However, by using various heuristics, we can often find solutions in an acceptable amount of time:

• Filtering - Filtering handles pruning the domains of unassigned variables ahead of time to prevent unnecessary backtracking. The two important filtering techniques we've covered are forward checking and arc consistency.
• Ordering - Ordering handles selection of which variable or value to assign next to make backtracking as unlikely as possible. For variable selection, we learned about an MRV policy and for value selection we learned about an LCV policy.
• Structure - If a CSP is tree-structured, we can run the tree-structured CSP algorithm on it to derive a solution in linear time. Similarly, if a CSP is close to tree-structured, we can use cutset conditioning to transform the CSP into one or more independent tree-structured CSPs and solve each of these separately.
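To make the arc consistency filtering recapped in the summary concrete, the following Python sketch follows the queue-based procedure described in the Filtering section. It is a sketch under stated assumptions rather than the notes' AC-3 pseudocode: the function name `ac3`, the `allowed` callback and the example domains are hypothetical, and, as in the walk-through above, every arc pointing into a pruned variable is re-enqueued unless it is already in the queue.

```python
from collections import deque


def ac3(domains, neighbors, allowed):
    """Prune `domains` in place; return False if some domain becomes empty.

    domains:   dict mapping variable -> list of remaining values
    neighbors: dict mapping variable -> variables it shares a constraint with
    allowed:   function (xi, v, xj, w) -> True if xi=v, xj=w violates nothing
    """
    queue = deque((xi, xj) for xi in domains for xj in neighbors[xi])
    while queue:
        xi, xj = queue.popleft()
        # Values of the tail xi with no supporting value left in the head xj.
        unsupported = [
            v for v in domains[xi]
            if not any(allowed(xi, v, xj, w) for w in domains[xj])
        ]
        if unsupported:
            domains[xi] = [v for v in domains[xi] if v not in unsupported]
            if not domains[xi]:
                return False  # empty domain: the caller should backtrack
            for xk in neighbors[xi]:
                if (xk, xi) not in queue:
                    queue.append((xk, xi))
    return True


def differ(xi, v, xj, w):
    """Map-coloring constraint: adjacent regions take different colors."""
    return v != w


# Assumed example: SA is already restricted to blue; V and NSW are still open.
domains = {
    "SA": ["blue"],
    "V": ["red", "green", "blue"],
    "NSW": ["red", "green", "blue"],
}
neighbors = {"SA": ["V", "NSW"], "V": ["SA", "NSW"], "NSW": ["SA", "V"]}
ok = ac3(domains, neighbors, differ)
```

In this small case the call prunes blue from both V and NSW and reports success; adding a neighbor of SA whose only remaining value is also blue would instead empty a domain and make `ac3` return `False`.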