用R软件做聚类分析的例子.ppt

- 格式:ppt

- 大小:755.51 KB

- 文档页数:95

INTERFACE WORKSHOP-APRIL 2004RFtools--for Predicting and Understanding DataLeo BreimanStatistics, UCBleo@Adele CutlerMathematics, USUadele@u1. Overview of Features--Leo Breiman2. Graphics and Case Sturies--Adele Cutler3. Nuts and Bolts--Adele CutlerThe Data AvalancheThe ability to gather and store data has resulted in an avalanche of scientific data over the last 25 years. Who is trying to analyze this data and extract answers from it?There are small groups of academic statisticians, machine learning specialists in academia or at high end places likeIBM, Microsoft, NEC, ETC.More numerous are the workers in many diverse projects trying to extract significant information from data.Question (US Forestry Service). "We have lots of satellite data over our forests. We want to use this data to figure out what is going on"Question (LA County) "We have many years of information about incoming prisoners and whether they turned violent. We want to use this data to screen incoming prisoners for potential violent behavior."Tools NeededWhere are the tools coming from?SAS$$$S+$$$SPSS$$$R000 (free open source) Other scattered packagesThe most prevalent of these tools are two generations old--General and non-parametricCARTlike (binary decision trees) ClusteringNeural NetsWhat Kind Of Tools Are Needed toAnalyze Data ?An example--CARTThe most successful tool (with lookalikes) of the last 20 years. Why?1) Universally applicable to classification and regression problems with no assumptions on the data structure.2) The picture of the tree structure gives valuable insights into which variables were important and where.3) Terminal nodes gave a natural clustering of the data into homogenous groups.4) Handles missing data and categorical variables efficiently.4) Can handle large data sets--computational requirements are order of MNlogN where N is number of cases and M is number of variablesDrawbacksaccuracy: current methods such as SVMs and ensembles average 30% lower error rates than CART.instability: change the data a little and you get a different tree picture. So the interpretation of what goes on is built on shifting sands.In 2003 we can do betterWhat would we want in a tool to be a useful to the sciences.6 Tool Specifications For ScienceThe Minimum1) Universally applicable in classification andregression.2) Unexcelled accuracy3) Capable of handling large data sets4) Handles missing values effectivelyMuch Morethink of CART tree picture.5) which variables are important?6) how do variables interact?7) what is the shape of the data--howdoes it cluster?8) how does the multivariate action ofthe variables separate classes?9) find novel cases and outliers7 ToolmakersAdele Cutler & Leo Breimanfree open source written in f77/users/breiman/RFtoolsThe generic names of our tools is random forests (RF).Characteristics as a classification machine:1) Unexcelled accuracy-about the same as SVMs2) Scales up to large data sets.Unusually RichIn the wealth of scientifically important insights it gives into the data It is a general purpose tool, not designed for any specific application8 Outline of Part One (Leo Breiman)I The Basic Paradigma. error, bias and varianceb. randomizing "weak" predictorsc. two dimensional illustrationsd. unbiasedness in higher dimensionsII.Definition of Random Forestsa) the randomization usedb) properties as a classification machinec) two valuable by-productsoob data and proximities9 III Using Oob Data and Proximitiesa) using oob data to estimate errorb) using oob data to find importantvariablesc) using proximities to computeprototypesd) using proximities to get 2-d datapicturese) using proximities to replace missingvaluesf) using proximiites to find outliersg) using proximities to find mislabeleddataIV Other Capabilitiesa)balancing errorb) unsupervised learning10I. The Fundamental ParadigmGiven a training set of dataT=(y n,x n)n=1,...,N} where the y n are the response vectors and the x n are vectors of predictor variables:Problem: Find a function f on the space of prediction vectors with values in the response space such that the prediction error is small.If the (y n,x n) are i.i.d from the distribution (Y,X) and given a function L(y,y') that measures the loss between y a nd the prediction y': the prediction error isPE(f,T)=E Y,X L(Y,f(X,T))Usually y is one dimensional.If numerical, the problem is regression.the loss is squared error.If unordered labels, it is classification.11Bias and Variance in Regression For a specific predictor the bias measures its "systematic error". The variance measures how much it "bounces around"the Bias-Variance Decomposition A random variable Y related to a random vector X can be expressed as (1) where f *(X) = E(Y|X), E( ε |X) = 0 This decomposes Y into its structural part f *(X) which can be predicted in terms of X , and the unpredictable noise component. Y = f *(X) + ε12Mean-squared generalization error of a predictor f (x,T ) is PE( f (•,T)) = EY,X (Y − f (X,T))2(2)where the subscripts indicate expectation with respect to Y,X holding T fixed. Take the expectation of (2) over all training sets of the same size drawn from the same distribution . This is the mean-squared generalization error PE*( f ) . Let f (x) be the average over training sets of the predicted value at x. That is; (3) f (x) = ET ( f (x,T)).13The bias-variance decomposition(4)PE*( f ) = Eε 2 +EX ( f *(X) − f (X))2 +EX,T ( f (X,T)− f (X))2the first term is the noise variance,the second is the bias squaredthe third is the variance.14Weak Learners Definition: a weak learner is a prediction function that has low bias. Generally, low bias comes at the cost of high variance. A weak learner is usually not an accurate predictor because of high variance. two dimensional example The 100 case training set is generated by taking: x(n) =100 uniformly spaced points on [0,1] y(n)=sin(2*pi*x(n))+N(0,1) The weak learnerf (x,T)is defined by:If x(n)≤x<x(n+1) then y(n),y(n+1) is linearly interpolated between y(n),y(n+1) i.e. the weak learner is join the data dots by straight line segments.15FIRST TRAINING SET 3 2 1 y values 0 -1 -2 -3 -4 0 .2 .4 x variable .6 .8 1WEAK LEARNER FOR FIRST TRAINING SET 4 3 2 1 y values 0 -1 -2 -3 -4 0 .2 .4 x variable .6 .8 116Bias 1000 training sets are generated in the same way and the 1000 weak learners averaged.AVERAGE OF WEAK LEARNERS OVER 1000 TRAINING SETS 1.51.5 y values0-.5-1-1.5 0 .2 .4 x value .6 .8 1The averages approximate the underlying function sin(2*pi*x). The weak learner is almost unbiased but with large variance. But 1000 replicate training sets are rarely available. Making Silk Purses out of Weak Learners Here are some examples of our fundamental paradigm applied to a single training set17using the same "connect-the -dots" weak learner.18FiIRST SMOOTH EXAMPLE21Y Variables0-1data function prediction-2 0 .2 .4 X Variable .6 .8 119SECOND SMOOTH EXAMPLE1.5data function 1 prediction.5 Y Variables 0 -.5 -1 0 .2 .4 X Variable .6 .8 120THIRD SMOOTH EXAMPLE2data function prediction1Y Variables 0 -1 0 .2 .4 X Variable .6 .8 1T he Paradigm--IID Randomization of Weak LearnersThe predictions shown above are the averages of 1000 weak learners. Here is how the weak learners are formed:-2-1.5-1-.5.511.52Y V a r i a b l e 0.2.4.6.81X VariableFORMING THE WEAK LEARNERSubsets of the training set consisting of two-thirds of the cases is selected at random. All the (y,x) points in the subset are connected by lines.Repeat 1000 times and average the 1000 weak learners prediction.The Paradigm-ContinuedThe kth weak learner is of the form:f k(x,T)=f(x,T,Θk)where Θk is the random vector that selects the points to be in the weak learner.The Θk are i.i.d.If there are N cases in the training set, each Θk selects, at random, 2N/3 integers from among the integers 1,...,N.The values of y(n),x(n) for the selected n are deleted from the training set.The ensemble predictor is:F(x,T)=1Kf(x,T,Θk) k∑Algebra and the LLN leads to:Var(F)=E X,Θ,Θ'[ρT(f(x,T,Θ)f(x,T,Θ'))Var T(f(x,T,Θ) where Θ,Θ'are independent. Applying the mean value theorem--Var(F)=ρVar(f)andBias2(F)=E Y,X(Y−E T,Θf(x,T,Θ))2≤E Y,X,Θ(Y−E T f(x,T,Θ))2=EΘbias2f(x,T,Θ)+Eε2Using the iid randomization of predictors leaves the bias approximately unchanged while reducing variance by a factor of ρThe MessageA big win is possible with using iid randomization of weak learners as long as their correlation and bias are low.In sin curve example, base predictor is connect all points in order of x(n).bias2=.000variance=.166For the ensemblebias2 = .042variance =.0001Random forests is an example of iid randomization applied to binary classifiction trees (CART-like)What is Random ForestsA random forest (RF) is a collection of tree predictorsf(x,T,Θk),k=1,2,...,K)where the Θk are i.i.d random vectors.In classification, the forest prediction is the unweighted plurality of class votesThe Law of Large Numbers insures convergence as k→∞The test set error rates (modulo a little noise) are monotonically decreasing and converge to a limit.That is: there is no overfitting as the number of trees increasesBias and CorrelationThe key to accuracy is low correlation and bias.To keep bias low, trees are grown to maximum depth.To keep correlation low, the current version uses this randomization.i) Each tree is grown on a bootstrap sample of the training set.ii) A number m is specified much smaller than the total number of variables M.iii) At each node, m variables are selected at random out of the M.iv) The split used is the best split on these m variablesThe only adjustable parameter in RF is m. User setting of m will be discussed later.27 Properties as a classification machine.a) excellent accuracyin tests on collections of datasets, has better accuracy thanAdaboost and Support VectorMachinesb) is fastwith 100 variables, 100 trees ina forest can be grown in thesame computing time as 3single CART treesc) handlesthousands of variablesmany valued categoricalsextensive missing valuesbadly unbalanced data setsd) givesinternal unbiased estimateof test set error as trees areadded to ensemblee) cannot overfit(already discussed)28 Two Key ByproductsThe out-of-bag test setFor every tree grown, about one-third of the cases are out-of-bag (out of the bootstrap sample). Abbreviated oob.The oob samples can serve as a test set forthe tree grown on the non-oob data.This is used to:i) Form unbiased estimates of the forest testset error as the trees are added.ii) Form estimates of variable importance.29 The Oob Error Estimateoob is short for out-of-bag meaning not inthe bootstrap training sample.the bootstrap training sample leaves outabout a third of the cases.each time a case is oob, it is put down the corresponding tree and given a predicted classification.for each case. as the forest is grown, the plurality of these predictions give a forest class prediction for that case.this is compared to the true class, to give the oob error rate.Illustration-satellite data30 This data set has 4435 cases, 35 variables and a test set of 2000 cases. If the output to the monitor is graphed for a run of 100 trees, this is how it appears:The oob error rate is larger at the beginning because each case is oob in only about a third of the trees.The oob error ate is used to select m (c a l l e d mtry in the code)by starting with m=M, running about 25 trees, recording the oob error rate. Then increasing and decreasing m u n t i l the minimum oob error is found.31 Using Oob for Variable Importanceto assess the importance of the mth variable, after growing the kth tree randomly permute the values of the mth variable among all oob cases.put the oob cases down the tree.compute the decrease in the number of votes for the correct class due to permuting the mth variable.average this over the forest.also compute the standard deviation of the decreases and the standard error.dividing the average by the se gives a z-score.assuming normality, convert to asignificance value.the importance of all variables is assessed in a single runIllustration-breast cancer data699 cases, 9 variables, two classes.initial error rate is 3.3%.added 10,000 independent unit normalvariables to each case.did a run to generate a list 10,009 long ofvariable importances and ordered them by z-scorehere are the first 12 entriesvariable # raw score z-score significance6 3.2740.9360.1753 3.521 0.910 0.1812 3.484 0.902 0.1831 2.369 0.898 0.1857 2.811 0.879 0.1908 2.266 0.847 0.1995 2.164 0.829 0.2044 1.853 0.814 0.2089 0.825 0.700 0.2428104 0.016 0.204 0.419430 0.005 0.155 0.4385128 0.004 0.147 0.4412003 NIPS competition on feature selection in data sets with thousands of variablesover 1600 entries from some of the mostprominent people in Machine Learning.the top 2nd and 3rd entry used RF for feature selection.Illustration-Microarray Data 81 cases, three classes, 4682 variablesThis data set was run without variable deletionThe error rate is 1.25% --one case misclassified.Importance of all variables is computed in a single run of 1000 trees.1357z -s c o r e 010002000300040005000gene numberZ-SCORES FOR GENE EXPRESSION IMPORTANCE34 The ProximitiesSince the trees are grown to maximum depth, the terminal nodes are small.For each tree grown, pour all the data down the tree.If two data points x n and x k occupy the same terminal node,increase prox(x n,x k)by one.At the end of forest growing, these proximities are normalized by division by the number of trees.They form an intrinsic similarity measure between pairs of data vectors.These are used to:i) Replace missing values.ii) Give informative data views via metric scaling.iii) Understanding how variables separate classes--prototypesiv) Locate outliers and novel cases35 Replacing Missing Values using ProximitiesRF has two ways of replacing missing values.The Cheap WayReplace every missing value in the mth coordinate by the median of the non-missing values of that coordinate or by the most frequent value if it is categorical.The Expensive WayThis is an iterative process. If the mth coordinate in instance x n is missing then it is estimated by a weighted average over the instances x k with non-missing mth coordinate where the weight is prox(x n,x k).The replaced values are used in the next iteration of the forest which computes new proximities.The process it automatically stopped when no more improvement is possible or when five iterations are reached.Tested on data sets , this replacement method turned out to be remarkably effective.Illustration-DNA Splice Datathe DNA splice data set has 60 variables, all four valued categorical, three classes, 2000 cases in the training set and 1186 in the test set.interesting as a case study because the categorical nature of variables makes many other methods, such as nearest neighbor,difficult to apply.runs were done deleting 10%, 20%,30%, 40%,and 50%. at random and both methods used to replace.forests were constructed using the replaced values and the test set accuracy of the forests c o m p u t e d ,010203040t e s t s e t e r r o r %1020304050percent missingERROR VS MISSING FOR MFIXALL AND MFIRTRIt is remarkable how effective the proximity-based replacement process is. Similar results have been gotten on other data sets.Using Proximites to Picture the Data Clustering=getting a picture of the data.To cluster, you have to have a distance,a dissimilarity, or a similarity between pairs of instances.Challenge: find an appropriate distance measure between pairs of instances in 4691 dimensions. Euclidean? Euclidean normalized?The values (1-proximity(k,j) ) are distances squared in a high-dimensional Euclidean space.They can be projected down onto a low dimensional space using metric scaling.Metric scaling derives scaling coordinates which are related to the eigenvectors of a modified version of the proximity'38 An Illustration: Microarray Data81 cases, 4691 variables, 3 classes (lymphoma)error rate (CV) 1.2%--no variable deletionOthers do as well, but only with extensive variable deletion.So have a few algorithms that can give accurate classification.But this is not the goal, more is needed forthe science.1) What does the data look like? how does it cluster?2) Which genes are active in the discrimination?3) What multivariate levels of gene expressions discriminate between classes.2) can be answered by using variable importance in RF.now we work on 1) and 3)39Picturing the Microarray Data The graph below is a plot of the 2nd scaling coordinate vs. the first:-.2-.10.1.2.3.4.5.62n d S c a l i n g C o o r d i n a t e-.5-.4-.3-.2-.10.1.2.3.41st Scaling CoordinateMetric Scaling Microarray Dataconsider the possiblilities of getting a picture by standard clustering methods.i.e. find an appropriate distance measure between 4691 variables!40 Using Proximites to Get PrototypesPrototypes are a way of getting a picture of how the variables relate to the classification.For each class j, it searches for that case n1 such that weighted class j cases is among its K nearest neighbors in proximity measure is largest.Among these K cases the median, 25th percentile, and 75th percentile is computed for each variable. The medians are the prototype for class j and the quartiles give an estimate of is stability.For the second class j prototype, a search is made for that case n2 which is not a member of the K neighbors to n1 having the largest weighted number of class j among its K nearest neighbors.This is repeated until all the desired prototypes for class j have been computed. Similarly for the other classes.Illustration-Microarray DataIn the microarray data, the class sizes were 2943 9. K is set equal to 20, and a single prototype is computed for each class using only the 15most important variables.0.25.5.751v a r i a b l e s v a l u e s246810121416variablesPROTOTYPES FOR MICROARRAY DATAIt is easy to see from this graph how the separaration into classes works. For instance,cl;ass 1 is low on variables 1,2-high on 3, low on 4, etc.Prototype VariabilityIn the same run the 25th and 75th percentiles are computed for each variable. Here is the graph of the prototype for class 2 together with percentiles.25.5.751v a r i a b l e v a l u e s246810121416variablesUPPER AND LOWER PROTOTYPE BOUNDS CLASS 1The prototypes show how complex theclassification process may be, involving the need to look at multivariate values of the variables.Using Proximities to Find OutliersQutliers can be found using proximities An outlier is a case whose proximities to all other cases is small.Based on this concept, a measure of outlyingness is computed for each case in the training sample.The measure for case x(n) is 1/(sum of squares of prox(x(n),x(k)) , k not equal to nOur rule of thumb that if the measure is greater than 10, the case should be carefully inspected.Outlyingness for the Microarray Data-2024********o u t l y i n g n e s s102030405060708090sequence numberOutlyingness Measure Microarray Data45Illustration-- Pima Indian DataAs second example, we plot the outlyingness for the Pima Indians hepatitis data. This data set has 768 cases, 8 variables and 2 classes.It has been used often as an example inMachine Learning research and is suspected of containing a number of outliers.510152025o u t l y i n g n e s s200400600800sequence numberOutlyingness Measure Pima DataIf 10 is used as a cutoff point, there are 12cases suspected of being outliers.46 Mislabeled Data as OutliersOften, the instances in the training set are labeled by human hand. The results areoften either ambiguous or downright incorrect.Our experiment--change 100 labels atrandom in the DNA data. Maybe these will turn up as outliers.47-.1.91.92.93.94.9o u t l i e r m e a s u r e500100015002000case numberaltered classunaltered class)UTLIER MEASURE-ALTERED CLASSES48 Learning from Unbalanced Data SetsIncreasingly often, data sets are occurring where the class of interest has a population that is a small fraction of the total population.In document classification, the number of relevant documents may be 1-2% of the total number.In drug searches, the number of active drugsin the sample may be similarly small.In such unbalanced data, the classifier will achieve great accuracy by classifying almostall cases as the majority case, thus--completely misclassifying the class of interest.Example--Small Class In Satellite Data Class 4 in the satellite data has 415 cases. The other classes total 4020 cases.objectives--considered as a two class problem (class 4 relabelled class 2) from the rest(class1)1) equalize the error rates between the classes.2) find wich variables are important in separating the two classes1st run: no attention to unbalanceoverall error rate 5.8%error rate-class 2 51.0%error rate-class 1 1.2%2nd run: 8:1 weight on class 2overall error rate 12.9%error rate-class 2 13.1%error rate-class 1 11.3%Variables Importances Unweighted And Weighted Here is a graph of both z-scores:48121620z -s c o r e s s2468101214161820222426283032343638variableVARIABLE IMPORTANCE-BALANCED AND UNBALANCEDThere are significant differences. Forexample, variable 18 becomes important. So does variable 23.In the unbalanced data, because class 2 is virtually ignored, the variable importances tend to be more equal.In the balanced case a small number stand out.。

R语言聚类分析实例教程R语言是一种广泛使用的统计分析和数据可视化的编程语言。

聚类分析是一种无监督学习方法,用于将数据集中的对象分成不同的组或类。

在这个实例教程中,我们将使用R语言进行聚类分析。

首先,让我们导入所需的包。

在R中,可以使用`install.packages(`函数安装包,然后使用`library(`函数加载包。

```Rinstall.packages("cluster")install.packages("factoextra")library(cluster)library(factoextra)```接下来,我们准备好要进行聚类分析的数据集。

在这个实例中,我们将使用一个名为`iris`的经典数据集,其中包含了150个不同花朵的测量数据。

你可以使用`head(`函数来查看数据集的前几行。

```Rdata(iris)head(iris)```现在,我们已经准备好开始进行聚类分析了。

首先,我们需要选择一个合适的聚类方法。

在这个实例中,我们将使用K均值聚类方法。

K均值聚类是一种划分聚类方法,它将数据集划分为k个不重叠的簇。

在R中,可以使用`kmeans(`函数来进行K均值聚类。

我们将设置簇的数量为3,并使用`Sepal.Length`和`Sepal.Width`这两个变量进行聚类。

```Rkmeans_model <- kmeans(iris[, c("Sepal.Length","Sepal.Width")], centers = 3)```接下来,我们可以使用`fviz_cluster(`函数可视化聚类结果。

这个函数将绘制数据集中的所有点,并使用颜色标记每个点所属的簇。

```Rfviz_cluster(kmeans_model, data = iris[, c("Sepal.Length", "Sepal.Width")])```现在,让我们对聚类结果进行一些分析。

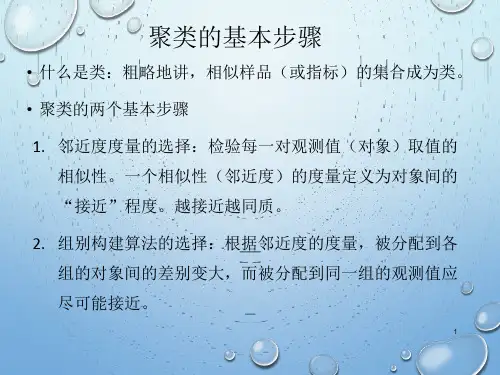

聚类分析原理及R语言实现过程聚类分析原理及R语言实现过程聚类分析定义与作用:是把分类对象按照一定规则分成若干类,这些类不是事先设定的,而是根据数据的特征确定的。

在同一类中这些对象在某种意义上趋向于彼此相似,而在不同类中对象趋向于彼此不相似。

在经济、管理、地质勘探、天气预报、生物分类、考古学、医学、心理学以及制定国家标准和区域标准等许多方面应用十分广泛,是国内外较为流行的多变量统计分析方法之一,在机器学习中扮演重要角色。

聚类分析的类型是实际问题中,如根据各省主要的经济指标,将全国各省区分成为几个区域等。

这个主要的经济指标是我们用来分类的依据。

称为指标(变量),用X1、X2 …Xp表示,p是变量的个数。

在聚类分析中,基本的思想是认为所研究的样品或者多个观测指标(变量)之间存在着程度不同的相似性(亲疏关系)。

根据这些相识程度,把样品划分成一个由小到大的分类系统,最后画出一张聚类图表示样品之间的亲疏关系。

根据分类对象的不同,可将聚类分析分为两类,一是对分类处理,叫Q 型;另一种是对变量处理,叫R型。

聚类统计量聚类分析的基本原则是将有较大相似性的对象归为同一类,可进行聚类的统计量有距离和相似系数。

聚类分析的方法:系统聚类法、快速聚类法、模糊聚类法。

系统聚类常用的有如下六种:1、最短距离法;2、最长距离法;3、类平均法;4、重心法;5、中间距离法;6、离差平方和法快速聚类常见的有K-means聚类。

R语言实现系统聚类和K-means聚类过程详解系统聚类R语言教程第一步:计算距离在R语言进行系统聚类时,先计算样本之间的距离,计算之前先对样品进行标准变换。

用scale()函数。

R语言各种距离的计算用dist()函数来实现。

具体用法为:dist(x , method = " euclidean " , diag = FALSE, upper = FALSE, p = 2)x:为数据矩阵或者数据框。

R语言层次聚类分析层次聚类分析是一种常用的聚类分析方法,常用于对数据进行分类和群组划分。

该方法通过计算数据点间的相似度或距离矩阵,将数据点聚集为不同的群组或类别。

层次聚类分析的优势在于可以通过可视化结果来直观地理解数据的结构和组织。

层次聚类方法可以分为两类:凝聚式和分裂式。

凝聚式层次聚类方法从每个数据点作为单独的类别开始,然后将它们合并成越来越大的类别,直到所有数据点都被合并为一个类别。

而分裂式层次聚类方法则是从所有数据点作为一个类别开始,然后逐步将其中的数据点划分为不同的类别,直到每个数据点都被划分到一个单独的类别中。

在R语言中,可以使用不同的包来实现层次聚类分析。

最常用的包包括`hclust`、`agnes`和`dendextend`。

其中,`hclust`包提供了凝聚式层次聚类的函数,`agnes`包提供了凝聚式层次聚类的函数,并提供了更多的选项和功能,`dendextend`包则提供了对层次聚类结果的可视化和扩展功能。

以下是一个基本的层次聚类分析的示例:```R#安装和加载相关的包install.packages("cluster")library(cluster)#创建数据集set.seed(123)x <- matrix(rnorm(60), ncol = 3)#计算数据点间的欧氏距离dist_matrix <- dist(x)hc <- hclust(dist_matrix)#绘制层次聚类结果的树状图plot(hc)```在这个例子中,我们首先创建了一个包含3个变量的数据集,其中包含了60个数据点。

然后使用`dist`函数计算了数据点间的欧氏距离,得到了距离矩阵。

接下来,我们使用`hclust`函数进行层次聚类分析,得到了一个聚类结果的树状图。

最后,使用`plot`函数对树状图进行可视化。

除了这个基本示例之外,还可以使用不同的参数和选项来进一步定制层次聚类分析。

R语言之高级数据分析「聚类分析」作者简介Introduction姚某某这一节主要总结数据分析中聚类分析的思想。

聚类分析仅根据在数据中发现的描述对象及其关系的信息,将数据对象分组。

其目标是,组内的对象相互之间是相似的(相关的),而不同组中的对象是不同的(不相关的)。

组内相似性(同质)越大,组间差别越大,说明聚类就越好。

这一解释来自于《数据挖掘导论》,已经是大白话,很好理解了。

举个栗子:把生物按照界(Kingdom)、门(Phylum)、纲(Class)、目(Order)、科(Family)、属(Genus)、种(Species)分类。

0. 聚类分析的一般步骤0.1. 步骤•选择适合的变量,选择可能对识别和理解数据中不同观测值分组有重要影响的变量。

(这一步很重要,高级的聚类方法也不能弥补聚类变量选不好的问题)•缩放数据,一般将数据标准化处理即可,用于避免各种数据由于量纲大小不同所带来的不同•寻找异常点,异常点对聚类分析的结果影响很大,因此要筛选并删除•计算距离,后面再细讲,这个距离用于判别相关关系的大小,进而影响聚类分析的结果•选择聚类算法,层次聚类更适用于小样本,划分聚类更适用于较大数据量。

还有许多其他的优秀算法,根据实际选择合适的。

•得到结果,一是获得一种或多种聚类方法的结果,二是确定最终需要的类数,三是提出子群,得到最终的聚类方案•可视化和解读,用于展示方案的意义•验证结果0.2. 计算距离计算距离,是指运用一种合适的距离计算方式,来计算出不同观测之间的距离,这个距离用于度量观测之间的相似性或相异性。

可选的方式有欧几里得距离、曼哈顿距离、兰氏距离、非对称二元距离、最大距离和闵可夫斯基距离。

最常用的为欧几里得距离。

它计算的是两个观测之间所有变量之差的平方和的开方:通常用于连续型数据的距离度量。

1. 划分聚类分析将观测值分为K组,并根据给定的规则改组成最优粘性的类,即为划分方法。

通常有两种:K 均值和 K 中心点1.1. K 均值聚类K 均值聚类分析是最常见的划分方法,它使用质心来表示一个类。

利用R内置数据集iris(鸢尾花)第一步:对数据集进行初步统计分析检查数据的维度> dim(iris)[1] 150 5显示数据集中的列名> names(iris)[1] "Sepal.Length" "Sepal.Width" "Petal.Length" "Petal.Width" "Species"显示数据集的内部结构> str(iris)'data.frame': 150 obs. of 5 variables:$ Sepal.Length: num 5.1 4.9 4.7 4.6 5 5.4 4.6 5 4.4 4.9 ...$ Sepal.Width : num 3.5 3 3.2 3.1 3.6 3.9 3.4 3.4 2.9 3.1 ...$ Petal.Length: num 1.4 1.4 1.3 1.5 1.4 1.7 1.4 1.5 1.4 1.5 ...$ Petal.Width : num 0.2 0.2 0.2 0.2 0.2 0.4 0.3 0.2 0.2 0.1 ...$ Species : Factor w/ 3 levels "setosa","versicolor",..: 1 1 1 1 1 1 1 1 1 1 ...显示数据集的属性> attributes(iris)$names --就是数据集的列名[1] "Sepal.Length" "Sepal.Width" "Petal.Length" "Petal.Width" "Species"$s --个人理解就是每行数据的标号[1] 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20[21] 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40[41] 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60[61] 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80[81] 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100[101] 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120[121] 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140[141] 141 142 143 144 145 146 147 148 149 150$class --表示类别[1] "data.frame"查看数据集的前五项数据情况> iris[1:5,]Sepal.Length Sepal.Width Petal.Length Petal.Width Species1 5.1 3.5 1.4 0.2 setosa2 4.9 3.0 1.4 0.2 setosa3 4.7 3.2 1.3 0.2 setosa4 4.6 3.1 1.5 0.2 setosa5 5.0 3.6 1.4 0.2 setosa查看数据集中属性Sepal.Length前10行数据> iris[1:10, "Sepal.Length"][1] 5.1 4.9 4.7 4.6 5.0 5.4 4.6 5.0 4.4 4.9同上> iris$Sepal.Length[1:10][1] 5.1 4.9 4.7 4.6 5.0 5.4 4.6 5.0 4.4 4.9显示数据集中每个变量的分布情况> summary(iris)Sepal.Length Sepal.Width Petal.Length Petal.Width SpeciesMin. :4.300 Min. :2.000 Min. :1.000 Min. :0.100 setosa :501st Qu.:5.100 1st Qu.:2.800 1st Qu.:1.600 1st Qu.:0.300 versicolor:50Median :5.800 Median :3.000 Median :4.350 Median :1.300 virginica :50Mean :5.843 Mean :3.057 Mean :3.758 Mean :1.1993rd Qu.:6.400 3rd Qu.:3.300 3rd Qu.:5.100 3rd Qu.:1.800Max. :7.900 Max. :4.400 Max. :6.900 Max. :2.500显示iris数据集列Species中各个值出现频次> table(iris$Species)setosa versicolor virginica50 50 50根据列Species画出饼图> pie(table(iris$Species))算出列Sepal.Length的所有值的方差> var(iris$Sepal.Length)[1] 0.6856935算出列iris$Sepal.Length和iris$Petal.Length的协方差> cov(iris$Sepal.Length, iris$Petal.Length)[1] 1.274315算出列iris$Sepal.Length和iris$Petal.Length的相关系数,从结果看这两个值是强相关。

基于R语言遗传多样性聚类分析实例(操作系统Mac)1.数据为0、1矩阵,排列方式如图。

运行代码:2. 21份材料聚类分析> library(cluster)> library(vegan)> library(permute)> library(lattice)> a=read.csv("soso.csv",header=F,s=1)> d=vegdist(a,method="jac")> hc.UPGMA=hclust(d,"average")> par(family='STKaiti') #华文行楷> par(family='STSongti-SC-Regular') #宋体简> plot(hc.UPGMA,hang=-1,main="",xlab="供试材料Materials",ylab="遗传距离Genetic distance")3. 21份供试材料聚类结果重排距离矩阵热图分析> library(cluster)> library(vegan)> library(permute)> library(lattice)> library(gclus)> library(RColorBrewer)> a=read.csv("soso.csv",header=F,s=1)> d=vegdist(a,method="jac")> hc.UPGMA=hclust(d,"average")> Hm=reorder.hclust(hc.UPGMA,d)> dend=as.dendrogram(Hm)> heatmap(as.matrix(d),Rowv=dend, symm=TRUE,margin=c(3,3))数据排列方式> a=read.csv("sg.csv",header=T) > pc <-princomp(a,cor=TRUE)> summary(pc,loadings=TRUE)Importance of components:Comp.1 Comp.2Standard deviation 3.7590459 1.21318973Proportion of Variance 0.6928774 0.07008711Cumulative Proportion 0.6728774 0.74296453Comp.3 Comp.4Standard deviation 1.03793096 0.92225843Proportion of Variance 0.05130003 0.04050289Cumulative Proportion 0.79426457 0.83476745计算结果中Proportion of Variance:Comp.1 +Comp.2 +Comp.3=0.81,即贡献率大于80。

R软件在系统聚类分析中的应用提要多元统计聚类方法已被广泛应用于自然科学和社会科学的各个领域,而在现实处理多元数据聚类分析中,离不开统计软件的支持;R软件由于其免费、开源、强大的统计分析及其完美的作图功能已得到越来越多人的关注与应用;本文结合实例介绍了 R软件在多元统计系统分析中的应用。

关键词:R软件;系统聚类分析;多元统计弓I言多€统计分析是统计学的一个重要分支,也称多变量统计分析;在现实生活中,受多种指标共同作用和影响的现象大量存在,多元统计分析就是研究多个随机变量之间相互依赖关系及其内在统计规律的重要学科,其中最常用聚类分析方法,由于多元统计聚类分析方法一般涉及复杂的数学理论,一般无法用手工计算,必须有计算机和统计软件的支持。

在统计软件方面,常用的统计软件有SPSS、SAS、STAT、R、S-PLUS,等等。

R软件是一个自由、免费、开源的软件,是一个具有强大统计分析功能和优秀统计制图功能的统计软件,现已是国内外众多统计学者喜爱的数据分析工具。

本文结合实例介绍R软件在多元统计聚类分析中的应用。

一、系统聚类分析聚类分析又称群分析,它是研究(样品或指标)分类问题的一种多元统计方法,所谓类,通俗地说,就是指相似元素的集合。

在社会经济领域中存在着大量分类问题,比如若对某些大城市的物价指数进行考察,而物价指数很多,有农用生产物价指数、服务项目价指数、食品消费物价指数、建材零售价格指数等等。

由于要考察的物价指数很多,通常先对这些物价指数进行分类。

总之,需要分类的问题很多,因此聚类分析这个有用的工具越来越受到人们的重视,它在许多领域中都得到了广泛的应用。

聚类分析内容非常丰富,有系统聚类法、有序样品聚类法、动态聚类法、模糊聚类法、图论聚类法、聚类预报法等;最常用最成功的聚类分析为系统聚类法,系统聚类法的基本思想为先将n个样品各自看成一类,然后规定样品之间的“距离”和类与类之间的距离。

选择距离最近的两类合并成一个新类,计算新类和其他类(各当前类)的距离,再将距离最近的两类合并。

R语言进行中文分词和聚类目标:对大约6w条微博进行分类环境:R语言由于时间较紧,且人手不够,不能采用分类方法,主要是没有时间人工分类一部分生成训练集……所以只能用聚类方法,聚类最简单的方法无外乎:K-means与层次聚类。

尝试过使用K-means方法,但结果并不好,所以最终采用的是层次聚类,也幸亏结果还不错……⊙﹏⊙分词(Rwordseg包):分词采用的是Rwordseg包,具体安装和一些细节请参考作者首页。

请仔细阅读该页提供的使用说明pdf文档,真是有很大帮助。

•安装:P.S.由于我是64位机,但是配置的rj包只能在32bit的R上使用,而且Rwordseg包貌似不支持最新版本的R(3.01),所以请在32bit的R.exe(2.15)中运行如下语句安装0.0-4版本:install.packages("Rwordseg", repos = "")貌似直接在Rstudio中运行会安装失败,而且直接在Rstudio中点击install安装,安装的是0.0-5版本,我就一直失败……•使用:1.分词时尽量关闭人名识别segmentCN(doc,recognition=F)否则会将“中秋国庆”,分为“中”“秋国庆“2.可以使用insertWords()函数添加临时的词汇3.对文档向量进行分词时,强烈建议用for循环对每一个元素执行segmentCN,而不要对整个向量执行因为我蛋疼的发现对整个向量执行时,还是会出现识别人名的现象……4.运行完后请detach()包,removeWords()函数与tm包中的同名函数冲突。

微博分词的一些建议:1.微博内容中经常含有url,分词后会将url拆散当做英文单词处理,所以我们需要用正则表达式,将url去掉:gsub(pattern="http:[a-zA-Z\\/\\.0-9]+","",doc)2.微博中含有#标签#,可以尽量保证标签的分词准确,可以先提取标签,然后用insertWords()人工添加一部分词汇:3.library("stringr")4.tag=str_extract(doc,"^#.+?#") #以“#”开头,“."表示任意字符,"+"表示前面的字符至少出现一次,"?"表示不采用贪婪匹配—即之后遇到第一个#就结束5.tag=na.omit(tag) #去除NAtag=unique(tag) #去重文本挖掘(tm包):•语料库:分词之后生成一个列表变量,用列表变量构建语料库。