半监督分类方法在软件缺陷预测中的方法比较

- 格式:pdf

- 大小:651.11 KB

- 文档页数:8

半监督学习的优缺点分析在机器学习领域,半监督学习是一种非常重要的方法。

与监督学习和无监督学习相比,半监督学习可以通过利用有标签和无标签的数据,为机器学习模型提供更多的信息,从而提高模型的性能。

然而,半监督学习也存在一些缺点,需要我们进行深入的分析。

优点一:利用未标记数据半监督学习的一个显著优点是可以利用未标记的数据。

在现实生活中,很多时候我们只能获得一小部分有标签的数据,而大部分数据都是未标记的。

利用这些未标记数据,可以帮助机器学习模型更好地理解数据的分布和特征,从而提高模型的泛化能力。

优点二:降低人力成本与监督学习相比,半监督学习可以降低人力成本。

在监督学习中,标记数据的获取需要大量的人工标注,而在半监督学习中,我们可以利用未标记的数据,减少对标记数据的依赖,从而减少了人力成本。

缺点一:标记数据的噪声半监督学习的一个明显缺点是标记数据的噪声问题。

在实际应用中,有标签的数据可能存在错误标注或者不准确的标签,这些噪声数据会对模型的训练产生负面影响,导致模型性能下降。

缺点二:依赖领域知识在半监督学习中,需要针对具体的领域进行特征工程和模型设计。

这就要求我们具备丰富的领域知识,才能更好地利用未标记数据来辅助模型训练。

缺乏对领域知识的理解和把握,可能会导致模型的泛化能力不足,无法取得良好的性能。

优点三:扩大数据规模半监督学习可以通过未标记数据的利用,扩大数据规模。

在监督学习中,由于有标记数据的限制,模型的训练往往受到数据规模的限制。

而在半监督学习中,我们可以利用更多的未标记数据,扩大训练数据集的规模,从而提高了模型的性能和泛化能力。

缺点三:标记数据的稀缺性另一个值得关注的缺点是有标签数据的稀缺性。

在实际应用中,获得有标签的数据往往是非常困难的,有时甚至无法获得足够的有标签数据来训练模型。

这就对半监督学习提出了更高的要求,需要更好地利用未标记数据来弥补有标签数据的不足。

综上所述,半监督学习的优点在于可以利用未标记数据、降低人力成本、扩大数据规模;缺点则主要表现在标记数据的噪声、依赖领域知识和标记数据的稀缺性上。

基于半监督集成学习的软件缺陷预测王铁建;吴飞;荆晓远【期刊名称】《模式识别与人工智能》【年(卷),期】2017(30)7【摘要】在软件缺陷预测中,标记样本不足与类不平衡问题会影响预测结果.为了解决这些问题,文中提出基于半监督集成学习的软件缺陷预测方法.该方法利用大量存在的未标记样本进行学习,得到较好的分类器,同时能集成一系列弱分类器,减少多数类数据对预测产生的偏倚.考虑到预测风险成本问题,文中还采用训练样本集权重向量更新策略,降低有缺陷模块预测为无缺陷模块的风险.在NASA MDP数据集上的对比实验表明,文中方法具有较好的预测效果.%The software defect prediction is usually adversely affected by the limitation of the labeled modules and the class-imbalance of software defect data.Aiming at this problem, a semi-supervised ensemble learning software defect prediction approach is proposed.High-performance classifiers can be built through semi-supervised ensemble learning by using a large amount of unlabeled modules and a better prediction capability is achieved for class-imbalanced data by using a series of weak classifiers to reduce the bias generated by the majority class.With the consideration of the cost of risk in software defect prediction, a sample weight vector updating strategy is employed to reduce the cost of risk caused by misclassifying defective modules as non-defective ones.Experimental results on NASA MDPdatasets show better software defect prediction capability of the proposed approach.【总页数】7页(P646-652)【作者】王铁建;吴飞;荆晓远【作者单位】武汉大学计算机学院软件工程国家重点实验室武汉430072;南京邮电大学自动化学院南京210023;武汉大学计算机学院软件工程国家重点实验室武汉430072【正文语种】中文【中图分类】TP391【相关文献】1.基于数据过采样和集成学习的软件缺陷数目预测方法 [J], 简艺恒;余啸2.基于多核集成学习的跨项目软件缺陷预测 [J], 黄琳;荆晓远;董西伟3.基于集成学习的静态软件缺陷预测模型构建 [J], 丁晓梅4.基于集成学习的静态软件缺陷预测模型构建 [J], 丁晓梅5.基于代价敏感半监督的跨项目软件缺陷数预测模型 [J], 高晶因版权原因,仅展示原文概要,查看原文内容请购买。

Software Engineering and Applications 软件工程与应用, 2020, 9(2), 116-123Published Online April 2020 in Hans. /journal/seahttps:///10.12677/sea.2020.92014Multi-Source Software Defect PredictionModel Based on Semi-Supervised LearningLonghai Yu, Xiaoling Wu*, Jie Lin, Zunhong XuGuangdong University of Technology, Guangzhou GuangdongReceived: Mar. 27th, 2020; accepted: Apr. 14th, 2020; published: Apr. 21st, 2020AbstractThis paper studies the method of establishing a general software defect prediction model between different software projects. By analyzing the project information of multi-source software, this paper designs 25-dimensional software features for machine learning. In order to overcome the differences between different software projects and achieve the generality of the model, a Self-training algo-rithm based on semi-supervised learning is used to generate a classifier. Finally, the 25-dimensional data features are used to build training data, and a general multi-source software defect predic-tion model is generated by the Self-training algorithm.KeywordsSelf-Training, Software Defect Prediction, Multi-Source Software基于半监督学习的多源软件缺陷预测模型于龙海,吴晓鸰*,凌捷,许遵鸿广东工业大学,广东广州收稿日期:2020年3月27日;录用日期:2020年4月14日;发布日期:2020年4月21日摘要本文研究在不同的软件项目之间,建立通用软件缺陷预测模型的方法。

半监督学习的优缺点分析半监督学习是一种介于监督学习和无监督学习之间的机器学习方法,它使用带有标签的数据和未标记的数据来构建模型。

在实际应用中,半监督学习具有一定的优势和局限性,接下来我们将对其优缺点进行分析。

优点一:利用未标记数据半监督学习能够有效利用未标记的数据,通过未标记数据的信息来提高模型的泛化能力。

在实际场景中,很多数据都是未标记的,利用这些未标记数据可以帮助模型更好地理解数据分布和特征之间的关系。

这使得半监督学习在数据稀缺的情况下仍然具有很强的实用性。

优点二:节省标注成本相比于监督学习,半监督学习可以节省大量的标注成本。

在很多情况下,标注数据需要耗费大量的时间和精力,而且标注的数据也可能存在错误。

半监督学习能够充分利用已有的标签数据以及未标记数据,从而降低了标注成本,提高了模型的训练效率。

优点三:适用范围广半监督学习在各种领域都有着广泛的应用,包括图像识别、自然语言处理、推荐系统等。

它能够应对大规模的数据,同时也能够处理多类别、高维度的数据。

因此,半监督学习在实际应用中有着广泛的适用性,能够解决许多现实生活中的问题。

然而,半监督学习也存在一些缺点。

缺点一:过拟合风险在半监督学习中,模型可能会过度依赖未标记的数据,导致模型的泛化能力下降,出现过拟合的情况。

尤其是当未标记数据的分布与标记数据的分布不一致时,很容易出现过拟合的问题。

因此,在使用半监督学习时,需要对未标记数据进行充分的分析和处理,以减小过拟合的风险。

缺点二:标签传播不准确半监督学习中常用的标签传播算法在面对复杂的数据分布时,可能会导致传播不准确的问题。

尤其是在高维度、多类别的数据中,标签传播算法很容易出现错误的标注结果,从而影响模型的训练效果。

因此,需要对标签传播算法进行优化和改进,以提高其准确性和可靠性。

缺点三:数据需求较高半监督学习对数据的要求较高,需要足够多的标记数据和未标记数据来进行训练。

尤其是在某些领域,如医疗诊断、金融风控等,数据的获取和标注都具有一定的难度和成本。

OpenAI研究员谈半监督学习:数据不足情况下的学习方法【专栏:前沿进展】随着为机器提供更多高质量的标签,监督学习模型的性能也会提高。

然而,获取大量带标注样本的代价十分高昂。

在机器学习中,有一些方法用于解决标签稀少的场景,半监督学习是其中一种解决方案,它可以利用一小部分有标签数据和大量的无标签数据进行学习。

对于只有有限标签数据的有监督任务,通常有四种候选方案:1.预训练+微调(Pre-Training+Fine-Tuning):在大规模无监督数据上训练一个与任务无关(Task-Agnostic)的模型,例如在文本数据上训练的Pre-Training LMs,以及通过自监督学习在无标签图片上预训练的视觉模型等。

然后,在下游任务中通过少量的有标签样本集合对模型进行微调。

2.半监督学习(Semi-SupervisedLearning):在有标签数据和无标签样本上共同学习。

很多视觉相关的任务研究的就是这种方法。

3.主动学习(Active Learning):为样本打标签的成本很昂贵,但是在给定成本预算的前提下,我们仍然希望可以获得的更多带标签数据。

主动学习旨在选择最有价值的无标签样本进行收集,帮助我们在有限的预算下采取明智的行动。

4.预训练+ 数据集自生成(Pre-Training + Dataset Auto-Generation):给定一个良好的预训练模型,我们可以利用它生成更多的有标签样本。

受少样本学习(Few-Shot Learning)的启发,这种方式在语言领域很流行。

本文是OpenAI研究员Lilian Weng(翁荔)的最新博客文章,智源社区已经获得Lilian Weng个人博客授权。

博客地址:/lil-log/。

本篇为“数据不足情况下的学习”(Learning With Not Enough Data)系列文章的第一部分,主题是半监督学习(Semi-Supervised Learning)。

机器学习知识:机器学习中的半监督学习半监督学习是指在训练机器学习模型时,数据集中只有部分数据被标记,而剩余的数据并没有被标记,但它们同样可以被用于训练模型。

事实上,大型数据集中未标记的数据比标记的数据更为常见,这就使得半监督学习在实际应用中变得极其重要。

半监督学习的目标是利用已标记的数据和未标记的数据训练出具有高泛化能力的模型,从而提高模型的预测准确性。

值得注意的是,与监督学习相比,半监督学习所需的标记数据量要少得多,这使得半监督学习相对于监督学习更加经济实惠。

目前,实现半监督学习的方法有很多种,本文将介绍主流的几种方法以及它们各自的优缺点。

1.基于图的半监督学习基于图的半监督学习是一种流行的方法,它将已知标签的数据点与其余未标签的数据点之间的关系表示为图。

然后,算法利用未标记数据点之间的相似性来预测其标签。

与此同时,已经标记的数据点也在算法中发挥着重要作用。

基于图的半监督学习将数据点之间的关系表示为点之间的边,其中点可以是样本,可以是特征,也可以是混合体。

对于图的构建,有两种常见的方法:- k-邻居图:对于每个数据点,根据距离计算选择距离最近的k 个点。

然后,将它们之间的边添加到图中。

- ε-邻域图:对于每个数据点,找到那些距离它的最近点小于ε的所有点,然后将它们之间添加边到图中。

基于图的半监督学习的优点在于该方法采用了一个非常直观的方法来对相似性进行建模,而且这种方法对于数据集的大小和类型都没有限制。

然而,它的缺点是它可能对错误的相似性进行建模,因此对于一些数据集来说,它可能并不是最好的选择。

2.生成式半监督学习生成式半监督学习是一种利用生成模型建立概率模型的方法,可以在数据集中有大量的未标记的数据时非常有用。

生成式半监督学习利用已知标签的数据和未知标签的数据来建立一个概率模型,该模型最大化数据的似然性,从而得到未知标签的数据的预测标签。

生成式半监督学习的优点在于该方法是非常灵活的,能够适用于各种不同类型的分布。

利用半监督学习进行标签不足的数据训练半监督学习是一种应对标签不足的数据训练的有效方法。

在一些实际场景中,获得大量带有标签的训练样本非常困难,但同时具备大量未标记样本的情况很常见。

半监督学习通过充分利用未标记样本的信息,辅助有限的标记样本进行模型训练,从而提高了分类器的性能。

本文将介绍半监督学习的基本原理、常用算法以及在实际应用中的一些案例。

一、半监督学习简介半监督学习是介于监督学习和无监督学习之间的一种学习方法。

与监督学习不同的是,半监督学习的训练集同时包含带标签和未标签的样本,而无监督学习则只有未标签的样本。

半监督学习的核心思想是通过利用未标签样本的分布信息,学习到更好的模型。

二、半监督学习算法1. 基于生成模型的方法基于生成模型的半监督学习算法假设标签和特征之间存在一定的概率分布关系,通过建立联合概率分布模型进行学习。

其中最经典的方法是"标签传播(label propagation)",该方法通过将未标签样本与已标签样本进行关联,并通过传播标签信息,最终为未标签样本预测标签。

2. 基于分歧的方法基于分歧的半监督学习算法认为在特征空间中,已标签样本和未标签样本应该在一定程度上保持分布一致,因此建立了一个能够测量分布一致性的准则。

典型的方法是"自学习(self-training)"和"协同训练(co-training)",两者都通过不同的方式使用已标签样本和未标签样本进行训练。

3. 基于图的方法基于图的半监督学习算法将已标签样本和未标签样本构建成图的形式,通过图结构对样本进行建模,并利用图结构来传播标签信息。

常见的图算法包括"谱聚类(spectral clustering)"和"Laplacian正则化(Laplacian regularization)"等。

三、半监督学习在实际应用中的案例半监督学习在各个领域中都有广泛的应用,包括计算机视觉、自然语言处理、生物信息学等。

半监督学习是一种结合有标签数据和无标签数据的机器学习方法。

在现实生活中,标记数据通常是昂贵和耗时的,而无标记数据却是相对容易获取的。

因此,半监督学习的优缺点一直是学术和工业界关注的焦点。

本文将对半监督学习的优缺点进行分析,并讨论其在不同领域的应用。

优点:1. 充分利用无标签数据半监督学习能够利用大量的无标签数据,从而提高模型的性能。

在一些领域,无标签数据可能比有标签数据更加丰富和多样化,通过半监督学习,我们可以更好地利用这些宝贵的信息。

2. 降低标注成本标注数据的获取通常需要大量的人力和物力成本,而且标注过程中可能存在主观误差。

半监督学习可以在一定程度上减少对标注数据的依赖,从而降低了标注成本。

3. 解决数据稀疏问题在一些领域,有标签数据可能非常稀缺,而无标签数据却很容易获取。

半监督学习可以通过充分利用无标签数据,从根本上解决了数据稀疏的问题,提高了模型的泛化能力。

缺点:1. 对数据质量要求高在半监督学习中,无标签数据的质量对模型的性能有很大的影响。

如果无标签数据存在噪声或者错误,可能会对模型产生负面影响,因此对数据质量的要求较高。

2. 难以处理标签不准确的情况在实际应用中,有时候标签数据可能存在不准确或者错误的情况。

半监督学习很难处理这种情况,因为模型通常会受到错误标签的干扰,导致性能下降。

3. 难以解决领域转移问题在一些领域,由于数据分布的不同或者特征的变化,可能会导致领域转移的问题。

半监督学习在处理领域转移问题上存在一定的困难,需要更加复杂的方法和技巧。

应用:半监督学习在计算机视觉、自然语言处理、推荐系统等领域都有着广泛的应用。

在计算机视觉领域,半监督学习可以用于图像分类、目标检测等任务,充分利用大量的无标签图像数据,提高模型的性能。

在自然语言处理领域,半监督学习可以用于文本分类、情感分析等任务,充分利用大量的无标签文本数据,提高模型的泛化能力。

在推荐系统领域,半监督学习可以用于用户行为预测、个性化推荐等任务,充分利用大量的用户行为数据,提高模型的准确性。

半监督学习的优缺点分析深度学习作为人工智能领域的热门研究方向之一,其在图像识别、自然语言处理等领域取得了许多突破性成果。

而在深度学习中,半监督学习作为一种利用大量无标签数据和少量有标签数据进行模型训练的方法,也备受关注。

在实际应用中,半监督学习具有一定的优点和缺点,本文将围绕这一主题展开讨论。

首先,让我们来看一下半监督学习的优点。

半监督学习能够充分利用大量的无标签数据,从而可以提高模型的性能。

在许多现实场景中,获取大量有标签数据是非常昂贵甚至是不可能的,而无标签数据却往往是容易获得的。

半监督学习的方法允许模型利用这些无标签数据,从而提高模型的泛化能力和性能表现。

通过合理利用无标签数据,半监督学习能够使得模型在训练阶段更加稳健,从而使得模型在实际应用中表现更加可靠。

其次,半监督学习还能够帮助解决数据稀疏性的问题。

在许多领域中,由于数据采集的成本高昂或者数据样本分布不均匀,导致有标签数据的数量往往较少。

在这种情况下,传统的监督学习方法很容易产生过拟合或者欠拟合的问题,而半监督学习则能够通过合理利用无标签数据来弥补这一不足,从而提高模型的泛化能力。

然而,半监督学习也存在一些缺点。

首先,由于半监督学习利用了大量的无标签数据,因此其训练过程往往更加复杂和耗时。

在实际应用中,这可能会导致模型训练时间较长,而且需要更多的计算资源。

其次,半监督学习的方法往往对数据分布假设较为敏感。

在实际应用中,数据的分布往往是非常复杂的,而半监督学习的方法往往对数据的分布有一定的假设。

如果这一假设与实际的数据分布不符,可能会导致模型性能下降。

另外,半监督学习的方法也容易受到标签噪声的影响。

在实际数据中,标签往往是由人工标注的,而人工标注可能存在一定的错误。

如果模型在训练过程中过度依赖无标签数据,而忽略了有标签数据中的标签噪声,可能会导致模型性能下降。

因此,在实际应用中,需要对标签噪声进行有效的处理,以提高模型的性能。

综上所述,半监督学习作为一种利用大量无标签数据和少量有标签数据进行模型训练的方法,具有一定的优点和缺点。

半监督学习的优缺点分析引言在机器学习领域,监督学习和无监督学习是两种常见的学习方法。

然而,在实际应用中,数据标注的成本和时间消耗往往是制约监督学习效果的重要因素。

为了解决这一问题,半监督学习应运而生。

半监督学习结合了监督学习和无监督学习的优点,在一定程度上能够缓解数据标注的难题。

然而,半监督学习也存在一些缺点,本文将从优点和缺点两个方面进行分析。

半监督学习的优点1. 利用未标记数据监督学习需要大量的标记数据来训练模型,而无监督学习虽然不需要标记数据,但却无法充分利用标记数据的信息。

半监督学习则能够同时利用标记数据和未标记数据,充分利用了数据的信息,提高了模型的性能。

2. 降低人工标注成本在实际应用中,标记数据的获取需要大量的人力物力成本,而且在某些领域如医疗和金融等,标记数据的获取还受到法律法规和隐私保护的限制。

半监督学习能够通过利用未标记数据来降低标记数据的需求量,从而降低了人工标注的成本。

3. 提高模型的泛化能力通过利用未标记数据,半监督学习能够提高模型的泛化能力。

未标记数据包含了更多的数据分布信息,能够帮助模型更好地理解数据的特征和规律,从而提高了模型对未知数据的泛化能力。

4. 适用于大规模数据在面对大规模数据时,人工标注数据的难度和成本都会指数级增加。

而半监督学习能够通过利用大量的未标记数据来构建模型,从而更适用于大规模数据的处理。

半监督学习的缺点1. 对数据分布假设敏感半监督学习方法对数据分布的假设比较敏感,如果数据的分布假设不满足,很容易导致模型性能的下降。

在实际应用中,数据的分布往往是复杂多变的,而半监督学习方法对于非线性和高维数据的处理能力相对较弱。

2. 标记数据的质量对模型影响较大半监督学习方法依然需要一定数量的标记数据来指导模型的学习过程,而标记数据的质量对于模型的影响是非常大的。

如果标记数据本身存在标注错误或者噪声,很容易导致模型性能的下降。

3. 需要合适的算法和模型半监督学习方法需要合适的算法和模型来处理未标记数据和标记数据的融合问题,如果选择不当,很容易导致模型性能的下降。

半监督学习的优缺点分析在机器学习领域中,半监督学习作为一种重要的学习范式,旨在充分利用标记和未标记数据进行模型训练。

相较于监督学习和无监督学习,半监督学习具有独特的优势和劣势,本文将从多个角度对其进行分析。

首先,半监督学习的优势之一在于可以充分利用未标记数据。

在现实世界中,标记数据往往是十分珍贵和昂贵的,而未标记数据却相对容易获取。

半监督学习通过有效地利用未标记数据,可以提高模型的性能和泛化能力。

例如,在图像分类任务中,通过利用大量未标记图片,可以帮助模型更好地学习到底层特征,提高分类准确率。

其次,半监督学习还可以解决高维数据的问题。

在现实任务中,很多数据都是高维的,传统的监督学习方法往往需要大量标记数据才能训练出有效的模型。

而半监督学习可以通过利用未标记数据来进行降维和特征选择,从而有效地解决高维数据的问题,提高模型的训练效率和性能。

此外,半监督学习还可以应对数据标记不完整的情况。

在很多实际应用中,数据的标记往往是不完整的,例如在金融领域中的欺诈检测任务中,只有极少部分数据被标记为欺诈。

利用半监督学习方法,可以通过未标记数据进行半监督训练,从而提高模型对于未标记类别的识别能力。

然而,半监督学习也存在一些不足之处。

首先,半监督学习的性能高度依赖于未标记数据的质量。

如果未标记数据中存在噪声或者冗余信息,那么半监督学习可能会产生负面影响,导致模型性能下降。

因此,如何有效地利用未标记数据成为了半监督学习中的重要挑战之一。

其次,半监督学习需要设计更加复杂的模型和算法。

相较于监督学习和无监督学习,半监督学习需要同时考虑标记和未标记数据,因此需要设计更加复杂的模型和算法。

这就带来了更高的计算成本和实现难度,使得半监督学习在实际应用中的推广受到一定的限制。

另外,半监督学习在处理不平衡数据集时也存在一定的挑战。

在很多实际应用中,数据集往往是不平衡的,例如在医疗诊断任务中,患病样本往往是少数。

半监督学习在处理不平衡数据集时需要设计更加复杂的损失函数和采样策略,以保证模型对于少数类别的识别能力。

利用半监督学习方法进行缺陷检测半监督学习方法在缺陷检测领域具有广泛的应用,能够有效地利用未标记的数据来提高模型的性能。

本文将探讨半监督学习方法在缺陷检测中的应用,包括其原理、具体方法和实验结果等方面。

首先,我们先来了解一下什么是缺陷检测。

在制造业中,产品的质量控制是至关重要的。

而缺陷检测就是一种通过对产品进行检验和测试来发现其中存在的问题和不合格项的过程。

传统上,缺陷检测通常需要大量标记好的数据进行训练,但这种方式存在一些问题,比如标记数据难以获取、成本高昂等。

半监督学习方法提供了一种解决方案,在只有少量标记数据和大量未标记数据情况下仍能有效进行模型训练。

其核心思想是通过利用未标记数据中包含的信息来提高模型性能。

在缺陷检测领域,这些未标记数据可以是从生产线上获取到的大量产品信息。

半监督学习方法有多种具体实现方式,在下面我们将介绍其中几种常见且有效的方法。

首先是基于生成模型的方法。

生成模型是一种能够对数据进行建模的方法,通过学习数据的分布来生成新的样本。

在缺陷检测中,我们可以利用生成模型来对未标记数据进行建模,然后通过与标记数据进行对比来判断是否存在缺陷。

常见的生成模型包括高斯混合模型(GMM)和变分自编码器(VAE)等。

其次是基于图半监督学习的方法。

图半监督学习是一种基于图结构的学习方法,能够利用未标记数据中样本之间的关系来提高分类性能。

在缺陷检测中,我们可以将产品之间的关系构建成一个图结构,然后通过半监督学习算法来利用这个图结构进行缺陷检测。

另外还有基于自训练和协同训练等方法。

自训练是一种迭代式训练算法,在每次迭代中使用已标记数据训练一个初始分类器,然后使用这个分类器对未标记数据进行预测,并将预测结果作为新的标记数据加入到已标记数据中进行下一轮迭代。

协同训练则是将一个分类问题拆分成多个子问题,在每个子问题上使用不同特征和不同分类器进行训练,然后通过协同学习的方式来提高分类性能。

除了上述方法,还有很多其他的半监督学习方法可以应用于缺陷检测。

半监督学习的优缺点分析在机器学习领域中,半监督学习是一种介于监督学习和无监督学习之间的学习方式。

它利用标记和未标记的数据来进行模型训练,相比于监督学习,半监督学习可以更好地利用未标记数据,提高模型的性能。

然而,半监督学习也存在一些缺点,下面将对半监督学习的优缺点进行分析。

优点一:利用未标记数据提高模型性能半监督学习的最大优点之一是能够利用大量的未标记数据来提高模型的性能。

在许多实际场景中,获取标记数据是非常昂贵和耗时的,而未标记数据则往往十分容易获取。

半监督学习通过结合标记和未标记数据,可以更充分地利用数据资源,提高模型的泛化能力和预测准确性。

优点二:对小样本数据效果显著在一些领域中,标记数据的获取是非常困难的,比如医学影像识别、自然语言处理等领域。

在这些领域中,半监督学习可以发挥其优势,利用少量的标记数据和大量的未标记数据,显著提高模型的性能。

因此,半监督学习在小样本数据上有着明显的优势。

缺点一:对数据分布假设严格半监督学习通常基于“流形假设”,即认为数据空间中相邻的点具有相似的标记。

然而,在实际应用中,数据的分布往往是非常复杂的,不同类别之间可能存在重叠,这就使得半监督学习的假设过于简单。

当数据的分布与假设不符时,半监督学习容易受到影响,导致模型性能下降。

缺点二:标记数据质量依赖性强在半监督学习中,标记数据的质量对模型的影响非常大。

如果标记数据质量低下或存在噪声,那么模型的性能可能会受到严重影响。

因此,半监督学习对标记数据的质量有着较强的依赖性,这也是其一个较大的缺点。

优点三:领域适用性广泛半监督学习在各种领域中都有着广泛的适用性,包括计算机视觉、自然语言处理、生物信息学等。

在这些领域中,半监督学习可以利用未标记数据来提高模型性能,因此受到了广泛的关注和应用。

缺点三:训练过程复杂相比于监督学习,半监督学习的训练过程更加复杂。

在半监督学习中,需要对未标记数据进行有效的利用,设计合适的半监督学习算法,并解决标记数据质量、数据分布假设等问题,这使得半监督学习的训练过程更加困难和复杂。

异常检测中的无监督学习与半监督学习方法比较异常检测是机器学习和数据挖掘领域中的一个重要任务。

它的主要目标是通过观察数据集中的模式,识别出与其它样本不同或异常的样本。

异常检测在很多应用领域都有着广泛的应用,如金融欺诈检测、网络入侵检测和设备故障检测等。

目前,异常检测的方法可以分为无监督学习和半监督学习两种。

无监督学习方法是指在异常样本没有明确标记的情况下,仅通过对已有数据的学习来进行异常检测。

这种方法通常基于对正常样本的建模,然后利用这个模型来衡量新样本的异常程度。

常见的无监督学习方法有基于统计的方法、聚类方法和离群因子分析方法。

基于统计的方法是最经典的无监督学习方法之一。

它通过对正常样本的分布进行建模,通常使用概率密度估计方法来描述正常数据的分布。

当新样本与该分布的概率低于预定阈值时,就被判定为异常样本。

这种方法的优点是简单直观,但对数据的分布假设敏感,当数据分布复杂或含有噪声时,容易受到影响。

聚类方法是另一种常见的无监督学习方法。

它的思想是将相似的样本聚集在一起,通过测量新样本与聚类的距离来判断其异常程度。

常用的聚类算法有k-means、DBSCAN等。

聚类方法的优点是能够自动发现数据中的子群体,但它对于数据的分布和聚类数目的敏感性较强,同时处理高维数据时容易受到维度灾难的困扰。

离群因子分析方法是一种基于统计模型的方法,它通过分析样本与模型之间的差异来判断其异常程度。

这种方法将正常样本和异常样本分别作为两个随机过程,通过比较它们之间的因子得分差异来判断新样本的异常程度。

离群因子分析方法的优点是对数据分布和噪声具有较强的鲁棒性,但需要准确建模异常样本的分布。

与无监督学习不同,半监督学习结合了有标记样本和无标记样本的信息来进行异常检测。

这种方法可以利用有标记样本进行异常模型的建模,并且利用无标记样本进行模型的调整,从而提高模型的泛化能力。

常见的半监督学习方法有生成式模型、半监督聚类和半监督支持向量机等。

在机器学习中使用半监督学习的优缺点分析半监督学习在机器学习中的优缺点分析机器学习是一种模拟人类学习能力的技术,它通过从数据中自动分析和学习,从而使计算机能够实现任务的自主完成。

在机器学习中,监督学习是最常用的一种方法,它依赖于标记数据集来进行训练和预测。

然而,由于采集和标记大量的数据集成本高昂,监督学习的应用受到了一定的限制。

为了克服这一问题,人们开始研究半监督学习,这种学习方法在只有一小部分标记数据的情况下,利用未标记数据来进行训练和预测。

本文将对半监督学习在机器学习中的优缺点进行分析。

首先,半监督学习的优点之一是可以提高模型的泛化性能。

由于半监督学习可以利用更多的未标记数据进行训练,相比于只使用有限数量的标记数据,它可以更好地捕捉数据的分布特征,从而改善模型的泛化能力。

通过引入更多的未标记数据,半监督学习可以有效地降低过拟合的风险,提高模型的鲁棒性。

其次,半监督学习能够减轻标记数据的需求。

传统的监督学习需要大量的标记数据来进行训练和预测,这使得数据采集和标记成为机器学习应用的瓶颈。

而半监督学习能够利用未标记数据来进行训练,从而有效地降低标记数据的需求。

通过减轻对标记数据的依赖,半监督学习可以在数据有限的情况下仍然取得很好的性能,大大提高了机器学习的效率和可行性。

此外,半监督学习还可以应用于多领域的机器学习任务。

传统的监督学习方法在遇到多领域的问题时往往需要重新训练,而半监督学习方法可以通过利用共享的未标记数据来实现跨领域的学习。

这使得半监督学习可以在不同的领域应用中节省时间和精力,提高学习模型的适应性和泛化能力。

然而,半监督学习也有一些缺点。

首先,半监督学习的表现高度依赖于未标记数据的质量。

由于未标记数据没有经过专业人员的标注和验证,其中可能存在许多噪音或错误的数据。

如果未标记数据中包含大量的噪音,半监督学习可能会导致错误的模型学习和预测结果。

因此,在使用半监督学习之前,需要对未标记数据进行一定的质量检查和处理。

基于机器学习的软件缺陷预测技术随着计算机技术和软件开发的不断进步,软件开发变得越来越依赖于机器学习技术。

机器学习技术能够自动的学习和优化软件开发过程,提高软件开发的效率和质量。

在软件开发中,软件缺陷经常会给开发者带来很多不必要的麻烦。

因此,基于机器学习的软件缺陷预测技术成为了越来越主流的软件开发技术。

一、机器学习技术机器学习技术是一种基于人工智能的技术。

这种技术使机器能够通过学习过去的经验来完成特定任务。

机器学习技术包括监督式学习,非监督式学习和半监督式学习。

监督式学习是一种通过已知数据来预测未知数据的方式。

非监督式学习则是一种通过对未知数据进行分类来预测新数据的方式。

半监督式学习是一种结合监督式和非监督式学习的方式。

机器学习技术有许多应用,例如语音识别,机器翻译和图像分类等。

在软件开发中,机器学习技术可以用于预测软件缺陷,优化代码,改善软件性能和使用现有代码生成新的代码等。

二、机器学习技术在软件缺陷预测中的应用机器学习技术已经被广泛应用于软件缺陷预测。

机器学习技术可以分析大量的数据并预测软件缺陷。

它可以预测哪些代码区域可能会出现缺陷,并提供针对性的解决方法,以及提高代码的可重用性和可扩展性。

机器学习技术在软件缺陷预测中的应用可以分为两个阶段。

第一阶段是数据的收集和处理。

在这个阶段,需要对历史数据进行处理,包括特征提取和数据清洗。

第二阶段是训练预测模型。

预测模型可以使用监督式学习,非监督式学习和半监督式学习等机器学习算法进行训练。

这些模型可以预测哪些区域可能会出现软件缺陷,并提供解决方案。

三、预测模型在软件缺陷预测的应用实例预测模型已经广泛运用于软件缺陷的预测。

下面列举了一些预测模型在软件缺陷预测中的实例:1. Bagging based Random Forest Model这是一种基于Bagging和Random Forest的模型。

它使用多个决策树来进行分类并预测软件缺陷,可以提高预测结果的准确性和稳定性。

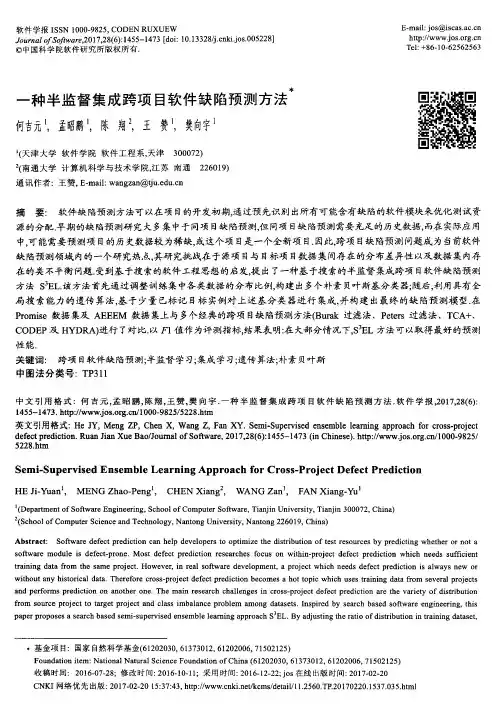

DOI 10.1515/jisys-2013-0030 Journal of Intelligent Systems 2014; 23(1): 75–82 Cagatay Catal*A Comparison of Semi-Supervised Classification Approaches for Software Defect PredictionAbstract: Predicting the defect-prone modules when the previous defect labels of modules are limited is a challenging problem encountered in the software industry. Supervised classification approaches cannot build high-performance prediction models with few defect data, leading to the need for new methods, techniques, and tools. One solution is to combine labeled data points with unlabeled data points during learning phase. Semi-supervised classification methods use not only labeled data points but also unlabeled ones to improve the generalization capability. In this study, we evaluated four semi-supervised classifica-tion methods for semi-supervised defect prediction. Low-density separation (LDS), support vector machine (SVM), expectation-maximization (EM-SEMI), and class mass normalization (CMN) methods have been inves-tigated on NASA data sets, which are CM1, KC1, KC2, and PC1. Experimental results showed that SVM and LDS algorithms outperform CMN and EM-SEMI algorithms. In addition, LDS algorithm performs much better than SVM when the data set is large. In this study, the LDS-based prediction approach is suggested for software defect prediction when there are limited fault data.Keywords: Defect prediction, expectation-maximization, low-density separation, quality estimation, semi-supervised classification, support vector machines.*Corresponding author: Cagatay Catal, Department of Computer Engineering, Istanbul Kultur University, Istanbul 34156, Turkey, e-mail: c.catal@.tr1 IntroductionThe activities of modern society highly depend on software-intensive systems, and therefore, the quality of software systems should be measured and improved. There are many definitions of software quality, but unfortunately, the general perception is that software quality cannot be measured or should not be measured if it works. However, this assumption is not true, and there are many software quality assessment models such as SQALE that investigate and evaluate several internal quality characteristics of software. Software quality can be defined differently based on different views of quality [19]:–Transcendental view: quality can be described with abstract terms instead of measurable characteristics and users can recognize quality if it exists in a software product.–Value-based view: quality is defined with respect to the value it provides and customers decide to pay for the software if the perceived value is desirable.–User view: quality is the satisfaction level of the user in terms of his/her needs.–Manufacturing view: process standards improve the product quality and these standards must be applied.–Product view: This view of quality focuses on internal quality characteristics.In this study, we use the product view definition of software quality, and internal quality characteristics are rep-resented with metrics values. Objective assessment of software quality is performed based on measurement data collected during quality engineering processes. Quality assessment models are quantitative analytical models and more reliable compared with qualitative models based on personal judgment.They can be classified into two categories: generalized and product-specific models [19]:1. Generalized models: Project or product-specific data are not used in generalized models and industrialaverages help to estimate the product quality roughly. They are grouped into three subcategories:–Overall models: a rough estimate of quality is provided.76 C. Catal: Semi-Supervised Classification Approaches for Software Defect Prediction–Segmented models: different quality estimates are applied for different product segments.–Dynamic models: dynamic model graphs depict the quality trend over time for all products.2. Product-specific models: Product-specific data are used in these models in contrast to the generalizedmodels.–Semi-customized models: These models apply historical data from previous releases of the same product instead of a profile for all products.–Observation-based models: These models only use data from the current project, and quality is esti-mated based on observations from the current project.–Measurement-driven predictive models: These models assume that there is a predictive relation between software measurements and quality.Software defect prediction models are in the measurement-driven predictive models group, and the objective of our research is to evaluate several semi-supervised classification methods for software defect prediction models when there are limited defect data. Defect prediction models use software metrics and previous defect data. In these models, metrics are regarded as independent variables and defect label is regarded as depend-ent variable. The aim is to predict the defect labels (defect-prone or non-defect-prone) of the modules for the next release of software. Machine-learning algorithms or statistical techniques are trained with previous software metrics and defect data. Then, these algorithms are used to predict the labels of new modules. The benefits of this quality assurance activity are impressive. Using such models, one can identify the refactoring candidate modules, improve the software testing process, select the best design from design alternatives with class level metrics, and reach a dependable software system.Software defect prediction models have been studied since 1990s and up to now, and defect-prone modules can be identified prior to system tests using these models. According to recent studies, the prob-ability of detection (PD) (71%) of defect prediction models may be higher than PD of software reviews (60%) if a robust model is built [15]. A panel at the IEEE Metrics 2002 conference did not support the claim [6] that inspections can find 95% of defects before testing, and this detection ratio was around 60% [15]. One member of the review team may inspect 8–20 lines of code per minute. This process is performed by all the members of a team consisting of 4–6 members [15]. Therefore, software defect prediction approaches are much more cost-effective in detecting software defects compared with software reviews. Readers who want to get more infor-mation about software defect prediction may read our literature review [2] and systematic review papers [4].Most of the studies in literature assume that there is enough defect data to build the prediction models. However, there are cases when previous defect data are not available, such as when a company deals with a new project type or when defect labels in previous releases may have not been collected. In these cases, we need new models to predict software defects. This research problem is called software defect prediction of unlabeled program modules.Supervised classification algorithms in machine learning can be used to build the prediction model with previous software metrics and previous defect labels. However, sometimes, we cannot have enough defect data to build accurate models. For example, some project partners may not collect defect data for some project components, or the execution cost of metrics collection tools on the whole system may be extremely expensive. In addition, during decentralized software development, some companies may not collect defect data for their components. In these situations, we need powerful classifiers that can build accurate classifi-cation models with very limited defect data or powerful semi-supervised classification algorithms that can benefit from unlabeled data together with labeled one. This research problem is called software defect predic-tion with limited defect data. This study is an attempt to identify a semi-supervised classification algorithm for limited defect data problem.We applied low-density separation (LDS), support vector machine (SVM), expectation-maximization (EM-SEMI), and class mass normalization (CMN) methods to investigate their performance on NASA data sets, which are CM1, KC1, KC2, and PC1. Although previous research on semi-supervised defect prediction reported that EM-SEMI-based semi-supervised approach is very effective [16], we showed that LDS and SVM algorithms provide better performance than EM-based prediction approach. Seliya and K hoshgoftaar [16]C. Catal: Semi-Supervised Classification Approaches for Software Defect Prediction 77 stated that their future work is to compare the EM-based approach with other semi-supervised learning approaches such as SVM or co-training. Therefore, the scope of our study is similar to their article’s scope, but we extend their study with new algorithms and evaluate several methods to find the best method. The details of the algorithms used in this study are explained in Section 3. In addition, recent studies on the use of semi-supervised learning methods for defect prediction are shown in detail in Section 2.This article is organized as follows: Section 2 presents the related work in the software defect prediction area. Section 3 explains the semi-supervised classification methods, whereas Section 4 shows the experimen-tal results. Finally, Section 5 presents the conclusions and the future works.2 Related WorkSeliya and Khoshgoftaar [16] applied EM algorithm for software defect prediction with limited defect data, and they reported that the EM-based approach provides remarkable results compared with classification algo-rithms. In the research project started in April 2008, we showed that naïve Bayes algorithm is the best choice to build a supervised defect prediction model for small data sets, and yet another two-stage idea (YATSI) algo-rithm may improve the performance of naïve Bayes for large data sets [3]. Researchers are very optimistic to apply unlabeled data together with labeled one when there is not enough labeled data for classification. However, we showed some evidence that this optimistic idea is not true for all the classifiers on all the data sets [3]. Naïve Bayes performance degraded when unlabeled data were used for YATSI algorithm on KC2, CM1, and JM1 data sets [3]. Recently, Li et al. [11] proposed two semi-supervised machine-learning methods (CoFor-est and ACoForest) for defect prediction. They showed that CoForest and ACoForest can achieve better perfor-mance than traditional machine-learning techniques such as naïve Bayes. Lu et al. [13] developed an iterative semi-supervised approach for software fault prediction and changed the size of labeled modules from 2% to 50%. They showed that this approach is better than the corresponding supervised learning approach with random forest as the base learner. This approach improves the performance only if the number of labeled modules exceeds 5%. Lu et al. [14] investigated a variant of self-training called fitting-the-confident-fits (FTcF) as a semi-supervised learning approach for fault prediction. In addition to this algorithm, they used a preproc-essing step called multidimensional scaling (MDS). They showed that this model, based on FTcF and MDS, is better than best supervised learning algorithms such as random forest. Jiang et al. [7] proposed a semi-supervised learning approach called ROCUS for software defect prediction. They showed that this approach is better than a semi-supervised learning approach that ignores the class-imbalance nature of the task. In these articles, researchers did not investigate the methods we used in this article. The drawback of these approaches that use semi-supervised learning methods is that they need at least some labeled data points [10].3 MethodsIn this section, semi-supervised classification methods that have been investigated are explained.3.1 Low-Density SeparationChapelle and Zien [5] developed the LDS algorithm in 2005. They showed that LDS provides better perfor-mance than SVM, manifold, transductive support vector machine (TSVM), graph, and gradient TSVM. LDS first calculates the graph-based distances and then applies gradient TSVM algorithm. LDS algorithm com-bines two algorithms [5]: graph (training an SVM on a graph-distance-derived kernel) and ∇TSVM (training a TSVM by gradient descent).78 C. Catal: Semi-Supervised Classification Approaches for Software Defect PredictionSVM was first introduced in 1992 [1], and now it is widely used for machine-learning research. SVM is a supervised learning method that is based on the statistical learning theory and the Vapnik-Chervonenkis dimension. Although standard SVM learns to separate the hyperplane on labeled data points, TSVM learns to separate the hyperplane on both labeled and unlabeled data points. Although TSVM appears to be a very powerful semi-supervised classification method, its objective function is nonconvex, and therefore, it is difficult to minimize this function, and sometimes, bad results may results from optimization heuristics such as SVMlight [5]. Because LDS includes two algorithms that are based on SVM and TSVM, LDS can be thought of as an extended version of SVM algorithms for semi-supervised classification. We used the MATLAB ( M athWorks, Natick, M A, USA) implementation of LDS algorithm, which can be accessed from www.kyb.tuebingen.mpg.de/bs/people/chapelle/lds. The motivation and theory behind this algorithm are discussed in a paper published by Chapelle and Zien [5]. They have shown that the combination of graph and ∇TSVM yields a superior semi-supervised classification algorithm.3.2 Support Vector MachineWe used SVMlight [8] software for the experiments. SVMlight is an implementation of Vapnik’s SVM [20] in C programming language for pattern recognition and regression. There are several optimization algorithms [9] in the SVMlight software.3.3 Expectation-MaximizationEM, which is an iterative algorithm, is used for maximum likelihood estimation with incomplete data. EM replaces missing data with estimated values, estimates model parameters, and then re-estimates the missing data [17]. Therefore, it is an iterative approach. In this study, the class label is used as the missing value. The theory and foundations of EM are discussed by Little and Rubin [12]. EM algorithm has been evaluated for semi-supervised classification in literature [17], and we included it to compare the performance of LDS with the performance of EM. We have noted that LDS algorithm outperforms EM algorithm.3.4 Class Mass NormalizationZhu et al. [21] developed an approach that is based on a Gaussian random field model defined on a weighted graph. On the weighted graph, vertices are labeled and unlabeled data points. The weights are given in terms of a similarity function between instances. Labeled data points are shown as boundary points, and unlabeled points appear as interior points. The CMN method was adopted to adjust the class distributions to match the prior knowledge [21]. Zhu et al. [21] chose Gaussian fields over a continuous state space instead of random fields over the discrete label set. They showed that their approach was promising on several data sets, and they used only the mean of the Gaussian random field, which is characterized in terms of harmonic functions and spectral graph theory [21]. In this article, we used this approach, which uses the CMN method, for our benchmarking analysis.4 Empirical Case StudiesThis section presents the data sets and performance evaluation metrics that were used in this study and the results of the experimental studies.C. Catal: Semi-Supervised Classification Approaches for Software Defect Prediction 794.1 System DescriptionNASA projects located in the PROMISE repository were used for our experiments. We used four public data sets, which are KC1, PC1, KC2, and CM1. The KC1 data set, which uses the C++ programming language, belongs to a storage management project for receiving/processing ground data. It has 2109 software modules, 15% of which are defective. PC1 belongs to a flight software project for an Earth-orbiting satellite. It has 1109 modules, 7% of which are defective, and uses the C implementation language. The KC2 data set belongs to a data-processing project, and it has 523 modules. The C++ language was used for the KC2 implementation, and 21% of its modules have defects. CM1 belongs to a NASA spacecraft instrument project and was developed in the C programming language. CM1 has 498 modules, of which 10% are defective.4.2 Performance Evaluation ParameterThe performance of the semi-supervised classification algorithms was compared using the area under receiver operating characteristic (ROC) curve (AUC) para m eter. ROC curves and AUC are widely used in machine learning for performance evaluation. ROC curve was first used in signal detection theory to evaluate how well a receiver distinguishes a signal from noise, and it is still used in medical diagnostic tests. The ROC curve plots probability of false alarm (PF) on the x-axis and the PD on the y-axis. A ROC curve is shown in Figure 1. ROC curves pass through points (0, 0) and (1, 1) by definition. Because the accuracy and precision parameters are deprecated for unbalanced data sets, we used AUC values for benchmarking.Equations (1) and (2) were used to calculate PD and PF values, respectively. Each pair (PD, PF), represents the classification performance of the threshold value that produces this pair. The pair that produces the best classification performance is the best threshold value to use in practice [18]. PD ,TP TP FN=+ (1) PF .FP FP TN =+ (2)10.50.750.25010.250.750.5Cost-adverse region PF=PD=no informationRisk-adverse regionPreferred curveNegativecurvePF=probability of false alarm P D =p r o b a b i l i t y o f d e t e c t i o n Figure 1. ROC Curve [15].80 C. Catal: Semi-Supervised Classification Approaches for Software Defect PredictionTable 2. Performance Results on KC2.Algorithm5%10%20%LDS 0.800.810.82SVM 0.840.850.85CMN 0.690.690.69EM-SEMI 0.470.560.55Table 3. Performance Results on KC1.Algorithm5%10%20%LDS 0.770.780.79SVM 0.760.750.70CMN 0.730.760.76EM-SEMI 0.490.480.50Table 4. Performance Results on PC1.Algorithm5%10%20%LDS 0.730.750.75SVM 0.700.730.70CMN 0.630.610.80EM-SEMI 0.600.580.55Table 1. Performance Results on CM1.Algorithm5%10%20%LDS 0.660.700.73SVM 0.760.760.73CMN 0.650.670.74EM-SEMI 0.470.470.534.3 ResultsWe simulated small labeled-large unlabeled data problem with 5%, 10%, and 20% rates and evaluated the performance of each semi-supervised classification algorithm under these circumstances. This means that we trained on only 5%, 10%, and 20% of the data set and tested on the rest for LDS, SVM, CMN, and EM-SEMI algorithms.Tables 1–4 show the performance results of each algorithm on different data sets with respect to these rates, and we compared classifiers using AUC values. According to experimental results in Tables 1 and 2, the best classifier for CM1 and KC2 is SVM because SVM has the highest AUC values for these data sets. Accord-ing to experimental results in Tables 3 and 4, the best classifier for KC1 and PC1 is LDS because LDS has the highest AUC values for these data sets.For all the data sets, SVM and LDS, which are based on SVM, provide better performance than EM-SEMI algorithm. When we looked at the size of data sets, we have noted that the size of data set is large when LDS provides better performance than SVM. Based on these experimental results, we can express that applying LDS algorithm is an ideal solution for large data sets when there are not enough labeled data.C. Catal: Semi-Supervised Classification Approaches for Software Defect Prediction 81We compared the results of this study with the performance results we achieved in our previous research [3], and we showed that SVM or LDS provides much better performance than the algorithms we used in our previous research [3]. This research also indicates that although EM algorithm has been suggested for semi-supervised defect prediction in literature [17], it is very hard to get acceptable results with this algorithm.All the classifiers reached their highest AUC values for the KC2 data set. This empirical observation may indicate that the KC2 data set has very few noisy modules or does not have any noisy module. This observa-tion has been observed in our previous research as well [3]. Although CM1 and KC2 data sets have roughly the same number of modules, the classifiers’ performances are higher for KC2 than CM1. Furthermore, lines of code for KC2 are approximately two times longer than CM1 even though they have roughly the same number of modules. According to these observations, we can conclude that SVM performance variations for KC2 and CM1 data sets may be due to the different usage of lines of code per method.5 Conclusions and Future WorksSoftware defect prediction using only few defect data is a challenging research area, where only very few studies were made. The previous works were based on EM-based approaches, and in this study, we showed that the LDS-based defect prediction approach works much better than EM-based defect prediction [3]. The main contribution of our article is two-fold. First, this study compares several semi-supervised algorithms for software defect prediction with limited data and suggests a classifier for further research. Second, we empiri-cally show that LDS is usually the best semi-supervised classification algorithm for large data sets when there are limited defect data. In addition, SVM algorithm worked well on smaller data sets. However, the difference between the performance of SVM and LDS for small data sets is small, and quality managers may directly use LDS algorithm for semi-supervised defect prediction without considering the size of the data set. Because LDS provided high performance for our experiments, there is a potential research opportunity to investigate the LDS for much better models for semi-supervised software fault prediction. We did not encounter any article in literature that used LDS for this problem. In the future, we are planning to compare the performance of LDS with the recent approaches explained in Section 2 and correspond with their respective authors. We are also planning to use genetic algorithms to optimize the parameters of LDS algorithm and propose a new algorithm that can achieve higher performance. In addition, case studies of other systems such as open-source projects, e.g., eclipse, may provide further validation of our study.Received May 8, 2013; previously published online August 31, 2013.Bibliography[1] B. E. Boser, I. Guyon and V. Vapnik, A training algorithm for optimal margin classifiers, COLT1992 (1992), 144–152.[2] C. Catal, Software fault prediction: a literature review and current trends, Expert Syst. Appl.38 (2011), 4626–4636.[3] C. Catal and B. Diri, Unlabelled extra data do not always mean extra performance for semi-supervised fault prediction,Expert Syst. J. Knowledge Eng.26 (2009), 458–471.[4] C. Catal and B. Diri, A systematic review of software fault prediction studies, Expert Syst. Appl.36 (2009), 7346–7354.[5] O. Chapelle and A. Zien, Semi-supervised classification by low density separation, in: Proceedings of the Tenth Interna-tional Workshop on Artificial Intelligence and Statistics, pp. 57–64, Society for Artificial Intelligence and Statistics, 2005.[6] M. Fagan, Advances in software inspections, IEEE Trans. Software Eng.12 (1986), 744–751.[7] Y. Jiang, M. Li and Z. Zhou, Software defect detection with ROCUS, J. Comput. Sci. Technol.26 (2011), 328–342.[8] T. Joachims, Making large-scale SVM learning practical, advances in kernel methods – support vector learning, MIT Press,Cambridge, MA, 1999.[9] T. Joachims, Learning to classify text using support vector machines, dissertation, 2002.[10] E. Kocaguneli, B. Cukic and H. Lu, Predicting more from less: synergies of learning, in: 2nd International Workshop onRealizing Artificial Intelligence Synergies in Software Engineering (RAISE 2013), pp. 42–48, West Virgina University, San Francisco, CA, 2013.82 C. Catal: Semi-Supervised Classification Approaches for Software Defect Prediction[11] M. Li, H. Zhang, R. Wu and Z. Zhou, Sample-based software defect prediction with active and semi-supervised learning,Automated Software Eng. 19 (2012), 201–230.[12] R. J. A. Little and D. B. Rubin, Statistical analysis with missing data, Wiley, New York, 1987.[13] H. Lu, B. Cukic and M. Culp, An iterative semi-supervised approach to software fault prediction, in: Proceedings of the 7thInternational Conference on Predictive Models in Software Engineering (PROMISE ‘11), 10 pp., ACM, New York, NY, Article 15, 2011.[14] H. Lu, B. Cukic and M. Culp, Software defect prediction using semi-supervised learning with dimension reduction, in:Proceedings of the 27th IEEE/ACM International Conference on Automated Software Engineering (ASE 2012), pp. 314–317, ACM, New York, NY, 2012.[15] T. Menzies, J. Greenwald and A. Frank, Data mining static code attributes to learn defect predictors, IEEE Trans. SoftwareEng.33 (2007), 2–13.[16] N. Seliya and T. M. Khoshgoftaar, Software quality estimation with limited fault data: a semi-supervised learningperspective, Software Qual. Contr.15 (2007), 327–344.[17] N. Seliya, T. M. Khoshgoftaar and S. Zhong, Semi-supervised learning for software quality estimation, in: Proceedings ofthe 16th IEEE International Conference on Tools with Artificial Intelligence (ICTAI ‘04), pp. 183–190, IEEE Computer Society, Washington, DC, 2004.[18] R. Shatnawi, W. Li, J. Swain and T. Newman, Finding software metrics threshold values using ROC curves, J. SoftwareMaintenance Evol. Res. Pract.22 (2010), 1–16.[19] J. Tian, Software quality engineering: testing, quality assurance, and quantifiable improvement, John Wiley & Sons,Hoboken, New Jersey, USA, 2005.[20] V. Vapnik, The nature of statistical learning theory, Springer, New York, NY, USA, 1995.[21] X. Zhu, Z. Ghahramani and J. D. Lafferty, Semi-supervised learning using Gaussian fields and harmonic functions, ICML2003 (2003), 912–919.。