网格计算和云计算360度比较

- 格式:pdf

- 大小:635.50 KB

- 文档页数:5

云计算与网格计算的比较研究摘要:网格计算是一种能够整合零散资源并实现资源共享和协同工作的计算模式;云计算是网格计算、并行计算、分布式计算的发展,是一种新兴的商业计算模式。

它具有与网格计算不同的新的特点。

该文在研究网格计算与云计算概念的基础上从体系结构、专注方向、资源管理、作业调度等多种角度对网格计算与云计算进行了分析和研究。

云计算所采用的商业理念、成熟的资源虚拟化技术以及非标准化的规范,使其体系结构、资源管理、作业调度等方面呈现出了不同的特点,也更适宜于为用户提供按需服务的目标,但在安全方面仍需不断完善。

关键词:网格计算;云计算;体系结构;资源管理;作业调度;资源虚拟化中图分类号:TP311文献标识码:A文章编号:1009-3044(2011)17-4032-03Comparing Researh of Cloud Computing and Grid ComputingSHEN Li-jun, YANG Lan-juan, ZHAO Hua(College of Command Automation, PLA University of Science, Nanjing 210007, China)Abstract: Grid computing a computing mode of mergingscattered resources and realizing resources sharing and cooperative working; Cloud computing is the development of Grid computing, parallel computing and distributed computing, is a new business computing mode. It has some new characters, compared with grid computing. On the base of grid computing and cloud computing, Research the differences between grid computing and cloud computing, from kinds of degrees such as protocol architecture ,direction of concentrating, resources management, and job scheduling. Using business concept, mature resource virtualization and not standardized specification make cloud computing different with grid computing in protocol architecture, resources management and job scheduling , etc, which make it more suitable to provide users with on-demand service .But in terms of security still need improvement.Key words: grid computing; cloud computing protocol architecture; resources management; job scheduling; resource virtualization网格计算是伴随着大规模计算需求而产生的一种能够整合零散资源并实现资源共享和协同工作的计算模式,它的出现解决了很多领域复杂的问题。

云计算与网格计算360度比较1,2,3伊恩福斯特,4Y ong赵1Ioan拉伊库,5Shiyong路foster@,yozha@,iraicu@,shiyong@1计算机科学系,芝加哥芝加哥,IL,美国大学2Computation研究所,芝加哥,伊利诺伊州芝加哥大学,美国3Math与计算机科学部,阿贡国家实验室,阿贡,IL,美国4Microsoft公司,雷德蒙,华盛顿州,美国5计算机科学系,韦恩州立大学,密歇根州底特律,美国抽象的云计算已经成为另一个网络流行语后2.0。

不过,也有几十个不同的定义为云计算和似乎有没有什么是云共识。

另一方面,云计算并不是一个完全新的概念;它拥有错综复杂的连接到相对较新,但13年网格计算模式的建立,以及其他有关例如公用计算技术,集群计算和在一般的分布式系统。

本文比较和努力对比云计算从不同的角度与网格计算并给予两成的本质特征的认识。

1 100英里概述云计算还暗示,在未来,我们将不在本地计算机上的计算,而是集中设施由第三方经营的计算和存储工具。

我们相信不会错过的收缩包装拆开包装和安装软件。

不用说,这不是一个新概念。

事实上,早在1961年,计算机先驱约翰麦卡锡预言“计算可能有一天会被组织成一个公共事业” -又到如何推测这可能发生。

在20世纪90年代中期,这个词是用来形容电网技术,使消费者获得计算电源需求。

伊恩福斯特等人假定,通过规范使用要求的计算能力的协议,我们可以推动建立计算网格,类似的创作形式和公用事业的电力网。

研究人员后又在许多令人兴奋的方式对这些想法,例如生产大规模(的T eraGrid联邦制度,开放科学网格,caBIG,EGEE,地球系统网格)的不仅提供计算能力,而且数据和软件,需求。

标准组织(例如,开放网格论坛,绿洲)界定有关标准。

更平凡,这个术语还增选行业作为一个集群营销术语。

但是,没有提供可行的商业网格计算出现,至少直到最近。

那么,“云计算”只是一个新的网格叫什么名字?在信息技术,在技术,规模的命令数量级,并在这个过程中,活力十足,每5多年来,没有直接回答这个问题。

云计算和网格计算有什么本质区别/z/q157731426.htm?w=%CD%F8%B8%F1%BC%C6%CB%E3%BC%BC%CA%F5&spi=1&sr=1&w8=%E7%BD%91%E6%A0%BC%E8%AE%A1%E7%AE%97%E6%8A%80%E6%9 C%AF&qf=10&rn=360[标签:云计算,本质区别,区别]我对云了解的比较深入,对网格计算不太了解,但是初步观察发现相似之处很多,求解两者本质区别限量版回答:4 人气:108 解决时间:2009-10-03 20:35满意答案耐心看吧您可能非常关注云计算和网格计算的比较。

本文介绍了云计算服务类型,云计算和网格计算的相似与不同。

同时本文探讨了云计算优于网格计算的地方,两者面临的共同问题以及一些安全方面的问题。

本文以AmazonWeb Services 为例。

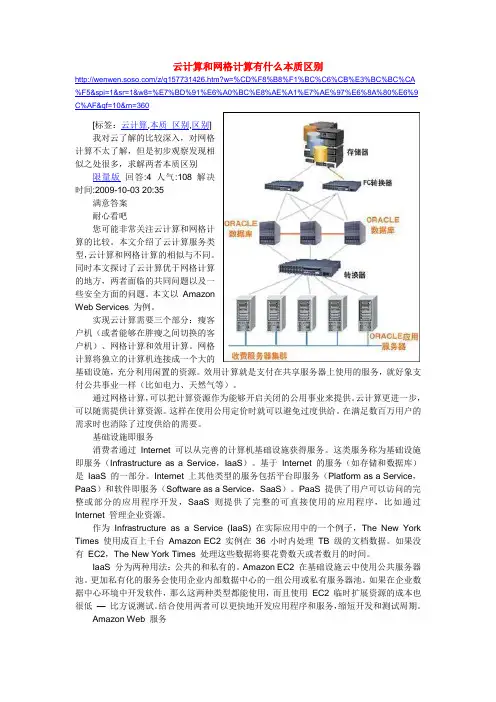

实现云计算需要三个部分:瘦客户机(或者能够在胖瘦之间切换的客户机)、网格计算和效用计算。

网格计算将独立的计算机连接成一个大的基础设施,充分利用闲置的资源。

效用计算就是支付在共享服务器上使用的服务,就好象支付公共事业一样(比如电力、天然气等)。

通过网格计算,可以把计算资源作为能够开启关闭的公用事业来提供。

云计算更进一步,可以随需提供计算资源。

这样在使用公用定价时就可以避免过度供给。

在满足数百万用户的需求时也消除了过度供给的需要。

基础设施即服务消费者通过Internet 可以从完善的计算机基础设施获得服务。

这类服务称为基础设施即服务(Infrastructure as a Service,IaaS)。

基于Internet 的服务(如存储和数据库)是IaaS 的一部分。

Internet 上其他类型的服务包括平台即服务(Platform as a Service,PaaS)和软件即服务(Software as a Service,SaaS)。

如何区别?三个角度看云计算与网格计算对于IT界很多资深工程师而言,网格计算(Grid Computing)是一个耳熟能详的概念,它是把一个需要非常巨大的计算能力才能解决的问题分成许多小的部分,然后把这些部分分配给许多个计算设备来进行处理,最后把这些计算结果综合起来得到最终结果的计算模式。

▲在本世纪初,网格计算和现在的云计算一样是一个非常火热的概念,但是由于网格计算在商业模式,技术和安全性这三方面的不足,使得其并没有在工程界和商业界取得预期的成功,但在学术界,还是有一定的应用,比如,用于寻找外星人的SETI计划等。

在2007年底云计算这个概念刚诞生的时候,由于其在概念上和网格计算比较类似,也希望能让IT资源像水电这类公用事业那样按需使用和随需应变,所以有部分业界的从业者认为云计算相对网格计算而言只是新瓶装旧酒而已,对于这点,我不是很认同,接下来我从下面几个方面来分析两者之间的区别。

概念方面在概念上,两者的各有侧重,网格计算主要强调的将一个巨大的问题分成许多个子问题,并通过许多个子节点分别对这些子问题进行计算,而云计算则强调通过后端的大型云计算中心来同时为多个用户服务。

领域和需求方面网格计算这个概念是诞生在学术界,主要是为了解决处理大型的计算难题,比如,寻找并发现对抗艾滋病毒更为有效的药物或查找那个地方会存有石油等。

而云计算则诞生在工程界,也就是Google 的数据中心,主要是为了满足用户海量的搜索请求等。

大家都应该知道,不同的领域和需求会引发出不同的产品,对于IT模式而言,也同样如此。

架构方面在架构方面,网格计算和云计算都可分为后端和前端这两部分,但在网格计算中计算工作主要由前端来完成,后端主要用于调度任务,而在云计算中计算工作则主要由后端的大型云计算中心完成,其前端是用来接受后端的计算成果并显示,还有,在网格计算中参与计算的设备经常是异构的,比如,运行Windows的笔记本和运行Unix 的小型机等,而在云计算中参与计算的设备往往是同构的,比如运行Linux的X86服务器,这样的做法不仅能简化管理,而且能提升运行效率。

云计算无非是要解决高性能、高可用性、可伸缩性而已。

云计算与网格计算的区别云计算与网格计算的深入比较当然,从定义上来说,二者都试图将各种IT资源看成一个虚拟的资源池,然后向外提供相应的服务。

云计算试图让“用户透明地使用资源”,而网格计算当初的口号就是让“使用IT资源像使用水电一样简单”。

根据维基百科所提供的定义,云计算是一种宽泛的概念,它允许用户通过互联网访问各种基于IT资源的服务,这种服务允许用户无需了解底层IT基础设施架构就能够享受到作为服务的“IT相关资源”。

而网格的内涵包括两个方面,一个方面是所谓的效用计算或者随需计算,在这一点上面,网格计算跟云计算是非常相似的,都是通过一个资源池或者分布式的计算资源来提供在线的计算或者存储等服务;另外一个方面就是所谓的“虚拟超级计算机”,以松耦合的方式将大量的计算资源连接在一起提供单个计算资源所无法完成的超级计算能力,这也是狭义上的网格计算跟云计算概念上最大的差别,也是本文要讨论的出发点。

目标不同一般来说,谈到网格计算大家都会想到当年风靡一时的搜寻外星人项目,也就是说通过在本机安装一个屏幕保护软件,就能够利用大家每个人的PC闲暇时候的计算能力来参与搜寻外星人的计算。

这也说明了网格的目标,是想要尽可能地利用各种资源。

它通过特定的网格软件,将一个庞大的项目分解为无数个相互独立的、不太相关的子任务,然后交由各个计算节点进行计算。

即便某个节点出现问题,没有能够及时返回结果,也不影响整个项目的进程,甚至即便某一个计算节点突然崩溃,其所承担的计算任务也能够被任务调度系统分配给其他的节点继续完成。

应该说,从这一点来说,作业调度是网格计算的核心价值。

现在谈到云计算的时候,我们就能够立刻想到通过互联网将数据中心的各种资源打包成服务向外提供。

一般来说,尽管云计算也像网格计算一样将所有的资源构筑成一个庞大的资源池,但是云计算向外提供的某个资源,是为了完成某个特定的任务。

比如说某个用户可能需要从资源池中申请一定量的资源来部署其应用,而不会将自己的任务提交给整个网格来完成。

云计算与网格计算分析比较在当今数字化的时代,计算技术的发展日新月异,云计算和网格计算作为两种重要的计算模式,为解决大规模数据处理和复杂计算问题提供了强大的支持。

然而,尽管它们都致力于提高计算资源的利用效率,但在许多方面仍存在着显著的差异。

云计算,这个在近年来迅速崛起的概念,以其便捷、灵活和按需服务的特点,赢得了众多企业和个人用户的青睐。

简单来说,云计算就像是一个超级大的计算资源库,用户可以根据自己的需求,随时从这个资源库中获取计算能力、存储空间和各种应用服务。

比如,当我们使用在线办公软件、观看视频流媒体或者存储大量照片时,实际上就是在享受云计算带来的便利。

云计算的优势在于其高度的可扩展性。

对于企业来说,如果业务量突然增长,需要更多的计算资源,云计算可以在短时间内迅速为其调配所需的资源,而无需进行大规模的硬件投资和升级。

同时,云计算还提供了可靠的数据备份和恢复服务,大大降低了数据丢失的风险。

而且,由于云计算服务通常由专业的供应商提供,他们拥有强大的技术团队和完善的安全措施,能够更好地保障服务的稳定性和安全性。

相比之下,网格计算则是一种相对较为传统的计算模式。

它将分布在不同地理位置的计算资源通过网络连接起来,形成一个虚拟的超级计算机。

网格计算的重点在于资源的共享和协同工作,以解决那些需要大量计算资源的科学研究和工程计算问题。

网格计算的一个典型应用场景是在科学研究领域,比如气象预测、药物研发和高能物理研究等。

在这些领域,计算任务往往非常复杂,需要整合多个研究机构的计算资源来共同完成。

网格计算通过统一的中间件和协议,实现了不同计算资源之间的无缝连接和协同工作,使得大规模的科学计算成为可能。

然而,网格计算也存在一些局限性。

首先,网格计算的资源共享通常是在特定的组织或机构之间进行,缺乏像云计算那样广泛的商业应用和用户基础。

其次,网格计算的配置和管理相对复杂,需要较高的技术门槛和专业知识。

此外,由于网格计算中的资源往往来自不同的所有者和管理体系,资源的可用性和稳定性可能会受到一定的影响。

云计算与网格计算的区别云计算与网格计算的概念首先,究竟什么是云计算(Cloud Computing)呢?钱教授指出,云就是互联网——做网络的似乎总是把网络抽象成云;云计算就是利用在Internet中可用的计算系统,能够支持互联网各类应用的系统。

云计算是以第三方拥有的机制提供服务,为了完成功能,用户只关心需要的服务,这是云计算基本的定义。

相对于网格计算(Grid Computing)和分布式计算,云计算拥有明显的特点:第一是低成本,这是最突出的特点。

第二是虚拟机的支持,使得在网络环境下的一些原来比较难做的事情现在比较容易处理。

第三是镜象部署的执行,这样就能够使得过去很难处理的异构的程序的执行互操作变得比较容易处理。

第四是强调服务化,服务化有一些新的机制,特别是更适合商业运行的机制。

那么网格计算的特点又是什么呢?网格计算有了十几年的历史。

网格基本形态是什么?是跨地区的,甚至跨国家的,甚至跨洲的这样一种独立管理的资源结合。

资源在独立管理,并不是进行统一布置、统一安排的形态。

网格这些资源都是异构的,不强调有什么统一的安排。

另外网格的使用通常是让分布的用户构成虚拟组织(VO),在这样统一的网格基础平台上用虚拟组织形态从不同的自治域访问资源。

此外,网格一般由所在地区、国家、国际公共组织资助的,支持的数据模型很广,从海量数据到专用数据以及到大小各异的临时数据集合,在网上传的数据,这是网格目前的基本形态。

云计算与网格计算区别何在可以看出,网格计算和云计算有相似之处,特别是计算的并行与合作的特点;但他们的区别也是明显的。

主要有以下几点:首先,网格计算的思路是聚合分布资源,支持虚拟组织,提供高层次的服务,例如分布协同科学研究等。

而云计算的资源相对集中,主要以数据中心的形式提供底层资源的使用,并不强调虚拟组织(VO)的概念。

其次,网格计算用聚合资源来支持挑战性的应用,这是初衷,因为高性能计算的资源不够用,要把分散的资源聚合起来;后来到了2004年以后,逐渐强调适应普遍的信息化应用,特别在中国,做的网格跟国外不太一样,就是强调支持信息化的应用。

分布式计算((Distributed Computing):是一种把需要进行大量计算的工程数据分割成小块,由多台计算机分别计算,在上传运算结果后,将结果统一合并得出数据结论的科学。

目前常见的分布式计算项目通常使用世界各地上千万志愿者计算机的闲置计算能力,通过互联网进行数据传输。

如分析地外无线电信号,从而搜索地外的生命迹象的SETI@home项目,该项目数据基数很大,超过了千万位数,是目前世界上最大的分布式计算项目,已有一百六十余万台计算机加入了此项目(在中国大陆大约有1万4千位志愿者)。

这些项目很庞大,需要惊人的计算量,由一台电脑计算是不可能完成的。

并行计算(Parallel Computing):是指同时使用多种计算资源解决计算问题的过程。

为执行并行计算,计算资源应包括一台配有多处理机(并行处理)的计算机、一个与网络相连的计算机专有编号,或者两者结合使用。

并行计算的主要目的是快速解决大型且复杂的计算问题。

此外还包括:利用非本地资源,节约成本―使用多个“廉价”计算资源取代大型计算机,同时克服单个计算机上存在的存储器限制。

为利用并行计算,通常计算问题表现为以下特征:将工作分离成离散部分,有助于同时解决;随时并及时地执行多个程序指令;多计算资源下解决问题的耗时要少于单个计算资源下的耗时。

网格计算(Grid Computing):通过利用大量异构计算机(通常为桌面)的未用资源(CPU周期和磁盘存储),将其作为嵌入在分布式电信基础设施中的一个虚拟的计算机集群,为解决大规模的计算问题提供了一个模型。

网格计算的焦点放在支持跨管理域计算的能力,这使它与传统的计算机集群或传统的分布式计算相区别。

网格计算的设计目标是解决对于任何单一的超级计算机来说仍然大得难以解决的问题,并同时保持解决多个较小的问题的灵活性。

这样,网格计算就提供了一个多用户环境。

它的第二个目标就是:更好的利用可用计算力,迎合大型的计算练习的断断续续的需求云计算(Cloud Computing)是分布式处理(Distributed Computing)、并行处理(Parallel Computing)和网格计算(Grid Computing)的发展,或者说就是这些计算机科学概念的商业实现。

网格计算与云计算的区别与联系近年来,随着信息技术的不断发展,网格计算和云计算已经成为了热门话题。

它们都涉及到计算资源的共享和管理,但又在多个方面有着明显的区别。

本文将深入探讨网格计算和云计算之间的异同,以帮助读者更好地理解它们的概念和应用。

**1. 网格计算和云计算的基本概念**在开始探讨区别之前,让我们先了解一下网格计算和云计算的基本概念。

**网格计算:**网格计算是一种分布式计算范式,它通过将多台计算机连接在一起,以共同完成复杂的计算任务。

这些计算机可以位于不同的地理位置,彼此之间可以协同工作,以实现高性能计算。

网格计算的核心思想是将计算资源池化,使其能够动态分配给不同的任务。

**云计算:**云计算是一种提供计算、存储和其他计算资源的服务模型,通常通过互联网提供。

云计算服务通常按需提供,用户可以根据需要获取和释放资源,只需支付实际使用的资源。

云计算通常提供了各种服务层次,包括基础设施即服务(IaaS)、平台即服务(PaaS)和软件即服务(SaaS)。

**2. 区别:架构和目标****2.1. 网格计算的架构:**网格计算的架构通常包括多个分布式计算节点,这些节点连接在一起以构建一个强大的计算资源池。

这些节点可以是各种类型的计算机,包括个人计算机、服务器和超级计算机。

网格计算的主要目标是实现高性能计算,通常用于处理科学和工程计算等计算密集型任务。

**2.2. 云计算的架构:**云计算的架构通常是多租户的,其中多个用户共享云提供商的计算资源。

云计算提供商负责管理硬件和软件,用户可以通过互联网访问这些资源。

云计算的主要目标是提供灵活的计算资源,以满足不同用户的需求,从虚拟机到存储和应用程序。

**3. 区别:资源管理和共享****3.1. 网格计算的资源管理和共享:**在网格计算中,资源管理是一项复杂的任务,需要有效地分配和协调计算资源。

不同的组织和用户可以在网格中共享资源,但资源管理可能涉及到更多的本地配置和设置。

云计算与网格计算的比较

王海艳

【期刊名称】《电脑开发与应用》

【年(卷),期】2012(25)3

【摘要】介绍了当前计算方法的发展趋势,对当前主流的云计算和网格计算两种计算方法从定义、特点方面进行了详细的阐述,并对两种技术进行深入的分析比较,最终对其异同之处进行了概括总结.

【总页数】2页(P26-27)

【作者】王海艳

【作者单位】太原城市职业技术学院,太原030027

【正文语种】中文

【中图分类】TP393.08

【相关文献】

1.云计算与网格计算分析比较 [J], 徐格静;丁函;王毅

2.网格计算和云计算360度比较 [J], Ian Foster;Yong Zhao;Ioan

Raicu;Shiyong Lu;杨莎莎;刘宴兵

3.云计算与网格计算的比较研究 [J], 申丽君;杨兰娟;赵华

4.云计算与网格计算比较研究 [J], 苑野;伞晓娇

5.云计算与网格计算在电子政务中的应用比较 [J], 王景

因版权原因,仅展示原文概要,查看原文内容请购买。

网格计算与云计算的区别

思科系统公司负责云业务的首席信息官Lew Tucker 对大规模计算领域并不陌生。

今年六月加盟思科公司的Tucker 之前在Sun 微系统公司工作,主要负责SUN 的云和大规模网络计算方面的工作。

Tucker 在思科公司的主要职责会涵盖不同的业务部门,因为云会涉及到企业用户,服务提供商和合作伙伴。

对Tucker 而言,云并不只是栅格计算或者其他之前关于大规模分布式计算的新称谓,虽然他们之间有共通性。

Tucker 在接受采访时表示“云与我们从栅格计算处了解的很多东西都有

很强的关联性。

我们知道规模和拥有统一基础架构的优势所在,也明白你必须实现管理的自动化”。

栅格计算是2000 年到2005 年期间最常见的术语,SUN 公司对栅格计算的普及功不可没。

2004 年,SUN 推出了企业级栅格平台,为企业用户提供效用计算。

尽管栅格计算早期向用户的承诺听起来与云的承诺是一样的,但Tucker 强调说他们之间有一些明显的不同。

“SUN的栅格计算很大的不同是,它设计的初衷是以批处理模式来运行

超大规模应用软件”Tuck er 表示“因此栅格计算对于超大规模计算来说非常适用,那些应用软件将数据发送到栅格上进行计算,然后输出”。

相比之下云并不是一个批处理系统,与数据中心和服务器平台不同,云是以网络应用软件交付空间为基础的。

Tucker 强调称,云的概念源自亚马逊这样的大型云公司,这种类型的公司必须构建大规模基础架构来运行一个或者两个应用软件。

“有了云,我们就可以尝试在类似栅格的大型基础架构上完成同样的任务”Tucker表示“但是目前这些基础架构都是针对那些长期运行的应用软件服务。

什么是网格计算,网格计算和云计算区别?随着网络技术的不断的发展和深入,网络信息越来越多,数据海量的进行挖挖取,而随之而来的就是新概念和新技术的诞生,目前有两种网络的计算方法最受人们关注,那就是云计算和网格计算,因为他们在人们日常的生活当中所占的应用比例越来越大。

那么网格计算和云计算之间到底谁更有优势呢?各自的特点是哪些?这个问题引来大家的的关注和讨论。

对这两种技术的概念进行了简要说明,并对其异同之处进行了分析对比。

1 、什么是网格计算网格计算是利用互联网地理位置相对分散的计算机组成一个“虚拟的超级计算机”,其中每一台参与计算的计算机就是一个“节点”,而整个计算是由数以万计个“节点”组成的“一张网格”,网格计算是专门针对复杂科学计算的计算模式。

网格计算模式的数据处理能力超强,使用分布式计算,而且充分利用了网络上闲置的处理能力,网格计算模式把要计算的数据分割成若干“小片”,而计算这些“小片”的软件通常是预先编制好的程序,不同节点的计算机根据自己的处理能力下载一个或多个数据片断进行计算。

2 、什么是云计算云计算是一种借助互联网提供按需的、面向海量数据处理和完成复杂计算的平台。

云计算是网格计算、并行计算、分布式计算、网络存储、虚拟化、负载均衡等计算机技术和网络技术发展融合的产物。

其基本原理是用户端仅负责数据输入和读取,复杂的数据处理工作交给云计算系统中的“云”来处理,“云”是由数以万计的各种各样的计算机、服务器和数据存储系统共同组成。

云计算具有以下特点:①按需采用“即用即付费”的方式分配计算、存储和带宽资源。

客户可以根据自己的需要、随时随地自动获取计算能力,云系统对服务(存储、处理能力、带宽、活动用户)进行适当的抽象,并提供服务计量能力,自动控制和优化资源使用情况。

②云计算描述了一种可以通过互联网进行访问的可扩展和动态重构的模式。

它使用多租户模式可以提供各种各样的服务,根据客户的需求动态提供物理或虚拟化的资源(存储、处理能力、内存、网络带宽和虚拟机)。

云计算与网格计算比较研究云计算是当前信息技术领域非常热门的话题,得到了产业界、学术界、政府等部门广泛的关注。

2007年,云计算作为一个新的概念被正式提出。

经过几年的迅速发展,云计算几乎成为所有IT行业巨头未来发展的主要趋势,其商业前景和应用需求已毋庸置疑。

云计算是一种全新的商业模式。

它使用的硬件设备是成千上万的廉价的服务器集群,企业和个人通过高速互联网调用这些分散的服务器集群,从而获得计算能力。

这种资源的共享方式避免了大量硬件的重复投资。

从具体的运作方式来看,云计算是通过将计算分布在大量的分布式计算机上,使企业数据中心的运行更类似于使用互联网。

这样,企业能够随时将资源切换到需要的应用上,并可以根据需求访问计算机和存储系统。

这种运作方式体现了“网络就是计算机的思想”,将大量的计算资源、存储资源与软件资源链接在一起,形成了规模巨大的共享虚拟IT资源池,为远程的用户提供IT服务。

IT资源服务化是云计算最重要的外部特征。

目前,Amazon、Google、IBM、Microsoft、VMware等国际IT公司已纷纷建立并对外提供各种云计算服务。

亚马逊(Amazon)所推出的“简单存储服务S3(Simple Storage service)”和“弹性计算云EC2(Elastic Compute C1oud)”标志着“云计算”发展的新阶段:即基础架构的网络服务作为提供给客户的新“商品化”的资源,EC2已成为亚马逊当前“增长最快的业务”。

谷歌一直致力于推广以GFS(Google File System),MapReduce、BigTab1e等技术为基础的应用引擎(App Engine),为用户进行海量数据处理提供了手段。

IBM于2007年推出的“蓝云”(Blue cloud)计算平台,采用了xen、PowerVM虚拟技术和Hadoop技术,帮助客户构建云计算环境。

微软在2008年推出了其下一代云计算产品windows Azure,它被认为是继windows NT 之后最重要的产品。

Cloud Computing and Grid Computing 360-Degree Compared1,2,3Ian Foster, 4Yong Zhao, 1Ioan Raicu, 5Shiyong Lufoster@, yozha@, iraicu@, shiyong@1 Department of Computer Science, University of Chicago, Chicago, IL, USA2Computation Institute, University of Chicago, Chicago, IL, USA3Math & Computer Science Division, Argonne National Laboratory, Argonne, IL, USA4Microsoft Corporation, Redmond, WA, USA5 Department of Computer Science, Wayne State University, Detroit, MI, USAAbstract–Cloud Computing has become another buzzword after Web 2.0. However, there are dozens of different definitions for Cloud Computing and there seems to be no consensus on what a Cloud is. On the other hand, Cloud Computing is not a completely new concept; it has intricate connection to the relatively new but thirteen-year established Grid Computing paradigm, and other relevant technologies such as utility computing, cluster computing, and distributed systems in general. This paper strives to compare and contrast Cloud Computing with Grid Computing from various angles and give insights into the essential characteristics of both.1100-Mile OverviewCloud Computing is hinting at a future in which we wo n’t compute on local computers, but on centralized facilities operated by third-party compute and storage utilities. We sure wo n’t miss the shrink-wrapped software to unwrap and install. Needless to say, this is not a new idea. In fact, back in 1961, computing pioneer John McCarthy predicted that ―computation may someday be organized as a public utility‖—and went on to speculate how this might occur.In the mid 1990s, the term Grid was coined to describe technologies that would allow consumers to obtain computing power on demand. Ian Foster and others posited that by standardizing the protocols used to request computing power, we could spur the creation of a Computing Grid, analogous in form and utility to the electric power grid. Researchers subsequently developed these ideas in many exciting ways, producing for example large-scale federated systems (TeraGrid, Open Science Grid, caBIG, EGEE, Earth System Grid) that provide not just computing power, but also data and software, on demand. Standards organizations (e.g., OGF, OASIS) defined relevant standards. More prosaically, the term was also co-opted by industry as a marketing term for clusters. But no viable commercial Grid Computing providers emerged, at least not until recently.So is ―Cloud Computing‖just a new name for Grid? In information technology, where technology scales by an order of magnitude, and in the process reinvents itself, every five years, there is no straightforward answer to such questions. Yes: the vision is the same—to reduce the cost of computing, increase reliability, and increase flexibility by transforming computers from something that we buy and operate ourselves to something that is operated by a third party.But no: things are different now than they were 10 years ago. We have a new need to analyze massive data, thus motivating greatly increased demand for computing. Having realized the benefits of moving from mainframes to commodity clusters, we find that those clusters are quite expensive to operate. We have low-cost virtualization. And, above all, we have multiple billions of dollars being spent by the likes of Amazon, Google, and Microsoft to create real commercial large-scale systems containing hundreds of thousands of computers. The prospect of needing only a credit card to get on-demand access to 100,000+ computers in tens of data centers distributed throughout the world—resources that be applied to problems with massive, potentially distributed data, is exciting! So we are operating at a different scale, and operating at these new, more massive scales can demand fundamentally different approaches to tackling problems. It also enables—indeed is often only applicable to—entirely new problems. Nevertheless, yes: the problems are mostly the same in Clouds and Grids. There is a common need to be able to manage large facilities; to define methods by which consumers discover, request, and use resources provided by the central facilities; and to implement the often highly parallel computations that execute on those resources. Details differ, but the two communities are struggling with many of the same issues.1.1 Defining Cloud ComputingThere is little consensus on how to define the Cloud [49]. We add yet another definition to the already saturated list of definitions for Cloud Computing:A large-scale distributed computing paradigm that isdriven by economies of scale, in which a pool of abstracted, virtualized, dynamically-scalable, managed computing power, storage, platforms, and services are delivered on demand to external customers over the Internet.There are a few key points in this definition. First, Cloud Computing is a specialized distributed computing paradigm; it differs from traditional ones in that 1) it is massively scalable, 2) can be encapsulated as an abstract entity that delivers different levels of services to customers outside the Cloud, 3) it is driven by economies of scale [44], and 4) the services can be dynamically configured (via virtualization or other approaches) and delivered on demand.Governments, research institutes, and industry leaders are rushing to adopt Cloud Computing to solve their ever-increasing computing and storage problems arising in the Internet Age. There are three main factors contributing to the surge and interests in Cloud Computing: 1) rapid decrease in hardware cost and increase in computing power and storage capacity, and the advent of multi-core architecture and modern supercomputers consisting of hundreds of thousands of cores;2) the exponentially growing data size in scientific instrumentation/simulation and Internet publishing and archiving; and 3) the wide-spread adoption of Services Computing and Web 2.0 applications.1.2 Clouds, Grids, and Distributed SystemsMany discerning readers will immediately notice that our definition of Cloud Computing overlaps with many existing technologies, such as Grid Computing, Utility Computing, Services Computing, and distributed computing in general. We argue that Cloud Computing not only overlaps with Grid Computing, it is indeed evolved out of Grid Computing and relies on Grid Computing as its backbone and infrastructure support. The evolution has been a result of a shift in focus from an infrastructure that delivers storage and compute resources (such is the case in Grids) to one that is economy based aiming to deliver more abstract resources and services (such is the case in Clouds). As for Utility Computing, it is not a new paradigm of computing infrastructure; rather, it is a business model in which computing resources, such as computation and storage, are packaged as metered services similar to a physical public utility, such as electricity and public switched telephone network. Utility computing is typically implemented using other computing infrastructure (e.g. Grids) with additional accounting and monitoring services.A Cloud infrastructure can be utilized internally by a company or exposed to the public as utility computing.See Figure 1 for an overview of the relationship between Clouds and other domains that it overlaps with. Web 2.0 covers almost the whole spectrum of service-oriented applications, where Cloud Computing lies at the large-scale side. Supercomputing and Cluster Computing have been more focused on traditional non-service applications. Grid Computing overlaps with all these fields where it is generally considered of lesser scale than supercomputers and Clouds.Figure 1: Grids and Clouds OverviewGrid Computing aims to ―enable resource sharing and coordinated problem solving in dynamic, multi-institutional virtual organizations‖[18][20]. There are also a few key features to this definition: First of all, Grids provide a distributed computing paradigm or infrastructure that spans across multiple virtual organizations (VO) where each VO can consist of either physically distributed institutions or logically related projects/groups. The goal of such a paradigm is to enable federated resource sharing in dynamic, distributed environments. The approach taken by the de facto standard implementation –The Globus Toolkit [16][23], is to build a uniform computing environment from diverse resources by defining standard network protocols and providing middleware to mediate access to a wide range of heterogeneous resources. Globus addresses various issues such as security, resource discovery, resource provisioning and management, job scheduling, monitoring, and data management.Half a decade ago, Ian Foster gave a three point checklist [19] to help define what is, and what is not a Grid: 1) coordinates resources that are not subject to centralized control, 2) uses standard, open, general-purpose protocols and interfaces, and 3) delivers non-trivial qualities of service. Although point 3 holds true for Cloud Computing, neither point 1 nor point 2 is clear that it is the case for today’s Clouds. The vision for Clouds and Grids are similar, details and technologies used may differ, but the two communities are struggling with many of the same issues. This paper strives to compare and contrast Cloud Computing with Grid Computing from various angles and give insights into the essential characteristics of both, with the hope to paint a less cloudy picture of what Clouds are, what kind of applications can Clouds expect to support, and what challenges Clouds are likely to face in the coming years as they gain momentum and adoption. We hope this will help both communities gain deeper understanding of the goals, assumptions, status, and directions, and provide a more detailed view of both technologies to the general audience.2Comparing Grids and Clouds Side-by-Side This section aims to compare Grids and Clouds across a wide variety of perspectives, from architecture, security model, business model, programming model, virtualization, data model, compute model, to provenance and applications. We also outline a number of challenges and opportunities that Grid Computing and Cloud Computing bring to researchers and the IT industry, most common to both, but some are specific to one or the other.2.1Business ModelTraditional business model for software has been a one-time payment for unlimited use (usually on 1 computer) of the software. In a cloud-based business model, a customer will pay the provider on a consumption basis, very much like the utility companies charge for basic utilities such as electricity, gas, and water, and the model relies on economies of scale in order to drive prices down for users and profits up for providers. Today, Amazon essentially provides a centralized Cloud consisting of Compute Cloud EC2 and Data Cloud S3. The former is charged based on per instance-hour consumed for each instance type and the later is charged by per GB-Month of storage used. In addition, data transfer is charged by TB / month data transfer, depending on the source and target of such transfer. The prospect of needing only a credit card to get on-demand access to 100,000+ processors in tens of data centers distributed throughout the world—resources that beapplied to problems with massive, potentially distributed data, is exciting!The business model for Grids (at least that found in academia or government labs) is project-oriented in which the users or community represented by that proposal have certain number of service units (i.e. CPU hours) they can spend. For example, the TeraGrid operates in this fashion, and requires increasingly complex proposals be written for increasing number of computational power. The TeraGrid has more than a dozen Grid sites, all hosted at various institutions around the country. What makes an institution want to join the TeraGrid? When an institution joins the TeraGrid with a set of resources, it knows that others in the community can now use these resources across the country. It also acknowledges the fact that it gains access to a dozen other Grid sites. This same model has worked rather well for many Grids around the globe, giving institutions incentives to join various Grids for access to additional resources for all the users from the corresponding institution.There are also endeavors to build a Grid economy for a global Grid infrastructure that supports the trading, negotiation, provisioning, and allocation of resources based on the levels of services provided, risk and cost, and users’ preferences; so far, resource exchange (e.g. trade storage for compute cycles), auctions, game theory based resource coordination, virtual currencies, resource brokers and intermediaries, and various other economic models have been proposed and applied in practice [8].2.2ArchitectureGrids started off in the mid-90s to address large-scale computation problems using a network of resource-sharing commodity machines that deliver the computation power affordable only by supercomputers and large dedicated clusters at that time. The major motivation was that these high performance computing resources were expensive and hard to get access to, so the starting point was to use federated resources that could comprise compute, storage and network resources from multiple geographically distributed institutions, and such resources are generally heterogeneous and dynamic. Grids focused on integrating existing resources with their hardware, operating systems, local resource management, and security infrastructure.In order to support the creation of the so called ―Virtual Organizations‖—a logical entity within which distributed resources can be discovered and shared as if they were from the same organization, Grids define and provide a set of standard protocols, middleware, toolkits, and services built on top of these protocols. Interoperability and security are the primary concerns for the Grid infrastructure as resources may come from different administrative domains, which have both global and local resource usage policies, different hardware and software configurations and platforms, and vary in availability and capacity.Grids provide protocols and services at five different layers as identified in the Grid protocol architecture (see Figure 2). At the fabric layer, Grids provide access to different resource types such as compute, storage and network resource, code repository, etc. Grids usually rely on existing fabric components, for instance, local resource managers (i.e. PBS [5], Condor [48], etc). General-purpose components such as GARA (general architecture for advanced reservation) [17], and specialized resource management services such as Falkon [40] (although strictly speaking, Falkon also provides services beyond the fabric layer).Figure 2: Grid Protocol ArchitectureThe connectivity layer defines core communication and authentication protocols for easy and secure network transactions. The GSI (Grid Security Infrastructure) [27] protocol underlies every Grid transaction.The resource layer defines protocols for the publication, discovery, negotiation, monitoring, accounting and payment of sharing operations on individual resources. The GRAM (Grid Resource Access and Management) [16] protocol is used for allocation of computational resources and for monitoring and control of computation on those resources, and GridFTP [2] for data access and high-speed data transfer.The collective layer captures interactions across collections of resources, directory services such as MDS (Monitoring and Discovery Service) [43] allows for the monitoring and discovery of VO resources, Condor-G [24] and Nimrod-G [7] are examples of co-allocating, scheduling and brokering services, and MPICH [32] for Grid enabled programming systems, and CAS (community authorization service) [21] for global resource policies.The application layer comprises whatever user applications built on top of the above protocols and APIs and operate in VO environments. Two examples are Grid workflow systems, and Grid portals (i.e. QuarkNet e-learning environment [52], National Virtual Observatory (), TeraGrid Science gateway ()). Clouds are developed to address Internet-scale computing problems where some assumptions are different from those of the Grids. Clouds are usually referred to as a large pool of computing and/or storage resources, which can be accessed via standard protocols via an abstract interface. Clouds can be built on top of many existing protocols such as Web Services (WSDL, SOAP), and some advanced Web 2.0 technologies such as REST, RSS, AJAX, etc. In fact, behind the cover, it is possible for Clouds to be implemented over existing Grid technologies leveraging more than a decade of communityC l o u d A r c h i t e c t u r eefforts in standardization, security, resource management, and virtualization support.There are also multiple versions of definition for Cloud architecture, we define a four-layer architecture for Cloud Computing in comparison to the Grid architecture, composed of 1) fabric, 2) unified resource, 3) platform, and 4) application Layers.Unified ResourceFabricFigure 3: Cloud ArchitectureThe fabric layer contains the raw hardware level resources, such as compute resources, storage resources, and network resources. The unified resource layer contains resources that have been abstracted/encapsulated (usually by virtualization) so that they can be exposed to upper layer and end users as integrated resources, for instance, a virtual computer/cluster, a logical file system, a database system, etc. The platform layer adds on a collection of specialized tools, middleware and services on top of the unified resources to provide a development and/or deployment platform. For instance, a Web hosting environment, a scheduling service, etc. Finally, the application layer contains the applications that would run in the Clouds.Clouds in general provide services at three different levels (IaaS , PaaS , and Saas [50]) as follows, although some providers can choose to expose services at more than one level. Infrastructure as a Service (IaaS) [50] provisions hardware, software, and equipments (mostly at the unified resource layer, but can also include part of the fabric layer) to deliver software application environments with a resource usage-based pricing model. Infrastructure can scale up and down dynamically based on application resource needs. Typical examples are Amazon EC2 (Elastic Cloud Computing) Service [3] and S3 (Simple Storage Service) [4] where compute and storage infrastructures are open to public access with a utility pricing model; Eucalyptus [15] is an open source Cloud implementation that provides a compatible interface to Am azon’s EC2, and allows people to set up a Cloud infrastructure at premise and experiment prior to buying commercial services.Platform as a Service (PaaS) [50] offers a high-level integrated environment to build, test, and deploy custom applications. Generally, developers will need to accept some restrictions on the type of software they can write in exchange for built-in application scalability. An example is Google ’s App Engine [28], which enables users to build Webapplications on the same scalable systems that power Google applications.Software as a Service (SaaS) [50] delivers special-purpose software that is remotely accessible by consumers through the Internet with a usage-based pricing model. Salesforce is an industry leader in providing online CRM (Customer Relationship Management) Services. Live Mesh from Microsoft allows files and folders to be shared and synchronized across multiple devices.Although Clouds provide services at three different levels (IaaS , PaaS , and Saas ), standards for interfaces to these different levels still remain to be defined. This leads to interoperability problems between toda y’s Clouds, and there is little business incentives for Cloud providers to invest additional resources in defining and implementing new interfaces. As Clouds mature, and more sophisticated applications and services emerge that require the use of multiple Clouds, there will be growing incentives to adopt standard interfaces that facilitate interoperability in order to capture emerging and growing markets in a saturated Cloud market.2.3 Resource ManagementThis section describes the resource management found in Grids and Clouds, covering topics such as the compute model, data model, virtualization, monitoring, and provenance. These topics are extremely important to understand the main challenges that both Grids and Clouds face today, and will have to overcome in the future.Compute Model: Most Grids use a batch-scheduled compute model, in which a local resource manager (LRM), such as PBS, Condor, SGE manages the compute resources for a Grid site, and users submit batch jobs (via GRAM) to request some resources for some time. Many Grids have policies in place that enforce these batch jobs to identify the user and credentials under which the job will run for accounting and security purposes, the number of processors needed, and the duration of the allocation. For example, a job could say, stage in the input data from a URL to the local storage, run the application for 60 minutes on 100 processors, and stage out the results to some FTP server. The job would wait in the LRM ’s wait queue until the 100 processors were available for 60 minutes, at which point the 100 processors would be allocated and dedicated to the application for the duration of the job. Due to the expensive scheduling decisions, data staging in and out, and potentially long queue times, many Grids don ’t natively support interactive applications; although there are efforts in the Grid community to enable lower latencies to resources via multi-level scheduling, to allow applications with many short-running tasks to execute efficiently on Grids [40]. Cloud Computing compute model will likely look very different, with resources in the Cloud being shared by all users at the same time (in contrast to dedicated resources governed by a queuing system). This should allow latency sensitive applications to operate natively on Clouds, although ensuring a good enough level of QoS is being delivered to the end users will not be trivial, and will likely be one of the major challenges for Cloud Computing as the Clouds grow in scale, and number of users.Data Model: While some people boldly predicate that future Internet Computing will be towards Cloud Computing centralized, in which storage, computing, and all kind of other resources will mainly be provisioned by the Cloud, we envision that the next-generation Internet Computing will take the triangle model shown in Figure 4: Internet Computing will be centralized around Data, Clouding Computing, as well as Client Computing. Cloud Computing and Client Computing will coexist and evolve hand in hand, while data management (mapping, partitioning, querying, movement, caching, replication, etc) will become more and more important for both Cloud Computing and Client Computing with the increase of data-intensive applications.The critical role of Cloud Computing goes without saying, but the importance of Client Computing cannot be overlooked either for several reasons: 1) For security reasons, people might not be willing to run mission-critical applications on the Cloud and send sensitive data to the Cloud for processing and storage; 2) Users want to get their things done even when the Internet and Cloud are down or the network communication is slow; 3) With the advances of multi-core technology, the coming decade will bring the possibilities of having a desktop supercomputer with 100s to 1000s of hardware threads/cores. Furthermore, many end-users will have various hardware-driven end-functionalities, such as visualization and multimedia playback, which will typically run locally.Figure 4 The triangle model of next-generation Internet Computing. The importance of data has caught the attention of the Grid community for the past decade; Data Grids [10] have been specifically designed to tackle data intensive applications in Grid environments, with the concept of virtual data [22] playing a crucial role. Virtual data captures the relationship between data, programs and computations and prescribes various abstractions that a data grid can provide: location transparency where data can be requested without regard to data location, a distributed metadata catalog is engaged to keep track of the locations of each piece of data (along with its replicas) across grid sites, and privacy and access control are enforced; materialization transparency: data can be either re-computed on the fly or transferred upon request, depending on the availability of the data and the cost to re-compute. There is also representation transparency where data can be consumed and produced no matter what their actual physical formats and storage are, data are mapped into some abstract structural representation and manipulated in that way.Data Locality: As CPU cycles become cheaper and data sets double in size every year, the main challenge for efficient scaling of applications is the location of the data relative to the available computational resources –moving the data repeatedly to distant CPUs is becoming the bottleneck. [46] There are large differences in IO speeds from local disk storage to wide area networks, which can drastically affect application performance. To achieve good scalability at Internet scales for Clouds, Grids, and their applications, data must be distributed over many computers, and computations must be steered towards the best place to execute in order to minimize the communication costs [46]. Google’s MapReduce [13] system runs on top of the Google File System, within which data is loaded, partitioned into chunks, and each chunk replicated. Thus data processing is collocated with data storage: when a file needs to be processed, the job scheduler consults a storage metadata service to get the host node for each chunk, and then schedules a ―m ap‖ process on that node, so that data locality is exploited efficiently. In Grids, data storage usually relies on a shared file systems (e.g. NFS, GPFS, PVFS, Luster), where data locality cannot be easily applied. One approach is to improve schedulers to be data-aware, and to be able to leverage data locality information when scheduling computational tasks; this approach has shown to improve job turn-around time significantly [41].Combining compute and data management: Even more critical is the combination of the compute and data resource management, which leverages data locality in access patterns to minimize the amount of data movement and improve end-application performance and scalability. Attempting to address the storage and computational problems separately forces much data movement between computational and storage resources, which will not scale to tomorrow’s peta-scale datasets and millions of processors, and will yield significant underutilization of the raw resources. It is important to schedule computational tasks close to the data, and to understand the costs of moving the work as opposed to moving the data. Data-aware schedulers and dispersing data close to processors is critical in achieving good scalability and performance. Finally, as the number of processor-cores is increasing (the largest supercomputers today have over 200K processors and Grids surpassing 100K processors), there is an ever-growing emphasis for support of high throughput computing with high sustainable dispatch and execution rates. We believe that data management architectures are important to ensure that the data management implementations scale to the required dataset sizes in the number of files, objects, and dataset disk space usage while at the same time, ensuring that data element information can be retrieved fast and efficiently. Grids have been making progress in combining compute and data management with data-aware schedulers [41], but we believe that Clouds will face significant challenges in handling data-intensive applications without serious efforts invested in harnessing the data locality of application access patterns. Although data-intensive applications may not be typical applications that Clouds deal with today, as the scales of Clouds grow, it may just be a matter of time for many Clouds. Virtualization: Virtualization has become an indispensable ingredient for almost every Cloud, the most obvious reasons are for abstraction and encapsulation. Just like threads were introduced to provide users the ―illusion‖as if the computer were running all the threads simultaneously, and each thread。