chap16 STATA Programming Basics


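Stata Software: Basic Operation and Introduction to Data Analysis (complete lecture notes)

Lecture 1: Getting Started with Stata

Zhang Wentong, Zhao Naiqing

Section 1: Overview

Stata was originally developed by the Computing Resource Center in the United States and is now a product of Stata Corporation. At the time these notes were written, the latest version was 7.0.

It is flexible, simple, and easy to learn and use. It is a distinctive statistical package that is increasingly valued and popular, and together with SAS and SPSS it is often called one of the new "big three" authoritative statistical packages.

Stata's most striking feature is that it is compact yet powerful. The entire version 7.0 system takes only about 10 MB, yet it already covers statistical analysis, data management, and graphics. Its statistical capabilities in particular are very comprehensive and compare well with SAS, which takes more than 1 GB.

In addition, Stata reads the entire dataset into memory for analysis and exchanges data with the disk only after the computation has finished, so it runs very fast.

Because Stata has always targeted professional statistical analysts, its mode of operation is also distinctive: in an era dominated by Windows, it has kept to command-line and program-based operation and, as of version 7.0, offers no menu system.

However, Stata's commands are concise, and its statistical commands are organized systematically: models of the same type are grouped under one command family, and different command families can use options with the same function. This makes the software easy to pick up.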
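The point about shared options can be illustrated with a small sketch. It assumes a reasonably recent Stata release and uses the `auto` example dataset that ships with Stata; the choice of variables is arbitrary and only for illustration.

```stata
* Illustrative sketch: two different estimation commands accept the same options
sysuse auto, clear

* Linear regression with robust standard errors
regress price mpg weight, vce(robust)

* Logistic regression: a different model, the same vce() option
logit foreign mpg weight, vce(robust)

* The level() option also works the same way across estimation commands
regress price mpg weight, level(90)
logit foreign mpg weight, level(90)
```

Once an option such as `vce()` or `level()` has been learned for one command, it usually carries over unchanged to the others.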

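Even more impressive, Stata commands are highly flexible as well as concise, so experienced users can combine them with various techniques to do almost anything they need.

Beyond its concise mode of operation, Stata's user interface is simple in other respects too: the data format is simple and the output is compact and easy to read. All of this makes Stata very well suited to teaching statistics.

Another feature of Stata is that many of its advanced statistical modules are program files (ado-files) written by programmers in Stata's own macro language. These files can be modified, added, and downloaded by users.

Users can visit the Stata website at any time to find and download the latest updates.

In practice, this keeps Stata at the forefront of developments in statistical methods: users can almost always quickly find a Stata implementation of a new statistical algorithm. It also makes Stata the most frequently updated of the major statistical packages.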
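To see the ado-file mechanism in practice, the sketch below locates an official ado-file and lists the directories Stata searches. It assumes a standard installation in which `egen` is implemented as `egen.ado`. The commented-out line needs an internet connection, and `lassopack` is used there only because it is mentioned elsewhere in this document.

```stata
* Illustrative sketch: where do ado-files live?
which egen        // reports the path and version line of egen.ado
sysdir            // lists the system directories (BASE, SITE, PLUS, PERSONAL, ...)
adopath           // shows the order in which directories are searched

* Community-contributed packages are typically installed from SSC, for example:
* ssc install lassopack
```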

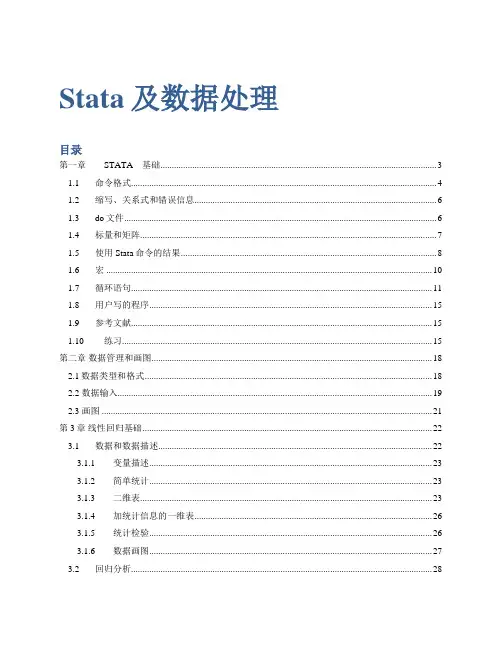

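Stata and Data Processing

Contents

Chapter 1 STATA Basics
1.1 Command format
1.2 Abbreviations, relational expressions, and error messages
1.3 do-files
1.4 Scalars and matrices
1.5 Using the results of Stata commands
1.6 Macros
1.7 Loops
1.8 User-written programs
1.9 References
1.10 Exercises

Chapter 2 Data Management and Graphing
2.1 Data types and formats
2.2 Data input
2.3 Graphing

Chapter 3 Linear Regression Basics
3.1 Data and data description
3.1.1 Describing variables
3.1.2 Simple statistics
3.1.3 Two-way tables
3.1.4 One-way tables with summary statistics
3.1.5 Statistical tests
3.1.6 Plotting data
3.2 Regression analysis
3.2.1 Correlation analysis
3.2.2 Linear regression
3.2.3 Hypothesis testing (Wald test)
3.2.4 Presenting estimation results
3.3 Prediction
3.4 Stata resources

Chapter 4 Organizing Data Processing
1. Writing and running executable programs
Method 1: do-files
Method 2: the program command, used interactively
Method 3: the program command inside a do-file
Method 4: combining do-files
Method 5: ado-files
2. Organizing do-files
3. Importing data
4. Using _n and _N

Chapter 1 STATA Basics

Stata can be used in two ways: menu-driven and command-driven.

The menu-driven mode suits beginners because it is easy to get started with, while the command-driven mode is more efficient and suits advanced users.

Our focus is on empirical analysis, so we mainly cover the command-driven mode.

Figure 1.1 The basic interface of Stata 12.1

For guidance on using Stata, see the Stata manuals, especially [GS] Getting Started with Stata, and in particular Chapter 1, A sample session, and Chapter 2, The Stata User Interface.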

Austin Nichols
***********************
@austnnchols

One Weird Trick

How to analyze experiments
• The only way to be sure we are estimating unbiased causal impacts of a “treatment” (intervention, policy, program) is to compare means via an experiment (Freedman 2008a,b; Lin 2013)
• But we can always do better by conditioning on observable (pre-treatment) characteristics: these “covariates” can reduce MSE
  – Stratification/blocking is preferred to post hoc statistical adjustment but has its own limitations (Kallus 2018)
  – How should one adjust for covariates if using a regression to analyze the experimental data? What variables should be included?
❖ Use the LASSO! Specifically, poregress, dsregress, xporegress, etc.
• New to Stata as of Stata 16, explained in the new [LASSO] manual and in Drukker (2019)

Partialing out
• A series of seminal papers by Belloni, Chernozhukov, and many others (see references) derived partialing-out estimators that provide reliable inference for d after one uses covariate selection to determine which of many covariates “belong” in the model for outcome Y

  $Y = A d + X g + e$

  where A is a treatment variable of interest and X measures the (possibly very large) set of potential covariates, but many elements of g are zero
• Essentially, run separate LASSO regressions of Y and A on X and regress residualized Ÿ on residualized Ä (where Ä = A − Â)
• The cost of using these poregress, dsregress, xporegress methods is that they do not produce estimates for the covariate coefficients g

(Source: Stata 16 LASSO manual, page 12)

Add’l Stata implementations
• ssc desc lassopack, ssc desc pdslasso (Ahrens, Hansen, and Schaffer 2018) were released prior to the Stata 16 implementations
  – They implement the LASSO (Tibshirani 1996) and the square-root lasso (Belloni et al. 2011, 2014).
  – These estimators can be used to select controls (pdslasso) or instruments (ivlasso) from a large set of variables (possibly numbering more than the number of observations), in a setting where the researcher is interested in estimating the causal impact of one or more (possibly endogenous) causal variables of interest.
  – Two approaches are implemented in pdslasso and ivlasso: (1) the "post-double-selection" (PDS) methodology of Belloni et al. (2012, 2013, 2014, 2015, 2016); (2) the "post-regularization" (CHS) methodology of Chernozhukov, Hansen and Spindler (2015). For instrumental variable estimation, ivlasso implements weak-identification-robust hypothesis tests and confidence sets using the Chernozhukov et al. (2013) sup-score test.

Regression for experiments
• Note that in the model for outcome Y

  $Y = A d + X g + e$

• We really should never care about the “effect” of any element of X conditional on A and other elements of X, i.e. we should not care one whit about estimates of g
• In expectation, A and X are uncorrelated; we just want a data-driven way to eliminate chance correlation between X and A for any X that also has effects on Y, in order to reduce the variance of our estimates of d
• These and other points arose in email correspondence in 2016-2017 with David Judkins, who has used LASSO in subsequent studies (Judkins 2019)

Okay, LASSO, but what kind?
• Chetverikov, Liao, and Chernozhukov (2019) show “the cross-validated LASSO estimator achieves the fastest possible rate of convergence in the prediction norm up to a small logarithmic factor”
• Drukker (2019) suggests the plug-in estimator has better small-sample performance in simulations (not reported)
• A bootstrap could give out-of-sample performance measures akin to RandomForest regressions

Simulations
• Suppose we have hundreds of candidate regressors, all distributed lognormal, all uncorrelated with each other
• A few are correlated with Y (every 20th)
• How big an improvement might we expect with the xporegress cross-fit partialing-out lasso linear regression with plug-in optimal lambda?

Typical Simulation Results
[Figure: 10,000 iterations with N=100. Regressions use all available controls, zero to 80+. Horizontal lines show performance of xporegress with CV or plug-in selection options.]

Conclusions
• As we add useless regressors, MSE increases and the occasional useful regressor does not (necessarily) make up for that, but xporegress does better in every realistic case examined
• Alternatives in e.g. Judkins (2019) can introduce bias or introduce size errors (rejection rates deviating from nominal size), but xporegress is safe on both fronts

Credit (blame) for the title to Tim

One Weird Trick (Nichols 2021)

References

Ahrens, A., C. Hansen, and M. E. Schaffer. 2018. pdslasso and ivlasso: Programs for post-selection and post-regularization OLS or IV estimation and inference. /c/boc/bocode/s458459.html

Belloni, A., D. Chen, V. Chernozhukov, and C. Hansen. 2012. Sparse models and methods for optimal instruments with an application to eminent domain. Econometrica 80: 2369–2429.

Belloni, A., and V. Chernozhukov. 2013. Least squares after model selection in high-dimensional sparse models. Bernoulli 19: 521–547.

Belloni, A., V. Chernozhukov, and C. Hansen. 2013. Inference for high-dimensional sparse econometric models. In Advances in Economics and Econometrics: 10th World Congress, Vol. 3: Econometrics, 245–295. Cambridge: Cambridge University Press.

Belloni, A., V. Chernozhukov, and C. Hansen. 2014a. High-dimensional methods and inference on structural and treatment effects. Journal of Economic Perspectives 28(2): 29–50.

Belloni, A., V. Chernozhukov, and C. Hansen. 2014b. Inference on treatment effects after selection among high-dimensional controls. The Review of Economic Studies 81(2): 608–650.

Belloni, A., V. Chernozhukov, C. Hansen, and D. Kozbur. 2016. Inference in high dimensional panel models with an application to gun control. Journal of Business and Economic Statistics 34(4): 590–605.

Belloni, A., V. Chernozhukov, and L. Wang. 2011. Square-root lasso: Pivotal recovery of sparse signals via conic programming. Biometrika 98: 791–806.

Belloni, A., V. Chernozhukov, and L. Wang. 2014. Pivotal estimation via square-root-lasso in nonparametric regression. Annals of Statistics 42(2): 757–788.

Belloni, A., V. Chernozhukov, and Y. Wei. 2016. Post-selection inference for generalized linear models with many controls. Journal of Business & Economic Statistics 34: 606–619.

Bühlmann, P., and S. Van de Geer. 2011. Statistics for High-Dimensional Data: Methods, Theory and Applications. Berlin: Springer.

Chernozhukov, V., D. Chetverikov, M. Demirer, E. Duflo, C. Hansen, W. Newey, and J. Robins. 2018. Double/debiased machine learning for treatment and structural parameters. The Econometrics Journal 21(1): C1–C68.

Chernozhukov, V., D. Chetverikov, and K. Kato. 2013. Gaussian approximations and multiplier bootstrap for maxima of sums of high-dimensional random vectors. Annals of Statistics 41(6): 2786–2819.

Chernozhukov, V., C. Hansen, and M. Spindler. 2015. Post-selection and post-regularization inference in linear models with many controls and instruments. American Economic Review: Papers & Proceedings 105(5): 486–490.

Chetverikov, D., Z. Liao, and V. Chernozhukov. 2019. On cross-validated Lasso. arXiv Working Paper No. arXiv:1605.02214. /abs/1605.02214

Drukker, D. 2019. Using the lasso in Stata for inference in high-dimensional models. Presentation at London Stata Conference, 5-6 September 2019.

Freedman, D. A. 2008a. On regression adjustments to experimental data. Adv. in Appl. Math. 40: 180–193. MR2388610

Freedman, D. A. 2008b. On regression adjustments in experiments with several treatments. Ann. Appl. Stat. 2: 176–196. MR2415599

Hastie, T., R. Tibshirani, and J. Friedman. 2009. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2nd ed. New York: Springer.

Hastie, T., R. Tibshirani, and M. Wainwright. 2015. Statistical Learning with Sparsity: The Lasso and Generalizations. Boca Raton, FL: CRC Press.

Judkins, D. 2019. “Covariate Selection in Small Randomized Studies.” https:///meetings/jsm/2019/onlineprogram/AbstractDetails.cfm?abstractid=307372

Kallus, N. 2018. Optimal a priori balance in the design of controlled experiments. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 80(1): 85–112.

Lin, W. 2013. Agnostic notes on regression adjustments to experimental data: Reexamining Freedman’s critique. Ann. Appl. Stat. 7(1): 295–318.

Spindler, M., V. Chernozhukov, and C. Hansen. 2016. High-dimensional metrics. https:///package=hdm

Tibshirani, R. 1996. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B 58: 267–288.

Yamada, H. 2017. The Frisch-Waugh-Lovell Theorem for the lasso and the ridge regression. Communications in Statistics - Theory and Methods 46(21): 10897–10902.

Zou, H. 2006. The adaptive Lasso and its oracle properties. Journal of the American Statistical Association 101: 1418–1429.

Zou, H., and T. Hastie. 2005. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society, Series B 67: 301–320.
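The simulation setup described in the slides can be sketched for a single draw as follows. This is not the author's simulation code. The seed, the true effect of 1, the 80 controls, and the variable names `A`, `x1`-`x80`, and `y` are all assumptions made for illustration, and the lasso commands require Stata 16 or later.

```stata
* Illustrative sketch of ONE simulated experiment (assumed design, not the slides' code)
clear
set seed 12345
set obs 100

* Randomized binary treatment
generate byte A = runiform() < 0.5

* 80 mutually independent lognormal candidate covariates
forvalues j = 1/80 {
    generate x`j' = exp(rnormal())
}

* Only every 20th covariate affects the outcome; the assumed true effect of A is 1
generate y = 1*A + x20 + x40 + x60 + x80 + rnormal()

* Difference in means (regression on the treatment only)
regress y A

* Adjusting for every available control
regress y A x1-x80

* Cross-fit partialing-out lasso with the plug-in penalty
xporegress y A, controls(x1-x80) selection(plugin)
```

Comparing the standard error on `A` across the three fits gives a rough feel for the variance reduction discussed above. A single draw proves nothing, though: the slides' conclusions rest on 10,000 iterations.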

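Stata Software Introductory Tutorial

Li Angran
Department of Sociology, Zhejiang University
Email: ********************
Version: 2020/02/05

1. Introduction

This tutorial gives a quick introduction to basic techniques and knowledge for working with Stata (version 16).

For a detailed introduction to Stata, readers can consult the official English-language Stata manuals and the learning materials provided with this tutorial.

Like most other statistical software, Stata can be operated through both drop-down menus and commands.

Beginners can use the menus to become familiar with Stata step by step, but commands are the best choice for Stata users.

This tutorial therefore focuses on the use of commands.

Chinese-language users can, after opening Stata, set Chinese as the default language through the user-interface language setting in the drop-down menus.

Alternatively, type set locale_ui zh_CN in the Command window to display the interface in Chinese.

After choosing the language, remember to restart Stata.

Note that although the Stata user interface can be displayed in Chinese, the results of statistical analyses are still displayed in English.

The example data used in this tutorial come from the China Family Panel Studies.

Specifically, they are the data used in my 2019 article in Chinese Sociological Review, “Unfulfilled Promise of Educational Meritocracy? Academic Ability and China’s Urban-Rural Gap in Access to Higher Education”.

For specific questions about the data, please contact me.

The tutorial also provides the corresponding do-file and data files for download, so students can use the do-file to reproduce everything in the tutorial.

They can be downloaded from my personal website: https://angranli.me/teaching/

Tip: answers to most questions about using Stata can be found in the official manuals.

In addition, typing help [command] in Stata displays detailed information on how to use a command.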

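STATA Basic Operations: Getting Started

1. Data import

Stata can import data files in many formats, such as Excel, CSV, and text files.

The most commonly used commands are "import excel" and "import delimited".

For example, to import an Excel file named "data.xlsx", use the following command:

```
import excel using "data.xlsx", sheet("Sheet1") firstrow clear
```

Here, "using" specifies the file path and file name, "sheet" specifies the worksheet name (useful when the workbook has several worksheets), "firstrow" indicates that the first row contains the variable names, and "clear" replaces any data currently in memory.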
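For delimited text, "import delimited" works much the same way. The sketch below is self-contained: it first writes the `auto` example dataset (shipped with Stata) to a CSV file and then reads it back. The file name `auto_demo.csv` is a placeholder chosen for illustration.

```stata
* Illustrative sketch: write a CSV file, then import it again
sysuse auto, clear
export delimited using "auto_demo.csv", replace

* varnames(1) treats the first row as variable names; clear replaces the data in memory
import delimited using "auto_demo.csv", varnames(1) clear
describe, short
```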

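2. Data cleaning

After importing data, you usually need to clean them, which includes handling missing values, outliers, and duplicates.

Stata provides several commonly used commands for these tasks.

- Missing values: use "drop" (for example, "drop if missing(var)") to delete observations with missing values, or use "egen" (for example, with the rowmiss() function) to create a new variable that counts missing values.

- Outliers: use descriptive statistics commands (such as "summarize") to look for outliers, and use "drop" to delete the observations that contain them.

- Duplicates: use "duplicates drop" to delete duplicate observations, or use "duplicates tag" to create a new variable that marks them.

3. Working with variables

Stata supports many operations on variables, such as creating, renaming, computing, and combining them.

- Creating variables: use "generate" to create a new variable and assign it numeric or string values.

- Renaming variables: use "rename" to give a variable a new name.

- Computing variables: use "egen" to compute new variables. For example, "egen mean_var = mean(var)" computes the mean of the variable "var" and stores it in the new variable "mean_var".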
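The cleaning and variable steps above can be strung together in one short sketch. It uses the `auto` example dataset that ships with Stata. The new variable names (`n_missing`, `dup_make`, `price_k`, `price_thousands`, `mean_mpg`) are made up for illustration.

```stata
* Illustrative sketch: cleaning and variable operations on the auto dataset
sysuse auto, clear

* Missing values: count them per observation, then drop incomplete observations
egen byte n_missing = rowmiss(price mpg rep78)
drop if missing(rep78)

* Duplicates: tag observations that share the same make, then drop the extras
duplicates tag make, generate(dup_make)
duplicates drop make, force

* Outliers: inspect the distribution before deciding what to drop
summarize price, detail

* Create, rename, and compute variables
generate price_k = price / 1000
rename price_k price_thousands
egen mean_mpg = mean(mpg)
```

Dropping observations is irreversible within a session, so it is usually safer to run such steps from a do-file that starts from the raw data.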

Stata 16—Under the Hood
Bill Rising
StataCorp LLC
2019 Italian Stata Users Group Meeting
26 September 2019
Firenze

Contents
1 Introduction
  1.1 Goals
2 Frames
  2.1 Basic Frames
  2.2 Linking Frames
  2.3 Copying, Putting, and Posting
  2.4 Side Gains from Frames
3 Report Generation Additions
  3.1 Report Generation Additions
4 Conclusion
  4.1 Conclusion

1 Introduction

1.1 Goals

Goals
• Learn the basics of the frames feature in Stata 16
• See what is new in report generation, aka dynamic documents

Methods
• For frames, it will be easy to demonstrate commands and capture their output
• For the dynamic documents, demonstrating commands is fine, but the output are documents, so the presentation will become much less definite
• We’ll be working in a series of folders which correspond to each of the topics
  – If you copied the italy19_rising.zip folder and expanded the files
  – Make the resulting folder your working directory
  – The examples here will work relative to that directory

2 Frames

2.1 Basic Frames

Frames in Stata 16
• Frames were introduced in Stata 16
• At their simplest, they are a way to have multiple datasets open at once
• They are also something which acts like merge
  – But they can save space
• Lastly, there are some things which get sped up because of frames

Basics of Frames
• Think of a frame as a place to hold data
  – The data can be in a dataset or simply in the frame
• Each frame has an internal Stata name
  – The first frame, which exists when you start Stata, is called default, by default

Starting Simple: Frames for Multiple Datasets
• First, go to the frames folder
    . cd frames
• Open a dataset
    . use visit_info
• Create a second frame
    . frame create patients
• Open another dataset in that other frame
    . frame patients: use patient_info

Glancing at the Datasets
• Open the data editor, to see the dataset
    . edit
• Switch back and forth between frames via cwf
    . cwf patients
• Or switch back and forth using frame change
    . frame change default
• Or switch back and forth using the frames dialog
    . db frames

Changing Frame Names
• The default frame has a forgettable name
  – In our case, it forces us to remember which dataset has this special status
• We can change the name of the default frame to something more informative
    . frame rename default visits
• We can then look at what frames we have
    . frame dir
      patients  4 x 4; patient_info.dta
      visits    9 x 5; visit_info.dta
  – The numbers given are observations × variables
  – Or if you prefer, rows × columns

2.2 Linking Frames

Linking Datasets Using Frames
• It would make sense to combine the information in the visit_info and patient_info datasets
  – This is normally a task for the merge command
• Instead of using merge, you can link together datasets in frames
  – This can be good for very long datasets
  – It has some other advantages (and disadvantages)

How to Link
• The possible link types are 1:1 and m:1
  – This is fine; 1:m really is not needed because all that need be done is to switch the active frame
• In this example there can be multiple visits per patient, so we need to have the visits frame active
    . cwf visits
• Now we can link on patid
    . frlink m:1 patid, frame(patients)
      (3 observations in frame visits unmatched)

Upshot of Linking
• A new variable gets created in the dataset in the active frame
  – By default, this is named after the frame which was linked
• You can tell indirectly which observations matched up in the active frame
  – Those which matched have non-missing values for the linking variable
  – Those which did not match up with data in the linked dataset have missing values for the linking variable
• You cannot tell which observations did not match in the linked frame
  – This is similar to having _merge values of 1 and 3 only

Using Variables from a Linked Frame
• The frval() function allows you to use values from a variable in the linked frame without actually copying the variable into the current frame
  – Which saves space if the active frame is long
• We could list all the visits from the female patients
    . list patid-doctor if frval(patients,gender)=="Female"

         +--------------------------------------------------------+
         | patid     visitdt       illness   insura~e   doctor    |
         |--------------------------------------------------------|
      1. |     9   05oct2015          Cold       HDHP             |
      3. |     1   20oct2015          Pneu          .             |
      7. |     9   29dec2015           Flu          .             |
      9. |     9   23feb2016   Sore Throat        HMO    Smith    |
         +--------------------------------------------------------+

• This function can be used in any exp anywhere
    . gen ins_diff = insurance != frval(patients,insurance)
  – This shows where the insurance differs in the two datasets
    . list patid visitdt insurance if ins_diff

         +------------------------------+
         | patid     visitdt   insura~e |
         |------------------------------|
      1. |     9   05oct2015       HDHP |
      3. |     1   20oct2015          . |
      4. |    25   12nov2015        PPO |
      5. |     4   15nov2015          . |
      6. |    25   30nov2015        PPO |
         |------------------------------|
      7. |     9   29dec2015          . |
      8. |   616   18jan2016        HMO |
         +------------------------------+

Adding Variables from a Linked Frame
• You can bring over variables from a linked dataset
    . frget birthdate, from(patients)
      (3 missing values generated)
      (1 variable copied from linked frame)
• frget copies the data as well as all metadata from the linked variable
• This is similar to
    . merge m:1 patid using patient_info, keepusing(birthdate)
  – As it turns out, linking has better behavior for value labels, as we will see
• This is good for computing age
    . do genage
    . gen age = year(visitdt) - year(birthdate) ///
    >     - (31*month(visitdt) + day(visitdt) ///
    >     < 31*month(birthdate) + day(birthdate))
    (3 missing values generated)
    .
    end of do-file
• Here are the ages
    . list patid visitdt birthdate age

         +-------------------------------------+
         | patid     visitdt   birthdate   age |
         |-------------------------------------|
      1. |     9   05oct2015   15jun1987    28 |
      2. |     4   19oct2015   28may1998    17 |
      3. |     1   20oct2015   18nov2003    11 |
      4. |    25   12nov2015           .     . |
      5. |     4   15nov2015   28may1998    17 |
         |-------------------------------------|
      6. |    25   30nov2015           .     . |
      7. |     9   29dec2015   15jun1987    28 |
      8. |   616   18jan2016           .     . |
      9. |     9   23feb2016   15jun1987    28 |
         +-------------------------------------+

Adding a Variable Whose Name Exists
• If you want to bring over a variable whose name matches one of the variable names in the active frame
  – You can generate a new variable with a different name
    . frget pat_insurance = insurance, from(patients)
      (3 missing values generated)
      (1 variable copied from linked frame)
  – You can use a prefix or a suffix
    . frget insurance, from(patients) prefix(another_)
      (3 missing values generated)
      (1 variable copied from linked frame)
  – If you don’t try to change the conflicting name, you will get an error

Good Value Label Behavior
• If the variable you bring over has a value label
  – If the value label does not exist in the active frame, the value label comes over
  – If the value label exists in the active frame and the definitions match, then nothing need be done
  – If the value label exists in the active frame and the definitions do not match, then the brought-over value label gets renamed
  – This is better behavior than with merge, which simply issues a warning

Running Commands in Another Frame
• In this example, the value label instype exists in both datasets
• It would be good to look at the definitions
• We would like to do this without having to switch back and forth between frames
  – In the visits frame, which is active
    . label list instype
    instype:
               1 HDHP
               2 HMO
               3 PPO
  – In the patients dataset
    . frame patients: label list instype
    instype:
               1 HDHP
               2 HMO
               3 PPO
  – Ignoring that the visits frame is active
    . frame visits: label list instype
    instype:
               1 HDHP
               2 HMO
               3 PPO
• In any case, we can see that the value labels are all defined well

Opening a Dataset with Conflicts
• Suppose our patient_info dataset were not quite so nice
• The patient_ohno dataset fits this bill
  – We will want to link to this
• Let’s look at it the frames way
• First create a frame
    . frame create ohno
• Now open up the dataset in that frame
    . frame ohno: use patient_ohno
• And look at it
    . frame ohno: codebook

    ----------------------------------------------------------------------
    id                                                         Personal ID
    ----------------------------------------------------------------------
                      type:  numeric (byte)
                     range:  [1,16]                  units:  1
             unique values:  4                   missing .:  0/4
                tabulation:  Freq.  Value
                                 1  1
                                 1  4
                                 1  9
                                 1  16

    ----------------------------------------------------------------------
    birthdate                                         Patient Birth Date
    ----------------------------------------------------------------------
                      type:  numeric daily date (int)
                     range:  [8028,16027]            units:  1
           or equivalently:  [24dec1981,18nov2003]   units:  days
             unique values:  4                   missing .:  0/4
                tabulation:  Freq.  Value
                                 1  8028   24dec1981
                                 1  10027  15jun1987
                                 1  14027  28may1998
                                 1  16027  18nov2003

    ----------------------------------------------------------------------
    gender                                                Patient Gender
    ----------------------------------------------------------------------
                      type:  string (str6)
             unique values:  2                  missing "":  0/4
                tabulation:  Freq.  Value
                                 2  "Female"
                                 2  "Male"

    ----------------------------------------------------------------------
    insurance                                             Insurance Type
    ----------------------------------------------------------------------
                      type:  numeric (long)
                     label:  instype
                     range:  [1,2]                   units:  1
             unique values:  2                   missing .:  0/4
                tabulation:  Freq.   Numeric  Label
                                 2         1  HMO
                                 2         2  PPO

Things to Note
• The patid is now called just id
• The insurance variable is encoded differently, but still has the instype value label
  – This would be a big problem when using merge, update

Linking to Dataset with Differing Key Names
• We can still use frlink to link to a dataset where the key variables have different names
  – Key: variable list which identifies individual observations in one dataset
• To do this, we must specify the key varlist in the frame() option
    . frlink m:1 patid, frame(ohno id)
      (3 observations in frame visits unmatched)

Avoiding A Dangerous Data Error
• Just to drive home the point, check that the instype value labels differ
  – First in the active frame
    . label list instype
    instype:
               1 HDHP
               2 HMO
               3 PPO
  – Now in the linked dataset
    . frame ohno: label list instype
    instype:
               1 HMO
               2 PPO
               3 HDHP
• Try to bring in the insurance variable from the ohno frame
    . frget insurance, from(ohno) prefix(ohno_)
      (3 missing values generated)
      (1 variable copied from linked frame)
• Look at the value labels
    . label list
    instype1:
               1 HMO
               2 PPO
               3 HDHP
    instype:
               1 HDHP
               2 HMO
               3 PPO
• Stata renamed the value label from frget to avoid a data error!
  – This is better behavior than in merge

Notes about Linking
• You can use frget to grab many variables from the linked dataset
    frget varlist ...
• You could grab all but some variables by using the exclude() option
    frget _all, exclude(notthisvarlist)
• This is like using the keepusing() option in merge except that it allows excluding instead of just including variables

Static Linking Requires Care
• Changing the key in the active frame is dangerous!
• Here is such a dangerous change
    . replace patid = 9 if patid==4 & visitdt==mdy(10,19,2015)
    (1 real change made)
• Now go and get the gender variable
    . frget gender, from(patients)
      (3 missing values generated)
      (1 variable copied from linked frame)
• Because the linking is static, you can get odd results
    . tabulate patid gender

      Personal |    Patient Gender
            ID |    Female       Male |     Total
    -----------+----------------------+----------
             1 |         1          0 |         1
             4 |         0          1 |         1
             9 |         3          1 |         4
    -----------+----------------------+----------
         Total |         4          2 |         6

Rebuilding Links
• If you are unsure of the state of the links, you should rebuild them
    . frlink rebuild patients
      rebuilding variable patients; executing
      ----------------------------------------------------------------
      -> frlink m:1 patid, frame(patients)
      (3 observations in frame visits unmatched)
      ----------------------------------------------------------------
      variable patients successfully rebuilt
• Now go and grab the gender variable again
    . drop gender
    . frget gender, from(patients)
      (3 missing values generated)
      (1 variable copied from linked frame)
• Now there are no problems
    . tabulate patid gender

      Personal |    Patient Gender
            ID |    Female       Male |     Total
    -----------+----------------------+----------
             1 |         1          0 |         1
             4 |         0          1 |         1
             9 |         4          0 |         4
    -----------+----------------------+----------
         Total |         5          1 |         6

Clearing out
• The equivalent to clear for frames is
    . clear frames
  – This gets rid of all data and frames and changes the active frame name to default:
    . frames dir
      default  0 x 0
  – frames reset is a synonym
• In case you wondered, clear all runs a clear frames

2.3 Copying, Putting, and Posting

Frames as Holding Areas
• You can also use frames for holding data
  – In this case, they are something of a substitute for temporary files
  – They are also faster, especially in networked environments
• frput will copy data to another frame
  – The opposite of frget
• frcopy will copy an entire frame to another frame
  – It will also create the frame to use the copy, making it a nice manual preserve
• frame post can be used to post observations
  – Similar to post, but without tmpfiles

2.4 Side Gains from Frames

preserve and Frames
• The preserve command now uses frames for preserving in Stata/MP
  – This happens for files under 1 GB by default
  – The maximum size can be changed using set max_preservemem
• This speeds up commands which use preserve heavily
  – grexample for looking at graph examples
• This is especially useful when on a network where temporary files end up being stored on a server, instead of locally

Linking Many Datasets
• You can have up to 100 frames at once
• This means you can link together 100 datasets if need be
• This could be useful in very wide datasets

3 Report Generation Additions

3.1 Report Generation Additions

Report Generation Additions
• The report generation (aka dynamic document) tools have been extended
• dyndoc now has a docx option which produces a docx document directly from markdown
• putdocx has many additions for headers and footers, as well as a way to make narrative easier to use
• html2docx converts web pages (html) to Microsoft Word compatible documents (docx)
• docx2pdf converts docx files to pdf files
• There are a few other additions; these are the ones we’ll look at

Getting Started
• We’ll start with the docx option for dyndoc
• Let’s move to the proper location
    . cd ../dyndoc

Looking at a dyndoc file
• Take a look at the paper.md file
    . doedit paper.md
• This is an example markdown file using Stata’s dynamic tags
  – You can see that Stata 16 now has syntax highlighting for markdown
  – The md extension is what alerted the Do-file Editor to use this highlighting
  – You can change the language being highlighted
• Note that the dyndoc version has changed to 2

Making an html file
• As in Stata 15, this can be turned into a webpage
    . dyndoc paper.md
  – The output is not shown, because it would include all the output needed to make the html file
• We can click on the link to open the page

Converting to docx
• We could then convert this to a docx file
    . html2docx paper.html, saving(paper_conv.docx)
• Clicking the link will open the docx file in Microsoft Word
• The resulting file needs some fixing up, but we’ll do this later

Going Directly from Markdown to docx
• We could get the same result by using the new docx option for dyndoc
    . dyndoc paper.md, docx
  – Again, the output is not shown
• This will look exactly like the preceding example, because in the background, Stata is running plain dyndoc then running html2docx
• Generally, this worked well
  – There is some wrapping of Stata output, however
  – This is not present here, but there are other html-only things, like special characters, which might need cleaning up

Tidying Up Wrapping
• Doing this conversion is nice, but it sometimes needs some tidying up due to wrapping
  – The font size of 10pt for the fixed-width font allows 77 characters per line for letter size paper with standard one-inch margins
  – If your Stata window is wide, commands like describe and codebook will draw dashed lines the entire width of your window
• There are a few things which can help
  – Use a set linesize command to set the linesize to 90 or less
  – Change the margins in the resulting docx document
  – Make a style sheet (css) for the document and <<dd_include>> the style sheet
  – See the first example in the dyndoc PDF documentation

Working With putdocx
• The files for putdocx are in the putdocx folder
    . cd ../putdocx
• First take a look at how putdocx looked in Stata 15
    . doedit putdocx15.do
• You can see here that there is no narrative mode
  – Everything is a Stata command
• You also cannot put Stata code into the document without repeating it
  – Once as simple text in a fixed-width font
  – Once as code that gets run

Making the docx Document
• Doing the do-file will make a docx document
    . do putdocx15.do
• On the Mac, you can open the resulting file from the Command window
    . !open putdocx15.docx

New putdocx Features in Stata 16
• Stata 16 allows headers and footers
• Headers and footers can change through the document with sections
• Headers and footers can work across appending files
• There is now something like a narrative mode
• Open up putdocx16.do to see these
    . doedit putdocx16.do

Headers and Footers to Start
• They get constructed in a couple of steps
• Here are the steps for a footer
  – Use putdocx begin, footer(name) to name the footer
  – Use putdocx paragraph, tofooter(name)
  – Then add to the paragraph
  – Using tables is good for multi-piece footers
• For headers, simply use header in place of footer above

Headers and Footer Changes
• When sections change, you can change the header and/or footer
• Simply use putdocx sectionbreak in place of putdocx begin from above

Narrative Mode
• While putdocx is mostly all Stata commands as before, there are now text blocks:
  – putdocx textblock begin starts a new paragraph which is simply text
  – putdocx textblock append appends to the current paragraph
  – putdocx textblock end ends a text block
  – putdocx textfile allows inserting a file as a text block
• These should make documents with a lot of plain narrative (i.e. most documents) much easier to work with

Making the docx Document
• Doing the do-file will make a docx document
    . do putdocx16.do
• Open the resulting file from the Command window
    . !open putdocx16.docx

Other Changes
• While these are most of the changes, there have also been a few changes to markdown, which goes from markdown to html without processing Stata code
• putexcel had 2 syntax changes
  – putexcel close has become putexcel save
  – putexcel has changed picture() to image()
  – Of course, version control will protect your Stata 15.1 and earlier do-files!

4 Conclusion

4.1 Conclusion

Conclusion
• Frames are something brand new in Stata 16
• The dynamic document (aka report) generation has had some nice additions
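The linking workflow from Section 2.2 can be tried without the workshop files. The sketch below types in two tiny datasets that only mimic the structure of visit_info and patient_info; the values are invented for illustration and are not the workshop data. It requires Stata 16 or later.

```stata
* Illustrative sketch: link two frames built from made-up data
clear all                            // also resets frames
frame rename default visits

* Visits: several rows may share one patient id
input int patid str12 illness
9  "Cold"
4  "Flu"
25 "Sore Throat"
end

* Patients: one row per patient, held in a second frame
frame create patients
cwf patients
input int patid str6 gender
1 "Female"
4 "Male"
9 "Female"
end

* Link many visits to one patient; this creates the link variable "patients"
cwf visits
frlink m:1 patid, frame(patients)

* Use a linked value without copying it, then copy it over
list patid illness if frval(patients, gender) == "Female"
frget gender, from(patients)

* Unmatched visits have a missing link variable
count if missing(patients)
```

Here patid 25 has no row in the patients frame, so its link variable is missing and `frget` leaves its `gender` empty, mirroring the unmatched observations reported in the slides.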