广义线性模型课件

- 格式:ppt

- 大小:571.50 KB

- 文档页数:42

第四届R会议北京2011广义线性模型-李欣海广义线性模型Generalized linear model李欣海中科院动物所Generalized Linear Modelg(µ) = β0+ β1x1+ β2x2+ ···+ βk x k GLM is an extension of general linear model that deals with ordinal and categorical response variables. There are three components that are common to all GLMs(McCullagh& Nelder1989) :–Random component–Systematic Component–Link FunctionMcCullagh, P., and J. A. Nelder1989. Generalized linear models. Chapman and Hall.Random Component:The random component: refers to the probability distribution of theresponse Y.Case 1. (Y 1, Y 2, . . ., Y N ) might be normal. In this case, we would say the random component is the normal distribution. This component leads to ordinary regression and analysis of variance models.Case 2. If the observations are Bernoulli random variables (which havevalues 0 or 1), then we would say the link function is the binomialdistribution. When the random component is the binomial distribution, we are commonly concerned with logistic regression models or probit models.Case 3. Quite often the random variables Y 1, Y 2, . . ., Y N have aPoisson distribution. Then we will be involved with Poisson regressionmodels or loglinear models.Systematic ComponentThe systematic component involves theexplanatory variables x 1, x 2, ···, x k .as linear predictors:β0+ β1x 1+ β2x 2+ ···+ βk x kLink FunctionThe third component of a GLM is the link between the random and systematic components.It says how the meanµ= E(Y) relates to the explanatory variables in the linear predictor through specifying a function g(µ):g(µ) = β0+ β1x1+ β2x2+ ···+ βk x kg(µ) is called the link function.Generalized Linear Models•The y i ’s are allowed to have a distribution fromthe exponential family of distributions.•The link function g(μi ) is any monotonic functionand defines the relationship between μi and x i β.kik i 22i 110i X ...X X )(g ββββμ++++=Logistic regression)(11)1(i x i i i e p x y P −+===Dependent variable is binary)(11)0(i x i i i e p x y P +===Linear function Logistic function P x 00.20.40.60.81-10-50510P x0.20.40.60.81-10-50510dt t p x y P ix i i i )21exp(21)1(2−===∫+∞−βαπProbit regression functionP x 00.20.40.60.81-10-50510)(11)1(ix i i i e p x y P −+===ii x x e e+=1ii x xi e ep +−=−111ix e +=11ix ii ep p Odds =−=1ii i x p p =⎟⎟⎠⎞⎜⎜⎝⎛−1ln Logit transformationModel meanings –nest site use of birdsThe response variable was the odds of a site having a nest, where odds are calculated as p/(1-p) and p is the proportion of sites have a nest. The statistical model was:Odds = exp(β0+ β1X 1+ β2X 2+ …βn X n )where n is the number of explanatory variables. The log of the odds is known as the logit transform of p .i x ii e p p Odds =−=1Advantages of Logit•Properties of a linear regression model•Logit between -∞and + ∞•Probability (P) constrained between 0 and 1•Directly related to odds of eventβx αP -1P ln +=⎟⎠⎞⎜⎝⎛ e P -1P βxα+=Assumptions•Dependent variable is binary or dichotomous, vs.continuous dependent variables in linear regression.•The cases are independent.•The independent variables are not linear combinations of each other•No linearity, the population means of the dependent variables at each level of the independent variable are not on a straight line.•No homogeneity of variance, the variance of the errors are not constant.•No normality, the errors are not normally distributed.Example•Risk of developing coronary heart disease (CD) by age (< 60 and > 60 years old)CD> 60 (1)< 60 (0)Present (1)2823Absent (0)1172Odds of disease among the old = 28/11Odds of disease among the young = 23/72 Odds ratio = 7.97R code# Logistic regression# Risk of developing coronary heart disease by age (<60 and >60 years old)coronary1 <-data.frame (present = rep (1, 28), age = 'old')coronary2 <-data.frame (present = rep (0, 11), age = 'old')coronary3 <-data.frame (present = rep (1, 23), age = 'young')coronary4 <-data.frame (present = rep (0, 72), age = 'young')coronary <-rbind (coronary1, coronary2, coronary3, coronary4)coronary <-rbind (coronary3, coronary4, coronary1, coronary2)fit <-glm (present~age, data = coronary, family = binomial ())summary (fit)Coefficients:Estimate Std. Error z value Pr(>|z|)(Intercept) 0.9343 0.3558 2.626 0.00865 ** ageyoung -2.0755 0.4289 -4.839 1.31e-06 *** Age 2.0755 1.1412- Age βαP 1-P ln 1×+=×+=⎟⎠⎞⎜⎝⎛Coefficients:Estimate Std. Error z value Pr(>|z|)(Intercept) -1.1412 0.2395 -4.765 1.89e-06 ***ageold 2.0755 0.4289 4.839 1.31e-06 ***Logistic Regression ModelCoefficientSE Coeff/SEAge 2.0755 0.4289 4.839 Constant -1.1412 0.2395 -4.76518.53.4, e CI 95%0.05) (p 1df with4.839 Test Wald 7.97e ratio Odds )0.4289 x 1.96(2.0755 22.0755==<===±¾β= increase in logarithm of odds ratio for a one unit increase in x •Test of the hypothesis that β = 0(Wald test)df)(1 ( Variance 22β)β=χInterpretation of the coefficients in terms of the oddsratio –An Example•Whether owning a car as afunction of the income. •17 individuals, 14 own a car and 3 do not.Variables in the EquationB S.E.Wald df Exp(B)INCOME 0.69310.80720.73721 2.0Constant-6.23838.97940.482610.00195car1 <-data.frame (income = c (10:12), carowner = rep (0, 3))car2 <-data.frame (income = rep (c (10:12), c (2, 4, 8)), carowner = rep (1, 14))car <-rbind (car1, car2)fit <-glm (carowner ~ income, data = car, family = binomial ())summary (fit)Income Car owner100101101110111111111111120121121121121121121121121Interpretation of the coefficients in terms of the oddsratio –An Example•e β= 2•So: increasing the income by one unit increases the odds of owning a car by a factor of 2 (increase in 100%) so that:(odds after increasing income)/ (odds before increasing income) = 2•If we look at the data we can see that this model predicts perfectly:income 0.69 α income βαP 1-P ln ×+=×+=⎟⎠⎞⎜⎝⎛ 2P1-Pincome income e e e ×=×=×αα69.0income 10P(own)P(not own)Odds of Owning a car10212/3=0.661/3=0.330.66/0.33=211414/5=0.81/5=0.20.8/0.2=412818/9=0.8881/9=0.1110.888/0.111=8car ownerMarginal effect of a change in Xln[p/(1-p)] = α+ βX + eThe slope coefficient (β) is interpreted as the rate of change in the "log odds" as X changes …not very useful.•We are also interested in seeing the effect of an explanatory variable on the probability of the event occurring•p = 1/[1 + exp(-α-βX)]The marginal effect of a change in X on the probability is:əp/əX = βp(1-p))()(1111X X eeβαβαβ++−+×+×=Basically, the size of the ‘marginal effect’will depend on two things:–βcoefficient–The initial value of XMarginal Effects: βxP(1-P)•Passing or failing an exam as a function of the number of hours of study•Previous study indicated the estimates of αandβwere:α= -5, β= 0.3•So what’s the effect of studying one more hour in the probability of the event occurring:Initial hoursof study P1-P P(1-P)Marginal effect50.029 0.971 0.028 0.009100.119 0.881 0.105 0.031150.378 0.622 0.235 0.071200.731 0.269 0.197 0.059250.924 0.076 0.070 0.021300.982 0.018 0.0180.005The importance of the initial value of X in themarginal effectLogistic Curves0.10.20.30.40.50.60.70.80.91-19-16-13-1-7-4-1258111417Logistic Curve bo=0.5, b1=0.5Big EffectSmall EffectSmall EffectStarting the change from the central values of X will have a higher impact on the probability of the event occurring than starting from very low or very high values of X.Some useful R codes# Logistic regressionfit <-glm(carowner~ income, data = car, family = binomial())summary (fit) # display resultsconfint(fit) # 95% CI for the coefficientsexp(coef(fit)) # exponentiated coefficientsexp(confint(fit)) # 95% CI for exponentiated coefficientspred= predict (fit, type= "response") # predicted values (logit) res= residuals (fit, type= "deviance") # residualsHow to estimate model coefficientsMaximum likelihood estimation (MLE)iiy i y i i )p (p )P(y −−=11For one observationLikelihood function=−−=n i y i y i ii)p (p L 111)(θGoodness of fit for the full model-likelihood ratio test (LR)•We compare the value of the likelihood function in a model with the variables with the value of the likelihood function in a model without the variables. The test:where is the log likelihood value of the null model (only intercept included); is the log likelihood value of the full model (taking into account of all variable parameters).–The statistic is distributed as χ2 with as many degrees of freedomas coefficients we are restrictingkS )L L (L L LR 20ˆ2ˆ2χ⇒−−−=0ˆL L SL L ˆ# likelihood ratio testfit.full <-glm (present ~ ., data = coronary, family = binomial ())fit.null <-glm (present ~ NULL, data = coronary, family = binomial ())lrtest (fit.full, fit.null)Goodness of fit -AnalogousR2)ˆ2(ˆ20SL L L L −−−Refer to total sum of squareRefer to regression sum of square Likelihood ratio index (LRI):200)ˆ2)ˆ2(ˆ2(LRI RL L L L L L S=−−−−=0ˆ2L L −/n adj)L (RR R R202max 222ˆ1−==# R codelibrary (Design) # required for lrm()fit2 <-lrm (y ~ x1 + x2, data = data1)fit2[[3]][10] # R squareStepwise Regression base on Akaike’s Information Criterion (AIC)AIC = -2 ln (likelihood) + 2KK = number of parameters in the model, including 1for the constant and 1 for the error term443322110X X X X Y βββββ++++=K = 6For small samples (n /K < 40), use AIC c for small sample size1)1(2AIC AIC c −−++=K n K K # R codestep (fit) # Stepwise Regression25Sample plots 35Control plots 35Habitat factors 11Elevation (m)Area of rice fields nearby (ha)Human disturbanceNumber of trees within 100 m 2Mean tree height within 100 m 2 (m)Nest position on the slopeSlope aspect (°)Slope gradient (°)Nest tree height (m)Nest aspect (°)Coverage above the nest (%)Nest site selection of the crested ibisControl plots Nest sites26 0 20 40 kmSource data 10500100015002000250005101520253035SitesE l e v a t i o n (m )Elevation (Nest sites)Elevation (Control plots)51015202505101520253035SitesM e t e rHeight of nest tree (Nest sites)Height of nest tree (Control plots)024681005101520253035SitesDisturbance (Nest sites)Disturbance (Control plots)5010015020025030035005101520253035SitesArea of rice field nearby (Nest sites)Area of rice field nearby (Control plots)Source data 20.00.30.60.91.25101520253035SitesNest aspect (Nest sites)Nest aspect (Control plots)0.020.040.060.080.0100.005101520253035SitesCoverage above the nest (Nest sites)Coverage above the nest (Control plots)0.00.40.81.21.62.005101520253035SitesNest position on the slope (Nest sites)Nest position on the slope (Control plots)0.00.30.60.91.205101520253035SitesSlope aspect (Nest sites)Slope aspect (Control plots)Source data 30.030.060.090.05101520253035SitesSlope gradient (Nest sites)Slope gradient (Control plots)0.05.010.015.020.005101520253035SitesMean tree height (Nest sites)Mean tree height (Control plots)0.05.010.015.020.005101520253035SitesNumber of trees within the site (Nest sites)Number of trees within the site (Control plots)CorrelationHabitat variablesCorrelation coefficientsMean S.D. 12345678910111. Elevation (m)1-0.72*-0.48*-0.70*0.21-0.020.39*-0.38*0.1620.34*0.21894.00176.532. Area (ha) of ricefields within 1km210.53*0.49*-0.23-0.08-0.230.230.05-0.21-0.1211.62 5.403. Humandisturbance10.220.06-0.1540.150.38*0.10-0.020.08 1.40 1.52 4. Number of treeswithin 100 m21-0.37*-0.00-0.52*0.34*-0.330.012-0.258.11 3.53 5. Mean tree heightwithin 100 m2 (m)1-0.240.23-0.34*0.32*0.11-0.0611.23 3.06 6. Nest position onthe slope10.030.22-0.21-0.00-0.07 2.030.45 7. Slope aspect(South = 1,North = 0)1-0.150.180.55*0.060.450.298. Slope gradient (°)1-0.050.100.0125.697.019. Nest tree height (m)1-0.08-0.2314.80 2.3610. Nest aspect(South = 1,North = 0)10.320.430.3211. Coverage abovethe nest (%)149.00%16.53%The Pearson correlations between the 11 habitat variables measured at 35 nest sites of crested ibis in Yang county, Shaanxi province, China. Mean values and standard deviations (S.D.) are also shown.Step Habitat features Selection coefficientsStandard ErrorP value for model selectionAIC 1Nest tree height (m)0.940.38<.000163.3562Human disturbance -0.990.400.000150.4753Slope aspect-5.82 3.250.001341.7274Area of rice fields nearby (ha)0.350.190.010936.2525Nest position on the slope 3.73 2.300.047834.3366Mean tree height within 100 m 2(m)0.280.270.0320 31.9247Nest aspect 54.928531.53780.011226.0488Slope gradient (°)-0.40800.36020.286623.2269Coverage above the nest 0.52010.55860.084124.32210Number of trees within 100 m 2-0.0068300.006160.116025.76411Elevation (m)0.076700.13280.145027.275Stepwise logistic regression for modeling nest site selection of crested ibis in Yang County, Shaanxi Province, China.Model equationlogit(p) = –20.99 + 0.94×nest tree height–0.99×human disturbance+ 3.63×nest position+ 0.35×rice paddy area + …Probability of nest selection:P = e logit(p)/(1 + e logit(p))•R-Square 0.7380•Max-rescaled R-Square 0.9840李欣海, 马志军, 李典谟, 丁长青, 翟天庆, 路宝忠。

广义线性模型广义线性模型*(Nelder和Wedderburn,1972)除了正态分布,也允许反应分布,以及模型结构中的一定程度的非线性。

GLM具有基本结构g(μi)=X iβ,其中μi≡E(Yi),g是光滑单调'链接函数',Xi是模型矩阵的第i行,X和β是未知参数的向量。

此外,GLM通常会做出Yi是独立的和Yi服从一些指数族分布的假设。

指数族分布包括许多对实际建模有用的分布,如泊松分布,二项分布,伽马分布和正态分布。

GLM的综合参考文献是McCullagh和Nelder(1989),而Dobson(2001)提供了一个全面的介绍。

因为广义线性模型是以“线性预测器”Xβ的形式详细说明的,所以线性模型的许多一般想法和概念通过一些修改而继续存在到广义线性模型中。

除了必须选择的链接函数和分布之外,基本模型公式与线性模型公式基本相同。

当然,如果恒等函数被选择作为链接以及正态分布,那么普通线性模型将作为特例被恢复。

然而,泛化是以某种成本为代价的:现在的模型拟合必须要迭代完成,而且用于推理的分布结果是近似的,并且由大样本限制结果证明是正确的而不是精确的。

但在深入探讨这些问题之前,请考虑几个简单的例子。

μi=cexp(bt i),例1:在疾病流行的早期阶段,新病例的发生率通常会随着时间以指数方式增加。

因此,如果μi是第ti天的新病例的预期数量,则该形式的模型为请注意,“广义”和“一般”线性模型之间存在区别-后一个术语有时用于指除简单直线以外的所有线性模型。

可能是合适的,其中c和b是未知参数。

通过使用对数链路,这样的模型可以变成GLM形式log(μi)=log(c)+bt i=β0+t iβ1(根据β0=logc和β1=b的定义)。

请注意,模型的右侧现在在参数中是线性的。

反应变量是每天新病例的数量,因为这是一个计数,所以泊松分布可能是一个合理的可以尝试的分布。

因此,针对这种情况的GLM使用泊松反应分布,对数链路和线性预测器β0+tiβ1。

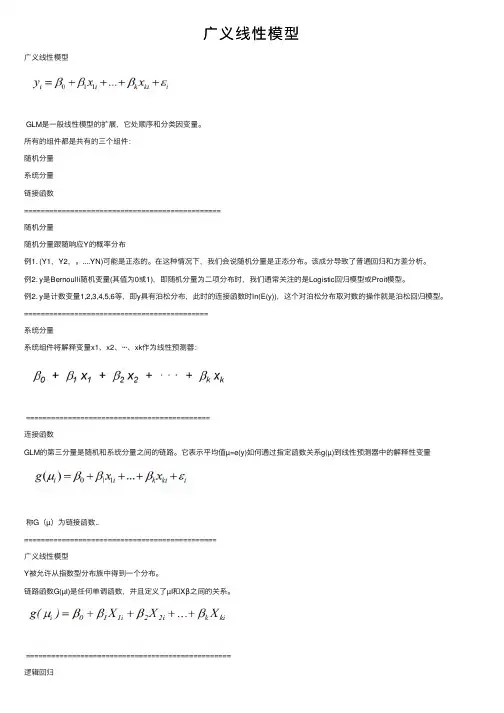

我们知道,混合线性模型是一般线性模型的扩展,而广义线性模型在混合线性模型的基础上又做了进一步扩展,使得线性模型的使用范围更加广阔。

每一次的扩展,实际上都是模型适用范围的扩展,一般线性模型要求观测值之间相互独立、残差(因变量)服从正态分布、残差(因变量)方差齐性,而混合线性模型取消了观测值之间相互独立和残差(因变量)方差齐性的要求,接下来广义线性模型又取消了对残差(因变量)服从正态分布的要求。

残差不一定要服从正态分布,可以服从二项、泊松、负二项、正态、伽马、逆高斯等分布,这些分布被统称为指数分布族,并且引入了连接函数,根据不同的因变量分布、连接函数等组合,可以得到各种不同的广义线性模型。

要注意,虽然广义线性模型不要求因变量服从正态分布,但是还是要求相互独立的,如果不符合相互独立,需要使用后面介绍的广义估计方程。

=================================================一、广义线性模型广义线性模型的一般形式为:有以下几个部分组成1.线性部分2.随机部分εi3.连接函数连接函数为单调可微(连续且充分光滑)的函数,连接函数起了"y的估计值μ"与"自变量的线性预测η"的作用,在一般线性模型中,二者是一回事,但是当自变量取值范围受限时,就需要通过连接函数扩大取值范围,因此在广义线性模型中,自变量的线性预测值是因变量的函数估计值。

广义线性模型设定因变量服从指数族概率分布,这样因变量就可以不局限于正态分布一种形式,并且方差可以不稳定。

指数分布族的概率密度函数为其中θ和φ为两个参数,θ为自然参数,φ为离散参数,a,b,c为函数广义线性模型的参数估计:广义线性模型的参数估计一般不能使用最小二乘法,常用加权最小二乘法或极大似然法。

回归参数需要用迭代法求解。

广义线性模型的检验和拟合优度:广义线性模型的检验一般使用似然比检验、Wald检验。

模型的比较用似然比检验,回归系数使用Wald检验。

线性模型(5)——广义线性模型广义线性模型是一种扩展了一般线性模型的模型,它在混合线性模型的基础上进一步扩展,使得线性模型的使用范围更加广泛。

每次扩展都是为了适用更多的情况。

一般线性模型要求观测值之间相互独立,残差(因变量)服从正态分布,残差(因变量)方差齐性。

而混合线性模型取消了观测值之间相互独立和残差(因变量)方差齐性的要求。

广义线性模型又取消了对残差(因变量)服从正态分布的要求。

残差不一定要服从正态分布,可以服从二项、泊松、负二项、正态、伽马、逆高斯等分布,这些分布被统称为指数分布族,并且引入了连接函数。

根据不同的因变量分布、连接函数等组合,可以得到各种不同的广义线性模型。

需要注意的是,虽然广义线性模型不要求因变量服从正态分布,但是仍要求相互独立。

如果不符合相互独立的要求,需要使用广义估计方程。

广义线性模型的一般形式包括线性部分、随机部分εi和连接函数。

连接函数为单调可微的函数,起到连接因变量的估计值μ和自变量的线性预测值η的作用。

在广义线性模型中,自变量的线性预测值是因变量的函数估计值。

广义线性模型设定因变量服从指数族概率分布,这样因变量就可以不局限于正态分布,并且方差可以不稳定。

指数分布族的概率密度函数包括θ和φ两个参数,其中θ为自然参数,φ为离散参数,a、b、c为函数广义线性模型的参数估计。

广义线性模型的参数估计一般不能使用最小二乘法,常用加权最小二乘法或极大似然法。

回归参数需要用迭代法求解。

广义线性模型的检验和拟合优度一般使用似然比检验和Wald检验。

似然比检验是通过比较两个相嵌套模型的对数似然函数来进行的,统计量为G。

模型P中的自变量是模型K 中自变量的一部分,另一部分是要检验的变量。

G服从自由度为K-P的卡方分布。

回归系数使用Wald检验进行模型比较。

广义线性模型的拟合优度通常使用以下统计量来度量:离差统计量、Pearson卡方统计量、AIC、AICC、BIC、CAIC准则,准则的值越小越好。

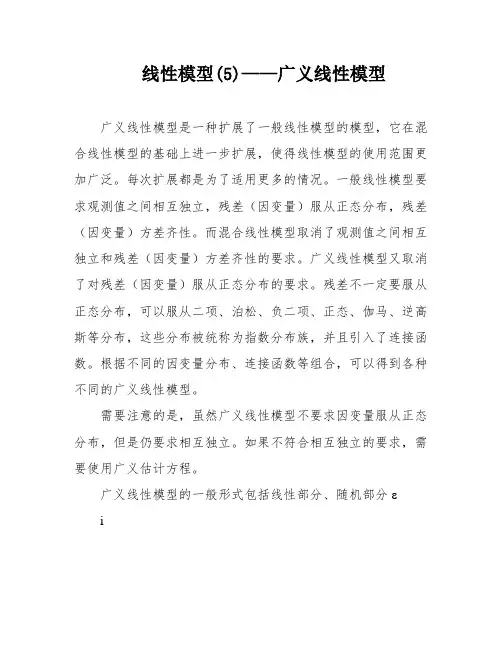

⼴义线性模型⼴义线性模型GLM是⼀般线性模型的扩展,它处顺序和分类因变量。

所有的组件都是共有的三个组件:随机分量系统分量链接函数===============================================随机分量随机分量跟随响应Y的概率分布例1. (Y1,Y2,。

....YN)可能是正态的。

在这种情况下,我们会说随机分量是正态分布。

该成分导致了普通回归和⽅差分析。

例2. y是Bernoulli随机变量(其值为0或1),即随机分量为⼆项分布时,我们通常关注的是Logistic回归模型或Proit模型。

例2. y是计数变量1,2,3,4,5,6等,即y具有泊松分布,此时的连接函数时ln(E(y)),这个对泊松分布取对数的操作就是泊松回归模型。

============================================系统分量系统组件将解释变量x1、x2、···、xk作为线性预测器:============================================连接函数GLM的第三分量是随机和系统分量之间的链路。

它表⽰平均值µ=e(y)如何通过指定函数关系g(µ)到线性预测器中的解释性变量称G(µ)为链接函数..==============================================⼴义线性模型Y被允许从指数型分布族中得到⼀个分布。

链路函数G(µI)是任何单调函数,并且定义了µI和Xβ之间的关系。

=================================================逻辑回归因变量是⼆进制的评估多个解释变量(可以是数值型变量和/或类别型变量)对因变量的影响。

=============================================模型含义:鸟类的巢址使⽤响应变量是有巢的站点的概率,其中概率计算为p/(1-p),p是有巢的站点的⽐例。

广义线性回归模型

广义线性回归模型(Generalized Linear Model,GLM)是一种用于分析多自变量数据的回归模型。

它将统计学中最常见的线性回归模型扩展到一般的统计分布中,以便更好地拟合不同数据类型的数据。

GLM能够对相关变量进行分析,并预测其他变量的变化。

GLM通常主要有三个部分组成:响应变量、线性函数和非线性概率分布函数,其中响应变量是待预测的变量,而线性函数和非线性概率分布函数则是描述响应变量的模型。

GLM使用最小二乘法来估计回归参数,以解释响应变量的变化。

广义线性模型(GLM )PublicLibraryofBioinformatics广义线性模型(generalized linear model, GLM )是简单最小二乘回归(OLS)的扩展,在OLS 的假设中,响应变量是连续数值数据且服从正态分布,而且响应变量期望值与预测变量之间的关系是线性关系。

而广义线性模型则放宽其假设,首先响应变量可以是正整数或分类数据,其分布为某指数分布族。

其次响应变量期望值的函数(连接函数)与预测变量之间的关系为线性关系。

因此在进行GLM 建模时,需要指定分布类型和连接函数。

在R 中通常使用glm 函数构造广义线性模型,其中分布参数包括了binomaial (两项分布)、gaussian (正态分布)、gamma (伽马分布)、poisson(泊松分布)等。

和lm 函数类似,glm 的建模结果可以通过下述的泛型函数进行二次处理,如summary()、coef()、confint()、residuals()、anova()、plot()、predict()一、Logistic 回归Logistic 回归中假设响应变量服从二项分布,参数family 设置为binomial ,连接函数link 设置为logit ,我们以AER 包中的Affairs 数据集作为例子。

该数据集是关于婚姻出轨,其中affairs 变量表示出轨次数,数据集中还包括结婚时间、教育、宗教等其它变量。

由于affairs 为正整数,为了进行Logistic 回归先要将其转化为二元变量。

1 data(Affairs, package='AER')2 Affairs$ynaffair[Affairs$affairs < 0] <- 13 Affairs$ynaffair[Affairs$affairs < 0] <- 04 Affairs$ynaffair <- factor(Affairs$ynaffair,5 levels=c(0,1),6 labels=c("No","Yes"))7 model.L <- glm(ynaffair ~ age + yearsmarried + religiousness +rating, data=Affairs, family=binomial())8 summary(model.L)若样本观测值变异性过大,即出现了过度离散现象,此时仍使用二项分布假设就会影响系数检测的显著性。