An Application Model for Interactive Environments


Flowchart for Obtaining a "Construction Project Construction Permit"

Table of Contents
I. Introduction
II. What is a Construction Project Construction Permit?
III. The Process of Obtaining a Construction Project Construction Permit
A. Requirements
1. Necessary Documents
2. Professional Qualification and Technical Capacity
B. Application Procedure
2. Pre-application Procedures
3. Final Approval Stage
IV. Conclusion
V. References

I. Introduction
The construction industry is an important sector in most countries, and construction projects often have a large impact on the economy and the environment. It is therefore important that these projects are subject to rigorous control and that only qualified contractors are allowed to undertake them. For this reason, the local government requires a construction project to obtain a Construction Project Construction Permit (CPCP) before any work may begin.
This paper discusses what is needed to obtain a CPCP and the steps involved in the application process.

II. What is a Construction Project Construction Permit?

III. The Process of Obtaining a Construction Project Construction Permit

A. Requirements
Several requirements must be met in order to obtain a CPCP. These requirements include:

1. Necessary Documents
Any applicant for a CPCP must provide the necessary documents as part of the application. These documents must include the project's site plan, building plans, and other documents related to the project. Additionally, all applicants must provide proof of financial stability, such as bank statements or a letter of credit.

2. Professional Qualification and Technical Capacity

B. Application Procedure
Once the requirements have been met, applicants can begin the application process for a CPCP. This process consists of the steps outlined below.

2. Pre-application Procedures

3. Final Approval Stage

IV. Conclusion

V. References

UMLi: The Unified Modeling Language for Interactive Applications

Paulo Pinheiro da Silva and Norman W. Paton
Department of Computer Science, University of Manchester
Oxford Road, Manchester M13 9PL, England, UK.
e-mail: {pinheirp,norm}@

Abstract

User interfaces (UIs) are essential components of most software systems, and significantly affect the effectiveness of installed applications. In addition, UIs often represent a significant proportion of the code delivered by a development activity. However, despite this, there are no modelling languages and tools that support contract elaboration between UI developers and application developers. The Unified Modeling Language (UML) has been widely accepted by application developers, but not so much by UI designers. For this reason, this paper introduces the notation of the Unified Modelling Language for Interactive Applications (UMLi), which extends UML to provide greater support for UI design. UI elements elicited in use cases and their scenarios can be used during the design of activities and UI presentations. A diagram notation for modelling user interface presentations is introduced. Activity diagram notation is extended to describe collaboration between interaction and domain objects. Further, a case study using UMLi notation and method is presented.

1 Introduction

UML [9] is the industry standard language for object-oriented software design.
There are many examples of industrial and academic projects demonstrating the effectiveness of UML for software design. However, most of these successful projects are silent in terms of UI design. Although the projects may even describe some architectural aspects of UI design, they tend to omit important aspects of interface design that are better supported in specialist interface design environments [8]. Despite the difficulty of modelling UIs using UML, it is becoming apparent that domain (application) modelling and UI modelling may occur simultaneously. For instance, tasks and domain objects are interdependent and may be modelled simultaneously since they need to support each other [10]. However, task modelling is one of the aspects that should be considered during UI design [6]. Further, tasks and interaction objects (widgets) are interdependent as well. Therefore, considering the difficulty of designing user interfaces and domain objects simultaneously, we believe that UML should be improved in order to provide greater support for UI design [3,7].

This paper introduces the UMLi notation, which aims to be a minimal extension of the UML notation used for the integrated design of applications and their user interfaces. Further, UMLi aims to preserve the semantics of existing UML constructors since its notation is built using new constructors and UML extension mechanisms. This non-intrusive approach of UMLi can be verified in [2], which describes how the UMLi notation introduced in this paper is designed in the UML meta-model.

UMLi notation has been influenced by model-based user interface development environment (MB-UIDE) technology [11]. In fact, MB-UIDEs provide a context within which declarative models can be constructed and related, as part of the user interface design process. Thus, we believe that the MB-UIDE technology offers many insights into the abstract description of user interfaces that can be adapted for use with the UML technology. For instance, MB-UIDE technology provides
techniques for specifying static and dynamic aspects of user interfaces using declarative models. Moreover, as these declarative models can be partially mapped into UML models [3], it is possible to identify which UI aspects are not covered by UML models.

The scope of UMLi is restricted to form-based user interfaces. However, form-based UIs are widely used for data-intensive applications such as database system applications and Web applications, and UMLi can be considered as a baseline for non-form-based UI modelling. In this case, modifications might be required in UMLi for specifying a wider range of UI presentations and tasks.

To introduce the UMLi notation, this paper is structured as follows. MB-UIDE's declarative user interface models are presented in terms of UMLi diagrams in Section 2. Presentation modelling is introduced in Section 3. Activity modelling that integrates use case, presentation and domain models is presented in Section 4. The UMLi method is introduced in Section 5, where a case study exemplifying the use of the UMLi notation is presented along with the description of the method. Conclusions are presented in Section 6.

2 Declarative User Interface Models

A modelling notation that supports collaboration between UI developers and application developers should be able to describe the UI and the application at the same time. From the UI developer's point of view, a modelling notation should be able to accommodate the description of users' requirements at appropriate levels of abstraction. Thus, such a notation should be able to describe abstract task specifications that users can perform in the application in order to achieve some goals. Therefore, a user requirement model is required to describe these abstract tasks. Further, UI sketches drawn by users and UI developers can help in the elicitation of additional user requirements. Therefore, an abstract presentation model that can present early design ideas is required to describe these UI sketches. Later in the design process, UI
developers could also refine abstract presentation models into concrete presentation models, where widgets are selected and customised, and their placement (layout) is decided.

From the application developer's point of view, a modelling notation that integrates UI and application design should support the modelling of application objects and actions in an integrated way. In fact, the identification of how user and application actions relate to a well-structured set of tasks, and how this set of tasks can support and be supported by the application objects, is a challenging activity for application designers. Therefore, a task model is required to describe this well-structured set of tasks. The task model is not entirely distinct from the user requirement model. Indeed, the task model can be considered as a more structured and detailed view of the user requirement model.

The application objects, or at least their interfaces, are relevant for UI design. In fact, these interfaces are the connection points between the UI and the underlying application. Therefore, the application object interfaces compose an application model. In an integrated UI and application development environment, an application model is naturally produced as a result of the application design.

UMLi aims to show that using a specific set of UML constructors and diagrams, as presented in Figure 1, it is possible to build declarative UI models.
Moreover, results of previous MB-UIDE projects can provide experience as to how the declarative UI models should be inter-related and how these models can be used to provide a declarative description of user interfaces. For instance, the links (a) and (c) in Figure 1 can be explained in terms of state objects, as presented in Teallach [5]. The link (d) can be supported by techniques from TRIDENT [1] to generate concrete presentations. In terms of MB-UIDE technology, there is no consensus on the models that might be used for describing a UI. UMLi does not aim to present a new user interface modelling proposal, but to reuse some of the models and techniques proposed for use in MB-UIDEs in the context of UML.

Figure 1: UMLi declarative user interface models.

3 User Interface Diagram

User interface presentations, the visual part of user interfaces, can be modelled using object diagrams composed of interaction objects, as shown in Figure 2(a). These interaction objects are also called widgets or visual components. The selection and grouping of interaction objects are essential tasks for modelling UI presentations. However, it is usually difficult to perform these tasks due to the large number of interaction objects with different functionalities provided by graphical environments. In a UML-based environment, the selection and grouping of interaction objects tends to be even more complex than in UI design environments because UML does not provide graphical distinction between domain and interaction objects. Further, UML treats interaction objects in the same way as any other objects [3]. For instance, in Figure 2(a) it is not easy to see that the Results Displayer is contained by the SearchBookUI FreeContainer.
Considering these presentation modelling difficulties, this section introduces the UMLi user interface diagram, a specialised object diagram used for the conceptual modelling of user interface presentation.

Figure 2: An abstract presentation model for the SearchBookUI can be modelled as an object diagram of UML, as presented in (a). The same presentation can alternatively be modelled using the UMLi user interface diagram, as presented in (b).

3.1 User Interface Diagram Notation

The SearchBookUI abstract presentation modelled using the user interface diagram is presented in Figure 2(b). The user interface diagram is composed of six constructors that specify the role of each interaction object in a UI presentation.

• FreeContainers
• Containers
• Inputters
• Displayers
• Editors
• ActionInvokers, which are rendered as a pair of semi-overlapped triangles pointing to the right. They are responsible for receiving information from users in the form of events.

Graphically, Containers, Inputters, Displayers, Editors and ActionInvokers must be placed into a FreeContainer. Additionally, the overlapping of the borders of interaction objects is not allowed. In this case, the "internal" lines of Containers and FreeContainers, in terms of their two-dimensional representations, are ignored.

3.2 From an Abstract to a Concrete Presentation

The complexity of user interface presentation modelling can be reduced by working with a restricted set of abstract interaction objects, as specified by the user interface diagram notation. However, a presentation modelling approach as proposed by the UMLi user interface diagram is possible since form-based presentations respect the Abstract Presentation Pattern (APP) in Figure 3. Thus, a user interface presentation can be described as an interaction object acting as a FreeContainer. The APP also shows the relationships between the abstract interaction objects.

As we can see, the APP is environment-independent. In fact, a UI presentation described using the user interface diagram can be implemented by any object-oriented programming
language, using several toolkits. Widgets should be bound to the APP in order to generate a concrete presentation model. In this way, each widget should be classified as a FreeContainer, Container, Inputter, Displayer, Editor or ActionInvoker. The binding of widgets to the APP can be described using UML [3].

Widget binding is not sufficient to yield a final user interface implementation. In fact, UMLi is used for UI modelling and not for implementation. However, we believe that by integrating UI builders with UMLi-based CASE tools we can produce environments where UIs can be modelled and developed in a systematic way. For instance, UI builder facilities may be required for adjusting UI presentation layout and the colour, size and font of interaction objects.

Figure 3: The Abstract Presentation Pattern.

4 Activity Diagram Modelling

UML interaction diagrams (sequence and collaboration diagrams) are used for modelling how objects collaborate. Interaction diagrams, however, are limited in terms of workflow modelling since they are inherently sequential. Therefore, concurrent and repeatable workflows, and especially those workflows affected by users' decisions, are difficult to model and interpret from interaction diagrams.

Workflows are easily modelled and interpreted using activity diagrams. In fact, Statechart constructors provide a graphical representation for concurrent and branching workflows. However, it is not so natural to model object collaboration in activity diagrams. By improving the ability to describe object collaboration and common interaction behaviour, UMLi activity diagrams provide greater support for UI design than UML activity diagrams.

This section explains how activities can be modelled from use cases, how activity diagrams can be simplified in order to describe common interactive behaviours, and how interaction objects can be related to activity diagrams.

4.1 Use Cases and Use Case Scenarios

Use case diagrams are normally used to identify application functionalities.
However, use case diagrams may also be used to identify interaction activities. For instance, a «communicates» association between a use case and an actor indicates that the actor is interacting with the use case. Therefore, for example, in Figure 4 the CollectBook use case cannot identify an interaction activity since its association with Borrower is not a «communicates» association. Indeed, the CollectBook use case identifies a functionality not supported by the application.

Figure 4: A use case diagram for the BorrowBook use case with its component use cases.

Use case scenarios can be used for the elicitation of actions [12]. Indeed, actions are identified by scanning scenario descriptions looking for verbs. However, actions may be classified as Inputters, Displayers, Editors or ActionInvokers. For example, Figure 5 shows a scenario for the SearchBook use case in Figure 4. Three interaction objects can be identified in the scenario: ∇providing […] that specifies some query details; and displays […] its title, authors, year, or a combination of this information. Additionally, John can ∇specify […] the details of the matching books, if any.

Figure 5: A scenario for the SearchBook use case.

4.2 From Use Cases to Activities

UMLi assumes that a set of activity diagrams can describe possible user interactions since this set can describe possible application workflows from application entry points. Indeed, transitions in activity diagrams are inter-object transitions, such as those transitions between interaction and domain objects that can describe interaction behaviours. Based on this assumption, those activity diagrams that belong to this set of activity diagrams can be informally classified as interaction activity diagrams. Activities of interaction activity diagrams can also be informally classified as interaction activities. The difficulty with this classification, however, is that UML does not specify any constructor for modelling application entry points. Therefore, the process of identifying in which activity diagram
interactions start is unclear.

The initial interaction state constructor used for identifying an application's entry points in activity diagrams is introduced in UMLi. This constructor is rendered as a solid square, and it is used as the UML initial pseudo-state [9], except that it cannot be used within any state. A top level interaction activity diagram must contain at least one initial interaction state. Figure 6 shows a top level interaction activity diagram for a library application.

Figure 6: Modelling an activity diagram from use cases using UMLi.

Use cases that communicate directly with actors are considered candidate interaction activities in UMLi. Thus, we can define a top level interaction activity as an activity which is related to a candidate interaction activity. This relationship between a top level interaction activity and a candidate interaction activity is described by a realisation relationship, since activity diagrams can describe details about the behaviour of candidate interaction activities. The diagram in Figure 6 uses the UMLi activity diagram notation explained in the next section. However, we can clearly see in the diagram which top level interaction activity realises which candidate interaction activity. For instance, the SearchBook activity realises the SearchBook candidate interaction activity modelled in the use case diagram in Figure 4.

In terms of UI design, interaction objects elicited in scenarios are primitive interaction objects that must be contained by FreeContainers (see the APP in Figure 3). Further, these interaction objects should be contained by FreeContainers associated with top-level interaction activities, such as the SearchBookUI FreeContainer in Figure 6, for example. Therefore, interaction objects elicited from scenarios are initially contained by FreeContainers that are related to top-level interaction activities through the use of a «presents» object flow, as described in Section 4.4. In that way, UI elements can be imported from use case diagrams to
activity diagrams. For example, the interaction objects elicited in Figure 5 are initially contained by the SearchBookUI presented in Figure 6.

4.3 Selection States

Statechart constructors for modelling transitions are very powerful since they can be combined in several ways, producing many different compound transitions. In fact, simple transitions are suitable for relating activities that can be executed sequentially. A combination of transitions, forks and joins is suitable for relating activities that can be executed in parallel. A combination of transitions and branches is suitable for modelling the situation when only one among many activities is executed (choice behaviour). However, for the design of interactive applications there are situations where these constructors can be held to be rather low-level, leading to complex models. The following behaviours are common interactive application behaviours, but usually result in complex models.

• The order independent behaviour is presented in Figure 7(a). There, activities A and B are called selectable activities since they can be activated in either order on demand by users who are interacting with the application. Thus, every selectable activity should be executed once during the performance of an order independent behaviour. Further, users are responsible for selecting the execution order of selectable activities. An order independent behaviour should be composed of one or more selectable activities. An object with the execution history of each selectable activity (SelectHist in Figure 7(a)) is required for achieving such behaviour.

• The optional behaviour is presented in Figure 7(b). There, users can execute any selectable activity any number of times, including none. In this case, users should explicitly specify when they are finishing the Select activity. Like the order independent behaviour, the optional behaviour should be composed of one or more selectable activities.

• The repeatable behaviour is presented in Figure 7(c). Unlike the order
independent and optional behaviours, a repeatable behaviour should have only one associated activity. A is the associated activity of the repeatable behaviour in Figure 7. Further, a specific number of times that the associated activity can be executed should be specified. In the case of the diagram in Figure 7(c), this number is identified by the value of X.

An optional behaviour with one selectable activity can be used when a selectable activity can be executed an unspecified number of times.

Figure 7: The UML modelling of three common interaction application behaviours. An order independent behaviour is modelled in (a). An optional behaviour is modelled in (b). A repeatable behaviour is modelled in (c).

As optional, order independent and repeatable behaviours are common in interactive systems [5], UMLi proposes a simplified notation for them. The notation used for modelling an order independent behaviour is presented in Figure 8(a). There we can see an order independent selector, rendered as a circle overlying a plus sign, ⊕, connected to the activities A and B by return transitions, rendered as solid lines with a single arrow at the selection state end and a double arrow at the selectable activity end. The order independent selector identifies an order independent selection state. The double arrow end of a return transition identifies a selectable activity of the selection state. The distinction between the selection state and its selectable activities is required when selection states are also selectable activities. Furthermore, a return transition is equivalent to a pair of Statechart transitions: one single transition connecting the selection state to the selectable activity, and one non-guarded transition connecting the selectable activity to the selection state, as previously modelled in Figure 7(a). In fact, the order independent selection state notation can be considered as a macro-notation for the behaviour described in Figure 7(a).

The notations for modelling optional and
repeatable behaviours are similar, in terms of structure, to the order independent selection state. The main difference between the notations of selection states is the symbols used for their selectors. The optional selector, which identifies an optional selection state, is rendered as a circle overlaying a minus sign, ⊖. The repeatable selector, which identifies a repeatable selection state, is rendered as a circle overlaying a times sign, ⊗. The repeatable selector additionally requires a REP constraint, as shown in Figure 8(c), used for specifying the number of times that the associated activity should be repeated. The value X in this REP constraint is the X parameter in Figure 7(c). The notations presented in Figures 8(b) and 8(c) can be considered as macro-notations for the notation modelling the behaviours presented in Figures 7(b) and 7(c).

4.4 Interaction Object Behaviour

Objects are related to activities using object flows. Object flows are basically used for indicating which objects are related to each activity, and whether the objects are generated or used by the related activities. Object flows, however, do not describe the behaviour of related objects within their associated activities. Activities that are action states and that have object flows connected to them can describe the behaviour of related objects since they can describe how methods may be invoked on these objects. Thus, a complete decomposition of activities into action states may be required to achieve such object behaviour description. However, in the context of interaction objects, there are common functions that do not need to be modelled in detail to be understood. In fact, UMLi provides five specialised object flows for interaction objects that can describe these common functions that an interaction object can have within a related activity.
These object flows are modelled as stereotyped object flows and explained as follows.

• An «interacts» object flow relates a primitive interaction object to an action state, which is a primitive activity. Further, the object flow indicates that the action state involved in the object flow is responsible for an interaction between a user and the application. This can be an interaction where the user is invoking an object operation or visualising the result of an object operation. The action states in the SpecifyBookDetails activity, Figure 9, are examples of Inputters assigning values to some attributes of the SearchQuery domain object. The Results in Figure 9 is an example of a Displayer for visualising the result of SearchQuery.SearchBook(). As can be observed, there are two abstract operations specified in the APP (Figure 3) that have been used in conjunction with these interaction objects. The setValue() operation is used by Displayers for setting the values that are going to be presented to the users. The getValue() operation is used by Inputters for passing the value obtained from the users to domain objects.

Figure 9: The SearchBook activity.

• A «presents» object flow relates a FreeContainer to an activity. It specifies that the FreeContainer should be visible while the activity is active. Therefore, the invocation of the abstract setVisible() operation of the FreeContainer is entirely transparent for the developers. In Figure 9 the SearchBookUI FreeContainer and its contents are visible while the SearchBook activity is active.

• A «confirms» object flow relates an ActionInvoker to a selection state.
It specifies that the selection state has finished normally. In Figure 9 the event associated with the "Search" […] directly related to it. The optional selection state in the SpecifyBookDetails relies on the […] SpecifyDetails […] a user is also confirming the optional selection state in SpecifyBookDetails.

• A «cancels» object flow relates an ActionInvoker to any composite activity or selection state. It specifies that the activity or selection state has not finished normally. The flow of control should be re-routed to a previous state. The […]

[…] interaction objects of abstract use cases are also very abstract, and may not be useful for exporting to activity diagrams. Therefore, the UMLi method suggests that interaction objects can be elicited from less abstract use cases.

Step 3 Candidate interaction activity identification. Candidate interaction activities are use cases that communicate directly with actors, as described in Section 4.1.

Step 4 Interaction activity modelling. A top level interaction activity diagram can be designed from identified candidate interaction activities. A top level interaction activity diagram must contain at least one initial interaction state. Figure 6 shows a top level interaction activity diagram for the Library case study.

Top level interaction activities may occasionally be grouped into more abstract interaction activities. In Figure 6, many top level interaction activities are grouped by the SelectFunction activity. In fact, SelectFunction was created to gather these top level interaction activities within a top level interaction activity diagram. However, the top level interaction activities, and not the SelectFunction activity, remain responsible for modelling some of the major functionalities of the application. The process of moving from candidate interaction activities to top level interaction activities is described in Section 4.2.
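As a rough illustration of Step 3, the identification of candidate interaction activities can be read as a filter over the associations of a use case diagram. The sketch below is in Python and is not part of UMLi itself: the `Association` record and the `candidate_interaction_activities` function are hypothetical names introduced only for this example, and the diagram data is limited to the SearchBook and CollectBook use cases and the Borrower actor mentioned in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Association:
    """Hypothetical record for one actor/use-case association in a use case diagram."""
    actor: str
    use_case: str
    stereotype: str = ""  # "communicates" when the actor interacts with the use case


def candidate_interaction_activities(use_cases, associations):
    """Keep the use cases that have at least one communicates association with an actor."""
    communicating = {
        a.use_case for a in associations if a.stereotype == "communicates"
    }
    # Preserve the order in which the use cases were listed.
    return [uc for uc in use_cases if uc in communicating]


# Assumed fragment of the diagram in Figure 4: Borrower communicates with
# SearchBook, while its association with CollectBook is not a communicates one.
use_cases = ["SearchBook", "CollectBook"]
associations = [
    Association("Borrower", "SearchBook", "communicates"),
    Association("Borrower", "CollectBook"),
]

candidates = candidate_interaction_activities(use_cases, associations)
print(candidates)
```

Under these assumptions only SearchBook is kept, which matches the text: CollectBook identifies a functionality that the application does not support, so it yields no top level interaction activity.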
Step 5 Interaction activity refining. Activity diagrams can be refined, decomposing activities into action states and specifying object flows.

Activities can be decomposed into sub-activities. The activity decomposition can continue until the action states (leaf activities) are reached. For instance, Figure 9 presents a decomposition of the SearchBook activity introduced in Figure 6. The use of «interacts» object flows relating interaction objects to action states indicates the end of this step.

Step 6 User interface modelling. User interface diagrams can be refined to support the activity diagrams.

User interface modelling should happen simultaneously with Step 5 in order to provide the activity diagrams with the interaction objects required for describing action states. There are two mechanisms that allow UI designers to refine a conceptual UI presentation model.

• The inclusion of complementary interaction objects allows designers to improve the user's interaction with the application.
• The grouping mechanism allows UI designers to create groups of interaction objects using Containers.

At the end of this step it is expected that we have a conceptual model of the user interface. The interaction objects required for modelling the user interface were identified and grouped into Containers and FreeContainers. Moreover, the interaction objects identified were related to domain objects using action states and UMLi flow objects.

Step 7 Concrete presentation modelling. Concrete interaction objects can be bound to abstract interaction objects.

The concrete presentation modelling begins with the binding of concrete interaction objects (widgets) to the abstract interaction objects that are specified by the APP. Indeed, the APP is flexible enough to map many widgets to each abstract interaction object.

Step 8 Concrete presentation refinement. User interface builders can be used for refining user interface presentations.

The widget binding alone is not enough for modelling a concrete user interface presentation. Ergonomic rules presented
as UI design guidelines can be used to automate the generation of the user interface presentation. Otherwise, the concrete presentation model can be customised manually, for example, by using direct manipulation.

6 Conclusions

UMLi is a UML extension for modelling interactive applications. UMLi makes extensive use of activity diagrams during the design of interactive applications. Well-established links between use case diagrams and activity diagrams explain how user requirements identified during requirements analysis are described in the application design. The UMLi user interface diagram introduced for modelling abstract user interface presentations simplifies the modelling of the use of visual components (widgets). Additionally, the UMLi activity diagram notation provides a way of modelling the relationship between visual components of the user interface and domain objects. Finally, the use of selection states in activity diagrams provides a simplification for modelling interactive systems.

The reasoning behind the creation of each new UMLi constructor and constraint has been presented throughout this paper. The UMLi notation was entirely modelled in accordance with the UMLi meta-model specifications [2]. This demonstrates that UMLi respects its principle of being a non-intrusive extension of UML, since the UMLi meta-model does not replace the functionalities of any UML constructor [2]. Moreover, the presented case study indicates that UMLi may be an appropriate approach for improving UML's support for UI design. In fact, the UIs of the presented case study were modelled using fewer and simpler diagrams than using standard UML diagrams only, as described in [3].

As the UMLi meta-model does not modify the semantics of the UML meta-model, UMLi is going to be implemented as a plug-in feature of the ARGO/UML case tool. This implementation of UMLi will allow further UMLi evaluations using more complex case studies.

Acknowledgements. The first author is sponsored by
Conselho Nacional de Desenvolvimento Científico e Tecnológico - CNPq (Brazil) – Grant 200153/98-6.
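
As an illustration of Step 7 above, where concrete widgets are bound to abstract interaction objects and many widgets may map to one abstract object, the following is a minimal sketch only. The class name `AbstractInteractionObject`, the kind label "Inputter", the object name `titleQuery` and the widget names are assumptions made for this example; they are not taken from the UMLi meta-model or from any tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AbstractInteractionObject:
    """Hypothetical stand-in for an abstract interaction object."""
    name: str
    kind: str  # illustrative category label, e.g. "Inputter" (assumed)
    widgets: List[str] = field(default_factory=list)

    def bind(self, widget: str) -> None:
        # Many widgets may be bound to one abstract object;
        # binding the same widget twice has no further effect.
        if widget not in self.widgets:
            self.widgets.append(widget)


# Hypothetical abstract object for entering a book title in a search form.
title_query = AbstractInteractionObject(name="titleQuery", kind="Inputter")
title_query.bind("TextField")
title_query.bind("ComboBox")
title_query.bind("TextField")  # duplicate binding is ignored

print(title_query.widgets)
```

Keeping the candidate widgets in a list on the abstract object leaves the final widget choice open, which is the point at which Step 8 (refinement by guidelines or by hand) would apply.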

Research on Teaching Innovation of Art Design Based on Virtual Reality Technology

Su Zhuan, Sun Wen
Department of Art and Design, GuangDong University of Science & Technology
Nancheng District, Dongguan, Guangdong

Abstract: The emergence of virtual reality technology has renewed the art design industry and greatly promoted its development, and it will bring revolutionary changes to art design. Starting from the definition and characteristics of virtual reality technology and an analysis of its role in art design teaching, this paper puts forward concrete methods for applying virtual reality technology in art design teaching.

Keywords: Teaching innovation; Art design; Virtual reality technology

I. INTRODUCTION

Virtual Reality (VR) technology simulates an environment by means of electronic devices such as computers, and allows the user to be “placed” in it through different sensing devices and to interact naturally with that environment. At present, virtual reality technology has been applied to education and teaching activities, which has promoted the improvement of modern teaching quality and the development of education. The application of virtual reality technology in art design teaching can vividly express the teaching content and construct a good teaching space in a real and effective way, thus promoting students' mastery of professional knowledge and skills, improving teaching quality and optimizing teaching effects [1-2].

In recent years, with the continuous updating of China's educational concepts, the teaching model of the new century has gradually changed from the traditional indoctrination or test-oriented mode to the modern teaching mode, that is, teaching that places more emphasis on students' methods of learning and is led by thinking. In particular, more emphasis is placed on the cultivation of students' innovative abilities [3-4].
At the same time, it is the top priority of the current education reform to provide students with a personalized, intelligent and modern teaching environment that integrates information across time and space, and to improve students' ability to judge, analyze and solve problems. For art design teaching, the introduction of virtual reality technology can effectively stimulate students' senses, help students to absorb more design knowledge and content, and promote the quality of art design teaching and talent training [5]. Such innovation has very important educational value and significance.

II. THE BASIC CHARACTERISTICS OF VIRTUAL REALITY TECHNOLOGY

"Virtual Reality (VR)" is a computer simulation technology that makes realistic simulations of the real world in a computer. By using auxiliary technologies such as sensor technology, users can have an immersive feeling in the virtual space, interact with the objects of the virtual world, get natural feedback, and develop ideas. Therefore, virtual reality can also be simply understood as a technical means for people to interact with computer-generated virtual environments. VR technology has been recognized as one of the important developing disciplines of the 21st century and one of the important technologies that affect people's lives. The application of this technology improves the way people use computers to process complex engineering data, especially when large amounts of abstract data need to be processed.

Virtual reality technology is a comprehensive and practical technology. It integrates computer technology, simulation technology, sensing technology, measurement technology and microelectronics technology to form a three-dimensional realistic virtual environment. It has been widely used in various fields.
Using sensing and input devices, the user enters a virtual space and becomes a member of it, interacting in real time, obtaining relevant information while perceiving the virtual world, and finally experiencing the sense of being present in that environment.

A. Immersion

Virtual reality technology is based on human visual, auditory and tactile characteristics. Three-dimensional images are generated by computers and other electronic devices, and users wear helmet-mounted displays, data gloves and other devices to immerse themselves in a virtual environment for interactive experience. Using virtual reality technology, users can completely immerse themselves in the virtual world and feel the physical and psychological impact of a realistic virtual environment.

International Conference on Management, Education Technology and Economics (ICMETE 2019)

B. Interactivity

Human-computer interaction here is a natural interaction between the user and the system through sensing devices such as special helmets and data gloves. The interactive nature of virtual reality technology means that users can examine or manipulate objects in a virtual environment through their own language and body movements. This is possible because the computer can adjust the image and sound presented by the system according to the user's hand and eye movements, language and body movements.

C. Conceived

Virtual reality technology expands the range of people's awareness so that people can fully imagine.
This is because virtual reality technology can not only reproduce the real environment, but also create environments that people can arbitrarily conceive, that do not objectively exist, or that are even impossible.

III. THE ROLE OF VIRTUAL REALITY TECHNOLOGY IN ART DESIGN TEACHING

Making the abstract concrete and improving professional knowledge. In the process of art design teaching, teachers can use virtual reality technology to reproduce processes of movement in the real world that cannot be observed by the naked eye. The abstract becomes vivid, intuitive and specific, which provides students with rich learning materials and improves their ability to solve practical problems. Due to its practicability and adaptability, virtual reality technology has been widely used, with great results, in many aspects of art design, whether frame design, graphic design, text design, space design, structural design or multimedia applications. Teachers can make full use of this advantage to develop a complete and creative teaching plan, combine theory and practice, and provide students with an immersive experience, which improves the demonstration of professional art design knowledge. Therefore, the application of virtual reality technology to art design teaching improves the teaching quality and teaching effect of art design on the one hand, and on the other hand enhances students' understanding and mastery of professional knowledge.

Enhancing the interaction between teachers and students, and promoting a new type of teaching cooperation mode. Teachers use virtual reality technology in the classroom to give full play to their own initiative and guide students to conduct interactive learning according to their own needs.
Students cooperate with each other on problems and discuss them together, which cultivates their initiative and enthusiasm for learning. Teachers can guide students to cooperate with each other in a virtual space to complete the design work that the teacher assigns, and help students to take part, intuitively and visually, in the natural phenomena or the development of things simulated in the virtual environment, which deepens their understanding and mastery of theoretical knowledge and improves their thinking ability and innovation ability. In addition, teachers can cooperate with students in the virtual environment simulated by virtual reality technology, which can fully mobilize the enthusiasm of students and help teachers and students learn together harmoniously in that environment, thus contributing to a new type of teaching cooperation model.

Stimulating students' creative interest and helping them grasp the creative connotation. From the perspective of art design, it is very important to maximize the design creativity of students. However, this creativity needs to be expressed in a certain way, and virtual reality technology provides such a possibility. When teachers use virtual reality technology in art design teaching, the vivid and intuitive simulation of various objects helps students to escape from inherent space and time constraints and to rely fully on the ideas in their own minds. Through creative, virtual reproduction, students can modify and improve their design ideas step by step and find a visual design effect that suits them, and they gain a deeper and more realistic art design experience.

IV. THE SPECIFIC METHOD OF VIRTUAL REALITY TECHNOLOGY APPLIED IN ART DESIGN TEACHING

The art design teaching method using virtual reality technology has strong flexibility, practicality and creativity. In the process of art design teaching, a virtual environment allows various other teaching methods to follow. In accordance with the principles of typicality, relevance, authenticity, specificity and vividness in art teaching, teachers can use the demonstration teaching method, the scenario simulation teaching method, and the computer simulation teaching method to carry out teaching activities.

A. Demonstration Teaching

The demonstration teaching method refers to a teaching mode in which the teacher uses multimedia technology to demonstrate the teaching content and sort out the difficult points of knowledge. It enables students to perceive the laws behind theoretical knowledge, deepens their understanding and mastery of knowledge points, gives them a clear understanding of how art design knowledge develops, helps them construct a scientific and systematic art knowledge structure, and continuously improves their artistic design skills.

B. Scenario Simulation

The scenario simulation teaching method reproduces natural phenomena or the movement of things through simulation, allowing students to change from onlookers to participants. It helps students understand the content of art design teaching, so that they can master knowledge and improve their abilities and learning skills in a short time.
This teaching method can effectively break through the bottleneck of the traditional teaching mode. Through the simulation of theoretical knowledge, students can understand the knowledge and content learned more intuitively and thoroughly, which improves the effectiveness of art design teaching.

C. Computer Simulation

The computer simulation teaching method refers to a teaching method in which teachers use various elements such as words, images and sounds to explain information related to things or phenomena. It has the advantages of high teaching efficiency, a large amount of information, and strong student participation, and it is a very important teaching method in modern teaching. Take a course on ethnic art as an example. It is well known that there are fifty-six ethnic groups in China, and their art is highly diverse. It is impossible to take students to visit every ethnic group as part of classroom teaching. However, teachers can use the computer simulation teaching method to display these colorful, regional ethnic customs and religious beliefs, helping students to understand ethnic art as fully as possible. Multimedia courseware produced with virtual reality technology gives students an immersive experience in which to appreciate these artistic features and expressions.

V. THE DEVELOPMENT DIRECTION OF VIRTUAL REALITY TECHNOLOGY IN ART DESIGN TEACHING

A. Virtual Design Direction

Schools can adopt a new type of teaching method for art design students through virtual reality technology, letting students design things in the virtual world according to their own ideas. For example, automotive design students can avoid the situation in which a model is difficult to change once it has been built.
By changing and revising their designs in virtual reality, students gain both flexibility and accuracy. This avoids the time-consuming and laborious work of the previous design process.

B. Virtual Experiment Direction

Virtual reality technology can also be used to create virtual laboratories such as structural strength laboratories and aerodynamic laboratories. Students can carry out experiments in virtual laboratories in a timely way to better consolidate what they have learned and combine theory with practice.

C. Virtual Training Direction

The interactivity and specificity of virtual reality technology can provide students with a suitable operating environment in which they are immersed in the virtual world and can be trained in various skills through concrete interaction with objective things. This improves their professionalism in the design process and stimulates their capacity for innovation.

VI. REALISTIC APPLICATION OF VIRTUAL REALITY TECHNOLOGY IN THE FIELD OF ENVIRONMENTAL ART DESIGN

First, the application of virtual reality technology can compensate for defects in the field of art design. At the present stage, art design work in China is often constrained by practical problems. For example, insufficient scale and insufficient funds hinder art design work to a certain degree. With virtual reality technology, however, art designers can simulate various types of scenes, so that these problems can be properly addressed.

Second, potential risks can be avoided through the application of virtual reality technology. At this stage, in the field of art design in China, various practical conditions give rise to various types of dangerous situations.
In order to ensure personal safety, designers generally do not enter the real scene, which makes it difficult to form a personal experience of the art environment. With virtual reality technology, environments that people have no way to visit can be simulated, so that designers can operate in them, avoiding potential dangers while still forming a personal experience.

Third, the application of virtual reality technology can break down restrictions of space and time. Virtual reality technology surpasses the limitations of time and space. It can simulate any situation, from very large cosmic objects to very tiny bacteria, from hundreds of millions of years ago to today. Designers can explore the environment simulated by virtual reality technology. For example, in the study of the dinosaur era, dinosaurs disappeared from the earth long ago, so it is difficult for people to observe them directly, but with virtual reality technology people can simulate the era in which dinosaurs lived and carry out exploration in that environment.

VII. IMPACT OF VIRTUAL REALITY TECHNOLOGY ON LABORATORY CONSTRUCTION

Market-oriented employment pressure and the diversification of educational choices have made colleges and universities pay more and more attention to the coordination between their training objectives and the needs of the labor market. At the press conference for the 2009 Greater China VR League Selection Competition on February 25, 2009, Zhao Heng, global vice president of Dassault Systèmes in France, said in an interview: "One of the development directions of virtual reality is to provide consumers with a perceived environment. There is a large demand for talent in the field of user experience design."
Huang Xinyuan, Dean of the School of Information, Beijing Forestry University, pointed out: "In the field of architectural design, the application trend of virtual reality is the realization of interaction."

As an important practice base for college students, the laboratory is one of the construction projects to which universities attach great importance. At present, the environmental art design laboratories built by universities mainly include digital media laboratories, model making laboratories, photography laboratories, materials and construction technology laboratories, and ceramic art laboratories. The application prospects of virtual reality technology and the market-oriented training goal put forward new requirements for the construction of environmental art design laboratories at this stage. In addition to traditional laboratories, universities can build virtual reality laboratories according to actual conditions, such as ring screen projection laboratories and curtain city planning exhibition halls. They can also install VRP-Builder, Converse3D, WebMax and other virtual reality production software in the computer room, the digital media laboratory and other laboratories for teaching.

Using virtual reality technology, we can break the limitations of space and time. Students can do all kinds of experiments without leaving home and gain the same experience as in real experiments, thus enriching their perceptual knowledge and deepening their understanding of the teaching content.

VIII. CONCLUSION

In summary, the use of virtual reality technology in art design teaching can effectively enhance the intuitiveness and simulation of teaching content, and help students master the more abstract art theory knowledge better and faster.
At the same time, the application of virtual reality technology in art design teaching can greatly enrich the teaching content, promote the efficient integration of art and technology, facilitate students' understanding and mastery of theoretical knowledge, and improve their theoretical and practical abilities, ensuring the training of students' practical operating skills, so as to achieve the optimization of the teaching process, the improvement of teaching quality and the ultimate teaching objective of training practical talent.

ACKNOWLEDGMENT

Project name: Research and Practice on Teaching Mode Reform of Interior Design Based on Virtual Reality Technology, which is the national education science innovation research project in 2018, Project No.: JKS82916.

REFERENCES

[1] Wang Zhaofeng. Teaching Research on Virtual Reality Design Course for College Students Majoring in Art Design [J]. Science and Technology Information, 2011 (07): 132, 397.
[2] Cao Yu. Let the design "moving" - the application of virtual reality technology in art design teaching [J]. Pictorial, 2006 (04): 43-44.
[3] Gao Fei. The Application of Virtual Reality in the Field of Art Design: Taking Interactive Display and Interaction Design as an Example [J]. Art Grand View (Art and Design), 2013 (03): 100.
[4] Chen Ying. When Chinese movies fall in love with national instrumental music - On the film music of the art film "Three Monks" [J]. Grand Stage, 2010 (03): 112-113.
[5] Zhang Xiaofei. The Application of Virtual Reality Art in Art Design Major [J]. Big Stage, 2012 (06): 54.

How to Translate "UI"

Do you know how to translate "UI"? Let's learn together!

Translation of UI: User Interface (用户界面)

Example sentences with translations:

1. Add code manually to handle UI events.
手工加代码处理UI事件.
2. A color picker is an example of a UI type editor.
颜色选择器是UI类型编辑器的一个示例.
3. At the bottom of the window are several UI elements.
窗口的底部有一些UI元素.
4. Application menus are another important part of an application's UI.
应用程序菜单是另外应用程序UI的另外一个重要的部分.
5. Views are the components that display the application's user interface (UI).
视图是显示应用程序用户界面 (UI) 的组件.
6. A possible component of any UI that allows users to select colors.
任何UI的可能组件都能允许用户选择颜色.
7. How should the UI code be structured to meet design requirements?
如何让UI代码与设计的需求相融合?
8. A Service keeps the music going even when the UI has completed.
服务甚至在UI已经结束后可以继续执行.
9. The UI is very intuitive and easy to use.
这个UI是直观的和容易使用的.
10. It handles requests to edit a document by creating an app UI.
它通过创建一个应用程序UI来处理编辑文档这类请求.
11. The virtual container service is used to customize the UI virtualization behavior.
此虚拟容器服务用于自定义UI虚拟化行为.
12. Earnings during this time period are used to establish the UI claim.
此时间期内的收入用于建立UI索赔.
13. What are the implications for the architecture of any UI decisions?
任何一个UI的决定都有哪些含义?
14. Fixed UI exploit allowing ACU duplication.
固定的UI功绩允许ACU副本.
15. Can all of the UI requirements be met?
所有的UI需求都实现了吗?

Intelligent Robots Help with Learning English (Essay)

In the modern era, the integration of technology into education has become increasingly prevalent, and one of the most exciting developments is the use of intelligent robots to assist in learning English. These robots, powered by advanced artificial intelligence, are designed to enhance the learning experience by providing personalized assistance, engaging content, and interactive learning opportunities.

One of the key benefits of using intelligent robots in English learning is their ability to offer instant feedback. Students can practice speaking and writing with the robot, which can then provide corrections and suggestions in real time. This immediate feedback loop is invaluable for learners who wish to improve their language skills quickly and effectively.

Moreover, intelligent robots can cater to the diverse learning needs of students. They can adjust the complexity of the language used based on the student's proficiency level, ensuring that the material is always challenging yet accessible. This personalized approach helps to keep students motivated and engaged in their learning journey.

Another advantage is the use of interactive games and activities. Intelligent robots can create a fun and immersive learning environment that makes the process of learning English less of a chore and more of an enjoyable experience. Through games, students can learn new vocabulary, practice grammar, and improve their listening skills in a way that is both entertaining and educational.

Furthermore, the use of intelligent robots can help to bridge the gap between classroom learning and real-world application. Robots can simulate real-life scenarios, allowing students to practice their English in a variety of contexts. This practical application of language skills is crucial for developing fluency and confidence.

Lastly, intelligent robots can be a valuable resource for students who may feel shy or anxious about speaking English in a traditional classroom setting.
With a robot, students can practice speaking without fear of judgment, which can help to build their confidence and improve their language skills.

In conclusion, the use of intelligent robots in learning English is a promising development in the field of education. They offer personalized assistance, instant feedback, interactive learning, and practical application, all of which contribute to a more effective and enjoyable learning experience. As technology continues to advance, it is likely that the role of intelligent robots in education will only grow, providing students with even more innovative ways to learn and master the English language.

Part 1

In the rapidly evolving field of education, the traditional methods of teaching English have been supplemented and sometimes replaced by innovative approaches that leverage technology and emphasize student-centered learning. This article outlines a comprehensive English teaching practice method that integrates technology and student-centered learning to enhance the learning experience for students.

I. Introduction

The English language is a global lingua franca, and the ability to communicate effectively in English is essential in today's interconnected world. However, teaching English effectively requires more than just imparting grammatical rules and vocabulary; it involves engaging students in meaningful activities that foster language acquisition and critical thinking skills. This teaching practice method aims to achieve these goals by incorporating the following key components:

1. Technology integration
2. Student-centered learning
3. Interactive and collaborative activities
4. Continuous assessment and feedback

II. Technology Integration

The integration of technology in English teaching can provide numerous benefits, including increased engagement, personalized learning, and access to a wealth of resources. Here are some ways to integrate technology into English teaching:

1. Interactive Whiteboards and Projectors: Use interactive whiteboards and projectors to display lessons, videos, and other multimedia content. This allows for dynamic and interactive lessons that keep students engaged.
2. Educational Software and Apps: Utilize educational software and apps that cater to different learning styles and levels of proficiency. Examples include language learning apps like Duolingo, grammar and vocabulary practice software, and online dictionaries.
3. Online Learning Platforms: Create or use existing online learning platforms that provide structured lessons, quizzes, and assignments.
These platforms can also facilitate communication and collaboration among students and teachers.
4. Social Media and Communication Tools: Encourage students to use social media and communication tools like WhatsApp or Slack for language practice, group projects, and peer feedback.
5. Virtual Reality (VR) and Augmented Reality (AR): Explore the use of VR and AR to create immersive language learning experiences. For example, students can practice English by interacting with virtual environments or by overlaying English language content onto real-world objects.

III. Student-Centered Learning

Student-centered learning shifts the focus from the teacher to the student, allowing learners to take an active role in their education. Here are some strategies to implement student-centered learning in English classes:

1. Project-Based Learning: Assign projects that require students to research, plan, and present information. This encourages students to use English in real-life contexts and fosters critical thinking and problem-solving skills.
2. Flipped Classroom: Use the flipped classroom model, where students watch instructional videos or complete readings at home and use class time for activities and discussions. This allows for more personalized learning and more time for interactive tasks.
3. Group Work and Peer Collaboration: Divide students into groups and assign them tasks that require collaboration. This promotes communication skills, teamwork, and mutual support among students.
4. Reflective Learning: Encourage students to reflect on their learning experiences through journal entries, discussion, or presentations. This helps students to internalize their learning and set personal goals.
5. Choice and Autonomy: Give students a choice in their learning activities, such as selecting topics for presentations or projects, or deciding on the type of assessment they prefer. This empowers students and increases their motivation.

IV. Interactive and Collaborative Activities

Interactive and collaborative activities are essential for creating a dynamic and engaging learning environment. Here are some examples:

1. Role-Playing and Simulations: Use role-playing activities to simulate real-life situations and encourage students to practice English conversationally. Simulations can also be used to teach grammar and vocabulary in context.
2. Game-Based Learning: Incorporate educational games and activities that are both fun and effective in teaching English. Examples include word searches, crosswords, and language puzzles.
3. Discussion and Debate: Organize class discussions and debates on topics of interest to the students. This helps students to develop their critical thinking and public speaking skills.
4. Language Labs: Utilize language labs where students can practice listening, speaking, and pronunciation in a controlled environment.

V. Continuous Assessment and Feedback

Continuous assessment and feedback are crucial for monitoring student progress and providing timely guidance. Here are some strategies for effective assessment and feedback:

1. Formative Assessment: Use formative assessments, such as quizzes, class discussions, and peer reviews, to gauge student understanding and provide immediate feedback.
2. Summative Assessment: Administer summative assessments, such as exams and presentations, to evaluate student learning at the end of a unit or course.
3. Self-Assessment and Peer Assessment: Encourage students to assess their own work and provide feedback to their peers. This promotes metacognition and collaborative learning.
4. Constructive Feedback: Provide specific, constructive feedback that focuses on strengths and areas for improvement. Feedback should be supportive and encourage students to take ownership of their learning.

VI. Conclusion

Incorporating technology and student-centered learning into English teaching can significantly enhance the learning experience for students.
By leveraging technology, promoting student-centered approaches, engaging students in interactive activities, and providing continuous assessment and feedback, teachers can create a dynamic and effective learning environment that prepares students for success in the globalized world.

Part 2

Introduction:

The field of English language teaching (ELT) is constantly evolving, with new methodologies and techniques being introduced to enhance the learning experience. This paper proposes an effective methodology for English teaching practice that combines various teaching strategies and techniques to cater to the diverse needs of learners. The methodology focuses on student-centered learning, interactive activities, and the integration of technology, ensuring that students not only acquire language skills but also develop critical thinking and cultural awareness.

I. Student-Centered Learning

1. Needs Analysis:
Before implementing any teaching methodology, it is essential to conduct a needs analysis to understand the specific requirements and goals of the students. This involves assessing their current level of English proficiency, identifying their strengths and weaknesses, and determining their learning objectives.

2. Personalized Learning Plans:
Based on the needs analysis, develop personalized learning plans for each student. These plans should outline the learning goals, activities, and resources tailored to meet the individual needs of each student.

3. Active Participation:
Encourage active participation in the classroom by involving students in discussions, group activities, and role-plays. This approach promotes engagement, motivation, and a deeper understanding of the language.

II. Interactive Activities

1. Pair and Group Work:
Utilize pair and group work to enhance communication skills and collaboration. Assign tasks that require students to work together, such as role-plays, debates, and problem-solving activities.
This fosters teamwork and encourages students to share their thoughts and ideas.

2. Games and Simulations:
Integrate games and simulations into the teaching process to make learning more enjoyable and memorable. Games such as "Pictionary," "Jeopardy," and "Simon Says" can help reinforce vocabulary, grammar, and pronunciation skills.

3. Project-Based Learning:
Implement project-based learning activities that require students to research, plan, and present information. This approach promotes critical thinking, research skills, and the application of language in real-life situations.

III. Technology Integration

1. Online Resources:
Utilize online resources such as educational websites, e-books, and interactive learning platforms to provide additional support and practice opportunities for students. These resources can be accessed both inside and outside the classroom, allowing for flexible and self-paced learning.

2. Digital Tools:
Incorporate digital tools such as presentation software, video conferencing, and collaborative platforms to facilitate communication and collaboration. These tools can enhance the learning experience by providing interactive and engaging activities.

3. Mobile Learning:
Encourage mobile learning by developing mobile apps and websites that offer language practice exercises and interactive lessons. This allows students to learn anytime, anywhere, using their smartphones or tablets.

IV. Assessment and Feedback

1. Formative and Summative Assessment:
Implement a balanced assessment strategy that includes both formative and summative assessments. Formative assessments, such as quizzes, class discussions, and peer evaluations, provide ongoing feedback to students and teachers. Summative assessments, such as exams and projects, measure the overall progress and achievement of the students.

2. Constructive Feedback:
Provide constructive feedback to students, focusing on their strengths and areas for improvement.
Feedback should be specific, actionable, and encouraging, helping students to identify their learning goals and develop their skills.

3. Self-assessment and Reflection: Encourage students to engage in self-assessment and reflection by setting personal learning goals and evaluating their progress. This promotes metacognition and helps students become more aware of their learning process.

Conclusion:
This methodology for English language teaching practice combines student-centered learning, interactive activities, and technology integration to create a dynamic and engaging learning environment. By focusing on the needs of the students, promoting active participation, and utilizing innovative teaching techniques, this methodology aims to equip learners with the language skills, critical thinking abilities, and cultural awareness needed to succeed in the globalized world.

Part 3

Abstract: With the continuous development of English education in China, the traditional English teaching model can no longer meet the demands placed on English teaching in the new era.

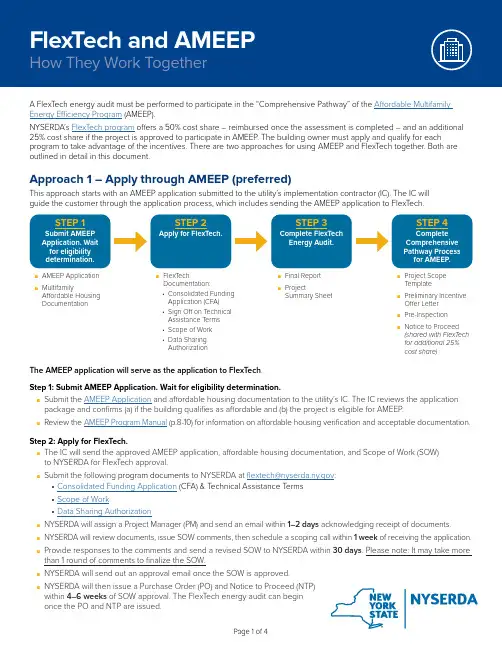

A FlexTech energy audit must be performed to participate in the “Comprehensive Pathway” of the Affordable Multifamily Energy Efficiency Program (AMEEP).

NYSERDA’s FlexTech program offers a 50% cost share, reimbursed once the assessment is completed, and an additional 25% cost share if the project is approved to participate in AMEEP. The building owner must apply and qualify for each program to take advantage of the incentives. There are two approaches for using AMEEP and FlexTech together. Both are outlined in detail in this document.

Approach 1 – Apply through AMEEP (preferred)

This approach starts with an AMEEP application submitted to the utility’s implementation contractor (IC). The IC will guide the customer through the application process, which includes sending the AMEEP application to FlexTech.

■ AMEEP Application
■ Multifamily Affordable Housing Documentation
■ FlexTech Documentation:
• Consolidated Funding Application (CFA)
• Sign Off on Technical Assistance Terms
• Scope of Work
• Data Sharing Authorization
■ Final Report
■ Project Summary Sheet
■ Project Scope Template
■ Preliminary Incentive Offer Letter
■ Pre-Inspection
■ Notice to Proceed (shared with FlexTech for additional 25% cost share)

The AMEEP application will serve as the application to FlexTech.

Step 1: Submit AMEEP Application. Wait for eligibility determination.
■ Submit the AMEEP Application and affordable housing documentation to the utility’s IC.
The IC reviews the application package and confirms whether (a) the building qualifies as affordable and (b) the project is eligible for AMEEP.
■ Review the AMEEP Program Manual (pp. 8–10) for information on affordable housing verification and acceptable documentation.

Step 2: Apply for FlexTech.
■ The IC will send the approved AMEEP application, affordable housing documentation, and Scope of Work (SOW) to NYSERDA for FlexTech approval.
■ Submit the following program documents to NYSERDA at *******************.gov:
• Consolidated Funding Application (CFA) & Technical Assistance Terms
• Scope of Work
• Data Sharing Authorization
■ NYSERDA will assign a Project Manager (PM) and send an email within 1–2 days acknowledging receipt of documents.
■ NYSERDA will review documents, issue SOW comments, then schedule a scoping call within 1 week of receiving the application.
■ Provide responses to the comments and send a revised SOW to NYSERDA within 30 days. Please note: It may take more than 1 round of comments to finalize the SOW.
■ NYSERDA will send out an approval email once the SOW is approved.
■ NYSERDA will then issue a Purchase Order (PO) and Notice to Proceed (NTP) within 4–6 weeks of SOW approval. The FlexTech energy audit can begin once the PO and NTP are issued.

Step 3: Complete FlexTech Energy Audit.
■ After completing the FlexTech audit, complete the two deliverables: a Draft Report per Report Guidelines and a Project Summary Sheet (PSS).
■ Submit both deliverables to NYSERDA at *******************.gov according to the agreed upon project timeline in the SOW.
■ NYSERDA will perform a technical review of the deliverables and issue Draft Report comments within 2–3 weeks.
■ Provide responses to the comments and submit a revised Draft Report within 30 days.
■ It will take approximately 2–3 weeks after the revised Draft Report is received for the final Draft Report to be approved.
■ Once the Draft Report has been approved, NYSERDA will send out an approval email.
■ NYSERDA will pay a 50% cost share of the total study cost directly to the provider, if the provider is a FlexTech consultant.
• A building owner can use an Independent Service Provider but must pay for the cost of study upfront and apply for a reimbursement from NYSERDA. Refer to the Project Payments section of the FlexTech Program Guidelines for more details on the payment process.
■ After the 50% cost share has been paid, NYSERDA will transfer the Final Report and PSS back to the utility’s IC.

FlexTech Project Milestones
Please Note:
• SOW and Draft Reports may require more than one round of comments
• Failure to submit items within specified timeline may result in cancellation

Step 4: Complete Comprehensive Pathway Process for AMEEP.
■ Finalize the scope and initiate the project:
• Fill out a Project Scope Template provided by the IC.
• Receive Preliminary Incentive Offer Letter from the IC.
• Design new systems, hire contractors, submit cut sheets and savings calculations (such as energy model or TRM-based savings calculations accounting for interactive effects).
■ AMEEP Application Approval:
• Perform Pre-Inspection and engineering review.
• Receive NTP, which triggers an additional 25% audit cost share, up to 75% cost share of the overall study cost, from NYSERDA FlexTech.
Please note: The NTP from AMEEP must be issued within 6 months of the FlexTech audit report being approved by NYSERDA to qualify for the additional cost share.
■ Complete project:
• Install equipment.
• Receive mid-project payment (optional).
• Submit completion paperwork.
• Post inspection and final engineering review.
• Receive incentive payment.

Approach 2 – Apply through FlexTech

If a project is already going through a FlexTech study, and there is interest in the AMEEP program, the Energy Service Provider or the customer should contact FlexTech and their utility’s IC to coordinate.

■ FlexTech Documentation:
• Multifamily Affordability Verification Application
• Scope of Work
• Data Sharing Authorization
• Consolidated Funding Application (CFA)
■ Final Report
■ Project Summary Sheet
■ AMEEP application
■ Project Scope Template
■ Preliminary Incentive Offer Letter
■ Pre-Inspection
■ NTP (shared with FlexTech for additional 25% cost share)

Step 1: Apply for FlexTech
■ FlexTech energy audits must be conducted by an approved Energy Service Provider. Choose an Energy Service Provider through either of the following two networks:
• NYSERDA Multifamily Building Solutions Network
• NYSERDA FlexTech Consultants
■ Submit all of the following documents to FlexTech at *******************.gov to apply to the FlexTech Program:
• Program Application
• Multifamily Affordability Verification Application
• Scope of Work (SOW)
• Data Sharing Authorization
■ NYSERDA will assign a Project Manager (PM) and send an email within 1–2 days acknowledging receipt of documents.
■ NYSERDA will review your application materials, issue SOW comments, and schedule a project scoping call within one week of receiving your application. Respond to the comments and send a revised SOW to NYSERDA within 30 days. It can take up to one week after the revised SOW is received for your final SOW to be approved by NYSERDA. Please note that it may take more than one round of comments to finalize the SOW.
■ NYSERDA will send out an email when the SOW has been approved.
■ NYSERDA will issue a Purchase Order (PO) and Notice to Proceed (NTP) within 4–6 weeks of SOW approval. Once the PO and NTP are issued, the FlexTech audit can begin.

Step 2: Complete FlexTech Energy Audit
■ When the FlexTech audit is complete, submit the project deliverables (Draft Report per Report Guidelines and Project Summary Sheet (PSS)) to NYSERDA at *******************.gov according to the agreed upon project timeline in the SOW.
■ NYSERDA will perform a technical review of the project deliverables and issue Draft Report comments within 2–3 weeks. Address the comments and submit a revised Draft Report within 30 days. It will take approximately 2–3 weeks after the revised Draft Report is received for your final Draft Report to be approved by the PM. Please note that it may take more than one round of comments to finalize the Draft Report.
■ NYSERDA will pay a 50% cost share of the total study cost directly to the provider, if the provider is a FlexTech consultant.
• A building owner can use an Independent Service Provider but must pay for the cost of study upfront and apply for a reimbursement from NYSERDA. Refer to the Project Payments section of the FlexTech Program Guidelines for more details on the payment process.
■ NYSERDA will ask the Provider to confirm customer interest in participating in AMEEP. If the customer plans to participate in AMEEP, NYSERDA will transfer the Final Report, Project Summary Sheet, Affordable Housing Documentation and Verification to the utility’s IC.

Step 3: Submit AMEEP Application Documents
■ To apply for AMEEP, you must submit an AMEEP application for a Comprehensive Pathway.
The IC will review your application to confirm that your project is eligible for AMEEP.
• Refer to the AMEEP Application.

Step 4: Complete Comprehensive Pathway Process for AMEEP
■ Finalize Scope and Initiate Project:
• Fill out a Project Scope Template (IC will provide).
• Receive Preliminary Incentive Offer Letter.
• Design new systems, hire contractors, submit cut sheets and savings calculations (such as energy model or TRM-based savings calculations accounting for interactive effects).
■ AMEEP Application Approval:
• Perform Pre-Inspection and engineering review.
• Receive NTP, which triggers an additional 25% audit cost share, up to 75% cost share of the overall study costs, provided by NYSERDA FlexTech. Please note: The NTP from AMEEP must be issued within 6 months of the FlexTech audit report being approved by NYSERDA to qualify for the additional cost share.
■ Complete Project:
• Install equipment.
• Receive mid-project payment (optional).
• Submit completion paperwork.
• Post inspection and final engineering review.

MF-OWN-ftameepjourney-fs-1-v1 12/22
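
The cost-share rules described in both approaches (a 50% share once the audit is complete, plus an additional 25% if the AMEEP NTP is issued within 6 months of the audit report being approved, for up to 75% of the study cost) can be sketched as a small calculation. This is an illustrative sketch only, not an official NYSERDA tool: the function names, the reading of "6 months" as calendar months, and the example study cost are all assumptions made for the illustration.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Return the date `months` calendar months after `d`, clamping the day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    # Clamp to the last valid day of the target month (e.g. Aug 31 -> Feb 28/29).
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def flextech_cost_share(study_cost, report_approved, ameep_ntp_issued=None):
    """Estimate the cost share on a FlexTech study (hypothetical helper).

    Assumptions: the base share is 50% of the study cost; the additional
    25% applies only if an AMEEP NTP exists and is dated no later than
    6 calendar months after the audit report approval date.
    """
    base = 0.50 * study_cost
    additional = 0.0
    if ameep_ntp_issued is not None:
        deadline = add_months(report_approved, 6)
        if ameep_ntp_issued <= deadline:
            additional = 0.25 * study_cost
    return {"base": base, "additional": additional, "total": base + additional}


if __name__ == "__main__":
    # Hypothetical study cost of 20,000 with the NTP issued inside the window.
    print(flextech_cost_share(20000, date(2023, 1, 15), date(2023, 6, 1)))
    # Same study, but the NTP arrives after the 6-month window has closed.
    print(flextech_cost_share(20000, date(2023, 1, 15), date(2023, 8, 1)))
```

The sketch keeps the base and additional shares separate because, in the process above, they are triggered at different points: the first after the Draft Report is approved, the second only when the AMEEP NTP is received.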