A constraints satisfaction approach to the robust spanning tree problem with interval data

Article

Language. Articles must be written in good English.

Length. Articles should be as concise as possible. Regular articles should not exceed 25 standard manuscript pages and short communications should not exceed 10 standard manuscript pages, including tables and figures. A standard manuscript page is A4 or letter size, text with 1.5 line spacing, 12 pt font and ample margins. In exceptional cases the Editors may waive this requirement. Supplementary material is allowed, which will be available in e-version only.

Supplementary material. The authors of accepted papers can be allowed to have some supplementary material, such as large data tables, appendices, or long proofs of theorems, published online alongside the electronic version of the paper in Elsevier web products, including ScienceDirect. These materials would not appear in the printed version. The supplementary material should be included in any reviewed version of the submission. In order to ensure that submitted material is directly usable, the data should be provided in one of the recommended file formats. Authors should submit the material in electronic format together with the article and supply a concise and descriptive caption for each file via EES. The main body of the paper should reference the supplementary material.

Title. Concise and informative. Avoid abbreviations and formulae.

Author names and affiliations. Where the family name may be ambiguous (e.g., a double name), please indicate this clearly using appropriate script (capital letters for the first letter of the Authors' first names and surnames, followed by lower case). Present the Authors' affiliation addresses (where the actual work was done) below the names. Indicate all affiliations with a lower-case superscript letter immediately after the Author's name and in front of the appropriate address. Provide the full postal address of each affiliation, including the country name, and, if available, the e-mail address of each Author.

Corresponding Author.
Clearly indicate who is willing to handle correspondence at all stages of refereeing and publication, also post-publication. Ensure that telephone and fax numbers (with country and area code) are provided in addition to the e-mail address and the complete postal address.

Abstract. An abstract of between 50 and 250 words should state the purpose of the research and the main results. An abstract is often presented separate from the article, so it must be able to stand alone. Abstracts should not contain formulae.

Keywords. Must be included and at least the first one should be selected from the list below. Some keywords from outside the list may be added but the total number of keywords should not exceed five. The letters before the keywords are those of the surnames of the three editors. The paper is submitted to the editor whose initial is given before the first keyword selected from the list.

Illustrations. Graphics files can be uploaded via /ejor
A guide on electronic artwork is available on /artwork

References

All citations in the text should refer to:
- Single Author: the Author's name (without initials, unless there is ambiguity) and the year of publication;
- Two Authors: both Authors' names and the year of publication;
- Three or more Authors: first Author's name followed by "et al." and the year of publication.

Examples: "as demonstrated in (Allan, 1996a, 1996b, 1999; Allan and Jones, 1995). Lee et al. (2000) have recently shown"

In the references list, references should be arranged first alphabetically and then further sorted chronologically if necessary. More than one reference from the same Author(s) in the same year must be identified by the letters "a", "b", "c", etc., placed after the year of publication. Examples:

Reference to a journal publication:
- Griffiths W, Judge G. Testing and estimating location vectors when the error covariance matrix is unknown. Journal of Econometrics 1992;54; 121-138 (note that journal names are not to be abbreviated).

Reference to a book:
- Hawawini G, Swary I. Mergers and acquisitions in the U.S. banking industry: Evidence from the capital markets. North-Holland: Amsterdam; 1990.

Reference to a chapter in an edited book:
- Brunner K, Melzer AH 1990. Money Supply. In: Friedman BM, Hahn FH (Eds), Handbook of monetary economics, vol.1. North-Holland: Amsterdam; 1990. p. 357-396.

Citing and listing of Web references. As a minimum, the full URL should be given. Any further information, if known (Author names, dates, reference to a source publication, etc.), should also be given. Web references can be listed separately (e.g., after the reference list) under a different heading if desired, or can be included in the reference list.

Submission checklist
- One Author designated as corresponding Author:
- E-mail address
- Full postal address
- Telephone and fax numbers
- All necessary files have been uploaded
- Keywords
- All figure captions
- All tables (including title, description, footnotes)
- Manuscript has been "spellchecked"
- References are in the correct format for this journal
- All references mentioned in the Reference list are cited in the text, and vice versa
- Permission has been obtained for use of copyrighted material from other sources (including the Web)

List of keywords*
- (A) Applied probability
- (A) Artificial intelligence
- (S) Assignment
- (D) Auctions/bidding
- (S) Branch and bound
- (P) Business process reengineering
- (S) Combinatorial optimization
- (S) Complexity theory
- (A) Computing science
- (B) Conic programming
- (B) Constraints satisfaction
- (A) Control
- (B) Convex programming
- (P) Cost benefit analysis
- (S) Cutting
- (D) Data envelopment analysis
- (A) Data mining
- (A) Decision support systems
- (D) Decision analysis
- (P) Distributed decision making
- (B) Distribution
- (A) Dynamic programming
- (D) E-commerce
- (P) Economics
- (D) Education
- (D) Environment
- (S) Evolutionary computations
- (S) Expert systems
- (B) Facilities planning and design
- (P) Finance
- (P) Flexible manufacturing systems
- (A) Forecasting
- (B) Fractional programming
- (S) Fuzzy sets
- (A) Game theory
- (P) Gaming
- (S) Genetic algorithms
- (B) Geometric programming
- (B) Global optimization
- (B) Goal programming
- (A) Graph theory
- (S) Group decisions and negotiations
- (S) Heuristics
- (D) Human resources
- (B) Integer programming
- (B) Interior point methods
- (B) Inventory
- (P) Investment analysis
- (S) Knowledge-based systems
- (B) Large scale optimization
- (A) Linear programming
- (A) Location
- (P) Logistics
- (A) Maintenance
- (P) Manufacturing
- (D) Marketing
- (A) Markov processes
- (S) Metaheuristics
- (P) Modelling systems and languages
- (S) Multi-agent systems
- (S) Multiple criteria analysis
- (B) Multiple objective programming
- (A) Multivariate statistics
- (A) Network flows
- (A) Neural networks
- (B) Nonlinear programming
- (P) Organization theory
- (A) OR in agriculture
- (A) OR in airlines
- (P) OR in banking
- (A) OR in biology
- (A) OR in developing countries
- (A) OR in energy
- (D) OR in government
- (B) OR in health services
- (P) OR in manpower planning
- (B) OR in medicine
- (B) OR in military
- (D) OR in natural resources
- (D) OR in research and development
- (D) OR in societal problem analysis
- (D) OR in strategic planning
- (A) OR in telecommunications
- (S) Packing
- (S) Parallel computing
- (B) Parametric programming
- (B) Penalty methods
- (S) Petri nets
- (S) Polyhedra
- (P) Pricing
- (D) Problem structuring
- (P) Production
- (D) Productivity and competitiveness
- (P) Project management
- (S) Project scheduling
- (D) Psychology
- (P) Purchasing
- (B) Quadratic programming
- (A) Quality control
- (P) Quality management
- (A) Queueing
- (A) Regression
- (A) Reliability
- (A) Replacement
- (P) Retailing
- (D) Revenue management
- (P) Risk analysis
- (P) Risk management
- (A) Robustness and sensitivity analysis
- (S) Rough sets
- (B) Routing
- (P) Scenarios
- (S) Scheduling
- (S) Search theory
- (B) Semi-infinite programming
- (S) Simulated annealing
- (A) Simulation
- (A) Stochastic processes
- (A) Stochastic programming
- (D) Supply chain management
- (P) Systems dynamics
- (S) Tabu search
- (A) Time series
- (S) Timetabling
- (B) Traffic
- (B) Transportation
- (B) Travelling salesman
- (P) Uncertainty modelling
- (P) Utility theory
- (P) Visual interactive modelling

*Codes of Editors: (A) - Jesus Artalejo, (B) - Jean-Charles Billaut, (D) - Robert Dyson, (P) - Lorenzo Peccati, (S) - Roman Slowinski.

MINESWEEPER, #MINESWEEPER

Preslav Nakov, Zile Wei
{nakov,zile}@
May 14, 2003

"Hence the easiest way to ensure you always win: 100-square board, 99 mines."
– Chris Mattern, Slashdot

Abstract

We address the problem of finding an optimal policy for an agent playing the game of minesweeper. The problem is solved exactly, within the reinforcement learning framework, using a modified value iteration. Although it is a Partially Observable Markov Decision Problem (POMDP), we show that, when using an appropriate state space, it can be defined and solved as a fully observable MDP.

As has been shown by Kaye, checking whether a minesweeper configuration is non-contradictory is an NP-complete problem. We go a step further, as we define a corresponding counting problem, named #MINESWEEPER, and prove it is #P-complete. We show that computing both the state transition probability and the state value function requires finding the number of solutions of a system of Pseudo-Boolean equations (or 0-1 Programming), or alternatively, counting the models of a CNF formula describing a minesweeper configuration. We provide a broad review of the existing approaches to exact and approximate model counting, and show that none of them matches our requirements exactly.

Finally, we describe several ideas that cut the state space dramatically and allow us to solve the problem for boards as large as 4×4. We present the results for the optimal policy and compare it to a sophisticated probabilistic greedy one, which relies on the same model counting idea. We evaluate the impact of the free first move and provide a list of the best opening moves for boards of different sizes and with different numbers of mines, according to the optimal policy.

1 About minesweeper

Minesweeper is a simple game, whose popularity is due to a great extent to the fact that it has been regularly included in Microsoft Windows since 1991 (as implemented by Robert Donner and Curt Johnson). Why is it interesting? Because it hides in itself one of the most important problems of theoretical
computer science: the question whether P = NP. P vs. NP is one of the seven Millennium Prize Problems, for each of which the Clay Mathematics Institute of Cambridge, Massachusetts offers a prize of $1,000,000 [2]. Its connection to minesweeper has been revealed by Kaye, who proved minesweeper is NP-complete [36], and is further discussed by Stewart in Scientific American [48].

Some interesting resources about Minesweeper on the Web include:

♦ Richard Kaye's minesweeper pages. Focused on the theory behind the game [35].
♦ The Authoritative Minesweeper. Tips, downloads, scores, cheats, and info for human players. A world records list is also supported [12].
♦ Programmer's minesweeper. Java interface that allows a programmer to implement a strategy for playing minesweeper and then observe how it performs. Contains several ready strategies [6].
♦ Minesweeper strategies. Tips and tricks for human players [4].
♦ Minesweeper strategies & tactics. Strategies and tactics for the human player [3].

2 SAT, k-SAT

We start with some definitions. Literals are Boolean variables (with values from {0,1}) and their negations. A Boolean formula (or propositional formula) is an expression written using AND, OR, NOT, parentheses and variables.

A Boolean formula is in Disjunctive Normal Form (DNF) if it is a finite disjunction (logical OR) of a set of DNF clauses. A DNF clause is a conjunction (logical AND) of a finite set of literals.

A Boolean formula is in Conjunctive Normal Form (CNF) if it is a finite conjunction (logical AND) of a set of CNF clauses. A CNF clause is a disjunction (logical OR) of a finite set of literals.

Unless otherwise stated, below we will work with Boolean formulas (or just formulas) in CNF. We will use n to refer to the number of variables, and m to the number of CNF clauses (or just clauses).

Boolean formulas are at the heart of the first known NP-complete problem: SAT (Boolean SATisfiability) [24]. Given a Boolean formula, it asks whether there exists an assignment that would make it true. The problem remains NP-complete even when it is
restricted to CNF formulas with up to k variables per clause (a.k.a. k-CNF), provided that k ≥ 3. Some particular cases can be solved in polynomial time, e.g. 2SAT, Horn clauses etc. It is interesting to note that DNF-SAT is also solvable in polynomial time (with respect to the number of clauses, not the variables!): it is enough to check whether there exists a clause without contradictions, i.e. one that does not contain x and ¬x at the same time.

SAT is an important problem for logic, circuit design, artificial intelligence, probability theory etc. A major source of information about SAT is the SAT Live! site [8]. Ready implementations of different solvers, tools, evaluation, benchmarks etc. can be obtained from SATLIB, the Satisfiability Library [9].

3 Related Work

The most systematic study of minesweeper so far is due to Kaye. He tried to find a strategy such that no risk is taken when there is a square that can be safely uncovered, and this led him to the following decision problem definition [36]:

MINESWEEPER: Given a particular minesweeper configuration, determine whether it is consistent, that is, whether it can have arisen from some pattern of mines.

In fact, he named the problem the minesweeper consistency problem, but we prefer to use MINESWEEPER. Kaye studied the following strategy: to determine whether a background square is free we can mark it as a mine. If the resulting configuration is inconsistent (the MINESWEEPER solution is NO), the square is safe to uncover.

It is easy to see that MINESWEEPER is a special case of SAT, and more precisely, 8-SAT, since no clause can contain more than 8 literals. Is it NP-complete however? This question is reasonable since MINESWEEPER is a proper subset of 8-SAT: only formulas of some special forms are possible.
Namely, all clauses can have either only positive or only negative literals. In addition, for each positive-only clause there exists exactly one negative-only one, which contains the same set of variables, all of them negated.

Kaye showed how different minesweeper configurations could be used to build logic circuits and thus to simulate digital computers. For this purpose, he presented configurations that can be used as wire, splitter and crossover, as well as a set of logic gates: NOT, AND, OR and XOR. The presence of AND and NOT gates (or alternatively OR and NOT gates) proves that MINESWEEPER is NP-complete [36, 1]. This proof follows to a great extent the way Berlekamp et al. [19] proved that John Conway's game of life [29] is Turing-complete. In addition, Kaye observed that the game of life is played on an infinite board, so he defined an infinite variant of minesweeper and then proved it is Turing-complete as well [37].

There was also some work on the analysis and implementation of real game playing strategies. Adamatzky [13] describes a simple cellular automaton, capable of populating an n×n board in time Ω(n), with each automaton cell having 27 states and 25 neighbors, including itself. Rhee [46] and Quartetti [45] used genetic algorithms. There have also been some attempts to use neural networks (see the term projects [18], [33]).

Castillo and Wrobel address the game of minesweeper from a machine learning perspective. Using Mio, a general-purpose system for Inductive Logic Programming (ILP), they learned a strategy, which achieved a "better performance than that obtained on average by non-expert human players" [23]. In fact the rules learned by the system were for a board of a fixed small size: 8×8. The system learned four Prolog-style rules, exploiting very local information only. Three of the rules were virtually the same as the most trivial ones as implemented in the equation strategy of PGMS [6]. While the average human beginner wins 35% of the time, they achieved 52%. This rate rose to 60% when a very simple local
probability, defined separately over each equation, is employed to help guessing in case no rule is applicable, thus achieving the win rate of PGMS.

In fact, all these attempts, although interesting, are inadequate since solving MINESWEEPER requires global logical inference and model counting.

4 Pseudo-Boolean problems

Should we necessarily think of minesweeper in terms of Boolean formula satisfiability? The equations that arise there are of the form:

\[ x_1 + x_2 + x_3 + x_4 + x_5 + x_6 + x_7 + x_8 = 5 \]

All the coefficients of the c unknowns on the left-hand side are 1, and the number k on the right-hand side is an integer, 1 ≤ k ≤ 7 (we do not allow 0 and 8, which are trivial), and in addition, k < c.

Converting this equation to CNF form requires putting \(C_8^5\) positive-only clauses (e.g. \(x_1 \lor x_3 \lor x_4 \lor x_6 \lor x_7\)) and \(C_8^5\) negative-only counterparts (e.g. \(\bar{x}_1 \lor \bar{x}_3 \lor \bar{x}_4 \lor \bar{x}_6 \lor \bar{x}_7\)), which is 112 in total. Do we really need to do this? Can't we just look at MINESWEEPER as a constraint satisfaction problem of a special kind and try to solve it more efficiently? Let us explore the options.

First, note that SAT (suppose a CNF formula is given) can be written as an Integer Programming problem in the following way:

\[
\begin{aligned}
\text{minimize } \; & w \\
\text{subject to: } \; & w + \sum_{i \in c_j^+} x_i + \sum_{i \in c_j^-} (1 - x_i) \ge 1, \quad j = 1, \dots, m \\
& x_i \in \{0, 1\}, \quad i = 1, \dots, n \\
& w \ge 0
\end{aligned}
\tag{1}
\]

where \(x_i\) (1 ≤ i ≤ n) are the unknowns, \(c_j\) (1 ≤ j ≤ m) are the CNF clauses (\(c_j^-\) and \(c_j^+\) are the sets of the negative and the positive literals of the clause \(c_j\)), and w is a new variable, added to ensure there will be a feasible solution. The formula is unsatisfiable if w > 0 at the optimum.

In the case of minesweeper, we can allow not only 1, but also other natural numbers on the right-hand side of the equation. In addition, we will need to put two inequalities for each equation, but we will have no combinatorial explosion of clauses:

\[
\begin{aligned}
x_1 + x_2 + x_3 + x_4 + x_5 + x_6 + x_7 + x_8 &\le 5 \\
x_1 + x_2 + x_3 + x_4 + x_5 + x_6 + x_7 + x_8 &\ge 5
\end{aligned}
\tag{2}
\]

In fact, the reduction, although instructive, is not necessarily the best one.
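As a small illustration of program (1), the sketch below evaluates it by brute force on tiny instances. It is not the authors' implementation: the function name `min_slack` and the signed-integer clause encoding (a DIMACS-like convention) are assumptions made here for the example. For each 0-1 assignment it computes the smallest w ≥ 0 that satisfies every constraint of (1) and returns the minimum over all assignments, which is 0 exactly when the formula is satisfiable.

```python
from itertools import product


def min_slack(clauses, n):
    """Brute-force optimum of the integer program (1).

    clauses: list of clauses, each a list of non-zero ints; literal +i stands
             for x_i and -i for NOT x_i (an encoding assumed for this sketch).
    n:       number of variables x_1..x_n.
    Returns the minimal w; it is 0 iff the CNF formula is satisfiable.
    """
    best = None
    for bits in product((0, 1), repeat=n):
        x = (None,) + bits          # shift to 1-based indexing
        w = 0                       # w >= 0 by construction
        for clause in clauses:
            # left-hand side of the j-th constraint without w
            lhs = sum(x[l] if l > 0 else 1 - x[-l] for l in clause)
            w = max(w, 1 - lhs)     # smallest w with w + lhs >= 1
        best = w if best is None else min(best, w)
    return best


satisfiable = [[1, 2], [-1, 2]]     # (x1 or x2) and (not x1 or x2)
contradiction = [[1], [-1]]         # x1 and not x1
print(min_slack(satisfiable, 2), min_slack(contradiction, 1))
```

The enumeration is exponential in n, so this only serves to make the role of w concrete; a real solver would use branch-and-bound or a pseudo-Boolean engine.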
Note that in SAT we do not have a function to optimize. In addition, in MINESWEEPER we always have only equalities (for the local constraints), which means we need to solve a system of linear equations. In general this can be done in \(\Theta(n^3)\) using Gaussian elimination. In the discrete case this is a problem of solving a system of Diophantine equations, which is an NP-complete problem. Note however, that our problem is more restricted than that: we want the values to be not just integers, but 0 or 1.

So, looking back at the Integer Programming problem with inequalities, we can see it is a special instance, namely a pure 0-1 programming problem, where the variable values are restricted to 0 and 1. It is natural to allow the inequalities to accept natural numbers other than 1 on the right-hand side. If in addition we allow the coefficients to take integer values other than 0 and 1, we obtain the Pseudo-Boolean problem.

The problem can be solved by means of pure Boolean methods (e.g. a Pseudo-Boolean version of relsat [26], randomization [44], or DPLL techniques [14]) or using Integer Programming techniques (e.g. branch-and-bound and branch-and-cut [26], [15]). In the latter case it would be more natural to name the problem 0-1 programming. A good source of information about solving pseudo-Boolean problems is PBLIB, the Pseudo-Boolean Library [5], which collects several useful links to sites, papers, people, implementations, tools etc.

5 Model counting

5.1 #P, #P-complete, #SAT, #k-SAT

It has been realized that there are problems, primarily in AI, that require not satisfiability checking but rather counting the number of models \(\mu(F)\) of a CNF formula F. A broad overview and several examples can be found in [47]. Some of these include: computing the number of solutions of a constraint satisfaction problem, inference in Bayesian networks, finding the degree of belief of a propositional statement s with respect to a knowledge base B (computed as \(\mu(B + \{s\})/\mu(B)\)), estimating the utility of an approximate theory A with respect to the original one
O (i.e. \(\mu(O) - \mu(T)\)), etc.

For the purpose of studying counting problems Valiant [49] introduced some new complexity classes: #P, #P-complete etc. Formally, a problem is in #P if there is a nondeterministic, polynomial-time Turing machine that, for each instance of the problem, has a number of accepting computations exactly equal to the number of its distinct solutions. It is easy to see that a #P problem is necessarily at least as hard as the corresponding NP problem: once we know the number of solutions, we can just compare this number to zero and thus solve the NP problem. So, any #P problem corresponding to an NP-complete problem is NP-hard.

In addition to #P, Valiant defined the class #P-complete. A problem is #P-complete if and only if it is in #P and every other problem in #P can be reduced to it in polynomial time.

The problem of finding the number of models of a Boolean formula is known in general as #SAT. Just like SAT, #SAT has versions #2SAT, #3SAT, #k-SAT etc., where the maximum number of literals in a clause is limited by k. #SAT has been shown to be #P-complete in general [49] and thus, it is necessarily exponential in the worst case (unless the complexity hierarchy collapses). Note that while 2SAT is solvable in polynomial time, #2SAT is #P-complete (and so is #k-SAT, k ≥ 2).

5.2 #MINESWEEPER

While Kaye [36] addressed the problem of finding sure squares only, this is not enough to play well, since most of the real game configurations will have no sure cases. So, we face a problem of reasoning under uncertainty. A reasonable heuristic would be to probe the square with the lowest probability of containing a mine, thus acting greedily. This probability can be estimated as the ratio of the number of different mine distributions in which a fixed background square contains a mine to the number of all different mine distributions consistent with the current configuration. So, we need to define the #MINESWEEPER problem:

#MINESWEEPER: Given a particular minesweeper configuration, find the number of the possible
mine distributions over the covered squares.

Theorem 5.1. #MINESWEEPER is #P-complete.

Proof. Specialization to #CIRCUIT-SAT. #CIRCUIT-SAT is the basic #P-complete problem and is defined as follows: given a circuit, find the number of inputs that make it output 1. Using Kaye's result that a minesweeper configuration can represent logical gates, wires, splitters and crossovers, it follows that any program that tries to solve #MINESWEEPER should be able to solve any logical circuit, i.e. solve #CIRCUIT-SAT.

The theorem above means we cannot hope to solve #MINESWEEPER in polynomial time unless all #P-complete problems can be solved in polynomial time, i.e. unless P = NP. But we can try to approximate it.

5.2.1 Inclusion-exclusion principle

Before we present some of the most interesting approaches so far, it is worth noting that the problem of model counting is a special case of a more general problem (again #P-complete): finding the probability of the union of events from some probability space. More formally, let \(A_1, A_2, \dots, A_m\) be events from a probability space; we want to find \(P(\bigcup_i A_i)\). This is given by the inclusion-exclusion formula:

\[
P\Big(\bigcup_{i=1}^{m} A_i\Big) = \sum_{i=1}^{m} P(A_i) - \sum_{1 \le i < j \le m} P(A_i \cap A_j) + \sum_{1 \le i < j < k \le m} P(A_i \cap A_j \cap A_k) - \dots + (-1)^{m-1} P(A_1 \cap A_2 \cap \dots \cap A_m)
\]

This formula contains \(2^m - 1\) terms, and thus the direct evaluation would take exponential time. In addition, it cannot be calculated exactly in the absence of any of these terms. So, here comes the natural question: how well can it be approximated? It is interesting to note that this is an old question, first studied by Boole [21]. In fact, he was interested in approximating the size of the union of a family of sets, which is essentially an equivalent problem; we just have to divide by the size of the sample space (\(2^n\) for the space \(\{0,1\}^n\) considered below), since:

\[
\Big|\bigcup_{i=1}^{m} A_i\Big| = \sum_{i=1}^{m} |A_i| - \sum_{1 \le i < j \le m} |A_i \cap A_j| + \sum_{1 \le i < j < k \le m} |A_i \cap A_j \cap A_k| - \dots + (-1)^{m-1} |A_1 \cap A_2 \cap \dots \cap A_m|
\]

How do we go from finding the probability of the union of probabilistic events to #SAT? Let's assume a probability space \(\{0,1\}^n\) under uniform distribution and let \(A_i\) be the
event that the i-th clause (1 ≤ i ≤ m) in a DNF formula is satisfied. Then \(|A_i|\) is the number of models of the i-th clause and \(|\bigcup_{i=1}^{m} A_i|\) is the number of models of all clauses in the formula F, i.e. the number of models \(\mu(F)\) of F.

But we are interested in CNF, not in DNF. While these forms are equivalent, it is not straightforward to see how the inclusion-exclusion principle can be applied to CNF. The solution comes easily though, if we revise the definition of \(A_i\): now it will be the event that the i-th clause in the CNF formula is falsified. So, \(|A_i|\) will be the number of falsifiers of the i-th clause, and \(|\bigcup_{i=1}^{m} A_i|\) the number of falsifiers of all CNF clauses in F, i.e. \(2^n - \mu(F)\).

Note that the uniform distribution over \(\{0,1\}^n\) for DNF (or equivalently for CNF) is in fact a special case. As we will see below, #SAT is a much easier problem than finding the probability of the union of probabilistic events.

5.3 Approximation algorithms

5.3.1 Luby & Velickovic algorithm

Luby and Velickovic [41] try to approximate the proportion of truth assignments of the n variables that satisfy the DNF formula F, i.e. \(P(F) = \mu(F)/2^n\). They propose a deterministic algorithm, which for any \(\varepsilon\) and \(\delta\) (\(\varepsilon > 0\), \(\delta > 0\)) calculates an estimate Q, such that \((1 - \delta)P(F) - \varepsilon \le Q \le P(F) + \varepsilon\). The running time of this algorithm is polynomial in nm, multiplied by the term:

\[ \left(\frac{\log(nm)}{\delta}\right)^{\frac{\log^2(m/\varepsilon)}{\delta}} \]

If the maximum clause length is t, then for any \(\beta\) (\(\beta > 0\)) the deterministic algorithm finds an approximation satisfying: \((1 - \beta)P(F) \le Q \le (1 + \beta)P(F)\).
This time the algorithm is polynomial in nm, multiplied by:

\[ \left(\frac{\max(t, \log n)}{\beta}\right)^{\frac{t^2}{\beta}} \]

5.3.2 Inclusion-exclusion algorithms

Linial and Nisan addressed the more general problem of finding the size of the union of a family of sets [38]. They showed that it could be approximated effectively by using only part of the intersections. In particular, if the sizes of all intersections of at most k distinct sets are known, and \(k \ge \Omega(\sqrt{m})\), then the union size can be approximated within \(e^{-\Omega(k/\sqrt{m})}\). The bound above has been shown to be non-tight, and has been improved by Kahn et al. [34] to \(e^{-\tilde{\Omega}(k/\sqrt{m})}\). This resulted in a polynomial time approximation algorithm, which is optimal in the sense that this bound is tight and, in general, it is impossible to achieve a better approximation (regardless of the complexity). Note that this is only an approximation, and there can be an error even when k = m − 1.

5.4 Exact algorithms

5.4.1 Exponential algorithms

The obvious and naive implementation of exact model counting enumerates all the models and thus runs in time \(O(m 2^n)\). Can we do better? If we restrict the maximum number of literals contained in a single clause to r, then we can decrease the base of the exponent to \(\alpha_r\), the unique positive root of the polynomial \(y^r - y^{r-1} - \dots - 1\). This gives an algorithm running in time \(O(m \alpha_r^n)\). Note that this is generally better than the naive approach since \(\alpha_2 \approx 1.62\), \(\alpha_3 \approx 1.84\), \(\alpha_4 \approx 1.93\), ..., but in the limit (\(r \to \infty\)) we still have \(\alpha_r^n \to 2^n\). This algorithm, as described, has been proposed by Dubois [27]. A similar approach (with similar complexity) has been presented by Zhang [50]. Despite the improvement though, the complexity remains exponential (and there is no improvement in the limit).

5.4.2 Lozinskii algorithm

Iwama [30] used model counting together with formula falsification to solve SAT. He achieved a polynomial average running time \(O(m^{c+1})\), where c is a constant such that \(\ln c \ge \ln m - p^2 n\), where p is the probability that a particular literal appears in a particular clause in the target
formula.

Lozinskii [40] introduced a similar algorithm but for #SAT. Under reasonable assumptions, it calculates the exact number of models in time \(O(m^c n)\), where \(c \in O(\log m)\).

5.4.3 CDP algorithm

Birnbaum and Lozinskii [20] proposed an algorithm, named CDP (Counting by Davis-Putnam), for counting the number of models of a Boolean CNF formula exactly, based on a modification of the classic Davis-Putnam procedure [25]. There are two essential changes: first, the pure literal rule is omitted (otherwise some solutions will be missed), and second, in case the formula is satisfied by setting values to only part of the variables, the remaining i unset variables can take all possible values and thus the value \(2^i\) is returned.

The average running time of CDP is \(O(m^d n)\), where \(d = -1/\log_2(1 - p)\) and p is the probability that a particular literal appears in a particular clause in the target formula.

5.4.4 DDP algorithm

The DDP (Decomposing Davis-Putnam) algorithm has been proposed by Bayardo and Pehoushek [16]. Just like CDP [20], it is an extension of the Davis-Putnam (DP) procedure [25]. The idea is to identify groups of variables that are independent of each other, which is essentially a kind of divide and conquer approach: find the connected components of the graph, solve the problem for each of them independently and then combine the results. This is a fairly old idea, already exploited for SAT as well as for more general constraint satisfaction problems by Freuder and Quinn [28] and many other researchers thereafter.
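The two CDP changes and the one-shot decomposition into connected components can be sketched in a few lines. This is an illustration under assumptions made here, not the CDP or DDP code: the names `count_models`, `components` and `count_by_components`, and the encoding of literals as signed integers, are hypothetical, and the decomposition is applied only once at the top level rather than recursively.

```python
def count_models(clauses, variables):
    """CDP-style exact model count over the set `variables`.

    clauses: list of frozensets of non-zero ints (+v is v, -v is NOT v).
    No pure literal rule is used; when no clause is left, every remaining
    variable is free, so 2**i is returned for i unset variables.
    """
    if any(len(c) == 0 for c in clauses):
        return 0                          # an empty clause is falsified
    if not clauses:
        return 2 ** len(variables)
    v = abs(next(iter(clauses[0])))       # branch on a variable of clause 0
    total = 0
    for lit in (v, -v):
        # clauses containing lit are satisfied; the opposite literal is removed
        reduced = [c - {-lit} for c in clauses if lit not in c]
        total += count_models(reduced, variables - {v})
    return total


def components(clauses):
    """Group clauses that share variables into connected components."""
    groups = []                           # list of (variable set, clause list)
    for c in clauses:
        vs = {abs(l) for l in c}
        merged_vars, merged_clauses, rest = set(vs), [c], []
        for gv, gc in groups:
            if gv & vs:                   # shares a variable with the clause
                merged_vars |= gv
                merged_clauses += gc
            else:
                rest.append((gv, gc))
        groups = rest + [(merged_vars, merged_clauses)]
    return groups


def count_by_components(clauses, variables):
    """Multiply the counts of independent components and of free variables."""
    clauses = [frozenset(c) for c in clauses]
    total, used = 1, set()
    for gv, gc in components(clauses):
        total *= count_models(gc, gv)
        used |= gv
    return total * 2 ** len(set(variables) - used)


# (x1 or x2) and (x3 or not x4), with x5 unconstrained: 3 * 3 * 2 models
print(count_by_components([[1, 2], [3, -4]], {1, 2, 3, 4, 5}))
```

Counts of independent components are multiplied because any model of one component can be combined with any model of another.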
The idea of DDP is to apply it recursively, as opposed to just once at the beginning. During the recursive exploration of the graph DP assigns values to some of the variables and thus breaks it into separate connected components, which are in turn identified and exploited, which dramatically cuts the calculations.

The algorithm is implemented as an extension of version 2.00 of the relsat algorithm [17] and the source code is available on the Internet [7]. The relsat algorithm is an extension of the Davis-Putnam-Logemann-Loveland (DPLL) method [39], and it dynamically learns nogoods (the only difference with DPLL), which can dramatically speed it up: in case the variables form a chain, SAT can be solved in quadratic time. For #SAT though one would also need to record the goods (this was not implemented and tested). In the case of DDP this would lead to quadratic complexity for solving #SAT even in case the variables form a tree. Anyway, in some hard cases (e.g. when m/n ≈ 4) the complexity will be necessarily exponential.

Performance evaluation tests show DDP is orders of magnitude faster than CDP both on random instances and on benchmarks like the ones in SATLIB [9].

5.4.5 Inclusion-exclusion algorithms specialized to #SAT

Looking carefully at the general problem of finding the size of the union of a family of sets (see 5.2.1 above), Kahn et al. [34] found that in the special case of #SAT it is enough to know the number of models for every subset of up to \(1 + \log n\) clauses. Moreover, this has been proved to fix the number of satisfying assignments for the whole formula, and thus allows the calculation of the exact number of models, not just an approximation. Note also that this depends on the number of variables only, and not on the number of clauses!

The latter proof of Kahn et al. was only existential, and they did not come up with an algorithm. One has been found by Melkman and Shimony [42].

The result has been extended thereafter and specialized to #k-SAT, k ≥ 2, as Iwama and Matsuura showed that n
can be changed to k and that only the clauses of some fixed size matter (thus, we need not know the counts for those of smaller size). So, for #k-SAT knowing the number of models for all clauses of size exactly \(2 + \log k\) has been proved to be both necessary and sufficient [30]. Unfortunately, the latter proof is only existential and there is no practical algorithm developed yet.

5.5 What are we using?

Initially, we used the Boolean Constraint Solver library module [10], provided with SICStus Prolog [11]. It is an instance of the general Constraint Logic Programming scheme introduced in [32]. The internal representation of the Boolean functions is based on Boolean Decision Diagrams as described in [22] and it allows using linear equalities as constraints, which saved us from a combinatorial explosion of the number of clauses needed. While we found it fairly fast for solving MINESWEEPER, it was not suitable for #MINESWEEPER: the only way to find the number of satisfying assignments was to enumerate them all, which was unacceptable. We then performed an extensive study of the literature on model counting and adopted another approach.

Currently, we use the implementation of DDP (see 5.4.4) as provided in version 2.00 of the relsat algorithm [7]. We apply model counting for reasoning with incomplete information: when we cannot logically conclude whether or not there is a mine in a particular square x, we solve the #MINESWEEPER problem twice, once with a mine put in x and once for the original configuration, and then take their ratio.

We could also use an approximate inclusion-exclusion algorithm, as specialized to #SAT by Melkman and Shimony [42], or even the one of Iwama and Matsuura [31], if a practical implementation is found in the future. In addition, we think in the case of #MINESWEEPER it might be possible to improve the result of Iwama and Matsuura further because of the special CNF structure: all clauses are paired positive-negative, and for each one with only positive literals there is
another one that contains the same variables but all negated. Looking from the Pseudo-Boolean perspective, the problem is still a proper subset of it: we do not need inequalities, and we do not have coefficients other than 0 and 1. Ideally, solving #MINESWEEPER would be done by means of model counting for Pseudo-Boolean formulas, e.g. a combination of the Boolean Constraint Solver library module of SICStus Prolog and the relsat model counter.

6 Minesweeper

Minesweeper is a simple game whose goal is to clear all squares on a board that don't contain mines. The basic elements of the game are a playing board, a remaining-mines counter, and a timer. The rules are the following:

♦ The player can uncover an unknown square by clicking on it. If it contains a mine, the game is lost.
♦ If the square is free, a number between 0 and 8 appears on it, indicating how many mines there are in its immediately neighboring squares.
♦ A square can be marked as a mine by right-clicking on it.
♦ The game is won when all non-mine squares are uncovered.

Minesweeper is an imperfect information computer game. The player probes on an n×n map, and has to make decisions based on the current observations only, without knowing which squares contain mines and which are free. The only additional information provided is the total number of remaining mines. If the probed square contains a mine, the player loses the game; otherwise the number of mines in the adjacent squares (up to 8) appears in the probed square.
In most computer implementations (and in our interpretation in particular) the player never dies on the first probe, but, as we will see below, this doesn't change the complexity of the problem substantially.

We assign a {0/1} variable to each unknown square in the map: the value is 1 if it contains a mine, and 0 otherwise. All variables must obey the constraints imposed by the numbers in the uncovered squares, as well as the global constraint on the remaining number of mines, namely:

\[
\sum_{j \in N(i)} X_j = O(i) \quad \text{for each known square } i, \qquad \sum_{j} X_j = M \tag{3}
\]

where N(i) is the set of the neighbors (up to 8) of the known square i, O(i) is the value of the uncovered square i, and M is the total number of remaining mines in the map. An example follows (m denotes a known mine):

X1 X2 1
X3 X4 2
1  2  m

The following equations correspond to the configuration above:

\[
\begin{aligned}
X_2 + X_4 &= 1 \\
X_3 + X_4 &= 1 \\
X_1 + X_2 + X_3 + X_4 &= M
\end{aligned} \tag{4}
\]

When M = 1 or M = 3, there is a unique solution, but in case M = 2, there are two: X2 = X3 = 1 and X1 = X4 = 1.

Let \mathcal{X} be the set of all solutions of (3). Then we can easily compute for each square the probability that it contains a mine, as a simple ratio:

\[
P(X_i = 1) = \frac{|\{X : X \in \mathcal{X},\ X(i) = 1\}|}{|\mathcal{X}|}
\]

Figure 1: Optimal policy on 2×4 map with 2 mines

So, when M = 2 we have P(X1 = 1) = 1/2. In order for a square to be a mine or free for sure, its value in all solutions should be 1 or 0, respectively.

Once we have computed the probability of containing a mine for each square, we can come up with a fairly simple greedy policy that always tries the safest square. We will show that this is not always optimal. Consider the 2×4 map with 2 mines shown in Figure 1. There are 5 unknown squares, 2 probed free ones and one sure mine. (The mine was not probed, of course, but was discovered as a result of logical inference.) P(X1,1 = 1) = P(X1,2 = 1) = P(X2,1 = 1) = 1/3, but P(X1,3 = 1) = P(X2,3 = 1) = 1/2. Although (1,1), (1,2) and (2,1) are safer than (1,3) and (2,3), the optimal policy probes (1,3).

Let's try to analyze the probability that the optimal policy will win on this map. At the first step, probing at (1,3) will succeed with probability 1/2.
There are two possible outcomes after a successful probe at (1,3). If the square (1,3) contains 3 (this will happen with probability 1/3), then the other squares containing a mine will be (1,2) and (2,3), and so the game will be won. If (1,3) contains 2 (which has probability 2/3), the square (1,2) is surely not a mine and (2,3) is surely a mine. Hence, the agent has to guess the position of the last mine by choosing between (1,1) and (2,1) only, and thus will succeed with probability 1/2. So the total probability that the optimal policy will win at this configuration is 1/2 × (1/3 + 1/2 × 2/3) = 1/3.

If the agent follows the greedy policy though, then it has to choose between (1,2), (1,1) and (2,1), which look equally good. Suppose the agent probes (1,2). Then it will succeed with probability 2/3. But in that outcome it has to make two guesses, between (1,3) and (2,3), and between (1,1) and (2,1). Hence it can succeed with probability 1/4. If the agent probes (1,1) or (2,1), and then tries (1,3), as the optimal policy does, the probability that it succeeds is at most 1/2. So the expected probability that the greedy policy wins at this configuration is at most 1/3 × (2/3 × 1/4 + 2/3 × 1/2 + 2/3 × 1/2) = 5/18, which is less than the 1/3 achieved by the optimal policy.
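The mine probabilities for the small example system (4) can be reproduced by brute-force enumeration. The sketch below is only an illustration of the ratio P(X_i = 1): it hard-codes the three equations of (4), and the function names `solutions` and `mine_probabilities` are assumptions made here, not part of the system described above (which relies on a model counter rather than explicit enumeration).

```python
from fractions import Fraction
from itertools import product


def solutions(total_mines):
    """Enumerate all 0/1 assignments (X1, X2, X3, X4) satisfying system (4)."""
    found = []
    for x1, x2, x3, x4 in product((0, 1), repeat=4):
        if x2 + x4 == 1 and x3 + x4 == 1 and x1 + x2 + x3 + x4 == total_mines:
            found.append((x1, x2, x3, x4))
    return found


def mine_probabilities(total_mines):
    """Return [P(X1=1), ..., P(X4=1)] as exact fractions over the solution set."""
    sols = solutions(total_mines)
    if not sols:
        raise ValueError("configuration has no consistent assignment")
    # Fraction of solutions in which square i holds a mine.
    return [Fraction(sum(s[i] for s in sols), len(sols)) for i in range(4)]


if __name__ == "__main__":
    for m in (1, 2, 3):
        print(m, solutions(m), mine_probabilities(m))
```

Enumeration is exponential in the number of unknown squares, which is why it only serves here as a check on a four-variable example.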

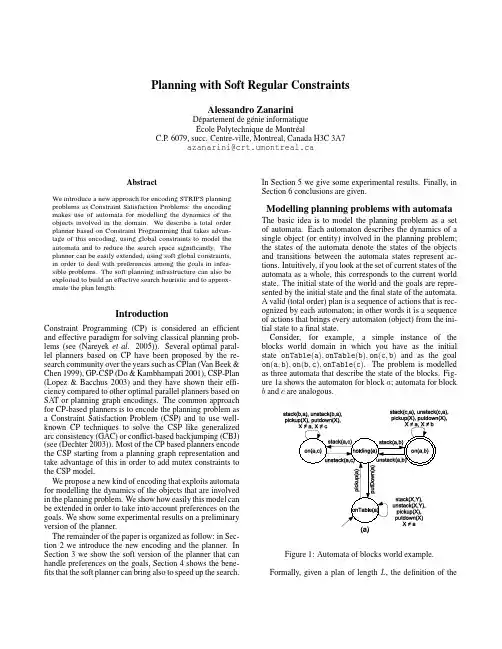

Journal of Artificial Intelligence Research 4 (1996) 419-443
Submitted 2/96; published 6/96

A Principled Approach Towards Symbolic Geometric Constraint Satisfaction

Sanjay Bhansali BHANSALI@
School of EECS, Washington State University
Pullman, WA 99164-2752

Glenn A. Kramer GAK@
Enterprise Integration Technologies, 800 El Camino Real
Menlo Park, CA 94025

Tim J. Hoar TIMHOAR@
Microsoft Corporation
One Microsoft Way, 2/2069
Redmond, WA 98052

Abstract

An important problem in geometric reasoning is to find the configuration of a collection of geometric bodies so as to satisfy a set of given constraints. Recently, it has been suggested that this problem can be solved efficiently by symbolically reasoning about geometry. This approach, called degrees of freedom analysis, employs a set of specialized routines called plan fragments that specify how to change the configuration of a set of bodies to satisfy a new constraint while preserving existing constraints. A potential drawback, which limits the scalability of this approach, is concerned with the difficulty of writing plan fragments. In this paper we address this limitation by showing how these plan fragments can be automatically synthesized using first principles about geometric bodies, actions, and topology.

1. Introduction

An important problem in geometric reasoning is the following: given a collection of geometric bodies, called geoms, and a set of constraints between them, find a configuration (i.e., position, orientation, and dimension) of the geoms that satisfies all the constraints. Solving this problem is an integral task for many applications like constraint-based sketching and design, geometric modeling for computer-aided design, kinematics analysis of robots and other mechanisms (Hartenberg & Denavit, 1964), and describing mechanical assemblies.

General purpose constraint satisfaction techniques are not well suited for solving constraint problems involving complicated geometry.
Such techniques represent geoms and constraints as algebraic equations, whose real solutions yield the numerical values describing the desired configuration of the geoms. Such equation sets are highly non-linear and highly coupled and in the general case require iterative numerical solution techniques. Iterative numerical techniques are not particularly efficient and can have problems with stability and robustness (Press, Flannery, Teukolsky & Vetterling, 1986). For many tasks (e.g., simulation and optimization of mechanical devices) the same equations are solved repeatedly, which makes a compiled solution desirable. In theory, symbolic manipulation of equations can often yield a non-iterative, closed-form solution. Once found, such a closed-form solution can be executed very efficiently. However, the computational intractability of symbolic algebraic solution of the equations renders this approach impractical (Kramer, 1992; Liu & Popplestone, 1990).

In earlier work Kramer describes a system called GCE that uses an alternative approach called degrees of freedom analysis (1992, 1993). This approach is based on symbolic reasoning about geometry, rather than equations, and was shown to be more efficient than systems based on algebraic equation solvers. The approach uses two models. A symbolic geometric model is used to reason symbolically about how to assemble the geoms so as to satisfy the constraints incrementally. The "assembly plan" thus developed is used to guide the solution of the complex nonlinear equations (derived from the second, numerical model) in a highly decoupled, stylized manner.

The GCE system was used to analyze problems in the domain of kinematics and was shown to perform kinematics simulation of complex mechanisms (including a Stirling engine, an elevator door mechanism, and a sofa-bed mechanism) much more efficiently than pure numerical solvers (Kramer, 1992).
The GCE has subsequently been integrated in a commercial system called Bravo™ by Applicon where it is used to drive the 2D sketcher (Brown-Associates, 1993). Several academic systems are currently using the degrees of freedom analysis for other applications like assembly modeling (Anantha, Kramer & Crawford, 1992), editing and animating planar linkages (Brunkhart, 1994), and feature-based design (Salomons, 1994; Shah & Rogers, 1993).

GCE employs a set of specialized routines called plan fragments to create the assembly plan. A plan fragment specifies how to change the configuration of a geom using a fixed set of operators and the available degrees of freedom, so that a new constraint is satisfied while preserving all prior constraints on the geom. The assembly plan is completed when all constraints have been satisfied or the degrees of freedom are reduced to zero. This approach is canonical: the constraints may be satisfied in any order; the final status of the geom in terms of remaining degrees of freedom is the same (p. 80-81, Kramer, 1992). The algorithm for finding the assembly procedure has a time complexity of O(cg) where c is the number of constraints and g is the number of geoms (p. 139, Kramer, 1992).

Since the crux of problem-solving is taken care of by the plan fragments, the success of the approach depends on one's ability to construct a complete set of plan fragments meeting the canonical specification. The number of plan fragments needed grows geometrically as the number of geoms and constraints between them increases. Worse, the complexity of the plan fragments increases exponentially since the various constraints interact in subtle ways, creating a large number of special cases that need to be individually handled. This is potentially a serious limitation in extending the degrees of freedom approach.
In this paper we address this problem by showing how plan fragments can be automatically generated using first principles about geoms, actions, and topology.

Our approach is based on planning. Plan fragment generation can be reduced to a planning problem by considering the various geoms and the invariants on them as describing a state. Operators are actions, such as rotate, that can change the configuration of geoms, thereby violating or achieving some constraint. An initial state is specified by the set of existing invariants on a geom and a final state by the additional constraints to be satisfied. A plan is a sequence of actions that when applied to the initial state achieves the final state.

With this formulation, one could presumably use a classical planner, such as STRIPS (Fikes & Nilsson, 1971), to automatically generate a plan-fragment. However, the operators in this domain are parametric operators with a real-valued domain. Thus, the search space consists of an infinite number of states. Even if the real-valued domain is discretized by considering real-valued intervals there is still a very large search space and finding a plan that satisfies the specified constraints would be an intractable problem. Our approach uses loci information (representing a set of points that satisfy some constraints) to reason about the effects of various operators and thus reduces the search problem to a problem in topology, involving reasoning about the intersection of various loci.

An issue to be faced in using a conventional planner is the frame problem: how to determine what properties or relationships do not change as a result of an action. A typical solution is to use the assumption: an action does not modify any property or relationship unless explicitly stated as an effect of the action.
Such an approach works well if one knows a priori all possible constraints or invariants that might be of interest and relatively few constraints get affected by each action - which is not true in our case. We use a novel scheme for representing effects of actions. It is based on reifying (i.e., treating as first class objects) actions in addition to geometric entities and invariant types. We associate, with each pair of geom and invariants, a set of actions that can be used to achieve or preserve that invariant for that geom. Whenever a new geom or invariant type is introduced the corresponding rules for actions that can achieve/preserve the invariants have to be added. Since there are many more invariant types than actions in this domain, this scheme results in simpler rules. Borgida, Mylopoulos & Reiter (1993) propose a similar approach for reasoning with program specifications. A unique feature of our work is the use of geometric-specific matching rules to determine when two or more general actions that achieve/preserve different constraints can be reformulated to a less general action.Another shortcoming of using a conventional planner is the difficulty of representing conditional effects of operators. In GCE an operation’s effect depends on the type of geom as well as the particular geometry. For example, the action of translating a body to the intersection of two lines on a plane would normally reduce the body’s translational degrees of freedom to zero; however, if the two lines happen to coincide then the body still retains one degree of translational freedom and if the two lines are parallel but do not coincide then the action fails. Such situations are called degeneracies. One approach to handling degeneracies is to use a reactive planner that dynamically revises its plan at run-time. However, this could result in unacceptable performance in many real-time applications. Our approach makes it possible to pre-compile all potential degeneracies in the plan. 
We achieve this by dividing the planning algorithm into two phases. In the first phase a skeletal plan is generated that works in the normal case and in the second phase, this skeletal plan is refined to take care of singularities and degeneracies. The approach is similar to the idea of refining skeletal plans in MOLGEN (Friedland, 1979) and the idea of critics in HACKER (Sussman, 1975) to fix known bugs in a plan. However, the skeletal plan refinement in MOLGEN essentially consisted of instantiating a partial plan to work for specific conditions, whereas in our method a complete plan which works for a normal case is extended to handle special conditions like degeneracies and singularities.

1.1 A Plan Fragment Example

We will use a simple example of a plan fragment specification to illustrate our approach. Domains such as mechanical CAD and computer-based sketching rely heavily on complex combinations of relatively simple geometric elements, such as points, lines, and circles and a small collection of constraints such as coincidence, tangency, and parallelism. Figure 1 illustrates some fairly complex mechanisms (all implemented in GCE) using simple geoms and constraints.

Figure 1. Modeling complex mechanisms using simple geoms and constraints. All the constraints needed to model the joints in the above mechanisms are solvable using the degrees of freedom approach. (Panel label: Stirling Engine.)

Our example problem is illustrated in Figure 2 and is specified as follows:

    Geom-type: circle
    Name: $c
    Invariants: (fixed-distance-line $c $L1 $dist1 BIAS_COUNTERCLOCKWISE)
    To-be-achieved: (fixed-distance-line $c $L2 $dist2 BIAS_CLOCKWISE)

In this example, a variable-radius circle $c¹ has a prior constraint specifying that the circle is at a fixed distance $dist1 to the left of a fixed line $L1 (or alternatively, that a line drawn parallel to $L1 at a distance $dist1 from the center of $c is tangent in a counterclockwise direction to the circle).
The new constraint to be satisfied is that the circle be at a fixed distance $dist2 to the right of a fixed line $L2.

¹ We use the following conventions: symbols preceded by $ represent constants, symbols preceded by ? represent variables, expressions of the form (>> parent subpart) denote the subpart of a compound term, parent.

To solve this problem, three different plans can be used: (a) translate the circle from its current position to a position such that it touches the two lines $L2' and $L1' shown in the figure; (b) scale the circle while keeping its point of contact with $L1' fixed, so that it touches $L2'; (c) scale and translate the circle so that it touches both $L2' and $L1'.

Each of the above action sequences constitutes one plan fragment that can be used in the above situation and would be available to GCE from a plan-fragment library. Note that some of the plan fragments would not be applicable in certain situations. For example, if $L1 and $L2 are parallel, then a single translation can never achieve both the constraints, and plan-fragment (a) would not be applicable. In this paper we will show how each of the plan-fragments can be automatically synthesized by reasoning from more fundamental principles.

The rest of the paper is organized as follows: Section 2 gives an architectural overview of the system built to synthesize plan fragments automatically with a detailed description of the various components. Section 3 illustrates the plan fragment synthesis process using the example of Figure 2. Section 4 describes the results from the current implementation of the system. Section 5 relates our approach to other work in geometric constraint satisfaction. Section 6 summarizes the main results and suggests future extensions for this work.

2. Overview of System Architecture

Figure 3 gives an overview of the architecture of our system showing the various knowledge components and the plan generation process.
The knowledge represented in the system is broadly categorized into a Geom knowledge-base that contains knowledge specific to particular geometric entities and a Geometry knowledge-base that is independent of particular geoms and can be reused for generating plan fragments for any geom.

Figure 3. Architectural overview of the plan fragment generator

2.1 Geom Knowledge-base

The geom specific knowledge-base can be further decomposed into seven knowledge components.

2.1.1 ACTIONS

These describe operations that can be performed on geoms. In the GCE domain, three actions suffice to change the configuration of a body to an arbitrary configuration: (translate g v) which denotes a translation of geom g by vector v; (rotate g pt ax amt) which denotes a rotation of geom g, around point pt, about an axis ax, by an angle amt; and (scale g pt amt) where g is a geom, pt is a point on the geom, and amt is a scalar. The semantics of a scale operation depends on the type of the geom; for example, for a circle, a scale indicates a change in the radius of the circle and for a line-segment it denotes a change in the line-segment's length. Pt is the point on the geom that is fixed (e.g., the center of a circle).

2.1.2 INVARIANTS

These describe constraints to be solved for the geoms. The initial version of our system has been designed to generate plan fragments for a variable-radius circle and a variable length line-segment on a fixed workplane, with constraints on the distances between these geoms and points, lines, and other geoms on the same workplane. There are seven invariant types to represent these constraints.
Examples of two such invariants are:

• (Invariant-point g pt glb-coords) which specifies that the point pt of geom g is coincident with the global coordinates glb-coords, and
• (Fixed-distance-point g pt dist bias) which specifies that the geom g lies at a fixed distance dist from point pt; bias can be either BIAS_INSIDE or BIAS_OUTSIDE depending on whether g lies inside or outside a circle of radius dist around point pt.

2.1.3 LOCI

These represent sets of possible values for a geom parameter, such as the position of a point on a geom. The various kinds of loci can be grouped into either a 1d-locus (representable by a set of parametric equations in one parameter) or a 2d-locus (representable by a set of parametric equations in two variables). For example, a line is a 1d locus specified as (make-line-locus through-pt direc) and represents an infinite line passing through through-pt and having a direction direc. Other loci represented in the system include rays, circles, parabolas, hyperbolas, and ellipses.

2.1.4 MEASUREMENTS

These are used to represent the computation of some function, object, or relationship between objects. These terms are mapped into a set of service routines which get called by the plan fragments. An example of a measurement term is: (0d-intersection 1d-locus1 1d-locus2). This represents the intersection of two 1d-loci. In the normal case, the intersection of two 1-dimensional loci is a point. However, there may be singular cases, for example, when the two loci happen to coincide; in such a case their intersection returns one of the loci instead of a point. There may also be degenerate cases, for example, when the two loci do not intersect; in such a case, the intersection is undefined.
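The normal, singular, and degenerate outcomes of a 0d-intersection can be illustrated for the simplest case of two line loci in the plane. The following is a minimal sketch under stated assumptions, not the service routine used in GCE: the `LineLocus` class, the `intersect_0d` function, the tagged return values, and the absolute tolerance `EPS` are all hypothetical names and choices introduced here.

```python
from dataclasses import dataclass

EPS = 1e-9  # assumed absolute tolerance for "parallel" and "coincident" tests


@dataclass(frozen=True)
class LineLocus:
    """Infinite 2D line through `through_pt` with direction `direc`."""
    through_pt: tuple
    direc: tuple


def cross(u, v):
    """z-component of the 2D cross product."""
    return u[0] * v[1] - u[1] * v[0]


def intersect_0d(l1, l2):
    """Classify and compute the intersection of two line loci.

    Returns ("point", (x, y)) in the normal case, ("singular", l1) when the
    lines coincide, and ("degenerate", None) when they are parallel but distinct.
    """
    denom = cross(l1.direc, l2.direc)
    diff = (l2.through_pt[0] - l1.through_pt[0],
            l2.through_pt[1] - l1.through_pt[1])
    if abs(denom) > EPS:
        # Solve p1 + t*d1 = p2 + s*d2 for t.
        t = cross(diff, l2.direc) / denom
        return ("point", (l1.through_pt[0] + t * l1.direc[0],
                          l1.through_pt[1] + t * l1.direc[1]))
    if abs(cross(diff, l1.direc)) <= EPS:
        return ("singular", l1)      # coincident lines: the result is a locus
    return ("degenerate", None)      # parallel, distinct lines: no intersection


if __name__ == "__main__":
    x_axis = LineLocus((0.0, 0.0), (1.0, 0.0))
    print(intersect_0d(x_axis, LineLocus((2.0, -1.0), (0.0, 1.0))))
    print(intersect_0d(x_axis, LineLocus((5.0, 0.0), (-1.0, 0.0))))
    print(intersect_0d(x_axis, LineLocus((0.0, 1.0), (2.0, 0.0))))
```

Returning a tag alongside the value makes the exceptional outcomes explicit to the caller, which mirrors the idea of recording them with the measurement type.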
These exceptional conditions are also represented with each measurement type and are used during the second phase of the plan generation process to elaborate a skeletal plan (see Section 3.3).

2.1.5 GEOMS

These are the objects of interest in solving geometric constraint satisfaction problems. Examples of geoms are lines, line-segments, circles, and rigid bodies. Geoms have degrees of freedom which allow them to vary in location and size. For example, in 3D-space a circle with a variable radius has three translational, two rotational, and one dimensional degree of freedom.

The configuration variables of a geom are defined as the minimal number of real-valued parameters required to specify the geometric entity in space unambiguously. Thus, a circle has six configuration variables (three for the center, one for the radius, and two for the plane normal). In addition, the representation of each geom includes the following:

• name: a unique symbol to identify the geom;
• action-rules: a set of rules that describe how invariants on the geom can be preserved or achieved by actions (see below);
• invariants: the set of current invariants on the geom;
• invariants-to-be-achieved: the set of invariants that need to be achieved for the geom.

2.1.6 ACTION RULES

An action rule describes the effect of an action on an invariant. There are two facts of interest to a planner when constructing a plan: (1) how to achieve an invariant using an action and (2) how to choose actions that preserve as many of the existing invariants as possible. In general, there are several ways to achieve an invariant and several actions that will preserve an invariant. The intersection of these two sets of actions is the set of feasible solutions.
In our system, the effect of actions is represented as part of geom-specific knowledge in the form of Action rules, whereas knowledge about how to compute intersections of two or more sets of actions is represented as geometry-specific knowledge (since it does not depend on the particular geom being acted on).

An action rule consists of a three-tuple (pattern, to-preserve, to-[re]achieve). Pattern is the invariant term of interest; to-preserve is a list of actions that can be taken without violating the pattern invariant; and to-[re]achieve is a list of actions that can be taken to achieve the invariant or re-achieve an existing invariant "clobbered" by an earlier action. These actions are stated in the most general form possible. The matching rules in the Geometry Knowledge base are then used to obtain the most general unifier of two or more actions. An example of an action rule, associated with variable-radius circle geoms, is:

    (AR-1)
    pattern:        (1d-constrained-point ?circle (>> ?circle CENTER) ?1dlocus)
    to-preserve:    (scale ?circle (>> ?circle CENTER) ?any)
                    (translate ?circle (v- (>> ?1dlocus ARBITRARY-POINT) (>> ?circle CENTER)))
    to-[re]achieve: (translate ?circle (v- (>> ?1dlocus ARBITRARY-POINT) (>> ?circle CENTER)))

This action rule is used to preserve or achieve the constraint that the center of a circle geom lie on a 1d locus. There are two actions that may be performed without violating this constraint: (1) scale the circle about its center. This would change the radius of the circle but the position of the center remains the same and hence the 1d-constrained-point invariant is preserved. (2) translate the circle by a vector that goes from its current center to an arbitrary point on the 1-dimensional locus ((v- a b) denotes a vector from point b to point a).
To achieve this invariant only one action may be performed: translate the circle so that its center moves from its current position to an arbitrary position on the 1-dimensional locus.

2.1.7 SIGNATURES

For completeness, it is necessary that there exist a plan fragment for each possible combination of constraints on a geom. However, in many cases, two or more constraints describe the same situation for a geom (in terms of its degrees of freedom). For example, the constraints that ground the two end-points of a line-segment and the constraints that ground the direction, length, and one end-point of a line-segment both reduce the degrees of freedom of the line-segment to zero and hence describe the same situation. In order to minimize the number of plan fragments that need to be written, it is desirable to group sets of constraints that describe the same situation into equivalence classes and represent each equivalence class using a canonical form.

The state of a geom, in terms of the prior constraints on it, is summarized as a signature. A signature scheme for a geom is the set of canonical signatures for which plan fragments need to be written. In Kramer's earlier work (1993) the signature scheme had to be determined manually by examining each signature obtained by combining constraint types and designating one from a set of equivalent signatures to be canonical. Our approach allows us to construct the signature scheme for a geom automatically by using reformulation rules (described shortly). A reformulation rule rewrites one or more constraints into a simpler form. The signature scheme is obtained by first generating all possible combinations of constraint types to yield the set of all possible signatures. These signatures are then reduced using the reformulation rules until each signature is reduced to the simplest form.
The set of (unique) signatures that are left constitutes the signature scheme for the geom.

As an example, consider the set of constraint types on a variable radius circle. The signature for this geom is represented as a tuple <Center, Normal, Radius, FixedPts, FixedLines> where:

• Center denotes the invariants on the center point and can be either Free (i.e., no constraint on the center point), L2 (i.e., center point is constrained to be on a 2-dimensional locus), L1 (i.e., center point is constrained to be on a 1-dimensional locus), or Fixed.
• Normal denotes the invariant on the normal to the plane of the circle and can be either Free, L1, or Fixed (in 2D it is always fixed).
• Radius denotes the invariant on the radius and can be either Free or Fixed.
• FixedPts denotes the number of Fixed-distance-point invariants and can be either 0, 1, or 2.
• FixedLines denotes the number of Fixed-distance-line invariants and can be either 0, 1, or 2.

L2 and L1 denote a 2D and 1D locus respectively. If we assume a 2D geometry, the L2 invariant on the Center is redundant, and the Normal is always Fixed. There are then 3 × 1 × 2 × 3 × 3 = 54 possible signatures for the geom. However, several of these describe the same situation. For example, the signature:

    <Center-Free, Radius-Free, FixedPts-0, FixedLines-2>

which describes a circle constrained to be at specific distances from two fixed lines, can be rewritten to:

    <Center-L1, Radius-Free, FixedPts-0, FixedLines-0>

which describes a circle constrained to be on a 1-dimensional locus (in this case the angular bisector of two lines).
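The count of 54 raw signatures can be checked by enumerating the tuple components under the 2D assumptions stated above. This is only a sketch: the tuple encoding and the function name `reformulate_two_lines` are assumptions made for illustration, and the single rewrite shown is just the one example given in the text, not the full set of reformulation rules.

```python
from itertools import product

# Component domains for a variable-radius circle in 2D
# (L2 on the center is redundant and the normal is always Fixed).
CENTER = ("Free", "L1", "Fixed")
NORMAL = ("Fixed",)
RADIUS = ("Free", "Fixed")
FIXED_PTS = (0, 1, 2)
FIXED_LINES = (0, 1, 2)

# Each signature is a tuple (center, normal, radius, fixed_pts, fixed_lines).
ALL_SIGNATURES = list(product(CENTER, NORMAL, RADIUS, FIXED_PTS, FIXED_LINES))


def reformulate_two_lines(signature):
    """Apply only the one rewrite quoted in the text; other signatures pass through."""
    if signature == ("Free", "Fixed", "Free", 0, 2):
        # Two fixed-distance-line invariants become a 1d locus on the center.
        return ("L1", "Fixed", "Free", 0, 0)
    return signature


if __name__ == "__main__":
    print(len(ALL_SIGNATURES))
    print(reformulate_two_lines(("Free", "Fixed", "Free", 0, 2)))
```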
Using reformulation rules, we can derive the signature scheme for variable radius circles consisting of only 10 canonical signatures given below:

    <Center-Free, Radius-Free, FixedPts-0, FixedLines-0>
    <Center-Free, Radius-Free, FixedPts-0, FixedLines-1>
    <Center-Free, Radius-Free, FixedPts-1, FixedLines-0>
    <Center-Free, Radius-Fixed, FixedPts-0, FixedLines-0>
    <Center-L1, Radius-Free, FixedPts-0, FixedLines-0>
    <Center-L1, Radius-Free, FixedPts-0, FixedLines-1>
    <Center-L1, Radius-Free, FixedPts-1, FixedLines-0>
    <Center-L1, Radius-Fixed, FixedPts-0, FixedLines-0>
    <Center-Fixed, Radius-Free, FixedPts-0, FixedLines-0>
    <Center-Fixed, Radius-Fixed, FixedPts-0, FixedLines-0>

Similarly, the number of signatures for line-segments can be reduced from 108 to 19 using reformulation rules.

2.2 Geometry Specific Knowledge

The geometry specific knowledge is organized as three different kinds of rules.

2.2.1 MATCHING RULES

These are used to match terms using geometric properties. The planner employs a unification algorithm to match actions and determine whether two actions have a common unifier. However, the standard unification algorithm is not sufficient for our purposes, since it is purely syntactic and does not use knowledge about geometry. To illustrate this, consider the following two actions:

    (rotate $g $pt1 ?vec1 ?amt1), and
    (rotate $g $pt2 ?vec2 ?amt2).

The first term denotes a rotation of a fixed geom $g, around a fixed point $pt1 about an arbitrary axis by an arbitrary amount. The second term denotes a rotation of the same geom around a different fixed point $pt2 with the rotation axis and amount being unspecified as before. Standard unification fails when applied to the above terms because no binding of variables makes the two terms syntactically equal². However, resorting to knowledge about geometry, we can match the two terms to yield the following term:

    (rotate $g $pt1 (v- $pt2 $pt1) ?amt1)

which denotes a rotation of the geom around the axis passing through points $pt1 and $pt2.
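The contrast between purely syntactic unification and the geometric match of the two rotate terms can be sketched as follows. Everything in this block is an illustrative assumption rather than the planner's actual code: terms are encoded as nested Python tuples, `unify` is a textbook unifier without an occurs check, and `match_rotations` is a hypothetical stand-in for the one matching rule described above.

```python
def is_var(term):
    """Variables are strings starting with '?'; '$' symbols are constants."""
    return isinstance(term, str) and term.startswith("?")


def walk(term, subst):
    """Follow variable bindings until reaching an unbound term."""
    while is_var(term) and term in subst:
        term = subst[term]
    return term


def unify(a, b, subst):
    """Purely syntactic unification (no occurs check); returns a dict or None."""
    a, b = walk(a, subst), walk(b, subst)
    if a == b:
        return subst
    if is_var(a):
        return {**subst, a: b}
    if is_var(b):
        return {**subst, b: a}
    if isinstance(a, tuple) and isinstance(b, tuple) and len(a) == len(b):
        for x, y in zip(a, b):
            subst = unify(x, y, subst)
            if subst is None:
                return None
        return subst
    return None


def match_rotations(a, b):
    """Geometric match of two rotations of one geom about distinct fixed points.

    If both axes are unconstrained variables, the combined action rotates about
    the axis through the two points; otherwise this sketch reports no match.
    """
    if len(a) != 5 or len(b) != 5 or a[0] != "rotate" or b[0] != "rotate":
        return None
    _, geom, pt1, axis1, amt1 = a
    _, geom2, pt2, axis2, _ = b
    if geom != geom2 or is_var(pt1) or is_var(pt2) or pt1 == pt2:
        return None
    if is_var(axis1) and is_var(axis2):
        return ("rotate", geom, pt1, ("v-", pt2, pt1), amt1)
    return None


r1 = ("rotate", "$g", "$pt1", "?vec1", "?amt1")
r2 = ("rotate", "$g", "$pt2", "?vec2", "?amt2")

if __name__ == "__main__":
    print(unify(r1, r2, {}))        # syntactic unification fails on $pt1 vs $pt2
    print(match_rotations(r1, r2))  # geometric rule yields the combined rotation
```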
The point around which the body is rotated can be any point on the axis (here it is arbitrarily chosen to be one of the fixed points, $pt1) and the amount of rotation can be anything.

² Specifically, unification fails when it tries to unify $pt1 and $pt2.

The planner applies the matching rules to match the outermost expression in a term first; if no rule applies, it tries subterms of that term, and so on. If none of the matching rules apply, then this algorithm degenerates to standard unification. The matching rules can also have conditions attached to them. The condition can be any boolean function; however, for the most part they tend to be simple type checks.

2.2.2 REFORMULATION RULES

As mentioned earlier, there are several ways to specify constraints that restrict the same degrees of freedom of a geom. In GCE, plan fragments are indexed by signatures which summarize the available degrees of freedom of a geom. To reduce the number of plan fragments that need to be written and indexed, it is desirable to reduce the number of allowable signatures. This is accomplished with a set of invariant reformulation rules which are used to rewrite pairs of invariants on a geom into an equivalent pair of simpler invariants (using a well-founded ordering). Here equivalence means that the two sets of invariants produce the same range of motions in the geom. This reduces the number of different combinations of invariants for which plan fragments need to be written.
An example of invariant reformulation is the following:

    (fixed-distance-line ?c ?l1 ?d1 BIAS_COUNTERCLOCKWISE)
    (fixed-distance-line ?c ?l2 ?d2 BIAS_CLOCKWISE)
            ⇓                                        (RR-1)
    (1d-constrained-point ?c (>> ?c center)
        (angular-bisector
            (make-displaced-line ?l1 BIAS_LEFT ?d1)
            (make-displaced-line ?l2 BIAS_RIGHT ?d2)
            BIAS_COUNTERCLOCKWISE
            BIAS_CLOCKWISE))

This rule takes two invariants: (1) a geom is at a fixed distance to the left of a given line, and (2) a geom is at a fixed distance to the right of a given line. The reformulation produces the invariant that the geom lies on the angular bisector of two lines that are parallel to the two given lines and at the specified distance from them. Either of the two original invariants in conjunction with the new one is equivalent to the original set of invariants.

Besides reducing the number of plan fragments, reformulation rules also help to simplify action rules. Currently all action rules (for variable radius circles and line-segments) use only a single action to preserve or achieve an invariant. If we do not restrict the allowable signatures on a geom, it is possible to create examples where we need a sequence of more than one action in the rule to achieve the invariant, or we need complex conditions that must be checked to determine rule applicability. Allowing sequences and conditionals in the rules increases the complexity of both the rules and the pattern matcher. This makes it difficult to verify the correctness of rules and reduces the efficiency of the pattern matcher.

Using invariant reformulation rules allows us to limit action rules to those that contain a single action. Unfortunately, it seems that we still need conditions to achieve certain invariants. For example, consider the following invariant on a variable radius circle:

    (fixed-distance-point ?circle ?pt ?dist BIAS_OUTSIDE)

which states that a circle, ?circle, must be at some distance ?dist from a point ?pt and lie outside a circle around ?pt with radius ?dist.
One action that may be taken to achieve this constraint is: (scale ?circle (>> ?circle center)