Chapter 10 VISION AND VIDEO MODELS AND APPLICATIONS

- Format: PDF

- Size: 184.86 KB

- Pages: 30

English article: The Giver, chapter-by-chapter summaries

Chapter 1: The story opens with Jonas's anxiety and tension. It describes the events and feelings of each member of Jonas's family during the day, which they share at the dinner table.

Chapter 2: At the dinner table, Jonas talks with his parents about the upcoming Ceremony of Twelve and admits his anxiety. His parents reassure him with their own experiences.

Chapter 3: This chapter mainly shows how Jonas's perceptions differ from other people's. He notices that Gabe's eyes are unlike everyone else's and unusually deep. His sister Lily says she would like to be a Birthmother. While playing catch with Asher, Jonas sees the apple change, and he secretly takes it home to study.

Chapter 4: Jonas does his volunteer hours and admires the achievements of a boy named Benjamin. He also goes to the House of the Old to bathe the elderly, where they talk about Roberto's release ceremony.

Chapter 5: The family shares their dreams. Jonas describes a dream that reflects the "Stirrings", and his mother tells him that from now on he must take a pill every day.

Chapter 6: This chapter is mainly about ceremonies. Children of each age have their own ceremony, and this chapter describes Lily's. She receives a jacket with pockets; each age's ceremony gives children new rights and duties.

Chapter 7: The Twelves receive their Assignments. Each child has a number. Fiona and Asher both receive the jobs they wanted, but Jonas is skipped, and he wonders why.

Chapter 8: Jonas is selected as the next Receiver of Memory. Such a selection is very rare, and Jonas had been noticed a decade earlier. The role requires integrity, intelligence, courage, and vision. Jonas feels a little lost and does not know what the future holds.

Chapter 9: Jonas begins his life as the Receiver of Memory. His job is different from everyone else's but very important, and his parents are proud of him. Still, he feels that something is strange.

Chapter 10: Jonas starts his training. His job is to receive memories from the Giver, and the first memory he receives is of snow, a sled, and a hill, which thrills him.

Chapter 11: The old man continues transmitting memories to Jonas. Jonas experiences snow and sunburn for himself, which makes him feel both happy and uncomfortable, and he is astonished.

Chapter 12: A new night in Jonas's life: Gabriel is restless and anxious, and Jonas receives a new memory, the colors of the rainbow.

Chapter 13: Jonas learns the names of the colors and can perceive them, but only he and the Giver can see them. He tries to make Asher see them but fails. He begins to feel that life in the community is ordinary.

Chapter 14: Jonas feels hunger and pain for the first time. He wonders why the memories cannot be shared with others to lessen the pain. The Giver tells him that doing so would cause trouble.

Chapter 15: The Giver can no longer bear the pain of a memory of war, so Jonas helps him carry it. Jonas sees the terrible suffering war causes and hopes it will never happen again.

Chapter 16: The Giver passes on memories of love and warmth, his favorite memories, which have almost vanished from the community. Jonas asks his parents whether they love him and is told that the word does not apply. Jonas does not think that is right, and the next morning he does not take his pill.

Chapter 17: This chapter focuses on Jonas's family. They go out to play a shooting game together, which reminds Jonas of the war memory he recently received, and he fails at the game.

Chapter 18: Jonas asks the Giver about release, and the Giver tells him about a girl named Rosemary who, ten years earlier, applied for release because she could not bear the pain of the memories.

Chapter 19: Jonas and the Giver talk about the release of a newborn twin that morning. Jonas watches a video in which his father releases the twin with the lower birth weight, and he learns that "release" means killing, which he finds very hard to accept.

Chapter 20: Having learned the truth, Jonas is in great pain and cannot accept it, so he and the Giver make a plan for his escape.

Chapter 21: Jonas prepares to leave the community, and his father says that Gabe will eventually be released. That night Jonas flees on his bicycle, taking Gabe with him. They ride into the forest and evade the search.

Chapter 22: Jonas and Gabe travel farther and farther from the community, avoiding the searchers along the way and sustained by memories. They find fruit to eat, and at night Jonas uses the memory of cold to hide from the search planes.

Chapter 23: Jonas and Gabe reach the top of a snowy hill, where it is bitterly cold. Jonas's memories are fading faster and faster, and he uses what remains to keep Gabe alive. At last, together, they feel the memory of the love of family and friends.

University AI English Textbook: Translation

Introduction
In recent years, artificial intelligence (AI) has become a ubiquitous presence in our lives, revolutionizing many industries and fields. To meet the growing demand for AI professionals, universities have begun offering courses and developing textbooks on the subject. This article translates the key content of a university-level English AI textbook into Chinese, giving students a comprehensive resource for understanding this rapidly evolving field.

Chapter 1: Introduction to Artificial Intelligence
Artificial intelligence (AI) is a branch of computer science concerned with building intelligent machines that can perform tasks that typically require human intelligence. AI can be divided into two categories: narrow AI, which is designed to perform a specific task, and general AI, which aims to replicate human-level intelligence across a wide range of domains.

Chapter 2: Machine Learning
Machine learning is a subset of AI that enables computers to learn and improve from experience without being explicitly programmed. It involves developing algorithms and models that let computers analyze and interpret data, identify patterns, and make predictions or decisions based on the observed information. The three main types of machine learning are supervised learning, unsupervised learning, and reinforcement learning.

Chapter 3: Neural Networks
Neural networks are a fundamental concept in AI. Inspired by the structure and function of the human brain, they consist of interconnected nodes, or artificial neurons. A network learns from training data by adjusting the weights of the connections between nodes to improve its performance. Deep learning, a subfield of machine learning, uses neural networks with many layers to solve complex problems and achieve higher accuracy in tasks such as image recognition and natural language processing.

Chapter 4: Natural Language Processing
Natural language processing (NLP) focuses on enabling computers to interact with and understand human language in a natural and meaningful way. It involves developing algorithms and models that can process, analyze, and generate human language, enabling tasks such as machine translation, sentiment analysis, and chatbot development. NLP plays a crucial role in bridging the gap between humans and AI systems.

Chapter 5: Computer Vision
Computer vision is an interdisciplinary field concerned with extracting, analyzing, and understanding visual information from images or videos. Using AI techniques, computers can recognize objects, detect and track motion, and perform tasks such as facial recognition and image classification. Computer vision has many applications, including autonomous vehicles, surveillance systems, and augmented reality.

Chapter 6: Robotics and Artificial Intelligence
The integration of AI and robotics has led to significant advances in robotics. AI-powered robots can perceive their environment, make autonomous decisions, and interact effectively with humans and other robots. This chapter explores the role of AI in robotics, covering topics such as robot perception, robot control, and human-robot interaction.

Chapter 7: Ethical and Social Implications of AI
As AI continues to advance, its ethical considerations and potential societal impact become increasingly important. This chapter examines the ethical dilemmas surrounding AI, including privacy concerns, biases in AI systems, and the impact of AI on employment and the workforce. It emphasizes the need for responsible development and deployment of AI technologies so that they benefit humanity and uphold ethical standards.

Conclusion
This article has provided a translated overview of key topics in a university-level English AI textbook. By becoming familiar with these concepts, students can deepen their understanding of artificial intelligence and its applications. The translation can also serve as a resource for educators and researchers in the Chinese-speaking community who want to expand their knowledge of this rapidly advancing field. As AI continues to develop, bridging language barriers and fostering global collaboration are essential for driving innovation and ensuring responsible AI implementation.
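Chapters 2 and 3 of the overview describe supervised learning and neural networks that learn by adjusting the connections between nodes. The sketch below shows that idea at the smallest possible scale: a single artificial neuron (equivalent to logistic regression) trained by stochastic gradient descent on labeled examples. It is an illustration only and is not taken from the textbook being summarized. The toy dataset (logical AND), the learning rate, the epoch count, and the names `train_neuron` and `predict` are all assumptions chosen for the example.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, epochs=5000, lr=0.5, seed=0):
    """Fit one sigmoid neuron to (inputs, label) pairs with stochastic gradient descent.

    Illustrative sketch: epochs, lr and seed are arbitrary assumed values.
    Returns the learned connection weights and bias.
    """
    rng = random.Random(seed)
    n_inputs = len(data[0][0])
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            # Forward pass: weighted sum of the inputs plus bias, then sigmoid.
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # For sigmoid + cross-entropy loss, the gradient with respect to
            # the weighted sum is simply (prediction - label).
            err = p - y
            # "Learning": nudge each connection weight against its gradient.
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

def predict(weights, bias, x):
    """Return class 1 if the neuron's output is at least 0.5, else class 0."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0

# Labeled training data for logical AND: the labels are the "supervision".
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

w, b = train_neuron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])  # expected: [0, 0, 0, 1]
```

A single neuron can only separate classes with a straight line; it cannot learn XOR, for example. Deep learning, as Chapter 3 notes, stacks many layers of such units so that the network can represent far more complex functions.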

Books on Computer Vision

Computer vision is the discipline that studies how to enable computers to "see".

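It draws on image processing, pattern recognition, machine learning, and other fields, and it is one of the major branches of artificial intelligence.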

To help readers understand computer vision better, here are some recommended books.

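1. Computer Vision: Models, Learning, and Inference, by Simon J.D. Prince. This book is one of the classic textbooks in computer vision and gives a comprehensive introduction to the field's basic principles, methods, and techniques.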

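It covers not only traditional computer vision tasks such as image classification, object detection, and image segmentation, but also recent deep learning methods applied to computer vision.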

2. Computer Vision: Algorithms and Applications, by Richard Szeliski. This widely used textbook systematically introduces the basic concepts, algorithms, and applications of computer vision.

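It covers everything from image formation and processing to 3D reconstruction and motion estimation, and it provides many practical examples and code samples.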

3. Deep Learning for Computer Vision, by Adrian Rosebrock. This book focuses on applying deep learning to computer vision.

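It explains in detail the methods and techniques for using deep learning in image classification, object detection, image segmentation, and similar tasks.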

It also shows how to build computer vision applications with popular deep learning libraries such as TensorFlow and Keras.

4. Computer Vision: A Modern Approach, by David Forsyth and Jean Ponce. This comprehensive textbook covers all aspects of computer vision, including image processing, feature extraction, object detection, and motion estimation.

It presents both traditional computer vision methods and recent deep learning techniques.

The 14th Five-Year Plan Starts a New Journey to a Modernized Socialist Country

By Audrey Guo

2021 is a year of special importance in China's modernization process: it is the first year of the 14th Five-Year Plan and the start of a new journey to build a modern socialist country.

Each Five-Year Plan sets the direction for China's economic and social development over a five-year period and is an important way for China to carry out its development strategies and build consensus on development. In March 2021, the 14th Five-Year Plan for National Economic and Social Development and the Long-Range Objectives Through the Year 2035 (draft) was adopted during the annual sessions of the National People's Congress and the Chinese People's Political Consultative Conference (NPC and CPPCC).

According to the plan, to promote high-quality development during the 14th Five-Year Plan period, China must apply the new development philosophy to build a new development pattern in a new development stage; continue to deepen supply-side structural reform; establish an effective system for expanding domestic demand and build a strong domestic market; unswervingly advance reform and opening-up; and strengthen both the leading role of the domestic economic cycle and the role of the international cycle in raising the efficiency and level of the domestic cycle, so that the domestic and international cycles reinforce each other in healthy "dual circulation".

The new Five-Year Plan highlights high-quality development

The 14th Five-Year Plan sets 20 major indicators in five categories to assess the results of economic and social development. Compared with the previous plan, some indicators are new, some are newly worded, and some have been dropped.

As in previous Five-Year Plans, the economic development indicators come first. However, the plan sets no specific expected average annual GDP growth rate for the next five years; instead, it proposes to keep the economy within a reasonable range and to set specific goals each year according to the situation.

Expected indicators are goals anticipated by the central government, to be achieved mainly through the independent behavior of market players. Besides the GDP growth rate, there are two other expected indicators for "economic development": annual growth in labor productivity (higher than GDP growth) and the urbanization rate of the permanent resident population (65% by 2025), reflecting the potential for future development.

In terms of innovation-driven development, the indicators will be comprehensively upgraded by 2025: value added from core digital-economy industries will rise to 10% of GDP; total R&D spending will grow by more than 7% a year on average, accounting for a higher share of GDP than during the 13th Five-Year Plan period; and the number of high-value invention patents per 10,000 people will reach 12.

The digital economy is becoming an increasingly important driver of economic and social development, so forward-looking indicators are needed to track it. They reflect China's aim to lead industrial development, promote high-quality economic development, and build a new development pattern during the 14th Five-Year Plan period.

The invention-patent indicator has gained the new modifier "high-value", meaning that more attention will be paid to the quality rather than the quantity of innovation.
Compared with the previous Five-Year Plan, "contribution of scientific and technological advances to economic growth" and "Internet access" are no longer listed as major indicators, showing that the indicator system keeps pace with the times.

Of the 20 main indicators, 7 relate directly to people's well-being, showing the plan's attention to livelihoods. Three of these are new: the surveyed urban unemployment rate (below 5.5%), the number of practicing physicians (including physician assistants) per 1,000 people (3.2), and nursery capacity for children under 3 per 1,000 people (4.5). Compared with the 13th Five-Year Plan, two indicators, the rural population lifted out of poverty and the rebuilding of housing in run-down urban areas, were removed, since China has won the battle against poverty.

The "resources and environment" category of the 13th Five-Year Plan has been replaced by "ecological conservation", and all of its indicators are obligatory targets. Although their number was cut from 10 to 5, each carries great weight. Obligatory targets are commitments to the people: building on anticipatory indicators, they further strengthen the government's responsibility. They include reductions of 13.5% and 18% in energy consumption and carbon dioxide emissions per unit of GDP, respectively, and a forest coverage rate of 24.1%.
These indicators will fully demonstrate China's "green" economic development.

A new stage of development and new goals require the indicator system to keep pace with the times. The 20 main indicators not only carry on those of previous Five-Year Plans but also embody the innovations required by the new era, making them better suited to China's economic and social situation now and over the next five years.

Ministries and commissions are releasing development plans one after another

State-owned Assets Supervision and Administration Commission (SASAC): The digital economy, platform economy, and sharing economy will be vigorously developed during the 14th Five-Year Plan period

At a press conference on April 16, 2021, Peng Huagang, secretary general and spokesman of SASAC, said that during the 14th Five-Year Plan period SASAC will guide enterprises to seize the opportunities of the new round of technological revolution and industrial transformation; focus on key emerging-industry sectors where they have a good foundation, distinctive features, and advantages; take an active part in building new infrastructure, new-type urbanization, and major transportation and water-conservancy projects; vigorously develop the digital economy, platform economy, and sharing economy; and accelerate the cultivation of new drivers of economic development.

Ministry of Ecology and Environment: China exceeded its 2020 carbon emission reduction goal

On June 4, 2021, the Ministry of Ecology and Environment reported that China's carbon emission intensity in 2020 was 18.8% lower than in 2015, exceeding the obligatory target of the 13th Five-Year Plan, while non-fossil energy reached 15.9% of China's energy consumption; both figures exceeded the goals China had set for 2020. In September 2020, China made a solemn commitment to the world that it would strive to peak carbon emissions by 2030 and achieve carbon neutrality by 2060.
Therefore, the outline of the 14th Five-Year Plan and Vision 2035 makes it an important task to promote green ways of production and living and to have carbon emissions peak, stabilize, and then decline.

Ministry of Industry and Information Technology: Accelerate the digital transformation of manufacturing

The 14th Five-Year Plan for the Development of the Information and Communications Industry (the Plan), officially issued in November 2021, sets the overall goal for the period: by 2025, the information and communications industry will be larger in overall scale and significantly better in quality of development; construction of new digital infrastructure that is high-speed and ubiquitous, integrated and interconnected, intelligent and green, and safe and reliable will be basically complete; innovation capacity will be greatly enhanced; new business models will flourish; and the industry's ability to empower the digital transformation and upgrading of the economy and society will be comprehensively strengthened. By the end of 2025, the industry will reach a new level of green development, with a 15% reduction in comprehensive energy consumption per unit of total telecom business volume and a PUE (power usage effectiveness) below 1.3 for newly built large and super-large data centers.

General Administration of Customs: Enhance Belt and Road cooperation

On July 27, 2021, the General Administration of Customs issued the 14th Five-Year Plan for Customs, setting out development and implementation plans for Belt and Road cooperation, the construction of free trade zones, public health at ports, intellectual property protection, biosafety at national borders, anti-smuggling, and more.
According to the plan, over the next five years the number of members of the Belt and Road international customs cooperation mechanism will rise from 53 in 2020 to more than 90 in 2025; the number of countries (regions) connected with China through the international "single window" will increase from 1 at present to 15; and the number of countries (regions) that have signed AEO (Authorized Economic Operator) mutual recognition arrangements with China will rise from 42 to more than 60. Over the same period, 35 international sanitary ports will be built in China to strengthen Chinese ports' ability to respond quickly and effectively to public health emergencies.

National Intellectual Property Administration: Ensure that the milestone goal of building a strong intellectual property powerhouse is met on schedule

In late October 2021, the State Council issued the National Plan for the Protection and Application of Intellectual Property Rights During the 14th Five-Year Plan Period, setting "four new goals" for IP work: scaling new heights in IP protection, achieving new results in IP application, reaching a new stage in IP services, and making new breakthroughs in international IP cooperation.
It also proposed eight anticipatory indicators for 2025: raising the number of high-value invention patents per 10,000 people to 12, the number of overseas invention patents granted to 90,000, the registered amount of IP pledge financing to RMB 320 billion, the total annual import and export value of IP royalties to RMB 350 billion, value added from patent-intensive industries to 13% of GDP, value added from copyright industries to 7.5% of GDP, the public satisfaction score for IP protection to 82, and the rate at which parties accept first-instance rulings in civil IP cases without appeal to 85%, so that the milestone goal of building a strong IPR powerhouse is completed on schedule.

Ministry of Commerce: Boost and deepen innovation in science and technology, institutions, industries, and business models

Recently, the Ministry of Commerce issued the Plan for High-quality Development of Foreign Trade during the 14th Five-Year Plan Period. It highlights deepening innovation in science and technology, institutions, industries, and business models, and outlines 10 major tasks covering green trade, trade digitalization, the integration of domestic and foreign trade, and other new trends in international trade, to guide the innovative development of foreign trade. In 2021, new business forms drove new growth: more than 2,000 overseas warehouses were built, and from January to October 2021, cross-border e-commerce imports and exports grew by 19.5%.
According to the plan, during the 14th Five-Year Plan period China will further open its domestic market; encourage imports of high-quality consumer goods; increase imports of advanced technology, important equipment, key components, energy and resource products, and agricultural products in short supply in the domestic market; optimize the list of cross-border e-commerce retail imports; further promote local processing of imports in border trade; and stimulate imports from neighboring countries, in order to vigorously develop trade in high-quality, high-tech, high-value-added, green, and low-carbon products.

To dear IE91ers

Essentials of Contemporary Management, made by Yiwen

About this summary: it follows the exam scope that Yu Ruifeng marked out in the last class, and nothing outside that scope is covered ^_^.

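Key content that appeared in the short-answer and case questions of the three 2006 and 2007 exam papers is included in detail, together with the scoring points from the answers. Items marked with a smiley face are best memorized outright; if you really cannot memorize everything, at least understand and memorize the shaded items, because using the keywords in long answers is what earns the points.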

Three most important reminders: first, the best study material is the three past exam papers (no explanation needed); second, two lines are enough for each short-answer question in the exam (you have to save some time for the case questions!); third, good luck on the exam! Yiwen

Chapter 1. The Management Process

1. Achieving High Performance
【Efficiency】A measure of how well or productively resources are used to achieve a goal.
【Effectiveness】A measure of the appropriateness of the goals an organization is pursuing and of the degree to which the organization achieves those goals.

2. Managerial Functions
【Planning】Identifying and selecting appropriate goals.
【Organizing】Structuring working relationships in a way that allows organizational members to work together to achieve organizational goals.
【Leading】Articulating a clear vision and energizing and enabling organizational members so that they understand the part they play in achieving organizational goals.
【Controlling】Evaluating how well an organization is achieving its goals and taking action to maintain or improve performance.

3. Levels of Management
【First-line manager】A manager who is responsible for the daily supervision of nonmanagerial employees.
【Middle manager】A manager who supervises first-line managers and is responsible for finding the best way to use resources to achieve organizational goals.
【Top manager】A manager who establishes organizational goals, decides how departments should interact, and monitors the performance of middle managers.

4. Managerial Roles and Skills
(1) Managerial Roles Identified by Mintzberg
【Decisional】→Entrepreneur (commits resources, decides on expansion) →Disturbance Handler (deals with unexpected problems) →Resource Allocator →Negotiator (works with suppliers, distributors, labor unions, and other organizations)
【Informational】→Monitor (evaluates managers, watches the environment) →Disseminator →Spokesperson
【Interpersonal】→Figurehead (the literal Chinese rendering is "puppet"; take the figurative sense) →Leader →Liaison (establishes alliances between different departments or different organizations)
(2) Managerial Skills
【Conceptual skills】The ability to analyze and diagnose a situation and to distinguish between cause and effect.
【Human skills】The ability to understand, alter, lead, and control the behavior of other individuals and groups.
【Technical skills】Job-specific knowledge and techniques that are required to perform an organizational role.

5. Management in a Global Environment
(1) Building a Competitive Advantage
Increasing efficiency →Increasing quality →Increasing speed, flexibility, and innovation →Increasing responsiveness to customers
(2) Maintaining Ethical Standards
(3) Managing a Diverse Workforce
(4) Utilizing Information Technology and E-commerce

Chapter 3. Maintaining Ethical Standards

1. Factors Influencing Behavior
→External pressures from stockholders for increased organizational financial performance.
→Internal pressures from top management on lower-level managers to increase the organization's competitive performance and profitability.
→Societal, cultural, and environmental demands on the organization.

2. Ethics and Stakeholders
【Stakeholders】Shareholders, employees, customers, suppliers, and others who have an interest, claim, or stake in an organization and in what it does.
→Each group of stakeholders wants a different outcome, and managers must work to satisfy as many as possible.
→Managers have the responsibility to decide which goals an organization should pursue to most benefit stakeholders, which means making decisions that benefit some stakeholder groups at the expense of others.
【Ethics】Moral principles or beliefs about what is right or wrong.
→Ethics guide managers in their dealings with stakeholders and others when the best course of action is unclear.
→Managers often experience an ethical dilemma in choosing between the conflicting interests of stakeholders.

3. Ethical Decision Models
【Utilitarian Model】An ethical decision is one that produces the greatest good for the greatest number of people.
【Moral Rights Model】An ethical decision is one that best maintains and protects the fundamental rights and privileges of the people affected by it.
【Justice Model】An ethical decision is one that distributes benefits and harms among stakeholders in a fair, equitable, or impartial way.

Chapter 4. Managing in a Global Environment
【Organizational environment】A set of forces and conditions that operate beyond an organization's boundaries but affect a manager's ability to acquire and utilize resources.

1. The Task Environment
【Suppliers】Individuals and organizations that provide an organization with the input resources it needs to produce goods and services (e.g., raw materials, component parts, labor).
【Distributors】Organizations that help other organizations sell their goods or services to customers.
【Customers】Individuals and groups that buy the goods and services that an organization produces.
【Competitors】Organizations that produce goods and services similar to a particular organization's goods and services.
Barriers to entry: government regulations, brand loyalty, economies of scale.

2. The General Environment
【Economic forces】Interest rates, inflation, unemployment, economic growth, and other factors that affect the general health and well-being of a nation or the regional economy of an organization.
【Technological forces】Outcomes of changes in the technology that managers use to design, produce, or distribute goods and services.
【Sociocultural forces】Pressures emanating from the social structure of a country or society or from the national culture.
【Demographic forces】Outcomes of changes in, or changing attitudes toward, the characteristics of a population, such as age, gender, ethnic origin, race, sexual orientation, and social class.
【Political and legal forces】Outcomes of changes in laws and regulations, such as the deregulation of industries, the privatization of organizations, and increased emphasis on environmental protection.
【Global forces】Outcomes of changes in international relationships.

3. The Changing Global Environment
(1) The role of national culture
【Values】Ideas about what a society believes to be good, desirable, and beautiful.
【Norms】Unwritten rules and codes of conduct that prescribe how people should act in particular situations.
【Hofstede's Model of National Culture】
【Individualism versus Collectivism】Individualism values individual freedom and self-expression and holds a strong belief in personal rights and the need for persons to be judged by their achievements rather than their social background. Collectivism values the subordination of the individual to the goals of the group (e.g., Japan).
【Power Distance】A society's acceptance of differences in the well-being of citizens due to differences in heritage and in physical and intellectual capabilities. In high power distance societies, the gap between rich and poor becomes very wide (e.g., Panama and Malaysia). In the low power distance societies of Western cultures (e.g., the United States and Germany), the gap between rich and poor is reduced by taxation and welfare programs.
【Achievement versus Nurturing Orientation】Achievement-oriented societies value assertiveness, performance, and success and are results-oriented (e.g., the United States and Japan). Nurturing-oriented cultures value quality of life, personal relationships, and service (e.g., Sweden and Denmark).
【Uncertainty Avoidance】Societies and people differ in their tolerance for uncertainty and risk. Low uncertainty avoidance cultures (e.g., the U.S. and Hong Kong) value diversity and tolerate a wide range of opinions and beliefs. High uncertainty avoidance societies (e.g., Japan and France) are more rigid and expect high conformity in their citizens' beliefs and norms of behavior.
【Long-Term Outlook】Cultures with a long-term outlook (e.g., Taiwan and Hong Kong) are based on the values of saving and persistence. Short-term outlook societies (e.g., France and the United States) seek to maintain personal stability or happiness in the present.
【National Culture and Global Management】Management practices that are effective in one culture often will not work as well in another.
(2) Declining barriers of distance and culture
(3) Declining barriers to trade and investment
(4) Effects of free trade on managers

Chapter 5. Decision Making, Learning, Creativity, and Innovation

1. The Nature of Managerial Decision Making
【Decision making】The process by which managers respond to opportunities and threats by analyzing options and making determinations about specific organizational goals and courses of action.
(1) Programmed and Nonprogrammed Decision Making
【Programmed decision making】Routine, nearly automatic decision making that follows established rules or guidelines.
【Nonprogrammed decision making】Nonroutine decision making that occurs in response to unusual, unpredictable opportunities and threats.
Faced with nonprogrammed decisions, managers must search for information about alternative courses of action and rely on intuition and judgment to choose wisely among alternatives.
→Intuition: the ability to make sound decisions based on one's past experience and immediate feelings about the information at hand.
→Judgment (better than intuition): the ability to develop a sound opinion based on one's evaluation of the importance of the information at hand.
(2) Classical Decision-Making Model
【Classical decision-making model】A prescriptive approach to decision making based on the assumption that the decision maker can identify and evaluate all possible alternatives and their consequences and rationally choose the most appropriate course of action.
【Optimum decision】The most appropriate decision in light of what managers believe to be the most desirable future consequences for their organization.
【Administrative model】An approach to decision making that explains why decision making is inherently uncertain and risky and why managers usually make satisfactory rather than optimum decisions. The administrative model is based on three important concepts: bounded rationality, incomplete information, and satisficing.
【Bounded rationality】Cognitive limitations that constrain one's ability to interpret, process, and act on information.
【Incomplete information】Information is incomplete because of risk and uncertainty, ambiguity, and time constraints.
→ Risk: The degree of probability that the possible outcomes of a particular course of action will occur (the probabilities can be stated in definite figures).
→ Uncertainty: Unpredictability (the future is unknown and probabilities cannot be determined).
→ Ambiguous Information: Information that can be interpreted in multiple and often conflicting ways (recall the picture that can be seen as either a young woman or an old woman).
→ Time Constraints and Information Costs.
【Satisficing】Searching for and choosing an acceptable, or satisfactory, response to problems and opportunities, rather than trying to make the best decision.
2. Steps in the Decision-Making Process
(1) Recognize the Need for a Decision
(2) Generate Alternatives
(3) Assess Alternatives: Legality → Ethicalness → Economic Feasibility (related to money) → Practicality (the organization has the ability and resources, and the alternative does not threaten other organizational goals)
(4) Choose Among Alternatives
(5) Implement the Chosen Alternative
(6) Learn from Feedback
3. Group Decision Making
→【Groupthink】A pattern of faulty and biased decision making that occurs in groups whose members strive for agreement among themselves at the expense of accurately assessing information relevant to a decision.
Solutions to groupthink:
(i)【Devil’s Advocacy】Critical analysis of a preferred alternative, made in response to challenges raised by a group member who, playing the role of devil’s advocate, defends unpopular or opposing alternatives for the sake of argument.
(ii)【Diversity Among Decision Makers】
4. Organizational Learning and Creativity
【Organizational Learning】The process through which managers seek to improve employees’ desire and ability to understand and manage the organization and its task environment.
【Learning Organization】An organization in which managers try to maximize the ability of individuals and groups to think and behave creatively and thus maximize the potential for organizational learning to take place. The heart of organizational learning is creativity.
【Creativity】A decision maker’s ability to discover original and novel ideas that lead to feasible alternative courses of action.
【Innovation】The implementation of creative ideas in an organization.
Creating a Learning Organization (Senge)
(1) Develop personal mastery.
(2) Build complex, challenging mental models.
(3) Promote team learning.
(4) Build shared vision.
(5) Encourage systems thinking.
Promoting Individual Creativity
Promoting Group Creativity
【Brainstorming】A group problem-solving technique in which managers meet face-to-face to generate and debate a wide variety of alternatives from which to make a decision.
→ Production Blocking: A loss of productivity in brainstorming sessions due to the unstructured nature of brainstorming.
【Nominal Group Technique】A decision-making technique in which group members write down ideas and solutions, read their suggestions to the whole group, and discuss and then rank the alternatives. The nominal group technique is especially useful when an issue is controversial and when different managers might be expected to champion different courses of action. Its main improvement over brainstorming is that it provides a more structured way of generating alternatives in writing and gives each manager more time and opportunity to generate alternative solutions.
Promoting Creativity at the Global Level

Chapter 6. Planning, Strategy, and Change
【Strategy】A cluster of decisions about what goals to pursue, what actions to take, and how to use resources to achieve goals.
【Mission】A broad declaration of an organization’s purpose that identifies the organization’s products and customers and distinguishes the organization from its competitors.
Overview: The planning process includes three major steps:
(1) Determining an organization’s mission and major goals;
(2) Choosing strategies to realize the mission and goals;
(3) Selecting the appropriate way of organizing resources to implement the strategies.
1. Planning
【Planning】Identifying and selecting appropriate goals and courses of action; one of the four principal functions of management.
Planning is a three-step activity:
(1) Determining the organization’s mission and goals;
(2) Formulating strategy;
(3) Implementing strategy and changing the organization.
In large organizations, planning usually takes place at three levels of management: corporate, business or division, and department or functional.
【Division】A business unit that has its own set of managers and functions or departments and competes in a distinct industry.
【Divisional Managers】Managers who control the various divisions of an organization.
【Corporate-level Plan】Top management’s decisions pertaining to the organization’s mission, overall strategy, and structure.
【Corporate-level Strategy】A plan that indicates in which industries and national markets an organization intends to compete.
【Business-level Plan】Divisional managers’ decisions pertaining to the division’s long-term goals, overall strategy, and structure.
【Business-level Strategy】A plan that indicates how a division intends to compete against its rivals in an industry.
【Functional-level Plan】Functional managers’ decisions pertaining to the goals that they propose to pursue to help the division attain its business-level goals.
【Functional-level Strategy】A plan that indicates how a function intends to achieve its goals.
【Function】A unit or department in which people have the same skills or use the same resources to perform their jobs.
【Functional Managers】Managers who supervise the various functions, such as manufacturing, accounting, and sales, within a division.
Functional goals and strategies should be consistent with divisional goals and strategies, which in turn should be consistent with corporate goals and strategies.
Although ultimate responsibility for planning may lie with certain select managers within an organization, all managers and many non-managerial employees typically participate in the planning process.
Time Horizons of Plans
【Time Horizon】The intended duration of a plan.
【Long-term Plan】Five years or more.
【Intermediate-term Plan】Between one and five years.
【Short-term Plan】One year or less.
【Rolling Plan】A plan that is updated and amended every year to take account of changing conditions in the external environment.
Standing Plans and Single-use Plans
【Standing Plans】Used in situations in which programmed decision making is appropriate.
(1) Policy: A general guide to action;
(2) Rule: A formal, written guide to action;
(3) Standard Operating Procedure (SOP): A written instruction describing the exact series of actions that should be followed in a specific situation.
【Single-use Plans】Developed to handle non-programmed decision making in unusual or one-of-a-kind situations.
Planning’s Importance
(1) Planning is a useful way of getting managers to participate in decision making about the appropriate goals and strategies for an organization.
(2) Planning is necessary to give the organization a sense of direction and purpose.
(3) A plan helps coordinate managers of the different functions and divisions of an organization to ensure that they all pull in the same direction.
(4) A plan can be used as a device for controlling managers within an organization.
Effective plans should have four qualities (Henri Fayol):
(1) Unity: At any one time only one central, guiding plan is put into operation to achieve an organizational goal.
(2) Continuity: Planning is an ongoing process in which managers build on and refine previous plans and continually modify plans at all levels.
(3) Accuracy: Managers need to make every attempt to collect and utilize all available information at their disposal in the planning process.
(4) Flexibility
2. Determining Mission and Goals
Defining the Business
(1) Who are our customers?
(2) What customer needs are being satisfied?
(3) How are we satisfying customer needs?
Establishing Major Goals
Goals must be challenging but realistic, with a definite period in which they are to be achieved.
3. Formulating Strategy: SWOT Analysis
【Strategy Formulation】Analysis of an organization’s current situation followed by the development of strategies to accomplish its mission and achieve its goals.
【SWOT Analysis】A planning exercise in which managers identify organizational strengths (S) and weaknesses (W), and environmental opportunities (O) and threats (T).
4. Formulating Strategy: Corporate Level
Concentration on a Single Business
Can make the organization a strong competitor, but can be risky.
Diversification
【Diversification】Expanding operations into a new business or industry and producing new goods or services.
【Related Diversification】Entering a new business or industry to create a competitive advantage in one or more of an organization’s existing divisions or businesses.
→ Synergy: Performance gains that result when individuals and departments coordinate their actions.
【Unrelated Diversification】Entering a new industry or buying a company in a new industry that is not related in any way to an organization’s current businesses or industries.
The reasons to pursue unrelated diversification:
(1) Managers can buy a poorly performing company, transfer their management skills to it, turn around its business, and increase its performance.
(2) Portfolio strategy.
There is evidence that too much diversification can cause managers to lose control of their organization’s core business.
International Expansion
【Global Strategy】Selling the same standardized product and using the same basic marketing approach in each national market.
【Multidomestic Strategy】Customizing products and marketing strategies to specific national conditions.
Vertical Integration
A strategy that allows an organization to create value by producing its own inputs or distributing its own products.
【Backward Vertical Integration】(input side, e.g., raw materials) A firm seeks to reduce its input costs by producing its own inputs.
【Forward Vertical Integration】(output side, e.g., distribution and sales) A firm distributes its outputs or products to lower distribution costs and ensure quality service to customers.
5. Formulating Strategy: Business Level
Low-cost Strategy
Driving the organization’s total costs down below the total costs of rivals.
Differentiation Strategy
Distinguishing an organization’s products from the products of competitors in dimensions such as product design, quality, or after-sales service.
Focused Low-cost Strategy
Serving only one market segment and being the lowest-cost organization serving that segment.
Focused Differentiation Strategy
Serving only one market segment as the most differentiated organization serving that segment.
6. Formulating Strategy: Functional Level
7. Implementing Strategy and Changing the Organization
Strategy implementation is a five-step process:
(1) Allocate implementation responsibility to the appropriate individuals or groups.
(2) Draft detailed action plans for implementation.
(3) Establish a timetable for implementation.
(4) Allocate appropriate resources.
(5) Hold specific groups or individuals responsible for the attainment of corporate, divisional, and functional goals.

Chapter 7. Organizing: Designing Organizational Structure
【Organizational Architecture】The organizational structure, control systems, and culture that together determine how efficiently and effectively organizational resources are used.
1. Designing Organizational Structure
【Organizational Structure】A formal system of task and reporting relationships that coordinates and motivates organizational members so that they work together to achieve organizational goals.
【Organizational Design】The process by which managers make specific organizing choices that result in a particular kind of organizational structure.
According to contingency theory, managers design organizational structures to fit the factors or circumstances that are affecting the company the most and causing them the most uncertainty. Thus, there is no one best way to design an organization: in some situations stable, mechanistic structures may be most appropriate, while in others flexible, organic structures might be the most effective.
Four factors are important determinants of the type of organizational structure or organizing method managers select:
(1) The nature of the organizational environment
(2) The type of strategy the organization pursues
(3) The technology the organization uses
(4) The characteristics of the organization’s human resources
The Organizational Environment
The more quickly the external environment is changing and the greater the uncertainty within it, the more suitable a flexible, organic structure is. If the external environment is stable, resources are readily available, and uncertainty is low, a more formal structure is suitable.
Strategy
A differentiation strategy needs a flexible structure; a low-cost strategy may need a more formal structure. Increased vertical integration or diversification also requires a more flexible structure.
Technology
More complex technology makes it harder for managers to regulate the organization.
【Technology】The combination of skills, knowledge, tools, equipment, computers, and machines used in the organization.
【Small-batch Technology】Technology that is used to produce small quantities of customized, one-of-a-kind products and is based on the skills of people who work together in small groups.
【Mass-production Technology】Technology that is based on the use of automated machines that are programmed to perform the same operations over and over.
A structure that decentralizes authority to employees and allows them to respond flexibly is most appropriate with small-batch technology. With mass-production technology, a formal structure is the preferred choice because it gives managers the most control over the production process.
Human Resources
Highly skilled workers whose jobs require working in teams usually need a more flexible structure. Highly skilled workers (e.g., CPAs and doctors) often have internalized professional norms.
【Job Design】The process by which managers decide how to divide tasks into specific jobs.
【Division of Labor】Splitting the work to be performed into particular tasks and assigning tasks to individual workers.
【Job Simplification】The process of reducing the number of tasks that each worker performs.
→ Too much simplification may reduce efficiency: workers may find their jobs boring and monotonous.
Job Enlargement and Job Enrichment
【Job Enlargement】Increasing the number of tasks for a given job by changing the division of labor.
→ The intention is to reduce boredom and fatigue by increasing the variety of tasks performed.
【Job Enrichment】Increasing the degree of responsibility a worker has over a job.
→ The intention is to increase worker involvement. It requires a flexible organizational structure that allows employees to act flexibly and creatively.
→ There are four ways to enrich a job:
(1) Empowering workers to experiment to find better ways of doing their jobs.
(2) Encouraging workers to develop new skills.
(3) Allowing workers to decide how to respond to unexpected situations.
(4) Allowing workers to monitor and measure their own performance.
The Job Characteristics Model (Hackman & Oldham)
Every job has five characteristics that determine how motivating the job is.
(1) Skill Variety: The extent to which a job requires an employee to use a wide range of different skills, abilities, or knowledge.
(2) Task Identity: The extent to which a job requires a worker to perform all the tasks required to complete the job from the beginning to the end of the production process.
(3) Task Significance: The degree to which a worker feels his or her job is meaningful because of its effect on people inside the organization.
(4) Autonomy: The degree to which a job gives an employee the freedom and discretion needed to schedule different tasks and decide how to carry them out.
(5) Feedback: The extent to which actually doing a job provides a worker with clear and direct information about how well he or she has performed the job.
The more employees feel that their work is meaningful, that they are responsible for work outcomes, and that they are responsible for knowing how those outcomes affect others, the more motivating work becomes and the more likely employees are to be satisfied and to perform at a high level.
3. Grouping Jobs into Functions and Divisions
Functional Structure
【Function】A group of people, working together, who possess similar skills or use the same kind of knowledge, tools, or techniques to perform their jobs.
【Functional Structure】An organizational structure composed of all the departments that an organization requires to produce its goods or services.
→ Advantages: Encourages learning from others doing similar jobs. Easy for managers to monitor and evaluate workers.
→ Disadvantages: Difficult for departments to communicate with others. Preoccupation with own department and losing sight of organizational goals.
Divisional Structures
【Divisional Structure】An organizational structure composed of separate business units within which are the functions that work together to produce a specific product for a specific customer. Divisions develop a business-level strategy to compete. Functional managers report to

Obstacles to New Object Recognition

The rapid advancements in artificial intelligence and machine learning have revolutionized various industries, including computer vision. One of the key challenges in computer vision is the ability to accurately recognize and classify objects in images or videos. While significant progress has been made in this field, the emergence of new and diverse objects presents ongoing challenges that researchers and developers must address.

One of the primary challenges in new object recognition is the ever-expanding variety of objects that need to be identified. As technology progresses and new products are constantly introduced, the pool of objects that a computer vision system must recognize continues to grow. This requires continuous model updates, retraining, and the incorporation of new data to keep the system accurate and relevant.

Another challenge is the inherent complexity of many new objects. As technology becomes more sophisticated, the objects themselves can become increasingly intricate in their design, materials, and functionality. This complexity can make it more difficult for computer vision systems to distinguish between different objects, particularly when they share similar visual characteristics.

Furthermore, the environments in which these new objects are encountered can also pose challenges. Computer vision systems must operate under a wide range of lighting conditions, backgrounds, and perspectives, all of which can significantly affect recognition accuracy. Adapting to these variable environmental factors is crucial for reliable object recognition in real-world scenarios.

One particular area of concern is the recognition of objects that are not part of the training data used to develop the computer vision models. This is known as the "open-world" problem, in which the system must identify and classify objects that it has not been explicitly trained on.
This requires the development of more flexible and adaptable models that can generalize beyond the specific instances used in training.

Another challenge in new object recognition is the need for efficient and scalable algorithms. As the number of objects to be recognized grows, the computational resources required to process and analyze the data become increasingly demanding. Efficient algorithms that handle large-scale object recognition while maintaining high accuracy are crucial for real-time applications and for deployment in resource-constrained environments.

Additionally, incorporating domain-specific knowledge and contextual information can greatly enhance the performance of new object recognition systems. By leveraging external data sources, such as product databases, user manuals, or expert knowledge, computer vision models can gain a deeper understanding of the objects they must recognize. This can help overcome the limitations of purely visual approaches and improve overall recognition accuracy.

One promising approach to these challenges is the use of transfer learning and meta-learning techniques. Transfer learning leverages knowledge gained from training on one set of objects to improve performance on a different but related set of objects. This can accelerate training and improve the generalization capabilities of the models.

Meta-learning, on the other hand, focuses on developing models that can quickly adapt to new tasks or environments with minimal additional training. By incorporating meta-learning principles, computer vision systems can become more flexible and able to recognize new objects without extensive retraining.

Furthermore, the integration of multimodal sensing, such as combining visual, auditory, and tactile information, can provide a more comprehensive understanding of the objects being recognized.
This can be particularly useful in scenarios where visual cues alone may not be sufficient for accurate identification.

Another important aspect to consider is the ethical and societal implications of new object recognition systems. As these technologies become more widespread, it is crucial to ensure that they are developed and deployed responsibly and transparently, addressing concerns related to privacy, bias, and the potential misuse of the technology.

In conclusion, the recognition of new and diverse objects presents significant challenges for computer vision systems. From the ever-expanding variety of objects to their inherent complexity and variable environmental factors, researchers and developers must continually innovate and adapt their approaches to overcome these obstacles. By leveraging advanced techniques such as transfer learning, meta-learning, and multimodal sensing, and by addressing ethical considerations, the field of new object recognition can continue to progress and deliver increasingly accurate and reliable solutions for a wide range of applications.

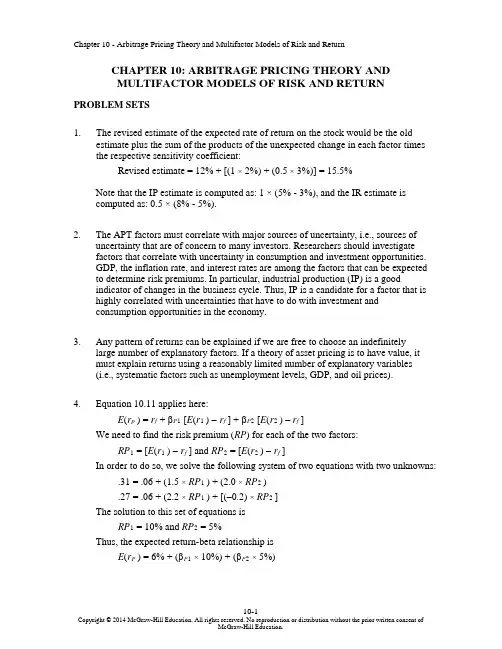

CHAPTER 10: ARBITRAGE PRICING THEORY AND MULTIFACTOR MODELS OF RISK AND RETURN
PROBLEM SETS

1. The revised estimate of the expected rate of return on the stock would be the old estimate plus the sum of the products of the unexpected change in each factor times the respective sensitivity coefficient:
Revised estimate = 12% + [(1 × 2%) + (0.5 × 3%)] = 15.5%
Note that the IP term is computed as 1 × (5% − 3%), and the IR term is computed as 0.5 × (8% − 5%).

2. The APT factors must correlate with major sources of uncertainty, i.e., sources of uncertainty that are of concern to many investors. Researchers should investigate factors that correlate with uncertainty in consumption and investment opportunities. GDP, the inflation rate, and interest rates are among the factors that can be expected to determine risk premiums. In particular, industrial production (IP) is a good indicator of changes in the business cycle. Thus, IP is a candidate for a factor that is highly correlated with uncertainties that have to do with investment and consumption opportunities in the economy.

3. Any pattern of returns can be explained if we are free to choose an indefinitely large number of explanatory factors. If a theory of asset pricing is to have value, it must explain returns using a reasonably limited number of explanatory variables (i.e., systematic factors such as unemployment levels, GDP, and oil prices).

4. Equation 10.11 applies here:
$$E(r_P) = r_f + \beta_{P1}[E(r_1) - r_f] + \beta_{P2}[E(r_2) - r_f]$$
We need to find the risk premium (RP) for each of the two factors:
$$RP_1 = E(r_1) - r_f \quad\text{and}\quad RP_2 = E(r_2) - r_f$$
To do so, we solve the following system of two equations in two unknowns:
$$0.31 = 0.06 + (1.5 \times RP_1) + (2.0 \times RP_2)$$
$$0.27 = 0.06 + (2.2 \times RP_1) + (-0.2 \times RP_2)$$
The solution to this set of equations is RP1 = 10% and RP2 = 5%. Thus, the expected return-beta relationship is
$$E(r_P) = 6\% + (\beta_{P1} \times 10\%) + (\beta_{P2} \times 5\%)$$

5. The expected return for portfolio F equals the risk-free rate since its beta equals 0.
For portfolio A, the ratio of risk premium to beta is (12 − 6)/1.2 = 5.
For portfolio E, the ratio is lower, at (8 − 6)/0.6 = 3.33.
This implies that an arbitrage opportunity exists. For instance, you can create a portfolio G with beta equal to 0.6 (the same as E’s) by combining portfolio A and portfolio F in equal weights. The expected return and beta for portfolio G are then:
$$E(r_G) = (0.5 \times 12\%) + (0.5 \times 6\%) = 9\%$$
$$\beta_G = (0.5 \times 1.2) + (0.5 \times 0) = 0.6$$
Comparing portfolio G to portfolio E, G has the same beta and a higher expected return. Therefore, an arbitrage opportunity exists: buy portfolio G and sell an equal amount of portfolio E. The profit from this arbitrage will be
$$r_G - r_E = [9\% + (0.6 \times F)] - [8\% + (0.6 \times F)] = 1\%$$
That is, 1% of the funds (long or short) in each portfolio.

6. Substituting the portfolio returns and betas in the expected return-beta relationship, we obtain two equations in two unknowns, the risk-free rate (r_f) and the factor risk premium (RP):
$$12\% = r_f + (1.2 \times RP)$$
$$9\% = r_f + (0.8 \times RP)$$
Solving these equations, we obtain r_f = 3% and RP = 7.5%.

7. a. Shorting an equally weighted portfolio of the ten negative-alpha stocks and investing the proceeds in an equally weighted portfolio of the ten positive-alpha stocks eliminates the market exposure and creates a zero-investment portfolio. Denoting the systematic market factor as R_M, the expected dollar return is (noting that the expectation of nonsystematic risk, e, is zero):
$$\$1{,}000{,}000 \times [0.02 + (1.0 \times R_M)] - \$1{,}000{,}000 \times [(-0.02) + (1.0 \times R_M)] = \$1{,}000{,}000 \times 0.04 = \$40{,}000$$
The sensitivity of the payoff of this portfolio to the market factor is zero because the exposures of the positive-alpha and negative-alpha stocks cancel out. (Notice that the terms involving R_M sum to zero.) Thus, the systematic component of total risk is also zero.
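The payoff arithmetic in part (a) can be checked with a short Python sketch. It assumes the inputs used above ($1,000,000 on each side, alphas of +2% and −2%, betas of 1.0) and sets the firm-specific residuals to their expected value of zero; the function names are hypothetical helpers, not part of the original solution. Evaluating the payoff at several arbitrary market-factor outcomes shows that R_M drops out.

```python
"""Sketch: expected payoff of the zero-investment long/short position in 7(a).

Assumed inputs (from the solution text): $1,000,000 long in the positive-alpha
stocks (alpha = +0.02) and $1,000,000 short in the negative-alpha stocks
(alpha = -0.02), all with market beta 1.0. Residuals e are set to their
expected value of zero.
"""


def leg_return(position: float, alpha: float, beta: float, r_m: float) -> float:
    """Expected dollar return of one leg under R = alpha + beta * R_M + e, E[e] = 0."""
    return position * (alpha + beta * r_m)


def long_short_payoff(position: float, alpha_long: float, alpha_short: float,
                      beta: float, r_m: float) -> float:
    """Long leg minus short leg; the beta * R_M terms cancel when betas match."""
    return (leg_return(position, alpha_long, beta, r_m)
            - leg_return(position, alpha_short, beta, r_m))


def net_factor_exposure(position: float, beta_long: float, beta_short: float) -> float:
    """Dollar sensitivity of the combined position to the market factor."""
    return position * beta_long - position * beta_short


if __name__ == "__main__":
    POSITION = 1_000_000
    for r_m in (-0.10, 0.0, 0.15):  # arbitrary illustrative market-factor values
        payoff = long_short_payoff(POSITION, 0.02, -0.02, 1.0, r_m)
        print(f"R_M = {r_m:+.2f}: expected payoff = ${payoff:,.0f}")
    print("Net factor exposure ($ per unit of R_M):",
          net_factor_exposure(POSITION, 1.0, 1.0))
```

Because both legs carry the same beta, every market outcome gives the same $40,000 expected payoff; only firm-specific risk remains, and that is what drives the variance of the analyst’s profit.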
The variance of the analyst’s profit is not zero, however, since this portfolio is not well diversified.
For n = 20 stocks (i.e., long 10 stocks and short 10 stocks), the investor will have a $100,000 position (either long or short) in each stock. Net market exposure is zero, but firm-specific risk has not been fully diversified. The variance of dollar returns from the positions in the 20 stocks is
$$20 \times (100{,}000 \times 0.30)^2 = 18{,}000{,}000{,}000$$
The standard deviation of dollar returns is $134,164.
b. If n = 50 stocks (25 stocks long and 25 stocks short), the investor will have a $40,000 position in each stock, and the variance of dollar returns is
$$50 \times (40{,}000 \times 0.30)^2 = 7{,}200{,}000{,}000$$
The standard deviation of dollar returns is $84,853.
Similarly, if n = 100 stocks (50 stocks long and 50 stocks short), the investor will have a $20,000 position in each stock, and the variance of dollar returns is
$$100 \times (20{,}000 \times 0.30)^2 = 3{,}600{,}000{,}000$$
The standard deviation of dollar returns is $60,000.
Notice that when the number of stocks increases by a factor of 5 (i.e., from 20 to 100), the standard deviation decreases by a factor of √5 = 2.23607 (from $134,164 to $60,000).

8. a.
$$\sigma^2 = \beta^2\sigma_M^2 + \sigma^2(e)$$
$$\sigma_A^2 = (0.8^2 \times 20^2) + 25^2 = 881$$
$$\sigma_B^2 = (1.0^2 \times 20^2) + 10^2 = 500$$
$$\sigma_C^2 = (1.2^2 \times 20^2) + 20^2 = 976$$
b. If there are an infinite number of assets with identical characteristics, then a well-diversified portfolio of each type will have only systematic risk, since the nonsystematic risk will approach zero with large n. Each variance is simply β² × market variance:
$$\sigma^2_{A,\text{well-diversified}} = 256;\qquad \sigma^2_{B,\text{well-diversified}} = 400;\qquad \sigma^2_{C,\text{well-diversified}} = 576$$
The mean will equal that of the individual (identical) stocks.
c. There is no arbitrage opportunity because the well-diversified portfolios all plot on the security market line (SML). Because they are fairly priced, there is no arbitrage.

9. a. A long position in a portfolio (P) composed of portfolios A and B will offer an expected return-beta trade-off lying on a straight line between points A and B. Therefore, we can choose weights such that β_P = β_C but with expected return higher than that of portfolio C. Hence, combining P with a short position in C will create an arbitrage portfolio with zero investment, zero beta, and a positive rate of return.
b. The argument in part (a) leads to the proposition that the coefficient of β² must be zero in order to preclude arbitrage opportunities.

10. a. E(r) = 6% + (1.2 × 6%) + (0.5 × 8%) + (0.3 × 3%) = 18.1%
b. Surprises in the macroeconomic factors will result in surprises in the return of the stock:
Unexpected return from macro factors = [1.2 × (4% − 5%)] + [0.5 × (6% − 3%)] + [0.3 × (0% − 2%)] = −0.3%
E(r) = 18.1% − 0.3% = 17.8%

11. The APT required (i.e., equilibrium) rate of return on the stock based on r_f and the factor betas is
Required E(r) = 6% + (1 × 6%) + (0.5 × 2%) + (0.75 × 4%) = 16%
According to the equation for the return on the stock, the actually expected return on the stock is 15% (because the expected surprises on all factors are zero by definition). Because the actually expected return is less than the equilibrium return required for the stock’s risk, we conclude that the stock is overpriced.

12. The first two factors seem promising with respect to the likely impact on the firm’s cost of capital. Both are macro factors that would elicit hedging demands across broad sectors of investors. The third factor, while important to Pork Products, is a poor choice for a multifactor SML because the price of hogs is of minor importance to most investors and is therefore highly unlikely to be a priced risk factor. Better choices would focus on variables that investors in aggregate might find more important to their welfare. Examples include: inflation uncertainty, short-term interest-rate risk, energy price risk, or exchange rate risk.
The important point here is that, in specifying a multifactor SML, we must not confuse risk factors that are important to a particular investor with factors that are important to investors in general; only the latter are likely to command a risk premium in the capital markets.

13. The formula is
$$E(r) = 0.04 + (1.25 \times 0.08) + (1.5 \times 0.02) = 0.17 = 17\%$$

14. If r_f = 4%, then, based on the sensitivities to real GDP (0.75) and inflation (1.25), McCracken would calculate the expected return for the Orb Large Cap Fund to be:
$$E(r) = 0.04 + (0.75 \times 0.08) + (1.25 \times 0.02) = 0.04 + 0.085 = 0.125 = 12.5\%$$
that is, 8.5% above the risk-free rate.
Therefore, Kwon’s fundamental analysis estimate is congruent with McCracken’s APT estimate. If we assume that both Kwon’s and McCracken’s estimates of the return on Orb’s Large Cap Fund are accurate, then no arbitrage profit is possible.

15. To eliminate inflation exposure, the following three equations must be solved simultaneously: the GDP sensitivity equals 1 in the first equation, the inflation sensitivity equals 0 in the second equation, and the weights sum to 1 in the third equation.
$$1.25\,w_x + 0.75\,w_y + 1.0\,w_z = 1$$
$$1.5\,w_x + 1.25\,w_y + 2.0\,w_z = 0$$
$$w_x + w_y + w_z = 1$$
Here, x represents Orb’s High Growth Fund, y represents the Large Cap Fund, and z represents the Utility Fund. Algebraic manipulation yields w_x = w_y = 1.6 and w_z = −2.2.

16. Since retirees living off a steady income would be hurt by inflation, this portfolio would not be appropriate for them. Retirees would want a portfolio with a return positively correlated with inflation to preserve value, and less correlated with the variable growth of GDP. Thus, Stiles is wrong. McCracken is correct in that supply-side macroeconomic policies are generally designed to increase output with a minimum of inflationary pressure. Increased output would mean higher GDP, which in turn would increase returns of a fund positively correlated with GDP.

17. The maximum residual variance is tied to the number of securities (n) in the portfolio because, as we increase the number of securities, we are more likely to encounter securities with larger residual variances. The starting point is to determine the practical limit on the portfolio residual standard deviation, σ(e_P), that still qualifies as a well-diversified portfolio. A reasonable approach is to compare σ²(e_P) to the market variance or, equivalently, to compare σ(e_P) to the market standard deviation. Suppose we do not allow σ(e_P) to exceed pσ_M, where p is a small decimal fraction, for example, 0.05; then, the smaller the value we choose for p, the more stringent our criterion for defining how diversified a well-diversified portfolio must be.
Now construct a portfolio of n securities with weights w_1, w_2, …, w_n, so that Σw_i = 1. The portfolio residual variance is
$$\sigma^2(e_P) = \sum_i w_i^2\,\sigma^2(e_i)$$
To meet our practical definition of sufficiently diversified, we require this residual variance to be less than (pσ_M)². A sure and simple way to proceed is to assume the worst, that is, assume that the residual variance of each security is the highest possible value allowed under the assumptions of the problem:
$$\sigma^2(e_i) = n\,\sigma_M^2$$
In that case
$$\sigma^2(e_P) = \sum_i w_i^2\, n\,\sigma_M^2$$
Now apply the constraint:
$$\sum_i w_i^2\, n\,\sigma_M^2 \le (p\,\sigma_M)^2$$
This requires that
$$n \sum_i w_i^2 \le p^2$$
or, equivalently, that
$$\sum_i w_i^2 \le \frac{p^2}{n}$$
A relatively easy way to generate a set of well-diversified portfolios is to use portfolio weights that follow a geometric progression, since the computations then become relatively straightforward. Choose w_1 and a common factor q for the geometric progression such that q < 1. Then the weight on each stock is a fraction q of the weight on the previous stock in the series, and the sum of the n terms is:
$$\sum_i w_i = w_1\,\frac{1-q^n}{1-q} = 1 \quad\Longrightarrow\quad w_1 = \frac{1-q}{1-q^n}$$
The sum of the n squared weights is similarly obtained from w_1² and a common geometric progression factor of q².
Therefore,

$$\sum_{i=1}^{n} w_i^2 = w_1^2\,\frac{1 - q^{2n}}{1 - q^2}$$

Substituting for $w_1$ from above, we obtain

$$\sum_{i=1}^{n} w_i^2 = \frac{(1 - q)^2}{(1 - q^n)^2} \times \frac{1 - q^{2n}}{1 - q^2}$$

For sufficient diversification, we choose $q$ so that $\sum_{i=1}^{n} w_i^2 \le p^2/n$.

For example, continue to assume that $p = 0.05$ and $n = 1{,}000$. If we choose $q = 0.9973$, then we will satisfy the required condition. At this value for $q$, $w_1 = 0.0029$ and $w_n = w_1 q^{n-1} = 0.0029 \times 0.9973^{999}$. In this case, $w_1$ is about 15 times $w_n$. Despite this significant departure from equal weighting, this portfolio is nevertheless well diversified. Any value of $q$ between 0.9973 and 1.0 results in a well-diversified portfolio. As $q$ gets closer to 1, the portfolio approaches equal weighting.

18. a. Assume a single-factor economy, with a factor risk premium $E_M$ and a (large) set of well-diversified portfolios with beta $\beta_P$. Suppose we create a portfolio $Z$ by allocating the portion $w$ to portfolio $P$ and $(1 - w)$ to the market portfolio $M$. Here $R$ denotes a rate of return in excess of the risk-free rate. The excess return on portfolio $Z$ is:

$$R_Z = (w \times R_P) + [(1 - w) \times R_M]$$

Portfolio $Z$ is riskless if we choose $w$ so that $\beta_Z = 0$. This requires (assuming $\beta_P \ne 1$) that:

$$\beta_Z = (w \times \beta_P) + [(1 - w) \times 1] = 0 \quad\Longrightarrow\quad w = \frac{1}{1 - \beta_P} \quad\text{and}\quad 1 - w = \frac{-\beta_P}{1 - \beta_P}$$

Substitute this value for $w$ in the expression for $R_Z$:

$$R_Z = \frac{1}{1 - \beta_P}\,R_P - \frac{\beta_P}{1 - \beta_P}\,R_M$$

Since $\beta_Z = 0$, then, in order to avoid arbitrage, the excess return $R_Z$ must be zero. This implies that $R_P = \beta_P \times R_M$. Taking expectations, we have

$$E_P = \beta_P \times E_M$$

This is the SML for well-diversified portfolios.

b. The same argument can be used to show that, in a three-factor model with factor risk premiums $E_M$, $E_1$, and $E_2$, in order to avoid arbitrage, we must have:

$$E_P = (\beta_{PM} \times E_M) + (\beta_{P1} \times E_1) + (\beta_{P2} \times E_2)$$

This is the SML for a three-factor economy.

19. a. The Fama-French (FF) three-factor model holds that one of the factors driving returns is firm size.
An index with returns highly correlated with firm size (i.e., firm capitalization) that captures this factor is SMB (small minus big), the return for a portfolio of small stocks in excess of the return for a portfolio of large stocks. The returns for a small firm will be positively correlated with SMB. Moreover, the smaller the firm, the greater its residual from the other two factors, the market portfolio and the HML portfolio, which is the return for a portfolio of high book-to-market stocks in excess of the return for a portfolio of low book-to-market stocks. Hence, the ratio of the variance of this residual to the variance of the return on SMB will be larger and, together with the higher correlation, results in a high beta on the SMB factor.

b. This question appears to point to a flaw in the FF model. The model predicts that firm size affects average returns so that, if two firms merge into a larger firm, then the FF model predicts lower average returns for the merged firm. However, there seems to be no reason for the merged firm to underperform the returns of the component companies, assuming that the component firms were unrelated and that they will now be operated independently. We might therefore expect that the performance of the merged firm would be the same as the performance of a portfolio of the originally independent firms, but the FF model predicts that the increased firm size will result in lower average returns. Therefore, the question revolves around the behavior of returns for a portfolio of small firms, compared to the return for larger firms that result from merging those small firms into larger ones.
Had past mergers of small firms into larger firms resulted, on average, in no change in the resultant larger firms’ stock return characteristics (compared to the portfolio of stocks of the merged firms), the size factor in the FF model would have failed. Perhaps the reason the size factor seems to help explain stock returns is that, when small firms become large, the characteristics of their fortunes (and hence their stock returns) change in a significant way. Put differently, stocks of large firms that result from a merger of smaller firms appear empirically to behave differently from portfolios of the smaller component firms. Specifically, the FF model predicts that the large firm will have a smaller risk premium. Notice that this development is not necessarily a bad thing for the stockholders of the smaller firms that merge. The lower risk premium may be due, in part, to the increase in value of the larger firm relative to the merged firms.

CFA PROBLEMS

1. a. This statement is incorrect. The CAPM requires a mean-variance efficient market portfolio, but APT does not.
b. This statement is incorrect. The CAPM assumes normally distributed security returns, but APT does not.
c. This statement is correct.

2. b. Since portfolio X has $\beta = 1.0$, then X is the market portfolio and $E(R_M) = 16\%$. Using $E(R_M) = 16\%$ and $r_f = 8\%$, the expected return for portfolio Y is not consistent.

3. d.

4. c.

5. d.

6. c. Investors will take on as large a position as possible only if the mispricing opportunity is an arbitrage. Otherwise, considerations of risk and diversification will limit the position they attempt to take in the mispriced security.

7. d.

8. d.
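As a numerical cross-check on end-of-chapter Problems 13 through 15 (before the CFA problems), the Python sketch below recomputes the two APT expected returns and solves the three-equation system of Problem 15. It is a minimal illustration, not the textbook's method: the helper names (`apt_expected_return`, `det3`, `solve3`) are hypothetical; the 4% risk-free rate and the factor risk premiums (8% for real GDP, 2% for inflation) are read off the formulas in Problems 13 and 14; and Cramer's rule with exact `Fraction` arithmetic stands in for the unspecified "algebraic manipulation". The sensitivities in Problem 13's formula (1.25 and 1.5) coincide with those the Problem 15 system assigns to the High Growth Fund, so that tuple is reused.

```python
from fractions import Fraction as F


def apt_expected_return(rf, betas, premiums):
    """Multifactor APT: E(r) = r_f + sum of (factor sensitivity x factor risk premium)."""
    return rf + sum(b * lam for b, lam in zip(betas, premiums))


def det3(m):
    """Determinant of a 3x3 matrix given as a list of three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def solve3(a, rhs):
    """Solve the 3x3 linear system a x = rhs by Cramer's rule (exact with Fractions)."""
    d = det3(a)
    if d == 0:
        raise ValueError("system is singular")
    solution = []
    for col in range(3):
        # Replace column `col` of `a` with the right-hand side, then take the determinant.
        m = [row[:col] + [rhs[r]] + row[col + 1:] for r, row in enumerate(a)]
        solution.append(det3(m) / d)
    return solution


RF = F("0.04")                     # risk-free rate
PREMIUMS = (F("0.08"), F("0.02"))  # (real GDP, inflation) factor risk premiums

# (real GDP, inflation) sensitivities as used in the Problem 15 system.
HIGH_GROWTH = (F("1.25"), F("1.5"))
LARGE_CAP = (F("0.75"), F("1.25"))
UTILITY = (F("1.0"), F("2.0"))
FUNDS = (HIGH_GROWTH, LARGE_CAP, UTILITY)

# Problem 15: GDP sensitivity = 1, inflation sensitivity = 0, weights sum to 1.
A = [
    [fund[0] for fund in FUNDS],
    [fund[1] for fund in FUNDS],
    [F(1), F(1), F(1)],
]
weights = solve3(A, [F(1), F(0), F(1)])

if __name__ == "__main__":
    print("Problem 13 E(r):", float(apt_expected_return(RF, HIGH_GROWTH, PREMIUMS)))
    print("Problem 14 E(r):", float(apt_expected_return(RF, LARGE_CAP, PREMIUMS)))
    print("Problem 15 weights (x, y, z):", [float(w) for w in weights])
```

Exact fractions are used so that the weights come out as 8/5, 8/5, and -11/5 with no rounding, which makes it easy to confirm that the resulting portfolio has zero inflation sensitivity.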