Alejandro Jaimes, Nicu Sebe, Multimodal human–computer interaction: A survey, Computer Vision and Image Understanding, 2007.

多模态人机交互综述

摘要:本文总结了多模态人机交互(MMHCI, Multi-Modal Human-Computer Interaction)的主要方法,从计算机视觉角度给出了领域的全貌。我们尤其将重点放在身体、手势、视线和情感交互(人脸表情识别和语音中的情感)方面,讨论了用户和任务建模及多模态融合(multimodal fusion),并指出了多模态人机交互研究的挑战、热点课题和兴起的应用(highlighting challenges, open issues, and emerging applications)。

1. 引言

多模态人机交互(MMHCI)位于包括计算机视觉、心理学、人工智能等多个研究领域的交叉点,我们研究MMHCI是要使得计算机技术对人类更具可用性(Usable),这总是需要至少理解三个方面:与计算机交互的用户、系统(计算机技术及其可用性)和用户与系统间的交互。考虑这些方面,可以明显看出MMHCI 是一个多学科课题,因为交互系统设计者应该具有一系列相关知识:心理学和认知科学来理解用户的感知、认知及问题求解能力(perceptual, cognitive, and problem solving skills);社会学来理解更宽广的交互上下文;工效学(ergonomics)来理解用户的物理能力;图形设计来生成有效的界面展现;计算机科学和工程来建立必需的技术;等等。

MMHCI的多学科特性促使我们对此进行总结。我们不是将重点只放在MMHCI的计算机视觉技术方面,而是给出了这个领域的全貌,从计算机视觉角度I讨论了MMHCI中的主要方法和课题。

1.1. 动机

在人与人通信中本质上要解释语音和视觉信号的混合。很多领域的研究者认识到了这点,并在单一模态技术unimodal techniques(语音和音频处理及计算机视觉等)和硬件技术hardware technologies (廉价的摄像机和其它类型传感器)的研究方面取得了进步,这使得MMHCI方面的研究已经有了重要进展。与传统HCI应用(单个用户面对计算机并利用鼠标或键盘与之交互)不同,在新的应用(如:智能家居[105]、远程协作、艺术等)中,交互并非总是显式指令(explicit commands),且经常包含多个用户。部分原因式在过去的几年中计算机处理器速度、记忆和存储能力得到了显著进步,并与很多使普适计算ubiquitous computing [185,67,66]成为现实的新颖输入和输出设备的有效性相匹配,设备包括电话(phones)、嵌入式系统(embedded systems)、个人数字助理(PDA)、笔记本电脑(laptops)、屏幕墙(wall size displays),等等,大量计算具有不同计算能量和输入输出能力的设备可用意味着计算的未来将包含交互的新途径,一些方法包括手势(gestures)[136]、语音(speech)[143]、触觉(haptics)[9]、眨眼(eye blinks)[58]和其它方法,例如:手套设备(Glove mounted devices)[19] 和and可抓握用户界面(graspable user interfaces)[48]及有形用户界面(Tangible User interface)现在似乎趋向成熟(ripe for exploration),具有触觉反馈、视线跟踪和眨眼检测[69]的点设备(Pointing devices)现也已出现。然而,恰如在人与人通讯中一样,当以组合方式使用不同输入设备时,情感通讯(effective communication)就会发生。

多模态界面具有很多优点[34]:可以防止错误、为界面带来鲁棒性、帮助用户更简单地纠正错误或复原、为通信带来更宽的带宽、对不同的状况和环境增加可选的通信方法。在很多系统中,采用多模态接口消除易出错模态(error prone modalities)的模糊性是多模态应用的重要动机之一,如Oviatt [123]所述,易出错技术可以相互补充,而不是给接口带来冗余和减少纠错的需要。然而,必须指出的是:多模态单独(multiple modalities alone)并不为界面带来好处,多模态的使用可能是无效的(ineffective),甚至是无益的(disadvantageous),据此,Oviatt[124]已经提出了多模态接口的共同错误概念(common misconceptions or myths),其中大多数与采用语音作为输入模态相关。

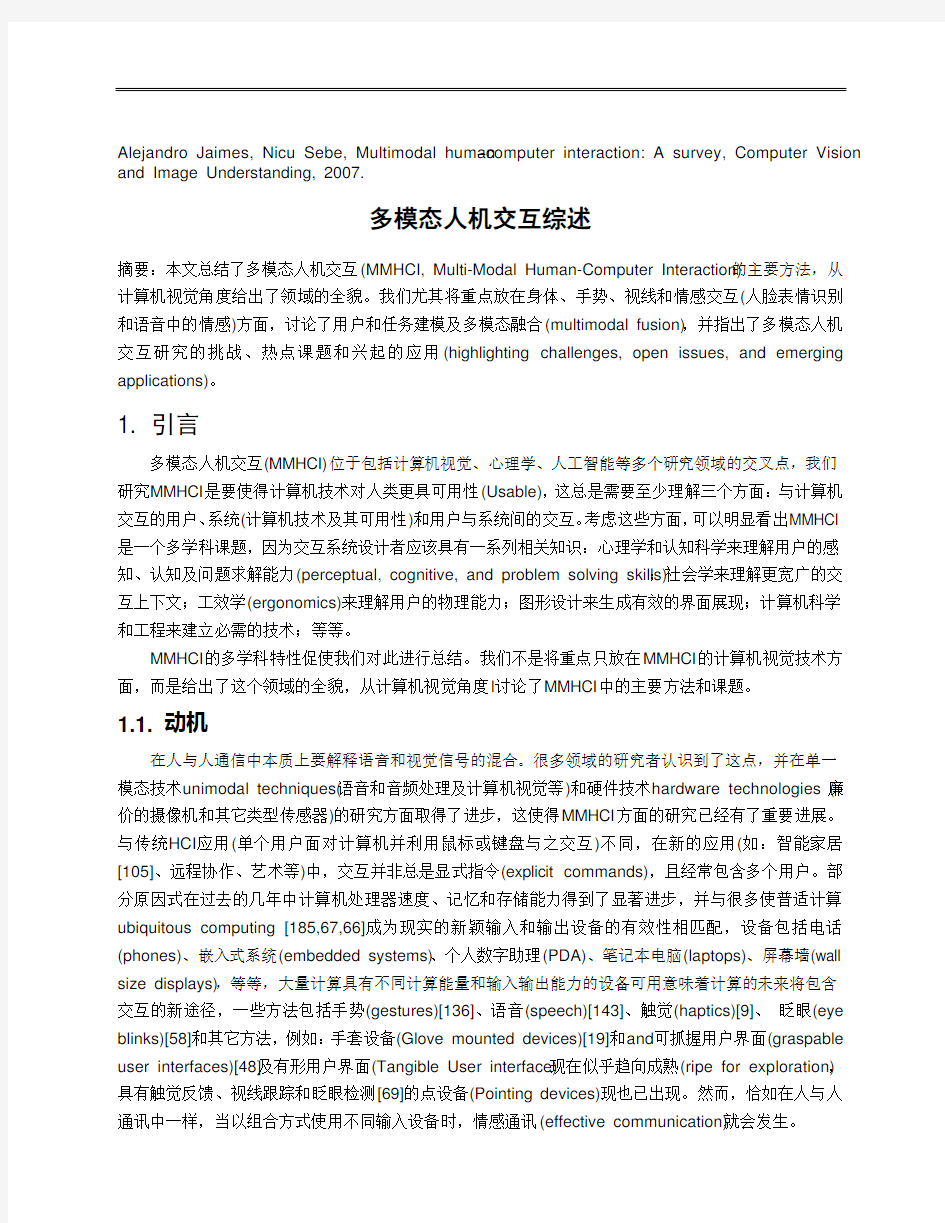

本文中,我们调研了我们认为是MMHCI本质的研究领域,概括了当前研究状况(the state of the art),并以我们的调研结果为基础,给出了MMHCI中的主要趋势和研究课题(identify major trends and open issues)。我们按照人体将视觉技术进行了分组(如图1所示)。大规模躯体运动(Largescale body movement)、姿势(gesture)和注视(gaze)分析用于诸如情感交互中的表情识别任务或其它各种应用。我们讨论了情感计算机交互(affective computer interaction),多模态融合、建模和数据收集中的课题及各种正在出现的MMHCI应用。由于MMHCI是一个非常动态和广泛的研究领域,我们不是去呈现完整的概括,因此,本文的主要贡献是在对在MMHCI中使用的主要计算机视觉技术概括的同时,给出对MMHCI 中的主要研究领域、技术、应用和开放课题的综述。

Fig. 1. 采用以人为中心多模态交互概略

1.2. Related surveys

已经有在多个领域中广泛的综述发表,诸如人脸检测[190,63],人脸识别[196],人脸表情分析(facial expression analysis)[47,131],语音情感(vocal emotion)[119,109],姿态识别(gesture recognition) [96,174,136],人运动分析(human motion analysis)[65,182,182,56,3,46,107],声音-视觉自动语音识别(audio-visual automatic speech recognition)[143]和眼跟踪(eye tracking)[41,36]。对基于视觉HCI的综述呈现在[142]和[73]中,其重点是头部跟踪(head tracking),人脸和脸部表情识别(face and facial expression recognition),眼睛跟踪(eye tracking)及姿态识别(gesture recognition)。文[40]中讨论了自适应和智能HCI,主要是对用于人体运动分析的计算机视觉的综述和较低手臂运动检测、人脸处理和注视分析技术的讨论;[125–128,144,158,135,171]中讨论了多模态接口。[84]和[77]中讨论了HCI的实时视觉技术(Real-time vision),包括人体姿态、对象跟踪、手势、注视力和脸姿态等。这里,我们不讨论前面综述中包含的工作,增加前面综述中没有覆盖的领域(如:[84,40,142,126,115]),并讨论在兴起领域

中的新的应用,着重指出了主要研究课题。相关的的会议和讨论会包括:ACM CHI、IFIP Interact、IEEE CVPR、IEEE ICCV、ACM Multimedia、International Workshop on Human-Centered Multimedia (HCM) in conjunction with ACM Multimedia、International Workshops on Human-Computer Interaction in conjunction with ICCV and ECCV、Intelligent User Interfaces (IUI) conference和International Conference on Multimodal Interfaces (ICMI)。

2. 多模态交互概要

术语“multimodal”已经在很多场合使用并产生了多种释义(如[10-12]中对模态的解释)。对于我们来讲,多模态HCI系统简单地是一个以多种模态或通信通道响应输入的系统(如:语音speech、姿态gesture、书写writing和其它等等),我们采用“以人为中心”的方法(human-centered approach),所指的“借助于模态(by modality)”意味着按照人的感知(human senses)的通信模式和由人激活或衡量人的量(如:血压计)的计算机输入设备,如图1所示。人的感知包括视线(sight)、触觉(touch)、听力(hearing)、嗅觉(smell)和味觉(taste);很多计算机输入设备的输入模态对应于人的感知:摄像机cameras(sight)、触觉传感器haptic sensors (touch)[9]、麦克风microphones(hearing)、嗅觉设备olfactory (smell)和味觉设备taste[92],然而,很多其它由人激活的计算机输入设备可以理解为对于人的感觉的组合或就没有对应物,如:键盘(keyboard)、鼠标(mouse)、手写板(writing tablet)、运动输入(motion input)(如:自身运动用来交互的设备)、电磁皮肤感应器(galvanic skin response)和其它生物传感器(biometric sensors)。.

在我们的定义中,字“input”是最重要的,恰如在实际中大多数与计算机的交互都采用多个模态而发生。例如:当我们打字时,我们接触键盘上的键以将数据输入计算机,但有些人也汇同时用视线阅读我们所输入的或确定所要按压键的位置。因此,牢记交互过程中人所在做的(what the human is doing)与系统实际接收作为输入(what the system is actually receiving as input)间的差异是十分重要的。例如,一台装有麦克风的计算机可能能理解多种语言和仅是不同类型的声音(如:采用人性化界面(humming interface)来进行音乐检索),尽管术语“multimodal”已常用来指这种状况(如:[13]中的多语言输入被认为是多通道的multimodal),但本文仅指那些采用不同模态(如:通信通道)结合的系统是多模态的,如图1所示。例如:一个系统仅采用摄像机对人脸表情和手势作出响应就不是多模态的,即使输入信号来自多个摄像机;利用同样的假设,具有多个键的系统也不是多模态的,但具有鼠标和键盘输入的则是多模态的。尽管对采用诸如鼠标和键盘、键盘和笔等多种设备的多模态交互已经有研究,本文仅涉及视觉(摄像机)输入与其它类型输入结合的人机交互技术。

在HCI中,多模态技术可以用来构造多种不同类型的界面(如图1),我们特别感兴趣的是感知(perceptual)、注意(attentive)和活跃(enactive)界面。如在[177]中定义的那样,感知界面(Perceptual interfaces)[176]是高度互动(interactive)且能使得与计算机丰富、自然和有效交互的多模态界面(multimodal interfaces),感知界面寻找感知输入sensing(input)和绘制输出rendering(output)的杠杆技术以提供利用标准界面及诸如键盘、鼠标和其它监视器等公共I/O设备[177]所不能实现的交互,并使得计算机视觉成为很多情况下的核心要件(central component);注意界面(Attentive interfaces)[180]是依赖于人的注意力作为主要输入的上下文敏感界面(context-aware interfaces)[160],即:注意界面[120]采用收集到的信息来评估与用户通信的最佳时间和方法;由于注意力主要由眼睛注视(eye contact)[160]和姿

态(gestures)(尽管诸如鼠标移动等度量也指示)来体现,计算机视觉在注意界面中起着主要作用。活跃界面(Enactive interfaces)是那些帮助用户以手或身体的主动使用为基础来与一组理解任务的知识通信(help users communicate a form of knowledge based on the active use of the hands or body for apprehension tasks),活跃知识不只是简单的多感知中间知识(multisensory mediated knowledge),而是以机器响应(motor responses)的形式贮存,并由“Doing”动作来获取。典型的例子是诸如打字、驾驶汽车、跳舞、弹奏乐器和粘土制模等任务所需要的能力,所有这些任务是难以采用图标或符号形式来描述的。

3. Human-centered vision

We classify vision techniques for MMHCI using a human-centered approach and we divide them according to the human body: (1) large-scale body movements, (2) hand gestures, and (3) gaze. We make a distinction between command (actions can be used to explicitly execute commands: select menus, etc.) and non-command interfaces (actions or events used to indirectly tune the system to the user’s needs) [111,23].

In general, vision-based human motion analysis systems used for MMHCI can be thought of as having mainly four stages: (1) motion segmentation, (2) object classification, (3) tracking, and (4) interpretation.While some approaches use geometric primitives to model different components (e.g., cylinders used to model limbs, head, and torso for body movements, or for hand and fingers in gesture recognition), others use feature representations based on appearance (appearance-based methods). In the first approach, external markers are often used to estimate body posture and relevant parameters. While markers can be accurate, they place restrictions on clothing and require calibration, so they are not desirable in many applications. Moreover, the attempt to fit geometric shapes to body parts can be computationally expensive and these methods are often not suitable for real-time processing. Appearance based methods, on the other hand, do not require markers, but require training (e.g., with machine learning, probabilistic approaches, etc.). Since they do not require markers, they place fewer constraints on the user and are therefore more desirable.

Next, we briefly discuss some specific techniques for body, gesture, and gaze. The motion analysis steps are similar, so there is some inevitable overlap in the discussions. Some of the issues for gesture recognition, for instance, apply to body movements and gaze detection.

3.1. Large-scale body movements

Tracking of large-scale body movements (head, arms, torso, and legs) is necessary to interpret pose and motion in many MMHCI applications. However, since extensive surveys have been published in this area [182,56,107,183], we discuss the topic briefly.

There are three important issues in articulated motion analysis [188]: representation (joint angles or motion of all the sub-parts), computational paradigms (deterministic or probabilistic), and computation reduction. Body posture analysis is important in many MMHCI applications. For example,

in [172], the authors use a stereo and thermal infrared video system to estimate the driver’s posture for deployment of smart air bags. The authors of [148] propose a method for recovering articulated body pose without initialization and tracking (using learning). The authors of [8] use pose and velocity vectors to recognize body parts and detect different activities, while the authors of [17] use temporal templates.

In some emerging MMHCI applications, group and non-command actions play an important role. In [102], visual features are extracted from head and hand/forearm blobs: the head blob is represented by the vertical position of its centroid, and hand blobs are represented by eccentricity and angle with respect to the horizontal. These features together with audio features (e.g., energy, pitch, and speaking rate, among others) are used for segmenting meeting videos according to actions such as monologue, presentation, white-board, discussion, and note taking. The authors of [60] use only computer vision, but make a distinction between body movements, events, and behaviors, within a rule-based system framework.

Important issues for large-scale body tracking include whether the approach uses 2D or 3D, desired accuracy, speed, occlusion and other constraints. Some of the issues pertaining to gesture recognition, discussed next, can also apply to body tracking.

3.2. Hand gesture recognition

Although in human–human communication gestures are often performed using a variety of body parts (e.g., arms, eyebrows, legs, entire body, etc.), most researchers in computer vision use the term gesture recognition to refer exclusively to hand gestures. We will use the term accordingly and focus on hand gesture recognition in this section.

Psycholinguistic studies of human-to-human communication [103] describe gestures as the critical link between our conceptualizing capacities and our linguistic abilities. Humans use a very wide variety of gestures ranging from simple actions of using the hand to point at objects, to the more complex actions that express feelings and allow communication with others. Gestures should, therefore, play an essential role in MMHCI [83,186,52], as they seem intrinsic to natural interaction between the human and the computer-controlled interface in many applications, ranging from virtual environments [82] and smart surveillance [174], to remote collaboration applications [52].

There are several important issues that should be considered when designing a gesture recognition system [136]. The first phase of a recognition task is choosing a mathematical model that may consider both the spatial and the temporal characteristics of the hand and hand gestures. The approach used for modeling plays a crucial role in the nature and performance of gesture interpretation. Typically, features are extracted from the images or video, and once these features are extracted, model parameters are estimated based on subsets of them until a right match is found. For example, the system might detect n points and attempt to determine if these n points (or a subset of them) could match the characteristics of points extracted from a hand in a particular pose or

performing a particular action. The parameters of the model are then a description of the hand pose or trajectory and depend on the modeling approach used. Among the important problems involved in the analysis are hand localization [187], hand tracking [194], and the selection of suitable features [83]. After the parameters are computed, the gestures represented by them need to be classified and interpreted based on the accepted model and based on some grammar rules that reflect the internal syntax of gestural commands. The grammar may also encode the interaction of gestures with other communication modes such as speech, gaze, or facial expressions. As an alternative to modeling, some authors have explored the use of combinations of simple 2D motion based detectors for gesture recognition [71].

In any case, to fully exploit the potential of gestures for an MMHCI application, the class of possible recognized gestures should be as broad as possible and ideally any gesture performed by the user should be unambiguously interpretable by the interface. However, most of the gesture-based HCI systems allow only symbolic commands based on hand posture or 3D pointing. This is due to the complexity associated with gesture analysis and the desire to build real-time interfaces. Also, most of the systems accommodate only single-hand gestures. Yet, human gestures, especially communicative gestures, naturally employ actions of both hands. However, if the two-hand gestures are to be allowed, several ambiguous situations may appear (e.g., occlusion of hands, intentional vs. unintentional, etc.) and the processing time will likely increase. Another important aspect that is increasingly considered is the use of other modalities (e.g., speech) to augment the MMHCI system [127,162]. The use of such multimodal approaches can reduce the complexity and increase the naturalness of the interface for MMHCI [126].

3.3. Gaze detection

Gaze, defined as the direction to which the eyes are pointing in space, is a strong indicator of attention, and it has been studied extensively since as early as 1879 in psychology, and more recently in neuroscience and in computing applications [41]. While early eye tracking research focused only on systems for in-lab experiments, many commercial and experimental systems are available today for a wide range of applications.

Eye tracking systems can be grouped into wearable or non-wearable, and infrared-based or appearance-based. In infrared-based systems, a light shining on the subject whose gaze is to be tracked creates a ‘‘red-eye effect:’’the difference in reflection between the cornea and the pupil is used to determine the direction of sight. In appearance based systems, computer vision techniques are used to find the eyes in the image and then determine their orientation. While wearable systems are the most accurate (approximate error rates below 1.4_ vs. errors below 1.7_ for nonwearable infrared), they are also the most intrusive. Infrared systems are more accurate than appearance-based, but there are concerns over the safety of prolonged exposure to infrared lights. In addition, most non-wearable systems require (often cumbersome) calibration for each individual

[108,121].

Appearance-based systems usually capture both eyes using two cameras to predict gaze direction. Due to the computational cost of processing two streams simultaneously, the resolution of the image of each eye is often small. This makes such systems less accurate, although increasing computational power and lower costs mean that more computationally intensive algorithms can be run in real time. As an alternative, in [181], the authors propose using a single high-resolution image of one eye to improve accuracy. On the other hand, infrared-based systems usually use only one camera, but the use of two cameras has been proposed to further increase accuracy [152].

Although most research on non-wearable systems has focused on desktop users, the ubiquity of computing devices has allowed for application in other domains in which the user is stationary (e.g., [168,152]). For example, the authors of [168] monitor driver visual attention using a single, non-wearable camera placed on a car’s dashboard to track face features and for gaze detection.

Wearable eye trackers have also been investigated mostly for desktop applications (or for users that do not walk wearing the device). Also, because of advances in hardware (e.g., reduction in size and weight) and lower costs, researchers have been able to investigate uses in novel applications. For example, in [193], eye tracking data are combined with video from the user’s perspective, head directions, and hand motions to learn words from natural interactions with users; the authors of [137] use a wearable eye tracker to understand hand–eye coordination in natural tasks, and the authors of [38] use a wearable eye tracker to detect eye contact and record video for blogging.

The main issues in developing gaze tracking systems are intrusiveness, speed, robustness, and accuracy. The type of hardware and algorithms necessary, however, depend highly on the level of analysis desired. Gaze analysis can be performed at three different levels [23]: (a) highly detailed low-level micro-events, (b) low-level intentional events, and (c) coarse-level goal-based events. Micro-events include micro-saccades, jitter, nystagmus, and brief fixations, which are studied for their physiological and psychological relevance by vision scientists and psychologists. Low-level intentional events are the smallest coherent units of movement that the user is aware of during visual activity, which include sustained fixations and revisits. Although most of the work on HCI has focused on coarse-level goal based events (e.g., using gaze as a pointer [165]), it is easy to foresee the importance of analysis at lower levels, particularly to infer the user’s cognitive state in affective interfaces (e.g., [62]). Within this context, an important issue often overlooked is how to interpret eye-tracking data. In other words, as the user moves his eyes during interaction, the system must decide what the movements mean in order to react accordingly. We move our eyes 2–3 times per second, so a system may have to process large amounts of data within a short time, a task that is not trivial even if processing does not occur in real-time. One way to interpret eye tracking data is to cluster fixation points and assume, for instance, that clusters correspond to areas of interest. Clustering of fixation points is only one option, however, and as the authors of [154] discuss, it can be

difficult to determine the clustering algorithm parameters. Other options include obtaining statistics on measures such as number of eye movements, saccades, distances between fixations, order of fixations, and so on.

4. Affective human–computer interaction

Most current MMHCI systems do not account for the fact that human–human communication is always socially situated and that we use emotion to enhance our communication. However, since emotion is often expressed in a multimodal way, it is an important area for MMHCI and we will discuss it in some detail. HCI systems that can sense the affective states of the human (e.g., stress, inattention, anger, boredom, etc.) and are capable of adapting and responding to these affective states are likely to be perceived as more natural, efficacious, and trustworthy. In her book, Picard [140] suggested several applications where it is beneficial for computers to recognize human emotions. For example, knowing the user’s emotions, the computer can become a more effective tutor. Synthetic speech with emotions in the voice would sound more pleasing than a monotonous voice. Computer agents could learn the user’s preferences through the users’emotions. Another application is to help the human users monitor their stress level. In clinical settings, recognizing a person’s inability to express certain facial expressions may help diagnose early psychological disorders.

The research area of machine analysis and employment of human emotion to build more natural and flexible HCI systems is known by the general name of affective computing [140]. There is a vast body of literature on affective computing and emotion recognition [67,132,140,133]. Emotion is intricately linked to other functions such as attention, perception, memory, decision-making, and learning [43]. This suggests that it may be beneficial for computers to recognize the user’s emotions and other related cognitive states and expressions. Addressing the problem of affective communication, Bianchi-Berthouze and Lisetti [14] identified three key points to be considered when developing systems that capture affective information: embodiment (experiencing physical reality), dynamics (mapping the experience and the emotional state onto a temporal process and a particular label), and adaptive interaction (conveying emotive response, responding to a recognized emotional state).

Researchers use mainly two different methods to analyze emotions [133]. One approach is to classify emotions into discrete categories such as joy, fear, love, surprise, sadness, etc., using different modalities as inputs. The problem is that the stimuli may contain blended emotions and the choice of these categories may be too restrictive, or culturally dependent. Another way is to have multiple dimensions or scales to describe emotions. Two common scales are valence and arousal [61]. Valence describes the pleasantness of the stimuli, with positive or pleasant (e.g., happiness) on one end, and negative or unpleasant (e.g., disgust) on the other. The other dimension is arousal or activation. For example, sadness has low arousal, whereas surprise has a high arousal level. The

different emotional labels could be plotted at various positions on a 2D plane spanned by these two axes to construct a 2D emotion model [88,60].

Facial expressions and vocal emotions are particularly important in this context, so we discuss them in more detail below.

4.1. Facial expression recognition

Most facial expression recognition research (see [131] and [47] for two comprehensive reviews) has been inspired by the work of Ekman [43] on coding facial expressions based on the basic movements of facial features called action units (AUs). In order to offer a comprehensive description of the visible muscle movement in the face, Ekman proposed the Facial Action Coding System (FACS). In the system, a facial expression is a high level description of facial motions represented by regions or feature points called action units. Each AU has some related muscular basis and a given facial expression may be described by a combination of AUs. Some methods follow a feature-based approach, where one tries to detect and track specific features such as the corners of the mouth, eyebrows, etc. Other methods use a region-based approach in which facial motions are measured in certain regions on the face such as the eye/eyebrow and the mouth. In addition, we can distinguish two types of classification schemes: dynamic and static. Static classifiers (e.g., Bayesian Networks) classify each frame in a video to one of the facial expression categories based on the results of a particular video frame. Dynamic classifiers (e.g., HMM) use several video frames and perform classification by analyzing the temporal patterns of the regions analyzed or features extracted. Dynamic classifiers are very sensitive to appearance changes in the facial expressions of different individuals so they are more suited for person-dependent experiments [32]. Static classifiers, on the other hand, are easier to train and in general need less training data but when used on a continuous video sequence they can be unreliable especially for frames that are not at the peak of an expression.

Mase [99] was one of the first to use image processing techniques (optical flow) to recognize facial expressions. Lanitis et al. [90] used a flexible shape and appearance model for image coding, person identification, pose recovery, gender recognition, and facial expression recognition. Black and Yacoob [15] used local parameterized models of image motion to recover non-rigid motion. Once recovered, these parameters are fed to a rule-based classifier to recognize the six basic facial expressions. Yacoob and Davis [189] computed optical flow and used similar rules to classify the six facial expressions. Rosenblum et al. [149] also computed optical flow of regions on the face, then applied a radial basis function network to classify expressions. Essa and Pentland [45] also used an optical flow region-based method to recognize expressions. Otsuka and Ohya [117] first compute optical flow, then compute 2D Fourier transform coefficients, which were then used as feature vectors for a hidden Markov model (HMM) to classify expressions. The trained system was able to recognize one of the six expressions near real-time (about 10 Hz). Furthermore, they used the tracked motions to control the facial expression of an animated Kabuki system [118]. A similar approach, using

different features was used by Lien [93]. Nefian and Hayes [110] proposed an embedded HMM approach for face recognition that uses an efficient set of observation vectors based on DCT coefficients. Martinez [98] introduced an indexing approach based on the identification of frontal face images under different illumination conditions, facial expressions, and occlusions. A Bayesian approach was used to find the best match between the local observations and the learned local features model and an HMM was employed to achieve good recognition even when the new conditions did not correspond to the conditions previously encountered during the learning phase. Oliver et al. [116] used lower face tracking to extract mouth shape features and used them as inputs to an HMM based facial expression recognition system (recognizing neutral, happy, sad, and an open mouth). Chen [28] used a suite of static classifiers to recognize facial expressions, reporting on both person-dependent and person-independent results.

In spite of the variety of approaches to facial affect analysis, the majority suffer from the following limitations [132]: ?handle a small set of posed prototypical facial expressions of six basic emotions from portraits or nearly frontal views of faces with no facial hair or glasses recorded under constant illumination;

?do not perform a context-dependent interpretation of shown facial behavior;

?do not analyze extracted facial information on different time scales (short videos are handled only); consequently, inferences about the expressed mood and attitude (larger time scales) cannot be made by current facial affect analyzers.

4.2. Emotion in audio

The vocal aspect of a communicative message carries various kinds of information. If we disregard the manner in which a message is spoken and consider only the textual content, we are likely to miss the important aspects of the utterance and we might even completely misunderstand the meaning of the message. Nevertheless, in contrast to spoken language processing, which has recently witnessed significant advances, the processing of emotional speech has not been widely explored.

Starting in the 1930s, quantitative studies of vocal emotions have had a longer history than quantitative studies of facial expressions. Traditional as well as most recent studies on emotional contents in speech (see [119,109,72,155]) use ‘‘prosodic’’information, that is information on intonation, rhythm, lexical stress, and other features in speech. This is extracted using measures such as pitch, duration, and intensity of the utterance. Recent studies use ‘‘Ekman’s six’’basic emotions, although others in the past have used many more categories. The reasons for using these basic categories are often not justified since it is not clear whether there exist ‘‘universal’’emotional characteristics in the voice for these six categories [27].

The limitations of existing vocal-affect analyzers are [132]:

?perform singular classification of input audio signals into a few emotion categories such as

anger, irony, happiness, sadness/grief, fear, disgust, surprise and affection;

?do not perform a context-sensitive analysis (environment-, user- and task-dependent analysis) of the input audio signal;

?do not analyze extracted vocal expression information on different time scales (proposed inter-audio-frame analyses are used either for the detection of supra-segmental features, such as the pitch and intensity over the duration of a syllable or word, or for the detection of phonetic features)—inferences about moods and attitudes (longer time scales) are difficult to make based on the current vocal-affect analyzers;

?adopt strong assumptions (e.g., the recordings are noise free, the recorded sentences are short, delimited by pauses etc.) and use the test data sets that are small (one or more words or one or more short sentences spoken by few subjects) containing exaggerated vocal expressions of affective states.

4.3. Multimodal approaches to emotion recognition

The most surprising issue regarding the multimodal affect recognition problem is that although recent advances in video and audio processing could make the multimodal analysis of human affective state tractable, there are only a few research efforts [80,159,195,157] that have tried to implement a multimodal affective analyzer.

Although studies in psychology on the accuracy of predictions from observations of expressive behavior suggest that the combined face and body approaches are the most informative [4,59], with the exception of a tentative attempt of Balomenos et al. [7], there is virtually no other effort reported on automatic human affect analysis from combined face and body gestures. In the same way, studies in facial expression recognition and vocal affect recognition have been done largely independent of each other. Most works in facial expression recognition use still photographs or video sequences without speech. Similarly, works on vocal emotion detection often use only audio information. A legitimate question that should be considered in MMHCI is how much information does the face, as compared to speech, and body movement, contribute to natural interaction. Most experimenters suggest that the face is more accurately judged, produces higher agreement, or correlates better with judgments based on full audiovisual input than on voice input [104,195].

Examples of existing works combining different modalities into a single system for human affective state analysis are those of Chen [27], Yoshitomi et al. [192], De Silva and Ng [166], Go et al.

[57], and Song et al. [169], who investigated the effects of a combined detection of facial and vocal expressions of affective states. In brief, these works achieve an accuracy of 72–85% when detecting one or more basic emotions from clean audiovisual input (e.g., noise-free recordings, closely placed microphone, non-occluded portraits) from an actor speaking a single word and showing exaggerated facial displays of a basic emotion. Although audio and image processing techniques in these systems are relevant to the discussion on the state of the art in affective computing, the systems themselves

have most of the drawbacks of unimodal affect analyzers. Many improvements are needed if those systems are to be used for multimodal HCI where clean input from a known actor/announcer cannot be expected and context independent, separate processing, and interpretation of audio and visual data does not suffice.

5. Modeling, fusion, and data collection

Multimodal interface design [146] is important because the principles and techniques used in traditional GUIbased interaction do not necessarily apply in MMHCI systems. Issues to consider, as identified in Section 2, include the design of inputs and outputs, adaptability, consistency, and error handling, among others. In addition, one must consider dependency of a person’s behavior on his/her personality, cultural, and social vicinity, current mood, and the context in which the observed behavioral cues are encountered [164,70,75].

Many design decisions dictate the underlying techniques used in the interface. For example, adaptability can be addressed using machine learning: rather than using a priori rules to interpret human behavior, we can potentially learn application-, user-, and context-dependent rules by watching the user’s behavior in the sensed context [138]. Well known algorithms exist to adapt the models and it is possible to use prior knowledge when learning new models. For example, a prior model of emotional expression recognition trained based on a certain user can be used as a starting point for learning a model for another user, or for the same user in a different context. Although context sensing and the time needed to learn appropriate rules are significant problems in their own right, many benefits could come from such adaptive MMHCI systems.

First we discuss architectures, followed by modeling, fusion, data collection, and testing.

5.1. System integration architectures

The most common infrastructure that has been adopted by the multimodal research community involves multiagent architectures such as the Open Agent Architecture [97] and Adaptive Agent Architecture [86,31]. Multi-agent architectures provide essential infrastructure for coordinating the many complex modules needed to implement multimodal system processing and permit this to be done in a distributed manner. In a multi-agent architecture, the components needed to support the multimodal system (e.g., speech recognition, gesture recognition, natural language processing, multimodal integration) may be written in different programming languages, on different machines, and with different operating systems. Agent communication languages are being developed that handle asynchronous delivery, triggered responses, multi-casting, and other concepts from distributed systems.

When using a multi-agent architecture, for example, speech and gestures can arrive in parallel or asynchronously via individual modality agents, with the results passed to a facilitator. These results, typically an n-best list of conjectured lexical items and related time-stamp information, are then routed

to appropriate agents for further language processing. Next, sets of meaning fragments derived from the speech, or other modality, arrive at the multimodal integrator which decides whether and how long to wait for recognition results from other modalities, based on the system’s temporal thresholds. It fuses the meaning fragments into a semantically and temporally compatible whole interpretation before passing the results back to the facilitator. At this point, the system’s final multimodal interpretation is confirmed by the interface, delivered as multimedia feedback to the user, and executed by the relevant application.

Despite the availability of high-accuracy speech recognizers and the maturing of devices such as gaze trackers, touch screens, and gesture trackers, very few applications take advantage of these technologies. One reason for this may be that the cost in time of implementing a multimodal interface is very high. If someone wants to equip an application with such an interface, he must usually start from scratch, implementing access to external sensors, developing ambiguity resolution algorithms, etc. However, when properly implemented, a large part of the code in a multimodal system can be reused. This aspect has been identified and many multimodal application frameworks (using multi-agent architectures) have recently appeared such as VTT’s Japis framework [179], Rutgers CAIP Center framework [49], and the Embassi system [44].

5.2. Modeling

There have been several attempts in modeling humans in the human–computer interaction literature [191]. Here we present some proposed models and we discuss their particularities and weaknesses.

One of the most commonly used models in HCI is the Model Human Processor. The model, proposed in [24] is a simplified view of the human processing involved in interacting with computer systems. This model comprises three subsystems namely, the perceptual system handling sensory stimulus from the outside world, the motor system that controls actions, and the cognitive system that provides the necessary processing to connect the two. Retaining the analogy of the user as an information processing system, the components of an MMHCI model include an input–output component (sensory system), a memory component (cognitive system), and a processing component (motor system). Based on this model, the study of input–output channels (vision, hearing, touch, movement), human memory (sensory, short-term, and working or long-term memory), and processing capabilities (reasoning, problem solving, or acquisition skills) should all be considered when designing MMHCI systems and applications. Many studies in the literature analyze each subsystem in detail and we point the interested reader to [39] for a comprehensive analysis.

Another model, proposed by Card et al. [24], is the GOMS (Goals, Operators, Methods, and Selection rules) model. GOMS is essentially a reduction of a user’s interaction with a computer to its elementary actions and all existing GOMS variations [24] allow for different aspects of an interface to be accurately studied and predicted. For all of the variants, the definitions of the major concepts are

the same. Goals are what the user intends to accomplish. An operator is an action performed in service of a goal. A method is a sequence of operators that accomplish a goal and if more than one method exists, then one of them is chosen by some selection rule. Selection rules are often ignored in typical GOMS analyses. There is some flexibility for the designers/analysts definition of all of these entities. For instance, one person’s operator may be another’s goal. The level of granularity is adjusted to capture what the particular evaluator is examining.

All of the GOMS techniques provide valuable information, but they all also have certain drawbacks. None of the techniques address user fatigue. Over time a user’s performance degrades simply because the user has been performing the same task repetitively. The techniques are very explicit about basic movement operations, but are generally less rigid for basic cognitive actions. Further, all of the techniques are only applicable to expert users and the functionality of the system is ignored while only the usability is considered.

The human action cycle [114] is a psychological model which describes the steps humans take when they interact with computer systems. The model can be used to help evaluate the efficiency of a user interface (UI). Understanding the cycle requires an understanding of the user interface design principles of affordance, feedback, visibility, and tolerance. This model describes how humans may form goals and then develop a series of steps required to achieve those goals, using the computer system. The user then executes the steps, thus the model includes both cognitive and physical activities.

5.3. Adaptability

The number of computer users (and computer-like devices we interact with) has grown at an incredible pace in the last few years. An immediate consequence of this is that there is much larger diversity in the ‘‘types’’of computer users. Increasing differences in skill level, culture, language, and goals have resulted in a significant trend towards adaptive and customizable interfaces, which use modeling and reasoning about the domain, the task, and the user, in order to extract and represent the user’s knowledge, skills, and goals, to better serve the users with their tasks. The goal of such systems is to adapt their interface to a specific user, give feedback about the user’s knowledge, and predict the user’s future behavior such as answers, goals, preferences, and actions [76]. Several studies [173] provide empirical support for the concept that user performance can be increased when the interface characteristics match the user skill level, emphasizing the importance of adaptive user interfaces.

Adaptive human–computer interaction promises to support more sophisticated and natural input and output, to enable users to perform potentially complex tasks more quickly, with greater accuracy, and to improve user satisfaction. This new class of interfaces promises knowledge or agent-based dialog, in which the interface gracefully handles errors and interruptions, and dynamically adapts to the current context and situation, the needs of the task performed, and the user model. This

interactive process is believed to have great potential for improving the effectiveness of human–computer interaction [100], and therefore, is likely to play a major role in MMHCI. The overarching aim of intelligent interfaces is to both increase the interaction bandwidth between human and machine and, at the same time, increase interaction effectiveness and naturalness by improving the quality of interaction. Effective human machine interfaces and information services will also increase access and productivity for all users [89]. A grand challenge of adaptive interfaces is therefore to represent, reason, and exploit various models to more effectively process input, generate output, and manage the dialog and interaction between human and machine to maximize the efficiency, effectiveness, and naturalness, if not joy, of interaction [133].

One central feature of adaptive interfaces is the manner in which the system uses the learned knowledge. Some works in applied machine learning are designed to produce expert systems that are intended to replace the human. However, works in adaptive interfaces intend to construct advisory-recommendation systems, which only make recommendations to the user. These systems suggest information or generate actions that the user can always override. Ideally, these actions should reflect the preferences of the individual users, thus providing personalized services to each one.

Every time the system suggests a choice to the user, he or she accepts it or rejects it, thus giving feedback to the system to update its knowledge base either implicitly or explicitly [6]. The system should carry out online learning, in which the knowledge base is updated each time an interaction with the user occurs. Since adaptive user interfaces collect data during their interaction with the user, one naturally expects them to improve during the interaction process, making them ‘‘learning’’systems rather than ‘‘learned’’systems. Because adaptive user interfaces must learn from observing the behavior of their users, another distinguishing characteristic of these systems is their need for rapid learning. The issue here is the number of training cases needed by the system to generate good advice. Thus, it is recommended the use of learning methods and algorithms that achieve high accuracy from small training sets. On the other hand, speed of interface adaptation to user’s needs is also desirable.

Adaptive user interfaces should not be considered a panacea for all problems. The designer should seriously take under consideration if the user really needs an adaptive system. The most common concern regarding the use of adaptive interfaces is the violation of standard usability principles. In fact, evidence exists that suggests that static interface designs sometimes promote superior performance than adaptive ones [64,163]. Nevertheless, the benefits that adaptive systems can bring are undeniable and therefore more and more research efforts are being made in this direction.

An important issue is how the interaction techniques should change to take the varying input and output hardware devices into account. The system might choose the appropriate interaction

techniques taking into account the input and output capabilities of the devices and the user preferences. So, nowadays, many researchers are focusing on context aware interfaces, recognition-based interfaces, intelligent and adaptive interfaces, and multimodal perceptual interfaces [33,76,100,89,176,177]. Although there have been many advances in MMHCI, the level of adaptability in current systems is rather limited and there are many challenges left to be investigated.

5.4. Fusion

Fusion techniques are needed to integrate input from different modalities and many fusion approaches have been developed. Early multimodal interfaces were based on a specific control structure for multimodal fusion. For example, Bolt’s ‘‘Put-That-There’’system [18] combined pointing and speech inputs and searched for a synchronized gestural act that designates the spoken referent. To support more broadly functional multimodal systems, general processing architectures have been developed which handle a variety of multimodal integration patterns and support joint processing of modalities [16,86,97,161].

A typical issue of multimodal data processing is that multisensory data are typically processed separately and only combined at the end. Yet, people convey multimodal (e.g., audio and visual) communicative signals in a complementary and redundant manner (as shown experimentally by Chen [27]). Therefore, in order to accomplish a human-like multimodal analysis of multiple input signals acquired by different sensors, the signals cannot be always considered mutually independently and might not be combined in a context-free manner at the end of the intended analysis but, on the contrary, the input data might preferably be processed in a joint feature space and according to a context-dependent model. In practice, however, besides the problems of context sensing and developing context-dependent models for combining multisensory information, one should cope with the size of the required joint feature space. Problems include large dimensionality, differing feature formats, and time-alignment. A potential way to achieve multisensory data fusion is to develop context-dependent versions of a suitable method such as the Bayesian inference method proposed by Pan et al. [130].

Multimodal systems usually integrate signals at the feature level (early fusion), at a higher semantic level (late fusion), or something in between (intermediate fusion) [178,37]. In the following sections we present these fusion techniques in detail and we analyze their advantages and disadvantages.

5.4.1. Early fusion techniques

In an early fusion architecture, the signal-level recognition process in one mode influences the course of recognition in the other and so, this type of fusion is considered more appropriate for closely temporally synchronized input modalities, such as speech and lip movements. This class of techniques utilizes a single classifier avoiding the explicit modeling of different modalities.

To give an example of audio–visual integration using an early fusion approach, one simply

concatenates the audio and visual feature vectors to obtain a single combined audio–visual vector [1]. In order to reduce the length of the resulting feature vector, dimensionality reduction techniques like LDA are usually applied before the feature vector finally feeds the recognition engine. The classifier utilized by most early integration systems is a conventional hidden Markov model (HMM) which is trained with the mixed audio–visual feature vector.

5.4.2. Intermediate fusion techniques

Since the early fusion techniques avoid explicit modeling of the different modalities, they fail to model both the fluctuations in the relative reliability and the asynchrony problems between the distinct (e.g., audio and visual) streams. Moreover, a multimodal system should be able to deal with imperfect data and generate its conclusion so that the certainty associated with it varies in accordance to the input data. A way of achieving this is to consider the timeinstance versus time-scale dimension of human non-verbal communicative signals [132]. By considering previously observed data with respect to the current data carried by functioning observation channels, a statistical prediction and its probability might be derived about both the information that has been lost due to malfunctioning/inaccuracy of a particular sensor and the currently displayed action/reaction. Probabilistic graphical models, such as Hidden Markov Models (including their hierarchical variants), Bayesian networks, and Dynamic Bayesian networks are very well suited for fusing such different sources of information [156]. These models can handle noisy features, temporal information, and missing values of features by probabilistic inference. Hierarchical HMM-based systems [32] have been shown to work well for facial expression recognition. Dynamic Bayesian Networks and HMM variants [54] have been shown to fuse various sources of information in recognizing user intent, office activity, and event detection in video using both audio and visual information [53]. This suggests that probabilistic graphical models are a promising approach to fusing realistic (noisy) audio and video for context-dependent detection of behavioral events such as affective states.

5.4.3. Late integration techniques

Multimodal systems based on late (semantic) fusion integrate common meaning representations derived from different modalities into a combined final interpretation. This requires a common meaning representation framework for all modalities used and a well-defined operation for integrating partial meanings. Meaning based representation uses data structures such as frames [106], feature structures [81], or typed feature structures [25]. Frames represent objects and relations as consisting of nested sets of attribute/value pairs while feature structures go further to use shared variables to indicate common substructures. Typed feature structures are pervasive in natural language processing and their primary operation is unification, which determines the consistency of two representational structures and, if they are consistent, their combination.

Late integration models often utilize independent classifiers (e.g., HMMs), one for each stream, which can be trained independently. The final classification decision is reached by combining the

partial outputs of the unimodal classifiers. The correlations between the channels are taken into account only later during the integration step. There are advantages though when using late integration [178]. Since the input types can be recognized independently, they do not have to occur simultaneously. Moreover, the training requirements are smaller O(2N) for two separately trained modalities as compared to O(N2) for two modalities trained together. The software development process is also simpler in the late integration case [178].

Systems using the late fusion approach have been applied to processing multimodal speech and pen input or manual gesturing, for which the input modes are less coupled temporally and provide different but complementary information. Late semantic integration systems use individual recognizers that can be trained using unimodal data and can be scaled up more easily in a number of input modes or vocabulary size. To give an example, in an audio–visual speech recognition application [143], for small vocabulary independent word speech recognition, late integration can be easily implemented by combining the audio and visual-only log-likelihood scores for each word model in the vocabulary, given the acoustics and visual observations [1]. However, this approach is intractable in the case of connected word recognition where the number of alternative paths explodes.

A good heuristic alternative in that case is through lattice rescoring. The n-most promising hypotheses are extracted from the audio-only recognizer and they are rescored after taking the visual evidence into account. The hypothesis with the highest combined score is then selected. More details about this approach can be found in [143].

Very important for MMHCI applications is the fusion between the visual and audio channels. Successful audio and visual feature integration requires utilization of advanced techniques and models for cross-model information fusion. This research area is currently very active and many different paradigms have been proposed for addressing the general problem. Therefore, we will confine the following discussion to the problem of feature fusion in the context of audiovisual feature integration. We note, however, that similar issues exist in the integration of any other modalities.

When considering audio–visual integration in speech, the main task that needs to be addressed is the fusion of the heterogeneous pool of audio and visual features in a way that ensures that the combined audiovisual system outperforms its audio- or video-only counterpart in all practical scenarios. This task is complicated due to several issues, the most important being:

(1) audio and visual speech asynchrony. Although the audio and visual observation sequences are certainly correlated over time, they exhibit state asynchrony (e.g., in speech, lip movement is preceding auditory activity by as much as 120 ms [21], close to the average duration of a phoneme). This asynchrony is critical in applications such as audio–visual speech recognition because it renders modeling audiovisual speech with conventional HMMs problematic [145];

(2) the relative discriminating power of the audio and visual streams can vary dramatically in unconstrained environments, making their optimal fusion a challenging task.

Despite important advances, further research is still required to investigate fusion models able to efficiently use the complementary cues provided by multiple modalities.

5.5. Data collection and testing

Collecting MMHCI data and obtaining the ground truth for it is a challenging task. Labeling is time-consuming, error prone, and expensive. For example, in developing multimodal techniques for emotion recognition, one approach consists of asking actors to read material aloud while simultaneously portraying particular emotions chosen by the investigators. Another approach is to use emotional speech from real conversations or to induce emotions from speakers using various methods (e.g., showing photos or videos to induce reactions). Using actor portrayals ensures control of the verbal material and the encoder’s intention, but raises the question about the similarity between posed and naturally occurring expressions. Asking someone to smile often does not create the same picture as an authentic smile. The fundamental reason of course is that the subject often does not feel happy so his smile is artificial and in many subtle ways quite different from a genuine smile [133]. Using real emotional speech, on the other hand, ensures high validity, but renders the control of verbal material and encoder intention more difficult. Induction methods are effective in inducing moods, but it is harder to induce intense emotional states in controlled laboratory settings.

In general, collection of data for an MMHCI application is challenging because there is wide variability in the set of possible inputs (consider the number of possible gestures), often only a small set of training examples is available, and the data is often noisy. Therefore, it is very beneficial to construct methods that use scarcely available labeled data and abundant unlabeled data. Probabilistic graphical models are ideal candidates for tasks in which labeled data is scarce, but abundant unlabeled data is available. Cohen et al. [31] showed that unlabeled data could be used together with labeled data for MMHCI applications using Bayesian networks. However, they have shown that care must be taken when attempting such schemes. In the purely supervised case (only labeled data), adding more labeled data improves the performance of the classifier. Adding unlabeled data, however, can be detrimental to the performance. Such detrimental effects occur when the assumed classifier’s model does not match the data’s distribution. As a consequence, further research is necessary to achieve maximum utilization of unlabeled data for MMHCI problems since it is clear that such methods could provide great benefit.

Picard et al. [141] outlined five factors that influence affective data collection. We list them below since they also apply to the more general problem of MMHCI data collection:

?Spontaneous versus posed: is the emotion elicited by a situation or stimulus outside the subject’s control or the subject is asked to elicit the emotion?

?Lab setting versus real-world: is the data recording taking place in a lab or the emotion is recorded in the usual environment of the subject?

?Expression versus feeling: is the emphasis on external expression or on internal feeling?

?Open recording versus hidden recording: is the subject aware that he is being recorded?

?Emotion-purpose versus other-purpose: does the subject know that he is a part of an experiment and the experiment is about emotion?

Note that these factors are not necessarily independent. The most natural setup would imply that the subject feels the emotion internally (feeling), and the emotion occurs spontaneously, while the subject is in his usual environment (real-world). Also, the subject should not know that he is being recorded (hidden recording) and that he is part of an experiment (other-purpose). Such data are usually impossible to obtain because of privacy and ethical concerns. As a consequence, most researchers who tackled the problem of establishing a comprehensive human-affect expression database used a setup that is rather far from the natural setup [133]: a posed, lab-based, expression-oriented, open-recording, and emotion-purpose methodology.

5.6. Evaluation

Evaluation is a very important issue in the design of multimodal systems. Here, we outline the most important features that could be used as measures in the evaluation of various types of adaptive MMHCI systems, namely efficiency, quality, user satisfaction, and predictive accuracy.

People typically invoke computational decision aids because they expect the system will help them accomplish their tasks more rapidly and with less effort than they do on their own. This makes efficiency an important measure to use in evaluating adaptive systems. One natural measure of efficiency is the time the user takes to accomplish his task. Another facet is the effort the user must exert to make a decision or solve a problem. In this case, the measure would be the number of user actions or commands that take place during the solving of a problem.

Another main reason the users turn to MMHCI systems is to improve the quality of solutions of their task. As with efficiency, there are several ways in which one can define the notion of quality or accuracy of the system. For example, if there is a certain object the user wants to find then the success of finding it constitutes an objective measure of quality. However, it is clear that in some cases it is necessary to rely on a separate measure of user satisfaction to determine the quality of the system’s behavior. One way to achieve this is to present each user with a questionnaire that asks about his subjective experience. Another measure of user’s satisfaction involves giving the user some control over certain features of the system. If the user turns the system’s advisory capability off or disables its personalization module, one can then conclude that the user has not been satisfied by his experience with these features.

Since many adaptive system user models make predictions about the user’s responses, it is natural to measure the predictive accuracy to determine the success of a system. Although this measure can be a useful analytical tool for understanding the details of the system’s behavior, it does not necessarily reflect the overall efficiency or quality of solutions, which should be the main concern.

1,GK-15 The Park 公园 I can see the grass. 我能看见青草。 I can see the flowers. 我能看见花朵。 I can see the trees. 我能看见树木。 I can see the birds. 我能看见小鸟。 I can see the slide. 我能看见滑滑梯。 I can see the swings. 我能看见秋千。 I can see the children. 我能看见孩子们。 I can see the park. 我能看见整个公园。 2,GK-19 Playing Dress Up 换装游戏 I am a little cat. 我是一只小猫。 I am a little bee. 我是一只小蜜蜂。 I am a little duck. 我是一只小鸭子。 I am a little monster. 我是一只小怪物。 I am a little dancer. 我是一个小舞蹈家 I am a little dog. 我是一只小狗。 I am a little bunny. 我是一只小兔子。 I am a little girl.

我是一个小女孩。 3,GK-20 Little Cub 小虎崽 I am eating. 我正在吃东西。 I am running. 我正在跑。 I am riding. 我正在乘车。 I am drawing. 我正在画画。 I am kicking. 我正在踢球。 I am playing. 我正在玩游戏。 I am reading. 我正在看书。 I am dreaming. 我正在做梦。 4,G1-040 Look 看 Look at my feet. 看我的脚。 Look at my legs. 看我的腿。 Look at my tail. 看我的尾巴。 Look at my neck 看我的脖子。 Look at my eyes. 看我的眼睛。 Look at my nose. 看我的鼻子。 Look at my ears.

基于多模态数据融合的视觉目标跟踪算法研究计算机科学技术的高速发展带动了计算机视觉领域的革新,人类对机器学习和人工智能的需求日益增加,这使得视觉目标跟踪成为了当前研究的热门课题。在无人驾驶、安防、人机交互、导航和制导等民事和军事应用领域,视觉目标跟踪扮演着举足轻重的角色。 经过了几十年的发展,当前的目标跟踪算法依然面临着来自外部环境和目标自身的具有挑战性的干扰因素,如背景杂乱、遮挡、低照度、尺度变化、形变、运动模糊和快速运动等,它们严重制约着其发展。本文通过研究不同模态的数据之间的互补特性,结合不同跟踪方法的优缺点分析,提出了一种基于“检测跟踪模型”的多模态数据融合跟踪算法。 该算法采用红外和可见光图像中目标的全局/局部的多种特征,能够应对当前目标跟踪领域所面临的多种复杂干扰。首先,本文算法设计了两个跟踪模块:基于统计模型的跟踪模块(HIST模块)和基于相关滤波的跟踪模块(CFT模块)。 其中,HIST模块采用具有全局统计特性的RGB颜色直方图作为跟踪特征,结合贝叶斯准则设计了一种目标/背景区分算子用于区分目标和干扰物,是一种生成式和判别式的混合跟踪模块。该模块引入了积分图策略,以实现基于检测跟踪模型的改进,得到可与CFT模块的跟踪结果相融合的改进模块。 而CFT模块基于KCF跟踪原理,采用了多种特征(HOG、CN、图像强度)进行跟踪任务,是一种判别式跟踪模型,本文基于检测跟踪模型对该模块进行了改进,并设计了一种去噪融合规则来融合由多种特征得到的响应函数。其次,本文基于KL 距离提出了一种可靠性度量规则来度量上述两个跟踪模块的输出结果的可靠性。 根据度量结果,本文还设计了一种决策级的自适应融合策略来融合上述跟踪

多模态理论的外语教学综述 多模态话语分析理论兴起于上世纪九十年代的西方国家,西方研究学者在批评话语分析的基础上,结合社会符号学、系统功能语法和传统话语分析等领域的研究成果发展而来,它认为语言是社会符号,除语言之外的其他非语言符号(绘画、音乐、舞蹈等)也是意义表达的源泉,非语言符号模态各自独立又相互作用,与语言符号共同生成意义。它突破了传统话语分析孤立研究语言文字本身的局限性,把对话语的研究扩展到了除语言之外的图像、颜色、字体等其他意义表达的模态符号,关注多种模态符号在话语中所起地作用。经过二十多年的发展,多模态话语分析已经被广泛的应用在各个学科之列,其中多模态话语框架理论对于外语教学也有着不可忽视的重要作用。 多模态话语可以将多种符号模态并用,极大地丰富了信息输入法手段,强化了学习者对教学内容的记忆。在多模态教学中,主要强调把多种符号模态(语言、图像、音乐、网络等)引入教学过程,充分调动学生的多种感官能够参与学习中来,刺激学生在词汇记忆的同时产生多方面联想,从而达到增强知识的记忆效果。这种方法比单一的语言讲解更深入,更能加强学习者的迅速记忆能力。多模态教学采用多种教学手段(网络、小组合作、联想、角色扮演等)调动学习者的积极性和主动性来参与教学互动,达到了外语教学中注重视听说写练相结合的一大特点,激发了学生学习语言的兴趣。学生不仅在亲身参与教学活动的过程中发现新知识,师生互动环节中,还掌握了大量的教学知识,在日常的趣味练习中便不知不觉的逐步提升了个人学习能力和听说能力。多模态话语的魅力就在于能够让学生在轻松、活跃、自主的学习氛围中,提高个人的学习成绩并且增强了学习能力。多种教学方法的灵活运用,在大程度上弥补了传统的单模态教学方式的不足。多模态教学的主张是要求教师根据教学内容,教学环境,教学目标等基本理念为学生选择一种或多种教学方法(听说法,交际法、全身反应法、暗示法、直接法、情景教学法、语法翻译法等),并将其合理运用在语言环境中,这些运用对于学生进行语言的理解和掌握是必不可少的。在多模态课堂教学中,教师结合多媒体手段创设真实的情境环境,通过听觉、视觉、触觉等多感官刺激,使学习者体验真实的目标语语言环境,提高学生输出运用词汇的能力。 随着现代信息技术的发展和多媒体教学设备的普及和完善,教学环境也得到了很大的改善,这为多模态教学引入课堂提供了基本的技术条件。尽管目前在国内多模态话语分析理论也仅仅的局限于应用于语言教学的研究,在国内还处在初步发展的阶段,但是我们有理由相信随着多模态理论与外语教学实践研究的进一步探索和深入,该理论将会日趋成熟。多模态话语作为一种新生的教学模式,势必为外语课堂的教学注入了新生命和新理念,它完全符合语言学习的规律和原则,对于外语词汇教学产生的积极影响更是不可取代,相信在以后的教学中,教师可以应用更多种的教学方法、教学手段来进行合理的结合,通过多渠道、多感官刺激的多模态教学将成为日后我国外语教学界的主流发展趋势。

Alejandro Jaimes, Nicu Sebe, Multimodal human–computer interaction: A survey, Computer Vision and Image Understanding, 2007. 多模态人机交互综述 摘要:本文总结了多模态人机交互(MMHCI, Multi-Modal Human-Computer Interaction)的主要方法,从计算机视觉角度给出了领域的全貌。我们尤其将重点放在身体、手势、视线和情感交互(人脸表情识别和语音中的情感)方面,讨论了用户和任务建模及多模态融合(multimodal fusion),并指出了多模态人机交互研究的挑战、热点课题和兴起的应用(highlighting challenges, open issues, and emerging applications)。 1. 引言 多模态人机交互(MMHCI)位于包括计算机视觉、心理学、人工智能等多个研究领域的交叉点,我们研究MMHCI是要使得计算机技术对人类更具可用性(Usable),这总是需要至少理解三个方面:与计算机交互的用户、系统(计算机技术及其可用性)和用户与系统间的交互。考虑这些方面,可以明显看出MMHCI 是一个多学科课题,因为交互系统设计者应该具有一系列相关知识:心理学和认知科学来理解用户的感知、认知及问题求解能力(perceptual, cognitive, and problem solving skills);社会学来理解更宽广的交互上下文;工效学(ergonomics)来理解用户的物理能力;图形设计来生成有效的界面展现;计算机科学和工程来建立必需的技术;等等。 MMHCI的多学科特性促使我们对此进行总结。我们不是将重点只放在MMHCI的计算机视觉技术方面,而是给出了这个领域的全貌,从计算机视觉角度I讨论了MMHCI中的主要方法和课题。 1.1. 动机 在人与人通信中本质上要解释语音和视觉信号的混合。很多领域的研究者认识到了这点,并在单一模态技术unimodal techniques(语音和音频处理及计算机视觉等)和硬件技术hardware technologies (廉价的摄像机和其它类型传感器)的研究方面取得了进步,这使得MMHCI方面的研究已经有了重要进展。与传统HCI应用(单个用户面对计算机并利用鼠标或键盘与之交互)不同,在新的应用(如:智能家居[105]、远程协作、艺术等)中,交互并非总是显式指令(explicit commands),且经常包含多个用户。部分原因式在过去的几年中计算机处理器速度、记忆和存储能力得到了显著进步,并与很多使普适计算ubiquitous computing [185,67,66]成为现实的新颖输入和输出设备的有效性相匹配,设备包括电话(phones)、嵌入式系统(embedded systems)、个人数字助理(PDA)、笔记本电脑(laptops)、屏幕墙(wall size displays),等等,大量计算具有不同计算能量和输入输出能力的设备可用意味着计算的未来将包含交互的新途径,一些方法包括手势(gestures)[136]、语音(speech)[143]、触觉(haptics)[9]、眨眼(eye blinks)[58]和其它方法,例如:手套设备(Glove mounted devices)[19] 和and可抓握用户界面(graspable user interfaces)[48]及有形用户界面(Tangible User interface)现在似乎趋向成熟(ripe for exploration),具有触觉反馈、视线跟踪和眨眼检测[69]的点设备(Pointing devices)现也已出现。然而,恰如在人与人通讯中一样,当以组合方式使用不同输入设备时,情感通讯(effective communication)就会发生。

最佳英文儿童绘本一百本 (Top 100 Picture Book Poll of 2009) (2010-12-23 23:07:09) 转载▼ 分类:儿童英语学习 标签: 英语学习 早教 纯正的美国英语 英文绘本,是儿童学习英语过程中趣味与艺术性最佳的阅读材料。然而在这些童书的海洋中,如何挑选绘本,时常会困扰我们。最佳100这个流行的方法,在选绘本中也常被大家采用。 有很多机构都发布过最佳绘本100本(Top 100),但专家的推荐,有时反而不如大家评选出来的结果值得参考。纽约公共图书馆的一名儿童图书馆员Elizabeth Bird,2009年在她的博客上搞了一个100本最佳绘本的民意投票(Top 100 Picture Books Poll of 2009)。下面这组书目,是通过投票选举出来的结果。(I bring to you the results of the Top 100 Picture Book Poll of 2009.)尽管这个结果产生自2009年,但是由于这些经典绘本大都经历了很多年的考验,因此在现在看来依然可以作为父母选择绘本的参考。 #1: Where the Wild Things Are by Maurice Sendak (1963) #2: Goodnight Moon by Margaret Wise Brown (1947) #3: The Very Hungry Caterpillar by Eric Carle (1979) #4: The Snowy Day by Ezra Jack Keats (1962) #5: Don’t Let the Pigeon Drive the Bus by Mo Willems (2003) #6: Make Way for Ducklings by Robert McCloskey (1941) #7: Harold and the Purple Crayon by Crockett Johnson (1955) #8: Madeline by Ludwig Bemelmans (1939) #9: Millions of Cats by Wanda Gag (1928) #10: Knuffle Bunny: A Cautionary Tale by Mo Willems (2004) #11: The Story of Ferdinand by Monroe Leaf, ill. Robert Lawson (1936) #12: Good Night Gorilla by Peggy Rathmann (1994) #13: Blueberries for Sal by Robert McCloskey (1948) #14: The True Story of the Three Little Pigs by Jon Scieszka, ill. Lane Smith(1989) #15: Lilly’s Purple Plastic Purse by Kevin Henkes (1996) #16: Owl Moon by Jane Yolen (1987) #17: Caps for Sale by Esphyr Slobodkina (1947) #18: In the Night Kitchen by Maurice Sendak (1970) #19: Miss Rumphius by Barbara Cooney (1982) #20: George and Martha by James Marshall (1972)

基于多模态的英语词汇教学- 摘要:根据多模态和多模态教学理论,对可利用的多种促进学生记忆词汇的渠道和方法进行分析,探讨多模态手段在英语词汇教学中的综合和得体应用,以期在提高学生词汇记忆质量方面,对广大英语教师起到一定的借鉴作用。 关键词:词汇教学;多模态;多模态教学手段 一、引言 词汇是构成语言的基础。从Holmes的多层次因素理论提出的大学程度阅读图表中提供的数据可看出,即使在大学水平,词汇能力依然至关重要[1]。要想掌握一门语言,需要大量的词汇积累,如果学习者达不到一定的词汇量,英语水平必然会受到制约。许多学生课后花很多时间和精力记忆词汇,也取得了一定的效果,但不是非常明显。那么作为教师,在英语词汇教学中如何激发学生学习和记忆词汇的兴趣,最大限度地刺激和调动学生的感官和身体,使词汇容易记忆、记忆深刻并能正确使用,从而打好英语学习的坚实基础呢?近年来,多模态的理论和使用方法不断被用于各个学科领域,语言教学也不例外。多模态教学法用于词汇教学,其优势和作用日益突出。为此,有必要对多模态手段及其在词汇教学中的应用进行研究,使之更完善地服务于教学,在词汇教学中能有机结合学生的感官和语言,提高学生词汇记忆的质量。 二、多模态的概念及其在英语词汇教学中的优势 多模态(multimodality)起源于上世纪九十年代的西方国家,Kress、Van Leeuwen 和Machin等人都对多模态进行了研究,从他们的研究中我们可以看出:“多模态就是对不同的交际模态之

间的相互影响与联系所进行的研究,不管是书面还是口头的,视觉还是听觉的。”[2]除了语言之外,图像、颜色、字体、音乐、舞蹈、手势、姿态等非语言符号与语言共同生成意义,即多模态话语。 “多模态教学所倡导的是:在教学过程中,教师通过多种模态的同时刺激,调动学生的多种感官协同运作,以便强化记忆,提高教学效率。”[3]多模态的教学手段运用在词汇教学中,能全方位地调动学习者感官,激发其学习和记忆兴趣、充分提高词汇的听力能力。多模态手段下,结合合理的肢体语言的使用,还能有效巩固词汇的输出应用。 传统的英语教科书主题选材往往有一定的局限性,很多教材字体排版和颜色单一,没有配套的多媒体辅助教学,枯燥乏味,无法提起学生学习兴趣,再加上有些英语教师的词汇教学手段单调,其普遍模式往往是:教师带读单词――讲授单词的意义与用法――学生背单词,使得学生词汇学习和记忆词汇的效果极其有限。如今,随着多模态教学法的不断发展以及计算机和多媒体技术的进步,人们尝试将多种模态的教学方法应用到英语词汇教学中。 三、英语词汇教学中多模态手段的应用 (一)各种手段配合看图描述 教师利用图片等为学生进行直观展示,引入词汇学习。如:教师可以通过图片的形式,要求学生看图描述,在描述的过程中,许多与该篇课文内容主题相关的词汇自然而然被用上。很多学生的描述生动有趣,让大家加深了对单词的理解,对于不会使用的词汇,教师在点评学生描述过程中引入,使用不同颜色的粉笔将其写到黑板上。这样,除了能从视觉上直接对学生的感官形成刺

现代人机交互技术最新情况 ●普适计算 1.IBM研发的BlueBoard(蓝板)技术,BlueBoard是一片薄薄的屏幕板,使用者只用其胸前挂着的看上去与普通员工卡没什么两样的小卡片,对准蓝板一下,就可以显示出其个人主页及定制好的其它内容。其后的一切操作和任务都只靠使用者的手指在蓝板上指指划划就全部搞定了,包括查阅资料、共享文件、与同事实时互传信息、发送指令、布置任务、协同工作等。 2. Informedia数字图书馆系统,Informedia采用了多模态输入方式,用户可以用语音提出查询请求。当用户要求从数据库中查找指定的电视新闻资料时,系统将根据用户的请求返回相应的视频片段。Informedia集成了语音、图像和自然语言理解技术,在目前的原型系统中,系统可以自动地分析视频数据,从中提取出摘要信息并编加索引。视频数据的分析过程结合了针对视频的镜头检测、图像分析,以及字幕文本(Close Caption)和伴音信息分析。与仅采用单一模态数据的分析方法相比,这种合成方式能够更好地提取视频的语义。 3.MIT的Galaxy系统, Galaxy系统向人们展示了系统如何与用户相协调,从而提供对多种异构数据源的无缝访问。对于用户的请求,系统不仅仅是返回来自各方面的答案,而且还尽可能地找出不同领域中的相关答案,并根据用户感兴趣的程度排序。随后,用户与系统进行更进一步的对话,最终获得尽可能准确的结果。这种带有联想性质的交互过程使得用户感觉不是在跟一个死板的计算机系统打交道,而是与一个聪明的代理人交互。 ●可穿戴计算 从谷歌、苹果、RIM相继发布的智能眼镜系列产品,到Fitbit、Jaw bone、耐克相继推出的智能腕带,可穿戴计算逐步成为了IT界的热词。iwatch,可以给智能手机和其他小型电子产品充电的太阳能比基尼,随行键盘牛仔裤,可以使聋人和盲人发送短信的移动框架手套,能更新Facebook状态的社交牛仔裤,能在衣服上呈现任何图案的内置电流回路K服饰,可以打电话的移动手套,电子鼓机T恤,乐器节拍手套,能通过行走的热能给智能手机充电的充电鞋,对饮食和睡眠状态进行跟踪的智能腕带……,可穿戴计算设备层出不穷,不断丰富着新一代IT产品市场。盛大、百度等企业也不甘落后,近期已相继出手,分别推出了geak智能手表和咕咚健康手环等产品。 ●多点触控 1.微软:Surface电脑,5月,《华尔街日报》的“D:All Things Digital”会议上,微软演示了一种Surface Computing(表面计算)技术,并由此组建了一个

外教一对一https://www.doczj.com/doc/0313635460.html, 英语多模态教学的设计 模态与人的感官和行为相关,包括听觉、视觉、触觉及图像、声音和动作,并有单模态、双模态和多模态之分。人际交往常常使用听觉和视觉模态,较少使用触觉和感觉模态,因此早先的教学更多依靠单一的语言文字模态,而较少调动其他辅助模态。随着科技的发展,更多形式的媒体进入人们的生活中,并起到很大的作用,成为学生惯常使用的交际工具,因此,语言教学也顺应时代的发展,开始注重其他模态在语言学习中的作用,多种模态共同协调,更好地辅助语言教学和学习。 根据语言学习理论,结合英语视听说教学实际,运用多模态教学方法的课堂教学可以按照下列思路进行设计: 1. 创设情境。教师提供的情景可运用视频、图片、音频等方式,可以设计真实或模拟的场景,提供相应的设备和仪器,调动学生的听觉、视觉、触觉,甚至嗅觉,以生动的形式展现给学生。 2. 提出问题。基于“问题学习”的原则,教师提出问题,提供可用的词汇、词组、结构和用法,引导学生进行思考。学生可以分组进行讨论,寻找决策,教师组织各小组得出最终的可行方案,对主题内容进行建设。 3. 搭建桥梁。这一过程是教师在学生的旧知识和新知识之间建立联系,以巩固旧知识,掌握新知识。例如教师可以仅提供某种问题解决方式,供学生评价和研究,让学生通过讨论或咨询,发现其他新的解决方式,并将多种方式根据进行对照和研判,做出最佳选择。 4. 组织合作。教师可以将全班分为数个小组,并根据学生特点任命角色,如,策划人、执行人、发言人、情报人(了解和刺探其他小组的情况,对本小组的讨论提供帮助)、小组长,等。这样既保证了全部成员的参与,又发挥了学生的特长;既调动了学生的积极性,又保障了主动学习的效果。 5. 展示成果。教师要求学生以各种方式展示成果,白板、PPT、图画、文字、解说,等,教师或自己评价或引导其他小组互相评价。使用这种方式,学生可以将本课堂所掌握的知识进行整理和呈现,不仅给学生更多的自主,也为学生的交流和共享提供机会,使学习过程更加生动和活泼,自然和逼真。

多模态视角下宋词翻译的意象美 【摘要】宋词作为中国文化的精髓,具有典型的审美价值。本文尝试以多模态话语分析为角度,以宋代词人辛弃疾的《西江月?夜行黄沙道中》的英译为例,分析宋词语篇翻译的意象美及运作过程,旨在帮助读者更好地鉴赏、理解宋词的翻译。 【关键词】多模态话语分析;宋词;翻译;意象 中图分类号:I046 文献标志码:A 文章编号:1007-0125(2016)06-0252-02 一、引言 多模态指的是多种符号系统,即多种模态相结合的交际产物或交际过程(Stokl,2004)。Bemsen(2002)认为多模态可以分为两种或两种以上的单模态。多模态话语分析是一种通过不同的交际模态传递信息的话语,这种交际模态把文字、声音、图像、动作等结合起来。因此,多模态话语分析是听觉、视觉、触觉等多种感觉,利用多样的方式及符号资源进行交际的现象(Kress & Vam Leewen,2001:2)。 宋词作为中国古代文学的精华,具有极高的审美价值。《西江月?夜行黄沙道中》是宋代词人辛弃疾创作的一首描写田园风光的词,近十几年来,不同学者从不同角度对该词

进行解读。如丁俊、轩治峰从认知语言学翻译观为视角解释宋词的翻译,杨红彬从经验功能分析《西江月》词三种英译文等,大多数都基于单模态的文字分析,但更需要从视觉模态、听觉模态等多模态角度进行理解。 多模态话语分析为分析诗词提供了一种新模式,开辟了多角度理解文本的方式。多模态话语分析的多角度形式让读者从形式美感、听觉美感、视觉美感、嗅觉美感等多方面欣赏译作。本文尝试以多模态话语分析为视角,探讨宋词翻译的意象美,更好地体会译作背后深层次的存在。 二、多模态话语分析 20世纪90年代,Kress 和Van Leeuwen 基于Halliday的系统功能语言学理论,开创了多模态话语研究的先河。该视角认为意义是由多种模态来实现的,所有的模态都通过社会使用变成符号资源,所有的语篇都是多模态的。在多模态语篇中,每一种模态都体现一定的意义,各种模态相互作用,共同构建语篇的整体意义。许多学者意识到单模态的局限性,多模态在学术界受到广泛关注。当前,国内外对多模态文本分析处于初始阶段,胡壮麟从理论与实践角度研究了多模态和多模态意义构建,朱永生发展了多模态的理论基础及研究方法,李占子从多模态的社会符号进行分析。大多数研究者着眼于影像资料、多媒体、视觉广告、海报、漫画等,对诗词的研究尚少。随着多模态话语分析的迅速发展,多模态话

经典儿童绘本100简介 六一儿童节给孩子最好的礼物,就是书。最棒的幼儿绘本——不一定是最权威的,但是经过多位教育界人士、海归、资深家长严格筛选,它们一定是亲切的、耐读的、经典的。 1.《我爸爸》:对爸爸的描述,孩子很有共鸣 (英)安东尼·布朗著、余治莹译,河北教育出版社 这本书的图大而夸张,文字很少,关键是书中的爸爸和现实中孩子对爸爸的印象超级一致,孩子会觉得说的是自己的爸爸。有一幅画配文说“爸爸吃得像河马一样多”,宝宝觉得很有趣。 2.《石头汤》:法国故事却很有中国味 (美〕琼·穆特著,南海出版公司 这本书是根据法国民间故事改编的,但是很中国很有禅味,只是我们自己的绘本比较少,只好转手看西方人的理解。那种合作、分享,以及有点小骗局的快乐细致入微地渗透到孩子的心里。 3.《小恩的秘密花园》:亲近自然是孩子都喜欢的 (美)萨拉·斯图尔特著、郭恩惠译,河北教育出版社 对自然的亲近是每个孩子都会喜欢的。这是画面对孩子的影响,而书信的方式也是新颖吸引人的地方,其实每个人都有渴望书信的情结,渴望获取那份亲情和独有的慰藉,更何况是带着从悲观走向希望的一本。 4.《花婆婆》:女儿说海子的诗就像花婆婆 (美)库尼绘、方素珍译,河北教育出版社 这本应该是大人比较喜欢的画风,唯美而又完整的一个女人的一生记录,颇有人淡如菊的味道。是台湾人翻译的,文字很清淡。有小朋友第一次听说海子的诗,写的是面朝大海、春暖花开,她立刻说,海子写得很像花婆婆哎。 5.《多多老板和森林婆婆》:环保主题可以讲得很亲切 (日)藤真知子文、木杨叶子图、蒲蒲兰译,21世纪出版社 按我们大人的话,这就是一本宣传环保的主题绘本,而且反映的就是当下社会发展的现状。为什么有人大肆砍伐树木,为什么有人要大费周章地植树造林?看过这本绘本,已经无需再对孩子进行环保的说教了,他们已经什么都了解! 6.《阿秋和阿狐》:孩子相信玩偶会保护阿秋 (日)林明子著,南海出版社 《阿秋和阿狐》讲述的是一只玩具小狐狸和一个小女孩之间关于友情与探险的故事。作者始终没告诉读者,阿狐是只玩具狐。读这本书的孩子都知道阿狐是奶奶送给阿秋的玩具,但是他们仍然相信阿狐会保护阿秋。 7.“玛蒂娜”系列:家里有女宝宝的请立刻下手 (比)马里耶·德拉艾著、徐兆源译,中国少年儿童出版社 但凡家里有女宝宝的,就请立刻下手。对父母来说,与其买好妈妈胜过啥啥的说教类育子书籍,不如看看这个比利时的小女孩的生活中的点滴和方面。只要心态平和,我们的女儿也可以生活得如此淑娴雅静。

人机交互技术的发展与现状 一.什么是人机交互技术? 二.人机交互技术(Human-Computer Interaction Techniques)是指通过计算机输入、 输出设备,以有效的方式实现人与计算机对话的技术。人机交互技术包括机器通过输出或显示设备给人提供大量有关信息及提示请示等,人通过输入设备给机器输入有关信息,回答问题及提示请示等。人机交互技术是计算机用户界面设计中的重要内容之一。它与认知学、人机工程学、心理学等学科领域有密切的联系。也指通过电极将神经信号与电子信号互相联系,达到人脑与电脑互相沟通的技术,可以预见,电脑甚至可以在未来成为一种媒介,达到人脑与人脑意识之间的交流,即心灵感应。二. 人机交互技术的发展人机交互的发展历史,是从人适应计算机到计算机不断地适应人的发展史。 1959年美国学者B.Shackel从人在操纵计算机时如何才能减轻疲劳出发,提出了被认为是人机界面的第一篇文献的关于计算机控制台设计的人机工程学的论文。1960年,Liklider JCK首次提出人机紧密共栖(Human-Computer Close Symbiosis)的概念,被视为人机界面学的启蒙观点。1969年在英国剑桥大学召开了第一次人机系统国际大会,同年第一份专业杂志国际人机研究(IJMMS)创刊。可以说,1969年是人机界面学发展史的里程碑。在1970年成立了两个HCI研究中心:一个是英国的Loughbocough大学的HUSAT研究中心,另一个是美国Xerox公司的Palo Alto研究中心。 1970年到1973年出版了四本与计算机相关的人机工程学专着,为人机交互界面的发展指明了方向。 20世纪80年代初期,学术界相继出版了六本专着,对最新的人机交互研究成果进行了总结。人机交互学科逐渐形成了自己的理论体系和实践范畴的架构。理论体系方面,从人机工程学独立出来,更加强调认知心理学以及行为学和社会学的某些人文科学的理论指导;实践范畴方面,从人机界面(人机接口)拓延开来,强调计算机对于人的反馈交互作用。人机界面一词被人机交互所取代。HCI中的I,也由Interface(界面/接口)变成了Interaction(交互)。人机

基于多模态的英语词汇教学 摘要:根据多模态和多模态教学理论,对可利用的多种促进学生记忆词汇的渠道和方法进行分析,探讨多模态手段在英语词汇教学中的综合和得体应用,以期在提高学生词汇记忆质量方面,对广大英语教师起到一定的借鉴作用。 关键词:词汇教学;多模态;多模态教学手段 一、引言 词汇是构成语言的基础。从Holmes的多层次因素理论提出的大学程度阅读图表中提供的数据可看出,即使在大学水平,词汇能力依然至关重要[1]。要想掌握一门语言,需要大量的词汇积累,如果学习者达不到一定的词汇量,英语水平必然会受到制约。许多学生课后花很多时间和精力记忆词汇,也取得了一定的效果,但不是非常明显。那么作为教师,在英语词汇教学中如何激发学生学习和记忆词汇的兴趣,最大限度地刺激和调动学生的感官和身体,使词汇容易记忆、记忆深刻并能正确使用,从而打好英语学习的坚实基础呢?近年来,多模态的理论和使用方法不断被用于各个学科领域,语言教学也不例外。多模态教学法用于词汇教学,其优势和作用日益突出。为此,有必要对多模态手段及其在词汇教学中的应用进行研究,使之更完善地服务于教学,在词汇教学中能有机结合学生的感官和语言,提高学生词汇记忆的质量。 二、多模态的概念及其在英语词汇教学中的优势

多模态(multimodality)起源于上世纪九十年代的西方国家,Kress、Van Leeuwen 和Machin等人都对多模态进行了研究,从他们的研究中我们可以看出:“多模态就是对不同的交际模态之间的相互影响与联系所进行的研究,不管是书面还是口头的,视觉还是听觉的。”[2]除了语言之外,图像、颜色、字体、音乐、舞蹈、手势、姿态等非语言符号与语言共同生成意义,即多模态话语。 “多模态教学所倡导的是:在教学过程中,教师通过多种模态的同时刺激,调动学生的多种感官协同运作,以便强化记忆,提高教学效率。”[3]多模态的教学手段运用在词汇教学中,能全方位地调动学习者感官,激发其学习和记忆兴趣、充分提高词汇的听力能力。多模态手段下,结合合理的肢体语言的使用,还能有效巩固词汇的输出应用。 传统的英语教科书主题选材往往有一定的局限性,很多教材字体排版和颜色单一,没有配套的多媒体辅助教学,枯燥乏味,无法提起学生学习兴趣,再加上有些英语教师的词汇教学手段单调,其普遍模式往往是:教师带读单词――讲授单词的意义与用法――学生背单词,使得学生词汇学习和记忆词汇的效果极其有限。如今,随着多模态教学法的不断发展以及计算机和多媒体技术的进步,人们尝试将多种模态的教学方法应用到英语词汇教学中。 三、英语词汇教学中多模态手段的应用 (一)各种手段配合看图描述 教师利用图片等为学生进行直观展示,引入词汇学习。如:教师可

MY DAD(我爸爸) 1、He’s all right, my dad. 1、这是我爸爸,他真的很棒! 2、My dad isn’t afraid of ANYTHING. 2、我爸爸什么都不怕 3、even the Big Bad Wolf. 3、连坏蛋大野狼也不怕。 4、He can jump right over the moon, 4、他可以从月亮上跳过去。 5、And walk on a tightrope (without falling off). 5、还会走高空绳索(不会掉下去) 6、He can wrestle with giants. 6、他敢跟大力士摔跤 7、or win the father’s race on sports day, easily. 7、在运动会比赛中,轻轻松松就获得了第一名。 8、He’s all right, my dad.

8、我爸爸真的很棒! 9、My dad can eat like a house, 9、我爸爸吃得像马一样多 10、and he can swim like a fish. 10、我爸爸游得和鱼一样快。 11、He’s as st rong as a gorilla, 11、他像大猩猩一样强壮。 12、And as happy as a hippopotamus. 12、也像河马一样快乐 13、He’s all right, my dad. 13、我爸爸真的很棒! 14、My dad’s as a house, 14、我爸爸想房子一样高大 15、and as soft as my teddy. 15、有时又像泰迪熊一样柔软 16、He’s as wise as an owl, 16、他像猫头鹰一样聪明。 17、except when he tries to help.

人机交互中的计算机视觉技术 基于视觉的接口概念 计算机视觉是一门试图通过图像处理或视频处理而使计算机具备“ 看” 的能力的计算学科。通过理解图像形成的几何和辐射线测定, 接受器(相机的属性和物理世界的属性, 就有可能 (至少在某些情况下从图像中推断出关于事物的有用信息, 例如一块织物的颜色、一圈染了色的痕迹的宽度、火星上一个移动机器人面前的障碍物的大小、监防系统中一张人脸的身份、海底植物的类型或者是 MRI 扫描图中的肿瘤位置。计算机视觉研究的就是如何能健壮、有效地完成这类的任务。最初计算机视觉被看作是人工智能的一个子方向, 现在已成为一个活跃的研究领域并长达 40年了。 基于视觉的接口任务 至今,计算机视觉技术应用到人机交互中已取得了显著的成功,并在其它领域中也显示其前景。人脸检测和人脸识别获得了最多的关注, 也取得了最多的进展。第一批用于人脸识别的计算机程序出现在 60年代末和 70年代初,但直到 90年代初,计算机运算才足够快,以支持这些实时任务。人脸识别的问题产生了许多基于特征位置、人脸形状、人脸纹理以及它们间组合的计算模型, 包括主成分分析、线性判别式分析、 Gabor 小波网络和 .Active Appearance Model(AAM . 许多公司,例如Identix,Viisage Technology和 Cognitec System,正在为出入、安全和监防等应用开发和出售人脸识别技术。这些系统已经被部署到公共场所, 例如机场、城市广场以及私人的出入受限的环境。要想对人脸识别研究有一个全面的认识,见。 基于视觉的接口技术进展 尽管在一些个别应用中取得了成功,但纵使在几十年的研究之后,计算机视觉还没有在商业上被广泛使用。几种趋势似乎表明了这种情形即将会发生改变。硬件界的摩尔定律的发展, 相机技术的进步, 数码视频安装的快速增长以及软件工具的可获取性(例如 intel 的 OpenCV libraray使视觉系统能够变得小巧、灵

多模态理论下的大学xx教学模式研究 大学英语翻译教学本是提高学生语言综合运用能力,将知识与实践相结合的重要输出环节。在当前大学英语教学中,存在着对翻译教学重视程度不足、探索不够等问题,实际教学效率低下,教学方法呆板滞后,学生的学习效率自然降低了。在翻译教学中运用多模态教学的方式,能够有效提高教学效率,多角度调动学生学习兴趣,实现翻译教学的发展转变。 一、大学英语翻译教学现状及多模态理论运用的意义当前大学英语翻译教学现状并不十分乐观。翻译教学本是英语教学中重要的综合性环节,能够综合运用着词汇、语法知识,并且从语言情境方面影响着教学的发展,是英语实践的重要环节。然而在当前各高校英语教学中,对于翻译教学缺乏足够的重视,仅仅将其作为词汇、语法等学习的附庸,无论在教学体系设计、课程安排等诸多方面都存在着明显的不足。这些现象都说明当前英语翻译教学现状并不乐观。对于英语学习者而言,翻译需要良好的双语素养,然而重视程度的不足,使得无论是教师还是学生都既缺乏探究心理又缺乏努力方向,对于翻译的学习停留在“见招拆招”的单句翻译,而未能形成英语语言环境下的翻译系统与意识,更难把握足够的措辞环境。 在英语翻译教学中运用多模态理论,能够从多个角度调动学生学习的积极性,以更为综合的方式将英语教学展开来。整体而言,在多模态理论指导下翻译教学具有如下优势:其一,想要达到多模态教学就要打破传统课堂“教师――学生”“粉笔+黑板”的教学模式,而探索更为丰富的课堂组织形式,促使了课堂改革的发展;其二,多模态教学下,能够将听、说、语境等多种因素有机的结合起来,完成教学组织,成为翻译教学的关键所在;其三,多模态教学更多的照顾到学生的主观感悟,符合新课标要求,为学生未来的发展奠定基础。经过这些分析可见,多模态理论能从形式与内涵上推动英语教学的发展[1]。 二、多模态理论指导下大学英语翻译教学策略思考 (一)为学生构建一定的语境 多模态教学调动学生多种感官,首先就是要在一个整体上为学生构建一个语言环境。翻译的基础就是要求学生有扎实的双语基础,能够用不同的语言表

4招高效给孩子读英语绘本 孩子学英语时,很多父母或许有过这样的疑问:“多念英文故事书,能不能就可以让孩子听懂英文呢?”我们认为,孩子的耳朵是上帝造物的神奇展现,只要从耳朵听进去的东西,将化为语言消息停留在脑袋里,因而只要孩子听得足够多,就会自然习得一种语言,慢慢地也就能听懂更多的英文。 那么要怎样才能科学地为孩子念读绘本呢?瑞思学科英语老师建议,家长可以在绘本阅读过程中潜移默化中听进脑海里,拼字、句型、文法、时态……故事书上都有,多看英文书,自然而然就会习得十之八九。 1.尽量维持绘本的原汁原味 当然,英文故事书的难易度,有时不能光以内容文字的多寡来衡量,那么要怎么样念不同程度的绘本给孩子听呢?瑞思老师建议可以用一句英文,一句中文的形式念给孩子听。就像自己是一名英文口译员,有人说了一句英文,马上将之翻译成中文说出来,只是这两个角色都是由自己担任而已。例如——英文:Danny is in a hurry. 中文:Danny走得好匆忙。英文:"I am six years old today." Danny said. 中文:Danny说:「我今年六岁了。」 对于书中英文的部分可以照书念,尽量维持原汁原味。而中文翻译的部分要自然、口语化,不用拘泥于逐字翻译。就是当你念完了英文之后,脑子里出现什么样的中文意思,就将这个意思说出来。其实这就像我们帮很小的孩子念中文故事书时,通常不会照本宣科,而是看完文字后再用自己的口语说出来,是一样的道理。 另一个很重要的原则是,家长不要拘泥于英文单字的逐字翻译,让孩子先了解整句的意思,再用汉语说出来就好。多翻译了一个字或少翻译了一个字都不要紧,只要全句的意思没错就好,倒是说得自然、口语化还比较重要。而如果连翻译的汉语部分都说得别扭、不好听,对孩子来说,尤其是在念英文故事书的起步阶段,父母可能要花更多的力气,才能吸引孩子专心聆听。 2.不要自行加上自己的汉语解释 对于绘本里一些词语或名词的解释,瑞思老师也建议,在对孩子念绘本的过程中,除非孩子主动问问题,不然最好尽量保持一句英文、一句中文,接着就换下一句的方式来读,尽量不要在中文翻译之后,又自行加上其他的国语解释。因为在翻译之后,又自行加了与原文无关的字眼,孩子可能就无法正确比对中英文的关系、或是声音与绘图的关系,也可能减低了这个方法的效果。 3.孩子熟悉英语绘本后就只念英文

2009年7月第30卷第4期 外语教学 F ore i gn Langua ge E du ca ti on J u l y.2009 Vo.l30No.4多模态语篇分析与系统功能语言学 杨信彰 (厦门大学外文学院福建厦门361005) 摘要:随着科学技术的发展和社会需求的变化,多模态语篇已成为当代生活的一部分。语篇表达模式的变化给理论描写带来了新要求。在多模态语篇中,各种模态互相作用,互相补充,在语境创建意义。本文综合多模态的研究,讨论多模态的本质,说明系统功能语言学理论在多模态语篇分析的作用,强调多模态语篇分析应重视语言与其他模态之间的密切关系。 关键词:多模态语篇;符号;系统功能语言学 中图分类号:H030文献标识码:A文章编号:100025544(2009)0420011204 Abstr ac t:W ith the deve l op m ent of science and technology and the changes i n socia l de m ands,m ulti m oda l d isco urse has be2 co me part of co ntemporary life.The change of modes of expressi on i n discourse has brought about new de m ands for t heoreti ca l descr i pti on.In m ulti m oda l discourse,different m o des interac t with and co mp le m ent each ot her t o create m ean i ng i n context. Based on the st udies i n mu lti m oda lity,this paper discusses the nature of mu lti m odality,ill ustrates the role of system ic t heory in mu lti m o da l d i scourse ana lysis.The pape r a lso stresses t he i m portance of the c l ose re lati onship bet ween l anguage and other m o da lities i n mu lti m odal discourse ana l ys i s. K ey word s:mu lti m odal d i scourse;si gn;system ic f unctio na l li ng u istics 1.引言 如今,我们生活在一个数字化和信息化的时代。新技术的出现带来了语篇的变化。人们不再把语言作为唯一的意义表达手段。以前人们关注的是单模态语篇,语篇分析以前仅仅集中在语言语篇,但由于技术的发展,语篇出现了照片、图画、精美的布局等多模态特征。 H alliday(1978)认为语境包括语场、语旨和语式。在多模态语篇,语式发生了变化。也就是说,表达层发生了变化。这些变化使得内容层的变化成为可能。K ress等人(1997)意识到了图像在语篇中已作为重要的交际形式,因此引起语篇的形式和特征发生了变化,读者在阅读时不再仅仅依赖于书面语言。他们认为从语言单模态到多模态的发展促使人们从语言学到符号学的变化。 Matthiessen&Nesb itt(1996)意识到技术和社会需求的发展导致出现语言理论和描写脱离的现象。多模态语篇分析的一个方面是分析语篇中的各种表征模态创建意义的机制。多模态的出现对符号学和语言学理论提出了新要求,因而也成为语篇分析的一个热门话题。不同符号系统呈不同的模态。人们寻求和探讨适用于各种符号模态的理论框架。有些学者根据H alli day (1978,1994)的符号理论尝试解释各种模态的语法,如Mart i nec(1998)动作符号学、O.Toole(1994)和Kress& van Lee uwe n(1996)的视觉符号学、van Leeuwen(1999)的声音符号学。本文拟综合多模态的研究,讨论多模态的本质以及多模态与功能语言学理论的关系,说明语言在多模态语篇分析中的地位。 2.多模态的普遍性 多模态是人类生活的一种普遍内在特征。H alli day 早就意识到多模态语篇的存在。他(H alli day1985:4)认为除了语言,人们还有许多表达意义的方法,如绘画、雕塑、音乐、舞蹈等等。语言是文化中的一套符号系统,但语言的这一意义潜势最具有系统性。H alliday(1977/ 2003)就指出语言的独特之处在于它有三层的编码系统,即意义、表达以及位于其中的词汇语法。H alli day (1985:10)把在情景语境中起作用的任何一个语言实例称作语篇,并认为语篇可以是口头或书面的,也可以是其他的表达媒介。这就是说,语篇的媒介可以多种多样,既可以使用某种符号系统作为表达媒介,也可以是多种模态的结合。但对Matthiessen(2007:4)来说,要探讨多模态,首先就要考虑语言这个符号系统。他的观点是,语言的内在本质就是多模态的。Matthiessen(2007: 10)认为语言本身的多模态表现在语言表达层至少有三个系统:语音、文字和其他符号。语言表达层的这些模态与其他符号系统一起发展。Matthiessen(2007)观察了原始母语和成人语言的发展和变化,把原始母语也看作是多模态的。原始母语采用整个身体作为表达层,意义 # 11 #