数据库外文翻译

- 格式:doc

- 大小:94.00 KB

- 文档页数:11

外文翻译:索引原文来源:Thomas Kyte.Expert Oracle Database Architecture .2nd Edition.译文正文:什么情况下使用B*树索引?我并不盲目地相信“法则”(任何法则都有例外),对于什么时候该用B*索引,我没有经验可以告诉你。

为了证明为什么这个方面我无法提供任何经验,下面给出两种等效作法:•使用B*树索引,如果你想通过索引的方式去获得表中所占比例很小的那些行。

•使用B *树索引,如果你要处理的表和索引许多可以代替表中使用的行。

这些规则似乎提供相互矛盾的意见,但在现实中,他们不是这样的,他们只是涉及两个极为不同的情况。

有两种方式使用上述意见给予索引:•作为获取表中某些行的手段。

你将读取索引去获得表中的某一行。

在这里你想获得表中所占比例很小的行。

•作为获取查询结果的手段。

这个索引包含足够信息来回复整个查询,我们将不用去查询全表。

这个索引将作为该表的一个瘦版本。

还有其他方式—例如,我们使用索引去检索表的所有行,包括那些没有建索引的列。

这似乎违背了刚提出的两个规则。

这种方式获得将是一个真正的交互式应用程序。

该应用中,其中你将获取其中的某些行,并展示它们,等等。

你想获取的是针对初始响应时间的查询优化,而不是针对整个查询吞吐量的。

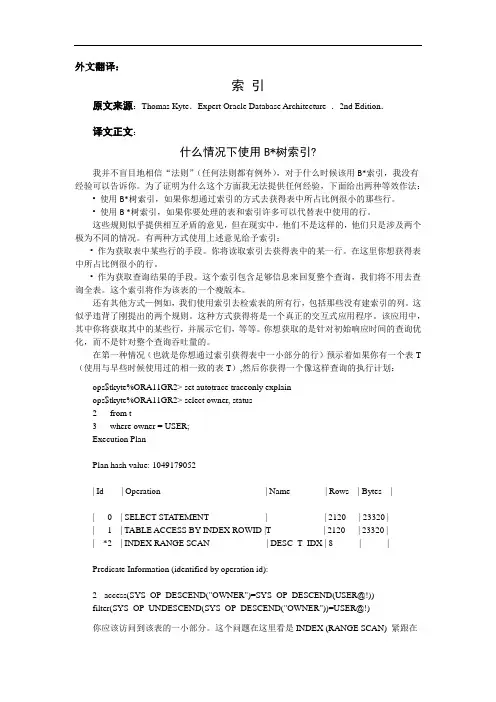

在第一种情况(也就是你想通过索引获得表中一小部分的行)预示着如果你有一个表T (使用与早些时候使用过的相一致的表T),然后你获得一个像这样查询的执行计划:ops$tkyte%ORA11GR2> set autotrace traceonly explainops$tkyte%ORA11GR2> select owner, status2 from t3 where owner = USER;Execution Plan----------------------------------------------------------Plan hash value: 1049179052------------------------------------------------------------------| Id | Operation | Name | Rows | Bytes |------------------------------------------------------------------| 0 | SELECT STATEMENT | | 2120 | 23320 || 1 | TABLE ACCESS BY INDEX ROWID |T | 2120 | 23320 || *2 | INDEX RANGE SCAN | DESC_T_IDX | 8 | |------------------------------------------------------------------Predicate Information (identified by operation id):---------------------------------------------------2 - access(SYS_OP_DESCEND("OWNER")=SYS_OP_DESCEND(USER@!))filter(SYS_OP_UNDESCEND(SYS_OP_DESCEND("OWNER"))=USER@!)你应该访问到该表的一小部分。

数据库概论A database consists of a file or a set of files. The information in these files may be broken down into records, each of which consists of one or more fields. Fields are the basic units of data storage, and each field typically contains information pertaining to one aspect or attribute of the entity described by the database. Using keywords and various sorting commands, users can rapidly search, rearrange, group, and select the fields in many records to retrieve or create reports on particular aggregates of data.一个数据库由一个文件或文件集合组成。

这些文件中的信息可分解成一个个记录,每个记录有一个或多个域。

域是数据存储的基本单位,每个域一般含有由数据库描述的属于实体的一个方面或一个特性的信息。

用户使用键盘和各种排序命令,能够快速查找、重排、分组并在查找的许多记录中选择相应的域,建立特定集上的报表。

Database records and files must be organized to allow retrieval of the information. Early systems were arranged sequentially (i.e., alphabetically, numerically, or chronologically); the development of direct-access storage devices made possible random access to data via indexes. Queries are the main way users retrieve database information. Typically, the user provides a string of characters, and the computer searches the database for a corresponding sequence and provides the source materials in which those characters appear. A user can request, for example, all records in which the content of the field for a pe rson’s last name is the word Smith.数据库记录和文件的组织必须确保能对信息进行检索。

毕业设计(论文)——外文翻译(原文)Database management moves into the GridDatabase management software (DBMS) has been the backbone of enterprise computing for the past many years. The market is growing bigger in terms of size, and will continue to gain prominence in 2004. With the consolidation, standardisation and centralisation of IT systems underway in most organisations, the demand for highly scalable and reliable database systems is on the rise.According to reliable industry estimates, the Indian database market is currently at about $100 million, and the top three players put together have a market share of more than 70 percent. IDC expects the information and data management software segment to grow at a compounded annual growth rate (CAGR) of 17 percent till 2006. “There will be independent solutions like business intelligence that are largely going to drive the use and adoption of databases,” says Tarun Malik, product marketing manager, Microsoft India.The importance of having a database and data warehouses for various specific applications will also be a factor of growth to drive the market. Early adapters of sophisticated database management and business intelligence tools would be large computing verticals like the government, the banking, financial services and insurance (BFSI) sector, telecom, IT services, manufacturing and the retail sector.Current statusFour or five years ago DBMS was just like a data store, with medium and large companies only looking at it as a tool for storing data. Then around three years ago it really moved into what is called the relational database space. This is where the concept of applications on databases came into the picture.In terms of users there has been a shift from meagre database administrators to developers to data warehouse managers and also towards business intelligence usage that involves a whole lot of people and not just CIOs. This means users have also evolved with the evolution of the product, its usage and market. Till the time it was a data store, database administrators could have managed it. But when it became a data warehouse, CIOs and skilled technical experts got involved.That is why DBMS is now an integral and crucial part of the overall IT policy of large enterprises. The importance of DBMS has come to fore especially after the adoption of ERP and CRM solutions. If you look at the top of the pyramid, for the top few IT spenders, DBMS has become as important as network infrastructure. “As a matter of fact, that is why it is also driving the platform strategy of vendors,” says Malik. However, the trend is still evolving in the SME space.One can now see a very strong momentum in the marketplace. As data continues to grow exponentially, one witnesses the type of information changing from record-oriented to content-oriented data. Databases have become content or information repositories. Handling that and supporting applications is not only transaction-oriented but analysis-oriented. Mixed content is going to be a way in which databases differentiate themselves. There is the trend to push more analytics into the database, with abilities like data mining in real-time to support new applications.XML will be important as users now store and build content repositories to represent that kind of content. In terms of topology of database performance, the ability to get performance, scalability and high availability in different environments is also gaining importance.Another clear trend in the database space is towards building infrastructure that is robust, secure and low-cost. That is why almost all vendors are looking at offering unlimited scalability and reliability on low-cost computers.DriversApart from the increasing adoption of databases in different verticals, the return on investment (RoI) and functionality of databases are also fuelling the growth of DBMS in the country. Consumers, especially after the dot-com debacle, have started looking at spending less and deriving more RoI from new technology, products and software. Any vendor who relates his offering to RoI would be a successful vendor.Open SourceNo one has so far dumped a clustered Oracle 9i database and replaced it with a free, open source database downloaded from the Web and running on a bunch of Intel-based Linux/free OS servers. But a growing number of users are pioneering these freely available databases. These users say that open source databases are reaching a stage where they can become the latest addition to their inventory of open source tools, including the Linux operating system, the Apache Web server and the Tomcat Java servlet engine According to these users, the main attractions of an open source database are:•V ery fast performance, especially in read-only applications.•No or nominal licensing costs.•Low administrative and operational costs.As to the back-end servers, users are still ingrained with Oracle or DB2, which has a fair amount of support for Linux.It is a typical pattern in companies that are experimenting with open source databases. High-volume database updates, which are the essence of transaction-processing applications, remain anchored on products such as Oracle‟s 9i and IBM‟s DB2 Universal Database, and increasingly Microsoft‟s SQL Server. But there are a host of new application areas that don‟t require t he complex and equally expensive features of conventional databases.MySQL open source database from MySQL has spread from being used by a few groups to the core infrastructure of the Internet portal. MySQL is a core piece of the content-generation system for many large users. Open source databases are typically available for free or for a nominal charge and include the complete source code. Finally, in accordance with the terms of the GNU General Public License (GPL), users typically have the freedom to change any part of the source code and use it without charge as long as they publish the change. Once published, the change can be used by anyone.An alternative arrangement is the Berkeley Software Development licence which is used by . Developers can use, copy, modify, and distribute this software free of cost.There is an array of open source databases. Firebird, based on Borland‟s venerable Inter Base database is one of the few that have the support and blessings of vendors and the well-organised community of coders.MySQL is also proving to be popular among open source communities. Every time a new programming language comes out, the first thing that developers usually do is add database connectivity to MySQL. PostgreSQL is the most matured of the open databases, and maintains an extensive Web presence for its developer community. It is a Canadian company that offers applications along with support services. Red Hat bases its product offerings on PostgreSQL.The open databases are often storehouses of innovation. MySQL has an architecture that has a core relational manager that can be used by different kinds of plug-in data handlers. These open databases tend to be far simpler than their conventional counterparts in all these areas. They also have low operational overheads.A common criticism of open source databases is that they don‟t support transactions or don‟t do as well as commercial products. For example, MySQL has a fast database for content store, but it is still immature in terms of transaction processing at the back-end. However, immaturity in some areas of an open database might not be a problem if the software has what you need in other areas, or has a credible track record of delivering new features on a regular basis.ConclusionThe database segment will continue to grow as businesses rely more and more on information as a source of competitive advantage. However, the market has definitely evolved over the years though it has not yet reached high maturity levels. As the SME segment has started adopting the technology, experts opine that there is going to be huge momentum in the market. The Indian SME market is no longer just a PC market; rather, it has become a well-networked and well-connected segment, which is why it has also started using servers. On the enterprise side one will witness a lot of momentum coming around solutions like applicationintegration, business intelligence and reporting services. It is expected that three factors are going to drive the Indian DBMS market in this fiscal: solutions, RoI and functionality. With vendors focusing on these aspects, one expects the market to experience good growth this fiscal.Oracle IndiaOracle feels that by adopting Grid computing (the recently announced 10G enablement) with databases like Oracle 9i, organisations can reduce the cost of IT by running it on low-cost commodity hardware. Oracle has the ability in terms of delivering all elements of the information architecture. On one hand are the development tools and database and application servers, and on other hand are the comprehensive suite of applications in the Oracle E-Business Suite. Moreover, being based on open standards, customers can adopt a hybrid model, which has a mix of legacy and customised applications, and offers a stepping-stone for organisations to move into an infrastructure with a common data model.In terms of technology, Oracle‟s focus is all on the components of the Oracle 10g infrastructure software. Oracle Database and Oracle Application Server provide a powerful deployment platform for enterprise applications, starting from companies with turnover of Rs 10 crore to the largest corporates . It has immense applicability in BFSI, manufacturing, telecom, and the government sector. It has also one of the most secure database technologies. Currently, a number of state governments are implementing Oracle-based solutions. Oracle has already launched the next release of its infrastructure software: Oracle 10g. Oracle 10g is the infrastructure software for Grid computing, which lets the user combine the power of multiple low-cost computers to work as a single powerful and reliable computer.Apart from enabling Grid computing, Oracle Database 10g includes new self-management and tuning capabilities that empower a DBA to focus on higher value-added jobs rather than the day to day management of a database. It allows database administrators to work with the consumers of technology to determine service level agreements and use policy-based database management capability to manage the system. With the release of Oracle 10g Infrastructure software, Oracle hopes to further increase its market share in India. MicrosoftMicrosoft is very aggressively growing its base for SQL Server 2000. It promises to meet the demands of customers‟ data management systems. The company has also gained strength with the promise of ease of manageability and better RoI. Again, as a corporation, the kind of support Microsoft offers to its consumers is unmatched. It involves its customers in the development of its new products. For example, development of the next version of SQL Server 2000 called …Y ukon‟ has involved not only Microsoft partners but also prime customers worldwide. The kind of investment that Microsoft puts into R&D is huge.In the days to come, Microsoft will be focusing more on business value to consumers. The consumer understands the business value of a solution, be it Business Intelligence or application integration. To increase its focus on the mid-tier and the SME market, the company is also going to enhance its channels. Microsoft is also looking at evolving its product with its new version coming up by the end of this calendar year.Bettering RoI is at the top of Microsoft‟s agenda. It believes that the b iggest RoI is going to come through the deployment of the solution, which is going to help drive the customer‟s business. Microsoft, all across its server lines, is known for ease of use and manageability.The company recently released Reporting Services in SQL Server 2000 and that too at no additional cost. Last year it had introduced a 64-bit version of SQL Server at no additional cost. The kind of rich product functionalities that the company is bringing in will clearly help users in realising better RoI. Microsoft will continue to focus on segments like government, BFSI, telecom, IT services, manufacturing and retail. Sybase-SAP allianceIn a move to provide customers with greater choice, SAP has started offering its business applications for small com panies on Sybase‟s database platform, in addition to Microsoft‟s SQL Server database. Under the agreement, SAP and Sybase will integrate SAP‟s …Business One‟ product suite for small and mid-size businesses (SMEs) into Sybase‟s Adaptive Server Enterprise (A SE) database system.Previously, SAP‟s Business One application was available on Microsoft‟s SQL Server database only.SAP will market its combined offering with Sybase through its partner distribution channels. Both SAP and Sybase will dedicate marketing, alliance and training resources to the partnership. In addition, SAP and Sybase plan to develop and market Sybase mobile solutions for Business One customers.本文来源于:/flk.aspx?id=191779&fn=OA00338786.mht&url=http%3a%2f%2fwww.expresscomp %2f20040329%2fdms01.shtml毕业设计(论文)——外文翻译(译文)网格中的数据库管理在过去的几年时间里,数据库管理系统(DBMS)已成为企业计算机的运行中枢。

外文翻译About the database of the knowledge of the deadlock Database itself provides lock management mechanism, but from a hand, database is the client applications "puppet", this is mainly because the client to the server has complete control of the gain of locks ability. The client in enquiries in the request and the way to query processing tend to have direct control, so, if we application design reasonable enough, then appear database is normal phenomenon dead lock.Below are listed some easy to have locked application examples:A, the client cancel inquires no roll back after practice.Most of the application is inquires often happens homework. However, users through the front desk the client application inquires the backend database, sometimes will cancel inquires for any variety of reasons. If the user to open the window after mouth query, because users find reflect crash or slow compelled to cancel the query. But, when the client when cancel inquires, if not add rollback transaction statement, then at this time, because the user has to the server sends the inquiry's request, so, the backend database involved in the table, all have been added L locked. So even if the user cancel after inquires, all in the affairs for the locks within will remain. At this point, if other users need to check on the table or the user to open the window through input inquires to query conditions to improve the system response speed occurs when the jam phenomenon.Second, the client not to get all the results of my query.Usually, the user will be sent to the server after queries, foreground application must be done at once extraction all the results do. If the application did not extract all the results trip, it produces a problem. For as long as the application did not withdraw promptly all the results, the lock may stay at table and block other users. Since the application has been submitted to the server will SQ statements, the application must be extracted all results do. If the application does not follow the principle words (such as because at that time and no oversight configuration), can't fundamentally solve congestion.Three, inquires the execution time too long.Some inquires a relatively long time will cost. As for the query design is not reasonable or query design to watch and record it is, will make inquires the execution time lengthen. If sometimes need to Update on users record or Delete operation, if the line is involved in it, you will get a lot of lock. These locks whether finally upgrade to watch the lock, can block other inquiries.So often, don't take long time running decision support search and online transaction processing inquires the mixed together.When database meet blocked, often need to check the application submitted to the SQL statement itself, and check and connection management, all the results do processing and other relevant application behavior. Usually, the lock for to avoid the conflict in the jam, the author has the following Suggestions.Suggest a: after the completion of the extraction of all query results do.Some applications in order to improve the response speed of the user inquires, will have the option of extraction need record. The "smart" looks very reasonable, but, but will cause more waste. Because inquires not timely and fruit extraction of words, the lock cannot be released. When others inquires the data, will be happening.So, the author suggest in application design, database query for record to the extraction of in time. Through other means, such as adding inquires the conditions, or the way backstage inquires, to improve the efficiency of the inquires. At the same time, in the application level set reasonable cache, and can also be very significantly improved query efficiency.Suggest two: in the transaction execution don't let the user input content.Although in the affairs of the process with sex, can let the user participation, in order to improve the interactivity. But, we don't recommend the database administrator tend to do so. Because if the user in affairs during the exec ution of the input and number, will extend the affairs of the execution time. Although people smarter, but the response speed still don't have a computer so fast. So, during the implementation of the user participation to let the process, will extend the a ffairs of waiting time. So unless there is a special needs, not in the application's execution process, reminds the user input parameters. Some affairs of the executive must parameters, best provide beforehand. If can through the variables in the parameters such as need to go in.Suggest three: make affairs as far as possible the brief.The author thinks that, database administrator should put some problem is simplified. When a need to many SQL statements to complete, might as well take the task decomposition. At the same time, it breaks down into some brief business affairs.If the database a product information table, its record number two million. Now in a management needs, the one-time change one of the one million five hundred thousand record. If through a change affairs, the time is long. If it involves cascade update it, is time the meeting is longer.In view of this situation, we can learn affairs brief words. If the product information, may have a product type field. So in the update data, can we not one-time updates. But through the product category fields to control, to record the iteration points. So every category of update firm consumption of time may be greatly reduces. So although operation, will need more steps. But, can effectively avoid to go to the occurrence of congestion, and improve the performance of the database. Suggest four: child inquires the and list box, had better not use at the same time.Sometimes in the application of design, through the list box can really improve user input speed and accuracy, but, if foreground application does not have buffer mechanism, you often can cause congestion.As in a order management system, may need frequent input sales representatives. In order to user input convenience, sales representative often design into a list box. Every time need to input, foreground application from the background of all sales representative inquires information (if the application is not involved in the cache). On one hand, the son of nature, would be speed query slow; Second, the list box have growth time operation of the inquiry. The two parties face touch together, may causethe application of improving the running time process query. And the other user queries, such as the system administrator need to maintain customer information, and cause congestion.So, in the application design, the child inquires the best less. And the child inquires the list box and use at the same time, more need to ban. If you can't avoid it, should be in application realize caching mechanism. That way, the applications need to sales representative information, will from application cache made, not every time to check the database.At the same time, can be in the list box design "to search" function. When there is a change to the user information, such as the system administrator to join a new sales representatives. In no again before inquires, because of their application is achieved in the cache data, so not just updated content. At this time, users will need to run to inquires the function, let the foreground application from a database query information again. This kind of design, can increase the list box and the son of the execution time inquires, effectively avoid congestion.Suggest five: in the set when cancel inquires back issues.Foreground application is designed, should allow users to a temporary change in idea, cancel the query. Such as user inquires the all product information, may feel response time is long, hard to bear. At this time, they will think of cancel inquires the. In this case, the application design need to design a cancel inquires the button. The user can in the process of inquires click this button cancel inquires at any time. Meanwhile, in the button affair, need to pay attention to join a rollback command. Let the database server can prompt to records or table to unlock.At the same time to the best lock or query timeout mechanism. This is largely because, sometimes also can cost a lot inquires user host to a large number of resources, and cause client crash. At this time, to be able to lock the inquires the or overtime mechanisms, namely in inquires after overtime, database server of related objects for automatic unlock. This is also the database administrator need to program developers negotiation of a problem.In addition, explicit database connection to take control in the concurrent users, is expected to full load next use application to bear ability test, use the link, each inquires to set use inquires and lock exceeds the overtime, these methods can effectively avoid the lock conflict obstruction. When database administrators found that blocking the symptoms, can from these aspect, looking for solutions.From the above analysis can see, SQL Server database lock is a double-edged sword. The security database data consistency at the same time, they will give the database caused some negative effect. How do these negative influence to the least, is our database administrators task. In application design, follow the advice above, can effectively solve the problems for the lock blockages, improve the performance of the database. Visible, to basically solve congestion problem, need database management personnel and program developers work together.中文关于数据库死锁的知识数据库本身提供了锁管理机制,但是从一方面,数据库客户端应用程序的“傀儡”,这主要是由于客户端到服务器的完全控制获得的锁的能力。

附录附录A: 外文资料翻译-原文部分:CUSTOMER TARGETTINGThe earliest determinant of success in the development of a profitable card scheme will lie in the quality of applicants that are attracted by the marketing effort. Not only must there be sufficient creditworthy applicants to avoid fruitless and expensive application processing, but it is critical that the overall mix of new accounts meets the standard necessary to ensure ultimate profitability. For example, the marketing initiatives may attract sufficient volume of applicants that are assessed as above the scorecard cut-off, but the proportion of acceptances in the upper bands may be insufficient to deliver the level of profit and lesser bad debt required to achieve the financial objectives of the scheme.This chapter considers the range of data sources available to support the development of a credit card scheme and the tools that can be applied to maximize the flow of applications from the required categories.Data availabilityThe data that makes up the ingredients from which marketing campaigns can be constructed can come from many diverse sources. Typically, it will fall into four categories:1 the national or regional register of voters;2 the national or regional register of court judgments that records the outcomeof creditor-debtor legislation;3 any national or regional pooled information showing the credit history of clients of the participating lenders; and4 commercially compiled data including and culled from name and address lists, survey results and other market analysis data, e.g. neighborhoods and lifestyle categorization through geo-demographic information systems.The availability and quality of this data will vary from country to country and bureau to bureau.Availability is not only governed by the extent to which the responsible agency has undertaken to record it, but also by the feasibility of accessing the data and the extent (if any) to which local consumer legislation or other considerations (e.g. religious principles) will allow it to be used. Other limitations on the use of available data may lie in the simple impossibility or expense of accessing the information sources, perhaps because necessary consumer consent for divulgence has been withheld or because the records are not yet stored electronically.The local credit information bureaux will be able to provide guidance on all of these matters, as will many local trade or professional associations or the relevant government departments.Data segmentation and AnalysesThe following remarks deal with the ways in which lawfully obtained data may then be processed and analyzed in order to maximize its value as the basis of a marketing prospect list. Examples of the types and uses of data that will play a role in the credit decision area are discussed later in the chapter, within the context of application processing.The key categories into which prospects may be segmented include lifestyle, propensity to purchase specific products (financial or otherwise) and levels of risk. The leading international information bureaux will be able to provide segmentation systems that are able to correlate each of these data categories to provide meaningful prospect lists in rank order. Additionally, many bureaux will have the capability to further enhance the strength and value of the data. Through the selective purchasing of data from bona fide market sources, and by overlaying generic factors deduced from the analysis of the broad mass of industry information that routinely passes through their systems, the best international operators are now able to offer marketing and credit information support that can add significantly to the quality of new applicants.The importance of the role and standard of this data in influencing the quality of the target population for mailings, etc. should not be underestimated. Information that is dated or inaccurate may not only lead a marketer and the organization into embarrassment and damage their reputations, but it will also open the credit card scheme to applicants from outside either the target sector or ,worse still, applicants outside the lender’s view of an acceptable credit risk.From this, it follows that you should seek to use an information bureau whose business principles and operating practices comply with the highest levels of both competence and integrity.Developing the prospect databaseThis is the process by which the raw data streams are brought together and subjected to progressive refinement, with the output representing the refined base from which prospecting can begin in earnest. A wide experience-often across many different markets and countries-in the sourcing, handling and analysis of data inevitably improves the quality of the ideas and systems that a bureau can offer for the development of the prospect database.In summary, the typical shape of the service available from the very best bureaux will support a process that runs as follows:1.collect and consolidate all data to be screened for inclusion;2.merge the various streams;3.sort and classify the data by market and credit categories;4.screen the date using predetermined marketing and credit criteria; and5.consolidate and output the refined list.Bureaux will charge for the use of their expertise and systems.Therefore, consideration should be given to the volumes of data that are to be processed and the costs involved at each stage. The most cost-effective approach to constructing prospect databases only undertakes the lowest-cost screening process within the earlier stages. The more expensive screening processes are not employed until the mass of the data has been reduced by earlier filtering.It is impossible to be prescriptive about the range and levels of service that are available, but reference to one of the major bureaux operating in the region could certainly be a good starting point.Campaign Management and AnalysisAgain, this is an area where excellent support is available from the best-of-breed bureaux. They will provide both the operational support and software capabilities to mount, monitor and analyse your marketing campaign, should you so wish. Their depth of experience and capabilities in the credit sector will often open up income: cost possibilities from the solicitation exercise that would not otherwise be available to the new entrant.The First Important Applications of DBMS’sData items include names and addresses of customers, accounts, loans and their balance, and the connection between customers and their accounts and loans, e.g., who has signature authority over which accounts. Queries for account balances are common, but far more common are modifications representing a single payment from or deposit to an account.As with the airline reservation system, we expect that many tellers and customers (through ATM machines) will be querying and modifying the bank’s data at once. It is vital that simultaneous accesses to an account not cause the effect of an ATM transaction to be lost. Failures cannot be tolerated. For example, once the money has been ejected from an ATM machine ,the bank must record the debit, even if the power immediately fails. On the other hand, it is not permissible for the bank to record the debit and then not deliver the money because the power fails. The proper way to handle this operation is far from obvious and can be regarded as one of the significant achievements in DBMS architecture.Database system changed significantly. Codd proposed that database system should present the user with a view of data organized as tables called relations. Behindthe scenes, there might be a complex data structure that allowed rapid response to a variety of queries. But unlike the user of earlier database systems, the user of a relational system would not be concerned with storage structure. Queries could be expressed in a very high level language, which greatly increased the efficiency of database programmers. Relations are tables. Their columns are headed by attributes.Client –Server ArchitectureMany varieties of modern software use a client-server architecture, in which requests by one process (the client ) are sent to another process (the server) for execution. Database systems are no exception, and it is common to divide the work of the components shown into a server process and one or more client processes.In the simplest client/server architecture, the entire DBMS is a server, except for the query interfaces that the user and send queries or other commands across to the server. For example, relational systems generally use the SQL language for representing requests from the client to the server. The database server then sends the answer, in the form of a table or relation, back to client. The relationship between client and server can get more complex, especially when answers are extremely large. We shall have more to say about this matter in section 1.3.3. there is also a trend to put more work in the client, since the server will be a bottleneck if there are many simultaneous database users.附录B: 外文资料翻译-译文部分:客户目标:最早判断发展可收益卡的成功性是在于受市场影响的被吸引的申请人的质量。

外文文摘型数据库简介1.Ei Compendex工程索引数据库EI(The Engineering Index:工程索引)是工程技术领域的综合性检索工具,由美国工程信息中心编辑出版。

侧重提供应用科学和工程领域的文摘索引信息,涉及核技术、生物工程、交通运输、化学和工艺工程、照明和光学技术、农业工程和食品技术、计算机和数据处理、应用物理、电子和通信、控制工程、土木工程、机械工程、材料工程等学科。

Ei的数据来源于5100种工程类期刊、会议论文集和技术报告,每年大约增加文献25万篇。

2000年8月,Ei 推出Engineering Information Village-2(简称EI Village2 或EV2)新版本,对文摘录入格式进行了改进,首次将文后参考文献列入Compemdex 数据库。

用户在EI Village2网上可检索到1884年至今的文献。

EI Village2有“简易检索(Easy Search)”、“快速检索(Quick Search)”和“高级检索(Expert Search)”三种检索方法。

检索结果显示方式有:Citation(引文)、Abstracts(摘要)或Detailed record(详细记录)格式,可以通过文摘页左下角Full-Text Links链接Elsevier、Springer等全文数据库查阅全文。

EI Village2数据库及时报道全世界工程与技术文献,为科学研究者和工程技术人员提供专业化、实用化的最新文献信息服务。

2.SCI(科学引文索引)数据库科学引文索引(SCI)是国际学术界公认的“四大权威检索工具”之一,内容涵盖自然科学、工程技术、生物医学、社会科学、艺术与人文等170多个学科领域。

目前该数据库有“扩展版”和“核心版”2种版本。

2006年12月SCI Expanded扩展版收录期刊6613种、SCI核心版收录3766种期刊。

SCI数据每周更新。

SCI数据库检索系统有General Search(普通检索)、Cited Ref Saerch(引文检索)、Structure Search(结构检索)和Advanced Search(高级检索)四种检索方法。

在线数据库的相关英语小知识

在线数据库的英文:

online databases

adj. 联机的,在线的

Time flies when you are online.

上网的时候时间过得飞快。

I follow you online.

我在网上跟随着你们。

The syllabus is already available online.

现在课程表已经放在网上了。

They have bee a juggernaut in online advertising, pictures, video and online games.

他们在网络广告、图片、视频、网络游戏各个领域横冲直撞。

A sock puppet is an online identity used for purposes of deception within an online munity.

“马甲”是网络社区中为了隐藏身份而的ID。

databases是什

么意思:

n.

(储存在计算机中的)数据库

dump database mand

转储数据库命令 Are you sure to constrict the picture database?

您确定要压缩当前的图像数据库吗?The latter must be presented in the database.

后者必需在数据库中呈现。

The database is updated monthly.

数据库每月更新。

The information is stored on a large database.

信息储存在宏大的数据库中。

数据库英文DatabaseA database is an organized collection of data that is stored electronically. It enables users to access, manipulate, and analyze data efficiently and effectively. Databases can be used for a wide range of applications, from simple record-keeping to complex data analysis and decision-making.Relational DatabaseA relational database is a database that is organized around tables, which are related to each other through common fields. Each table contains records, which are represented by rows, and fields, which are represented by columns. The relationships between the tables are based on common fields, such as a customer ID or an order ID.SQLStructured Query Language (SQL) is a programming language that is used to manage and manipulate data in a relational database. It is used to create, modify, and delete data and to retrieve data from the database. SQL is widely used in business and industry to manage and analyze data.Data TypesIn a relational database, each field has a data type, which defines the kind of data that can be stored in that field. Common data types include text,numeric, date/time, and Boolean. Other data types, such as binary data or images, may also be used in some databases.Primary KeyA primary key is a field or set of fields in a relational database that uniquely identifies each record in a table. The primary key is used to enforce data integrity, ensuring that each record is unique and that records can be related properly between tables.Foreign KeyA foreign key is a field or set of fields in a table that refers to the primary key of another table. The foreign key is used to establish relationships between tables and to maintain data integrity by enforcing referential integrity constraints.ERDAn entity-relationship diagram (ERD) is a graphical representation of the tables and relationships in a relational database. It is used to model the data and to design the database schema.NormalizationNormalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It involves breaking down tables into smaller, more specific tables and establishing relationships between them toeliminate duplicate data and ensure that data is consistent across the database.IndexesIndexes are used to improve the performance of queries in a database by providing faster access to data. An index is a data structure that is created on one or more fields in a table, allowing the database to quickly locate records that match certain criteria.TriggersTriggers are automated procedures that are executed in response to certain database events, such as the insertion, deletion, or modification of data. Triggers can be used to enforce business rules or to automate certain database tasks.TransactionsA transaction is a sequence of database operations that must be executed as a single, atomic unit. Transactions are used to ensure data integrity and to provide a consistent view of the database to all users.Backup and RecoveryBackup and recovery are critical components of database management. Regular database backups are essential for protecting data against loss orcorruption, while recovery procedures are used to restore data in the event of a disaster or other catastrophic event.Concurrency ControlConcurrency control is the process of managing simultaneous access to a database by multiple users or applications. It ensures that transactions are executed in a correct and consistent manner, while also maintaining data integrity and preventing conflicts or errors.。

本科毕业设计(外文翻译)题目小区物业综合管理系统的设计与实现学生姓名专业班级学号院(系)指导教师(职称)完成时间Database space organizationSpatial data management has been an activearea of research in the database field for two decades,with much of the research being focused on develop-ing data structures for storing and indexing spatialdata. However, no commercial database system pro-vides facilities for directly defining and storing spa-tial data, and forniulating queries based on researchconditions on spatial data. We believe the followingare the relevant issues on which near-term researchshould be focused (in the order of decreasing impor-tance and urgency).First, relational query optimization techniquesneed to be extended to deal with spatial queries,thatis,queries that contain search conditions on spatialpredicates to be developed.Second, more work needs to be done on experi-mental validation of the relative performance of someof the more promising data structures and indexingstructures proposed thus far, with consideration of amuch broader set of operations than just a few opera-tions that have typically been used in the limited per-formance studies conducted thus far.Third, it is difficult to build into a single data-base system multiple data structures for spatial index-ing, and all spatial operators that are useful for awide variety of spatial applications,as such, it isdesirable to build a database system so that it will beas easy as possible to extend the system with addi-tional data structures and spatial operators.If the DBMS provides a way to interactively and update the database, as well as interrogate it capability allows for managing personal data-Aces however, it does not automatically leave an audit trail of actions and does not provide the kinds of controla necessary in a multiuser organization. These-controls are only available when a set of application programs are customized for each data entry and updating function.Software for personal computers which perform me of the DBMS functions have beenvery popular arsenal computers were intended for use by individuals for personal information storage and process- These machines have also been used extensively small enterprises, professionals like doctors, acrylics, engineers, lawyers and so on .By the nature of. intended usage, database systems on these machines except from several of the requirements of full doge database systems.Since data sharing is not:Tended, concurrent operations even less so, the) fewer can be less complex. Security and integrity7aintenance are de-emphasized or absent. As data-) limes will be small, performance efficiency Is also important. In fact, the only aspect of a database system that is Important 'is data Independence. Data-.dependence, as stated earlier. Means that applicant programs and user queries need not recognizant‘physical organization of data on secondary storage. The importance of this aspect, particularly for the personal computer user, is that this greatly simplifies database usage. The user can store, access and manipulate data at a high level (close to the application) and be totally shielded from the low level (close to the machine) details of data organization. We will not discuss details of specific PC DBMS software packages here.Let us summarize in the following the strengths and weaknesses of personal computer data-base software systems:The most obvious positive factor is the user friendliness of the software. A user with no prior computer background would be able to use the system to store personal and professional data, retrieve and perform relayed processing. The user should, of course, satiety himself about the quality of software and the freedom from errors (bugs) so that invest-merits in data arc protected.For the programmer implementing applications with them, the advantage lies in the support for applications development in terms of input screen generations, output report generation etc.offered by theses stems.The main negative point concerns absence of data protection features.Unless encrypted, data cane accessed by whoever has access to the machine Data can be destroyed through mistakes or maliciousintent. The second weakness of manv of the PC-basedsystems is that of performance. If data volumes growup to a few thousands of records,performance couldbe a bottleneck.For organization where growth in data volumesis expected, availability of, the same or compatiblesoftware on large machines should be considered.This is one of the most common misconceptionsabout database management systems that are used inpersonal computers.Thoroughly comprehensive andsophisticated business systems can be developed indBASh, Paradox and other DBMSs.However, theyare created by experienced programmers using theDBMS's own programming language. That is not thesame as users who create and manage personal filesthat are not part of the mainstream company system.Data security prevents unauthorized users from viewing or updating the database.Using passwords, the database, called subschema(pronounced "sub-scheme"),For example, an employee database can contain all the data about an individual employee, but one group of users may be authorized to view only payroll data, while others are allowed access to only work history and medical data.The DBMS can maintain the integrity of the database by not allowing more than one user to up-date the same record at the same time, The DBMS can keep duplicate records out of the database, for example, no two customers with the same customer numbers (Key fields) can be entered into the Data-Base.When a DBMS is used, the detailed knowledge of the physical organization of the data does not have to be built into every application program. The application program asks the DBMS for data by field pine, for example, I coded representation of "give customer name and balance due" would be sent to he DBMS.Without a DBMS, the programmer must secrecy space for the full structure of the in the program, Any change in data structure requires£hangs in all the applications programs.The multiple-database model is represented by proposals for shared and private database architect rues, checkout and checking of data to and from shared and private databases.Each user may populates/her private database with data checked out of the shared database, perform updates against the data. and check them back into the shared Database.The multiple-database model can be used to work around" the conflict situations inherent in long lunation database sessions.Since each user from the shared database and work against. is/her private database, "disconnected" from the、hared database (at least on the surface),the usersan avoid the conflict situations.In particular, multile users may besimultaneously updating the same thought having to wait for other users to comate late their updates.However, when updated is to benecked into the shared database, it may have to be checked in as a new version, necessitating version management. Further, 'when data in a private data-base references data in the shared data,or vice versa, a private database is not really disconnected from the shared database. For example, the evaluation of query in general will require the database system to access both a private database and the shared data-base, even if the query may have been formulated against a private database.The multiple-database model is more appropriate than the single-database model in an environment where it is easy to determine in advance logical partitions of the database that correspond to work to be performed.The single-database model has received considerably more attention than the multiple- database model.The focus of research into single-database model has been on addressing the twin problem of long waits and loss of work that the long duration of transactions brings about.The single-database model requires the intro-diction of a notion of database consistency, and protocols for concurrency control and recovery, that are different from those supported in traditional database systems.If a "reasonable" notion of database consistency is to be supported (I.c. the database system is to enforce it automatically), there are bound to be” conflict" situations where one transaction comes into an access conflict against some other transaction. If await is to be avoided,some means of a negotiated settlement of the conflict must be provided, thereby dragging the users into the details of concurrency control.The single-database model is more appropriate-ate than the multiple-database model in an environment where it is difficult to determine in advance logical partitions of the database that correspond to work to be performed, and the users closely cooperate.The objective of long-duration transactions is to model long-duration, interactive Database access sessions in application environments.The fundamental assumption about short-duration of transactions that underlies the traditional model of transactions is inappropriate for long-duration transactions.The implementation of the traditional model of transactions may cause intolerably long waits when transactions aleph to acquire locksbefore accessing data, and may also cause a large amount of work to be lost when transactions are backed out in response to user-initiated aborts or system failure situations.The objective of a transaction model is to pro-vide a rigorous basis for automatically enforcing criterion for database consistency for a set of multiple concurrent read and write accesses to the database in the presence of potential system failure situations.The consistency criterion adopted for traditional transactions is the notion of scrializability.Scrializa-bility is enforced in conventional database systems through the use of locking for automatic concurrency control, and logging for automatic recovery from system failure situations.A "transaction”that doesn’t provide a basis for automatically enforcing data-base consistency is not really a transaction. To be sure, a long-duration transaction need not adopt seri-alizability as its consistency criterion.However. there must be some consistcricv criterion.Despite a large number of proposals on version support in the context of computer aided design and software engineering, the absence of a consensus on version semantics has been a key impediment to version support in database systems.Because of the differences between files and databases, it is intuitively clear that the model of versions in database systems cannot be as simple as that adopted in file systems to support software engineering.For data-bases, it may be necessary to manage not only versions of single objects (e.g. a software module, document, but also versions of a collection of objects (e.g.a compound document, a user manual,etc.and perhaps even versions of the schema of database (c.g. a table or a class, a collection of tables or classes).Broadly, there are three directions of research and development in versioning.First is the notion of a parameterized versioning",that is, designing and implementing a versioning system whose behavior may be tailored by adjusting system parameters This may be the only viable approach, in view of the fact that there are various plausible choices for virtually every single aspect of versioning.The second is to revisit these plausible choices for every aspect of versioning, with the view to discarding some of themes either impractical or flawed. The third is the investigation into the semantics and implementationof versioning collections of objects and of versioning the database schema.数据库的空间组织二十年来空间数据管理一直是数据库研究领域的活跃区,研究的焦点集中在发展存储和索引空间数据的数据结构上。

附录1 外文原文COLOR SYSTEM OVERVIEWIn the age of office automation and electronic imaging, office documents are being processed, transported, and displayed in a variety of ways. The scope of document processing is enormous; it encompasses page layout, document length, collation, simplex/duplex, color, image quality, finishing, and binding. If the office system is networked, then another dimension of network-related issues-protocol, file format, page description language, compression/decompression, job management, error handling, user interface, and device driver-has to be addressed. Digital color-imaging systems process electronic information from various sources; images may come from a local-area network, a remote-sensing device, different color workstations, or a local scanner. After processing, a document is usually compressed and transmitted to several places via a computer network for viewing, editing, or printing. Moreover, the trend in the industry is moving toward an open environment. This means that various devices such as scanners, computers, workstations, modems, and printers from multiple vendors are assembled into one system. Implementations should be based on public-domain technology rather than proprietary standards. This will allow vendors equal access to the market for system components and give users the widest choice in selecting components. It is a vastly large task to enable the communication of all system components regardless of differences in the operating system, file format, page description language, and information content. Ideally, the exchange should not cause information loss or alteration. A closer look at a document may reveal that it consists of different types of images, primarily text, graphs, and pictorial images. These all have different image characteristics and representations such as ASCII (American Standard Code for Information Interchange) for text, vector for graphs, and raster for pictorial images. Each type of image and its associated attributes like the font, font size, halftone, gray level, resolution, and color have to be dealt with differently. In such a complex environment, there is no doubt that many compatibility problems occur when an image is acquired, transmitted, displayed, and rendered. ?With the fast development of Internet technology, large volumes of data in the form of electronic documents from the Web. For the purposes of data integration and data exchange, more and moreexisting sources, such as relational databases, support public XML export, and increasing amount of public and private data is described in a semi-structured way. A number of issues need to be addressed when we integrate data from different sources, including heterogeneous and duplicate data, multiple divisions and partners, and changes.? Data heterogeneity results from the use of different information management systems to store data and each system has its own data structure and access methods. Relational database management systems benefit from the universal acceptance of Structured Query Language (SQL) as the primary means of getting answers whilst document and email repositories are generally accessed using text search engines with varying interfaces and capabilities. Because these systems were not designed with interoperability in mind, each must generally be accessed using source-specific applications or application programming interfaces (APIs).? Another difficulty in data integration is data duplication-different systems represent the same piece of data in different ways. For example, customers may be identified by name in one database, but by account number in a second repository, may identify the same customer by email address. Frequently a required piece of information is derived from multiple data points. Data integration is further complicated when customers do business with multiple divisions within a large company, or with other partners. Similarly, answering questions about the state of a company's supply chain requires access to vendor and distributor information sources. Doing business electronically across the firewall gives rise to security and data ownership issues. Finally, data integration has to deal with different types of changes; change in business requirements and strategies, in IT systems, mergers and acquisitions, and new product launches. This demands that a data integration solution be sufficiently flexible and adaptable.One possible solution for the data integration problems mentioned above is to provide an XML Web services break down the barriers between different computing platforms, development environments and communications networks, allowing organizations to work together electronically without the expense and delay of agreeing on semantics, schema, interfaces, and other application integration. XML provides the flexibility for handling data with differing structures. As XML is becoming the principal medium for data exchange over the Web and for information integration in general,increasing amounts of public and private data are described in XML. XML data is usually defined in a tree or graph based, self-describing object instance model (Boncz and Kersten, 1999). However, semi-structured data is incompatible with the flat structure of relational database tables, and so the growth of XML data requires new and complex query optimization techniques.Creating XML files with a text editor would be a lot easier if you didn't have to close all those HTML tags. First you have to add the XML declaration and the root opening and closing HTML tags. Next, you start adding element opening and closing tags one at a time. Of course, once you have the initial sequence completed you can just copy and paste to repeat the required elements. After doing this hundreds of times you'll be looking for a faster way to create XML files.Some XML editors will automatically add the closing tag after you have finished typing the opening tag but, you still have to type the brackets around the opening tag. I kept thinking this process should be easier. So, I came up with a solution that allows you to create XML files without using HTML tags.This console application will create an XML file based on user input. Just enter the file name, how many element fields you want, and the name of each field. Optionally, you can include a data type separated by a comma after the field name. You can just enter the field name because the data type is not required. The structure of the XML file that is created will be compatible with the .NET Dataset and can be easily added to a database.In addition to creating the XML file, an XSL file and HTML file are also created. The HTML file uses client side JavaScript to transform the XML file using the XSL file. This provides an easy way to view the new XML file by displaying it in a table layout.The download includes both the source code and the already compiled application. You can start using the executable right away or customize it to meet your needs. All you will need is the .NET Framework and a text editor, like Notepad, to build this application.Improving ASP Performance with Data CachingOne of the nicest features of is the ability to cache page content. This can be used to substantially reduce load on a website's database - which is an obvious attraction if the site uses Microsoft's Access to store data rather than SQL Server. Unfortunately there is no built in cachingsystem in classic ASP, but it is easy to build one by using the Application object to store data.When to use ASP Caching. Caching is most useful for data that changes - but not too often. For example an e-commerce store could display a list of popular products, or an information site could display a list of press releases.Don't forget that it is also possible to build functionality into the admin part of the site so that the cache would be flushed if new content is added to the database. That way the website administrator would not have to wait until the cache timed out in order for new content to appear on the website. Remember that data stored in Application variables is visible by all the users of the website。

英文摘要Data Transformation ServicesDTS facilitates the import, export, and transformation of heterogeneous data. It supports transformations between source and target data using an OLE DB-based architecture. This allows you to move and transform data between the following data sources:∙Native OLE DB providers such as SQL Server, Microsoft Excel, Microsoft Works, Microsoft Access, and Oracle.∙ODBC data sources such as Sybase and Informix using the OLE DB Provider for ODBC.∙ASCII fixed-field length text files and ASCII delimited text files.For example, consider a training company with four regional offices, each responsible for a predefined geographical region. The company is using a central SQL Server to store sales data. At the beginning of each quarter, each regional manager populates an Excel spreadsheet with sales targets for each salesperson. These spreadsheets are imported to the central database using the DTS Import Wizard. At the end of each quarter, the DTS Export Wizard is used to create a regional spreadsheet that contains target versus actual sales figures for each region.DTS also can move data from a variety of data sources into data marts or data warehouses. Currently, data warehouse products are high-end, complex add-ons. As companies move toward more data warehousing and decision processing systems, the low cost and ease of configuration of SQL Server 7.0 will make it an attractive choice. For many, the fact that much of the legacy data to be analyzed may be housed in an Oracle system will focus their attention on finding the most cost-effective way to get at that data. With DTS, moving and massaging the data from Oracle to SQL Server is less complex and can be completely automated.DTS introduces the concept of a package, which is a series of tasks that are performed as a part of a transformation. DTS has its own in-process component object model (COM) server engine that can be used independent of SQL Server and that supports scripting for each column using Visual Basic® and JScript® development software. Each transformation can include data quality checks and validation, aggregation, and duplicate elimination. You can also combine multiple columns into a single column, or build multiple rows from a single input.Using the DTS Wizard, you can:∙Specify any custom settings used by the OLD DB provider to connect to the data source or destination.∙Copy an entire table, or the results of an SQL query, such as those involving joins of multiple tables or distributed queries. DTS also can copy schema and data between relational databases. However, DTS does not copy indexes, stored procedures, or referential integrity constraints.∙Build a query using the DTS Query Builder Wizard. This allows users inexperienced with the SQL language to build queries interactively.∙Change the name, data type, size, precision, scale, and nullability of a column when copying from the source to the destination, where a valid data-type conversion applies.∙Specify transformation rules that govern how data is copied between columns of different data types, sizes, precisions, scales, and nullabilities.∙Execute an ActiveX script (Visual Basic or JScript) that can modify (transform) the data when copied from the source to the destination. Or you can perform any operation supported by Visual Basic or JScript development software.∙Save the DTS package to the SQL Server MSDB database, Microsoft Repository, or a COM-structured storage file.Schedule the DTS package for later execution.Once the package is executed, DTS checks to see if the destination table already exists, then gives you the option of dropping and recreating the destination table. If the DTS Wizard does not properly create the destination table, verify that the column mappings are correct, select a different data type mapping, or create the table manually and then copy the data.Each database defines its own data types and column and object naming conventions. DTS attempts to define the best possible data-type matches between a source and a destination. However, you can override DTS mappings and specify a different destination data-type, size, precision, and scale properties in the Transform dialog box.Each source and destination may have binary large object (BLOB) limitations. For example, if the destination is ODBC, then a destination table can contain only one BLOB column and it must have a unique index before data can be imported. For more information, see the OLE DB for ODBC driver documentation.Note DTS functionality may be limited by the capabilities of specificdatabase management system (DBMS) or OLE DB drivers.DTS uses the source object’s name as a default. However, you can also add double quote marks (“ “) or square brackets ([ ])around multiword table and column names if this is supported by your DBMS.Data Warehousing and OLAPDTS can function independent of SQL Server and can be used as a stand-alone tool to transfer data from Oracle to any other ODBC- or OLE DB-compliant database. Accordingly, DTS can extract data from operational databases for inclusion in a data warehouse or data mart for query and analysis.Figure 4. DTS and data warehousingIn the previous diagram, the transaction data resides on an IBM DB2 transaction server. A package is created using DTS to transfer and clean the data from the DB2 transaction server and to move it into the data warehouse or data mart. In this example, the relational database server is SQL Server 7.0, and the data warehouse uses OLAP Services to provide analytical capabilities. Client programs (such as Excel) access the OLAP Services server using the OLE DB for OLAP interface, which is exposed through a client-side component called Microsoft PivotTable® Service. Client programs using PivotTable Service can manipulate data in the OLAP server and even change individual cells.SQL Server OLAP Services is a flexible, scalable OLAP solution, providing high-performance access to information in the data warehouse. OLAP Services supports all implementations of OLAP equally well: multidimensional OLAP (MOLAP), relational OLAP (ROLAP), and a hybrid (HOLAP). OLAP Services addresses the most significant challenges in scalability through partial preaggregation, smart client/server caching, virtual cubes, and partitioning.DTS and OLAP Services offer an attractive and cost-effective solution. Data warehousing and OLAP solutions using DTS and OLAP Services are developed with point-and-click graphical tools that are tightly integrated and easy to use. Furthermore, because the PivotTable Service client is using OLE DB, the interface is more open to access by a variety of client applications.Issues for Oracle versions 7.3 and 8.0Oracle does not support more than one BLOB data type per table. This prevents copying SQL Server tables that contain multiple text and image data types with modification. You may want to map one or more BLOBs to the varchar data type and allow truncation, or split a source table into multiple tables. Oracle returns numeric data types such as precision = 38 and scale =0, even when there are digits to the right of the decimal point. If you copy this information, it will be truncated to integer values. If mapped to SQL Server, the precision is reduced to a maximum of 28 digits.The Oracle ODBC driver does not work with DTS and is not supported by Microsoft. Use the Microsoft Oracle ODBC driver that comes with SQL Server. When exporting BLOB data to Oracle using ODBC, the destination table must have an existing unique primary key.Heterogeneous Distributed QueriesDistributed queries access not only data currently stored in SQL Server (homogeneous data), but also access data traditionally stored in a data store other than SQL Server (heterogeneous data). Distributed queries behave as if all data were stored in SQL Server. SQL Server 7.0 will support distributed queries by taking advantage of the UDA architecture (OLE DB) to access heterogeneous data sources, as illustrated in the following diagram.Figure 5. Accessing heterogeneous data sources with UDA翻译DTS 使进口,出口和不同的数据的转变变得容易。

。 -可编辑修改- A introduction to Database Management System Raghu Ramakrishnan A database (sometimes spelled data base) is also called an electronic database , referring to any collection of data, or information, that is specially organized for rapid search and retrieval by a computer. Databases are structured to facilitate the storage, retrieval , modification, and deletion of data in conjunction with various data-processing operations .Databases can be stored on magnetic disk or tape, optical disk, or some other secondary storage device. A database consists of a file or a set of files. The information in these files may be broken down into records, each of which consists of one or more fields. Fields are the basic units of data storage , and each field typically contains information pertaining to one aspect or attribute of the entity described by the database . Using keywords and various sorting commands, users can rapidly search , rearrange, group, and select the fields in many records to retrieve or create reports on particular aggregate of data. Complex data relationships and linkages may be found in all but the simplest databases .The system software package that handles the difficult tasks associated with creating ,accessing, and maintaining database records is called a database management system(DBMS).The 。 -可编辑修改- programs in a DBMS package establish an interface between the database itself and the users of the database.. (These users may be applications programmers, managers and others with information needs, and various OS programs.) A DBMS can organize, process, and present selected data elements form the database. This capability enables decision makers to search, probe, and query database contents in order to extract answers to nonrecurring and unplanned questions that aren’t available in regular reports. These questions might initially be vague and/or poorly defined ,but people can “browse” through the database until they have the needed information. In short, the DBMS will “manage” the stored data items and assemble the needed items from the common database in response to the queries of those who aren’t programmers. A database management system (DBMS) is composed of three major parts:(1)a storage subsystem that stores and retrieves data in files;(2) a modeling and manipulation subsystem that provides the means with which to organize the data and to add , delete, maintain, and update the data;(3)and an interface between the DBMS and its users. Several major trends are emerging that enhance the value and usefulness of database management systems; Managers: who require more up-to-data information to make effective decision 。 -可编辑修改- Customers: who demand increasingly sophisticated information services and more current information about the status of their orders, invoices, and accounts. Users: who find that they can develop custom applications with database systems in a fraction of the time it takes to use traditional programming languages. Organizations : that discover information has a strategic value; they utilize their database systems to gain an edge over their competitors. The Database Model A data model describes a way to structure and manipulate the data in a database. The structural part of the model specifies how data should be represented(such as tree, tables, and so on ).The manipulative part of the model specifies the operation with which to add, delete, display, maintain, print, search, select, sort and update the data. Hierarchical Model The first database management systems used a hierarchical model-that is-they arranged records into a tree structure. Some records are root records and all others have unique parent records. The structure of the tree is designed to reflect the order in which the data will be used that is ,the record at the root of a tree will be accessed first, then records one level below the root ,and so on. 。 -可编辑修改- The hierarchical model was developed because hierarchical relationships are commonly found in business applications. As you have known, an organization char often describes a hierarchical relationship: top management is at the highest level, middle management at lower levels, and operational employees at the lowest levels. Note that within a strict hierarchy, each level of management may have many employees or levels of employees beneath it, but each employee has only one manager. Hierarchical data are characterized by this one-to-many relationship among data. In the hierarchical approach, each relationship must be explicitly defined when the database is created. Each record in a hierarchical database can contain only one key field and only one relationship is allowed between any two fields. This can create a problem because data do not always conform to such a strict hierarchy. Relational Model A major breakthrough in database research occurred in 1970 when E. F. Codd proposed a fundamentally different approach to database management called relational model ,which uses a table as its data structure. The relational database is the most widely used database structure. Data is organized into related tables. Each table is made up of rows called and columns called fields. Each record contains fields of data