s3+stride_sensor_sim


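Porting OpenCV to the ESP32 and ESP32-S3

1 Introduction to OpenCV

OpenCV is a cross-platform computer vision and machine learning library released as open source under the Apache 2.0 license. It runs on Linux, Windows, Android, and macOS. It is lightweight and efficient: it consists of a set of C functions and a small number of C++ classes, provides interfaces for Python, Ruby, MATLAB, and other languages, and implements many general-purpose algorithms for image processing and computer vision.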

This makes it feasible to run OpenCV on the ESP32 for image processing and computer vision.

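2 Introduction to the ESP32-S3

Like the ESP32, the ESP32-S3 is an SoC that supports both Wi-Fi and Bluetooth and is designed for the Internet of Things, with applications spanning mobile devices, wearable electronics, and smart home products. In the 2.4 GHz band it supports 20 MHz and 40 MHz channel bandwidths. Unlike the earlier ESP32, its Bluetooth support goes beyond BLE to include Bluetooth 5 and Bluetooth mesh. It has more GPIO pins, so it can control more peripherals, and its full-speed USB OTG interface allows direct communication with the chip over USB.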

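Most importantly, the ESP32-S3 has a dual-core CPU, which gives it an inherent advantage for image processing.

Core 0 usually handles Wi-Fi data transfer.

Core 1 runs the vision processing task.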

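3 Hardware design for the port

To meet the memory requirements of video processing on the ESP32-S3, we chose a module with 8 MB of built-in flash and 8 MB of external PSRAM. An OV2640 camera provides the input. For easier debugging, a 240×240 LCD serves as the display, so the image processing results can be seen in real time.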
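The memory requirement mentioned above can be made concrete with a little arithmetic. The following is a minimal sketch, assuming that "8 MB" means 8 MiB, that frames are uncompressed, and that the whole PSRAM is available for frame buffers (in practice part of it is used by other allocations). The helper names `frame_bytes` and `frames_that_fit` are hypothetical and not part of any SDK.

```cpp
#include <cassert>
#include <cstddef>

// Bytes needed for one uncompressed frame.
constexpr std::size_t frame_bytes(std::size_t width, std::size_t height,
                                  std::size_t bytes_per_pixel) {
    return width * height * bytes_per_pixel;
}

// How many whole frames of a given size fit in a memory region.
inline std::size_t frames_that_fit(std::size_t region_bytes, std::size_t one_frame) {
    assert(one_frame > 0);
    return region_bytes / one_frame;
}

// Assumption: "8M" PSRAM in the text is 8 MiB.
constexpr std::size_t kPsramBytes = 8u * 1024u * 1024u;

// 240x240 LCD frame in RGB565 (2 bytes per pixel) and in 8-bit grayscale.
constexpr std::size_t kLcdRgb565Frame = frame_bytes(240, 240, 2);
constexpr std::size_t kLcdGrayFrame = frame_bytes(240, 240, 1);

static_assert(kLcdRgb565Frame == 115200, "240 * 240 * 2 bytes");
static_assert(kLcdGrayFrame == 57600, "240 * 240 * 1 byte");
```

A single 240×240 RGB565 frame is therefore about 112.5 KiB, and image processing typically needs several such buffers at once (input, grayscale, binary output), which is why external PSRAM is useful here.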

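Schematic: [figure]

Overall system: [figure]

Camera and fill light on the back of the board: [figure]

The development board can be found on Taobao by searching for "esp32s3 opencv".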

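4 Demo software results

I. Object extraction code in OpenCV

In image processing, a captured image is usually first converted to grayscale and then binarized, after which the target object can be extracted.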

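The functions used are:

```cpp
// Wrap the camera frame buffer without copying; RGB565 is 2 channels of 8-bit unsigned.
Mat inputImage(fb->height, fb->width, CV_8UC2, fb->buf);
// Convert RGB565 to 8-bit grayscale.
cvtColor(inputImage, inputImage, COLOR_BGR5652GRAY);
// Pixels brighter than 128 become 255; all others become 0.
threshold(inputImage, inputImage, 128, 255, THRESH_BINARY);
```

This readily yields the target object. Result: [figure]

The development board ships with the demo source code, which can be built and run with ESP-IDF.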
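To show what the grayscale and threshold steps do to each pixel, here is a minimal plain C++ sketch that does not depend on OpenCV. It is an illustration under stated assumptions, not OpenCV's implementation: pixels are taken as host-order 16-bit RGB565 values, the gray weights are an integer approximation of the common 0.299/0.587/0.114 luma weights (so results may differ slightly from `cvtColor`), and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert one RGB565 pixel to 8-bit gray (approximate luma weights, not bit-exact with OpenCV).
inline std::uint8_t rgb565_to_gray(std::uint16_t px) {
    const unsigned r = (px >> 8) & 0xF8;  // top 5 bits, scaled to 0..248
    const unsigned g = (px >> 3) & 0xFC;  // middle 6 bits, scaled to 0..252
    const unsigned b = (px << 3) & 0xF8;  // low 5 bits, scaled to 0..248
    return static_cast<std::uint8_t>((77 * r + 150 * g + 29 * b) >> 8);
}

// Same rule as THRESH_BINARY: values strictly above thresh become maxval, others 0.
inline std::uint8_t threshold_binary(std::uint8_t gray, std::uint8_t thresh,
                                     std::uint8_t maxval) {
    return gray > thresh ? maxval : 0;
}

// Binarize a whole width x height RGB565 frame into one byte per pixel.
inline std::vector<std::uint8_t> binarize_rgb565(const std::vector<std::uint16_t>& frame,
                                                 std::size_t width, std::size_t height,
                                                 std::uint8_t thresh = 128) {
    assert(frame.size() == width * height);
    std::vector<std::uint8_t> out(frame.size());
    for (std::size_t i = 0; i < frame.size(); ++i) {
        out[i] = threshold_binary(rgb565_to_gray(frame[i]), thresh, 255);
    }
    return out;
}
```

With a threshold of 128, a white or pure green pixel maps to 255 while a black or pure red pixel maps to 0, because red contributes less to perceived brightness than green.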

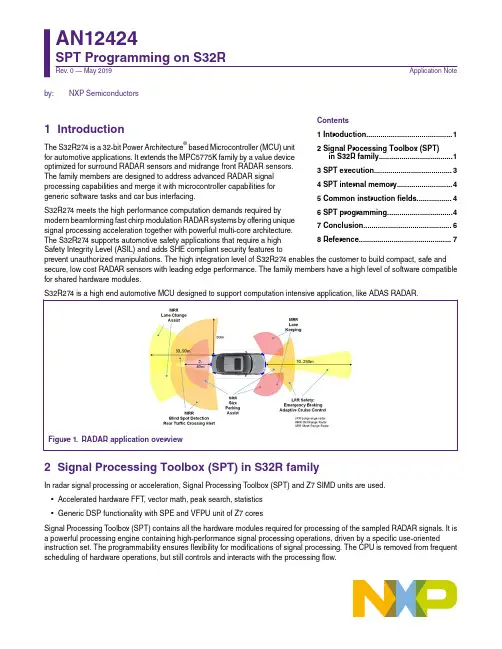

by: NXP Semiconductors

1 Introduction

The S32R274 is a 32-bit Power Architecture® based microcontroller (MCU) for automotive applications. It extends the MPC5775K family with a value device optimized for surround RADAR sensors and midrange front RADAR sensors. The family members are designed to address advanced RADAR signal processing capabilities and merge them with microcontroller capabilities for generic software tasks and car bus interfacing.

The S32R274 meets the high-performance computation demands of modern beamforming fast chirp modulation RADAR systems by offering unique signal processing acceleration together with a powerful multi-core architecture.

The S32R274 supports automotive safety applications that require a high Safety Integrity Level (ASIL) and adds SHE-compliant security features to prevent unauthorized manipulation. The high integration level of the S32R274 enables the customer to build compact, safe and secure, low-cost RADAR sensors with leading-edge performance. The family members have a high level of software compatibility for shared hardware modules.

The S32R274 is a high-end automotive MCU designed to support computation-intensive applications such as ADAS RADAR.

Figure 1. RADAR application overview

2 Signal Processing Toolbox (SPT) in S32R family

In radar signal processing or acceleration, the Signal Processing Toolbox (SPT) and Z7 SIMD units are used.

• Accelerated hardware FFT, vector math, peak search, statistics
• Generic DSP functionality with SPE and VFPU unit of Z7 cores

The Signal Processing Toolbox (SPT) contains all the hardware modules required for processing the sampled RADAR signals. It is a powerful processing engine containing high-performance signal processing operations, driven by a specific use-oriented instruction set. The programmability ensures flexibility for modifications of signal processing.
The CPU is removed from frequent scheduling of hardware operations, but still controls and interacts with the processing flow.

AN12424, SPT Programming on S32R, Rev. 0, May 2019, Application Note

The SPT is connected to the device by an advanced high-performance master bus and a peripheral bus.

Figure 2. SPT in S32R274 block diagram

The system bus master interface performs fast data transfers between external memory and local RAM.

The purpose of the peripheral interface is to set configuration, get status information, perform basic control of the SPT (start/stop, program pointer), and trigger interrupts. It can also be used to exchange small amounts of data between the CPU and the SPT, such as constant operands.

Figure 3. SPT block diagram

It has the following features:

• Acquisition of ADC samples
  – Capturing of ADC samples within programmed window
  – On-the-fly statistical computation
  – Supports MIPICSI2 interface
• Provides HW acceleration for
  – FFT (8 - 4096 point)
  – Histogram calculation
  – Maximum and peak search
  – Mathematical operations on vector data
• High-speed DMA data transfer
  – Supports system RAM, TCM and Flash memory transfers
  – Includes compression/decompression capability for reduction of storage footprint
• Instruction based program flow
  – High-level commands for signal processing operations
  – Simple control commands
  – Local instruction buffer
  – Automatic instruction fetch from main memory
  – CPU interaction possible
• CPU interruption notification
• Watchdog
• Debug Support - Single Stepping and Jamming Mode

It has three operation modes:

1. STOP mode
2. System Debug mode
3. Normal mode

3 SPT execution

The SPT executes a list of instructions. These commands contain all the information needed to perform signal processing operations on data vectors consisting of a set of numbers. The instruction list is provided by the CPU in a memory buffer (SRAM or flash) and read by the SPT with autonomously triggered DMA operations. Command scripts are prepared off-line during development on a different system, such as a PC.

Figure 4. SPT operation model

Data that are results or inputs of signal processing operations can be transferred between operand/twiddle RAM and system RAM or TCM by DMA operations. These kinds of DMA operations are also scheduled by commands in the script.

CPU application software functions may be invoked between signal processing operations. To synchronize these SW functions with the processing flow, interrupts and polling flags are provided, which may be activated as a result of specific commands. In the same way, eDMA operations can be triggered from the SPT. The data transfer descriptors need to be prepared by application software.

To enable advanced and precisely timed pipelining of HW-assisted operations, SPT command execution can also be synchronized with or triggered by events from CTE or MIPICSI2.

Program execution is performed by a dedicated register machine. The program execution may be stalled at the end of each command and can be synchronized with events provided by the CTE or MIPICSI2. The CPU can stop and release program execution and execute its own instructions at the same time.

The SPT command sequence is provided by CPU SW and written to a buffer in the system RAM or flash, where CSDMA fetches or alternatively transfers the list of instructions to the command queue.

The program is executed sequentially, one command following the next in the chain, with the exception of loops and asynchronous PDMA instructions. The end of a sequence is marked with a specific termination command.
The start address of the instruction sequence is configured by application software using the peripheral interface.

The command queue stores a number of instructions to be executed, which may be a shorter sequence than the complete instruction list. It maintains an instruction pointer to the currently executed operation, which is incremented when the current operation is completed. This instruction pointer indicates the position in the complete instruction list sequence relative to the first instruction.

Loop instructions are special cases: the program sequence may continue at a lower address depending on the state of the loop counter. The start and next command addresses of the loops are saved in special registers. Up to four nested loops are supported. Jump or branch instructions are another case where the program may branch to a non-contiguous address location. It is the responsibility of the assembler to ensure that the sanity of the program structure is not violated (for example, by infinite loops or by jumping into or out of a loop).

4 SPT internal memory

The SPT contains internal memory resources, namely Operand RAM, Twiddle RAM, and work registers. Of these, only the work registers can be directly accessed via the peripheral interface and are visible on the SPT external memory map.

The CPU can access these internal memory resources via the hardware accelerators. The instruction bitfields SRC_ADD and DEST_ADD point to these memories.

The Twiddle RAM extends from location 0x4000 to 0x4FFF. However, the area from 0x5000 to 0x7FFF is aliased to the Twiddle RAM, i.e. it wraps around to point to the Twiddle RAM. Memory accesses to the Twiddle RAM should not cross the area from 0x4000 to 0x7FFF.

The Operand RAM extends from location 0x8000 to 0xBFFF, but the area from 0xC000 to 0xFFFF is aliased to the Operand RAM.
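The address ranges and aliasing described in this section can be sketched in code. This is only an illustration of the arithmetic, assuming that addresses count RAM locations and that "should not cross the area" means an access must stay inside its 0x4000 to 0x7FFF or 0x8000 to 0xFFFF window. The type and function names are hypothetical and are not part of the RSDK or any NXP API.

```cpp
#include <cassert>
#include <cstdint>

enum class SptMemory { None, TwiddleRam, OperandRam };

struct SptLocation {
    SptMemory memory;      // which internal RAM the address selects
    std::uint16_t offset;  // location index inside that RAM after un-aliasing
};

// Map a 16-bit SRC_ADD/DEST_ADD style address to the RAM it selects.
inline SptLocation resolve_spt_address(std::uint16_t addr) {
    if (addr >= 0x4000 && addr <= 0x7FFF) {
        // Twiddle RAM is 0x1000 locations; 0x5000..0x7FFF wraps onto it.
        return {SptMemory::TwiddleRam, static_cast<std::uint16_t>((addr - 0x4000) & 0x0FFF)};
    }
    if (addr >= 0x8000) {
        // Operand RAM is 0x4000 locations; 0xC000..0xFFFF wraps onto it.
        return {SptMemory::OperandRam, static_cast<std::uint16_t>((addr - 0x8000) & 0x3FFF)};
    }
    return {SptMemory::None, 0};
}

// Check that an access of `length` locations starting at `start` stays in one window.
inline bool access_stays_in_window(std::uint16_t start, std::uint32_t length) {
    assert(length > 0);
    const std::uint64_t last = static_cast<std::uint64_t>(start) + length - 1;
    if (start >= 0x4000 && start <= 0x7FFF) return last <= 0x7FFF;
    if (start >= 0x8000) return last <= 0xFFFF;
    return false;  // not an Operand RAM or Twiddle RAM address
}
```

For example, 0x5000 resolves to Twiddle RAM offset 0 and 0xC000 resolves to Operand RAM offset 0, so the aliased ranges give a second view of the same storage rather than extra memory.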
Memory accesses to the Operand RAM should not cross the area from 0x8000 to 0xFFFF.

5 Common instruction fields

Common instructions contain the following fields:

• Opcode
• Source and destination address
• Indirect memory address
• Source and destination address increment
• Vector size
• Input datatype/preprocessing

6 SPT programming

6.1 Programming based on RSDK

The NXP Radar SDK (RSDK) provides basic radar processing algorithms and device drivers for S32R hardware devices. Its purpose is to facilitate radar algorithm development (using SPT kernels and MATLAB models), creation of higher-level algorithms (starting from the basic blocks supplied with the RSDK), and easy application development by integrating driver and platform support.

The Radar SDK (Radar SDK for S32R27 SPT accelerator) can be downloaded from the NXP website.

The RSDK SPT module consists of the SPT Driver and SPT Kernels software components.

The SPT Driver serves as an interface between the user application running on the host CPU cores and the SPT hardware. Its purpose is to enable the integration and execution of low-level microcode kernels on the SPT (Signal Processing Toolbox) accelerator for baseband radar signal processing. Figure 5 shows a high-level block diagram of the SPT software architecture.

Figure 5. SPT software architecture

The SPT Kernels are individual precompiled routines of SPT code packed into a library, each implementing a part of the radar processing flow.

Together, the SPT Driver and SPT Kernels library provide a software abstraction of the built-in SPT hardware functions (e.g. FFT, Maximum Search, Histogram) combined in a series of algorithms.

The RSDK package also includes RSDK Sample Applications showing how to integrate these software components into the user code.

6.2 Programming based on graphic tool

The S32 Design Studio SPT graphical tool gives users a graphical modeling workbench that leverages the Eclipse Modeling technologies. It provides a workbench for model-based architecture engineering.
The graphical tool equips teams who have to deal with complex architectures. It includes everything necessary to easily create and manipulate models. The output of this tool is SPT assembler source code.

The SPT graphical tool is integrated with the New S32DS Project wizard for devices that have an SPT module. The SPT1, SPT2 and SPT2.5 versions of the SPT module are supported. The SPT graphical tool can be used after project creation with the new graph tools project wizard. For a detailed description of the new project wizards, see the S32 Design Studio for Power Architecture, Version 2017.R1 reference manual.

After the project is generated, the SPT graphical tool windows are available in the IDE. The user can drag 'Instructions', 'Flow' and 'Directives' elements from the Palette window into the working flow window.

The settings of each 'Instructions', 'Flow' or 'Directives' element can be changed in the properties window. After the SPT working flow design is finished, generate the SPT code from the right-click menu.

Figure 6. Generate project with SPT graphical tool

Figure 7. SPT graphical tool windows

Figure 8. Example working flow and generate code

7 Conclusion

The SPT is a powerful processing engine containing high-performance signal processing operations driven by a specific instruction set. Its programmability ensures flexibility and removes the CPU from frequent scheduling of hardware operations, while the CPU still controls and interacts with the processing flow. The RSDK provides an SPT API for customers, and the graphical tool for SPT programming can meet flexible design requirements.

8 Reference

• S32R274 Reference Manual
• Radar SDK for S32R27 SPT accelerator
• AN5375, S32R RADAR Signal Compression

NuMicro® Family
Arm® ARM926EJ-S Based
NuMaker-HMI-N9H30 User Manual
Evaluation Board for NuMicro® N9H30 Series

Table of Contents

1 OVERVIEW
1.1 Features
1.1.1 NuMaker-N9H30 Main Board Features
1.1.2 NuDesign-TFT-LCD7 Extension Board Features
1.2 Supporting Resources
2 NUMAKER-HMI-N9H30 HARDWARE CONFIGURATION
2.1 NuMaker-N9H30 Board - Front View
2.2 NuMaker-N9H30 Board - Rear View
2.3 NuDesign-TFT-LCD7 - Front View
2.4 NuDesign-TFT-LCD7 - Rear View
2.5 NuMaker-N9H30 and NuDesign-TFT-LCD7 PCB Placement
3 NUMAKER-N9H30 AND NUDESIGN-TFT-LCD7 SCHEMATICS
3.1 NuMaker-N9H30 - GPIO List Circuit
3.2 NuMaker-N9H30 - System Block Circuit
3.3 NuMaker-N9H30 - Power Circuit
3.4 NuMaker-N9H30 - N9H30F61IEC Circuit
3.5 NuMaker-N9H30 - Setting, ICE, RS-232_0, Key Circuit
3.6 NuMaker-N9H30 - Memory Circuit
3.7 NuMaker-N9H30 - I2S, I2C_0, RS-485_6 Circuit
3.8 NuMaker-N9H30 - RS-232_2 Circuit
3.9 NuMaker-N9H30 - LCD Circuit
3.10 NuMaker-N9H30 - CMOS Sensor, I2C_1, CAN_0 Circuit
3.11 NuMaker-N9H30 - RMII_0_PF Circuit
3.12 NuMaker-N9H30 - RMII_1_PE Circuit
3.13 NuMaker-N9H30 - USB Circuit
3.14 NuDesign-TFT-LCD7 - TFT-LCD7 Circuit
4 REVISION HISTORY

1 OVERVIEW

The NuMaker-HMI-N9H30 is an evaluation board for GUI application development. The NuMaker-HMI-N9H30 consists of two parts: a NuMaker-N9H30 main board and a NuDesign-TFT-LCD7 extension board.
The NuMaker-HMI-N9H30 is designed for project evaluation, prototype development, and validation with HMI (Human Machine Interface) functions.

The NuMaker-HMI-N9H30 integrates a touchscreen display, voice input/output, rich serial port services, and I/O interfaces, and provides multiple external storage methods.

The NuDesign-TFT-LCD7 can be plugged into the main board via the DIN_32x2 extension connector. It includes one 7” LCD with a resolution of 800x480 and 24-bit RGB, with an embedded 4-wire resistive touch panel.

Figure 1-1 Front View of NuMaker-HMI-N9H30 Evaluation Board

Figure 1-2 Rear View of NuMaker-HMI-N9H30 Evaluation Board

1.1 Features

1.1.1 NuMaker-N9H30 Main Board Features

● N9H30F61IEC chip: LQFP216-pin MCP package with DDR (64 MB)
● SPI Flash using W25Q256JVEQ (32 MB), for booting in quad mode or as storage memory
● NAND Flash using W29N01HVSINA (128 MB), for booting or as storage memory
● One Micro-SD/TF card slot, serving either as an SD memory card for data storage or as an SDIO (Wi-Fi) device
● Two sets of COM ports:
  – One DB9 RS-232 port on UART_0 with a 75C3232E transceiver chip, for function debugging and system development
  – One DB9 RS-232 port on UART_2 with a 75C3232E transceiver chip, for user applications
● 22 GPIO expansion ports, including seven sets of UART functions
● JTAG interface provided for software development
● Microphone input and earphone/speaker output with a 24-bit stereo audio codec (NAU88C22) on the I2S interface
● Six sets of user-configurable push button keys
● Three sets of LEDs for status indication
● SN65HVD230 transceiver chip for CAN bus communication
● MAX3485 transceiver chip for RS-485 device connection
● One buzzer device for program applications
● Two sets of RJ45 ports with Ethernet 10/100 Mbps MAC, using the IP101GR PHY chip
● USB_0, usable as Device/HOST, and USB_1, usable as HOST, supporting pen drives, keyboards, mice and printers
● Over-voltage and over-current protection using the APL3211A chip
● RTC battery socket for a CR2032 battery, with ADC0 detecting the battery voltage
● System power can be supplied by a DC 5V adaptor or USB VBUS

1.1.2 NuDesign-TFT-LCD7 Extension Board Features

● 7” 800x480 4-wire resistive touch panel with 24-bit RGB888 interface
● DIN_32x2 extension connector

1.2 Supporting Resources

For sample codes and an introduction to the NuMaker-N9H30, please refer to the N9H30 BSP:
https:///products/gui-solution/gui-platform/numaker-hmi-n9h30/?group=Software&tab=2

Visit NuForum for further discussion about the NuMaker-HMI-N9H30:
/viewforum.php?f=31

2 NUMAKER-HMI-N9H30 HARDWARE CONFIGURATION

2.1 NuMaker-N9H30 Board - Front View

Figure 2-1 Front View of NuMaker-N9H30 Board (callouts: Combination Connector (CON8), six sets of User SWs (K1~6), three sets of Indication LEDs (LED1~3), Power Supply Switch (SW_POWER1), Audio Codec (U10), Microphone (M1), NAND Flash (U9), RS-232 Transceiver (U6, U12), RS-485 Transceiver (U11), CAN Transceiver (U13))

Figure 2-1 shows the main components and connectors from the front side of the NuMaker-N9H30 board. The following lists components and connectors from the front view:

● NuMaker-N9H30 board and NuDesign-TFT-LCD7 board combination connector (CON8).
This panel connector supports a 4-/5-wire resistive touch or capacitive touch panel with a 24-bit RGB888 interface.

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| CON8.1 | - | Power 3.3V |
| CON8.2 | - | Power 3.3V |
| CON8.3 | GPD7 | LCD_CS |
| CON8.4 | GPH3 | LCD_BLEN |
| CON8.5 | GPG9 | LCD_DEN |
| CON8.7 | GPG7 | LCD_HSYNC |
| CON8.8 | GPG6 | LCD_CLK |
| CON8.9 | GPD15 | LCD_D23(R7) |
| CON8.10 | GPD14 | LCD_D22(R6) |
| CON8.11 | GPD13 | LCD_D21(R5) |
| CON8.12 | GPD12 | LCD_D20(R4) |
| CON8.13 | GPD11 | LCD_D19(R3) |
| CON8.14 | GPD10 | LCD_D18(R2) |
| CON8.15 | GPD9 | LCD_D17(R1) |
| CON8.16 | GPD8 | LCD_D16(R0) |
| CON8.17 | GPA15 | LCD_D15(G7) |
| CON8.18 | GPA14 | LCD_D14(G6) |
| CON8.19 | GPA13 | LCD_D13(G5) |
| CON8.20 | GPA12 | LCD_D12(G4) |
| CON8.21 | GPA11 | LCD_D11(G3) |
| CON8.22 | GPA10 | LCD_D10(G2) |
| CON8.23 | GPA9 | LCD_D9(G1) |
| CON8.24 | GPA8 | LCD_D8(G0) |
| CON8.25 | GPA7 | LCD_D7(B7) |
| CON8.26 | GPA6 | LCD_D6(B6) |
| CON8.27 | GPA5 | LCD_D5(B5) |
| CON8.28 | GPA4 | LCD_D4(B4) |
| CON8.29 | GPA3 | LCD_D3(B3) |
| CON8.30 | GPA2 | LCD_D2(B2) |
| CON8.31 | GPA1 | LCD_D1(B1) |
| CON8.32 | GPA0 | LCD_D0(B0) |
| CON8.33 | - | - |
| CON8.34 | - | - |
| CON8.35 | - | - |
| CON8.36 | - | - |
| CON8.37 | GPB2 | LCD_PWM |
| CON8.39 | - | VSS |
| CON8.40 | - | VSS |
| CON8.41 | ADC7 | XP |
| CON8.42 | ADC3 | Vsen |
| CON8.43 | ADC6 | XM |
| CON8.44 | ADC4 | YM |
| CON8.45 | - | - |
| CON8.46 | ADC5 | YP |
| CON8.47 | - | VSS |
| CON8.48 | - | VSS |
| CON8.49 | GPG0 | I2C0_C |
| CON8.50 | GPG1 | I2C0_D |
| CON8.51 | GPG5 | TOUCH_INT |
| CON8.52 | - | - |
| CON8.53 | - | - |
| CON8.54 | - | - |
| CON8.55 | - | - |
| CON8.56 | - | - |
| CON8.57 | - | - |
| CON8.58 | - | - |
| CON8.59 | - | VSS |
| CON8.60 | - | VSS |
| CON8.61 | - | - |
| CON8.62 | - | - |
| CON8.63 | - | Power 5V |
| CON8.64 | - | Power 5V |

Table 2-1 LCD Panel Combination Connector (CON8) Pin Function

● Power supply switch (SW_POWER1): The system is powered on when the SW_POWER1 button is pressed
● Three sets of indication LEDs:

| LED | Color | Description |
|---|---|---|
| LED1 | Red | System power is cut off and LED1 lights when the input voltage exceeds 5.7V or the current exceeds 2A. |
| LED2 | Green | Power normal state. |
| LED3 | Green | Controlled by the GPH2 pin |

Table 2-2 Three Sets of Indication LED Functions

● Six sets of user SW, Key Matrix for user definition

| Key | GPIO pin of N9H30 | Function |
|---|---|---|
| K1 | GPF10, GPB4 | Row0, Col0 |
| K2 | GPF10, GPB5 | Row0, Col1 |
| K3 | GPE15, GPB4 | Row1, Col0 |
| K4 | GPE15, GPB5 | Row1, Col1 |
| K5 | GPE14, GPB4 | Row2, Col0 |
| K6 | GPE14, GPB5 | Row2, Col1 |

Table 2-3 Six Sets of User SW, Key Matrix Functions

● NAND Flash (128 MB) with Winbond W29N01HVSINA (U9)
● Microphone (M1): Sound input through the Nuvoton NAU88C22 chip
● Audio CODEC chip (U10): Nuvoton NAU88C22 chip connected to the N9H30 using the I2S interface
  – SW6/SW7/SW8: 1-2 short for RS-485_6 function, connected to 2P terminal (CON5 and J5)
  – SW6/SW7/SW8: 2-3 short for I2S function, connected to NAU88C22 (U10)
● CMOS Sensor connector (CON10, SW9~10)
  – SW9~10: 1-2 short for CAN_0 function, connected to 2P terminal (CON11)
  – SW9~10: 2-3 short for CMOS sensor function, connected to CMOS sensor connector (CON10)

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| CON10.1 | - | VSS |
| CON10.2 | - | VSS |
| CON10.3 | - | Power 3.3V |
| CON10.4 | - | Power 3.3V |
| CON10.5 | - | - |
| CON10.6 | - | - |
| CON10.7 | GPI4 | S_PCLK |
| CON10.8 | GPI3 | S_CLK |
| CON10.9 | GPI8 | S_D0 |
| CON10.10 | GPI9 | S_D1 |
| CON10.11 | GPI10 | S_D2 |
| CON10.12 | GPI11 | S_D3 |
| CON10.13 | GPI12 | S_D4 |
| CON10.14 | GPI13 | S_D5 |
| CON10.15 | GPI14 | S_D6 |
| CON10.16 | GPI15 | S_D7 |
| CON10.17 | GPI6 | S_VSYNC |
| CON10.18 | GPI5 | S_HSYNC |
| CON10.19 | GPI0 | S_PWDN |
| CON10.20 | GPI7 | S_nRST |
| CON10.21 | GPG2 | I2C1_C |
| CON10.22 | GPG3 | I2C1_D |
| CON10.23 | - | VSS |
| CON10.24 | - | VSS |

Table 2-4 CMOS Sensor Connector (CON10) Function

2.2 NuMaker-N9H30 Board - Rear View

Figure 2-2 Rear View of NuMaker-N9H30 Board (callouts: 5V In (CON1), RS-232 DB9 (CON2, CON6), Expand Port (CON7), Speaker Output (J4), Earphone Output (CON4), Buzzer (BZ1), System Reset SW (SW5), SPI Flash (U7, U8), JTAG ICE (J2), Power Protection IC (U1), N9H30F61IEC (U5), Micro SD Slot (CON3), RJ45 (CON12, CON13), USB1 HOST (CON15), USB0 Device/Host (CON14), CAN_0 Terminal (CON11), CMOS Sensor Connector (CON9), Power On Setting (SW4, S2~S9), RS-485_6 Terminal (CON5), RTC Battery (BT1), RMII PHY (U14, U16))

Figure 2-2 shows the main components and connectors from the rear side of the NuMaker-N9H30 board.
The following lists components and connectors from the rear view:

● +5V In (CON1): Power adaptor 5V input
● JTAG ICE interface (J2)

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| J2.1 | - | Power 3.3V |
| J2.2 | GPJ4 | nTRST |
| J2.3 | GPJ2 | TDI |
| J2.4 | GPJ1 | TMS |
| J2.5 | GPJ0 | TCK |
| J2.6 | - | VSS |
| J2.7 | GPJ3 | TDO |
| J2.8 | - | RESET |

Table 2-5 JTAG ICE Interface (J2) Function

● SPI Flash (32 MB) with Winbond W25Q256JVEQ (U7); only one SPI Flash (U7 or U8) can be used
● System Reset (SW5): The system is reset when the SW5 button is pressed
● Buzzer (BZ1): Controlled by the GPB3 pin of the N9H30
● Speaker output (J4): Sound output through the NAU88C22 chip
● Earphone output (CON4): Sound output through the NAU88C22 chip
● Expand port for user use (CON7):

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| CON7.1 | - | Power 3.3V |
| CON7.2 | - | Power 3.3V |
| CON7.3 | GPE12 | UART3_TXD |
| CON7.4 | GPH4 | UART1_TXD |
| CON7.5 | GPE13 | UART3_RXD |
| CON7.6 | GPH5 | UART1_RXD |
| CON7.7 | GPB0 | UART5_TXD |
| CON7.8 | GPH6 | UART1_RTS |
| CON7.9 | GPB1 | UART5_RXD |
| CON7.10 | GPH7 | UART1_CTS |
| CON7.11 | GPI1 | UART7_TXD |
| CON7.12 | GPH8 | UART4_TXD |
| CON7.13 | GPI2 | UART7_RXD |
| CON7.14 | GPH9 | UART4_RXD |
| CON7.15 | - | - |
| CON7.16 | GPH10 | UART4_RTS |
| CON7.17 | - | - |
| CON7.18 | GPH11 | UART4_CTS |
| CON7.19 | - | VSS |
| CON7.20 | - | VSS |
| CON7.21 | GPB12 | UART10_TXD |
| CON7.22 | GPH12 | UART8_TXD |
| CON7.23 | GPB13 | UART10_RXD |
| CON7.24 | GPH13 | UART8_RXD |
| CON7.25 | GPB14 | UART10_RTS |
| CON7.26 | GPH14 | UART8_RTS |
| CON7.27 | GPB15 | UART10_CTS |
| CON7.28 | GPH15 | UART8_CTS |
| CON7.29 | - | Power 5V |
| CON7.30 | - | Power 5V |

Table 2-6 Expand Port (CON7) Function

● UART0 selection (CON2, J3):
  – RS-232_0 function, connected to DB9 female (CON2) for debug message output
  – GPE0/GPE1 connected to 2P terminal (J3)

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| J3.1 | GPE1 | UART0_RXD |
| J3.2 | GPE0 | UART0_TXD |

Table 2-7 UART0 (J3) Function

● UART2 selection (CON6, J6):
  – RS-232_2 function, connected to DB9 female (CON6) for debug message output
  – GPF11~14 connected to 4P terminal (J6)

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| J6.1 | GPF11 | UART2_TXD |
| J6.2 | GPF12 | UART2_RXD |
| J6.3 | GPF13 | UART2_RTS |
| J6.4 | GPF14 | UART2_CTS |

Table 2-8 UART2 (J6) Function

● RS-485_6 selection (CON5, J5, SW6~8):
  – SW6~8: 1-2 short for RS-485_6 function, connected to 2P terminal (CON5 and J5)
  – SW6~8: 2-3 short for I2S function, connected to NAU88C22 (U10)

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| SW6: 1-2 short | GPG11 | RS-485_6_DI |
| SW6: 2-3 short | GPG11 | I2S_DO |
| SW7: 1-2 short | GPG12 | RS-485_6_RO |
| SW7: 2-3 short | GPG12 | I2S_DI |
| SW8: 1-2 short | GPG13 | RS-485_6_ENB |
| SW8: 2-3 short | GPG13 | I2S_BCLK |

Table 2-9 RS-485_6 (SW6~8) Function

● Power on setting (SW4, S2~9)

| SW | State | Function |
|---|---|---|
| SW4.2/SW4.1 | ON/ON | Boot from USB |
| SW4.2/SW4.1 | ON/OFF | Boot from eMMC |
| SW4.2/SW4.1 | OFF/ON | Boot from NAND Flash |
| SW4.2/SW4.1 | OFF/OFF | Boot from SPI Flash |

Table 2-10 Power on Setting (SW4) Function

| SW | State | Function |
|---|---|---|
| S2 | Short | System clock from 12MHz crystal |
| S2 | Open | System clock from UPLL output |

Table 2-11 Power on Setting (S2) Function

| SW | State | Function |
|---|---|---|
| S3 | Short | Watchdog Timer OFF |
| S3 | Open | Watchdog Timer ON |

Table 2-12 Power on Setting (S3) Function

| SW | State | Function |
|---|---|---|
| S4 | Short | GPJ[4:0] used as GPIO pins |
| S4 | Open | GPJ[4:0] used as JTAG ICE interface |

Table 2-13 Power on Setting (S4) Function

| SW | State | Function |
|---|---|---|
| S5 | Short | UART0 debug message ON |
| S5 | Open | UART0 debug message OFF |

Table 2-14 Power on Setting (S5) Function

| SW | State | Function |
|---|---|---|
| S7/S6 | Short/Short | NAND Flash page size 2KB |
| S7/S6 | Short/Open | NAND Flash page size 4KB |
| S7/S6 | Open/Short | NAND Flash page size 8KB |
| S7/S6 | Open/Open | Ignore |

Table 2-15 Power on Setting (S7/S6) Function

| SW | State | Function |
|---|---|---|
| S9/S8 | Short/Short | NAND Flash ECC type BCH T12 |
| S9/S8 | Short/Open | NAND Flash ECC type BCH T15 |
| S9/S8 | Open/Short | NAND Flash ECC type BCH T24 |
| S9/S8 | Open/Open | Ignore |

Table 2-16 Power on Setting (S9/S8) Function

● CMOS Sensor connector (CON9, SW9~10)
  – SW9~10: 1-2 short for CAN_0 function, connected to 2P terminal (CON11)
  – SW9~10: 2-3 short for CMOS sensor function, connected to CMOS sensor connector (CON9)

| Connector | GPIO pin of N9H30 | Function |
|---|---|---|
| CON9.1 | - | VSS |
| CON9.2 | - | VSS |
| CON9.3 | - | Power 3.3V |
| CON9.4 | - | Power 3.3V |
| CON9.5 | - | - |
| CON9.6 | - | - |
| CON9.7 | GPI4 | S_PCLK |
| CON9.8 | GPI3 | S_CLK |
| CON9.9 | GPI8 | S_D0 |
| CON9.10 | GPI9 | S_D1 |
| CON9.11 | GPI10 | S_D2 |
| CON9.12 | GPI11 | S_D3 |
| CON9.13 | GPI12 | S_D4 |
| CON9.14 | GPI13 | S_D5 |
| CON9.15 | GPI14 | S_D6 |
| CON9.16 | GPI15 | S_D7 |
| CON9.17 | GPI6 | S_VSYNC |
| CON9.18 | GPI5 | S_HSYNC |
| CON9.19 | GPI0 | S_PWDN |
| CON9.20 | GPI7 | S_nRST |
| CON9.21 | GPG2 | I2C1_C |
| CON9.22 | GPG3 | I2C1_D |
| CON9.23 | - | VSS |
| CON9.24 | - | VSS |

Table 2-17 CMOS Sensor Connector (CON9) Function

● CAN_0 selection (CON11, SW9~10):
  – SW9~10: 1-2 short for CAN_0 function, connected to 2P terminal (CON11)
  – SW9~10: 2-3 short for CMOS sensor function, connected to CMOS sensor connector (CON9, CON10)

| SW | GPIO pin of N9H30 | Function |
|---|---|---|
| SW9: 1-2 short | GPI3 | CAN_0_RXD |
| SW9: 2-3 short | GPI3 | S_CLK |
| SW10: 1-2 short | GPI4 | CAN_0_TXD |
| SW10: 2-3 short | GPI4 | S_PCLK |

Table 2-18 CAN_0 (SW9~10) Function

● USB0 Device/HOST Micro-AB connector (CON14), where CON14 pin 4 ID=1 is Device and ID=0 is HOST
● USB1 for USB HOST with Type-A connector (CON15)
● RJ45_0 connector with LED indicator (CON12), RMII PHY with IP101GR (U14)
● RJ45_1 connector with LED indicator (CON13), RMII PHY with IP101GR (U16)
● Micro-SD/TF card slot (CON3)
● SOC CPU: Nuvoton N9H30F61IEC (U5)
● Battery power for RTC, 3.3V powered (BT1, J1); the voltage can be detected by ADC0
● RTC power has 3 sources:
  – Shared with 3.3V I/O power
  – Battery socket for CR2032 (BT1)
  – External connector (J1)
● Board version 2.1

2.3 NuDesign-TFT-LCD7 - Front View

Figure 2-3 Front View of NuDesign-TFT-LCD7 Board

Figure 2-3 shows the main components and connectors from the front side of the NuDesign-TFT-LCD7 board: a 7” 800x480 4-wire resistive touch panel with 24-bit RGB888 interface.

2.4 NuDesign-TFT-LCD7 - Rear View

Figure 2-4 Rear View of NuDesign-TFT-LCD7 Board

Figure 2-4 shows the main components and connectors from the rear side of the NuDesign-TFT-LCD7 board: the NuMaker-N9H30 and NuDesign-TFT-LCD7 combination connector (CON1).

2.5 NuMaker-N9H30 and NuDesign-TFT-LCD7 PCB Placement

Figure 2-5 Front View of NuMaker-N9H30 PCB Placement

Figure 2-6 Rear View of NuMaker-N9H30 PCB Placement

Figure 2-7 Front View of NuDesign-TFT-LCD7 PCB Placement

Figure 2-8 Rear View of NuDesign-TFT-LCD7 PCB Placement

3 NUMAKER-N9H30 AND NUDESIGN-TFT-LCD7 SCHEMATICS

3.1 NuMaker-N9H30 - GPIO List Circuit

Figure 3-1 shows the N9H30F61IEC GPIO list circuit.

3.2 NuMaker-N9H30 - System Block Circuit

Figure 3-2 shows the System Block Circuit.

3.3 NuMaker-N9H30 - Power Circuit

Figure 3-3 shows the Power Circuit.

3.4 NuMaker-N9H30 - N9H30F61IEC Circuit

Figure 3-4 shows the N9H30F61IEC Circuit.

3.5 NuMaker-N9H30 - Setting, ICE, RS-232_0, Key Circuit

Figure 3-5 shows the Setting, ICE, RS-232_0, Key Circuit.

3.6 NuMaker-N9H30 - Memory Circuit

Figure 3-6 shows the Memory Circuit.

3.7 NuMaker-N9H30 - I2S, I2C_0, RS-485_6 Circuit

Figure 3-7 shows the I2S, I2C_0, RS-485_6 Circuit.

3.8 NuMaker-N9H30 - RS-232_2 Circuit

Figure 3-8 shows the RS-232_2 Circuit.

3.9 NuMaker-N9H30 - LCD Circuit

Figure 3-9 shows the LCD Circuit.

3.10 NuMaker-N9H30 - CMOS Sensor, I2C_1, CAN_0 Circuit

Figure 3-10 shows the CMOS Sensor, I2C_1, CAN_0 Circuit.

3.11 NuMaker-N9H30 - RMII_0_PF Circuit

Figure 3-11 shows the RMII_0_PF Circuit.

3.12 NuMaker-N9H30 - RMII_1_PE Circuit

Figure 3-12 shows the RMII_1_PE Circuit.

3.13 NuMaker-N9H30 - USB Circuit

Figure 3-13 shows the USB Circuit.

3.14 NuDesign-TFT-LCD7 - TFT-LCD7 Circuit

Figure 3-14 shows the TFT-LCD7 Circuit.

4 REVISION HISTORY

| Date | Revision | Description |
|---|---|---|
| 2022.03.24 | 1.00 | Initial version |

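cvstartfindcontours_impl parameters: overview and explanation

1. Introduction

1.1 Overview

In computer vision, the cvstartfindcontours_impl parameter is widely used in edge detection and object recognition tasks.

It is an important parameter of the cvFindContours() function in the OpenCV library and specifies the settings of the edge detection algorithm.

Edge detection is a basic image-processing task: it recovers the outline of a target object by locating the boundaries where pixel values are discontinuous.

The cvstartfindcontours_impl parameter controls and tunes this process to improve the accuracy and efficiency of edge detection.

It can be used to adjust the sensitivity of the edge detection algorithm so that it suits different kinds of images and target objects.

With suitable values, noise interference and missed detections can be kept as low as possible while still meeting the needs of the application.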
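As a rough illustration of what "locating the boundaries where pixel values are discontinuous" means, the sketch below thresholds a small grayscale grid and keeps the foreground pixels that touch the background. It is a minimal pure-Python sketch and not OpenCV's contour-following algorithm; `binarize` and `boundary_pixels` are hypothetical helper names introduced here, and the `threshold` argument merely stands in for the kind of sensitivity setting described above.

```python
def binarize(gray, threshold=128):
    """Return a 0/1 grid: 1 where the pixel value exceeds the threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in gray]


def boundary_pixels(binary):
    """Return (row, col) of foreground pixels that have a background 4-neighbour.

    Positions outside the image are treated as background.
    """
    h, w = len(binary), len(binary[0])
    result = []
    for r in range(h):
        for c in range(w):
            if binary[r][c] == 0:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < h and 0 <= nc < w)
                if outside or binary[nr][nc] == 0:
                    result.append((r, c))
                    break  # one background neighbour is enough
    return result


# Hypothetical 5x5 image: a bright 3x3 square on a dark background.
gray = [
    [10, 10, 10, 10, 10],
    [10, 200, 200, 200, 10],
    [10, 200, 200, 200, 10],
    [10, 200, 200, 200, 10],
    [10, 10, 10, 10, 10],
]
edges = boundary_pixels(binarize(gray))
print(len(edges))  # 8: every bright pixel except the centre one
```

Raising the threshold above the brightest pixel value removes every boundary pixel, which is the simplest form of the sensitivity trade-off the text refers to.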

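The parameter is used in a very wide range of applications.

In industrial quality inspection, for example, the cvstartfindcontours_impl parameter can be used to extract the outer contour of a product and then decide whether it shows defects or other faults.

In medical image processing, it can be used to detect the edges of lesion regions, helping physicians with diagnosis and treatment planning.

In short, the cvstartfindcontours_impl parameter has real significance and practical value in computer vision.

By tuning the parameters of the edge detection algorithm, it improves the accuracy and stability of edge detection and provides a reliable basis for later image-processing steps.

As computer vision technology continues to advance, we expect the cvstartfindcontours_impl parameter to see further optimization and improvement and to play an important role in more application scenarios.

1.2 Article structure

This article examines the role and importance of the cvstartfindcontours_impl parameter.

First, we briefly introduce the background so that readers understand the context in which the parameter is used.

Next, we discuss in detail the role of the cvstartfindcontours_impl parameter in image processing and its specific functions.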

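Python implementation of convolutional code decoding

Convolving a single-channel image to produce a new single-channel image is easy to understand; convolving several channels to produce several output channels is more abstract.

This article explains convolution in plain terms, with figures, so that the principle behind its implementation can be grasped quickly.

Finally, we hand-write Python code that performs the convolution, so that TensorFlow's convolution is no longer a black box. Note: this article experiments with and explains only batch_size=1, padding='SAME', stride=[1,1,1,1]; for other settings the principle is the same.

1 How TensorFlow convolution works

First, the principle. For an input with in_c channels that must produce an output with out_c channels, a total of in_c * out_c convolution kernels take part in the computation.

If the input is [h:5, w:5, c:4], each output channel needs 4 kernels.

In the illustrated example the output has 3 channels, so 3*4=12 kernels are needed in total.

Each point of a single output channel is the sum of the results of convolving the input with that channel's group of 4 different kernels.

Next, we work through an example with a 2-channel 5*5 input, 3*3 kernels, and a 1-channel 5*5 output.

The 2-channel 5*5 input is defined as follows:

    # input, shape=[c,h,w]
    input_data = [
        [[1, 0, 1, 2, 1],
         [0, 2, 1, 0, 1],
         [1, 1, 0, 2, 0],
         [2, 2, 1, 1, 0],
         [2, 0, 1, 2, 0]],
        [[2, 0, 2, 1, 1],
         [0, 1, 0, 0, 2],
         [1, 0, 0, 2, 1],
         [1, 1, 2, 1, 0],
         [1, 0, 1, 1, 1]],
    ]

For a 1-channel output map, the rule above calls for 2*1 kernels.

The kernels are defined as follows:

    # kernels, shape=[in_c,k,k]=[2,3,3]
    weights_data = [
        [[ 1,  0, 1],
         [-1,  1, 0],
         [ 0, -1, 0]],
        [[-1,  0, 1],
         [ 0,  0, 1],
         [ 1,  1, 1]],
    ]

In the calculations that follow, the data defined above are paired channel by channel in the way just described.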
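The per-channel sum described above can be sketched in plain Python. This is a minimal sketch under the article's stated settings (batch size 1, 'SAME' zero padding, stride 1), not TensorFlow's implementation; the function name `conv2d_same_single_output` is a hypothetical helper, and the input and kernel values are copied from the definitions above.

```python
def conv2d_same_single_output(input_data, weights_data):
    """Multi-channel convolution producing one output channel.

    input_data:   [in_c][h][w] nested lists
    weights_data: [in_c][k][k] nested lists, k odd
    Uses zero padding ('SAME') and stride 1, so the output is [h][w].
    Like TensorFlow, the kernel is applied without flipping.
    """
    in_c = len(input_data)
    h, w = len(input_data[0]), len(input_data[0][0])
    k = len(weights_data[0])
    pad = k // 2
    out = [[0] * w for _ in range(h)]
    for c in range(in_c):              # one kernel per input channel
        for i in range(h):
            for j in range(w):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:   # zero padding outside
                            out[i][j] += input_data[c][y][x] * weights_data[c][di][dj]
    return out


input_data = [
    [[1, 0, 1, 2, 1], [0, 2, 1, 0, 1], [1, 1, 0, 2, 0], [2, 2, 1, 1, 0], [2, 0, 1, 2, 0]],
    [[2, 0, 2, 1, 1], [0, 1, 0, 0, 2], [1, 0, 0, 2, 1], [1, 1, 2, 1, 0], [1, 0, 1, 1, 1]],
]
weights_data = [
    [[1, 0, 1], [-1, 1, 0], [0, -1, 0]],
    [[-1, 0, 1], [0, 0, 1], [1, 1, 1]],
]

result = conv2d_same_single_output(input_data, weights_data)
print(result[0][0])  # 2 (top-left corner, partly in the zero padding)
print(result[2][2])  # 5 (centre: 0 from channel 0 plus 5 from channel 1)
```

For out_c output channels the same function would simply be called once per group of in_c kernels, which is where the in_c * out_c kernel count comes from.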

Package 'SimSST'
January 9, 2023

Title Simulated Stop Signal Task Data
Version 0.0.5.2
Description Stop signal task data of go and stop trials is generated per participant. The simulation process is based on the generally non-independent horse race model and fixed stop signal delay or tracking method. Each of go and stop process is assumed having exponentially modified Gaussian (ExG) or Shifted Wald (SW) distributions. The output data can be converted to 'BEESTS' software input data enabling researchers to test and evaluate various brain stopping processes manifested by ExG or SW distributional parameters of interest. Methods are described in: Soltanifar M (2020) <https:///1807/101208>, Matzke D, Love J, Wiecki TV, Brown SD, Logan GD and Wagenmakers E-J (2013) <doi:10.3389/fpsyg.2013.00918>, Logan GD, Van Zandt T, Verbruggen F, Wagenmakers EJ. (2014) <doi:10.1037/a0035230>.
License GPL-3
Encoding UTF-8
RoxygenNote 7.1.2
Imports dplyr, gamlss.dist, MASS, stats
Suggests knitr, rmarkdown
VignetteBuilder knitr
NeedsCompilation no
Author Mohsen Soltanifar [aut] (<https:///0000-0002-5989-0082>), Chel Hee Lee [cre, aut] (<https:///0000-0001-8209-8176>)
Maintainer Chel Hee Lee <***********************>
Repository CRAN
Date/Publication 2023-01-09 17:20:02 UTC

R topics documented:
simssfixed
simssgen
simsstrack


simssfixed    Simulating SSRT data using fixed SSD methods

Description
Stop signal task data of go and stop trials is generated per participant. The fixed stop signal delay method with underlying exponentially modified Gaussian (ExG) or Shifted Wald (SW) distributions for each of go and stop process is applied. The output data can be converted to 'BEESTS' software input data enabling researchers to test and evaluate different distributional parameters of interest.

Usage
simssfixed(pid, block, n, m, SSD.b, dist.go, theta.go, dist.stop, theta.stop)

Arguments
pid           character vector of size b of participant
block         numeric vector of size b blocks
n             numeric vector of size b of total number of trials
m             numeric vector of size b of total number of stops
SSD.b         numeric vector of size b of stop signal delay
dist.go       character vector of size b of distribution of go trials, either ExG or SW
theta.go      numeric matrix of size b by columns of mu.go, sigma.go, and tau.go
dist.stop     character vector of size b of distribution of stop.trials, either ExG or SW
theta.stop    numeric matrix of size b by columns of mu.stop, sigma.stop, and tau.stop

Value
matrix with sum(n) rows and 8 columns

References
Gordon D. Logan. On the Ability to Inhibit Thought and Action: A User's Guide to the Stop Signal Paradigm. In D. Dagenbach, & T.H. Carr (Eds.), Inhibitory Process in Attention, Memory and Language. San Diego: Academic Press, 1994.
Dora Matzke, Jonathon Love, Thomas V. Wiecki, Scott D. Brown, and et al. Release the BEESTS: Bayesian Estimation of Ex-Gaussian Stop Signal Reaction Times Distributions. Frontiers in Psychology, 4: Article 918, 2013.
Mohsen Soltanifar. Stop Signal Reaction Times: New Estimations with Longitudinal, Bayesian and Time Series based Methods, PhD Dissertation, Biostatistics Division, Dalla Lana School of Public Health, University of Toronto, Toronto, Canada, 2020.

Examples
mySSTdata1 <- simssfixed(
  pid = c("John.Smith", "Jane.McDonald", "Jane.McDonald"),
  n = c(50, 100, 150),
  m = c(10, 20, 30),
  SSD.b = c(200, 220, 240),
  dist.go = c("ExG", "ExG", "ExG"),
  theta.go = as.matrix(rbind(c(400, 60, 30), c(440, 90, 90), c(440, 90, 90))),
  dist.stop = c("ExG", "ExG", "ExG"),
  theta.stop = as.matrix(rbind(c(100, 70, 60), c(120, 80, 70), c(120, 80, 70))),
  block = c(1, 1, 2))
mySSTdata1


simssgen    Simulating correlated SST data using general tracking method

Description
Stop signal task data of go and stop trials is generated per participant. The tracking signal delay method with underlying exponentially modified Gaussian (ExG) or Shifted Wald (SW) distributions for each of go and stop process is applied. The output data can be converted to 'BEESTS' software input data enabling researchers to test and evaluate different distributional parameters of interest.

Usage
simssgen(pid, block, n, m, SSD.b, dist.go, theta.go, dist.stop, theta.stop, rho, d)

Arguments
pid           a character vector of size b of participant
block         a numeric vector of size b blocks
n             a numeric vector of size b of total number of trials
m             a numeric vector of size b of total number of stops
SSD.b         a numeric vector of size b of starting stop signal delay
dist.go       a character vector of size b of distribution of go trials, either ExG or SW
theta.go      a numeric matrix of size b by columns mu.go, sigma.go, tau.go
dist.stop     a character vector of size b of distribution of stop.trials, either ExG or SW
theta.stop    a numeric matrix of size b by columns mu.stop, sigma.stop, tau.stop
rho           a numeric vector of size b of Spearman correlation between GORT and SSRT in range -1 to +1
d             a numeric vector of size b of added constant value to subsequent stop trials SSD

Value
a matrix with sum(n) rows and (8) columns

References
Same three references as listed under simssfixed.

Examples
mySSTdata1 <- simssgen(
  pid = c("John.Smith", "Jane.McDonald", "Jane.McDonald"),
  block = c(1, 1, 2),
  n = c(50, 100, 150),
  m = c(10, 20, 30),
  SSD.b = c(200, 220, 240),
  dist.go = c("ExG", "ExG", "ExG"),
  theta.go = as.matrix(rbind(c(400, 60, 30), c(440, 90, 90), c(440, 90, 90))),
  dist.stop = c("ExG", "ExG", "ExG"),
  theta.stop = as.matrix(rbind(c(100, 70, 60), c(120, 80, 70), c(120, 80, 70))),
  rho = c(0.35, 0.45, 0.45),
  d = c(50, 65, 75))
mySSTdata1


simsstrack    Simulating SSRT data using tracking method

Description
Stop signal task data of go and stop trials is generated per participant. The tracking signal delay method with underlying exponentially modified Gaussian (ExG) or Shifted Wald (SW) distributions for each of go and stop process is applied. The output data can be converted to 'BEESTS' software input data enabling researchers to test and evaluate different distributional parameters of interest.

Usage
simsstrack(pid, block, n, m, SSD.b, dist.go, theta.go, dist.stop, theta.stop)

Arguments
pid           a character vector of size b of participant
block         a numeric vector of size b blocks
n             a numeric vector of size b of total number of trials
m             a numeric vector of size b of total number of stops
SSD.b         a numeric vector of size b of starting stop signal delay
dist.go       a character vector of size b of distribution of go trials, either ExG or SW
theta.go      a numeric matrix of size b by columns mu.go, sigma.go, tau.go
dist.stop     a character vector of size b of distribution of stop.trials, either ExG or SW
theta.stop    a numeric matrix of size b by columns mu.stop, sigma.stop, tau.stop

Value
a matrix with sum(n) rows and (8) columns

References
Same three references as listed under simssfixed.

Examples
mySSTdata1 <- simsstrack(
  pid = c("John.Smith", "Jane.McDonald", "Jane.McDonald"),
  block = c(1, 1, 2),
  n = c(50, 100, 150),
  m = c(10, 20, 30),
  SSD.b = c(200, 220, 240),
  dist.go = c("ExG", "ExG", "ExG"),
  theta.go = as.matrix.data.frame(rbind(c(400, 60, 30), c(440, 90, 90), c(440, 90, 90))),
  dist.stop = c("ExG", "ExG", "ExG"),
  theta.stop = as.matrix.data.frame(rbind(c(100, 70, 60), c(120, 80, 70), c(120, 80, 70))))
mySSTdata1
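To make the fixed stop signal delay idea concrete, the following Python sketch simulates one block of trials. It is only an illustration under stated assumptions and not the SimSST implementation: it uses the simpler independent horse race rule (a stop trial is inhibited when SSD + SSRT is shorter than the go reaction time), draws ExG values as a normal plus an exponential variate, and the names `rexg` and `simulate_fixed_ssd` are hypothetical. The parameter values are taken from the first participant in the examples above.

```python
import random


def rexg(rng, mu, sigma, tau):
    """Draw one ex-Gaussian value: Normal(mu, sigma) plus Exponential(mean tau)."""
    return rng.gauss(mu, sigma) + rng.expovariate(1.0 / tau)


def simulate_fixed_ssd(n, m, ssd, theta_go, theta_stop, seed=0):
    """Simulate n trials, m of them stop trials, with one fixed SSD.

    Assumption: independent race, so a stop trial is inhibited
    when ssd + SSRT < go RT. Returns a list of dicts, one per trial.
    """
    rng = random.Random(seed)
    stop_flags = [True] * m + [False] * (n - m)
    rng.shuffle(stop_flags)              # random placement of stop trials
    trials = []
    for is_stop in stop_flags:
        go_rt = rexg(rng, *theta_go)
        if is_stop:
            ssrt = rexg(rng, *theta_stop)
            trials.append({"type": "stop", "ssd": ssd, "go_rt": go_rt,
                           "ssrt": ssrt, "inhibited": ssd + ssrt < go_rt})
        else:
            trials.append({"type": "go", "ssd": None, "go_rt": go_rt,
                           "ssrt": None, "inhibited": None})
    return trials


# First participant of the R example: n=50, m=10, SSD=200,
# theta.go=(400, 60, 30), theta.stop=(100, 70, 60).
block = simulate_fixed_ssd(50, 10, 200, (400, 60, 30), (100, 70, 60))
n_stop = sum(t["type"] == "stop" for t in block)
n_inhibited = sum(bool(t["inhibited"]) for t in block)
print(len(block), n_stop, n_inhibited)
```

A tracking variant would adjust `ssd` between stop trials instead of holding it constant; how SimSST does this (and how it introduces the correlation `rho`) is defined by the package itself and is not reproduced here.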

Camera External Trigger Instructions
Copyright (c) 2011-2023 Tucsen Photonics Co., Ltd. All Rights Reserved

Catalog
1. Trigger Connector I/O Definition
  1.1 Dhyana Series
  1.2 FL-20BW/Dhyana401D
2. The Timing Diagram of External Trigger and Signal Output Circuit
3. Instruction for External Trigger Mode
  3.1 Introduction
  3.2 API
  3.3 External Trigger Functions
    3.3.1 Off
    3.3.2 Standard Mode
    3.3.3 Synchronization Mode
    3.3.4 Global Mode
    3.3.5 Software Trigger
4. Output Trigger
  4.1 The Output Level Configuration
  4.2 Edge
  4.3 Delay
  4.4 Width

1. Trigger Connector I/O Definition

1.1 Dhyana Series
Dhyana series cameras (except Dhyana401D) have 4 hardware interfaces for external triggering: one trigger input and three indicator outputs, defined in Tab 1-1.

Tab 1-1 I/O Function and Definition of the Interface
Interface    Definition
TRIG.IN      External trigger signal input
TRIG.OUT1    Image readout signal
TRIG.OUT2    Global exposure signal
TRIG.OUT3    Exposure start signal

Figure 1-1 Camera interface location map of Dhyana

1.2 FL-20BW/Dhyana401D
FL-20BW/Dhyana401D have 6 hardware interface pins for external triggering, defined in Tab 1-2.

Tab 1-2 External Trigger Hardware Interface Definition
Pin    Definition
1      TRI_IN (Standard mode)
2      TRI_GND
3      NC
4      TRI_OUT0 (Exposure Start Signals)
5      TRI_OUT1 (Readout End Signals)
6      NC

Figure 1-2 Camera interface location map of FL-20BW
Figure 1-3 Camera interface location map of Dhyana401D

2. The Timing Diagram of External Trigger and Signal Output Circuit

The trigger input and output circuits of Dhyana series cameras (except Dhyana401D) are shown in Figure 2-1 and Figure 2-2.

Figure 2-1 Trigger Input Circuit
Figure 2-2 Trigger Output Circuit

The external trigger input and output circuit of the FL-20BW/Dhyana401D camera is shown in Figure 2-3. These trigger circuits are optocoupler-isolated and require an external pull-up resistor (voltage 3.3 V, resistance 1 kΩ) for the trigger function to work. The trigger signal may be either a square wave or a sine wave.

Figure 2-3 FL-20BW/Dhyana401D Trigger Input & Output Circuit

The relationship between the external trigger input pulse, the indicator output signals and the exposure timing is shown in Figure 2-4 (using rising edge, standard trigger mode as an example).

Figure 2-4 The Timing Diagram of Trigger Input & Output

A level signal passing through the optocoupler circuit experiences two delays:
1) Delay T_iso: when the external trigger level signal arrives, it is first delayed by the hardware circuit. This delay is at the nanosecond level and is determined by the hardware.
2) Delay T_logic: a logic delay after T_iso, incurred when the trigger signal enters the camera and becomes a level signal. Its range is 0 to 1 minimum exposure unit T_line, where the minimum exposure unit is the exposure time of one sensor line. For the Dhyana95, for example, the logic delay is in the range 0-21 µs.

In short, the overall delay (T_idelay) from trigger signal input to the start of first-line exposure is the sum of T_iso and T_logic.

Note: The timing diagrams below ignore signal delays.

The one input interface and three output interfaces are defined as follows:
TRIG.IN: The camera receives the trigger signal.
TRIG.OUT1: When the readout signal is chosen, OUT1 outputs an indicator level signal starting from the end of last-line readout.
TRIG.OUT2: When the global signal is chosen, OUT2 outputs an indicator level signal from the beginning of last-line exposure to the end of first-line exposure.
TRIG.OUT3: When the exposure signal is chosen, OUT3 outputs an indicator level signal from the moment the first line starts exposure.

These three indicator outputs are always on by default. The camera outputs a level signal to a third-party device as that device's input signal. The three signals can be output to different devices simultaneously.

3. Instruction for External Trigger Mode

Figure 3-1 The Interface of Trigger Setting

3.1 Introduction
There are two image output modes: Frame mode and Stream mode.

Stream mode
Also called free mode. Images are output continuously, like a stream. This mode supports hardware trigger capture.

Frame mode
Also called external trigger mode. Images are output frame by frame in response to trigger signals, which may be hardware triggers (Standard, Synchronization and Global mode) or software triggers.

The hardware trigger mode (Hardware) includes three modes: Standard, Synchronization, and Global. The FL-20BW and Dhyana401D support only the Standard hardware trigger mode.

3.2 API
For the external trigger API, please see the TUCAM API development guide.

3.3 External Trigger Functions

3.3.1 Off
External trigger mode is turned off and the camera works in stream mode. In this mode the exposure time is set manually in software or by auto exposure, and the camera images continuously.

3.3.2 Standard Mode
There are two types of standard trigger mode: Level Trigger Mode and Edge Trigger Mode.

In level trigger mode, the rising or falling level of the external trigger signal controls the start and end of the exposure, so the exposure duration equals the duration of the level. Level trigger mode has a Trigger ready output and is commonly used to photograph stationary or slow-moving objects.

Figure 3-2 Standard Mode (Exposure: Width / Edge: Rising)

In edge trigger mode, the exposure time is set directly in the software interface. Note that each pulse cycle of the trigger signal (pulse width + pulse interval) must be longer than or equal to the total time needed to output one frame (i.e. the reciprocal of the frame rate, including the delay time, exposure time, and readout time) so that each frame is complete.

Figure 3-3 Standard Mode (Exposure: Timed / Edge: Rising)

When Standard mode is selected, the camera starts exposure after receiving the level signal, and the number of frames is determined by the exposure time, pulse period, and number of pulses.

Standard Mode Configuration

Figure 3-4

1) Exposure
Timed: The exposure time is determined by the software setting (Edge Trigger Mode).
Trigger Width: The exposure time is determined by the width of the input level signal (Level Trigger Mode).
2) Edge
Rising Edge: Exposure starts when the camera receives a rising edge.
Falling Edge: Exposure starts when the camera receives a falling edge.
3) Exposure Delay
Sets the delay time after a trigger signal is received. The range is 0-10 s.

Note
1) If the pulse cycle of the trigger signal is shorter than the time needed to output an image, pulses that arrive before the frame is fully output are shielded, so fewer frames than pulses are produced. Within one pulse, the image readout time equals the exposure time of one line multiplied by the total number of lines, and line-by-line readout starts at the end of the first line's exposure.
2) Under normal conditions, the interval between frames equals the pulse period. The time from receipt of the trigger signal to output of an image lies between [the total time taken for each frame readout] and [the time of each pulse period].

Example
1) The maximum frame rate of the Dhyana95 camera is 24 fps at full resolution, so the time per frame is 1000/24 ≈ 42 ms. If the pulse period is set to 10 ms and the number of pulses is 10, the second, third and fourth pulses are shielded because the first frame is being exposed, and the first image is then output. The fifth pulse starts the exposure of the second image; the sixth, seventh and eighth pulses are shielded, and the second image is then output.
2) For the Dhyana401D, each pulse cycle of the trigger signal (pulse width + pulse interval) must be longer than or equal to the total time needed to output one frame (i.e. the reciprocal of the frame rate, including the delay time, exposure time, and readout time) so that each frame is complete.
3) The main difference between FL-20BW and Dhyana401D external triggering is the minimum trigger period: for the FL-20BW it is [readout time + exposure time], while for the Dhyana401D it is the greater of the exposure time and the readout time. The maximum frame rate of the FL-20BW at full resolution is 8 fps, so the frame readout time is about 1000 ms / 8 = 125 ms. Assuming an exposure time of 50 ms and rising-edge triggering, the trigger period must be greater than or equal to [exposure time + readout time], that is, [T1 + T2 ≥ readout time + exposure time = 50 ms + 125 ms]. With pulse-width triggering and a valid rising edge, the high level of the trigger signal is the exposure time, and the low level must be greater than or equal to the frame readout time of 125 ms.

Figure 3-5 The Trigger Timing Diagram of FL-20BW
(1) Exposure set to Width: [High level = exposure time] and [Low level ≥ frame time].
(2) Exposure set to Timed: [High level + Low level ≥ exposure time + frame time].

3.3.3 Synchronization Mode
This mode is not supported on the FL-20BW/Dhyana401D.

When this mode is selected, the camera starts exposure on receiving a level signal. When the next level signal is received, the exposure ends and readout of the image begins while the next exposure starts at the same time, and so on. Both the start of exposure and the readout of each frame are fully synchronized with the external trigger signal. In this mode the exposure time can only be determined by the cycle time of the trigger signal.

When this mode is entered for the first time, a single pulse does not produce an image; the image is produced on the second pulse. With a multi-pulse signal, the first trigger sequence yields one frame fewer than the preset number, and the second yields the preset number of frames.

This mode is very useful for confocal microscopy imaging. For example, it can synchronize the camera's exposure time with the rotation speed of the confocal turntable to eliminate the effect of uneven light.

Clicking Apply is equivalent to entering the mode for the first time: a level signal is then required to start exposure.

Figure 3-6 Synchronization Mode (Edge: Rising)

If the pulse width of the external pulse signal is T1 and the interval between pulses is T2, the exposure time of the image equals T1 + T2. Taking the Dhyana95 camera as an example, the frame rate is 24 fps at full resolution and the time per frame is about 42 ms, so T1 + T2 must be longer than or equal to 42 ms to give each frame enough time for exposure and readout. If T1 + T2 is less than 42 ms, the first frame has not been fully read when the second pulse arrives, so the second pulse is invalid.

Configuration
There is only one configuration in this mode: the exposure time is determined by the width of the input level signal.

Figure 3-7

Edge: choose the edge to which the trigger responds.
Rising Edge: exposure starts when the camera receives a rising edge.
Falling Edge: exposure starts when the camera receives a falling edge.

Note: In all modes, new trigger signals are ignored while an exposure is still in progress.

3.3.4 Global Mode
This mode is not supported on the FL-20BW/Dhyana401D.

When this mode is selected, all pixels are pre-triggered before the camera triggers, and all pixels are exposed simultaneously when the camera receives an external trigger signal. This mode is often used where the light source can be controlled.

Figure 3-8 Global Mode (Exposure: Time / Edge: Rising)

Global exposure level trigger has four characteristics:
1) The camera is pre-exposed, but not scanned.
2) The light source triggers the camera to begin exposure, and the lighting phase must ensure that all lines are in the exposure phase.
3) Exposure time is determined by the level width, and the output data consists of 2 successive frames.
4) It is not a continuous shooting mode.

In global mode a rolling-shutter camera achieves simultaneous exposure of all lines through pre-triggering and cooperation with an external light source. In rolling mode, a line starts exposing as soon as it is reset and continues until readout, and resetting one line takes one line period. In global mode, all lines can be exposed only after all lines have been reset.

Configuration

Figure 3-9

1) Exposure time
Timed: The exposure time is determined by the software setting.
Width: The exposure time is determined by the width of the input level.
2) Activation Edge
Rising Edge: exposure starts when the camera receives a rising edge.
Falling Edge: exposure starts when the camera receives a falling edge.

When Timed mode is selected, the exposure time depends on the setting in the software interface. If the pulse width of the external pulse signal is T1 and the interval between pulses is T2, then T1 + T2 must be longer than the software exposure time plus the sum of the line periods of all lines, so that each frame has enough time for reset, exposure and readout. If T1 + T2 is smaller than that, the first frame has not been fully read out when the second pulse arrives, so the second pulse is invalid.

When Width mode is selected, with pulse width T1 and pulse interval T2, T2 must be longer than or equal to the sum of the line periods of all lines.

Note: Because the sensor does not support a global shutter, this mode is generally not recommended.
● Dhyana400D: T_line = 13 µs and the sum of the line periods of all lines is 2048 × 13 µs = 26.624 ms;
● Dhyana95: T_line = 21 µs and the sum of the line periods of all lines is 2048 × 21 µs ≈ 43 ms;
● Dhyana400BSI: T_line = 14 µs and the sum of the line periods of all lines is 2048 × 14 µs = 28.672 ms;
● Dhyana400BSI V2: T_line = 6.6 µs and the sum of the line periods of all lines is 2048 × 6.6 µs ≈ 13.517 ms.

3.3.5 Software Trigger
In software trigger mode, clicking Snap sends an image acquisition command to the camera. One image is captured each time.

Note:
1) When switching between external trigger mode and real-time free mode, the user needs to click Apply for the new mode to take effect.
2) After selecting the external trigger mode, exposure mode, edge and delay, the user needs to click Apply.

4. Output Trigger

Figure 4-1

Dhyana series cameras (except Dhyana401D) have three trigger output ports, TRIG.OUT1, TRIG.OUT2, and TRIG.OUT3, which correspond to Port1, Port2, and Port3 in the software interface. FL-20BW/Dhyana401D have two trigger output ports, TRIG.OUT0 and TRIG.OUT1, which correspond to Port1 and Port2.

These output signals are on by default and are controlled by software: when the software is turned on, the signal is output. The camera outputs the level signal to a third-party device as that device's input signal. The three signals work independently and can be output to different devices at the same time.

4.1 The Output Level Configuration

Figure 4-2 Diagram of Output Signal

High: Output a high level all the time.
Low: Output a low level all the time.
Exposure Start: A level signal that begins when the first line starts exposure; the width can be customized. Exposure Start is the default mode of Port3.
Readout End: A level signal that begins when the last line starts readout; the width can be customized. Readout End is the default mode of Port1.
Global: A level signal that lasts from when the last line starts exposure until the first line starts readout (the exposure time needs to be greater than the frame time). Global Exposure is the default mode of Port2.

4.2 Edge
Configures the polarity of the output signal.
Rising: The rising edge of the trigger signal is valid.
Falling: The falling edge of the trigger signal is valid.

4.3 Delay
Configures the delay of the output level signal. The default is 0.

4.4 Width
Configures the level width of the pulse. The default width is 5 ms.

Note:
1) When the Global Exposure signal output is selected, the pulse width configuration has no effect and the output follows the actual pulse width.
2) When High or Low signal output is selected, the other configurations have no effect.
3) In streaming mode the Delay and Width values must not be too long, otherwise the next frame signal will be lost.
4) Delay and Width can be configured with microsecond (µs) precision; the Delay range is 0~10 s and the Width range is 1 µs~10 s.
5) When the camera is in external trigger mode, the port can also output the external trigger input signal itself. The delay time can be configured, but the pulse width setting has no effect because the width follows the external trigger input signal.
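The timing constraints quoted in this guide reduce to a few lines of arithmetic, sketched below in Python. This is only a calculator for the rules as stated in the text, not part of the TUCAM API; all function names are hypothetical, and the Dhyana401D input values in the last call are illustrative numbers, not figures from the guide.

```python
def total_line_time_ms(rows, t_line_us):
    """Sum of the line periods of all lines, in milliseconds."""
    return rows * t_line_us / 1000.0


def global_timed_min_period_ms(exposure_ms, rows, t_line_us):
    """Global mode, Timed exposure: T1 + T2 must exceed exposure + all line periods."""
    return exposure_ms + total_line_time_ms(rows, t_line_us)


def fl20bw_min_period_ms(exposure_ms, readout_ms):
    """FL-20BW standard trigger: period >= exposure time + readout time."""
    return exposure_ms + readout_ms


def dhyana401d_min_period_ms(exposure_ms, readout_ms):
    """Dhyana401D standard trigger: period >= the greater of exposure and readout."""
    return max(exposure_ms, readout_ms)


# Line-period sums for the 2048-line sensors listed in the guide.
for name, t_line_us in [("Dhyana400D", 13), ("Dhyana95", 21),
                        ("Dhyana400BSI", 14), ("Dhyana400BSI V2", 6.6)]:
    print(f"{name}: {total_line_time_ms(2048, t_line_us):.3f} ms")

# FL-20BW example from the guide: 50 ms exposure, 125 ms readout.
print(fl20bw_min_period_ms(50, 125))       # 175
# Same numbers applied to the Dhyana401D rule (illustrative values only).
print(dhyana401d_min_period_ms(50, 125))   # 125
```

In Width mode for global triggering only the low time T2 is constrained, so `total_line_time_ms` alone gives the minimum pulse interval.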

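simgetdetections return parameters: overview and explanation

1. Introduction

The overview section can introduce the background and basic concepts behind the topic of simgetdetections return parameters.

It can be developed along the following lines.

First, introduce what the simgetdetections API does, what it is for, and in which scenarios it is used.

simgetdetections is a visual recognition API that detects and recognizes target objects in an image and returns the corresponding detection results.

It can be applied in many practical scenarios, such as face recognition, license plate recognition, and object detection, and provides basic functional support for those applications.

Second, explain why the return parameters of simgetdetections matter.

When the simgetdetections interface is used, the return parameters carry detailed information about the detection results, such as object class, position coordinates, and confidence.

These return parameters are of great practical value: they help users assess and process the target objects in an image and implement more complex application requirements.

Then, briefly describe the basic structure and composition of the simgetdetections return parameters.

In general, the result returned by simgetdetections is structured data, usually represented in JSON format.

It may contain the class label of each object, its position coordinates, and the associated confidence score.

By parsing these return parameters one by one, users can obtain the specific features and attributes of each target object and build the corresponding application logic.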
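Parsing such a structured result might look like the Python sketch below. Because the text does not specify the actual response schema, everything here is an assumption made for illustration: the `detections` key, the `label`, `bbox` and `confidence` field names, the `[x, y, width, height]` box layout, and the helper `parse_detections` are all hypothetical.

```python
import json

# Hypothetical response body; the real field names are not given in the text.
sample_response = """
{"detections": [
  {"label": "face", "bbox": [34, 50, 120, 160], "confidence": 0.92},
  {"label": "license_plate", "bbox": [200, 310, 90, 40], "confidence": 0.41}
]}
"""


def parse_detections(payload, min_confidence=0.5):
    """Decode the JSON text and keep detections at or above min_confidence."""
    data = json.loads(payload)
    kept = []
    for det in data.get("detections", []):
        if det["confidence"] >= min_confidence:
            x, y, w, h = det["bbox"]          # assumed [x, y, width, height]
            kept.append({"label": det["label"],
                         "box": (x, y, w, h),
                         "confidence": det["confidence"]})
    return kept


for det in parse_detections(sample_response):
    print(det["label"], det["box"], det["confidence"])
```

Filtering on the confidence value is one common way to turn raw detections into application decisions; the 0.5 cut-off is an arbitrary default chosen for the sketch.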

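Finally, point out that the following sections describe the content and use of the simgetdetections return parameters in more detail.

The structure of the article and the content of later sections can also be mentioned briefly, so that readers know what to expect.

Covering the points above completes section 1.1, the overview.

It can be extended and supplemented as actual needs and circumstances require.

1.2 Article structure

The structure of an article is the way its content is organized and arranged.

A good structure helps readers follow the logic and topic of the article and helps the author express views and arguments clearly.

This article presents its content in the following structure.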

Graph-WaveNet: Generating the Training Data, with Annotated Code

1. Obtaining the training data

1. Building the adjacency matrix

Running gen_adj_mx.py produces adj_mx.pkl. The file stores a list object [sensor_ids (list of sensor ids), sensor_id_to_ind (dict mapping sensor id to sensor index), adj_mx (adjacency matrix, a NumPy array of shape [207, 207])]. Note that the script needs the node distance file distances_la_2012.csv and the node id file graph_sensor_ids.txt. The annotated code is:

    import argparse
    import pickle

    import numpy as np
    import pandas as pd


    def get_adjacency_matrix(distance_df, sensor_ids, normalized_k=0.1):
        """
        :param distance_df: DataFrame of distances with three columns: [from, to, distance]
        :param sensor_ids: list of sensor ids
        :param normalized_k: threshold; adjacency entries below this value are set to 0
        :return: 1. sensor_ids, the list of sensor ids
                 2. sensor_id_to_ind, a dict mapping sensor id to sensor index
                 3. adj_mx, the adjacency matrix, a NumPy array of shape [207, 207]
        """
        num_sensors = len(sensor_ids)
        dist_mx = np.zeros((num_sensors, num_sensors), dtype=np.float32)
        dist_mx[:] = np.inf
        # Build the id-to-index dict, e.g. {'773869': 0, '767541': 1, '767542': 2}
        sensor_id_to_ind = {}
        for i, sensor_id in enumerate(sensor_ids):
            sensor_id_to_ind[sensor_id] = i
        # Fill the distance matrix dist_mx. An entry is written only when both
        # the "from" sensor id and the "to" sensor id are in the id list.
        for row in distance_df.values:
            if row[0] not in sensor_id_to_ind or row[1] not in sensor_id_to_ind:
                continue
            dist_mx[sensor_id_to_ind[row[0]], sensor_id_to_ind[row[1]]] = row[2]
        # Build the adjacency matrix adj_mx from the standard deviation of all
        # finite entries of the distance matrix. The result is NOT symmetric,
        # and each node's value to itself is 1, e.g.:
        # [[1.0000000e+00 0.0000000e+00 0.0000000e+00 0.0000000e+00 0.0000000e+00]
        #  [0.0000000e+00 1.0000000e+00 3.9095539e-01 1.7041542e-05 1.6665361e-05]
        #  [0.0000000e+00 7.1743792e-01 1.0000000e+00 6.9196528e-04 6.8192312e-04]
        #  [0.0000000e+00 9.7330502e-04 2.4218364e-06 1.0000000e+00 6.3372165e-01]
        #  [0.0000000e+00 1.4520076e-03 4.0022201e-06 6.2646437e-01 1.0000000e+00]]
        distances = dist_mx[~np.isinf(dist_mx)].flatten()
        std = distances.std()
        adj_mx = np.exp(-np.square(dist_mx / std))
        # Make the adjacent matrix symmetric by taking the max.
        # adj_mx = np.maximum.reduce([adj_mx, adj_mx.T])
        # Set the entries below the threshold to 0, e.g.:
        # [[1.         0.         0.         0.         0.        ]
        #  [0.         1.         0.3909554  0.         0.        ]
        #  [0.         0.7174379  1.         0.         0.        ]
        #  [0.         0.         0.         1.         0.63372165]
        #  [0.         0.         0.         0.62646437 1.        ]]
        adj_mx[adj_mx < normalized_k] = 0
        print(adj_mx[:5, :5])
        return sensor_ids, sensor_id_to_ind, adj_mx


    if __name__ == '__main__':
        # Arguments:
        # sensor_ids_filename: path of the sensor id file
        # distances_filename: path of the sensor distance file
        # output_pkl_filename: path of the output adjacency matrix file
        # normalized_k: sparsity threshold (see get_adjacency_matrix)
        parser = argparse.ArgumentParser()
        parser.add_argument('--sensor_ids_filename', type=str, default='data/sensor_graph/graph_sensor_ids.txt',
                            help='File containing sensor ids separated by comma.')
        parser.add_argument('--distances_filename', type=str, default='data/sensor_graph/distances_la_2012.csv',
                            help='CSV file containing sensor distances with three columns: [from, to, distance].')
        parser.add_argument('--normalized_k', type=float, default=0.1,
                            help='Entries that become lower than normalized_k after normalization are set to zero for sparsity.')
        parser.add_argument('--output_pkl_filename', type=str, default='data/sensor_graph/adj_mx.pkl',
                            help='Path of the output file.')
        args = parser.parse_args()
        # Read the sensor ids into the list sensor_ids
        with open(args.sensor_ids_filename) as f:
            sensor_ids = f.read().strip().split(',')
        # Read the CSV distance file. The data looks like:
        #       from       to    cost
        # 0  1201054  1201054     0.0
        # 1  1201054  1201066  2610.9
        # 2  1201054  1201076  2822.7
        # 3  1201054  1201087  2911.5
        # 4  1201054  1201100  7160.1
        distance_df = pd.read_csv(args.distances_filename, dtype={'from': 'str', 'to': 'str'})
        normalized_k = args.normalized_k
        _, sensor_id_to_ind, adj_mx = get_adjacency_matrix(distance_df, sensor_ids, normalized_k)
        # Save the return values as a list object. protocol=2 selects pickle
        # protocol version 2 (a binary format); the pickle module serializes
        # and deserializes Python objects.
        with open(args.output_pkl_filename, 'wb') as f:
            pickle.dump([sensor_ids, sensor_id_to_ind, adj_mx], f, protocol=2)

2. Generating the training, validation, and test sets

Run the following commands in a terminal:

    # METR-LA
    python generate_training_data.py --output_dir=data/METR-LA --traffic_df_filename=data/metr-la.h5
    # PEMS-BAY
    python generate_training_data.py --output_dir=data/PEMS-BAY --traffic_df_filename=data/pems-bay.h5

(Figure showing the dimensions of the generated datasets omitted.)

The annotated code of this script is:

    import argparse
    import os
    import sys

    import numpy as np
    import pandas as pd


    def generate_graph_seq2seq_io_data(df, x_offsets, y_offsets, add_time_in_day=True,
                                       add_day_in_week=False, scaler=None):
        """
        Generate the input and output data, with shape
        [num samples, sequence length (12), num nodes (207), num features (2)];
        see generate_train_val_test for details.
        :param df:
        :param x_offsets: index offsets of the input data
        :param y_offsets: index offsets of the output data
        :param add_time_in_day: express the time as a fraction of a day; see generate_train_val_test
        :param add_day_in_week:
        :param scaler:
        :return: the input and output data
        # x: (epoch_size, input_length, num_nodes, input_dim)
        # y: (epoch_size, output_length, num_nodes, output_dim)
        """
        num_samples, num_nodes = df.shape
        # Expand the 2-D array [M, 207] to 3-D [M, 207, 1]. The data looks like:
        # [[[64.375], [67.125], ..., [61.875]],
        #  ...,
        #  [[55.88888889], [68.44444444], [62.875]]]
        data = np.expand_dims(df.values, axis=-1)
        feature_list = [data]
        if add_time_in_day:
            # Convert each timestamp to a fraction of a day, e.g.
            # [0. 0.00347222 0.00694444 0.01041667 0.01388889 0.01736111
            #  0.02083333 0.02430556 0.02777778 0.03125]
            time_ind = (df.index.values - df.index.values.astype("datetime64[D]")) / np.timedelta64(1, "D")
            time_in_day = np.tile(time_ind, [1, num_nodes, 1]).transpose((2, 1, 0))
            feature_list.append(time_in_day)
        if add_day_in_week:
            dow = df.index.dayofweek
            dow_tiled = np.tile(dow, [1, num_nodes, 1]).transpose((2, 1, 0))
            feature_list.append(dow_tiled)
        # The time of each sample is now part of the features, so every node
        # carries speed + time and the shape becomes [M, 207, 2], e.g.:
        # [[[6.43750000e+01 0.0000], [6.76250000e+01 0.0000], [6.71250000e+01 0.0000], ..., [5.92500000e+01 0.0000]],
        #  ...,
        #  [[6.90000000e+01 0.99653], [6.18750000e+01 0.99653], ..., [6.26666667e+01 0.99653]]]
        data = np.concatenate(feature_list, axis=-1)
        x, y = [], []
        min_t = abs(min(x_offsets))
        max_t = abs(num_samples - abs(max(y_offsets)))  # Exclusive
        for t in range(min_t, max_t):  # t is the index of the last observation.
            x.append(data[t + x_offsets, ...])
            y.append(data[t + y_offsets, ...])
        x = np.stack(x, axis=0)
        y = np.stack(y, axis=0)
        return x, y


    def generate_train_val_test(args):
        """
        Generate the training, validation, and test sets from the arguments,
        in the ratio 0.7 : 0.1 : 0.2, and save them in the METR-LA folder.
        The final datasets have shape [M, 12, 207, 2]. M is the number of
        samples and [12, 207, 2] is one input or output item: 12 is the length
        of the time sequence, 207 the number of nodes, and 2 the number of node
        features (speed and the time of the reading).
        The time is expressed as a fraction of a day, so 00:05 on August 12 and
        00:05 on August 13 are the same value: 5/60/24 = 0.0034722 days.
        :param args: arguments, including the length of the history sequence and
                     the length of the prediction horizon, in units of 5 minutes
        :return: None
        """
        # Lengths of the input sequence and of the predicted output sequence
        seq_length_x, seq_length_y = args.seq_length_x, args.seq_length_y
        # Read metr-la.h5. Columns are sensor node ids and the row index is the
        # time, in 5-minute steps, e.g.:
        #                         773869     767541  ...     718141  769373
        # 2012-03-01 00:00:00  64.375000  67.625000  ...  69.000000  61.875
        # 2012-03-01 00:05:00  62.666667  68.555556  ...  68.444444  62.875
        # 2012-03-01 00:10:00  64.000000  63.750000  ...  69.857143  62.000
        # 2012-03-01 00:15:00   0.000000   0.000000  ...   0.000000   0.000
        # 2012-03-01 00:20:00   0.000000   0.000000  ...   0.000000   0.000
        df = pd.read_hdf(args.traffic_df_filename)
        # Index offsets of the input data, a 1-D NumPy array:
        # [-11 -10 -9 -8 -7 -6 -5 -4 -3 -2 -1 0].
        # This expression is more complicated than it needs to be;
        # np.arange(-(seq_length_x - 1), 1, 1) alone should be enough.
        x_offsets = np.sort(np.concatenate((np.arange(-(seq_length_x - 1), 1, 1),)))
        # Index offsets of the output data (the prediction labels),
        # a 1-D NumPy array: [1 2 ... 12]
        y_offsets = np.sort(np.arange(args.y_start, (seq_length_y + 1), 1))
        # Build the input data x and the matching label data y
        # x: (num_samples, input_length, num_nodes, input_dim)
        # y: (num_samples, output_length, num_nodes, output_dim)
        x, y = generate_graph_seq2seq_io_data(
            df,
            x_offsets=x_offsets,
            y_offsets=y_offsets,
            add_time_in_day=True,
            add_day_in_week=args.dow,
        )
        print("x shape: ", x.shape, ", y shape: ", y.shape)
        # Save the data to .npz files with NumPy. Each file holds the input
        # data, the label data, and the input and label index offsets. The
        # offsets are as described above, reshaped to 2-D arrays of shape [12, 1].
        num_samples = x.shape[0]
        num_test = round(num_samples * 0.2)
        num_train = round(num_samples * 0.7)
        num_val = num_samples - num_test - num_train
        x_train, y_train = x[:num_train], y[:num_train]
        x_val, y_val = (
            x[num_train: num_train + num_val],
            y[num_train: num_train + num_val],
        )
        x_test, y_test = x[-num_test:], y[-num_test:]
        for cat in ["train", "val", "test"]:
            _x, _y = locals()["x_" + cat], locals()["y_" + cat]
            print(cat, "x: ", _x.shape, "y:", _y.shape)
            np.savez_compressed(
                os.path.join(args.output_dir, f"{cat}.npz"),
                x=_x,
                y=_y,
                x_offsets=x_offsets.reshape(list(x_offsets.shape) + [1]),
                y_offsets=y_offsets.reshape(list(y_offsets.shape) + [1]),
            )


    if __name__ == "__main__":
        parser = argparse.ArgumentParser()
        # Arguments:
        # output_dir: path of the output folder
        # traffic_df_filename: path of the traffic data file. The file is like
        #     an Excel sheet with 207 columns labelled by sensor id and 34249
        #     rows indexed by time in 5-minute steps; the values appear to be speeds.
        # seq_length_x: length of the input sequence, 12 by default, i.e. the
        #     hour of history before the current time
        # seq_length_y: length of the output sequence, 12 by default, i.e. a
        #     prediction for the next hour
        parser.add_argument("--output_dir", type=str, default="data/METR-LA", help="Output directory.")
        parser.add_argument("--traffic_df_filename", type=str, default="data/metr-la.h5", help="Raw traffic readings.")
        parser.add_argument("--seq_length_x", type=int, default=12, help="Sequence Length.")
        parser.add_argument("--seq_length_y", type=int, default=12, help="Sequence Length.")
        parser.add_argument("--y_start", type=int, default=1, help="Y pred start")
        parser.add_argument("--dow", action='store_true')
        args = parser.parse_args()
        # Ask before overwriting an existing output folder (not essential)
        if os.path.exists(args.output_dir):
            reply = str(input(f'{args.output_dir} exists. Do you want to overwrite it? (y/n)')).lower().strip()
            if not reply.startswith('y'):
                sys.exit(0)
        else:
            os.makedirs(args.output_dir)
        # Generate the training data
        generate_train_val_test(args)
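The two preprocessing steps above can be tried on toy data. The following is a minimal sketch, not the scripts themselves: the helper names `gaussian_adjacency` and `sliding_windows` and all the numbers are made up for illustration, and only NumPy is assumed. It applies the same thresholded Gaussian kernel to a 3-node distance matrix and the same offset-based windowing to a 10-step series.

```python
import numpy as np


def gaussian_adjacency(dist_mx, threshold=0.1):
    """exp(-(d / std)^2) over the matrix, with entries below `threshold` set to 0."""
    finite = dist_mx[~np.isinf(dist_mx)]
    std = finite.std()  # std of all finite distances, as in get_adjacency_matrix
    adj = np.exp(-np.square(dist_mx / std))
    adj[adj < threshold] = 0
    return adj


def sliding_windows(data, x_offsets, y_offsets):
    """Cut a [T, ...] array into (x, y) pairs using index offsets around each t."""
    min_t = abs(min(x_offsets))
    max_t = data.shape[0] - abs(max(y_offsets))  # exclusive upper bound
    x = np.stack([data[t + x_offsets, ...] for t in range(min_t, max_t)])
    y = np.stack([data[t + y_offsets, ...] for t in range(min_t, max_t)])
    return x, y


# Toy distance matrix (hypothetical values); inf marks pairs with no recorded distance.
dist = np.array([[0.0, 100.0, np.inf],
                 [120.0, 0.0, 5000.0],
                 [np.inf, 5000.0, 0.0]], dtype=np.float32)
adj = gaussian_adjacency(dist)

# Toy series: 10 time steps, 2 nodes, 1 feature; 3 input steps and 2 output steps.
data = np.arange(20, dtype=np.float32).reshape(10, 2, 1)
x_offsets = np.arange(-2, 1)  # [-2, -1, 0]
y_offsets = np.arange(1, 3)   # [1, 2]
x, y = sliding_windows(data, x_offsets, y_offsets)
print(x.shape, y.shape)  # (6, 3, 2, 1) (6, 2, 2, 1)
```

In the toy matrix, close pairs keep a weight near 1, the distant pair and the missing pairs drop to 0, and the matrix stays asymmetric because the 100 and 120 entries differ. The windowing yields T - 2 - 2 = 6 samples, which mirrors how the real data loses 11 + 12 rows at the edges.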

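Tess module: Chinese-English reference

The Tess module (the Tesseract engine, used from Python through pytesseract) is a powerful optical character recognition (OCR) engine that converts text in images into editable text.

It can extract text from printed material, handwriting, and other sources, although accuracy is generally best on clean printed text.

This article introduces some commonly used features of the Tess module and their English names.

First, the basic usage of the Tess module.

To use it, install it and import the library.

In Python, the wrapper can be installed with the following command (the Tesseract OCR engine itself has to be installed separately):

```
pip install pytesseract
```

After installation, import the module with:

```
import pytesseract
```

Next, the `image_to_string` function recognizes the text in an image.

It is used as follows:

```
text = pytesseract.image_to_string(image)
```

Here `image` is the image to recognize, either a file path or an image object.

`text` is the recognized text.

Besides basic text recognition, the Tess module offers other features.

For example, the `image_to_boxes` function returns the bounding boxes of the recognized text.

It is used as follows:

```
boxes = pytesseract.image_to_boxes(image)
```

`boxes` is a string of bounding-box information. Each line describes one box, giving the recognized character together with its position and extent.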
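The string returned by `image_to_boxes` is easier to work with once parsed. The sketch below assumes the usual Tesseract box layout, one `char left bottom right top page` record per line with the origin at the bottom-left of the image. The `CharBox` type, the `parse_boxes` helper, and the sample string are hypothetical and written for illustration; the sample is not output from a real image.

```python
from typing import List, NamedTuple


class CharBox(NamedTuple):
    char: str
    left: int
    bottom: int
    right: int
    top: int
    page: int


def parse_boxes(boxes: str) -> List[CharBox]:
    """Parse box-format text: one 'char left bottom right top page' record per line."""
    result = []
    for line in boxes.splitlines():
        # Split from the right so the recognized symbol stays in one piece.
        parts = line.rsplit(" ", 5)
        if len(parts) != 6:
            continue  # skip blank or malformed lines
        char, *nums = parts
        left, bottom, right, top, page = map(int, nums)
        result.append(CharBox(char, left, bottom, right, top, page))
    return result


# Made-up sample in the box format, standing in for pytesseract.image_to_boxes(image).
sample = "H 10 20 30 60 0\ni 34 20 40 58 0\n"
parsed = parse_boxes(sample)
widths = [b.right - b.left for b in parsed]
heights = [b.top - b.bottom for b in parsed]
print(parsed[0], widths, heights)
```

Because the origin is at the bottom-left, drawing these boxes with a library that uses a top-left origin requires flipping the vertical coordinates against the image height.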

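The Tess module also supports recognizing text in many languages.

The `lang` parameter of `image_to_string` selects the language to recognize.

For example, to recognize Chinese text:

```
text = pytesseract.image_to_string(image, lang='chi_sim')
```

Here `chi_sim` is the language code for Simplified Chinese; the matching language data must be installed for Tesseract.

The Tess module supports other languages as well; their codes are listed in the official documentation.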

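GAMES101 - Modern Computer Graphics Study Notes (Assignment 07)

Assignment 07

Original course video link and official Bilibili video link: .

Course website link: .

Assignment

Assignment description

In the previous exercises we implemented the Whitted-style ray tracing algorithm and used acceleration structures such as BVH to speed up intersection tests.

In this assignment we build on the previous one to implement the complete Path Tracing algorithm.

With this, we reach the last part of the ray tracing unit: implementing ray tracing.

The code for this assignment is organized as follows:

· Triangle.hpp: paste your ray-triangle intersection function here, copying what you implemented in the previous assignment directly.

· IntersectP(const Ray& ray, const Vector3f& invDir, const std::array<int, 3>& dirIsNeg) in Bounds3.hpp: this function determines whether the bounding box (BoundingBox) intersects the ray. Paste what you implemented in the previous assignment here, and check that the case t_enter = t_exit is handled correctly.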
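The bounding-box test mentioned in the last item is commonly written as a slab test. The sketch below is in Python rather than the assignment's C++ and is not the course's reference solution. The names `make_ray` and `intersect_p` are hypothetical, `dir_is_neg[axis]` is assumed to be true when that direction component is negative, and all direction components are assumed non-zero. It treats t_enter == t_exit as a hit, which is one reasonable reading of the note about that case.

```python
def make_ray(direction):
    """Precompute the reciprocal direction and sign flags (components must be non-zero)."""
    inv_dir = tuple(1.0 / d for d in direction)
    dir_is_neg = tuple(d < 0 for d in direction)
    return inv_dir, dir_is_neg


def intersect_p(origin, inv_dir, dir_is_neg, p_min, p_max):
    """Slab test: does the ray origin + t * dir, t >= 0, touch the box [p_min, p_max]?"""
    t_enter = float("-inf")
    t_exit = float("inf")
    for axis in range(3):
        t_min = (p_min[axis] - origin[axis]) * inv_dir[axis]
        t_max = (p_max[axis] - origin[axis]) * inv_dir[axis]
        if dir_is_neg[axis]:
            # A negative direction reaches the far plane first, so swap the pair.
            t_min, t_max = t_max, t_min
        t_enter = max(t_enter, t_min)  # latest entry over the three slabs
        t_exit = min(t_exit, t_max)    # earliest exit over the three slabs
    # "<=" keeps the grazing case t_enter == t_exit; t_exit >= 0 rejects boxes behind the ray.
    return t_enter <= t_exit and t_exit >= 0


box_min, box_max = (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)

inv, neg = make_ray((1.0, 0.1, 0.1))
hit_through = intersect_p((-1.0, 0.5, 0.5), inv, neg, box_min, box_max)

inv, neg = make_ray((-1.0, 0.1, 0.1))
hit_behind = intersect_p((-1.0, 0.5, 0.5), inv, neg, box_min, box_max)

inv, neg = make_ray((1.0, 1.0, 0.001))
hit_grazing = intersect_p((-1.0, 0.0, 0.5), inv, neg, box_min, box_max)

print(hit_through, hit_behind, hit_grazing)
```

The third ray only touches the box edge at x = 0, y = 1, so its entry and exit parameters coincide. With a strict `<` comparison that ray would be reported as a miss.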