基于浮点运算的复杂度分析

- 格式:pdf

- 大小:120.73 KB

- 文档页数:16

大数算法之大数加减法-概述说明以及解释1.引言1.1 概述概述部分:大数算法是指用于处理超过计算机所能直接表示的整数的算法。

在日常生活和工程领域中,经常会遇到需要进行大数计算的情况,例如金融领域的精确计算、密码学的加密算法等。

大数加减法是其中最基本且常见的运算,本文将着重介绍大数加减法的算法原理和步骤。

大数加法指的是对两个甚至更多的大整数进行相加运算。

由于计算机的数值范围有限,无法直接处理超出其表示范围的大整数。

因此,为了进行大数加法,需要借助特定的算法来实现。

大数减法则是对两个大整数进行相减运算。

同样地,由于计算机的表示范围有限,需要采用特殊的算法来处理超出范围的运算。

本文旨在探讨大数加减法的算法原理和步骤,并分析它们在实际应用中的重要性和应用场景。

同时,还将对大数算法的优缺点进行分析,并探讨改进方向,以提升其执行效率和精确度。

通过深入了解和学习大数加减法算法,读者将能够更好地理解和应用这些算法,为解决实际问题提供有效的数值计算方法。

在大数据处理和科学计算等领域,大数算法扮演着至关重要的角色,因此对其进行研究和了解具有重要的实际意义。

1.2文章结构1.2 文章结构本文按照以下结构进行阐述大数加减法的相关内容:1. 引言:介绍大数算法的背景和概述,引发读者对大数算法的兴趣。

2. 正文:2.1 大数加法:- 介绍大数加法的概念和应用:说明大数加法在实际生活和计算中的重要性,例如在金融、科学计算、数据处理等领域的应用案例。

强调大数加法的特点,即能够运算超出常规整数范围的数值。

- 大数加法的算法原理和步骤:详细说明大数加法的运算原理和具体步骤。

包括进位的处理、借位的处理以及运算过程中的数值对齐等关键步骤。

分析各种特殊情况,例如加法中出现不同位数的数值、进位和借位的处理等。

2.2 大数减法:- 介绍大数减法的概念和应用:阐述大数减法在实际生活和计算中的应用场景。

例如在金融领域的差值计算、减法运算超过常规整数范围的数值等。

在C++ 中,浮点数精度问题是由于计算机内部使用二进制表示浮点数,而不是十进制,因此导致某些十进制小数无法精确表示为二进制小数的结果。

这可能会在某些情况下引起舍入误差或不准确的计算结果。

以下是一些浮点数精度问题的常见示例和解决方法:舍入误差:浮点数的表示是有限的,因此在进行一系列计算时,可能会产生小的舍入误差。

这种误差通常不可避免,但可以通过适当的算法来减小其影响。

double result = 0.1 + 0.2; // 结果可能不等于0.3,因为0.1 和0.2 在二进制中无法精确表示解决方法:使用整数计算,或者使用针对浮点数精度的算法,如Kahan算法来减小舍入误差。

比较浮点数:由于舍入误差,使用== 比较浮点数是否相等可能不可靠。

两个看似相等的浮点数可能由于精度问题而不相等。

double a = 0.1 + 0.2;double b = 0.3;if (a == b) {// 这可能不会执行,因为a 和b 可能不相等}解决方法:比较浮点数时,应使用一个容忍的误差范围,例如fabs(a - b) < epsilon,其中epsilon 是足够小的正数。

数值溢出和下溢:在进行大量浮点数计算时,可能会发生数值溢出(结果太大而无法表示)或下溢(结果太小而变为零)的问题。

解决方法:了解浮点数范围,并在可能发生溢出的情况下采取适当的措施,如使用更高精度的数据类型。

精度丢失:进行多次浮点数运算时,每次运算都可能导致精度丢失,最终结果可能不准确。

解决方法:尽量减少连续浮点数运算,或使用高精度的数学库。

C++标准库提供了<cmath> 头文件中的一些函数,如fabs() 和round(),可用于处理浮点数精度问题。

此外,如果需要更高精度的计算,可以考虑使用C++ 中的long double 或引入外部数学库,如Boost.Multiprecision 等。

但请注意,高精度计算可能会导致性能损失。

单片机是一种微型计算机,它的运算能力很强,但是在处理浮点数时,精度会受到一定的影响。

下面就来详细介绍一下单片机浮点数精度的问题。

一、单片机浮点数的表示方法单片机在处理浮点数时,一般采用IEEE 754标准的浮点数表示方法。

这种方法将浮点数分为三部分:符号位、指数位和尾数位。

其中,符号位用来表示浮点数的正负,指数位用来表示浮点数的大小,尾数位用来表示浮点数的精度。

二、单片机浮点数精度的问题由于单片机的运算能力受限,所以在处理浮点数时,精度会受到一定的影响。

这主要是因为单片机在进行浮点数运算时,需要将浮点数转换成二进制数,然后再进行计算。

而在这个过程中,会出现精度损失的情况。

三、单片机浮点数的舍入规则在进行浮点数运算时,单片机需要进行舍入操作。

舍入规则一般有四种,分别是向零舍入、向正无穷舍入、向负无穷舍入和四舍五入。

其中,向零舍入是最简单的一种舍入规则,它直接将小数部分舍去。

而向正无穷舍入和向负无穷舍入则是将小数部分分别向上和向下取整。

四舍五入则是将小数部分四舍五入。

四、单片机浮点数的精度控制方法要提高单片机浮点数的精度,一般有两种方法。

一种方法是增加浮点数的位数,这样可以提高浮点数的精度,但是会增加计算的复杂度和存储的空间。

另一种方法是采用高精度算法,这种算法可以在保证精度的同时减少计算的复杂度和存储的空间。

五、单片机浮点数精度的应用单片机浮点数精度的应用范围很广,主要涉及到科学计算、工程计算、金融计算等方面。

比如在控制系统中,需要对传感器采集到的数据进行处理,这时就需要使用浮点数进行运算。

又比如在金融领域,需要对复杂的财务数据进行分析和计算,这时也需要使用浮点数进行运算。

总之,单片机浮点数精度是一个很重要的问题,对于单片机的应用具有很大的影响。

在实际应用中,我们需要根据具体的情况选择合适的浮点数表示方法和精度控制方法,以达到最优的计算效果。

c++ 浮点数精度问题C++是一种非常流行的编程语言,它支持浮点数数据类型来存储小数。

然而,浮点数在计算机中是以有限的位数来表示的,因此存在精度问题。

本文将探讨C++中浮点数的精度问题,以及如何避免这些问题。

C++中的浮点数主要有两种类型:float和double。

float类型通常占用4个字节,而double类型通常占用8个字节。

虽然double类型提供了更高的精度,但它仍然存在精度问题。

一个常见的问题是浮点数的精度丢失。

例如,当对两个浮点数进行加减乘除运算时,可能会得到一个与预期结果有很大差异的值。

这是因为浮点数在计算机中是以二进制形式表示的,而许多小数无法被准确表示为有限的二进制分数。

这种差异通常是由于舍入误差、截断误差和浮点数溢出导致的。

这些问题都会导致计算结果的不准确性。

另一个精度问题是比较浮点数的相等性。

在C++中,使用==操作符比较两个浮点数是否相等可能会得到错误的结果。

这是因为浮点数很难完全相等,即使它们看起来相等,由于精度问题,它们的二进制表示可能略有不同。

因此,通常应该使用一个误差范围来判断两个浮点数是否相等。

为了避免浮点数精度问题,有一些常见的做法。

首先,应该尽量避免在浮点数之间进行相等性比较,而应使用一个误差范围来判断它们的相等性。

其次,可以使用double类型代替float类型,以提高精度。

另外,可以考虑使用整数来表示固定小数点数,可以避免浮点数精度问题。

另一个解决方法是避免在浮点数上进行迭代运算。

迭代运算会导致误差累积,最终使得浮点数的精度变得不可靠。

如果必须进行迭代运算,可以考虑使用累加器或更高精度的浮点数类型来减少误差。

此外,C++标准库中也提供了一些函数来处理浮点数精度问题。

例如,可以使用std::round()函数来对浮点数进行四舍五入。

另外,还可以使用std::numeric_limits<>模板类来获取浮点数的最大误差范围,以便进行相等性比较。

低复杂度混合基FFT研究与设计快速傅里叶变换(Fast Fourier Transform, FFT)算法是雷达微波探测、通信及图像等领域的核心处理算法, 也是相关处理算法中运算量较大的部分。

但针对合成孔径雷达(Synthetic Aperture Radar, SAR)以及正交频分复用技术(Orthogonal FrequencyDivision Multiplexing, OFDM)应用, 现有FFT数字处理实现方法存在处理长度不灵活、处理器资源浪费严重、处理延迟大等问题。

因此, 研究资源节约、高时效的低复杂度混合基FFT设计技术具有重要的应用价值。

本文通过对各种FFT算法进行分析比较, 提出了低复杂度混合基FFT设计方法。

首先研究了基本蝶形单元的硬件实现方法, 在此基础上, 研究了混合基FFT的低复杂度设计方法以及基于多存储结构的FFT设计方法。

上述研究方法降低了FFT算法在数字电路中实现的复杂度, 提高了FFT处理的实时性。

主要工作和创新成果如下: 1.作为混合基FFT的一种特例, 有必要对固定基FFT进行研究。

现有FFT实现方法通常采用补零方式来满足基-2或基-4FFT, 该方法的不足之处在于浪费存储资源、计算时间长。

针对这一问题, 研究了基于单精度浮点运算的以基-3和基-5蝶形单元为代表的小面积基-rFFT设计方法。

同时, 为了减少占用的存储资源, 在保证精度的前提下用定点格式来表示旋转因子, 设计了一种有效的、高精度的乘法器, 以达到定点存储旋转因子的目的。

2.针对基-rFFT仅限于点数为r的幂次方的情况, 提出了基于原位存储结构的混合基FFT设计。

首先研究了基-r1/r2FFT数据访问地址的生成方案, 该方法通过一个计数器来获得一种低复杂度的地址映射关系。

进一步推导通用混合基, 即基-r1/r2/.../rsFFT数据访问地址的产生方案。

针对混合基FFT中蝶形单元种类增多的问题, 推导出一种可配置的蝶形单元设计方法, 解决了多种蝶形占用大量资源的问题。

浮点数精度问题(一)浮点数精度问题1. 问题背景浮点数(floating-point number)是计算机中用来表示实数的一种数据类型。

由于计算机内部表示浮点数的方式有限,导致浮点数在计算过程中可能会出现精度问题。

2. 问题表现在进行浮点数运算时,可能会出现以下问题:精度丢失由于浮点数的二进制表示形式无法准确表示某些十进制小数,因此在进行浮点数运算时,往往会出现精度丢失的情况。

例如: + =这并不是一个误差,而是由于计算机无法准确表示和所导致的结果。

比较不准确由于浮点数的精度问题,使用不准确的比较运算符(如==)进行浮点数之间的比较时,可能得到错误的结果。

例如:+ == # False上述比较结果为 False,因为计算机内部表示的 + 与的值并不完全相等。

3. 问题解决针对浮点数精度问题,可以采取以下解决措施:使用近似值进行比较由于浮点数的精度限制,无法直接比较两个浮点数的相等性。

因此,可以使用一个较小的误差范围,通过比较它们的差值是否在误差范围内来判断它们是否近似相等。

例如:abs( + - ) < 1e-6 # True上述比较结果为 True,因为差值的绝对值小于给定的误差范围。

使用 Decimal 类型对于需要更高精度的浮点数运算,可以使用 Decimal 类型进行计算。

Decimal 类型提供了更精确的小数运算能力,避免了浮点数精度问题。

例如:from decimal import Decimalx = Decimal('')y = Decimal('')z = Decimal('')x + y == z # True上述比较结果为 True,因为 Decimal 类型能够准确表示、和。

4. 小结浮点数精度问题是由于计算机在表示浮点数时的内部限制导致的。

要解决这一问题,可以通过使用近似值进行比较或使用 Decimal 类型来处理更高精度的运算。

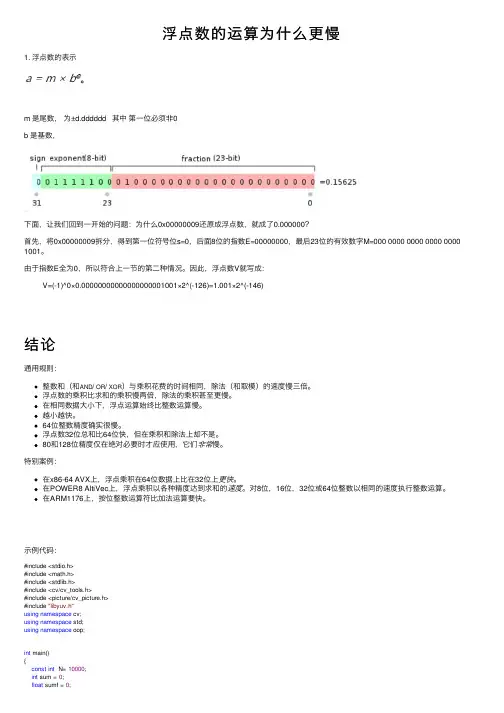

浮点数的运算为什么更慢1. 浮点数的表⽰m 是尾数,为±d.dddddd 其中第⼀位必须⾮0b 是基数,下⾯,让我们回到⼀开始的问题:为什么0x00000009还原成浮点数,就成了0.000000?⾸先,将0x00000009拆分,得到第⼀位符号位s=0,后⾯8位的指数E=00000000,最后23位的有效数字M=000 0000 0000 0000 0000 1001。

由于指数E全为0,所以符合上⼀节的第⼆种情况。

因此,浮点数V就写成:V=(-1)^0×0.00000000000000000001001×2^(-126)=1.001×2^(-146)结论通⽤规则:整数和(和AND/ OR/ XOR)与乘积花费的时间相同,除法(和取模)的速度慢三倍。

浮点数的乘积⽐求和的乘积慢两倍,除法的乘积甚⾄更慢。

在相同数据⼤⼩下,浮点运算始终⽐整数运算慢。

越⼩越快。

64位整数精度确实很慢。

浮点数32位总和⽐64位快,但在乘积和除法上却不是。

80和128位精度仅在绝对必要时才应使⽤,它们⾮常慢。

特别案例:在x86-64 AVX上,浮点乘积在64位数据上⽐在32位上更快。

在POWER8 AltiVec上,浮点乘积以各种精度达到求和的速度。

对8位,16位,32位或64位整数以相同的速度执⾏整数运算。

在ARM1176上,按位整数运算符⽐加法运算要快。

⽰例代码:#include <stdio.h>#include <math.h>#include <stdlib.h>#include <cv/cv_tools.h>#include <picture/cv_picture.h>#include "libyuv.h"using namespace cv;using namespace std;using namespace oop;int main(){const int N= 10000;int sum = 0;float sumf = 0;float nf = 734.0f;int n = 734;timeInit;timeMark("int");for(int j=0;j!=100000;++j){sum = 0;for (int i = 0; i != N; ++i) {sum += n;}}timeMark("float");for (int j = 0; j != 100000; ++j){sumf = 0;for (int i = 0; i != N; ++i) {sumf += nf;}}timeMark(")");timePrint;printf("sum=%d\nsumf=%.2f\n",sum,sumf);getchar();}输出:( int,float ) : 2107 ms( float,) ) : 3951 mssum=7340000sumf=7340000.00Release:( int,float ) : 0 ms( float,) ) : 1814 mssum=7340000sumf=7340000.00实际上: Debug模式下,两者时间差不了多少,两倍的关系但是Release模式下, int ⼏乎很快就完成了!!说明int型被优化得很好了,float型运算不容易被编译器优化!!!我们在Release模式下,优化设置为O2, 连接器设置为-优化以便于调试查看int 乘法汇编指令:xmm0 表⽰128位的SSE寄存器,可见我们的代码都被优化为SSE指令了!!查看float 汇编代码:感觉⾥⾯也有xmm 等SSE指令集,⾄于为啥int型乘法⽐float乘法快很多,还是有点搞不明⽩,需要详细分析⾥⾯的汇编指令才能搞明⽩⽹上关于这⽅⾯的资料太少了,哎~~我们再看看float 和 int乘法对图像进⾏处理的例⼦:我们把BGR 3个通道分别乘以2 3 4 、 2.0f, 3.0f, 4.0f 然后输出,这⾥我们不考虑溢出的问题,仅仅对乘法的效率进⾏测试设置为Release模式,O2int main(){cv::Mat src = imread("D:/pic/nlm.jpg");//cvtColor(src,src,CV_BGR2GRAY);resize(src,src,Size(3840*2,2160*2));cv::Mat dst0(src.size(), src.type());cv::Mat dst1(src.size(), src.type());int w = src.cols;int h = src.rows;int of3=0;timeInit;timeMark("int");for (int j = 0; j != h; ++j) {for (int i = 0; i != w; ++i) {//int of3 = (j*w + i) * 3;dst0.data[of3 ] = src.data[of3] * 2;dst0.data[of3 + 1] = src.data[of3 + 1] * 3;dst0.data[of3 + 2] = src.data[of3 + 2] * 4;of3+=3;}}timeMark("float");of3=0;for (int j = 0; j != h; ++j) {for (int i = 0; i != w; ++i) {//int of3 = (j*w + i)*3;dst1.data[of3] = src.data[of3] * 2.0f;dst1.data[of3+1] = src.data[of3+1] * 3.0f;dst1.data[of3+2] = src.data[of3+2] * 4.0f;of3 += 3;}}timeMark("end");timePrint;myShow(dst0);myShow(dst1);waitKey(0);}输出:( int,float ) : 149 ms( float,end ) : 173 ms输出图像(分别为原图,dst0,dst1)(截取了⼀部分)可见,时间并差不了多少,但int还是要快⼀点!!这是我看到的另外⼀个帖⼦,⾥⾯讲的float乘法确实⽐较复杂,这可能是它⽐较慢的原因之⼀吧总结⼀下: float运算更慢的原因:1. float运算不容易被编译器优化2. float运算本⾝就慢(但并不⽐int型运算慢多少,⼤约1.3-2倍的样⼦)。

磁记录中极化码低复杂迭代SCAN译码算法研究李桂萍;李聪娜【摘要】通过对连续删除译码算法和置信传播译码算法原理的研究,针对软删除译码算法提出了具有较低译码复杂度和空间复杂度的改进算法.与原软删除译码算法相比,提出的算法可减少译码过程中的浮点运算量,并能减少因子图中为每列节点分配的存储空间,同时具有更快的收敛速度.仿真结果表明,与连续删除译码算法、置信传播译码算法以及原软删除译码算法相比,提出的算法具有更好的译码性能.【期刊名称】《中原工学院学报》【年(卷),期】2018(029)001【总页数】7页(P72-77,82)【关键词】Polar码;信道容量;连续删除译码;置信传播译码;软删除译码【作者】李桂萍;李聪娜【作者单位】西安工业大学计算机科学与工程学院,陕西西安710021;陆军边海防学院科研处,陕西西安710108【正文语种】中文【中图分类】TN911.22极化码(Polar Codes)[1]是理论上能够证明可达信道容量限的一种编码技术,其因具有规则的编码结构、明确的构造方法以及低复杂的编译码算法而成为纠错码领域的研究热点。

在连续删除译码算法SC(Successive Cancellation)下,当码长无限增大时,极化码具有良好的渐进性能。

但是在短码下,极化码的性能却是次优的。

针对这一问题,一些学者提出了许多改进的SC译码算法,如连续删除列表译码算法SCL(Successive Cancellation List)[2]、栈译码SD(Stack Decoding)算法[3]以及自适应SCL译码算法[4]等。

尽管在列表宽度或栈深度足够大时,这些改进译码算法能够达到最大似然(Maximum Likelihood)译码的性能,但是它们都是基于串行译码机制的硬判决译码算法,无法满足高输出通信应用的需求,也无法满足磁记录等场合对软信息的需求。

后来Fayyaz U U等在SC译码算法的基础上提出了软输出连续删除译码算法(Soft Cancellation, SCAN)[5],解决了软信息的输出问题。

广义空间调制系统的正则化OMP检测算法刘晓鸣;景小荣【摘要】在广义空间调制(GSM)系统中,最大似然(ML)检测可以取得最优的检测性能,然而其计算复杂度随激活天线数的增加急剧增长.针对这一问题,提出了一种基于稀疏重构理论的低复杂度检测算法——正则化正交匹配追踪(ROMP)算法.该算法首先根据信道矩阵和当前残差的内积选取多个候选激活天线索引,接着对候选天线索引按正则化标准进行可靠性验证,剔除错误索引,缩小信号的搜索空间,最后通过求解最小二乘问题估计信号.仿真结果表明,与经典的正交匹配追踪(OMP)算法相比,所提算法以少许复杂度的增加为代价极大提升了检测性能,能够在检测性能与复杂度之间取得更好的折中.%The optimal detection of generalized spatial modulation(GSM) system is the Maximum Likelihood(ML)algorithm.However,the computational complexity of ML detection increases significantly with the increase of the number of active antennas.To solve this problem,a low-complexity detection algorithm based on sparse reconstruction,termed as Regularized Orthogonal Matching Pursuit (ROMP) algorithm,is proposed.The algorithm starts by producing an estimate of the plausible active antennas indices according to correlation between the MIMO channels and the residual,then removes wrong indexes based on the regularization criteria and hence the search space is shrunk.Finally,it estimates GSM signal by involving the LS problem.Simulation results show that the proposed algorithm can improve the detection performance at the cost of slightly increased complexity compared with the conventional orthogonal matching pursuit (OMP)detector,and a better tradeoff between the performance and complexity is achieved.【期刊名称】《电讯技术》【年(卷),期】2018(058)001【总页数】6页(P78-83)【关键词】广义空间调制;信号检测;最大似然;正则化正交匹配追踪【作者】刘晓鸣;景小荣【作者单位】重庆邮电大学通信与信息工程学院,重庆400065;重庆邮电大学通信与信息工程学院,重庆400065;重庆邮电大学移动通信技术重庆市重点实验室,重庆400065【正文语种】中文【中图分类】TN911.231 引言多输入多输出[1](Multiple-Input Multiple-Output,MIMO)系统相比于单输入单输出(Single-Input Single-Output,SISO)系统,数据传输速率和链路可靠性都得到了明显提升[2]。

基于FPGA的浮点运算器IP核的设计与实现基于现场可编程门阵列(FPGA)的浮点运算器,是一种专门设计用于实现浮点数运算的IP核。

浮点运算器在科学计算、数字信号处理(DSP)、图像处理等领域中具有广泛的应用。

本文将探讨基于FPGA的浮点运算器IP核的设计与实现。

首先,我们需要确定浮点运算器的功能要求和性能指标。

常见的浮点运算器包括加法器、乘法器和除法器,它们能够进行浮点数的加法、乘法和除法运算。

浮点运算器的性能指标包括浮点数位数、运算精度、时钟频率、吞吐量、功耗等。

然后,我们可以选择合适的FPGA芯片进行设计。

不同的FPGA芯片具有不同的资源和性能特点,我们需要根据浮点运算器的功能需求和性能指标,选择具备足够资源和性能的FPGA芯片。

接下来,我们需要进行浮点运算器的架构设计。

浮点运算器的架构通常分为两个主要部分:浮点数运算单元和控制单元。

浮点数运算单元包括加法器、乘法器和除法器,它们实现具体的浮点数运算操作。

控制单元用于控制浮点数运算的流程和时序。

在浮点数运算单元的设计中,我们需要选择合适的浮点数格式。

常见的浮点数格式有IEEE754和自定义浮点数格式。

IEEE754浮点数格式是最常用的浮点数表示方法,它包括单精度浮点数(32位)、双精度浮点数(64位)和扩展精度浮点数(80位)。

自定义浮点数格式可以根据具体应用需求设计,例如定点数格式、定点数加浮点数格式等。

浮点运算器的设计可以采用各种硬件实现方法,如组合逻辑电路、查找表、乘法器阵列和流水线等。

我们需要根据浮点数运算的复杂度和性能要求选择合适的实现方法。

对于较复杂的浮点数运算,可以采用流水线架构来实现并发计算,提高性能和吞吐量。

在控制单元的设计中,我们需要确定浮点数运算的流程和时序。

控制单元可以采用状态机的方式实现,它根据具体的浮点数运算操作,生成相应的控制信号,控制浮点数运算单元的工作状态和时序。

最后,我们需要进行浮点运算器的验证和测试。

验证和测试是设计中非常重要的环节,它可以帮助我们发现并修复设计中的错误和缺陷。

c++浮点运算精度问题在计算机科学和工程领域中,浮点运算是一种处理实数(包括小数和负数)的数学运算,其精度对于许多应用至关重要,包括科学计算、工程模拟、金融分析等。

然而,浮点运算的精度问题一直是计算机科学领域中的一个重要挑战。

本文将探讨C语言中浮点运算的精度问题及其解决方案。

一、浮点运算精度问题概述在计算机内部,浮点数通常以二进制形式表示,由于二进制表示法固有的限制,浮点运算经常会出现精度问题。

这些问题包括但不限于舍入误差、数值稳定性问题、溢出和下溢等。

这些误差可能会导致计算结果的不准确,甚至可能导致错误的结果。

二、C语言中的浮点运算C语言是一种广泛使用的编程语言,它提供了丰富的浮点运算功能。

在C语言中,浮点数通过双精度(double)数据类型来表示,它可以提供很高的精度。

然而,由于计算机内部的实现细节和算法的复杂性,即使使用双精度数据类型,也可能会出现精度问题。

三、解决方案解决浮点运算精度问题的方法包括但不限于以下几点:1.选择合适的算法:选择适合处理浮点数精度的算法可以大大减少精度问题的影响。

例如,对于大规模的科学计算,使用专门设计的算法和库(如GNUScientificLibrary)可以提供更高的精度。

2.精细控制舍入:在编程中,可以通过精细控制舍入来减少精度问题的影响。

例如,可以使用四舍五入、跳过舍入等方法来控制舍入方向,以减少舍入误差的影响。

3.校准和验证:在计算之前和之后进行校准和验证可以大大减少精度问题的影响。

校准可以通过使用适当的基准测试和验证方法来实现,以确保计算结果的准确性。

4.使用高精度数据类型:对于需要更高精度的应用,可以使用高精度数据类型(如长双精度或大整数)来避免精度问题的影响。

四、结论浮点运算精度问题是一个普遍存在的问题,特别是在使用C语言进行编程时。

通过选择合适的算法、精细控制舍入、校准和验证以及使用高精度数据类型等方法,可以大大减少精度问题的影响,提高计算结果的准确性。

详谈浮点精度(float、double)运算不精确的原因⽬录为什么浮点精度运算会有问题精度运算丢失的解决办法拓展:详解浮点型为什么浮点精度运算会有问题我们平常使⽤的编程语⾔⼤多都有⼀个问题——浮点型精度运算会不准确。

⽐如double num = 0.1 + 0.1 + 0.1;// 输出结果为 0.30000000000000004double num2 = 0.65 - 0.6;// 输出结果为 0.05000000000000004笔者在测试的时候发现 C/C++ 竟然不会出现这种问题,我最初以为是编译器优化,把这个问题解决了。

但是C/C++ 如果能解决其他语⾔为什么不跟进?根据这个问题的产⽣原因来看,编译器优化解决这个问题逻辑不通。

后来发现是打印的⽅法有问题,打印输出⽅法会四舍五⼊。

使⽤ printf("%0.17f\n", num); 以及 cout <<setprecision(17) << num2 << endl; 多打印⼏位⼩数即可看到精度运算不准确的问题。

那么精度运算不准确这是为什么呢?我们接下来就需要从计算机所有数据的表现形式⼆进制说起了。

如果⼤家很了解⼆进制与⼗进制的相互转换,那么就能轻易的知道精度运算不准确的问题原因是什么了。

如果不知道就让我们⼀起回顾⼀下⼗进制与⼆进制的相互转换流程。

⼀般情况下⼆进制转为⼗进制我们所使⽤的是按权相加法。

⼗进制转⼆进制是除2取余,逆序排列法。

很熟的同学可以略过。

// ⼆进制到⼗进制10010 = 0 * 2^0 + 1 * 2^1 + 0 * 2^2 + 0 * 2^3 + 1 * 2^4 = 18// ⼗进制到⼆进制18 / 2 = 9 09 / 2 = 4 (1)4 / 2 = 2 02 / 2 = 1 01 / 2 = 0 (1)10010那么,问题来了⼗进制⼩数和⼆进制⼩数是如何相互转换的呢?⼗进制⼩数到⼆进制⼩数⼀般是整数部分除 2 取余,逆序排列,⼩数部分使⽤乘 2 取整数位,顺序排列。

浮点测试用例-概述说明以及解释1.引言1.1 概述概述:在计算机科学领域,浮点数是一种用于表示有限数量的实数的近似值的数据类型。

浮点数通常由两部分组成:尾数和指数。

由于浮点数是近似表示实数的,因此在进行浮点数运算时可能会出现精度问题,这也是我们需要进行浮点数测试的重要原因之一。

本文将介绍浮点数的基本概念,探讨浮点数测试用例的设计方法,并给出一些实际的测试用例示例。

通过深入了解浮点数的特点和测试方法,我们可以更好地发现和解决浮点数计算中潜在的问题,保证程序的正确性和稳定性。

1.2 文章结构本文将分为引言、正文和结论三部分。

在引言部分,我们将对浮点测试用例进行概述,并介绍文章的结构和目的。

在正文部分,我们将详细介绍浮点数的基本概念,以及浮点数测试用例的设计方法和实例。

最后在结论部分,我们将总结本文的主要内容,讨论测试用例的应用以及未来的发展方向。

通过这样的结构,读者可以逐步了解浮点测试用例的重要性和设计方法,从而更好地应用于实际工作中。

1.3 目的在本文中,我们的目的是通过介绍浮点数的基本概念和测试用例设计方法,帮助读者更好地理解浮点数的特性和相关测试技术。

通过学习本文,读者将能够掌握如何设计和执行有效的浮点数测试用例,从而提高软件的质量和稳定性。

我们希望本文能够为开发人员和测试人员提供一些有用的参考,帮助他们更好地应对浮点数相关的软件开发和测试工作。

2.正文2.1 浮点数介绍在计算机科学中,浮点数是一种用来表示带小数点的实数的数据类型。

它们通常由一个小数点、一个整数部分和一个小数部分组成。

浮点数的表示方式是通过科学记数法来实现的,其中一个数值以二进制形式存储,另一个数值表示为指数。

浮点数通常以单精度(32位)或双精度(64位)的格式存储。

单精度浮点数可以表示大约7位有效数字,而双精度浮点数可以表示大约15到16位有效数字。

浮点数的精度是受限的,因为它们只能表示有限数量的数字。

由于浮点数的表示方式是近似的,所以在进行浮点运算时可能会出现舍入误差。

vit 浮点运算量

VIT(Vision Transformer)是一种基于注意力机制的视觉处理模型,它使用Transformer架构来处理图像数据。

在VIT中,浮点运算量是一个重要的指标,它可以帮助我们了解模型的计算复杂度和性能。

浮点运算量通常指的是模型在推断或训练过程中进行的浮点数乘法和加法的总次数。

在VIT模型中,浮点运算量主要来自于多层的自注意力机制和前馈神经网络。

自注意力机制中的矩阵乘法和softmax运算,以及前馈神经网络中的矩阵乘法和激活函数计算都会贡献到总体的浮点运算量。

另外,由于VIT模型通常包含大量的参数,这也会导致较大的浮点运算量。

从计算角度来看,浮点运算量可以帮助我们评估模型的计算资源需求,从而选择合适的硬件设备来部署模型。

较大的浮点运算量意味着需要更多的计算资源来运行模型,而较小的浮点运算量则可以在较低的成本下实现推断或训练过程。

此外,浮点运算量也与模型的速度和能耗密切相关。

较大的浮点运算量可能导致模型推断或训练的速度较慢,并且需要更多的能

耗。

因此,在实际应用中,需要综合考虑浮点运算量、模型性能和硬件资源等因素来选择合适的模型。

总的来说,浮点运算量是评估VIT模型计算复杂度和性能的重要指标,对于模型的部署和优化具有重要意义。

通过对浮点运算量的分析,可以更好地理解模型的计算特性,并进行针对性的优化和改进。

CPU浮点运算能力一、CPU利用率为什么超高CPU出现于大规模集成电路时代,处理器架构设计的迭代更新以及集成电路工艺的不断提升促使其不断发展完善。

从最初专用于数学计算到广泛应用于通用计算,从4位到8位、16位、32位处理器,最后到64位处理器,从各厂商互不兼容到不同指令集架构规范的出现,CPU 自诞生以来一直在飞速发展。

但是,我们会发现,有时候CPU的利用率出奇的高,这是为什么呢?以下分别从CPU温度,CPU超线程,硬件配置,硬件驱动和待机方面分析。

1、CPU温度过高如果CPU风扇散热不好,会导致CPU温度太高,使CPU自动降频,从而使CPU的性能降低。

总之高温时CPU会自动将降低工作效率。

2、超线程超线程导致CPU使用率占用高,这类故障的共同原因就是都使用了具有超线程功能的P4 CPU。

据一些网友总结超线程似乎和天网防火墙有冲突,可以通过卸载天网并安装其它防火墙解决,也可以通过在BIOS中关闭超线程功能解决情况3、硬件配置不合理例如内存不足,当运行一些大型软件时,CPU的资源大部分耗在了虚拟内存的交换处理上。

而电源功率不足,也会使CPU的性能难以发挥。

还有,在购买CPU 时,选的CPU核心频率不足是导致CPU的使用率高的最直接原因。

3、不完善的驱动程序硬件的驱动程序没有经过认证或者是不合法的认证,会造成CPU 资源占用率高。

因大量的测试版的驱动在网上泛滥,造成了难以发现的故障原因。

处理方式:尤其是显卡驱动特别要注意,建议使用微软认证的或由官方发布的驱动,并且严格核对型号、版本。

4、待机经常使用待机功能,也会造成系统自动关闭硬盘DMA模式。

这不仅会使系统性能大幅度下降,系统启动速度变慢,也会使是系统在运行一些大型软件时CPU使用率高。

二、为什么不提高CPU浮点运算能力问:为什么 CPU 的浮点运算能力比 GPU 差,为什么不提高 CPU 的浮点运算能力?「速度区别主要是来自于架构上的区别」是一个表面化的解释。

软浮点效率在处理器架构中,浮点运算一直是一个关键的性能指标。

随着技术的不断发展,处理器对浮点运算的处理方式也在不断进步,其中软浮点实现是一种重要的方法。

本文将深入探讨软浮点效率的相关问题,包括其定义、影响因素、优化策略以及在实际应用中的表现。

一、软浮点效率的概念及重要性软浮点(Soft Float)是指处理器中没有专门的浮点运算单元(FPU),而是通过软件库来模拟浮点运算的实现方式。

与之相对的是硬浮点(Hard Float),即处理器内置了专门的FPU来处理浮点运算。

软浮点效率则是指在软浮点实现下,处理器进行浮点运算时的性能表现,通常用浮点运算速度、资源占用等指标来衡量。

在嵌入式系统、低功耗设备或特定应用场景中,由于成本、功耗或设计复杂度等限制,可能无法集成专门的FPU。

此时,软浮点实现成为了一种可行的解决方案。

因此,提高软浮点效率对于提升这些系统的整体性能至关重要。

二、影响软浮点效率的因素1. 算法复杂度:浮点运算的复杂度直接影响软浮点的效率。

复杂的浮点运算需要更多的软件模拟和计算资源,从而降低效率。

2. 软件库优化:软件库是实现软浮点运算的关键。

优化软件库可以提高浮点运算的速度和准确性,从而提升软浮点效率。

3. 处理器架构:处理器的架构对软浮点效率也有重要影响。

例如,处理器的指令集、寄存器数量、内存访问速度等都会影响浮点运算的执行效率。

4. 编译器优化:编译器是将高级语言转换为机器语言的工具,其优化程度也会影响软浮点效率。

优化编译器可以更好地利用处理器的特性,提高浮点运算的性能。

三、软浮点效率的优化策略1. 算法优化:针对具体的浮点运算任务,选择合适的算法并优化其实现方式,以降低运算复杂度和提高运算速度。

2. 软件库优化:对软件库进行深度优化,包括使用更高效的算法、减少不必要的内存访问、优化函数调用等,以提高软件库的执行效率。

3. 处理器架构优化:针对软浮点运算的特点,优化处理器的架构设计,如增加寄存器数量、提高内存访问速度、优化指令集等,以提升浮点运算的性能。

yolov4参数量和计算量Yolov4是一种基于深度学习的目标检测算法,它具有较高的准确率和较低的计算复杂度。

本文将从参数量和计算量两个方面来介绍Yolov4的特点。

一、参数量Yolov4的参数量是指模型中需要训练的可调整参数的数量。

参数量的多少直接影响了模型的容量和表示能力。

在目标检测任务中,参数量的大小通常与模型的准确率和速度之间存在一定的权衡关系。

Yolov4的参数量相比前几个版本有了显著的增加。

根据官方提供的数据,Yolov4的参数量约为6200万个。

这个数量级的参数量相对较大,说明Yolov4具有较强的表达能力,可以更好地适应复杂的目标检测任务。

二、计算量计算量是指模型在进行推理时需要执行的浮点运算数量。

计算量的多少直接决定了模型的推理速度。

在目标检测任务中,计算量的大小通常与模型的准确率和速度之间存在一定的权衡关系。

Yolov4相对于前几个版本在计算量上也有所增加。

根据官方提供的数据,Yolov4的计算量约为170亿次浮点运算。

这个数量级的计算量相对较大,说明Yolov4在推理时需要较大的计算资源支持。

总结:Yolov4作为一种先进的目标检测算法,具有较高的准确率和较低的计算复杂度。

它的参数量约为6200万个,计算量约为170亿次浮点运算。

这些数据说明Yolov4在目标检测任务中具有较强的表达能力和较快的推理速度。

Yolov4的参数量和计算量的增加是为了提升模型的性能。

较大的参数量可以使模型更好地适应复杂的目标检测任务,提高检测的准确率。

较大的计算量可以保证模型在推理时能够快速地处理大量的输入数据,提高实时性。

然而,较大的参数量和计算量也会带来一些挑战。

首先,训练一个参数量较大的模型需要更多的计算资源和时间。

其次,在部署阶段,较大的计算量可能会对推理设备的性能要求较高,限制了模型的应用范围。

为了解决这些挑战,研究者们一直在努力寻找更好的优化方法。

他们尝试使用剪枝、量化等技术来减少模型的参数量和计算量,以达到在保持准确率的同时提升模型的效率。