Chongqing Jiaotong University Graduation Project: Chinese-English Translation

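Translation of the English text: PART I Optical Fiber Technology With Various Access

1 Mainstream optical networks

1.1 Optical fiber technology

Optical fiber manufacturing technology is mature, and fiber is now mass-produced. Single-mode fiber with a zero-dispersion wavelength of λ0 = 1.3 μm is in wide use today, and single-mode fiber with a zero-dispersion wavelength of λ0 = 1.55 μm has been developed and has entered practical use. Attenuation at 1.55 μm is very low, about 0.22 dB/km, which makes this fiber better suited to long-distance, high-capacity transmission and the preferred medium for long-haul backbones.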

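To meet the differing requirements of trunk lines and local area networks, new fiber types have been developed, including non-dispersive fiber, low-dispersion-slope fiber, large-effective-area fiber, and water-peak fiber.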

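Researchers working on long-wavelength optics believe that, in theory, unrepeatered transmission over thousands of kilometers is achievable, but this remains at the theoretical stage.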

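1.2 Optical fiber amplifiers

The erbium-doped (Er) fiber amplifier (EDFA) operates at a wavelength of 1550 nm. It can serve as a repeater in digital, analog, and coherent optical communication, can carry signals at different rates, and can also transmit optical signals at specific wavelengths.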

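When a fiber network is upgraded from analog to digital signals or from low to high bit rates, or when its capacity is expanded with optical multiplexing, the erbium-doped amplifier circuits and equipment do not have to be changed.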

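An erbium-doped amplifier can serve as a preamplifier for an optical receiver, as a post-amplifier for an optical transmitter, and as a compensating amplifier for optical source devices.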

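1.3 Broadband access

A variety of broadband access solutions are available for business and residential customers in different environments.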

An access system performs three main functions: high-speed transmission, multiplexing/routing, and network extension.

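ADSL, currently a mainstream access technology, can economically carry several megabits per second over twisted-pair copper. It supports traditional voice service as well as data-oriented Internet access. At the central-office end, the ADSL access multiplexer routes data traffic to the packet network, while voice traffic is passed to the PSTN, ISDN, or other packet networks.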

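Cable modems provide high-speed data communication over HFC networks by dividing the bandwidth of the coaxial cable into upstream and downstream channels. They can deliver video on demand, online entertainment, Internet access, and similar services, and can also carry PSTN service.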

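Fixed wireless access systems use many advanced technologies, such as smart antennas and receivers. They are an innovative form of access, but at present they remain the least certain access technology and need further exploration in practice.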

Anti-Aircraft Fire Control and the Development of Integrated Systems at Sperry

The dawn of the electrical age brought new types of control systems. Able to transmit data between distributed components and effect action at a distance, these systems employed feedback devices as well as human beings to close control loops at every level. By the time theories of feedback and stability began to become practical for engineers in the 1930s, a tradition of remote and automatic control engineering had developed that built distributed control systems with centralized information processors. These two strands of technology, control theory and control systems, came together to produce the large-scale integrated systems typical of World War II and after.

Elmer Ambrose Sperry (1860-1930) and the company he founded, the Sperry Gyroscope Company, led the engineering of control systems between 1910 and 1940. Sperry and his engineers built distributed data transmission systems that laid the foundations of today’s command and control systems. Sperry’s fire control systems included more than governors or stabilizers; they consisted of distributed sensors, data transmitters, central processors, and outputs that drove machinery. This article tells the story of Sperry’s involvement in anti-aircraft fire control between the world wars and shows how an industrial firm conceived of control systems before the common use of control theory. In the 1930s the task of fire control became progressively more automated, as Sperry engineers gradually replaced human operators with automatic devices. Feedback, human interface, and system integration posed challenging problems for fire control engineers during this period.
By the end of the decade these problems would become critical as the country struggled to build up its technology to meet the demands of an impending war.

Anti-Aircraft Artillery Fire Control

Before World War I, developments in ship design, guns, and armor drove the need for improved fire control on Navy ships. By 1920, similar forces were at work in the air: wartime experiences and postwar developments in aerial bombing created the need for sophisticated fire control for anti-aircraft artillery. Shooting an airplane out of the sky is essentially a problem of “leading” the target. As aircraft developed rapidly in the twenties, their increased speed and altitude rapidly pushed the task of computing the lead out of the range of human reaction and calculation. Fire control equipment for anti-aircraft guns was a means of technologically aiding human operators to accomplish a task beyond their natural capabilities.

During the first world war, anti-aircraft fire control had undergone some preliminary development. Elmer Sperry, as chairman of the Aviation Committee of the Naval Consulting Board, developed two instruments for this problem: a goniometer (a range-finder) and a pretelemeter (a fire director or calculator). Neither, however, was widely used in the field.

When the war ended in 1918 the Army undertook virtually no new development in anti-aircraft fire control for five to seven years. In the mid-1920s, however, the Army began to develop individual components for anti-aircraft equipment, including stereoscopic height-finders, searchlights, and sound location equipment. The Sperry Company was involved in the latter two efforts. About this time Maj. Thomas Wilson, at the Frankford Arsenal in Philadelphia, began developing a central computer for fire control data, loosely based on the system of “director firing” that had developed in naval gunnery.
Wilson’s device resembled earlier fire control calculators, accepting data as input from sensing components, performing calculations to predict the future location of the target, and producing direction information to the guns.

Integration and Data Transmission

Still, the components of an anti-aircraft battery remained independent, tied together only by telephone. As Preston R. Bassett, chief engineer and later president of the Sperry Company, recalled, “no sooner, however, did the components get to the point of functioning satisfactorily within themselves, than the problem of properly transmitting the information from one to the other came to be of prime importance.”

Tactical and terrain considerations often required that different fire control elements be separated by up to several hundred feet. Observers telephoned their data to an officer, who manually entered it into the central computer, read off the results, and telephoned them to the gun installations. This communication system introduced both a time delay and the opportunity for error. The components needed tighter integration, and such a system required automatic data communications.

In the 1920s the Sperry Gyroscope Company led the field in data communications. Its experience came from Elmer Sperry’s most successful invention, a true-north seeking gyro for ships. A significant feature of the Sperry Gyrocompass was its ability to transmit heading data from a single central gyro to repeaters located at a number of locations around the ship. The repeaters, essentially follow-up servos, connected to another follow-up, which tracked the motion of the gyro without interference. These data transmitters had attracted the interest of the Navy, which needed a stable heading reference and a system of data communication for its own fire control problems.
In 1916, Sperry built a fire control system for the Navy which, although it placed minimal emphasis on automatic computing, was a sophisticated distributed data system. By 1920 Sperry had installed these systems on a number of U.S. battleships.

Because of the Sperry Company’s experience with fire control in the Navy, as well as Elmer Sperry’s earlier work with the goniometer and the pretelemeter, the Army approached the company for help with data transmission for anti-aircraft fire control. To Elmer Sperry, it looked like an easy problem: the calculations resembled those in a naval application, but the physical platform, unlike a ship at sea, was anchored to the ground. Sperry engineers visited Wilson at the Frankford Arsenal in 1925, and Elmer Sperry followed up with a letter expressing his interest in working on the problem. He stressed his company’s experience with naval problems, as well as its recent developments in bombsights, “work from the other end of the proposition.” Bombsights had to incorporate numerous parameters of wind, groundspeed, airspeed, and ballistics, so an anti-aircraft gun director was in some ways a reciprocal bombsight. In fact, part of the reason anti-aircraft fire control equipment worked at all was that it assumed attacking bombers had to fly straight and level to line up their bombsights. Elmer Sperry’s interests were warmly received, and in 1925 and 1926 the Sperry Company built two data transmission systems for the Army’s gun directors.

The original director built at Frankford was designated T-1, or the “Wilson Director.” The Army had purchased a Vickers director manufactured in England, but encouraged Wilson to design one that could be manufactured in this country. Sperry’s two data transmission projects were to add automatic communications between the elements of both the Wilson and the Vickers systems (Vickers would eventually incorporate the Sperry system into its product).
Wilson died in 1927, and the Sperry Company took over the entire director development from the Frankford Arsenal with a contract to build and deliver a director incorporating the best features of both the Wilson and Vickers systems. From 1927 to 1935, Sperry undertook a small but intensive development program in anti-aircraft systems. The company financed its engineering internally, selling directors in small quantities to the Army, mostly for evaluation, for only the actual cost of production [8]. Of the nearly 10 models Sperry developed during this period, it never sold more than 12 of any model; the average order was five. The Sperry Company offset some development costs by sales to foreign governments, especially Russia, with the Army’s approval [9].

The T-6 Director

Sperry’s modified version of Wilson’s director was designated T-4 in development. This model incorporated corrections for air density, super-elevation, and wind. Assembled and tested at Frankford in the fall of 1928, it had problems with backlash and reliability in its predicting mechanisms. Still, the Army found the T-4 promising and after testing returned it to Sperry for modification. The company changed the design for simpler manufacture, eliminated two operators, and improved reliability. In 1930 Sperry returned with the T-6, which tested successfully. By the end of 1931, the Army had ordered 12 of the units. The T-6 was standardized by the Army as the M-2 director.

Since the T-6 was the first anti-aircraft director to be put into production, as well as the first one the Army formally procured, it is instructive to examine its operation in detail. A technical memorandum dated 1930 explained the theory behind the T-6 calculations and how the equations were solved by the system. Although this publication lists no author, it probably was written by Earl W. Chafee, Sperry’s director of fire control engineering.
The director was a complex mechanical analog computer that connected four three-inch anti-aircraft guns and an altitude finder into an integrated system (see Fig. 1). Just as with Sperry’s naval fire control system, the primary means of connection were “data transmitters,” similar to those that connected gyrocompasses to repeaters aboard ship.

The director takes three primary inputs. Target altitude comes from a stereoscopic range finder. This device has two telescopes separated by a baseline of 12 feet; a single operator adjusts the angle between them to bring the two images into coincidence. Slant range, or the raw target distance, is then corrected to derive its altitude component. Two additional operators, each with a separate telescope, track the target, one for azimuth and one for elevation. Each sighting device has a data transmitter that measures angle or range and sends it to the computer. The computer receives these data and incorporates manual adjustments for wind velocity, wind direction, muzzle velocity, air density, and other factors. The computer calculates three variables: azimuth, elevation, and a setting for the fuze. The latter, manually set before loading, determines the time after firing at which the shell will explode. Shells are not intended to hit the target plane directly but rather to explode near it, scattering fragments to destroy it.

The director performs two major calculations. First, prediction models the motion of the target and extrapolates its position to some time in the future. Prediction corresponds to “leading” the target. Second, the ballistic calculation figures how to make the shell arrive at the desired point in space at the future time and explode, solving for the azimuth and elevation of the gun and the setting on the fuze. This calculation corresponds to the traditional artilleryman’s task of looking up data in a precalculated “firing table” and setting gun parameters accordingly.
Ballistic calculation is simpler than prediction, so we will examine it first.

The T-6 director solves the ballistic problem by directly mechanizing the traditional method, employing a “mechanical firing table.” Traditional firing tables printed on paper show solutions for a given angular height of the target, for a given horizontal range, and a number of other variables. The T-6 replaces the firing table with a “Sperry ballistic cam.” A three-dimensionally machined, cone-shaped device, the ballistic cam or “pin follower” solves a pre-determined function. Two independent variables are input by the angular rotation of the cam and the longitudinal position of a pin that rests on top of the cam. As the pin moves up and down the length of the cam, and as the cam rotates, the height of the pin traces a function of two variables: the solution to the ballistics problem (or part of it). The T-6 director incorporates eight ballistic cams, each solving for a different component of the computation, including superelevation, time of flight, wind correction, muzzle velocity, and air density correction. Ballistic cams represented, in essence, the stored data of the mechanical computer. Later directors could be adapted to different guns simply by replacing the ballistic cams with a new set, machined according to different firing tables. The ballistic cams comprised a central component of Sperry’s mechanical computing technology. The difficulty of their manufacture would prove a major limitation on the usefulness of Sperry directors.

The T-6 director performed its other computational function, prediction, in an innovative way as well. Though the target came into the system in polar coordinates (azimuth, elevation, and range), targets usually flew a constant trajectory (it was assumed) in rectangular coordinates, i.e., straight and level. Thus, it was simpler to extrapolate to the future in rectangular coordinates than in the polar system.
So the Sperry director projected the movement of the target onto a horizontal plane, derived the velocity from changes in position, added a fixed time multiplied by the velocity to determine a future position, and then converted the solution back into polar coordinates. This method became known as the “plan prediction method” because of the representation of the data on a flat “plan” as viewed from above; it was commonly used through World War II. In the plan prediction method, “the actual movement of the target is mechanically reproduced on a small scale within the Computer and the desired angles or speeds can be measured directly from the movements of these elements.”

Together, the ballistic and prediction calculations form a feedback loop. Operators enter an estimated “time of flight” for the shell when they first begin tracking. The predictor uses this estimate to perform its initial calculation, which feeds into the ballistic stage. The output of the ballistics calculation then feeds back an updated time-of-flight estimate, which the predictor uses to refine the initial estimate. Thus “a cumulative cycle of correction brings the predicted future position of the target up to the point indicated by the actual future time of flight.”

A square box about four feet on each side (see Fig. 2), the T-6 director was mounted on a pedestal on which it could rotate. Three crew would sit on seats and one or two would stand on a step mounted to the machine. The remainder of the crew stood on a fixed platform; they would have had to shuffle around as the unit rotated. This was probably not a problem, as the rotation angles were small. The director’s pedestal mounted on a trailer, on which data transmission cables and the range finder could be packed for transportation.

We have seen that the T-6 computer took only three inputs, elevation, azimuth, and altitude (range), and yet it required nine operators.
These nine did not include the operation of the range finder, which was considered a separate instrument, but only those operating the director itself. What did these nine men do?

Human Servomechanisms

To the designers of the director, the operators functioned as “manual servomechanisms.” One specification for the machine required “minimum dependence on ‘human element.’” The Sperry Company explained, “All operations must be made as mechanical and foolproof as possible; training requirements must visualize the conditions existent under rapid mobilization.” The lessons of World War I ring in this statement; even at the height of isolationism, with the country sliding into depression, design engineers understood the difficulty of raising large numbers of trained personnel in a national emergency. The designers not only thought the system should account for minimal training and high personnel turnover, they also considered the ability of operators to perform their duties under the stress of battle. Thus, nearly all the work for the crew was in a “follow-the-pointer” mode: each man concentrated on an instrument with two indicating dials, one the actual and one the desired value for a particular parameter. With a hand crank, he adjusted the parameter to match the two dials.

Still, it seems curious that the T-6 director required so many men to perform this follow-the-pointer input. When the external rangefinder transmitted its data to the computer, it appeared on a dial and an operator had to follow the pointer to actually input the data into the computing mechanism. The machine did not explicitly calculate velocities. Rather, two operators (one for X and one for Y) adjusted variable-speed drives until their rate dials matched that of a constant-speed motor. When the prediction computation was complete, an operator had to feed the result into the ballistic calculation mechanism.
Finally, when the entire calculation cycle was completed, another operator had to follow the pointer to transmit azimuth to the gun crew, who in turn had to match the train and elevation of the gun to the pointer indications.

Human operators were the means of connecting “individual elements” into an integrated system. In one sense the men were impedance amplifiers, and hence quite similar to servomechanisms in other mechanical calculators of the time, especially Vannevar Bush’s differential analyzer.

The term “manual servomechanism” itself is an oxymoron: by the conventional definition, all servomechanisms are automatic. The very use of the term acknowledges the existence of an automatic technology that will eventually replace the manual method. With the T-6, this process was already underway. Though the director required nine operators, it had already eliminated two from the previous generation T-4. Servos replaced the operator who fed back superelevation data and the one who transmitted the fuze setting. Furthermore, in this early machine one man corresponded to one variable, and the machine’s requirement for operators corresponded directly to the data flow of its computation. Thus the crew that operated the T-6 director was an exact reflection of the algorithm inside it.

Why, then, were only two of the variables automated? This partial, almost hesitating automation indicates there was more to the human servo-motors than Sperry wanted to acknowledge. As much as the company touted “their duties are purely mechanical and little skill or judgment is required on the part of the operators,” men were still required to exercise some judgment, even if unconsciously. The data were noisy, and even an unskilled human eye could eliminate complications due to erroneous or corrupted data. The mechanisms themselves were rather delicate and erroneous input data, especially if it indicated conditions that were not physically possible, could lock up or damage the mechanisms.
The operators performed as integrators in both senses of the term: they integrated different elements into a system.

Later Sperry Directors

When Elmer Sperry died in 1930, his engineers were at work on a newer generation director, the T-8. This machine was intended to be lighter and more portable than earlier models, as well as less expensive and “procurable in quantities in case of emergency.” The company still emphasized the need for unskilled men to operate the system in wartime, and their role as system integrators. The operators were “mechanical links in the apparatus, thereby making it possible to avoid mechanical complication which would be involved by the use of electrical or mechanical servo motors.” Still, army field experience with the T-6 had shown that servo-motors were a viable way to reduce the number of operators and improve reliability, so the requirements for the T-8 specified that wherever possible “electrical shall be used to reduce the number of operators to a minimum.” Thus the T-8 continued the process of automating fire control, and reduced the number of operators to four. Two men followed the target with telescopes, and only two were required for follow-the-pointer functions. The other follow-the-pointers had been replaced by follow-up servos fitted with magnetic brakes to eliminate hunting. Several experimental versions of the T-8 were built, and it was standardized by the Army as the M3 in 1934.

Throughout the remainder of the ’30s Sperry and the army fine-tuned the director system in the M3. Succeeding M3 models automated further, replacing the follow-the-pointers for target velocity with a velocity follow-up which employed a ball-and-disc integrator. The M4 series, standardized in 1939, was similar to the M3 but abandoned the constant altitude assumption and added an altitude predictor for gliding targets.
The M7, standardized in 1941, was essentially similar to the M4 but added full power control to the guns for automatic pointing in elevation and azimuth. These later systems had eliminated errors. Automatic setters and loaders did not improve the situation because of reliability problems. At the start of World War II, the M7 was the primary anti-aircraft director available to the army.

The M7 was a highly developed and integrated system, optimized for reliability and ease of operation and maintenance. As a mechanical computer, it was an elegant, if intricate, device, weighing 850 pounds and including about 11,000 parts. The design of the M7 capitalized on the strength of the Sperry Company: manufacturing of precision mechanisms, especially ballistic cams. By the time the U.S. entered the second world war, however, these capabilities were a scarce resource, especially for high volumes. Production of the M7 by Sperry and Ford Motor Company as subcontractor was a “real choke” and could not keep up with production of the 90 mm guns, well into 1942. The army had also adopted an English system, known as the “Kerrison Director” or M5, which was less accurate than the M7 but easier to manufacture. Sperry redesigned the M5 for high-volume production in 1940, but passed in 1941.

Conclusion: Human Beings as System Integrators

The Sperry directors we have examined here were transitional, experimental systems. Exactly for that reason, however, they allow us to peer inside the process of automation, to examine the displacement of human operators by servomechanisms while the process was still underway. Skilled as the Sperry Company was at data transmission, it only gradually became comfortable with the automatic communication of data between subsystems. Sperry could brag about the low skill levels required of the operators of the machine, but in 1930 it was unwilling to remove them completely from the process.
Men were the glue that held integrated systems together.

As products, the Sperry Company’s anti-aircraft gun directors were only partially successful. Still, we should judge a technological development program not only by the machines it produces but also by the knowledge it creates, and by how that knowledge contributes to future advances. Sperry’s anti-aircraft directors of the 1930s were early examples of distributed control systems, technology that would assume critical importance in the following decades with the development of radar and digital computers. When building the more complex systems of later years, engineers at Bell Labs, MIT, and elsewhere would incorporate and build on the Sperry Company’s experience, grappling with the engineering difficulties of feedback, control, and the augmentation of human capabilities by technological systems.
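The plan prediction method and the time-of-flight feedback loop described for the T-6 can be illustrated with a short numerical sketch. It is only an illustration under stated assumptions, not a reconstruction of the Sperry mechanism: the function names are hypothetical, azimuth is assumed to be measured clockwise from north, velocity is taken from two successive observations rather than from operators matching rate dials, and the ballistic cams are replaced by a toy rule in which the shell travels at a constant average speed.

```python
import math

def polar_to_plan(azimuth, elevation, slant_range):
    """Convert a polar observation (radians, metres) to rectangular x, y, altitude."""
    ground = slant_range * math.cos(elevation)
    return (ground * math.sin(azimuth),      # x: east (assumed convention)
            ground * math.cos(azimuth),      # y: north (assumed convention)
            slant_range * math.sin(elevation))

def plan_to_polar(x, y, z):
    """Convert rectangular coordinates back to (azimuth, elevation, slant range)."""
    ground = math.hypot(x, y)
    return math.atan2(x, y), math.atan2(z, ground), math.hypot(ground, z)

def predict(obs_prev, obs_now, dt, time_of_flight):
    """Plan prediction: extrapolate in the horizontal plane at constant altitude."""
    x0, y0, _ = polar_to_plan(*obs_prev)
    x1, y1, z1 = polar_to_plan(*obs_now)
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt  # velocity from change in position
    return x1 + vx * time_of_flight, y1 + vy * time_of_flight, z1

def toy_time_of_flight(slant_range, shell_speed=600.0):
    """Hypothetical stand-in for the ballistic cams: constant average shell speed."""
    return slant_range / shell_speed

def solve(obs_prev, obs_now, dt, tof_guess=5.0, iterations=20):
    """Repeat predictor -> ballistics -> predictor, starting from an operator's guess."""
    tof = tof_guess
    for _ in range(iterations):
        future = predict(obs_prev, obs_now, dt, tof)
        azimuth, elevation, slant = plan_to_polar(*future)
        tof = toy_time_of_flight(slant)      # updated estimate fed back to the predictor
    return azimuth, elevation, tof
```

Each pass through the loop plays the role of the “cumulative cycle of correction” quoted above: the predicted position changes the time of flight, which in turn changes the predicted position, until the two agree.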

ORIGINAL PAPER

Eggshell crack detection based on acoustic response and support vector data description algorithm

Hao Lin · Jie-wen Zhao · Quan-sheng Chen · Jian-rong Cai · Ping Zhou

Received: 21 May 2009 / Revised: 27 August 2009 / Accepted: 28 August 2009 / Published online: 22 September 2009
© Springer-Verlag 2009

Abstract A system based on acoustic resonance and combined with pattern recognition was attempted to discriminate cracks in eggshell. Support vector data description (SVDD) was employed to solve the classification problem due to the imbalanced number of training samples. The frequency band was between 1,000 and 8,000 Hz. Recursive least squares adaptive filter was used to process the response signal. Signal-to-noise ratio of acoustic impulse response was remarkably enhanced. Five characteristics descriptors were extracted from response frequency signals, and some parameters were optimized in building model. Experiment results showed that in the same condition SVDD got better performance than conventional classification methods. The performance of SVDD model was achieved with crack detection level of 90% and a false rejection level of 10% in the prediction set. Based on the results, it can be concluded that the acoustic resonance system combined with SVDD has significant potential in the detection of cracked eggs.

Keywords Eggshell · Crack · Detection · Acoustic resonance · Support vector data description

Introduction

In the egg industry, the presence of cracks in eggshells is one of the main defects of physical quality. Cracked eggs are very vulnerable to bacterial infections leading to health hazards [1]. It mostly results in significant economic loss in the egg industry. Recent research shows that it is possible to detect cracks in eggshells using acoustic response analysis [2–5]. Supervised pattern recognition models were also employed to discriminate intact and cracked eggs [6]. In these previous researches, training of discrimination models needs a considerable amount of intact egg samples and
also corresponding defective ones. However, it is more difficult to acquire sufficient naturally cracked eggs samples than intact ones. Artificial infliction of cracking in eggs is time-consuming and a waste. Moreover, the artificially cracked eggs may not provide completely authentic information on naturally cracked ones. So, the traditional discrimination model shows poor performance when the numbers of samples from the two classes are seriously unbalanced, because the samples of minority group cannot provide sufficient information to support the ultimate decision function.

Support vector data description (SVDD), which is inspired by the theory of two-class support vector machine (SVM), is custom-tailored for one-class classification [7]. One-class classification is always used to deal with a two-class classification problem, where each of the two classes has a special meaning [8]. The two classes in SVDD are target class and outlier class, respectively. Target class is assumed to be sampled well, and many (training) example objects are available. The outlier class can be sampled very sparsely, or can be totally absent. The basic idea of SVDD is to define a boundary around samples of target with a volume as small as possible [9]. SVDD has been used to solve the problem of unbalanced samples in the field of machine faults diagnosis, intrusion detection in the network, recognition of handwritten digits, face recognition, etc. [10–13].

H. Lin · J. Zhao (corresponding author) · Q. Chen · J. Cai · P. Zhou
School of Food and Biological Engineering, Jiangsu University, 212013 Zhenjiang, People’s Republic of China
e-mail: zjw-205@; zhao_jiewen@
H. Lin
e-mail: linhaolt794@
Eur Food Res Technol (2009) 230:95–100
DOI 10.1007/s00217-009-1145-6

In this work, the algorithm of SVDD was employed to solve the classification problem of eggs due to imbalanced number of samples. In addition, recursive least squares (RLS) adaptive filter was used to enhance the signal-to-noise ratio. Some excitation resonant frequency characteristics of signals were used as
input vectors of SVDD model to discriminate intact and cracked eggs.

Materials and methods

Samples preparation

All barn egg samples were collected naturally from a poultry farm and they were intensively reared. These eggs were at most 3 days old when they were measured. As many as 130 eggs with intact shells and 30 eggs with cracks were measured. The sizes of eggs ranged from peewee to jumbo. Irregular eggs were not incorporated into the data analysis. The cracks, which were 10–40 mm long and less than 15 μm wide, were measured by a micrometer. Both intact and cracked samples were divided into two subsets. One of them, called the calibration set, was used to build a model, and the other one, called the prediction set, was used to test the robustness of the model. The calibration set contained 120 samples; the numbers of intact and cracked samples were 110 and 10, respectively. The remaining 40 samples constituted the prediction set, with 20 intact eggs and 20 cracked ones.

Experimental system

A system based on acoustic resonance was developed for the detection of crack in eggshell. The system consists of a product support, a light exciting mechanism, a microphone, signal amplifiers, a personal computer (PC) and software to acquire and analyze the results. A schematic diagram of the system is presented in Fig. 1. A pair of rolls made of hard rubber was used to support the eggs, and the shape of the support was focused to normal eggshell surfaces. The excitation set included an electromagnetic driver, an adjustable volt DC power and a light metallic stick. The total mass of the stick was 6 g, and its length 6 cm. The excitation force is an important factor that affects the magnitude and width of the pulse. The adjustable volt DC power was used to control the excitation force. Based on previous test, the voltage of excitation was set at 30 V. In this case, optimal signals were achieved without instrumentation overload. The impacting position was close to the crack in the cracked eggshells, which was placed randomly among intact
eggshells.

Data acquisition and analysis

Response signals obtained from the microphone were amplified, filtered and captured by a 16-bit data acquisition card. The program of data acquisition was compiled based on LabVIEW 8.2 software (National Instruments, USA) that allows a fast acquisition and processing of the response signal. The sampling rate was 22.05 kHz. The time signal was transformed to a frequency signal by using a 512-point fast Fourier transformation (FFT). The linear frequency spectrum accepted was transformed to a power spectrum. A band-pass filter was used to preserve the information of the frequency band between 1,000 and 8,000 Hz, because the features of response signals were legible in this frequency band and the signal-to-noise ratio here was also favorable.

Brief introduction of support vector data description (SVDD)

SVDD is inspired by the idea of SVM [14, 15]. It is a method of data domain description also called one-class classification. The basic idea of SVDD is to envelop samples or objects within a high-dimensional space with the volume as small as possible by fitting a hypersphere around the samples. The sketch map in two dimensions of SVDD is shown in Fig. 2. By introducing kernels, this inflexible model becomes much more powerful and can give reliable results when a suitable kernel is used [16].

Fig. 1 Eggshell crack measurement system based on acoustic resonance analysis
The problem of SVDD is to find the center $a$ and radius $R$ of the minimum-volume hypersphere containing all samples $x_i$. For a data set containing $N$ normal data objects, when one or a few very remote objects are in it, a very large sphere is obtained, which will not represent the data very well. Therefore, we allow for some data points outside the sphere and introduce slack variables $\xi_i$. As a result, the minimization problem can be denoted in the following form:

$$\min L(R) = R^2 + C\sum_{i=1}^{N}\xi_i, \quad \text{s.t. } \|x_i - a\|^2 \le R^2 + \xi_i,\ \xi_i \ge 0\ (i = 1, 2, \ldots, N), \tag{1}$$

where the variable $C$ gives the trade-off between simplicity (volume of the sphere) and the number of errors (number of target objects rejected). The above problem is usually solved by introducing Lagrange multipliers $\alpha_i$ and can be transformed into maximizing the following function $L$ with respect to the Lagrange multipliers. For an object $x$, we define

$$f^2(x) = \|x - a\|^2 = (x \cdot x) - 2\sum_{i=1}^{N}\alpha_i (x \cdot x_i) + \sum_{i=1}^{N}\sum_{j=1}^{N}\alpha_i \alpha_j (x_i \cdot x_j). \tag{2}$$

The test object $x$ is accepted when this distance is smaller than the radius. These objects are called the support objects of the description or the SVs. Objects lying outside the sphere are also called bounded support vectors (BSVs).
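The acceptance test built on Eq. (2) can be sketched in a few lines. This is a minimal illustration, not the dd_tools implementation used in the paper: the function names are hypothetical, the multipliers are supplied by hand rather than obtained by solving the optimization problem, and an object lying exactly on the boundary is treated as accepted.

```python
def dot(u, v):
    """Plain inner product (u . v)."""
    return sum(a * b for a, b in zip(u, v))

def squared_distance_to_center(x, objects, alphas, inner=dot):
    """Eq. (2): ||x - a||^2 with a = sum_i alpha_i x_i, using inner products only."""
    cross = sum(a_i * inner(x, x_i) for a_i, x_i in zip(alphas, objects))
    gram = sum(a_i * a_j * inner(x_i, x_j)
               for a_i, x_i in zip(alphas, objects)
               for a_j, x_j in zip(alphas, objects))
    return inner(x, x) - 2.0 * cross + gram

def accepts(x, objects, alphas, radius_sq, inner=dot):
    """Accept x as a target object when it lies inside (or on) the hypersphere."""
    return squared_distance_to_center(x, objects, alphas, inner) <= radius_sq

# Hand-picked illustration: two objects with equal weights give the center (0, 0).
objects = [(-1.0, 0.0), (1.0, 0.0)]
alphas = [0.5, 0.5]
inside = accepts((0.5, 0.5), objects, alphas, radius_sq=1.0)   # squared distance 0.5
outside = accepts((2.0, 0.0), objects, alphas, radius_sq=1.0)  # squared distance 4.0
```

Because the center never appears explicitly, only inner products between objects are needed, which is why the `inner` argument can be swapped for another similarity function without changing the rest of the code.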
Because a sphere is not always a good fit for the boundary of the data distribution, the inner product $(x \cdot y)$ is generalized by a kernel function $k(x, y) = \langle \phi(x), \phi(y) \rangle$, where a mapping $\phi$ of the data to a new feature space is applied. With such a mapping, the function $L$ to be maximized becomes

$$L = \sum_{i=1}^{N}\alpha_i k(x_i, x_i) - \sum_{i=1}^{N}\sum_{j=1}^{N}\alpha_i \alpha_j k(x_i, x_j), \quad \text{s.t. } 0 \le \alpha_i \le C,\ \ \sum_{i=1}^{N}\alpha_i = 1,\ \ \text{and } a = \sum_{i}\alpha_i \phi(x_i). \tag{3}$$

In brief, SVDD first maps the data, which are not linearly separable, into a high-dimensional feature space and then describes the data by the maximal-margin hypersphere.

Software

All data-processing algorithms were implemented with the statistical software Matlab 7.1 (Mathworks, USA) under Windows XP. SVDD Matlab codes were downloaded free of charge from http://www-ict.ewi.tudelft.nl/~davidt/dd_tools.html.

Result and discussion

Response signals

Since the acoustic response was an instantaneous impulse, it was difficult to discriminate between the response signals of cracked and intact eggs in the time domain. The time domain signals were therefore transformed by FFT to frequency domain signals for further analysis. Typical power spectra of an intact egg and a cracked egg are shown in Fig. 3; the areas under the spectral envelope for the intact eggs were smaller than those for the cracked eggs. For the intact eggs, the peak frequencies were prominent and generally found in the middle band (3,500–5,000 Hz). In contrast, the peak frequencies of cracked eggs were dispersed and not prominent.

Adaptive RLS filtering

Since the detection of cracked eggshells is based on acoustic response measurement, it is vulnerable to interference from surrounding noise. This is aggravated by the heavily damped behavior of agricultural products [17]. Therefore, the response signal should be processed to remove noise before further analysis. Adaptive interference canceling is a standard approach to removing environmental noise [18, 19]. RLS is a popular algorithm in the field of adaptive signal processing.
In adaptive RLS filtering, the coefficients are adjusted from sample to sample to minimize the mean square error (MSE) between a measured noisy scalar signal and its modeled value from the filter [20, 21]. A scalar, real output signal, $y_k$, is measured at the discrete time $k$, in response to a set of scalar input signals $x_k(i)$, $i = 0, 1, \ldots, n-1$, where $n$ is an arbitrary number of filter taps. For this research, $n$ is set to the number of degrees of freedom to ensure conformity of the resulting filter matrices. The input and the output signals are related by the simple regression model:

$$y_k = \sum_{i=0}^{n-1} w(i)\, x_k(i) + e_k, \tag{4}$$

where $e_k$ represents measurement error and $w(i)$ represents the proportion that is contained in the primary scalar signal $y_k$. The implementation of the RLS algorithm is optimized by exploiting the matrix inversion lemma and provides fast convergence and small error rates [22]. System identification of a 32-coefficient FIR filter combined with adaptive RLS filtering was used to process the signals. The forgetting factor was 1, and the vector of initial filter coefficients was 0. Figure 4 shows the frequency signals before and after adaptive RLS filtering.

Variable selection

Based on the differences between the frequency domain response signals of intact and cracked eggs, five characteristic descriptors were extracted from the response frequency signals as the inputs of the discrimination model. These are shown in Table 1.

Parameter optimization in SVDD model

The basic concept of SVDD is to map the original data X nonlinearly into a higher-dimensional feature space. The transformation into a higher-dimensional space is implemented by a kernel function [23]. The selection of the kernel function therefore has a strong influence on the performance of the SVDD model. Several kernel functions have been proposed for the SVDD classifier, but not all of them are equally useful. It has been demonstrated that the Gaussian kernel results in a tighter description and gives a good performance under
general smoothness assumptions [24]. Thus, the Gaussian kernel was adopted in this study.

To obtain a good performance, the regularization parameter C and the width parameter σ of the kernel function have to be optimized. Parameter C determines the trade-off between minimizing the training error and minimizing model complexity. With the Gaussian kernel, the data description transforms from a solid hypersphere to a Parzen density estimator. An appropriate choice of the width parameter σ of the Gaussian kernel is important to the density estimation of target objects. There is no systematic methodology for the optimization of these parameters. In this study, the optimization was carried out in two search steps. First, a comparatively large step length was used to search for the optimal parameter values. Favorable results were found with values of C between 0.005 and 0.1, and values of σ between 10 and 500. Therefore, a much smaller step length was employed to search these parameters further. In the second search step, 50 values of σ with a step of 10 (σ = 10, 20, …, 500) and 20 values of C with a step of 0.005 (C = 0.005, 0.01, …, 0.1) were tested simultaneously in building the model. Identification results of the SVDD model as influenced by the values of σ and C are shown in Fig. 5. The optimal model was achieved when σ was equal to 420 and C was equal to 0.085 or 0.09. Here, the identification rates of intact and cracked eggs were both 90% in the prediction set. Furthermore, it was found that the performance of the SVDD model could not be improved by smaller search steps.

Fig. 3 Typical response frequency signal of eggs

Fig. 4 Frequency signals before and after adaptive RLS filtering

Comparison of discrimination models

A conventional two-class linear discriminant analysis (LDA) model and an SVM model were used for comparison in classifying intact and cracked eggs. The Gaussian kernel was used as the kernel function of the SVM model. Parameters of the SVM model were optimized as in SVDD. Table 2 shows the optimal results from
three discrimination models in the prediction set. Identification rates of intact eggs were both 100% in the LDA and SVM models, but only 50% and 35% for cracked eggs, respectively. In other words, at least 50% of cracked eggs could not be identified by the conventional discrimination models. However, detection of cracked eggs is the task we focus on. The identification rates of intact and cracked eggs were both 90% in the SVDD model. Compared with conventional two-class discrimination models, the SVDD model showed superior performance in the discrimination of cracked eggs.

LDA is a linear and parametric method with discriminating character. In terms of a set of discriminant functions, the classifier is said to assign an unknown example X to the corresponding class [25]. In conventional LDA classification, the ultimate decision function relies on sufficient information from two-class training samples. In general, such classification does not pay enough attention to the samples in the minority class when building the model, so it may produce an inaccurate estimate of the centroid between the two classes. Conventional LDA classification always describes poorly the class with scarce training samples. Therefore, it is often impractical to solve the classification problem with a traditional LDA classifier when the numbers of training samples are imbalanced.

The basic concept of SVM is to map the original data X into a higher-dimensional feature space and find the 'optimal' hyperplane boundary to separate the two classes [26]. In SVM classification, the 'optimal' boundary is defined as the most distant hyperplane from both sets, which is also called the 'middle point' between the classification sets. This boundary is expected to be the optimal classification of the sets, since it is the best isolated from the two sets [27]. The margin is the minimal distance from the separating hyperplane to the closest data points [28]. In general, when the information support from both positive and negative
training sets is sufficient and equal, an appropriate separating hyperplane can be obtained. However, when the samples from one class are insufficient to support the separating hyperplane, the hyperplane ends up excessively close to this class. As a result, most of the unknown samples may be recognized as the other class. Therefore, compared with the other discrimination models, SVM showed the poorest performance in discriminating cracked eggs.

Table 1 Frequency characteristics selection and expression

| Variable | Resonance frequency characteristic | Expression |
|---|---|---|
| X1 | Value of the area of amplitude | $X_1 = \sum_{i=0}^{512} P_i$ |
| X2 | Value of the standard deviation of amplitude | $X_2 = \sqrt{\sum (P_i - \bar{P})^2}\,/\,n$ |
| X3 | Value of the frequency band of maximum amplitude | $X_3 = \mathrm{Index}\,\max(P_i)$ |
| X4 | Mean of top three frequency amplitude values | $X_4 = \mathrm{Max}_{1:3}(P_i)/3$ |
| X5 | Ratio of amplitude values of middle frequency bands to low frequency band | $X_5 = \sum_{i=1}^{200} P_i \,/\, \sum_{i=201}^{400} P_i$ |

Low frequency band: 1,000–3,720 Hz; middle frequency band: 3,720–7,440 Hz

Fig. 5 Identification rates of SVDD models with different values of parameters σ and C

Table 2 Comparison of results from three discrimination models (identification rates in the prediction set, %)

| Model | Intact eggs | Cracked eggs |
|---|---|---|
| LDA | 100 | 50 |
| SVM | 100 | 35 |
| SVDD | 90 | 90 |

Differing from the conventional classification-based approach, SVDD is an approach for one-class classification. It focuses mainly on normal or target objects. SVDD can handle cases with only a few outlier objects. The advantage of SVDD is that the target class can be either of the two training classes. The selection of the target class depends on the reliability of the information provided by the training samples. In general, the class containing more samples may provide sufficient information, and it can be selected as the target class [29]. Furthermore, by introducing a kernel function, SVDD can adapt to the real shape of the samples and find a flexible boundary with a minimum volume. The boundary is described by a few training
objects, the support vectors. It is possible to replace normal inner products with kernel functions and obtain more flexible data descriptions [30]. The width parameter σ can be set to give the desired number of support vectors. In addition, extra data in the form of outlier objects can help to improve the performance of the SVDD model.

Conclusions

Detection of cracks in eggshells based on acoustic impulse resonance was attempted in this work. The SVDD method was employed to solve a classification problem in which the samples of cracked eggs were not sufficient. The results indicated that detection of cracks in eggshells based on acoustic impulse resonance was feasible, and the SVDD model showed superior performance in contrast to conventional two-class discrimination models. It can be concluded that SVDD is an excellent method for classification problems with imbalanced sample numbers. Combining the acoustic resonance technique with SVDD is a promising way to detect cracked eggs. Some related ideas will be attempted in our future work to further improve the performance of the SVDD model: (1) introduce new kernel functions, which can help to obtain a more flexible boundary; (2) try more methods for parameter selection, since the parameters of kernel functions are closely related to the tightness of the constructed boundary and the target rejection rate, and appropriate parameters are important to the performance of SVDD models; (3) investigate the contribution of abnormal targets to the calibration model and develop a robust model that deals well with abnormal targets.

Acknowledgments This work is a part of the National Key Technology R&D Program of China (Grant No. 2006BAD11A12). We are grateful to the Web site http://www-ict.ewi.tudelft.nl/~davidt/dd_tools.html, where we downloaded SVDD Matlab codes free of charge.

References

1. Lin J, Puri VM, Anantheswaran RC (1995) Trans ASAE 38(6):1769–1776
2. Cho HK, Choi WK, Paek JK (2000) Trans ASAE 43(6):1921–1926
3. De Ketelaere B, Coucke P, De Baerdemaeker J (2000) J Agr Eng Res 76:157–163
4. Coucke P, De Ketelaere B, De Baerdemaeker J (2003) J Sound Vib 266:711–721
5. Wang J, Jiang RS (2005) Eur Food Res Technol 221:214–220
6. Jindal VK, Sritham E (2003) ASAE Annual International Meeting, USA
7. Tax DMJ, Duin RPW (1999) Pattern Recognit Lett 20:1191–1199
8. Pan Y, Chen J, Guo L (2009) Mech Syst Signal Process 23:669–681
9. Lee SW, Park JY, Lee SW (2006) Pattern Recognit 39:1809–1812
10. Podsiadlo P, Stachowiak GW (2006) Tribol Int 39:1624–1633
11. Sanchez-Hernandez C, Boyd DS, Foody GM (2007) Ecol Inf 2:83–88
12. Liu YH, Lin SH, Hsueh YL, Lee MJ (2009) Expert Syst Appl 36:1978–1998
13. Cho HW (2009) Expert Syst Appl 36:434–441
14. Tax DMJ, Duin RPW (2001) J Mach Learn Res 2:155–173
15. Tax DMJ, Duin RPW (2004) Mach Learn 54:45–66
16. Guo SM, Chen LC, Tsai JHS (2009) Pattern Recognit 42:77–83
17. De Ketelaere B, Maertens K, De Baerdemaeker J (2004) Math Comput Simul 65:59–67
18. Adali T, Ardalan SH (1999) Comput Elect Eng 25:1–16
19. Madsen AH (2000) Signal Process 80:1489–1500
20. Chase JG, Begoc V, Barroso LR (2005) Comput Struct 83:639–647
21. Wang X, Feng GZ (2009) Signal Process 89:181–186
22. Djigan VI (2006) Signal Process 86:776–791
23. Bu HG, Wang J, Huang XB (2009) Eng Appl Artif Intell 22:224–235
24. Tao Q, Wu GW, Wang J (2005) Pattern Recognit 38:1071–1077
25. Xie JS, Qiu ZD (2007) Pattern Recognit 40:557–562
26. Devos O, Ruckebusch C, Durand A, Duponchel L, Huvenne JP (2009) Chemom Intell Lab Syst 96:27–33
27. Liu X, Lu WC, Jin SL, Li YW, Chen NY (2006) Chemom Intell Lab Syst 82:8–14
28. Chen QS, Zhao JW, Fang CH, Wang DM (2007) Spectrochim Acta Pt A Mol Biomol Spectrosc 66:568–574
29. Huang WL, Jiao LC (2008) Prog Nat Sci 18:455–461
30. Foody GM, Mathur A, Sanchez-Hernandez C, Boyd DS (2006) Remote Sens Environ 104:1–14

The road (highway)

The road is a kind of linear structure used for travel.

It is made up of the roadbed, the road surface, bridges, culverts and tunnels. In addition, it includes line crossings, protective works, traffic engineering and route facilities.

The roadbed is the foundation of the road surface, road shoulder, side slope and side ditch. It is a structure of stone material, designed according to the plan position of the route. As the base for travel, the roadbed must have enough strength and stability to resist erosion by water and other natural hazards.

The road surface is the surface of the road. It is a single-layer or composite structure built with mixed materials.

The road surface must be smooth and have enough strength, good stability and skid resistance. The quality of the road surface directly affects the safety, comfort and flow of traffic.

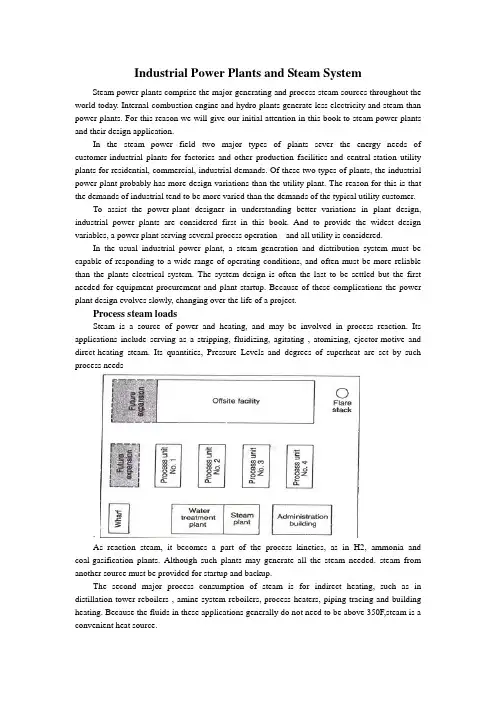

Industrial Power Plants and Steam Systems

Steam power plants comprise the major generating and process steam sources throughout the world today. Internal-combustion engine and hydro plants generate less electricity and steam than steam power plants. For this reason we will give our initial attention in this book to steam power plants and their design application.

In the steam power field, two major types of plants serve the energy needs of customers: industrial plants for factories and other production facilities, and central-station utility plants for residential, commercial and industrial demands. Of these two types, the industrial power plant probably has more design variations than the utility plant. The reason is that the demands of industry tend to be more varied than the demands of the typical utility customer.

To assist the power-plant designer in better understanding variations in plant design, industrial power plants are considered first in this book. And to provide the widest design variables, a power plant serving several process operations and all utilities is considered.

In the usual industrial power plant, a steam generation and distribution system must be capable of responding to a wide range of operating conditions, and often must be more reliable than the plant's electrical system. The system design is often the last to be settled but the first needed for equipment procurement and plant startup. Because of these complications, the power plant design evolves slowly, changing over the life of a project.

Process steam loads

Steam is a source of power and heating, and may be involved in process reactions. Its applications include serving as stripping, fluidizing, agitating, atomizing, ejector-motive and direct-heating steam. Its quantities, pressure levels and degrees of superheat are set by such process needs.

As reaction steam, it becomes a part of the process kinetics, as in H2, ammonia and coal-gasification plants.
Although such plants may generate all the steam needed, steam from another source must be provided for startup and backup.

The second major process consumption of steam is for indirect heating, such as in distillation-tower reboilers, amine-system reboilers, process heaters, piping tracing and building heating. Because the fluids in these applications generally do not need to be above 350°F, steam is a convenient heat source.

Again, the quantities of steam required for these services are set by the process design of the facility. Many options are available to the process designer for supplying some of these low-level heat requirements, including heat-exchange systems and circulating heat-transfer-fluid systems, as well as steam and electricity. The selection of an option is made early in the design stage and is based predominantly on economic trade-off studies.

Generating steam from process heat affords a means of increasing the overall thermal efficiency of a plant. After providing for the recovery of all the heat possible via exchangers, the process designer may be able to reduce cooling requirements by making provisions for the generation of low-pressure (50-150 psig) steam. Although generation at this level may be feasible from a process-design standpoint, its impact on the overall steam balance must be considered, because low-pressure steam is in surplus in most steam balances, and generating additional quantities may worsen the design. Decisions of this type call for close coordination between the process and utility engineers.

Steam is often generated in the convection section of fired process heaters in order to improve a plant's thermal efficiency.
High-pressure steam can be generated in the furnace convection section of process heaters that have radiant heat duty only.

Adding a selective-catalytic-reduction unit for the purpose of lowering NOx emissions may require the generation of waste-heat steam to maintain the correct operating temperature at the catalytic-reduction unit.

Heat from the incineration of waste gases represents still another source of process steam. Waste-heat flues from the CO boilers of fluid-catalytic crackers and from fluid-coking units, for example, are hot enough to provide the highest pressure level in a steam system.

Selecting pressure and temperature levels

The selection of pressure and temperature levels for a process steam system is based on: (1) moisture content in condensing-steam turbines, (2) metallurgy of the system, (3) turbine water rates, (4) process requirements, (5) water treatment costs, and (6) type of distribution system.

Moisture content in condensing-steam turbines - The selection of pressure and temperature levels normally starts with the premise that somewhere in the system there will be a condensing turbine. Consequently, the pressure and temperature of the steam must be selected so that its moisture content in the last row of turbine blades will be less than 10-13%. In high-speed turbines, a moisture content of 10% or less is desirable. This restriction is imposed in order to minimize erosion of blades by water particles. This, in turn, means that there will be a minimum superheat for a given pressure level, turbine efficiency and condenser pressure for which the system can be designed.

System metallurgy - A second pressure-temperature concern in selecting the appropriate steam levels is the limitation imposed by metallurgy. Carbon steel flanges, for example, are limited to a maximum temperature of 750°F because of the threat of graphite (carbides) precipitating at grain boundaries. Hence, at 600 psig and less, carbon-steel piping is acceptable in steam distribution systems.
Above 600 psig, alloy piping is required. In a 900- to 1,500-psig steam system, the piping must be either a 1/2 carbon-1/2 molybdenum or a 1/2 chromium-1/2 molybdenum alloy.

Turbine water rates - Steam requirements for a turbine are expressed as water rate, i.e., lb of steam/bhp, or lb of steam/kWh. Actual water rate is a function of two factors: theoretical water rate and turbine efficiency.

The first is directly related to the energy difference between the inlet and outlet of a turbine, based on the isentropic expansion of the steam. It is, therefore, a function of the turbine inlet and outlet pressures and temperatures.

The second is a function of the size of the turbine and the steam pressure at the inlet, and of turbine operation (i.e., whether the turbine condenses steam, or exhausts some of it to an intermediate pressure level). From an energy standpoint, the higher the pressure and temperature, the higher the overall cycle efficiency.

Process requirements - When steam levels are being established, consideration must be given to process requirements other than those of turbine drivers. For example, steam for process heating will have to be at a high enough pressure to prevent process fluids from leaking into the steam. Steam for pipe tracing must be at a certain minimum pressure so that low-pressure condensate can be recovered.

Water treatment costs - The higher the steam pressure, the costlier the boiler feedwater treatment. Above 600 psig, the feedwater almost always must be demineralized; below 600 psig, softening may be adequate. The water may have to be of high quality if the steam is used in the process, such as in reactions over a catalyst bed (e.g., in hydrogen production).

Type of distribution system - There are two types of systems: local, as exemplified by powerhouse distribution; and complex, by which steam is distributed to many units in a process plant.
For a small local system, it is not impractical from a cost standpoint for steam pressures to be in the 600-1,500-psig range. For a large system, maintaining pressures within the 150-600-psig range is desirable because of the cost of meeting the alloy requirements for a higher-pressure steam distribution system.

Because of all the foregoing factors, the steam system in a chemical process complex or oil refinery frequently ends up as a three-level arrangement. The highest level, 600 psig, serves primarily as a source of power. The intermediate level, 150 psig, is ideally suited to small emergency turbines, tracing off the plot, and process heating. The low level, normally 50 psig, can be used for heating services, tracing within the plot, and process requirements. A higher fourth level is normally not justified, except in special cases such as when a large amount of electric power must be generated.

Whether or not an extraction turbine will be included in the process has a bearing on the intermediate-pressure level selected, because the extraction pressure should be less than 50% of the high-pressure level, to take into account the pressure drop through the throttle valve and the nozzles of the high-pressure section of the turbine.

Drivers for pumps and compressors

The choice between a steam and an electric driver for a particular pump or compressor depends on a number of things, including the operational philosophy. In the event of a power failure, it must be possible to shut down a plant in an orderly and safe manner if normal operation cannot be continued.
For an orderly and safe shutdown, certain services must be available during a power failure: (1) instrument air, (2) cooling water, (3) relief and blowdown pump-out systems, (4) boiler feedwater pumps, (5) boiler fans, (6) emergency power generators, and (7) fire water pumps.

These services are normally supplied by steam or diesel drivers because a plant's steam or diesel emergency system is considered more reliable than an electrical tie-line.

The procedure for shutting down process units must be analyzed for each type of process plant and specific design. In general, the following represent the minimum services for which spare pumps driven by steam must be provided: column reflux, bottoms and purge-oil circulation, and heater charging. Most important is to maintain cooling; next, to be able to safely pump the plant's inventory into tanks.

Driver selection cannot be generalized; a plan and procedure must be developed for each process unit.

The control required for a process is at times another consideration in the selection of a driver. For example, a compressor may be controlled via flow or suction pressure. The ability to vary driver speed, easily obtained with a steam turbine, may be the basis for selecting a steam driver instead of a constant-speed induction electric motor. This is especially important when the molecular weight of the gas being compressed may vary, as in catalytic-cracking and catalytic-reforming processes.

In certain types of plants, gas flow must be maintained to prevent uncontrollable high-temperature excursions during shutdown. For example, hydrocrackers are purged of heavy hydrocarbons with recycle gas to prevent the exothermic reactions from producing high bed temperatures. Steam-driven compressors can do this during a power failure.

Each process operation must be analyzed from such a safety viewpoint when selecting drivers for critical equipment. The size of a relief and blowdown system can be reduced by installing steam drivers.
In most cases, the size of such a system is based on a total power failure. If heat removal is powered by steam drivers, the relief system can be smaller. For example, a steam driver will maintain flow in the pump-around circuit for removing heat from a column during a power failure, reducing the relief load imposed on the flare system.

Equipment support services (such as lubrication and seal-oil systems for compressors) that could be damaged during a loss of power should also be powered by steam drivers.

Driver size can also be a factor. An induction electric motor requires large starting currents, typically six times the normal load. The drop in voltage caused by the startup of such a motor imposes a heavy transient demand on the electrical distribution system. For this reason, drivers larger than 10,000 hp are normally steam turbines, although synchronous motors as large as 25,000 hp are used.

The reliability of life-support facilities (e.g., building heat, potable water, pipe tracing, emergency lighting) during power failures is of particular concern in cold climates. In such a case, at least one boiler should be equipped with steam-driven auxiliaries to provide these services.

Lastly, steam drivers are also selected for the purpose of balancing steam systems and avoiding large amounts of letdown between steam levels. Such decisions regarding drivers are made after the steam balances have been refined and the distribution system has been fully defined.
There must be sufficient flexibility to allow balancing the steam system under all operating conditions.

Selecting steam drivers

After the number of steam drivers and their services have been established, the utility or process engineer will estimate the steam consumption for making the steam balance. The standard method of doing this is to use the isentropic expansion of steam corrected for turbine efficiency.

Actual steam consumption by a turbine is determined via:

SR = (TSR)(bhp)/E

Here, SR = actual steam rate, lb/h; TSR = theoretical steam rate, lb/h/bhp; bhp = turbine brake horsepower; and E = turbine efficiency.

When exhaust steam can be used for process heating, the highest thermodynamic efficiency can be achieved by means of backpressure turbines. Large drivers, which are of high efficiency and require low theoretical steam rates, are normally supplied by the high-pressure header, thus minimizing steam consumption.

Small turbines that operate only in emergencies can be allowed to exhaust to atmosphere. Although their water rates are poor, the water lost in short-duration operations may not represent a significant cost. Such turbines obviously play a small role in steam balance planning.

Constructing steam balances

After the process and steam-turbine demands have been established, the next step is to construct a steam balance for the chemical complex or oil refinery. A sample balance is shown in Fig. 1-4. It shows steam production and consumption, the header systems, letdown stations, and boiler plant. It illustrates a normal (winter) case.

It should be emphasized that there is not one balance but a series, representing a variety of operating modes.
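The steam-rate estimate from the "Selecting steam drivers" discussion, SR = (TSR)(bhp)/E, can be sketched as follows. The function names and the enthalpy figures are hypothetical and chosen only for illustration; the theoretical steam rate is computed here from the isentropic enthalpy drop using the conversion 1 hp·h ≈ 2,544.43 Btu, which is an assumption about how TSR would be obtained and is not spelled out in the text.

```python
BTU_PER_HP_HOUR = 2544.43  # energy equivalent of one horsepower-hour, Btu


def theoretical_steam_rate(h_inlet, h_exhaust_isentropic):
    """Theoretical steam rate TSR in lb/h per bhp.

    h_inlet and h_exhaust_isentropic are steam enthalpies in Btu/lb at the
    turbine inlet and after an ideal (isentropic) expansion to exhaust pressure.
    """
    drop = h_inlet - h_exhaust_isentropic
    if drop <= 0:
        raise ValueError("isentropic enthalpy drop must be positive")
    return BTU_PER_HP_HOUR / drop


def actual_steam_rate(tsr, bhp, efficiency):
    """Actual steam consumption SR = TSR * bhp / E, in lb/h."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return tsr * bhp / efficiency


# Illustrative numbers only (not taken from the text or from steam tables).
tsr = theoretical_steam_rate(h_inlet=1380.0, h_exhaust_isentropic=1180.0)
sr = actual_steam_rate(tsr, bhp=1000.0, efficiency=0.70)
print(f"TSR = {tsr:.2f} lb/h per bhp, SR = {sr:.0f} lb/h")
```

In practice the two enthalpies would come from steam tables for the chosen header pressures and temperatures, and the efficiency from vendor data for the turbine size in question.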
The object of the balances is to determine the design basis for establishing boiler size, letdown stations and deaerator capacities, boiler feedwater requirements, and steam flows in various parts of the system.

The steam balance should cover the following operating modes: normal, all units operating; winter and summer conditions; shutdown of major units; startup of major units; loss of largest condensate source; power failure with flare in service; loss of large process steam generators; and variations in consumption by large steam users.

From 50 to 100 steam balances could be required to adequately cover all the major impacts on the steam system of a large complex.

At this point, the general basis of the steam system design should have been developed by the completion of the following work:

1. All significant loads have been examined, with particular attention focused on those for which there is relatively little design freedom - i.e., reboilers, sparing steam for process units, large turbines required because of electric power limitation and for shutdown safety.
2. Loads have been listed for which the designer has some liberty in selecting drivers. These selections are based on analyses of cost competitiveness.
3. Steam pressure and temperature levels have been established.
4. The site plan has been reviewed to ascertain where it is not feasible to deliver steam or recover condensate, because piping costs would be excessive.
5. Data on the process units are collected according to the pressure level and use of steam - i.e., for the process, condensing drivers and backpressure drivers.
6. After Step 5, the system is balanced by trial-and-error calculations or computerized techniques to determine boiler, letdown, deaerator and boiler feedwater requirements.
7. Because the possibility of an electric power failure normally imposes one of the major steam requirements, normal operation and the eventuality of such a failure must both be investigated, as a minimum.

Checking the design basis

After the foregoing steps have been completed, the following should be checked:

Boiler capacity - Installed boiler capacity would be the maximum calculated (with an allowance of 10-20% for uncertainties in the balance), corrected for the number of boilers operating (and on standby).

The balance plays a major role in establishing normal-case boiler specifications, both number and size. Maximum firing typically is based on the emergency case. Normal firing typically establishes the number of boilers required, because each boiler will have to be shut down once a year for the code-required drum inspection. Full-firing levels of the remaining boilers will be set by the normal steam demand. The number of units required (e.g., three 50% units, four 33% units, etc.) in establishing installed boiler capacity is determined from cost studies. It is generally considered double-jeopardy design to assume that a boiler will be out of service during a power failure.

Minimum boiler turndown - Most fuel-fired boilers can be operated down to approximately 20% of the maximum continuous rate. The minimum load should not be expected to be below this level.

Differences between normal and maximum loads - If the maximum load results from an emergency (such as power failure), consideration should be given to shedding process steam loads under this condition in order to minimize installed boiler capacity. However, the consequences of shedding should be investigated by the process designer and the operating engineers to ensure the safe operation of the entire process.

Low-level steam consumption - The key to any steam balance is the disposition of low-level steam.
Surplus low-level steam can be reduced only by including more condensing steam turbines in the system, or devising more process applications for it, such as absorption refrigeration for cooling process streams and Rankine-cycle systems for generating power. In general, balancing the supply and consumption of low-level steam is a critical factor in the design of the steam system.

Quantity of steam at pressure-reducing stations - Because useful work is not recovered from the steam passing through a pressure-reducing station, such flow should be kept at a minimum. In the Fig. 1-5 150/50-psig station, a flow of only 35,000 lb/h was established as normal for this steam balance case (normal, winter). The loss of steam users on the 50-psig system should be considered, particularly of the large users, because a shutdown of one may demand that the 150/50-psig station close off beyond its controllable limit. If this happened, the 50-psig header would be out of control, and an immediate pressure buildup in the header would begin, setting off the safety relief valves.

The station's full-open capacity should also be checked to ensure that it can make up any 50-psig steam that may be lost through the shutdown of a single large 50-psig source (a turbine sparing a large electric motor, for example). It would be undesirable for the station to be sized so that it opens more than 80%. In some cases, rangeability requirements may dictate two valves (one small and one large).

Intermediate pressure level - If large steam users or suppliers may come on stream or go off stream, the normal (day-to-day) operation should be checked. No such change in normal operation should result in a significant upset (e.g., relief valves set off, or the system pressure control lost).

If a large load is lost, the steam supply should be reduced by the letdown station. If the load suddenly increases, the 600/150-psig station must be capable of supplying the additional steam. 
If steam generated via the process disappears, the station must be capable of making up the load. If 150-psig steam is generated unexpectedly, the 600/150-psig station must be able to handle the cutback.

The important point here is that where the steam flow could rise to 700,000 lb/h, this flow should be reduced by a cutback at the 600/150-psig station, not by an increase in the flow to the lower-pressure level, because this steam would have nowhere to go. The normal (600/150-psig) letdown station must be capable of handling some of the negative load swings, even though, overall, this letdown needs to be kept to a minimum.

On the other hand, shortages of steam at the 150-psig level can be made up relatively easily via the 600/150-psig station. Such shortages are routinely small in quantity or duration, or both (startup, purging, electric drive maintenance, process unit shutdown, etc.).

High-pressure level - Checking the high-pressure level is generally more straightforward because rate control takes place directly at the boilers. Firing can be increased or lowered to accommodate a shortage or surplus.

Typical steam-balance cases

The Fig. 1-4 steam balance represents steady-state conditions, winter operation, all process units operating, and no significant unusual demands for steam.

An analysis similar to the foregoing might also be required for the normal summertime case, in which a single upset must not jeopardize control but the load may be less (no tank heating, pipe tracing, etc.).

The balance representing an emergency (e.g., loss of electric power) is significant. In this case, the pertinent test point is the system's ability to simply weather the upset, not to maintain normal, stable operation. The maximum relief pressure that would develop in any of the headers represents the basis for sizing relief valves. 
The loss of boiler feedwater or condensate return, or both, could result in a major upset, or even a shutdown.

Header pressure control during upsets

At the steady-state conditions associated with the multiplicity of balances, boiler capacity can be adjusted to meet user demands. However, boiler load cannot be changed quickly to accommodate a sharp upset. Response rate is typically limited to 20% of capacity per minute. Therefore, other elements must be relied on to control header pressures during transient conditions.

The roles of several such elements in controlling pressures in the three main headers during transient conditions are listed in Table 1-3. A control system having these elements will result in a steam system capable of dealing with the transient conditions experienced in moving from one balance point to another.

Tracking steam balances

Because of schedule constraints, steam balances and boiler size are normally established early in the design stage. These determinations are based on assumptions regarding turbine efficiencies, process steam generated in waste-heat furnaces, and other quantities of steam that depend on purchased equipment. Therefore, a sufficient number of steam balances should be tracked through the design period to ensure that the equipment purchased will satisfy the original design concept of the steam system.

This tracking represents an excellent application for a utility data-base system and a system linear programming model. During the course of the mechanical design of a large "grass roots" complex, 40 steam balances were continuously updated for changes in steam loads via such an application.

Cost tradeoffs

To design an efficient but least-expensive system, the designer ideally develops a total minimum-cost curve (which incorporates all the pertinent costs related to capital expenditures, installation, fuel, utilities, operations and maintenance) and performs a cost study of the final system. 
However, because the designer is under the constraint of keeping to a project schedule, major, highly expensive equipment must be ordered early in the project, when many key parts of the design puzzle are not available (e.g., a complete load summary, turbine water rates, equipment efficiencies and utility costs).

A practical alternative is to rely on comparative-cost estimates, as are conventionally used in assisting with engineering decision points. This approach is particularly useful in making early equipment selections when fine-tuning is not likely to alter decisions, such as regarding the number of boilers required, whether boilers should be shop-fabricated or field-erected, and the practicality of generating steam from waste heat or via cogeneration.

The significant elements of a steam-system cost-comparative study are costs for: equipment and installation; ancillaries (i.e., miscellaneous items required to support the equipment, such as additional stacks, upgraded combustion control, more extensive blowdown facilities, etc.); operation (annual); maintenance (annual); and utilities.

The first two costs may be obtained from in-house data or from vendors. Operational and maintenance costs can be factored from the capital cost for equipment based on an assessment of the reliability of the purchased equipment.

Utility costs are generally the most difficult to establish at an early stage because sources frequently depend on the site of the plant. Some examples of such costs are: purchased fuel gas - $5.35/million Btu, raw water - $0.60/1,000 gal, electricity - $0.07/kWh, and demineralized boiler feedwater - $1.50/1,000 gal. The value of steam at the various pressure levels can be developed [5].

Let it be further assumed that the emergency balance requires 2,200,000 lb/h of steam (all boilers available). 
Listed in Table 1-4 are some combinations of boiler installations that meet the design conditions previously stipulated.

Table 1-4 indicates that any of the several combinations of power-boiler number and size could meet both normal and emergency demand. Therefore, a comparative-cost analysis would be made to assist in making an early decision regarding the number and size of the power boilers.

(Table 1-4 is based on field-erected, industrial-type boilers. Conventional sizing of this type of boiler might range from 100,000 lb/h through 2,000,000 lb/h for each.)

An alternative would be the packaged boiler option (although it does not seem practical at this load level). Because it is shop-fabricated, this type of boiler affords a significant saving in terms of field installation cost. Such boilers are available up to a nominal capacity of 100,000 lb/h, with some versions up to 250,000 lb/h.

Selecting turbine water rate (i.e., efficiency) represents another major cost concern. Beyond the recognized payout period (e.g., 3 years), the cost of drive steam can be significant in comparison with the equipment capital cost. The typical 30% efficiency of the medium-pressure backpressure turbine can be boosted significantly.

Driver selections are frequently made with the help of cost-tradeoff studies, unless overriding considerations preclude a drive medium. Electric pump drives are typically recommended on the basis of such studies.

Steam tracing has long been the standard way of winterizing piping, not only because of its history of successful performance but also because it is an efficient way to use low-pressure steam.

Design considerations

As the steam system evolves, the designer identifies steam loads and pressure levels, locates steam loads, checks safety aspects, and prepares cost-tradeoff studies, in order to provide low-cost energy safely, always remaining aware of the physical entity that will arise from the design.

How are design concepts translated into a design document? 
And what basic guidelines will ensure that the physical plant will represent what was intended conceptually?

Basic to achieving these ends is the piping and instrument diagram (familiar as the P&ID). Although it is drawn up primarily for the piping designer's benefit, it also plays a major role in communicating to the instrumentation designer the process-control strategy, as well as in conveying specialty information to electrical, civil, structural, mechanical and architectural engineers. It is the most important document for representing the specification of the steam.
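
Several of the checks described under "Checking the design basis" and "Header pressure control during upsets" reduce to simple arithmetic. The following Python fragment is a minimal sketch of that arithmetic and is not taken from the original article. The function names are hypothetical, the letdown-valve check assumes flow is proportional to valve opening (a simplification), and the response-rate check assumes that "20% of capacity per minute" refers to each boiler's maximum continuous rate. Only the 2,200,000 lb/h emergency demand, the 35,000 lb/h normal letdown flow, the 10-20% allowance, the 20% turndown and the 80% opening limit come from the text; every other figure is illustrative.

```python
def installed_capacity(max_load_lb_h, allowance=0.15):
    """Maximum calculated load plus an uncertainty allowance (10-20% in the text)."""
    return max_load_lb_h * (1.0 + allowance)


def below_turndown(min_load_lb_h, boiler_mcr_lb_h, turndown=0.20):
    """True if the minimum load falls below the boiler's stable firing limit."""
    return min_load_lb_h < turndown * boiler_mcr_lb_h


def letdown_opening(flow_lb_h, full_open_capacity_lb_h):
    """Fractional valve opening, ASSUMING flow is linear in opening."""
    return flow_lb_h / full_open_capacity_lb_h


def ramp_minutes(load_change_lb_h, boiler_mcr_lb_h, rate_per_min=0.20):
    """Minutes a boiler needs to absorb a load change at the stated response rate."""
    return abs(load_change_lb_h) / (rate_per_min * boiler_mcr_lb_h)


if __name__ == "__main__":
    # 2,200,000 lb/h and 35,000 lb/h are quoted in the text; the boiler and
    # valve sizes below are hypothetical, chosen only to exercise the checks.
    print(installed_capacity(2_200_000, allowance=0.10))
    print(below_turndown(90_000, 500_000))
    print(letdown_opening(35_000, 100_000) <= 0.80)
    print(ramp_minutes(100_000, 500_000))
```

In practice these checks would be repeated for each steam-balance case, since the governing case differs from check to check (emergency for capacity, normal for turndown and letdown flow).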

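Undergraduate Graduation Project (Thesis): Foreign-Language Translation

Translated title: Construction Bidding and the Winner's Curse: Game Theory Approach
School: School of Economics and Management
Major: Construction Cost Engineering
Student name: **
Student ID: ************
Advisor: ***
Date completed: April 5, 2017

Translated from: Muaz O. Ahmed; Islam H. El-adaway, M.ASCE; Kalyn T. Coatney; and Mohamed S. Eid. Construction Bidding and the Winner's Curse: Game Theory Approach [J]. Construction Engineering and Management, 2016, 142(2).

Construction Bidding and the Winner's Curse: Game Theory Approach

Muaz O. Ahmed1; Islam H. El-adaway, M.ASCE2; Kalyn T. Coatney3; and Mohamed S. Eid4

Department of Civil and Environmental Engineering, Mississippi State University, USA

Abstract: In the construction industry, competitive bidding has long been used as a method of selecting contractors.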

Because the true cost of construction is not known until the project is complete, adverse selection is a major problem.

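Adverse selection occurs when the winner of the contract has underestimated the project's true cost, so that the winning contractor will most likely earn negative or at least below-normal profits.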

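The winner's curse occurs when the winning bidder submits an underestimated bid and is therefore cursed by being selected to undertake the project.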

In multistage bidding environments, where subcontractors are hired by a general contractor, the winner's curse may be compounded.

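In general, contractors suffer from the winner's curse for a variety of reasons, including inaccurate estimates of project cost; new contractors entering the construction market; efforts to minimize losses during recessions in the construction industry; intense competition in the construction market; differences in opportunity cost that affect contractors' behavior; and the intention to win the project and then recover the resulting losses through change orders, claims, and other mechanisms.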

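Using a game theory approach, this paper aims to analyze and mitigate the potential impact of the winner's curse in construction bidding.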

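To this end, the authors determine the degree of the winner's curse in two common construction bidding environments, namely single-stage bidding and multistage bidding.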

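The objective is to compare these two construction bidding environments and to determine how learning from past bidding decisions and experience can mitigate the winner's curse.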
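
The mechanism behind the winner's curse described in the abstract can be illustrated with a small simulation. The Python sketch below is not the authors' game-theoretic model. It is a hypothetical single-stage, lowest-bid-wins auction in which every contractor faces the same true cost but sees it with independent, normally distributed estimation error. The function name, the cost and noise values, and the zero default markup are all assumptions made for illustration.

```python
import random


def mean_winner_profit(n_bidders, true_cost=100.0, noise_sd=10.0,
                       markup=0.0, trials=2000, seed=0):
    """Average profit of the low bidder over repeated sealed-bid auctions."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    total = 0.0
    for _ in range(trials):
        # Each bidder sees the true cost plus an independent estimation error.
        estimates = [rng.gauss(true_cost, noise_sd) for _ in range(n_bidders)]
        # Bid = estimate plus markup; the lowest bid wins the contract.
        winning_bid = min(estimates) * (1.0 + markup)
        # Profit is what the winner is paid minus what the job really costs.
        total += winning_bid - true_cost
    return total / trials


if __name__ == "__main__":
    for n in (2, 5, 10):
        print(n, round(mean_winner_profit(n), 2))
```

Under these assumptions the lowest of several unbiased estimates is itself biased low, so a winner who bids its own estimate with no markup loses money on average, and the loss grows with the number of competitors.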