Monocular Tracking 3D People with Back Constrained Scaled Gaussian Process Latent Variable

- 格式:pdf

- 大小:399.32 KB

- 文档页数:7

Monocular3D Pose Estimation and Tracking by Detection Mykhaylo Andriluka1,2Stefan Roth1Bernt Schiele1,21Department of Computer Science,TU Darmstadt2MPI Informatics,Saarbr¨u ckenAbstractAutomatic recovery of3D human pose from monocular image sequences is a challenging and important research topic with numerous applications.Although current meth-ods are able to recover3D pose for a single person in con-trolled environments,they are severely challenged by real-world scenarios,such as crowded street scenes.To address this problem,we propose a three-stage process building on a number of recent advances.Thefirst stage obtains an ini-tial estimate of the2D articulation and viewpoint of the per-son from single frames.The second stage allows early data association across frames based on tracking-by-detection. These two stages successfully accumulate the available2D image evidence into robust estimates of2D limb positions over short image sequences(=tracklets).The third and final stage uses those tracklet-based estimates as robust im-age observations to reliably recover3D pose.We demon-strate state-of-the-art performance on the HumanEva II benchmark,and also show the applicability of our approach to articulated3D tracking in realistic street conditions. 1.IntroductionThis work addresses the challenging problem of3D pose estimation and tracking of multiple people in clut-tered scenes using a monocular,potentially moving camera. This is an important problem with many applications in-cluding video indexing,automotive safety,or surveillance. There are multiple challenges that contribute to the diffi-culty of this problem and need to be addressed simulta-neously.Probably the most important challenge in artic-ulated3D tracking is the inherent ambiguity of3D pose from monocular image evidence.This is particularly true for cluttered real-world scenes with multiple people that are often partially or even fully occluded for longer peri-ods of time.Another important challenge,even for2D pose recovery,is the complexity of human articulation and ap-pearance.Additionally,complex and dynamically changing backgrounds of realistic scenes complicate data association across multiple frames.While many of these challenges have been addressed individually,we are not aware of any work that has addressed all of them simultaneously usinga Figure1.Example results:3D tracking in a challenging scene. monocular,potentially moving camera.The goal of this paper is to contribute a sound Bayesian formulation to address this challenging problem.To that end we build on some of the most powerful approaches pro-posed for people detection and tracking in the literature.In three successive stages we accumulate the available2D im-age evidence to enable robust3D pose recovery. Overview of the approach.Ultimately,our goal is to es-timate the3D pose Q m of each person in all frames m of a sequence of length M,given the image evidence E1:M in all frames.To that end,we define a posterior distribution over pose parameters given the evidence:p(Q1:M|E1:M)∝p(E1:M|Q1:M)p(Q1:M).(1) Here,Q1:M denotes the3D pose parameters over the entire sequence.Clearly,a key difficulty is that the posterior in Eq.1has many local optima as the estimation of3D poses is highly ambiguous given monocular images.To address this problem this paper proposes a new three-stage approach sequentially reducing the ambiguity in3D pose recovery.Before giving an overview of the three-stage process,let us define the observation likelihood p(E1:M|Q1:M).We assume conditional independence of the evidence in each frame given the3D pose parameters Q m.The likelihood thus factorizes into single-frame likelihoods:p(E1:M|Q1:M)=Mm=1p(E m|Q m).(2)In this paper,the evidence in each frame is represented by the estimate of the person’s2D viewpoint w.r.t.the camera and the posterior distribution of the2D positions and orien-tations of body parts.To estimate these reliably from single frames,thefirst stage(Sec.2)builds on a recently proposed part-based people detection and pose estimation framework based on discriminative part detectors[3].To accumulate further2D image evidence,the second stage(Sec.3)extracts people tracklets from a small num-ber of consecutive frames using a2D-tracking-by-detection approach.Here,the output of thefirst stage is refined in the sense that we obtain more reliable2D detections of the peo-ple’s body parts as well as more robust viewpoint estimates.The third stage(Sec.4)then uses the image evidence ac-cumulated in the previous two stages to recover3D pose.As described later,we model the temporal prior p(Q1:M)over 3D poses as a hierarchical Gaussian process latent variable model(hGPLVM)[17].We combine this with a hidden Markov model(HMM)that allows to extend the people-tracklets,which cover only a small number of frames at a time,to possibly longer3D people-tracks.Note that our 3D model is assumed to generate the bottom-up evidence from2D body models and thus constitutes a hybrid genera-tive/discriminative approach(c.f.[26]).The contributions of this paper are two-fold.The main contribution is a novel approach to human pose estimation, which combines2D position,pose and viewpoint estimates into an evidence model for3D tracking with a3D motion prior,and is able to accurately estimate3D poses of mul-tiple people from monocular images in realistic street en-vironments.The second contribution,which serves as a building block for3D pose estimation,is a new pedestrian detection approach based on a combination of multiple part-based models.While the power of part-based models for people detection has already been demonstrated(e.g.,[3]), here we show that combining multiple part-based models leads to significant performance gains,and while improving over the state-of-the art in detection,also allows to estimate viewpoints of people in monocular images.Related Work.Due to the difficulties involved in reliable 3D pose estimation,this task has often been considered in controlled laboratory settings[4,6,13,24,29],with so-lutions frequently relying on background subtraction and simple image evidence,such as silhouettes or edge-maps. In order to constrain the search in high-dimensional pose spaces these approaches often use multiple calibrated cam-eras[13],complex dynamical motion priors[29],or de-tailed body models[4].Their combination allows to achieve impressive results[13,15],similar in performance to com-mercial marker-based motion capture systems.However,realistic street scenes do not satisfy many of the assumptions made by these systems.For such scenes multiple synchronized video streams are difficult to obtain,the appearance of people is significantly more complex,and robust extraction of evidence is challenged by frequent full and partial occlusions,clutter,and camera motion.In order to address these challenges,a number of methods leverage recent advances in people detection and either use detection for prefiltering and initialization[11,14],or integrate detec-tion,tracking and pose estimation within a single“tracking-by-detection”framework[2].This paper builds on state-of-the-art people detection and 2D pose estimation and leverages recent work in this area [3,7,10,20],which we combine with a dynamic motion prior[2,28].While[2]has shown to enable2D pose es-timation for people in sideviews,this paper goes beyond by estimating poses in3D from multiple -pared to[14],we are able to estimate poses in monocular images,while their approach uses stereo.[11]proposes to combine detection and3D pose estimation for monocu-lar tracking,but relies on the ability to detect characteristic poses of people,which we do not require here.Estimation of3D poses from2D body part positions was previously proposed in[25].However,this approach was evaluated only in laboratory conditions for a single subject and it remains unclear how well it generalizes to more com-plex settings with multiple people as considered here.There is also a lot of work on predicting3D poses di-rectly from image features using regression[1,16,27],clas-sification[21],or a search over a database of exemplars [19,22].These methods typically require a large database of training examples to achieve good performance and are challenged by the high variability in appearance of people in realistic settings.In contrast,we represent the complex appearance of people using separate appearance models for each body part,which reduces the number of required train-ing images and makes our representation moreflexible. 2.Multiview People Detection in Single Frames2D people detection and pose estimation serves as one of our key building blocks for3D pose estimation and track-ing.Our approach is driven by three major goals:(1)We want to take advantage of the recent developments in2D people detection and pose estimation to define robust ap-pearance models for3D pose estimation and tracking;(2) we aim to reduce the search space of possible3D poses by taking advantage of inferred2D poses;and(3)we want to extract the viewpoint from which people are visible to re-duce the inherent2D-to-3D ambiguity.To that end we build on and extend a recent2D people detection approach[3].2.1.Basic pictorial structures modelPictorial structures[8]represent objects,such as peo-ple,as aflexible configuration of N different parts L m= {l m0,l m1,...,l mN}.m denotes the current frame of(a)(b)(c)(d)(e)(f)(g)(h)Figure2.Training samples shown for each viewpoint:(a)right,(b)r.-back,(c)b.,(d)left-b.,(e)l.,(f)l.-front,(g)f.,(h)r.-f.the sequence.The state of part i is given by l mi={x mi,y mi,θmi,s mi},where x mi and y mi denote its im-age position,θmi the absolute orientation,and s mi the partscale.The posterior probability of the2D part configurationL m given the single frame image evidence D m is given asp(L m|D m)∝p(D m|L m)p(L m).(3)The prior on body configurations p(L m)has a tree structureand represents the kinematic dependencies between bodyparts.It factorizes into a unary term for the root part(here,the torso)and pairwise terms along the kinematic chains:p(L m)=p(l m0)(i,j)∈Kp(l mi|l mj),(4)where K is the set of edges representing kinematic rela-tionships between parts.p(l m0)is assumed to be uniform,and the pairwise terms are taken to be Gaussian in the trans-formed space of the joints between the adjacent parts[3,8].The likelihood term is assumed to factorize into a productof individual part likelihoodsp(D m|L m)=Ni=0p(d mi|l mi).(5)To define the part likelihood,we rely on the boosted partdetectors from[3],which use the truncated output of anAdaBoost classifier[12]and a dense shape context repre-sentation[5,18].Our model is composed of8body parts:left/right lower and upper legs,torso,head and left/rightupper and lower arms(later sideview detectors also useleft/right feet for better performance).Apart from its excellent performance in complex realworld scenes[3,7],the pictorial structures model also hasthe advantage that inference is both optimal and efficientdue to the tree structure of the model.We perform sum-product belief propagation to compute the marginal poste-riors of individual body parts,which can be computed effi-ciently using convolutions[8].Data and evaluation.While the detector of[3]is inprinciple capable of detecting people from arbitrary views,its detection performance has only been evaluated on sideviews.To evaluate its suitability for our multiview set-ting,we collected a dataset of1486images for training,248for validation,and248for testing,which we carefullyselected so that sufficiently many people are visible fromall viewpoints.In addition to the persons’bounding boxes,we also annotated the viewpoint of all people in our datasetby assuming8evenly spaced viewpoints,each45degreesFigure3.Calibrated output of the8viewpoint classifiers.apart from each other(front/back,left/right,and diagonalfront/back left/right).Fig.2shows example images fromour training set,one for each viewpoint.As expected and can be seen in Fig.4(a),the detectortrained on side-views as in[3]shows only modest perfor-mance levels on our multiview dataset.By retraining themodel on our multiview training set we obtain a substantialperformance gain,but still do not achieve the performancelevels of monolithic,discriminative HOG-based detectors[30]or HOG-based detectors with parts[9](see Fig.4(b)).However,since we not only need to detect people,but alsoestimate their2D pose,such monolithic or coarse part-based detectors are not appropriate for our task.2.2.Multiview ExtensionsTo address this shortcoming,we develop an extendedmultiview detector that allows2D pose estimation as well asviewpoint estimation.We train8viewpoint-specific detec-tors using our viewpoint-annotated multiview data.Theseviewpoint-specific detectors not only have the advantagethat their kinematic prior is specific to each viewpoint,butalso that part detectors are tuned to each view.We enrichthis set of detectors with one generic detector trained on allviews,as well as two side-view detectors as in[3]that ad-ditionally contain feet(which improves performance).We explored two strategies for combining the outputof this bank of detectors:(1)We simply add up the log-posterior of a person being at a particular image loca-tion as determined by the different detectors;and(2)wetrain a linear SVM using the11-dimensional vector of themean/variance-normalized detector outputs as features.TheSVM detector was trained on the validation set of248im-ages.Fig.4(a)shows that the simple additive combinationof viewpoint-specific detectors improved over the detectionperformance from each individual viewpoint-specific detec-tor.It also outperforms the approach from[3].Interestingly,the new SVM-based detector not onlysubstantially improves performance,but also outperformsthe current state-of-the-art in multiview people detection[9,30].As is shown in Fig.4(b),the performance improveseven further when we extend our bank of detectors withthe HoG-based detector from[30].While this is not themain focus of our work,this clearly shows the power of thefirst stage of our approach.Several example detections inFig.4(c)demonstrate the benefits of combining viewpoint-specific detectors.(a)(b)(c)Figure parison between (a)viewpoint-specific models and combined model,and (b)comparison to state-of-the-art on the “Multi-viewPeople”dataset;(c)sample detections obtained with the side-view detector of [3](top),the generic detector trained on our multiview dataset (middle),and the proposed detector combining the output of viewpoint-specific detectors with a linear SVM (bottom).Right Right-Back Left-Left Left-Front Right-Avg.%Back Back Front Front Max 53.735.545.722.637.98.640.08.331.1SVM 72.612.748.612.355.744.570.416.242.2SVM-adj 71.422.329.518.084.718.150.729.235.4Table 1.Viewpoint estimation on the “MultiviewPeople”dataset.The task is to classify one of 8viewpoints (chance level 12.5%).Viewpoint estimation.Next we aim to estimate the per-son’s viewpoints,since such viewpoint estimates allow to significantly reduce the ambiguity in 3D pose.To that end,we rely on the bank of viewpoint-specific detectors from above,and train 8viewpoint classifiers,linear SVMs,on the detector outputs of the validation set.We consider two training and evaluation strategies:(SVM )Only training ex-amples from one of the viewpoints are used as positive ex-amples,the remainder as negative ones;and (SVM-adj ),where we group viewpoints into triplets of adjacent ones and train separate classifiers for each such triplet.As a base-line approach (Max ),we estimate the viewpoint by taking the maximum over the outputs of the 8viewpoint-specific detectors.Results are shown in Tab.1.SVM improves over the baseline in case when we require exact recognition of viewpoint by approx.11%,but SVM-adj also performs well.In addition,when we also consider the two adjacent viewpoints as being correct,SVM obtains an average per-formance of 70.0%and SVM-adj of 76.2%.This shows that SVM-adj more gracefully degrades across viewpoints,which is why we adopt it in the remainder.Since the scores of the viewpoint classifiers are not di-rectly comparable with each other,we calibrate them by computing the posterior of the correct label given the clas-sifier score,which maps the scores to the unit interval.The posterior is computed via Bayes’rule from the distributions of classifier scores on the positive and negative examples.We assume these distributions to be Gaussian,and estimate their parameters from classifier scores on the validation set.Fig.3shows calibrated outputs of all 8classifiers com-puted for a sequence of 40frames in which the person first appears from the “right”and then from the “right-back”viewpoint.The correct viewpoint is the most probable for most of the sequence,and failures in estimation often cor-respond to adjacent viewpoints.3.2D Tracking and Viewpoint EstimationAs discussed in the introduction,our goal is to accumu-late all available 2D image evidence prior to the third 3D tracking stage in order to reduce the ambiguity of 2D-to-3D lifting as much as possible.While the person detector described in the previous section is capable of estimating 2D positions of body parts and viewpoints of people from single frames,the second stage (described here)aims to im-prove these estimates by 2D-tracking-by-detection [2,31].To exploit temporal coherency already in 2D,we extract short tracklets of people.This,on the one hand,improves the robustness of estimates for 2D positions,scale and view-point of each person,since they are jointly estimated over an entire tracklet.Improved body localization in turn aids 2D pose estimation.On the other hand,it also allows to perform early data association .This is important for sequences with multiple people,where we can associate “anonymous”sin-gle frame hypotheses with the track of a specific person.Tracklet extraction.From the first stage of our approach we obtain a set of N m potentially overlapping bound-ing box hypotheses H m =[h m 1,...,h mN m ]for each frame m of the sequence,where each hypothesis h mi ={h x mi ,h y mi ,h smi }corresponds to a bounding box at partic-ular image position and scale.In order to obtain a set of tracklets,we follow the HMM-based tracking procedure in-troduced in [2]1.To that end we treat the person hypothe-ses in each frame as states and find state subsequences that1Adetailed description is given in Sec.3.3of [2].Figure 5.People detection based on single frames (top)and tracklets found by our 2D tracking algorithm;Different tracklets are identified by color and the estimated viewpoints are indicated with two letters (bottom).Note that several false positives in the top row are filtered out and additional –often partially occluded –detections are filled in (e.g.,on the left side of the leftmost image).are consistent in position,scale and appearance by itera-tively applying Viterbi decoding.The emission probabili-ties for each state are derived from the detection score.The transition probabilities between states h mi and h m −1,j are modeled using first-order Gaussian dynamics and appear-ance compatibility:p trans (h mi ,h m −1,j )=N (h mi |h m −1,j ,Σpos )·N (d app (h mi ,h m −1,j )|0,σ2app ).where Σpos =diag (σ2x,σ2y ,σ2s ),and d app (h mi ,h m −1,j )is the Euclidean distance between RGB color histograms computed for the bounding rectangle of each hypothesis.We set σx =σy =5,σs =0.1and σapp =0.05.Viewpoint tracking.Finally,for each of the tracklets we estimate the viewpoint sequence ω1:N =(ω1,...,ωN ),again using a simple HMM and Viterbi decoding.We con-sider the 8discrete viewpoints as states,the viewpoint clas-sifiers described in Sec.2as unary evidence,and Gaus-sian transition probabilities that enforce similar subsequent viewpoints to reflect that people tend to turn slowly.Evaluation.Fig.5shows an example of a short subse-quence in which we compare the detection results of the single-frame 2D detector with the extracted tracklets.Note how tracking helps to remove the spurious false positive de-tections in the background,and corrects failures in scale es-timation,which would otherwise hinder correct 2D-to-3D lifting.In Fig.3we visualize the single frame prediction scores for each viewpoint for the tracklet corresponding to the person with index 22on Fig.5.Note that while view-point estimation from single frames is reasonably robust,it can still fail at times (the correct viewpoint is “right”for frames 4to 30and “right-back”for frames 31to 40).The tracklet-based viewpoint estimation,in contrast,yields the correct viewpoint for the entire 40frame sequence.Finally,as we demonstrate in Fig.5,the tracklets also provide data association even in case of realistic sequences with frequent full and partial occlusions.4.3D Pose EstimationTo estimate and track poses in 3D,we take the 2D track-lets extracted in the previous stage and lift the 2D pose es-timated for each frame into 3D (c.f.[25]),which is done with the help of a set of 3D exemplars [19,22].Projections of the exemplars are first evaluated under the 2D body part posteriors,and the exemplar with the most probable pro-jection is chosen as an initial 3D pose.This initial pose is propagated to all frames of the tracklet using the known temporal ordering on the exemplar set.Note that this yields multiple initializations for 3D pose sequences,one for each frame of the tracklet.This 2D-to-3D lifting procedure is robust,because it is based on reliable 2D pose posteriors,and detections and viewpoint estimates from the 2D track-lets.Starting from these initial pose sequences,the actual pose estimation and tracking is done in a Bayesian frame-work by maximizing the posterior defined in Eq.(1),for which they serve as powerful initializations.The 3D pose is parametrized as Q m ={q m ,φm ,h m },where q m denotes the parameters of body joints,φm the rotation of the bodyin world coordinates,and h m ={h x m ,h y m ,h scalem}the po-sition and scale of the person projected to the image.The 3D pose is represented using a kinematic tree with P =10flexible joints,in which each joint has 2degrees of freedom.A configuration example is shown in Fig.6(a).The evidence at frame m is given by the E m ={D m ,ωm },and consists of the single frame image evidence D m and the 2D viewpoint estimate ωm obtained from the entire tracklet.Assuming conditional independence of 2D viewpoint and image evidence given the 3D pose,the single frame likelihood in Eq.2factorizes asp (E m |Q m )=p (ωm |Q m )p (D m |Q m ).(6)Based on the estimated 2D viewpoint ωm ,we model the viewpoint likelihood of the 3D viewpoint φm as Gaussian centered at the rotation component of φm along the y-axis:p (ωm |Q m )=N (ωm |proj y (φm ),σ2ω).(7)We define the likelihood of the3D pose p(D m|Q m)with the help of the part posteriors given by the2D body modelp(D m|Q m)=Nn=1p(proj n(Q m)|D m),(8)where proj n(Q m)denotes the projection of the n-th3Dbody part into the image.While such a3D likelihood is typ-ically defined as the product of individual part likelihoodssimilarly to Eq.5,this leads to highly multimodal posteri-ors and difficult inference.By relying on2D part posteriorsinstead,the3D model is focused on hypotheses for whichthere is sufficient2D image evidence from previous stages.To avoid expensive3D likelihood computations,we rep-resent each2D part posterior using a non-parametric repre-sentation.In particular,for each body part n in frame m wefind the J locations with the highest posterior probability E mn={(l j mn,w j mn),j=1,...,J},where l j mn∈R4cor-responds to the2D location(image position and orientation)and w j mn to the posterior density at this location.Given that,we approximate the2D part posterior as a kernel density es-timate with Gaussian kernelκ:p(proj n(Q m)|D m)≈jw j mnκ(l j mn,proj n(Q m)).(9)Dynamical model.In our approach we represent the tem-poral prior in Eq.1as the product of two terms:p(Q1:M)=p(q1:M)p(h1:M),(10) which correspond to priors on the parameters of3D pose as well as image position and scale.The prior on the person’s position and scale p(h1:M)is taken to be a broad Gaussian and models smooth changes of both the scale of the person and its position in the image.We model the prior over the parameters of the3D pose q1:M with a hierarchical Gaussian process latent variable model(hGPLVM)[17].We denote the M dimensional vec-tor of the values of i-th pose parameter across all frames as q1:M,i.In the hGPLVM each dimension of the original high-dimensional pose is modelled as an independent Gaus-sian process defined over a shared low dimensional latent space Z1:M:p(q1:M|Z1:M)=Pi=1N(q1:M,i|0,K z),(11)where P is the number of parameters in our pose repre-sentation,K z is a covariance matrix of the elements of theshared latent space Z1:M defined by the output of the co-variance function k(z i,z j),which in our case is taken to be squared exponential.The values of the shared latent space Z1:M are them-selves treated as the outputs of Gaussian processes with aone dimensional input,the time T1:M.Our implementationuses a d l=2dimensional shared latent space.Such a hi-erarchy of Gaussian processes allows to effectively modelboth correlations between different dimensions of the orig-inal input space and their dynamics.200200q k(a)(b)Figure6.(a):Representation of the3D pose in our model (parametrized joints are marked with arrows).(b):Initial pose sequence after2D-to-3D lifting(top)and pose sequence after op-timization of the3D pose posterior(bottom).MAP estimation.The hGPLVM prior requires two sets of auxiliary variables Z1:M and T1:M,which need to be dealt with during maximum a-posteriori estimation.Our strategy is to optimize Z only and keep the values of T fixed.This is possible since the values of T roughly cor-respond to the person’s state within a walking cycle,which can be reliably estimated using the2D tracklets.The full posterior over3D pose parameters being maximized is given by:p(Q1:M,Z1:M|E1:M,T1:M)∝p(E1:M|Q1:M)·p(q1:M|Z1:M)p(Z1:M|T1:M)p(h1:M).(12) We optimize the posterior using scaled conjugate gradients and initializing the optimization using the lifted3D poses.4.1.3D pose estimation for longer sequencesThe MAP estimation approach just described is only tractable if the number of frames M is sufficiently small.In order to estimate3D poses in longer sequences we apply a strategy similar to the one used in[2]:First we estimate3D poses in short(M=10)overlapping subsequences of the longer sequence.Since for each subsequence we initialize and locally optimize the posterior multiple times,this leaves us with a large pool of3D pose hypotheses for each of the frames,from which wefind the optimal sequence using a hidden Markov model and Viterbi decoding.To that end we treat the3D pose hypotheses in each frame as discrete states with emission probabilities given by Eq.6and define transition probabilities between states using the hGPLVM.5.ExperimentsWe evaluate our model in two diverse scenarios.First, we show that our approach improves the state-of-the-art in monocular human pose estimation on the standard “HumanEva II”benchmark,for which ground truth poses are available.Additionally,we evaluate our approach on two cluttered and complex street sequences with multiple people including partial and full occlusions.。

Multicamera People Tracking witha Probabilistic Occupancy MapFranc¸ois Fleuret,Je´roˆme Berclaz,Richard Lengagne,and Pascal Fua,Senior Member,IEEE Abstract—Given two to four synchronized video streams taken at eye level and from different angles,we show that we can effectively combine a generative model with dynamic programming to accurately follow up to six individuals across thousands of frames in spite of significant occlusions and lighting changes.In addition,we also derive metrically accurate trajectories for each of them.Our contribution is twofold.First,we demonstrate that our generative model can effectively handle occlusions in each time frame independently,even when the only data available comes from the output of a simple background subtraction algorithm and when the number of individuals is unknown a priori.Second,we show that multiperson tracking can be reliably achieved by processing individual trajectories separately over long sequences,provided that a reasonable heuristic is used to rank these individuals and that we avoid confusing them with one another.Index Terms—Multipeople tracking,multicamera,visual surveillance,probabilistic occupancy map,dynamic programming,Hidden Markov Model.Ç1I NTRODUCTIONI N this paper,we address the problem of keeping track of people who occlude each other using a small number of synchronized videos such as those depicted in Fig.1,which were taken at head level and from very different angles. This is important because this kind of setup is very common for applications such as video surveillance in public places.To this end,we have developed a mathematical framework that allows us to combine a robust approach to estimating the probabilities of occupancy of the ground plane at individual time steps with dynamic programming to track people over time.This results in a fully automated system that can track up to six people in a room for several minutes by using only four cameras,without producing any false positives or false negatives in spite of severe occlusions and lighting variations. As shown in Fig.2,our system also provides location estimates that are accurate to within a few tens of centimeters, and there is no measurable performance decrease if as many as20percent of the images are lost and only a small one if 30percent are.This involves two algorithmic steps:1.We estimate the probabilities of occupancy of theground plane,given the binary images obtained fromthe input images via background subtraction[7].Atthis stage,the algorithm only takes into accountimages acquired at the same time.Its basic ingredientis a generative model that represents humans assimple rectangles that it uses to create synthetic idealimages that we would observe if people were at givenlocations.Under this model of the images,given thetrue occupancy,we approximate the probabilities ofoccupancy at every location as the marginals of aproduct law minimizing the Kullback-Leibler diver-gence from the“true”conditional posterior distribu-tion.This allows us to evaluate the probabilities ofoccupancy at every location as the fixed point of alarge system of equations.2.We then combine these probabilities with a color and amotion model and use the Viterbi algorithm toaccurately follow individuals across thousands offrames[3].To avoid the combinatorial explosion thatwould result from explicitly dealing with the jointposterior distribution of the locations of individuals ineach frame over a fine discretization,we use a greedyapproach:we process trajectories individually oversequences that are long enough so that using areasonable heuristic to choose the order in which theyare processed is sufficient to avoid confusing peoplewith each other.In contrast to most state-of-the-art algorithms that recursively update estimates from frame to frame and may therefore fail catastrophically if difficult conditions persist over several consecutive frames,our algorithm can handle such situations since it computes the global optima of scores summed over many frames.This is what gives it the robustness that Fig.2demonstrates.In short,we combine a mathematically well-founded generative model that works in each frame individually with a simple approach to global optimization.This yields excellent performance by using basic color and motion models that could be further improved.Our contribution is therefore twofold.First,we demonstrate that a generative model can effectively handle occlusions at each time frame independently,even when the input data is of very poor quality,and is therefore easy to obtain.Second,we show that multiperson tracking can be reliably achieved by processing individual trajectories separately over long sequences.. F.Fleuret,J.Berclaz,and P.Fua are with the Ecole Polytechnique Fe´de´ralede Lausanne,Station14,CH-1015Lausanne,Switzerland.E-mail:{francois.fleuret,jerome.berclaz,pascal.fua}@epfl.ch..R.Lengagne is with GE Security-VisioWave,Route de la Pierre22,1024Ecublens,Switzerland.E-mail:richard.lengagne@.Manuscript received14July2006;revised19Jan.2007;accepted28Mar.2007;published online15May2007.Recommended for acceptance by S.Sclaroff.For information on obtaining reprints of this article,please send e-mail to:tpami@,and reference IEEECS Log Number TPAMI-0521-0706.Digital Object Identifier no.10.1109/TPAMI.2007.1174.0162-8828/08/$25.00ß2008IEEE Published by the IEEE Computer SocietyIn the remainder of the paper,we first briefly review related works.We then formulate our problem as estimat-ing the most probable state of a hidden Markov process and propose a model of the visible signal based on an estimate of an occupancy map in every time frame.Finally,we present our results on several long sequences.2R ELATED W ORKState-of-the-art methods can be divided into monocular and multiview approaches that we briefly review in this section.2.1Monocular ApproachesMonocular approaches rely on the input of a single camera to perform tracking.These methods provide a simple and easy-to-deploy setup but must compensate for the lack of 3D information in a single camera view.2.1.1Blob-Based MethodsMany algorithms rely on binary blobs extracted from single video[10],[5],[11].They combine shape analysis and tracking to locate people and maintain appearance models in order to track them,even in the presence of occlusions.The Bayesian Multiple-BLob tracker(BraMBLe)system[12],for example,is a multiblob tracker that generates a blob-likelihood based on a known background model and appearance models of the tracked people.It then uses a particle filter to implement the tracking for an unknown number of people.Approaches that track in a single view prior to computing correspondences across views extend this approach to multi camera setups.However,we view them as falling into the same category because they do not simultaneously exploit the information from multiple views.In[15],the limits of the field of view of each camera are computed in every other camera from motion information.When a person becomes visible in one camera,the system automatically searches for him in other views where he should be visible.In[4],a background/foreground segmentation is performed on calibrated images,followed by human shape extraction from foreground objects and feature point selection extraction. Feature points are tracked in a single view,and the system switches to another view when the current camera no longer has a good view of the person.2.1.2Color-Based MethodsTracking performance can be significantly increased by taking color into account.As shown in[6],the mean-shift pursuit technique based on a dissimilarity measure of color distributions can accurately track deformable objects in real time and in a monocular context.In[16],the images are segmented pixelwise into different classes,thus modeling people by continuously updated Gaussian mixtures.A standard tracking process is then performed using a Bayesian framework,which helps keep track of people,even when there are occlusions.In such a case,models of persons in front keep being updated, whereas the system stops updating occluded ones,which may cause trouble if their appearances have changed noticeably when they re-emerge.More recently,multiple humans have been simulta-neously detected and tracked in crowded scenes[20]by using Monte-Carlo-based methods to estimate their number and positions.In[23],multiple people are also detected and tracked in front of complex backgrounds by using mixture particle filters guided by people models learned by boosting.In[9],multicue3D object tracking is addressed by combining particle-filter-based Bayesian tracking and detection using learned spatiotemporal shapes.This ap-proach leads to impressive results but requires shape, texture,and image depth information as input.Finally, Smith et al.[25]propose a particle-filtering scheme that relies on Markov chain Monte Carlo(MCMC)optimization to handle entrances and departures.It also introduces a finer modeling of interactions between individuals as a product of pairwise potentials.2.2Multiview ApproachesDespite the effectiveness of such methods,the use of multiple cameras soon becomes necessary when one wishes to accurately detect and track multiple people and compute their precise3D locations in a complex environment. Occlusion handling is facilitated by using two sets of stereo color cameras[14].However,in most approaches that only take a set of2D views as input,occlusion is mainly handled by imposing temporal consistency in terms of a motion model,be it Kalman filtering or more general Markov models.As a result,these approaches may not always be able to recover if the process starts diverging.2.2.1Blob-Based MethodsIn[19],Kalman filtering is applied on3D points obtained by fusing in a least squares sense the image-to-world projections of points belonging to binary blobs.Similarly,in[1],a Kalman filter is used to simultaneously track in2D and3D,and objectFig.1.Images from two indoor and two outdoor multicamera video sequences that we use for our experiments.At each time step,we draw a box around people that we detect and assign to them an ID number that follows them throughout thesequence.Fig.2.Cumulative distributions of the position estimate error on a3,800-frame sequence(see Section6.4.1for details).locations are estimated through trajectory prediction during occlusion.In[8],a best hypothesis and a multiple-hypotheses approaches are compared to find people tracks from 3D locations obtained from foreground binary blobs ex-tracted from multiple calibrated views.In[21],a recursive Bayesian estimation approach is used to deal with occlusions while tracking multiple people in multiview.The algorithm tracks objects located in the intersections of2D visual angles,which are extracted from silhouettes obtained from different fixed views.When occlusion ambiguities occur,multiple occlusion hypotheses are generated,given predicted object states and previous hypotheses,and tested using a branch-and-merge strategy. The proposed framework is implemented using a customized particle filter to represent the distribution of object states.Recently,Morariu and Camps[17]proposed a method based on dimensionality reduction to learn a correspondence between the appearance of pedestrians across several views. This approach is able to cope with the severe occlusion in one view by exploiting the appearance of the same pedestrian on another view and the consistence across views.2.2.2Color-Based MethodsMittal and Davis[18]propose a system that segments,detects, and tracks multiple people in a scene by using a wide-baseline setup of up to16synchronized cameras.Intensity informa-tion is directly used to perform single-view pixel classifica-tion and match similarly labeled regions across views to derive3D people locations.Occlusion analysis is performed in two ways:First,during pixel classification,the computa-tion of prior probabilities takes occlusion into account. Second,evidence is gathered across cameras to compute a presence likelihood map on the ground plane that accounts for the visibility of each ground plane point in each view. Ground plane locations are then tracked over time by using a Kalman filter.In[13],individuals are tracked both in image planes and top view.The2D and3D positions of each individual are computed so as to maximize a joint probability defined as the product of a color-based appearance model and2D and 3D motion models derived from a Kalman filter.2.2.3Occupancy Map MethodsRecent techniques explicitly use a discretized occupancy map into which the objects detected in the camera images are back-projected.In[2],the authors rely on a standard detection of stereo disparities,which increase counters associated to square areas on the ground.A mixture of Gaussians is fitted to the resulting score map to estimate the likely location of individuals.This estimate is combined with a Kallman filter to model the motion.In[26],the occupancy map is computed with a standard visual hull procedure.One originality of the approach is to keep for each resulting connex component an upper and lower bound on the number of objects that it can contain. Based on motion consistency,the bounds on the various components are estimated at a certain time frame based on the bounds of the components at the previous time frame that spatially intersect with it.Although our own method shares many features with these techniques,it differs in two important respects that we will highlight:First,we combine the usual color and motion models with a sophisticated approach based on a generative model to estimating the probabilities of occu-pancy,which explicitly handles complex occlusion interac-tions between detected individuals,as will be discussed in Section5.Second,we rely on dynamic programming to ensure greater stability in challenging situations by simul-taneously handling multiple frames.3P ROBLEM F ORMULATIONOur goal is to track an a priori unknown number of people from a few synchronized video streams taken at head level. In this section,we formulate this problem as one of finding the most probable state of a hidden Markov process,given the set of images acquired at each time step,which we will refer to as a temporal frame.We then briefly outline the computation of the relevant probabilities by using the notations summarized in Tables1and2,which we also use in the following two sections to discuss in more details the actual computation of those probabilities.3.1Computing the Optimal TrajectoriesWe process the video sequences by batches of T¼100frames, each of which includes C images,and we compute the most likely trajectory for each individual.To achieve consistency over successive batches,we only keep the result on the first 10frames and slide our temporal window.This is illustrated in Fig.3.We discretize the visible part of the ground plane into a finite number G of regularly spaced2D locations and we introduce a virtual hidden location H that will be used to model entrances and departures from and into the visible area.For a given batch,let L t¼ðL1t;...;L NÃtÞbe the hidden stochastic processes standing for the locations of individuals, whether visible or not.The number NÃstands for the maximum allowable number of individuals in our world.It is large enough so that conditioning on the number of visible ones does not change the probability of a new individual entering the scene.The L n t variables therefore take values in f1;...;G;Hg.Given I t¼ðI1t;...;I C tÞ,the images acquired at time t for 1t T,our task is to find the values of L1;...;L T that maximizePðL1;...;L T j I1;...;I TÞ:ð1ÞAs will be discussed in Section 4.1,we compute this maximum a posteriori in a greedy way,processing one individual at a time,including the hidden ones who can move into the visible scene or not.For each one,the algorithm performs the computation,under the constraint that no individual can be at a visible location occupied by an individual already processed.In theory,this approach could lead to undesirable local minima,for example,by connecting the trajectories of two separate people.However,this does not happen often because our batches are sufficiently long.To further reduce the chances of this,we process individual trajectories in an order that depends on a reliability score so that the most reliable ones are computed first,thereby reducing the potential for confusion when processing the remaining ones. This order also ensures that if an individual remains in the hidden location,then all the other people present in the hidden location will also stay there and,therefore,do not need to be processed.FLEURET ET AL.:MULTICAMERA PEOPLE TRACKING WITH A PROBABILISTIC OCCUPANCY MAP269Our experimental results show that our method does not suffer from the usual weaknesses of greedy algorithms such as a tendency to get caught in bad local minima.We thereforebelieve that it compares very favorably to stochastic optimization techniques in general and more specifically particle filtering,which usually requires careful tuning of metaparameters.3.2Stochastic ModelingWe will show in Section 4.2that since we process individual trajectories,the whole approach only requires us to define avalid motion model P ðL n t þ1j L nt ¼k Þand a sound appearance model P ðI t j L n t ¼k Þ.The motion model P ðL n t þ1j L nt ¼k Þ,which will be intro-duced in Section 4.3,is a distribution into a disc of limited radiusandcenter k ,whichcorresponds toalooseboundonthe maximum speed of a walking human.Entrance into the scene and departure from it are naturally modeled,thanks to the270IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE,VOL.30,NO.2,FEBRUARY 2008TABLE 2Notations (RandomQuantities)Fig.3.Video sequences are processed by batch of 100frames.Only the first 10percent of the optimization result is kept and the rest is discarded.The temporal window is then slid forward and the optimiza-tion is repeated on the new window.TABLE 1Notations (DeterministicQuantities)hiddenlocation H,forwhichweextendthemotionmodel.The probabilities to enter and to leave are similar to the transition probabilities between different ground plane locations.In Section4.4,we will show that the appearance model PðI t j L n t¼kÞcan be decomposed into two terms.The first, described in Section4.5,is a very generic color-histogram-based model for each individual.The second,described in Section5,approximates the marginal conditional probabil-ities of occupancy of the ground plane,given the results of a background subtractionalgorithm,in allviewsacquired atthe same time.This approximation is obtained by minimizing the Kullback-Leibler divergence between a product law and the true posterior.We show that this is equivalent to computing the marginal probabilities of occupancy so that under the product law,the images obtained by putting rectangles of human sizes at occupied locations are likely to be similar to the images actually produced by the background subtraction.This represents a departure from more classical ap-proaches to estimating probabilities of occupancy that rely on computing a visual hull[26].Such approaches tend to be pessimistic and do not exploit trade-offs between the presence of people at different locations.For instance,if due to noise in one camera,a person is not seen in a particular view,then he would be discarded,even if he were seen in all others.By contrast,in our probabilistic framework,sufficient evidence might be present to detect him.Similarly,the presence of someone at a specific location creates an occlusion that hides the presence behind,which is not accounted for by the hull techniques but is by our approach.Since these marginal probabilities are computed indepen-dently at each time step,they say nothing about identity or correspondence with past frames.The appearance similarity is entirely conveyed by the color histograms,which has experimentally proved sufficient for our purposes.4C OMPUTATION OF THE T RAJECTORIESIn Section4.1,we break the global optimization of several people’s trajectories into the estimation of optimal individual trajectories.In Section 4.2,we show how this can be performed using the classical Viterbi’s algorithm based on dynamic programming.This requires a motion model given in Section 4.3and an appearance model described in Section4.4,which combines a color model given in Section4.5 and a sophisticated estimation of the ground plane occu-pancy detailed in Section5.We partition the visible area into a regular grid of G locations,as shown in Figs.5c and6,and from the camera calibration,we define for each camera c a family of rectangular shapes A c1;...;A c G,which correspond to crude human silhouettes of height175cm and width50cm located at every position on the grid.4.1Multiple TrajectoriesRecall that we denote by L n¼ðL n1;...;L n TÞthe trajectory of individual n.Given a batch of T temporal frames I¼ðI1;...;I TÞ,we want to maximize the posterior conditional probability:PðL1¼l1;...;L Nül NÃj IÞ¼PðL1¼l1j IÞY NÃn¼2P L n¼l n j I;L1¼l1;...;L nÀ1¼l nÀ1ÀÁ:ð2ÞSimultaneous optimization of all the L i s would beintractable.Instead,we optimize one trajectory after theother,which amounts to looking for^l1¼arg maxlPðL1¼l j IÞ;ð3Þ^l2¼arg maxlPðL2¼l j I;L1¼^l1Þ;ð4Þ...^l Nüarg maxlPðL Nül j I;L1¼^l1;L2¼^l2;...Þ:ð5ÞNote that under our model,conditioning one trajectory,given other ones,simply means that it will go through noalready occupied location.In other words,PðL n¼l j I;L1¼^l1;...;L nÀ1¼^l nÀ1Þ¼PðL n¼l j I;8k<n;8t;L n t¼^l k tÞ;ð6Þwhich is PðL n¼l j IÞwith a reduced set of the admissiblegrid locations.Such a procedure is recursively correct:If all trajectoriesestimated up to step n are correct,then the conditioning onlyimproves the estimate of the optimal remaining trajectories.This would suffice if the image data were informative enoughso that locations could be unambiguously associated toindividuals.In practice,this is obviously rarely the case.Therefore,this greedy approach to optimization has un-desired side effects.For example,due to partly missinglocalization information for a given trajectory,the algorithmmight mistakenly start following another person’s trajectory.This is especially likely to happen if the tracked individualsare located close to each other.To avoid this kind of failure,we process the images bybatches of T¼100and first extend the trajectories that havebeen found with high confidence,as defined below,in theprevious batches.We then process the lower confidenceones.As a result,a trajectory that was problematic in thepast and is likely to be problematic in the current batch willbe optimized last and,thus,prevented from“stealing”somebody else’s location.Furthermore,this approachincreases the spatial constraints on such a trajectory whenwe finally get around to estimating it.We use as a confidence score the concordance of theestimated trajectories in the previous batches and thelocalization cue provided by the estimation of the probabil-istic occupancy map(POM)described in Section5.Moreprecisely,the score is the number of time frames where theestimated trajectory passes through a local maximum of theestimated probability of occupancy.When the POM does notdetect a person on a few frames,the score will naturallydecrease,indicating a deterioration of the localizationinformation.Since there is a high degree of overlappingbetween successive batches,the challenging segment of atrajectory,which is due to the failure of the backgroundsubtraction or change in illumination,for instance,is met inseveral batches before it actually happens during the10keptframes.Thus,the heuristic would have ranked the corre-sponding individual in the last ones to be processed whensuch problem occurs.FLEURET ET AL.:MULTICAMERA PEOPLE TRACKING WITH A PROBABILISTIC OCCUPANCY MAP2714.2Single TrajectoryLet us now consider only the trajectory L n ¼ðL n 1;...;L nT Þof individual n over T temporal frames.We are looking for thevalues ðl n 1;...;l nT Þin the subset of free locations of f 1;...;G;Hg .The initial location l n 1is either a known visible location if the individual is visible in the first frame of the batch or H if he is not.We therefore seek to maximizeP ðL n 1¼l n 1;...;L n T ¼l nt j I 1;...;I T Þ¼P ðI 1;L n 1¼l n 1;...;I T ;L n T ¼l nT ÞP ðI 1;...;I T Þ:ð7ÞSince the denominator is constant with respect to l n ,we simply maximize the numerator,that is,the probability of both the trajectories and the images.Let us introduce the maximum of the probability of both the observations and the trajectory ending up at location k at time t :Èt ðk Þ¼max l n 1;...;l nt À1P ðI 1;L n 1¼l n 1;...;I t ;L nt ¼k Þ:ð8ÞWe model jointly the processes L n t and I t with a hidden Markov model,that isP ðL n t þ1j L n t ;L n t À1;...Þ¼P ðL n t þ1j L nt Þð9ÞandP ðI t ;I t À1;...j L n t ;L nt À1;...Þ¼YtP ðI t j L n t Þ:ð10ÞUnder such a model,we have the classical recursive expressionÈt ðk Þ¼P ðI t j L n t ¼k Þ|fflfflfflfflfflfflfflfflffl{zfflfflfflfflfflfflfflfflffl}Appearance modelmax P ðL n t ¼k j L nt À1¼ Þ|fflfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflffl{zfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflffl}Motion modelÈt À1ð Þð11Þto perform a global search with dynamic programming,which yields the classic Viterbi algorithm.This is straight-forward,since the L n t s are in a finite set of cardinality G þ1.4.3Motion ModelWe chose a very simple and unconstrained motion model:P ðL n t ¼k j L nt À1¼ Þ¼1=Z Áe À k k À k if k k À k c 0otherwise ;&ð12Þwhere the constant tunes the average human walkingspeed,and c limits the maximum allowable speed.This probability is isotropic,decreases with the distance from location k ,and is zero for k k À k greater than a constantmaximum distance.We use a very loose maximum distance cof one square of the grid per frame,which corresponds to a speed of almost 12mph.We also define explicitly the probabilities of transitions to the parts of the scene that are connected to the hidden location H .This is a single door in the indoor sequences and all the contours of the visible area in the outdoor sequences in Fig.1.Thus,entrance and departure of individuals are taken care of naturally by the estimation of the maximum a posteriori trajectories.If there are enough evidence from the images that somebody enters or leaves the room,then this procedure will estimate that the optimal trajectory does so,and a person will be added to or removed from the visible area.4.4Appearance ModelFrom the input images I t ,we use background subtraction to produce binary masks B t such as those in Fig.4.We denote as T t the colors of the pixels inside the blobs and treat the rest of the images as background,which is ignored.Let X tk be a Boolean random variable standing for the presence of an individual at location k of the grid at time t .In Appendix B,we show thatP ðI t j L n t ¼k Þzfflfflfflfflfflfflfflfflffl}|fflfflfflfflfflfflfflfflffl{Appearance model/P ðL n t ¼k j X kt ¼1;T t Þ|fflfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflffl{zfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflfflffl}Color modelP ðX kt ¼1j B t Þ|fflfflfflfflfflfflfflfflfflfflffl{zfflfflfflfflfflfflfflfflfflfflffl}Ground plane occupancy:ð13ÞThe ground plane occupancy term will be discussed in Section 5,and the color model term is computed as follows.4.5Color ModelWe assume that if someone is present at a certain location k ,then his presence influences the color of the pixels located at the intersection of the moving blobs and the rectangle A c k corresponding to the location k .We model that dependency as if the pixels were independent and identically distributed and followed a density in the red,green,and blue (RGB)space associated to the individual.This is far simpler than the color models used in either [18]or [13],which split the body area in several subparts with dedicated color distributions,but has proved sufficient in practice.If an individual n was present in the frames preceding the current batch,then we have an estimation for any camera c of his color distribution c n ,since we have previously collected the pixels in all frames at the locations272IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE,VOL.30,NO.2,FEBRUARY2008Fig.4.The color model relies on a stochastic modeling of the color of the pixels T c t ðk Þsampled in the intersection of the binary image B c t produced bythe background subtraction and the rectangle A ck corresponding to the location k .。

视觉惯性导航融合算法研究进展摘要:视觉惯性导航一直是无人驾驶与机器人领域重点、难点环节。

首先介绍经典算法框架,从基于最优平滑算法和预积分理论两个层面介绍经典视觉惯性融合算法。

然后介绍新型算法框架,主要在深度学习基础上,从对整体框架学习的程度,将新型视觉惯性融合算法分为两类,部分学习和整体学习,最后介绍了主流算法中关键技术与未来展望。

关键词:视觉惯性导航;优化;耦合算法;深度学习0 引言近些年来,伴随着图形处理技术、计算机视觉和元宇宙等相关技术的进步,实现无人设备高精度、强鲁棒性的定位导航始终为无人系统中的关键环节和待突破环节。

美国国防研究与工程署连续数年组织机器人生存挑战赛,以求在复杂环境下探索提升导航性能,确保美军在无人领域的领先地位。

中国科协也于2020年将无人车的高精度智能导航问题列为十大工程技术难题之一[1]。

无人系统导航领域一直以即时定位与地图构建(Simultaneous Localization And Mapping,SLAM)技术为核心。

纯视觉导航由于缺乏深度信息导致对运动敏感薄弱,需要依赖回环检测来校正误差,这导致其鲁棒性不足。

视觉导航与卫星导航系统的组合导航在复杂的城市场景下,光影变幻导致有效特征点选取难度变大,诸如地下车库等场景对卫星信号干扰强烈,而本文介绍的视觉惯性导航有着显著的优势。

一是误差的互补:视觉导航的稳定位姿估计可以弥补惯性导航自身固存的累积误差,惯性导航测量单元(Inertial Measurement Unit,IMU)高频率的动态信号可以补足相机的深度信息;二是适用速度领域的互补:视觉导航在低速静态领域的优秀表现,可以抑制IMU的零漂。

而在高速运动场景领域,惯性导航可以解决视觉导航由于图像帧之间缺乏重叠区域,导致特征提取算法失效的问题。

1 经典融合框架经典融合框架中,松耦合算法是视觉惯性领域早期重点研究内容。

松耦合算法主要依靠卡尔曼滤波器及其后续改进版本,诸如应用广泛的扩展卡尔曼滤波器(Extended Kalman Filter,EKF)、基于蒙特卡洛原理的无迹卡尔曼滤波器(Unscented Kalman Filter,UKF)、非线性非高斯领域表现不俗的粒子滤波器(Particle Filter,PF)等的松耦合方案陆续提出。

PETS 2009 Benchmark Data(PETS 2009基准数据集)数据摘要:The datasets are multisensor sequences containing different crowd activities.The aim of this workshop is to employ existing or new systems for the detection of one or more of 3 types of crowd surveillance characteristics/events within a real-world environment. The scenarios are filmed from multiple cameras and involve up to approximately forty actors.More specifically, the challenge includes estimation of crowd person count and density, tracking of individual(s) within a crowd, and detection of flow and crowd events.中文关键词:跟踪,事件检测,拥挤人群,人员计数,人群密度,PETS 2009,英文关键词:tracking,event detection,crowd person,count,person density,PETS 2009,数据格式:TEXT数据用途:The aim of this workshop is to employ existing or new systems for the detection of one or more of 3 types of crowd surveillance characteristics/events within a real-world environment.数据详细介绍:PETS 2009 Benchmark DataOverviewThe datasets are multisensor sequences containing different crowd activities. Please e-mail *********************if you require assistance obtaining these datasets for the workshop.Aims and ObjectivesThe aim of this workshop is to employ existing or new systems for the detection of one or more of 3 types of crowd surveillance characteristics/events within a real-world environment. The scenarios are filmed from multiple cameras and involve up to approximately forty actors. More specifically, the challenge includes estimation of crowd person count and density, tracking of individual(s) within a crowd, and detection of flow and crowd events.News06 March 2009: The PETS2009 crowd dataset is released.01 April 2009: The PETS2009 submission details are released. Please see Author Instructions.PreliminariesPlease read the following information carefully before processing the dataset, as the details are essential to the understanding of when notification of events should be generated by your system. Please check regularly for updates. Summary of the Dataset structureThe dataset is organised as follows:Calibration DataS0: Training Datacontains sets background, city center, regular flowS1: Person Count and Density Estimationcontains sets L1,L2,L3S2: People Trackingcontains sets L1,L2,L3S3: Flow Analysis and Event Recognitioncontains sets Event Recognition and Multiple FlowEach subset contains several sequences and each sequence contains different views (4 up to 8). This is shown in the diagram below:Calibration DataThe calibration data (one file for each of the 8 cameras) can be found here. The ground plane is assumed to be the Z=0 plane. C++ code (available here) is provided to allow you to load and use the calibration parameters in your program (courtesy of project ETISEO). The provided calibration parameters were obtained using the freely available Tsai Camera Calibration Software by Reg Willson. All spatial measurements are in metres.The cameras used to film the datasets are:view Model Resolution frame rate Comments001 A xis 223M 768x576 ~7 Progressive scan002 A xis 223M 768x576 ~7 Progressive scan003 P TZ Axis 233D 768x576 ~7 Progressive scan004 P TZ Axis 233D 768x576 ~7 Progressive scan005 S ony DCR-PC1000E 3xCMOS 720x576 ~7 ffmpeg De-interlaced 006 S ony DCR-PC1000E 3xCMOS 720x576 ~7 ffmpeg De-interlaced 007 C anon MV-1 1xCCD w 720x576 ~7 Progressive scan008 C anon MV-1 1xCCD w 720x576 ~7 Progressive scanFrames are compressed as JPEG image sequences. All sequences (except one) contain Views 001-004. A few sequences also contain Views 005-008. Please see below for more information.OrientationThe cameras are installed at the locations shown below to cover an approximate area of 100m x 30m (the scale of the map is 20m):The GPS coordinates of the centre of the recording are: 51°26'18.5N 000°56'40.00WThe direct link to the Google maps is as follows: Google MapsCamera installation points are shown above and sample frames are shown below:view 001 view 002 view 003 view 004view 005 view 006 view 007 view 008SynchronisationPlease note that while effort has been made to make sure the frames from different views are synchronised, there might be slight delays and frame drops in some cases. In particular View 4 suffers from frame rate instability and we suggest it be used as a supplementary source of information. Please let us know if you encounter any problems or inconsistencies.DownloadDataset S0: Training DataThis dataset contains three sets of training sequences from different views provided to help researchers obtain the following models from multiple views: Background model for all the cameras. Note that the scene can contain people or moving objects. Furthermore, the frames in this set are not necessarily synchronised. For Views 001-004 Different sequences corresponding to the following time stamps: 13-05, 13-06, 13-07, 13-19, 13-32, 13-38, 13-49 are provided. For views 005-008 (DV cameras) 144 non-synchronised frames are provided.Includes random walking crowd flow. Sequence 9 with time stmp 12-34 using Views 001-008 and Sequence 10 with timestamp 14-55 using Views 001-004. Includes regular walking pace crowd flow. Sequences 11-15 with timestamps 13-57, 13-59, 14-03, 14-06, 14-29 and for Views 001-004.DownloadBackground set [1.8 GB]City center [1.8 GB]Regular flow [1.3 GB]Dataset S1: Person Count and Density EstimationThree regions, R0, R1 and R2 are defined in View 001 only (shown in the example image). The coordinates of the top left and bottom right corners (in pixels) are given in the following table.Region Top-left Bottom-rightR0 (10,10) (750,550)R1 (290,160) (710,430)R2 (30,130) (230,290)Definition of crowd density (%): crowd density is based on a maximum occupancy (100%) of 40 people in 10 square metres on the ground. One person is assumed to occupy 0.25 square metres on the ground.Scenario: S1.L1 walkingElements: medium density crowd, overcastSequences: Sequence 1 with timestamp 13-57; Sequence 2 with timestamp 13-59. Sequences 1-2 use Views 001-004.Subjective Difficulty: Level 1Task: The task is to count the number of people in R0 for each frame of the sequence in View 1 only. As a secondary challenge the crowd density in regions R1 and R2 can also be reported (mapped to ground plane occupancy, possibly using multiple views).Download [502 MB]Sample Frames:Scenario: S1.L2 walkingElements: high density crowd, overcastSequences: Sequence 1 with timestamp 14-06; Sequence 2 with timestamp 14-31. Sequences 1-2 use Views 001-004.Subjective Difficulty: Level 2Task: This scenario contains a densely grouped crowd who walk from onepoint to another. There are two sequences corresponding timestamps 14-06 and 14-31.The task related to timestamp 14-06 is to estimate the crowd density in Region R1 and R2 at each frame of the sequence.The designated task for the sequence Time_14-31 is to determine both the total number of people entering through the brown line from the left side AND the total number of people exiting from purple and red lines, shown in the opposite figure, throughout the whole sequence. The coordinates of the entry and exit lines are given below for reference.Line Start EndEntry : brown (730,250) (730,530)Exit1 : red (230,170) (230,400)Exit2 : purple (500,210) (720,210)Download [367 MB]Sample Frames:Scenario: S1.L3 runningElements: medium density crowd, bright sunshine and shadows Sequences: Sequence 1 with timestamp 14-17; Sequence 2 with timestamp 14-33. Sequences 1-2 use Views 001-004.Subjective Difficulty: Level 3Task: This scenario contains a crowd of people who, on reaching a point in the scene, begin to run. The task is to measure the crowd density in Region R1 at each frame of the sequence.Download [476 MB]Sample Frames:Dataset S2: People TrackingScenario: S2.L1 walkingElements: sparse crowdSequences: Sequence 1 with timestamp 12-34 using Views 001-008 except View_002 for cross validation (see below).Subjective Difficulty: L1Task: Track all of the individuals in the sequence. If you undertake monocular tracking only, report the 2D bounding box location for each individual in the view used; if two or more views are processed, report the 2D bounding box location for each individual as back projected into View_002 using the camera calibration parameters provided (this equates to a leave-one-out validation). Note the origin (0,0) of the image is assumed top-left. Validation will be performed using manually labelled ground truth.Download [997 MB]Sample Frames:Scenario: S2.L2 walking Elements: medium density crowd Sequences: Sequence 1 with timestamp 14-55 using Views 001-004. Subjective Difficulty: L2Task: Track the individuals marked A and B (see figure) in the sequence and provide 2D bounding box locations of the individuals in View_002 which will be validated using manually labelled ground truth. Note the origin (0,0) of the image is assumed top-left. Note that individual B exits the field of view and returns toward the end of the sequence.Download [442 MB]Scenario: S2.L3 WalkingElements: dense crowdSequences: Sequence 1 with timestamp 14-41 using Views 001-004. Subjective Difficulty: L3Task: Track the individuals marked A and B in the sequence and provide 2D bounding box information in View_002 for each individual which will be validated using manually labelled ground truth.Download [259 MB]Dataset S3: Flow Analysis and Event RecognitionScenario: S3.Multiple FlowsElements: dense crowd, runningSequences: Sequences 1-5 with timestamps 12-43 (using Views 1,2,5,6,7,8) , 14-13, 14-37, 14-46 and 14-52. Sequences 2-5 use Views 001-004. Subjective Difficulty: L2Task: Detect and estimate the multiple flows in the provided sequences, mapped onto the ground plane as a occupancy map flow. Further details of the exact task requirements are contained under Author Instructions. These would be compared with ground truth optical flow of major flows in the sequences on the ground plane.Download [760 MB]Sample Frames:Scenario: S3.Event RecognitionElements: dense crowdSequences: Sequences 1-4 with timestamps 14-16, 14-27, 14-31 and 14-33. Sequences 1-4 use Views 001-004.Subjective Difficulty: L3Task: This dataset contains different crowd activities and the task is to provide a probabilistic estimation of each of the following events: walking, running, evacuation (rapid dispersion), local dispersion, crowd formation and splitting at different time instances. Furthermore, we are interested in systems that can identify the start and end of the events as well as transitions between them. Download [1.2 GB]Sample Frames:Additional InformationThe scenarios can also be downloaded from ftp:///pub/PETS2009/ (use anonymous login). Warning: ftp:// is not listing files correctly on some ftp clients. If you experience problems you can connect to the http server at /PETS2009/.Legal note: The video sequences are copyright University of Reading and permission is hereby granted for free download for the purposes of the PETS 2009 workshop and academic and industrial research. Where the data is disseminated (e.g. publications, presentations) the source should be acknowledged.数据预览:点此下载完整数据集。

第18卷第4期铁道科学与工程学报Volume18Number4 2021年4月Journal of Railway Science and Engineering April2021 DOI:10.19713/ki.43−1423/u.T20200583基于单目视觉三维重建的货运列车超限检测方法研究杜伦平1,朱天赐1,刘期柏2,梁力东2,王泉东1,傅勤毅1,刘斯斯1(1.中南大学交通运输工程学院,湖南长沙410072;2.广州局集团公司货运部,广东广州510088)摘要:针对基于雷达和激光等技术的货运列车超限检测系统存在检测区域不完整及只能在列车移动状态下进行测量的缺陷,提出一种基于单目视觉三维重建的货运列车超限检测方法。

通过单目视觉三维重建算法对获取的序列图像进行建模处理得到目标货运列车的三维点云模型。

对三维点云模型进行全局坐标系转换与切片投影,得到目标货运列车若干横截面二维点云图形。

结合铁路货运列车超限检测标准,构建标准界限图形。

将获得的二维点云图形代入标准界限图形中进行超限检测判别。

实验结果显示,该方法具有较高的检测精度和检测效率,能够满足铁路货运列车超限检测作业要求。

关键词:货运列车;超限检测;单目视觉;三维重建;点云模型处理中图分类号:TP29文献标志码:A开放科学(资源服务)标识码(OSID)文章编号:1672−7029(2021)04−1009−08Research on detection method of freight train gauge-exceeding based on3D reconstruction of monocular visionDU Lunping1,ZHU Tianci1,LIU Qibai2,LIANG Lidong2,WANG Quandong1,FU Qinyi1,LIU Sisi1(1.School of Traffic and Transportation Engineering,Central South University,Changsha410075,China;2.Freight Department of China Railway Guangzhou Group Co.,Ltd.,Guangzhou510088,China)Abstract:In view of the defects of the detection system of freight train gauge-exceeding based on radar and laser technology that the detection area is incomplete and can only be measured in the moving state of the train,a method of gauge-exceeding detection of freight train based on monocular visual3D reconstruction was proposed. The3D point cloud model of the target freight train was obtained by modeling and processing the acquired sequence images through the monocular visual3D reconstruction algorithm.The global coordinate system conversion and slice projection were performed on the three-dimensional point cloud model to obtain two-dimensional point cloud graphics of several cross sections of the target freight bined with the railway freight train gauge-exceeding detection standard,a standard limit graph was constructed.The obtained two-dimensional point cloud graphics were substituted into the standard boundary graphics for gauge-exceeding detection and discrimination.Experimental results show that the method has high detection accuracy and efficiency,and can meet the requirements of railway freight train gauge-exceeding detection.Key words:freight train;gauge-exceeding detection;monocular vision;three-dimensional reconstruction;point cloud model processing收稿日期:2020−06−24基金项目:广州铁路局科研项目(广铁合货运部(2019)0002);国家自然科学基金青年基金资助项目((2018)61806222)通信作者:刘斯斯(1988−),女,湖南长沙人,讲师,博士,从事计算机视觉、大数据挖掘技术研究;E−mail:********************.cn铁道科学与工程学报2021年4月1010铁路运输在我国运输业中占据决定性地位。

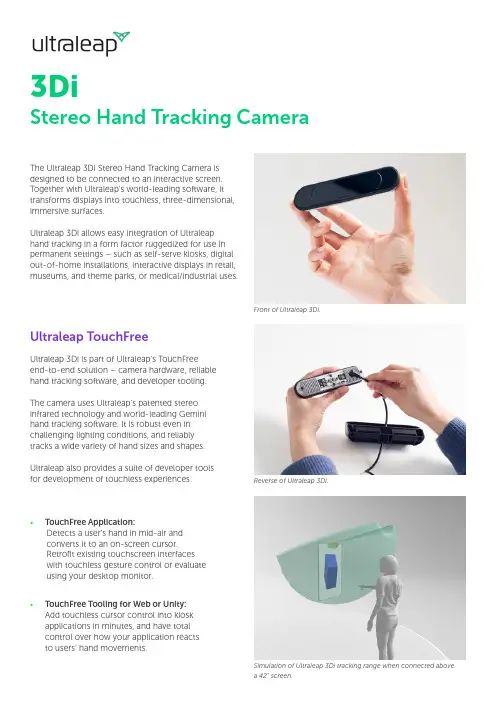

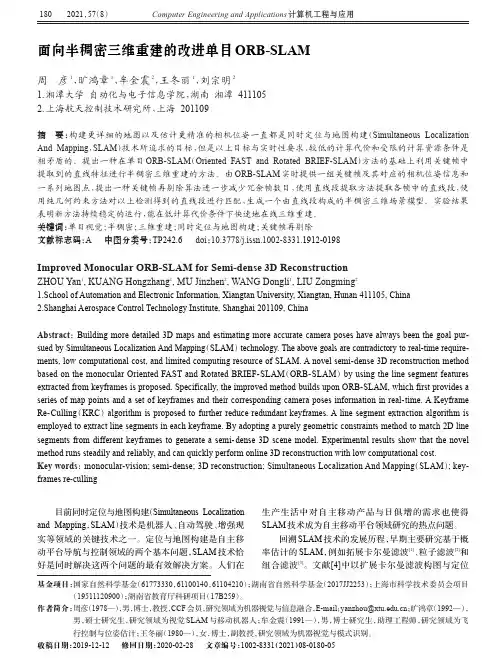

Ultraleap TouchFreeUltraleap 3Di is part of Ultraleap’s TouchFreeend-to-end solution – camera hardware, reliablehand tracking software, and developer tooling.The camera uses Ultraleap’s patented stereoinfrared technology and world-leading Geminihand tracking software. It is robust even inchallenging lighting conditions, and reliablytracks a wide variety of hand sizes and shapes.Ultraleap also provides a suite of developer toolsfor development of touchless experiences:• TouchFree Application:Detects a user’s hand in mid-air andconverts it to an on-screen cursor.Retrofit existing touchscreen interfaceswith touchless gesture control or evaluateusing your desktop monitor.• TouchFree Tooling for Web or Unity:Add touchless cursor control into kioskapplications in minutes, and have totalcontrol over how your application reactsto users’ hand movements. The Ultraleap 3Di Stereo Hand Tracking Camera isdesigned to be connected to an interactive screen.Together with Ultraleap’s world-leading software, ittransforms displays into touchless, three-dimensional,immersive surfaces.Ultraleap 3Di allows easy integration of Ultraleaphand tracking in a form factor ruggedized for use inpermanent settings – such as self-serve kiosks, digitalout-of-home installations, interactive displays in retail,museums, and theme parks, or medical/industrial uses.3DiStereo Hand Tracking CameraSimulation of Ultraleap 3Di tracking range when connected abovea 42” screen.Reverse of Ultraleap 3Di.Front of Ultraleap 3Di.SpecificationsUltraleap reserves the right to update or modify this specification without notice.Device dimensionsAll dimensions in mmw/https://e/******************o/UK: +44 117 325 9002o/US: +1 650 600 9916 UL-005619-DS - 3Di Datasheet (Issue 10)MountingUltraleap 3Di is designed to be integrated directly into permanent installations. Provided with the camera inthe product box is a Multi Mount. This mount holds the camera to the kiosk or screen and provides optimum camera placement. It can also sit on any horizontal surface to support desktop evaluation or deployments.Detailed guidelines on camera placement, mounting options, TouchFree software setup and designing for touchless experiences can be found on https:///touchfree-user-manual/.Multi Mount and Ultraleap 3Di.。

算倣语咅信is与电ifiChina Computer&Communication2021年第5期利用单目图像重建人体三维模型钱融王勇王瑛(广东工业大学计算机学院,广东广州510006)摘要:人体三维模型在科幻电影、网上购物的模拟试衣等方面有广泛的应用场景,但是在单目图像重建中存在三维信息缺失、重建模型不具有贴合的三维表面等问题-为了解决上述的问题,笔者提出基于SMPL模型的人体三维模型重建算法。

该算法先预估人物的二维关节点,使用SMPL模型关节与预估的二维关节相匹配,最后利用人体三维模型数据库的姿势信息对重建的人体模型进行姿势先验,使得重建模型具有合理的姿态与形状.实验结果表明,该算法能有效预估人体关节的三维位置,且能重建与图像人物姿势、形态相似的人体三维模型.关键词:人体姿势估计;三维人体重建;单目图像重建;人体形状姿势;SMPL模型中图分类号:TP391.41文献标识码:A文章编号:1003-9767(2021)05-060-05Reconstruction of a Three-dimensional Human Body Model Using Monocular ImagesQIAN Rong,WANG Yong,WANG Ying(School of Computer,Guangdong University of Technology,Guangzhou Guangdong510006,China) Abstract:The human body3D model are widely used in science fiction movies,online shopping simulation fittings,etc,but there is a lack of3D information in monocular image reconstruction,and the reconstructed model does not have problems such as a fit 3D surface.In order to solve the above mentioned problems,a human body3D model reconstruction algorithm based on SMPL model is proposed.The algorithm first estimates the two-dimensional joint points of the character,and uses the SMPL model joints to match the estimated two-dimensional joints;finally,the posture information of the three-dimensional human body model database is used to perform posture prior to the reconstructed human body model,making the reconstructed model reasonable Posture and shape.The algorithm was tested on the PI-INF-3DHP data set.The experimental results show that the algorithm can effectively predict the3D position of human joints,and can reconstruct a3D model of the human body similar to the pose and shape of the image.Keywords:human pose estimation;3D human reconstruction;monocular image reconstruction;human shape and pose;SMPL0引言人体三维模型所承载的信息量远远大于人体二维图像,能满足高层的视觉任务需求,例如在网购中提供线上试衣体验,为科幻电影提供大量的人体三维数据。