Algorithmic cooling and scalable NMR quantum computers

- 格式:pdf

- 大小:216.36 KB

- 文档页数:23

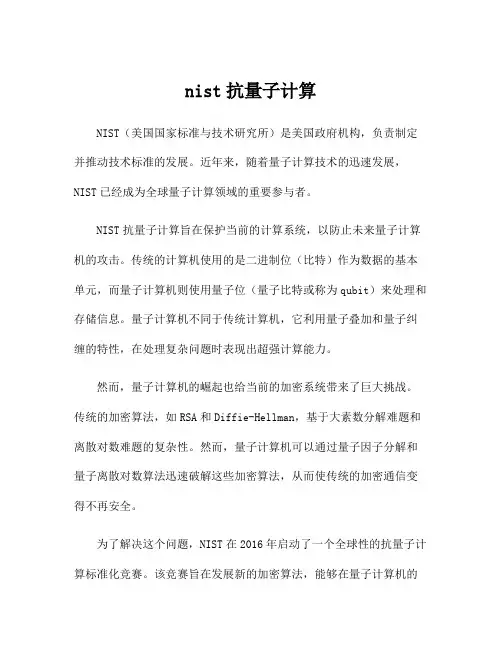

nist抗量子计算NIST(美国国家标准与技术研究所)是美国政府机构,负责制定并推动技术标准的发展。

近年来,随着量子计算技术的迅速发展,NIST已经成为全球量子计算领域的重要参与者。

NIST抗量子计算旨在保护当前的计算系统,以防止未来量子计算机的攻击。

传统的计算机使用的是二进制位(比特)作为数据的基本单元,而量子计算机则使用量子位(量子比特或称为qubit)来处理和存储信息。

量子计算机不同于传统计算机,它利用量子叠加和量子纠缠的特性,在处理复杂问题时表现出超强计算能力。

然而,量子计算机的崛起也给当前的加密系统带来了巨大挑战。

传统的加密算法,如RSA和Diffie-Hellman,基于大素数分解难题和离散对数难题的复杂性。

然而,量子计算机可以通过量子因子分解和量子离散对数算法迅速破解这些加密算法,从而使传统的加密通信变得不再安全。

为了解决这个问题,NIST在2016年启动了一个全球性的抗量子计算标准化竞赛。

该竞赛旨在发展新的加密算法,能够在量子计算机的威胁下保持信息安全。

竞赛的目标是选择一种或多种量子安全算法,并将其纳入到全球加密和身份验证标准中。

竞赛面向全球各个领域的专家,包括学术界、工业界和政府机构。

通过广泛的评估和筛选,NIST将从数百个提交的加密算法中选择出最佳的候选算法。

这些选定的算法将会作为量子安全算法标准,并将在国际上得到推广和采用。

NIST抗量子计算竞赛历经数轮,目前已经评选出了一批候选算法。

这些算法包括基于格的加密算法、多变化的有限域加密算法、哈希函数、数字签名等。

这些算法都采用了不同的技术,以抵抗量子计算机的攻击。

候选算法将在未来的几年内进行进一步的研究和评估,在确保其强安全性和高效性之后,有望成为量子安全加密的有效解决方案。

NIST的抗量子计算工作不仅局限于标准化竞赛。

该机构还致力于推动量子计算机的研究和发展。

NIST负责导航和推动量子计算机的标准化工作,并开展各种研究和实验,以解决量子计算机的挑战和问题。

刊名IEEE Transactions on CyberneticsIEEE TRANSACTIONS ON INDUSTRIAL ELECTRONICSAUTOMATICAIEEE Transactions on Industrial InformaticsIEEE Transactions on Systems Man Cybernetics-SystemsIEEE CONTROL SYSTEMS MAGAZINEIEEE TRANSACTIONS ON AUTOMATIC CONTROLIEEE TRANSACTIONS ON CONTROL SYSTEMS TECHNOLOGYNonlinear Analysis-Hybrid SystemsIEEE-ASME TRANSACTIONS ON MECHATRONICSINTERNATIONAL JOURNAL OF ROBUST AND NONLINEAR CONTROLIEEE Transactions on Automation Science and EngineeringJOURNAL OF THE FRANKLIN INSTITUTE-ENGINEERING AND APPLIED MATHEMATICSIEEE ROBOTICS & AUTOMATION MAGAZINEISA TRANSACTIONSIET Control Theory and ApplicationsENGINEERING APPLICATIONS OF ARTIFICIAL INTELLIGENCEJOURNAL OF PROCESS CONTROLCHEMOMETRICS AND INTELLIGENT LABORATORY SYSTEMSSYSTEMS & CONTROL LETTERSROBOTICS AND AUTONOMOUS SYSTEMSCONTROL ENGINEERING PRACTICEINTERNATIONAL JOURNAL OF ADVANCED MANUFACTURING TECHNOLOGYMECHATRONICSInternational Journal of Fuzzy SystemsANNUAL REVIEWS IN CONTROLJOURNAL OF MACHINE LEARNING RESEARCHINTERNATIONAL JOURNAL OF SYSTEMS SCIENCEINTERNATIONAL JOURNAL OF CONTROL AUTOMATION AND SYSTEMSINTERNATIONAL JOURNAL OF CONTROLINTERNATIONAL JOURNAL OF ADAPTIVE CONTROL AND SIGNAL PROCESSINGEUROPEAN JOURNAL OF CONTROLInternational Journal of Applied Mathematics and Computer ScienceOPTIMAL CONTROL APPLICATIONS & METHODSSIAM JOURNAL ON CONTROL AND OPTIMIZATIONTRANSACTIONS OF THE INSTITUTE OF MEASUREMENT AND CONTROLArchives of Control SciencesASIAN JOURNAL OF CONTROLJOURNAL OF DYNAMIC SYSTEMS MEASUREMENT AND CONTROL-TRANSACTIONS OF THE ASME JOURNAL OF CHEMOMETRICSDISCRETE EVENT DYNAMIC SYSTEMS-THEORY AND APPLICATIONSASSEMBLY AUTOMATIONIMA JOURNAL OF MATHEMATICAL CONTROL AND INFORMATIONInternational Journal of Computers Communications & ControlESAIM-CONTROL OPTIMISATION AND CALCULUS OF VARIATIONSAUTONOMOUS AGENTS AND MULTI-AGENT SYSTEMSINTERNATIONAL JOURNAL OF ROBOTICS & AUTOMATIONStudies in Informatics and ControlPROCEEDINGS OF THE INSTITUTION OF MECHANICAL ENGINEERS PART I-JOURNAL OF SYSTEMS AND MEASUREMENT & CONTROLMATHEMATICS OF CONTROL SIGNALS AND SYSTEMSInformation Technology and ControlControl Engineering and Applied InformaticsJOURNAL OF DYNAMICAL AND CONTROL SYSTEMSMODELING IDENTIFICATION AND CONTROLINTELLIGENT AUTOMATION AND SOFT COMPUTINGJournal of Systems Engineering and ElectronicsAUTOMATION AND REMOTE CONTROLAT-AutomatisierungstechnikRevista Iberoamericana de Automatica e Informatica Industrial AutomatikaSLAS DiscoveryINTERNATIONAL JOURNAL OF COMPUTER VISIONIEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE IEEE Transactions on CyberneticsIEEE TRANSACTIONS ON FUZZY SYSTEMSIEEE TRANSACTIONS ON EVOLUTIONARY COMPUTATIONIEEE Transactions on Neural Networks and Learning Systems NEURAL NETWORKSInformation FusionIEEE Computational Intelligence MagazineMEDICAL IMAGE ANALYSISIEEE TRANSACTIONS ON IMAGE PROCESSINGIEEE Transactions on Affective ComputingInternational Journal of Neural SystemsKNOWLEDGE-BASED SYSTEMSNEURAL COMPUTING & APPLICATIONSPATTERN RECOGNITIONAPPLIED SOFT COMPUTINGSwarm and Evolutionary ComputationARTIFICIAL INTELLIGENCE REVIEWEXPERT SYSTEMS WITH APPLICATIONSINTEGRATED COMPUTER-AIDED ENGINEERINGJOURNAL OF INTELLIGENT MANUFACTURINGDECISION SUPPORT SYSTEMSCognitive ComputationINTERNATIONAL JOURNAL OF INTELLIGENT SYSTEMSADVANCED ENGINEERING INFORMATICSNEUROCOMPUTINGARTIFICIAL INTELLIGENCEACM Transactions on Intelligent Systems and TechnologyIEEE Transactions on Autonomous Mental Development ARTIFICIAL INTELLIGENCE IN MEDICINEENGINEERING APPLICATIONS OF ARTIFICIAL INTELLIGENCEIEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING CHEMOMETRICS AND INTELLIGENT LABORATORY SYSTEMSInternational Journal of Machine Learning and CyberneticsROBOTICS AND AUTONOMOUS SYSTEMSFrontiers in NeuroroboticsIEEE INTELLIGENT SYSTEMSIEEE Transactions on Human-Machine SystemsDATA MINING AND KNOWLEDGE DISCOVERYMECHATRONICSInternational Journal of Fuzzy SystemsCOMPUTER VISION AND IMAGE UNDERSTANDINGEVOLUTIONARY COMPUTATIONSOFT COMPUTINGSIAM Journal on Imaging SciencesJOURNAL OF MACHINE LEARNING RESEARCHInternational Journal of Bio-Inspired ComputationKNOWLEDGE AND INFORMATION SYSTEMSAUTONOMOUS ROBOTSSemantic WebIMAGE AND VISION COMPUTINGFuzzy Optimization and Decision MakingInternational Journal of Computational Intelligence Systems APPLIED INTELLIGENCEIEEE Transactions on Cognitive and Developmental SystemsPATTERN RECOGNITION LETTERSWiley Interdisciplinary Reviews-Data Mining and Knowledge Discovery JOURNAL OF MATHEMATICAL IMAGING AND VISIONMemetic ComputingMACHINE LEARNINGIET BiometricsNEURAL PROCESSING LETTERSCOMPUTER SPEECH AND LANGUAGEINTERNATIONAL JOURNAL OF APPROXIMATE REASONINGINTERNATIONAL JOURNAL OF INFORMATION TECHNOLOGY & DECISION MAKING International Journal of Applied Mathematics and Computer Science NEURAL COMPUTATIONJOURNAL OF INTELLIGENT & ROBOTIC SYSTEMSJournal of Real-Time Image ProcessingSwarm IntelligenceJOURNAL OF CHEMOMETRICSDATA & KNOWLEDGE ENGINEERINGGenetic Programming and Evolvable MachinesEXPERT SYSTEMSJOURNAL OF INTELLIGENT & FUZZY SYSTEMSCognitive Systems ResearchJournal of Ambient Intelligence and Humanized ComputingIET Image ProcessingCOMPUTATIONAL INTELLIGENCEJournal of Web SemanticsJOURNAL OF AUTOMATED REASONINGCOMPUTATIONAL LINGUISTICSMACHINE VISION AND APPLICATIONSInternational Journal on Document Analysis and Recognition PATTERN ANALYSIS AND APPLICATIONSJOURNAL OF ARTIFICIAL INTELLIGENCE RESEARCHACM Transactions on Autonomous and Adaptive SystemsAUTONOMOUS AGENTS AND MULTI-AGENT SYSTEMSINTERNATIONAL JOURNAL OF UNCERTAINTY FUZZINESS AND KNOWLEDGE-BASED SYSTEMS Journal on Multimodal User InterfacesJOURNAL OF HEURISTICSJOURNAL OF INTELLIGENT INFORMATION SYSTEMSIET Computer VisionKNOWLEDGE ENGINEERING REVIEWAI EDAM-ARTIFICIAL INTELLIGENCE FOR ENGINEERING DESIGN ANALYSIS AND MANUFACTURING AI MAGAZINEINTERNATIONAL JOURNAL OF PATTERN RECOGNITION AND ARTIFICIAL INTELLIGENCE JOURNAL OF EXPERIMENTAL & THEORETICAL ARTIFICIAL INTELLIGENCEADAPTIVE BEHAVIORCONNECTION SCIENCEANNALS OF MATHEMATICS AND ARTIFICIAL INTELLIGENCEARTIFICIAL LIFEIEEE Transactions on Computational Intelligence and AI in GamesJournal of Ambient Intelligence and Smart EnvironmentsStatistical Analysis and Data MiningNatural ComputingMINDS AND MACHINESInformation Technology and ControlNatural Language EngineeringInternational Journal on Semantic Web and Information SystemsBiologically Inspired Cognitive ArchitecturesApplied OntologyNETWORK-COMPUTATION IN NEURAL SYSTEMSAdvances in Electrical and Computer EngineeringIntelligent Data AnalysisCONSTRAINTSINTELLIGENT AUTOMATION AND SOFT COMPUTINGMalaysian Journal of Computer ScienceAPPLIED ARTIFICIAL INTELLIGENCETurkish Journal of Electrical Engineering and Computer SciencesInternational Journal on Artificial Intelligence ToolsJOURNAL OF COMPUTER AND SYSTEMS SCIENCES INTERNATIONALJournal of Logic Language and InformationNeural Network WorldInternational Arab Journal of Information TechnologyAI COMMUNICATIONSJOURNAL OF MULTIPLE-VALUED LOGIC AND SOFT COMPUTINGCOMPUTING AND INFORMATICSINTERNATIONAL JOURNAL OF SOFTWARE ENGINEERING AND KNOWLEDGE ENGINEERINGACM Transactions on Asian and Low-Resource Language Information Processing Traitement du SignalIEEE Transactions on CyberneticsIEEE Transactions on Systems Man Cybernetics-SystemsIEEE Transactions on Affective ComputingHUMAN-COMPUTER INTERACTIONUSER MODELING AND USER-ADAPTED INTERACTIONIEEE Transactions on Human-Machine SystemsINTERNATIONAL JOURNAL OF HUMAN-COMPUTER STUDIESIEEE Transactions on HapticsBIOLOGICAL CYBERNETICSBEHAVIOUR & INFORMATION TECHNOLOGYMACHINE VISION AND APPLICATIONSINTERNATIONAL JOURNAL OF HUMAN-COMPUTER INTERACTIONCYBERNETICS AND SYSTEMSUniversal Access in the Information SocietyJournal on Multimodal User InterfacesKYBERNETESACM Transactions on Computer-Human InteractionINTERACTING WITH COMPUTERSMODELING IDENTIFICATION AND CONTROLKYBERNETIKAJOURNAL OF COMPUTER AND SYSTEMS SCIENCES INTERNATIONALPRESENCE-TELEOPERATORS AND VIRTUAL ENVIRONMENTSIEEE WIRELESS COMMUNICATIONSIEEE Transactions on Neural Networks and Learning SystemsIEEE NETWORKIEEE Transactions on Dependable and Secure ComputingJOURNAL OF NETWORK AND COMPUTER APPLICATIONSIEEE-ACM TRANSACTIONS ON NETWORKINGCOMMUNICATIONS OF THE ACMIEEE TRANSACTIONS ON COMPUTERSJournal of Optical Communications and NetworkingIEEE TRANSACTIONS ON RELIABILITYVLDB JOURNALComputer NetworksMOBILE NETWORKS & APPLICATIONSIEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS INTERNATIONAL JOURNAL OF HIGH PERFORMANCE COMPUTING APPLICATIONSCOMPUTERIEEE MICROIEEE MULTIMEDIAPERFORMANCE EVALUATIONCOMPUTERS & ELECTRICAL ENGINEERINGIEEE TRANSACTIONS ON VERY LARGE SCALE INTEGRATION (VLSI) SYSTEMSJOURNAL OF THE ACMACM Journal on Emerging Technologies in Computing SystemsIEEE Design & TestJOURNAL OF SUPERCOMPUTINGIEEE Computer Architecture LettersAdvances in ComputersJOURNAL OF COMPUTER AND SYSTEM SCIENCESCOMPUTER STANDARDS & INTERFACESIEEE Consumer Electronics MagazineSustainable Computing-Informatics & SystemsDISPLAYSACM Transactions on Embedded Computing SystemsACM Transactions on Architecture and Code OptimizationNETWORKSMICROPROCESSORS AND MICROSYSTEMSACM Transactions on Reconfigurable Technology and SystemsCANADIAN JOURNAL OF ELECTRICAL AND COMPUTER ENGINEERING-REVUE CANADIENNE DE GENIEIBM JOURNAL OF RESEARCH AND DEVELOPMENTACM Transactions on StorageJOURNAL OF SYSTEMS ARCHITECTUREINTEGRATION-THE VLSI JOURNALJOURNAL OF COMPUTER SCIENCE AND TECHNOLOGYACM TRANSACTIONS ON DESIGN AUTOMATION OF ELECTRONIC SYSTEMSCOMPUTER SYSTEMS SCIENCE AND ENGINEERINGANALOG INTEGRATED CIRCUITS AND SIGNAL PROCESSINGCOMPUTER JOURNALNEW GENERATION COMPUTINGIET Computers and Digital TechniquesJOURNAL OF CIRCUITS SYSTEMS AND COMPUTERSDESIGN AUTOMATION FOR EMBEDDED SYSTEMSIEICE TRANSACTIONS ON FUNDAMENTALS OF ELECTRONICS COMMUNICATIONS AND COMPUTER SCIENCES IEEE Communications Surveys and TutorialsIEEE WIRELESS COMMUNICATIONSIEEE Transactions on Cloud ComputingIEEE NETWORKIEEE Internet of Things JournalMIS QUARTERLYJOURNAL OF INFORMATION TECHNOLOGYIEEE Transactions on Services ComputingIEEE Transactions on Dependable and Secure ComputingIEEE Systems JournalJOURNAL OF STRATEGIC INFORMATION SYSTEMSINFORMATION SCIENCESJOURNAL OF THE AMERICAN MEDICAL INFORMATICS ASSOCIATIONIEEE TRANSACTIONS ON MOBILE COMPUTINGIEEE TRANSACTIONS ON MULTIMEDIAJournal of CheminformaticsINFORMATION & MANAGEMENTIEEE Journal of Biomedical and Health InformaticsInternet ResearchJournal of Chemical Information and ModelingIEEE Transactions on Emerging Topics in ComputingACM SIGCOMM Computer Communication ReviewDECISION SUPPORT SYSTEMSIEEE AccessINFORMATION PROCESSING & MANAGEMENTIEEE Transactions on Network and Service ManagementINFORMATION SYSTEMS FRONTIERSEUROPEAN JOURNAL OF INFORMATION SYSTEMSAd Hoc NetworksFoundations and Trends in Information RetrievalIEEE Wireless Communications LettersIEEE PERVASIVE COMPUTINGPervasive and Mobile ComputingACM Transactions on Intelligent Systems and TechnologyINTERNATIONAL JOURNAL OF MEDICAL INFORMATICSIEEE Cloud ComputingJournal of the Association for Information SystemsJournal of the Association for Information Science and TechnologyJournal of Grid ComputingIEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERINGJOURNAL OF MANAGEMENT INFORMATION SYSTEMSJournal of Optical Communications and NetworkingVLDB JOURNALCOMPUTERS & SECURITYINFORMATION AND SOFTWARE TECHNOLOGYCOMPUTER COMMUNICATIONSBusiness & Information Systems EngineeringACM Transactions on Information and System SecurityElectronic Commerce Research and ApplicationsINFORMATION SYSTEMSComputer NetworksMOBILE NETWORKS & APPLICATIONSDATA MINING AND KNOWLEDGE DISCOVERYINTERNATIONAL JOURNAL OF GEOGRAPHICAL INFORMATION SCIENCEACM Transactions on Sensor NetworksKNOWLEDGE AND INFORMATION SYSTEMSSemantic WebScience China-Information SciencesIEEE TRANSACTIONS ON INFORMATION THEORYInternational Journal of Critical Infrastructure Protection GEOINFORMATICAACM Transactions on Multimedia Computing Communications and Applications WIRELESS NETWORKSHuman-centric Computing and Information SciencesJOURNAL OF INFORMATION SCIENCEPersonal and Ubiquitous ComputingIEEE MULTIMEDIAJOURNAL OF VISUAL COMMUNICATION AND IMAGE REPRESENTATIONInternational Journal of Distributed Sensor NetworksDigital InvestigationACM TRANSACTIONS ON INFORMATION SYSTEMSINTERNATIONAL JOURNAL OF INFORMATION TECHNOLOGY & DECISION MAKINGJournal of Network and Systems ManagementREQUIREMENTS ENGINEERINGJOURNAL OF THE ACMACM Transactions on Internet TechnologyMULTIMEDIA SYSTEMSEnterprise Information SystemsONLINE INFORMATION REVIEWInternational Journal of Information SecurityIT ProfessionalCluster Computing-The Journal of Networks Software Tools and Applications JOURNAL OF COMPUTER INFORMATION SYSTEMSMULTIMEDIA TOOLS AND APPLICATIONSMETHODS OF INFORMATION IN MEDICINEPeer-to-Peer Networking and ApplicationsACM Transactions on Knowledge Discovery from DataInformation Retrieval JournalDATA & KNOWLEDGE ENGINEERINGAslib Journal of Information ManagementJournal of Ambient Intelligence and Humanized ComputingJOURNAL OF ORGANIZATIONAL COMPUTING AND ELECTRONIC COMMERCE INFORMATICATSINGHUA SCIENCE AND TECHNOLOGYJournal of Web SemanticsInternational Journal of Network ManagementJournal of Internet TechnologyInternational Journal of Computers Communications & ControlSIGMOD RECORDINFORMATION SYSTEMS MANAGEMENTJOURNAL OF COMMUNICATIONS AND NETWORKSIEEE SECURITY & PRIVACYACM Transactions on Autonomous and Adaptive SystemsPHOTONIC NETWORK COMMUNICATIONSSustainable Computing-Informatics & SystemsPROGRAM-ELECTRONIC LIBRARY AND INFORMATION SYSTEMSACM Transactions on the WebWORLD WIDE WEB-INTERNET AND WEB INFORMATION SYSTEMSOptical Switching and NetworkingJOURNAL OF INTELLIGENT INFORMATION SYSTEMSFrontiers of Computer ScienceJournal of Signal Processing Systems for Signal Image and Video Technology International Journal of Web and Grid ServicesACM TRANSACTIONS ON DATABASE SYSTEMSNew Review of Hypermedia and MultimediaACM Transactions on Computer-Human InteractionINFORMATION TECHNOLOGY AND LIBRARIESIBM JOURNAL OF RESEARCH AND DEVELOPMENTMobile Information SystemsDISTRIBUTED AND PARALLEL DATABASESFrontiers of Information Technology & Electronic EngineeringSecurity and Communication NetworksIET Information SecurityACTA INFORMATICAInternational Journal of Sensor NetworksJournal of Ambient Intelligence and Smart EnvironmentsWIRELESS COMMUNICATIONS & MOBILE COMPUTINGInformation Technology and ControlINFORMATION PROCESSING LETTERSInternational Journal on Semantic Web and Information SystemsCOMPUTER JOURNALApplied OntologyAd Hoc & Sensor Wireless NetworksJournal of Organizational and End User ComputingInternational Journal of Ad Hoc and Ubiquitous ComputingComputer Science and Information SystemsKSII Transactions on Internet and Information SystemsIEEE Latin America TransactionsIEICE TRANSACTIONS ON INFORMATION AND SYSTEMSInternational Arab Journal of Information TechnologyACM Transactions on Privacy and SecurityInternational Journal of Web Services ResearchRAIRO-THEORETICAL INFORMATICS AND APPLICATIONSIEICE TRANSACTIONS ON FUNDAMENTALS OF ELECTRONICS COMMUNICATIONS AND COMPUTER SCIENCES JOURNAL OF INFORMATION SCIENCE AND ENGINEERINGINFORJOURNAL OF DATABASE MANAGEMENTINTERNATIONAL JOURNAL OF COOPERATIVE INFORMATION SYSTEMSJournal of Statistical SoftwareARCHIVES OF COMPUTATIONAL METHODS IN ENGINEERINGIEEE TRANSACTIONS ON MEDICAL IMAGINGCOMPUTER-AIDED CIVIL AND INFRASTRUCTURE ENGINEERINGIEEE Transactions on Industrial InformaticsMEDICAL IMAGE ANALYSISCOMPUTERS & EDUCATIONJOURNAL OF THE AMERICAN MEDICAL INFORMATICS ASSOCIATIONENVIRONMENTAL MODELLING & SOFTWAREJOURNAL OF NETWORK AND COMPUTER APPLICATIONSAPPLIED SOFT COMPUTINGJournal of CheminformaticsNEUROINFORMATICSIEEE Journal of Biomedical and Health InformaticsJournal of Chemical Information and ModelingCOMPUTER PHYSICS COMMUNICATIONSINTEGRATED COMPUTER-AIDED ENGINEERINGJournal of InformetricsROBOTICS AND COMPUTER-INTEGRATED MANUFACTURINGSOCIAL SCIENCE COMPUTER REVIEWADVANCES IN ENGINEERING SOFTWARECOMPUTERS & INDUSTRIAL ENGINEERINGCOMPUTERS AND GEOTECHNICSCOMPUTERS & CHEMICAL ENGINEERINGCOMPUTERS & OPERATIONS RESEARCHINDUSTRIAL MANAGEMENT & DATA SYSTEMSCOMPUTERS & STRUCTURESJOURNAL OF BIOMEDICAL INFORMATICSSTRUCTURAL AND MULTIDISCIPLINARY OPTIMIZATIONJOURNAL OF COMPUTATIONAL PHYSICSCOMPUTERS IN INDUSTRYAstronomy and ComputingCOMPUTATIONAL GEOSCIENCESCOMPUTER METHODS AND PROGRAMS IN BIOMEDICINEElectronic Commerce Research and ApplicationsMATCH-COMMUNICATIONS IN MATHEMATICAL AND IN COMPUTER CHEMISTRYCOMPUTERS & GEOSCIENCESIEEE-ACM Transactions on Computational Biology and BioinformaticsCOMPUTERS AND ELECTRONICS IN AGRICULTURESOFT COMPUTINGJOURNAL OF COMPUTER-AIDED MOLECULAR DESIGNSAR AND QSAR IN ENVIRONMENTAL RESEARCHCOMPUTERS & FLUIDSSCIENTOMETRICSCOMPUTERS IN BIOLOGY AND MEDICINESIMULATION MODELLING PRACTICE AND THEORYIEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS INTERNATIONAL JOURNAL OF HIGH PERFORMANCE COMPUTING APPLICATIONSInternational Journal of Computational Intelligence SystemsINTERNATIONAL JOURNAL OF COMPUTER INTEGRATED MANUFACTURINGCOMPUTER METHODS IN BIOMECHANICS AND BIOMEDICAL ENGINEERINGMEDICAL & BIOLOGICAL ENGINEERING & COMPUTINGMolecular InformaticsENGINEERING WITH COMPUTERSJournal of Computational ScienceJOURNAL OF MOLECULAR GRAPHICS & MODELLINGIEEE Transactions on Learning TechnologiesJOURNAL OF COMPUTING IN CIVIL ENGINEERINGJOURNAL OF HYDROINFORMATICSDigital InvestigationINTERNATIONAL JOURNAL OF INFORMATION TECHNOLOGY & DECISION MAKINGCOMPUTERS & ELECTRICAL ENGINEERINGINTERNATIONAL JOURNAL FOR NUMERICAL METHODS IN FLUIDSComputers and ConcreteEarth Science InformaticsJOURNAL OF COMPUTING AND INFORMATION SCIENCE IN ENGINEERINGSPEECH COMMUNICATIONJOURNAL OF MOLECULAR MODELINGBig DataMATHEMATICS AND COMPUTERS IN SIMULATIONCONCURRENT ENGINEERING-RESEARCH AND APPLICATIONSJOURNAL OF ORGANIZATIONAL COMPUTING AND ELECTRONIC COMMERCECOMPUTATIONAL BIOLOGY AND CHEMISTRYINFORMS JOURNAL ON COMPUTINGVIRTUAL REALITYR JournalCOMPUTATIONAL LINGUISTICSINTERNATIONAL JOURNAL OF RF AND MICROWAVE COMPUTER-AIDED ENGINEERINGJournal of SimulationJOURNAL OF COMPUTATIONAL BIOLOGYCOMPUTATIONAL STATISTICS & DATA ANALYSISENGINEERING COMPUTATIONSQUEUEING SYSTEMSCOMPUTER APPLICATIONS IN ENGINEERING EDUCATIONCOMPUTING IN SCIENCE & ENGINEERINGCIN-COMPUTERS INFORMATICS NURSINGAI EDAM-ARTIFICIAL INTELLIGENCE FOR ENGINEERING DESIGN ANALYSIS AND MANUFACTURING JOURNAL OF NEW MUSIC RESEARCHJOURNAL OF VISUALIZATIONSIMULATION-TRANSACTIONS OF THE SOCIETY FOR MODELING AND SIMULATION INTERNATIONAL ACM Transactions on Modeling and Computer SimulationJOURNAL OF COMBINATORIAL OPTIMIZATIONINTERNATIONAL JOURNAL OF MODERN PHYSICS CJOURNAL OF STATISTICAL COMPUTATION AND SIMULATIONStatistical Analysis and Data MiningCurrent Computer-Aided Drug DesignComputer Supported Cooperative Work-The Journal of Collaborative Computing Language Resources and EvaluationComputational and Mathematical Organization TheoryMATHEMATICAL AND COMPUTER MODELLING OF DYNAMICAL SYSTEMSCOMPUTER MUSIC JOURNALInternational Journal on Artificial Intelligence ToolsCOMPEL-THE INTERNATIONAL JOURNAL FOR COMPUTATION AND MATHEMATICS IN ELECTRICAL AND ACM Journal on Computing and Cultural HeritageAPPLICABLE ALGEBRA IN ENGINEERING COMMUNICATION AND COMPUTINGIEEE Transactions on Services ComputingIEEE Transactions on Dependable and Secure ComputingACM TRANSACTIONS ON GRAPHICSJOURNAL OF NETWORK AND COMPUTER APPLICATIONSIEEE TRANSACTIONS ON MULTIMEDIAIEEE TRANSACTIONS ON SOFTWARE ENGINEERINGADVANCES IN ENGINEERING SOFTWAREIEEE TRANSACTIONS ON VISUALIZATION AND COMPUTER GRAPHICSCOMMUNICATIONS OF THE ACMCOMPUTER-AIDED DESIGNEMPIRICAL SOFTWARE ENGINEERINGACM TRANSACTIONS ON MATHEMATICAL SOFTWAREIEEE SOFTWAREIEEE TRANSACTIONS ON RELIABILITYMATHEMATICAL PROGRAMMINGINFORMATION AND SOFTWARE TECHNOLOGYINTERNATIONAL JOURNAL OF ELECTRONIC COMMERCESIAM Journal on Imaging SciencesJOURNAL OF SYSTEMS AND SOFTWAREIMAGE AND VISION COMPUTINGSIMULATION MODELLING PRACTICE AND THEORYCOMPUTER GRAPHICS FORUMACM Transactions on Multimedia Computing Communications and ApplicationsACM TRANSACTIONS ON SOFTWARE ENGINEERING AND METHODOLOGYCOMPUTERIEEE INTERNET COMPUTINGJOURNAL OF MATHEMATICAL IMAGING AND VISIONIEEE MICROIEEE MULTIMEDIACOMPUTER LANGUAGES SYSTEMS & STRUCTURESJOURNAL OF VISUAL COMMUNICATION AND IMAGE REPRESENTATIONAutomated Software EngineeringREQUIREMENTS ENGINEERINGJOURNAL OF THE ACMACM Transactions on Internet TechnologySoftware and Systems ModelingInternational Journal of Information SecurityIEEE COMPUTER GRAPHICS AND APPLICATIONSIT ProfessionalSOFTWARE QUALITY JOURNALSOFTWARE TESTING VERIFICATION & RELIABILITYMULTIMEDIA TOOLS AND APPLICATIONSCOMPUTER AIDED GEOMETRIC DESIGNAdvances in ComputersACM Transactions on Knowledge Discovery from DataMATHEMATICS AND COMPUTERS IN SIMULATIONCOMPUTER STANDARDS & INTERFACESBIT NUMERICAL MATHEMATICSVIRTUAL REALITYTSINGHUA SCIENCE AND TECHNOLOGYJournal of Web SemanticsScientific ProgrammingSOFTWARE-PRACTICE & EXPERIENCESIGMOD RECORDIEEE SECURITY & PRIVACYCOMPUTERS & GRAPHICS-UKOPTIMIZATION METHODS & SOFTWAREJournal of Software-Evolution and ProcessACM Transactions on the WebACM Transactions on Embedded Computing SystemsWORLD WIDE WEB-INTERNET AND WEB INFORMATION SYSTEMSCONCURRENCY AND COMPUTATION-PRACTICE & EXPERIENCEFrontiers of Computer ScienceInternational Journal on Software Tools for Technology TransferInternational Journal of Web and Grid ServicesJOURNAL OF UNIVERSAL COMPUTER SCIENCEVISUAL COMPUTERGRAPHICAL MODELSACM TRANSACTIONS ON DATABASE SYSTEMSRANDOM STRUCTURES & ALGORITHMSJOURNAL OF VISUAL LANGUAGES AND COMPUTINGACM Transactions on Applied PerceptionIBM JOURNAL OF RESEARCH AND DEVELOPMENTIET SoftwareSIMULATION-TRANSACTIONS OF THE SOCIETY FOR MODELING AND SIMULATION INTERNATIONAL ACM Transactions on StorageInformation VisualizationJOURNAL OF SYSTEMS ARCHITECTUREFrontiers of Information Technology & Electronic EngineeringIEEE Transactions on Computational Intelligence and AI in GamesJOURNAL OF COMPUTER SCIENCE AND TECHNOLOGYTHEORY AND PRACTICE OF LOGIC PROGRAMMINGACM TRANSACTIONS ON DESIGN AUTOMATION OF ELECTRONIC SYSTEMSFORMAL ASPECTS OF COMPUTINGCOMPUTER JOURNALInternational Journal of Data Warehousing and MiningACM TRANSACTIONS ON PROGRAMMING LANGUAGES AND SYSTEMSSCIENCE OF COMPUTER PROGRAMMINGFUNDAMENTA INFORMATICAEJOURNAL OF FUNCTIONAL PROGRAMMINGCOMPUTER ANIMATION AND VIRTUAL WORLDSALGORITHMICAComputer Science and Information SystemsDISCRETE MATHEMATICS AND THEORETICAL COMPUTER SCIENCEInternational Journal of Wavelets Multiresolution and Information Processing DESIGN AUTOMATION FOR EMBEDDED SYSTEMSIEICE TRANSACTIONS ON INFORMATION AND SYSTEMSPRESENCE-TELEOPERATORS AND VIRTUAL ENVIRONMENTSInternational Journal of Web Services ResearchINTERNATIONAL JOURNAL OF SOFTWARE ENGINEERING AND KNOWLEDGE ENGINEERING ICGA JOURNALJournal of Web EngineeringPROGRAMMING AND COMPUTER SOFTWAREJOURNAL OF DATABASE MANAGEMENTIEEE TRANSACTIONS ON EVOLUTIONARY COMPUTATIONIEEE Transactions on Neural Networks and Learning SystemsIEEE Transactions on Cloud ComputingInformation FusionIEEE Transactions on Information Forensics and SecurityACM COMPUTING SURVEYSFuture Generation Computer Systems-The International Journal of eScience IEEE TRANSACTIONS ON PARALLEL AND DISTRIBUTED SYSTEMSSwarm and Evolutionary ComputationHUMAN-COMPUTER INTERACTIONINFORMATION SYSTEMS FRONTIERSIEEE-ACM TRANSACTIONS ON NETWORKINGCOMMUNICATIONS OF THE ACMFOUNDATIONS OF COMPUTATIONAL MATHEMATICSINTERNATIONAL JOURNAL OF GENERAL SYSTEMSIEEE Cloud ComputingJournal of Grid ComputingFUZZY SETS AND SYSTEMSCOMPUTER METHODS AND PROGRAMS IN BIOMEDICINEEVOLUTIONARY COMPUTATIONJOURNAL OF SYSTEMS AND SOFTWAREInternational Journal of Bio-Inspired ComputationSemantic WebINTERNATIONAL JOURNAL OF SYSTEMS SCIENCEIMAGE AND VISION COMPUTINGMULTIDIMENSIONAL SYSTEMS AND SIGNAL PROCESSINGACM Transactions on Multimedia Computing Communications and Applications INTERNATIONAL JOURNAL OF HIGH PERFORMANCE COMPUTING APPLICATIONSWiley Interdisciplinary Reviews-Data Mining and Knowledge DiscoveryJournal of Computational ScienceIEEE MULTIMEDIASTATISTICS AND COMPUTINGJOURNAL OF PARALLEL AND DISTRIBUTED COMPUTINGPERFORMANCE EVALUATIONACM TRANSACTIONS ON COMPUTER SYSTEMSJOURNAL OF THE ACMMULTIMEDIA SYSTEMSInternational Journal of Information SecurityCOMPUTINGCluster Computing-The Journal of Networks Software Tools and Applications QUANTUM INFORMATION & COMPUTATIONREAL-TIME SYSTEMSMULTIMEDIA TOOLS AND APPLICATIONSJOURNAL OF SUPERCOMPUTINGJOURNAL OF COMPUTER AND SYSTEM SCIENCESBig DataGenetic Programming and Evolvable MachinesEXPERT SYSTEMSACM Transactions on Autonomous and Adaptive SystemsCryptography and Communications-Discrete-Structures Boolean Functions and Sequences ACM Transactions on Architecture and Code OptimizationJOURNAL OF HEURISTICSCONCURRENCY AND COMPUTATION-PRACTICE & EXPERIENCEDESIGNS CODES AND CRYPTOGRAPHYFrontiers of Computer ScienceMATHEMATICAL STRUCTURES IN COMPUTER SCIENCEINFORMATION AND COMPUTATIONJOURNAL OF UNIVERSAL COMPUTER SCIENCEJOURNAL OF CRYPTOLOGYMICROPROCESSORS AND MICROSYSTEMSInternational Journal of Unconventional ComputingIBM JOURNAL OF RESEARCH AND DEVELOPMENTPARALLEL COMPUTINGCONNECTION SCIENCEDISTRIBUTED AND PARALLEL DATABASESSIAM JOURNAL ON COMPUTINGARTIFICIAL LIFEINTERNATIONAL JOURNAL OF PARALLEL PROGRAMMINGIET Information SecurityTHEORY AND PRACTICE OF LOGIC PROGRAMMINGNatural ComputingCOMPUTER SYSTEMS SCIENCE AND ENGINEERINGDISTRIBUTED COMPUTINGFORMAL METHODS IN SYSTEM DESIGNCOMPUTER JOURNALApplied OntologyTHEORETICAL COMPUTER SCIENCEJOURNAL OF SYMBOLIC COMPUTATIONJOURNAL OF LOGIC AND COMPUTATIONINTERNATIONAL JOURNAL OF QUANTUM INFORMATIONACM Transactions on Computational LogicNEW GENERATION COMPUTINGCOMBINATORICS PROBABILITY & COMPUTINGDISCRETE & COMPUTATIONAL GEOMETRY。

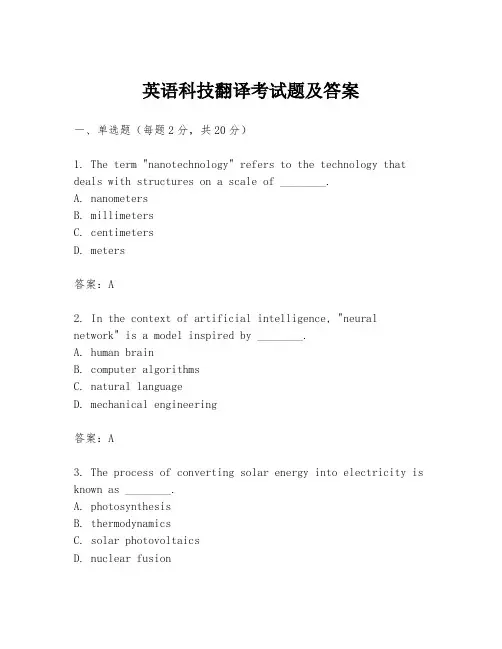

英语科技翻译考试题及答案一、单选题(每题2分,共20分)1. The term "nanotechnology" refers to the technology that deals with structures on a scale of ________.A. nanometersB. millimetersC. centimetersD. meters答案:A2. In the context of artificial intelligence, "neural network" is a model inspired by ________.A. human brainB. computer algorithmsC. natural languageD. mechanical engineering答案:A3. The process of converting solar energy into electricity is known as ________.A. photosynthesisB. thermodynamicsC. solar photovoltaicsD. nuclear fusion答案:C4. Which of the following is not a characteristic of a quantum computer?A. SuperpositionB. EntanglementC. DeterministicD. Quantum tunneling答案:C5. The term "biotechnology" is often associated with the manipulation of ________.A. electronic devicesB. living organismsC. chemical compoundsD. geological formations答案:B6. In the field of robotics, "actuator" refers to a component that ________.A. senses the environmentB. processes informationC. moves or controls a mechanismD. stores energy答案:C7. "Internet of Things" (IoT) involves the interconnection of everyday objects, enabling them to send and receive data,which is based on ________.A. cloud computingB. artificial intelligenceC. machine learningD. traditional networking答案:A8. The abbreviation "AI" stands for ________.A. Artificial IntelligenceB. Artificial InputC. Advanced InterfaceD. Automated Interaction答案:A9. "Genetic engineering" involves the ________ of an organism's genes.A. removalB. additionC. modificationD. replication答案:C10. "Cybersecurity" is concerned with protecting ________.A. physical infrastructureB. digital informationC. environmental resourcesD. financial assets答案:B二、填空题(每题2分,共20分)1. The smallest unit of data in computing is called a________.答案:bit2. A ________ is a type of computer system designed to recognize, interpret, and simulate human actions.答案:robot3. In computer science, "algorithm" refers to a well-defined procedure that produces an answer to a ________.答案:problem4. The study of the behavior and interactions of dispersed phase(s) in a fluid medium is known as ________.答案:fluid mechanics5. A ________ is a device that uses light to transmit information.答案:fiber optic6. The process of converting data into a format that can be easily shared or analyzed is called ________.答案:data mining7. "Virtual reality" is a computer-generated simulation of a ________ environment.答案:three-dimensional8. The ________ is a set of rules that defines how data is formatted, transmitted, received, and processed.答案:protocol9. A ________ is a type of computer program that canreplicate itself and often spread to other computers.答案:virus10. "Machine learning" is a subset of artificial intelligence that allows computers to learn from and make decisions based on ________.答案:data三、阅读理解题(每题5分,共30分)阅读以下段落,并回答问题。

阈值阈值3.4585225序号12345678910111213141516171819202122232425262728 2930 31 32LAB OINTERMATERIALS REVIEWSNanoNanotechnology Biology andPROPHOTOVOLTAICSPROCEEDIEEEJOURNALAnnual Revand Biomolecular EngineeringSPROGRESGROWTH ANDCHARACTERIZATION OFMATERIALSACM COMPUPolymCHEMISTRYTREBIOTECHNOLOGYADVANCEMATERIALSBIOTECADVANCESCURRENBIOTECHNOLOGYMaterBIOMNANOANNUALBIOMEDICAL ENGINEERINGADVANCEANNUALMATERIALS RESEARCHACPROGRESSCIENCENATURE BIEnergy EducTechnologyPROGRESSSCIENCEMATERIAENGINEERING R-REPORTSNanPROGRESAND COMBUSTION刊NATURENature N11111111111111111111111111113区阈值377分区11110018-9219P IEEE0021-9517J CATAL0950-6608INT MATER REV1549-9634NANOMED-NANOTECHNOL1062-7995PROG PHOTOVOLTAICS1558-3724POLYM REV0897-4756CHEM MATER1473-0197LAB CHIP1613-6810SMALL0960-8974PROG CRYST GROWTH CH0360-0300ACM COMPUT SURV1369-7021MATER TODAY0142-9612BIOMATERIALS1947-5438ANNU REV CHEM BIOMOL1616-301X ADV FUNCT MATER0734-9750BIOTECHNOL ADV0958-1669CURR OPIN BIOTECH1936-0851ACS NANO0079-6816PROG SURF SCI0167-7799TRENDS BIOTECHNOL1476-1122NAT MATER1748-3387NAT NANOTECHNOL1531-7331ANNU REV MATER RES0935-9648ADV MATER1区2区期刊数期刊数1.931 1.112ISSN刊名简称NANO TODAY0360-1285PROG ENERG COMBUST1530-6984NANO LETT1523-9829ANNU REV BIOMED ENG1087-0156NAT BIOTECHNOL1301-8361ENERGY EDUC SCI TECH0079-6425PROG MATER SCI0927-796X MAT SCI ENG R1748-01323334 35 36 37 38 39 40 41 42 43 44 45 46 4748 49 50 51 52 5354 55 56 57 58 59 60 61 6263 64INTERNATIOF COMPUTER VISIONIEEE TRANEVOLUTIONARYIEEE TRANINDUSTRIAL ELECTRONICSMACROMOLCOMMUNICATIONSSOLAMATERIALS AND SOLARJOURNASOURCESCRITICALFOOD SCIENCE ANDCURRENSOLID STATE & MATERIALSSoActa BBIORTECHNOLOGYADVANCEMECHANICSELECTROCOMMUNICATIONSMOLECULAFOOD RESEARCHIEEE TRANPATTERN ANALYSIS ANDMACHINE INTELLIGENCEMACROINTERNATIOF PLASTICITYBiotechnolMIS QSIAM JouSciencesRENESUSTAINABLE ENERGYCRITICALBIOTECHNOLOGYNaIEEE CoSurveys and TutorialsIEEE SIGNAMAGAZINECANPG ABIOSEBIOELECTRONICSPROGRESELECTRONICSMRSJOURNALCHEMISTRYMETABOLIC111111111111111111111111111111111089-778X IEEE T EVOLUT COMPUT1040-8398CRIT REV FOOD SCI1359-0286CURR OPIN SOLID ST M0920-5691INT J COMPUT VISION1022-1336MACROMOL RAPID COMM0927-0248SOL ENERG MAT SOL C0378-7753J POWER SOURCES1388-2481ELECTROCHEM COMMUN1613-4125MOL NUTR FOOD RES0278-0046IEEE T IND ELECTRON1742-7061ACTA BIOMATER0960-8524BIORESOURCE TECHNOL0065-2156ADV APPL MECH0276-7783MIS QUART1936-4954SIAM J IMAGING SCI1744-683X SOFT MATTER0024-9297MACROMOLECULES0749-6419INT J PLASTICITY1754-6834BIOTECHNOL BIOFUELS1053-5888IEEE SIGNAL PROC MAG0008-6223CARBON0162-8828IEEE T PATTERN ANAL0738-8551CRIT REV BIOTECHNOL2040-3364NANOSCALE1553-877X IEEE COMMUN SURV TUT0959-9428J MATER CHEM1096-7176METAB ENG1364-0321RENEW SUST ENERG REV0956-5663BIOSENS BIOELECTRON0079-6727PROG QUANT ELECTRON0883-7694MRS BULL1884-4049NPG ASIA MATER65 6667 68 69 70 71 7273 74 75 7677 7879 8081 82 83 84 85 86 87 8889 90 91 9293 949596 97ENINTERNATIOF NONLINEAR SCIENCESAND NUMERICALSIMULATIONFOOD MIBIOMMICRODEVICESIEEE JOSELECTED TOPICS INQUANTUM ELECTRONICSIEEE TRANFUZZY SYSTEMSFOOD CPlaJournal of NMicrofluidicsAnnual RScience and TechnologyNANOTEACM TRANGRAPHICSJOURNALSCIENCESENSACTUATORS B-CHEMICALAPPLIED SREVIEWSAPPLIEACS ApplInterfacesBIOTECHBIOENGINEERINGIEEE TRANPOWER ELECTRONICSBIOMASSELECTROMicrobialInternationalSystemsTRENDS IN& TECHNOLOGYIEEE JOSELECTED AREAS INORGANICACTA MINTERNATIOF HYDROGEN ENERGYJOURNAL OMATERIALSMEDICAL IMHUMANINTERACTION2222222221112221111111111111111111387-2176BIOMED MICRODEVICES0016-2361FUEL1565-1339INT J NONLIN SCI NUM0740-0020FOOD MICROBIOL1741-2560J NEURAL ENG1613-4982MICROFLUID NANOFLUID0360-5442ENERGY1063-6706IEEE T FUZZY SYST0308-8146FOOD CHEM1557-1955PLASMONICS0925-4005SENSOR ACTUAT B-CHEM0570-4928APPL SPECTROSC REV1077-260X IEEE J SEL TOP QUANT0957-4484NANOTECHNOLOGY0730-0301ACM T GRAPHIC0376-7388J MEMBRANE SCI0961-9534BIOMASS BIOENERG0013-4686ELECTROCHIM ACTA1941-1413ANNU REV FOOD SCI T1944-8244ACS APPL MATER INTER0006-3592BIOTECHNOL BIOENG0885-8993IEEE T POWER ELECTR1566-1199ORG ELECTRON1359-6454ACTA MATER0306-2619APPL ENERG0129-0657INT J NEURAL SYST0924-2244TRENDS FOOD SCI TECH0733-8716IEEE J SEL AREA COMM1361-8415MED IMAGE ANAL0737-0024HUM-COMPUT INTERACT1475-2859MICROB CELL FACT0360-3199INT J HYDROGEN ENERG0304-3894J HAZARD MATER9899 100 101 102 103 104 105106 107108 109 110 111 112 113 114 115116 117 118 119120 121 122123 124 125 126 127Journal of Cand ManagementIEEE TRANIMAGE PROCESSINGIEEE TRANSYSTEMS MAN ANDCYBERNETICS PART B-CYBERNETICSInternatioElectrochemical SciencePROCEEDCOMBUSTION INSTITUTEJournal of SINFORMATDENTALCOMPOSAND TECHNOLOGYJOUBIOTECHNOLOGYIEEE CIntelligence MagazineINTERNATIOF ROBOTICS RESEARCHENVIRMODELLING & SOFTWAREIEEE COMMAGAZINEFuJOUNANOPARTICLE RESEARCHMARINE BIOSCIETECHNOLOGY OFFOOD HYDCORROSMICROMICROANALYSISJournal ofBehavior of BiomedicalCOMBUSTICurrenBiomechanicMechanobiologyIEEE JOURSTATE CIRCUITSINTERNATIOF FOOD MICROBIOLOGYInternatioNanomedicineCHEMICALJOURNALAPPLIED MAND BIOTECHNOLOGY2222222222222222222222222222221540-7489P COMBUST INST1057-7149IEEE T IMAGE PROCESS1083-4419IEEE T SYST MAN CY B1452-3981INT J ELECTROCHEM SC0168-1656J BIOTECHNOL1556-603X IEEE COMPUT INTELL M1392-3730J CIV ENG MANAG0020-0255INFORM SCIENCES0109-5641DENT MATER0266-3538COMPOS SCI TECHNOL1388-0764J NANOPART RES1436-2228MAR BIOTECHNOL1548-7660J STAT SOFTW1364-8152ENVIRON MODELL SOFTW0163-6804IEEE COMMUN MAG1615-6846FUEL CELLS1751-6161J MECH BEHAV BIOMED0010-2180COMBUST FLAME0278-3649INT J ROBOT RES0268-005X FOOD HYDROCOLLOID0010-938X CORROS SCI1431-9276MICROSC MICROANAL1178-2013INT J NANOMED1385-8947CHEM ENG J1468-6996SCI TECHNOL ADV MAT1617-7959BIOMECH MODEL MECHAN0018-9200IEEE J SOLID-ST CIRC0168-1605INT J FOOD MICROBIOL0175-7598APPL MICROBIOL BIOT1570-1646CURR PROTEOMICS128 129130131 132133 134 135 136 137 138 139 140 141142 143144 145 146 147 148 149 150 151 152153154155 156157 158Science of AADVABIOCHEMICALENGINEERING /BIOTECHNOLOGYBMC BIOTFOODANNUALINFORMATION SCIENCEAND TECHNOLOGYIEEE INSYSTEMSInnovativeEmerging TechnologiesJournal of Nand RehabilitationPROGRESSSCIENCESBiofIEEE TRANSOFTWARE ENGINEERINGFOODINTERNATIONALJOURNALLEARNING RESEARCHVLDBNanoscaleIEEE ELECLETTERSIEEE TRANINFORMATION THEORYFUEL PTECHNOLOGYJOUSUPERCRITICAL FLUIDSIEEE TRANNEURAL NETWORKSSCRIPTAIBM JORESEARCH ANDJOURNALJOURNALAND COMPATIBLEIEEE IndusMagazineSEPARPURIFICATIONCELGOLDCOMPUTEAND INFRASTRUCTUREENGINEERINGJOURNAL OMATERIALS RESEARCHDYES AN22222222222222222222222222222220066-4200ANNU REV INFORM SCI1541-1672IEEE INTELL SYST0724-6145ADV BIOCHEM ENG BIOT1472-6750BMC BIOTECHNOL0956-7135FOOD CONTROL0098-5589IEEE T SOFTWARE ENG0963-9969FOOD RES INT1947-2935SCI ADV MATER1743-0003J NEUROENG REHABIL0376-0421PROG AEROSP SCI1758-5082BIOFABRICATION0018-9448IEEE T INFORM THEORY0378-3820FUEL PROCESS TECHNOL1466-8564INNOV FOOD SCI EMERG1066-8888VLDB J1931-7573NANOSCALE RES LETT0741-3106IEEE ELECTR DEVICE L0004-5411J ACM0883-9115J BIOACT COMPAT POL1532-4435J MACH LEARN RES1045-9227IEEE T NEURAL NETWOR1359-6462SCRIPTA MATER0018-8646IBM J RES DEV1093-9687COMPUT-AIDED CIV INF1549-3296J BIOMED MATER RES A0896-8446J SUPERCRIT FLUID1383-5866SEP PURIF TECHNOL0969-0239CELLULOSE0017-1557GOLD BULL0143-7208DYES PIGMENTS1932-4529IEEE IND ELECTRON M159 160161162 163164 165166167168 169 170171 172173174 175 176 177178 179 180 181 182 183 184 185 186187 188189JOURNAL OTECHNOLOGYISPRS JPHOTOGRAMMETRY ANDREMOTE SENSINGIEEE PoMagazineCEMENT ARESEARCHIEEE TRANMOBILE COMPUTINGCOMPUTEREnterprisSystemsJournal ofIEEE TRANSIGNAL PROCESSINGREACTIVEPOLYMERSENERGCOMPOSAPPLIED SCIENCE ANDMANUFACTURINGIEEE TRANENERGY CONVERSIONINTEGRATEAIDED ENGINEERINGIEEE TRANGEOSCIENCE AND REMOTESENSINGBIOCENGINEERING JOURNALPATTERNIEEEEARTHQUCOMPREHEIN FOOD SCIENCE ANDFOOD SAFETYFOOD ANTOXICOLOGYIEEE TraRoboticsAUTIEEECOMPUTINGJournal ofARTIFICIALIEEE TRANINTELLIGENTTRANSPORTATIONSYSTEMSRENEWAIEEE-ASMEON MECHATRONICSBIOTE22222222222222222222222222222220360-1315COMPUT EDUC1751-7575ENTERP INF SYST-UK0268-3962J INF TECHNOL1540-7977IEEE POWER ENERGY M0008-8846CEMENT CONCRETE RES1536-1233IEEE T MOBILE COMPUT1359-835X COMPOS PART A-APPL S0885-8969IEEE T ENERGY CONVER0924-2716ISPRS J PHOTOGRAMM1053-587X IEEE T SIGNAL PROCES1381-5148REACT FUNCT POLYM0887-0624ENERG FUEL0272-1732IEEE MICRO8755-2930EARTHQ SPECTRA1570-8268J WEB SEMANT0196-2892IEEE T GEOSCI REMOTE1369-703X BIOCHEM ENG J0031-3203PATTERN RECOGN0005-1098AUTOMATICA1089-7801IEEE INTERNET COMPUT1069-2509INTEGR COMPUT-AID E1541-4337COMPR REV FOOD SCI F0278-6915FOOD CHEM TOXICOL1552-3098IEEE T ROBOT1083-4435IEEE-ASME T MECH0736-6205BIOTECHNIQUES1942-0862MABS-AUSTIN0004-3702ARTIF INTELL1524-9050IEEE T INTELL TRANSP0960-1481RENEW ENERG1556-4959J FIELD ROBOT190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210211 212213214 215 216217 218IEEE TraAutonomous MentalBIOTECPROGRESSJOURNENGINEERINGAUSTRALIAGRAPE AND WINEMEATIEEE PCOMPUTINGINTERNAJOURNALPLANT FOONUTRITIONIEEE TRANPOWER SYSTEMSLEBENWISSENSCHAFT UND-TECHNOLOGIE-FOODSCIENCE ANDJOURNAL OMICROBIOLOGY &BIOTECHNOLOGYCHEMICALSCIENCEIEEE CONTMAGAZINECOMPUINTELLIGENCEEXPERT SAPPLICATIONSIEEE TRANELECTRON DEVICESJOURNEUROPEAN CERAMICIEEE PhoARCCOMPUTATIONALANNALS OENGINEERINGAPPLIED SOINFORMANAGEMENTJournal ofMateriaEngineering C-Materials forJOURNELECTROCHEMICALJOURNAL OTECHNOLOGYJOURNASCIENCETRANSRESEARCH PART B-JOURNALTHERMODYNAMICS222222222222222222222222222220309-1740MEAT SCI1536-1268IEEE PERVAS COMPUT8756-7938BIOTECHNOL PROGR0260-8774J FOOD ENG1322-7130AUST J GRAPE WINE R1367-5435J IND MICROBIOL BIOT0009-2509CHEM ENG SCI1943-0604IEEE T AUTON MENT DE0921-9668PLANT FOOD HUM NUTR0885-8950IEEE T POWER SYST0023-6438LWT-FOOD SCI TECHNOL0955-2219J EUR CERAM SOC1943-0655IEEE PHOTONICS J0958-6946INT DAIRY J0824-7935COMPUT INTELL-US0957-4174EXPERT SYST APPL0018-9383IEEE T ELECTRON DEV1748-0221J INSTRUM0928-4931MAT SCI ENG C-MATER1066-033X IEEE CONTR SYST MAG0090-6964ANN BIOMED ENG1568-4946APPL SOFT COMPUT0378-7206INFORM MANAGE-AMSTER0191-2615TRANSPORT RES B-METH0021-9614J CHEM THERMODYN1134-3060ARCH COMPUT METHOD E0013-4651J ELECTROCHEM SOC0733-8724J LIGHTWAVE TECHNOL0733-5210J CEREAL SCI219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237238 239 240 241 242243 244 245JOURNCOMPOSITION ANDDECISIOSYSTEMSKNOWLINFORMATION SYSTEMSJOURNAL OMATERIALS RESEARCHPART B-APPLIEDBIOMATERIALSCOMPUTEAPPLIED MECHANICS ANDENGINEERINGINTERNATIOF COAL GEOLOGYJOURNAMERICAN SOCIETY FORINFORMATION SCIENCEAND TECHNOLOGYIEEE TRANVISUALIZATION ANDCOMPUTER GRAPHICSDESAJOURNALSCIENCE-MATERIALS INMEDICINEBiomedFUTURECOMPUTER SYSTEMSJOURNALINFORMATION SYSTEMSJOURNAL OCOMPOUNDSPhotonics anFundamentals andIEEE TRANWIRELESSCOMMUNICACMBioinIEEE TRANAUTOMATIC CONTROLMATERIALAND PHYSICSULTRAMUSER MOUSER-ADAPTEDEVOLCOMPUTATIONFOOD QPREFERENCEIEEE WCOMMUNICATIONSIEEE JourTopics in Signal ProcessingSOLA2222222222222222222222222220167-9236DECIS SUPPORT SYST0219-1377KNOWL INF SYST1077-2626IEEE T VIS COMPUT GR0011-9164DESALINATION0889-1575J FOOD COMPOS ANAL0045-7825COMPUT METHOD APPL M0166-5162INT J COAL GEOL1532-2882J AM SOC INF SCI TEC0925-8388J ALLOY COMPD1569-4410PHOTONIC NANOSTRUCT1552-4973J BIOMED MATER RES B1748-6041BIOMED MATER0167-739X FUTURE GENER COMP SY0963-8687J STRATEGIC INF SYST0254-0584MATER CHEM PHYS0304-3991ULTRAMICROSCOPY0957-4530J MATER SCI-MATER M0001-0782COMMUN ACM1934-8630BIOINTERPHASES0018-9286IEEE T AUTOMAT CONTR1932-4553IEEE J-STSP0038-092X SOL ENERGY1536-1276IEEE T WIREL COMMUN1063-6560EVOL COMPUT0950-3293FOOD QUAL PREFER1536-1284IEEE WIREL COMMUN0924-1868USER MODEL USER-ADAP246247 248 249250 251252 253 254255 256257 258259 260261262 263 264 265 266267 268269 270 271 272273JOUMICROELECTROMECHANICAL SYSTEMSJOURNAL OINTERCOMPUTERENGINEERINGIEEE TRANCIRCUITS AND SYSTEMSFOR VIDEO TECHNOLOGYJOURNAL OELECTROCHEMISTRYJOUMANAGEMENTIEEE TRANSYSTEMS MAN ANDCYBERNETICS PART A-SYSTEMS AND HUMANSAICHEJOUBIOMATERIALSENERGY COMANAGEMENTIEEE TraIndustrial InformaticsIEEE TRANBIOMEDICAL ENGINEERINGIEEECOMPOSITIEEE RAUTOMATION MAGAZINECHEMOMINTELLIGENT LABORATORYSYSTEMSINTERNATIOF HEAT AND MASSFLUID PHAIEEE-ACM TON NETWORKINGMATERIANEURAL CInternatioSemantic Web andBUILDENVIRONMENTIEEE JOQUANTUM ELECTRONICSARTIFJOURNAMERICAN CERAMICJOUMICROMECHANICS AND22222222222222222222222222221432-8488J SOLID STATE ELECTR0742-1222J MANAGE INFORM SYST1057-7157J MICROELECTROMECH S0966-9795INTERMETALLICS0098-1354COMPUT CHEM ENG1051-8215IEEE T CIRC SYST VID1551-3203IEEE T IND INFORM0018-9294IEEE T BIO-MED ENG0933-2790J CRYPTOL0001-1541AICHE J0885-3282J BIOMATER APPL0196-8904ENERG CONVERS MANAGE0017-9310INT J HEAT MASS TRAN0378-3812FLUID PHASE EQUILIBR1083-4427IEEE T SYST MAN CY A0263-8223COMPOS STRUCT1070-9932IEEE ROBOT AUTOM MAG0169-7439CHEMOMETR INTELL LAB0360-1323BUILD ENVIRON0018-9197IEEE J QUANTUM ELECT0890-8044IEEE NETWORK0167-577X MATER LETT0899-7667NEURAL COMPUT1552-6283INT J SEMANT WEB INF0002-7820J AM CERAM SOC0960-1317J MICROMECH MICROENG1063-6692IEEE ACM T NETWORK1064-5462ARTIF LIFE274 275 276 277 278 279280 281282 283284 285 286 287288 289290291 292 293 294 295 296IEEE PTECHNOLOGY LETTERSIEEE ENGMEDICINE AND BIOLOGYMAGAZINEINTERNATIOF ADHESION ANDINTERNATIFOR NUMERICAL METHODSIN ENGINEERINGFunctionalIEEE TRANMICROWAVE THEORY ANDTECHNIQUESNEURALJOURNALTECHNOLOGY ANDBIOTECHNOLOGYACM TransaNetworksHYDROMENERGY AMATERIALSENGINEERING A-STRUCTURAL MATERIALSPROPERTIES MICROSTBaltic JourBridge EngineeringINTERNATIOF ELECTRICAL POWER &ENERGY SYSTEMSINDUENGINEERING CHEMISTRYEUROPEJOURNAL EINTERNATIOF MACHINE TOOLS &MANUFACTUREJOUBIOMATERIALS SCIENCE-IEEE TRANSYSTEMS MAN ANDCYBERNETICS PART C-APPLICATIONS AND REJournal of thInformation SystemsMATERIALBULLETINFOOD ADCONTAMINANTSJOURNAL OEDUCATION222222222222222222222221793-6047FUNCT MATER LETT0018-9480IEEE T MICROW THEORY0739-5175IEEE ENG MED BIOL0143-7496INT J ADHES ADHES0029-5981INT J NUMER METH ENG0378-7788ENERG BUILDINGS0921-5093MAT SCI ENG A-STRUCT1041-1135IEEE PHOTONIC TECH L0268-2575J CHEM TECHNOL BIOT1550-4859ACM T SENSOR NETWORK0304-386X HYDROMETALLURGY0890-6955INT J MACH TOOL MANU0920-5063J BIOMAT SCI-POLYM E0893-6080NEURAL NETWORKS0142-0615INT J ELEC POWER0888-5885IND ENG CHEM RES1292-8941EUR PHYS J E1944-0049FOOD ADDIT CONTAM A1069-4730J ENG EDUC1822-427X BALT J ROAD BRIDGE E1094-6977IEEE T SYST MAN CY C1536-9323J ASSOC INF SYST0025-5408MATER RES BULL297298 299300 301 302303 304 305 306 307 308 309 310 311312 313314 315 316 317 318 319 320321322 323 324325 326MACROMATERIALS ANDJournal of FJOURNPROTECTIONCOMPOPERATIONS RESEARCHSYNTHEACM TransaINTERNATIOF APPROXIMATEPOWDERBIOPROBIOSYSTEMSSTRUCTUMONITORING-ANINTERNATIONAL JOURNALTRANSRESEARCH PART A-POLICYMECHANIAND SIGNAL PROCESSINGSURFACETECHNOLOGYIEEE TRANKNOWLEDGE AND DATAENGINEERINGCEMENTCOMPOSITESCOASTALIET NanoDATA MKNOWLEDGE DISCOVERYIEEE TRANANTENNAS ANDMECHANICSJOURNAL OAND ENGINEERINGIEEE TRANNANOTECHNOLOGYELECTROCSOLID STATE LETTERSAPPLIEENGINEERINGSMART MSTRUCTURESSTRUCTUINTERNATIOF ENERGY RESEARCHCOMPULINGUISTICSJournal of DiBIODEG3333333333333333222222222222220379-6779SYNTHETIC MET1559-1131ACM T WEB1756-4646J FUNCT FOODS0362-028X J FOOD PROTECT0305-0548COMPUT OPER RES0965-8564TRANSPORT RES A-POL0888-3270MECH SYST SIGNAL PR1438-7492MACROMOL MATER ENG0032-5910POWDER TECHNOL1615-7591BIOPROC BIOSYST ENG1475-9217STRUCT HEALTH MONIT1751-8741IET NANOBIOTECHNOL1384-5810DATA MIN KNOWL DISC0888-613X INT J APPROX REASON1041-4347IEEE T KNOWL DATA EN0958-9465CEMENT CONCRETE COMP0378-3839COAST ENG1099-0062ELECTROCHEM SOLID ST1359-4311APPL THERM ENG0257-8972SURF COAT TECH0167-6636MECH MATER1226-086X J IND ENG CHEM1536-125X IEEE T NANOTECHNOL1551-319X J DISP TECHNOL0923-9820BIODEGRADATION0018-926X IEEE T ANTENN PROPAG0167-4730STRUCT SAF0363-907X INT J ENERG RES0891-2017COMPUT LINGUIST0964-1726SMART MATER STRUCT327328 329 330 331332 333 334335 336337 338 339 340 341 342 343 344 345 346 347348 349350351 352 353 354MATERIAIEEE MICWIRELESS COMPONENTSLETTERSIEEE TRANBROADCASTINGCHEMICALAND PROCESSINGIEEE-ACMComputational Biology andBioinformaticsFoodPLASMA CPLASMA PROCESSINGOPTICALCHEMIDEPOSITIONSENSACTUATORS A-PHYSICALCOMACM TRANMATHEMATICALJOURNALAND ENGINEERING DATAIEEE TraBiomedical Circuits andACM TRANCOMPUTER SYSTEMSENERGCOMPUTEIMAGE UNDERSTANDINGIEEE TRANMULTIMEDIAARTIFICAPPLIESCIENCEPROGRESCOATINGSIEEE TraInformation Forensics andJOURNALCONTROLINTERNATIOF THERMAL SCIENCESADSORPTIOTHE INTERNATIONALADSORPTION SOCIETYDIAMONDMATERIALSTRANSRESEARCH PART E-LOGISTICS ANDTRANSPORTATION REVIEWTHIN S33333333333333333333333333331531-1309IEEE MICROW WIREL CO0018-9316IEEE T BROADCAST0255-2701CHEM ENG PROCESS0948-1907CHEM VAPOR DEPOS0924-4247SENSOR ACTUAT A-PHYS0261-3069MATER DESIGN1876-4517FOOD SECUR0272-4324PLASMA CHEM PLASMA P0925-3467OPT MATER0734-2071ACM T COMPUT SYST0195-6574ENERG J1545-5963IEEE ACM T COMPUT BI0098-3500ACM T MATH SOFTWARE0021-9568J CHEM ENG DATA1932-4545IEEE T BIOMED CIRC S0300-9440PROG ORG COAT1556-6013IEEE T INF FOREN SEC0018-9162COMPUTER1520-9210IEEE T MULTIMEDIA0160-564X ARTIF ORGANS0169-4332APPL SURF SCI1366-5545TRANSPORT RES E-LOG0040-6090THIN SOLID FILMS1077-3142COMPUT VIS IMAGE UND1290-0729INT J THERM SCI0929-5607ADSORPTION0925-9635DIAM RELAT MATER0959-1524J PROCESS CONTR355 356 357358359 360361362 363 364 365366 367368 369370 371 372373 374375 376 377 378 379 380 381382 383384 385 386387ANNUALCONTROLJOURNAMERICAN OIL CHEMISTSMACRORESEARCHEXPERIMEINTERNATIOF HUMAN-COMPUTERCurrentJOUMICROSCOPY-OXFORDCOMPOSENGINEERINGFoodMEDICAL EPHYSICSGPS SINTERNATIOF DAMAGE MECHANICSBioinspiratioAAPGAPPLIED BAND BIOTECHNOLOGYFOOD ANBULLETININTERNATIOF HEAT AND FLUID FLOWSIAM JOCOMPUTINGMACHINInformPOLYMWJOURNALMATERIALSEMPIRICAENGINEERINGAPPLIEJOURNFRANKLIN INSTITUTE-ENGINEERING ANDAPPLIED MATHEMATICSJOURNALSCIENCESEIET RenGenerationPOLYMADVANCEDKNOWLESYSTEMSINTERNATIOF CIRCUIT THEORY ANDAPPLICATIONSTRANSRESEARCH PART C-EMERGING TECHNOLOGIES3333333333333333333333333333333331573-4137CURR NANOSCI0022-2720J MICROSC-OXFORD1367-5788ANNU REV CONTROL1598-5032MACROMOL RES0723-4864EXP FLUIDS1071-5819INT J HUM-COMPUT ST1056-7895INT J DAMAGE MECH1748-3182BIOINSPIR BIOMIM0003-021X J AM OIL CHEM SOC1557-1858FOOD BIOPHYS1350-4533MED ENG PHYS1080-5370GPS SOLUT0097-5397SIAM J COMPUT0885-6125MACH LEARN1359-8368COMPOS PART B-ENG0273-2289APPL BIOCHEM BIOTECH0379-5721FOOD NUTR BULL0142-727X INT J HEAT FLUID FL1382-3256EMPIR SOFTW ENG1559-128X APPL OPTICS0149-1423AAPG BULL0142-9418POLYM TEST0043-1648WEAR0022-3115J NUCL MATER1042-7147POLYM ADVAN TECHNOL0950-7051KNOWL-BASED SYST1566-2535INFORM FUSION0022-2461J MATER SCI1424-8220SENSORS-BASEL1752-1416IET RENEW POWER GEN0098-9886INT J CIRC THEOR APP0968-090X TRANSPORT RES C-EMER0016-0032J FRANKLIN I388389390 391392 393 394395 396397 398399 400 401 402 403 404 405 406 407408 409410411 412INTERNATIOF ROBUST ANDNONLINEAR CONTROLINTERNATIOF FATIGUEJOURNSCIENCEAPPLIEDMATERIALS SCIENCE &IEEE TRANCIRCUITS AND SYSTEMS I-FUNDAMENTAL THEORYAND APPLICATJOUMICROENCAPSULATIONAPPLIED SIEEE TRANULTRASONICSFERROELECTRICS ANDFREQUENCY CONTROLJOURNALMANAGEMENTAd HoCIRPMANUFACTURINGTECHNOLOGYJOURNALSPRAY TECHNOLOGYIEEE TRANDEVICE AND MATERIALSRELIABILITYACM TRANSOFTWARE ENGINEERINGAND METHODOLOGYEUROPEALIPID SCIENCE ANDMacromolEngineeringCOMPSTRUCTURESMDigesNanomaterials andMODESIMULATION IN MATERIALSSCIENCE ANDENGINEERINGBIOTECHNOIEEE TRANINFORMATIONTECHNOLOGY INBIOMEDICINEMETIEEE SIEEE TRANCONTROL SYSTEMSTECHNOLOGY33333333333333333333333330142-1123INT J FATIGUE0003-7028APPL SPECTROSC0885-3010IEEE T ULTRASON FERR1049-8923INT J ROBUST NONLIN0947-8396APPL PHYS A-MATER1549-8328IEEE T CIRCUITS-I0265-2048J MICROENCAPSUL1530-4388IEEE T DEVICE MAT RE1049-331X ACM T SOFTW ENG METH0022-1147J FOOD SCI1570-8705AD HOC NETW0007-8506CIRP ANN-MANUF TECHN1059-9630J THERM SPRAY TECHN1842-3582DIG J NANOMATER BIOS0965-0393MODEL SIMUL MATER SC1063-8016J DATABASE MANAGE1862-832X MACROMOL REACT ENG0045-7949COMPUT STRUCT1996-1944MATERIALS0740-7459IEEE SOFTWARE1063-6536IEEE T CONTR SYST T1438-7697EUR J LIPID SCI TECH0141-5492BIOTECHNOL LETT1089-7771IEEE T INF TECHNOL B0026-1394METROLOGIA413414 415 416 417 418 419 420 421 422 423 424 425 426 427 428 429430 431432433 434 435 436437438 439 440441JOURNALPROCESSINGDRYING TCOMPUTFORUMINTERNATIOF REFRIGERATION-REVUEINTERNATIONALE DUFROIDNUMERTRANSFER PART A-IEEE MMAGAZINEIMAGECOMPUTINGAUTONOMAND MULTI-AGENTWINDTRIBOLOJOURNALINTELLIGENCE RESEARCHTRIINTERNATIONALMINTERNATIOF REFRACTORY METALS& HARD MATERIALSIEEE TraComputational Intelligence andMACROTHEORY AND SIMULATIONSCONTROLPRACTICENanoscaleThermophysical EngineeringMETALLUMATERIALSTRANSACTIONS A-PHYSICAL METALLURGYAND MATERIALJOURNAL OREASONINGCERAMICSLETTERMICROBIOLOGYIEEE TRANVEHICULAR TECHNOLOGYJournal of thof Chemical EngineersDATA & KENGINEERINGBIOLOGICAIEEE TransSpeech and LanguageJOURNAELECTROCHEMISTRYIEEE CGRAPHICS AND333333333333333333333333333331527-3342IEEE MICROW MAG0262-8856IMAGE VISION COMPUT0924-0136J MATER PROCESS TECH0167-7055COMPUT GRAPH FORUM0140-7007INT J REFRIG1040-7782NUMER HEAT TR A-APPL0301-679X TRIBOL INT0968-4328MICRON0737-3937DRY TECHNOL1095-4244WIND ENERGY1023-8883TRIBOL LETT1076-9757J ARTIF INTELL RES1556-7265NANOSC MICROSC THERM1073-5623METALL MATER TRANS A1387-2532AUTON AGENT MULTI-AG1943-068X IEEE T COMP INTEL AI1022-1344MACROMOL THEOR SIMUL0967-0661CONTROL ENG PRACT1876-1070J TAIWAN INST CHEM E0169-023X DATA KNOWL ENG0263-4368INT J REFRACT MET H0272-8842CERAM INT0266-8254LETT APPL MICROBIOL0018-9545IEEE T VEH TECHNOL0021-891X J APPL ELECTROCHEM0272-1716IEEE COMPUT GRAPH0168-7433J AUTOM REASONING0340-1200BIOL CYBERN1558-7916IEEE T AUDIO SPEECH。

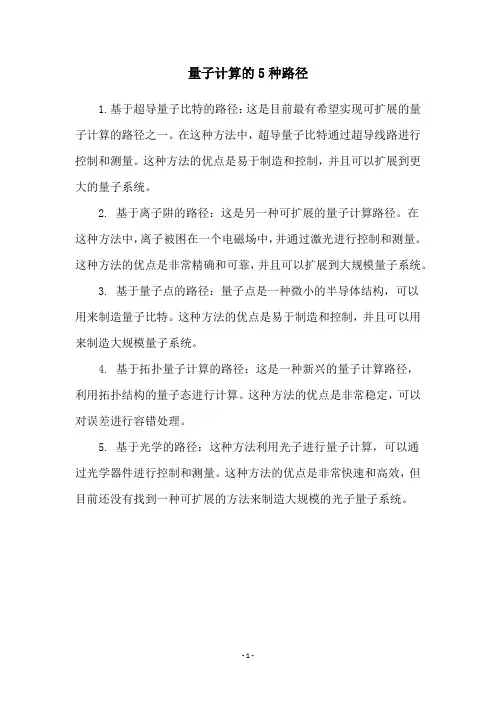

量子计算的5种路径

1.基于超导量子比特的路径:这是目前最有希望实现可扩展的量子计算的路径之一。

在这种方法中,超导量子比特通过超导线路进行控制和测量。

这种方法的优点是易于制造和控制,并且可以扩展到更大的量子系统。

2. 基于离子阱的路径:这是另一种可扩展的量子计算路径。

在

这种方法中,离子被困在一个电磁场中,并通过激光进行控制和测量。

这种方法的优点是非常精确和可靠,并且可以扩展到大规模量子系统。

3. 基于量子点的路径:量子点是一种微小的半导体结构,可以

用来制造量子比特。

这种方法的优点是易于制造和控制,并且可以用来制造大规模量子系统。

4. 基于拓扑量子计算的路径:这是一种新兴的量子计算路径,

利用拓扑结构的量子态进行计算。

这种方法的优点是非常稳定,可以对误差进行容错处理。

5. 基于光学的路径:这种方法利用光子进行量子计算,可以通

过光学器件进行控制和测量。

这种方法的优点是非常快速和高效,但目前还没有找到一种可扩展的方法来制造大规模的光子量子系统。

- 1 -。