Theoretical and Empirical Validation of Software Product Measures

- 格式:pdf

- 大小:65.21 KB

- 文档页数:22

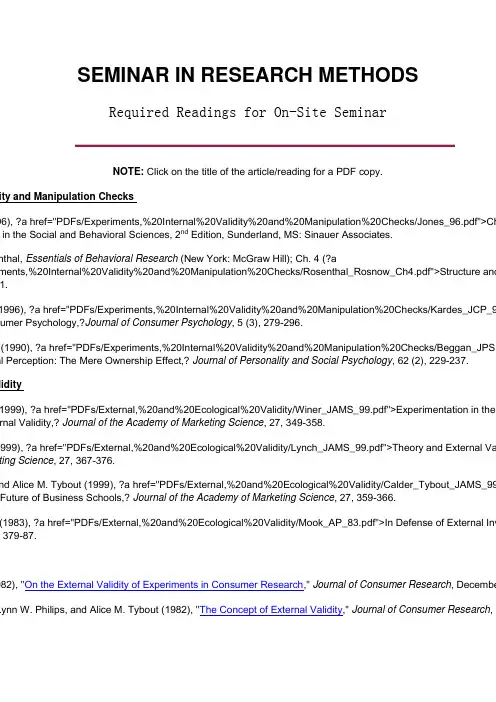

SEMINAR IN RESEARCH METHODS
Required Readings for On-Site Seminar

Experiments, Internal Validity and Manipulation Checks

- Jones (1996), "Ch[…]," in […] in the Social and Behavioral Sciences, 2nd Edition, Sunderland, MA: Sinauer Associates.
- […] Rosenthal, Essentials of Behavioral Research (New York: McGraw Hill); Ch. 4 ("Structure and […]").
- Kardes (1996), "[…] Consumer Psychology," Journal of Consumer Psychology, 5 (3), 279-296.
- Beggan (1990), "[…] Perception: The Mere Ownership Effect," Journal of Personality and Social Psychology, 62 (2), 229-237.

[…] External, and Ecological Validity

- Winer (1999), "Experimentation in the […] Validity," Journal of the Academy of Marketing Science, 27, 349-358.
- Lynch (1999), "Theory and External Validity," Journal of the Academy of Marketing Science, 27, 367-376.
- Calder […] and Alice M. Tybout (1999), "[…] Future of Business Schools," Journal of the Academy of Marketing Science, 27, 359-366.
- Mook (1983), "In Defense of External Inv[…]," […] 379-87.
- […] (1982), "On the External Validity of Experiments in Consumer Research," Journal of Consumer Research, December […].
- […] Lynn W. Philips, and Alice M. Tybout (1982), "The Concept of External Validity," Journal of Consumer Research, D[…].

[…] European Journals

[…] will include a detailed review of 2 current articles published in marketing's leading journals. During the ses[…] makes manuscripts worthy of publishing in the field's best journals. Please read the following very carefully:

- […]astava (2002), "Effect of face value on product valuation in foreign currencies," Journal of Consumer Research, 29 […].
- […] (2004), "Activating Sound and Meaning: The Role of Language Proficiency in Bilingual Consumer Environments," […] 220-8.

[…] Development, & Construct Validation

- […] (1979), "A Paradigm for Developing Better Measures of Marketing Constructs," Journal of Marketing Research, 1[…].
- […], "Construct Validity: A Review of Basic Marketing Practices," Journal of Marketing Research, 18 (May), 133-45.
- […] (1997), "Dimensions of Brand Personality," Journal of Marketing Research, 34 (August), 347-356.
- […] T. and Donald W. Fiske (1959), "Convergent and Discriminant Validation by the Multitrait-Multimethod Matrix," Ps[…] 05.
- […] P. and Youjae Yi (1993), "Multitrait-Multimethod Matrices in Consumer Research: Critique and New Developmen[…]," […] 143-70.

Reliability and Validity

- Peterson, […] A. (1994), "A Meta-Analysis of Cronbach's […]," […] 381-391.
- […], "What Is Coefficient Alpha? An Examination of Theory and Applications," Journal of Applied Psychology, 78, 98-10[…].
- […] and M. Ronald Buckley (1988), "Measurement Error and Theory Testing in Consumer Research: An Empirical Illu[…] Construct Validation," Journal of Consumer Research, 14 (March), 579-82.
- […], "Reliability: A Review of Psychometric Basics and Recent Marketing Practices," Journal of Marketing Research, 16, 6-[…].

[…] Marketing Journal

[…] will include a detailed review of a recently published paper in a premier marketing journal, including reviews, rev[…]:

- […]pt (January 2006)
- […] (From First Round Review, April 11, 2006)
- […]ents (From First Round Review, April 11, 2006)
- First Revision (April 19, 2007)
- […]tor (After First Round Review, April 19, 2007)
- […]iewers (After First Round Review, April 19, 2007)
- Reviewer Comments (From Second Round Review, June 07, 2007)
- Second Revision (October 03, 2007)
- […]tor (After Second Round Review, October 03, 2007)
- […]viewers (After Second Round Review, October 03, 2007)
- […] Letter (November 16, 2007)

[…] Mediators, Contrasts & Interactions

- […] (1986), "The Moderator-Mediator Variable Distinction in Social Psychological Research: Conceptual, Strategic, an[…]," […] Personality and Social Psychology, […] 1173-1182.
- Muller, Charles M. Judd and Vincent Y. Yzerbyt (2005), "When Mode[…]ated," Journal of Personality and Social Psychology, 89 (6), 852-863.

[…]s provide good overviews of these issues:

- […]/cm/mediate.htm
- […]/~davidpm/ripl/mediate.htm
- […]/cm/moderation.htm

Student Samples and Research Ethics

- […] (1986), "College Sophomores in the Laboratory: Influences of a Narrow Data Base on Social Psychology's View of […]," […] Social Psychology, […] 515-530.
- Peterson, […] A. (2001), "On the U[…] Research: Insights from a Second-Order Meta-Analysis," Journal of Consumer Research, 28, 450-461.
- Rosenthal (1994), "Science a[…] Reporting Psychological Research," Psychological Science, 5 (3), 127-134. Plus read short responses by Pomerantz […] and Mann (1994).
- […]ogical Association (2002), "Ethical Principles of Psychologists and Code of Conduct," Washington, DC ([…]/ethics/). Familiarize yourself with the […].

Meta-Analysis and Replications

- Rosenthal […] and M. R. DiMatteo (2001), "[…] Quantitative Methods for Literature Reviews," Annual Review of Psychology, 52, 59-82.
- […]ardo Salas, and Norman Miller (1991), "Using Meta-Analysis to Test Theoretical Hypotheses in Social Psycholog[…]," […], 17 (3), 258-264.
- Hunter (2001), "The Desperate Need for Replications," […]-158.
- Wilk (2001), "The Impossibility and Necessity of Re-Inquir[…]," Journal of Consumer Research, 28, 308-312.

The Importance and Objectives of Research Papers

Research papers play a crucial role in academic and scientific communities as they provide a systematic approach to investigate and explore various subjects. These papers are significant as they contribute to the accumulation of knowledge, advancement of scientific fields, and improvement of society as a whole. This article aims to discuss the significance and objectives of research papers.

Significance of Research Papers

Research papers serve as a foundation that allows researchers to contribute to the existing body of knowledge. They provide an opportunity to thoroughly examine a topic, formulate hypotheses, gather and analyze data, and draw meaningful conclusions. By conducting research and publishing findings, scholars and experts can contribute to the development of their respective fields.

Furthermore, research papers provide a platform for intellectual discourse and debate. They allow researchers to present their work to the scientific community, seek feedback, and engage in discussions that can lead to new ideas and collaborations. This exchange of knowledge and ideas drives innovation and fosters intellectual growth.

Research papers also enable the replication and validation of scientific experiments. Through the detailed documentation of research methodologies, data collection techniques, and analysis procedures, other researchers can replicate studies to ensure the reproducibility of results. This validation process strengthens the credibility and reliability of scientific knowledge.

Objectives of Research Papers

1. Expand Knowledge: The primary objective of research papers is to advance knowledge in a specific field or discipline. By conducting thorough literature reviews and carrying out original research, researchers contribute new insights, theories, or empirical evidence that expands the understanding of a subject.
2. Solve Problems: Research papers aim to address existing problems or gaps in knowledge. They identify research questions, formulate hypotheses, and conduct investigations to provide potential solutions or recommendations. This objective is particularly important in applied research, where the focus is on solving practical problems and improving real-world situations.
3. Test Theories: Another objective of research papers is to test existing theories or propose new ones. Researchers develop hypotheses based on existing knowledge and utilize empirical data to either validate or refute these theories. This objective strengthens theoretical frameworks and helps refine or develop new concepts.
4. Contribute to Policy Development: Research papers can have significant implications for policy development and decision-making. Through rigorous research, policymakers can base their decisions on evidence-based practices and recommendations provided in these papers. This objective ensures that policies and actions are informed, effective, and grounded in solid research.
5. Promote Innovation: Research papers often aim to push the boundaries of knowledge and foster innovation. By providing new perspectives, original data, or alternative approaches, researchers inspire fellow scholars to explore new avenues of research. This objective contributes to the development of novel ideas and solutions to pressing issues.

In conclusion, research papers serve a critical role in academic and scientific communities. They not only expand knowledge but also solve problems, test theories, contribute to policy development, and promote innovation. Through the dissemination of findings, researchers facilitate intellectual exchange and collaboration, leading to the growth and advancement of various fields.

Mechanism validation

Mechanism validation is the process of testing and verifying the underlying principles and assumptions that govern a particular system or process. It involves a rigorous evaluation of the mechanisms that drive a system or process to ensure that they are accurate, effective, and consistent with established theories and models.

Mechanism validation is an important step in scientific research and engineering design, as it helps to ensure that the system or process being studied is reliable and can be trusted to produce consistent and predictable results. This is particularly important in fields such as medicine, where the effectiveness of treatments and drugs depends on the accurate understanding and validation of the underlying mechanisms.

The process of mechanism validation typically involves a combination of empirical testing, mathematical modeling, and theoretical analysis. Empirical testing involves conducting experiments and collecting data to test the predictions of the underlying mechanisms, while mathematical modeling and theoretical analysis involve developing mathematical and conceptual models to explain the observed phenomena.

To ensure that the mechanisms being studied are valid, it is important to carefully consider and test the assumptions that underlie them. These assumptions may include the behavior of the materials or fluids involved, the accuracy of the measurements being used, and the influence of external factors such as temperature, pressure, or humidity.

Overall, mechanism validation is a critical aspect of scientific research and engineering design, as it helps to ensure that the systems and processes being studied are accurate, reliable, and consistent with established theories and models. By rigorously testing and verifying the underlying mechanisms, researchers and engineers can build systems and processes that are effective, efficient, and capable of producing predictable and consistent results.

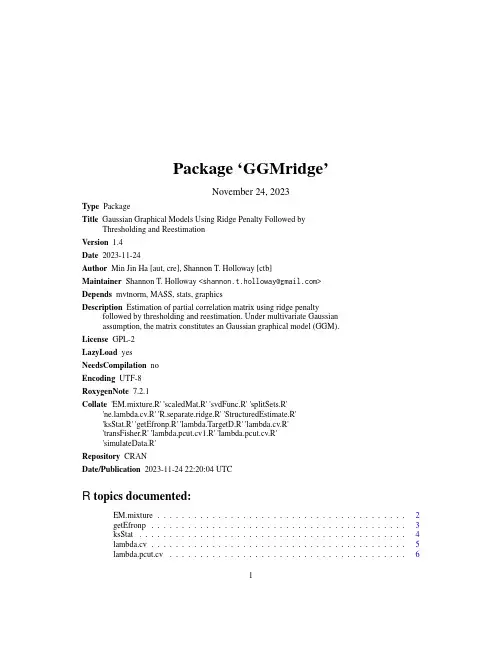

Package 'GGMridge'
November 24, 2023

Type Package
Title Gaussian Graphical Models Using Ridge Penalty Followed by Thresholding and Reestimation
Version 1.4
Date 2023-11-24
Author Min Jin Ha [aut, cre], Shannon T. Holloway [ctb]
Maintainer Shannon T. Holloway <****************************>
Depends mvtnorm, MASS, stats, graphics
Description Estimation of partial correlation matrix using ridge penalty followed by thresholding and reestimation. Under multivariate Gaussian assumption, the matrix constitutes a Gaussian graphical model (GGM).
License GPL-2
LazyLoad yes
NeedsCompilation no
Encoding UTF-8
RoxygenNote 7.2.1
Collate 'EM.mixture.R' 'scaledMat.R' 'svdFunc.R' 'splitSets.R' 'ne.lambda.cv.R' 'R.separate.ridge.R' 'StructuredEstimate.R' 'ksStat.R' 'getEfronp.R' 'lambda.TargetD.R' 'lambda.cv.R' 'transFisher.R' 'lambda.pcut.cv1.R' 'lambda.pcut.cv.R' 'simulateData.R'
Repository CRAN
Date/Publication 2023-11-24 22:20:04 UTC

R topics documented:

- EM.mixture
- getEfronp
- ksStat
- lambda.cv
- lambda.pcut.cv
- lambda.pcut.cv1
- lambda.TargetD
- ne.lambda.cv
- R.separate.ridge
- scaledMat
- simulateData
- structuredEstimate
- transFisher


EM.mixture    Estimation of the mixture distribution using EM algorithm

Description

Estimation of the parameters, null proportion, and degrees of freedom of the exact null density in the mixture distribution.

Usage

    EM.mixture(p, eta0, df, tol)

Arguments

p       A numeric vector representing partial correlation coefficients.
eta0    An initial value for the null proportion; 1-eta0 is the non-null proportion.
df      An initial value for the degrees of freedom of the exact null density.
tol     The tolerance level for convergence.

Value

A list object containing

df      Estimated degrees of freedom of the null density.
eta0    Estimated null proportion.
iter    The number of iterations required to reach convergence.

Author(s)

Min Jin Ha

References

Schafer, J. and Strimmer, K. (2005). An empirical Bayes approach to inferring large-scale gene association networks. Bioinformatics, 21, 754–764.


getEfronp    Estimation of empirical null distribution.

Description

Estimation of empirical null distribution using Efron's central matching.

Usage

    getEfronp(z, bins = 120L, maxQ = 9, pct = 0, pct0 = 0.25, cc = 1.2, plotIt = FALSE)

Arguments

z       A numeric vector of z values following the theoretical normal null distribution.
bins    The number of intervals for density estimation of the marginal density of z.
maxQ    The maximum degree of the polynomial to be considered for density estimation of the marginal density of z.
pct     Low and top (pct*100) […] f(z).
pct0    Low and top (pct0*100) […] estimate f0(z).
cc      The central parts (µ − σ·cc, µ + σ·cc) of the empirical distribution z are used for an estimate of the null proportion (eta).
plotIt  TRUE if density plot is to be produced.

Value

A list containing

correctz    The corrected z values to follow empirically standard normal distribution.
correctp    The corrected p values using the correct z values.
q           The chosen degree of polynomial for the estimated marginal density.
mu0hat      The location parameter for the normal null distribution.
sigma0hat   The scale parameter for the normal null distribution.
eta         The estimated null proportion.

Author(s)

Min Jin Ha

References

Efron, B. (2004). Large-scale simultaneous hypothesis testing. Journal of the American Statistical Association, 99, 96–104.

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    p <- 100  # number of variables
    n <- 50   # sample size

    # Simulate data
    simulation <- simulateData(G = p, etaA = 0.02, n = n, r = 1)
    data <- simulation$data[[1]]
    stddata <- scale(x = data, center = TRUE, scale = TRUE)

    # Estimate ridge parameter
    lambda.array <- seq(from = 0.1, to = 20, by = 0.1) * (n - 1.0)
    fit <- lambda.cv(x = stddata, lambda = lambda.array, fold = 10L)
    lambda <- fit$lambda[which.min(fit$spe)] / (n - 1.0)

    # Calculate partial correlation using ridge inverse
    w.upper <- which(upper.tri(diag(p)))
    partial <- solve(lambda * diag(p) + cor(data))
    partial <- (-scaledMat(x = partial))[w.upper]

    # Get p-values from empirical null distribution
    efron.fit <- getEfronp(z = transFisher(x = partial))


ksStat    The Kolmogorov-Smirnov Statistic for p-Values

Description

Calculates the Kolmogorov-Smirnov statistic for p-values.

Usage

    ksStat(p)

Arguments

p       A numeric vector with p-values.

Value

Kolmogorov-Smirnov statistic

Author(s)

Min Jin Ha

Examples

    p <- stats::runif(100)
    ksStat(p = p)
    ks.test(p, y = "punif")  # compare with ks.test


lambda.cv    Choose the Tuning Parameter of the Ridge Inverse

Description

Choose the tuning parameter of the ridge inverse by minimizing cross validation estimates of the total prediction errors of the p separate ridge regressions.

Usage

    lambda.cv(x, lambda, fold)

Arguments

x       An n by p data matrix.
lambda  A numeric vector of candidate tuning parameters.
fold    fold-cross validation is performed.

Value

A list containing

lambda  The selected tuning parameter, which minimizes the total prediction errors.
spe     The total prediction error for all the candidate lambda values.

Author(s)

Min Jin Ha

References

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    p <- 100  # number of variables
    n <- 50   # sample size

    # Simulate data
    simulation <- simulateData(G = p, etaA = 0.02, n = n, r = 1)
    data <- simulation$data[[1L]]
    stddata <- scale(x = data, center = TRUE, scale = TRUE)

    # Estimate ridge parameter
    lambda.array <- seq(from = 0.1, to = 20, by = 0.1) * (n - 1.0)
    fit <- lambda.cv(x = stddata, lambda = lambda.array, fold = 10L)
    lambda <- fit$lambda[which.min(fit$spe)] / (n - 1.0)

    # Calculate partial correlation using ridge inverse
    partial <- solve(lambda * diag(p) + cor(data))
    partial <- -scaledMat(x = partial)


lambda.pcut.cv    Choose the Tuning Parameter of the Ridge Inverse and Thresholding Level of the Empirical p-Values

Description

Choose the tuning parameter of the ridge inverse and p-value cutoff by minimizing cross validation estimates of the total prediction errors of the p separate ridge regressions.

Usage

    lambda.pcut.cv(x, lambda, pcut, fold = 10L)

Arguments

x       n by p data matrix.
lambda  A vector of candidate tuning parameters.
pcut    A vector of candidate cutoffs of p-values.
fold    fold-cross validation is performed.

Value

The total prediction errors for all lambda (row-wise) and pcut (column-wise).

Author(s)

Min Jin Ha

References

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    p <- 100  # number of variables
    n <- 50   # sample size

    # Simulate data
    simulation <- simulateData(G = p, etaA = 0.02, n = n, r = 1)
    data <- simulation$data[[1L]]
    stddata <- scale(x = data, center = TRUE, scale = TRUE)

    # Selection of a lambda and a p-value cutoff
    lambda.array <- seq(from = 0.1, to = 5, length = 10) * (n - 1.0)
    pcut.array <- seq(from = 0.01, to = 0.05, by = 0.01)
    tpe <- lambda.pcut.cv(x = stddata, lambda = lambda.array,
                          pcut = pcut.array, fold = 3L)
    w.mintpe <- which(tpe == min(tpe), arr.ind = TRUE)
    lambda <- lambda.array[w.mintpe[1L]]
    alpha <- pcut.array[w.mintpe[2L]]


lambda.pcut.cv1    Choose the Tuning Parameter of the Ridge Inverse and Thresholding Level of the Empirical p-Values. Calculate total prediction error for test data after fitting partial correlations from train data for all values of lambda and pcut.

Description

Choose the Tuning Parameter of the Ridge Inverse and Thresholding Level of the Empirical p-Values. Calculate total prediction error for test data after fitting partial correlations from train data for all values of lambda and pcut.

Usage

    lambda.pcut.cv1(train, test, lambda, pcut)

Arguments

train   An n x p data matrix from which the model is fitted.
test    An m x p data matrix from which the model is evaluated.
lambda  A vector of candidate tuning parameters.
pcut    A vector of candidate cutoffs of p-values.

Value

Total prediction error for all the candidate lambda and p-value cutoff values.

Author(s)

Min Jin Ha

References

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    p <- 100  # number of variables
    n <- 50   # sample size

    # Simulate data
    simulation <- simulateData(G = p, etaA = 0.02, n = n, r = 1)
    data <- simulation$data[[1L]]

    # Split into train/test sets
    testindex <- sample(1L:n, 10L)
    train <- data[-testindex, , drop = FALSE]
    stdTrain <- scale(x = train, center = TRUE, scale = TRUE)
    test <- data[testindex, , drop = FALSE]
    stdTest <- scale(x = test, center = TRUE, scale = TRUE)

    # Calculate total prediction errors for all candidate
    # lambda and p-value cutoffs
    lambda.array <- seq(from = 0.1, to = 5, length = 10) * (n - 1.0)
    pcut.array <- seq(from = 0.01, to = 0.05, by = 0.01)
    tpe <- lambda.pcut.cv1(train = stdTrain, test = stdTest,
                           lambda = lambda.array, pcut = pcut.array)


lambda.TargetD    Shrinkage Estimation of a Covariance Matrix Toward an Identity Matrix

Description

Estimation of a weighted average of a sample covariance (correlation) matrix and an identity matrix.

Usage

    lambda.TargetD(x)

Arguments

x       Centered data for covariance shrinkage and standardized data for correlation shrinkage.

Details

An analytical approach to estimate the ridge parameter.

Value

The estimates of shrinkage intensity.

Author(s)

Min Jin Ha

References

Schafer, J. and Strimmer, K. (2005). A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Statistical Applications in Genetics and Molecular Biology, 4, 32.

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    # Simulate data
    simulation <- simulateData(G = 100, etaA = 0.02, n = 50, r = 10)
    dat <- simulation$data[[1L]]
    stddat <- scale(x = dat, center = TRUE, scale = TRUE)
    shrinkage.lambda <- lambda.TargetD(x = stddat)

    # The ridge parameter
    ridge.lambda <- shrinkage.lambda / (1.0 - shrinkage.lambda)

    # Partial correlation matrix
    partial <- solve(cor(dat) + ridge.lambda * diag(ncol(dat)))
    partial <- -scaledMat(x = partial)


ne.lambda.cv    Choose the Tuning Parameter of a Ridge Regression Using Cross-Validation

Description

Choose the tuning parameter of a ridge regression using cross-validation.

Usage

    ne.lambda.cv(y, x, lambda, fold)

Arguments

y       Length n response vector.
x       n x p matrix for covariates with p variables and n sample size.
lambda  A numeric vector for candidate tuning parameters for a ridge regression.
fold    fold-cross validation used to choose the tuning parameter.

Value

A list containing

lambda  The selected tuning parameter, which minimizes the prediction error.
spe     The prediction error for all of the candidate lambda values.

Author(s)

Min Jin Ha

References

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    p <- 100  # number of variables
    n <- 50   # sample size

    # Simulate data
    simulation <- simulateData(G = p, etaA = 0.02, n = n, r = 1)
    data <- simulation$data[[1L]]
    stddat <- scale(x = data, center = TRUE, scale = TRUE)

    X <- stddat[, -1L, drop = FALSE]
    y <- stddat[, 1L]
    ne.lambda <- ne.lambda.cv(y = y, x = X,
                              lambda = seq(from = 0.01, to = 1, by = 0.1),
                              fold = 10L)
    lambda <- ne.lambda$lambda[which.min(ne.lambda$spe)]


R.separate.ridge    Estimation of Partial Correlation Matrix Using p Separate Ridge Regressions.

Description

The partial correlation matrix is estimated by p separate ridge regressions with the parameters selected by cross validation.

Usage

    R.separate.ridge(x, fold, lambda, verbose = FALSE)

Arguments

x        n x p data matrix; n is the # of samples and p is the # of variables.
fold     Ridge parameters are selected by fold-cross validations separately for each regression.
lambda   The candidate ridge parameters for all p ridge regressions.
verbose  TRUE/FALSE; if TRUE, print the procedure.

Value

A list containing

R           The partial correlation matrix.
lambda.sel  The selected tuning parameters for p ridge regressions.

Author(s)

Min Jin Ha

References

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    p <- 100  # number of variables
    n <- 50   # sample size

    # Simulate data
    simulation <- simulateData(G = p, etaA = 0.02, n = n, r = 1)
    data <- simulation$data[[1L]]
    stddata <- scale(x = data, center = TRUE, scale = FALSE)

    # Estimate ridge parameter
    w.upper <- which(upper.tri(diag(p)))
    lambda.array <- seq(from = 0.1, to = 20, by = 0.1) * (n - 1.0)
    partial.sep <- R.separate.ridge(x = stddata, lambda = lambda.array,
                                    fold = 5L, verbose = TRUE)$R[w.upper]


scaledMat    Scale a square matrix

Description

Scale a square matrix to have unit diagonal elements.

Usage

    scaledMat(x)

Arguments

x       A square matrix with positive diagonal elements

Value

Scaled matrix of x.

Author(s)

Min Jin Ha

Examples

    # Simulate data
    simulation <- simulateData(G = 100, etaA = 0.02, n = 50, r = 10)
    dat <- simulation$data[[1L]]
    correlation <- scaledMat(x = stats::cov(dat))


simulateData    Generate Simulation Data from a Random Network.

Description

Generate a random network where both the network structure and the partial correlation coefficients are random. The data matrices are generated from multivariate normal distribution with the covariance matrix corresponding to the network.

Usage

    simulateData(G, etaA, n, r, dist = "mvnorm")

Arguments

G       The number of variables (vertices).
etaA    The proportion of non-null edges among all the G(G-1)/2 edges.
n       The sample size.
r       The number of replicated G by N data matrices.
dist    A function which indicates the distribution of sample. "mvnorm" is multivariate normal distribution and "mvt" is multivariate t distribution with df=2. The default is set by "mvnorm".

Value

A list containing

data             A list, each element containing an n X G matrix of simulated data.
true.partialcor  The partial correlation matrix which the datasets are generated from.
truecor.scaled   The covariance matrix calculated from the partial correlation matrix.
sig.node         The indices of nonzero upper triangle elements of partial correlation matrix.

Author(s)

Min Jin Ha

References

Schafer, J. and Strimmer, K. (2005). An empirical Bayes approach to inferring large-scale gene association networks. Bioinformatics, 21, 754–764.

Examples

    simulation <- simulateData(G = 100, etaA = 0.02, n = 50, r = 10)


structuredEstimate    Estimation of Partial Correlation Matrix Given Zero Structure.

Description

Estimation of nonzero entries of the partial correlation matrix given zero structure.

Usage

    structuredEstimate(x, E)

Arguments

x       n by p data matrix with the number of variables p and sample size n.
E       The row and column indices of zero entries of the partial correlation matrix.

Value

A list containing

R       The partial correlation matrix.
K       The inverse covariance matrix.
RSS     The residual sum of squares.

Author(s)

Min Jin Ha

References

Ha, M. J. and Sun, W. (2014). Partial correlation matrix estimation using ridge penalty followed by thresholding and re-estimation. Biometrics, 70, 762–770.

Examples

    p <- 100  # number of variables
    n <- 50   # sample size

    # Simulate data
    simulation <- simulateData(G = p, etaA = 0.02, n = n, r = 1)
    data <- simulation$data[[1L]]
    stddata <- scale(x = data, center = TRUE, scale = TRUE)

    # Estimate ridge parameter
    lambda.array <- seq(from = 0.1, to = 20, by = 0.1) * (n - 1.0)
    fit <- lambda.cv(x = stddata, lambda = lambda.array, fold = 10L)
    lambda <- fit$lambda[which.min(fit$spe)] / (n - 1)

    # Calculate partial correlation using ridge inverse
    w.upper <- which(upper.tri(diag(p)))
    partial <- solve(lambda * diag(p) + cor(data))
    partial <- (-scaledMat(x = partial))[w.upper]

    # Get p-values from empirical null distribution
    efron.fit <- getEfronp(z = transFisher(x = partial), bins = 50L, maxQ = 13)

    # Estimate the edge set of partial correlation graph with
    # FDR control at level 0.01
    w.array <- which(upper.tri(diag(p)), arr.ind = TRUE)
    th <- 0.01
    wsig <- which(p.adjust(efron.fit$correctp, method = "BH") < th)
    E <- w.array[wsig, ]
    dim(E)

    # Structured estimation
    fit <- structuredEstimate(x = stddata, E = E)
    th.partial <- fit$R


transFisher    Fisher's Z-Transformation

Description

Fisher's Z-transformation of (partial) correlation.

Usage

    transFisher(x)

Arguments

x       A vector having entries between -1 and 1.

Value

Fisher's Z-transformed values.

Author(s)

Min Jin Ha

Examples

    # Simulate data
    simulation <- simulateData(G = 100, etaA = 0.02, n = 50, r = 1)
    dat <- simulation$data[[1L]]
    stddat <- scale(x = dat, center = TRUE, scale = TRUE)
    shrinkage.lambda <- lambda.TargetD(x = stddat)

    # The ridge parameter
    ridge.lambda <- shrinkage.lambda / (1.0 - shrinkage.lambda)

    # Partial correlation matrix
    partial <- solve(cor(dat) + ridge.lambda * diag(ncol(dat)))
    partial <- -scaledMat(x = partial)

    # Fisher's Z transformation of upper diagonal of the partial
    # correlation matrix (indexed by the number of variables, ncol(dat))
    w.upper <- which(upper.tri(diag(ncol(dat))))
    psi <- transFisher(x = partial[w.upper])
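The examples in this manual repeat one core computation: take the ridge inverse of a correlation matrix, rescale it to unit diagonal, negate it to obtain partial correlations, and apply Fisher's Z-transformation to the upper triangle. The following is a minimal base-R sketch of that sequence on random data, written without the package. The helpers `scaled_unit_diag()` and `fisher_z()` are hypothetical stand-ins for `scaledMat()` and `transFisher()`, not the package's own code, and the ridge parameter is fixed by hand instead of being chosen by cross validation.

```r
# Base-R sketch of the ridge-inverse partial correlation steps.
# scaled_unit_diag() and fisher_z() are hypothetical stand-ins for the
# package's scaledMat() and transFisher(); they are not the package code.

set.seed(1)
n <- 50  # sample size
p <- 5   # number of variables
x <- matrix(rnorm(n * p), nrow = n, ncol = p)

# Scale a square matrix with positive diagonal to have unit diagonal.
scaled_unit_diag <- function(m) {
  d <- 1 / sqrt(diag(m))
  m * outer(d, d)
}

# Fisher's Z-transformation: 0.5 * log((1 + r) / (1 - r)).
fisher_z <- function(r) {
  0.5 * log((1 + r) / (1 - r))
}

# Ridge parameter, fixed here purely for illustration (an assumption).
lambda <- 0.5

# Ridge inverse of the sample correlation matrix.
omega <- solve(cor(x) + lambda * diag(p))

# Negating the rescaled inverse gives partial correlations off the diagonal.
partial <- -scaled_unit_diag(omega)
upper <- partial[upper.tri(partial)]

# Z-transformed partial correlations for the p * (p - 1) / 2 variable pairs.
z <- fisher_z(upper)
```

Because the ridge inverse is positive definite, the rescaled matrix has off-diagonal entries strictly inside (-1, 1), so the transformation is always defined on `upper`.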

English essay on the topic of experiments
Title: The Role of Experiments in Scientific Inquiry

In the realm of scientific exploration, experiments serve as indispensable tools for probing hypotheses, testing theories, and unraveling the mysteries of the natural world. Through meticulously designed procedures and systematic observations, experiments offer a pathway to uncovering new knowledge and refining existing understanding. This essay delves into the significance of experiments in scientific inquiry, exploring their essential role in advancing human understanding and driving innovation.

To begin with, experiments provide a controlled environment where variables can be manipulated and outcomes measured with precision. This controlled setting allows scientists to isolate specific factors and observe their effects, thus enabling the establishment of cause-and-effect relationships. By carefully controlling variables and conditions, researchers can minimize external influences and draw reliable conclusions about the phenomena under investigation.

Moreover, experiments offer a means of testing hypotheses formulated through deductive reasoning or inspired by empirical observations. Hypotheses serve as educated guesses or tentative explanations for observed phenomena. Through experimentation, scientists subject these hypotheses to empirical scrutiny, either confirming or refuting their validity based on the evidence gathered. This iterative process of hypothesis testing lies at the heart of scientific inquiry, driving the advancement of knowledge and the refinement of theories.

Furthermore, experiments foster a spirit of curiosity and exploration, encouraging scientists to push the boundaries of existing knowledge and explore new frontiers. By posing questions and designing experiments to address them, researchers embark on a journey of discovery, driven by a desire to unravel the mysteries of the universe. Whether exploring the depths of outer space or the intricacies of subatomic particles, experiments serve as vehicles for human ingenuity and exploration, fueling the quest for understanding.

Additionally, experiments play a crucial role in validating scientific theories and models, providing empirical support for theoretical frameworks. Theories serve as overarching explanations for natural phenomena, offering insights into the underlying principles governing the universe. Through experimentation, scientists gather empirical evidence to either support or challenge these theories, refining and revising them in light of new data. This symbiotic relationship between theory and experiment forms the cornerstone of scientific progress, fostering a dynamic interplay between observation and theory-building.

Moreover, experiments drive technological innovation and practical applications, translating theoretical insights into real-world solutions. From the development of new materials and medicines to the optimization of industrial processes, experiments underpin technological advancements that shape the course of human civilization. By applying scientific principles to practical problems, researchers harness the power of experimentation to drive innovation and improve the quality of life for people around the globe.

In conclusion, experiments occupy a central position in the landscape of scientific inquiry, serving as catalysts for discovery, innovation, and understanding. By providing a systematic framework for hypothesis testing, empirical validation, and theory building, experiments empower scientists to unravel the mysteries of the natural world and push the boundaries of human knowledge. As we continue to harness the power of experimentation to explore new frontiers and tackle complex challenges, we embark on a journey of discovery that promises to enrich our understanding of the universe and transform the world we inhabit.

Path Dependence and the Validation of Agent-based Spatial Models of Land Use

DANIEL G. BROWN, SCOTT PAGE, RICK RIOLO, MOIRA ZELLNER and WILLIAM RAND

In this paper, we identify two distinct notions of accuracy of land-use models and highlight a tension between them. A model can have predictive accuracy: its predicted land-use pattern can be highly correlated with the actual land-use pattern. A model can also have process accuracy: the process by which locations or land-use patterns are determined can be consistent with real-world processes. To balance these two potentially conflicting motivations, we introduce the concept of the invariant region, i.e., the area where land-use type is almost certain, and thus path independent; and the variant region, i.e., the area where land use depends on a particular series of events, and is thus path dependent. We demonstrate our methods using an agent-based land-use model and using multi-temporal land-use data collected for Washtenaw County, Michigan, USA. The results indicate that, using the methods we describe, researchers can improve their ability to communicate how well their model performs, the situations or instances in which it does not perform well, and the cases in which it is relatively unlikely to predict well because of either path dependence or stochastic uncertainty.

Keywords: Agent-based modeling; Land-use change; Urban sprawl; Model validation; Complex systems

1. Introduction

The rise of models that represent the functioning of complex adaptive systems has led to an increased awareness of the possibility for path dependency and multiple equilibria in economic and ecological systems in general and spatial land-use systems in particular (Atkinson and Oleson 1996, Wilson 2000, Balmann 2001). 
Path dependence arises from negative and positive feedbacks. Negative feedbacks in the form of spatial dis-amenities rule out some patterns of development, and positive feedbacks from roads and other infrastructure and from service centers reinforce existing paths (Arthur 1988, Arthur 1989). Thus, a small random component in location decisions can lead to large deviations in settlement patterns which could not result were those feedbacks not present (Atkinson and Oleson 1996). Concurrent with this awareness of the unpredictability of settlement patterns has been an increased availability of spatial data within geographic information systems (GIS). This has led to greater emphasis on the validation of spatial land-use models (Costanza 1989, Pontius 2000, 2002, Kok et al. 2001). These two scientific advances, one theoretical and one empirical, have led to two contradictory impulses in land-use modeling: the desire for increased accuracy of prediction and the recognition of unpredictability in the process. This paper addresses the balance between these two impulses: the desire for accuracy of prediction and accuracy of process.

Accuracy of prediction refers to the resemblance of model output to data about the environments and regions the models are meant to describe, usually measured as either aggregate similarity or spatial similarity. Aggregate similarity refers to similarities in statistics that describe the mapped pattern of land use, such as the distributions of sizes of developed clusters, the functional relationship between distance to city center and density (Batty and Longley 1994, Makse et al. 1998, Andersson et al. 2002, Rand et al. 2003), or landscape pattern metrics developed within the landscape ecology literature (e.g., McGarigal and Marks 1995) to measure the degree of fragmentation in the landscape (Parker and Meretsky 2004).

Spatial similarity refers to the degree of match between land-use maps and a single run or summary of multiple runs of a land-use model. 
The most common approaches build on the basic error matrix approach (Congalton 1991), by which agreement can be summarized using the kappa statistic (Cohen 1960). Pontius (2000) has developed map comparison methods for model validation that partition total errors into those due to the amounts of each land-use type and those due to their locations. Because models rely on generalizations of reality, spatial similarity measures must be considered in light of their scale; the coarser the partition, the easier the matching task becomes (Costanza 1989, Kok et al. 2001, Pontius 2002 and Hagen 2003).

Because spatial patterns contain more information than can be captured by a handful of aggregate statistics, validation using spatial similarity raises the empirical bar over aggregate similarity. However, as we shall demonstrate in this paper, demanding that modelers get the locations right may be asking too much.

Human decision-making is rarely deterministic, and land-use models commonly include stochastic processes as a result (e.g., in the use of random utility theory; Irwin and Geoghegan 2001). Many models, therefore, produce varying results because of stochastic uncertainty in their processes. Further, to represent the feedback processes, land-use modelers are making increasing use of cellular automata (Batty and Xie 1994, Clarke et al. 1996, White and Engelen 1997) and agent-based simulation (Balmann 2001, Rand et al. 2002, Parker and Meretsky 2004). These and other modeling approaches that can represent feedbacks can exhibit spatial path dependence, i.e., the spatial patterns that result can be very sensitive to slight differences in processes or initial conditions. How sensitive depends upon specific attributes of the model. 
Given the presence of path dependence and the effect it can have on magnifying uncertainties in land-use models, any model that consistently returns spatial patterns in which the locations of land uses are similar to the real world could be overfit, i.e., it may represent the outcomes of a particular case well but the description of the process may not be generalizable.

We believe that the situation creates an imposing challenge: to make accurate predictions, but to admit the inability to be completely accurate owing to path dependence and stochastic uncertainty. If we pursue only the first part of the dictum at the expense of the second part, we encourage a tendency towards overfitting, in which the model is constrained by more and more information such that its ability to run in the absence of data (e.g., in the future) or to predict surprising results is reduced. If we emphasize the latter, then we abandon hope of predicting those spatial properties that are path or state invariant. Though it is reasonable to ask, "can the model predict past behavior?" the answer to this question depends as much on the dynamic feedbacks and non-linearities of the system itself as on the accuracy of the model. Therefore, the more important question is "are the mechanisms and parameters of the model correct?"

In this paper we describe and demonstrate an approach to model validation that acknowledges path dependence in land-use models. The invariant-variant method enables us to determine what we know and what we don't know spatially. 
Although we can only make limited interpretations about the amount of path dependence that we see for any one model applied to a particular landscape, comparing across a wide range of models and landscape patterns should allow us to understand if a model contains an appropriate level of path dependence and/or stochasticity.

2. An agent-based model of land development

The model we use to illustrate the validation methods was developed in Swarm, a multipurpose agent-based modeling platform. In the model, agents choose locations on a heterogeneous two-dimensional landscape. The spatial patterns of development are the result of agent behaviors.

We developed this simple model for the purposes of experimentation and pedagogy, and present it as a means to illustrate the validation methods. These concerns created an incentive for simplicity. We needed to be able to accomplish hundreds, if not thousands, of runs in a reasonable time period and to be able to understand the driving forces under different assumptions. We could not have a model with dynamics that were so complicated that neither we nor our readers could understand them intuitively. Thus, the modeling decisions have tended to err, if anything, on the side of parsimony. We describe each of the three primary parts of the model: the environment, the agents, and the agents' interaction with the environment.

Each location on the landscape (i.e., a lattice) has three characteristics: a score for aesthetic quality scaled to the interval [0, 1], the presence or absence of initial service centers, and an average distance to services, which is updated at each step. On our artificial landscapes we calculate service-center distance as Euclidean distance. When we are working with a real landscape, we incorporate the road network into the distance calculation. 
We simplified the calculation of road distance by calculating, first, the straight-line distance to the nearest point on the nearest road, then the straight-line distance from that point to the nearest service center. This approach is likely to underestimate the true road distance, but provides a reasonable approximation that is much quicker to calculate and incorporates the most salient features of road networks.

3. Validation methods

This section describes the two primary approaches to validation that we demonstrate in this paper: aggregate validation with pattern metrics and the invariant-variant method. Each method is used to compare the agent-based model with a reference map and is demonstrated for several cases, which are described in Section 4.

To perform statistical validation we make use of landscape pattern metrics, originally developed for landscape ecological investigations. These metrics are included for comparison with our new method. The primary appeal of landscape pattern metrics in validation is that they can characterize several different aspects of the global patterns that emerge from the model (Parker and Meretsky 2004), and they describe the patterns in a way that relates them to the ecological impacts of land-use change.

In our approach, we distinguish between those locations that the model always predicts as developed or undeveloped (the invariant region) and those locations that sometimes get developed and sometimes do not (the variant region). Before describing how we construct these regions and their usefulness, we first describe a more standard approach to measuring spatial similarity in a restricted case.

Suppose a run of a model locates a land-use type (e.g., development) at M sites among N possible sites, where M is also the number of sites at which the land use is found in the reference map. We could ask how accurately that model run predicted the exact locations. 
First count the number of the M developed locations predicted by the model that are correct (C). The remaining M - C locations that the model predicts are, therefore, incorrect. We can also partition the M developed locations in the reference map into two types: those predicted correctly (C) and those predicted incorrectly (M - C), and calculate user's and producer's accuracies for the developed class, which are identical in this situation, as C/M.

4. Demonstrations of model validation methods

We ran multiple experiments with our agent-based model to illustrate both the importance of path dependence and the utility of the validation methods. First, we created artificial landscapes as experimental situations in which the "true" process and outcome are known perfectly, which is not possible using real-world data. Next, we used data on land-use change collected and analyzed over Washtenaw County, Michigan, which contains Ann Arbor and is immediately west of Detroit. The primary goal of the latter demonstration was to analyze the effects of different starting times on path dependence and model accuracy using real data.

Our initial demonstrations, Cases 1.1 through 1.5 below, were designed to test for two influences on path dependence: agent behavior and the environmental features. For all of these demonstrations, we randomly selected a single run of the model as the reference map. We, therefore, compared the model to a reference map that, by definition, was generated by exactly the same process, i.e., a 100% correct model. Any differences between the model runs and the reference map were, therefore, indicative of inherent unpredictability of the system, due to either stochastic uncertainty or path dependence, and not of any flaw or weakness in the model.

The next demonstrations (Cases 2.1 and 2.2) were intended to illustrate how too much focus on getting a strong spatial similarity between model patterns of land use and the reference map can lead one to construct an overfitted model. 
For both of these cases, we used the landscape with variable aesthetic quality in two peaks described above, and the parameter values listed in Table 1. In each case 10 residents entered per time step, with one new service center per 20 residents. Each run resulted in highly path-dependent development, i.e., almost all development is on one peak or another, depending only on the choice of early settlers. We selected one run of this model as the reference map, deliberately choosing a run in which the peak of $q_{x,y}$ to the northwest was developed. This selected run we designated as the "true history" against which we wished to validate our model. The first comparison with this reference map (Case 2.1) was to assume, as before, that we knew the actual process generating the true history, i.e., we ran the same model multiple times with different random seeds.

5. Results

The results from the first five cases (with parameters set as in Table 1) indicated that the degree of predictability in the models was affected by both the behavior of the agents and the pattern of environmental variability (Table 3). The landscape pattern metric values from any given case were never significantly different from the reference map, with the possible exception of MNN in Case 1.1. One striking result, however, given that the reference maps were created by the same models, is that the overall prediction accuracies were as low as 22 percent (Case 1.4), a result of the strongly path-dependent development exhibited in some of these cases. This accuracy level would probably be too low to convince referees or policy analysts to accept the model, and yet the model is perfectly accurate.

The overall prediction accuracy, and the size and accuracy of the invariant region, increased both when positive feedbacks were added to encourage development near existing development (Case 1.2) and when, in addition, the agents were responding to a variable pattern of aesthetic quality (Case 1.3). 
In addition to improving the size and predictability within the invariant regions, these changes had the effect of increasing the predictive ability of the model in the variant region as well (i.e., VC/VRD). This means that, where the model was less consistent in its prediction, it still made increasingly better predictions than random.

6. Discussion and conclusions

In this paper, we have introduced the invariant-variant method to assess the accuracy and variability of outcomes of spatial agent-based land-use models. This method advances existing techniques that measure spatial similarity. Most importantly, it helps us come to terms with a fundamental tension in land-use modeling: the emphasis on accurate prediction of location and the recognition of path dependence and stochastic uncertainty. The methods described here should apply to any land-use models that have the potential to generate multiple outcomes. They would not apply to models that are deterministic, and therefore make a single prediction of settlement patterns. By definition, deterministic models cannot generate path dependence unless one considers the impact of interventions. In that case, our approach would be applicable, with the invariant region being that portion of the region that is developed regardless of the policy intervention.

Our proposed distinction between invariant and variant regions is a crude measure, but one that allows researchers to better understand the processes that lead to accurate (or inaccurate) predictions by their models. With it we can distinguish between models that always get something right, and those that always get different things right. And that difference matters. It may be possible to further develop the statistical properties of the most useful of these and similar measures. 
Such measures will enable us to categorize environments and actors who create systems for which any accurate model will have low predictive accuracy and those who create systems for which we should demand high accuracy.

We expect that, over time and by comparing across models, we can understand what landscape attributes and behavioral characteristics lead to greater or lesser predictability as captured by the relative size of the invariant region. For example, homogeneity in the environment increases unpredictability because the number of paths becomes unwieldy. Admittedly, size of the invariant region is not the only possible measure of predictability, but it is a useful one. A large invariant region suggests a predictable settlement pattern. A small invariant region implies that history or even single events matter.

Our analysis emphasized path dependence as opposed to stochastic uncertainty because of our interest, and that of many land-use modelers, in policy intervention. Stochastic uncertainty, like the weather, is something we can all complain about but not affect. Path dependence, at least in theory, offers the opportunity for intervention. If we know that two paths of development patterns are possible, then we might be able to influence the process, through policy and the use of what Holland called "lever points," such that the most desirable path, on some measure, emerges (Holland 1995, Gladwell 2000). Path dependence makes fitting a model more difficult and may tempt modelers to overfit the data, since often the one actual path of development depends on specific details that influence the choices of early settlers. 
On the other hand, path dependence creates the possibility of policy leverage.

The results of running the model from multiple starting times in the history (and pseudo-history) of Washtenaw County, Michigan, seem somewhat counterintuitive at first, in that the overall match of the locations of newly settled agents with those in the 1995 map decreased with increasing information (i.e., later starting times). However, the additional metrics tell more of the story. The model actually improved with later starting times when the matches were compared with the numbers that would be expected at random. Fewer agents entering the landscape at the later times means relatively more possible combinations of places they can locate in the undeveloped part of the map. The model does reasonably well at predicting the aggregate patterns, matching three of the four metrics, partially because much of the aggregate pattern is predetermined in the initial maps. The fact that three of the mean pattern-metric values were statistically indistinguishable from the 1995 values when starting at even the earliest dates, however, suggests that the match is not only due to the initial map information. The size of the invariant developed region declined with later starting times, but became more accurate. When we located fewer residents, we were much less likely to see them locate consistently, rightly or wrongly. Further, within the variant region, the model located residents less well than would be expected with simply random location. This suggests that some features were missing or structurally wrong in our model. 
Two possibilities are that our map of aesthetic quality in the outlying areas does not accurately reflect preferences, or that soil qualities or some other willingness-to-sell characteristic of locations contributes to where settlements occur.

The comparison between Cases 2.1 and 2.2 highlights the importance of recognizing path dependence in land-use change processes and the dangers of overfitting the model to data in the modeling processes. This danger, i.e., that the model will match the outcome of a particular case well but misrepresent the process, is endemic to land-use change models. Many models of land-use change are developed through calibration and statistical fitting to observed changes, derived from remotely sensed and GIS data sets. This rather extreme example makes the point that, even though the outcomes of the model may match the reference map in meaningful ways, e.g., both statistically and spatially, we cannot necessarily conclude that the processes contained in the model are correct. If the processes are not well represented, of course, then we possess limited ability to evaluate policy outcomes, for example by changing incentives or creating zones that limit certain activities on the landscape.

In the context of the foregoing discussion, it is useful to reflect on how to proceed with model development. If we use the results from Washtenaw County as an indication of the validity of the model and wish to improve its validity, what should be our next steps? There are a number of factors that we did not include in our model that could be included in agent decision-making. These include the price of land, zoning, the different kinds of residential, commercial, and industrial developments, a different representation of roads and distances, and the presence of areas restricted for development (like parks). Any of these factors could be included in the model in a way that would improve the fit of the output to the 1995 map. 
But each new factor we add will have associated with it parameters that need to be set. As soon as we start fitting these parameters according to the values that produce outputs that best fit the data, we run the risk of losing control of the process-based understanding that models of this sort help us grapple with. As we proceed, the question becomes: are we interested in fitting the data or understanding the process?

A Study of Path Dependence Based on the Use of Spatial Models

Authors: Daniel G. Brown; Scott Page; Rick Riolo; Moira Zellner; William Rand

In this paper, we identify two clear yet quite different notions of model accuracy and highlight the tension between them.
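The two location-based measures described in Section 3 of the land-use paper above, the single-run accuracy C/M and the split into invariant and variant regions, can be illustrated with a minimal Python sketch. It is not the authors' implementation: the grid encoding (1 = developed, 0 = undeveloped), the function names, the region labels and the `threshold` parameter are all assumptions made for illustration. With the default `threshold` of 1.0, a cell is invariant only if every run agrees, which matches the "always predicts" wording; a lower value would approximate "almost certain".

```python
from typing import List, Sequence

Grid = List[List[int]]  # assumed encoding: 1 = developed, 0 = undeveloped


def location_accuracy(predicted: Grid, reference: Grid) -> float:
    """Return C/M for the developed class.

    Assumes, as in the restricted case in the text, that the model run and
    the reference map both contain the same number M of developed cells.
    """
    m_pred = sum(sum(row) for row in predicted)
    m_ref = sum(sum(row) for row in reference)
    if m_ref == 0 or m_pred != m_ref:
        raise ValueError("both maps need the same non-zero number of developed cells")
    # C = developed cells in the prediction that are also developed in the reference
    correct = sum(
        p * r
        for p_row, r_row in zip(predicted, reference)
        for p, r in zip(p_row, r_row)
    )
    return correct / m_ref


def partition_regions(runs: Sequence[Grid], threshold: float = 1.0) -> List[List[str]]:
    """Label each cell across many runs (labels are hypothetical names).

    "ID" = invariant developed, "IU" = invariant undeveloped, "V" = variant.
    """
    if not runs:
        raise ValueError("at least one model run is required")
    n_runs = len(runs)
    n_rows, n_cols = len(runs[0]), len(runs[0][0])
    labels: List[List[str]] = []
    for i in range(n_rows):
        row_labels = []
        for j in range(n_cols):
            # share of runs in which this cell ends up developed
            share = sum(run[i][j] for run in runs) / n_runs
            if share >= threshold:
                row_labels.append("ID")
            elif share <= 1.0 - threshold:
                row_labels.append("IU")
            else:
                row_labels.append("V")
        labels.append(row_labels)
    return labels
```

Comparing the invariant cells against a reference map then separates what the model predicts consistently from what depends on the particular path taken in a run.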

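Interpersonal Interaction of Junior High School Students and Its Relationship with Self-Consciousness

CHEN Xia-fang (1), ZHENG Quan-quan (1), ZHONG Ying (2)

(1. Department of Psychology and Behavioral Science, Zhejiang University, Hangzhou 310028, China; 2. Department of Psychology, East China Normal University, Shanghai 200062, China)

Abstract. Objective: To study the interpersonal interaction of junior high school students and its relationship with self-consciousness. Methods: 265 junior high school students were given a sociometric measurement of interpersonal relationships and, one week later, were administered the Piers-Harris children's self-consciousness questionnaire. Results: (1) Interaction exists in the interpersonal relationships of junior high school students. (2) Self-consciousness regarding behavior and anxiety differed significantly between grades, and self-consciousness on the anxiety dimension differed significantly between genders. (3) The intelligence and school status dimension of self-consciousness significantly affected students' level of individual acceptance. Conclusion: Interaction exists in the interpersonal relationships of junior high school students and is influenced by particular dimensions of self-consciousness.

Key words: interpersonal interaction; social exchange behavior; self-consciousness

CLC number: R395.6. Document code: A. Article ID: 1005-3611(2005)04-0443-03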

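A Corrected Method for Predicting the Added Wave Resistance of a VLCC Based on Theoretical and Empirical Calculations

YANG Chunlei; ZHU Renchuan; FAN Tao; ZHOU Wenjun; MIAO Guoping

Abstract: This paper predicts added wave resistance using a new theoretical method and an empirical formula method, and through comparative analysis gives an improved formula that is practical for engineering use. Because of its underlying assumptions, the potential flow method has limited accuracy in predicting added resistance in short waves, while the empirical method applies poorly across hull forms. A VLCC advancing in head waves is taken as an example, and calculations considering the effects of draft and speed are verified and compared. A three-dimensional panel method based on translating and pulsating sources on horizontal line segments is used to solve the velocity potential problem; the added resistance due to radiation is then obtained with the three-dimensional hull radiation energy method, and the added resistance due to diffraction is calculated from the formula for the steady component of the second-order wave force. The empirical method is the STAWAVE2 method recommended by ISO 15016:2015. The applicability of the methods is examined by analyzing the radiation and diffraction added resistance curves, the correction coefficients of the semi-empirical method are studied, and improved formulas reflecting the hull-form and speed characteristics of similar ships are given.

Journal: Journal of Ship Mechanics

Year (volume), issue: 2018 (022) 012

Pages: 12 (P1483-1494)

Keywords: added resistance due to radiation; added resistance due to diffraction; Green's function; three-dimensional panel method; STAWAVE2 method

Affiliations: Jiangnan Institute of Technology, Jiangnan Shipyard (Group) Co., Ltd., Shanghai 201913; School of Naval Architecture, Ocean and Civil Engineering, State Key Laboratory of Ocean Engineering, Shanghai Jiao Tong University, Shanghai 200240; Collaborative Innovation Center for Advanced Ship and Deep-Sea Exploration, Shanghai 200240

Language of the text: Chinese

CLC number: U661.3

0 Introduction

Due to increasingly stringent requirements for safety enhancement and emissions reduction, prediction of the added wave resistance of ships is becoming more and more important. An assessment procedure for minimum power is provided in the MEPC (Marine Environment Protection Committee) 232(65) resolution, in order that ships complying with the EEDI (Energy Efficiency Design Index) regulation maintain maneuverability in adverse conditions. The evaluation of the EEDI, which concerns the greenhouse gas emissions of ships in navigation, has been enforced since 1 January 2013 in the MEPC 232(65) resolution. An effective way to improve the EEDI is to reduce speed, but doing so can conflict with the minimum power requirement calculated from minimum power lines, especially for large ships. In such cases a more accurate method for predicting the added resistance should be used. Conservative formulas for the added resistance are not suited to the careful balancing of the requirements of the two resolutions mentioned above. Moreover, the accuracy of the added resistance is key to the final EEDI report, which is obtained from sea trial data corrected according to ISO 15016:2015 [1]. Model tests can be conducted for the 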
most reliable prediction when circumstances permit, but cost and time often preclude such tests. Therefore, the investigation and validation of empirical and numerical methods are becoming more and more important.

The procedures recommended by the ITTC [2] for calculating added wave resistance have been adopted by ISO. They include the empirical methods from the STA-JIP (Sea Trial Analysis Joint Industry Project), namely STAWAVE1 and STAWAVE2, and the semi-theoretical method of the NMRI (National Maritime Research Institute). However, each of these methods is applicable only within limits of ship type, draft, wave frequency and other factors. The Specialist Committee on Performance of Ships in Service (PSS Committee) [3] selected these three methods and six types of ships for a comparison study of added resistance, to understand which method is best for the correction of speed/power trials. The results show that the NMRI method combined with tank model tests in short waves gives considerable accuracy. The STAWAVE2 method is convenient and preferred at the early design stage in the range of intermediate and short waves for fine hull lines, because its empirical formulas for the diffraction resistance are relatively rational. The NMRI method has a better theoretical basis for the radiation resistance, and is more comprehensive for the diffraction resistance because it accommodates corrections for the speed effect and the waterline of the hull. The NMRI method recommended by the PSS Committee, although better than most others, lacks transparency and generality in its numerical treatment of the 3D potential in the flow field and the Kochin function. Notably, prediction of the added resistance in short waves at about λ/Lpp = 0.3 relies on empirical parameters for the speed and draft effects, whether a theoretical or an empirical method is employed. For a specialist ship, practical predictions are based on in-house data relating to a similar basis 
ship. The difficulty in accumulating such data lies in how to correct them through combined re-analysis with modern and older methods.

Within the framework of linear potential flow theory, two methods are available to estimate the added resistance: the far-field and near-field methods. The far-field method was first introduced by Maruo [4], who derived the equation of the steady second-order force by calculating the change of momentum for radiated wave energy. Joosen [5] revised the added resistance method based on Maruo's method, and the NMRI adopted this method for added resistance due to ship motions. Maruo's method gives considerable accuracy for cruiser-stern ships, but does not apply to hull forms with a bulbous bow. Joosen's method improved the accuracy of the results for transom-stern ships, but does not apply to cruiser-stern ships. Gerritsma [6] introduced the radiation energy method, which is based on the far-field method. Salvesen (1978) [7] investigated added resistance by calculating second-order terms of disturbing forces and moments. Strøm [8] evaluated these methods and concluded that the prediction of added resistance depends on the range of ship forms and speeds. Faltinsen (1980) calculated added resistance by integrating the pressure on the average wetted surface of the ship hull, based on the near-field method, with good validation results. It is important to note that the added resistance is a second-order quantity, and its accurate prediction depends on an accurate solution of the motion response, which in turn requires an accurate solution of the velocity potential.

Even though the versatility and accuracy of the empirical approach of the ITTC are still debated, international organizations are willing to promote and improve such methods because the calculation is convenient and transparent. For practical prediction by designers, corrected empirical formulas are established from tank test data and theoretical results. Jinkine [9] developed an estimation formula of added resistance 
including the principal dimensions of ships for long wavelengths; the values for short wavelengths, however, should be corrected. Havelock [10] derived the steady drag formula for fixed vertical cylinders. Fujii [11] established semi-empirical formulas for the diffraction resistance based on Havelock. Kuroda [12-13] further corrected the Fujii formula. MARIN [14] calculated the added resistance due to diffraction and radiation following the Jinkine method, and formed a basis for the STAWAVE2 method. Though the empirical formulas give considerable accuracy, estimation based on an in-house database is important.

In this paper, based on the existing data of the basis ships at Jiangnan Shipyard, the theoretical and the empirical methods are investigated in order to establish an improved model for predicting the added resistance of VLCC-type ships with better accuracy and reliability. A 3-D panel method based on the horizontal-line-segment translating and pulsating source panel is used to solve the potential problem. The added resistance due to radiation is obtained by the 3D radiation energy method for ships, while that due to diffraction is obtained by the second-order steady-state force formula. The STAWAVE2 method is adopted as the semi-empirical method. A comparative study is conducted and the advantages of the different methods are analyzed. Revised formulas reflecting the characteristics of the VLCC are validated and show good agreement with experimental data.

1 Theories of potential flow and added resistance

1.1 Potential flow theory

Consider a right-handed orthogonal coordinate system fixed at the mean position of the ship, with the x-axis in the direction of constant forward speed and the z-axis pointing vertically upwards through the center of gravity of the ship. The ship is advancing at a constant mean forward speed $U_0$ in sinusoidal regular waves with an arbitrary heading. Assuming the fluid is ideal and the fluid motion is irrotational, potential flow theory can be applied to describe the waves. The total spatial velocity potential is 
decomposed:

where $\varphi_0$ is the incident wave potential, $\varphi_7$ is the diffraction potential of the fixed ship, $\omega_e$ is the encounter frequency, and $\varphi_j$ is the normalized velocity potential due to radiated motions in six degrees of freedom, weighted by the complex amplitude of the $j$-th degree of freedom. $\varphi_0$ is given by:

where $A$ is the amplitude of the incident wave, $\omega_0$ is the circular frequency, $k$ is the wave number, $H$ is the water depth, and $\beta$ is the incident wave angle. For the diffraction and radiation wave potentials, the boundary value problem to be solved is:

where $U_0$ is the ship speed and $m_j$ is the m-term, which represents the interaction between the steady forward motion and the periodic oscillatory motion of the ship. By assuming that the steady potential is small, a simplified m-term can be written as $(m_1, m_2, m_3) = (0, 0, 0)$ and $(m_4, m_5, m_6) = (0, U_0 n_3, -U_0 n_2)$.

To solve the boundary value problem, the mixed source and dipole distribution model [15] and the 3D translating and pulsating source Green's function (3DTP) are used. The boundary integral equation can be written as:

where $\alpha$ is the solid angle at the field point, $P(x, y, z)$ is the field point, $Q(\xi, \eta, \zeta)$ is the source point, $S_B$ is the average wetted surface of the ship hull, and $wl$ is the waterline. The Green's function is expressed [16-17] by:

For the treatment of the integration over the panel $\Delta S_j$, a more effective integration over horizontal line segments is used, as shown in Fig.1. The panel integration of $G^*(P, Q)$ and its derivatives is converted into line integration:

Fig.1 Discretization of a panel

where $M$ is the number of horizontal line segments and $\alpha_j$ is the weight coefficient of the $j$-th segment, which is decided by the integration method used in the vertical direction. The line-segment integral and its derivatives are expressed analytically in Ref.[16]. Fig.2 shows the hull lines and panel grids of KVLCC.

Fig.2 Hull lines and panel grids of KVLCC

The radiation and diffraction potentials can be obtained by carrying out calculations with the boundary element method (BEM). With $\varphi_j$ known, hydrodynamic coefficients such as added mass $\mu_{jk}$ and damping $\lambda_{jk}$ can be 
obtained. The wave forces, comprising the incident force and the diffraction force, are calculated separately using the respective potentials φ0 and φ7. The corresponding equation of motion can be given as [equation missing in source], where mkj is the inertia matrix and Cjk are the hydrostatic restoring terms.

1.2 Added resistance due to radiation

By the principle of energy conservation, the radiation energy delivered by the ship over one encounter period should equal the work done by the mean added resistance acting on the hull. The radiation energy method for 3D BEM can be derived by reference to the one based on the strip method. This added resistance can be expressed as a function of the hydrodynamic coefficients [equation missing in source]. When the speed is zero, Ajk = Akj, and the first term in the parentheses should be taken as zero. Then [equation missing in source], where XkR and XkI are the real and imaginary parts of the complex amplitude of ship motion in the k-th degree of freedom. The above equation is consistent with the Joosen method[5] for a ship at zero speed.

1.3 Added resistance due to diffraction

For ships it is assumed that the disturbance potential is small compared to the incident potential (φB ≪ φ0), and therefore the second-order steady-state force derived from Salvesen[7] can be expressed by [equation missing in source], where β is the wave angle, [symbol missing in source] is the complex conjugate of φ0, and φB is the body disturbance potential. When the interaction between the radiation and diffraction potentials is ignored, the added resistance due to diffraction can be given as [equation missing in source].

2 STAWAVE2 method

2.1 Estimation for added resistance due to radiation

The STAWAVE2 method is based on the Jinkine method[9]. An approximate formula for the non-dimensional coefficient of added resistance due to radiation is [equation missing in source], and the added resistance is then [equation missing in source], where a1 = 60.3 Cb^1.34 and a2 = Fn^1.5 exp(-3.5 Fn) account for the effects of speed and principal dimensions on the peak value of the radiation resistance, and b1 and d1 govern the slope of the added resistance curve. Lpp, B, kyy and ζ are the ship length, breadth, radius of gyration in the lateral direction and wave amplitude, respectively.
The non-dimensional frequency is proportional to ω/1.17 [full expression missing in source]; when it equals 1, the added resistance reaches its peak value. [Expressions for b1 and d1 missing in source.] Cb is the block coefficient. Practical calculations confirm that the peak of added resistance shifts towards longer waves as the speed increases.

2.2 Estimation for added resistance due to diffraction

A semi-empirical formula based on the Takahashi method[19-20] is adopted for calculating the diffraction added resistance. The non-dimensional coefficient is [equation missing in source], and the added resistance due to diffraction is [equation missing in source], where [term missing in source] accounts for the effects of speed, draught and hull form characteristics. I1 is the modified Bessel function of the first kind of order 1, K1 is the modified Bessel function of the second kind of order 1, and TM is the mean draught. Fig.3 shows the effects of draft and wavelength on the added resistance due to diffraction. Ref.[12] covers bulk carriers and container ships, for which the validation data fall in the range α1(keTM) ~ α1(1.5keTM). Ref.[20] reports a different range of α1(0.74keTM) ~ α1(1.0keTM) for tankers, and a range of α1(0.56keTM) ~ α1(1.0keTM) for container ships, where the radiation added resistance predominates.

Fig.3 Comparison of the effects of draft and wave length

3 Comparisons of theory and empirical formulas

The different methods are validated against two tankers: KVLCC2, a standard ship model for CFD validation, and a VLCC that has been built. The accuracy and applicable range are analyzed by comparing tank test data with computed results. The main dimensions are presented in Tab.1.

Tab.1 Main dimension of ships

3.1 Added resistance analysis

The heave and pitch motions computed by the 3D frequency-domain method are shown in Fig.4(a) and (b). The motion responses are in good agreement with the experimental data.

Fig.4 RAO

The added resistance results for KVLCC2 at Fn = 0.142 are shown in Fig.5; the experimental data are from NTUA and INSEAN. Based on the theoretical and numerical calculations and their comparison with the test data, it is concluded that:

(1) As shown in Fig.5(a), the theoretical results for Raw in the range λ/Lpp > 0.7 and in the resonance frequency range are in good agreement with the experimental data, but the added resistance in short waves is inaccurate. The empirical formulas give a reasonably accurate prediction of Raw in the range λ/Lpp < 0.5, but do not give a reasonable estimate in the resonance frequency range.

(2) As shown in Fig.5(b), the maximum resistance obtained by the STAWAVE2 method lies in the lower frequency range. The added resistances in the range λ/Lpp < 0.5 from both theory and STAWAVE2 are 25%-30% lower than the experimental data.

The advantage of the 3D translating and pulsating source method is that the free surface condition is satisfied automatically and the discretization error can be reduced. However, viscous and nonlinear effects are significant and are not captured by potential flow theory, especially for full hull forms; moreover, the major component at short wavelengths is the diffraction resistance. Although the panel density is increased to improve the
numerical accuracy, it is very difficult to obtain accurate predictions in the short wavelength range. For the VLCC, the relative wavelength is short under actual sea trial and service conditions. The foregoing results show that the theoretical method has limitations and the empirical method is crude for predicting the added resistance in short waves. The direct way to improve accuracy is to include more parameters in the formulas so that they represent the hull form better. An alternative is to adjust the parameters of the formulas so that they apply to geosim ships.

Fig.5 Added resistance at different speeds

3.2 Different component analysis

Fig.6 shows the two components of the added resistance obtained with the two methods. The peak values of the ordinate occur at λ/Lpp = 0.5 and λ/Lpp = 1.0 for the theoretical and empirical methods, respectively. The added resistance due to diffraction is the main component, so the main reason for underestimating the added resistance in short waves is that the predicted diffraction resistance is lower than the experimental values. The maximum radiation resistance from the empirical method occurs at a lower frequency than that indicated by theory. The peak value is influenced by the radiation resistance. In fact, the uncertainty in added resistance measurements is large[14], especially for low-speed ships, so it is difficult to obtain accurate results in the short wave range. For practical predictions, an adequate margin is necessary. Other numerical methods such as CFD are influenced by grid density and are limited in their ability to predict short-wave resistance. It is technically feasible to improve the existing methods based on the analysis of theoretical and semi-empirical methods and tank test data.

Fig.6 Components of added resistance

3.3 Revised STAWAVE2 method

An improved expression for the radiation resistance can be found to improve prediction accuracy, based on a comparative analysis of
calculated results and test data. The formula is given as [equation missing in source]. The coefficients b1 and d1 in Eq.(12) can be adjusted to agree better with the test data, like the correction of Rob[14], but the databases of different institutes may differ. The equation fitted to the calculated results can be expressed by [equation missing in source]. Eq.(14) is of limited use for predicting modern VLCCs because it applies to blunt bow shapes at low speed and relies on the assumption of a vertical plane barrier. A bluntness coefficient reflecting the waterline shape can be used to improve prediction accuracy, but it is not easy to calculate in practice, and actual ship data fluctuate between ship types. The following equation is obtained from a number of numerical tests: [equation missing in source]. As expected, the added resistance is in good agreement with the experimental data over a wide range of wavelengths, and the maximum added resistance occurs at reasonable wavelengths for both ships. The added resistance of the STA2 correction method is shown in Fig.7.
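
As a point of reference for the adjustable coefficients b1 and d1, the following Python sketch evaluates the unrevised STAWAVE2 radiation term. The equations of this paper were not preserved, so the sketch follows the formula as published in ISO 15016:2015 and the ITTC speed/power trial procedure, to the best of our reading: the values b1 = 11.0 or -8.5, d1 = 14.0 or -566 (Lpp/B)^-2.66, the exponent 0.143 in the frequency scaling, the dimensional factor 4ρgζ²B²/Lpp, and the treatment of kyy as a fraction of Lpp are assumptions taken from that standard and should be checked against it. All function and parameter names are illustrative, and the revised coefficients fitted in this paper are not reproduced.

```python
import math

G = 9.81      # gravitational acceleration, m/s^2
RHO = 1025.0  # sea-water density, kg/m^3 (assumed value)


def omega_bar(omega, lpp, kyy, fn):
    """Non-dimensional frequency of the STAWAVE2 radiation term.

    omega: circular wave frequency (rad/s); lpp: ship length (m);
    kyy: radius of gyration as a fraction of lpp (assumption);
    fn: Froude number.
    """
    return math.sqrt(lpp / G) * kyy ** (1.0 / 3.0) * fn ** 0.143 * omega / 1.17


def raw_coefficient(omega, lpp, b, cb, kyy, fn, b1=None, d1=None):
    """Non-dimensional radiation added-resistance coefficient.

    b1 and d1 default to the values of the published standard (assumed);
    pass them explicitly to try adjusted values.
    """
    wb = omega_bar(omega, lpp, kyy, fn)
    if b1 is None:
        b1 = 11.0 if wb < 1.0 else -8.5
    if d1 is None:
        d1 = 14.0 if wb < 1.0 else -566.0 * (lpp / b) ** -2.66
    a1 = 60.3 * cb ** 1.34                 # principal-dimension factor
    a2 = fn ** 1.5 * math.exp(-3.5 * fn)   # speed factor
    return a1 * a2 * wb ** b1 * math.exp(b1 / d1 * (1.0 - wb ** d1))


def radiation_added_resistance(omega, zeta, lpp, b, cb, kyy, fn, **coeffs):
    """Dimensional radiation added resistance (N) for wave amplitude zeta (m)."""
    scale = 4.0 * RHO * G * zeta ** 2 * b ** 2 / lpp
    return scale * raw_coefficient(omega, lpp, b, cb, kyy, fn, **coeffs)
```

In this form the coefficient equals a1·a2 when the non-dimensional frequency is 1 and falls off on both sides, with b1 and d1 controlling how steeply. Passing `b1=` or `d1=` overrides the default values, which is the kind of adjustment described in this section.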
Fig.7 Added resistance of STA2 correction method

In order to validate the applicability to the draught effect, the VLCC in the ballast condition (T = 10 m) is chosen. Ship owners often need the performance of a VLCC sailing in ballast. For Fr = 0.144, the results of the theoretical and corrected STAWAVE2 methods are shown in Fig.8. The present corrected results agree well with the experimental values. It is stressed that the present empirical method is limited to geosim ships of VLCC type.

Fig.8 Added resistance of VLCC at ballast draft

4 Conclusions

Hydrodynamic coefficients and wave forces are solved by a 3-D panel method based on the horizontal line segment translating and pulsating source panel. The added resistance due to radiation is obtained by the 3D radiation energy method for ships, while that due to diffraction is obtained by the second-order steady-state force formula. The STAWAVE2 method recommended by ISO15016-2015 is adopted as the semi-empirical method. Improved formulas are presented based on an analysis of the calculated results. The conclusions are summarized as follows:

(1) Theoretical results indicate that the maximum added resistance agrees with experimental data, but the added resistance in the relatively short wave range is underestimated. The numerical method based on line integration shows higher efficiency than traditional BEM. In this paper, the hull is divided into 1 300 discrete elements, and cases are computed on a computer with an Intel Core i5 (3.2 GHz). Each frequency calculation takes about 3.9 minutes.

(2) The differences between the STAWAVE2 results and the experimental data are large. Improved formulas are presented, and the results based on them demonstrate that good agreement can be achieved for VLCCs. They can be used to build up a database for seakeeping performance.

(3) The present theoretical method predicts the added resistance with good accuracy and high efficiency in the range of medium and long waves for
variable speeds and drafts. A notable discrepancy between theory and experiment was found in the short wave range.

References

[1] IMO MEPC 68/INF.14 2015 Ships and marine technology-Guidelines for the assessment of speed and power performance by analysis of speed trial data[S]. 2015.
[2] ITTC 7.5-04-01-01.2 ITTC-Recommended procedures and guidelines analysis of speed/power trial data[R]. 2014.
[3] ITTC Specialist committee on performance of ships in service-final report and recommendations to 27th ITTC[C]. Proceedings of 27th ITTC-Volume III, 2014.
[4] Maruo H. The excess resistance of a ship in rough seas[J]. International Shipbuilding Progress, 1957, 4(35): 337-345.
[5] Joosen W P A. Added resistance in waves[C]//Proceedings of the 6th Symposium on Naval Hydrodynamics. Washington, USA, 1966.
[6] Gerritsma J, Beukelman W. Analysis of the resistance increase in waves of a fast cargo ship[J]. International Shipbuilding Progress, 1972, 19(217): 285-293.
[7] Salvesen N. Added resistance of ships in waves[J]. Journal of Hydronautics, 1978, 12(1): 24-34.
[8] Strøm-Tejsen J, Yeh H Y H, Moran D D. Added resistance in waves[J]. Transactions of the Society of Naval Architects and Marine Engineers, 1973, 81: 109-143.
[9] Jinkine V, Ferdinande V. A method for predicting the added resistance of fast cargo ships in head waves[R]. Institute of Office of Naval Architecture, Belgium, 1973: 1-40.
[10] Havelock T H. The pressure of water waves upon a fixed obstacle[C]. Proceeding of Royal Society, A., 1940, 175: 400-421.
[11] Fujii H, Takahashi T. Experimental study on the resistance increase of a ship in regular oblique waves[C]. Proceeding of the 14th ITTC, 1975: 351-360.
[12] Kuroda M, Tsujimoto M, Fujiwara T. Investigation on components of added resistance in short waves[J]. Journal of the Japan Society of Naval Architects and Ocean Engineers, 2008: 171-176.
[13] Kuroda M, Ikeda T, Naito S. Effect of a ship hull form on added resistance in waves[C]//Proceedings of The 4th Asia-Pacific Workshop on Marine Hydrodynamics. Taipei, Taiwan, China, 2008: 1-7.
[14] Rob G. On the prediction of wave-added resistance with empirical methods[J]. Journal of Ship Production and Design, 2015, 31(3): 181-191.
[15] 刘应中, 缪国平. 船舶在波浪上的运动理论[M]. 上海: 上海交通大学出版社, 1986. Liu Yingzhong, Miao Guoping. Theory of ship motion in waves[M]. Shanghai: Shanghai Jiao Tong University Press, 1986.
[16] Liang H, Renchuan Z, Guoping M, et al. An investigation into added resistance of vessels advancing in waves[J]. Ocean Engineering, 2016: 238-248.
[17] 洪亮, 朱仁传, 缪国平, 等. 三维频域有航速格林函数的数值计算与分析[J]. 水动力学研究与进展, 2013, 4: 423-430. Hong Liang, Zhu Renchuan, Miao Guoping, et al. Numerical calculation and analysis of 3-D Green's function with forward speed in frequency domain[J]. Chinese Journal of Hydrodynamic, 2013, 4: 423-430.
[18] 黄庆立, 朱仁传, 缪国平, 等. Kelvin源格林函数及其在水平线段上的积分计算[C]//第二十七届全国水动力学研讨会, 南京, 2015. Huang Qingli, Zhu Renchuan, Miao Guoping, et al. Calculation of horizontal line segments Kelvin Green's function and integration over panels[C]//Proceedings of the 27th National Hydrodynamics. Nanjing, China, 2015.
[19] Takahashi T. A practical prediction method of added resistance of a ship in waves and the direction of its application to hull form design[J]. Transactions of West Japan Society Naval Architecture, 1988, 75: 75-95.
[20] Fujii H, Takahashi T. Experimental study on the resistance increase of a ship in regular oblique waves[C]. Proceeding of 14th ITTC, 1975: 351-360.

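Brand Cognition and Consumer Loyalty: Literature Review and References

Literature review

(1) Components of brand cognition

Brand cognition involves many factors, and scholars both in China and abroad have made considerable progress in this research area.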

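Keller (1993) focused mainly on brand awareness and brand image, using the associative network memory model from psychology to analyze the dimensions of brand cognition systematically.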