Performance Evaluation of Software Architectures (1998)

- 格式:pdf

- 大小:102.96 KB

- 文档页数:24

Architectural UnificationRalph Melton and David Garlan*School of Computer ScienceCarnegie Mellon UniversityPittsburgh PA 15213{ralph,garlan}@Phone: (412) 268-7673Fax: (412) 268-557631 January 1997Submitted For Publication, January 1997AbstractMany software designs are produced by combining and elaborating existing architectural design frag-ments. These fragments may be design patterns, partially thought-out ideas, or portions of some previ-ously-developed system design. To provide mechanized support for this activity it is necessary to have aprecise characterization of when and how two or more architectural fragments can be combined. In thispaper we describe extensions to notations for software architecture to represent incomplete design frag-ments, and algorithms for combining fragments in a process analogous to unification in logic.1. IntroductionSoftware architecture is increasingly recognized as an important level of design for software systems. At this design level systems are usually represented as a set of coarse-grained interacting components such as databases, clients, servers, filters, blackboards [GP95, GS93, PW92]. Architectural design describes complex systems at a sufficiently high level of abstraction that their conceptual integrity and other key system properties can be clearly understood early in the design cycle.One of the central benefits of architectural design is support for design reuse. Many systems are built by combining and elaborating existing architectural design fragments [BJ94, BMR+96]. These fragments may be design patterns, partially thought-out ideas, or portions of some previously-developed system design.Currently the practice of reusing architectural fragments has a weak engineering basis. Architectural design frag-ments are usually represented informally and the principles by which partial designs are combined are informal and ad hoc. In particular, given an architectural design fragment it is typically not clear either (a) what aspects of that fragment must be filled in to complete the design, or (b) how to determine whether a given context can satisfy its requirements.USAF, and the Advanced Research Projects Agency (ARPA) under grants F33615-93-1-1330 and N66001-95-C-8623; and by National Science Foundation Grant CCR-9357792. Views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of Wright Laboratory, the US Department of Defense, the United States Government, or the National Science Foundation. The US Government is authorized to reproduce and distribute reprints for Government purposes, notwithstanding any copyright notation thereon.Recently, considerable progress has been made in developing ways to represent software architectures. A number of architectural description languages have been developed, together with tools for manipulating those descriptions, for-mally analyzing them, and storing reusable architectural elements [GAO94, MORT96, LAK+95, MQR95, SDK+95, AG94].While the ability to formally represent architectural designs is a necessary first step, notations and tools for architec-tural representation do not by themselves solve the problems of combining architectural design fragments. First, these notations usually lack any means for characterizing “partiality” in architectural design—that is, explicit identification of missing parts and context requirement. Second, they do not provide effective criteria or mechanisms for combining several fragments into a single coherent design.In this paper we attempt to remedy the situation. First, building on standard representational schemes for software architecture, we show how partial designs can be characterized using architectural placeholders. Second, building on theoretical underpinnings from unification in logic, we provide an algorithm for combining multiple such fragments. The underlying ideas behind our approach are straightforward: Placeholders in an architectural design serve as vari-ables in an architectural expression. Legal combination of several such “expressions” is determined by a form of uni-fication that matches variables to instances. However, as we will show, the domain of architectural representations raises a number of challenges that make the naive application of the traditional unification algorithm difficult (if not impossible). We then show how those challenges can be met by extending the algorithm in several significant ways.2. Related WorkThree areas of research are closely related to this work: software architecture, reuse, and logic.2.1. Software ArchitectureWithin the emerging field of software architecture, two related subareas are architectural description languages (ADLs) and architecture-based reuse.Architecture Description LanguagesA large number of ADLs have been proposed (at last count over a dozen). While these languages differ from each other in many significant ways, virtually all share a common view that a software system’s architecture describes the system’s structure as a hierarchical configuration of interacting components. Beyond this, different ADLs typically add flesh to the structural skeleton by allowing additional semantic information that characterizes such things as abstract behavior, implementation choices, and extra-functional behavior (performance, space requirements, etc.) Our work builds on architecture description languages by adopting their common structural basis (detailed in Section 4). Auxiliary information is handled in our framework by viewing that information as residing as attributes of the architectural structure. (The same approach is taken by a number of other languages, including, Aesop, and UniCon.) In this way our results are largely ADL-neutral: to the extent that an existing ADL adopts our generic structural basis for architectural representation, our results will apply. However, as we will see our work also extends the usual archi-tectural representation schemes, by introducing an explicit notion of a placeholder and mechanisms for filling those placeholders.Architecture-based ReuseResearch in software architecture has focused primarily on two aspects of reuse.•Code: A number of systems (such as GenV oca [BO92] and UniCon [SDK+95] provide high-level compil-ers for certain classes of software architecture. These support reuse of implementations, primarily byexploiting shared implementation structures for some class or domain of systems. However, unlike our work they are less concerned with general mechanisms for reuse of architectures themselves.•Style: Some systems (such as Aesop [GAO94] and Wright [AG94]) support the definition of families of architecture that adopt a common set of design constraints - sometimes called an architecture “style”. For systems within such a family it is often possible to provide (and thereby reuse) specialized analysis tools, code generators, and design guidance. Styles typically prescribe general rules for architecture (such as a specialized design vocabulary and constraints on how the elements are composed). This differs from the work described here, which focuses on reuse of architectural fragments.2.2. ReuseArchitecture aside, the general area of reuse has been an active research area for many years. Historically most of that work has focused on code-level reuse, with an emphasis on representation, retrieval, and adaptation of implementa-tions.More recently, work on design patterns has raised the level of concern to design reuse. In particular, design patterns have been extremely successful in capturing idioms of reuse that are intended to solve specific problems and that can be codified and packaged in handbooks [Bus93,GHJV95].Our work is closely related to design patterns. However, there are two significant differences. First, we focus more narrowly on architectural patterns, a subset of the more general body of research on patterns. This more specialized focus allows us to exploit specialized representational schemes for architecture, and to consider carefully the specific needs for architectural design reuse. Second, the main thrust of the design patterns work has been on packaging of good intuitions and ideas about problem solving. Our work complements this by looking at formal representation schemes and algorithms for deciding whether the use of a pattern is valid in a given context.2.3. UnificationUnification in logic has been an area of formal study for many years [Kni89]. In this paper we build on those results, extending and adapting them where necessary to fit the problems at hand. Our research is not intended to make novel contributions to this area, but rather to adapt what already exists to the domain software architecture.3. ExampleTo illustrate the issues of representing and combining architectural design fragments, we use an example from a recent development project which constructed a specialized software development environment. The system was to provide persistent storage for programs that could be viewed and manipulated through a graphical user interface. Theisfy the joint requirements of the two fragments. In particular, it must support the interfaces for interaction with the Object Manager, the Reuse Repository and an Error Handler.Several issues are worth noting about the way in which we (informally) designed and combined the fragments. First, neither architectural fragment can be viewed as a single encapsulated entity: each has substructure that must be exposed. This suggests that traditional techniques (e.g., using parameterization) for composing encapsulated objects do not apply here.Second, design fragments specify not only parts of a design that they are providing, but also parts of the design they are expecting. In this example the Reuse Repository fragment provides the Repository design and the protocol for interaction with it. But it requires a User Interface to complete the fragment. Similarly, the Object Manager fragment provides the Object Manager and its interaction with the User Interface, but requires a User Interface.Third, it is not enough to simply name the parts expected to be filled in by the surrounding context (such as the User Interface in Figure 1a). In addition it is also important to say something about the structure and properties of those missing parts. Even in this simple example, the Repository fragment indicated that the User Interface must have an error handling interface, and an interface that could communicate using the Exporting and Importing protocols required by the Repository.Fourth, the question of which parts of the fragments should be combined (or identified) in the final design is not an obvious one to answer. Can a single user interface satisfy the needs of both designs? If so, should the combined User Interface have one or two Error Handler interfaces? Why is it that the two User Interfaces are combined, but the Object Manager isn’t identified with the Reuse Repository? While the answers to these and similar questions may be intuitive at this informal level of exposition, formal criteria for making such decisions are clearly lacking. In the remainder of this paper we show how a simple representational scheme, together with an algorithm for composition of design fragments can address the issues in a precise way.4. Representing Software ArchitectureTo talk about architectural designs and fragments at all, we first need some way to represent them. W e will take as our starting point the structuring concepts more or less uniformly adopted by the software architecture research commu-nity. Specifically, in software architecture, designs are typically described in terms of the following generic vocabu-lary of architectural design elements [GP95]:•A component is a locus of computation or a data store. (Examples include databases, filters, abstract data types, etc.)•A connector is an interaction between two or more components. (Examples include procedure call, event broadcast, pipes, and interactions involving more complex protocols such as a client-server protocol.)•A port is an interface to a component. A component may have multiple ports. (For example, a filter might have data-in and a data-out ports.)•A role is an interface to a connector. (For example, a pipe might have reader and writer roles.)•A configuration is a set of components and connectors along with a set of attachments between ports of the components and roles of the connectors.Architectural descriptions may be hierarchical. This is accomplished by allowing any component (or connector) to have an associated architectural representation, which describes its internal structure or provides an implementation in some programming language.In addition to this simple topological structure, we will assume that each of the architectural elements can have a set of associated properties attributes(or simply attributes). Properties describe such things as the protocol of a connec-tor, the functional behavior of a component, the data type accepted by a port, or the maximum throughput of a filter.For instance, in the UI–Repository fragment of Figure 1a, the User Interface is a placeholder representing functional-ity that needs to be implemented. The real element that eventually fills that place in the final design must have the ports that are specified on the placeholder: a port that receives architectural descriptions that is attached to a role on the ‘Importing Objects’ connector, a port that sends architectural descriptions that it attached to a role on the ‘Export-ing Objects’ connector, and a port for handling errors.Note that this scheme permits the description of arbitrary complex structures that are required by a fragment for its completion. For instance, it would be easy to characterize a fragment for which a particular real architectural compo-nent C must be connected to a component D, which must in turn be connected to a component E in the final design. Here D and E are placeholder elements. The amount of detail associated with them will determine how tightly they constrain the parts that are eventually substituted for them.It would be possible to start from a single design fragment and simply elaborate it, adding detail to the placeholders as needed. However, more likely several fragments are designed, each indicating some placeholders (and requirements on them). The key question then becomes how can we “merge” such fragments into a single design?Intuitively, to combine two design fragments, we must replace some or all of the placeholders in one fragment with elements from the other fragment. More specifically, we must be able to do two things:•decide whether a real element matches a placeholder, to ensure that any properties that hold of a place-holder still hold when a real element is inserted in its place.•combine two placeholders to get a third, more detailed placeholder.In the next sections, we describe how to adapt the idea of unification from logic to accomplish this.6. Unification in LogicThe problem of merging the parts from two architectural design fragments is essentially one of resolving multiple, possibly incomplete descriptions of elements, to find new elements that satisfy both descriptions -- or report that no common element can be found.Unification is a technique from the first-order predicate logic that does just that for logical terms.In the terminology of logic, a term is a constant, a variable, or a function applied to other terms. Examples of terms are ‘3’, ‘x’, and ‘f(x,3)’. A substitution is a function from terms to terms that replaces variables with other terms. For example, if the substitution {x -> g(y)} is applied to the term ‘f(x,3)’, the result is ‘f(g(y), 3)’.Unification works by finding a substitution of expressions for free variables that can be applied to both expressions to make them identical. Because of the nature of the substitution, any logical property of the expressions prior to the substitution still holds after the substitution is globally applied. If no substitution can be found, the unification algo-rithm determines that there is no possible match between the two expressions.For example, given ‘f(x) = g(x)’ and ‘g(3) = 4’ to show ‘f(3) = 4’, one would apply an appropriate substitution to make the expressions ‘g(x)’ and ‘g(3)’ identical. Unifying ‘g(x)’ and ‘g(4)’ returns the substitution {x -> 3}. This substitution can then be applied to both axioms, yielding ‘f(3) = g(3)’ and ‘g(3) = 4’. Transitivity of equality can then be used to obtain the desired result.Here is the usual algorithm for unification in the domain of logic.Unify (u1,u2: term;var subst: substitution): booleanlet u1’ =subst (u1); let u2’ =subst(u2)if u1’ =u2’ then return true;if u1’ is a variable v which does not occur in u2’add (v->u2’) to subst;return true;(Do the same for u2’)if u1’ and u2’ are constants that are not equal, return falseIf u1’ is a function application f(x1, x2, ... xn) and u2 is a function application g(y1, y2, ..., ym),if f and g are not the same function with the same number of arguments then return falseelsefor each pair of arguments (x i,y i)if not Unify(x i,y i,subst)return falsereturn trueThe algorithm returns a boolean expressing whether the two terms can be unified, and also returns in a variable , subst, the substitution that unifies them. The term that matches both descriptions is obtained by applying the resulting substitution to either of the original arguments.7. Architectural UnificationUnification solves the following problem: “Given two descriptions x and y, can we find an object z that fits both descriptions?” [Kni89]. As noted above, in the domain of logic these descriptions are expressions that may contain variables. We can apply this idea to software architecture; corresponding to expressions and variables in logic are architectural descriptions and placeholder elements (respectively).A naive approach would be this: let Component(...), Connector(...), Port(...), Role(...), and Configuration(...) be func-tions, each with a fixed number of arguments. (For example, Component(Name, Port1, Port2, Port3, Representation1) would be a function that would take a name, three ports, and a representation, and return a component.) Placeholders would be represented by using variables as arguments of those functions. We could then use the straightforward uni-fication algorithm from logic to combine those structures.This use of unification to combine design elements would have several desirable properties. The algorithm would determine when two placeholders can be combined and provide the resulting combination. It would also determine when a fully-specified fragment (i.e., one without placeholders) can be combined with one that contains placeholders. (Note that as a corollary, the algorithm implies that two fully-specialized fragments are unifiable only if they are iden-tical.)Unfortunately, this naive approach will not work for four reasons. First, fixed-arity, functional terms are inadequate to represent architectural structures with extensible lists of attributes. Second, architectural structures naturally contain sets; these require special attention. Third, architectural structures may contain information that cannot be easily expressed as terms containing variables; it is desirable to be able to combine that information also. Fourth, architec-tural structures may be subject to auxiliary constraints (such as those imposed by an architectural style), and unifica-tion must respect those constraints. In the sections that follow, we extend the simple unification algorithm to handle these issues.7.1. Extensible attribute listsThe first problem with the classical unification algorithm is that fixed-arity terms (i.e., functions that take a fixed number of arguments) are inadequate for representing architectural structures. W e wish to use unification to describe the combination of architectural design elements that may have varying lists of arbitrary attributes. These attributes arise as architectural designs are elaborated: when new properties are asserted or derived they are added to the exist-ing design. For example, a tool might calculate a “throughput” value for a component that had no “throughput”attribute before. This poses a problem for unification of terms in which each function symbol has a fixed arity. If, forand one placeholder object. If an algorithm tries to unify the two placeholders first, it will not be able to unify the two real objects.FindMaximalPairing(set1, set2, mapping)let AllPairs =set1× set2return TestPairs(AllPairs, mapping)testPairs(pairs, mapping)// pairs is a set of pairs of elements to try to unify.if pairs = {} return {}let p be an element of pairs.let u1 be (first p); let u2 be (second p)try Unify(u1, u2, mapping)if unification succeedslet L1 be list( p, testPairs( {(u1’,u2’)∈ pairs |u1’ != u1∧u2’ != u2}, mapping)undo unificationlet L2 be testPairs( pairs - {p}, mapping)if (length(L1) >= length(L2))return L1else return L2else return testPairs( pairs -{p}, mapping}7.3. Feature-Specific UnificationThe third problem is that we would like to use feature-specific information to unify elements that could not otherwise be unified. The naive algorithm fails to unify elements that have the same feature but with different values for that feature. Often, however, we can use knowledge of the semantics of the features to combine such information.In general, there are four possible responses to an attempt to unify two different features:•Allow one unificand to override the other according to some policy;•Attempt to merge the two attributes; the exact nature of the merge would depend on the type of the attribute; if the merge fails, we would fail to unify the objects;•Nondeterministically choose one of the attributes;•Fail.In general, no one of these policies is right all of the time. In fact, the best policy is generally feature-specific. There-fore, we introduce feature-specific unification methods as extensions to the basic algorithm.For example, consider an attribute specification that partially specifies the behavior of a component. Two specifica-tion attributes can be combined to create a specification attribute for a unification that captures both of those specifi-cations. The simplest way to do that combination is through logical conjunction. As another example consider merging two elements that contain the visualization information (for example, describing how an element should be displayed on the screen). One reasonable policy is to place the result in the place formerly occupied by one of the unificands. Again, this is difficult to express as an assignment of values to variables.To handle the feature-specific unification, the default policy is to fail when the values of two features of the same name differ. However, the party that defines the attribute type may also define the mechanism for resolving differ-ences between those attributes in unification. To enable this, we add a hook to Unify_feature_structures; when it encounters different values for an attribute, it calls a routine determined by the name of the attribute and the type of the object on which it is being created to determine how to resolve those differences.7.4. Design RestrictionsThe fourth problem with the standard unification algorithm is that it must be modified to support additional con-straints on the results of unification. This arises because many architectural designs are developed in the context of a set of restrictions on how design elements can be used and modified. For example, in the Aesop system [GAO94] the Pipe-and-Filter style supplies a component of typefilter. A filter is subject to the restriction that it may only have reader ports and writer ports; no other type of port may be added to a filter. If one were to naively attempt to unify a filter with some other element, the algorithm presented thus far would lead to an illegal component.Such “auxiliary” restrictions are themselves partial information about that elements in a fragment. Like other sorts of partial information, the restrictions that apply must be maintained and preserved through unification. That is, the restrictions that apply to either unificand must apply to the result of unification.To handle this issue, we must add a check to the algorithm to make sure that any substitution satisfies the additional constraints. In practice, this capability is generally provided by tools that are knowledgeable about the contextual style and design constraints.8. ImplementationWe have implemented an extension to the Aesop architectural development environment that supports unification as defined above. The Aesop system is a family of architectural development environments that share a common graph-ical interface, a database in which architectural designs are stored, and a repository of reusable design elements.In our implementation of unification, placeholder elements are displayed in a lighter color than normal elements. T o attempt to unify two elements, the user drags one element over another element of the same architectural type, and the system automatically attempts to unify them, or reports that they cannot be unified. Fragments can be stored in the reuse repository [MG96], where they may be retrieved and unified with other fragments or an existing design.The Aesop system also provides by default many of the feature-specific unification hooks that were discussed in Sec-tion 7.3. In particular, it provides built in resolution for element names, ports, roles and representations. Other fea-ture-specific unification hooks can be provided through environment customizations.9. Open IssuesThe application of unification to software architecture raises a number of issues that are ripe for further investigation and future research.9.1. Tools and EnvironmentsWhile having a well-specified algorithm for unifying two design fragments is a necessary starting point, there are many questions about how best to exploit these ideas in the construction of practical system development tools.First is the question of interface. How should design fragments be visually presented? Are placeholders distin-guished, and if so how? How should a user indicate that two fragments are to be unified? (As noted above, in our own Aesop environment we use a drag and drop interface. But there are many other alternatives.)Second, is the question of persistence: should a design fragment persist after it has been unified into some larger design? For example, should it be possible to determine what design fragments were used to create a given design?Should it be the case that the user can later directly modify a design even if it violates some aspects of the design frag-ment? Should an environment provide an “undo-unification” operation?9.2. Other Applications of Architectural UnificationWe have focused on the use of unification for combining existing design fragments. But there are other applications that one might make of the same idea. One is the use of unification for search. Suppose you are attempting to find the part of a design that has a certain architectural form Unification could be used to match a partially filled out architec-tural skeleton against an existing design to locate all occurrences of it.A related application would be to search for problem areas. Frequently it is known that certain kinds of architectural structures are a bad idea. (For example, you might know that there are typically performance bottlenecks whenever three specific types of components are interconnected.) Again a query could be phrased in terms of an architectural fragment that captures the bad design.A third application of unification could be in terms of classification. Since unification determines when one structure specializes another, unification could be used to organize and index repositories of design fragments. That is, frag-ments can be related by the fact that there exist unifications of them.9.3. Algorithm ExtensionsAs presented, unification assumes that the different features of an architectural element are unrelated. In practice this is often not the case. For example certain attribute values may depend on others. It would be useful if the algorithm could be extended to support these dependencies. In the case where the dependencies are known and functional, it is possible to structure the algorithm so that it calculates the non-derived values, and then uses recomputed derived val-ues to complete the unification. More general cases of dependency, however, may require more sophisticated modifi-cations.10. ConclusionBased on the observation that architectural design fragments are often combined into larger architectures we have presented a way to represent incomplete architectural designs and an algorithm for combining two or more such frag-ments. To combine design fragments that may overlap, we represent design fragments as pieces of architecture that may contain placeholder elements. The technique of unification from predicate logic provides an effective way to think about the combination of architectural placeholders with other design elements. However, several enhance-ments to the basic unification algorithm are necessary to use unification in the domain of software architecture. With these enhancements, architectural unification promises to be an effective tool for the use of design fragments in archi-tectural design.References[AG94]Robert Allen and David Garlan. Formalizing architectural connection. In Proceedings of the 16th In-ternational Conference on Software Engineering, pages 71–80, Sorrento, Italy, May 1994.[BJ94]Kent Beck and Ralph Johnson. Patterns generate architectures. In Proceedings of ECOOP’94, 1994. [BMR+96]Frank Buschmann, Regine Meunier, Hans Rohnert, Peter Sommerlad, and Michael Stal.Pattern Ori-ented Software Architecture: A System of Patterns. John Wiley & Sons, 1996.[BO92]Don Batory and Sean O’Malley. The design and implementation of hierarchical software systems with。

Software Development MethodologySoftware ArchitectureLecture 8Lecturer:罗铁坚Email:tjluo@Phone:88256308-----------------------------------------------------------------Class Time:Mon / Wed10:00am–11:40amOffice Hour:Friday Morning 10:00 –12:00Office Place:玉泉路教学园区科研楼东5层511Today’ Topics1.Motivation and Problems2.Software Architecture Foundations3.Architecture Frameworks, Styles ,Patterns4.Architecture PracticeReference1. D. Perry and Wolf, “ Foundations for the study of software architecture”,ACM SIGSOFT Software Engineering Notes, 17:4 (October 1992)2.R. Allen and D. Garlan, “ Formalizing Architecture Connection.”, Proc.Int’l conf. Software Eng. IEEE CS Press. 19943.Philippe B. Krunchten, “ The 4+1 View Model of Architecture”, IEEESoftware, Nov./Dec 19954.David Garlan, etc ,” Architectural Mismatch: Why Reuse is So Hard”, IEEESoftware, Nov./Dec 19955.Jeff Tryee, etc., “Architecture Decisions: Demystifying Architecture”,Mar./Apr. 20056./~perry/work/swa/The Roman Coliseum is NOT Architecture. The Roman Coliseum is the RESULT of Architecture Architecture is the set of descriptive representations that are required in order to create an object.Descriptive representations for describing products•Bills of Material–What the object is made of.•Functional Specs–How the object works.•Drawings–Where the components exist relative to one another.•Operating Instructions–Who is responsible for operation.•Timing Diagrams–When do things occur.•Design Objectives–Why does it work the way it does.Abstraction for the productAbstraction for the peopleArchitecture in software system •Architecture design is concerned with describing its decomposition into computational elements and their interactions.•Model of Software Architecture–Software Architecture ={ Elements, Form, Rationale }Design Tasks at Architecture Level anizing the system as a composition ofcomponents;2.Developing global control structures;3.Selecting protocols for communication,synchronization, and data access;4.Assigning functionality to design elements;5.Physically distributing the components;6.Scaling the system and estimating performance;7.Defining the expected evolutionary paths;8.Selecting among design alternatives.Motivations •An architectural description makes a complex system intellectually tractable by characterizing it at a high level of abstraction.•Exploit recurring organizational patterns( or styles) for reuseHot research areas1.Architecture description2.Formal underpinnings3.Design guidance4.Domain-specific architecture5.Architecture in context6.Role of tools and environmentsExamples •Two compiler architectures of the multi-phase style:–organized sequentially; and–organized as a set of parallel processesconnected by means of a shared internalrepresentation.Architectural elements•processing elements:–lexer, parser, semantor, optimizer, and codegenerator.•data elements:–characters, tokens, phrases,correlatedphrases,annotated phrases, and object code.Data Element RelationshipsOrganized sequentially •ProcessingView ofSequentialCompilerArchitectureOrganized sequentially •Data View ofSequentialCompilerArchitecture.Organized as a set of parallel processes •Partial ProcessView of ParallelProcess, SharedData StructureCompilerArchitectureSome possible views1.Functional/logic view2.Code/module view3.Development/structural view4.Concurrency/process/runtime/thread view5.Physical/deployment/install viewer action/feedback view7.Data view/data modelArchitecture frameworks•4+1•RM-ODP (Reference Model of Open Distributed Processing)•SOMF(Service-Oriented Modeling Framework)•Enterprise architecture–Zachman Framework–DODAF–TOGAF4+1 ViewRM-ODP ViewSOMF ViewZachman Framework ViewDODAF View Department of Defense Architecture FrameworkTOGAF View The Open Group Architecture FrameworkArchitectural styles and patterns1.Blackboard2.Client–server model (2-tier, n-tier, Peer-to-peer, cloudcomputing all use this model)3.Database-centric architecture4.Distributed computing5.Event-driven architecture6.Front end and back end7.Implicit invocation8.Monolithic application9.Peer-to-peer10.Pipes and filtersArchitectural styles and patterns11.Plug-in (computing)12.Representational State Transfer13.Rule evaluation14.Search-oriented architecture15.Service-oriented architecture16.Shared nothing architecture17.Software componentry18.Space based architecture19.Structured20.Three-tier modelA short history of Web Services Web Sites (1992)Comparing REST vs. SOAP/WS-* RESTful Web Services (2006)Is REST being used?WS-* vs. RESTApplication Integration StylesArchitectural PrinciplesRESTful Web Service ExampleWeb Service ExampleProtocol LayeringDealing with HeterogeneityThe distinction from functional design •Architectural Design–the process of defining a collection of hardware and software components and their interfaces to establish the framework for the development of acomputer system.•Detailed Design–the process of refining and expanding the preliminary design of a system or component to the extent that the design is sufficiently complete to beginimplementation.•Functional Design–the process of defining the working relationships among the components of a system.•Preliminary Design–the process of analyzing design alternatives and defining the architecture, components, interfaces, and timing/sizing estimates for a system orcomponents.About the real-world performance•An software depends on only two things:–The algorithms chosen and–The suitability and efficiency of the various layers ofimplementation.Architecture Practice1.The role of Use Cases in the process2.The principles of separation of concerns anddependency management3.How to analyze and design application concerns4.How to analyze and design platform specific concerns5.How to build and manage models6.The iterative nature of building an architecture7.How to describe the architectureArchitecture:Challenges and foundations 1.What role does architecture play in thesoftware development process?2.What are some common problems withconventional approaches to architecture?3.What are some of the key characteristicsof a good architecture?Design The Use Case•Each use case is realized by a collaboration -a set of classes • A class plays different roles in different use case realizations•the total responsibility of a class is the composition of these rolesUse caseSpecification Use case design Component design & implementationReserve Room Check-in Customer Check-Out Customer CustomerScreenReserve RoomRoomReservationStaffScreenCheck inRoomReservationStaff Screen Check Out RoomCustomerScreenReserve RoomReservationRoomCheck OutCheck inStaffScreenTest the use caseReserve Room Check InCheck OutCustomerCounter StaffPayment GatewayTest CasesUse Case ScenariosOk Ok OkReserveAvailable Room ReserveUnavailable Room Etc.Many Test Cases for every Use Case•Use Case Modeling Done!•Design Done!•Basis for the Test SpecificationPlan Testing & Define Test CasesGenerate Test Cases•From Sequence diagrams and •State-Chart diagramsUse Case Driven Development•Use case driven development defines a set of models that allow you to gradually refine requirements until you reach executable code.Design and implementSpecify Use CaseUse case modelupdatesAnalyze Use Caseanalysis modelupdatesUse caseDesign modelupdatesImplementation modelUse Case Driven DevelopmentUseCase ModelAnalysis ModelPlatform •Use case model-External perspective •Analysis model-Internal perspective-Platform independent •Design model-Internal perspective《trace》IndependentStructureDesign ModelMinimal Design Structure Extension DesignStructure-platform specific-extension design kept separate fromminimal design《trace》The “Level Of Detail” Challenge •Many practitioners are concerned about how detailed requirements should be, how detailed design should be•This question arise largely because of waterfall mindset.•Never detailed enough until you produce the system.Solution •The solution is apply iterative development and iterative define the architecture and implement and test the architecture,•Proceed until you get the desired systemAgenda •The “level of detail” challenge •Architecture first approach •Describing and evaluating architecture •Summary and review。

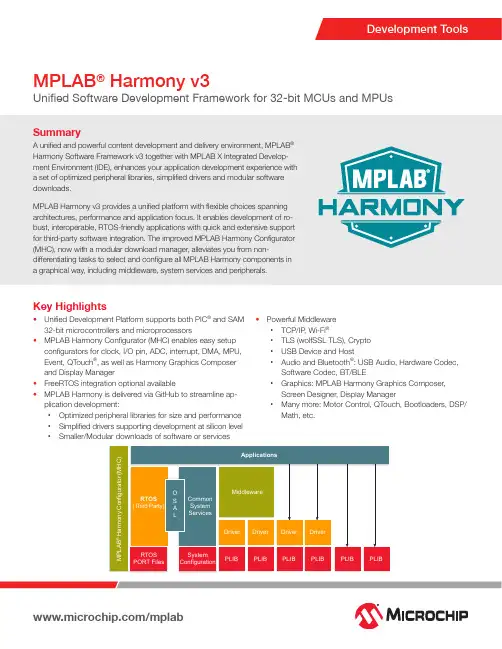

SummaryA unified and powerful content development and delivery environment, MPLAB ® Harmony Software Framework v3 together with MPLAB X Integrated Develop -ment Environment (IDE), enhances your application development experience with a set of optimized peripheral libraries, simplified drivers and modular software downloads.MPLAB Harmony v3 provides a unified platform with flexible choices spanning architectures, performance and application focus. It enables development of ro -bust, interoperable, RTOS-friendly applications with quick and extensive support for third-party software integration. The improved MPLAB Harmony Configurator (MHC), now with a modular download manager, alleviates you from non-differentiating tasks to select and configure all MPLAB Harmony components in a graphical way, including middleware, system services and peripherals.Development ToolsMPLAB ® Harmony v3Unified Software Development Framework for 32-bit MCUs and MPUsC: 100 M: 10 Y: 35 K: 15Key Highlights• Unified Development Platform supports both PIC® and SAM 32-bit microcontrollers and microprocessors•MPLAB Harmony Configurator (MHC) enables easy setup configurators for clock, I/O pin, ADC, interrupt, DMA, MPU, Event, QTouch®, as well as Harmony Graphics Composer and Display Manager• FreeRTOS integration optional available• MPLAB Harmony is delivered via GitHub to streamline ap -plication development:• Optimized peripheral libraries for size and performance • Simplified drivers supporting development at silicon level• Smaller/Modular downloads of software or services•Powerful Middleware• TCP/IP , Wi-Fi ®•TLS (wolfSSL TLS), Crypto • USB Device and Host• Audio and Bluetooth ®: USB Audio, Hardware Codec, Software Codec, BT/BLE• Graphics: MPLAB Harmony Graphics Composer, Screen Designer, Display Manager• Many more: Motor Control, QTouch, Bootloaders, DSP/Math, etc.The Microchip name and logo, the Microchip logo, MPLAB and PIC are registered trademarks of Microchip Technology Incorporated in the U.S.A. and other countries. Arm and Cortex are registered trademarks of Arm Limited (or its subsidiaries) in the EU and other countries. All other trademarks mentioned herein are property of their respective companies. © 2019, Microchip Technology Incorporated. All Rights Reserved. 7/19 DS00003024BTools and Demo ExamplesDevelopment KitsAvailable Resources• MPLAB Harmony v3 Landing Page: https:///mplab/mplab-harmony/mplab-harmony-v3• MPLAB Harmony v3 Device Support: https:///Microchip-MPLAB-Harmony/Micro -chip-MPLAB-Harmony.github.io/wiki/device_support• GitHub MPLAB Harmony v3 WiKi Page: https:///Microchip-MPLAB-Harmony/Microchip-MPLAB-Harmony.github.io/wiki• GitHub MPLAB Harmony v3 User Guide: https://microchip-mplab-harmony.github.io/Complementary Devices• Wireless Connectivity: Wi-Fi, Blue -tooth, BLE, LoRa, IEEE 802.15.4, Sub-G• Wired Connectivity and Interface: CAN Transceivers, Ethernt PHY • Industrial Networking: EtherCAT • CryptoAuthentication Device: ATECC608A, ATSHA204A• Clock and Timing: MEMs Oscillator • Analog: Op Amp, Motor Driver • Power Management: Linear and Switching RegulatorsServices and Third Party• Microchip Training: https:///training/• Third Party Solutions: https:///mplab/mplab-harmony/premium-products/third-party-solutions• ExpressLogic, FreeRTOS, Micrium, Segger and wolfSSL• Integrated Development Environment: IARATSAMC21N-XPRO/ATSAMD21-XPROThe SAMC21N/SAMD21 Xplained Pro Evaluation KitsATSAME54-XPRO/DM320113The SAM E54 Xplained Pro/The SAM E70 Explained Ultra Evaluation KitsDM320007-C/DM320010-CPIC32MZ EF (Connectivity)/PIC32MZ DA (Graphics) Starter KitsDM320104*The Curiosity PIC32MZEF Development Board, including on board Wi-Fi moduleATSAMV71-XULTThe SAM V71 Xplained Ultra evaluation kit is ideal for evaluating and prototyping with the SAM V71, SAM V70, SAM S70 and SAM E70 Arm ® Cortex ®-M7 based microcontrollers.ATSAMA5D2C-XULT*/SAMA5D27-SoM-EK1*The SAMA5D2 Xplained Ultra Evaluation Kit, including eMMC and DDR3/SoM and SiP Evaluation Kit*Harmony support coming soon。

Lawrence RauchwergerDepartment of Computer Science phone:(979)845-8872 Texas A&M University fax:(979)458-0718 College Station,TX77843-3112email:rwerger@ USA url:/∼rwergerEducationPh.D.in Computer Science,University of Illinois at Urbana-Champaign,1995.Ph.D.Thesis:Run-Time Parallelization:A Framework for Parallel Computation.Thesis advisor:David Padua.M.S.in Electrical Engineering,Stanford University,1987.M.S.Research Area:Manufacturing Science and Technology for VLSI(Equipment Modeling).Engineer in Electronics and Telecommunications,Polytechnic Institute,Department of Electronic Engineering,1980,Bucharest,Romania.Diploma Project:Design and Implementation of an Alphanumeric and Graphic Display. Research InterestsCompilers for parallel and distributed computingParallel and distributed C++libraries.Adaptive runtime optimizations.Architectures for parallel computing.AwardsNSF Faculty Early Career Development(CAREER)Award,1998–2002.Elected Member,International Federation of Information Processing(IFIP),WG3.10,2003.TEES Fellow,College of Engineering,Texas A&M University2002-2003.TEES Fellow,College of Engineering,Texas A&M University2005-2006.TEES Select Young Faculty Award,College of Engineering,Texas A&M University2000-2001(awarded to two junior faculty each year by the College of Engineering).Best student paper award,co-authored with Silvius Rus,Int.Conference on Supercomputing,New York, NY,2002.Best student paper award,co-authored with Steve Saunders,Workshop on Performance Optimization for High-level Languages and Libraries,New York,NY,June2002.Intel Foundation Graduate Fellowship,1994.NASA(Langley)High Performance Computing Consortium(HPCC)Graduate Fellowship,1994. ExperienceProfessor,Department of Computer Science,Texas A&M University.(9/06–present)Co-Director,PARASOL Laboratory(3/98–present)Texas A&M University High Performance Computing Steering Committee.(1999–present)Visiting Professor,INRIA Futurs,Orsay,France.(9/04–11/04,7/06–8/06)IBM T.J.Watson Research Center,Yorktown Heights,NY.(10/03–8/04)Associate Professor,Department of Computer Science,Texas A&M University.(9/01–8/06)Assistant Professor,Department of Computer Science,Texas A&M University.(8/96–08/31/01) Visiting Scientist,AT&T Research Laboratories,Murray Hill,NJ.(6/96-8/96)Visiting Assistant Professor,Center for Supercomputing R&D,University of Illinois.(9/95-5/96) Research Assistant,Center for Supercomputing R&D,University of Illinois.(1/89-5/92,1/94-5/94) Teaching Assistant,Computer Science Department,University of Illinois.(1/93-12/93)Summer Intern,IBM T.J.Watson Research Center,Yorktown Heights,NY.(Summer1992)Research Assistant,Center for Integrated Systems,Stanford University.(1986–1988)R&D Engineer,Varian Associates Inc.,Thin Film Technology Division,R&D,Palo Alto,CA.(1984–1985)Design Engineer,Beckman Instruments Inc.,Scientific Instruments Division,Irvine,CA.(1983-1984) Design Engineer,The Felix Computer Company,R.&D.Department,Bucharest,Romania.(1980–1982)Research Grants“Adaptive Parallel Processing for Dynamic Applications”The National Science Foundation(CAREER Program)(CCR-9734471),PI:L.Rauchwerger,$237,000,(includes$10,000in REU Supplements, 1999,$27,000matching supplement,2000.)June1,1998–May31,2004.“CRS–AES:Collaborative Research:SoftCheck:Compiler and Run-Time Technology for Efficient Fault Detection and Correction in Low nm-Scale Multicore Chips,”The National Science Foundation PIs: Maria Garzaran(UIUC)and L.Rauchwerger$200,000,August2006–July2008.“STAPL:A High Productivity Parallel Programming Infrastructure,”Intel Corporation,PI:L.Rauchwerger,$30,000,November03,2005.“SmartApps:Middle-ware for Adaptive Applications on Reconfigurable Platforms,”The Department of Energy,Office of Science(Operating/Runtime Systems for Extreme Scale Scientific Computation Program),PI:L.Rauchwerger,co-PIs:M.Adams(Nuclear Engineering),N.Amato,B.Stroustrup, $1,500,000September1,2004–August31,2007.“ITR/NGS:STAPL:A Software Infrastructure for Computational Biology and Physics”(ACI-0326350), The National Science Foundation(Medium ITR Program),PI:L.Rauchwerger,co-PIs:N.Amato,B.Stroustrup,M.Adams(Nuclear Engineering),$416,000,November1,2003–October31,2007.“Efficient Massively Parallel Adaptive Algorithm for Time-Dependent Transport on Arbitrary Spatial Grids,”The Department of Energy,PI:M.Adams(Nuclear Engineering),co-PIs:N.Amato,P.Nel-son,L.Rauchwerger,$1,668,827,May1,2002–March31,2006.“Geometry Connectivity and Simulation of Cortical Networks”,Texas Higher Education Coordinating Board,PI:N.Amato co-PI:L.Rauchwerger$240,000January1,2002-August31,2004.“ITR/SY:Smart Apps:An Application Centric Approach to Scientific Computing”,The National Sci-ence Foundation(ACR-0113971),PI:L.Rauchwerger,co-PI:N.Amato,$463,809,September1, 2001–August31,2005.“ITR/AP:A Motion Planning Approach to Protein Folding Simulation”,The National Science Founda-tion(CCR-0113974),PI:N.Amato,co-PI:L.Rauchwerger,$318,000,September1,2001–August31,2005.“SmartApps:Smart Applications for Heterogeneous Computing,”(EIA-9975018),The National Science Foundation(Next Generation Software Program),PI:L.Rauchwerger,co-PI:N.Amato,J.Torrellas(Univ.Illinois at Urbana-Champaign),$610,000October1,1999–August31,2003.“PARASOL:An Adaptive Framework for Parallel Processing”(ACI-9872126),The National Science Foundation,PI:L.Rauchwerger,co-PI:N.Amato,$199,662,January1,1999–December31,2002.“Efficient Massively-Parallel Implementation of Modern Deterministic Transport Calculations”(B347886), Department of Energy(ASCI ASAP Level2and3Programs),PI:M.Adams(Nuclear Engineering),co-PIs:N.Amato,P.Nelson,L.Rauchwerger,$889,000,October21,1998–March31,2002.“Parallel Algorithms for Graph Cycle Detection,”Sandia National Laboratory,PI:L.Rauchwerger,$93,448,September1,1999–January15,2002.“Develop the How-to of a Qualified Compiler and Linker”,Aerospace Vehicle Space Institute(AVSI),PI:L.Rauchwerger,March01,2001–January31,2002.$45,500,Fellowship,Equipment,and Software Grants”CRI:A Cluster Testbed for Experimental Research in High Performance Computing”The National Science Foundation,PI:V.Taylor co-PI:N.Amato,L.Rauchwerger,$537,000,May15,2006–April30,2008.“Workshop NGS:Support for the Workshop on Languages and Compilers for Parallel Computing(LCPC),”The National Science Foundation,PI:Lawrence Rauchwerger,Co-PI:Nancy M.Amato,$15,000,September1,2003–August31,2004.“MRI:Development of Brain Tissue Scanner,”The National Science Foundation,PI:B.McCormick,co-PIs:N.Amato,J.Fallon(UCI),L.Rauchwerger,$105,000+$153,000funds from Texas A&M.September1,2000–July31,2001.“Education/Research Equipment Grant–HP V-class Shared Memory Multiprocessor Upgrade,”Hewlett-Packard,PI:Lawrence Rauchwerger and Nancy Amato,May2000,approximately$580,000.“Research Equipment Grant–16Processor V-class Shared Memory Multiprocessor Server,”Hewlett-Packard Co.,PI:L.Bhuyan,co-PIs:N.Amato,L.Rauchwerger,$1,200,000,1998.“Fellowships in Robotics,Training Science,Mobil Computing and High Performance Computing”(P200A80305), U.S.Department of Education(GAANN Fellowship Program),PI:R.V olz,co-PIs:N.Amato,L.Ev-erett,J.Welch,co-Investigators:L.Rauchwerger,J.Trinkle,N.Vaidya,J.Yen,Texas A&M Univer-sity,$601,224,August15,1998–August14,2001.Professional Service&ActivitiesNSF PanelistSeptember1998,October1998,June1999,November2000,May2002.Editorial Board MemberInt.Journal of Parallel Processing(IJPP),since2003.Guest Editor:Journal of Parallel Computing,Special Issue on Parallel Processing for Irregular Applications,2000.Int.Journal of Parallel Computing,2000,Special Issue on Selected Papers from ICS’99.Int.Journal of Parallel Computing,2002,Special Issue on Selected Papers from IWACT’2001.Program Chair16th Int.Conf.on Parallel Architectures and Compilation Techniques(PACT),2007.16th Workshop on Languages and Compilers for Parallel Computing(LCPC),2003.Program Committee Member:Int.Conf.for High Performance Computing and Communications(SC07),2007.Int.Parallel and Distributed Processing Symp.(IPDPS),2002,2006,2007.Int.W-shop on High-Level Parallel Programming Models and Supportive Environments(HIPS),2007.ACM Int.Conf.on Supercomputing(ICS),2000,2006,2007.Int.Conf.on High-Performance Embedded Architectures and Compilers(HiPEAC),Belgium,2007.Int.Conf.on High Performance Computing(HiPC),India,2000,2003,2007.Int.Conf.on Computer Design(ICCD),2006.Int.Conf.on High Performance Computing and Communications(HPCC),2006.ACM Int.Conf.on Computing Frontiers,Italy,2006.Int.IEEE W-shop on High Performance Computational Biology(HICOMB),2005;Int.Conf.on High Performance Computing and Communications(HPCC-05),Italy,2005;Workshop on Languages and Compilers for Parallel Computing(LCPC),2002,2004,2005;Workshop on Patterns in High Performance Computing,Champaign,IL,2005;ACM SIGPLAN Symp.Principles and Practice of Parallel Programming(PPoPP),2005;High Performance Computer Architecture Conf.(HPCA),2001,2002,2004;Int.Symp.on Parallel Architectures,Algorithms and Networks(I-SPAN),Philippines,2002;ACM Int.Conf.on Supercomputing(ICS’00),Santa Fe,NM,May2000;Int.Conf.on Parallel Processing(ICPP),1999,2000;Int.Conf.on Compiler Construction(CC),1999,2000;Int.Conf.on Parallel Architecture and Compilation Techniques(PACT),1999;First Workshop on Parallel Computing for Irregular Applications,Orlando,FL,January1999;Workshop and Publications Chair:High Performance Computer Architecture Conf.(HPCA-6),Toulouse,France,January2000.Exhibits Chair:ACM Int.Conf.on Supercomputing(ICS’02),New York,NY,June2002.Referee for Scientific Journals and Conferences.Member IEEE,ACM and IFIP WG3.10.Significant University and Departmental Service and ActivitiesTexas A&M University High Performance Computing Steering Committee,1999–present. Senator,Faculty Senate,Texas A&M University,2001–2005.Faculty Search Committee,2002–2003,2004–2005,2006–2007.Department Head Search Committee,2001–2002.Endowed Chair Search Committee,2001–2002.Computer Engineering Committee,2002-2003.Graduate Advisory Committee(GAC),2001–2002,2006–2007.Colloqium Committee.1998–1999,1999–2000,2001–2002(Chair).2002–2003(Chair).Advisory Committee2001-2002.Computer Services Advisory Committee,1997–1998.Graduate Admissions and Awards Committee,2000–2001,2004–2005,2006-2007.Library Committee.1998–1999,1999–2000(Chair).Total Quality Management(TQM).1997–1998.Courses TaughtGraduate:CPSC-605:Advanced Compiler Design(Fall96,97,98,99,00,01,02;Spring05,07)CPSC-614:Computer Architecture(Spring03)CPSC-654:Supercomputing(Spring99,01)CPSC-681:Graduate Seminar(Spring07)CPSC-689:Advanced Topics in Compiler Design(Spring00,02,03)CPSC-689:Special Topics:Runtime Systems for Parallel Computing(Spring06) Undergraduate:CPSC-434:Compiler Design(Spring97,98,99,00,01,02,05,06)CPSC-481:Undegraduate Seminar(Spring07)Student Research SupervisionGraduated PhD StudentsSilvius Rus,Ph.D.December2006,“Hybrid Analysis and its Application to Dynamic Compiler Opti-mization”Current position:Google,Mountain View,CA.Hao Yu,Ph.D.August2004,“Run-time Optimizations of Adaptive Irregular Applications.”Current position:Postdoc,IBM T.J.Watson Research Center,Yorktown Heights,NYYe Zhang,Ph.D.(UIUC)May1999,(co-advised with Prof.Josep Torrellas,University of Illinois),“Hardware for Speculative Run-time Parallelization in Distributed Shared-Memory Multiprocessors”, Current position:Software Engineer,Oracle Corporation,CA.Graduated Masters StudentsDevang Patel,M.S.August1998,“Compiler Integration of Speculative Run-Time Parallelization.”Cur-rent position:Software Engineer,Apple Computer,Sunnyvale,CA.Francisco Arzu,M.S.Summer2000,“STAPL:Standard Templates Adaptive Parallel Library.”Current position:Consultant and partner,,Austin,TXJulio Carvallo De Ochoa,M.S.,Summer2000,“Parallelization of Loops with Recurrence and Unknown Iteration Space”,Current position:Software Engineer,Wayne Industries,Austin,TX.William McLendon III,M.S.,Fall2001,“Parallel Detection and Elimination of Strongly Connected Components for Radiation and Transport Sweeps”,Current position:Software Engineer,Scalable Computing Systems Dept.,Sandia National Laboratory,Albuquerque,NM.Mothi Mohan Ram Thoppae,M.S.(EE),Fall2001,“Hardware and Software Co-techniques for Branch Prediction”,Current position:Component Design Engineer,Intel Corporation,Santa Clara,CA. Timmie Smith,M.C.S.,Summer2002,Current Position:Ph.D.Student,Texas A&M University,Dept.of Computer Science.Steven Saunders,M.S.,Spring2003,“A Parallel Communication Infrastructure for STAPL”,Current Position:Software Engineer,Raytheon,Dallas,TX.Current Graduate StudentsFrancis Dang,research area:Speculative Techniques,SmartApps.Alin Jula,research area:Parallel C++library,parallel dynamic memory management.Marinus Pennings,research area:Parallelizing Compilers,run-time optimization and compilation. Antoniu Pop,research area:Run-time systems,Operating Systems for SmartApps and STAPL. Nageswar Rao,research area:Run-time systems,Operating Systems for SmartApps and STAPL. Ioannis Papadopoulos,research area:Operating Systems for SmartApps and STAPL. Androniki Pazarloglu research area:Compilers.Timmie G.Smith,research area:Parallel C++Library,Parallel Memory Management.Gabriel Tanase,research area:Parallel C++Library,Nathan Thomas,research area:Parallel C++Library.Tao Huang,research area:Parallel C++Library.Undergraduate Research ProjectsWilliam Harris,Purdue University,Speculative Parallelization,Summer06.Aditya Awasthi,IIT,India,Summer06,STAPL.Saransh Mittal,IIT,India,Summer06,STAPL.Carson Brownle,Summer2005,CS REU Program.Visualization tool for graphs.Anna Tikhonova,Summer2005,CRA-W DMP Program,Parallel algorithms in STAPL. Armando Solar,Nuclear radiation transport simulation.01/01–07/03.Current Position:PhD student,UC BerkeleyReshma Ananthakrishnan,Test suite for STAPL,04/01–05/04.Current Position:Microsoft,Redmond,WAAndrew Cox,Software Branch Prediction,9/00-5/01.Current Position:Microsoft,Redmond,WA Steven Saunders,Java Parallel Library,1/00-12/00.Current Position:Raytheon,Dallas,TX Carrie Searcey,Java Parallel Library,5/00-8/00.Current Position:IBM,Raleigh,NCMichael Peter,Hardware for speculative run-time parallelization,1/99-7/99.Current Position:PhD student,TU Dresden,Germany.Francis Dang,Run-time parallelization(CPSC485project),6/98-6/99.Current Position:Supercomputing Center,Texas A&MWilliam McLendon,Performance Monitoring(CPSC485project),6/98-6/99.Current Position:Sandia National Labs,Albuquerque,NM.Timmie Smith,STAPL:Standard Parallel Adaptive C++Library,(CPSC485project)7/99-12/99.Current Position:PhD student.Texas A&M.Invited Presentations(Selected,last5years)“Automatic Parallelization with Hybrid Analysis”,IBM,T.J.Watson,Yorktown Heights,NY,April,2005.HP,Cupertino,CA,Feb.2006SIAM Conf.on Computational Science and Engineering,San Francisco,CA,February,2006.Compiler&Architecture Int.Seminar,IBM Haifa Research Labs,Haifa,Israel,December,2005.Fudan University,Shanghai,China,December,2005.“Efficient Massively Parallel Adaptive Algorithms for Time-Dependent Transport on Arbitrary Grids.”SIAM Conf.on Computational Science and Engineering,San Francisco,CA,February,2006.“SmartApps:Middleware for Adaptive Applications on Reconfigurable Platforms”,FAST-OS PI Meeting/Workshop,Rockville,MD,June,2005.“STAPL:A High Productivity Programming Infrastructure for Parallel&Distributed Computing”,Workshop on Patterns in High Performance Computing,Urbana,IL,May,2005.2005SIAM Conf.on Computational Science and Engineering,Orlando,FL,February,2005.“SmartApps:Adaptive Applications for High Productivity/High Performance”,UPC,Barcelona,Spain,December,2004Technical University,Delft,The Netherlands,November,2004Workshop on Scalable Approaches to High Performance and High Productivity Computing,Bertinoro Int.Center for Informatics(ScalPerf04),Italy,September2004.Universita di Pisa,Italy,November,2004.INRIA,Paris,France,2004.IBM,T.J.Watson,Yorktown Heights,NY,2004.“STAPL:A High Productivity Programming Infrastructure for Parallel and Distributed Computing”, Workshop on Domain Specific Languages for Numerical Optimization Argonne National Laboratory, August18-20,2004.IFIP WG3.10,London,UK,February,2004.“Memory Consistency Issues in STAPL”,DAGSTUHL Seminar:Hardware and Software Consistency Models:Programmability and Perfor-mance,Schloss Dagstuhl,Wadern,Germany,October2003.“Hybrid Analsyis”,DAGSTUHL Seminar:Emerging Technologies:Can Optimization Technology meet their Demands?, Schloss Dagstuhl,Wadern,Germany,February2003.“Compiler-Assisted Software and Hardware Support for Reduction Operations”,NSF Next Generation Software Workshop,Fort Lauderdale,FL,April2002.“STAPL:An Adaptive,Generic Parallel C++Library”,NSF Workshop on Software for the IBM Blue Gene Architecture,IBM T.J.Watson Research Center, April,2002.“Removing Architectural Bottlenecks to the Scalability of Speculative Parallelization”,IBM Research,Haifa,Israel,July2001.“SmartApps:An Application Centric Approach to High Performance Computing”,Workshop for Next Generation Software,San Francisco,CA,April,2001.Publications in Refereed Journals,Conferences and Workshops(Organized by topic;most papers available at /∼rwerger/) Compilers[1]A.Jula and L.Rauchwerger“Custom Memory Allocation for Free:Improving Data Locality withContainer-Centric Memory Allocation,”in Proc.of the19-th Workshop on Languages and Compilers for Parallel Computing(LCPC),New Orleans,Louisiana,Nov2006,to appear.[2]S.Rus,G.He and L.Rauchwerger,“Scalable Array SSA and Array Data Flow Analysis”,in Proc.ofthe18-th Workshop on Languages and Compilers for Parallel Computing(LCPC),Hawthorne,NY, 2005.Lecture Notes in Computer Science(LNCS),Springer-Verlag,to appear.[3]S.Rus,G.He,C.Alias and L.Rauchwerger,“Region Array SSA”,in Proc.of the15-th Int.Conf.on Parallel Architecture and Compilation Techniques(PACT),Seattle,WA,2006.[4]Hao Yu,Lawrence Rauchwerger,“An Adaptive Algorithm Selection Framework for Reduction Par-allelization”,IEEE Transactions on Parallel and Distributed Systems,17(19),2006,pp.1084–1096.[5]H.Yu,D.Zhang and L.Rauchwerger,“An Adaptive Algorithm Selection Framework”,in Proc.ofthe13th Int.Conf.on Parallel Architecture and Compilation Techniques(PACT),Antibes Juan-les-Pins,France,October,2004,pp.278–289.[6]S.Rus,D.Zhang and L.Rauchwerger,“The Value Evolution Graph and its Use in Memory ReferenceAnalysis”,in Proc.of the13th Int.Conf.on Parallel Architecture and Compilation Techniques (PACT),Antibes Juan-les-Pins,France,October,2004,pp.243–254.[7]S.Rus,D.Zhang and L.Rauchwerger,“Automatic Parallelization Using the Value Evolution Graph”,in Proc.of the17-th Workshop on Languages and Compilers for Parallel Computing(LCPC),West Lafayette,IN,2004.Also in Lecture Notes in Computer Science(LNCS),3602,Springer-Verlag, 2005,pp.379–393.[8]S.Rus,D.Zhang and L.Rauchwerger,“The Value Evolution Graph and its Use in Memory ReferenceAnalysis”,in Proc.of the11-th Workshop on Compilers for Parallel Computing(CPC),Seeon Monastery,Chiemsee,Germany,2004,pp.175–186.Invited Paper.[9]S.Rus,L.Rauchwerger and J.Hoeflinger,“Hybrid Analysis:Static&Dynamic Memory ReferenceAnalysis”,in Int.Journal of Parallel Programming,Special Issue,31(4),2003,pp.251–283.[10]H.Yu,F.Dang,and L.Rauchwerger,“Parallel Reductions:An Application of Adaptive AlgorithmSelection”,Proc.of the15-th Workshop on Languages and Compilers for Parallel Computing (LCPC),Washington,DC.,July,2002.Also in Lecture Notes in Computer Science(LNCS),Springer-Verlag,2481,2005,pp.188–202.[11]S.Rus,L.Rauchwerger and J.Hoeflinger,“Hybrid Analysis:Static&Dynamic Memory ReferenceAnalysis”,in Proc.of the ACM16-th Int.Conf.on Supercomputing(ICS02),New York,NY,June 2002,pp.274–284.Best Student Paper Award.[12]F.Dang,H.Yu and L.Rauchwerger,“The R-LRPD Test:Speculative Parallelization of PartiallyParallel Loops”,in Proc.of the Int.Parallel and Distributed Processing Symposium(IPDPS2002), Fort Lauderdale,FL,April2002,pp.20–30.[13]L.Rauchwerger,N.M.Amato and J.Torrellas,“SmartApps:An Application Centric Approach toHigh Performance Computing”,in Proc.of the13th Annual Workshop on Languages and Compilers for Parallel Computing(LCPC),Yorktown Heights,NY,August2000.Also in Lecture Notes in Computer Science,2017,Springer-Verlag,2000,pp.82–96.[14]F.Dang and L.Rauchwerger,“Speculative Parallelization of Partially Parallel Loops”,in Proc.of the5-th Workshop on Languages,Compilers,and Run-time Systems for Scalable Computers(LCR2000), Rochester,NY,May2000.Also in Lecture Notes in Computer Science,1915,2000,pp.285–299.[15]H.Yu and L.Rauchwerger,“Adaptive Reduction Parallelization”,in Proc.of the ACM14-th Int.Conf.on Supercomputing,(ICS’00),Santa Fe,NM,May2000,pp.66–77.[16]H.Yu and L.Rauchwerger,“Techniques for Reducing the Overhead of Run-time Parallelization”,in Proc.of the9th Int.Conf.on Compiler Construction(CC’00),Berlin,Germany,March2000, Lecture Notes in Computer Science,1781,Springer-Verlag,2000,pp.232–248.[17]L.Rauchwerger and D.Padua,“The LRPD Test:Speculative Run–Time Parallelization of Loopswith Privatization and Reduction Parallelization,”IEEE Transactions on Parallel and Distributed Systems,Special Issue on Compilers and Languages for Parallel and Distributed Computers,10(2), 1999,pp.160–180.[18]H.Yu and L.Rauchwerger,“Run-time Parallelization Optimization Techniques”,in Proc.of the12thAnnual Workshop on Languages and Compilers for Parallel Computing(LCPC),August1999,San Diego,CA.Also in Lecture Notes in Computer Science,1863,Springer-Verlag,2000,pp.481–484.[19]D.Patel and L.Rauchwerger,“Implementation Issues of Loop-level Speculative Run-time Paral-lelization”,Proc.of the8th Int.Conf.on Compiler Construction(CC’99),Amsterdam,The Nether-lands,March1999.Lecture Notes in Computer Science,1575,Springer-Verlag,1998,pp.183–197.[20]Lawrence Rauchwerger,“Run-Time Parallelization:It’s Time Has Come”,Journal of Parallel Com-puting,Special Issue on Languages&Compilers for Parallel Computers,24(3–4),1998,pp.527–556.[21]D.Patel and L.Rauchwerger,“Principles of Speculative Run-time Parallelization”,Proc.of the11th Annual Workshop on Languages and Compilers for Parallel Computing(LCPC),August1998, Chapel Hill,NC.Also in Lecture Notes in Computer Science,1656,Springer-Verlag,1998,pp.323–337.[22]W.Blume,R.Doallo,R.Eigenmann,J.Grout,J.Hoeflinger,wrence,J.Lee,D.Padua,Y.Paek,W.Pottenger,L.Rauchwerger,and P.Tu,“Advanced Program Restructuring for High-Performance Computers with Polaris,”IEEE Computer,29(12),1996,pp.78–82.[23]W.Blume,R.Eigenmann,K.Faigin,J.Grout,J.Lee,wrence,J.Hoeflinger,D.Padua,Y.Paek,P.Petersen,B.Pottenger,L.Rauchwerger,P.Tu,and S.Weatherford,“Restructuring Programs for High-Speed Computers with Polaris”,Proc.of the1996ICPP Workshop on Challenges for Parallel Processing,August1996,pp.149–162.[24]L.Rauchwerger,N.M.Amato and D.Padua,“A Scalable Method for Run-Time Loop Paralleliza-tion,”Int.Journal of Parallel Programming,23(6),1995,pp.537–576.(CSRD Tech.Rept.1400.)[25]L.Rauchwerger,N.M.Amato and D.Padua,“Run-Time Methods for Parallelizing Partially ParallelLoops,”Proc.of the9th ACM Int.Conf.on Supercomputing(ICS’95),July1995,Barcelona,Spain, pp.137–146.[26]L.Rauchwerger and D.Padua,“The LRPD Test:Speculative Run–Time Parallelization of Loopswith Privatization and Reduction Parallelization,”Proc.of the ACM SIGPLAN1995Conf.on Pro-gramming Language Design and Implementation(PLDI95),June1995,La Jolla,CA.,pp.218–232.[27]L.Rauchwerger and D.Padua,“Parallelizing While Loops for Multiprocessor Systems,”Proc.ofthe9th Int.Parallel Processing Symposium,April1995,Santa Barbara,CA,pp.347–356.[28]L.Rauchwerger and D.Padua,“The Privatizing DOALL Test:A Run-Time Technique for DOALLLoop Identification and Array Privatization,”Proc.8th ACM Int.Conf.on Supercomputing,July 1994,Manchester,England,pp.33–43.[29]W.Blume,R.Eigenmann,J.Hoeflinger,D.Padua,P.Petersen,L.Rauchwerger and P.Tu,“AutomaticDetection of Parallelism:A Grand Challenge for High-Performance Computing,”IEEE Parallel and Distributed Technology,Systems and Applications-Special Issue on High Performance Fortran,Fall 1994,2(3),pp.37–47.[30]W.Blume,R.Eigenmann,K.Faigin,J.Grout,J.Hoeflinger,D.Padua,P.Petersen,B.Pottenger,L.Rauchwerger,P.Tu,and S.Weatherford,“Polaris:Improving the Effectiveness of Parallelizing Compilers,”Proc.7th Ann.Workshop on Languages and Compilers for Parallel Computing(LCPC), August1994,Ithaca,New York;Also in Lecture Notes in Computer Science,892,Springer-Verlag, 1994,pp.141–154.[31]L.Rauchwerger and D.Padua,“Run-Time Methods for Parallelizing DO Loops,”Proc.of the2ndInt.Workshop on Massive Parallelism:Hardware,Software and Applications,October1994,Capri, Italy,pp.1–15.Parallel Libraries&Applications[32]Lawrence Rauchwerger and Nancy Amato,“SmartApps:Middle-ware for Adaptive Applications onReconfigurable Platforms”,ACM SIGOPS Operating Systems Reviews,Special Issue on Operating and Runtime Systems for High-End Computing Systems,40(2):73–82,2006.[33]N.Thomas,S.Saunders,T.Smith,G.Tanase,L.Rauchwerger,“ARMI:A High Level Communica-tion Library for STAPL,”Parallel Processing Letters,June,2006,16(2):261-280.[34]W.McLendon III,B.Hendrickson,S.Plimpton,L.Rauchwerger,“Finding strongly connected com-ponents in distributed graphs”,Journal of Parallel and Distributed Computing(JPDC),March,2005.65(8):901-910,2005.[35]N.Thomas,G.Tanase,achyshyn,J.Perdue,N.M.Amato,L.Rauchwerger,“A Framework forAdaptive Algorithm Selection in STAPL”,in Proc.of ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming(PPOPP),Chicago,IL,June,2005,pp.277–288.[36]Shawna Thomas,Gabriel Tanase,Lucia K.Dale,Jose E.Moreira,Lawrence Rauchwerger,NancyM.Amato,“Parallel Protein Folding with STAPL,”Concurrency and Computation:Practice and Experience,17(14),2005,pp.1643–1656.[37]Steven J.Plimpton,Bruce Hendrickson,Shawn Burns,William McLendon III and Lawrence Rauch-werger,“Parallel Algorithms for S n Transport on Unstructured Grids”,Journal of Nuclear Science and Engineering,150(7),2005,pp.1-17.[38]S.Saunders and L.Rauchwerger,“ARMI:An Adaptive,Platform Independent Communication Li-brary”,in Proc.of ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming (PPOPP),San Diego,CA,June,2003,pp.230–241.[39]S.Saunders and L.Rauchwerger,“A Parallel Communication Infrastructure for STAPL”,in Proc.ofthe Workshop on Performance Optimization for High-level Languages and Libraries(POHLL-02), New York,NY,June2002Best Student Paper Award.[40]P.An,A.Jula,S.Rus,S.Saunders,T.Smith,G.Tanase,N.Thomas,N.M.Amato and L.Rauch-werger,“STAPL:An Adaptive,Generic Parallel C++Library”,in Proc.of the14-th Workshop on Languages and Compilers for Parallel Computing(LCPC),Cumberland Falls,KY,August2001.Also in Lecture Notes in Computer Science(LNCS),2624Springer-Verlag,2003,pp.193–208. [41]W.McLendon III,Bruce Hendrickson,S.Plimpton,L.Rauchwerger,“Finding Strongly ConnectedComponents in Parallel in Particle Transport Sweeps”,in Proc.of13-th Symposium on Parallel Algorithms and Architectures,(SPAA)Crete,Greece,July2001,pp.141–154.[42]L.Rauchwerger,F.Arzu and K.Ouchi,“Standard Templates Adaptive Parallel Library”,Proc.of the4th Int.Workshop on Languages,Compilers and Run-Time Systems for Scalable Computers(LCR), May1998,Pittsburgh,PA;Also in Lecture Notes in Computer Science,1511,Springer-Verlag,1998, pp.402–410.Computer Architecture[43]M.Garzaran,M.Prvulovic,J.Llaberia,V.Vinals,L.Rauchwerger,and J.Torrellas,“Tradeoffsin Buffering Speculative Memory State for Thread-Level Speculation in Multiprocessors”in ACM Transactions on Architecture and Code Optimization(TACO),2(3):247-279,September2005. [44]R.Iyer,J.Perdue,L.Rauchwerger,N.M.Amato,L.Bhuyan,“An Experimental Evaluation of the HPV-Class and SGI Origin2000Multiprocessors using Microbenchmarks and Scientific Applications,”Int.J.of Parallel Programming,33(4),2005,pp.307–350.[45]M.Garzar´a n,M.Prvulovic,V.Vials,J.Llabera,L.Rauchwerger,and J.Torrellas,“Software Loggingunder Speculative Parallelization”,in Proc.of Workshop on High Performance Memory Systems, Goteborg,Sweden,June2001,extended version in Chapter of“High Performance Memory Systems”,Editors:Haldun Hadimioglu, David Kaeli,Jeffrey Kuskin,Ashwini Nanda,and Josep Torrellas,pp.181-193,Springer-Verlag, November2003,ISBN0-387-00310-X.[46]M.Garzar´a n,M.Prvulovic,J.Llaberia,V.Vinals,L.Rauchwerger,and J.Torrellas,“Tradeoffs inBuffering Memory State for Thread-Level Speculation in Multiprocessors”,in Proc.of Conf.on High Performance Computer Architecture2003,(HPCA),Anaheim,CA.,Feb.8-12,2003. [47]M.Garzar´a n,M.Prvulovic,V.Vials,J.Llabera,L.Rauchwerger,and J.Torrellas,“Using SoftwareLogging to Support Multi-Version Buffering in Thread-Level Speculation”,in Proc.of the Int.Conf.on Parallel Architectures and Compilation Techniques(PACT),September2003,pp.170-181. [48]M.Garzar´a n,A.Jula,M.Prvulovic,H.Yu,L.Rauchwerger,and J.Torrellas,“Architectural Supportfor Parallel Reductions in Scalable Shared-Memory Multiprocessors”,in Proc.of the6-th Int.Conf.on Parallel Computing Technologies(PACT),September,2001,pp.243–254.[49]M.Prvulovic,M.Garzar´a n,L.Rauchwerger,and J.Torrellas,“Removing Architectural Bottlenecksto the Scalability of Speculative Parallelization”,in Proc.of the28-th Int.Symp.on Computer Architecture(ISCA),Goteborg,Sweden,June2001,pp.204–215.[50]Y.Zhang,L.Rauchwerger and J.Torrellas,Hardware for Speculative Parallelization in High-EndMultiprocessors The Third PetaFlop Workshop(TPF-3),Annapolis,February1999.。

![[转载]k-折交叉验证(K-fold](https://uimg.taocdn.com/e3435e6b178884868762caaedd3383c4bb4cb43c.webp)

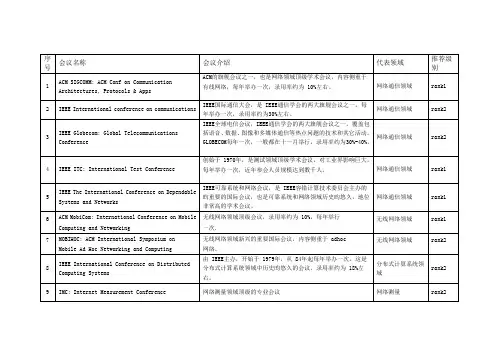

[转载]k-折交叉验证(K-fold cross-validation)原⽂地址:k-折交叉验证(K-fold cross-validation)作者:清风⼩荷塘k-折交叉验证(K-fold cross-validation)是指将样本集分为k份,其中k-1份作为训练数据集,⽽另外的1份作为验证数据集。

⽤验证集来验证所得分类器或者回归的错误码率。

⼀般需要循环k次,直到所有k份数据全部被选择⼀遍为⽌。