Novel Architectures for P2P Applications the Continuous-Discrete Approach

- 格式:pdf

- 大小:211.90 KB

- 文档页数:10

离散傅里叶变换的算术傅里叶变换算法张宪超1,武继刚1,蒋增荣2,陈国良1(1.中国科技大学计算机科学与技术系,合肥230027;2.国防科技大学系统工程与数学系,长沙410073)摘要:离散傅里叶变换(DFT)在数字信号处理等许多领域中起着重要作用.本文采用一种新的傅里叶分析技术—算术傅里叶变换(AFT)来计算DFT.这种算法的乘法计算量仅为0(N);算法的计算过程简单,公式一致,克服了任意长度DFT传统快速算法(FFT)程序复杂、子进程多等缺点;算法易于并行,尤其适合VLSI设计;对于含较大素因子,特别是素数长度的DFT,其速度比传统的FFT方法快;算法为任意长度DFT的快速计算开辟了新的思路和途径.关键词:离散傅里叶变换(DFT);算术傅里叶变换(AFT);快速傅里叶变换(FFT)中图分类号:TN917文献标识码:A文章编号:0372-2112(2000)05-0105-03An Algorithm for Computing DFT Using Arithmetic Fourier TransformZHANG Xian-chao1,WU Ji-gang1,JIANG Zeng-rong2,CHEN Guo-iiang1(1.Dept.of CompUter Science&Technology,Unio.of Science&Technology of China,Hefei230027,China;2.Dept.of System Engineering&Mathematics,National Unio.of Defense Technology,Changsha410073,China)Abstract:The Discrete Fourier Transform(DFT)piays an important roie in digitai signai processing and many other fieids.In this paper,a new Fourier anaiysis technigue caiied the arithmetic Fourier transform(AFT)is used to compute DFT.This aigorithm needs oniy0(N)muitipiications.The process of the aigorithm is simpie and it has a unified formuia,which overcomes the disadvantage of the traditionai fast method that has a compieX program containing too many subroutines.The aigorithm can be easiiy performed in paraiiei,especiaiiy suitabie for VLSI designing.For a DFT at a iength that contains big prime factors,especiaiiy for a DFT at a prime iength,it is faster than the traditionai FFT method.The aigorithm opens up a new approach for the fast computation of DFT.Key words:discrete Fourier transform(DFT);arithmetic Fourier transform(AFT);fast Fourier transform(FFT)!引言离散傅里叶变换(DFT)在数字信号处理等许多领域中起着重要作用.但DFT的计算量很大(N点DFT需0(N2)乘法和加法).因此,DFT的快速计算问题非常重要.1965年,Cooiey 和Tukey开创了快速傅里叶变换(FFT)方法,使N点DFT的计算量从0(N2)降到0(N iog N),开辟了DFT的快速计算时代.但FFT的计算仍较复杂,且对不同长度的DFT其计算公式不一致,致使任意长DFT的FFT程序非常复杂,包含大量子进程.1988年,Tufts和Sadasiv[1]提出了一种用莫比乌斯反演公式(Mibius inversion formuia)计算连续函数的傅立叶系数的方法并命名为算术傅立叶变换(AFT).AFT有许多良好的性质:其乘法量仅为0(N);算法简单,并行性好,尤其适合VLSI设计.因此很快得到广泛关注,并在数字图像处理等领域得到应用.AFT已成为继FFT后一种新的重要的傅立叶分析技术[2~5].根据DFT和连续函数的傅立叶系数的关系,可以用AFT 计算DFT.这种方法保持了AFT的良好性质,且具有公式一致性.大量实验表明,同直接计算相比,AFT方法可以将DFT的计算时间减少90%,对含较大素因子,特别是其长度本身为素数的DFT,它的速度比传统的FFT快.从而它为DFT快速计算开辟了新的途径."算术傅立叶变换本文采用文[3]中的算法.设A(t)为周期为T的函数,它的傅立叶级数只含有限项,即:A(t)=a0+!Nn=1a n cos2!f0t+!Nn=1b n sin2!f0t(1)其中:f0=1/T,a0=1T"TA(t)dt.令:B(2n,!)=12n!2n-1m=0(-1)m A(m2nT+!T),-1<!<1(2)则傅立叶系数a n和b n可以由下列公式计算:a n=![N/n]l=1,3,5,…U(l)B(2nl,0)b n=![N/n]l=1,3,5,…U(l)(-1)(l-1)/2B(2n,14nl),n=1,…,N(3)第5期2000年5月电子学报ACTA ELECTR0NICA SINICAVoi.28No.5May2000其中:!(l )=I ,(-I )r ,0{,l =I l =p I p 2…p r 3p 使p 2\l为莫比乌斯(M bioLS )函数.这就是AFT ,其计算量为:加法:N 2+[N /2]+[N /3]+…+I -2N ;乘法:2N.AFT 需要函数大量的不均匀样本点,而在实际应用中,若计算函数前N 个傅立叶系数,根据奈奎斯特(NygLiSt )抽样定律,只需在函数的一个周期内均匀抽取2N 个样本点.这时可以用零次插值解决样本不一致问题.文献[2、3]已作了详细的分析,本文不再重复.3DFT 的AFT 算法3.1DFT 的定义及性质定义1设X I 为一长度为N 的序列,它的DFT 定义为:Y I =Z N-II =0X I w II ,I =0,I ,…,N -I ;w =e -i 2!/N(4)性质1用记号X I 、=、Y I 表示序列Y I 为序列X I 的DFT ,G I 、=、H I ,则:pX I +gG I 、=、pY I +gH I (5)因此,一个复序列的DFT 可以用两个实序列的DFT 计算.故本文只讨论实序列DFT 的计算问题.性质2设X I 为一实序列,X I 、=、Y I ,则:Re Y I =Re Y N -I ,Im Y I =-Im Y N -I (Re Y I 和Im Y I 分别代表Y I 的实部和虚部)(6)因此,对N 点实序列DFT ,只需计算:Re Y I 和Im Y I (I =0,…,「N /2).3.2DFT 的AFT 算法离散序列的DFT 和连续函数的傅立叶系数有着密切的联系.事实上,若序列X I 是一段区间[0,T ]上的函数A (t )经过离散化后得到的,再设A (t )的傅立叶级数只含前N /2项,即:A (t )=a 0+Z「N /2-II =Ia I coS2!f 0t +Z「N /2-II =I6I Sin2!f 0t(7)则DFT Y I 和傅立叶系数的关系为:Re Y I =「N /2a I /2Im Y I =「N /26I /{2,I =0,…,「N /2(8)式(7)中函数代表的是一种截频信号.对一般函数,式(8)中的“=”要改为“匀”[7].因此,序列X I 的DFT 可以通过函数A (t )的傅里叶系数计算.对于一般给定序列X I ,注意到在任意一个区间上,经过离散后能得到序列X I 的函数有无穷多个.对所有这些插值函数,公式(8)都近似地满足(仅式(7)中的函数精确地满足式(8))[7].AFT 的零次插值实现实质上就是用这些插值函数中的零次插值函数代替原来的函数进行计算的.而从AFT 的零次插值实现方法可知,用AFT 计算傅里叶系数,实际上参与计算的只是函数经离散化后得到的序列,而不必知道函数本身.因此,我们可以任取一个区间,在这个区间上,把序列X算(8)中的“傅里叶系数”,再通过式(8),就可以计算出序列的DFT .算法描述如下(采用[0,I ]区间):for I =I to 「N /2for m =0to 2I -IB (2I ,0):=B (2I ,0)+(-I )mX[Nm /2I +0.5]B (2I ,I /4I ):=B (2I ,I /4I )+(-I )mX[Nm /2I +N /4I +0.5]endforB (2I ,0):=B (2I ,0)/2I B (2I ,I /4I ):=B (2I ,I /4I )/2I endforfor =0to N -I a 0:=a 0+X ( )/N for I =I to 「N /2for I =I to[「N /2/I ]by 2a I :=a I +!(I )B (2II ,0)6I :=6I +!(I )(-I )(K -I )/2B (2II ,I /4II )endforRe Y I :=「N /2a I /2Re Y N -I :=Re Y I Im Y I :=「N /2a I /2Im Y N -I :=-Im Y I endfor endfor图IDFT 的AFT 算法程序AFT 方法的误差主要是由零次插值引起的,大量实验表明,同FFT 相比,其误差是可以接受的(部分实验结果见附录).4算法的性能4.1算法的程序DFT 的AFT 算法具有公式一致性,且公式简单,因此算法的程序也很简单(图I ).图2DFT 的AFT 算法进程示意为便于比较,不妨看一下FFT 的流程.图3FFT 算法进程示意可以看出,FFT 的程序中包含大量子进程,且这些子程序都较复杂.其中素数长度DFT 的FFT 算法程序尤其复杂.因此,任意长DFT 的FFT 算法其程序是非常复杂的.4.2算法的计算效率AFT 方法把DFT 的乘法计算量从0(N 2)降到0(N ),它2电子学报2000年计算时间减少90%.当DFT的长度!为2的幂时,FFT比AFT 方法快"对一般长度的DFT,当!含较大素因子时,AFT方法比FFT快;当!的因子都较小时,AFT方法不如FFT快.当DFT长度!本身为一较大素数时,AFT方法比FFT快"附录中给出部分实验结果以便比较"特别指出,对素数长度DFT,FFT的计算过程非常复杂,很难在实际中应用.而AFT方法算法简单,提供了较好的素数长度DFT快速算法"表1是两种算法计算效率较详细的比较"表1长度52191197114832417FFT效率67.30%68.03%72.50%71.23%76.22% AFT方法效率91.39#91.78#91.63#91.81#91.83# 4.3算法的并行性AFT具有良好的并行性,尤其适合VLSI设计,已有许多VLSI设计方案被提出,并在数字图像处理等领域得到应用.DFT的AFT算法继承了AFT优点,同样具有良好的并行性"5结论和展望本文采用算术傅里叶变换(AFT)计算DFT.这种方法把AFT的各种优点引入DFT的计算中来,开辟了DFT快速计算的新途径.把AFT方法同FFT结合起来,还可以进一步提高DFT的计算速度"参考文献[1] D.W.Tufts and G.Sadasiv.The arithmetic Fourier transform.IEEE ASSP Mag,Jan.1988:13~17[2]I.S.Reed,D.W.Tufts,Xiao Yu,T.K.Troung,M.T.Shih and X.Yin.Fourier anaiysis and signai processing by use of Mobius inversion for-muiar.IEEE Trans.Acoust.Speech Speech Processing,Mar,1990,38(3):458~470[3]I.S.Reed,Ming Tang Shih,T.K.Truong,R.Hendon and D.W.Tufts.A VLSI architecture for simpiified arithmetic fourier transform aigo-rithm.IEEE Trans.Signai Processing,May,1993,40(5):1122~1132[4]H.Park and V.K.Prasanna.Moduiar VLSI architectures for computing the arithmetic fourier transform.IEEE.Signai Processing,June,1993,41(6):2236~2246[5]Lovine.F.P,Tantaratanas.Some aiternate reaiizations of the arithmetic Fourier transform.Conference on Signai,system and Computers,1993,(Cat,93,CH3312-6):310~314[6]蒋增荣,曾泳泓,余品能.快速算法.长沙:国防科技大学出版社,1993[7]E.0.布赖姆.快速傅立叶变换.上海:上海科学技术出版社,1976附录:较详细的实验结果(机型:586微机,主频:166MHz单位:秒)2的幂长度长度AFT方法基-2FFT直接算法2560.005160.002400.115120.018600.004400.4410240.075800.01100 1.81素数长度长度AFT方法FFT直接算法5210.03790.14390.449710.13400.4400 1.6014830.3103 1.0904 3.7924170.8206 2.389910.75任意长度长度因子分解AFT方法FFT直接算法13462!6370.270.44 3.1429862!1483 1.26 2.1414.8235793!1193 1.81 1.9222.1646374637 3.0821.4237.4755742!3!929 4.45 2.4752.2964364!1609 5.94 3.5772.6278933!3!8778.96 1.92105.49最大相对误差长度AFT方法FFT1024实部 2.1939>10-2 2.3328>10-2虚部 2.1938>10-29.9342>10-2 2048实部 4.2212>10-3 1.1967>10-2虚部 6.1257>10-3 4.9385>10-2 4096实部 2.3697>10-3 6.0592>10-3虚部 2.0422>10-3 2.4615>10-3张宪超1971年生"1994年、1998年分别获国防科技大学学士、硕士学位"现在中国科技大学攻读博士学位"主要研究方向为信号处理的快速、并行计算等"武继刚1963年生"烟台大学副教授,现在中国科技大学攻读博士学位"主要研究方向为算法设计和分析等"3第5期张宪超:离散傅里叶变换的算术傅里叶变换算法。

THEORY OF MODELING AND SIMULATIONby Bernard P. Zeigler, Herbert Praehofer, Tag Gon Kim2nd Edition, Academic Press, 2000, ISBN: 0127784551Given the many advances in modeling and simulation in the last decades, the need for a widely accepted framework and theoretical foundation is becoming increasingly necessary. Methods of modeling and simulation are fragmented across disciplines making it difficult to re-use ideas from other disciplines and work collaboratively in multidisciplinary teams. Model building and simulation is becoming easier and faster through implementation of advances in software and hardware. However, difficult and fundamental issues such as model credibility and interoperation have received less attention. These issues are now addressed under the impetus of the High Level Architecture (HLA) standard mandated by the U.S. DoD for all contractors and agencies.This book concentrates on integrating the continuous and discrete paradigms for modeling and simulation. A second major theme is that of distributed simulation and its potential to support the co-existence of multiple formalisms in multiple model components. Prominent throughout are the fundamental concepts of modular and hierarchical model composition. These key ideas underlie a sound methodology for construction of complex system models.The book presents a rigorous mathematical foundation for modeling and simulation. It provides a comprehensive framework for integrating various simulation approaches employed in practice, including such popular modeling methods as cellular automata, chaotic systems, hierarchical block diagrams, and Petri Nets. A unifying concept, called the DEVS Bus, enables models to be transparently mapped into the Discrete Event System Specification (DEVS). The book shows how to construct computationally efficient, object-oriented simulations of DEVS models on parallel and distributed environments. In designing integrative simulations, whether or not they are HLA compliant, this book provides the foundation to understand, simplify and successfully accomplish the task.MODELING HUMAN AND ORGANIZATIONAL BEHAVIOR: APPLICATION TO MILITARY SIMULATIONSEditors: Anne S. Mavor, Richard W. PewNational Academy Press, 1999, ISBN: 0309060966. Hardcover - 432 pages.This book presents a comprehensive treatment of the role of the human and the organization in military simulations. The issue of representing human behavior is treated from the perspective of the psychological and organizational sciences. After a thorough examination of the current military models, simulations and requirements, the book focuses on integrative architectures for modeling theindividual combatant, followed by separate chapters on attention and multitasking, memory and learning, human decision making in the framework of utility theory, models of situation awareness and enabling technologies for their implementation, the role of planning in tactical decision making, and the issue of modeling internal and external moderators of human behavior.The focus of the tenth chapter is on modeling of behavior at the unit level, examining prior work, organizational unit-level modeling, languages and frameworks. It is followed by a chapter on information warfare, discussing models of information diffusion, models of belief formation and the role of communications technology. The final chapters consider the need for situation-specific modeling, prescribe a methodology and a framework for developing human behavior representations, and provide recommendations for infrastructure and information exchange.The book is a valuable reference for simulation designers and system engineers.HANDBOOK OF SIMULATOR-BASED TRAININGby Eric Farmer (Ed.), Johan Reimersma, Jan Moraal, Peter JornaAshgate Publishing Company, 1999, ISBN: 0754611876.The rapidly expanding area of military modeling and simulation supports decision making and planning, design of systems, weapons and infrastructure. This particular book treats the third most important area of modeling and simulation – training. It starts with thorough analysis of training needs, covering mission analysis, task analysis, trainee and training analysis. The second section of the book treats the issue of training program design, examining current practices, principles of training and instruction, sequencing of training objectives, specification of training activities and scenarios, methodology of design and optimization of training programs. In the third section the authors introduce the problem of training media specification and treat technical issues such as databases and models, human-simulator interfaces, visual cueing and image systems, haptic, kinaesthetic and vestibular cueing, and finally, the methodology for training media specification. The final section of the book is devoted to training evaluation, covering the topics of performance measurement, workload measurement, and team performance. In the concluding part the authors outline the trends in using simulators for training.The primary audience for this book is the community of managers and experts involved in training operators. It can also serve as useful reference for designers of training simulators.CREATING COMPUTER SIMULATION SYSTEMS:An Introduction to the High Level Architectureby Frederick Kuhl, Richard Weatherly, Judith DahmannPrentice Hall, 1999, ISBN: 0130225118. - 212 pages.Given the increasing importance of simulations in nearly all aspects of life, the authors find that combining existing systems is much more efficient than building newer, more complex replacements. Whether the interest is in business, the military, or entertainment or is even more general, the book shows how to use the new standard for building and integrating modular simulation components and systems. The HLA, adopted by the U.S. Department of Defense, has been years in the making and recently came ahead of its competitors to grab the attention of engineers and designers worldwide. The book and the accompanying CD-ROM set contain an overview of the rationale and development of the HLA; a Windows-compatible implementation of the HLA Runtime Infrastructure (including test software). It allows the reader to understand in-depth the reasons for the definition of the HLA and its development, how it came to be, how the HLA has been promoted as an architecture, and why it has succeeded. Of course, it provides an overview of the HLA examining it as a software architecture, its large pieces, and chief functions; an extended, integrated tutorial that demonstrates its power and applicability to real-world problems; advanced topics and exercises; and well-thought-out programming examples in text and on disk.The book is well-indexed and may serve as a guide for managers, technicians, programmers, and anyone else working on building simulations.HANDBOOK OF SIMULATION:Principles, Methodology, Advances, Applications, and Practiceedited by Jerry BanksJohn Wiley & Sons, 1998, ISBN: 0471134031. Hardcover - 864 pages.Simulation modeling is one of the most powerful techniques available for studying large and complex systems. This book is the first ever to bring together the top 30 international experts on simulation from both industry and academia. All aspects of simulation are covered, as well as the latest simulation techniques. Most importantly, the book walks the reader through the various industries that use simulation and explains what is used, how it is used, and why.This book provides a reference to important topics in simulation of discrete- event systems. Contributors come from academia, industry, and software development. Material is arranged in sections on principles, methodology, recent advances, application areas, and the practice of simulation. Topics include object-oriented simulation, software for simulation, simulation modeling,and experimental design. For readers with good background in calculus based statistics, this is a good reference book.Applications explored are in fields such as transportation, healthcare, and the military. Includes guidelines for project management, as well as a list of software vendors. The book is co-published by Engineering and Management Press.ADVANCES IN MISSILE GUIDANCE THEORYby Joseph Z. Ben-Asher, Isaac YaeshAIAA, 1998, ISBN 1-56347-275-9.This book about terminal guidance of intercepting missiles is oriented toward practicing engineers and engineering students. It contains a variety of newly developed guidance methods based on linear quadratic optimization problems. This application-oriented book applies widely used and thoroughly developed theories such LQ and H-infinity to missile guidance. The main theme is to systematically analyze guidance problems with increasing complexity. Numerous examples help the reader to gain greater understanding of the relative merits and shortcomings of the various methods. Both the analytical derivations and the numerical computations of the examples are carried out with MATLAB Companion Software: The authors have developed a set of MATLAB M-files that are available on a diskette bound into the book.CONTROL OF SPACECRAFT AND AIRCRAFTby Arthur E. Bryson, Jr.Princeton University Press, 1994, ISBN 0-691-08782-2.This text provides an overview and summary of flight control, focusing on the best possible control of spacecraft and aircraft, i.e., the limits of control. The minimum output error responses of controlled vehicles to specified initial conditions, output commands, and disturbances are determined with specified limits on control authority. These are determined using the linear-quadratic regulator (LQR) method of feedback control synthesis with full-state feedback. An emphasis on modeling is also included for the design of control systems. The book includes a set of MATLAB M-files in companion softwareMATHWORKSInitial information MATLAB is given in this volume to allow to present next the Simulink package and the Flight Dynamics Toolbox, providing for rapid simulation-based design. MATLAB is the foundation for all the MathWorks products. Here we would like to discus products of MathWorks related to the simulation, especially Code Generation tools and Dynamic System Simulation.Code Generation and Rapid PrototypingThe MathWorks code generation tools make it easy to explore real-world system behavior from the prototyping stage to implementation. Real-Time Workshop and Stateflow Coder generate highly efficient code directly from Simulink models and Stateflow diagrams. The generated code can be used to test and validate designs in a real-time environment, and make the necessary design changes before committing designs to production. Using simple point-and-click interactions, the user can generate code that can be implemented quickly without lengthy hand-coding and debugging. Real-Time Workshop and Stateflow Coder automate compiling, linking, and downloading executables onto the target processor providing fast and easy access to real-time targets. By automating the process of creating real-time executables, these tools give an efficient and reliable way to test, evaluate, and iterate your designs in a real-time environment.Real-Time Workshop, the code generator for Simulink, generates efficient, optimized C and Ada code directly from Simulink models. Supporting discrete-time, multirate, and hybrid systems, Real-Time Workshop makes it easy to evaluate system models on a wide range of computer platforms and real-time environments.Stateflow Coder, the standalone code generator for Stateflow, automatically generates C code from Stateflow diagrams. Code generated by Stateflow Coder can be used independently or combined with code from Real-Time Workshop.Real-Time Windows Target, allows to use a PC as a standalone, self-hosted target for running Simulink models interactively in real time. Real-Time Windows Target supports direct I/O, providing real-time interaction with your model, making it an easy-to-use, low-cost target environment for rapid prototyping and hardware-in-the-loop simulation.xPC Target allows to add I/O blocks to Simulink block diagrams, generate code with Real-Time Workshop, and download the code to a second PC that runs the xPC target real-time kernel. xPC Target is ideal for rapid prototyping and hardware-in-the-loop testing of control and DSP systems. It enables the user to execute models in real time on standard PC hardware.By combining the MathWorks code generation tools with hardware and software from leading real-time systems vendors, the user can quickly and easily perform rapid prototyping, hardware-in-the-loop (HIL) simulation, and real-time simulation and analysis of your designs. Real-Time Workshop code can be configured for a variety of real-time operating systems, off-the-shelf boards, and proprietary hardware.The MathWorks products for control design enable the user to make changes to a block diagram, generate code, and evaluate results on target hardware within minutes. For turnkey rapid prototyping solutions you can take advantage of solutions available from partnerships between The MathWorks and leading control design tools:q dSPACE Control Development System: A total development environment forrapid control prototyping and hardware-in-the-loop simulation;q WinCon: Allows you to run Real-Time Workshop code independently on a PC;q World Up: Creating and controlling 3-D interactive worlds for real-timevisualization;q ADI Real-Time Station: Complete system solution for hardware-in-the loopsimulation and prototyping.q Pi AutoSim: Real-time simulator for testing automotive electronic control units(ECUs).q Opal-RT: a rapid prototyping solution that supports real-time parallel/distributedexecution of code generated by Real-Time Workshop running under the QNXoperating system on Intel based target hardware.Dynamic System SimulationSimulink is a powerful graphical simulation tool for modeling nonlinear dynamic systems and developing control strategies. With support for linear, nonlinear, continuous-time, discrete-time, multirate, conditionally executed, and hybrid systems, Simulink lets you model and simulate virtually any type of real-world dynamic system. Using the powerful simulation capabilities in Simulink, the user can create models, evaluate designs, and correct design flaws before building prototypes.Simulink provides a graphical simulation environment for modeling dynamic systems. It allows to build quickly block diagram models of dynamic systems. The Simulink block library contains over 100 blocks that allow to graphically represent a wide variety of system dynamics. The block library includes input signals, dynamic elements, algebraic and nonlinear functions, data display blocks, and more. Simulink blocks can be triggered, enabled, or disabled, allowing to include conditionally executed subsystems within your models.FLIGHT DYNAMICS TOOLBOX – FDC 1.2report by Marc RauwFDC is an abbreviation of Flight Dynamics and Control. The FDC toolbox for Matlab and Simulink makes it possible to analyze aircraft dynamics and flight control systems within one softwareenvironment on one PC or workstation. The toolbox has been set up around a general non-linear aircraft model which has been constructed in a modular way in order to provide maximal flexibility to the user. The model can be accessed by means of the graphical user-interface of Simulink. Other elements from the toolbox are analytical Matlab routines for extracting steady-state flight-conditions and determining linearized models around user-specified operating points, Simulink models of external atmospheric disturbances that affect the motions of the aircraft, radio-navigation models, models of the autopilot, and several help-utilities which simplify the handling of the systems. The package can be applied to a broad range of stability and control related problems by applying Matlab tools from other toolboxes to the systems from FDC 1.2. The FDC toolbox is particularly useful for the design and analysis of Automatic Flight Control Systems (AFCS). By giving the designer access to all models and tools required for AFCS design and analysis within one graphical Computer Assisted Control System Design (CACSD) environment the AFCS development cycle can be reduced considerably. The current version 1.2 of the FDC toolbox is an advanced proof of concept package which effectively demonstrates the general ideas behind the application of CACSD tools with a graphical user- interface to the AFCS design process.MODELING AND SIMULATION TERMINOLOGYMILITARY SIMULATIONTECHNIQUES & TECHNOLOGYIntroduction to SimulationDefinitions. Defines simulation, its applications, and the benefits derived from using the technology. Compares simulation to related activities in analysis and gaming.DOD Overview. Explains the simulation perspective and categorization of the US Department of Defense.Training, Gaming, and Analysis. Provides a general delineation between these three categories of simulation.System ArchitecturesComponents. Describes the fundamental components that are found in most military simulations.Designs. Describes the basic differences between functional and object oriented designs for a simulation system.Infrastructures. Emphasizes the importance of providing an infrastructure to support all simulation models, tools, and functionality.Frameworks. Describes the newest implementation of an infrastructure in the forma of an object oriented framework from which simulation capability is inherited.InteroperabilityDedicated. Interoperability initially meant constructing a dedicated method for joining two simulations for a specific purpose.DIS. The virtual simulation community developed this method to allow vehicle simulators to interact in a small, consistent battlefield.ALSP. The constructive, staff training community developed this method to allow specific simulation systems to interact with each other in a single joint training exercise. HLA. This program was developed to replace and, to a degree, unify the virtual and constructive efforts at interoperability.JSIMS. Though not labeled as an interoperability effort, this program is pressing for a higher degree of interoperability than have been achieved through any of the previous programs.Event ManagementQueuing. The primary method for executing simulations has been various forms of queues for ordering and releasing combat events.Trees. Basic queues are being supplanted by techniques such as Red-Black and Splay trees which allow the simulation store, process, and review events more efficiently than their predecessors.Event Ownership. Events can be owned and processed in different ways. Today's preference for object oriented representations leads to vehicle and unit ownership of events, rather than the previous techniques of managing them from a central executive.Time ManagementUniversal. Single processor simulations made use of a single clocking mechanism to control all events in a simulation. This was extended to the idea of a "master clock" during initial distributed simulations, but is being replaced with more advanced techniques in current distributed simulation.Synchronization. The "master clock" too often lead to poor performance and required a great deal of cross-simulation data exchange. Researchers in the Parallel Distributed Simulation community provided several techniques that are being used in today's training environment.Conservative & Optimistic. The most notable time management techniques are conservative synchronization developed by Chandy, Misra, and Bryant, and optimistic synchronization (or Time Warp) developed by David Jefferson.Real-time. In addition to being synchronized across a distributed computing environment, many of today's simulators must also perform as real-time systems. These operate under the additional duress of staying synchronized with the human or system clock perception of time.Principles of ModelingScience & Art. Simulation is currently a combination of scientific method and artistic expression. Learning to do this activity requires both formal education and watching experienced practitioners approach a problem.Process. When a team of people undertake the development of a new simulation system they must follow a defined process. This is often re-invented for each project, but can better be derived from experience of others on previous projects.Fundamentals. Some basic principles have been learned and relearned by members of the simulation community. These have universal application within the field and allow new developers to benefit from the mistakes and experiences of their predecessors.Formalism. There has been some concentrated effort to define a formalism for simulation such that models and systems are provably correct. These also allow mathematical exploration of new ideas in simulation.Physical ModelingObject Interaction. Military object modeling is be divided into two pieces, the physical and the behavioral. Object interactions, which are often viewed as 'physics based', characterize the physical models.Movement. Military objects are often very mobile and a great deal of effort can be given to the correct movement of ground, air, sea, and space vehicles across different forms of terrain or through various forms of ether.Sensor Detection. Military object are also very eager to interact with each other in both peaceful and violent ways. But, before they can do this they must be able to perceive each other through the use of human and mechanical sensors.Engagement. Encounters with objects of a different affiliation often require the application of combat engagement algorithms. There are a rich set of these available to the modeler, and new ones are continually being created.Attrition. Object and unit attrition may be synonymous with engagement in the real world, but when implemented in a computer environment they must be separated to allow fair combat exchanges. Distributed simulation systems are more closely replicating real world activities than did their older functional/sequential ancestors, but the distinction between engagement and attrition are still important. Communication. The modern battlefield is characterized as much by communication and information exchange as it is by movement and engagement. This dimension of the battlefield has been largely ignored in previous simulations, but is being addressed in the new systems under development today.More. Activities on the battlefield are extremely rich and varied. The models described in this section represent some of the most fundamental and important, but they are only a small fraction of the detail that can be included in a model.Behavioral ModelingPerception. Military simulations have historically included very crude representations of human and group decision making. One of the first real needs for representing the human in the model was to create a unique perception of the battlefield for each group, unit, or individual.Reaction. Battlefield objects or units need to be able to react realistically to various combat environments. These allow the simulation to handle many situations without the explicit intervention of a human operator.Planning. Today we look for intelligent behavior from simulated objects. Once form of intelligence is found in allowing models to plan the details of a general operational combat order, or to formulate a method for extracting itself for a difficult situation.Learning. Early reactive and planning models did not include the capability to learn from experience. Algorithms can be built which allow units to become more effective as they become more experienced. They also learn the best methods for operating on a specific battlefield or under specific conditions.Artificial Intelligence. Behavioral modeling can benefit from the research and experience of the AI community. Techniques of value include: Intelligent Agents, Finite State Machines, Petri Nets, Expert and Knowledge-based Systems, Case Based Reasoning, Genetic Algorithms, Neural Networks, Constraint Satisfaction, Fuzzy Logic, and Adaptive Behavior. An introduction is given to each of these along with potential applications in the military environment.Environmental ModelingTerrain. Military objects are heavily dependent upon the environment in which they operate. The representation of terrain has been of primary concern because of its importance and the difficulty of managing the amount of data required. Triangulated Irregular Networks (TINs) are one of the newer techniques for managing this problem. Atmosphere. The atmosphere plays an important role in modeling air, space, and electronic warfare. The effects of cloud cover, precipitation, daylight, ambient noise, electronic jamming, temperature, and wind can all have significant effects on battlefield activities.Sea. The surface of the ocean is nearly as important to naval operations as is terrain to army operations. Sub-surface and ocean floor representations are also essential for submarine warfare and the employment of SONAR for vehicle detection and engagement.Standards. Many representations of all of these environments have been developed.Unfortunately, not all of these have been compatible and significant effort is being given to a common standard for supporting all simulations. Synthetic Environment Data Representation and Interchange Specification (SEDRIS) is the most prominent of these standardization efforts.Multi-Resolution ModelingAggregation. Military commanders have always dealt with the battlefield in an aggregate form. This has carried forward into simulations which operate at this same level, omitting many of the details of specific battlefield objects and events.Disaggregation. Recent efforts to join constructive and virtual simulations have required the implementation of techniques for cross the boundary between these two levels of representation. Disaggregation attempts to generate an entity level representation from the aggregate level by adding information. Conversely, aggregation attempts to create the constructive from the virtual by removing information.Interoperability. It is commonly accepted that interoperability in these situations is best achieved though disaggregation to the lowest level of representation of the models involved. In any form the patchwork battlefield seldom supports the same level of interoperability across model levels as is found within models at the same level of resolution.Inevitability. Models are abstractions of the real world generated to address a specific problem. Since all problems are not defined at the same level of physical representation, the models built to address them will be at different levels. The modeling an simulation problem domain is too rich to ever expect all models to operate at the same level. Multi-Resolution Modeling and techniques to provide interoperability among them are inevitable.Verification, Validation, and AccreditationVerification. Simulation systems and the models within them are conceptual representations of the real world. By their very nature these models are partially accurate and partially inaccurate. Therefore, it is essential that we be able to verify that the model constructed accurately represents the important parts of the real world we are try to study or emulate.Validation. The conceptual model of the real world is converted into a software program. This conversion has the potential to introduce errors or inaccurately represent the conceptual model. Validation ensures that the software program accurately reflects the conceptual model.Accreditation. Since all models only partially represent the real world, they all have limited application for training and analysis. Accreditation defines the domains and。

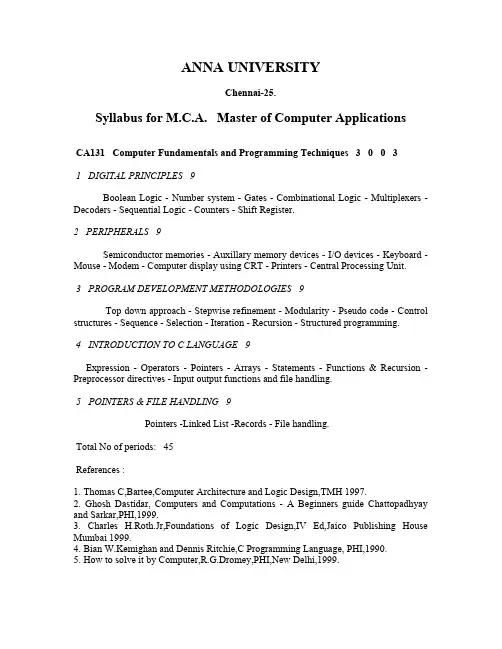

ANNA UNIVERSITYChennai-25.Syllabus for M.C.A. Master of Computer ApplicationsCA131 Computer Fundamentals and Programming Techniques 3 0 0 31 DIGITAL PRINCIPLES 9Boolean Logic - Number system - Gates - Combinational Logic - Multiplexers - Decoders - Sequential Logic - Counters - Shift Register.2 PERIPHERALS 9Semiconductor memories - Auxillary memory devices - I/O devices - Keyboard -Mouse - Modem - Computer display using CRT - Printers - Central Processing Unit.3 PROGRAM DEVELOPMENT METHODOLOGIES 9Top down approach - Stepwise refinement - Modularity - Pseudo code - Control structures - Sequence - Selection - Iteration - Recursion - Structured programming.4 INTRODUCTION TO C LANGUAGE 9Expression - Operators - Pointers - Arrays - Statements - Functions & Recursion - Preprocessor directives - Input output functions and file handling.5 POINTERS & FILE HANDLING 9Pointers -Linked List -Records - File handling.Total No of periods: 45References :1. Thomas C,Bartee,Computer Architecture and Logic Design,TMH 1997.2. Ghosh Dastidar, Computers and Computations - A Beginners guide Chattopadhyay and Sarkar,PHI,1999.3. Charles H.Roth.Jr,Foundations of Logic Design,IV Ed,Jaico Publishing House Mumbai 1999.4. Bian W.Kemighan and Dennis Ritchie,C Programming Language, PHI,1990.5. How to solve it by Computer,R.G.Dromey,PHI,New Delhi,1999.CA132 Data Structures and Algorithms 3 1 0 41 INTRODUCTION 12Introduction - Lists - Stacks - Queues - Linear data structure,Array and Linked lists - Implementation - Applications.2 TREES 13Trees - General and binary trees - Operations - Traversals - Search trees -Balanced trees.3 SORTING 12Sorting - Insertion sort - Quick sort - Merge sort - Heap sort - Sorting on several keys - External sorting.4 GRAPHS 11Graphs representation - Traversal - Topological tables and files - Sorting - Applications - Representation - Marking techniques - Files - Sequential - Index sequential - Random access organization - Implementation.5 ALGORITHM ANALYSIS AND DESIGN 12Algorithms - Time and sapace complexity - Sorting - Design techniques - Knapsack - Travaling salesman - Graph coloring - Squeezing.Total No of periods: 60References :1. Kruse R.L.,Leung BP.Tondo C.L,Data structures and program design in C,PHI,1995.2. Ellis Horowitz, Sahni & Dinesh Mehta Fundamental of data structures in C++,Galgotia,1999.3. Tanenbaum A.S,Langram Y.,Augestein M.J.,Data structures using C,PHI,1992.4. Jean Paul tremblay,Paul G.Sorenson,An Introduction to data structures with Application,Tata McGraw Hill,1995.5. Horowitz, Sahni, S.Rajasekaran, Computer Algorithms, Galgotia,2000.CA133 Data Base Management Systems 3 0 0 31 INTRODUCTION 9Historical prespective - Files versus database systems - Architecture - E-R model - Security and Integrity - Data models.2 RELATIONAL MODEL 9The relation - Keys - Constraints - Relational algebra and Calculus - Queries - Programming and triggers.3 DATA STORAGE 9Disks and Files - file organizations - Indexing - Tree structured indexing - Hash Based indexing.4 QUERY EVALUATION AND DATABASE DESIGN 9External sorting - Query evaluation - Query optimization - Schema refinement and normalization - Physical database design and tuning - Security.5 TRANSACTION MANAGEMENT 9Transaction concepts - Concurrency control - Crash recovery - Decision support - Case studies.Total No of periods: 45References :1. Raghu ramaKrishnan and Johannes gehrke, Database Management Systems, MC Graw Hill International Editions, 2000.2. C.J. Date, An Introduction to Database Systems, 7th Edition,Addision Wesley, 1997.3. Abraham Silberschatz, Henry.F.Korth and S.Sudharshan, Database system Concepts, 3rd Edition, Tata Mc Graw Hill,1997.CA134 Computer Architecture 3 0 0 31 INTRODUCTION TO DIGITAL DESIGN 6Gates to circuits - Combinational and sequential.2 COMPUTER ORGANIZATION 12Basics - Processor design - Instruction sets - addressing modes - ALU design - Control design - Hardwired control unit - Micro programmed control unit.3 MEMORY 9RAM - ROM - Cache memory - Associative memories - Virtual memory - Memory management.4 INPUT-OUTPUT ORGANIZATION 10Input - output organization - Programmed I/O - DMA - Interrupt - I/O hardware - Standard I/O interfaces - I/O devices - Storage devices.5 TRENDS IN ARCHITECTURE 8RISC Vs CISC - Parallel architectures - Pipelining - Case studies.Total No of periods: 45References :1. V.C.Hamacher, Z.G.Vraesic, S.G.Zaky, Computer Organization, Tata McGraw Hill,1996.2. Vincent P.Heuring,Harry F.Jordan, Computer systems Design and Architecturre, Addison Wesley,1999.3. John P.Hayes, Computer Architecture and Organisation, MC Graw Hill,3rd edition,1999.4. David A.Patterson and John L.Hennessy, Computer Organisation and Design, Harcourt asia PTE Ltd, 2nd edition 1999.MA154 Mathematical Foundation of Computer Science 3 1 0 41 LOGIC 12Statements - Connectives -Truth Tables - Normal forms - Predicate calculus - Inference Theory for Statement Calculus and Predicate Calculus - Automatic Theorem proving.2 COMBINATORICS 12Review of Permutation and Combination - Mathematical Induction - Pigeon hole principle - Principle of Inclusion and Exclusion - Recurrence relations.3 ALGEBRAIC STRUCTURES 12Semi group - Monoid - Groups (Definitions and Examples only) Cyclic group - Permutation groups (Sn and Dn ) - substructures - Homomorphism of semi group, monoid and groups - Cosets and Lagrange Theorem - Normal Subgroups - Rings and Fields (Definition and examples only)4 RECURSIVE FUNCTIONS 12Recursive functions - Primitive functions - computable and non-computable functions.5 LATTICES 12Partial order relation, poset - lattices, Hasse diagram - Boolean algebra.Total No of periods: 60References:1. Gersting J.L., Mathematical Structure for Computer Science, 3rd Edition W.H. Freeman and Co., 1993.2. Lidl and Pitz., Applied Abstract Algebra, Springer - Verlag, New York, 1984.3. K.H.Rosen, Discrete Mathematics and its Applications, Mc-Graw Hill Book Company,1999.4. /rosenCA135 Programming Lab 0 0 4 21 MS-DOS EDITOR COMMANDS 4Creating file (COPYCON, EDIT command etc ) - Directory related commands (MD,CD,RD) -Disk Related Commands (FDISK, FORMAT, DISKCOPY,XCOPY etc ) Other Commands.2 UNIX COMMANDS 4Basic Commands (cp,rm , ls, pwd,cat etc) - Basic Shell Programming - Advanced Shell Programming.3 BASIC C PROGRAMMING 4Simple programs using I/O (Entering Input Data, Writing Output data, gets and puts functions - operators - expressions.4 CONTROL STATEMENTS 4Implementation of programs using control statements (Branching Statements IF - BLOCK IF - NESTED IF - GOTO - SWITCH - WHILE - CONTINUE and etc..)5 FUNCTIONS 4Defining a function - accessing a function - Passing arguments to a function - Recursive function6 ARRAYS AND STRUCTURES 8Processing an array - passing Arrays to a function - Passing portion of an Array to a function - multidimenstional Arrays - Defining a structure - Accessing a structure.7 POINTERS 8Declaration and processing pointers - passing pointer to a function - Dynamic memory Allocation - Pointers and one dimensional array.8 FILE HANDLING 8Opening and Closing a Data file - Creating a Data file - Processing a Data file - Unformatted Data files.9 DATA STRUCTURES 12Stacks - Queues - Linked Lists - Trees - Sparse matrix Manipulations.10 GRAPHICS FUNCTION AND ANIMATION 4Line Primitives - 2D Geometrical object constructions, RST - Transformations - Animating Geometrical objects.Total No of periods: 60CA141 Computer Communication and Networks 3 1 0 41 INTRODUCTION 12Communication model - Data communications networking - Data transmission concepts and terminology - Transmission media - Data encoding -Data link control.2 NETWORK FUNDAMENTALS 12Protocol architecture - Protocols - OSI - TCP/IP - LAN architecture - Topologies - MAC - Ethernet, Fast ethernet, Token ring, FDDI, Wirelsee LANS - Bridges.3 NETWORK LAYER 12Network layer - Switching concepts - Circuit switching networks - Packet switching - Routing - Congestion control - X.25 - Internetworking concepts and X.25 architectural models - IP - Unreliable connectionless delivery - Datagrams - Routing IP datagrams - ICMP.4 TRANSPORT LAYER 12Transport layer - Reliable delivery service - Congestion control - connection establishment - Flow control - Transmission control protocol - User datagram protocol.5 APPLICATIONS 12Applications - Sessions and presentation aspects - DNS, Telnet, rlogin, FTP, SMTP - WWW - Security - SNMP.Total No of periods: 60References :1. Larry L.Peterson & Bruce S.Davie, Computer Networks - A systems Approach, 2nd edition, Harcourt Asia/Morgan Kaufmann, 2000.2. William Stallings, Data and Computer Communications, 5th edition, PHI,1997.CA142 System Software 3 0 0 31 INTRODUCTION 9Basic concepts - Machine structure - Typical architectures.2 ASSEMBLERS 9Funtions - Machine dependent and Machine independent assembler Features - Design and Implementation - Examples.3 LOADERS AND LINKERS 9Functions - Machine dependent and Machine independent loader features - Linkage editors - Dynamic linking - Bootstrap loaders - Implementation - Examples.4 MACRO PROCESSORS 9Functions - Features - Recursive macro expansion - General-purpose macro processors - Macro processing within language translators - Implementation - Examples.5 COMPILERS AND UTILITIES 9Introduction to compilers - Different phases of a compiler - Simple one pass compiler - Code optimization techniques - System software tools - Text editors - Interactive debugging systems.Total No of periods: 45References :1. Leland L.Beck, System Software - An Introduction to Systems Programming, 3rd Edition, Addison Wesley,1999.2. D.M.Dhamdhere, System Programming and Operating Systems, Tata Mc Graw Hill Company, 1993.3. A.U.Aho,Ravi Sethi and J.D.Ullman, Compilers Principles Techniques and Tools, Addison Wesley,1988.4. John J.Donovan, systems Programming, Tata Mc Graw Hill Edition,1991.CA143 Software Engineering 3 1 0 41 INTRODUCTION 12Software Engineering paradigms - Waterfall life cycle model, spiral model, prototype model, 4th Generation techniques - Planning - Cost estimation - Organization structure - Software project scheduling, Risk analysis and management - Requirements and specification - Rapid prototyping.2 SOFTWARE DESIGN 12Abstraction - Modularity - Software architecture - Cohesion,coupling - Various design concepts and notations - Real time and distributed system design - Documentation - Dataflow oriented design - Jackson system development - Designing for reuse - Programming standards.3 SOFTWARE METRICS 12Scope - classification of metrics - Measuring Process and Product attributes - Direct and indirect measures - Reliability - Software quality assurance - Standards.4 SOFTWARE TESTING AND MAINTENANCE 12Software testing fundamentals - Software testing strategies - Black box testing, White Box testing, System testing - Testing tools - Test case management - Software maintenance organzation - Maintenance report - Types of maintenance.5 SOFTWARE CONFIGURATION MANAGEMENT(scm) & CASE TOOLS 12 Need for SCM - Version control - SCM process - Software configuration items - Taxonomy - case repository - Features.Total No of periods: 60References :1. Roger S.Pressman, Software Engineering: A Practitioner Approach, 5th Edition, MCGraw Hill, 1999.2. Fairley, Software Engineering Concepts, McGraw Hill, 1985.3. Sommerville I., Software Engineering, 5th Edition, Addison Wesley, 1996.CA144 System Analysis and Design 3 0 0 31 INTRODUCTION 9System Concepts - Subsystems - Types of Systems - Systems and the System analyst - Business as a systems - Information systems - systems Lifecycle - Systems Development Stages - Role of system Analyst - Characeristics of System Analyst.2 SYSTEM PLANNING AND INVESTIGATION 9Approaches to system Development - Methods of Investigation - Recording the investigation feasibility assessment.3 SYSTEM DESIGN 9Analyzing user requirements - Logical system Definition - Physical Definition - Physical Design of Computer subsystem - File Design - Database Design - Output and Input Design - Computer Procedure Design - system security.Form Design - dialogue design - code design - system implementation - Changeover - Maintenance and review.4 PROJECT DOCUMENTATION 9Communitcation skills - Problems in communication written reports - Principles of report writing with structure - standard documentation - study Proposal - System Propposal - User system Specification - Program and Suit Specification - User Manual - Operational Manual - Test Data file - Changeover - Instructions - System audit report.5 MANAGEMENT INFORMATION SYSTEMS 9Introduction to MIS - Survey of IS Technology - Conceptual foundation - IS Requirements - Development - Implementation and Management of IS Resources.Total No of periods: 45References:1. James A Senn, Analysis and Design of Information Systems, McGraw Hill,1989.,2. Igor Gawryszkiewycz, Introduction to system analysis and design, PHI, New Delhi,2000.3. V.Rajaraman,Analysis and Design of information Systems, PHI, New Delhi,2000.CA145 Object Oriented Programming 3 0 0 31 OOP PARADIGM 5Comparison of programming paradigms - Key concepts of Object Oriented Programming - Object identification - Object Oriented design fundamentals - Object Oriented languages.2 INTRODUCTION TO C++ 10Comparison with C - Overview of C++ - Classes and Objects - Arrays, pointers,references and dynamic allocation.3 OVERLOADING 10Function Overloading - Copy constructors - Default arguments - Operator overloading.4 ADDITIONAL FEATURES 10Inheritance - Virtual functions - Polymorphisms - Templates - Exception handling - I/O stream - File I/O - STL5 DESIGN CONCEPTS 10Role of classes - Relationship between classes - Class interface - Components - Study of typical Object Oriented systems.Total No of periods: 45References :1. Herbert Schildt, C++ The complete References, III Edition, 1999.2. Bjame Stroustrup, The C++ Programming Language, Third Edition , Addison Wesley,2000.3. Stanley B.Lippman, Jove Lajoie, C++ Primer, III Edition, Addison Wesley,1998.4. Barkakati N, Object Oriented Programming in C++, PHI, 1995.CA146 Object Oriented Programming and DBMS Lab 0 0 4 21 CLASS 6Implementation of a class - constructor - destructor - friend class - friend functions - static member functions.2 OVERLOADING 6Implementation of overloading in functions and operators - unary and binary operators - other operators.3 INHERITANCE 6Implementation of Inheritance - simple - multilevel - multiple - hybrid.4 POLYMORPHISM 6Implementation of polymorphism - Virtual functions - Pure virtual functions.5 TEMPLATE, EXCEPTION HANDLING AND I/O STREAMS 6Implementation of class templates - exception handling - creation and manipulation of files.6 DATA DEFINITION AND UPDATION 4Creation of tables - Views - Insertion - Modification and deletion of elements.7 DATA CONTROL 4Usage of DCL commands.8 DATA RETRIEVAL 4Implementation of queries - sub queries - correlated sub queries.9 ADVANCED QUERIES 6Implementation of joins - Self - equi join - natural join - aggregate functions and set manipulation.10 HIGH - LEVEL CONSTRUCTS 6Implementation of PL / SQL - triggers - cursors and sub programs.11 DATABASE CONNECTIVITY 6Implementation of database connectivity through front - end tools - Generation of Reports.Total No of periods: 60CA231 Microprocessors and its Applications 3 0 2 41 INTRODUCTION 10Evolution of Microprocessors - Overview of 8- bit microprocessors - Limitations - Applications2 8086 MICROPROCESSOR 32Architecture of intel 8086 microprocessor - Addressing methods - Instruction set - Assembly language programming - Programming examples using MASM.3 8086 BASED SYSTEM DESIGN 11Intel 8086 signals and timing - MIN/MAX mode of operation - Memory and I/O system design using Intel 8086.4 INTERFACING CONCEPTS 14Programmable peripheral interface - Programmable communication interface - DMA controller - Timer - Interrupt controller - Keyboard/display controller.5 APPLICATIONS 8Applications using microcontrollers - Advanced microprocessors(Intel family)Total No of periods: 75References :1. Douglas. V.Hall, Microprocessors and Interfacing - Programming and Hardware, McGraw Hill International Edition,1986.2. Liu and Gibson, Microcomputer System,the 8086/8088 family,Prentice hall of india Pvt.Ltd.,1989.3. Gaonkar,R.S., Microprocessor Architecture, Programming and Applications with 8080/8085 A, Wiley Eastern Ltd, New Delhi,1991.4. Intel manuals.CA232 Operating Systems 3 1 0 41 INTRODUCTION 8Multiprogramming - Time sharing - Distributed system - Real time systems - I/O structure - Dual mod operation - Hardware protection - General system architecture - Operating system services - System calls - System programs - System design and implementation.2 PROCESS MANAGEMENT 11Process concept - Concurrent process - Scheduling concepts - CPU scheduling -Scheduling algorithms - Multiple processor scheduling.3 PROCESS SYCHRONIZATION 11Critical section - Synchronization harware - Semaphores,classical problem of synchronization - Interprocess communication - Deadlock - Characerization,Prevention, Avoidance, Detection.4 STORAGE MANAGEMENT 12Swapping, single and multiple partition allocation - Paging - Segmentation - Paged segmentation, Virtual memory - Demand paging - Page replacement and alogrithms - Thrashing - Secondary storage management - Disk structure - Free space management - Allocation methods - Disk scheduling - Performance and reliability improvements - Storage hierarchy.5 FILES AND PROCTECTION 18File system organization - File operations - Access methods - Consistency semantics - Directory structure organization - File protection - Implementation issues - Securtiy - Encryption - Case study - UNIX and Windows NT - Introduction to distributed OS design.Total No of periods: 60References :1. Silberschatz and Galvin, Operating System Concepts, 4th Edition, Addison Wesley Publishing co.,1995.2. Deital, An Introduction to OPerating systems, Addison Wesley Publishing Co., 1985.3. Milankovic.M., Operatin system Concepts and Design, 2nd Edition, McGraw Hill 1992.4. Madanick SE and Donovan JJ, Operating System, McGraw Hill, 1974.CA233 Object Oriented Analysis and Design 3 0 0 31 OBJECT ORIENTED DESIGN FUNDAMENTALS 9The objec model - Classes and Objects - Complexity - Classification - Notation - Process - Pragmatics - Binary and entity relationship - Object types - Object state - OOSD life cycle.2 OBJECT ORIENTED ANALYSIS 9Overview of object oriented analysis - Shaler/Mellor, Coad/Yourdon, Rumbagh,Booch - UML - Usecase - Conceptual model - Behaviour - Class - Analysis patterns - Overview - Diagrams - Agrregation.3 OBJECT ORIENTED DESIGN METHODS 9UML - Diagrams - Collaboration - Sequence - Class - Design patterns and frameworks - Comparison with other design methods.4 MANAGING OBJECT ORIENTED DEVELOPMENT 9Managing analysis and design - Evaluation testing - Coding - Maintenance - Metrics.5 CASE STUDIES IN OBJECT ORIENTED DEVELOPMENT 9Design of foundation class libraries - Object Oriented databases - Client/Server computing - Middleware.Total No of periods: 45References :1. Craig Larman, Applying UML and patterns, Addison Wesley,2000.2. The Unified Modeling Language User Guide, Grady Booch, James Rumbaugh, Ivar Jacobson, Addison - Wesley Long man, 1999, ISBN 0-201-57 168-4.3. Ali Bahrami, Object Oriented System Development, McGraw Hill International Edition, 1999.4. Fowler, Analysis Patterns, Addison Wesley,1996.5. Erich Gamna, Design Patterns, Addison Wesley, 1994.CA234 Unix and Network Programming 3 0 0 31 INTRODUCTION & FILE SYSTEM 9Overview of UNIX OS - File I/O - Files and directories - Standard I/O library - System data files and information.2 PROCESSES 9Environment of a UNIX process - Process control - Process relationships - Signals terminal I/O - Advanced I/O - threads.3 INTERPROCESS COMMUNICATION 9Introduction - Message passing - Synchronization - Shared memory.4 SOCKETS 9Introduction - TCP sockets - I/O multiplexing - Socket options - UDP sockets - Name and address conversions.5 APPLICATIONS 9Raw sockets - Debugging techniques - TCP echo client server - UDP echo client server - Ping - Trace route - Client server applications.Total No of periods: 45References :1. W.Richard Stevens, Advanced programming in the UNIX environment, Addison Wesley,1999.2. W.Richard Stevens, UNIX Network Programming Volume 1,2, Prentice Hall International,1998.CA235 Unix Lab 0 0 4 21 BASIC COMMANDS 8Learning an Editor, Knowing to use commands like ls,cp,rm,diff,rmdir,cat etc. Learning to write a make file, compiling C programs in Unix.2 IMPLEMENTATION OF SYSTEM CALLS RELATED TO FILE SYSTEM 8To use system calls - create,open,read, write, close, stat,fstat,lseek.3 PROCESS CREATION, EXECUTION 8Implementation of fork, exec system calls.4 PIPES 4Implementation of Inter process communication using pipes.5 SEMAPHORES 8Known to use semaphores. Solving synchronization problems.6 MESSAGE QUEUES 4Implementation of inter process communication using message Queues.7 SHARED MEMORY 4IPC using shared memory8 SOCKET PROGRAMMING - TCP SOCKETS 4Client server communication using TCP Sockets.9 SOCKET PROGRAMMING - UDP SOCKETS 4Client Server communication using UDP Scokets.10 APPLICATIONS USING TCP , UDP SOCKETS 8Application like File transfer , chat using TCP, UDP Sockets.Total No of periods: 60CA241 Internet Programming and Tools 3 1 0 41 BASIC INTERNET CONCEPTS 9History of Internet - Internet addressing - TCP/IP - DNS and directory services - Internet Resources - Applications - Electronic mail, Newgroups, UUCP, FTP, Telnet, Finger.2 WORLD WIDE WEB 5Overview - Hyper Text Markup Language - Uniform Resource Locators - Protocals - MIME Types - Browsers - Plug- ins - Net meeting and chat - Search Engines.3 SCRIPTING LANGUAGE 14Java Script programming -Dynamic HTML - Cascading style sheets - Object model and collections - Event model - Filters and Transitions - ActiveX controls - Multimedia - Client side scripting.4 JAVA 20Java fundamentals - IO Streaming - Object Serialization - Applications - Applets -Networking - Threading - Native Interfaces - Image Processing.5 ADVANCED JAVA 12Remote methods invocation - Multicasting - JDBC - Server side programming - Enterprise Applications - Automated Solutions.Total No of periods: 60References :1. D.Norton and H.Schildt, Java2 : the complete reference, TMH 2000.2. Internet & World wide Web How to program, Deitel & Deitel, Prentice Hall 2000.3. Java How to program,Deitel & Deitel, Prentice Hall 1999.4. Core Java Vol.1 and Vol. 2, Gary Cornell and Cay S.Horstmann, Sun Microsystems Press 1999.4. Active X source Book, Ted coombs,Jason Coombs and Don Brewer, John Wiley &sons 1996.1 INTRODUCTION 8Relational databases - ODBC - JDBC - Stored procedures - Triggers - Forms - Reports - Decision support - Databases - Servers2 PARALLEL AND DISTRIBUTED DATABASES 10Parallel databases - I/O parallelism - Interquery and Intraquery parallelism - Intra operation and Interoperation parallelism - Design of parallel databases - Distributed databases - Distributed data storage - Network transparency distributed query processing - Transaction model - Commit protocols - Coordinator selection - Concurrency control - Deadlock handling - Multidtabase systems.3 SPECIALIZED DATABASES 12Dedutive databases - Temporal and Spatial databases - Web databases - Multimedia databases.4 OBJECT ORIENTED DATABASES 10Objects - Storage - Retrieval - Query language - Object relational databases - Architecture - query processing.5 DATA WAREHOUSING AND DATA MINING 5Data Warehousing architecture - Implementation - Data mining - Rules - Clustering.Total No of periods: 45References :1. Ramez Eimasri, Shamkant, B.Navathe, Fundamentals of Database Systems, 3rd Edition, Addison Wesley,2000.2. Abraham Silberschatz, Henry.F.Korth and S.Sudharshan, Database System Concepts, McGraw Hill International Editions, 1997.3. Setrag Khosafian, Object Oriented Databases, John Wiley & Sons,1993.4. Gary W.Hanson and James V.Hanson, Database Management and Design, Prentice Hall of India Pvt Ltd.,1999.5. M.Tamer Ozsu and Patrick Valduriez, Principles of Distributed Database Systems, Prentice Hall International INC, 1999.1 WINDOWS PROGRAMMING 10Conceptual comparison of traditional programming paradigms - Overview of Windows programming - Data types - Resources - Windows messages - Device contexts - Document interface - Dynamic linking libraries - SDK(Software Development Kit) tools - Context help.2 VISUAL BASIC PROGRAMMING 10Form design - Overview - Programming fundamentals - VBX Controls - Graphics application - Animation - Interfacing - File system controls - Data control - Database applications.3 VISUAL C++ PROGRAMMING 10Frame work calsses - VC++ components - Resources - Event handling - Message dispatch system - Model and Modeless dialogs - Importing VBX controls - Document view architecture - Serialization - Multiple document interface - Splitter windows - Co-ordination between controls - Subclassing.4 DATABASE CONNECTIVITY 8Mini database applications - Embedding controls in view - Creating user defined DLL's - Dialog based applications - Dynamic data transfer functions - User interface classes - Database management with ODBC - Communicating with other applications - Object linking and embedding.5 BASICS OF GUI DESIGN 7Goals of user interface design - Three models - Visual interface design - File system - Storage and retrieval system - Simultaneous multiplatform development - Interoperability.Total No of periods: 45References :1. David Kruglinski J., Inside Visual C++, Microsoft Press , 1993.2. Microsoft Visual C++ and Visual Basic manuals.3. Petzoid, Windows Programming, Microsoft Press, 1992.4. Alan cooper, The Essentials of User Interface Design, IDG Books World Wide Inc., California, USA, 1995.5. Pappar and Murray,Visual C++: The Complete Reference, TMH,2000.CA244 Computer Graphics and Multimedia Systems 3 0 0 31 INTRODUCTION 9I/O devices - VGA card - Bresenham technique - DDA - Display files - Clipping algorithms - circle Drawing algorithms.2 2D TRANSFORMATIONS 9Two dimensional transformations - Interactive Input methods - Polygons - splines - Window viewport mapping.3 3D TRANSFORMATIONS 93D Concepts - Projections - Hidden surface and hidden line elimination - visualization and rendering - color models - Texture mapping - animation - morphing.4 OVERVIEW OF MULTIMEDIA 9Overview of hardware and software components - 2D and 3D graphics in multimedia - audio - video - Standards for multimedia authoring and designing - Multimedia project development.5 APPLICATIONS 9Multimedia Information system - MMDB - Image information system -Video conferencing concepts of virtual reality.Total No of periods: 45References :1. Foley J.D, Van Dam A, Feiner S.K, Hughes J.F, computer Principles and practice, Addison, Wesley publication company, 1993.2. Siamon J. Gibbs and Dionysios C. Tsichritzis, Multimedia programming, Addison, Wesley, 1995.3. Tom Vaughan, Multimedia - making it work, Osborne Mc Graw Hill, 1993.4. Hearn D and Baker M.P, Computer graphics 2nd edition PHI, New Delhi, 1995.5. John Villamil, Casanova and Leony Fernanadez, Eliar, Multimedia Graphics, PHI, 1998.。