2011-Real Time Hand Pose Estimation using Depth Sensors

- 格式:pdf

- 大小:702.62 KB

- 文档页数:7

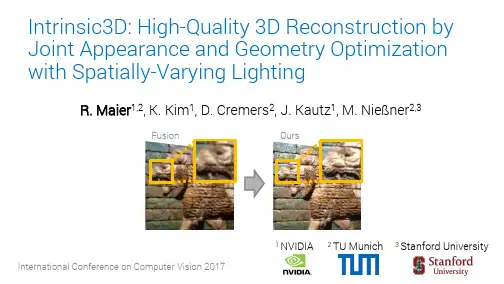

Intrinsic3D: High-Quality 3D Reconstruction by Joint Appearance and Geometry Optimization with Spatially-Varying LightingR. Maier1,2, K. Kim1, D. Cremers2, J. Kautz1, M. Nießner2,3OursFusion2 TU Munich1 NVIDIA 3 Stanford University•Motivation & State-of-the-art •Approach•Results•Conclusion•Motivation & State-of-the-art •Approach•Results•Conclusion•Recent progress in Augmented Reality (AR) /Virtual Reality (VR)Microsoft HoloLensHTC Vive•Recent progress in Augmented Reality (AR) /Virtual Reality (VR)HTC ViveMicrosoft HoloLens •Requirement of high-quality 3D content for AR,VR, Gaming …NVIDIA VR Funhouse•Recent progress in Augmented Reality (AR) /Virtual Reality (VR)Microsoft HoloLensHTC Vive •Requirement of high-quality 3D content for AR,VR, Gaming …•Usually: manual modelling (e.g. Maya)NVIDIA VR Funhouse•Recent progress in Augmented Reality (AR) /Virtual Reality (VR)Microsoft HoloLensHTC Vive •Requirement of high-quality 3D content for AR,VR, Gaming …•Usually: manual modelling (e.g. Maya)•Wide availability of commodity RGB-D sensors:efficient methods for 3D reconstructionNVIDIA VR Funhouse•Recent progress in Augmented Reality (AR) / Virtual Reality (VR)•Requirement of high-quality 3D content for AR, VR, Gaming …•Usually: manual modelling (e.g. Maya)•Wide availability of commodity RGB-D sensors:efficient methods for 3D reconstruction•Challenge: how to reconstruct high-quality 3D models with best-possible geometry and color from low-cost depth sensors?HTC ViveNVIDIA VR Funhouse Microsoft HoloLensRGB-D based 3D Reconstruction•Goal: stream of RGB-D frames of a scene →3D shape that maximizes the geometric consistencyRGB-D based 3D Reconstruction•Goal: stream of RGB-D frames of a scene →3D shape that maximizes the geometric consistency•Real-time, robust, fairly accurate geometric reconstructionsKinectFusion, 2011“KinectFusion: Real-time DenseRGB-D based 3D Reconstruction•Goal: stream of RGB-D frames of a scene →3D shape that maximizes the geometric consistency•Real-time, robust, fairly accurate geometric reconstructionsKinectFusion, 2011DynamicFusion, 2015“KinectFusion: Real-time Dense “DynamicFusion: Reconstruction and Tracking ofNon-rigid Scenes in Real-time”, Newcombe et al.,•Goal: stream of RGB-D frames of a scene →3D shape that maximizes the geometric consistency•Real-time, robust, fairly accurate geometric reconstructionsRGB-D based 3D ReconstructionBundleFusion, 2017DynamicFusion, 2015KinectFusion, 2011“KinectFusion: Real-time Dense “DynamicFusion: Reconstruction and Tracking ofNon-rigid Scenes in Real-time”, Newcombe et al., “BundleFusion: Real-time Globally Consistent 3D Reconstruction using On-the-fly SurfaceVoxel Hashing•Baseline RGB-D based 3D reconstruction framework•initial camera poses•sparse SDF reconstructionVoxel Hashing•Baseline RGB-D based 3D reconstruction framework•initial camera poses•sparse SDF reconstruction •Challenges:•(Slightly) inaccurate and over-smoothed geometryVoxel Hashing•Baseline RGB-D based 3D reconstruction framework•initial camera poses•sparse SDF reconstruction •Challenges:•(Slightly) inaccurate and over-smoothed geometry •Bad colorsVoxel Hashing•Baseline RGB-D based 3D reconstruction framework•initial camera poses•sparse SDF reconstruction •Challenges:•(Slightly) inaccurate and over-smoothed geometry •Bad colors•Inaccurate camera pose estimationVoxel Hashing•Baseline RGB-D based 3D reconstruction framework•initial camera poses•sparse SDF reconstruction •Challenges:•(Slightly) inaccurate and over-smoothed geometry •Bad colors•Inaccurate camera pose estimation•Input data quality (e.g. motion blur, sensor noise)Voxel Hashing•Baseline RGB-D based 3D reconstruction framework•initial camera poses•sparse SDF reconstruction •Challenges:•(Slightly) inaccurate and over-smoothed geometry •Bad colors•Inaccurate camera pose estimation•Input data quality (e.g. motion blur, sensor noise)Voxel Hashing•Baseline RGB-D based 3D reconstruction framework•initial camera poses•sparse SDF reconstruction •Challenges:•(Slightly) inaccurate and over-smoothed geometry •Bad colors•Inaccurate camera pose estimation•Input data quality (e.g. motion blur, sensor noise)•Goal: High-Quality Reconstruction of Geometry and ColorHigh-Quality Colors [Zhou2014]“Color Map Optimization for 3D Reconstruction with Consumer DepthCameras”, Zhou and Koltun, ToG 2014Optimize camera poses and image deformationsto optimally fit initial (maybe wrong) reconstructionBut: HQ images required, no geometry refinement involvedHigh-Quality Colors [Zhou2014]“Color Map Optimization for 3D Reconstruction with Consumer Depth Cameras”, Zhou and Koltun, ToG 2014Optimize camera poses and image deformationsto optimally fit initial (maybe wrong) reconstructionBut: HQ images required, no geometry refinement involvedHigh-Quality Geometry [Zollhöfer2015]“Shading -based Refinement on Volumetric Signed Distance Functions”, Zollhöfer et al., ToG 2015Adjust camera poses in advance (bundle adjustment) to improve color Use shading cues (RGB) to refine geometry (shading based refinement of surface & albedo)But: RGB is fixed (no color refinement based on refined geometry)High-Quality Colors [Zhou2014]“Color Map Optimization for 3D Reconstruction with Consumer Depth Cameras”, Zhou and Koltun, ToG 2014Optimize camera poses and image deformations to optimally fit initial (maybe wrong) reconstruction But: HQ images required, no geometry refinement involved High-Quality Geometry [Zollhöfer2015]“Shading -based Refinement on Volumetric Signed Distance Functions”, Zollhöfer et al., ToG 2015Adjustcamera poses in advance (bundle adjustment) to improve color Use shading cues (RGB) to refine geometry (shading based refinement of surface & albedo)But: RGB is fixed (no color refinement based on refined geometry)Idea:jointly optimize for geometry, albedo and image formation model to•Temporal view sampling & filtering techniques (input frames)•Temporal view sampling & filtering techniques (input frames)•Joint optimization of•surface & albedo (Signed DistanceField)•image formation model•Temporal view sampling & filtering techniques (input frames)•Joint optimization of•surface & albedo (Signed DistanceField)•image formation model•Temporal view sampling & filtering techniques (input frames)•Joint optimization of•surface & albedo (Signed DistanceField)•image formation model•Lighting estimation using Spatially-Varying Spherical Harmonics (SVSH)•Temporal view sampling & filtering techniques (input frames)•Joint optimization of•surface & albedo (Signed DistanceField)•image formation model•Lighting estimation using Spatially-Varying Spherical Harmonics (SVSH)•Optimized colors (by-product)Overview•Motivation & State-of-the-art •Approach•Results•ConclusionOverviewRGB-DOverviewRGB-D SDF FusionOverviewRGB-D SDF Fusion Shading-based Refinement(Shape-from-Shading)Overview RGB-D SDF Fusion Temporal view sampling / filteringShading-based Refinement (Shape-from-Shading)Overview RGB-D SDF Fusion Temporal view sampling / filteringShading-based Refinement (Shape-from-Shading)Spatially-VaryingLighting EstimationOverview RGB-D SDF Fusion Temporal view sampling / filteringShading-based Refinement (Shape-from-Shading)Spatially-VaryingLighting Estimation Joint Appearance andGeometry Optimization •surface•albedo•image formation modelOverview High-Quality 3DReconstruction RGB-D SDF Fusion Temporal view sampling / filteringShading-based Refinement (Shape-from-Shading)Spatially-VaryingLighting Estimation Joint Appearance andGeometry Optimization •surface•albedo•image formation modelOverviewRGB-DRGB-D DataExample: Fountain dataset•1086 RGB-D frames•Sensor:•Depth 640x480px•Color 1280x1024px•~10 Hz•Primesense•Poses estimated using Voxel HashingApproach OverviewSDF FusionVolumetric 3D model representation•Voxel grid: dense(e.g. KinectFusion) or sparse(e.g. Voxel Hashing)Volumetric 3D model representation•Voxel grid: dense(e.g. KinectFusion) or sparse(e.g. Voxel Hashing)•Each voxel stores:•Signed Distance Function (SDF): signed distance to closest surface •Color values•WeightsFusion of depth maps•Integrate depth maps into SDF with their estimated camera posesFusion of depth maps•Integrate depth maps into SDF with their estimated camera poses•Voxel updates using weighted averageFusion of depth maps•Integrate depth maps into SDF with their estimated camera poses•Voxel updates using weighted averageFusion of depth maps•Integrate depth maps into SDF with their estimated camera poses•Voxel updates using weighted averageFusion of depth maps•Integrate depth maps into SDF with their estimated camera poses•Voxel updates using weighted averageFusion of depth maps•Integrate depth maps into SDF with their estimated camera poses•Voxel updates using weighted average•Extract ISO-surface with Marching Cubes (triangle mesh)ApproachOverviewTemporal view sampling / filteringKeyframe Selection•Compute per-frame blur score (for color image)Frame 81Frame 84•Select frame with best score within a fixed size window as keyframe。

一种用于白天星敏感器的星点质心提取方法胡晓东;胡强;雷兴;魏青;刘元正;王继良【摘要】从星空图像中提取星点质心是星敏感器工作的重要基础,针对白天星敏感器所获取的星空图像噪声情况复杂的特点,应用高斯点分布函数为数学模型,提出了一种能够获取高精度星点质心位置的基于帧累加的星点质心提取方法。

首先通过多帧迭代优化目标星像灰度,消除随机噪声的影响,提高信噪比,再利用平方加权质心法计算星点质心的位置,从星空图像中提取星点质心。

仿真实验结果表明:该方法具有较强的抗干扰能力和稳定性,且质心提取精度随迭代帧数的增加而提高,当迭代次数达到100次时平均定位精度可达0.1像素,适用于低信噪比条件下的质心定位计算。

该算法简单易行,运算量小,能够实现对视频图像信息的实时处理,且有效地提高质心的定位精度,可以满足白天星敏感器的应用需求。

%Star centroid extraction from object image in center of mass is the important basis of star sensor. For the star image obtained by daytime star sensors under complicated circumstances, a method of star centroid based on multiframe incremental is proposed by using the mathematical model of Gaussian point distribution function. By optimizing the multi-frame iterative image gray scale, the influence of random noise is eliminated and the signal-to-noise ratio is improved. Then, by utilizing weighted squared centroid algorithm, the star centroid is calculated to extract the star centroid from the star image. The simulation experimental results showthat the method has excellent anti-interference ability and stability, andthe centroid extraction precision is increased with the iteration frames. When the number of iterations reaches 100 times the extraction precisionis increased with the increasing of iteration number and could approach 0.1 pixel, which is suitable for low signal-to-noise ratio. This algorithm is simple, less computations, and can achieve real-time processing of video image information. It can effectively improve the positioning precision, and satisfy the application requirements of daytime star sensors.【期刊名称】《中国惯性技术学报》【年(卷),期】2014(000)004【总页数】5页(P481-485)【关键词】图像处理;质心提取;亚像素定位;帧累加;信噪比【作者】胡晓东;胡强;雷兴;魏青;刘元正;王继良【作者单位】中国航空工业集团西安飞行自动控制研究所,西安 710065;中国航空工业集团西安飞行自动控制研究所,西安 710065;中国航空工业集团西安飞行自动控制研究所,西安 710065;中国航空工业集团西安飞行自动控制研究所,西安 710065;中国航空工业集团西安飞行自动控制研究所,西安 710065;中国航空工业集团西安飞行自动控制研究所,西安 710065【正文语种】中文【中图分类】O436现今主要的三种导航模式分别是GPS导航,天文导航和惯性导航,其中GPS的导航精度最高,但易受干扰、且保密性差,因此具有高隐蔽性、高可靠性要求的导航任务不能依赖GPS导航模式。

Pump family settingsA unique feature uses pre-programmed data for different Grundfos pump families. During installation, the correct values are set for specific pumps for optimum performance and protection of the pump.Control modeGrundfos CUE provides a series of pre-defined application control modes:• Constant pressure with or without stop function• Proportional Differential pressure • Constant level with or without stop function• Constant temperature• Proportional pressureThese pre-defined control modes make it simple to program the drive for the application. When controlled from an exter-nal source, the open loop control mode is used, supplying Grundfos CUE with information about the required speed.Maximum convenience and advanced user interfaceThe Grundfos CUE is a variable frequency drive for the effective speed control of any Grundfos pump without integrated drives, irrespective of size or area of application. Grundfos CUE is an integral partof Grundfos iSOLUTIONS.Offering simple installation and operation coupled with extensive control and monitoring possibili-ties, Grundfos CUE is perfectly suited for pump applications in the water or wastewater network, building services, or industrial pumping solutions.PROPORTIONAL PRESSURE GRUNDFOS iSOLUTIONSSERVICESCLOUD PUMPCONTROL MODETEMPERATURE CONTROL HQCUEHQCUELQLCUECONSTANT LEVEL HQCUEpCONSTANT PRESSURE DUTY/STANDBY OR DUTY/ASSISTCUE 1CUE 2Modbus RTUDuty StandbyIntuitive start-up guideFor easy set-up, Grundfos CUE has a comprehensive start-up guide that allows commissioning simply by asking for your pump type, motor type, control mode, sensor type, setpoint and multi-pump mode. Grundfos CUE will then automatically set all necessary parameters.Furthermore, the advanced HMI panel features:• Favourites menu for one stop adjustment of setpoint, operating mode and control mode• Main menu with password protection for advanced commis-sioning and troubleshooting without the need for additional tools• Info key with comprehensive descriptions for easy settings and status – no manual required• Logging for tuning the performance of the pump system Lowest operating costGrundfos CUE runs with the most energy efficient motor con-struction types for higher flexibility, and not least high pump system efficiency:• Asynchronous induction motors,• Permanent magnet motors,• Synchronous reluctance motors, • PM assisted SynRM.Highest efficiency motors reduce energy consumptionAt Grundfos, our long-term 2030 water and climate ambitions are to do whatever we can to help achieve the United Nations Sustainable Development Goals, in particular 6 and 13. Speed-controlled pumps contribute strongly to water-use effi-ciency across all sectors (SDG 6), and our focus on high-efficiency motors reduces energy consumption, in support of SDG 13.For example, IE5 motor losses are over 30 % lower thanIE3-rated motors, this alone reduces the energy consumption by 10 % with a typical pump load profile.Grundfos iSOLUTIONS brings a new era of intelligence to pump systems and water technology with solutions that look beyond individual components and optimise the entire system.Powered by our deep understanding of water, Grundfos iSOLUTIONS utilises intelligent pumps, cloud connectivity and digital services. Together they enable real-time monitoring, remote control, fault prediction and system optimisation to help you reach a new level of performance.Learn more on /isolutionsConnect to the future of intelligent pump systems• Multi-pump function including alternating, back-up or cascade functionThe Multi-pump function makes it possible to control more parallel-coupled pumps without the need for an external controller. Four different multi-pump functions are available: Alternating time, Back-up, and Cascade control with either fixed or variable speed assist pumps.• Differential pressure control using two sensorsUse two sensors instead of one differential sensor for running in differential pressure.• Proportional pressure and flow estimationProportional pressure control on pumps with user adjustable control curve for pressure loss compensation.• Low flow stop functionImproved energy optimisation, easy configuration and high comfort. • Dry running protectionProtects the pump against failure due to dry running.• Limit Exceed functionThe pump reacts to a measured or an internal value exceed-ing a user-defined limit. The pump can either give an alarm/ warning or change the operating mode, reducing the need for external controllers.• Setpoint influenceThe setpoint influence function makes it possible to influ-ence the controller setpoint using measured values.• Standstill heating (anti-condensation heating)Standstill heating ensures that even during standstill periods, the motor windings are kept at a minimum temperature – heating both motor and terminal box.Superior performance through unique functionality Grundfos CUE offers greater functionality, making it easy to use in a wide rangeof complex applications. The features listed below are pump type dependent.Dedicated functionality for Water Utilities• Pipe fill modeImprove system reliability and prevent water hammering. Designed for both vertical and horizontal pipe systems to increase system performance and prevent water hammer in the application.• Low flow stop functionImproved energy optimisation, easy configuration and high comfort. • Built-in cascade control of two fixed-speed pumpsIncreased flow by simple cascade option of up to two fixed speed pumps, easy set-up from the start-up guide.• High overload and Constant torqueThe torque characteristic is normally set to variable torque for centrifugal pumps. However, Grundfos CUE offers a constant torque characteristic optimised for:- higher starting torque,- axial pumps and positive displacement pumps, or - load shocks in wastewater pumps. • Stop at minimum speed functionEnsures that the pump will stop after a selected time when the controller is in saturation, forcing the pump to run at minimum speed.• DeraggingBy preventing clogged impellers and getting rid of debris, less ragging prolongs pump lifetime and reduces service costs.With dedicated functionality for water and wastewater networks, Grundfos CUE ensures fully optimised operation in these applications.WATER NETWORKSWASTEWATER NETWORKSEMC filter for conducted emission according to IEC61800-3 Ed. 2.• CUE rated 500 V or lower up to 90 kW: Build-in filter for household application (class C1)• CUE rated 500 V or lower above 110 kW: Build-in filter for industrial application (class C2)• CUE rated 525 V or more independent of power size: Build-in filter for industrial application (class C3)Product Range3 x 525-690 V3 x 525-600 V3 x 380-500 V3 x 200-240 V 1 x 200-240 VPower supply0.3131030100250kW7.5-250 kW / 10-350 HP0.75-90 kW / 1-125 HP0.55-250 kW / 0.75-350 HP0.75-45 kW / 1-60 HP1.1-7.5 kW / 1.5-10 HPMCB114 sensor input moduleRequired for using differential pressure control using two sen-sors, or two Pt100/1000 inputs to be used in connection with motor bearings temperature measurement, or temperature measurement such as the media temperature.MCO101 cascade option moduleRequired for cascade of more than one additional variable speed pump.VariantsIP20/IP21/Nema 1 for cabinet installationIP55/Nema 12 with mains disconnect for wall installation.With or without one wire safe stop, safe torque off (STO).Search for CUE on Grundfos Product CenterTechnical specificationsBuilt-in I/O Yes Digital inputs: 4Digital inputs/outputs: 2Relay outputs: 2Analog inputs: 1 dedicated for sensor, 1 dedicated for external setpoint Analog output: 1RS-485:GENIbus, Modbus RTU, and multi-pumpAccessoriesThe Grundfos Product Center online tool lets you size pumps, browse the Grund-fos product catalogue, find appropriate replacement pumps and find pumps forhandling specific liquids.• Search in the way that meets your needs by application,pump design or pump family• Experience faster sizing thanks to a new intelligent “Quick Size” function• Documentation includes pump curves, technical specs, CAD drawings,available spare parts, installation videos, and much more• Optimised for your PC, tablet or smartphoneAs a registered user you will have access to saved preferences, productsand projects and recent browsing history.Visit Global reach and local supportGrundfos is a truly global company and is never far away.Our unique global set-up provides you with benefits, including:• Technical assistance to size your pumping system• Expert know-how and support in your local language• Web-based tools• Fast spare parts delivery on every continentContact your Grundfos sales representative for further information or help matching a GrundfosCUE variable frequency drive to your Grundfos pump and application.T h e n a m e G r u n d f o s , t h e G r u n d f o s l o g o , a n d b e t h i n k i n n o v a t e a r e r e g i s t e r e d t r a d e m a r k s o w n e d b y G r u n d f o s H o l d i n g A /S o r G r u n d f o s A /S , D e n m a r k . A l l r i g h t s r e s e r v e d w o r l d w i d e .98848178 0919/I N D U S T R Y /4011224-B r a n d B o x。

移相相关法计算相位差的研究刘玉周;赵斌【摘要】为了提高相位式测距仪的测量精度,采用移相相关方法来估计两同频正弦信号的相位差。

首先将每路信号移相2π后和原信号做相关来计算自相关,以减少噪声的影响;其次用少许数据初步估算相位差,并将一路信号移相,使两路信号的相位差移到π/2(或3π/2)附近;然后用较多的采样数据计算两路信号的相位差,将结果再减去移相量得到最终的相位差。

同时分析了频率误差对相位差计算精度的影响,进行了理论分析和仿真实验验证。

结果表明,该方法计算的误差大大减小。

这对提高测距仪的测量精度是有帮助的。

%In order to improve the accuracy of a phase-shift range finder , a phase-difference algorithm based on phase-shift correlation analysis was proposed to estimate the phase-difference between two sinusoidal signals with same frequency .For reducing the influence of noise , the autocorrelation between the original and 2πshifted signal was calculated firstly.Secondly, the phase difference was estimated approximately with a few sampled data and the initial phase of one signal was shifted by Δθto make the phase difference between two signals to be near π/2(or 3π/2).Then, the phase-difference was calculated with whole set of data by correlation method and the final phase difference was obtained by subtracting Δθ.The influence of frequency error was analyzed .Theoretical analysis and simulation shows that the error of this method is greatly reduced .The proposed method can improve the accuracy of a range finder .【期刊名称】《激光技术》【年(卷),期】2014(000)005【总页数】5页(P638-642)【关键词】测量与计量;移相相关法;相位差;频率误差【作者】刘玉周;赵斌【作者单位】华中科技大学机械科学与工程学院仪器系,武汉430074;华中科技大学机械科学与工程学院仪器系,武汉430074【正文语种】中文【中图分类】TH741相位式激光测距在3-D成像[1]、机器人导航[2]、表面检测[3]等领域有着广泛的应用,它通过测量光波往返的相位差来计算时间延迟从而计算待测距离[4-5]。

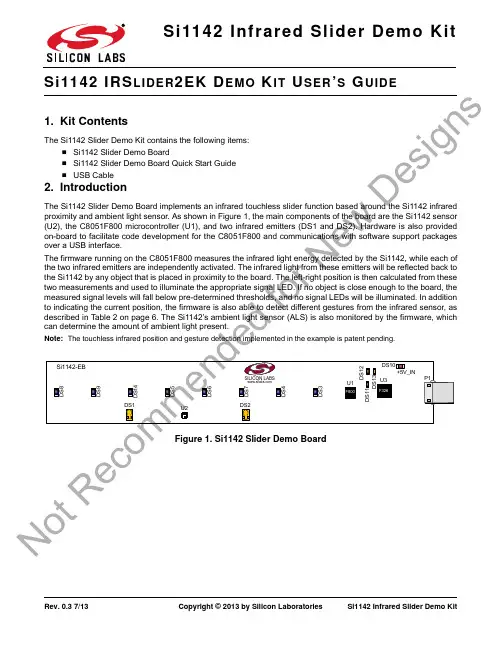

Rev. 0.3 7/13Copyright © 2013 by Silicon LaboratoriesSi1142 Infrared Slider Demo KitLIDER EMO IT SER S UIDE1. Kit ContentsThe Si1142 Slider Demo Kit contains the following items:⏹ Si1142 Slider Demo Board⏹ Si1142 Slider Demo Board Quick Start Guide ⏹ USB Cable2. IntroductionThe Si1142 Slider Demo Board implements an infrared touchless slider function based around the Si1142 infrared proximity and ambient light sensor. As shown in Figure 1, the main components of the board are the Si1142 sensor (U2), the C8051F800 microcontroller (U1), and two infrared emitters (DS1 and DS2). Hardware is also provided on-board to facilitate code development for the C8051F800 and communications with software support packages over a USB interface.The firmware running on the C8051F800 measures the infrared light energy detected by the Si1142, while each of the two infrared emitters are independently activated. The infrared light from these emitters will be reflected back to the Si1142 by any object that is placed in proximity to the board. The left-right position is then calculated from these two measurements and used to illuminate the appropriate signal LED. If no object is close enough to the board, the measured signal levels will fall below pre-determined thresholds, and no signal LEDs will be illuminated. In addition to indicating the current position, the firmware is also able to detect different gestures from the infrared sensor, as described in Table 2 on page 6. The Si1142’s ambient light sensor (ALS) is also monitored by the firmware, which can determine the amount of ambient light present.Note:The touchless infrared position and gesture detection implemented in the example is patent pending.Figure 1.Si1142 Slider Demo BoardD S 13D S 11D S 12D S 8DS1DS2D S 9D S 14D S 5D S 6D S 7D S 4D S 3+5V_INNo tR e co me edf or N e wDe si g n sSi1142 Infrared Slider Demo Kit3. Running the Pre-Loaded Slider Demo1. The board receives its power over the USB interface. Connect one end of a USB cable to the USB connector (P1) on the Si1142 Slider Demo Board and the other end to a USB Port on the PC.2. The red LED DS10 should light on the board, indicating that it is receiving power from the USB.3. Position a hand about 5 to 10cm above and perpendicular to the board. The visible blue LEDs will light according to the position of the hand above the board.4. To initiate a “pause” gesture, hold steady for about 3/4 seconds. The current LED position indicator will blink a few times to indicate that a pause gesture has been detected.5. To perform a “swipe” gesture, move the hand from left to right (swipe right) or right to left (swipe left) above the entire length of the board. The LEDs will briefly light in a sweeping pattern to indicate that a swipe gesture was detected.On this board, the infrared emitters used are OSRAM part number SFH 4056. These emitters have a power rating of 40mW and a half-angle of 22 degrees. Other emitters with different characteristics may also be used,depending on the specific application requirements.4. Software OverviewThere are several optional software packages available to support the Si1142 Slider Demo Board. The Si114x Performance Analysis Tool can be used for initial evaluation to collect data from the board over the USB interface and display it graphically on screen. For users ready to develop their own software, the Si114x Programmer’s Toolkit API enables rapid development of Si114x software in a PC environment using the Si1142 Slider Demo Board. The Si114x Programmer’s Toolkit contains example source code that allows developers to get started quickly and then tailor the code to their needs. In addition, the Silicon Labs Integrated Development Environment (IDE) provides a means of developing code for the C8051F800 and uses the USB connection on the board to program the MCU and perform in-system debugging. All of the supporting software can be downloaded from the web at: /products/sensors/pages/optical-sensor-software.aspx .4.1. Using the Si1142 Slider Demo Board with the Performance Analysis ToolThe Si1142 Slider Demo Board is supported by the Si114x Performance Analysis Tool. The Performance Analysis Tool allows users to see real-time infrared proximity and ambient light measurements from the Si1142 in a graphical form. The communications interface to the Si1142 Slider Demo Board is provided over the USB connection.To use the Performance Analysis Tool with the Si1142 Slider Demo Board, perform the following steps:1. Connect the Si1142 Slider Demo Board to the PC using a USB cable.2. Launch the Performance Analysis Tool from the Start menu.3. Select the board from the “Devices” menu (it should show up as “TS” followed by a serial number).4. Select the channels you wish to display on the picture of the slider board that appears. The individual channels available are described in “4.1.1. Channel Selection” .5. Click the green “Acquisition” arrow to begin collecting data.Note:The Performance Analysis Tool, the Si114x Programmer’s Toolkit, and the IDE cannot connect to the Si1142 SliderDemo Board at the same time. Be certain to disconnect from the board in one software package before trying to connect in the other.Figure 2 shows an example of the Performance Analysis Tool output when connected to the Si1142 Slider Demo Board. To generate the graph, a hand was moved above the slider board. The selected traces shown are the raw data measurements for the amount of Infrared light being reflected onto the part. The pink trace represents the readings using infrared emitter DS1, and the green trace represents the readings using infrared emitter DS2.No tR e co mme nd edf or N e wDe si g n sSi1142 Infrared Slider Demo KitFigure 2.Performance Analysis Tool Main Window4.1.1. Channel SelectionSelecting which channels to display is done by checking the appropriate boxes on the Board Representation window, shown in Figure 3, and the Generic Data window, shown in Figure 4. There are two different groups of measurements available from the example firmware: raw data channels and generic data channels.4.1.1.1. Raw Data ChannelsThe raw data measurements can be seen by selecting the channels from the Board Representation window, shown in Figure 3. The two types of raw data measurements are ambient light and infrared proximity.1. Raw ambient light measurements. The ambient light channels are Channel 0 (red) and Channel 1(blue). Channel 0 displays measurements of the ambient visible light while Channel 1 displays measurements of the ambient infrared light.2. Raw infrared proximity measurements. The infrared proximity channels are Channel 2 (pink) readings using DS1 and Channel 3 (green) readings using DS9. Each output is proportional to the amount ofinfrared light being reflected onto the part by an object above the board. These outputs are 16-bit unsigned values.Figure 3.Raw Data Channel SelectionNo tR e co mme nd edf or N e wDe si g n sSi1142 Infrared Slider Demo Kit4.1.1.2. Generic Data ChannelsThe generic data channels contain any data generated by the host MCU. These 16-bit channels can be anything from simple debug channels to calculated position values. See Table 1 for an explanation of all the channels shown in Figure 4.Figure 4.Generic Data Channel SelectionNo tR e co mme nd edf or N e wDe si g n sSi1142 Infrared Slider Demo KitTable 1. Generic Data ChannelsName Label TypeDescriptionG0Rad1Linearized Distance MeasurementsUsing characterization of the PS measurements with objects at certain distances, it is possible to estimate the distance of an object based on the PS measurement value. These three channels represent the distance estimations for each LED’s measurement.G1Rad2G2X(mm)Estimated Location CoordinatesWith the appropriate distance measurements above, an estimation of X and Y position can be made. These estimations are given in units of mm.G3Y(mm)G4iLED1LED Drive Current LevelsEach LED driver has a specific LED drive current setting for it. These values are given in units of mA.G5iLED2G6VIS AutoRanging Ambient OutputsAutoRanging will automatically change the modes of the photodiodes to avoid saturation. Whenchanging modes, the raw data output changes lev-els so that all measurements are on the same scale. The output from this channel is the pro-cessed value which can be used without knowl-edge of the photodiode modes.G7IRG8PS1AutoRanging PS OutputsThese channels are the AutoRanging PS output from the device. Raw data measurements are pro-cessed by the AutoRanging firmware to make all the readings across different modes have the same magnitude. Since the device switches modes to compensate for ambient light, the raw data will show jumps when changing modes.These outputs will not display the jumps because the firmware is stitching the raw outputs together.G9PS2G10VIS s State of Ambient Visible System These channels help indicate what mode the sen-sor is in during each of their respective measure-ments. The four possible modes are as follows: Low Light, High Sensitivity, High Signal, and Sun-light. These modes are numbered from zero to three. For more information about each mode, please consult the data sheet.G11IR stState of Ambient IR SystemG12PS stState of PS SystemG13PS1blPB Baseline LevelsAutoRanging uses baselining to determine the no-detect threshold for readings. Any readings below the values shown on these channels will be con-sidered no-detect readings. Any values higher than this baseline will be shown in the AutoRang-ing PS Outputs above.G14PS2blG15N/A UnusedThe unused channels are not in use by software, but they are available in firmware to use as needed.G16N/aNo tR e co mme nd edf or N e wDe si g n sSi1142 Infrared Slider Demo Kit4.1.2. Gesture SensingIn addition to infrared and ambient light measurements and distance calculations, the example firmware contains algorithms for gesture recognition. When connected to the board with the Performance Analysis Tool, a group window will appear, as shown in Figure 5. When a gesture is recognized by firmware, the gesture name and parameter information will be added to the top of the 2D Gesture group. Three gestures are supported by the example code. The parameters for each gesture are listed in Table 2.Figure 5.Performance Analysis Tool Group WindowTable 2. Recognized GesturesGesture NameParameter Parameter RangeDescription of Action and LED IndicationPause Position 1–8 (LED position)Hold hand steady in a single position above the board for 3/4 second. Current position LED will blink briefly.Swipe LeftSpeed1–18 (Slow to Fast)Move hand rapidly from the right side to the left sideof the board. LEDs will briefly indicate a leftward sweep pattern.Swipe Right Speed1–18 (Slow to Fast)Move hand rapidly from the left side to the right sideof the board. LEDs will briefly indicate a rightward sweep pattern.No tR e co mme nd edf or N e wDe si g n sSi1142 Infrared Slider Demo Kit4.2. Si114x Programmer’s Toolkit4.2.1. Software APIThe Si114x Programmer’s Toolkit API enables rapid development of Si114x software in a PC environment using the Si1142 Slider Demo Board. By emulating an I 2C interface over USB, the Si114x Programmer’s Toolkit API allows source code to be developed on a PC and then migrated quickly and easily to an MCU environment once target hardware is available. Either commercially-available or free PC-based C compilers can be used for software development with the Si114x Programmer’s Toolkit API.The Si114x Programmer’s Toolkit API also includes the Si114x Waveform Viewer Application. This tool runs in conjunction with user applications to display and debug the measurements taken from the Si1142 Slider Demo Board.Note:The Performance Analysis Tool, Si114x Programmer’s Toolkit and IDE cannot connect to the Si1142 Slider Demo Boardat the same time. Be certain to disconnect from the board in one software package before trying to connect in the other.4.2.2. Command Line UtilitiesFor evaluation of the Si1142 Slider Demo Board without the need to develop and compile source code, a flexible set of command line utilities is also provided with the Si114x Programmer’s Toolkit. These utilities can be used to configure and read samples from the Si1142 Slider Demo Board. For automated configuration and scripting, the command line utilities can be embedded into .bat files.4.2.3. Sample Source CodeFor faster application development, the Si114x Programmer’s Toolkit contains example source code for the Si1142Slider Demo Board and for each of the command line utilities. Developers can get started quickly by using the Si114x example source code and then tailoring it to their needs.4.2.4. Downloading the Si114xe Programmer’s ToolkitThe Si114x Programmer’s Toolkit and associated documentation is available from the web at the following URL:/products/sensors/pages/optical-sensor-software.aspx .No tR e co mme nd edf or N e wDe si g n sSi1142 Infrared Slider Demo Kit4.3. Silicon Laboratories IDEThe Silicon Laboratories IDE integrates a source-code editor, a source-level debugger, and an in-system Flash programmer. This tool can be used to develop and debug code for the C8051F800 MCU, which is included on the Si1142 Slider Demo Board. The use of several third-party compilers and assemblers is supported by the IDE.4.3.1. IDE System RequirementsThe Silicon Laboratories IDE requirements are:⏹ Pentium-class host PC running Microsoft Windows 2000 or newer ⏹ One available USB port4.3.2. Third Party ToolsetsThe Silicon Laboratories IDE has native support for many 8051 compilers. The full list of natively-supported tools is as follows:⏹ Keil⏹ IAR ⏹ Raisonance ⏹ Tasking ⏹ SDCC4.3.3. Downloading the Example Firmware ImageSource code that has been developed and compiled for the C8051F800 MCU on the Si1142 Slider Demo Board may be downloaded to the board using the Silicon Laboratories IDE. Follow the instructions below to update or refresh the .HEX image in the Si1142 Slider Demo Board.1. Connect the Si1142 Slider Demo Board to the PC using a USB cable.2. Launch the Silicon Labs IDE, and click on Options->Connection Options .3. Select “USB Debug Adapter”, and then select the board from the list (it should show up as “TS” followed by a serial number).4. Select “C2” as the debug interface, and press “OK”.5. Connect to the board by pressing the “Connect” icon, or using the keyboard shortcut Alt+C.6. Click on the “Download” icon, or use the keyboard shortcut Alt+D.7. In the download dialog window, click “Browse”.8. Change to Files of Type “Intel Hex (*.hex)” and then browse to select the file.9. Click “Open” then “Download”.10. To run the new image, either press “Run” or “Disconnect” in the IDE.Note:The Performance Analysis Tool, Si114x Programmer’s Toolkit, and the IDE cannot connect to the Si1142 Slider DemoBoard at the same time. Be certain to disconnect from the board in one software package before trying to connect in the other.No tR e co mme nd edf or N e wDe si g n sSi1142 Infrared Slider Demo Kit5. SchematicF i g u r e 6.S i 1142 S l i d e r D e m o B o a r d S c h e m a t i cg n sSi1142 Infrared Slider Demo KitD OCUMENT C HANGE L ISTRevision 0.2 to Revision 0.3Replaced QuickSense Studio references andinstructions with Si114x Programmer’s Toolkit.No tR e co mme nd edf or N e wDe si g n s Silicon Laboratories Inc.400 West Cesar ChavezAustin, TX 78701USASmart. Connected. Energy-Friendly .Products /productsQuality /quality Support and Community Disclaimer Silicon Laboratories intends to provide customers with the latest, accurate, and in-depth documentation of all peripherals and modules available for system and software implementers using or intending to use the Silicon Laboratories products. Characterization data, available modules and peripherals, memory sizes and memory addresses refer to each specific device, and "Typical" parameters provided can and do vary in different applications. Application examples described herein are for illustrative purposes only. Silicon Laboratories reserves the right to make changes without further notice and limitation to product information, specifications, and descriptions herein, and does not give warranties as to the accuracy or completeness of the included information. Silicon Laboratories shall have no liability for the consequences of use of the information supplied herein. This document does not imply or express copyright licenses granted hereunder to design or fabricate any integrated circuits. The products are not designed or authorized to be used within any Life Support System without the specific written consent of Silicon Laboratories. A "Life Support System" is any product or system intended to support or sustain life and/or health, which, if it fails, can be reasonably expected to result in significant personal injury or death. Silicon Laboratories products are not designed or authorized for military applications. Silicon Laboratories products shall under no circumstances be used in weapons of mass destruction including (but not limited to) nuclear, biological or chemical weapons, or missiles capable of delivering such weapons.Trademark InformationSilicon Laboratories Inc.® , Silicon Laboratories®, Silicon Labs®, SiLabs® and the Silicon Labs logo®, Bluegiga®, Bluegiga Logo®, Clockbuilder®, CMEMS®, DSPLL®, EFM®, EFM32®, EFR, Ember®, Energy Micro, Energy Micro logo and combinations thereof, "the world’s most energy friendly microcontrollers", Ember®, EZLink®, EZRadio®, EZRadioPRO®, Gecko®, ISOmodem®, Precision32®, ProSLIC®, Simplicity Studio®, SiPHY®, Telegesis, the Telegesis Logo®, USBXpress® and others are trademarks or registered trademarks of Silicon Laborato-ries Inc. ARM, CORTEX, Cortex-M3 and THUMB are trademarks or registered trademarks of ARM Holdings. Keil is a registered trademark of ARM Limited. All other products or brand names mentioned herein are trademarks of their respective holders. N o t R e co m m e n d e d f o r N e w D e s i g n s。

基于计算机视觉的室外停车场车位检测实验设计张乾;肖永菲;杨玉成;余江浩;王林【摘要】为便于汽车驾驶员在室外停车场中寻找可用空车位,基于以数据采集、图像处理和目标检测等过程的计算机视觉,开发了室外停车场车位检测实验.该实验将Haar-like特征描述和车位中颜色能量变化作为判别模型的数据输入,选取随机森林作为车位可用状态的判别模型.通过在国际公开数据集PKLot上进行实验,对可用车位的检测准确率的均在91%以上;在自建的GZMU-LOT上进行实验,可用空车位的检测准确率达92.21%.【期刊名称】《实验技术与管理》【年(卷),期】2019(036)007【总页数】4页(P138-140,146)【关键词】车位检测;计算机视觉;实验设计;室外停车场【作者】张乾;肖永菲;杨玉成;余江浩;王林【作者单位】贵州民族大学数据科学与信息工程学院,贵州贵阳 550025;贵州民族大学教务处,贵州贵阳 550025;贵州民族大学数据科学与信息工程学院,贵州贵阳550025;贵州省模式识别与智能系统重点实验室,贵州贵阳 550025;贵州民族大学数据科学与信息工程学院,贵州贵阳 550025;贵州省模式识别与智能系统重点实验室,贵州贵阳 550025;贵州民族大学教务处,贵州贵阳 550025;贵州省模式识别与智能系统重点实验室,贵州贵阳 550025;贵州省模式识别与智能系统重点实验室,贵州贵阳 550025【正文语种】中文【中图分类】TP391.41城市停车难是令汽车驾驶员苦恼的问题,在停车场中寻找可用车位也是比较困难的。

采用现代信息技术快速、准确、醒目地告知驾驶员可用车位的具体位置是一个重要的课题[1]。

目前停车场可用车位的检测方法可归纳为基于传感器的方法和基于计算机视觉的方法。

基于传感器的可用车位检测方法是通过在车位安装传感器设备和装置,采集数据后传送到后台服务器,经服务器处理数据后显示车位的可用状态信息。

Improved Biomass Estimation Using the Texture Parameters of Two High-Resolution Optical SensorsJanet E.Nichol and tifur Rahman SarkerAbstract—Accurate forest biomass estimation is essential for greenhouse gas inventories,terrestrial carbon accounting,and climate change modeling studies.Unfortunately,no universal and transferable technique has been developed so far to quantify biomass carbon sources and sinks over large areas because of the environmental,topographic,and biophysical complexity of forest ecosystems.Among the remote sensing techniques tested, the use of multisensors and the spatial as well as the spectral characteristics of the data have demonstrated a strong potential for forest biomass estimation.However,the use of multisensor data accompanied by spatial data processing has not been fully investigated because of the unavailability of appropriate data sets and the complexity of image processing techniques in combining multisensor data with the analysis of the spatial characteris-tics.This paper investigates the texture parameters of two high-resolution(10m)optical sensors(Advanced Visible and Near Infrared Radiometer type2(A VNIR-2)and SPOT-5)in different processing combinations for biomass estimation.Multiple regres-sion models are developed between image parameters extracted from the different stages of image processing and the biomass of 50field plots,which was estimated using a newly developed “allometric model”for the study region.The results demonstrate a clear improvement in biomass estimation using the texture parameters of a single sensor(r2=0.854and rmse=38.54) compared to the best result obtained from simple spectral reflectance(r2=0.494)and simple spectral band ratios (r2=0.59).This was further improved to obtain a very promis-ing result using the texture parameter of both sensors together (r2=0.897and rmse=32.38),the texture parameters from the principal component analysis of both sensors(r2=0.851 and rmse=38.80),and the texture parameters from the av-eraging of both sensors(r2=0.911and rmse=30.10).Im-provement was also observed using the simple ratio of the texture parameters of A VNIR-2(r2=0.899and rmse=32.04)and SPOT-5(r2=0.916),andfinally,the most promising result (r2=0.939and rmse=24.77)was achieved using the ratios of the texture parameters of both sensors together.This high level of agreement between thefield and image data derived from the two novel techniques(i.e.,combination/fusion of the multisensor data and the ratio of the texture parameters)is a very significant improvement over previous work where agreement not exceeding r2=0.65has been achieved using optical sensors.Furthermore, Manuscript received January22,2010;revised June1,2010;accepted July5,2010.Date of publication October14,2010;date of current version February25,2011.This work was supported by GRF Grant PolyU5281/09E. J.E.Nichol is with the Department of Land Surveying and Geo-Informatics, The Hong Kong Polytechnic University,Kowloon,Hong Kong(e-mail: lsjanet@.hk).M.L.R.Sarker is with the Department of Land Surveying and Geo-Informatics,The Hong Kong Polytechnic University,Kowloon,Hong Kong, and also with the Rajshahi University,Rajshahi6205,Bangladesh(e-mail: lrsarker@).Color versions of one or more of thefigures in this paper are available online at .Digital Object Identifier10.1109/TGRS.2010.2068574biomass estimates of up to500t/ha in our study area far exceed the saturation levels observed in other studies using optical sensors.Index Terms—Biomass estimation,multisensors,texture measurement.I.I NTRODUCTIONT HE RECENT United Nations Climate Conference in Copenhagen,Denmark,in December2009once again reminded us that climate change is one of the most severe problems on Earth and that the atmospheric content of green-house gas(particularly CO2),which has risen precipitously in the last250years,particularly in the last50years[1], is the main culprit.Forests can remove this CO2from the atmosphere in the process of photosynthesis and can store it in their biomass,thereby lessening the greenhouse effect[2]. Thus,forest biomass is considered as an important part of the global carbon cycle[3]–[5].As a result,accurate forest biomass estimation is required for many purposes including greenhouse gas inventories,terrestrial carbon accounting,cli-mate change modeling[6]–[11],and implementation of the Kyoto Protocol of the United Nations Framework Conven-tion on Climate Change.Unfortunately,this estimation is not straightforward,and no universal and transferable technique for quantifying carbon sources and sinks has been developed so far[12],[13]because of the environmental,topographic, and biophysical complexity of forest ecosystems,which dif-fer in time and space.Besides traditionalfield-based meth-ods,which are accurate but costly,time consuming,and limited to small areas[14]–[19],remote sensing is the most promising technique for estimating biomass at local,regional, and global scales[20]–[26].A number of studies has been carried out using different types of sensors,including optical [4],[5],[26]–[41],synthetic aperture radar(SAR)[42]–[53], and lidar sensors[54]–[58],for biomass/forest parameter estimation.Apart from the use of a single sensor,combining information from multiple sensors has yielded promising results for the estimation of forest parameters/biomass[13],[18],[19],[59]–[62].A useful approach is to combine SAR and optical sensors [60],[61],[63],[64],but many other options have been tested, including different frequencies and polarizations[21],[65]–[72],different sensors[10],[18],[19],[64],[73]–[78],and multiple spatial resolutions[79],and improvements have been obtained because different sensors often have complementary characteristics in their interaction with land surfaces[61],[60]. From this broad range of approaches,widely varying degrees of success have been obtained because of the complexity of0196-2892/$26.00©2010IEEEbiomass in time and space,the lack of comprehensivefield data, and the limitations in the spatial and spectral characteristics of the satellite data.Beyond the shortcomings of the data,processing techniques may be the most important factor in biomass estimation as previous research has shown that the simple reflectance of the optical sensors[25],[26],[28],[31],[36],[80]–[82]and the backscattering of the radar sensors[46],[68],[70],[72],[83], [84]are unable to provide good estimations.Thus,processing techniques need to be selected to complement suitable data configurations.Now that optical sensors with a wide range of spatial and spectral resolutions are available,optical sensors are still an attractive data source,even though previous research has shown the difficulty of biomass estimation based on raw spectral signatures because of the influence of increased canopy shad-owing within large stands,the heterogeneity of vegetation stand structures,and the spectral data saturation[5],[25],[26],[30], [31],[38],[85]–[89].However,vegetation indices,which have the ability to minimize contributions from the soil background, sun angle,sensor view angle,senesced vegetation,and the atmosphere[90]–[95],are proven to be more successful[25], [31],[37],[38],[85],[89],[96]–[98]but still with generally low to moderate accuracies of up to ca.65%.Moreover,these mod-erate results have been obtained in temperate forests because of their simple canopy structure and tree species composition. In tropical and subtropical regions where biomass levels are high,where the forest canopy is closed with multiple layering, and where a great diversity of species is present([21],[25], [26],[31],[38],[85],[86],vegetation indices have shown less potential,with low or insignificant results.On the other hand,the spatial characteristics of images,such as texture,have been able to identify objects or regions of inter-est in an image[99],and image texture is particularly useful infine spatial resolution imagery[61].Many of the texture measures developed[99],[100]–[102]have shown potential for improvements in land use/land cover mapping using both optical and SAR images[103]–[114].Image texture has also proved to be capable of identifying different aspects of forest stand structure,including age,density,and leaf area index([53], [115]–[119])and has shown a potential for biomass estimation with both optical[5],[38],[89]and SAR data([42],[51],[120]. Moreover,although most previous biomass estimation projects used Landsat TM data with a30-m spatial resolution[60], texture is expected to be more effective withfiner spatial reso-lution imagery sincefiner structural details can be distinguished [51],[61],[110],[121]–[125].Two potential drawbacks of the implementation of texture measurement for biomass estimation are the following:1)texture is a very complex property and can vary widely depending on the object of interest,the envi-ronmental conditions,and the selection of window size[105], [119],[126],[127],and2)texture processing can generate a lot of data which are difficult to manage[119],[127].Thus, although texture measurement holds potential for biomass es-timation,it has not yet been fully investigated,and results so far,when applying texture to optical images,have not exceeded 65%accuracy,even in structurally simple temperate and boreal forests[5].Considering the potential advantages of both image texture and multisensor data,this paper investigates texture processing for biomass estimation using data from two high-resolution optical sensors ANVIR-2and SPOT-5along with raw spectral processing and some simple band ratios.The project data were selected by considering the following facts:1)both sensors havefine spatial resolution(10m),and this higher spatial resolution shows promise for image texture analysis;2)the sensors have some common spectral bands(green,red,and NIR)which may help in reducing any random error in the averaging process;and3)the sensors have uncommon spectral bands(blue in Advanced Visible and Near Infrared Radiometer type2(A VNIR-2)and short-wave near infrared(SWNIR) in SPOT-5)which may be able to provide complementary information.A.ObjectivesThe overall objective of the study is to explore the potential of texture processing combined with multisensor capability for the improvement of biomass estimation using data from two high-resolution optical sensors.More specific objectives are to investigate the performance of the following:1)the spectral reflectance of the individual bands of thesensors individually and together;2)the simple ratio of the different bands of the sensorsindividually and jointly;3)the texture parameters of the sensors individually andtogether;4)the simple ratio of the texture parameters of the sensorsindividually and together for the improvement of biomass estimation.II.S TUDY A REA AND D ATAA.Study AreaThe study area for this research is the Hong Kong Special Administrative Region(Fig.1)which lies on the southeast coast of China,just south of the Tropic of Cancer.The total land area of Hong Kong is1100km2,which includes235small outlying islands.Although the population is over7million, only about15%of the territory is built-up,but less than1% is still actively cultivated.Approximately40%of the total area is designated as country parks,which are reserved for forest succession under the management of the Agriculture,Fisheries and Conservation Department.The native subtropical evergreen broad leaf forest has been replaced by a complex patchwork of regenerating secondary forest in various stages of development, and plantations.Forest grades into woodland,shrubland,and then grassland at higher elevations.B.DataImages from two optical sensors were used in this paper.One image was obtained on October24,2007,by A VNIR-2from the ALOS-2satellite launched in January2006,and the other was collected on December31,2006,by the High-ResolutionFig.1.Study area and sample plots.Geometric(HRG)sensor of the SPOT-5Earth Observation Satellite launched in May2002(Table I).The instantaneous field of view of10m for the A VNIR-2multispectral sensor is the main improvement over the16-m resolution A VNIR. The SPOT-5HRG multispectral data add an improved spatial resolution(20to10m)compared with the previous SPOT-4 platform as well as an additional shortwave infrared band at 10m.With swath widths of70and60km,respectively,both A VNIR-2and SPOT-5HRG are suitable for regional scale mon-itoring and ideal for Hong Kong’s land area of ca.40×60km.III.M ETHODOLOGYThe methodology(Fig.2)of this paper comprises two parts, namely,allometric model development forfield biomass esti-mation and processing of A VNIR-2and SPOT-5images.A.Allometric Model DevelopmentDue to the lack of an allometric model for converting the trees measured in thefield to actual biomass,it was necessary to harvest,dry,and measure a representative sample of trees. Since Hong Kong’s forests are very diverse,the harvesting of a large sample was required.This was done by selecting the dominant tree species comprising a total of75trees in 4diameter at breast height(DBH)classes(less than10,10–15, 15–20,and20cm and above),and standard procedures were followed for tree harvesting[14],[128],[129].The harvested trees were separated into fractions,includ-ing leaves,twigs,small branches,large branches,and stem. After measuring the fresh weight(FW),representative sam-ples(Fig.3)from every part of the tree were taken for dry weight(DW)measurement in an oven at80◦C until a con-stant DW was obtained(Fig.3).The weight of every sample was estimated using the same electric weight balance at a 0.002-g precision.The ratio of DW to FW was calculated for every part of the samples using DW and FW of each part of the ing the ratio,DW was calculated for every part,and finally,the DW of each tree was calculated by summing the DW of all parts.Regression models used by previous researchers[20],[128] were tested in order tofind the bestfit by using DW as the dependent variable and DBH and height as independent variables in different combinations.Finally,using the log trans-formed DBH and DW,the bestfit model(Table II)was found, considering all test parameters including the correlation coef-ficient(r),the coefficient of determination(r2),the adjusted coefficient of determination(adjusted r2),and the rmse.Afit of approximately93.2%(adjusted r2of0.932)and an rmse of13.50were obtained for this bestfit model(Table II).This was deemed highly satisfactory in view of the great variety of tree species,and is similar to the accuracies of several other specialist forest inventories[20],[128].B.Field Plot Measurement and Field Biomass EstimationTo build a relationship between image parameters andfield biomass,50sample plots covering a variety of tree stand types were selected using purposive sampling.Circular plots with a15-m radius were determined by considering the image resolution(approximately10m),the orthorectification error, and the GPS positioning error.All sample plots were positioned within a homogenous area of the forest and at least15m distant from other features such as roads,water bodies,and other infrastructure.A Leica GS5+GPS was used to determine the center of each plot using the Differential Global Positioning System mode for accuracy within±3m.For a precise position, a Position Dilution of Precision value below four was always attempted.Both DBH and tree height were measured for all trees within the circular plot region.The DBH of the trees was measured at1.3m above the ground,and the heights of the small and large trees were measured by Telescopic-5and DIST pro4,respectively.Trees with a DBH below2.5cm were not included but were recorded.Finally,using the measured parameter DBH,the biomass of each tree and the biomass of all trees in a plot were estimated(Table III)using the allometric model developed for this study area.C.AVNIR-2and SPOT-5Data PreprocessingThe digital number values of the A VNIR-2and SPOT-5data were converted to spectral radiance using the conversion factors given in the image headerfiles.Orthorectification was carried out using the Satellite Orbital Math Model to compensate distortions such as sensor geometry,satellite orbit and attitude variations,Earth shape,rotation,and relief.In order to ensure an rms error within0.5pixel,a high-resolution(10m)digital elevation model and well-distributed ground control points were used for orthorectification.D.Texture AnalysisTexture is a function of local variance in the image,which is related to the spatial resolution and size of the dominant scene objects[130],and it can be used to identify these objects orTABLE IC HARACTERISTICS OF THED ATA U SED FOR T HIS PAPERFig.2.Overall methodology.regions of interest in any image [99],[131].Studies have shown that,in many cases,texture may be a more important source of information than reflectance or intensity,and this is especially true in high-resolution images (e.g.,[61],[69],[112],[132],and [133]).Thus,in forested landscapes,texture is dependent on the size and spacing of tree crowns,and on high-resolution images if a pixel falls on a tree,its neighbor may also fall on the same tree,resulting in a low local variance.As the resolution increases to a level that is comparable to the dominant tree crown size,the local variance increases,and this should be especially true in tropical forests with high species diversity,where stands are heterogeneous [130].Several methods and techniques for describing texture,based on statistical models,have been developed [51],[112],[113],[120].For this paper,two categories of texture measurement were selected to test their potential for biomass estimation with A VNIR-2and SPOT-5data (Table IV).The first one is the gray level co-occurrence matrix (GLCM)[99]along with some gray level difference vector based texture measurements.The second one is the sum and difference histogram proposed by theFig.3.Tree harvesting procedure for the allometric model development. authors in[134]as an alternative to the usual co-occurrence ma-trices used.Identifying suitable textures additionally involves the selection of moving window sizes[38],[127].A small window size exaggerates the difference within the window (local variance)but retains a high spatial resolution,while a large window may not extract the texture information efficiently due to over-smoothing of the textural variations.Because the resolution of the data used is high(approximately10m)and the forest structure in the study area is dense and compact, all texture measurements were performed using four small to medium window sizes from3×3to9×9.E.Statistical AnalysisTo represent the relationship betweenfield biomass and remotely sensed data,some researchers have used linear re-gression models with or without log transformation of thefield biomass data[28],[31],[34],[35],[65],[66],[83],while others have used multiple regression with or without stepwise selec-tion[18],[19],[26],[27],[37],[46],[69]–[71],[98].Nonlinear regression[45],[135],artificial neural networks[4],[25],[26], [136]–[138],semiempirical models[48],and nonparametric es-timation methods such as k-nearest neighbor and k-means clus-tering have also been widely used[139].Although no model can perfectly express this complex relationship,researchers are still using multiple regression models as one of the best choices.In this paper,simple linear regression and stepwise multiple-linear regression models were used to compare the data derived from all processing steps withfield biomass.The biomass data were collected from50field plots and were used as independent variables.The spectral reflectance of eachfield plot was extracted using an area-of-interest mask of3×3 pixels,for which the mean reflectance was calculated.In multiple regression modeling,difficulties such as mul-ticollinearity and overfitting may arise when a large number of independent variables are used,such that the independent variables are highly correlated with one another.To avoid overfitting problems as well as to ensurefinding the bestfit model,five common statistical parameters,namely,the corre-lation coefficient(r),the coefficient of determination(r2),the adjusted r2,the rmse,and the p-level(for the model),were computed.Another seven statistical parameters such as the beta coefficient(B),the standard error of B,the p-level,the toler-ance(Tol=Tolerance=1−R2x),the variance inflation factor (VIF j=1/1−R2j),the eigen value(EV),and the condition index(CI=k j=(λmax/λj),j=1,2,...,p)were calculated to test the interceptfitness and multicollinearity effects.To indicate multicollinearity problems,a tolerance value that is less than0.10[140],a VIF value that is greater than10[19], [140]–[142],an EV that is close to zero[142],[143],and a condition index that is greater than30[140],[142],[143]were used as determinants.F.Processing of the AVNIR-2and SPOT-5Data for Modeling The data of A VNIR-2and SPOT-5were processed in the following three steps.First Processing Step—Spectral Bands and Simple Band Ratio:To test the potential of the spectral reflectance of all bands of one sensor and both sensors together and the ratios, the following bands and simple band ratios were used in the model.1)The spectral reflectance extracted from all four bands ofANVIR-2and SPOT-5were used individually in a linear regression model,and all bands of a single sensor were used in a multiple regression model.2)The spectral reflectance extracted from all bands ofANVIR-2and SPOT-5and the principal component anal-ysis(PCA)of all bands of both sensors were used to-gether using a stepwise multiple regression model.3)The spectral reflectance extracted from all six simplespectral band ratios(1/2,1/3,1/4,2/3,2/4,and3/4)of both sensors was used individually in a simple regression model.Multiple regression models were also used to test all simple band ratios of each sensor together.4)The spectral reflectance extracted from all simple bandratios(1/2,1/3,1/4,2/3,2/4,and3/4)of both sensors was used together in stepwise multiple regression models. Second Processing Step—Modeling of the Texture Parame-ters:Fifteen types of texture measurements using four window sizes(from3×3to9×9)were used to generate the texture parameters from four spectral bands(each of the A VNIR-2and SPOT-5data).All texture-derived parameters were used in the model in the following manner.1)The texture parameters derived from each band of bothsensors and all texture parameters of a single sensor were used in the stepwise multiple regression model.2)The texture parameters derived from both sensors to-gether were used in the multiple regression model.3)The texture parameters derived from the PCA of bothsensors were used in the multiple regression model.4)The texture parameters derived from the band averagingof both sensors were used in the multiple regression model.Third Processing Step—Modeling the Simple Ratio of the Texture Parameters:In this processing step,six types of ratios (1/2,1/3,1/4,2/3,2/4,and3/4)were performed using theB EST F IT A LLOMETRIC MODELTABLE IIIDW (B IOMASS )D ISTRIBUTION OF S ELECTED F IELD PLOTStexture parameters of both sensors,and modeling was per-formed in the following ways.1)The parameters derived from each texture parameter ratio were used in the multiple regression model.2)The parameters derived from all six simple texture band ratios of an individual sensor were used in the multiple regression model.3)The parameters derived from all texture parameter ratios of both sensors were used in the stepwise multiple regres-sion model together.IV .R ESULTS AND A NALYSISThe field data collected from 50field plots showed a wide range of biomass levels from 52to 530t/ha.The average of ca.150t/ha biomass for our secondary forest study area is more than twice the biomass levels for other reported tropical secondary forests [86]and is representative of a wide variety of successional stages and tree sizes in the study area.For example,most forest is younger than 70years old,with a biomass below 200t/ha.The fewer plots above this level reflect the more restricted distribution of late successional stage forest.In all modeling processes,the 50field plots were used as the dependent variable,and the parameters derived from different processing steps (A VNIR-2and/or SPOT-5)were used as independent variables.The results of the three processing steps are presented as three separate sections.A.Performance of the Raw Bands and Simple Band Ratio The best estimates of biomass using simple spectral bands from A VNIR-2and SPOT-5,as well as different combinations of bands and PCA,produced only ca.50%usable accuracy.From the individual bands of both sensors,best result (r 2=0.494for A VNIR-2and r 2=0.316for SPOT-5)was obtained from the NIR bands,and the lowest performance (r 2=0.002for A VNIR-2and r 2=0.0345for SPOT-5)was obtained from the red bands [Fig.4(a)and (b)].The per-formance of the model (r 2)increased to 0.631and 0.503using all bands of A VNIR-2and SPOT-5,bining all bands of both sensors together was not able to produce a better performance because of a strong intercorrelation among bands.However,although multiband models appear to improve biomass estimation accuracy,the problem is that these models violate the assumption of uncorrelated independent variables and show strong multicollinearity effects (a CI that is more than 30)except for the PCA-based model which was only able to define field biomass with an accuracy of approxi-mately 50%.The simple band ratios of both sensors (individually and together)improved biomass estimation substantially [Fig.5(a)and (b)],with the highest r 2of 0.59being derived from the red/NIR ratio of A VNIR-2,compared to the highest perfor-mance for SPOT-5of r 2=0.387also from the red/NIR ratio.This improvement may be explained by the fact that ratios can enhance the vegetation signal while minimizing the solar irradiance,soil background,and topographic effects [90],[92],[93],[119],[144]–[152].In addition to the assessment of single band ratios,multiple regression models were developed using all simple band ratios of A VNIR-2and SPOT-5for each sensor individually and both together.The results [Fig.5(c)]showed a significant improvement in biomass estimation,with an r 2of 0.739obtained from the combined use of simple ratios of bothF ORMULAS OF THE T EXTURE M EASUREMENTS U SED IN T HIS PAPERsensors.However,as with the raw spectral bands,very strong multicollinearity effects were observed for all three models [Fig.5(c)]due to a strong correlation among the band ratios.In summary,the attempts to estimate biomass using sim-ple spectral bands of A VNIR-2and SPOT-5with different combinations of band ratios and PCA produced only ca.60%usable accuracy.The reasons for this can be explained as follows.1)The field biomass in this study area is very high (52–530t/ha).2)Although the near-infrared reflectance from a single leaf layer increases initially with increasing leaf cover,asFig.4.Accuracy of biomass estimation using rawdata.Fig.5.Accuracy of biomass estimation using a simple ratio of raw data.additional leaf layers are added to a canopy,these in-creases are not sustained [153].Concurrently,as the canopy matures,creating more layers and increasing in complexity,shadowing acts as a spectral trap for incom-ing energy and reduces the amount of radiation returning to the sensor [87],[154].This is a normal situation in tropical and subtropical forests with high biomass.A lower accuracy was also found using simple spectral bands in linear regression in many other studies [25],[26],[28],[31],[36],[80]–[82].3)Although we used 8spectral bands and 12simple band ratios from the two sensors,almost all bands and ratios were highly correlated,and as a result,the multiple re-gression model was found to be unsuitable because of the violation of the assumption of uncorrelated independent variables [25].Hence,we were unable to take advantage of the potential synergies between the different sensors for biomass estimation.4)Ratios and vegetation indices have been shown to be mainly useful in temperate and boreal forest regions [5],[37],[88],[96],[155]–[158],where forests have a relatively simple structure.In tropical and subtropical regions,the forest structure is very complex,and the relationship between the vegetation index and biomass is asymptotic [25],[159],especially in tropical forests with high biomass.Considering the moderate accuracy obtained so far,this paper decided to investigate further using the spatial characteristics of the images,particularly texture,for biomass estimation.Texture is an important variable,and it has already shown po-tential for biomass estimation using optical data [5],[38],[89],[115]–[118],[160].。

计算机视觉、机器学习相关领域论文和源代码大集合--持续更新……zouxy09@/zouxy09注:下面有project网站的大部分都有paper和相应的code。

Code一般是C/C++或者Matlab代码。