Towards knowledge acquisition from information extraction

- 格式:pdf

- 大小:98.70 KB

- 文档页数:14

知识来源于提问这解释英语作文Knowledge Comes from QuestioningCuriosity is the driving force behind the acquisition of knowledge. It is the innate desire to understand the world around us, to seek answers to our questions, and to uncover the mysteries that lie hidden. This insatiable thirst for knowledge has been the catalyst for countless discoveries and advancements throughout human history.At the heart of this process of knowledge acquisition is the act of questioning. By posing questions, we open the door to new perspectives, challenge existing beliefs, and push the boundaries of our understanding. Every question, no matter how simple or complex, has the potential to unlock a wealth of information and lead us down a path of deeper understanding.The process of questioning begins with a recognition of our own limitations. We acknowledge that there is much we do not know, and this awareness drives us to seek out the answers. This humility in the face of the unknown is a crucial component of the learning process, as it allows us to approach new information with an open and receptive mindset.Once we have identified a question, the real work begins. We must delve into research, investigate various sources, and critically analyze the information we uncover. This process requires a diverse set of skills, including critical thinking, analytical reasoning, and effective communication. By engaging in this rigorous exploration, we not only find the answers we seek but also develop a deeper understanding of the subject matter.One of the most powerful aspects of questioning is its ability to challenge our preconceptions and push us to think outside the box. When we question the status quo, we open ourselves up to new possibilities and alternative perspectives. This can lead to groundbreaking discoveries and innovative solutions to complex problems.For example, the scientific method, which is the foundation of modern scientific inquiry, is built upon the principle of questioning. Scientists begin with a hypothesis, a tentative explanation for a phenomenon, and then design experiments to test the validity of their assumptions. Through this process of questioning and experimentation, they gradually build a deeper understanding of the natural world.Similarly, in the realm of philosophy, the act of questioning has beeninstrumental in the development of our understanding of the human condition, the nature of reality, and the fundamental questions of existence. From the Socratic dialogues of ancient Greece to the existential musings of modern thinkers, the power of questioning has been a driving force in shaping our intellectual landscape.Beyond the realms of science and philosophy, the ability to question is equally crucial in our daily lives. When faced with a problem or a challenge, the act of questioning can help us identify the root causes, explore alternative solutions, and ultimately arrive at a more effective resolution.In the workplace, for instance, employees who are encouraged to ask questions and challenge assumptions are often more engaged, innovative, and productive. By fostering a culture of inquiry, organizations can tap into the collective intelligence of their workforce and drive continuous improvement.In our personal lives, the act of questioning can help us navigate complex decisions, uncover our true desires and values, and ultimately lead us towards a more fulfilling and meaningful existence. By questioning our own beliefs, biases, and assumptions, we can gain a deeper understanding of ourselves and the world around us.In conclusion, the ability to question is a fundamental aspect of thehuman experience. It is the driving force behind the acquisition of knowledge, the catalyst for innovation and discovery, and the key to personal growth and development. By embracing the power of questioning, we can unlock the full potential of our minds and continue to expand the boundaries of our understanding.。

AcquireIntroductionAcquire is a noun that is commonly used to refer to the act of gaining or obtaining something. This article explores the concept of acquisition in various contexts, including personal development, business, and education. It delves into the importance of acquisition and provides strategies for acquiring knowledge, skills, and assets.Importance of AcquisitionAcquisition plays a crucial role in personal and professional growth. It allows individuals to expand their knowledge, broaden their horizons, and improve their capabilities. By acquiring new information, skills, and assets, individuals can enhance their personal and professional lives, opening up new opportunities and avenues for success.Strategies for Knowledge Acquisition1.Read Widely: Reading is one of the most effective ways to acquireknowledge. By reading a diverse range of books, articles, andresources, individuals can gain insights into various subjects and perspectives.2.Seek Mentors: Finding mentors who are experts in a particularfield can accelerate the acquisition process. Mentors can provide guidance, share experiences, and offer valuable advice to helpindividuals acquire knowledge and skills more efficiently.3.Attend Workshops and Conferences: Participating in workshops andconferences allows individuals to acquire knowledge from industry experts and network with like-minded individuals. These eventsprovide a platform for learning, sharing ideas, and acquiringpractical skills.Strategies for Skill Acquisition1.Practice Regularly: Acquiring new skills requires consistentpractice. By allocating dedicated time to practice, individualscan improve their proficiency and mastery of a particular skill. 2.Take Courses and Training Programs: Enrolling in courses andtraining programs tailored to specific skills can providestructured learning opportunities. These programs often includehands-on exercises and assessments to accelerate skill acquisition.3.Find a Mentor or Coach: Similar to knowledge acquisition, havinga mentor or coach can significantly enhance skill acquisition.They can provide guidance, correct mistakes, and supportindividuals in their journey towards skill mastery.Strategies for Asset Acquisition1.Set Clear Goals: Before acquiring assets, it is essential todefine clear goals and outline the purpose of the acquisition.This helps individuals identify the assets they need to acquireand make informed decisions.2.Research and Due Diligence: Conduct thorough research and duediligence before acquiring an asset. This involves gatheringinformation, analyzing market trends, and evaluating potentialrisks and returns.work and Collaborate: Building a strong network andcollaborating with others can provide access to valuableopportunities for asset acquisition. Collaborative efforts canlead to shared resources, knowledge sharing, and mutual support. 4.Financial Planning: Acquiring assets often involves financialconsiderations. Developing a sound financial plan, includingbudgeting and investment strategies, is crucial for successfulasset acquisition.ConclusionAcquisition is a fundamental aspect of personal and professional growth. Whether it is acquiring knowledge, skills, or assets, individuals can apply various strategies to enhance their acquisition process. Byadopting a proactive and systematic approach, individuals can continually improve themselves, seize new opportunities, and achieve success in their chosen endeavors.。

参加会议的原因英语作文Title: Reasons for Attending a Conference。

Attending conferences serves as an invaluable opportunity for personal and professional growth. There are numerous compelling reasons why individuals choose to participate in such gatherings. Here, we explore some of the primary motivations behind attending conferences.1. Knowledge Acquisition: One of the foremost reasons for attending conferences is the opportunity to gain new knowledge. These events often feature keynote speakers, workshops, and presentations on cutting-edge research, industry trends, and innovative practices. Engaging with experts in the field and learning from their experiences can significantly expand one's understanding and expertise.2. Networking Opportunities: Conferences provide a platform for networking with peers, professionals, and industry leaders. Building and nurturing professionalrelationships can open doors to collaboration, career opportunities, and valuable insights. Networking at conferences facilitates the exchange of ideas, best practices, and potential collaborations, fostering a senseof community within the respective field or industry.3. Professional Development: Many conferences offer sessions specifically designed for professional development. These sessions may focus on honing skills such as leadership, communication, project management, or technical proficiency. Attending workshops and seminars gearedtowards professional growth can enhance one's capabilities and competencies, ultimately contributing to career advancement.4. Exposure to New Perspectives: Conferences attract attendees from diverse backgrounds, disciplines, and geographical locations. Engaging with individuals with varying viewpoints and experiences broadens one's perspective and fosters a deeper understanding of global issues and challenges. Exposure to new perspectives stimulates critical thinking and creativity, encouragingattendees to approach problems from different angles.5. Showcasing Research or Work: For academics, researchers, and professionals, conferences offer a platform to showcase their research findings, projects, or innovative solutions. Presenting at a conference allows individuals to receive feedback, validation, and recognition from peers and experts in the field. It also provides an opportunity to disseminate knowledge and contribute to the advancement of the respective discipline or industry.6. Stay Updated on Industry Trends: Industries and fields are constantly evolving, with new developments, technologies, and best practices emerging regularly. Conferences serve as a hub for staying updated on the latest trends, advancements, and disruptions within a particular sector. Attending sessions and discussions focused on industry-specific topics keeps participants informed and abreast of current trends, enabling them to adapt and thrive in a dynamic environment.7. Inspiration and Motivation: Conferences often feature inspirational keynote speakers who share their success stories, challenges overcome, and lessons learned. Such presentations can inspire and motivate attendees to pursue their goals, overcome obstacles, and strive for excellence in their endeavors. The collective energy and enthusiasm of like-minded individuals at conferences can reignite passion and commitment towards one's profession or field of interest.8. Business Opportunities: Conferences serve as afertile ground for exploring potential business opportunities, whether it be partnerships, collaborations, or new ventures. Meeting with representatives from other organizations, startups, or investors can lead to fruitful discussions and partnerships that may not have been possible otherwise. The networking aspect of conferences often extends beyond professional relationships to encompass business opportunities and ventures.In conclusion, attending conferences offers a myriad of benefits ranging from knowledge acquisition and networkingto professional development and business opportunities. Whether it's staying updated on industry trends, showcasing research, or seeking inspiration, these events play a crucial role in personal and professional growth. Embracing the opportunities presented by conferences can lead to enhanced skills, expanded networks, and newfound opportunities for advancement and success.。

Unit 8 Knowledge and Wisdom课文翻译综合教程三Knowledge and WisdomUnit 8 of the Translation Integrated Coursebook Three focuses on the theme of "Knowledge and Wisdom." In this unit, we explore the concept of knowledge and wisdom and discuss their significance in our lives.1. The Meaning of Knowledge and WisdomKnowledge and wisdom are two distinct yet interconnected concepts. Knowledge refers to the information, facts, and understanding that we gain through study, experience, and observation. It involves the accumulation of facts and information about various subjects or fields. On the other hand, wisdom is the ability to apply knowledge effectively in practical situations. It involves using our intellect, experience, and judgment to make wise decisions and choices.2. The Value of Knowledge and WisdomKnowledge and wisdom play essential roles in our personal and professional development. Knowledge allows us to broaden our understanding, enhance our skills, and solve problems. It empowers us to make informed decisions and improves the quality of our lives. However, knowledge alone may not be sufficient to navigate through life's challenges. Wisdom, with its emphasis on a deep understanding of human nature and the ability to make wise choices, ensures that knowledge is applied appropriately and ethically.Throughout history, humans have demonstrated a relentless pursuit of knowledge and wisdom. From ancient philosophers to modern scientists, the thirst for knowledge has led to significant discoveries and breakthroughs. This pursuit involves continuous learning, questioning, and seeking new perspectives. It also necessitates critical thinking and the ability to discern accurate information from falsehoods. Wisdom, in contrast, is acquired through experience and reflection. It requires self-awareness, empathy, and an ability to recognize patterns and anticipate outcomes.4. Knowledge and Wisdom in Different FieldsKnowledge and wisdom are applicable in various disciplines and areas of life. In academia, knowledge is acquired through rigorous study and research, while wisdom is developed through critical thinking and application of knowledge. In professions such as medicine and engineering, both knowledge and wisdom are crucial for making informed decisions and solving complex problems. In personal relationships, wisdom helps us navigate emotional dynamics and make sound judgments.5. Cultivating Knowledge and WisdomCultivating knowledge and wisdom is an ongoing process that requires dedication and effort. It starts with a thirst for learning and a commitment to continuous self-improvement. Reading books, attending seminars, and engaging in intellectual discussions can expand our knowledge base. Additionally, wisdom can be developed by reflecting on past experiences, seeking guidance from mentors, and practicing empathy and self-awareness.The acquisition and application of knowledge and wisdom have transformative effects on individuals and societies. Knowledge has driven technological advancements, scientific breakthroughs, and societal progress. It has the potential to improve our standard of living, promote equality, and advance global development. Wisdom, with its emphasis on ethical decision-making, can lead to more harmonious relationships, effective leadership, and sustainable solutions to complex problems.In conclusion, knowledge and wisdom are essential for personal and societal growth. While knowledge provides us with information and understanding, wisdom allows us to apply that knowledge effectively and ethically. The pursuit of knowledge and wisdom is a lifelong endeavor that enriches our lives and contributes to the betterment of society. Let us continue striving for knowledge and cultivating wisdom in our journey towards a more enlightened and fulfilling existence.。

教育是教授知识还是培育人才英语作文全文共3篇示例,供读者参考篇1Is Education About Imparting Knowledge or Nurturing Talent?This is a question that has puzzled educators, philosophers, and students alike for centuries. As a student myself, I've grappled with this dilemma throughout my academic journey. On one hand, the acquisition of knowledge seems like the fundamental purpose of education. We go to school to learn facts, theories, and skills that will equip us for future careers and life in general. Teachers impart their wisdom, and we diligently take notes, study for exams, and hopefully retain the information.On the other hand, I can't help but feel that reducing education to mere knowledge transmission is a gross oversimplification. Education, at its core, should be about unlocking human potential – nurturing the unique talents and abilities that lie within each individual. Rather than treating students as empty vessels to be filled with information, a trulyeffective education system should ignite our innate curiosity, creativity, and passion for learning.As I reflect on my own educational experiences, both perspectives ring true to some extent. There's no denying that I've acquired a vast amount of knowledge throughout my years in school – from mathematical formulas and scientific theories to historical events and literary analyses. This foundational knowledge has undoubtedly shaped my understanding of the world and prepared me for further academic and professional pursuits.However, some of my most profound learning experiences have come not from passively absorbing information, but from engaging in hands-on projects, thought-provoking discussions, and collaborative problem-solving. It's in these moments when I've felt my critical thinking skills sharpen, my creativity flourish, and my passion for learning ignite. These experiences have taught me that education is not just about accumulating knowledge, but about developing essential skills and nurturing the inherent talents that make each of us unique.Take, for example, my experience in a high school drama club. While I certainly learned about various acting techniques and theatrical styles (the knowledge component), the true valueof the experience lay in nurturing my confidence, public speaking abilities, and ability to empathize with different characters and perspectives. It was a space where I could explore my artistic talents and discover a newfound passion forself-expression.Or consider my involvement in a robotics club, where I not only learned about engineering principles and coding (the knowledge), but also honed my problem-solving, teamwork, and project management skills (the talent nurturing). These extracurricular activities allowed me to apply the knowledge I'd gained in the classroom to real-world contexts, while simultaneously fostering the soft skills and personal talents that are so crucial for success in any field.Of course, one could argue that imparting knowledge is a prerequisite for nurturing talent. After all, without a solid foundation of knowledge, how can we expect students to develop their talents and abilities effectively? This is a fair point, and it highlights the inherent interconnectedness of these two educational objectives.Ultimately, I believe that a truly holistic and effective education system must strike a balance between imparting knowledge and nurturing talent. Knowledge acquisition isundoubtedly essential, providing students with the building blocks they need to understand the world around them and develop specialized expertise in their areas of interest.But education should go beyond mere fact regurgitation. It should create an environment that encourages curiosity, fosters critical thinking, and empowers students to explore their unique strengths and passions. By blending knowledge transmission with talent cultivation, we can produce well-rounded individuals who not only possess a wealth of information but also have the skills, creativity, and drive to apply that knowledge in meaningful and innovative ways.Perhaps the true purpose of education is not to create repositories of knowledge, but rather to unlock the incredible potential that lies within each individual student. By imparting knowledge while simultaneously nurturing talent, we can empower young minds to not only understand the world but also to shape it, to not only absorb information but also to create, innovate, and problem-solve.As I prepare to embark on the next chapter of my educational journey, I carry with me a deep appreciation for the importance of both knowledge acquisition and talent cultivation.I recognize that true learning is not a passive process, but anactive one that requires engagement, curiosity, and a willingness to push beyond one's comfort zone.Education is not just about filling our minds with facts and figures, but about igniting our passion for learning, honing our unique abilities, and empowering us to become agents of positive change in the world. It's a delicate balance, but one that is essential for creating a generation of well-rounded, innovative, and adaptable individuals who can tackle the complex challenges of our rapidly changing world.篇2Is Education About Imparting Knowledge or Cultivating Talent?As a student, I have often pondered the fundamental purpose of education. Is it merely a means of accumulating knowledge and information, or does it serve a greater purpose of nurturing our inherent talents and abilities? This age-old debate has been the subject of countless discussions and philosophical musings, and it is a question that deserves careful consideration.At the core of this discourse lies the very essence of what it means to be educated. On one hand, the acquisition of knowledge is undoubtedly a crucial aspect of education. Withoutthe foundational understanding of various subjects and disciplines, it would be impossible to navigate the complexities of the world around us. Knowledge equips us with the tools to analyze, interpret, and comprehend the intricacies of our existence, shaping our perspectives and enabling us to make informed decisions.However, to reduce education solely to the dissemination of knowledge would be a grave disservice to its true potential. Education is not merely a repository of facts and figures; it is a transformative process that unlocks the innate talents and capabilities within each individual. It is a journey of self-discovery, where students are encouraged to explore their passions, nurture their curiosities, and develop their unique strengths.Talent, in its truest form, is a multifaceted tapestry woven from threads of creativity, critical thinking, problem-solving abilities, and a hunger for lifelong learning. Education should strive to cultivate these qualities, fostering an environment that celebrates diversity of thought, encourages intellectualrisk-taking, and empowers students to challenge conventional wisdom.In my experience, the most profound and lasting lessons have come not from the mere regurgitation of facts, but from theopportunities to apply knowledge in novel and innovative ways. It is through these practical applications that true understanding is born, and talents are allowed to flourish. A well-rounded education should strike a delicate balance between imparting knowledge and providing avenues for personal growth andself-expression.Furthermore, the cultivation of talent extends beyond the confines of the classroom. Education should equip students with the necessary skills to navigate the ever-changing landscape of the modern world. In an era of rapid technological advancement and globalization, adaptability, resilience, and a willingness to embrace change are paramount. By nurturing these traits, education empowers individuals to thrive in diverse environments and contribute meaningfully to society.It is important to acknowledge that knowledge and talent are not mutually exclusive; rather, they are inextricably intertwined. Knowledge serves as the foundation upon which talents are built, and talents, in turn, provide the impetus for the pursuit of knowledge. A truly holistic approach to education must recognize and nurture this symbiotic relationship, fostering an environment that values both the acquisition of knowledge and the cultivation of individual potential.Moreover, the role of education extends beyond the confines of the classroom and into the realm of personal growth and character development. It is through education that we learn to navigate the complexities of human relationships, develop empathy and understanding for diverse perspectives, and cultivate a sense of ethical responsibility towards ourselves and the world around us.In conclusion, education is not a mere vessel for transmitting knowledge; it is a transformative journey that shapes the very fabric of our being. While the imparting of knowledge is undoubtedly a crucial component, true education transcends the boundaries of pure academia and delves into the realms ofself-discovery, talent cultivation, and personal growth. It is a holistic process that nurtures the mind, body, and spirit, equipping individuals with the tools to not only understand the world but also to shape it in meaningful and impactful ways.As students, it is our responsibility to embrace education in its entirety, recognizing its power to unlock our full potential and empower us to become agents of positive change. By striking a harmonious balance between knowledge acquisition and talent cultivation, we can embark on a lifelong journey of learning,growth, and self-actualization, ultimately contributing to the betterment of ourselves and the world around us.篇3Is Education About Imparting Knowledge or Nurturing Talent?As a student, I've often pondered the fundamental purpose of education. Is it merely a means to acquire knowledge, or does it serve a greater purpose of cultivating and nurturing our inherent talents? This question has been a subject of intense debate among educators, philosophers, and policymakers throughout history.At first glance, the primary objective of education might seem to be the dissemination of knowledge. After all, schools are institutions where we learn about various subjects, from mathematics and sciences to literature and history. We are taught facts, theories, and concepts that expand our understanding of the world around us. However, upon closer examination, it becomes evident that education encompasses far more than just the transfer of information.Knowledge, while undoubtedly valuable, is ultimately a tool – a means to an end. It is the application and utilization of thatknowledge that truly matters. Education should not merely fill our minds with facts and figures but should equip us with the ability to think critically, analyze information, and synthesize ideas. It should foster our curiosity, encourage us to ask questions, and inspire us to seek answers.Moreover, education should nurture our inherent talents and help us discover our passions and strengths. Each individual possesses unique abilities and aptitudes, and it is the role of education to identify and cultivate these talents. A truly effective educational system recognizes that students are not blank slates but rather individuals with diverse interests, learning styles, and potential.By nurturing talent, education empowers us to pursue our dreams and aspirations. It provides us with the necessary skills and knowledge to excel in our chosen fields, whether it be in the arts, sciences, or any other discipline. When education is tailored to our talents, it becomes a transformative force, unlocking our potential and enabling us to contribute meaningfully to society.Furthermore, education should not be confined to the realm of academics alone. It should also encompass the development of essential life skills, such as communication, teamwork, problem-solving, and emotional intelligence. These skills areinvaluable in navigating the complexities of our personal and professional lives, and their cultivation should be an integral part of the educational experience.In my opinion, the true purpose of education is to strike a balance between imparting knowledge and nurturing talent. Knowledge provides the foundation upon which talents can flourish, while talents serve as the catalyst for applying that knowledge in meaningful and innovative ways.By embracing this holistic approach, education can become a transformative journey of self-discovery and personal growth. It can empower us to not only acquire knowledge but also to develop our talents, hone our skills, and cultivate the confidence and resilience necessary to navigate the challenges of the modern world.Ultimately, education should be a catalyst for unlocking human potential, fostering creativity, and inspiring individuals to pursue their passions. It should equip us with the tools to think critically, solve problems, and contribute positively to society. When knowledge and talent are nurtured harmoniously, education transcends mere memorization and becomes a powerful force for individual and societal transformation.As students, it is our responsibility to actively engage in the educational process, to embrace both the acquisition of knowledge and the cultivation of our talents. By doing so, we can truly unlock our full potential and become the architects of our own destinies, contributing to the betterment of our communities and the world at large.。

乘勇气之船,扬智慧之帆的英语作文800字全文共3篇示例,供读者参考篇1Embarking on Life's Voyage: Riding the Ship of Courage and Unfurling the Sail of WisdomAs I stand on the precipice of adulthood, gazing out at the vast expanse of possibilities that lie ahead, I am reminded of a profound metaphor – life as a voyage across uncharted waters. This journey, fraught with uncertainties and challenges, demands two essential elements: courage and wisdom. Without courage, we risk remaining anchored in the safety of the harbor, never venturing forth to explore the boundless horizons. Without wisdom, we may find ourselves adrift, lacking the navigational skills to steer through the turbulent seas.Courage, that intrepid force that propels us forward, is the ship upon which we must embark. It is the vessel that carries us through the storms of adversity, the headwinds of doubt, and the treacherous currents of fear. Courage is not the absence of apprehension but the willingness to confront it, to embrace the unknown with a resolute spirit. It is the fuel that ignites ourdetermination, empowering us to take calculated risks and pursue our dreams with unwavering conviction.Yet, courage alone is insufficient for a successful voyage. We must also unfurl the sail of wisdom, harnessing the winds of knowledge and experience to guide our course. Wisdom is the compass that orients us, the lighthouse that illuminates our path, and the anchor that grounds us in times of turbulence. It is the accumulation of lessons learned, the synthesis of diverse perspectives, and the ability to discern truth from falsehood.Wisdom is not merely the acquisition of information but the judicious application of that knowledge. It is the capacity to navigate the complexities of life, to weigh the consequences of our actions, and to make informed decisions that align with our values and principles. Wisdom is the bridge that connects our aspirations to reality, enabling us to chart a course that is both ambitious and pragmatic.As I contemplate the voyage ahead, I am acutely aware of the importance of striking a delicate balance between courage and wisdom. Courage without wisdom can lead to recklessness, while wisdom without courage may result in stagnation. It is the harmonious interplay of these two forces that propels us forward,enabling us to seize opportunities while mitigating risks, to embrace change while maintaining a sense of purpose.The journey of life is replete with challenges, but it is also abundant with opportunities for growth, self-discovery, and fulfillment. Each obstacle we encounter is a chance to test our mettle, to forge ahead with resilience and determination. Every triumph, no matter how small, is a testament to our courage and a validation of the wisdom we have acquired along the way.As I prepare to set sail, I am emboldened by the knowledge that I am not alone on this voyage. I am surrounded by a community of fellow voyagers, each with their own dreams, aspirations, and experiences to share. Together, we can weather the storms, navigate the currents, and chart a course towards our desired destinations, supporting and inspiring one another along the way.In the end, the true measure of success lies not in the destination itself but in the journey itself – the lessons learned, the challenges overcome, and the personal growth attained. It is in the act of courageously embarking on this voyage and unfurling the sail of wisdom that we discover our true potential, our resilience, and our capacity to shape our own destinies.So, let us embrace the call of the open waters, fortified by our courage and guided by our wisdom. For it is in the pursuit of this grand adventure that we truly live, that we truly discover the depths of our character and the boundless possibilities that await us. The voyage may be long, but the rewards are immeasurable – a life lived with purpose, passion, and an enduring sense of fulfillment.篇2Setting Sail on the Ship of Courage, Hoisting the Sails of WisdomAs I stand on the precipice of my academic journey, the vast ocean of knowledge stretches out before me, both exhilarating and daunting. It is a journey that demands not only intellectual prowess but also an unwavering spirit of courage and resilience. For it is only by embracing these virtues that we can truly set sail on the ship of courage and hoist the sails of wisdom.The pursuit of knowledge is a noble endeavor, but it is not without its challenges. The path is often riddled with obstacles, from the complexities of theoretical concepts to the frustrations of practical applications. It is in these moments that the flame ofcourage must burn brightest, fueling our determination to overcome every hurdle that stands in our way.Courage, however, is not merely the absence of fear; it is the willingness to confront our fears head-on, to embrace the unknown with open arms. It is the audacity to question established norms, to challenge conventional wisdom, and to forge new paths where none existed before. It is the unwavering belief that our dreams are worth fighting for, and that no obstacle is too great to be surmounted.Yet, courage alone is not enough to navigate the treacherous waters of learning. It must be complemented by the guiding light of wisdom – the ability to discern truth from falsehood, to separate the wheat from the chaff, and to synthesize disparate pieces of information into a cohesive whole.Wisdom is the compass that steers us through the turbulent seas of knowledge, helping us to chart a course that is both effective and ethical. It is the voice that whispers in our ear, reminding us to approach each challenge with humility and an open mind, to embrace diverse perspectives, and to constantly question our own assumptions.But wisdom is not merely a matter of intellectual prowess; it is also a reflection of our character, our values, and our moralfiber. It is the ability to discern right from wrong, to uphold principles of integrity and compassion, and to use our knowledge for the betterment of humanity.As we embark on this journey, we must embrace both courage and wisdom as our constant companions. We must have the courage to venture into uncharted territories, to grapple with complex ideas, and to challenge the status quo. And we must have the wisdom to navigate these waters with discernment, to separate truth from falsehood, and to use our knowledge in a way that uplifts and empowers others.The path ahead is not an easy one, but it is a path that promises great rewards. For those who embrace the ship of courage and hoist the sails of wisdom will not only attain academic excellence but will also cultivate the skills and character necessary to make a lasting impact on the world.They will become the innovators, the leaders, the visionaries who shape the course of human progress. They will be the ones who push the boundaries of knowledge, who challenge conventional thinking, and who inspire others to follow in their footsteps.So, let us embrace this journey with open hearts and open minds. Let us set sail on the ship of courage, hoisting the sails ofwisdom high, confident in the knowledge that the rewards of this voyage will far outweigh its challenges. For it is in the pursuit of knowledge that we truly discover our potential, and it is through the marriage of courage and wisdom that we can create a better world for all.篇3Sailing Uncharted Waters: A Student's Journey of Courage and WisdomAs a student, the voyage of life often feels like navigating uncharted waters – vast, uncertain, and teeming with challenges that test our mettle. Yet, it is in these very moments that we must summon the courage to climb aboard the ship of daring and unfurl the sails of wisdom, charting a course towards growth, self-discovery, and personal fulfillment.Courage, that intrepid force that propels us forward in the face of adversity, is the wind that fills our sails. It is the unwavering determination to confront our fears, to step outside our comfort zones, and to embrace the unknown. Every academic challenge, every daunting assignment, every looming deadline – these are the waves that threaten to capsize ourvessel. But it is courage that steadies our resolve, empowering us to weather the storms and emerge victorious.I vividly recall the trepidation that gripped me as I embarked on my first public speaking endeavor. The mere thought of standing before a sea of expectant faces, my voice trembling like a leaf in the wind, filled me with dread. Yet, it was in that moment that I realized the true power of courage. By summoning the strength to face my fears head-on, I not only conquered my stage fright but also discovered a newfound confidence that has since propelled me through countless challenges.Wisdom, on the other hand, is the compass that guides our journey, illuminating the path ahead and helping us navigate the treacherous shoals of ignorance and misinformation. It is the accumulated knowledge and understanding that we acquire through diligent study, critical thinking, and a willingness to learn from our experiences.As students, we are constantly exposed to a deluge of information, a vast ocean of data that threatens to overwhelm us. It is wisdom that allows us to discern truth from falsehood, to separate the profound from the superficial, and to synthesize disparate concepts into a cohesive tapestry of understanding. The pursuit of wisdom is a lifelong endeavor, a never-endingquest to expand the horizons of our intellect and deepen our appreciation for the complexities of the world around us.Yet, courage and wisdom are not mere abstract concepts; they are the very essence of personal growt。

The Power of Mind Mapping: Unlocking Creativity and Enhancing LearningIn the realm of knowledge acquisition and information processing, mind mapping has emerged as a powerful toolthat not only enhances learning but also stimulates creativity. Its roots trace back to the early 20th century, but its popularity has skyrocketed in recent years, thanks to the digital revolution and its seamless integration into modern education and workplace cultures.Mind mapping is a visual representation of thoughts and ideas, typically presented as a branching diagram. Itstarts with a central idea or concept, and branches outinto related subtopics, each connected by lines and arrows. This visual layout not only helps to organize information but also makes it easier to identify patterns, relationships, and connections that might not be apparent in a traditional linear text format.The beauty of mind mapping lies in its adaptability and flexibility. It can be used for a wide range of tasks, from planning a project or an essay to brainstorming for a new business idea. The process of creating a mind map oftenleads to unexpected insights and creative breakthroughs, as it encourages free-flowing association and lateral thinking. In the academic world, mind mapping has been shown to improve retention and recall of information. Byrepresenting knowledge visually, it helps students tobetter understand and internalize complex concepts. It also acts as a powerful revision tool, allowing learners to quickly review and consolidate their understanding of a subject.In the professional sphere, mind mapping is aninvaluable tool for project management and decision-making. It helps teams to visualize their workflow, identify potential bottlenecks, and brainstorm solutions. The visual nature of mind mapping also facilitates collaboration and communication, ensuring that all members of the team are on the same page and working towards the same goals.Moreover, mind mapping is not limited to individual learning or team collaboration. It can also be used as a personal development tool to enhance self-awareness andgoal setting. By mapping out one's thoughts and aspirations,individuals can gain a clearer understanding of their strengths, weaknesses, and areas for improvement.In conclusion, mind mapping is a powerful tool that unlocks the potential of both individuals and teams. It enhances learning, stimulates creativity, and improves decision-making. As we increasingly rely on technology to process and present information, the role of mind mappingin education, work, and personal development is becoming increasingly important. Its adaptability and flexibility make it a universal tool that can be used across a wide range of contexts and applications. As we embrace thefuture of learning and collaboration, mind mapping will undoubtedly play a pivotal role in helping us to achieveour full potential.**思维导图的力量:激发创造力,提升学习效果**在知识获取和信息处理领域,思维导图作为一种强大的工具,不仅增强了学习效果,还激发了创造力。

做好准备再出发英语作文开头Prepare: The Key to Embarking on a Fruitful Journey.Preparation is the cornerstone of any successful endeavor. It provides the foundation upon which we build,the blueprint that guides our actions, and the confidence that propels us forward. Without adequate preparation, our aspirations remain mere pipe dreams, and our potential remains untapped.Just as a skilled craftsman carefully gathers his tools before embarking on a project, so too must we meticulously prepare ourselves before undertaking any significant task. Preparation is not merely a formality; it is an essential process that transforms aspirations into tangible realities.The Importance of Planning.In the realm of preparation, planning holds the utmost importance. It allows us to envision our goals, identifypotential obstacles, and develop strategies to overcome them. A well-conceived plan serves as a roadmap, guiding us along the path to success and preventing us from veeringoff course.Without a plan, our actions become fragmented and uncoordinated, like a ship lost at sea. We may expend a great deal of effort, but if it is not directed towards a clear objective, it will ultimately prove futile. Planning provides the necessary structure and direction to harness our energy and ensure that our efforts are aligned with our aspirations.Knowledge Acquisition.Preparation also entails the acquisition of knowledge and skills relevant to the task at hand. Whether it involves mastering a new subject, developing a specific expertise, or honing our physical abilities, knowledge is the fuel that powers our actions. Ignorance, on the other hand, can be a significant impediment, hindering our progress and leading to costly mistakes.In an ever-changing world, the pursuit of knowledge should be an ongoing endeavor. We must constantly seek out new information, expand our horizons, and enhance our understanding of the world around us. Knowledge empowers us to make informed decisions, adapt to unforeseen circumstances, and navigate the complexities of our surroundings.Physical and Mental Preparation.For many endeavors, both physical and mental preparation are essential. Physical preparation ensuresthat our bodies are capable of meeting the demands of the task. It involves conditioning, training, and establishing a healthy lifestyle. Mental preparation, on the other hand, focuses on developing the psychological resilience, focus, and determination necessary to overcome challenges and stay the course.Physical and mental preparation are intertwined and mutually reinforcing. A strong body supports a strong mind,and a healthy mind enhances physical capabilities. By addressing both aspects of preparation, we create aholistic foundation that enables us to perform at our peak and persevere in the face of adversity.Overcoming Fear and Self-Doubt.Fear and self-doubt are common obstacles to preparation. They can paralyze us with apprehension and prevent us from taking the necessary steps towards success. However, it is important to recognize that fear and self-doubt are not insurmountable barriers. They are merely challenges thatcan be overcome with determination and self-belief.Preparation empowers us to confront fear and self-doubt head-on. By gaining knowledge, developing skills, and building confidence, we gradually chip away at the walls of fear and replace it with a sense of assurance and capability. Preparation provides us with the means tobelieve in ourselves and our ability to achieve our goals.Conclusion.Preparation is not about attaining perfection or eliminating all risks. It is about creating a solid foundation, gaining the knowledge and skills necessary for success, and developing the confidence to overcome challenges. By embracing the principles of preparation, we empower ourselves to embark on fruitful journeys, achieve our aspirations, and live lives of purpose and fulfillment.。

青少年教育的好处和坏处英语作文全文共3篇示例,供读者参考篇1Teenage education plays a crucial role in shaping the future of individuals and society. It has both benefits and drawbacks that need to be considered. In this essay, we will explore the advantages and disadvantages of teenage education.Firstly, the benefits of teenage education are numerous. Education provides teenagers with knowledge and skills that are essential for their personal and professional development. It helps them to become more knowledgeable and informed individuals who are better equipped to make informed decisions. Teenagers who receive a good education have better chances of securing stable and fulfilling employment in the future. Education also instills important values such as responsibility, discipline, and respect for others.Furthermore, teenage education can also have positive social impacts. Educated teenagers are more likely to be engaged citizens who contribute positively to their communities. They are better equipped to understand complex social issuesand work towards finding solutions. Education can also help reduce poverty and inequality by empowering teenagers with the means to lift themselves out of difficult circumstances.On the other hand, teenage education also has its drawbacks. One of the main disadvantages is the pressure and stress that many teenagers face in the modern education system. The emphasis on academic achievement can lead to high levels of stress and anxiety among students. This can have negative effects on their mental health and well-being. Additionally, the rigid structure of the education system can limit teenagers' creativity and individuality. It may stifle their natural talents and interests in favor of conformity to standardized curricula.Moreover, some critics argue that the traditional education system is outdated and fails to prepare teenagers for the complexities of the modern world. They argue that the focus on rote memorization and standardized testing does not adequately equip teenagers with the skills they need to succeed in a rapidly changing global economy. This can lead to a mismatch between the skills that teenagers acquire in school and the skills that are in demand in the labor market.In conclusion, teenage education has both benefits and drawbacks. While education provides teenagers with importantknowledge and skills, it can also lead to stress and anxiety. It is important for educators and policymakers to consider these factors and strive to create an education system that maximizes the benefits of education while minimizing the drawbacks. By doing so, we can ensure that teenage education continues to be a positive force for individuals and society as a whole.篇2The Benefits and Drawbacks of Teenage EducationIntroductionTeenage education plays a crucial role in shaping the future of individuals and society as a whole. It provides young people with the knowledge, skills, and values necessary to navigate the challenges of adulthood and contribute positively to their communities. However, like any form of education, it has both benefits and drawbacks that need to be considered.Benefits of Teenage Education1. Academic Achievement: Teenage education helps young people develop the academic skills needed to succeed in school and beyond. It teaches them how to think critically, solve problems, and communicate effectively.2. Social Development: Education provides teenagers with opportunities to form meaningful relationships with peers and adults, fostering social skills and emotional intelligence. It also exposes them to diverse perspectives, cultures, and experiences, promoting tolerance and empathy.3. Personal Growth: Through education, teenagers can discover their interests, passions, and strengths, leading to increased self-confidence and motivation. It also encourages them to set and achieve goals, nurturing a sense of purpose and fulfillment.4. Career Opportunities: A solid education opens doors to a wide range of career options, allowing teenagers to pursue their professional aspirations and secure financial stability. It equips them with the knowledge and skills necessary to compete in a global economy.Drawbacks of Teenage Education1. Stress and Pressure: The demands of teenage education can create high levels of stress and anxiety for students, leading to burnout and mental health problems. The pressure to excel academically and meet societal expectations can take a toll on their well-being.2. Lack of Creativity: Traditional education systems often prioritize rote memorization and standardized testing over creativity and innovation. This can stifle teenagers' creativity and restrict their ability to think outside the box.3. Narrow Focus: Some educational programs have a narrow focus on academic subjects, neglecting important life skills such as financial literacy, emotional intelligence, and critical thinking. This can limit teenagers' overall development and preparedness for the real world.4. Inequality: Not all teenagers have equal access to quality education due to socioeconomic disparities, discriminatory practices, and inadequate resources. This perpetuates inequality and hinders social mobility, creating barriers to opportunities for marginalized youth.ConclusionIn conclusion, teenage education offers numerous benefits in terms of academic achievement, social development, personal growth, and career opportunities. However, it also has drawbacks such as stress and pressure, lack of creativity, narrow focus, and inequality that need to be addressed. By recognizing and addressing these challenges, we can create a more inclusiveand effective education system that empowers all teenagers to reach their full potential.篇3The Benefits and Drawbacks of Teenage EducationIntroductionEducation is paramount in shaping the future of individuals. For teenagers, education plays a crucial role in their development and readiness for adulthood. However, while education has numerous benefits, it also comes with its fair share of drawbacks that need to be considered. In this essay, we will explore the advantages and disadvantages of teenage education.Benefits of Teenage Education1. Knowledge Acquisition: Perhaps the most obvious benefit of teenage education is the acquisition of knowledge. Education equips teenagers with the necessary information and skills to navigate the world around them. From science and math to literature and history, education broadens their horizons and empowers them to make informed decisions.2. Critical Thinking: Education encourages teenagers to think critically and analyze information objectively. By engaging in discussions, debates, and problem-solving activities, students learn how to evaluate different perspectives and come towell-reasoned conclusions. This critical thinking skill is invaluable in both academic and real-world settings.3. Personal Development: Education is not just about academics; it also plays a crucial role in personal development. Teenagers learn important life skills such as time management, organization, and communication through their educational experiences. These skills are essential for success in both their academic and professional pursuits.4. Career Opportunities: A good education opens doors to a wide range of career opportunities for teenagers. By pursuing higher education or vocational training, students increase their chances of finding fulfilling and well-paying jobs in the future. Education provides them with the necessary qualifications and credentials to pursue their desired career paths.Drawbacks of Teenage Education1. Stress and Pressure: The demands of education can put immense stress and pressure on teenagers. From looming deadlines to rigorous exams, students often feel overwhelmedby the workload and expectations placed on them. This stress can have negative effects on their mental health and well-being.2. Standardized Testing: The emphasis on standardized testing in education can be a double-edged sword for teenagers. While tests are designed to assess students' knowledge and skills, they can also lead to a narrow focus on memorization and regurgitation of information. This narrow approach to learning may hinder the development of creativity and critical thinking skills.3. Social Isolation: The traditional education system often limits teenagers' social interactions to their peers in the classroom. This can lead to social isolation and a lack of diversity in their social circles. Teenagers may miss out on valuable opportunities to interact with people from different backgrounds and cultures, which can limit their personal growth and development.4. Financial Burden: Pursuing higher education can be a significant financial burden for teenagers and their families. With the rising costs of tuition, textbooks, and living expenses, many students struggle to afford a quality education. This financial strain can lead to student debt and limit teenagers' future opportunities.ConclusionIn conclusion, teenage education has both benefits and drawbacks that need to be considered. While education provides teenagers with knowledge, critical thinking skills, and personal development opportunities, it can also lead to stress, standardized testing pressures, social isolation, and financial burdens. It is important for educators, parents, and policymakers to address these challenges and create a supportive and holistic learning environment for teenagers. By recognizing and addressing the drawbacks of teenage education, we can ensure that students receive a well-rounded and fulfilling educational experience.。

a knowledge of用法-回复"Using Knowledge: A Step-by-Step Guide"Introduction:Knowledge is a powerful tool that enables us to understand the world around us, make informed decisions, and solve problems. It provides us with the necessary skills and insights to navigate through life effectively. In this article, we will explore the various ways knowledge can be applied and how it impacts different aspects of our lives.Step 1: Acquiring KnowledgeKnowledge acquisition is the first step towards using knowledge effectively. There are multiple sources and methods to acquire knowledge. It can be gained through formal education, reading books, conducting research, online courses, attending seminars, or even through personal experiences. The key is to be open to learning and embrace opportunities for knowledge acquisition.Step 2: Organizing and Structuring KnowledgeOnce knowledge is acquired, it needs to be organized and structured for effective utilization. This can be done by categorizing information into relevant topics, creating mental maps or diagrams, or using digital tools such as note-taking apps or online platforms. By organizing knowledge, we can retrieve and apply it more efficiently when needed.Step 3: Critical Thinking and AnalysisUsing knowledge requires critical thinking and analysis. It involves evaluating information, identifying biases or fallacies, and making logical connections between different concepts or ideas. Critical thinking helps us to identify the validity and reliability of information before utilizing it. It allows us to filter out misinformation and make informed decisions based on evidence and facts.Step 4: Problem-Solving and Decision MakingOne of the most valuable applications of knowledge is in problem-solving and decision making. When faced with achallenge or a decision, we can draw upon our existing knowledge or seek new knowledge to find solutions. Knowledge equips us with the ability to identify patterns, evaluate options, and anticipate potential outcomes. Through this process, we can make better decisions and solve problems more effectively.Step 5: Sharing and CollaboratingKnowledge becomes more powerful when it is shared and collaborated upon. By sharing knowledge with others, we not only contribute to their growth but also broaden our own understanding. Collaboration allows us to benefit from diverse perspectives and expertise. It fosters innovation, creativity, and the development of new ideas. Through sharing and collaborating, knowledge becomes a collective asset that enriches society as a whole.Step 6: Continuous LearningKnowledge is not static; it is constantly evolving. To effectively use knowledge, it is essential to embrace lifelong learning. This involves staying updated with new information, developments, andadvancements in various fields. By continuously learning, we can enhance our existing knowledge, adapt to changes, and remain competitive in an ever-evolving world.Conclusion:In conclusion, knowledge is a valuable resource that empowers us to thrive in a complex and fast-paced world. By following these steps - acquiring knowledge, organizing and structuring it, applying critical thinking, problem-solving, sharing and collaborating, and embracing continuous learning - we can optimize the use of knowledge in our lives. Whether it is making informed decisions, solving problems, or fostering innovation, knowledge forms the foundation for success and growth. So, let us embrace knowledge and harness its power to shape a better future for ourselves and the world.。

信息时代英语作文In the era of information, English has become an indispensable tool for communication and knowledge acquisition. The rapid development of technology has made it easier than ever to access English content, from online courses and educational platforms to international news and social media.The ability to write in English is a skill that can open doors to a world of opportunities. Whether it is for academic purposes, professional growth, or personal enrichment, mastering English composition is crucial. Here are some tips to enhance your English writing skills:1. Read Widely: Reading a variety of materials in Englishwill expose you to different writing styles and vocabulary. It helps you understand sentence structure and the flow of information.2. Practice Regularly: Writing is a muscle that needs constant exercise. Set aside time each day to write, whether it's a journal entry, a letter, or an essay.3. Learn Grammar: A strong foundation in English grammar is essential for clear and effective writing. Make sure you understand the rules of syntax, punctuation, and sentence construction.4. Expand Your Vocabulary: A rich vocabulary allows you to express your thoughts more precisely and creatively. Use a dictionary and thesaurus to find synonyms and learn new words.5. Revise and Edit: Never submit your first draft. Take the time to revise and edit your work. Look for grammatical errors, unclear sentences, and areas where you can improveyour argument or description.6. Seek Feedback: Share your writing with others and ask for constructive criticism. Feedback can provide valuableinsights into how to improve your writing.7. Use Technology: There are many tools available today that can help with writing in English, from spell checkers and grammar checkers to writing assistants and onlinedictionaries.8. Write with Purpose: Every piece of writing should have a clear purpose. Whether you are writing to inform, persuade,or entertain, make sure your writing is focused and directed towards that goal.9. Be Creative: Don't be afraid to express your unique voice and perspective. Creativity can make your writing stand out and be more engaging.10. Stay Updated: Keep up with current events and new developments in your field of interest. This will give you fresh ideas and topics to write about.In conclusion, writing in English in the information age is not just about language proficiency; it's also about being able to convey your thoughts effectively and engagingly. With practice and dedication, you can become a proficient English writer.。

Towards Knowledge Acquisition from Information Extraction

Chris Welty and J. William Murdock IBM Watson Research Center Hawthorne, NY 10532 {welty, murdock}@us.ibm.com

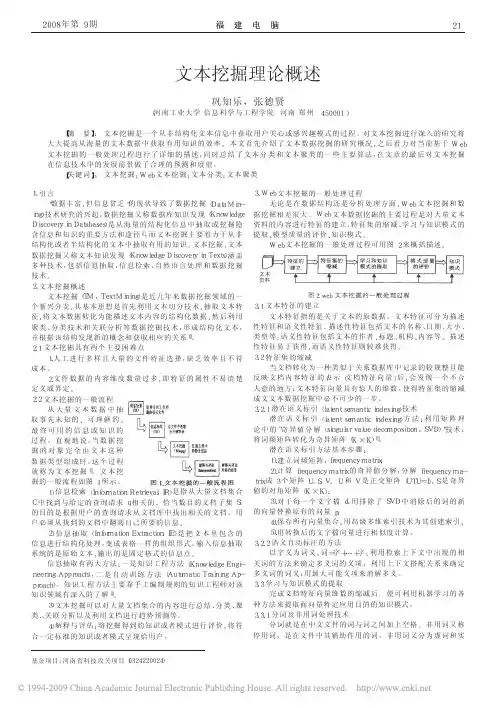

Abstract. In our research to use information extraction to help populate the semantic web, we have encountered significant obstacles to interoperability between the technologies. We believe these obstacles to be endemic to the basic paradigms, and not quirks of the specific implementations we have worked with. In particular, we identify five dimensions of interoperability that must be addressed to successfully populate semantic web knowledge bases from information extraction systems that are suitable for reasoning. We call the task of transforming IE data into knowledge-bases knowledge integration, and briefly present a framework called KITE in which we are exploring these dimensions. Finally, we report on the initial results of an experiment in which the knowledge integration process uses the deeper semantics of OWL ontologies to improve the precision of relation extraction from text.

Keywords: Information Extraction, Applications of OWL DL Reasoning 1 Introduction Ontologies describe the kinds of phenomena (e.g., people, places, events, relationships, etc.) that can exist. Reasoning systems typically rely on ontologies to provide extensive formal semantics that enable the systems to draw complex conclusions or identify unintended models. In contrast, systems that extract information from text (as well as other unstructured sources such as audio, images, and video) typically use much lighter-weight ontologies to encode their results, because those systems are generally not designed to enable complex reasoning. We are working on a project that is exploring the use of large-scale information extraction from text to address the “knowledge acquisition bottleneck” in populating large knowledge-bases. This is by no means a new idea, however our focus is less on theoretical properties of NLP or KR systems in general, and more on the realities of these technologies today, and how they can be used together. In particular, we have focused on state-of-the art text extraction components, many of which consistently rank in the top three at competitions such as ACE (Luo, et al, 2004) and TREC (Chu-Carroll, et al, 2005), that have been embedded in the open-source Unstructured Information Management Architecture (UIMA) (Ferrucci & Lally, 2004), and used to populate semantic-web knowledge-bases. This, too, is not a particularly new idea; recent systems based on GATE (e.g. (Popov, et al 2004)) have been exploring the production of large RDF repositories from text. In our project, however, we are specifically focused on the nature of the data produced by information extraction techniques, and its suitability for reasoning. Most systems that we have come across (see the related work section) do not perform reasoning (or perform at best the most simplistic reasoning) over the extracted knowledge stored in RDF, as the data is either too large or too imprecise. This has led many potential adopters of semantic web technology, as well as many people in the information extraction community, to question the value of the semantic web (at least for this purpose). We believe this community can be important in helping drive adoption of the semantic web. In this paper we will discuss our general approach to generating OWL knowledge-bases from text, present some of the major obstacles to using these knowledge-bases with OWL- and RDF-based reasoners, and describe some solutions we have used. Our research is not in information extraction, ontologies, nor reasoning, but in their combination. Our primary goal is to raise awareness of the real problems presented by trying to use these technologies together, and while we present some solutions, the problems are far from solved and require a lot more attention by the community.

2 Related Work Research on extraction of formal knowledge from text (e.g., Dill, et al. 2003) typically assumes that text analytics are written for the ontology that the knowledge should be encoded in. Building extraction directly on formal ontologies is particularly valuable when the extraction is intended to construct or modify the original ontology (Maynard, Yankova, et al. 2005; Cimiano & Völker, 2005). However, there is a substantial cost to requiring text analytics to be consistent with formal ontology languages. There are many existing systems that extract entities and relations from text using informal ontologies that make minimal semantic commitments (e.g., Marsh, 1998; Byrd & Ravin, 1999; Liddy, 2000; Miller, et al., 2001; Doddington, et al., 2004). These systems use these informal ontologies because those ontologies are relatively consistent with the ambiguous ways concepts are expressed in human language and are well-suited for their intended applications (e.g., document search, content browsing). However, those ontologies are not well-suited to applications that require complex inference. Work on so-called ontology-based information extraction, such as compete in the ACE program, (e.g. (Cunningham, 2005), (Bontcheva, 2004)) and other semantic-web approaches like (Maynard, 2005), (Maynard, et al, 2005), and (Popov, et al, 2004), focus on directly populating small ontologies that have a rich and well-thought out semantics, but very little if any formally specified semantics (e.g. using axioms). The ontologies are extensively described in English, and the results are apparently used mainly for evaluation and search, not to enable reasoning. Our work differs in that we