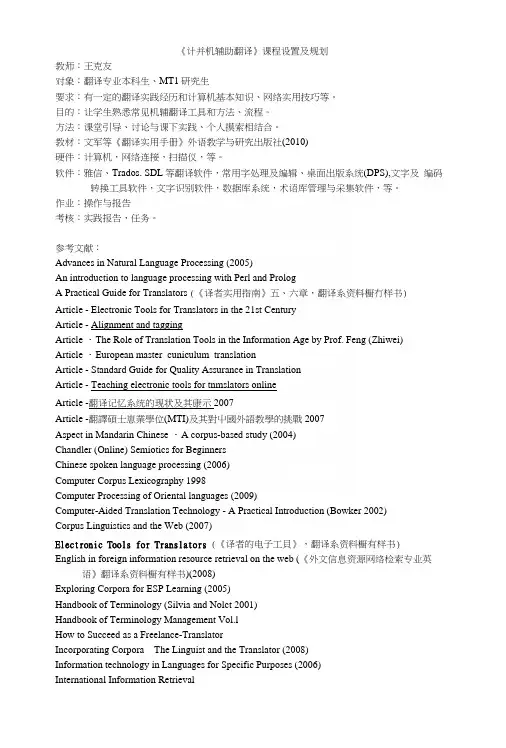

semantic Web mining Integrating Semantic Knowledge with Web Usage Mining

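Web User Session Identification Based on Probabilistic Latent Semantic Analysis

Gao Chunzhen (高春贞); Wu Junhua (吴军华)

[Journal] Microelectronics & Computer (《微电子学与计算机》)

[Year (Volume), Issue] 2010(0)6

[Abstract] To improve the accuracy of Web user session identification, this paper presents a method based on the probabilistic latent semantic analysis (PLSA) model and the competitive reward and penalty (CRP) algorithm. The core idea is to use the PLSA model to compute the probability relating a requested page to each active user session, compare these probabilities to decide which session the requested page belongs to, and use the competitive reward and penalty algorithm to determine when a user session ends. Experimental results show that the method based on the PLSA model and the competitive reward and penalty algorithm achieves a higher identification success rate than other commonly used session identification methods.

[Total pages] 4 (pp. 163-166)

[Keywords] session identification; probabilistic latent semantic analysis; competitive reward and penalty algorithm; expectation-maximization algorithm; Web usage mining

[Authors] Gao Chunzhen (高春贞); Wu Junhua (吴军华)

[Affiliation] College of Information Science and Engineering, Nanjing University of Technology

[Language] Chinese

[Chinese Library Classification] TP391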

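[Related literature]

1. Web user clustering based on probabilistic latent semantic analysis [J], Yu Hui (俞辉); Jing Haifeng (景海峰)

2. Web clustering based on a hybrid probabilistic latent semantic analysis model [J], Wang Zhihe (王治和); Wang Lingyun (王凌云); Dang Hui (党辉); Pan Lina (潘丽娜)

3. An optimized clustering-based method for Web user session identification [J], Ling Haifeng (凌海峰); Yu Da (余笪)

4. A Web user session identification method based on URL semantic analysis [J], Zhu Zhiguo (朱志国)

5. A semantics-based Web user session identification algorithm [J], Zhang Hui (张辉); Song Hantao (宋瀚涛); Xu Xiaomei (徐晓梅)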


Trust Networks on the Semantic Web

Jennifer Golbeck, Bijan Parsia, James Hendler

University of Maryland, College Park
A. V. Williams Building
College Park, Maryland 20742
golbeck@,hendler@,bparsia@

Abstract. The so-called "Web of Trust" is one of the ultimate goals of the Semantic Web. Research on the topic of trust in this domain has focused largely on digital signatures, certificates, and authentication. At the same time, there is a wealth of research into trust and social networks in the physical world. In this paper, we describe an approach for integrating the two to build a web of trust in a more social respect. This paper describes the applicability of social network analysis to the semantic web, particularly discussing the multi-dimensional networks that evolve from ontological trust specifications. As a demonstration of algorithms used to infer trust relationships, we present several tools that allow users to take advantage of trust metrics that use the network.

1 Introduction

"Trust" is a word that has come to have several very specific definitions on the Semantic Web. Much research has focused on authentication of resources, including work on digital signatures and public keys. Confidence in the source or author of a document is important, but trust, in this sense, ignores many important points.

Just because a person can confirm the source of documents does not have any explicit implication about trusting the content of those documents. This project addresses "trust" as credibility or reliability in a much more human sense. It opens up the door for questions like "how much credence should I give to what this person says about a given topic," and "based on what my friends say, how much should I trust this new person?"

In this paper, we will discuss the application of a social network to the semantic web. Section 2 discusses how to build a meaningful social network from the architecture of the semantic web, and how it conveys meaning about the structure of the world.
Section 3 will describe the implementation of such a network. We describe a sample ontology and an algorithm for computing trust in a network, and present tools that use this network to provide users with information about the reputation of others.

1.2 Related Work

This paper uses techniques developed in the field of social network analysis and applies them to the issue of trust on the semantic web. This section describes the most relevant works from each area.

1.2.1 Social Networks

Social networks have a long history of study in a wide range of disciplines. The more mathematical of the studies have appeared in the "Small World" literature. The work on Small Worlds, also commonly known as "Six Degrees of Separation," originated out of Stanley Milgram's work in the 1960s. His original studies indicated that any two people in the world were separated by only a small number of acquaintances (theorized to be six in the original work) [18]. Since then, studies have shown that many complex networks share the common features of the small-world phenomenon: small average distance between nodes, and a high connectance, or clustering coefficient [21].

Small world networks have been studied in relation to random graphs [29]. For social systems, both models have been used to describe phenomena such as scientific collaboration networks [20] and models of game theory [29]. The propagation of effects through these types of networks has been studied, particularly with respect to the spread of disease [19, 7]. The web itself has shown the patterns of a small world network, in clustering and diameter [4, 1].

Viewing the current web as a graph, where each page represents a node and the hyperlinks translate to directed edges between nodes, has produced some interesting results. The main focus of this research has been to improve the quality of search [7, 6, 5, 8, 14, 26].
Other work has used this structure for classification [9] and community discovery [15].

1.2.2 Trust on the Semantic Web

Yolanda Gil and Varun Ratnakar addressed the issue of trusting content and information sources [12]. They describe an approach to derive assessments about information sources based on individual feedback about the sources. As users add annotations, they can include measures of Credibility and Reliability about a statement, which are later averaged and presented to the viewer. Using the TRELLIS system, users can view information and annotations (including averages of credibility, reliability, and other ratings), and then make an analysis.

Calculating trust automatically for an individual in a network on the web is partially addressed in Raph Levien's Advogato project [17]. His trust metric uses group assertions for determining membership within a group. The Advogato [3] website, for example, certifies users at three levels. Access to post and edit website information is controlled by these certifications. On any network, the Advogato trust metric is extremely attack resistant. By identifying individual nodes as "bad" and finding any nodes that certify the "bad" nodes, the metric cuts out an unreliable portion of the network. Calculations are based primarily on the good nodes, so the network as a whole remains secure.

2 Networks on the Semantic Web

Studying the structure of the hypertext web can be used to find community structure in a limited way. A set of pages clustered by hyperlinks may indicate a common topic among the pages, but it does not show more than a generic relationship among the pages. Furthermore, pages with fewer outgoing links are less likely to show up in a cluster at all because their connectance is lower.
These two facts make it difficult for a person to actually see any relationship among specific concepts on the web as it currently stands: classification is not specific enough, and it relies on heavy hyperlinking that may not be present.

The Semantic Web changes this. Since the semantic data is machine-understandable, there is no need to use heuristics to relate pages. Concepts in semantically marked up pages are automatically linked, relating both pages and concepts across a distributed web.

By its nature, the semantic web is one large graph. Resources (and literals) are connected by predicates. Throughout the rest of this paper, we will refer to resources as objects, and predicates as properties. Mapping objects to nodes and properties to labeled edges in a graph yields the power to use the algorithms and methods of analysis that have been developed for other manifestations of graphs.

While the graph of the entire Semantic Web itself is interesting, a subgraph generated by restricting the properties, and thus edges, to a subset of interest allows us to see the relationships among distributed data. Applications in this space are vast. Semantic markup means that retrieving instances of specific classes and the set of properties required for a particular project becomes easy. Furthermore, merging data collected from many different places on the web is trivial.

Generating social networks on the semantic web is a similar task with useful results. Information about individuals in a network is maintained in distributed sources. Individuals can manage data about themselves and their friends. Security measures, like digital signatures of files, go some way toward preventing false information from propagating through the network. This security measure builds trust about the authenticity of data contained within the network, but does not describe trust between people in the network.

There are many measures of "trust" within a social network.
It is common in a network that trust is based simply on knowing someone. By treating a "Person" as a node, and the "knows" relationship as an edge, an undirected graph emerges (Figure 1 shows the graph of acquaintances used in this study). If A does not know B, but some of A's friends know B, A is "close" to knowing B in some sense. Many existing networks take this measure of closeness into account. We may, for example, reasonably trust a person with a small Erdos number to have a stronger knowledge of graph theory than someone with a large or infinite number.

Techniques developed to study naturally occurring social networks apply to these networks derived from the semantic web. Small world models describe a number of algorithms for understanding relationships between nodes. The same algorithms that model the spread of disease [19, 7] in physical social networks can be used to track the spread of viruses via email.

Fig. 1. The Trust Network generated for this paper

For trust, however, there are several other factors to consider. Edges in a trust network are directed. A may trust B, but B may not trust A back. Edges are also weighted with some measure of the trust between two people. By building such a network, it is possible to infer how much A should trust an unknown individual based on how much A's friends and friends-of-friends trust that person. Using the edges that exist in the graph, we can infer an estimate of the weight of a non-existent edge. In the next section, we describe one method for making such inferences in the context of two implementations.

3 Implementation

The semantic web of trust requires that users describe their beliefs about others. Once a person has a file that lists who they know and how much they trust them, social information can be automatically compiled and processed.

3.1 Base Ontology

Friend-Of-A-Friend (FOAF) [10] is one project that allows users to create and interlink statements about who they know, building a web of acquaintances.
The FOAF schema [24] is an RDF vocabulary that a web user can use to describe information about himself, such as name, email address, and homepage, as well as information about people he knows. In line with the security mentioned before, users can sign these files so information will be attributed to either a known source, or an explicitly anonymous source. People are identified in FOAF by their email addresses, since they are unique for each person.

In this project, we introduce a schema, designed to extend foaf:Person, which allows users to indicate a level of trust for people they know [28]. Since FOAF is used as the base, users are still identified by their email address. Our trust schema adds properties with a domain of foaf:Person. Each of these new properties specifies one level of trust on a scale of 1-9. The levels roughly correspond to the following:

1. Distrusts absolutely
2. Distrusts highly
3. Distrusts moderately
4. Distrusts slightly
5. Trusts neutrally
6. Trusts slightly
7. Trusts moderately
8. Trusts highly
9. Trusts absolutely

Trust can be given in general, or limited to a specific topic. Users can specify several trust levels for a person on several different subject areas, and these topic-specific trust levels refine the network. For example, Bob may trust Dan highly regarding research topics, but distrust him absolutely when it comes to repairing cars. Using the trust ontology, the different trust ratings (i.e. "distrustsAbsolutely," "trustsModerately," etc.) are properties of the "Person" class, with a range of another "Person". These properties are used for general trust, and are encoded as follows:

    <Person rdf:ID="Joe">
      <mbox rdf:resource="mailto:bob@"/>
      <trustsHighly rdf:resource="#Sue"/>
    </Person>

Another set of properties is defined for trust in a specific area. They correspond to the nine values above, but are indicated as trust regarding a specific topic (i.e. "distrustsAbsolutelyRe," "trustsModeratelyRe," etc.).
The range of these topic-specific properties is the "TrustsRegarding" class, which has been defined to group a Person and a subject of trust together. The "TrustsRegarding" class has two properties: "trustsPerson" indicates the person being trusted, and "trustsOnSubject" indicates the subject that the trust is about. There are no range restrictions on this latter property, which leaves it to the user to specify any subject from any ontology. Consider the following example, which shows the syntax for trusting one person relative to several different topics:

    <Person rdf:ID="Bob">
      <mbox rdf:resource="mailto:joe@"/>
      <trustsHighlyRe>
        <TrustsRegarding>
          <trustsPerson rdf:resource="#Dan"/>
          <trustsOnSubject rdf:resource="/ont#Research"/>
        </TrustsRegarding>
      </trustsHighlyRe>
      <distrustsAbsolutelyRe>
        <TrustsRegarding>
          <trustsPerson rdf:resource="#Dan"/>
          <trustsOnSubject rdf:resource="/ont#AutoRepair"/>
        </TrustsRegarding>
      </distrustsAbsolutelyRe>
    </Person>

With topic-specific information provided, queries can ask for trust levels on a given subject, and the network is then trimmed down to include only the relevant edges. Large networks form when users link from their FOAF file to other FOAF files. In the example above, Bob's file can include a reference to the trust file for "Dan". Spidering along these links allows a few seeds to produce a large graph. Many interesting projects have emerged from these FOAF networks, including the Co-depiction project [25], SVG image annotation [2], and FoafNaut [11], an IRC bot that provides FOAF data about anyone in its network [10].

Having specified trust levels as described above, the network that emerges becomes much more useful and interesting. A directed, weighted graph is generated, where an edge from A to B indicates that A has some trust relationship with B. The weight of that edge reflects the specified trust level.

3.2 Computing Trust

Direct edges between individual nodes in this graph contain an explicitly expressed trust value.
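The directed, weighted graph described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the `Assertion` record, the `build_graph` function, and the adjacency-dictionary layout are hypothetical. Only some of the property names appear in the text (for example "trustsHighly" and "distrustsAbsolutelyRe"); the rest are assumed to follow the same naming pattern as the nine levels listed in Section 3.1.

```python
from typing import Dict, List, NamedTuple, Optional

# Trust levels on the 1-9 scale. Names not quoted in the paper are
# assumed to follow the same pattern as the listed levels.
LEVELS = {
    "distrustsAbsolutely": 1, "distrustsHighly": 2, "distrustsModerately": 3,
    "distrustsSlightly": 4, "trustsNeutrally": 5, "trustsSlightly": 6,
    "trustsModerately": 7, "trustsHighly": 8, "trustsAbsolutely": 9,
}


class Assertion(NamedTuple):
    """One trust statement (hypothetical record type for this sketch)."""
    truster: str
    trusted: str
    prop: str                      # e.g. "trustsHighly" or "trustsHighlyRe"
    subject: Optional[str] = None  # None means general trust


Graph = Dict[str, Dict[str, int]]  # graph[a][b] = trust level a assigns to b


def build_graph(assertions: List[Assertion], subject: Optional[str] = None) -> Graph:
    """Keep only edges about `subject` (None selects general trust)."""
    graph: Graph = {}
    for a in assertions:
        if a.subject != subject:
            continue  # trim edges that are not about the requested subject
        # Topic-specific properties carry a trailing "Re"; strip it.
        name = a.prop[:-2] if a.prop.endswith("Re") else a.prop
        graph.setdefault(a.truster, {})[a.trusted] = LEVELS[name]
    return graph


assertions = [
    Assertion("Joe", "Sue", "trustsHighly"),
    Assertion("Bob", "Dan", "trustsHighlyRe", "Research"),
    Assertion("Bob", "Dan", "distrustsAbsolutelyRe", "AutoRepair"),
]
research = build_graph(assertions, subject="Research")
repair = build_graph(assertions, subject="AutoRepair")
general = build_graph(assertions)
```

Selecting the subject at graph-construction time mirrors the trimming step described in the text: a query about research sees only the edge where Bob trusts Dan highly, while a query about car repair sees only the distrust edge.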
Beyond knowing that a given user explicitly trusts another, the graph can be used to infer the trust that one user should have for individuals to whom they are not directly connected.

Several basic calculations are made over the network.

• Maximum and minimum capacity paths: In the algorithms used in this research, trust follows the standard rules of network capacity: on any given path, the maximum amount of trust that the source can give to the sink is limited to be no larger than the smallest edge weight along that path. The maximum and minimum capacity path functions identify the trust capacity of the paths with the highest and lowest capacity respectively. This is useful in identifying the range of trust given by neighbors of X to Y.

• Maximum and minimum length paths: In addition to knowing the varying weights of paths from X to Y, it is useful to know how many steps it takes to reach Y from X. These path length functions return the full chains of weighted edges that make up the maximum and minimum length paths.

• Weighted average: In the algorithm described below, this value represents a recommended trust level for X to Y.

The weighted average is designed to give a calculated recommendation on how much the source should trust the sink. The average, according to formula (1), is the default trust metric used in all applications querying this network. It is designed to be a very simple metric, and it is reasonable that different users would want to use different metrics. In our metric, the maximum capacity of each path is used as the trust value for that path. There is no diminution for long path lengths. Users may want a lower trust rating for someone many links away as opposed to a direct neighbor. Another issue deals with "trust" and "distrust." The maximum capacity calculation does not assign any external value to the trust ratings; they are considered simply as real values.
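The capacity rule above (a path can carry no more trust than its smallest edge weight) can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the authors' code: the function names and the adjacency-dictionary graph are hypothetical, and the brute-force enumeration of simple paths is chosen for clarity rather than efficiency.

```python
from typing import Dict, Iterator, List, Optional, Tuple

Graph = Dict[str, Dict[str, float]]  # graph[a][b] = trust a assigns to b


def simple_paths(graph: Graph, source: str, sink: str,
                 _path: Optional[List[str]] = None) -> Iterator[List[str]]:
    """Yield every cycle-free path from source to sink (exponential in general)."""
    path = (_path or []) + [source]
    if source == sink:
        yield path
        return
    for nxt in graph.get(source, {}):
        if nxt not in path:  # never revisit a node on the current path
            yield from simple_paths(graph, nxt, sink, path)


def path_capacity(graph: Graph, path: List[str]) -> float:
    """Capacity of a path is its smallest edge weight."""
    return min(graph[a][b] for a, b in zip(path, path[1:]))


def capacity_range(graph: Graph, source: str, sink: str) -> Optional[Tuple[float, float]]:
    """Return (minimum, maximum) path capacity, or None if no path exists."""
    caps = [path_capacity(graph, p)
            for p in simple_paths(graph, source, sink) if len(p) > 1]
    return (min(caps), max(caps)) if caps else None


# X reaches Y through A (weights 8 then 5) or through B (weights 3 then 9).
example: Graph = {"X": {"A": 8, "B": 3}, "A": {"Y": 5}, "B": {"Y": 9}}
low_high = capacity_range(example, "X", "Y")
no_path = capacity_range(example, "Y", "X")
```

In the example, the path through A has capacity 5 and the path through B has capacity 3, so the range of trust from X to Y is 3 to 5. Because edges are directed, there is no path from Y back to X.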
Since there is a social relevance to those numbers, variants of the single path trust value calculation may be desirable. For example, if A distrusts B regarding a specific subject and, in turn, B distrusts C on that subject, it is possible that A will want to give C a relatively higher rating. That is, if A and B hold opposite views, as do B and C, it may mean that A and C are actually close to one another. Alternatively, A may want to distrust C even more than A distrusts B, the logic being that if A distrusts B, and B cannot even trust C, then C must be especially untrustworthy.

The variants on the trust metric make a long list, and it is unrealistic to provide different functions for each variant. Instead, we have designed an interface where users can create and use their own metrics. This process is described in section 3.3. By default, trust is calculated according to the following simple function. Using the maximum capacity of each path to the sink, a simple recursive algorithm is applied to calculate the average. For any node that has a direct edge to the sink, we ignore paths and use the weight of that edge as its value. For any node that is not directly connected to the sink, the value is determined by a weighted average of the values for each of its neighbors who have a path to the sink. The calculated trust t from node i to node s is given by the following function:

(1)

where i has n neighbors with paths to s. In calculating the average, this formula also ensures that we do not trust someone down the line more than we trust any intermediary.

Although in the current implementation our metric searches the entire graph, a simple modification could easily limit the number of paths searched from each node. Since this is a social network that follows the small world pattern, the average distance across the network increases only logarithmically with the number of nodes.
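One way to read the recursive weighted average, together with the suggested search limit, is sketched below in Python. The formula itself is missing from this copy of the text, so the details are assumptions: each neighbor j contributes its own value t_js capped at the direct trust t_ij (so nobody down the line is trusted more than the intermediary), and contributions are weighted by t_ij. The function name, the `max_depth` parameter, and the graph layout are hypothetical.

```python
from typing import Dict, FrozenSet, Optional

Graph = Dict[str, Dict[str, float]]  # graph[a][b] = trust a assigns to b


def inferred_trust(graph: Graph, source: str, sink: str, max_depth: int = 6,
                   _visited: Optional[FrozenSet[str]] = None) -> Optional[float]:
    """Recommended trust from source to sink, or None if no path is found.

    Assumed reading: t_is = sum(t_ij * min(t_ij, t_js)) / sum(t_ij),
    taken over neighbors j of i that have a path to s.
    """
    visited = (_visited or frozenset()) | {source}
    edges = graph.get(source, {})
    if sink in edges:
        return edges[sink]  # direct edge: ignore paths, use its weight
    if max_depth <= 0:
        return None  # search limit reached
    numerator = 0.0
    denominator = 0.0
    for neighbor, t_ij in edges.items():
        if neighbor in visited:
            continue  # avoid cycles
        t_js = inferred_trust(graph, neighbor, sink, max_depth - 1, visited)
        if t_js is None:
            continue  # neighbor has no path to the sink
        numerator += t_ij * min(t_ij, t_js)  # cap at trust in the intermediary
        denominator += t_ij
    return numerator / denominator if denominator > 0 else None


# A trusts B at 8 and C at 4; B trusts S at 6 and C trusts S at 9.
example: Graph = {"A": {"B": 8, "C": 4}, "B": {"S": 6}, "C": {"S": 9}}
recommended = inferred_trust(example, "A", "S")
direct = inferred_trust(example, "B", "S")
cut_off = inferred_trust(example, "A", "S", max_depth=0)
```

In the example, B contributes 8 × 6 and C contributes 4 × 4 (C's value of 9 is capped at A's trust in C), giving 64 / 12, roughly 5.33. Setting `max_depth` bounds how far the recursion explores from each node, which is one simple way to limit the search.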
Thus, with a limit on the number of paths searched, the algorithm will scale well.

3.3 Trust Web Service

A web service is a function or collection of functions that can be accessed over the web. Access to the trust network is available as a web service. Web users can provide two email addresses, and the weighted average trust value, calculated as described above, is returned. The benefit of a web service is its accessibility. It can be composed with other web services or used as a component of another application. This also makes access to trust values available for agents to use as components in intelligent web applications.

The web service interface also allows users to supply their own algorithms for calculating trust. We provide access to a Java API for querying the trust graph. This includes functions such as retrieving a list of neighbors, getting the trust rating for a given edge, detecting the presence or absence of paths between two individuals, and finding path length. With this information, users can write small Java programs to calculate trust according to their own algorithms. The corresponding class file for this user-defined Java code is passed as an argument to our Trust Web Service, along with the source and sink email addresses, and our service will execute the user-defined function.

4 Applications

4.1 TrustBot

TrustBot is an IRC bot that has implemented algorithms to make trust recommendations to users based on the trust network it builds. It allows users to transparently interact with the graph by making a simple series of queries.

At runtime, and before joining an IRC network, TrustBot builds an internal representation of the trust network from a collection of distributed sources. Users can add their own URIs to the bot at any time, and incorporate the data into the graph. The bot keeps a collection of these URIs that are spidered when the bot is launched or called upon to reload the graph.
From an IRC channel, the bot can be queried to provide the weighted average, as well as maximum and minimum path lengths, and maximum and minimum capacity paths. The TrustBot is currently running on , and can be queried under the nick 'TrustBot'.

4.2 TrustMail

TrustMail is an email client, developed on top of Mozilla Messenger, that provides an inline trust rating for each email message. Since our graph uses email address as a unique identifier, it is a natural application to use the trust graph to rank email. As with TrustBot, users can configure TrustMail to show trust levels for the mail sender either on a general level or with respect to a certain topic.

To generate ratings, TrustMail makes a call to the web service, passing in the email address of the sender and the address of the mailbox to which the message was delivered. It is necessary to use the mailbox email address instead of the "to" address on the email to prevent a lack of data because of cc-ed, bcc-ed, and mailing list messages. Figure 2 shows a sample inbox and message with trust ratings.

There are two points to note about the figure below. First is that trust ratings are calculated with respect to the topic of email (using the TrustsRegarding framework described above). If a user has a trust rating with respect to email, that value is used. If there is no trust rating specifically with respect to email, but a general trust rating is available, the latter value is used.

Fig. 2. TrustMail with trust ratings

Consider the case of two research groups working on a project together. The professors that head each group know one another, and each professor knows the students in her own group. However, neither is familiar with the students from the other group. If, as part of the project, a student sends an email to the other group's professor, how will the professor know that the message is from someone worth paying attention to?
Since the name is unfamiliar, the message is not distinguishable from other, not-so-important mail in the inbox. This scenario is exactly the type of situation that TrustMail improves upon. The professors need only to rate their own students and the other professor. Since the trust algorithm looks for paths in the graph (and not just direct edges), there will be a path from professor to professor to student. Thus, even though the student and professor have never met or exchanged correspondence, the student gets a high rating because of the intermediate relationship. If it turns out that one of the students is sending junk messages, but the network is producing a high rating, the professor can simply add a direct rating for that sender, downgrading the trust level. That will not override anyone else's direct ratings, but will be factored into ratings where the professor is an intermediate step in the path.

The ratings alongside messages are useful, not only for their value, but because they basically replicate the way trust relationships, and awareness thereof, work in social settings. For example, today, it would be sensible and polite for our student from above to start off the unsolicited email with some indication of the relationships between the student and the two professors, e.g., "My advisor has collaborated with you on this topic in the past and she suggested I contact you." Upon receiving such a note, the professor might check with her colleague that the student's claims were correct, or just take those claims at face value, extending trust and attention to the student on the basis of the presumed relationship. The effort needed to verify the student by phone, email, or even walking down the hall, weighed against the possible harm of taking the student seriously, tends to make extending trust blindly worthwhile.
TrustMail lowers the cost of sharing trust judgments even across widely dispersed and rarely interacting groups of people, at least in the context of email. It does so by gathering machine-readable assertions about people and their trustworthiness, reasoning about those assertions, and then presenting those augmented assertions in an end-user-friendly way.

Extending this partial automation of trust judgments to other contexts can be as simple as altering the end user interface appropriately, as we did in earlier work where we presented the trust service as an Internet Relay Chat "bot" [30]. However, one might expect other situations to demand different inference procedures (which our service supports), or even different networks of relations. In the current Web, the latter would be difficult to achieve as there is only one sort of link. On the Semantic Web, we can overlay many different patterns of trust relation over the same set of nodes simply by altering the predicate we focus on.

5 Conclusions and Future Work

This paper illustrates a method for creating a trust network on the semantic web. By introducing an ontology, an algorithm for finding trust from the resulting network, and different methods for accessing the network through a web service or applications, this is a first step toward showing how non-security based efforts can become part of the foundation of the web of trust.

As work in this area progresses, developers should consider more in-depth investigation of algorithms for calculating trust. The algorithm here was designed to be a simple proof-of-concept. Studies that look into algorithmic complexity (as graph size increases), as well as more social issues like path length considerations and which averages to use for recommendations, will become increasingly important.

6 Acknowledgements

This work was supported in part by grants from DARPA, the Air Force Research Laboratory, and the Navy Warfare Development Command.
The Maryland Information and Network Dynamics Laboratory is supported by Industrial Affiliates including Fujitsu Laboratories of America, NTT, Lockheed Martin, and the Aerospace Corporation.

The applications described in this paper are available from the Maryland Information and Network Dynamics Laboratory, Semantic Web Agents Project (MIND SWAP) at .

References

1. Adamic, L., "The Small World Web." Proceedings of ECDL, pages 443-452, 1999.
2. Adding SVG Paths to Co-Depiction RDF, /svg/codepiction.html
3. The Advogato Website:
4. Albert, R., Jeong, H. and Barabasi, A.-L. "Diameter of the world-wide web." Nature 401, 130-131, 1999.
5. Bharat, K and M.R. Henzinger. "Improved algorithms for topic distillation in a hyperlinked environment," Proc. ACM SIGIR, 1998.
6. Brin, S and L. Page, "The anatomy of a large-scale hypertextual Web search engine," Proc. 7th WWW Conf., 1998.
7. Broder, R Kumar, F. Maghoul, P. Raghavan, S. Rajagopalan, R. Stata, A. Tomkins, and J. Wiener. "Graph structure in the web." Proc. 9th International World Wide Web Conference, 2000.
8. Carriere, J and R. Kazman, "WebQuery: Searching and visualizing the Web through connectivity," Proc. 6th WWW Conf., 1997.
9. Chakrabarti, S, B. Dom, D. Gibson, J. Kleinberg, P. Raghavan, and S. Rajagopalan, "Automatic resource compilation by analyzing hyperlink structure and associated text," Proc. 7th WWW Conf., 1998.
10. Dumbill, Ed, "XML Watch: Finding friends with XML and RDF." IBM Developer Works, /developerworks/xml/library/x-foaf.html, June 2002.
11. FOAFNaut: /
12. Gil, Yolanda and Varun Ratnakar, "Trusting Information Sources One Citizen at a Time," Proceedings of the First International Semantic Web Conference (ISWC), Sardinia, Italy, June 2002.
13. Kleczkowski, A. and Grenfell, B. T. "Mean-field-type equations for spread of epidemics: The 'small-world' model." Physica A 274, 355-360, 1999.
14. Kleinberg, J, "Authoritative sources in a hyperlinked environment," Journal of the ACM, 1999.
15. Kumar, Ravi, Prabhakar Raghavan, Sridhar Rajagopalan, D. Sivakumar, Andrew Tomkins, and Eli Upfal. "The web as a graph." Proceedings of the Nineteenth ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems, May 15-17, 2000.
16. Labalme, Fen, Kevin Burton, "Enhancing the Internet with Reputations: An Openprivacy Whitepaper," /papers/200103-white.html, March 2001.
17. Levien, Raph and Alexander Aiken. "Attack resistant trust metrics for public key certification." 7th USENIX Security Symposium, San Antonio, Texas, January 1998.
18. Milgram, S. "The small world problem." Psychology Today 2, 60-67, 1967.
19. Moore, C. and Newman, M. E. J. "Epidemics and percolation in small-world networks." Physical Review E 61, 5678-5682, 2000.

Driving healthcare intelligence throughout the continuum of care with standards-based interoperability

Philips supports improving care through clinical intelligence

Background

Sophisticated data mining and health informatics depend on accurate, private, secure, and cost-effective sharing of clinical data among various systems and enterprises. Initiatives such as the Care Connectivity Consortium, which includes Kaiser Permanente, Mayo Clinic, Geisinger Health System, Intermountain Health, and Group Health Cooperative, focus on sharing and accessing real-time data about patients to help caregivers provide better and more effective care. In Europe, epSOS (European Patients-Smart Open Services) concentrates on advancing the ability to share patient information among different European healthcare systems to improve the quality and safety of healthcare. NEHTA Limited (National E-Health Transition Authority), a not-for-profit company established by the Australian government, seeks to develop better ways to electronically collect and securely exchange health information. A major objective of the HITECH Act in the United States involves the exchange and use of health information. These initiatives, and others around the world, have in common the need to reduce healthcare costs and transform patient care.

The interchange and management of meaningful clinical data as part of an integrated process across the continuum of care and clinical research can help facilitate early diagnosis, reduce readmissions, better manage population health, and improve the operational efficiency of patient care. Predictive modeling and comparative effectiveness research integrated with daily patient care can help better manage patients before they experience critical or chronic illnesses. Virtual and remote monitoring of the patient during home recovery can assist in interventions to reduce readmissions. Linking community and rural providers and specialists can help better manage population health.
Better coordination of care facilitates the best possible outcome for the patient faster and more efficiently.

Summary

Initiatives around the globe involve healthcare reform to help manage patient care more effectively and affordably. Predictive modeling provides the potential to intervene before patients become critically or chronically ill to help save lives and manage resources effectively. New healthcare delivery models based on knowledge management use clinical information to provide clinical intelligence to reach a number of goals. These goals include improving the health of populations, advancing patient-centered continuity of care, improving the efficiency of providing care, and reducing healthcare costs through such steps as reducing patient readmissions. Interoperability is essential to the smooth flow of data that makes this possible. This paper reviews some basic concepts of interoperability with a focus on IHE (Integrating the Healthcare Enterprise) and examples of how interoperability can be used to facilitate the exchange of clinical information across the continuum of care.

Interoperability considerations

Two key levels of interoperability to consider are syntactic (functional), which is the ability of two or more systems to exchange information, and semantic, which is the ability to use the information exchanged.

Standards and initiatives

Standards designed for patient-focused, as well as population-focused, objectives can help systems reach the semantic level of interoperability. Standards such as ICD-10 for disease classification or CDISC for clinical research data element definitions help facilitate a semantic level of interoperability. As more patient health information is collected, stored, and transmitted electronically, the concern for patient privacy and security grows on a global basis.
Governments around the world have enacted legislation to protect individually identifiable health information (e.g., USA-HIPAA, Canada-PIPEDA, general privacy legislation under the European Directive 95/46/EC, Japan-PIPA, and others). IEC 80001-1 is a standard for the purpose of risk management of IT-networks incorporating medical devices to address safety, effectiveness, and data and system security.

In addition to standards development activities, initiatives such as IHE and Continua Health Alliance leverage multiple industry and cross-industry standards for practical system-to-system sharing of data. These initiatives facilitate the syntactic level of interoperability and help pave the way for semantic interoperability. The sidebar gives a brief description of some key standards and initiatives. They focus on different aspects of interoperability that together, when widely adopted by the healthcare industry, can contribute to a harmonized approach to interoperability to help advance healthcare intelligence.

The remainder of this paper will focus on IHE.

Integrating the Healthcare Enterprise

IHE uses a "best practices" approach to solving interoperability issues associated with multiple, heterogeneous information systems to help resolve ambiguities and conflicting interpretations. It coordinates the use of established standards such as DICOM and HL7 and also answers issues that remain unresolved even with the use of these standards. As members of IHE, industry subject experts (from both vendors and healthcare institutions) collaborate and develop detailed implementations of standards to provide consistency for integrations.

IHE is organized across a growing number of clinical and operational domains. Each domain produces its own set of technical framework documents, in close coordination with other IHE domains.
Domains include:
• Cardiology
• IT Infrastructure
• Patient Care Coordination
• Patient Care Device
• Radiology
• Quality, Research, and Public Health
• Anatomic Pathology
• Radiation Oncology

Global deployment and promotion is supported by a product registry of several hundred vendor systems supporting use cases and a network of affiliated national and regional organizations that coordinate testing and interoperability demonstrations such as the Healthcare Information and Management Systems Society (HIMSS) annual Interoperability Showcase.

IHE runs an annual Connectathon, which is the healthcare IT industry's only large-scale international interoperability validation testing event. During the event multi-vendor systems exchange information, performing all the transactions required in support of defined clinical use cases. This removes barriers to integration that would otherwise have to be dealt with repeatedly, using proprietary approaches, at customer sites during installation.

Standards:
• HL7 (Health Level Seven International). Clinical data: Standards for healthcare informatics interoperability including the exchange of clinical, financial, and administrative data.
• DICOM (Digital Imaging and Communications in Medicine). Image data: Standards for handling, storing, printing, and transmitting information in medical imaging.
• SNOMED CT (Systematized Nomenclature of Medicine – Clinical Terms). Clinical terms: Standards for computerized clinical terminology covering clinical data for diseases, clinical findings, and procedures.
• LOINC (Logical Observation Identifiers Names and Codes). Lab terms: LOINC offers universal names and codes for identifying lab results to support the exchange of lab information between separate systems.
• ASTM CCR (Continuity of Care Record). Health summary: Standards for the patient health summary to support capture and exchange of an individual's most relevant personal health information.
(ASTM standard E2369)
• ICD-9/10 (International Classification of Diseases). Disease names: ICD-9/10 code and classify morbidity data from inpatient and outpatient records, physician offices, and most National Center for Health Statistics (NCHS) surveys.
• CDISC (Clinical Data Interchange Standards Consortium). CDISC and HL7 together develop standards for clinical data to enable information system interoperability used in medical research and related areas of healthcare. These standards support the acquisition, exchange, submission, and archive of clinical research data and metadata.
• ISO/IEC 11179. Repository metadata: ISO/IEC 11179 provides a standard grammar and syntax for describing data elements and associated metadata that results in unambiguous representation and interpretation of the data.
• CEN ISO/IEEE P11073. Medical device communication: Standards for device communication between agents (e.g., blood pressure monitors, weighing scales) that collect information about a person and a manager (e.g., cell phone, health appliance, or personal computer) for collection, display, and possible later re-transmission.

A brief guide to some key health care standards and initiatives (Note: Not a comprehensive guide)

Initiatives:
• Continua Health Alliance. Continua is a non-profit industry alliance to develop interoperable personal connected health solutions in the home. The Alliance operates a testing and certification program to validate interoperability of certified products.
• IHE (Integrating the Healthcare Enterprise). IHE leverages established healthcare integration standards to create best practices for integrating applications, systems, and settings across the entire healthcare enterprise. IHE organizes, manages, and publishes results of Connectathon annual interoperability validation testing events for multiple vendor systems.

As a patient moves among care areas, IHE compliant systems help facilitate a smooth flow of information.
To describe examples of integration across the continuum of care, we will explore a scenario of a patient experiencing a heart condition:

Emergency department treatment room

A patient with a possible heart condition is under evaluation in the treatment room of the emergency department (ED) where a device is used to monitor his vital signs. He undergoes continuous physiological data monitoring with observation reporting in support of IHE Integration Profile Device Enterprise Communication (DEC). Transmitting information such as complete waveforms, trends, alarms, and numerics from medical devices at the point of care to enterprise applications in a consistent way using DEC can help shorten clinician decision time, increase productivity, minimize transcription errors, and facilitate increased contextual information of the data in the electronic health record (EHR), clinical data repository (CDR), or other enterprise systems.

The patient vital signs indicate a need for the physician to order an immediate CT. The patient then moves to the imaging department.

Imaging department

The imaging department personnel process the physician's order and supportive material from the ED complying with IHE Integration Profile Scheduled Workflow (SWF) for order filling. Systems that support SWF reduce manual data entry to a single incidence, which can reduce errors and save time identifying and correcting them. SWF also helps ensure that study identification and status are accurately tracked throughout the department to help minimize "lost" studies. The patient undergoes scanning in the imaging department where images are captured and stored. Based on IHE Integration Profiles XDS.b and XDS-I.b, the radiology report and the images are made available in a consistent way to physicians in the ED and ICU as well as to the cardiologist treating the patient.
XDS.b enables consistent sharing of EHR documents between healthcare enterprises, physician offices, clinics, acute care inpatient facilities, and personal health records. Systems that support XDS.b help facilitate registration, distribution, and access across health enterprises. XDS-I.b enables consistent sharing of images, diagnostic reports, and related information across a group of care sites.

The patient then moves to the intensive care unit for treatment.

Intensive care unit (ICU)

In the ICU, physicians are able to view all prior documentation and images by discovering and retrieving this information with their XDS.b compliant registry and repository. The patient may be put on an infusion pump to administer required medication to treat a diagnosed heart condition. He is monitored by a bedside device compliant with the DEC integration profile. The monitor may be integrated with an alarm manager that conforms to IHE Integration Profile Alarm Communication Management (ACM) for communicating alarms to a portable device such as a mobile phone. This provides a secondary means for notifying the appropriate person for a condition needing timely human intervention. It also automates an otherwise manual workflow notification process.

The patient condition stabilizes and the patient then moves to the general medical-surgical unit for general oversight during recovery.

IHE compliant systems help facilitate exchange of information throughout the continuum of care

Medical-surgical unit

In the Med-surg unit, a medical device monitors the patient's vital signs and displays them on the hospital information system (HIS) consistent with the IHE Integration Profile DEC.

Over time, the patient continues to be stable and is discharged.
He later sees his primary care physician for a follow-up visit.

Primary care physician (PCP) office

The hospital has provided a discharge summary reflecting the care provided and makes this available to the patient's PCP in a consistent manner using IHE Integration Profile XDS.b for cross enterprise document sharing. The PCP conducts a resting ECG. The electrocardiograph generates ECGs and distributes them, such as to a community health information exchange (HIE) in support of IHE Integration Profile REWF for resting ECG Workflow. Systems that conform to REWF allow users to register a patient, order a test, perform the test (acquiring the waveforms), and interpret the results (reporting) in an interoperable way. REWF can handle unordered tests, urgent cases, and ECGs acquired in both hospital and office settings.

The results of the ECG show that the patient is responding well to treatment. The patient goes home until his next follow-up visit with his PCP.

Research

While it is uncommon to consider research institutions as a care area, one can consider predictive modeling efforts and comparative effectiveness research as an extended care area. This is where a continuous flow of data from patients with conditions relevant to ongoing research can help develop new and more effective healthcare delivery models and standards of care based on realistic clinical conditions. The IHE integration profiles identified in this scenario as well as other profiles can be applied to integrate data between patient care enterprises and research institutions.

Philips champions standards-based interoperable solutions for patient care and clinical informatics

Philips is deeply committed and actively involved in driving interoperability industry standards.
We currently chair or co-chair approximately 20 different standards committees and actively participate in many more, with the mindset that greater interoperability promotes better patient care.

Philips strongly supports IHE

Since 2003 Philips Healthcare has issued more than 105 integration statements, evidence that we have consistently supported IHE and other standards in very practical ways.

In radiology and cardiology, for example, Philips solutions such as our image acquisition systems, image management systems, and workstations have supported IHE integration profiles for many years.

• Computed Tomography: CT Brilliance, MX8000
• Electrophysiology: EP Navigator
• Fluoroscopy: EasyDiagnost Eleva, MultiDiagnost Eleva
• Interventional X-ray: Allura Xper, Integris, Veradius, Pulsera
• Magnetic Resonance Imaging: Intera, Achieva, Panorama
• Nuclear Medicine: GEMINI, Odyssey
• Radiography: Eleva Workspot, Digital Diagnost, MammoDiagnost
• Ultrasound: iE33, iU22, HD11, HDI 5000
• PACS Systems and Web Viewing: IntelliSpace PACS, IntelliSpace Portal, iSite, Xcelera
• Patient Monitoring: IntelliVue Information Center, IntelliSpace Event Management
• Standalone Workstations: ViewForum, Extended Workspace EWS

Philips is also committed to providing new solutions for efficient and lower cost standards-based patient care and clinical informatics that are interoperable with multi-vendor medical devices and enterprise systems across the continuum of care.

Device Enterprise Communication (DEC)

For transmitting information from medical devices at the point of care to enterprise applications.

General floor. Providing interoperability for sharing spot check vital signs: SureSigns VS with IntelliBridge Enterprise* now conforms to IHE Integration Profile Device Enterprise Communication (DEC) to allow standards-based enterprise sharing of spot check monitoring data.
Critical care. Providing interoperability for sharing critical monitoring data: IntelliVue Information Center with IntelliBridge Enterprise* supports the IHE DEC Integration Profile to allow standards-based enterprise sharing of complete waveforms, trends, alarms, and numerics from wired and wireless networked Philips patient monitors and telemetry systems, as well as the HeartStart MRx Monitor/Defibrillator.

Cross Enterprise Document Sharing (XDS.b)

For sharing and discovering EHR documents between healthcare enterprises, physician offices, clinics, acute care in-patient facilities and personal health records. Facilitates the registration, distribution, and access across health enterprises of patient EHRs.

Cross Enterprise Document Sharing for Imaging (XDS-I.b)

For sharing images, diagnostic reports, and related information across a group of care sites. Provides an imaging component to the EHR, effective means to contribute and access imaging documents across health enterprises, scalable sharing of imaging documents, and easier access to imaging documents.

Scheduled Workflow (SWF)

For integrating ordering, scheduling, imaging acquisition, storage, and viewing activities associated with radiology exams.

Radiology. Providing interoperability for sharing radiology documents and images: IntelliSpace PACS supports multiple IHE Integration Profiles, including SWF, XDS.b and XDS-I.b.

Alarm Communication Management (ACM)

For communicating alarms, allowing a patient care device to send a notification of an alert or alarm condition to a mobile device.

Multiple care areas. Providing interoperability to manage event notifications: IntelliSpace Event Management V10 conforms to IHE Integration Profile Alarm Communication Management (ACM) for a standards-based way to manage alerts from patient monitors, nurse calls, and clinician information systems to simplify complex input and output flow and provide meaningful alerts to caregivers on their communication devices.
IEM helps provide better coordination of care by delivering meaningful information to caregivers where they need it, in the right format, at the exact point of care, regardless of a caregiver's location.

* Philips' new IntelliBridge Enterprise provides a single, standards-based interface point for HL7 data between enterprise systems and Philips patient care and clinical informatics systems. This reduces the number of system connections to the EMR, ADT, CPOE, Lab or other system such as for clinical research. IntelliBridge Enterprise leverages the existing infrastructure and offers virtualization, high availability, disaster recovery, fully automated backups, and remote access and support. It is pretested, reliable, and repeatable to help reduce the cost of implementation. The goal is to help provide for a more affordable interoperable standards-based solution to interchange and manage meaningful clinical data across the continuum of care.

Some of the IHE integration profiles that Philips supports

Philips Healthcare is part of Royal Philips Electronics.

© 2012 Koninklijke Philips Electronics N.V. All rights are reserved. Philips Healthcare reserves the right to make changes in specifications and/or to discontinue any product at any time without notice or obligation and will not be liable for any consequences resulting from the use of this publication. Printed in The Netherlands. FEB 2012.

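Design and Implementation of an Intelligent Question-Answering System for Distance Education

Master's thesis, Beijing Jiaotong University. Name: Hu Na (胡娜). Degree applied for: Master's. Major: Educational Technology. Supervisor: Zhao Hong (赵宏). Date: 2007-12-01.

Abstract

With the development of network technology and the spread of network applications, distance education built on network technology is growing rapidly.

The network-based education model adopts exploratory learning. It lets students browse relevant teaching resources according to their own circumstances, so that excellent educational resources and teaching methods can be shared.

In distance teaching, however, students and teachers are separated in time and space, and students cannot communicate with teachers directly. Question answering, as an important part of the teaching activity, is therefore attracting growing attention.

A well-designed question-answering system for distance education can resolve the questions that arise during learning in a timely and effective way, which improves the learning efficiency of distance students and safeguards the quality of distance education.

Typical question-answering systems use keyword queries based on a search engine. Such systems require students to enter keywords themselves when asking a question, which demands some skill in distilling keywords. The search results are also unsatisfactory: students must further filter the answers returned by the system, so learning efficiency is low. Systems of this kind need further optimization.

An intelligent question-answering system is an intelligent system with knowledge memory, data computation and statistics, logical reasoning, knowledge learning, and friendly human-computer interaction. In essence it is a knowledge system with intelligence.

It supports questions in natural language, automatically retrieves matching questions and presents effective answers, and can automatically extend and update its answer knowledge base through learning.

These features let students express questions in a way that is familiar to them and promptly receive answers that are reasonably relevant to the question.

This thesis first discusses the background and significance of research on intelligent question-answering systems. Based on an analysis of the characteristics of the distance education model and a comparison of existing question-answering systems, it presents a unified design and development of a question-answering system: it proposes a design for an intelligent question-answering system based on ontology and XML, builds an initial ontology library and knowledge base, gives the complete system architecture and its development model, studies in depth the key technologies of the development environment for the intelligent question-answering system, and finally gives the implementation method of the system.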

Knowledge Retrieval (KR)

Yiyu Yao (1,2), Yi Zeng (2), Ning Zhong (2,3), and Xiangji Huang (4)

1 Department of Computer Science, University of Regina, Regina, Saskatchewan, Canada S4S 0A2, yyao@cs.uregina.ca
2 International WIC Institute, Beijing University of Technology, Beijing, P.R. China 100022, yzeng@
3 Department of Information Engineering, Maebashi Institute of Technology, Maebashi-City, Japan 371-0816, zhong@maebashi-it.ac.jp
4 School of Information Technology, York University, Toronto, Ontario, Canada M3J 1P3, jhuang@cs.yorku.ca

Where is the Life we have lost in living?
Where is the wisdom we have lost in knowledge?
Where is the knowledge we have lost in information?
(T. S. Eliot, The Rock, 1934)

Abstract

With the ever-increasing growth of data and information, finding the right knowledge becomes a real challenge and an urgent task. Traditional data and information retrieval systems that support the current web are no longer adequate for knowledge seeking tasks. Knowledge retrieval systems will be the next generation of retrieval system serving those purposes. Basic issues of knowledge retrieval systems are examined and a conceptual framework of such systems is proposed. Theories and technologies such as theory of knowledge, machine learning and knowledge discovery, psychology, logic and inference, linguistics, etc. are briefly mentioned for the implementation of knowledge retrieval systems. Two applications of knowledge retrieval in rough sets and biomedical domains are presented.

1. Introduction

Although the quest for knowledge from data and information is an old problem, it is perhaps more relevant today than ever before. In the last few decades, we have seen an unprecedented growth rate of data and information. It is necessary to reconsider the question: "Where is the knowledge we have lost in information?"

The data-information-knowledge-wisdom hierarchy is used in information sciences to describe different levels of abstraction in human centered information processing.
Computer systems can be designed for the management of each of them. Data Retrieval Systems (DRS), such as database management systems, are well suited for the storage and retrieval of structured data. Information Retrieval Systems (IRS), such as web search engines, are very effective in finding the relevant documents or web pages that contain the information required by a user. What is lacking in those systems is management at the knowledge level. A user must read and analyze the relevant documents in order to extract the useful knowledge. In this paper, we propose that Knowledge Retrieval Systems (KRS) are the next generation of retrieval systems for supporting knowledge discovery, organization, storage, and retrieval. Such systems will be used by advanced and expert users to tackle the challenging problem of knowledge seeking.

While the growth and evolution of the Web makes knowledge retrieval systems a necessity for supporting the future generations of the Web, the extensive results from machine learning, knowledge discovery (in particular, text mining), and knowledge based systems make the implementation of such systems feasible.

Many proposals and research efforts have been made regarding knowledge retrieval [8, 13, 17, 18, 26, 29, 34]. They cover various specific aspects and provide us insights into further development of knowledge retrieval. Those efforts suggest that it is time to study knowledge retrieval on a grand scale.

In this paper, we argue that knowledge retrieval systems are the natural next step in the evolution of retrieval systems. Specifically, we examine the characteristics and main features of data retrieval systems, information retrieval systems and knowledge retrieval systems. A conceptual framework of knowledge retrieval system is outlined. The main components in this framework are the discovery of knowledge, the construction of knowledge structures, and the inference of required knowledge based on the knowledge structures.
On the one hand, results from existing studies are drawn for the study of knowledge retrieval. On the other hand, knowledge retrieval is studied in its own right by focusing on its unique methodologies and theories. A success of knowledge retrieval will have a great impact on future retrieval systems and future generations of the Web.

2. Data and Information Retrieval vs. Knowledge Retrieval

Knowledge retrieval systems may be considered as the next generation in the evolution of retrieval systems. Their unique features and characteristics become clear by a comparison with existing systems.

2.1 Generations of Retrieval Systems

In information and management sciences, one considers the following hierarchy [23]:
− Data,
− Information,
− Knowledge,
− Wisdom.

It concisely summarizes different types of resources that we can use for problem solving. The hierarchy represents increasing levels of complexity that require increasing levels of understanding [30]. The generations of the retrieval systems may be studied based on this hierarchy. For example, Yao suggests an evolution process of retrieval systems from data retrieval to knowledge retrieval, and from information retrieval to information retrieval support (a step towards knowledge retrieval) [29]. LaBrie argues the need for a change from data and information retrieval to knowledge retrieval, and considers the problem of retrieving and searching for knowledge objects in advanced knowledge management systems [22].

In the data level, we use data retrieval systems to find relevant data and acquire knowledge. The problem to be solved is well structured, and the concept definitions are clear. Data mining could help us get interesting knowledge from a large volume of data stored in the database.

In the information level, we use information retrieval systems to acquire knowledge. The problem is semi-structured, and the concept definitions are not always clear.
We retrieve the relevant information by keywords or their combinations and get the implicit knowledge from retrieved results. This is effective if the scale of the information collection is small. However, keyword based matching and page ranking cannot satisfy users' needs in the modern Internet age. On the one hand, the set of retrieved results containing the keywords might be huge. Even with page ranking, the most relevant results can run to hundreds of pages, which will be very difficult for a user to explore one by one. On the other hand, results recommended by page rank might not satisfy various user needs. Some of the most relevant results to specific users might not be ranked in the front portion of the list.

In practical situations, we need to perform more tasks in addition to simple search. Taking scientific literature search as an example, most tasks are not searching for specific articles. We would want to find out which problems have been solved, which ones have no solutions, which fields have more audiences and which topics are promising. It will not be easy to extract such knowledge from an increasing volume of information by using current information retrieval systems. This may stem from a discrepancy between knowledge representation methods in retrieval systems and human thoughts.

Information in the Web, literature databases, and digital libraries is stored as documents, information flows, etc. These forms are not closely related to the ways that humans organize knowledge. People need to find, learn, and reorganize retrieved results to extract and construct knowledge embedded in information.

Those problems cannot be solved satisfactorily in the information level. The task of finding knowledge from information and organizing it into the structures that humans can use is the focus of knowledge retrieval systems. The process of retrieving information is changed to retrieving knowledge directly, and the retrieved results are changed from relevant documents to knowledge. Some of the hard problems
in information retrieval may find their solutions in knowledge retrieval.

2.2 A Comparison of Retrieval Systems

Knowledge retrieval (KR) focuses on the knowledge level. We need to examine how to extract, represent, and use the knowledge in data and information [2]. Knowledge retrieval systems provide knowledge to users in a structured way. They are different from data retrieval systems and information retrieval systems in inference models, retrieval methods, result organization, etc. Table 1, extending van Rijsbergen's comparison of the difference between data retrieval and information retrieval [27], summarizes the main characteristics of data retrieval, information retrieval, and knowledge retrieval [33].

The core of data retrieval and information retrieval are retrieval subsystems. Data retrieval gets results through Boolean match [1]. Information retrieval uses partial match and best match. Knowledge retrieval is also based on partial match and best match.

                  | Data Retrieval      | Information Retrieval               | Knowledge Retrieval
Match             | Boolean match       | partial match, best match           | partial match, best match
Inference         | deductive inference | inductive inference                 | deductive inference, inductive inference, associative reasoning, analogical reasoning
Model             | deterministic model | statistical and probabilistic model | semantic model + inference model
Query             | artificial language | natural language                    | knowledge structure + natural language
Organization      | table, index        | table, index                        | knowledge unit and knowledge structure
Representation    | number, rule        | natural language, markup language   | concept graph, predicate logic, production rule, frame, semantic network, ontology
Storage           | database            | document collections                | knowledge base
Retrieved Results | data set            | sections or documents               | a set of knowledge unit

Table 1. A Comparison of Data Retrieval, Information Retrieval, and Knowledge Retrieval

Considering inference perspective, data retrieval uses deductive inference, and information retrieval uses inductive inference [27]. Considering the limitations from the assumptions of different
logics, traditional logic systems (e.g., Horn subset of first order logic) cannot make efficient reasoning in a reasonable time [7]. Associative reasoning, analogical reasoning and the idea of unifying reasoning and search may be effective methods of reasoning at the web scale [4, 7].

From retrieval model perspective, knowledge retrieval systems focus on the semantics and knowledge organization. Data retrieval and information retrieval organize the data and documents by indexing, while knowledge retrieval organizes knowledge by connections among knowledge units and the knowledge structures.

3. A Conceptual Framework of KR Systems

3.1 Challenges to Traditional KR Systems

The term "knowledge retrieval" is not new and has been used by many authors. Some authors considered knowledge retrieval as a process of information retrieval [34]. On the other hand, there are authors who investigated this topic in its own right. Frisch discussed knowledge retrieval based on a knowledge base (KB), and considered the entire retrieval process as a form of inference [8]. Oertel and Amir presented an approach to retrieve commonsense knowledge for autonomous decision making [18]. Martin and Eklund examined different metadata languages for knowledge representation (e.g., RDF, OML) and proposed to use general and intuitive knowledge representation languages, rather than XML-based languages, to represent knowledge. They proposed methods which satisfy user requirements at different levels of detail. Kame and Quintana proposed a concept graph based knowledge retrieval system [13]. In their system, sentences are converted into concept graphs.
Chen and Hsiang presented a logical framework of knowledge retrieval by considering fuzziness in inference [5]. To some extent, the framework is restricted to information retrieval and question answering systems. Models and methods for text based knowledge retrieval have also been investigated [17, 26, 34].

In the web age, the traditional understanding of knowledge retrieval faces new challenges. New methodologies and techniques need to be explored.

(1) Traditional knowledge representation methods are investigated in a small scale environment. How to represent knowledge in a large scale needs to be explored. Visualized structured knowledge may be one of the possible strategies.

(2) Traditional knowledge retrieval concentrates on knowledge storage, which is unfortunately not a human oriented perspective: how to provide and use knowledge in more convenient ways.

(3) Inductive reasoning and deductive reasoning are applicable in data retrieval and small-scale information retrieval. Finding knowledge in the web context needs more effective reasoning methodologies.

(4) Knowledge based systems usually provide knowledge in the form of text. A user needs to extract different views. In knowledge retrieval, knowledge should be examined from different perspectives.

(5) Traditional knowledge based systems assume that the stored contents are all trustworthy, but in the web environment this assumption is not always valid, as much knowledge is not reliable. Knowledge validation in the complex environment is a challenge.

(6) To some extent, knowledge stored in many systems is static, but in the web environment, knowledge is dynamically changing all the time. How to update knowledge in this environment is also a challenge.

[Figure 1. A Typical KR System. The diagram runs from Input to Output, with three labeled outcomes: (1) user need is satisfied; (2) views of knowledge structure need to be changed; (3) query needs to be reformulated.]

3.2 A Typical KR System

Knowledge represented in a structured way is consistent with human thoughts and is easily understandable [20, 26].
Sometimes, users do not know exactly what they want or lack contextual awareness [28]. If knowledge can be provided visually in a structured way, it will be very useful for users to explore and refine the query [22].

Figure 1 shows a conceptual framework of a typical knowledge retrieval system. The main process can be described as follows:

(1) Knowledge Discovery: Discovering knowledge from sources by data mining, machine learning, knowledge acquisition and other methods.

(2) Query Formulation: Formulating queries from user needs by user inputs. The inputs can be in natural languages and artificial languages.

(3) Knowledge Selection: Selecting the range of possible related knowledge based on user query and knowledge discovered from data/information sources.

(4) Knowledge Structure Construction: Reasoning according to different views of knowledge, domain knowledge, user background, etc. in order to form knowledge structures. Domain knowledge can be provided by experts. User background and preference can be provided by user logs.

(5) Exploration and Search: Exploring the knowledge structure to get general awareness and refine the search. Through understanding the relevant knowledge structures, users can search into details on what they are interested in to get the required knowledge.

(6) Knowledge Structure Reorganization: Reorganizing knowledge structures if users need to explore other views of selected knowledge.

(7) Query Reformulation: Reformulating the query if the constructed structures cannot satisfy user needs.

One of the key features of knowledge retrieval systems is that knowledge is visualized in a structured way so that users can get contextual awareness of related knowledge and make further retrieval. Main operations for exploration [12, 28] are browsing, zooming-in, and zooming-out.
Browsing helps users to navigate. Zooming out provides general understanding, while zooming in presents detailed knowledge and its structure.

We need to point out that the full process contains two levels of feedback: one at a local level dealing with knowledge structure reorganization, and the other at a global level dealing with query reformulation. Users' browsing and exploration history will be stored in user logs as background information for improving the personalized knowledge structures.

Knowledge retrieval is a typical example of human-centered computing [11], and its evaluation relies more on personal judgment, which strikes a balance between computer automation and user intervention.

3.3 Knowledge Structures

The definition, representation, generation, exploration and retrieval of knowledge structures are the main issues in knowledge retrieval.

Concept is the basic unit of human thought. We can build knowledge based on concepts and the relations among them [32]. A knowledge structure is built based on hierarchical structures of concepts. In the context of granular computing, a knowledge unit can be considered as a granule.
Drawing results from granular structure, knowledge structures can be examined at least at three levels [31]:

- internal structures of knowledge units,
- collective structures of a family of knowledge units,
- hierarchical structures of a web of knowledge units.

A unit of knowledge may be decomposed into a family of smaller units. Their decomposition and relationship represent the internal structures [15]. The collective structures of a family of knowledge units describe the relations of knowledge units in the same level. Different levels of knowledge units form a partial ordering. The hierarchical structures describe the integrated whole of a web of knowledge units from a very high level of abstraction to the very finest details.

Tree structures and concept graphs are the most commonly used methods for visualizing knowledge structures [14,16,26]. Semantic networks are used for knowledge representation [20,24]. Formal concept analysis can be used to generate concept graphs [9].

Knowledge unit and knowledge association are the two elements of knowledge [34]. The knowledge unit is considered to be the basic unit of knowledge, and complex knowledge structures are based on knowledge associations.
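
The three levels of structure described above can be illustrated with a small data structure. This is only a minimal sketch under stated assumptions: the class `KnowledgeUnit`, the helper functions, and the example data are hypothetical names introduced here for illustration and do not come from the paper. A unit's parts stand for its internal structure, all units at one depth stand for a collective structure, and the mapping from depth to units stands for the hierarchical structure.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class KnowledgeUnit:
    """A granule: a named unit that may decompose into smaller units."""
    name: str
    parts: List["KnowledgeUnit"] = field(default_factory=list)

    def internal_structure(self) -> List[str]:
        # Internal structure: the smaller units this unit decomposes into.
        return [part.name for part in self.parts]


def collective_structure(root: KnowledgeUnit, depth: int) -> List[str]:
    """Collective structure: all units found at the same level (depth)."""
    level = [root]
    for _ in range(depth):
        level = [child for unit in level for child in unit.parts]
    return [unit.name for unit in level]


def hierarchical_structure(root: KnowledgeUnit) -> Dict[int, List[str]]:
    """Hierarchical structure: every level, from the coarsest to the finest."""
    levels: Dict[int, List[str]] = {}
    depth, level = 0, [root]
    while level:
        levels[depth] = [unit.name for unit in level]
        level = [child for unit in level for child in unit.parts]
        depth += 1
    return levels


# Hypothetical example data, chosen only to exercise the three views.
retrieval = KnowledgeUnit("retrieval", [
    KnowledgeUnit("data retrieval"),
    KnowledgeUnit("information retrieval", [KnowledgeUnit("text retrieval")]),
    KnowledgeUnit("knowledge retrieval"),
])
```

In this sketch, reading a lower depth number corresponds to a coarser view and a higher depth number to a finer view of the same web of units.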
Knowledge is organized in hierarchies. The semantic tree is one of the main methods for knowledge representation [20], and visualized tree structures are convenient for understanding [20,22]. A semantic tree represents a hierarchical structure [20]. Knowledge can be represented as many semantic trees according to different views and understandings [6,14,20]. The knowledge structures discovered and constructed by a knowledge retrieval system should be multilevel and multiview.

The discovery of hierarchical structures is to find similar or different features of knowledge and make partitions or coverings for it. Hierarchical clustering is a practical method for knowledge structure construction [25].

The sources of knowledge may have effects on the final knowledge structures. Selecting reliable knowledge from the web environment is one of the key issues for knowledge structure generation. For example, in literature exploration, top journals and conference proceedings may be more important and valuable than some web pages.

We should not generate knowledge structures just from contents stored in files or documents. Various views should be explored. Considering literature on the web, at least the content view, structure view and usage view should be explored to generate the multiple views of knowledge structures [32].

When users know exactly what they want, a knowledge retrieval system should provide what they need in a more direct way. The retrieval results should be hierarchical. Coarser results provide general knowledge, while finer results provide detailed knowledge. Users can interact with the system to decide which level of results they want.

4. Theories and Technologies Supporting KR

It is generally believed that new ideas may be repackaging or reinterpretation of old ones [10]. As a new research field, knowledge retrieval can draw results from the following related theories and technologies:

- Theory of Knowledge: knowledge acquisition, knowledge organization, knowledge representation, knowledge
validation, knowledge management.
- Machine Learning and Knowledge Discovery: preprocessing, classification, clustering, prediction, postprocessing, statistical learning theory.
- Psychology: cognitive psychology, cognitive informatics, concept formation and learning, decision making, human-computer interaction.
- Logic and Inference: propositional logic, predicate logic, attribute logic, universal logic, inductive inference, deductive inference, associative reasoning, analogical reasoning, approximate reasoning.
- Information Technology: information theory, information science, information retrieval, database systems, knowledge-based systems, rule-based systems, expert systems, decision support systems, intelligent agent technology.
- Linguistics: computational linguistics, natural language understanding, natural language processing.

Topics listed under each entry serve as examples and do not form a complete list.

5. Applications of KR

When scientists explore the literature, they search for scientific facts and research results. The goal of a literature exploration support system is to provide those in a knowledgeable way [32]. Knowledge structures provide a high-level understanding of scientific literature and hints regarding what has been done and what needs to be done.

As a demonstration, we examined knowledge structures obtained from an analysis of papers of two rough sets related conferences [32]. Different views provide various unique understandings of the literature. By drawing results from web mining, one examines literature and provides relevant knowledge in multiple views and at multiple levels, based on the contents, structures, and usages of the literature. The main knowledge structure is constructed based on proceedings indexes, document structures and domain knowledge. The knowledge structures not only provide a relation diagram of the specified discipline, but also help researchers to find the contribution of each study and possible future research topics in rough sets [32].

Considering biomedical
literature explorations, the relationships and connections among publications related to various genes and proteins can be found based on the structure implicit in the genes and proteins. Current biomedical research is characterized by an immense volume of data, accompanied by a tremendous increase in the number of gene and protein related publications. However, many interesting links that connect facts, assertions or hypotheses may be missed because these publications are generated by many authors working independently and the functions of many genes and proteins are separately described in the literature.

In order to build knowledge structures for biomedical knowledge retrieval, a large database of abstracts, a subset of the PubMed database¹ [19], will be used as our information search space. For example, each gene is mapped to a document roughly discussing the gene's biological function. The literature database is then searched for documents similar to the gene's document. The resulting set of documents typically discusses the gene's function. Since each set corresponds to a gene, the similar document sets can be mapped back to their originating genes in order to establish functional relationships among these genes. The main knowledge structure is constructed based on: first, functional relationships among genes and proteins, on a genome-wide scale; second, the literature specifically relevant to the function of these genes and proteins; third, a short list of terms characterizing the document set, which suggests why the genes and proteins are considered relevant to each other, and what their biological functions are.

It is clear that knowledge retrieval systems can greatly enhance our understanding of genetic processes for biomedical research. The associative organization is closer to human intuition than conventional keyword matching [21].
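
The gene-to-document mapping described above can be sketched in a few lines. This is a rough illustration, not the method used in the paper: the text does not say how document similarity or set overlap is measured, so bag-of-words cosine similarity and Jaccard overlap are assumptions made here. The gene names, documents, and the threshold value are likewise invented for the example.

```python
import math
from collections import Counter
from typing import Dict, Set, Tuple


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (an assumed, deliberately simple representation)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def similar_documents(gene_doc: str, corpus: Dict[str, str], threshold: float) -> Set[str]:
    """Search the literature database for documents similar to a gene's document."""
    gene_vector = vectorize(gene_doc)
    return {doc_id for doc_id, text in corpus.items()
            if cosine(gene_vector, vectorize(text)) >= threshold}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two document sets (assumed measure of relatedness)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def gene_relationships(gene_docs: Dict[str, str], corpus: Dict[str, str],
                       threshold: float = 0.5) -> Dict[Tuple[str, str], float]:
    """Map document sets back to their genes and score each pair of genes."""
    doc_sets = {gene: similar_documents(doc, corpus, threshold)
                for gene, doc in gene_docs.items()}
    genes = sorted(doc_sets)
    return {(g, h): jaccard(doc_sets[g], doc_sets[h])
            for i, g in enumerate(genes) for h in genes[i + 1:]}


# Hypothetical toy data: three made-up genes and four made-up abstracts.
corpus = {
    "d1": "dna repair pathway damage response",
    "d2": "dna damage repair checkpoint",
    "d3": "lipid metabolism in liver cells",
    "d4": "liver lipid transport metabolism",
}
gene_docs = {
    "geneA": "dna repair damage",
    "geneB": "dna damage checkpoint repair",
    "geneC": "lipid metabolism liver",
}
relationships = gene_relationships(gene_docs, corpus)
```

With this toy data, the two DNA-repair genes retrieve the same documents and are scored as related, while the lipid-metabolism gene shares no documents with either of them.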
The visualized structures can provide medical doctors and researchers with new ideas on medicine use and production.

6. Conclusion

The main contribution of this paper is to suggest knowledge retrieval as a new research field. A comparative study of different levels of retrieval systems is given based on the data-information-knowledge-wisdom hierarchy. The results suggest that different retrieval systems focus on different levels of retrieval problems. A framework of a knowledge retrieval system has been proposed. A list of theories and technologies related to KR has been discussed. The applications of KR may have a great impact on the future development of retrieval systems, digital libraries, and the Web.

¹ The PubMed database contains over 17,000,000 scientific abstracts.

Acknowledgments

This study was supported in part by research grants from the Natural Sciences and Engineering Research Council (NSERC) of Canada. The paper was prepared when Yi Zeng was visiting the University of Regina with financial support from an NSERC discovery grant awarded to Yiyu Yao. The authors would like to thank anonymous reviewers for their constructive suggestions.

References

[1] Baeza-Yates, R. and Ribeiro-Neto, B. Modern Information Retrieval, Addison Wesley, 1999.
[2] Bellinger, G., Castro, D. and Mills, A. Data, Information, Knowledge, and Wisdom, /dikw/dikw.htm (Accessed on August 16th, 2007).
[3] Benjamins, V.R. and Fensel, D. Community is knowledge! in (KA)2, Proceedings of the 1998 Knowledge Acquisition Workshop, 1998.
[4] Berners-Lee, T., Hall, W., Hendler, J.A., O'Hara, K., Shadbolt, N. and Weitzner, D.J. A Framework for Web science, Foundations and Trends in Web Science, 2006, 1(1):1-130.
[5] Chen, B.C. and Hsiang, J. A logic framework of knowledge retrieval with fuzziness, Proceedings of the 2004 IEEE/WIC/ACM International Conference on Web Intelligence, 2004:524-528.
[6] Collins, A.M. and Quillian, M.R. Retrieval time from semantic memory, Journal of Verbal Learning and Verbal Behavior, 1969, 8:240-248.
[7] Fensel, D. and van Harmelen, F. Unifying reasoning and search to web scale, IEEE
Internet Computing, 2007, 11(2):96, 94-95.
[8] Frisch, A.M. Knowledge Retrieval as Specialized Inference, Ph.D thesis, University of Rochester, 1986.
[9] Ganter, B. and Wille, R. Formal Concept Analysis: Mathematical Foundations, Springer-Verlag, 1999.
[10] Hawkins, J. and Blakeslee, S. On Intelligence, Henry Holt and Company, 2004.
[11] Jaimes, A., Gatica-Perez, D., Sebe, N. and Huang, T.S. Human-centered computing: toward a human revolution, IEEE Computer, 2007, 40(5):30-34.
[12] Jain, R. Unified access to universal knowledge: next generation search experience, white paper, 2004.
[13] Kame, M. and Quintana, Y. A graph based knowledge retrieval system, Proceedings of the 1990 IEEE International Conference on Systems, Man and Cybernetics, 1990:269-275.
[14] Keil, F.C. Concepts, Kinds, and Cognitive Development, MIT Press, 1989.
[15] Langseth, H., Aamodt, A. and Winnem, O.M. Learning retrieval knowledge from data, Proceedings of the 1999 International Joint Conference on Artificial Intelligence Workshop ML-5: Automating the Construction of Case-Based Reasoners, 1999:77-82.
[16] Martin, P. and Alpay, L. Conceptual structures and structured documents, Proceedings of the 4th International Conference on Conceptual Structures, LNAI 1115, Springer, 1996:145-159.
[17] Martin, P. and Eklund, P.W. Knowledge retrieval and the World Wide Web, IEEE Intelligent Systems, 2000, 15(3):18-25.
[18] Oertel, P. and Amir, E. A framework for commonsense knowledge retrieval, Proceedings of the 7th International Symposium on Logic Formalizations of Commonsense Reasoning, 2005.
[19] PubMed database:
[20] Quillian, M.R. Semantic Memory, Semantic Information Processing, Minsky, M.L. (Eds.), MIT Press, 1968:216-270.
[21] Robert, H. and Alfonso, V. Implementing the iHOP concept for navigation of biomedical literature, Bioinformatics, 2005, 21:252-258.
[22] LaBrie, R.C. The Impact of Alternative Search Mechanisms on the Effectiveness of Knowledge Retrieval, Ph.D thesis, Arizona State University, 2004.
[23] Sharma, N. The Origin of the “Data Information Knowledge Wisdom” Hierarchy, /˜n sharma/dikw origin.htm (Accessed on August 16th, 2007).
[24] Sowa, J.F. Knowledge Representation: Logical, Philosophical, and Computational Foundations, Brooks/Cole, 2000.
[25] Tenenbaum, J. Knowledge Representation: Spaces, Trees, Features, Course Lecture Notes on Computational Cognitive Science, MIT Open Courseware, Fall 2004.
[26] Travers, M. A visual representation for knowledge structures, Proceedings of the 2nd annual ACM conference on Hypertext and Hypermedia, 1989:147-158.
[27] van Rijsbergen, C.J. Information Retrieval, Butterworths, 1979.
[28] White, R.W., Kules, B., Drucker, S.M. and Schraefel, M.C. Supporting exploratory search, Communications of the ACM, 2006, 49(4):37-39.
[29] Yao, Y.Y. Information retrieval support systems, Proceedings of the 2002 IEEE International Conference on Fuzzy Systems, 2002, 1092-1097.
[30] Yao, Y.Y. Web Intelligence: new frontiers of exploration, Proceedings of the 2005 International Conference on Active Media Technology, 2005:3-8.
[31] Yao, Y.Y. Three perspectives of granular computing, Journal of Nanchang Institute of Technology, 2006, 25(2):16-21.
[32] Yao, Y.Y., Zeng, Y. and Zhong, N. Supporting literature exploration with granular knowledge structure, Proceedings of the 11th International Conference on Rough Sets, Fuzzy Sets, Data Mining and Granular Computing, LNAI 4482, Springer, 2007, 182-189.
[33] Zeng, Y., Yao, Y.Y. and Zhong, N. Granular structure-based knowledge retrieval [In Chinese], Proceedings of the Joint Conference of the Seventh Conference of Rough Set and Soft Computing, the First Forum of Granular Computing, and the First Forum of Web Intelligence, 2007.
[34] Zhou, N., Zhang, Y.F. and Zhang, L.Y. Information Visualization and Knowledge Retrieval [In Chinese], Science Press, 2005.