Multithreaded systolic computation A novel approach to performance enhancement of systolic

- 格式:pdf

- 大小:288.03 KB

- 文档页数:17

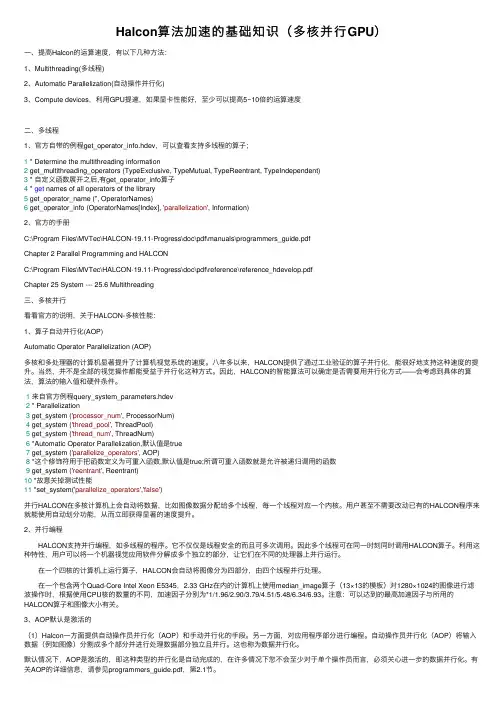

Halcon算法加速的基础知识(多核并⾏GPU)⼀、提⾼Halcon的运算速度,有以下⼏种⽅法:1、Multithreading(多线程)2、Automatic Parallelization(⾃动操作并⾏化)3、Compute devices,利⽤GPU提速,如果显卡性能好,⾄少可以提⾼5~10倍的运算速度⼆、多线程1、官⽅⾃带的例程get_operator_info.hdev,可以查看⽀持多线程的算⼦;1 * Determine the multithreading information2 get_multithreading_operators (TypeExclusive, TypeMutual, TypeReentrant, TypeIndependent)3 * ⾃定义函数展开之后,有get_operator_info算⼦4 * get names of all operators of the library5 get_operator_name ('', OperatorNames)6 get_operator_info (OperatorNames[Index], 'parallelization', Information)2、官⽅的⼿册C:\Program Files\MVTec\HALCON-19.11-Progress\doc\pdf\manuals\programmers_guide.pdfChapter 2 Parallel Programming and HALCONC:\Program Files\MVTec\HALCON-19.11-Progress\doc\pdf\reference\reference_hdevelop.pdfChapter 25 System --- 25.6 Multithreading三、多核并⾏看看官⽅的说明,关于HALCON-多核性能:1、算⼦⾃动并⾏化(AOP)Automatic Operator Parallelization (AOP)多核和多处理器的计算机显著提升了计算机视觉系统的速度。

Synthesis and catalytic performance of novel hierachically porous material beta-MCM-48for diesel hydrodesulfurizationAijun Duan •Chengyin Wang •Zhen Zhao •Zhangfa Tong •Tianshu Li •Huadong Wu •Huili Fan •Guiyuan Jiang •Jian LiuPublished online:22June 2013ÓSpringer Science+Business Media New York 2013Abstract Novel hierachically porous material Beta-MCM-48was successfully synthesized from Beta zeolite seeds by two-step hydrothermal crystallization method using Cetyltrimethylammonium Bromide as the mesostructure directing agent.Beta-MCM-48composite synthesized at the optimization conditions possessed Beta microporous struc-ture and cubic Ia3d mesoporous structure simultaneously.Meanwhile,the acidity of Beta-MCM-48was similar to Beta zeolite and higher than MCM-48mesoporous material.A series of Al 2O 3-Beta-MCM-48supported NiMo catalysts with different Beta-MCM-48contents were prepared by the incipient-wetness impregnation method.The catalytic per-formances were evaluated using DaQing Fluid Catalytic Cracking diesel as feedstock in a high pressure microreactor.Hydrodesulfurization results indicated that NiMo/Al 2O 3-Beta-MCM-48catalyst exhibited better activities than that of NiMo/Al 2O 3traditional catalyst.NiMo/Al 2O 3-Beta-MCM-48catalyst obtained the highest activity as the Beta-MCM-48content in the support was 20wt %,and the corresponding sulfur content of the hydrotreated product reached to 23.02l g g -1.Keywords Catalyst ÁHydrodesulfurization ÁMicro-mesoporous composite materials ÁBeta-MCM-48ÁDiesel oil1IntroductionIn order to promote the sustained economic development and overall social progress,environmental protection efforts were intensified in different countries.The envi-ronmental emission regulations and fuel specifications became more and more stringent,especially the sulfur content of transportation fuels was limited to a very low level [1–3].Meanwhile,the demand for ultraclean diesel is growing.The impetus to remove the sulfur in fuels became more significant for the scientific researches.Being the key technique to produce ultraclean fuels,hydrotreating pro-cess should have the appropriate catalysts with high activity,selectivity and suitable structures to realize deep hydrodesulfurization (HDS)of diesel [4].Thus,the design and development of novel catalyst materials with big pore sizes and suitable acidity attract much attention of the scientists and researchers,since large pore size can elimi-nate the diffusion resistance of reactant molecules and reasonable acidity could improve the hydrogenation and hydrogenolysis reactions of C–S bonds in sulfides [5].Mesoporous materials possess uniform pore sizes,well-defined pore arrangement,and relatively large specific surface area,which can effectively diminish the diffusion resistance,therefore these kinds of materials are considered to be the ideal support candidates for catalytic processes.Since the discovery of M41S in 1992,a variety of meso-porous materials have caught more and more attention in their synthesis and application.Many researchers applied mesoporous material MCM-41in different reactions and found it having good performances [6,7].But the char-acteristic drawbacks of MCM-41still limited its wide application,such as its weak skeleton and lower stability,and two-dimensional (2D)hexagonal (p6mm)structures with relatively lower diffusivity [8].Comparing withA.Duan ÁC.Wang ÁZ.Zhao (&)ÁT.Li ÁH.Wu ÁH.Fan ÁG.Jiang ÁJ.LiuState Key Laboratory of Heavy Oil Processing,China University of Petroleum,Beijing 102249,People’s Republic of China e-mail:zhenzhao@Z.TongGuangxi Key Laboratory of Petrochemical Resource Processing and Process Intensification Technology,Guangxi University,Nanning 530004,People’s Republic of ChinaJ Porous Mater (2013)20:1195–1204DOI 10.1007/s10934-013-9703-5MCM-41,mesoporous material MCM-48exhibits three-dimensional(3D)cubic Ia3d mesostructure which facili-tates the diffusion of large sulfur-bearing molecules throughout the pore channels without pore blockage[9,10]. Thus the suitable properties make MCM-48a potential use for hydrotreating catalysts[11].Although mesoporous materials with larger pore diam-eters will be beneficial to overcome the diffusion con-straints of large reactant molecules,their acidity and hydrothermal stability are still very low compared with the conventional supports,which are related to their amor-phous frameworks and limit their wide use in the catalytic processes.Many measures have been considered to improve the acidity and stability of mesoporous materials, and the synthesis of micro-mesoporous composite materi-als[12–14]is one of the efficient ways to adjust their physico-chemical properties since the incorporation of microporous zeolite into the configuration of micro-meso-porous materials will significantly contribute to modify the surface properties and interphase strength of the compos-ites.Many types of micro-mesoporous materials were synthesized by different methods involving the one-step and two-step hydrothermal crystallization methods with single or double template agents,postsynthesis treatments, and nonaqueous synthesis[15–17].Ooi and his coworkers[18]synthesized Beta-MCM-41 composite material by two-step crystallization method,and the composite material showed a good catalytic performance in palm oil cracking reaction.Xiao and his groups[19] synthesized mesoporous MAS-3and MAS-8aluminosili-cates using Cetyltrimethylammonium Bromide(CTAB)as template from L zeolite precursor,and the results showed that the walls of these two materials contained the structural units of L zeolite.Pinnavaia et al.[20–22]prepared the hexagonal Al-MSU-S mesoporous materials from the zeo-lite seeds of Y,Beta and ZSM-5via self-assembly method. Mazaj[23]prepared an aluminium-free Ti-Beta-SBA-15 composite through the post-synthesis incipient wet deposi-tion of Ti-Beta nanoparticles solution on the SBA-15matrix.Lima and his cooperators[24]synthesized a micro-mesoporous Beta-TUD-1composite material via seeding method,which was an effective catalyst for conversion of xylose into furfural.In this paper,a novel Beta-MCM-48hierachically por-ous composite was successfully synthesized from Beta zeolite seeds by two-step hydrothermal crystallization method using CTAB as the mesostructure directing agent. Al2O3-Beta-MCM-48supported NiMo bimetallic catalysts were also prepared.The catalytic performances were studied by using DaQing Fluid Catalytic Cracking(FCC) diesel.The physicochemical properties of materials and catalysts were characterized by various techniques.2Material and method2.1MaterialsCetyltrimethylammonium bromide(99%,CTAB)and tetraethyl orthosilicate(99%,TEOS)were purchased from Sigma-Aldrich.Tetraethylammonium hydroxide solution (25%,TEAOH)were purchased from Hangzhou Yanshan Chemical Reagent Co.Ltd,NaOH(AR grade),and Na-AlO2(AR grade)were purchased from Sinopharm Chemi-cal Reagent Co.Ltd,People’s Republic of China.All reagents were used as received without further purification.2.2Preparation of composite materials2.2.1Synthesis of beta nanocluster solutionTypical synthesis procedure of Beta nanocluster solution was as follows:0.19g of NaOH,0.23g of NaAlO2,and21.43g of TEOS were added into25.95g of TEAOH aqueous solu-tion(25%),and the mixture molar ratio of Al2O3/SiO2/ Na2O/TEAOH/H2O was 1.0/100/1.4/15/360,then the mixture was kept stirring for4h at room temperature before being transferred into an autoclave to age for12h at 140°C to obtain zeolite Beta nanocluster solution.2.2.2Synthesis of MCM-48MCM-48was synthesized by using CTAB as structure directing agent according to the literature[25].A typical synthesis procedure was as follows: 1.90g NaOH and 12.754g CTAB was dissolved in101g of H2O,the mixture was then kept stirring for1h.Finally,21.43g TEOS (containing6g SiO2)was added dropwise and kept stir-ring,the molar ratio of mixture was SiO2/CTAB/NaOH/ H2O=1/0.35/0.476/56;the mixture was stirred at room temperature for6h and then was transferred into an auto-clave for additional reaction at100°C for72h.The product of MCM-48was collected byfiltration,dried at100°C,and calcined at550°C in air for6h to remove the template.2.2.3Synthesis of beta-MCM-48compositesFor syntheses of the Beta-MCM-48composites(denoted as BM48),0.89g NaOH and4.89g CTAB was dissolved in adequate H2O,the mixture was then kept stirring for1h until CTAB was dissolved thoroughly.Finally,36g zeolite nanocluster(equivalent to the SiO2containing of6g)was added and kept stirring,then acetic acid was used to adjust pH value of the mixture solution until reached to11. The molar ratios of mixture were Al2O3/SiO2/CTAB/H2O=1/100/13/11300.The mixture was stirred at room temperature for6h and then transferred into an autoclave for additional hydrothermal treatment at110°C for48h. The product of BM48was obtained by means offiltration, dried at110°C,and calcined at550°C in air for6h to remove the templates.2.2.4Preparation of H-BM48The protonated form of BM48was ion-exchanged two times with1.0M NH4Cl at80°C for1h before being dried and calcined at550°C in air for4h.2.3Preparation of supportsThe corresponding supports were obtained by mechanical mixing of BM48and Al2O3.The supports were denoted as Al2O3-BM48-x%,where x represents different mass fractions of BM48in composite supports.2.4Preparation of NiMo supported catalystsThe Al2O3-BM48and Al2O3supported NiMo catalysts were prepared by two-step incipient-wetness impregnation method with aqueous solutions of ammonium heptamo-lybdate and nickel nitrate.After each impregnation step,the prepared catalyst precursors were dispersed in an ultra sonic bath for30min to assist the dispersion of active metals on the surface of composite supports.The prepared samples were dried at 110°C for12h,and calcined at550°C for4h.The supported catalysts were obtained with the constant amounts of Mo(MoO310wt%)and Ni(NiO3.5wt%).2.5Characterization of supports and catalystsNitrogen adsorption and desorption measurements were performed at liquid nitrogen temperature of-196°C on a Micromeritics ASAP2020system.The samples were outgassed under vacuum at350°C for4h prior to mea-surement.Total surface area was calculated according to the BET method.Meso-and micropore volumes were determined by the BJH and t-plot methods(desorption).Powder X-ray diffraction(XRD)patterns were recorded on an XRD-6000powder diffractometer under40kV and 30mA in the2h interval of5°–50°with a step size of0.02°and scan rate of1°min-1using Cu K a radiation.Transmission electron microscopy(TEM)was per-formed on a Philips Tecnai G2F20equipment with an accelerating voltage of300kV.A few mg of the powdered samples was suspended in2ml ethanol,and the suspension was treated in a sonicator for1h.Then,the suspension needed to be settled for15min,before a drop was taken and dispersed on a300mesh copper grid coated with holey carbonfilm.FT-IR spectra were obtained in the wave number range from1,750–400cm-1via an FTS-3000spectrophotometer. The measured wafer was prepared with the weight ratio of sample to KBr for1/100.The nature of acid sites of the materials was tested by pyridine-adsorbed Fourier transformed infrared spectros-copy(Py-FTIR)experiments using a MAGNAIR560FTIR instrument(Nicolet Co.,America)with a resolution of 1cm-1,The samples were dehydrated at500°C for5h under a vacuum of1.33910-3Pa,followed by adsorp-tion of purified pyridine vapor at room temperature for 20min.The system was then degassed and evacuated at different temperatures,and the IR spectra were recorded.H2-TPR was tested on a self-assembly instrument with a TCD detector.For the TPR experiments,the samples were pretreated in situ at550°C for1h and then cooled down to room temperature under Ar stream.The reduction step was performed with an H2/Ar mixture(10v%Ar)at a constant flow rate of40mL min-1with a heating rate of 10°C min-1from room temperature to1,000°C.27Al MAS NMR spectra were recorded with a Bruker Avanced III500MHz spectrometer.The27Al spectra were obtained at130.327MHz with a0.9l s pulse width,6l s delay time and12kHz spinning speed.3Experimental procedureThe HDS performances of FCC diesel were evaluated in a fixed-bed inconel reactor with2g catalysts.All the cata-lysts were presulfided by2wt%CS2-cyclohexane and H2 mixture for4h at the temperature of320°C and the pressure of4MPa.After catalyst presulfurization,the catalyst was evaluated under the traditionally commercial conditions with temperature of350°C,the pressure of 5.0MPa,H2/oil ratio of600mL mL-1and LHSV of 1.0h-1.Then the catalytic HDS performance of different catalysts could be evaluated for their desulfurization effi-ciencies of diesel.The typical properties of diesel were listed in Table1.The total sulfur contents in the feed and products were measured by using a LC-4coulometric sulfur analyzer system.The catalytic activity was estimated by HDS effi-ciency,which is defined as follows:HDS%effi-ciency=[(S f-S p)/S f]9100%;where S f and S p are the sulfur concentrations in feed and product respectively.4Results and discussion4.1The effect of synthesis conditions for structureof materials4.1.1The effect of H2O/SiO2The amount of water used in the initial synthesis system can significantly affect the silica framework,and H2O/SiO2 ratio was an important factor on the material structure[26, 27].In this paper,the effect of H2O content was investi-gated with molar gel composition of Al2O3/SiO2/CTAB/ H2O=1/100/13/7300-13300.XRD profiles of the as-synthesized samples are depicted in Fig.1a.It’s observed that when H2O/SiO2ratio was73,the synthesized material was not well-ordered;as the H2O/SiO2ratio increased to 93,MCM-41formed;further increased of H2O/SiO2ratio to113,well-ordered cubic MCM-48structure was found, indicating that the formation of long-range order of cubic Ia3d structure of MCM-48was motivated by higher H2O/ SiO2.While H2O/SiO2ratio exceeded133,the ordering degree decreased.This may be related to that if the H2O/ SiO2ratio was low,high SiO2concentration could lead to a rapid polymerization of the silicate species,and the incomplete hydrolysis resulted in linear chain formation with residual organic groups[26].This would weaken the electrostatic interaction between silicon-aluminum aggre-gate and micelles which was the necessary impetus to the assembly of mesoporous material according to the poly-merization-assembly-arranging speculation[28],so the ordering degree would decrease.Oppositely,at higher molar ratio of H2O/SiO2,the hydrolysis proceeded faster, while the polymerization and condensation of the silicate species took place too slow,which could also decrease the ordering degree of mesoporous material.4.1.2The effect of CTAB/SiO2The influence of CTAB concentration was investigated with molar gel composition of Al2O3/SiO2/CTAB/H2O=1/100/ x/11300,where x=6,13,20and27.XRD profiles of the final samples are shown in Fig.1b.It was observed that when the CTAB concentration was low,XRD pattern showed the typical peaks of the hexagonal MCM-41struc-ture;by increasing the CTAB/SiO2ratio from0.13to0.20,XRD patterns showed the characteristic peaks assigned to the reflections of the cubic structure between3and5°indicating the formation of well-ordered cubic MCM-48. When the CTAB concentration further increased to0.27,the structure of lamellar phase was observed.The phase transitions from hexagonal to cubic and lamellar mesophases as the increase of CTAB/SiO2ratio may be attributed to that the system couldn’t form enough micelles to direct mesoporous structure effectively at low CTAB con-centration.On this occasion,parts of negative ions could convert into amorphous aluminosilicates,which resulted in the decrease of ordering degree.Oppositely,high contents of CTAB produced more CTA?cations,furthermore,based on the charge density matching principle,the correspondingly charge density of inorganic negative anions increased,leading to a higher accumulation of surfactants,which inhibited the efficient assembly and regular array of silicon-aluminum aggregate to form mesoporous configurations[29,30].4.1.3Influence of pH of the gelThe influence of pH value was investigated with molar gel composition of Al2O3/SiO2/CTAB/H2O=1/100/13/11300. Corresponding XRD profiles were depicted in Fig.1c.It can be observed that when pH value was10,well-ordered cubic MCM-48formed;if pH values of the system were too high or too low both do harm to the formation of MCM-48. Meanwhile,the polymerization/depolymerization equilib-rium of the silicate species maintained well at the pH value of10.However,as pH increased,silicon-aluminum aggre-gate would exist in the form of monomer with high charge density,in which the depolymerization effect was weak, resulting in the decrease of ordering degree.When pH became lower,the excessive depolymerization effect led to the decrease of charge density of silicon-aluminum aggre-gate.This could restrain the necessary electrostatic interac-tion between silicon-aluminum aggregate and micelles in process of assembling mesoporous material,which was also disadvantageous to the ordering degree of materials[31,32].4.1.4Influence of SiO2/Al2O3of beta precursorThe influence of SiO2/Al2O3was investigated with molar gel composition of Al2O3/SiO2/CTAB/H2O=x/100/13/ 11300.XRD profiles of thefinal samples are depicted inTable1The typical properties of diesel feedstockProperties Density@20°C(g cm-3)S(l g g-1)N(l g g-1)Distillation/°CIBP30%50%70%FBPData0.87981,290920158212238279374Fig.1d.It can be observed that as the SiO2/Al2O3of beta precursor increased,the crystallinity of mesoporous material increased,when the SiO2/Al2O3=100,the crystallinity was the highest.Oppositively,the intensity of Beta diffraction peak became lower with the increase of SiO2/Al2O3ratio.Briefly,high SiO2/Al2O3ratio favored for the assembly of mesopore structure,but was unfavorable to the synthesis of microspore Beta zeolite [33].This might be explained by that,as SiO2/Al2O3 decreased,the hydrolytic action of aluminum became increased,so the pH value of system decreased and lower pH value could result in the inhibition of the necessary electrostatic interaction between silicon-aluminum aggregate and micells,which was bad to form an order material.Based on the above discussion,BM48microporous-mesoporous material could be synthesized at the optimal conditions of Al2O3/SiO2/CTAB/H2O=1/100/13/11300; pH=10;crystallized at110°C for3d.The optimized sample of BM48was characterized by many techniques to obtain the typical physico-chemical property,involving its structure and acid distribution.Furthermore,BM48was used as the catalyst additive to prepare NiMo bimetallic supported hydrotreating catalyst and the corresponding HDS performance was evaluated in a microreactor.4.2Characterizations of materials4.2.1XRD analysis of materialsFigure2is XRD of the synthesized samples.As shown in Fig.2a,the XRD patterns in the range of small angles of the MCM-48sample displayed a distinct diffraction sharp peak indexed as(211);simultaneously,a peak indexed as (220)and a series of overlapping peaks indexed as(321) (400),(420)and(332)also appeared.It clearly indicated that MCM-48had a well-ordered mesoporous structure which belonged to the bicontinuous cubic space group of Ia3d[29].According to the location of(211),the unit cell parameter a0of MCM-48was7.78nm.The diffraction peaks of BM48sample in Fig.2agreed well with those of MCM-48sample,indicating that it also had a typical cubic mesoporous structure.The peak intensities of BM48were relatively lower than those of MCM-48,which might be ascribed to that zeolite nanoclusters with bigger crystal sizes were more difficult to be assembled by the meso-structure directing agent[34].The large angle X-ray diffraction patterns of samples are shown in Fig.2b.It’s observed that both BM48and Beta zeolite showed very intensive diffraction peaks at7.6°and 22.4°indexed as the characteristic peaks of zeolite Beta[35],which meant that BM48material had the microporous structure of Beta zeolite.The peak intensities of BM48were comparatively weaker since the crystallization time of Beta nanocluster emulsion was shorter than that of Beta zeolite. However,the pure MCM-48exhibited no sharp peak in the high angle diffraction pattern.Briefly,it’s concluded that BM48was a hierachically porous composite containing MCM-48and Beta zeolite structures all in one.4.2.2N2adsorption analysis of materialsFigure3a shows N2adsorption–desorption isotherms of different materials.Obviously,the isotherm of zeolite Beta belonged to the typical type I,while both of MCM-48and BM48showed the type IV isotherm and H1-type hysteresis loop,indicating that they both had mesoporous structure [36].The hysteresis loop of BM48was lower,but more sharper than that of MCM-48,indicating BM48a very narrow range of pore size distribution(PSD).Figure3b shows PSD curve of materials,the pore size distribution of materials showed a binary pore pattern,while the existence of the pore size value of2.4nm which was ascribed to mesoporous pore of particles and the pore size value of3.8nm was attributed to the pore between particles, it’s obvious that MCM-48and BM48both showed these two types pores in PSD,indicating that the structure of BM48 held the characteristics of mesoporous MCM-48.On the contrary,Beta showed the characteristics of microporous zeolite and no ordered mesopore structure was found in its PSD.However,in comparison with pure mesoporous material of MCM-48,BM48micro-mesoporous material showed a narrow PSD and the mean pore size was2.3nm, which was a little smaller than that of MCM-48.Table2shows the textural and structural properties of materials calculated form adsorption isotherm.As shown in Table2,both BM48and MCM-48exhibited high surface areas,even higher than that of Beta.Due to the contribution of Beta zeolite,BM48had relatively higher V mic/V mes than MCM-48.Moreover,compared with MCM-48,BM48had a wider pore diameter and thicker pore wall,which might be derived from the assembling of the zeolite primary unit into the mesoporous framework of MCM-48[8].4.2.3FT-IR spectra result of materialsFigure4shows the FT-IR spectra of materials.As is shown, in the spectrum of MCM-48,the bands centered at*460, 810and1,030cm-1,which belonged to the vibrations of Si–O–Si[37],were observed.The framework vibration spectrum of BM48was similar to that of MCM-48,indicatingthat BM48had similar skeletal structure as MCM-48.However,other two distinct bands at 520and 570cm -1,which could be assigned to the six-and five-member rings presented in the structure of zeolite Beta [35],were observed in the FT-IR spectra of BM48and Beta.But the intensities of bands in BM48were much lower than those of MCM-48,which agreed with the results of XRD and TEM analysis.This result indicated that there was some Beta zeolite precursors assembled in the BM48material.4.2.4TEM characterizations of materialsTEM images of materials are shown in Fig.5.It can be seen that MCM-48showed well ordered cubic 3D meso-porous channels [26].According to the scale of image,the pore of 2–3nm was clearly visible,in coincident with the results of N 2adsorption and XRD analysis.Similar to the channels of MCM-48,BM48possessed a typical Ia3d cubic structure.The average sizes of beta in BM48were about 5nm.The wall thickness of BM48was much lessthan 5nm,thus these Beta micro-crystal particles couldn’t be introduced into the wall configuration of BM48but existed as single nanoparticles.Therefore,it could also be deduced that only Beta precursor units existed in the wall framework of BM48.4.2.527Al MAS NMR measurementFigure 6was the 27Al MAS NMR spectra of materials.As is shown,the spectrum of MCM-48only contained a peak at *54ppm,which was generally ascribed to aluminum species in tetrahedral surroundings (AlO 4structural unit)situated in the framework [27].However,the spectra of zeolite Beta and BM48displayed two sharp peaks at *54and 0ppm.The signal at 0ppm was assigned to the octahedrally coordinated non-framework aluminum species (AlO 6structural unit).It’s observed that the distribution of chemical shift of tetrahedral aluminum in Al-MCM-48was broad,indicating that the Al coordination surrounding was composite,and the signal at 0ppm was very weak,dem-onstrating that only tetrahedral aluminum species existed in Al-MCM-48.The distributions of chemical shift of tetra-hedral aluminum in BM48and Beta were concentrated,and the intensities of peak were very strong.Meanwhile,both spectra of BM48and Beta showed weak signal at 0ppm.In brief,the aluminum coordination surrounding of BM48was similar to that of Beta,therefore,it is possible to deduce that some Al species in zeolite Beta nanocrystals were fixed into BM48framework.4.2.6Pyridine-FTIR of materialTable 3lists the acid strength distributions and the acid quantity of the samples was calculated from the results of pyridine-adsorbed IR spectra degassed at 200and 350°C.The peaks at 1,446and 1,577cm -1were attributed to pyridine adsorbed on L acid sites,and the peak atTable 2Textural properties of different materials Samples S BET (m 2g -1)a V t (cm 3g -1)b V mes (cm 3g -1)c V mic (cm 3g -1)d V mic /V mes a 0(nm)e d BJH (nm)f b w (nm)g MCM-48928.70.740.760.340.457.3 2.5 1.3BM48907.50.660.580.450.788.5 2.2 1.6Beta 5020.34–0.22–––Al 2O 3206.80.48a Surface area was calculated by the BET method with a relative pressure (P/P 0)range of 0.05–0.30in the adsorption isothermb The total pore volume was obtained at a relative pressure (P/P 0)of 0.98c Mesopore volumed Micropore volumee XRD unit cell parameter (a 0)f d BJH is the mesopore diametergb w is the wall thickness,the thickness of the mesoporous materials were evaluated using the formula t =[1-(V p q )/(1?V p q )](a 0/x 0),where V p is the pore volume,q is the density of the pore wall (2.2g/cm 3),a 0is the unit cell parameter,and x 0is a constant (3.0919)1,546cm -1was assigned to pyridine adsorbed onto B acidsites,while the peak at 1,490cm -1was ascribed to pyri-dine adsorbed onto both B and L acid sites.The results showed that BM48possessed much larger amounts of total acidity,especially higher amounts of medium and strong acid sites than MCM-48,and the acidity level was similar to that of Beta.4.3Catalytic activityHDS performances of NiMo series catalysts for FCC diesel are shown in Fig.7.Obviously,the addition of BM48tothe catalyst support could improve the hydrotreating per-formances under the same traditional conditions,and the evaluation results proved that the catalyst of NiMo/Al 2O 3-BM48–20%with 20wt%BM48in the support exhibited the highest HDS conversion,which could reach 98.1%,Meanwhile,all the relative catalytic activities of catalysts were much better than that of traditional NiMo/Al 2O 3supported catalyst(96.7%).The highest catalytic activities of NiMo/Al 2O 3-BM48-20%catalyst might be attributed to the following reasons:(1)the proper introduction of BM48could increase the specific surface area and total pore volume of the catalysts,which were beneficial to the higher dispersion of active metals.However,the average pore diameters decreased with the BM48amount increased,drastic shrinkage of pore diameter was harmful to the diffusion of large molecules such as the steric hindered alkyl-substituted DBTs [38].Therefore,the introductionofFig.5TEM images of MCM-48and BM48Table 3Amounts of Bro¨nsted and Lewis acid sites determined by pyridine-FTIR of the catalysts Samples200°C/l mol g -1350°C/l mol g -1LB L ?B L B L ?B BM48231.0100331.0226.2100.0226.2MCM-48186.90186.975.0075.0Beta252.3145.1397.4247.6128.5376.1Degassed temperatures at 200and 350°C represented the amounts of total and strong acid sites respectivelyNote :L and B stands for Lewis and Bro¨nsted acid。

多用户MIMO-MEC网络中基于APSO的任务卸载研究顾敏;徐雅男;王辛迪;花敏;周雯【期刊名称】《无线电工程》【年(卷),期】2024(54)3【摘要】在移动边缘计算(Mobile Edge Computing,MEC)系统中引入多输入多输出(Multiple Input Multiple Output,MIMO)技术与数据压缩技术,能够降低数据冗余度和提高数据传输速率,从而降低任务的执行时延与能耗。

针对具备数据压缩功能的多用户MIMO-MEC网络,研究了多用户任务卸载问题。

通过联合优化任务卸载比例、数据压缩比例、发送功率、计算频率和信道带宽,来最小化系统总时延。

在能耗、功率和带宽等约束条件下,将任务卸载归纳为一个非凸优化问题。

由于能耗约束较为复杂,构造罚函数将其归并,得到一个相对简单的等价问题。

将所有优化变量视为一个粒子,基于自适应粒子群优化(Adaptive Particle Swarm Optimization,APSO)框架提出多用户的任务卸载方法。

由于粒子更新时可能违反约束条件,提出的方法对粒子越界的情形进行了特别处理。

该方法能自适应地调整惯性权重来提高寻优能力和收敛性,通过不断迭代最终获得最优或者次优解。

仿真实验评估了所提卸载方法的性能,分析了用户数、任务计算强度等参数对系统性能的影响。

结果表明,提出的方法优于本地计算、传统粒子群优化(Particle Swarm Optimization,PSO)算法等对比方案,能够有效降低系统的任务执行时延。

【总页数】8页(P711-718)【作者】顾敏;徐雅男;王辛迪;花敏;周雯【作者单位】南京林业大学信息科学技术学院;安徽大学互联网学院【正文语种】中文【中图分类】TN929.5;TP18【相关文献】1.基于延迟接受的多用户任务卸载策略2.基于稳定匹配的多用户任务卸载策略3.基于深度强化学习多用户移动边缘计算轻量任务卸载优化4.基于深度强化学习的多用户边缘计算任务卸载调度与资源分配算法5.基于博弈论的多服务器多用户视频分析任务卸载算法因版权原因,仅展示原文概要,查看原文内容请购买。

关于序列二次规划(SQP)算法求解非线性规划问题研究兰州大学硕士学位论文关于序列二次规划(SQP)算法求解非线性规划问题的研究姓名:石国春申请学位级别:硕士专业:数学、运筹学与控制论指导教师:王海明20090602兰州大学2009届硕士学位论文摘要非线性约束优化问题是最一般形式的非线性规划NLP问题,近年来,人们通过对它的研究,提出了解决此类问题的许多方法,如罚函数法,可行方向法,Quadratic及序列二次规划SequentialProgramming简写为SOP方法。

本文主要研究用序列二次规划SOP算法求解不等式约束的非线性规划问题。

SOP算法求解非线性约束优化问题主要通过求解一系列二次规划子问题来实现。

本文基于对大规模约束优化问题的讨论,研究了积极约束集上的SOP 算法。

我们在约束优化问题的s一积极约束集上构造一个二次规划子问题,通过对该二次规划子问题求解,获得一个搜索方向。

利用一般的价值罚函数进行线搜索,得到改进的迭代点。

本文证明了这个算法在一定的条件下是全局收敛的。

关键字:非线性规划,序列二次规划,积极约束集Hl兰州人学2009届硕二t学位论文AbstractNonlinearconstrainedarethemostinoptimizationproblemsgenericsubjectsmathematicalnewmethodsareachievedtosolveprogramming.Recently,Manyasdirectionit,suchfunction,feasiblemethod,sequentialquadraticpenaltyprogramming??forconstrainedInthisthemethodspaper,westudysolvinginequalityabyprogrammingalgorithm.optimizationproblemssequentialquadraticmethodaofSQPgeneratesquadraticprogrammingQPsequencemotivationforthisworkisfromtheofsubproblems.OuroriginatedapplicationsinanactivesetSQPandSQPsolvinglarge-scaleproblems.wepresentstudyforconstrainedestablishontheQPalgorithminequalityoptimization.wesubproblemsactivesetofthesearchdirectionisachievedQPoriginalproblem.AbysolvingandExactfunctionsaslinesearchfunctionsubproblems.wepresentgeneralpenaltyunderobtainabetteriterate.theofourisestablishedglobalconvergencealgorithmsuitableconditions.Keywords:nonlinearprogramming,sequentialquadraticprogrammingalgorithm,activesetlv兰州大学2009届硕士学位论文原创性声明本人郑重声明:本人所呈交的学位论文,是在导师的指导下独立进行研究所取得的成果。

基于GPU的zk-SNARK中多标量乘法的并行计算方法王锋;柴志雷;花鹏程;丁冬;王宁【期刊名称】《计算机应用研究》【年(卷),期】2024(41)6【摘要】针对zk-SNARK(zero-knowledge succinct non-interactive argument of knowledge)中计算最为耗时的多标量乘法(multiscalar multiplication,MSM),提出了一种基于GPU的MSM并行计算方案。

首先,对MSM进行细粒度任务分解,提升算法本身的计算并行性,以充分利用GPU的大规模并行计算能力。

采用共享内存对同一窗口下的子MSM并行规约减少了数据传输开销。

其次,提出了一种基于底层计算模块线程级任务负载搜索最佳标量窗口的窗口划分方法,以最小化MSM子任务的计算开销。

最后,对标量形式转换所用数据存储结构进行优化,并通过数据重叠传输和通信时间隐藏,解决了大规模标量形式转换过程的时延问题。

该MSM并行计算方法基于CUDA在NVIDIA GPU上进行了实现,并构建了完整的零知识证明异构计算系统。

实验结果表明:所提出的方法相比目前业界最优的cuZK的MSM计算模块获得了1.38倍的加速比。

基于所改进MSM的整体系统比业界流行的Bellman提升了186倍,同时比业界最优的异构版本Bellperson提升了1.96倍,验证了方法的有效性。

【总页数】8页(P1735-1742)【作者】王锋;柴志雷;花鹏程;丁冬;王宁【作者单位】江南大学人工智能与计算机学院;江南大学物联网工程学院;江苏省模式识别与计算智能工程实验室【正文语种】中文【中图分类】TP311;TP309.7【相关文献】1.基于GPU的域乘法并行算法的改进研究2.基于GPU的车辆-轨道-地基土耦合系统3D随机振动并行计算方法3.基于CPU-GPU异构的电力系统静态电压稳定域边界并行计算方法4.一种基于GPU的核苷酸分子系统发育树条件似然概率可扩展并行计算方法5.基于Seed-PCG法的列车-轨道-地基土三维随机振动GPU并行计算方法因版权原因,仅展示原文概要,查看原文内容请购买。

1.1 What are the three main purposes of an operat ing system?1 To provide an en vir onment for a computer user to execute programs on computerhardware in a convenient and ef ?cie nt manner.2 To allocate the separate resources of the computer as n eeded to solve the problemgive n. The allocati on process should be as fair and ef ?cie nt as possible.3 As a control program it serves two major functions: (1) supervision of the execution of user programs to preve nt errors and improper use of the computer, and (2) man age- ment of the operati on and con trol of I/O devices.环境提供者,为计算机用户提供一个环境,使得能够在计算机硬件上方便、高效的执行程序资源分配者,为解决问题按需分配计算机的资源,资源分配需尽可能公平、高效控制程序监控用户程序的执行,防止岀错和对计算机的不正当使用管理I/O设备的运行和控制1.2 List the four steps that are n ecessary to run a program on a completely dedicatedmachi ne.An swer: Gen erally, operati ng systems for batch systems have simpler requireme nts tha n for pers onal computers. Batch systems do not have to be con cer ned with in teract ing with a useras much as a personal computer. As a result, an operating system for a PC must be concernedwith resp onse time for an in teractive user. Batch systems do not have such requireme nts.A pure batch system also may have not to handle time sharing,whereas an operating systemmust switch rapidly betwee n differe nt jobs.木有找到中文答案1.6 Define the esse ntial properties of the follow ing types of operat ing systems:a. Batchb. In teractivec. Time shar ingd. Real timee. Networkf. Distributeda. Batch. Jobs with similar n eeds are batched together and run through the computer as a group by an operator or automatic job seque ncer. Performa nee is in creased by attempting to keep CPU and I/O devices busy at all times through buffering, off-line operati on, spooli ng, and multiprogram ming. Batch is good for executi ng large jobs that need little interaction; it can be submitted and picked up later.b. In teractive. This system is composed of many short tran sacti ons where the results of the n ext tran sacti onmay be un predictable. Resp onse time n eeds to be short (sec on ds) since the user submits and waits for the result.c. Time shar in g.Thissystemsuses CPU scheduli ng and multiprogram ming to provide econo mical in teractive use of a system. The CPU switches rapidly from one user toano ther. In stead of hav ing a job de? ned by spooled card images, each program readsits next control card from the terminal, and output is normally printed immediately to the scree n.d. Real time. Often used in a dedicated application, this system reads information fromsen sors and must resp ond with in a ?xed amou nt of time to en sure correct perfor-man ce.e. Network.f. Distributed.This system distributes computati on among several physical processors.The processors do not share memory or a clock. In stead, each processor has its own local memory. They commu ni cate with each other through various commu ni cati on lin es, such as a high-speed bus or teleph one line.a. Batch相似需求的Job分批、成组的在计算机上执行,Job由操作员或自动Job程序装置装载;可以通过采用buffering, off-line operation, spooling, multiprogramming 等技术使CPU 禾口I/O不停忙来提高性能批处理适合于需要极少用户交互的Job。

多队列VC调度算法研究 吴舜贤 刘衍珩 田明 吉林大学计算机科学与技术学院,吉林 长春 130012 E-mail:wushxian@摘 要 本文首先分析了VC和GPS/PGPS分组调度算法的优点和缺点,在此基础上提出了一种具有GPS调度算法特性的多队列VC调度算法MQVC。

完整阐述了MQVC的设计目标、改进措施,并给出了MQVC算法模型和算法描述,通过定理和引理证明了该模型比单队列VC 和PGPS调度算法模型在实现复杂度、系统调度性能和包的丢失等方面的明显改善。

网络仿真结果显示该算法具有良好的服务质量性能。

关键词 调度算法,虚拟时钟,MQVC,GPS,服务质量。

中图分类号:TP393.02 文献标识码:A1引言 在高速路由器技术研究中,核心部分之一是路由器中分组调度算法研究[1]。

通过对调度算法的综合比较[2]发现集成服务网络分组调度算法中,VC (Virtual Clock) [3,4]和GPS (Generalized Processor Sharing)/PGPS(Packet-by-packet Generalized Processor Sharing)[5]是两种比较典型的调度模型。

VC算法是一种基于TDM的统计时分的服务模型。

文献[6]认为VC调度算法是 “不公平”的,但文献[3,4,7,8]从理论和仿真结果表明它的有效性、可行性和优越性,认为VC算法是一种能够有效满足一定的服务速率保证、时延保证和流隔离监视的调度算法。

相对于本文提出的算法模型,称原来的VC 算法为单队列VC调度算法。

GPS调度算法是一种理想的绝对的基于流的公平队列算法,在现实条件下是不可实现的,对GPS的模拟演化出一系列调度算法,如WFQ[1]、PGPS[5]、WF2Q[9]、WF2Q+[10]、SFQ[11]等,其最大差异在于虚拟时间定义和调度条件选定。

PGPS是GPS的一种较好的逼近模拟。

当前对VC算法主要的改进研究有前跳虚拟时钟[12]、核心无状态虚拟时钟[13]及基于虚拟时钟的接纳控制技术[14]等。

关于aps多线程仿真的若干问题释疑最近坛子里经常有人问aps的问题,能够给出明确答案的不多,因此有必要把我了解的一些信息共享一下,也为坛子做点贡献。

本贴内容在机缘巧合下,均经过亲手试用或者请教cadence技术支持人员,可靠性比较高。

1. 什么是aps。

aps=Advanced Parallel Simulator, 即先进并行仿真,可以利用多核心多线程cpu,进行多线程并行仿真,大大提高仿真速度,提高的速度理论上最高可达到n,n是并发线程的个数。

当然这是理论速度,从一些仿真测试来看,达到n/4到n/2的速度提升还是比较靠谱的。

2. aps支持的仿真类型。

MMSIM7.2早期的版本只能支持tran、dc等少数几个仿真,MMSIM7.2的2011年之后的ISR确定可以支持几乎全部spectre支持的仿真,如pss等,2011年之前ISR尚不确定。

MMSIM10.1的Base版本就可以支持几乎全部spectre仿真。

3. IC平台版本对图形界面调用aps的影响早期的IC平台,仿真器是集成在IC平台里边的,从IC6开始,仿真器从IC平台里边独立出来,叫做MMSIM,包括spectre、AMS、Ultrasim等等。

IC514平台也可以调用MMSIM的仿真器,比如spectre等。

但是调用aps就会有问题,或者没有aps选项,或者aps功能不全,原因就是此IC平台发布的时候,aps还没有发布。

所以,IC5141的U1~U6在图形界面中都没有aps的选项;IC60~IC613的Base版本也都没有aps选项,IC614 Base版本有aps选项,但只能仿真tran和dc(即使MMSIM本身支持其他仿真)。

IC615及其以后的版本,都能在图形界面中完整支持aps。

以上IC514-IC61系列2009年后期到2011年前的版本,包括ISR、hotfix或者update,可以支持在图形界面通过simulator/directory/host菜单切换仿真器来调用aps,但是可能无法调用全部功能;2011年之后的ISR、hotfix或者update,都能够支持在spectre界面直接调用aps,而无需通过simulator/directory/host菜单切换仿真器,特征就是ADE界面具有Setup->High-performance sumulaiton菜单,打开这个菜单,在选项中点中aps,并在Multithreading options 选项中选中auto,即可开启多线程仿真。

PPAT:一种Pthread并行程序线程性能分析工具温莎莎;刘轶;刘弢;宋平;李博;钱德沛【摘要】随着多核/众核处理器技术的快速发展,程序需要越来越多地采用多线程并行技术以提升性能。

随着线程个数的增多,线程并行运行过程中相互间同步/互斥及资源竞争关系更加复杂,导致程序性能优化的难度增大。

为了使编程人员直观地了解线程的动态运行过程,特别是线程间同步及资源共享带来的影响,帮助其进行程序性能优化,设计实现了一种面向Pthread的并行程序线程性能分析工具PPAT(Pthreadsprogramanalysistool),该工具可在程序运行过程中动态获取线程运行及线程间互斥/同步信息,生成线程通信图,并以多种可视化的方法显示,为编程人员优化程序性能提供依据。

【期刊名称】《计算机应用与软件》【年(卷),期】2012(000)011【总页数】6页(P43-47,115)【关键词】众核处理器;多线程;资源共享;资源竞争;性能优化【作者】温莎莎;刘轶;刘弢;宋平;李博;钱德沛【作者单位】北京航空航天大学计算机学院北京100191;北京航空航天大学计算机学院北京100191;北京航空航天大学计算机学院北京100191;北京航空航天大学计算机学院北京100191;北京航空航天大学计算机学院北京100191;北京航空航天大学计算机学院北京100191【正文语种】中文【中图分类】TP310 引言近年来,多核处理器技术快速发展,并呈现向众核方向发展的趋势。

Intel以及AMD都相继推出了多核的高端处理器,Intel的Knights Corner处理器核数可以达到50核,甚至更多。

与此同时,Pthreads、OpenMP、MPI这些传统的并行编程语言已日渐成熟,CIlk、TBB等一些并行编程模型也逐渐面向市场。

在这些并行编程语言及模型中,多线程仍是实现并行的主要方式。

线程的分配数量、任务的派发以及在硬件资源上的调度与并行程序的执行性能密切相关。

Multithreaded systolic computation: A novel approach to performance enhancement of systolic arrays R. Sernec, M. Zajc Digital Signal Processing Laboratory University of Ljubljana Slovenia

Abstract

In this paper we propose a synergy of processing on parallel processor arrays (systolic or SIMD) and multithreading named multithreaded systolic computation. Multithreaded systolic computation enables simultaneous execution of independent algorithm data sets, or even different algorithms, on systolic array level. This approach results in higher throughput and improved utilization of programmable systolic arrays, when processing elements are designed with pipelined functional units. The real benefit of multithreaded systolic computation is that it increases the throughput of systolic arrays without changing systolic algorithm.

The multithreaded systolic computation principle is demonstrated on a programmable systolic array executing a set of linear algebra algorithms. A cycle accurate simulation presents throughput improvement results for three different processing element models. We demonstrate that multithreaded systolic computation can provide throughput improvements that asymptotically approach the number of simultaneously executable threads.

Povzetek V članku je predstavljen pristop, ki združuje vzporedna procesorska polja ter večnitnost, imenovan večnitno sistolično računanje. Večnitno sistolično računanje pomeni hkratno izvajanje neodvisnih podatkovnih nizov istega algoritma ali več različnih algoritmov na sistoličnem polju. Pristop omogoča povečanje prepustnosti in boljšo izrabo programljivih sistoličnih polj s cevovodnimi funkcionalnimi enotami. Prednost podanega pristopa je povečanje prepustnosti brez spreminjanja sistoličnega algoritma.

Večnitno sistolično računanje je predstavljeno na programljivem sistoličnem polju, ki izvaja nabor algoritmov linearne algebre. Povečanje prepustnosti sistoličnega polja predstavljajo rezultati simulacij treh različnih modelov procesorskih elementov. Izboljšanje prepustnosti se asimptotično približuje številu niti.

Keywords: multithreading, homogeneous processor array, systolic array, systolic algorithm, linear algebra. Ključne besede: večnitnost, homogena procesorska polja, sistolični algoritem, linearna algebra. 1 Introduction Modern digital signal processing (DSP) and image processing applications depend on high processing throughput and massive data bandwidths [1, 2]. DSP, image processing as well as linear algebra algorithms have found an efficient implementation medium in parallel computing domain. These algorithms can be efficiently mapped onto array processors (systolic arrays, wavefront arrays and SIMD arrays), due to their regularity on data level [3, 4, 5]. The data parallelism property allows us to simultaneously trigger execution on the whole processor array with the same instruction, whereby each processing element (PE) operates on its own data element or data set.

Systolic arrays (SA) were designed to tackle fine-grained computation and exploit data level parallelism of these problems directly [4]. They feature a very homogeneous (regular) design and consist of a number of identical processing elements. These are all ideal features for system-on-chip implementations [6].

Designing systolic algorithms begins with parallelisation of a sequential algorithm, definition of a suitable signal flow graph (SFG) and systolisation by mapping of the resulting signal flow graph to a systolic array. The result of mapping an algorithm to a systolic array is not always optimal in the maximum throughput sense. In order to increase systolic array's efficiency/throughput, several systolic algorithm transformation techniques can be applied [3, 4, 7]. These transformations are performed on the algorithm level, after the algorithm parallelisation and systolisation procedures have been done. As a result, the systolic algorithm is altered and consequently the structure/design of underlying systolic array and processing element functionality changes, too.

The advantage of systolic array computing is a homogeneous pool of identical processing elements that results in the replication of execution resources. In addition to the systolic array structure only one scalar processor is needed, which executes serial parts of algorithms and at the same time controls the systolic array. Synchronization between the processing elements is achieved implicitly via synchronous inter processing element communication, i.e. data exchange. This keeps control overhead low and simplifies parallel programming.