Making Information Sources Available for a New Market in an Electronic Commerce Environment

- Format: pdf

- Size: 245.92 KB

- Pages: 23

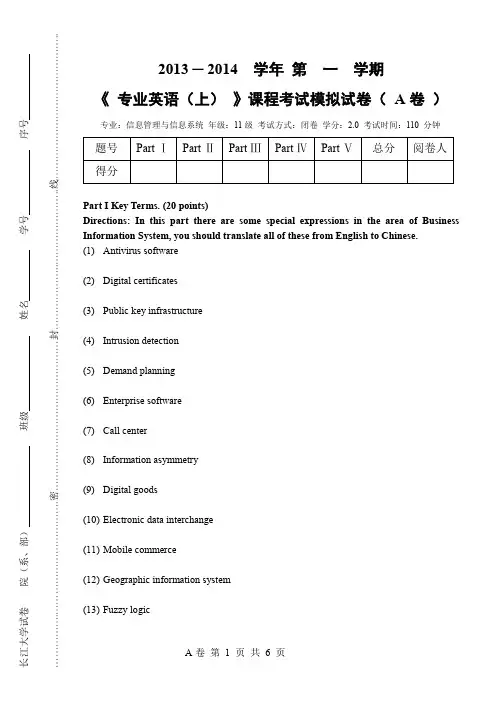

Examination paper
School (department/division), class, name, student ID, serial number

Academic year 2013-2014, first semester: mock examination paper (Paper A) for the course "Specialized English (I)"
Major: Information Management and Information Systems. Year: 2011 intake. Examination format: closed book. Credits: 2.0. Duration: 110 minutes.

Score sheet: Part Ⅰ, Part Ⅱ, Part Ⅲ, Part Ⅳ, Part Ⅴ, total score, grader.

Part I Key Terms. (20 points)
Directions: This part lists some specialized expressions from the field of business information systems. Translate each of them from English into Chinese.
(1) Antivirus software
(2) Digital certificates
(3) Public key infrastructure
(4) Intrusion detection
(5) Demand planning
(6) Enterprise software
(7) Call center
(8) Information asymmetry
(9) Digital goods
(10) Electronic data interchange
(11) Mobile commerce
(12) Geographic information system
(13) Fuzzy logic
(14) Component-based development
(15) Feasibility study
(16) Joint application development
(17) System development lifecycle
(18) Information rights
(19) Portfolio analysis
(20) Prototyping

Notes: Part Ⅱ to Part Ⅳ are based on the following case, ‘Stikeman Elliott Computerizes Its Brainpower’. Read the passages carefully. They are followed by some questions; answer all of them according to the passages and what you have learned.

Stikeman Elliott Computerizes Its Brainpower

Stikeman Elliott is an international (21) business law firm based in Toronto, Ontario, Canada, noted (22) for its work in mergers (23) and acquisitions, antitrust, banking and finance, insolvency (24), intellectual property (25), and technology. The firm started with two lawyers in 1952 and today operates with more than 400 lawyers in nine offices in Canada, New York, London, and Sydney. It is one of the top business law firms in Canada.

Stikeman Elliott tries to promote a culture of initiative and high-performance standards.
The key to creating and maintaining such a culture is in finding the best way to share the vast repositories of knowledge that reside in the brains of the lawyers and in the documents and files that the lawyers have been collecting throughout their careers.(26)

Foremost among the forms of knowledge critical to lawyers are precedents, which can include documents, forms, guidelines, and best practices.

Stikeman Elliott realized that an effective knowledge management (KM) system would enable the firm’s lawyers to be more productive and contribute to sustaining the growth of the firm over the long term. In 2001, Stikeman Elliott selected Hummingbird Enterprise Webtop from Hummingbird Ltd. to build a portal for the firm’s corporate intranet.(27) The portal officially launched in 2002 under the name STELLA, which is a play on the name of the firm.

With STELLA in place, all of the firm’s lawyers have easy access to the firm’s knowledge assets, including important precedents, through a single access point using a Web browser. STELLA includes an expertise database, identifying lawyers with proficiency in specific areas. The portal also codifies the generation and organization of new precedents. Margaret Grottenthaler, the co-chair of Stikeman Elliott’s national knowledge management committee, points out the importance of STELLA to the firm’s junior employees: “It’s the way to access all our research, all the legal how-tos. It’s absolutely critical they use it. The more junior they are, the more likely they are to use it for those purposes.”(28)

An additional benefit of STELLA has been its ability to encourage the sense of community that Stikeman Elliott wishes to foster in its firm by growing organically rather than through mergers or acquisitions. Everyone in the firm, regardless of which office they work in, has access to the same resources. With everyone on equal footing, the multiple-office structure maintains the feel of a single organization.
Stikeman Elliott believes that this working atmosphere positions the firm well among its competitors. The increased level of communication among the offices also prevents lawyers from duplicating work that has already been done. Lawyers can customize the portal’s home page so that they have quick access to the information they need most, whether it is their case files, news about their clients, or news about their clients’ industries.(29)

Stikeman Elliott integrated its portal closely with its document management (DM) system, which was also based on Hummingbird DOCS Open software. Stikeman employees use the Hummingbird SearchServer search engine to search through the firm’s document repository and internal legal and business content, including e-mail, and some external resources, such as LexisNexis.

Of course, a KM system is only useful if it is populated with the knowledge of its users. Some firms have difficulty with partners who hoard their knowledge, it being a valuable commodity. At Stikeman Elliott, the greater obstacle has been time. Partners are often too busy to contribute their work to the system. To combat this problem, the firm is building tools to automate the population of the knowledge database. With these tools, lawyers can easily create Web sites for their cases, clients, and industry research.

STELLA has extranet capabilities that enable Stikeman Elliott to create sites on which clients can review and work with documents pertaining to their cases in a collaborative manner. Grottenthaler points out that the firm’s KM system is actually geared toward the client, not the lawyer, because the ultimate goal is to serve the client better.(30)

The KM team at Stikeman Elliott includes library staff and law clerks in addition to lawyers. All three groups can add precedents, memos, and even meeting notes to the system.
The team emphasizes the importance of the human presence in KM and keeps in close contact with the firm’s lawyers to make sure they have access to the knowledge they need. A human subject matter expert also reviews content that has been added and categorized by automated procedures, which ensures the quality of the information.

Sources: Judith Lamont. "Smart by Any Name—Enterprise Suites Offer Broad Benefits." KMWorld Magazine. April 2005: “Stikeman Elliott Collaborates with Hummingbird and ii3," , accessed February 8, 2005:. accessed August 15, 2005: and Gerry Blackwell. “Computer and Technology Law," Canadian Lawyer, August 2003.

Part Ⅱ Give the meanings of the bolded and italicized words in both Chinese and English. (10 points)
(21)
(22)
(23)
(24)
(25)

Part Ⅲ Translate the underlined sentences into Chinese. (25 points)
(26)
(27)
(28)
(29)
(30)

Part Ⅳ Questions. (20 points)
(31) What are the problems and challenges that a law firm such as Stikeman Elliott faces?
(32) What solutions are available to solve these problems?
(33) How did implementing Hummingbird address these problems?
(34) How successful was the solution? Did Stikeman Elliott choose the best alternative?

Part Ⅴ Writing. (25 points)
Directions: Write a letter based on the following information.
Your school is to hold the Annual International Conference on Discourse and Translation from August 15 to 18, 2011. Write a letter as co-chair to invite Prof. Chris Mellish (Edinburgh University) to attend the conference.

Business English Listening and Speaking (2nd Edition): Answer Key, Unit 1 to Test I (keys to listening practice)

Unit 1. Welcome and farewell
Part A. Intensive listening
Phonetics: A B A D C
Dictation:
1. Flight AF 463 to Paris is now boarding at Gate number 7.
2. This is the final call for flight No. AZ 963 to Rome.
3. I’d like to make a reservation for a flight to Boston on Nov. 28th.
4. Do you have a single room available tomorrow night?
5. I’m looking forward to our future cooperation.
6. I’ll need an economy ticket with an open return.
7. I’d like to have my laundry by 9 o’clock tomorrow morning.
8. Thank you for all your help during our stay here in China.

Part B. Extensive listening
Dialogues: B C B B D
1. W: Do you have anything particular to declare?
M: No. I don’t think so. I haven’t got anything dutiable with me.
Q: Where does the conversation most probably take place?
2. M: Will you attend the Fair in Tianjin in two days?
W: No. I’m leaving Tianjin for Shanghai tomorrow morning for a 3-day meeting, and will visit Guangzhou afterwards.
Q: Where is the man going tomorrow?
3. W: Your flight will be departing from Gate 18. The boarding time is 8:45 and your flight leaves at 9:15. Have a nice journey!
M: Thank you very much.
Q: What time does the man’s flight depart?
4. M: Did you enjoy your flight?
W: Not really, I was a little airsick when the plane experienced a few bumps.
Q: How does the woman like her flight?
5. W: Room reservation, good afternoon.
M: I’d like to book a double room for Tuesday next week.
Q: What’s the probable relationship between the two speakers?

Conversation: Meeting a foreign businessman at the airport
Mr. Wang: Excuse me, sir, but are you Mr. Stone from New York?
Mr. Stone: Yes, I am Michael Stone, the sales manager of ABC Import & Export Company, Ltd.
Mr. Wang: I am Wang Qiang from Eastern Electronics Company. I’ve come to meet you, Mr. Stone.
Mr. Stone: How are you, Mr. Wang? Thank you for coming to the airport to meet me.
Mr. Wang: It’s my pleasure. How many people are there in your party?
Mr. Stone: Only two. This is Miss White, my assistant.
Mr. Wang: Nice to meet you, Miss White.
Miss White: Nice to meet you too, Mr. Wang.
Mr. Stone: I’m sorry to have kept you waiting for long, but the flight was delayed 30 minutes. If it weren’t for the heavy fog, we would have been here by 2:00 pm.
Mr. Wang: Never mind. I was stuck in traffic, too.
Mr. Stone: Where are we heading now?
Mr. Wang: I guess you must be very tired after the long trip, so it is best if we go to your hotel to check in first. If you don’t mind, we’d like to accommodate you at Sheraton Hotel.
Mr. Stone: Terrific! That’s very considerate of you.
(on the way)
Mr. Stone: How far is it to the hotel?
Mr. Wang: About 45 minutes. Is this your first time here in Tianjin, Mr. Stone?
Mr. Stone: Yes. We’ve never been here before.
Mr. Wang: So you might as well have a look at the city along the way. And we’ll show you around the city after our business.
Mr. Stone: That would be great! Thank you very much.
Section A: B C B D A

Part C. Listening & Speaking Integration
Conversation: Bon Voyage
Wang: It’s a shame that you cannot stay in Beijing for a few more days, Mr. Knox!
Knox: Yes, I’d like to, very much indeed. But I have to rush home and preside over the committee meeting. Anyhow, I really enjoyed every minute of my stay here. Your warm reception, as well as your working enthusiasm, have left me a deep and vivid impression and helped make my trip a productive one.
Wang: It’s very kind of you to say so. Through beautiful negotiation we finally have all the disputes solved and the contract signed. I’m sure our initial transaction will pave the way for further cooperation between our two companies. We’ve been brought closer to each other by this transaction. It’s essential for us, or for a country, to strengthen economic contact with the outside world, isn’t it?
Knox: I quite agree with you.
In the long run, it makes sense for a nation to specialize in certain activities, producing the goods in which it has the most advantages and exchanging them for those in which it does not have the advantages.
Wang: You seem to be an economist, Mr. Knox!
Knox: You are to blame for it, Wang. If you hadn’t started this talk about a country’s…well, let’s drop this topic. Economist or not, I hope business between us will prosper. Then we’ll have more opportunities to meet each other. To tell you the truth, I find it very hard to say goodbye. I shall be missing you, Wang.
Wang: Me too. I shall be looking forward to your visit again.
Knox: Next time I come, I shall see more of the city. And I’ve got to try Beijing Roast Duck again, very impressive. But listen, are they announcing my flight? I’m afraid I have to board the plane now.
Wang: Bon Voyage, Mr. Knox!
Knox: Good-bye. Let’s keep in contact.
Wang: Good-bye and take care.
Section A: F F T T T

Unit 2. Companies and Occupations
Part A. Intensive listening
Phonetics: B A D C A
Dictation:
1. Our market share in China has increased by 6%, accounting for 15%.
2. How many sections come under the Production Department?
3. We have 70,330 employees worldwide and sales of $19,806 million.
4. The worldwide company has operations in more than 100 countries.
5. Secretaries who receive visitors are called receptionists.
6. Business hours usually start at 9 am and finish at 5 pm, Monday to Friday.
7. Most of our work consists of looking after the taxation and financial affairs.
8. In the United States alone we have a turnover of over $1 billion annually.

Part B. Extensive listening
Dialogues: D A D C B
1. M: I’ve got a job offer in P&J Chemicals. Do you think I should take it?
W: Well, I’m not quite familiar with it. You’d better do some research on the internet.
Q: What does the woman think of P&J Chemicals?
2. W: I’d appreciate your professional opinion. Do you think that I should sue the company?
M: Not really.
I think that we can settle this out of court.
Q: What is the probable relationship between the two speakers?
3. M: Should I come for an interview?
W: I’ll let you know in two weeks when I hear from the Personnel Department.
Q: When should the man come for an interview?
4. W: May I have a look around your company?
M: Sure, I’ll show you. This way please. The canteen is on the ground floor, the Personnel Department and the Sales are on the second floor. And you’ll find our biggest department on the third floor, which is the Production Department.
Q: On which floor is the Sales Department?
5. M: Good morning. I’m John Green from General Sales Company. I have an appointment with Mr. Smith of the Purchasing Department at ten.
W: Good morning. Mr. Smith’s office is on the third floor. You can take the lift around the corner.
Q: Which department does the woman work at?

Conversation: McDonald’s
Tom: Where are we having lunch today?
Mary: Since we are visiting another client at 1:30, I guess we’ll just grab something at McDonald’s. What do you think?
Tom: That’s OK with me. We don’t need to bother finding a place to eat, because you can find McDonald’s everywhere. But do you know the history of the big M?
Mary: Not really, you seem to be an expert on that. Tell me some. Did a person named McDonald start it?
Tom: Yes, actually brothers Richard and Maurice McDonald opened their first restaurant in California in 1940.
Mary: It has many restaurants around the world now.
Tom: Quite right. It’s one of the two most recognized and powerful brands in the world. The other is Coca Cola, the only soft drink supplier to McDonald’s today. McDonald’s operates over 32,000 restaurants in 119 countries worldwide, employing more than 1.5 million people. It has been growing by an average of 396 new restaurants annually in the past five years. This means a new McDonald’s will open somewhere in the world every single day.
Mary: Incredible!
I wonder how much they make!
Tom: Sales across all of its company-owned and franchised restaurants totaled $56.9 billion in 2009. Its revenues were $22.7 billion and its net profit amounted to $4.3 billion.
Mary: Very good business. Do you know when we had the first McDonald’s in Beijing?
Tom: If I’m not wrong, it was 1992. The date, yes, April 23. But actually McDonald’s very first appearance in China was in Shenzhen on October 8, 1990.
Mary: You seem to know everything! Did you work there?
Tom: No, I just came across an article in the newspaper the other day!

Part C. Listening & Speaking Integration
Conversation: A job interview
Interviewer: Thank you for coming to see us, Emily. Have a seat please. Now, I’d like to start by checking a few details with you.
Emily: OK.
Interviewer: Your resume says you worked in ABC Electronics. When did you join it?
Emily: Five years ago. It is a large international company, which provided a trainee program for people from university and, well, that was my first job, trainee marketing manager.
Interviewer: What exactly did you do?
Emily: Well, the program lasted 18 months. During that time I worked in different departments, in personnel, purchasing, marketing and such things. I also went out with the sales representatives to visit customers.
Interviewer: Did you enjoy it?
Emily: Yes, I did. I didn’t really know what I wanted to do when I left university, so it was good to see what the different departments did. It was really practical.
Interviewer: It sounds interesting.
Emily: Yes, it was. But it was very badly paid. I did the same work as other people. I think a lot of the trainees feel they are a cheap source of labor.
Interviewer: How long did you stay there?
Emily: Till the end of the trainee program. And then I saw a job vacancy in the marketing department of GM, and I applied for a job there. That’s where I work now.
Interviewer: But why do you want to leave now?
Emily: I want something more challenging. And I want a job closer to home, too.
Interviewer: All right, and what career development are you looking for in our company?

Unit 3. Products and sales
Part A. Intensive listening
Phonetics: A B D C C
Dictation:
1. We will allow you another 2% discount for its new product.
2. The pants are available in four different colors and three sizes.
3. Our machine is of better quality though the price is a little higher.
4. There is a close relationship between building a reputation and establishing goodwill.
5. Compared with competing products, ours is smaller and lighter.
6. We have a wide selection of shirts that will appeal to all ages.
7. Our company relies on quick sales and low profits.
8. The sales reached a peak of 850 million in 2006, before falling to under 600 million in 2008.

Part B. Extensive listening
Dialogues: A B D A C
1. W: Do you think we should put an ad in the newspaper for the new product?
M: By all means.
Q: What does the man think of an ad in the newspaper?
2. M: Is it the latest model you have?
W: Yes, this model is specially designed for personal cyclists. It’s got Italian frame and Japanese components.
Q: What product are they talking about?
3. W: In what newspapers, magazines or websites does your company advertise?
M: We send brochures and samples to our potential customers. That’s more direct.
Q: How does the man’s company advertise?
4. M: Any news from the annual conference?
W: Yes. The sales of this year are reported to be 120 million, increased by 20% compared with last year.
Q: What were the sales last year?
5. W: I’d like to get some information about your vacuum cleaner.
M: OK. Unlike any other vacuum cleaner, ours is unique. It has some space age design features. Most importantly, there’s no bag inside.
Q: What do we learn about the vacuum cleaner?

Conversation:
Section A: C B D A C
Section B:
1. warranty
2. selling price
3. yearly on-site maintenance service after one-year warranty period
4. money-back guarantee
5. free delivery

Unit 4. Marketing
Part A.
Intensive listening
Phonetics: B D A B B
Dictation:
1. From what I’ve heard, you’re already well up in shipping work.
2. I’m sorry to say that your price has soared.
3. The next thing I’d like to bring up for discussion is insurance.
4. It would be very difficult for us to push any sales if we buy it at this price.
5. We wish our opinions on marketing will be passed on to our manager.
6. We sell our goods on loaded weight and not on landed weight.
7. It’s too expensive. Do you have any discount?
8. We can effect shipment in December or early next year at the latest.

Part B. Extensive listening
Dialogues: A C D D B
1. M: How many do you intend to order?
W: I want to order 900 dozen.
M: The most we can offer you at present is 600 dozen.
Q: How many can the woman order?
2. M: TV is much more effective to advertise our new product, but it will cost a lot of money.
W: It is worth doing so as long as the result is satisfactory.
Q: What does the woman mean?
3. W: Could we use booklets, letters, and catalogues for direct mail advertising?
M: Yes. But you should build up a mailing list of possible customers for direct mail advertising.
Q: What should the woman do first?
4. W: You are going to Chicago tomorrow, aren’t you?
M: Yes. I thought I’d fly, but then I decided that taking a Greyhound bus would be cheaper than driving or flying.
Q: How will the man get to Chicago?
5. M: I intend to get into the American market, but we know little about the local conditions and preferences.
W: Market research can help.
Q: What does the woman mean?

Conversation:
Section A: F T F T F
Section B:
1. advertising\promotional
2. on television\in a national newspaper
3. posters\point-of-sales displays
4. experienced salespeople
5. high\T-shirts\umbrella

Part C. Listening & Speaking Integration
Section A:
1. have very little knowledge\blaze a trail
2. defend and compete against
3. various kinds and in scorching competition
4. keep good relations and co-operations

Unit 5. Business Fairs
Part A.
Intensive listening
Phonetics: B D D C A
Dictation:
1. I’m calling to inform you that we have decided to make the purchase.
2. Do you have any plans to sell in Europe?
3. Our company is ranked second in the business.
4. We doubled our output in this department as a result.
5. We could make a delivery of this parcel as soon as possible.
6. We find our price 25% higher compared with other companies’.
7. How much does she have to pay if she stays for 3 days?
8. The new company can give him 2000 dollars a month as a start.

Part B. Extensive listening
Dialogues: C B D C D
1. W: Do you think we should park the car downtown?
M: It’s hard to find a place.
Q: What does the man mean?
2. M: If I place an order for this product, when can you deliver it?
W: For these products, we can arrange shipment at once. It would take longer, say, three months, if you want to order special designs.
Q: When can products of special design be delivered?
3. W: Are you glad that you came to work in Washington?
M: Yes. Indeed. I’d considered going to New York or Boston, but I’ve never regretted my decision.
Q: Where does the man live now?
4. M: Registration always takes so long.
W: What bothers me is all the people who cut in line.
Q: What bothers the woman?
5. M: Did you buy your car from that dealer in the city?
W: He went out of business last week.
Q: What does the woman mean about the car dealer?

Conversation:
Section A: B D C B C
Section B:
1. calling from\get some information
2. vacant suites\conference hall
3. the experts\get the VIP treatment
4. the reservation form\by fax
5. 1000 RMB Yuan or 120 US Dollars

Part C. Listening & Speaking Integration
Section A:
1. China International Agricultural Machinery Exhibition
2. concerning agriculture\increase the farmers’ income\supporting policies and laws
3. […]\0086-10-68596444

Test I
Part A.
Intensive listening
Phonetics: A C B C B D D A B C
Dictation:
1. Can you give me an account of your product?
2. I want to take part in the exhibition in Miami.
3. I know the factory is operating at full capacity.
4. I suggest we ride the subway back to our hotel and rest for a while.
5. My watch reads 11:30, so we have about 45 minutes to get there.
6. Have you filled in the Customs Declaration Form?
7. We have to arrive at the airport one hour earlier.
8. The company was established in 1990 and we have about 1500 employees now.
9. Our net profits were over 100 million US dollars last year.
10. I think some of the items may find a ready market in our country.

Part B. Extensive listening
Dialogues: D B A A B
1. W: Jack, have you finished your research paper for economics?
M: Not yet. I always seem to put things off until the last minute.
Q: What are they talking about?
2. M: This black bag is $2.00 and that blue one is a dollar more.
W: The red one is twice as much as the blue one.
Q: How much is the red bag?
3. W: Watching the news on TV is a good way to learn English.
M: It’s especially helpful when you check out the same information in the newspaper.
Q: What are they talking about?
4. M: I like to travel by air. I like getting to different places fast. Do you like traveling by air?
W: Flying makes me nervous. I like feeling the ground under my feet. Traveling by rail and road are my favorite ways of traveling.
Q: What does the woman feel about traveling by air?
5. W: I’d like to cash this check.
M: Please sign the back. Do you have any account here?
Q: What does the man ask the woman to do to cash money?

Passage: D A B C B
Conversation: Leather products\leather garments\Europe\FOB Shanghai

Part C. Listening & Speaking Integration
Section A:
Newspapers, magazines, online, e-mail, blog, Facebook, Twitter and cinema advertising. Making counter displays for dealers to exhibit in their shops.

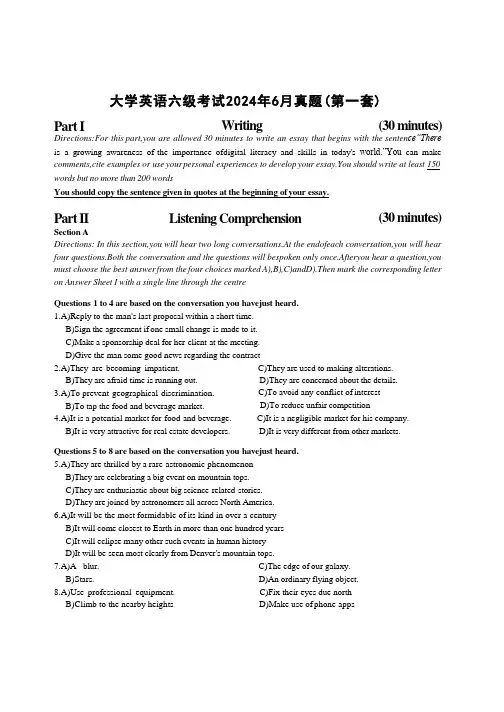

大学英语六级考试2024年6月真题(第一套)Part I Writing (30 minutes) Directions:For this part,you are allowed 30 minutes to write an essay that begins with the senten ce“There is a growing awareness of the importance ofdigital literacy and skills in today's world.”You can make comments,cite examples or use your personal experiences to develop your essay.You should write at least 150 words but no more than 200 wordsYou should copy the sentence given in quotes at the beginning of your essay.Part II Listening Comprehension (30 minutes) Section ADirections: In this section,you will hear two long conversations.At the endofeach conversation,you will hear four questions.Both the conversation and the questions will bespoken only once.Afteryou hear a question,you must choose the best answer from the four choices marked A),B),C)andD).Then mark the corresponding letter on Answer Sheet I with a single line through the centreQuestions 1 to 4 are based on the conversation you havejust heard.1.A)Reply to the man's last proposal within a short time.B)Sign the agreement if one small change is made to it.C)Make a sponsorship deal for her client at the meeting.D)Give the man some good news regarding the contract2.A)They are becoming impatient. C)They are used to making alterations.B)They are afraid time is running out. D)They are concerned about the details.3.A)To prevent geographical discrimination. C)To avoid any conflict of interestB)To tap the food and beverage market. D)To reduce unfair competition4.A)It is a potential market for food and beverage. C)It is a negligible market for his company.B)It is very attractive for real estate developers. D)It is very different from other markets. 
Questions 5 to 8 are based on the conversation you havejust heard.5.A)They are thrilled by a rare astronomic phenomenonB)They are celebrating a big event on mountain tops.C)They are enthusiastic about big science-related stories.D)They are joined by astronomers all across North America.6.A)It will be the most formidable of its kind in over a centuryB)It will come closest to Earth in more than one hundred yearsC)It will eclipse many other such events in human historyD)It will be seen most clearly from Denver's mountain tops.7.A)A blur. C)The edge of our galaxy.B)Stars. D)An ordinary flying object.8.A)Use professional equipment. C)Fix their eyes due northB)Climb to the nearby heights D)Make use of phone appsSection BDirections: In this section,you will hear twopassages.At the end of each passage,you will hear three or four questions.Both thepassage and the questions will be spoken only once.After you hear a question,you must choose the best answer from the four choices marked A),B),C)and D).Then mark the corresponding letter on Answer Sheet I with a single line through the centreQuestions 9 to 11 are based on the passage you have just heard.9.A)Whether consumers should be warned against ultra-processed foods.B)Whether there is sufficient scientific consensus on dietary guidelinesC)Whether guidelines can form the basis for nutrition advice to consumers.D)Whether food scientists will agree on the concept of ultra-processed foods10.A)By the labor cost for the final products C)By the extent of chemical alteration.B)By the degree of industrial processing. D)By the convention of classification.11.A)Increased consumers'expenses. C)People's misunderstanding of nutrition.B)Greater risk of chronic diseases.D)Children's dislike for unprocessed foodsQuestions 12 to 15 are based on the passage you have just heard.12.A)They begin to think of the benefits of constraints.C)They try hard to maximize their mental energy.B)They try to seek solutions from creative people. 
D)They begin to see the world in a different way13.A)It is characteristic of all creative people. C)It is a creative person's response to limitation.B)It is essential to pushing society forward. D)It is an impetus to socio-economic development.14.A)Scarcity or abundance of resources has little impact on people's creativity.B)Innovative people are not constrained in connecting unrelated conceptsC)People have no incentive to use available resources in new ways.D)Creative people tend to consume more available resources15.A)It is key to a company's survival. C)It is essential to meeting challengesB)It shapes and focuses problems. D)It thrives best when constrained.Section CDirections:I n this section,you will hear three recordingsoflectures or talks followed by three or four questions. The recordings will be played only once.After you hear a question,you must choose the best answer from the four choices marked A),B),C)and D).Then mark the corresponding letter on Answer Sheet 1 with a single line through the centre.Questions 16 to 18 are based on the recording you havejust heard.16.A)Because they are learned. C)Because they have to be properly personalizedB)Because they come naturally. D)Because there can be more effective strategies17.A)The extent of difference and of similarity between the two sides.B)The knowledge of the specific expectation the other side holds.C)The importance of one's goals and of therelationship.D)The approaches one adopts to conflict management.18.A)The fox. C)The sharkB)The owl. D)The turtle.Questions 19 to 21 are based on the recording you have just heard.19.A)Help save species from extinction and boosthuman health.B)Understand how plants and animals perished over the past.C)Help gather information publicly available to researchersD)Find out the cause of extinction of Britain's 66,000 species20.A)It was once dominated by dinosaurs C)Its prospects depend on future human behaviourB)It has entered the sixth mass extinction. 
D)Its climate change is aggravated by humans21.A)It dwarfs all other efforts to conserve,protect and restore biodiversity on earthB)It is costly to get started and requires thejoint efforts of thousands of scientistsC)It can help to bringback the large numbers of plants and animals that have gone extinctD)It is the most exciting,most relevant,mosttimely and most internationally inspirationalQuestions 22 to 25 are based on the recording you havejust heard.22.A)Cultural identity. C)The Copernican revolution.B)Social evolution. D)Human individuality23.A)It is a delusion to be disposed of. C)It is a myth spread by John Donne's poem.B)It is prevalent even among academics. D)It is rooted in the mindset of the 17th century24.A)He believes in Copernican philosophical doctrines about the universe.B)He has gained ample scientific evidence at the University of Reading.C)He has found that our inner self and material self are interconnected.D)He contends most of our body cells can only live a few days or weeks.25.A)By coming to see how disruptive such problems have got to be.B)By realising that we all can do our own bit in such endeavours.C)By becoming aware that we are part of a bigger world.D)By making joint efforts resolutely and persistently.Part Ⅲ Reading Comprehension (40 minutes) Section ADirections: In this section,there is a passage with ten blanks.You are required to select one word for each blank from a list of choices given in a word bank following the passage.Read the passage through carefully before making your choices.Each choice in the bank is identified by a letter.Please mark the corresponding letter for each item on Answer Sheet 2 with a single line through the centre.You may not use any of thewords in the bank more than once.It's quite remarkable how different genres of music can spark unique feelings,emotions,and memories. 
Studies have shown that music can reduce stress and anxiety before surgeries and we are all attracted toward our own unique life soundtrack.If you're looking to 26stress,you might want to give classical music a try.The sounds of classical music produce a calming effect letting 27 pleasure-inducing dopamine(多巴胺 )in the brain that helps control attention,learning and emotional responses.It can also turn down the body's stress response,resulting in an overall happier mood.It turns out a pleasant mood can lead to 28in a person's thinking.Although there are many great 29 of classical music like Bach,Beethoven and Handel,none of these artists'music seems to have the same health effects as Mozart's does.According to researchers,listening toMozart can increase brain wave activity and improve 30 function.Another study found that the distinctive features of Mozart's music trigger parts of the brain that are responsible for high-level mental functions.Even maternity 31 u se Mozart to help newborn babies adapt to life outside of the mother's belly.It has been found that listening to classical music 32 _reduces a person's blood pressure.Researchers believe that the calming sounds of classical music may help your heart 33 from stress.Classical music can also be a great tool to help people who have trouble sleeping.One study found that students who had trouble sleeping slept better while they were listening to classical music.Whether classical music is something that you listen to on a regular basis or not,it wouldn't 34 totake time out of your day to listen to music that you find 35 .You will be surprised at how good it makes you feel and the potentially positive change in your healthSection BDirections: In this section,you are going to read a passage with ten statements attached to it.Each statement contains information given in one of the paragraphs.Identify theparagraph from which the information is derived.You may choose a paragraph more than once.Each paragraph is marked 
with a letter.Answer the questions bymarking the corresponding letteron Answer Sheet 2.The Curious Case of the Tree That Owns ItselfA)In the city of Athens,Georgia,there exists a rather curious local landmark—a large white oak that is almostuniversally stated to own itself.Because of this,it is considered one of the most famous trees in the world.So how did this treecome to own itself and the land around it?B)Sometime in the 19th century a Georgian called Colonel William Jackson reportedly took a liking to the saidtree and endeavored to protect it from any danger.As to why he loved it so,the earliest documented account of this story is an anonymously written front page article in the Athens WeeklyBanner published on August 12,1890.It states,“Col.Jackson had watched the tree grow from his childhood,and grew to love it almost as he would a human.Its luxuriant leaves and sturdy limbs had often protected him from the heavy rains, and out of its highest branches he had many a time gotten the eggs of the feathered singers.He watched its growth,and when reaching a ripe old age he saw the tree standing in its magnificent proportions,he was pained to think that after his death it would fall into the hands of those who might destroy it.”C)Towards this end,Jackson transferred by means of a deed ownership of the tree and a little land around it tothe tree itself.The deed read,“W.H.Jack son for and in consideration of the great affection which he bears the said tree,and his great desire to see it protected has conveyed unto the said oak tree entire possession of itself and of all land within eight feet of it on all sides.”D)In time,the tree came to be something ofa tourist attraction,known as The TreeThat Owns Itself.However,in the early 20th century,the tree started showing signs of its slow death,with little that could be done aboutit.Father time comes for us all eventually,even our often long lived,tall and leafy fellow custodians(看管者) of Earth.Finally,on October 9,1942,the 
over 30 meter tall and 200-400 year old tree fell,rumor has it,as a result of a severe windstorm and/or via having previously died and its roots rotted.E)About four years later,members of the Junior Ladies Garden Club(who'd tended to the tree before itsunfortunate death)tracked down a small tree grown from a nut taken from the original tree.And so it was that on October 9,1946,under the direction of Professor Roy Bowden of the College of Agriculture at the University of Georgia,this little tree was transplanted to the location of its ancestor.A couple of months later,an official ceremony was held featuring none other than the Mayor ofAthens,Robert L McWhorter,to commemorate the occasion.F)This new tree became known as The Son of the Tree That Owns Itself and it was assumed that,as the originaltree's heir,it naturally inherited the land it stood on.Of course,there are many dozens of other trees known to exist descending from the original,as people taking a nut from it to grow elsewhere was a certainty.That said,to date,none of the original tree's other children have petitioned the courts for their share of the land,so it seems all good.In any event,The Son of the Tree That Owns Itself still stands today,though often referred to simply as The Tree That Owns Itself.G)Thisall brings us around to whether Jackson ever actually gave legal ownership of the tree to itself in the firstplace and whether such a deed is legally binding.H)Well,to begin with,it turns out Jackson only spent about three years of his life in Athens,starting at theage of 43 from 1829 to 1832,sort of dismissing the idea that he loved the tree from spending time under it as a child and watching it grow,and then worrying about what would happen to it after he died.Further,an extensive search of land ownership records in Athens does not seem to indicate Jackson ever owned the land the tree sits on.I)He did live on a lot of land directly next to it for those three years,but whether he owned that land or 
notisn't clear.Whatever the case,in 1832 a four acre parcel,which included the land the tree was on and the neighboring land Jackson lived on,among others,was sold to University professor Malthus A Ward.In the transaction,Wardwas required to pay Jackson a sum of $1,200(about $31,000 today),either for the property itself or simply in compensation for improvements Jackson had made on the lot.In the end,whether he ever owned the neighboring lot or was simply allowed to use it while he allegedly worked at the University,he definitely never owned the lot the tree grew on,which is the most important bit for the topic at hand.J)After Professor Ward purchased the land,Jackson and his family purchased a 655 acre parcel a few miles away and moved there.Ten years later,in 1844,Jackson seemed to have come into financial difficulties and had his little plantation seized by the Clarke County Sheriff's office and auctioned off to settle the mortgage.Thus,had he owned some land in Athens itself,including the land the tree sat on,presumably he would have sold it to raise funds or otherwise had it takenas well.K)And whatever the case there,Jackson would have known property taxes needed to be paid on the deeded land for the tree to be truly secure in its future.Yet no account or record indicates any trust or the like was set up to facilitate thisL)On top of all this,there is no hard evidence such a deed ever existed,despite the fact that deed records in Athens go back many decades before Jackson's death in 1876 and that it was supposed to have existed in 1890 in the archives according to the original anonymous news reporter who claims to have seen it.M)As you might imagine from all of this,few give credit to this side of the story.So how did all of this come about then?N)It is speculated to have been invented by the imagination of the said anonymous author at the Athens Weekly Banner in the aforementioned 1890 front page article titled“Deeded to Itself”,which by the way 
containedseveral elements that are much more easily proved to be false.As to why the author would do this,it's speculated perhaps it was a 19th century version of a click-bait thought exercise on whether it would be legal for someone to deed such a non-conscious living thing to itselfor not.O)Whatever the case,the next known instance of the TreeThat Owns Itselfbeing mentioned wasn't until 1901 in the Centennial Edition of that same paper,the Athens Weekly Banner.This featured another account very clearly just copying the original article published about a decade before,only slightly reworded.The next account was in 1906,again in the Athens Weekly Banner,again very clearly copying the original account, only slightly reworded,the 19th century equivalent of re-posts when the audience has forgotten about the original.36.Jackson was said to have transferred his ownership of the oak tree to itself in order to protect it from beingdestroyed.37.No proof has been found from an extensive search that Jackson had ever owned the land where the oak treegrew38.When it was raining heavily,Jackson often took shelter under a big tree that is said to own itself.39.There is no evidence that Jacksonhad made arrangements to pay property taxes for the land onwhich the oaktree sat.40.Professor Ward paid Jackson over one thousand dollars when purchasing a piece ofland from him.41.It is said the tree that owned itself fell in a heavy windstorm.42.The story of the oak tree is suspected to have been invented as a thought exercise.43.Jackson's little plantation was auctioned off to settle his debt in the mid-19th century44.An official ceremony was held to celebrate the transplantingof a small tree to where its ancestor had stood.45.The story of the Tree That Owns Itself appeared in the local paper several times,with slight alterations inwording.Section CDirections: There are 2 passages in this section.Each passage is followed by some questions or unfinished statements.For each of them there 
are four choices marked A),B),C)and D).You should decide on the best choice and mark the corresponding letter on AnswerSheet 2 with a single linethrough the centre.Passage OneQuestions 46 to 50 are based on the following passage.It is irrefutable that employees know the difference between right and wrong.So why don't more employees intervene when they see someone exhibiting at-risk behavior in the workplace?There are a number of factors that influence whether people intervene.First,they need to be able to see a risky situation beginning to unfold.Second,the company's culture needs to make them feel safe to speak up.And third,they need to have the communication skills to say something effectively.This is not strictly a workplace problem;it's a growing problem off the job too.Every day people witness things on the street and choose to stand idly by.This is known as the bystander effect—the more people who witness an event,the less likely anyone in that group is to help the victim.The psychology behind this is called diffusion of responsibility.Basically,the larger the crowd,the more people assume that someone else will take care of it—meaning no one effectively intervenes or acts in a moment of need.This crowd mentality is strong enough for people to evade their known responsibilities.But it's not only frontline workers who don't make safety interventions in the workplace.There are also instances where supervisors do not intervene either.When a group of employees sees unsafe behavior not being addressed at a leadership level it creates the precedent that this is how these situations should be addressed,thus defining the safety culture for everyone.Despite the fact that workers are encouraged to intervene when they observe unsafe operations,this happens less than half of the time.Fear is the ultimate factor in not intervening.There is a fear of penalty,a fear that they'll have to do more work if they intervene.Unsuccessful attempts in the past are another strong 
contributing factor to why people don't intervene—they tend to prefer to defer that action to someone else for all future situations.On many worksites,competent workers must be appointed.Part of theirjob is to intervene when workers perform a taskwithoutthe proper equipment or if the conditions are petentworkers are alsorequired to stop work from continuing when there's a danger.Supervisors also play a critical role.Even if a competent person isn't required,supervisors need a broad set of skills to not only identify and alleviate workplace hazards but also build a safety climate within theirteam that supports intervening and open communication among them.Beyond competent workers and supervisors,it's important to educate everyone within the organization that they are obliged to intervene if they witness a possible unsafe act,whether you're adesignated competent person, a supervisoror a frontline worker46.What is one of the factors contributing to failure of intervention in face of riskybehavior in the workplace?A)Slack supervision style C)Unforeseeable risk.B)Unfavorable workplace culture. 
D)Blocked communication.47.What does the author mean by“diffusion of responsibility”(Line4,Para.3)?A)The more people are around,the more they need to worry about their personal safety.B)The more people who witness an event,the less likely anyone will venture to participate.C)The more people idling around on the street,the more likely they need taking care of.D)The more people are around,the less chance someone will step forward to intervene.48.What happens when unsafe behavior at the workplace is not addressed by the leaders?A)No one will intervene when they see similar behaviors.B)Everyone will see it as the easiest way to deal with crisis.C)Workers have to take extra caution executing their dutiesD)Workers are left to take care of the emergency themselves.49.What is the ultimate reason workers won't act when they see unsafe operations?A)Preference of deferring the action to others.B)Anticipation of leadership intervention. C)Fear of being isolated by coworkersD)Fear of having to do more work50.What is critical to ensuring workplace safety?A)Workers be trained to operate their equipment properlyB)Workers exhibiting at-risk behavior be strictly disciplined.C)Supervisors create a safety environment for timely intervention.D)Supervisors conduct effective communication with frontline workers.Passage TwoQuestions 51 to 55 are based on the following passage.The term“environmentalist”can mean different things.It used to refer to people trying to protect wildlife and natural ecosystems.In the 21st century,the term has evolved to capture the need to combat human-made climate changeThe distinction between these two strands of environmentalism is the cause of a split within the scientific community about nuclear energyOn one side are purists who believe nuclear power isn't worth the risk and the exclusive solution to the climate crisis is renewable energy.The opposing side agrees that renewables are crucial,but says society needs an amount of power available to meet 
consumers'basic demands when the sun isn't shining and the wind isn't blowing.Nuclear energy,being far cleaner than oil,gas and coal,is a natural option,especially where hydroelectric capacity is limitedLeon Clarke,who helped author reports for the UN's Intergovernmental Panel on Climate Change,isn't an uncritical supporter of nuclear energy,but says it's a valuable option to have if we're serious about reaching carbon neutrality.“Core to all of this is the degree to which you think we can actually meet climate goals with 100% renewables,”he said.“If you don't believe we can do it,and you care about the climate,you are forced to think about something like nuclear.”The achievability of universal 100%renewability is similarly contentious.Cities such as Burlington, Vermont,have been“100%renewable”for years.But these cities often have small populations,occasionally still rely on fossil fuel energy and have significant renewable resources at their immediate disposal.Meanwhile, countries that manage to run off renewables typically do so thanks to extraordinary hydroelectric capabilities.Germany stands as the best case study for a large,industrialized country pushing into green energy Chancellor Angela Merkel in 2011 announced Energiewende,an energy transition that would phase out nuclear and coal while phasing in renewables.Wind and solar power generation has increased over 400%since 2010, and renewables provided 46%of the country's electricity in 2019.But progress has halted in recent years.The instability of renewables doesn't just mean energy is often not produced at night,but also that solar and wind can overwhelm the grid during the day,forcing utilities to pay customers to use their gging grid infrastructure struggles to transport this overabundance of green energy from Germany's north to its industrial south,meaning many factories still run on coal and gas.The political limit has also been reached in some places,with citizens meeting the construction of new wind 
turbines with loud protests.The result is that Germany's greenhouse gas emissions have fallen by around 11.5%since 2010—slower than the EU average of 13.5%.51.What accounts for the divide within the scientific community about nuclear energy?A)Attention to combating human-made climate change.B)Emphasis on protecting wildlife and natural ecosystems.C)Evolution of the term ‘green energy'over the last centuryD)Adherence to different interpretations of environmentalism.52.What is the solution to energy shortage proposed by purists'opponents?A)Relying on renewables firmly and exclusively. C)Opting for nuclear energy when necessaryB)Using fossil fuel and green energy alternately. D)Limiting people's non-basic consumption.53.What point does the author want to make with cities like Burlington as an example?A)It is controversial whether the goal of the whole world's exclusive dependence on renewables is attainableB)It is contentious whether cities with large populations have renewable resources at their immediatedisposal.C)It is arguable whether cities that manage to run off renewables have sustainable hydroelectric capabilities.D)It is debatable whether traditional fossil fuel energy can be done away with entirely throughout the world.54.What do we learn about Germany regarding renewable energy?A)It has increased its wind and solar power generation four times over the last two decades.B)It represents a good example of a major industrialized country promoting green energy.C)It relies on renewable energy to generate more than half ofits electricity.D)It has succeeded in reaching the goal of energy transition setby Merkel.55.What may be one ofthe reasons for Germany's progress having halted in recent years?A)Its grid infrastructure's capacityhas fallen behind its development of greenenergyB)Its overabundance of green energy has forced power plants to suspend operation during daytime.C)Its industrial south is used to running factories on conventional energy suppliesD)Its 
renewable energy supplies are unstable both at night and during the day.

Part IV Translation (30 minutes)

Directions: For this part, you are allowed 30 minutes to translate a passage from Chinese into English. You should write your answer on Answer Sheet 2.

中国的传统婚礼习俗历史悠久,从周朝开始就逐渐形成了一套完整的婚礼仪式,有些一直沿用至今。

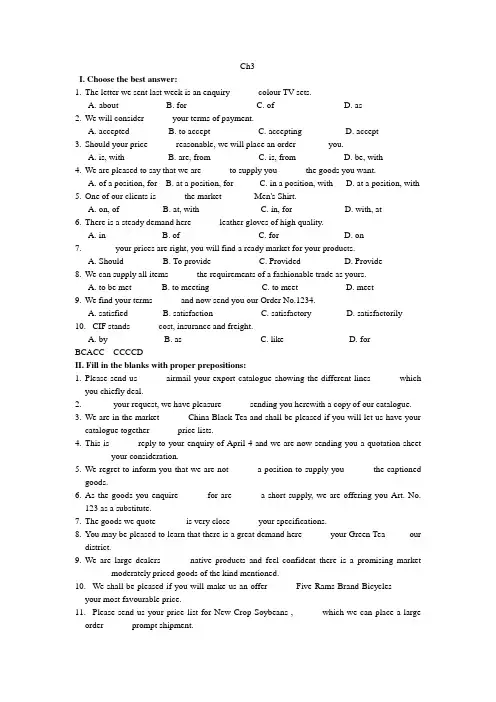

Ch3

I. Choose the best answer:

1. The letter we sent last week is an enquiry ______ colour TV sets.
A. about B. for C. of D. as
2. We will consider ______ your terms of payment.
A. accepted B. to accept C. accepting D. accept
3. Should your price ______ reasonable, we will place an order ______ you.
A. is, with B. are, from C. is, from D. be, with
4. We are pleased to say that we are ______ to supply you ______ the goods you want.
A. of a position, for B. at a position, for C. in a position, with D. at a position, with
5. One of our clients is ______ the market ______ Men's Shirt.
A. on, of B. at, with C. in, for D. with, at
6. There is a steady demand here ______ leather gloves of high quality.
A. in B. of C. for D. on
7. ______ your prices are right, you will find a ready market for your products.
A. Should B. To provide C. Provided D. Provide
8. We can supply all items ______ the requirements of a fashionable trade as yours.
A. to be met B. to meeting C. to meet D. meet
9. We find your terms ______ and now send you our Order No.1234.
A. satisfied B. satisfaction C. satisfactory D. satisfactorily
10. CIF stands ______ cost, insurance and freight.
A. by B. as C. like D. for

BCACC CCCCD

II. Fill in the blanks with proper prepositions:

1. Please send us ______ airmail your export catalogue showing the different lines ______ which you chiefly deal.
2. ______ your request, we have pleasure ______ sending you herewith a copy of our catalogue.
3. We are in the market ______ China Black Tea and shall be pleased if you will let us have your catalogue together ______ price lists.
4. This is ______ reply to your enquiry of April 4 and we are now sending you a quotation sheet ______ your consideration.
5. We regret to inform you that we are not ______ a position to supply you ______ the captioned goods.
6. As the goods you enquire ______ for are ______ a short supply, we are offering you Art. No.123 as a substitute.
7. The goods we quote ______ is very close ______ your specifications.
8. You may be pleased to learn that there is a great demand here ______ your Green Tea ______ our district.
9. We are large dealers ______ native products and feel confident there is a promising market ______ moderately priced goods of the kind mentioned.
10. We shall be pleased if you will make us an offer ______ Five Rams Brand Bicycles ______ your most favourable price.
11. Please send us your price list for New Crop Soybeans, ______ which we can place a large order ______ prompt shipment.
12. We have so far bought these goods ______ other sources, but we now wish to switch our purchases ______ your corporation.
13. As the goods are required ______ re-export, the packing material must be suitable ______ long distance ocean transportation.
14. Please let us have your quotations ______ bicycles of various brands and styles available ______ supply.
15. We have just received an enquiry ______ one of our customers, who wishes to have a sample of the animal toys displayed ______ the recent Guangzhou Fair.

1 by, in
2 As, in
3 for, with
4 in, for
5 in, with
6 in
7 for, to
8 for, in
9 in, for
10 of, at
11 from, for
12 from, to
13 for, for
14 for, for
15 from, at

III. Translate the following sentences into English:

1. 请对下列商品报竞争性的巴黎成本加运费5%佣金价。

Unit 1

[Ex. 1] Answer the following questions according to the text.

1. In human terms and in the broadest sense, information is anything that you are capable of perceiving.
2. It includes written communications, spoken communications, photographs, art, music, and nearly anything that is perceptible.
3. If we consider information in the sense of all stimuli as information, then we can’t really find organization in all cases.
4. No.
5. Traditionally, in libraries, information was contained in books, periodicals, newspapers, and other types of recorded media. People access it through a library’s catalog and with the assistance of indexes, in the case of periodical and newspaper articles.
6. Computerized “information systems”.
7. The problem for most researchers is that they have yet to discover the organizing principles that are designed to help them find the information they need.
8. For library materials, the organizing principle is a detailed subject classification system available for searching in an online “catalog”.
9. The one thing common to all of these access systems is organization.
10. No, it isn’t.

广东省江门市台山市华侨中学2023-2024学年高二上学期第二次月考英语试题学校:___________姓名:___________班级:___________考号:___________一、阅读选择Volunteer in South AfricaAre you looking for chances to volunteer in Africa? Well, explore International Volunteer HQ’s popular South Africa volunteer abroad program. Choose from its different projects and contribute to the local communities.Animal Care in Cape Town●Duration: 2 weeks (from August 12 to 26)●Fees: $200 per weekAnxious to make some four-legged friends? Animal Care project enables volunteers to help at an animal shelter which takes care of cats and dogs that have been homeless. Give them the best chance of finding a new loving home.Childcare in Cape Town●Duration: 4 weeks (from August 5 to September 2)●Fees: $275 per weekFull of energy and want to be a childcare volunteer? Offer help with early childhood education in low-income areas of Cape Town. V olunteers help local teachers provide high quality care and education to children from nearby farms, villages and communities. Holiday Club in Table View●Duration: 3 weeks (from July 9 to 30)●Fees: $300 per weekV olunteers help to organize and run fun activities for students during their school holidays, making a meaningful difference to the local community. It is designed to help keep students active in positive ways.Sports Development in Table View●Duration; 6 weeks (from July 21 to September 1)●Fees: $350 per weekThrough Sports Development project, volunteers support after-school projects by helping more disadvantaged students practice and play a range of sports. Play local sports orintroduce your own for students aged 6-15, encouraging improvement, teamwork and fun. 1.Which of the four projects begins the earliest?A.Childcare in Cape Town.B.Animal Care in Cape Town,C.Holiday Club in Table View.D.Sports Development in Table View.2.How much should you pay for attending to animals?A.$400.B.$900.C.$1,100.D.$2,100. 
3.What are the volunteers of the Sports Development supposed to do?A.Supply childhood care in poor areas.B.Look for a new home for homeless animals.C.Bring fun to students during school holidays.D.Attract more students to take part in sports.Lin Wanqi, a 26-year-old resident of Shanghai, was among the earliest to try Luckin Coffee’s new Moutai-flavored latte. Eager to sample this “young people’s first sip of Moutai”, she was curious about how her beloved coffee tastes with Chinese liquor in it. “The aroma of the alcohol is very strong and is well blended with the milk,” Lin shared.The coffee drink, packaged with an iconic Moutai-themed label and containing less than 0.5 percent (alcohol by volume) of 53 degrees Moutai, is priced at 38 yuan, although consumers can get it for 19 yuan using coupons. In recent years, Moutai has embarked on various creative campaigns to appeal to younger customers, introducing products like Moutai ice cream, scented sachets, and canvas bags. “This creative partnership lets Moutai make its brand younger,” Li Honghui, a marketing director for drinks. Li also pointed out that such creative collaborations (合作) can bring benefits to both brands. “Through partnerships, brands can share resources, expand the market, and bring more diversified and innovative products to consumers,” Li said.However, flawed partnerships may lead to negative consequences. Take the collaboration between Chinese coffee chain Manner and French luxury brand Louis Vuitton(LV), for example.Consumers could get a free LV canvas bag by buying at least two books in the coffee shop. The two books would cost at least 580 yuan. The campaign was sharply criticized forthe high barrier of entry to receive the gift and many people doubted whether it was worth the price. 
“It’s important to maintain the high quality of the products in these partnerships rather than merely generate hype(炒作),” Li said.4.What did Lin Wanqi think of the new Moutai-flavored latte?A.Bitter.B.Smooth.C.Creamy.D.Aromatic. 5.Why is Moutai in association with Luckin Coffee?A.To get coupons.B.To attract other consumers.C.To share resources D.To maintain the high quality.6.What does Li Honghui say about the partnership between Kweichow Moutai and Luckin Coffee?A.It is a win-win.B.It is creative-yet-risky.C.It still needs more promotion.D.It sets a good example for the market. 7.Which is the shortcoming of Manner Coffee and Louis Vuitton partnerships ?A.The gifts were of low quality.B.They come from different countries.C.The promotional method was improper.D.Their employees sold the gifts privately.Last Friday, a special thing took place at the National Theater of Korea. A robot called EveR 6 led an orchestra (管弦乐队) in a performance of Korean music. Robots have led orchestras in other countries, but this was the first time ever in Korea.EveR 6 is a robot built by the Korea Institute of Industrial Technology (KITECH). It’s about as tall as a person, and has a human-like face that can show feelings. EveR 6 has joints (关节) in its neck, shoulders, etc. As a result, it can move its arms quickly in many different directions.But EveR 6 doesn’t think on its own like some Artificial Intelligence programs. Instead, it has a limited group of movements that it has been trained to perform. To make these movements as natural as possible, EveR 6’s movements are learnt from real human conductors.When EveR 6 moved its baton (指挥棒) for the first time, a piece of music rang out. Every movement of the robot’s arms brought more instruments into the mix, and the orchestra’s music grew louder and more exciting. Mr White, a human conductor, wasimpressed with the way EveR 6 moved. 
“The robot was able to present such difficult moves much better than I had imagined,” he said.But the robot still is not nearly ready to supersede a human conductor. Mr White says the robot’s greatest weakness is that it can’t hear, “Some people think that conducting is just about hand waving and keeping the beat,” says Mr White. But a good conductor needs to listen to the orchestra, so that he or she can correct and encourage the orchestra as it plays.Mr White doesn’t think robots are likely to replace human conductors. But he believes robots could be helpful in situations like practice parts where the same thing needs to be done many times.8.What is paragraph 2 mainly about?A.The effect of EveR 6.B.The advantage of EveR 6.C.The importance of EveR 6.D.The basic information of EveR 6.9.What does the underlined word “supersede” in paragraph 5 mean?A.Look forward to.B.Take the place of.C.Show interest in.D.Make an impression on.10.Which of the following may Mr White agree with?A.Robots could work in some fields of conductors’ work.B.Robots are useless in practice parts of a conductor.C.Robots’ role as a conductor will be overlooked.D.Robots leave human no chance to be a conductor.11.In which section of a newspaper may this text appear?A.Health.B.Sports.C.Science.D.Education.Most environmental pollution on Earth comes from humans and their inventions, such as cars or plastic. Today, car emissions(排放物) area major source of air pollution leading to climate change, and plastics fill our ocean, creating a significant health issue to marine(海洋的) animals.And what about the electric light, thought to be one of the greatest human inventions of all time? Electric light can be a beautiful thing, guiding us home when the sun goes down,keeping us safe and making our homes bright. However, like carbon dioxide emissions andplastic, too much of a good thing has started to impact the environment. 
Light pollution, the inappropriate use of outdoor light, is affecting human health, wildlife behavior and our ability to observe stars.Light pollution is a global issue. This became obvious when the World Atlas of Artificial Night Sky Brightness, a computer-generated map based on thousands of satellite photos, was published in 2016. Available online for viewing, the map shows how and where our globe is lit up at night. Vast areas of North America, Europe, the Middle East and Asia are glowing(发光) with light, while only the most remote regions on Earth(Greenland, Central African Republic and Niue) are in total darkness. Some of the most light-polluted countries in the world are Singapore, Qatar, and Kuwait.Sky glow is the brightening of the night sky, mostly over cities, due to the electric lights of cars, street lamps, offices, factories, outdoor advertising, and buildings, turning night into day for people who work and play long after sunset.People living in cities with high levels of sky glow have a hard time seeing more than a handful of stars at night. Astronomers are particularly concerned with sky glow pollution as it reduces their ability to view stars.More than 80 percent of the world’s population, and 99 percent of Americans and Europeans, live under sky glow.12.What can we learn from paragraph 2?A.The use of outdoor light must be forbidden.B.Electric light has both advantages and disadvantages.C.Electric light is the main factor to keep us safe.D.Electric light is the greatest human invention.13.Which of the following places is least affected by sky glow?A.Qatar.B.Singapore.C.Kuwait.D.Niue. 
14. Why do astronomers especially complain about sky glow?
A. Sky glow costs too much.
B. Sky glow has a bad effect on their sleep.
C. Sky glow affects their viewing stars.
D. Sky glow wastes too much electricity.

15. What is the best title for the text?
A. Light pollution
B. Plastic pollution
C. Different kinds of pollution
D. Air pollution

Problems between parents and children are common and timeless. If you are looking to improve your relationship with your parents, you are not alone. There are a number of steps that can be taken to make that happen.

16

This is not to say that you should not care for and love your parents. But, if you are less emotionally attached to your parents, you may be less invested in arguments or disagreements with them. This way you can walk away from a situation more easily and not let it damage the relationship.

Take their perspective.

17 So once you can understand their position and see the reasons behind it, you will likely be more willing to take their perspective and improve the relationship. Accept that your parents are different. Think about the ways that their lives may have been different from your life, and how these different histories may be contributing to issues in the relationship.

Develop your own identity.

It is OK and even healthy for you to think for yourself and to have your own opinion on matters. 18 Moreover, in gaining a new sense of independence and separation from your parents, you may find that your relationship improves organically.

Don’t ask for their advice unless you really want it.

Sometimes problems arise between parents and children, particularly at the teenage years and beyond, because parents try to give advice in an overpowering way that makes the kids feel uncomfortable.
19 However, if you are just feeling lazy to think about things on your own, you can also ask your parents.

Establish boundaries and make rules.

If you want to maintain a positive relationship with parents, but find that you always end up disagreeing, consider setting up some topics as off-limits. This may work better if you are older or no longer live with your parents. 20

A. In this way, you gain your own identity.
B. Separate from your parents emotionally.
C. Parents’ opinions on your issues are constructive.
D. Construct strong emotional attachment with your parents.
E. Also try creating rules that both you and your parents agree to abide by (遵守).
F. Often, kids and parents do not get along because they fail to consider each other’s perspectives.
G. To get around this, try only asking for their advice when you are sure that you really want their view.

II. Cloze

at that time ran a hotel. Just a month earlier, he had got a dog, Leo, on September 25, 2011. Aat home.

“It was the fault that saved my 24 ,” he says. By the time the pair got to La Spezia, the storm had already 25 (heavy rain, thunder and hail). Paradisi decided to leave early, 26 the weather would only get worse. “Even in the first mile, it 27 ; I’d never seen it worsen like that before,” he says. “There was a tornado of water that hit the mountains, and I couldn’t even see a meter ahead. I had 28 of about 30 centimeters, so was driving extremely slowly.”

Paradisi had put Leo in the back of his car for the 17-mile journey, and for most of it, the dog had 29 there in silence. Until, getting closer and closer to Prevo, as the car wound round the cliff, Leo made his move. “He 30 into the front and onto my knees, so I had to stop,” says Paradisi. “I was angry. I said, ‘Leo, I’m driving.’” At that moment, just as he was trying to 31 the dog off his lap and get going again, the cliff fell away in front of them. “The rocks just came down. It almost 32 the car.
A meter further forward, and we’d have been 33 ,” he says.

In 34 , he says, he managed to turn the car around and make it as far as Manarola, another of the Cinque Terre villages. “It was only then that I understood what was happening. It was 35 Leo that I’m alive.”

21. A. threw  B. struck  C. crashed  D. burst
22. A. protected  B. fought  C. banned  D. permitted
23. A. satisfied  B. happy  C. unconcerned  D. uncomfortable
24. A. car  B. dog  C. house  D. life
25. A. started  B. grew  C. worsened  D. weaken
26. A. predicting  B. fearing  C. promising  D. hoping
27. A. stopped  B. happened  C. changed  D. improved
28. A. distance  B. vision  C. feeling  D. action
29. A. watched  B. slept  C. sat  D. observed
30. A. leapt  B. slipped  C. walked  D. raced
31. A. give  B. break  C. draw  D. move
32. A. destroyed  B. touched  C. broke  D. pressed
33. A. gone  B. hurt  C. missing  D. regretful
34. A. sorrow  B. thrill  C. terror  D. confusion
35. A. regardless of  B. thanks to  C. according to  D. apart from

III. Other

Read the passage below. Five parts are underlined; for each question, choose from the four options the one that best matches the meaning of the underlined part.