数据挖掘应用程序Bagged RBF分类器性能评估(IJIEEB-V5-N5-7)

- 格式:pdf

- 大小:291.86 KB

- 文档页数:8

数据挖掘算法准确性和效率评估说明数据挖掘算法是对庞大、复杂数据集进行分析和挖掘的过程,用于发现隐藏在数据中的模式、关系和规律。

数据挖掘算法的准确性和效率是衡量其优劣的两个重要指标。

准确性指算法在预测、分类、聚类或模式挖掘等任务中的预测能力和准确率,而效率则指算法在处理大量数据时所消耗的时间和资源。

在评估数据挖掘算法的准确性方面,常用的方法有交叉验证、留出法和自助法等。

交叉验证是将数据集划分为训练集和测试集,多次重复实验,每一次都选择不同的训练集和测试集,计算平均准确率来评估算法的预测能力。

留出法是将数据集划分为训练集和验证集两部分,通过在验证集上计算准确率来评估算法的性能。

自助法是通过重复抽取数据集生成多个大小相等的训练集,对每个训练集进行训练和测试,计算平均准确率来评估算法的准确性。

这些方法都可以有效地评估数据挖掘算法的准确性,但不同的方法适用于不同的场景和数据量。

此外,还可以使用混淆矩阵、ROC曲线和精确率-召回率曲线等评价指标来评估算法的准确性。

混淆矩阵可以显示算法在不同类别上的分类结果,从而计算出准确率、召回率和F1值等指标;ROC曲线则可以评估算法的分类性能,通过绘制真阳性率和假阳性率之间的关系来判断算法的预测能力;精确率-召回率曲线可以用来判断算法在不同阈值下的分类结果,以及平衡算法的准确性和召回率。

在评估数据挖掘算法的效率方面,通常使用算法的运行时间和所消耗的计算资源来衡量。

数据挖掘算法的运行时间可以通过对算法进行时间复杂度分析来预估,以了解算法在处理大规模数据时所需的时间。

此外,还可以通过实际运行算法并记录运行时间来评估其效率。

计算资源的消耗则可以通过算法对内存和CPU的占用情况来评估。

对于处理大规模数据的算法来说,能够高效地利用计算资源是非常重要的。

综上所述,准确性和效率是评估数据挖掘算法的两个重要指标。

准确性是指算法在预测、分类、聚类或模式挖掘等任务中的预测能力和准确率,可以通过交叉验证、留出法和自助法等方法来评估。

第34卷第6期2017年 12月贵州大学学报(自然科学版)Journal of Guizhou University!Natural Sciences)Vol.34 No.6Dec.2017文章编号 1000-5269 (2017 #06-0054-05 DOI:10.1595C/ k i.gdxbzrl〇.2017.06.11不平衡数据的随机平衡采样bagging算法分类研究季梦遥\袁磊2!(1.武汉大学人民医院消化内科,湖北武汉430000#2.武汉大学人民医院信息中心,湖北武汉430000)摘要:不平衡数据广泛存在于现实世界中,严重影响了传统分类器的分类性能。

本文提出了随机 平衡采样算法(random balance s a m p lin g,R B S),并以此为基础提出了随机平衡采样b a g g in g算法 (RBSBagging)用于解决不平衡数据集的分类问题。

最后,采用6组U C I数据集对提出的分类算法 进行验证,结果表明本文提出的R B S B a g g in g算法可以较好地解决不平衡数据集的分类问题。

关键词:不平衡数据;采样;b a g g in g算法中图分类号:T P311.11 文献标识码:A在现实世界的应用领域中,不平衡数据广泛存 在。

例如:在故障诊断[1_2]中,故障的机率远远低 于正常运行情况,此类情况还广泛分布于网络人 侵[3-5]、疾病诊断[6-7]、信用卡欺骗[M]等。

在分类 问题中,分类对象的样本分布通常是不均匀的,即某一类的样本数目远远大于其他类的样本数目,称 之为不平衡数据集。

在不平衡数据集中,样本较少 的类称之为少数类,样本较多的类称之为多数类,而且少数类通常包含更加重要的有用信息。

然 而,传统的机器学习算法大都基于样本的数据分 布是均匀的,分类器对整体的预测准确性较高,但 对少数有用信息的预测准确率却十分低。

例如%网络人侵的历史数据中,只有1%的人侵记录,其 余99k的非人侵记录。

基于模糊聚类与RBF网络集成分类器的验证码识别宋人杰;刘娟【摘要】This paper puts forward a kind of integrated classifier based on fuzzy clusteringand the back propagation neural network, for the Captcha recognition with merged charac-ters. This classifier using of the dynamic feedback thought combination with segmentation and recognition. First, extracted feature of character by fuzzy clustering algorithm, and take it as the input of the RBF neural network. Then the network selects the node dynami-cally based on recognition confidence and membership degree of character features. Finally, Validation effectiveness and recognition rate through experiments. This classifier reflects the idea that considering the overall as priority and the details as compensation, making fulluse ving recognition rates of low quaity characters. of information of the training sample set, and impro-%针对粘连字符验证码识别率低的问题,提出了一种基于模糊聚类和径向基神经网络的动态集成分类器。

数据挖掘模型评估数据挖掘在现代社会中扮演着重要角色,通过从大量数据中发现并提取有价值的信息,帮助企业做出准确的决策。

然而,数据挖掘的结果往往依赖于所选择的模型,因此对模型进行评估成为必要的步骤。

本文将介绍数据挖掘模型的评估方法,以及常用的评估指标。

一、数据集拆分在进行模型评估之前,我们需要先将数据集划分为训练集和测试集。

训练集用于模型的训练和参数调优,而测试集则用于评估模型的性能。

通常,我们采用随机拆分的方式,保证训练集和测试集的数据分布一致。

二、评估指标选择不同的数据挖掘任务需要使用不同的评估指标来衡量模型的性能。

以下是一些常用的评估指标:1. 准确率(Accuracy):准确率是分类模型最常用的指标之一,它衡量模型预测正确的样本数与总样本数的比例。

准确率越高,模型的性能越好。

2. 精确率(Precision):精确率是衡量模型预测结果中正例的准确性,即真正例的数量与预测为正例的样本数之比。

精确率越高,模型预测的正例越准确。

3. 召回率(Recall):召回率是衡量模型对正例的覆盖率,即真正例的数量与实际为正例的样本数之比。

召回率越高,模型对正例的识别能力越强。

4. F1值(F1-Score):F1值是精确率和召回率的调和均值,综合考虑了模型的准确性和覆盖率。

F1值越高,模型的综合性能越好。

5. AUC-ROC:AUC-ROC(Area Under Curve of Receiver Operating Characteristic)是用于衡量二分类模型性能的指标。

ROC曲线绘制了模型在不同分类阈值下的假正例率和真正例率之间的变化关系,AUC-ROC值越大,模型的性能越好。

三、常用的模型评估方法评估模型的方法多种多样,根据任务和数据类型的不同,我们可以选择不同的方法来评估模型的性能。

以下是几种常用的模型评估方法:1. 留出法(Hold-Out):留出法是最简单的模型评估方法之一,将数据集划分为训练集和测试集,并使用训练集训练模型,最后使用测试集来评估模型的性能。

数据分析知识:如何度量数据挖掘算法的性能随着大量数据的产生和存储,数据分析技术在各个领域中得到了广泛的应用,使数据挖掘技术日益成为大数据处理的重要手段之一。

而在实际应用中,如何度量数据挖掘算法的性能显得非常重要。

本文就此为题,将从评估指标、数据集划分和交叉验证、模型选择和调参等方面介绍如何度量数据挖掘算法的性能。

一、评估指标评估指标是衡量数据挖掘算法性能的关键因素之一。

根据数据挖掘任务的不同类型,可选择不同的评估指标。

例如分类问题可选用准确率(Accuracy)、召回率(Recall)、精度(Precision)和F1值等,而回归问题可选用均方误差(MSE)和R2等指标。

总的来说,评估指标应该具有准确、可解释性和可比性等特点,方便建模者对模型进行调整和改进。

以分类问题为例,给出常用的评估指标:1.准确率(Accuracy)准确率是最常见的分类评估指标,其计算方式为分类正确的样本数占总样本数的比例。

但准确率不一定能真实反映分类模型的性能,因为它无法区分不同类别的分类结果,对于不平衡的数据集表现较差。

2.召回率(Recall)召回率表示在所有实际为正例中,模型预测为正例的比例。

它是用于检测分类器对所有正例的识别能力的指标。

它可以识别少数类数据,因此能在不平衡的数据集上提供更好的性能评估。

3.精度(Precision)精度表示在所有模型预测结果为正例中,实际为正例的比例。

与召回率相反,精度主要用于检测分类器对所有负例的识别能力。

精度和召回率常常被结合在一起,用F1值度量分类器的性能。

4. F1值F1值综合考虑了精度和召回率两个指标,是二者的调和平均数。

F1值越接近1,说明分类器的性能越好。

二、数据集划分和交叉验证数据集划分和交叉验证是度量数据挖掘算法性能的另一个重要方面。

数据集划分的目的是将原始数据集划分为训练集和测试集,训练集用于训练模型,测试集用于评估模型的性能。

常见的数据集划分方法有留出法和交叉验证法。

第35卷第5期 测绘科学V o L 35 No .52010年9月S ci e n c e of Su rv ey in g a nd Ma pp in gSep .Boosting 和Bagging 算法的高分辨率遥感影像分类探讨陈绍杰①,逄云峰②(①龙岩学院资源工程学院,福建龙岩364012;②龙1=I 矿业集团生产处,山东龙1=1265700)【摘要】多分类器集成能够有效地提高遥感分类精度、降低结果中的不确定性,基于样本操作的Boosting 和 Bagging 算法是多分类器系统常用的两种算法。

针对高分辨率卫星遥感分类的需求,以Quickbird 数据为例,分别 以BP 神经网络、RBF 神经网络和决策树为基分类器,对Boosting 和Bagging 算法的应用效果进行了实验和分析评 价。

结果表明Boosting 算法和Bagging 算法能够用于高分辨率遥感影像分类,具有较好的分类性能。

【关键词】多分类器集成;Boost ing ;Bagg ing ;高分辨率遥感【中图分类号】Tfr75l 【文献标识码】A 【文章编号】1009-2307(20io)05-0169-041引言类判决将根据分量分类器判决结果的投票来决定。

一般来说,这些分量分类器是同构的,可能都是神经网络分类器、当前遥感分类的两个主要的发展方向是:①提出和构 SVM 分类器或都是决策树分类器等。

对于Bagging 算法,组 建新的遥感分类方法,如人工神经网络、支持向量机、人 合多个分类器能通过减小误差方差从而减小期望误差值, 工免疫算法等;②多分类器的集成与组合 。

多分类器系 越多的分类器参与,误差方差就越小"J J 。

Bagging 算法通 统的基本思想是通过对分类器集合中分类器的选择与组合, 过随机的有放同的选取训练样本,改变训练样本集合,使 获得比任何单一分类器更高的精度。

由于不同分类器能够 多个分类器进行组合,得到一个性能改进的组合分类器。

数据挖掘技术的分类算法与性能评估数据挖掘技术是一种通过从大量数据中发现有用信息的过程和方法。

数据挖掘技术被广泛应用于商业领域、金融领域、医疗领域等各个行业,帮助企业和组织发现隐藏在数据背后的模式和规律,帮助做出更明智的决策。

其中,分类算法是数据挖掘中最重要的技术之一,用于将数据集中的对象划分为不同的类别。

一、分类算法的分类在数据挖掘领域,有多种分类算法被广泛使用。

这些算法可以根据不同的属性进行分类,下面将介绍几种常见的分类算法。

1. 决策树算法决策树算法是一种基于树结构的分类算法,它将数据集根据特征属性的取值进行分割,并形成一个树状结构,从而进行预测和分类。

决策树算法简单易懂,可以显示特征重要性,但容易过拟合。

2. 朴素贝叶斯算法朴素贝叶斯算法是一种概率模型,以贝叶斯定理为基础,通过计算各个特征值在已知类别条件下的条件概率,对新的数据进行分类。

朴素贝叶斯算法有较高的分类准确率,并且对缺失数据具有很好的鲁棒性。

3. 支持向量机算法支持向量机算法是一种基于统计学习理论的分类算法,通过找到最优的超平面来将数据集划分为不同的类别。

支持向量机算法在处理线性可分问题时表现良好,但对于复杂的非线性问题可能会面临挑战。

4. K近邻算法K近邻算法是一种基于实例的分类算法,它根据离新数据点最近的K个邻居来判断其所属的类别。

K近邻算法简单直观,但在处理大规模数据时会比较耗时。

二、性能评估方法对于分类算法的性能评估,有多种指标和方法可以使用。

下面介绍几种常见的性能评估方法。

1. 准确率准确率是最直观的评估分类算法性能的指标,它表示分类器正确分类的样本数量占总样本数量的比例。

然而,当数据集存在不平衡的情况下,准确率可能不是一个很好的评估指标,因为算法可能更倾向于预测数量较多的类别。

2. 精确率与召回率精确率和召回率是一种用于评估分类算法性能的常用指标,尤其在存在不平衡数据集的情况下更能体现算法的表现。

精确率指分类器正确分类为阳性的样本数量与所有被分类为阳性的样本数量的比例。

基于Bagging算法和遗传神经网络的交通事件检测

朱红斌

【期刊名称】《计算机应用与软件》

【年(卷),期】2010(027)001

【摘要】提出一种集成遗传神经网络的交通事件检测方法,以上下游的流量和占有率作为特征,RBF神经网络作为分类器进行交通事件的自动分类与检测.在RBF 神经网络的训练过程中,采用遗传算法GA(Genetic Algorithm)对RBF神经网络的隐层中心值和宽度进行优化,用递推最小二乘法训练隐层和输出层之间的权值.为了提高神经网络的分类能力,采用Bagging算法,进行网络集成.通过Matlab仿真实验,证明该方法相对于传统的事件检测算法能更准确、快速地实现分类.

【总页数】3页(P234-236)

【作者】朱红斌

【作者单位】丽水学院计算机与信息工程学院,浙江,丽水,323000

【正文语种】中文

【相关文献】

1.基于TAN分类算法的交通事件检测 [J], 凃强;李大韦;程琳

2.基于视频的公路交通事件检测算法研究 [J], 胡永

3.基于视频的公路交通事件检测算法研究 [J], 胡永

4.基于车辆积压长度的高速公路交通事件检测算法 [J], 李翠;李雪

5.基于遗传算法和遗传神经网络算法的堆石料参数反演分析研究 [J], 吕松召;张墩;付志昆;吴长彬

因版权原因,仅展示原文概要,查看原文内容请购买。

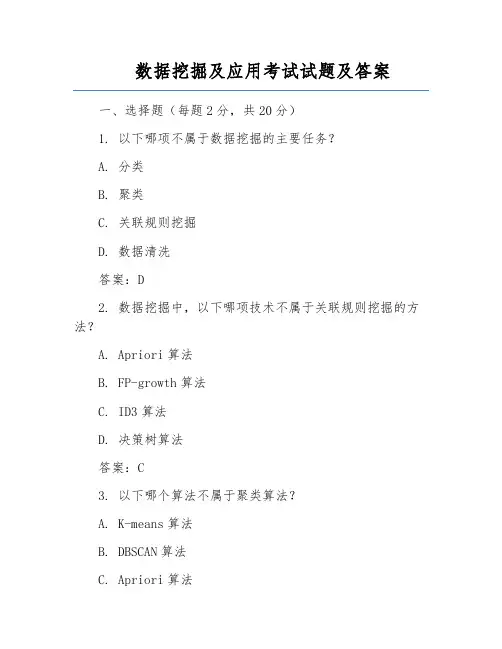

数据挖掘及应用考试试题及答案一、选择题(每题2分,共20分)1. 以下哪项不属于数据挖掘的主要任务?A. 分类B. 聚类C. 关联规则挖掘D. 数据清洗答案:D2. 数据挖掘中,以下哪项技术不属于关联规则挖掘的方法?A. Apriori算法B. FP-growth算法C. ID3算法D. 决策树算法答案:C3. 以下哪个算法不属于聚类算法?A. K-means算法B. DBSCAN算法C. Apriori算法D. 层次聚类算法答案:C4. 数据挖掘中,以下哪个属性类型不适合进行关联规则挖掘?A. 连续型属性B. 离散型属性C. 二进制属性D. 有序属性答案:A5. 数据挖掘中,以下哪个评估指标用于衡量分类模型的性能?A. 准确率B. 精确度C. 召回率D. 所有以上选项答案:D二、填空题(每题3分,共30分)6. 数据挖掘的目的是从大量数据中挖掘出有价值的________和________。

答案:知识;模式7. 数据挖掘的主要任务包括分类、聚类、关联规则挖掘和________。

答案:预测分析8. Apriori算法中,最小支持度(min_support)和最小置信度(min_confidence)是两个重要的参数,它们分别用于控制________和________。

答案:频繁项集;强规则9. 在K-means聚类算法中,聚类结果的好坏取决于________和________。

答案:初始聚类中心;迭代次数10. 数据挖掘中,决策树算法的构建过程主要包括________、________和________三个步骤。

答案:选择最佳分割属性;生成子节点;剪枝三、判断题(每题2分,共20分)11. 数据挖掘是数据库技术的一个延伸,它的目的是从大量数据中提取有价值的信息。

()答案:√12. 数据挖掘过程中,数据清洗是必不可少的步骤,用于提高数据质量。

()答案:√13. 数据挖掘中,分类和聚类是两个不同的任务,分类需要训练集,而聚类不需要。

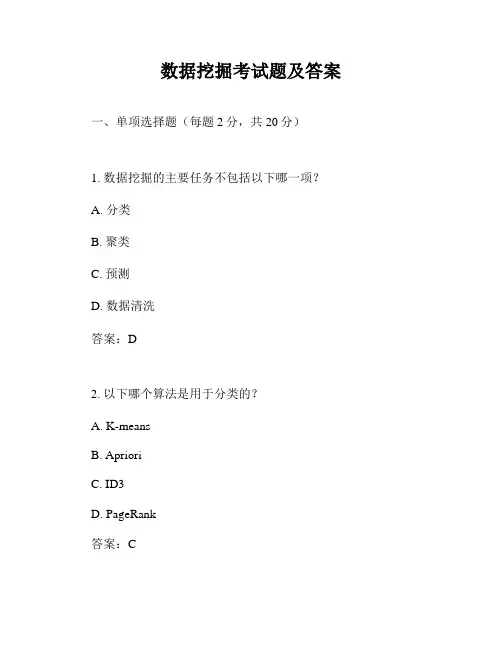

数据挖掘考试题及答案一、单项选择题(每题2分,共20分)1. 数据挖掘的主要任务不包括以下哪一项?A. 分类B. 聚类C. 预测D. 数据清洗答案:D2. 以下哪个算法是用于分类的?A. K-meansB. AprioriC. ID3D. PageRank答案:C3. 在数据挖掘中,哪个指标用于衡量分类模型的性能?A. 准确率B. 召回率C. F1分数D. 所有以上答案:D4. 决策树算法中,哪个算法是基于信息增益来构建树的?A. ID3B. C4.5C. CARTD. CHAID答案:A5. 以下哪个算法是用于关联规则挖掘的?A. K-meansB. AprioriC. ID3D. KNN答案:B6. 在数据挖掘中,哪个算法是用于异常检测的?A. K-meansB. DBSCANC. Isolation ForestD. Naive Bayes答案:C7. 以下哪个算法是用于特征选择的?A. PCAB. AprioriC. ID3D. K-means答案:A8. 在数据挖掘中,哪个算法是用于神经网络的?A. K-meansB. AprioriC. BackpropagationD. ID3答案:C9. 以下哪个算法是用于聚类的?A. K-meansB. AprioriC. ID3D. KNN答案:A10. 在数据挖掘中,哪个算法是用于时间序列预测的?A. ARIMAB. AprioriC. ID3D. K-means答案:A二、多项选择题(每题3分,共15分)11. 数据挖掘中的预处理步骤可能包括哪些?A. 数据清洗B. 数据集成C. 数据转换D. 数据降维E. 特征提取答案:ABCDE12. 以下哪些是数据挖掘中常用的聚类算法?A. K-meansB. DBSCANC. Hierarchical ClusteringD. AprioriE. Mean Shift答案:ABCE13. 在数据挖掘中,哪些是常用的分类算法?A. Naive BayesB. Decision TreesC. Support Vector MachinesD. Neural NetworksE. Apriori答案:ABCD14. 以下哪些是数据挖掘中常用的评估指标?A. 准确率B. 召回率C. F1分数D. ROC曲线E. AUC值答案:ABCDE15. 在数据挖掘中,哪些是异常检测算法?A. Isolation ForestB. One-Class SVMC. Local Outlier FactorD. K-meansE. DBSCAN答案:ABC三、填空题(每题2分,共20分)16. 数据挖掘中的________是指从大量数据中提取或推导出有价值信息的过程。

机器学习技术中的文本分类方法性能评估指标在机器学习中,文本分类是一项重要的任务,它涉及将文本数据分为不同的类别或标签。

而为了评估文本分类方法的性能,需要使用一些指标来衡量其准确性和效果。

下面将介绍一些常用的文本分类方法性能评估指标。

1. 准确率(Accuracy)准确率是评估分类器的常用指标之一,它表示分类器正确预测的样本数与总样本数之比。

准确率计算公式如下:准确率 = (TP + TN) / (TP + TN + FP + FN)其中,TP(True Positive)表示真正例,即分类器正确预测为正例的样本数;TN(True Negative)表示真负例,即分类器正确预测为负例的样本数;FP(False Positive)表示假正例,即分类器错误预测为正例的样本数;FN(False Negative)表示假负例,即分类器错误预测为负例的样本数。

准确率越高,分类器的性能越好。

2. 精确率(Precision)与召回率(Recall)精确率和召回率是衡量分类器性能的重要指标,它们可以用来评估分类器在某个类别上的预测能力。

精确率表示分类器正确预测为正例的样本数占分类器预测为正例的样本总数之比,计算公式如下:精确率 = TP / (TP + FP)召回率表示分类器正确预测为正例的样本数占真正例的样本总数之比,计算公式如下:召回率 = TP / (TP + FN)精确率和召回率都越高,分类器的性能越好。

但是精确率和召回率之间存在一种权衡关系,提高精确率通常会导致召回率降低,反之亦然。

因此,常常使用F1值来综合评估分类器的性能。

3. F1值F1值是基于精确率和召回率的综合评估指标,它综合了两者的性能并给出了一个综合的评价结果。

F1值的计算公式如下:F1 = 2 * (精确率 * 召回率) / (精确率 + 召回率)F1值越高,分类器的性能越好。

4. ROC曲线与AUCROC曲线和AUC是针对二分类问题的性能评估指标,用于评估分类器在不同阈值下的准确性。

数据挖掘考试题及答案一、单项选择题(每题2分,共20分)1. 数据挖掘的主要任务不包括以下哪一项?A. 分类B. 聚类C. 预测D. 数据清洗答案:D2. 以下哪个算法不是用于分类的?A. 决策树B. 支持向量机C. K-meansD. 神经网络答案:C3. 在数据挖掘中,关联规则挖掘主要用于发现以下哪种类型的模式?A. 频繁项集B. 异常检测C. 聚类D. 预测答案:A4. 以下哪个指标用于评估分类模型的性能?A. 准确率B. 召回率C. F1分数D. 以上都是答案:D5. 在数据挖掘中,过拟合是指模型:A. 过于复杂,无法泛化到新数据B. 过于简单,无法捕捉数据的复杂性C. 无法处理缺失值D. 无法处理异常值答案:A6. 以下哪个算法是用于异常检测的?A. AprioriB. K-meansC. DBSCAND. ID3答案:C7. 在数据挖掘中,哪个步骤是用于减少数据集中的噪声和不相关特征?A. 数据预处理B. 数据探索C. 数据转换D. 数据整合答案:A8. 以下哪个是时间序列分析中常用的模型?A. 线性回归B. ARIMAC. 决策树D. 神经网络答案:B9. 在数据挖掘中,哪个算法是用于处理高维数据的?A. 主成分分析(PCA)B. 线性回归C. 逻辑回归D. 随机森林答案:A10. 以下哪个是文本挖掘中常用的技术?A. 词袋模型B. 决策树C. 聚类分析D. 以上都是答案:D二、多项选择题(每题3分,共15分)11. 数据挖掘过程中可能涉及的步骤包括哪些?A. 数据清洗B. 数据转换C. 数据探索D. 模型训练答案:ABCD12. 以下哪些是数据挖掘中常用的数据预处理技术?A. 缺失值处理B. 特征选择C. 特征缩放D. 数据离散化答案:ABCD13. 在数据挖掘中,哪些因素可能导致模型过拟合?A. 训练数据量过少B. 模型过于复杂C. 训练数据噪声过多D. 训练数据不具代表性答案:ABCD14. 以下哪些是评估聚类算法性能的指标?A. 轮廓系数B. 戴维斯-邦丁指数C. 兰德指数D. 互信息答案:ABCD15. 在数据挖掘中,哪些是常用的特征工程方法?A. 特征选择B. 特征提取C. 特征构造D. 特征降维答案:ABCD三、简答题(每题10分,共30分)16. 简述数据挖掘中的“挖掘”过程通常包括哪些步骤。

第1篇一、基础知识1. 请解释什么是数据挖掘?它与数据分析、数据仓库等概念有什么区别?解析:数据挖掘是从大量数据中提取有价值信息的过程,通常涉及使用统计方法、机器学习算法等。

数据分析侧重于对数据的理解和解释,而数据仓库则是存储大量数据的系统,用于支持数据分析和挖掘。

2. 什么是特征工程?为什么它在数据挖掘中很重要?解析:特征工程是指将原始数据转换为更适合模型处理的形式的过程。

它包括特征选择、特征提取和特征变换等。

特征工程的重要性在于,它可以提高模型的准确性和泛化能力,减少过拟合,提高模型的可解释性。

3. 请解释什么是机器学习?它与数据挖掘有什么关系?解析:机器学习是使计算机能够从数据中学习并做出决策或预测的方法。

数据挖掘是机器学习的一个应用领域,它使用机器学习算法来发现数据中的模式和知识。

4. 什么是监督学习、无监督学习和半监督学习?解析:- 监督学习:在已知输入和输出关系的情况下,学习一个函数来预测输出。

例如,分类和回归。

- 无监督学习:在只有输入数据的情况下,学习数据的结构和模式。

例如,聚类和关联规则学习。

- 半监督学习:结合了监督学习和无监督学习,使用部分标记数据和大量未标记数据。

5. 什么是交叉验证?它在数据挖掘中有什么作用?解析:交叉验证是一种评估模型性能的方法,通过将数据集分为训练集和验证集,不断替换验证集来评估模型在不同数据子集上的表现。

它有助于减少模型评估中的偏差和方差。

二、数据处理与预处理6. 什么是数据清洗?请列举至少三种常见的数据清洗任务。

解析:数据清洗是指识别和纠正数据中的错误、异常和不一致的过程。

常见的数据清洗任务包括:- 缺失值处理:识别并处理缺失的数据。

- 异常值检测:识别和修正异常值。

- 数据格式化:统一数据格式,如日期格式、货币格式等。

7. 什么是数据标准化?它与数据归一化有什么区别?解析:数据标准化是指将数据缩放到具有相同尺度范围的过程,通常使用z-score 标准化。

人工智能练习题及答案一、单选题(共103题,每题1分,共103分)1.以下关于Bagging(装袋法)的说法不正确的是A、能提升机器学习算法的稳定性和准确性,但难以避免overfittingB、Bagging(装袋法)是一个统计重采样的技术,它的基础是BootstrapC、主要通过有放回抽样)来生成多个版本的预测分类器,然后把这些分类器进行组合D、进行重复的随机采样所获得的样本可以得到没有或者含有较少的噪声数据正确答案:A2.在RNN中,目前使用最广泛的模型便是()模型,该模型能够更好地建模长序列。

A、SLTMB、SLMTC、LSTMD、LSMT正确答案:C3.做一个二分类预测问题,先设定阈值为0.5,概率不小于0.5的样本归入正例类(即1),小于0.5的样本归入反例类(即0)。

然后,用阈值n(n>0.5)重新划分样本到正例类和反例类,下面说法正确的是()。

A、增加阈值会降低查准率B、增加阈值不会降低查准率C、增加阈值会提高召回率D、增加阈值不会提高召回率正确答案:D4.若变量间存在显式的因果关系,则常使用()模型。

A、贝叶斯网B、马尔可夫网C、切比雪夫网D、D珀尔网正确答案:A5.操作系统主要是对计算机系统的全部()进行管理,以方便用户、提高计算机使用效率的一种系统软件。

A、资源B、应用软件C、系统软硬件D、设备正确答案:A6.被誉为计算机科学与人工智能之父的是()。

A、西蒙B、图灵C、纽维尔D、费根鲍姆正确答案:B7.关于RBF神经网络描述错误的是A、单隐层前馈神经网络B、隐层神经元激活函数为径向基函数C、输出层是对隐层神经元输出的非线性组合D、可利用BP算法来进行参数优化正确答案:C8.超市收银员用扫描器直接扫描商品的条形码,商品的价格信息就会呈现出来,这主要利用了人工智能中的()技术。

A、智能代理B、专家系统C、模式识别D、机器翻译正确答案:C9.1x1卷积的主要作用是A、加快卷积运算B、通道降维C、扩大感受野D、增大卷积核正确答案:B10.下面哪项操作能实现跟神经网络中Dropout的类似效果?A、BoostingB、BaggingC、StackingD、Mapping正确答案:B11.将原始数据进行集成、变换、维度规约、数值规约是在以下哪个步骤的任务?()A、频繁模式挖掘B、数据流挖掘C、分类和预测D、数据预处理正确答案:D12.标准正态分布的均数与标准差是()。

数据挖掘中的异常检测算法综述引言随着大数据时代的到来,数据挖掘成为了从海量数据中发现有用信息的重要工具。

然而,在大量的数据中,异常值常常存在,它们可能代表了潜在的异常情况,也可能是错误的数据记录。

因此,异常检测算法在数据挖掘中起着重要的作用。

本文将综述常见的数据挖掘中的异常检测算法及其应用领域。

一、什么是异常检测异常检测又被称为离群点检测,它是数据挖掘领域的一个重要任务。

异常检测旨在发现与大多数数据样本不符的个别异常数据。

这些异常可以是真实的异常事件,也可以是噪声或错误。

异常检测通常被应用在金融欺诈检测、网络入侵检测、健康监测、设备故障检测等领域。

二、常见的异常检测算法1. 基于统计的方法:基于统计的异常检测方法是最常见的技术之一。

它假设正常数据遵循某种已知的统计分布,而异常数据则不符合该分布。

基于统计的方法包括正态分布或其他概率分布的假设,通过计算样本点与分布之间的差异来判断异常值。

常见的统计方法包括Z-Score、箱线图、泊松分布等。

2. 基于聚类的方法:基于聚类的异常检测方法通过将数据集划分为不同的簇来检测异常。

它假设正常数据点应该聚集在一起,而异常数据点则偏离这些簇。

常见的聚类算法包括K-Means、DBSCAN、LOF等。

其中,LOF算法基于每个数据点周围邻居的密度来估计异常度。

3. 基于近邻的方法:基于近邻的方法是一种常见的异常检测方法,它利用数据点之间的距离或相似性度量来判断异常值。

常见的基于近邻的方法包括k近邻算法、孤立森林等。

孤立森林是一种基于集成学习的方法,它通过构建一个随机森林来判断数据点是否为异常。

4. 基于分类的方法:基于分类的异常检测方法将异常检测问题视为一个二分类问题,通过训练一个分类模型来判断数据点是否为异常。

常见的基于分类的方法包括支持向量机、决策树、神经网络等。

这些方法需要标记的训练数据集,其中包含了正常和异常的样本。

5. 基于深度学习的方法:深度学习方法在异常检测中也取得了显著的成果。

基于Bagging集成方法的互联网金融信用风险评估

陈凯玥

【期刊名称】《应用数学进展》

【年(卷),期】2022(11)4

【摘要】互联网金融是对传统金融模式的延伸,但由于部分借款人在借款后无法按期、足额还款,使得互联网金融平台面临着信用风险。

对借款人的信用风险进行准确评估,可以降低风险,并且能够在一定程度上为互联网金融行业的稳定发展提供保障。

数据分析方法在信用风险评估领域已有广泛应用。

本文从国内某互联网金融平台借款人的个人、资产、借款信息三类数据提取特征,研究了数据分析方法中Logistic回归的衍生方法逐步Logistic回归、弹性网络和Bagging集成方法的代表Bagging、极端随机树和随机森林。

研究发现随机森林与逐步Logistic回归分别在F1-score、Accuracy、FPR和AUC指标下效果最优,且筛选出的重要特征也保持一致。

【总页数】11页(P1657-1667)

【关键词】信用风险评估;LOGISTIC回归;Bagging集成;特征重要性

【作者】陈凯玥

【作者单位】河北工业大学理学院

【正文语种】中文

【中图分类】F83

【相关文献】

1.基于Logistic回归模型的互联网金融信用风险评估

2.基于BP神经网络模型的互联网金融信用风险评估研究

3.基于Bagging神经网络集成的燃料电池性能预测方法

4.LGB-BAG在P2P网贷借款者信用风险评估中的应用

5.基于改善Bagging-SVM集成多样性的网络入侵检测方法

因版权原因,仅展示原文概要,查看原文内容请购买。

矿产资源开发利用方案编写内容要求及审查大纲

矿产资源开发利用方案编写内容要求及《矿产资源开发利用方案》审查大纲一、概述

㈠矿区位置、隶属关系和企业性质。

如为改扩建矿山, 应说明矿山现状、

特点及存在的主要问题。

㈡编制依据

(1简述项目前期工作进展情况及与有关方面对项目的意向性协议情况。

(2 列出开发利用方案编制所依据的主要基础性资料的名称。

如经储量管理部门认定的矿区地质勘探报告、选矿试验报告、加工利用试验报告、工程地质初评资料、矿区水文资料和供水资料等。

对改、扩建矿山应有生产实际资料, 如矿山总平面现状图、矿床开拓系统图、采场现状图和主要采选设备清单等。

二、矿产品需求现状和预测

㈠该矿产在国内需求情况和市场供应情况

1、矿产品现状及加工利用趋向。

2、国内近、远期的需求量及主要销向预测。

㈡产品价格分析

1、国内矿产品价格现状。

2、矿产品价格稳定性及变化趋势。

三、矿产资源概况

㈠矿区总体概况

1、矿区总体规划情况。

2、矿区矿产资源概况。

3、该设计与矿区总体开发的关系。

㈡该设计项目的资源概况

1、矿床地质及构造特征。

2、矿床开采技术条件及水文地质条件。