IOS Press Supervised model-based visualization of high-dimensional data

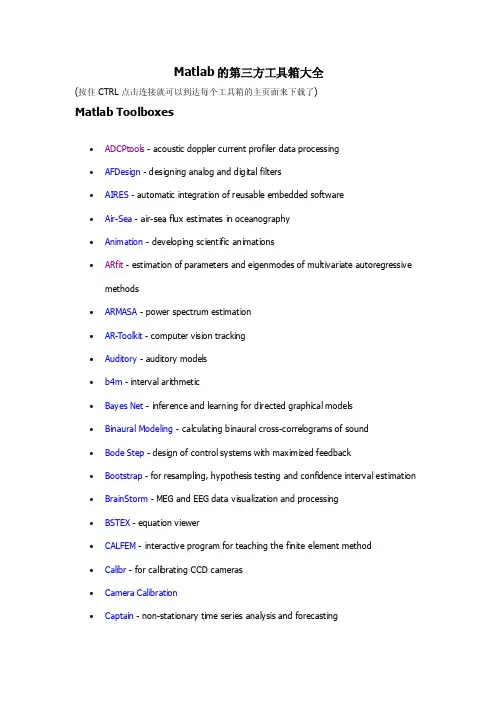

Third-party Matlab Toolboxes

- ADCPtools - acoustic doppler current profiler data processing
- AFDesign - designing analog and digital filters
- AIRES - automatic integration of reusable embedded software
- Air-Sea - air-sea flux estimates in oceanography
- Animation - developing scientific animations
- ARfit - estimation of parameters and eigenmodes of multivariate autoregressive methods
- ARMASA - power spectrum estimation
- AR-Toolkit - computer vision tracking
- Auditory - auditory models
- b4m - interval arithmetic
- Bayes Net - inference and learning for directed graphical models
- Binaural Modeling - calculating binaural cross-correlograms of sound
- Bode Step - design of control systems with maximized feedback
- Bootstrap - for resampling, hypothesis testing and confidence interval estimation
- BrainStorm - MEG and EEG data visualization and processing
- BSTEX - equation viewer
- CALFEM - interactive program for teaching the finite element method
- Calibr - for calibrating CCD cameras
- Camera Calibration
- Captain - non-stationary time series analysis and forecasting
- CHMMBOX - for coupled hidden Markov modeling using maximum likelihood EM
- Classification - supervised and unsupervised classification algorithms
- CLOSID
- Cluster - for analysis of Gaussian mixture models for data set clustering
- Clustering - cluster analysis
- ClusterPack - cluster analysis
- COLEA - speech analysis
- CompEcon - solving problems in economics and finance
- Complex - for estimating temporal and spatial signal complexities
- Computational Statistics
- Coral - seismic waveform analysis
- DACE - kriging approximations to computer models
- DAIHM - data assimilation in hydrological and hydrodynamic models
- Data Visualization
- DBT - radar array processing
- DDE-BIFTOOL - bifurcation analysis of delay differential equations
- Denoise - for removing noise from signals
- DiffMan - solving differential equations on manifolds
- Dimensional Analysis
- DIPimage - scientific image processing
- Direct - Laplace transform inversion via the direct integration method
- DirectSD - analysis and design of computer controlled systems with process-oriented models
- DMsuite - differentiation matrix suite
- DMTTEQ - design and test time domain equalizer design methods
- DrawFilt - drawing digital and analog filters
- DSFWAV - spline interpolation with Dean wave solutions
- DWT - discrete wavelet transforms
- EasyKrig
- Econometrics
- EEGLAB
- EigTool - graphical tool for nonsymmetric eigenproblems
- EMSC - separating light scattering and absorbance by extended multiplicative signal correction
- Engineering Vibration
- FastICA - fixed-point algorithm for ICA and projection pursuit
- FDC - flight dynamics and control
- FDtools - fractional delay filter design
- FlexICA - for independent components analysis
- FMBPC - fuzzy model-based predictive control
- ForWaRD - Fourier-wavelet regularized deconvolution
- FracLab - fractal analysis for signal processing
- FSBOX - stepwise forward and backward selection of features using linear regression
- GABLE - geometric algebra tutorial
- GAOT - genetic algorithm optimization
- Garch - estimating and diagnosing heteroskedasticity in time series models
- GCE Data - managing, analyzing and displaying data and metadata stored using the GCE data structure specification
- GCSV - growing cell structure visualization
- GEMANOVA - fitting multilinear ANOVA models
- Genetic Algorithm
- Geodetic - geodetic calculations
- GHSOM - growing hierarchical self-organizing map
- glmlab - general linear models
- GPIB - wrapper for GPIB library from National Instruments
- GTM - generative topographic mapping, a model for density modeling and data visualization
- GVF - gradient vector flow for finding 3-D object boundaries
- HFRadarmap - converts HF radar data from radial current vectors to total vectors
- HFRC - importing, processing and manipulating HF radar data
- Hilbert - Hilbert transform by the rational eigenfunction expansion method
- HMM - hidden Markov models
- HMMBOX - for hidden Markov modeling using maximum likelihood EM
- HUTear - auditory modeling
- ICALAB - signal and image processing using ICA and higher order statistics
- Imputation - analysis of incomplete datasets
- IPEM - perception based musical analysis
- JMatLink - Matlab Java classes
- Kalman - Bayesian Kalman filter
- Kalman Filter - filtering, smoothing and parameter estimation (using EM) for linear dynamical systems
- KALMTOOL - state estimation of nonlinear systems
- Kautz - Kautz filter design
- Kriging
- LDestimate - estimation of scaling exponents
- LDPC - low density parity check codes
- LISQ - wavelet lifting scheme on quincunx grids
- LKER - Laguerre kernel estimation tool
- LMAM-OLMAM - Levenberg Marquardt with Adaptive Momentum algorithm for training feedforward neural networks
- Low-Field NMR - for exponential fitting, phase correction of quadrature data and slicing
- LPSVM - Newton method for LP support vector machine for machine learning problems
- LSDPTOOL - robust control system design using the loop shaping design procedure
- LS-SVMlab
- LSVM - Lagrangian support vector machine for machine learning problems
- Lyngby - functional neuroimaging
- MARBOX - for multivariate autoregressive modeling and cross-spectral estimation
- MatArray - analysis of microarray data
- Matrix Computation - constructing test matrices, computing matrix factorizations, visualizing matrices, and direct search optimization
- MCAT - Monte Carlo analysis
- MDP - Markov decision processes
- MESHPART - graph and mesh partitioning methods
- MILES - maximum likelihood fitting using ordinary least squares algorithms
- MIMO - multidimensional code synthesis
- Missing - functions for handling missing data values
- M_Map - geographic mapping tools
- MODCONS - multi-objective control system design
- MOEA - multi-objective evolutionary algorithms
- MS - estimation of multiscaling exponents
- Multiblock - analysis and regression on several data blocks simultaneously
- Multiscale Shape Analysis
- Music Analysis - feature extraction from raw audio signals for content-based music retrieval
- MWM - multifractal wavelet model
- NetCDF
- Netlab - neural network algorithms
- NiDAQ - data acquisition using the NiDAQ library
- NEDM - nonlinear economic dynamic models
- NMM - numerical methods in Matlab text
- NNCTRL - design and simulation of control systems based on neural networks
- NNSYSID - neural net based identification of nonlinear dynamic systems
- NSVM - Newton support vector machine for solving machine learning problems
- NURBS - non-uniform rational B-splines
- N-way - analysis of multiway data with multilinear models
- OpenFEM - finite element development
- PCNN - pulse coupled neural networks
- Peruna - signal processing and analysis
- PhiVis - probabilistic hierarchical interactive visualization, i.e. functions for visual analysis of multivariate continuous data
- Planar Manipulator - simulation of n-DOF planar manipulators
- PRTools - pattern recognition
- psignifit - testing hypotheses about psychometric functions
- PSVM - proximal support vector machine for solving machine learning problems
- Psychophysics - vision research
- PyrTools - multi-scale image processing
- RBF - radial basis function neural networks
- RBN - simulation of synchronous and asynchronous random boolean networks
- ReBEL - sigma-point Kalman filters
- Regression - basic multivariate data analysis and regression
- Regularization Tools
- Regularization Tools XP
- Restore Tools
- Robot - robotics functions, e.g. kinematics, dynamics and trajectory generation
- Robust Calibration - robust calibration in stats
- RRMT - rainfall-runoff modelling
- SAM - structure and motion
- Schwarz-Christoffel - computation of conformal maps to polygonally bounded regions
- SDH - smoothed data histogram
- SeaGrid - orthogonal grid maker
- SEA-MAT - oceanographic analysis
- SLS - sparse least squares
- SolvOpt - solver for local optimization problems
- SOM - self-organizing map
- SOSTOOLS - solving sums of squares (SOS) optimization problems
- Spatial and Geometric Analysis
- Spatial Regression
- Spatial Statistics
- Spectral Methods
- SPM - statistical parametric mapping
- SSVM - smooth support vector machine for solving machine learning problems
- STATBAG - for linear regression, feature selection, generation of data, and significance testing
- StatBox - statistical routines
- Statistical Pattern Recognition - pattern recognition methods
- Stixbox - statistics
- SVM - implements support vector machines
- SVM Classifier
- Symbolic Robot Dynamics
- TEMPLAR - wavelet-based template learning and pattern classification
- TextClust - model-based document clustering
- TextureSynth - analyzing and synthesizing visual textures
- TfMin - continuous 3-D minimum time orbit transfer around Earth
- Time-Frequency - analyzing non-stationary signals using time-frequency distributions
- Tree-Ring - tasks in tree-ring analysis
- TSA - uni- and multivariate, stationary and non-stationary time series analysis
- TSTOOL - nonlinear time series analysis
- T_Tide - harmonic analysis of tides
- UTVtools - computing and modifying rank-revealing URV and UTV decompositions
- Uvi_Wave - wavelet analysis
- varimax - orthogonal rotation of EOFs
- VBHMM - variational Bayesian hidden Markov models
- VBMFA - variational Bayesian mixtures of factor analyzers
- VMT - VRML Molecule Toolbox, for animating results from molecular dynamics experiments
- VOICEBOX
- VRMLplot - generates interactive VRML 2.0 graphs and animations
- VSVtools - computing and modifying symmetric rank-revealing decompositions
- WAFO - wave analysis for fatigue and oceanography
- WarpTB - frequency-warped signal processing
- WAVEKIT - wavelet analysis
- WaveLab - wavelet analysis
- Weeks - Laplace transform inversion via the Weeks method
- WetCDF - NetCDF interface
- WHMT - wavelet-domain hidden Markov tree models
- WInHD - Wavelet-based inverse halftoning via deconvolution
- WSCT - weighted sequences clustering toolkit
- XMLTree - XML parser
- YAADA - analyze single particle mass spectrum data
- ZMAP - quantitative seismicity analysis

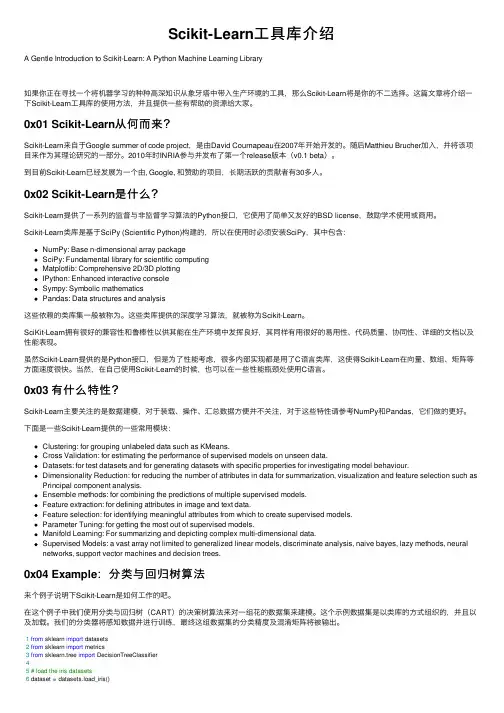

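Introduction to the Scikit-Learn Library

A Gentle Introduction to Scikit-Learn: A Python Machine Learning Library

If you are looking for a tool that brings the advanced ideas of machine learning out of the ivory tower and into production, Scikit-Learn is an excellent choice.

This article introduces how to use the Scikit-Learn library and points to some helpful resources.

0x01 Where did Scikit-Learn come from?

Scikit-Learn began as a Google Summer of Code project, started by David Cournapeau in 2007.

Matthieu Brucher joined later and used the project as part of his thesis work.

In 2010 INRIA became involved and published the first public release (v0.1 beta).

Scikit-Learn has since grown into a project sponsored by […], Google, and […], with more than 30 long-term active contributors.

0x02 What is Scikit-Learn?

Scikit-Learn provides Python interfaces to a range of supervised and unsupervised learning algorithms. It is distributed under the simple, permissive BSD license, which encourages both academic and commercial use.

The Scikit-Learn library is built on SciPy (Scientific Python), which must be installed before Scikit-Learn can be used. This includes:

- NumPy: Base n-dimensional array package
- SciPy: Fundamental library for scientific computing
- Matplotlib: Comprehensive 2D/3D plotting
- IPython: Enhanced interactive console
- Sympy: Symbolic mathematics
- Pandas: Data structures and analysis

These dependent libraries are generally referred to collectively as […].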

Label Matrix: An Ultimate Guide

Introduction

In the world of data analysis and machine learning, label matrix is an essential concept that plays a crucial role in various tasks such as classification, clustering, and supervised learning. This guide aims to delve into the depths of label matrix, explaining its definition, properties, and applications.

What is a Label Matrix?

A label matrix, also known as a target matrix or classification matrix, is a table-like data structure that represents the labels or categories to which data points belong. It is often used in supervised machine learning tasks, where the objective is to predict the label or category of unseen data based on a trained model.

Properties of a Label Matrix

1. Dimensions: A label matrix has two dimensions - rows and columns. The rows represent the unique data points or instances, while the columns represent the distinct labels or categories.
2. Binary or Multiclass: A label matrix can be binary or multiclass, depending on the nature of the classification task. In binary classification, there are only two possible labels, often denoted as 0 or 1. On the other hand, multiclass classification involves more than two labels.
3. Sparse or Dense: Label matrices can be sparse, meaning that a majority of the entries are empty or zero, or dense, where most of the entries have non-zero values. The sparsity of a label matrix depends on the distribution of the labels in the dataset.
4. Class Imbalance: Class imbalance refers to the scenario where one or more labels have significantly more instances compared to others. This property is common in real-world datasets and can affect the model's performance. Handling class imbalance is crucial in machine learning tasks.

Applications of Label Matrix

1. Classification: The primary application of label matrix is in classification tasks. Given a labeled dataset, a model is trained using an algorithm such as logistic regression, support vector machines, or deep learning techniques. The label matrix is used during model training and evaluation, allowing the model to learn the relationship between the input features and the corresponding labels.
2. Evaluation Metrics: Label matrix is essential in evaluating the performance of a classification model. Various evaluation metrics such as accuracy, precision, recall, and F1 score are calculated based on the values in the label matrix. These metrics provide insights into the model's predictive power and its ability to correctly classify different labels.
3. Imbalanced Data Analysis: As mentioned earlier, label matrices often exhibit class imbalance. This property requires special attention to ensure the model performs well on minority classes. Various techniques such as oversampling, undersampling, and cost-sensitive learning can be applied to tackle class imbalance.
4. Interpretation and Visualization: Label matrices can also be used for visualization and interpretation purposes. Techniques such as confusion matrices, heatmaps, and precision-recall curves can provide insights into the model's strengths and weaknesses in classifying different labels. These visualizations aid in identifying patterns and making informed decisions.

Tips for Working with Label Matrices

1. Preprocessing: Before working with label matrices, it is essential to preprocess the data. This may involve handling missing values, encoding categorical variables, and scaling numerical features. The quality of the label matrix greatly depends on the preprocessing steps performed.
2. Model Selection: Choosing an appropriate model for a classification task is critical. Consider factors such as the dataset size, label imbalance, and the complexity of the problem. Different algorithms have their strengths and weaknesses, and it is crucial to select the one that suits the problem at hand.
3. Cross-validation: To ensure the robustness of the model, it is advisable to use techniques like cross-validation. Cross-validation involves splitting the data into training and validation sets, allowing the model's performance to be evaluated on multiple partitions of the dataset. This technique helps in estimating the model's ability to generalize to unseen data.

Conclusion

Label matrix is a fundamental concept in the field of data analysis and machine learning. It provides a structured representation of labels or categories associated with data points. Understanding the properties and applications of a label matrix is crucial for successful classification tasks, model evaluation, and handling class imbalance. By utilizing label matrix techniques, practitioners and researchers can enhance the accuracy and effectiveness of their machine learning models.
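To make the guide's description concrete, the following is a minimal sketch in plain Python of a one-hot label matrix (rows are data points, columns are classes), a per-class count for spotting imbalance, and precision, recall and F1 for one class. The function names `one_hot_label_matrix`, `class_counts` and `precision_recall_f1` are hypothetical helpers introduced for illustration; they are not taken from the guide or from any particular library.

```python
from collections import Counter


def one_hot_label_matrix(labels):
    """Return (classes, matrix); matrix[i][j] is 1 if sample i has class j."""
    classes = sorted(set(labels))
    column = {c: j for j, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in labels]
    for i, label in enumerate(labels):
        matrix[i][column[label]] = 1
    return classes, matrix


def class_counts(labels):
    """Count samples per class; very uneven counts indicate class imbalance."""
    return dict(Counter(labels))


def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall and F1 for one class treated as the positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    classes, matrix = one_hot_label_matrix(["cat", "dog", "cat", "bird"])
    print(classes)
    for row in matrix:
        print(row)
    print(class_counts(["cat", "dog", "cat", "bird"]))
    print(precision_recall_f1(["a", "a", "b", "b"], ["a", "b", "b", "b"], "b"))
```

A one-hot layout is only one possible encoding; a single column of integer class indices carries the same information for single-label problems and is usually more compact.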

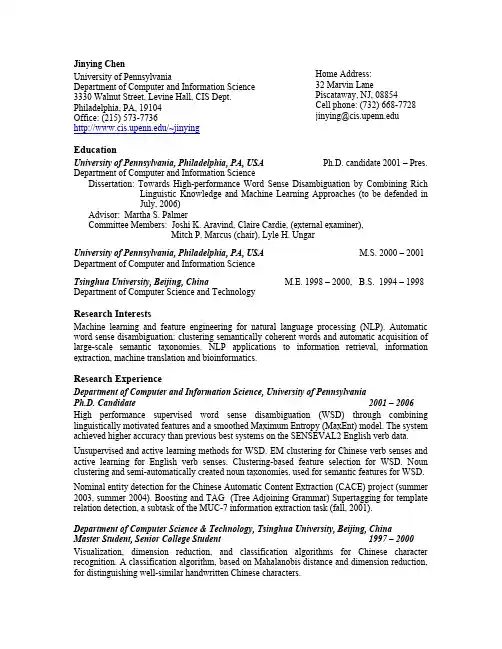

Jinying Chen

Home Address: 32 Marvin Lane Piscataway, NJ, 08854
Cell phone: (732) 668-7728
jinying@

University of Pennsylvania
Department of Computer and Information Science
3330 Walnut Street, Levine Hall, CIS Dept.
Philadelphia, PA, 19104
Office: (215) 573-7736
/~jinying

Education

University of Pennsylvania, Philadelphia, PA, USA
Ph.D. candidate, 2001 – Pres., Department of Computer and Information Science
Dissertation: Towards High-performance Word Sense Disambiguation by Combining Rich Linguistic Knowledge and Machine Learning Approaches (to be defended in July, 2006)
Advisor: Martha S. Palmer
Committee Members: Joshi K. Aravind, Claire Cardie (external examiner), Mitch P. Marcus (chair), Lyle H. Ungar

University of Pennsylvania, Philadelphia, PA, USA
M.S., 2000 – 2001, Department of Computer and Information Science

Tsinghua University, Beijing, China
M.E. 1998 – 2000, B.S. 1994 – 1998, Department of Computer Science and Technology

Research Interests

Machine learning and feature engineering for natural language processing (NLP). Automatic word sense disambiguation; clustering semantically coherent words and automatic acquisition of large-scale semantic taxonomies. NLP applications to information retrieval, information extraction, machine translation and bioinformatics.

Research Experience

Department of Computer and Information Science, University of Pennsylvania
Ph.D. Candidate, 2001 – 2006

High performance supervised word sense disambiguation (WSD) through combining linguistically motivated features and a smoothed Maximum Entropy (MaxEnt) model. The system achieved higher accuracy than previous best systems on the SENSEVAL2 English verb data.

Unsupervised and active learning methods for WSD. EM clustering for Chinese verb senses and active learning for English verb senses. Clustering-based feature selection for WSD. Noun clustering and semi-automatically created noun taxonomies, used for semantic features for WSD.

Nominal entity detection for the Chinese Automatic Content Extraction (CACE) project (summer 2003, summer 2004). Boosting and TAG (Tree Adjoining Grammar) Supertagging for template relation detection, a subtask of the MUC-7 information extraction task (fall, 2001).

Department of Computer Science & Technology, Tsinghua University, Beijing, China
Master Student, Senior College Student, 1997 – 2000

Visualization, dimension reduction, and classification algorithms for Chinese character recognition. A classification algorithm, based on Mahalanobis distance and dimension reduction, for distinguishing highly similar handwritten Chinese characters.

Honors and Awards

• Graduate student research fellowship from the Department of Computer and Information Science, University of Pennsylvania. Sept. 2000 – pres.
• Tsinghua-Motorola Outstanding Student Scholarship, top 3 among over 50 graduate students in the Department of Computer Science and Technology, Tsinghua University. Oct. 1999
• Honor of Excellent Student of Tsinghua University, top 10 among over 150 undergraduate students in the Department of Computer Science and Technology, Tsinghua University. Nov. 1997
• Tsinghua-Daren Chen Scholarship, top 5 among over 150 undergraduate students in the Department of Computer Science and Technology, Tsinghua University. Nov. 1996
• Honor of Excellent Student of Tsinghua University, top 10 among over 150 undergraduate students in the Department of Computer Science and Technology, Tsinghua University. Nov. 1995
• First Prize in the Tenth National High School Student Contest in Physics in Tianjin, sponsored by Chinese Physical Society and Tianjin Physical Society. Top 10 among over 1,000 competition participants in Tianjin area. Nov. 1993

Publications

• Nianwen Xue, Jinying Chen and Martha Palmer. Aligning Features with Sense Distinction Dimensions. Submitted.
• Jinying Chen, Andrew Schein, Lyle Ungar and Martha Palmer. An Empirical Study of the Behavior of Active Learning for Word Sense Disambiguation. Accepted by Human Language Technology conference - North American chapter of the Association for Computational Linguistics annual meeting (HLT-NAACL) 2006, New York City.
• Jinying Chen and Martha Palmer. Clustering-based Feature Selection for Verb Sense Disambiguation. In Proceedings of the 2005 IEEE International Conference on Natural Language Processing and Knowledge Engineering (IEEE NLP-KE 2005), pp. 36-41. Oct. 30-Nov. 1, Wuhan, China, 2005.
• Jinying Chen and Martha Palmer. Towards Robust High Performance Word Sense Disambiguation of English Verbs Using Rich Linguistic Features. In Proceedings of the 2nd International Joint Conference on Natural Language Processing (IJCNLP2005), pp. 933-944. Oct. 11-13, Jeju, Korea, 2005.
• Martha Palmer, Nianwen Xue, Olga B Babko-Malaya, Jinying Chen and Benjamin Snyder. A Parallel Proposition Bank II for Chinese and English. In Proceedings of the 2005 ACL Workshop in Frontiers in Annotation II: Pie in the Sky, pp. 61-68. June 29, Ann Arbor, Michigan, 2005.
• Jinying Chen and Martha Palmer. Unsupervised Learning of Chinese Verb Senses by Using an EM Clustering Model with Rich Linguistic Features. In Proceedings of the 42nd Annual Meeting of Computational Linguists (ACL-04), pp. 295-302. July 21-23, Barcelona, Spain, 2004.
• Jinying Chen, Nianwen Xue and Martha Palmer. Using a Smoothing Maximum Entropy Model for Chinese Nominal Tagging (poster presentation). In Proceedings of the 1st International Joint Conference on Natural Language Processing, pp. 493-500. March 22-24, Hainan Island, China, 2004.
• Libin Shen and Jinying Chen. Using Supertag in MUC-7 Template Relation Task. Technical Report, MS-CIS-02-26, CIS Dept., University of Pennsylvania, 2002.
• Jinying Chen, Yijiang Jin and Shaoping Ma. The Visualization Analysis of Handwritten Chinese Characters in Their Feature Space. Journal of Chinese Information Processing, Vol. 14, No. 5, pp. 42-48, 2000.
• Jinying Chen, Yijiang Jin and Shaoping Ma. A Learning Algorithm Detecting the Similar Chinese Characters' Boundary Based on Unequal-Contraction of Dimension. In Proceedings of the 3rd World Congress on Intelligent Control and Automation, pp. 2765-2769, vol. 4. June 28-July 02, Hefei, China, 2000.

Oral Presentations (2001-2006)

• "Towards Robust High Performance Word Sense Disambiguation by Combining Rich Linguistic Knowledge and Machine Learning Methods", in the 7th Penn Engineering Graduate Research Symposium, Feb. 15, 2006.
• "What We Learned from Supervised Word Sense Disambiguation for English Verbs", in a visit to the Center for Spoken Language Research at University of Colorado, Dec. 7, 2005.
• "Clustering-based Feature Selection for Verb Sense Disambiguation", in the 2005 IEEE International Conference on Natural Language Processing and Knowledge Engineering (IEEE NLP-KE 2005) in Wuhan, China, Oct. 30, 2005.
• "Towards Robust High Performance Word Sense Disambiguation of English Verbs Using Rich Linguistic Features", in the 2nd International Joint Conference on Natural Language Processing (IJCNLP2005) in Jeju, Korea, Oct. 13, 2005.
• "Unsupervised Learning of Chinese Verb Senses by Using an EM Clustering Model with Rich Linguistic Features", in the 42nd Annual Meeting of Computational Linguists (ACL-04) in Barcelona, Spain, July 23, 2004.
• "Fine-grained and Coarse-grained Supervised Word Sense Disambiguation", in ARDA (Advanced Research and Development Activity)'s visit at the Computer and Information Science Department at the University of Pennsylvania, Aug. 22, 2003.

Other Professional Activities

• Organizer of the weekly seminar, the Computational Linguists' Lunch (CLUNCH), attended by about 30 faculty members and students mainly from the Department of Computer and Information Science and the Department of Linguistics, University of Pennsylvania. Spring, 2003
• Teaching assistant for the graduate-level course CIT594 II – Programming Languages and Techniques, which is oriented to master students in the Department of Computer and Information Science, University of Pennsylvania. Spring, 2002
• Teaching assistant for the graduate-level course CIS500 – Programming Languages, which is oriented to Ph.D. students in the Department of Computer and Information Science, University of Pennsylvania. Fall, 2001
• Teaching assistant for the undergraduate-level course "Introduction to Artificial Intelligence" in the Department of Computer Science and Technology, Tsinghua University. Fall, 1998
• Participation in the editorial work (collecting and editing about 200 vocabulary entries) for a major computer dictionary, the English-Chinese Dictionary of Computers and Multimedia (published by Tsinghua University Press, 2003), at Tsinghua University under the supervision of Dr. Fuzong Lin. Summer, 1998

Reference

Joshi K. Aravind, PhD (joshi@, 215-898-8540)
Martha S. Palmer, PhD (Martha.Palmer@, 303-492-1300)
Lyle H. Ungar, PhD (ungar@, 215-898-7449)


Intelligent Data Analysis 4 (2000) 213–227, IOS Press

Supervised model-based visualization of high-dimensional data

Petri Kontkanen, Jussi Lahtinen, Petri Myllymäki, Tomi Silander and Henry Tirri

Complex Systems Computation Group (CoSCo)¹, P.O. Box 26, Department of Computer Science, FIN-00014 University of Helsinki, Finland

Received 8 October 1999; revised 22 November 1999; accepted 24 December 1999

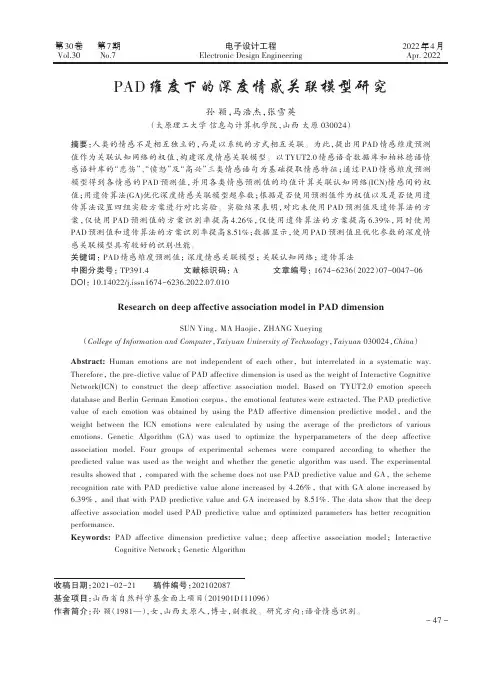

Abstract. When high-dimensional data vectors are visualized on a two- or three-dimensional display, the goal is that two vectors close to each other in the multi-dimensional space should also be close to each other in the low-dimensional space. Traditionally, closeness is defined in terms of some standard geometric distance measure, such as the Euclidean distance, based on a more or less straightforward comparison between the contents of the data vectors. However, such distances do not generally reflect properly the properties of complex problem domains, where changing one bit in a vector may completely change the relevance of the vector. What is more, in real-world situations the similarity of two vectors is not a universal property: even if two vectors can be regarded as similar from one point of view, from another point of view they may appear quite dissimilar. In order to capture these requirements for building a pragmatic and flexible similarity measure, we propose a data visualization scheme where the similarity of two vectors is determined indirectly by using a formal model of the problem domain; in our case, a Bayesian network model. In this scheme, two vectors are considered similar if they lead to similar predictions, when given as input to a Bayesian network model. The scheme is supervised in the sense that different perspectives can be taken into account by using different predictive distributions, i.e., by changing what is to be predicted. In addition, the modeling framework can also be used for validating the rationality of the resulting visualization. This model-based visualization scheme has been implemented and tested on real-world domains with encouraging results.

Keywords: Data visualization, Multidimensional scaling, Bayesian networks, Sammon's mapping

1. Introduction

When displaying high-dimensional data on a two- or three-dimensional device, each data vector has to be provided with the corresponding 2D or 3D coordinates which determine its visual location. In traditional statistical data analysis (see, e.g., [8,6]), this task is known as multidimensional scaling. From one perspective, multidimensional scaling can be seen as a data compression or data reduction task, where the goal is to replace the original high-dimensional data vectors with much shorter vectors, while losing as little information as possible. Consequently, a pragmatically sensible data reduction scheme is such that two vectors close to each other in the multidimensional space are also close to each other in the three-dimensional space. This raises the question of a distance measure: what is a meaningful definition of similarity when dealing with high-dimensional vectors in complex domains?
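The requirement that close vectors stay close can be made concrete with a stress measure. The following is a minimal sketch, assuming Sammon's stress (the mapping named in the keywords) with plain Euclidean distances on both sides; the function names and the toy data are hypothetical and are not taken from the paper, and the paper's own scheme replaces the high-dimensional distance with a model-based one.

```python
import numpy as np

def pairwise_distances(points):
    """Euclidean distance matrix between all rows of `points`."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def sammon_stress(high_dim, low_dim):
    """Sammon's stress; 0 means every pairwise distance is preserved."""
    d_high = pairwise_distances(high_dim)
    d_low = pairwise_distances(low_dim)
    iu = np.triu_indices(len(high_dim), k=1)  # each pair i < j exactly once
    dh, dl = d_high[iu], d_low[iu]
    if np.any(dh == 0):
        raise ValueError("duplicate high-dimensional vectors are not supported")
    return float(((dh - dl) ** 2 / dh).sum() / dh.sum())

# Hypothetical toy data: four points on a 2-D plane embedded in 5-D space.
rng = np.random.default_rng(0)
plane = rng.normal(size=(4, 2))
high = np.hstack([plane, np.zeros((4, 3))])

stress_good = sammon_stress(high, plane)         # layout keeping both coordinates
stress_bad = sammon_stress(high, plane[:, :1])   # layout dropping one coordinate
```

In this sketch the layout that keeps both informative coordinates reproduces every pairwise distance, while the one-dimensional layout shortens them and is penalized. Any other distance matrix could be passed in place of `d_high` if the notion of similarity changes.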

¹ URL: http://www.cs.Helsinki.FI/research/cosco/, E-mail: cosco@cs.Helsinki.FI

1088-467X/00/$8.00 © 2000 IOS Press. All rights reserved

Traditionally, similarity is defined in terms of some standard geometric distance measure, such as the Euclidean distance. However, such distances do not generally properly reflect the properties of complex problem domains, where the data typically is not coded in a geometric or spatial form. In this type of domain, changing one bit in a vector may totally change the relevance of the vector, and make it in some sense quite a different vector, although geometrically the difference is only one bit. In addition, in real-world situations the similarity of two vectors is not a universal property, but depends on the specific focus of the user: even if two vectors can be regarded as similar from one point of view, they may appear quite dissimilar from another point of view.

In order to capture these requirements for building a pragmatically sensible similarity measure, in this paper we propose and analyze a data visualization scheme where the similarity of two vectors is not directly defined as a function of the contents of the vectors, but rather determined indirectly by using a formal model of the problem domain; in our case, a Bayesian network model defining a joint probability distribution on the domain. From one point of view, the Bayesian network model can be regarded as a module which transforms the vectors into probability distributions, and the distance of the vectors is then defined in probability distribution space, not in the space of the original vectors.

It has been suggested that the results of model-based visualization techniques can be compromised if the underlying model is bad. This is of course true, but we claim that the same holds also for the so-called model-free approaches, as each of these approaches can be interpreted as a model-based method where the model is just not explicitly recognized. For example, the standard Euclidean distance measure is indirectly based on a domain model assuming independent and normally distributed attributes. This is of course a very strong assumption that does not usually hold in practice. We argue that by using formal models it becomes possible to recognize and relax the underlying assumptions that do not hold, which leads to better models, and moreover, to more reasonable and reliable visualizations.

A Bayesian (belief) network [20,21] is a representation of a probability distribution over a set of random variables, consisting of an acyclic directed graph, where the nodes correspond to domain variables, and the arcs define a set of independence assumptions which allow the joint probability distribution for a data vector to be factorized as a product of simple conditional probabilities. Techniques for learning such models from sample data are discussed in [11]. One of the main advantages of the Bayesian network model is the fact that with certain technical assumptions it is possible to marginalize (integrate) over all parameter instantiations in order to produce the corresponding predictive distribution [7,13]. As demonstrated in, e.g., [18], such marginalization improves the predictive accuracy of the Bayesian network model, especially in cases where the amount of sample data is small. Practical algorithms for performing predictive inference in general Bayesian networks are discussed for example in [20,19,14,5].

In our supervised model-based visualization scheme, two vectors are considered similar if they lead to similar predictions, when given as input to the same Bayesian network model. The scheme is supervised, since different perspectives of different users are taken into account by using different predictive distributions, i.e., by changing what is to be predicted. The idea is related to the Bayesian distance metric suggested in [17] as a method for defining similarity in the case-based reasoning framework. The reason for us to use Bayesian networks in this context is the fact that this is the model family we are most familiar with, and according to our experience, it produces more accurate predictive distributions than alternative techniques. Nevertheless, there is no reason why some other model family could not also be used in the suggested visualization scheme, as long as the models are such that they produce a predictive distribution. The principles of the generic visualization scheme are described in more detail in Section 2.

Visualizations of high-dimensional data can be exploited in finding regularities in complex