Latest code for an ffmpeg + SDL player

- Format: doc

- Size: 82.50 KB

- Pages: 17

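ffplay source analysis 6: audio resampling

Before attempting the source analysis, you may first read the following reference articles as background: [1] [2] [3] [4] [5]. The articles in the "ffplay source analysis" series are: [1] [2] [3] [4] [5] [6] [7].

6. Audio resampling

The audio frames that FFmpeg decodes are not necessarily in a format that SDL supports. In that case the audio must be resampled, that is, each audio frame must be converted into an audio format that SDL supports; otherwise it cannot be played correctly.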
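As a minimal illustration of what the format-conversion part of resampling does, the sketch below interleaves planar float samples (the layout FFmpeg calls fltp, which the AAC decoder produces) into packed signed 16-bit samples (the kind of layout SDL's AUDIO_S16SYS expects). It is hand-written C, not ffplay's code: ffplay delegates this work to libswresample, which also handles the sample-rate and channel-layout changes this sketch ignores. The function name fltp_to_s16 is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Convert planar float audio (one array per channel, nominal range [-1.0, 1.0])
 * into packed signed 16-bit audio (L R L R ... for stereo).
 * planes[ch][i] is sample i of channel ch; out needs nb_samples * channels slots.
 * Only the sample format and memory layout change; the sample rate stays the same.
 */
void fltp_to_s16(const float *const *planes, int channels,
                 size_t nb_samples, int16_t *out)
{
    assert(planes != NULL && out != NULL && channels > 0);
    for (size_t i = 0; i < nb_samples; i++) {
        for (int ch = 0; ch < channels; ch++) {
            float v = planes[ch][i];
            /* Clip out-of-range samples instead of letting them wrap around. */
            if (v > 1.0f)
                v = 1.0f;
            if (v < -1.0f)
                v = -1.0f;
            /* Scale to 16 bits and round half away from zero. */
            float scaled = v * 32767.0f;
            out[i * (size_t)channels + (size_t)ch] =
                (int16_t)(scaled >= 0.0f ? scaled + 0.5f : scaled - 0.5f);
        }
    }
}
```

For a stereo frame, planes[0] holds the left channel and planes[1] the right one, and the output alternates L, R, L, R, which is the packed layout SDL 2 requires.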

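Audio resampling involves two steps:

1. Preparation when the audio device is opened: determine the audio format that SDL supports, which becomes the target format for resampling later on.
2. In the audio playback thread, after an audio frame has been taken out, resample it if necessary (when the frame's format does not match the format SDL supports); otherwise output it directly.

6.1 Opening the audio device

The audio device is actually opened in the demuxing thread.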

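The demuxing thread first opens the audio device (registering the audio callback that SDL's audio playback thread will call) and then creates the audio decoding thread.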

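The call chain is:

    main() -->
    stream_open() -->
    read_thread() -->
    stream_component_open() -->
        audio_open(is, channel_layout, nb_channels, sample_rate, &is->audio_tgt);
        decoder_start(&is->auddec, audio_thread, is);

audio_open() fills in the desired audio parameters and opens the audio device, then stores the parameters actually obtained in the output parameter is->audio_tgt. The audio playback thread later uses these parameters to resample the raw audio data into a format the audio device supports.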
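SDL 2 drives playback in a pull model: its audio thread repeatedly calls the registered callback with a buffer (stream, len) that must be filled completely. The sketch below imitates that contract in plain C without SDL. struct pcm_source, demo_audio_callback and fake_device_pull are hypothetical names, and the callback only loosely mirrors what ffplay's sdl_audio_callback does (copy decoded data, and output silence when nothing is available).

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Same shape as SDL 2's SDL_AudioCallback(void *userdata, Uint8 *stream, int len). */
typedef void (*audio_callback_fn)(void *userdata, uint8_t *stream, int len);

/* Hypothetical source of already-decoded PCM bytes (the "player" side). */
struct pcm_source {
    const uint8_t *data;
    int size; /* total bytes available */
    int pos;  /* bytes already handed to the device */
};

/* Fill exactly len bytes: copy what is available, then pad with zeros,
 * which is silence for signed 16-bit PCM such as AUDIO_S16SYS. */
static void demo_audio_callback(void *userdata, uint8_t *stream, int len)
{
    struct pcm_source *src = userdata;
    int avail = src->size - src->pos;
    int n = len < avail ? len : avail;
    memcpy(stream, src->data + src->pos, (size_t)n);
    src->pos += n;
    memset(stream + n, 0, (size_t)(len - n));
}

/* Stand-in for SDL's audio thread: the device decides when and how much to pull. */
void fake_device_pull(audio_callback_fn cb, void *userdata,
                      uint8_t *chunk, int chunk_len)
{
    assert(cb != NULL && chunk != NULL && chunk_len > 0);
    cb(userdata, chunk, chunk_len);
}
```

The key design point is that the player never pushes data: it only keeps its buffer (here pcm_source) filled, and the device's thread takes fixed-size chunks at its own pace.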

    static int audio_open(void *opaque, int64_t wanted_channel_layout, int wanted_nb_channels,
                          int wanted_sample_rate, struct AudioParams *audio_hw_params)
    {
        SDL_AudioSpec wanted_spec, spec;
        const char *env;
        static const int next_nb_channels[] = {0, 0, 1, 6, 2, 6, 4, 6};
        static const int next_sample_rates[] = {0, 44100, 48000, 96000, 192000};
        int next_sample_rate_idx = FF_ARRAY_ELEMS(next_sample_rates) - 1;

        env = SDL_getenv("SDL_AUDIO_CHANNELS");
        if (env) { // if the environment variable is set, it takes priority for the channel count and layout
            wanted_nb_channels = atoi(env);
            wanted_channel_layout = av_get_default_channel_layout(wanted_nb_channels);
        }
        if (!wanted_channel_layout || wanted_nb_channels != av_get_channel_layout_nb_channels(wanted_channel_layout)) {
            wanted_channel_layout = av_get_default_channel_layout(wanted_nb_channels);
            wanted_channel_layout &= ~AV_CH_LAYOUT_STEREO_DOWNMIX; // drop the stereo-downmix bits
        }
        // Derive nb_channels from channel_layout; this corrects wanted_nb_channels if it did not match
        wanted_nb_channels = av_get_channel_layout_nb_channels(wanted_channel_layout);
        wanted_spec.channels = wanted_nb_channels; // number of channels
        wanted_spec.freq = wanted_sample_rate;     // sample rate
        if (wanted_spec.freq <= 0 || wanted_spec.channels <= 0) {
            av_log(NULL, AV_LOG_ERROR, "Invalid sample rate or channel count!\n");
            return -1;
        }
        // Step down to the largest entry of next_sample_rates that is strictly below
        // wanted_sample_rate (index 0 if there is none); it is the fallback rate to try
        // if the device cannot be opened with the requested rate
        while (next_sample_rate_idx && next_sample_rates[next_sample_rate_idx] >= wanted_spec.freq)
            next_sample_rate_idx--;
        // Sample formats come in two families, planar and packed. For a stereo file, with L a
        // left-channel sample and R a right-channel sample:
        //   planar: (plane1) LLLLLLLL...LLLL (plane2) RRRRRRRR...RRRR
        //   packed: (plane1) LRLRLRLR...LRLR
        // Each family has several concrete formats, e.g. AV_SAMPLE_FMT_S16 (packed) and
        // AV_SAMPLE_FMT_S16P (planar). Note that SDL 2.0 does not support planar formats.
        // channel_layout is an int64_t bit mask in which each bit stands for one speaker
        // position; the definitions in channel_layout.h make this clear.
        // Data rate (bits/s) = sample rate (Hz) * sample depth (bits) * number of channels
        wanted_spec.format = AUDIO_S16SYS; // S = signed, 16 = sample depth in bits, SYS = native byte order (an SDL macro)
        wanted_spec.silence = 0;           // silence value
        // SDL audio buffer size, in sample frames (one sample per channel)
        wanted_spec.samples = FFMAX(SDL_AUDIO_MIN_BUFFER_SIZE,
                                    2 << av_log2(wanted_spec.freq / SDL_AUDIO_MAX_CALLBACKS_PER_SEC));
        wanted_spec.callback = sdl_audio_callback; // callback; if NULL, SDL_QueueAudio() must be used instead
        wanted_spec.userdata = opaque;             // argument passed to the callback
        // Open the audio device and create the audio processing thread.
        /* ... the excerpt ends here; the rest of audio_open() is not included ... */
    }
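To make the arithmetic in audio_open() concrete, the sketch below re-implements three of its calculations as standalone C: the fallback-rate search over next_sample_rates, the SDL buffer size, and the PCM data-rate formula from the comments. It assumes ffplay's values SDL_AUDIO_MIN_BUFFER_SIZE = 512 and SDL_AUDIO_MAX_CALLBACKS_PER_SEC = 30 (check them against your copy of ffplay.c); floor_log2 is a stand-in for FFmpeg's av_log2(), and the other function names are hypothetical.

```c
#include <assert.h>

/* Assumed to match the definitions in ffplay.c; verify against your version. */
#define SDL_AUDIO_MIN_BUFFER_SIZE 512
#define SDL_AUDIO_MAX_CALLBACKS_PER_SEC 30

/* Same candidate table as in audio_open(). */
static const int next_sample_rates[] = {0, 44100, 48000, 96000, 192000};

/* Same loop as in audio_open(): step down from the last index while the
 * candidate is >= the wanted rate. The result indexes the largest candidate
 * strictly below wanted_rate, or 0 if there is none. */
int fallback_rate_index(int wanted_rate)
{
    int idx = (int)(sizeof next_sample_rates / sizeof next_sample_rates[0]) - 1;
    while (idx && next_sample_rates[idx] >= wanted_rate)
        idx--;
    return idx;
}

/* floor(log2(v)), returning 0 for v == 0 like FFmpeg's av_log2(). */
static int floor_log2(unsigned v)
{
    int n = 0;
    while (v >>= 1)
        n++;
    return n;
}

/* wanted_spec.samples = FFMAX(MIN_BUFFER, 2 << av_log2(freq / MAX_CALLBACKS)) */
int sdl_buffer_samples(int freq)
{
    assert(freq > 0);
    int s = 2 << floor_log2((unsigned)(freq / SDL_AUDIO_MAX_CALLBACKS_PER_SEC));
    return s > SDL_AUDIO_MIN_BUFFER_SIZE ? s : SDL_AUDIO_MIN_BUFFER_SIZE;
}

/* Data rate (bits/s) = sample rate (Hz) * sample depth (bits) * channels */
long pcm_bit_rate(long sample_rate, int bits, int channels)
{
    return sample_rate * bits * channels;
}
```

For 44100 Hz, 44100 / 30 = 1470 and av_log2(1470) = 10, so the buffer holds 2 << 10 = 2048 sample frames (about 46 ms of audio per callback), and stereo S16 at that rate carries 44100 × 16 × 2 = 1,411,200 bit/s. For a requested 48000 Hz, the fallback index points at 44100.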

Generic ASP video player code

1. avi format

    <object id="video" width="400" height="200" border="0"
            classid="clsid:CFCDAA03-8BE4-11cf-B84B-0020AFBBCCFA">
      <param name="ShowDisplay" value="0">
      <param name="ShowControls" value="1">
      <param name="AutoStart" value="1">
      <param name="AutoRewind" value="0">
      <param name="PlayCount" value="0">
      <param name="Appearance" value="0">
      <param name="BorderStyle" value="0">
      <param name="MovieWindowHeight" value="240">
      <param name="MovieWindowWidth" value="320">
      <param name="FileName" value="file:///D|/work/vod/Mbar.avi">
      <embed width="400" height="200" border="0" showdisplay="0" showcontrols="1" autostart="1"
             autorewind="0" playcount="0" moviewindowheight="240" moviewindowwidth="320"
             filename="file:///D|/work/vod/Mbar.avi" src="Mbar.avi"></embed>
    </object>

2. mpg format

    <object classid="clsid:05589FA1-C356-11CE-BF01-00AA0055595A" id="ActiveMovie1" width="239" height="250">
      <param name="Appearance" value="0">
      <param name="AutoStart" value="-1">
      <param name="AllowChangeDisplayMode" value="-1">
      <param name="AllowHideDisplay" value="0">
      <param name="AllowHideControls" value="-1">
      <param name="AutoRewind" value="-1">
      <param name="Balance" value="0">
      <param name="CurrentPosition" value="0">
      <param name="DisplayBackColor" value="0">
      <param name="DisplayForeColor" value="16777215">
      <param name="DisplayMode" value="0">
      <param name="Enabled" value="-1">
      <param name="EnableContextMenu" value="-1">
      <param name="EnablePositionControls" value="-1">
      <param name="EnableSelectionControls" value="0">
      <param name="EnableTracker" value="-1">
      <param name="Filename" value="../../../mpeg/halali.mpg" valuetype="ref">
      <param name="FullScreenMode" value="0">
      <param name="MovieWindowSize" value="0">
      <param name="PlayCount" value="1">
      <param name="Rate" value="1">
      <param name="SelectionStart" value="-1">
      <param name="SelectionEnd" value="-1">
      <param name="ShowControls" value="-1">
      <param name="ShowDisplay" value="-1">
      <param name="ShowPositionControls" value="0">
      <param name="ShowTracker" value="-1">
      <param name="Volume" value="-480">
    </object>

3. rm format

    <OBJECT ID=video1 CLASSID="clsid:CFCDAA03-8BE4-11cf-B84B-0020AFBBCCFA" HEIGHT=288 WIDTH=352>
      <param name="_ExtentX" value="9313">
      <param name="_ExtentY" value="7620">
      <param name="AUTOSTART" value="0">
      <param name="SHUFFLE" value="0">
      <param name="PREFETCH" value="0">
      <param name="NOLABELS" value="0">
      <param name="SRC" value="rtsp://203.207.131.35/vod/dawan-a.rm">
      <param name="CONTROLS" value="ImageWindow">
      <param name="CONSOLE" value="Clip1">
      <param name="LOOP" value="0">
      <param name="NUMLOOP" value="0">
      <param name="CENTER" value="0">
      <param name="MAINTAINASPECT" value="0">
      <param name="BACKGROUNDCOLOR" value="#000000">
      <embed SRC type="audio/x-pn-realaudio-plugin" CONSOLE="Clip1" CONTROLS="ImageWindow"
             HEIGHT="288" WIDTH="352" AUTOSTART="false">
    </OBJECT>

4. wmv format

    <object id="NSPlay" width=200 height=180 classid="CLSID:22d6f312-b0f6-11d0-94ab-0080c74c7e95"
            codebase="/activex/controls/mplayer/en/nsmp2inf.cab#Version=6,4,5,715"
            standby="Loading Microsoft Windows Media Player components..."
            type="application/x-oleobject" align="right" hspace="5">
      <!-- ASX File Name -->
      <param name="AutoRewind" value=1>
      <param name="FileName" value="xxxxxx.wmv">
      <!-- Display Controls -->
      <param name="ShowControls" value="1">
      <!-- Display Position Controls -->
      <param name="ShowPositionControls" value="0">
      <!-- Display Audio Controls -->
      <param name="ShowAudioControls" value="1">
      <!-- Display Tracker Controls -->
      <param name="ShowTracker" value="0">
      <!-- Show Display -->
      <param name="ShowDisplay" value="0">
      <!-- Display Status Bar -->
      <param name="ShowStatusBar" value="0">
      <!-- Display Go To Bar -->
      <param name="ShowGotoBar" value="0">
      <!-- Display Captioning -->
      <param name="ShowCaptioning" value="0">
      <!-- Player Autostart -->
      <param name="AutoStart" value=1>
      <param name="Volume" value="-2500">
      <!-- Animation at Start -->
      <param name="AnimationAtStart" value="0">
      <!-- Transparent at Start -->
      <param name="TransparentAtStart" value="0">
      <!-- Do not allow a change in display size -->
      <param name="AllowChangeDisplaySize" value="0">
      <!-- Do not allow scanning -->
      <param name="AllowScan" value="0">
      <!-- Do not show context menu on right mouse click -->
      <param name="EnableContextMenu" value="0">
      <!-- Do not allow playback toggling on mouse click -->
      <param name="ClickToPlay" value="0">
    </object>

The simplest playback code:

    <embed src="/Aboutmedia/warner/mtv/naying-021011_01v_120k.wmv" autostart="true" loop="true" width="200" height="150">

Just replace the address /Aboutmedia/warner/mtv/naying-021011_01v_120k.wmv with the video you want to watch.

rm format with video:

    <OBJECT classid=clsid:CFCDAA03-8BE4-11cf-B84B-0020AFBBCCFA height=288 id=video1 width=305 VIEWASTEXT>
      <param name=_ExtentX value=5503>
      <param name=_ExtentY value=1588>
      <param name=AUTOSTART value=-1>
      <param name=SHUFFLE value=0>
      <param name=PREFETCH value=0>
      <param name=NOLABELS value=0>
      <param name=SRC value=/ram/new/xiaoxin1.ram>
      <param name=CONTROLS value=Imagewindow,StatusBar,ControlPanel>
      <param name=CONSOLE value=RAPLAYER>
      <param name=LOOP value=0>
      <param name=NUMLOOP value=0>
      <param name=CENTER value=0>
      <param name=MAINTAINASPECT value=0>
      <param name=BACKGROUNDCOLOR value=#000000>
    </OBJECT>

rm format without video (for audio-only content such as crosstalk comedy or songs):

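ffmpeg command-line usage

ffmpeg is a widely used multimedia processing tool. It offers a rich feature set and flexible parameters, and can be used for audio/video encoding, format conversion, editing and many other tasks.

This article introduces how to use ffmpeg from the command line, including the basic commands and commonly used options.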

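I. Installing ffmpeg

Before you can use ffmpeg, you first need to install it on your computer.

ffmpeg supports multiple platforms; download the package for your platform from the official website or another source and install it.

After installation, type the ffmpeg command at the command line to check whether the installation succeeded.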

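II. Basic commands

1. Viewing ffmpeg version information

To view ffmpeg's version information, use the following command:

```
ffmpeg -version
```

It prints the ffmpeg version number, build configuration and other details.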

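2. Transcoding a video file

ffmpeg can convert a video file to a different encoding format. To transcode a video file, use:

```
ffmpeg -i input.mp4 output.mp4
```

Here input.mp4 is the path of the input file and output.mp4 the path of the output file.

With this command ffmpeg decodes input.mp4 and re-encodes it into output.mp4. The output format and default encoders are chosen from the output file's extension, so with MP4 on both sides the codecs may not actually change; to select codecs explicitly, add options such as -c:v and -c:a, or use -c copy to copy the streams without re-encoding.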

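3. Transcoding an audio file

Just as with video files, ffmpeg can convert audio files to a different encoding format. To transcode an audio file, use:

```
ffmpeg -i input.mp3 output.mp3
```

Here input.mp3 is the path of the input file and output.mp3 the path of the output file.

This command transcodes input.mp3 into output.mp3; as with video, the -c:a option selects a specific audio encoder.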

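4. Cutting a video file

ffmpeg can also cut a video file, that is, extract one segment of it as the output.

To cut a video file, use:

```
ffmpeg -i input.mp4 -ss 00:00:10 -t 00:00:30 output.mp4
```

Here input.mp4 is the path of the input file and output.mp4 the path of the output file; the -ss option specifies the start time and the -t option specifies the duration of the segment (not its end time).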
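The arithmetic behind -ss and -t can be sketched in C: a 30-second duration starting at 10 s ends at the 40-second mark, so the command keeps the input range 00:00:10 to 00:00:40 (ffmpeg's -to option takes an end position instead of a duration). The helpers hms_to_seconds and clip_end_seconds are hypothetical and accept only the HH:MM:SS form, whereas ffmpeg itself also accepts plain and fractional seconds.

```c
#include <assert.h>
#include <stdio.h>

/* Convert an "HH:MM:SS" string to seconds; returns -1 on malformed input.
 * Only this one form is handled here. */
long hms_to_seconds(const char *s)
{
    int h, m, sec;
    char extra;
    assert(s != NULL);
    /* Exactly three numbers and nothing after them. */
    if (sscanf(s, "%d:%d:%d%c", &h, &m, &sec, &extra) != 3)
        return -1;
    if (h < 0 || m < 0 || m > 59 || sec < 0 || sec > 59)
        return -1;
    return (long)h * 3600 + (long)m * 60 + sec;
}

/* End position (seconds) of a cut made with -ss START -t DURATION. */
long clip_end_seconds(const char *start, const char *duration)
{
    long s = hms_to_seconds(start), d = hms_to_seconds(duration);
    return (s < 0 || d < 0) ? -1 : s + d;
}
```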

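Using the FFmpeg command-line tools

This section focuses on three command-line tools: ffmpeg, ffprobe and ffplay. ffserver exists as a simple streaming media server and has little to do with client development, so this book does not cover it (ffserver has since been removed from FFmpeg, as of release 4.0).

As mentioned earlier, ffmpeg is the command-line tool for transcoding media files, ffprobe is the tool for viewing the header information of media files, and ffplay is the tool for playing media files.

Following the principle of starting simple, we first introduce ffprobe, the tool for inspecting media file formats.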

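1. ffprobe

First, use ffprobe to inspect an audio file:

    ffprobe ~/Desktop/32037.mp3

After running the command, look first at this line:

    Duration: 00:05:14.83, start: 0.000000, bitrate: 64 kb/s

It shows that the audio file lasts 5 minutes 14.83 seconds, playback starts at time 0, and the bit rate of the whole file is 64 kbit/s. Next, look at this line:

    Stream #0:0: Audio: mp3, 24000 Hz, stereo, s16p, 64 kb/s

It shows that the first stream is an audio stream encoded as MP3, with a sample rate of 24 kHz, stereo channels, samples stored as signed 16-bit integers (short) in planar layout (s16p), and a stream bit rate of 64 kbit/s.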
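The Duration and bitrate fields are related: multiplying them gives an approximate size. For the MP3 above, 314.83 s × 64,000 bit/s ÷ 8 ≈ 2,518,640 bytes, about 2.5 MB, assuming FFmpeg's "kb/s" means 1000 bit/s and ignoring tags and container overhead. The helper names below are hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* Parse a Duration value such as "00:05:14.83" into seconds; -1.0 on error. */
double parse_duration(const char *s)
{
    int h, m;
    double sec;
    assert(s != NULL);
    if (sscanf(s, "%d:%d:%lf", &h, &m, &sec) != 3)
        return -1.0;
    return h * 3600.0 + m * 60.0 + sec;
}

/* Approximate size in bytes: seconds * (kb/s * 1000 bits) / 8 bits per byte. */
double estimated_bytes(double seconds, double kbps)
{
    return seconds * kbps * 1000.0 / 8.0;
}
```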

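Next, use ffprobe to inspect a video file:

    ffprobe ~/Desktop/32037.mp4

After running the command, the first part of the output is the Metadata section:

    Metadata:
      major_brand: isom
      minor_version: 512
      compatible_brands: isomiso2avc1mp41
      encoder: Lavf55.12.100

These lines are the file's metadata. For example, encoder is Lavf55.12.100: Lavf (libavformat) means the file was written by FFmpeg's libavformat library, and the number that follows is that library's version. The next line is:

    Duration: 00:04:34.56, start: 0.023220, bitrate: 577 kb/s

It means the duration is 4 minutes 34.56 seconds, playback starts at 23 ms, and the bit rate of the whole file is 577 kbit/s. Then comes this line:

    Stream #0:0(und): Video: h264 (avc1 / 0x31637661), yuv420p, 480x480, 508 kb/s, 24 fps

It says that the first stream is a video stream encoded as H.264 (codec tag avc1), each frame is stored as YUV420P, the resolution is 480×480, the stream's bit rate is 508 kbit/s, and the frame rate is 24 frames per second (24 fps). The next line is:

    Stream #0:1(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, stereo, fltp, 63 kb/s

It says that the second stream is an audio stream encoded as AAC (codec tag mp4a) using the LC (Low Complexity) profile, with a sample rate of 44100 Hz, stereo channels, samples stored as floating point in planar layout (fltp), and a bit rate of 63 kbit/s.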
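The yuv420p and 508 kb/s figures show how much H.264 compresses the picture. In YUV420P each frame has a full-resolution Y plane plus two chroma planes at half width and half height, so a 480×480 frame takes 480 × 480 × 1.5 = 345,600 bytes; at 24 fps that is 66,355,200 bit/s uncompressed, roughly 130 times the 508 kb/s stream. A sketch of the calculation (the function names are hypothetical):

```c
#include <assert.h>
#include <stdint.h>

/* Bytes in one raw YUV420P (I420) frame: a full-resolution Y plane plus
 * U and V planes subsampled by 2 in both directions (rounded up for odd sizes). */
int64_t yuv420p_frame_bytes(int width, int height)
{
    assert(width > 0 && height > 0);
    int64_t luma = (int64_t)width * height;
    int64_t chroma = (int64_t)((width + 1) / 2) * ((height + 1) / 2);
    return luma + 2 * chroma;
}

/* Uncompressed bit rate of YUV420P video at the given frame rate. */
int64_t raw_bits_per_second(int width, int height, int fps)
{
    return yuv420p_frame_bytes(width, height) * 8 * fps;
}
```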

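Player code collection
===========

    <TABLE borderColor=#4F3256 background=/DownloadImg/2011/05/1210/11656795_1.jpg border=1>
    <TBODY><TR><TD style="FILTER: alpha(opacity=50,style=3)">
    <P align=center><EMBED src=/data/music/1688812/%E5%AE%8C%E7%BE%8E.mp3?xcode=84ca6d959848266479614d12dac32591 width=300 height=25 type=audio/mpeg loop="-1" autostart="false" volume="0"></P>
    </TD></TR></TBODY></TABLE>

Background music is an important part of a blog! With pleasant music playing as soon as visitors arrive, your little home on the web looks much better; having favorite songs in the background while browsing blogs, writing posts, reading or surfing the web is a real delight, and all of this depends on reliable background music.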

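Many bloggers hope to hear their favorite music as soon as their blog opens.

Making the Sina player loop automatically is not easy. First, the Sina player has no auto-loop feature; breaking the first song's address does make playback automatic, but that is a hack and unreliable. Second, Sina's system has been slow for a long time, so playing any song through Sina is difficult, and looping it automatically is even harder.

Because Sina is hard to use, many people have switched to players outside Sina. For most beginners, however, applying for player code on a website and adding songs themselves is somewhat difficult; the network is also poor at the moment, often you cannot even get in, and even if you do, the quality of what you add is not guaranteed.

I once recommended the Xiaoyaozu player, and quite a few readers adopted it; unfortunately the site has shut down and is unlikely to come back any time soon.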

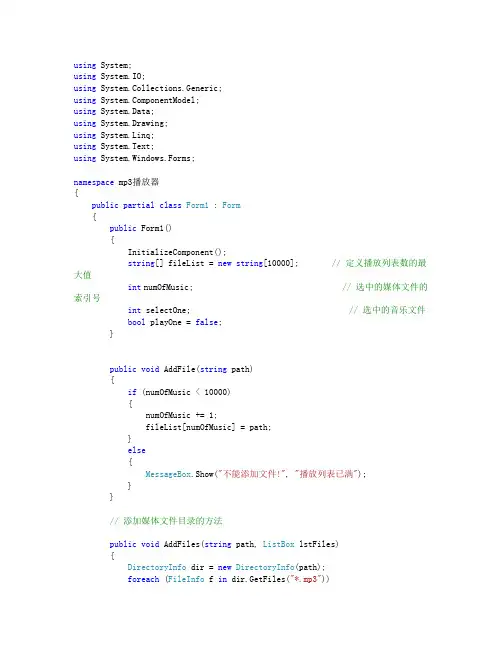

    using System;
    using System.IO;
    using System.Collections.Generic;
    using System.ComponentModel;   // CancelEventArgs
    using System.Data;
    using System.Drawing;
    using System.Linq;
    using System.Text;
    using System.Windows.Forms;

    namespace mp3播放器 {
        public partial class Form1 : Form {
            // Playlist state, declared at class level so every method can use it
            // (the extracted code declared these as locals inside the constructor).
            string[] fileList = new string[10000]; // playlist; entries are 1-based, index 0 unused
            int numOfMusic;       // number of files in the playlist
            int selectOne;        // 1-based index of the selected/playing file
            bool playOne = false; // single-track repeat on/off

            public Form1() {
                InitializeComponent();
            }

            // Append one file to the playlist
            public void AddFile(string path) {
                if (numOfMusic < fileList.Length - 1) { // keep the 1-based index inside the array
                    numOfMusic += 1;
                    fileList[numOfMusic] = path;
                } else {
                    MessageBox.Show("Cannot add the file!", "Playlist is full");
                }
            }

            // Add every .mp3 file in a directory and its subdirectories
            public void AddFiles(string path, ListBox lstFiles) {
                DirectoryInfo dir = new DirectoryInfo(path);
                foreach (FileInfo f in dir.GetFiles("*.mp3")) {
                    AddFile(f.FullName);
                    // Playlist number padded to 5 characters, then the file name
                    // (assumed: the original right-hand side of "strFile = ..." was lost in extraction)
                    string strFile = Convert.ToString(numOfMusic).PadRight(5) + f.Name;
                    lstFiles.Items.Add(strFile);
                }
                foreach (DirectoryInfo d in dir.GetDirectories()) {
                    AddFiles(d.FullName, lstFiles);
                }
            }

            // Remove one file (1-based index) from the playlist
            public void DelFile(int selectNum) {
                for (int i = selectNum; i <= numOfMusic - 1; i++) {
                    fileList[i] = fileList[i + 1];
                }
                numOfMusic -= 1;
            }

            // Play the selected media file
            public void Play(int selectNum) {
                mediaPlayer.URL = fileList[selectNum];
                this.Text = "Now playing -- " + lstFileList.SelectedItem.ToString();
            }

            // Enable some buttons
            public void OpenBtn() {
                btnPlay.Enabled = true;
                btnBack.Enabled = true;
                btnForward.Enabled = true;
            }

            // Disable some buttons
            public void CloseBtn() {
                btnPlay.Enabled = false;
                btnBack.Enabled = false;
                btnForward.Enabled = false;
                btnStop.Enabled = false;
                btnReplay.Enabled = false;
                btnDelete.Enabled = false;
            }

            private void Form1_Load(object sender, EventArgs e) {
                lstFileList.Items.CopyTo(fileList, 0); // copy the list-box items into fileList
                numOfMusic = 0;                        // the playlist starts empty
                CloseBtn();
            }

            private void btnAddFile_Click(object sender, EventArgs e) {
                odlgMedia.FileName = "";             // default file name
                odlgMedia.InitialDirectory = "C:\\"; // default directory
                odlgMedia.Filter = "MP3 files|*.mp3|All files|*.*"; // file types
                if (odlgMedia.ShowDialog() == DialogResult.OK) {
                    FileInfo f = new FileInfo(odlgMedia.FileName);
                    AddFile(f.FullName);
                    // Same list-box format as in AddFiles (file-name part assumed, see above)
                    string strFile = Convert.ToString(numOfMusic).PadRight(5) + f.Name;
                    lstFileList.Items.Add(strFile);
                    if (lstFileList.Items.Count > 0) {
                        OpenBtn();
                    }
                }
            }

            private void btnAddFiles_Click(object sender, EventArgs e) {
                fbdlgMedia.SelectedPath = "C:\\";
                fbdlgMedia.Description = "Select a media folder:";
                fbdlgMedia.ShowNewFolderButton = false;
                if (fbdlgMedia.ShowDialog() == DialogResult.OK) {
                    AddFiles(fbdlgMedia.SelectedPath, lstFileList);
                    if (lstFileList.Items.Count > 0) {
                        OpenBtn();
                    }
                }
            }

            private void btnDelete_Click(object sender, EventArgs e) {
                int i = lstFileList.SelectedIndex;
                if (i >= 0) {
                    if ((selectOne == i + 1) && (mediaPlayer.URL != "")) {
                        MessageBox.Show("Cannot delete the file that is playing", "Error");
                    } else {
                        DelFile(i + 1);
                        lstFileList.Items.RemoveAt(i);
                        if (i < lstFileList.Items.Count) {
                            lstFileList.SelectedIndex = i;
                        } else if (lstFileList.Items.Count == 0) {
                            CloseBtn();
                        } else {
                            lstFileList.SelectedIndex = 0;
                        }
                    }
                }
            }

            private void btnPlay_Click(object sender, EventArgs e) {
                if (lstFileList.SelectedIndex < 0) {
                    selectOne = 1;
                    lstFileList.SelectedIndex = 0;
                } else {
                    selectOne = lstFileList.SelectedIndex + 1;
                }
                Play(selectOne);
                tmrMedia.Enabled = true;
                btnStop.Enabled = true;
                btnReplay.Enabled = true;
            }

            private void btnBack_Click(object sender, EventArgs e) {
                if (lstFileList.SelectedIndex > 0) {
                    lstFileList.SelectedIndex -= 1;
                } else if (lstFileList.SelectedIndex == 0) {
                    lstFileList.SelectedIndex = lstFileList.Items.Count - 1; // wrap to the last file
                } else {
                    lstFileList.SelectedIndex = numOfMusic - 1;
                }
                selectOne = lstFileList.SelectedIndex + 1;
                Play(selectOne);
                btnStop.Enabled = true;
                btnReplay.Enabled = true;
            }

            private void btnForward_Click(object sender, EventArgs e) {
                if (lstFileList.SelectedIndex < lstFileList.Items.Count - 1) {
                    lstFileList.SelectedIndex = lstFileList.SelectedIndex + 1;
                } else if (lstFileList.SelectedIndex > 0) {
                    lstFileList.SelectedIndex = 0; // wrap to the first file
                }
                selectOne = lstFileList.SelectedIndex + 1;
                Play(selectOne);
                btnStop.Enabled = true;
                btnReplay.Enabled = true;
            }

            private void btnReplay_Click(object sender, EventArgs e) {
                if (playOne) {
                    playOne = false;
                    btnReplay.FlatStyle = FlatStyle.Standard; // 3-D button appearance
                    btnReplay.Text = "Repeat one";
                } else {
                    playOne = true;
                    btnReplay.FlatStyle = FlatStyle.Popup;    // flat button appearance
                    btnReplay.Text = "Cancel repeat";
                }
                lstFileList.SelectedIndex = selectOne - 1;
            }

            private void btnStop_Click(object sender, EventArgs e) {
                mediaPlayer.URL = "";
                this.Text = "Media Player";
                tmrMedia.Enabled = false;
                btnReplay.Enabled = false;
                lstFileList.SelectedIndex = selectOne - 1;
            }

            private void odlgMedia_FileOk(object sender, CancelEventArgs e) {
            }

            private void lstFileList_SelectedIndexChanged(object sender, EventArgs e) {
                // Play the file selected in the playlist. Note: this handler runs on every
                // selection change (including changes made in code), not only on double-click.
                btnPlay_Click(sender, e);
                playOne = false;
                btnReplay.Text = "Repeat one";
            }

            private void tmrMedia_Tick(object sender, EventArgs e) {
                // Use the Timer to keep playing through the list
                if (mediaPlayer.playState == WMPLib.WMPPlayState.wmppsStopped) {
                    if (playOne == false) {
                        if (selectOne < lstFileList.Items.Count) {
                            selectOne += 1;
                        } else if (selectOne == lstFileList.Items.Count) {
                            // All files in the list have been played: start again from the top.
                            // ... (the excerpt ends here)
                        }
                    }
                }
            }
        }
    }

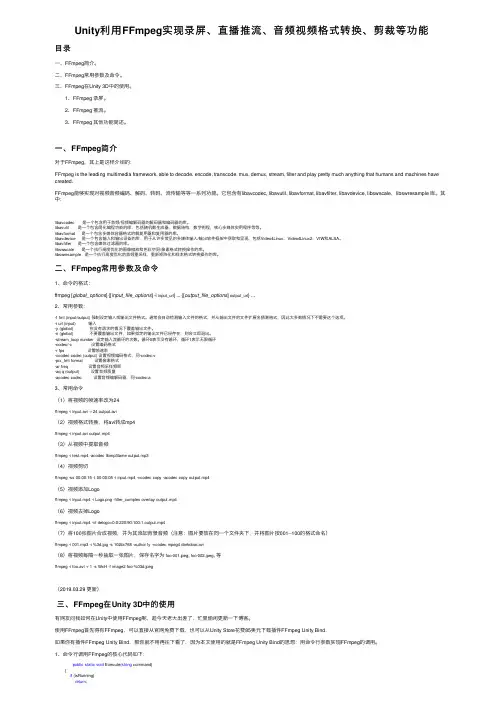

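Using FFmpeg in Unity for screen recording, live-stream pushing, audio/video format conversion, trimming and more

Contents

- I. Introduction to FFmpeg
- II. Common FFmpeg parameters and commands
- III. Using FFmpeg in Unity 3D
  - 1. Screen recording with FFmpeg
  - 2. Stream pushing with FFmpeg
  - 3. A brief look at other FFmpeg features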

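I. Introduction to FFmpeg

FFmpeg's official website describes it as follows: "FFmpeg is the leading multimedia framework, able to decode, encode, transcode, mux, demux, stream, filter and play pretty much anything that humans and machines have created." In other words, FFmpeg can encode, decode, transcode and stream video and audio, along with a whole range of related functions.

It contains the libraries libavcodec, libavutil, libavformat, libavfilter, libavdevice, libswscale and libswresample.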

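Among them:

- libavcodec is a library containing decoders and encoders for audio/video codecs.
- libavutil is a library of functions that simplify programming, including random number generators, data structures, math routines, core multimedia utilities and more.
- libavformat is a library containing demuxers and muxers for multimedia container formats.
- libavdevice is a library of input and output devices for grabbing from and rendering to many common multimedia input/output software frameworks, including Video4Linux, Video4Linux2, VfW and ALSA.
- libavfilter is a library of media filters.
- libswscale is a library that performs highly optimized image scaling and color space/pixel format conversion.
- libswresample is a library that performs highly optimized audio resampling, rematrixing and sample format conversion.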

II. Common FFmpeg parameters and commands

1. Command format:

    ffmpeg [global_options] {[input_file_options] -i input_url} ... {[output_file_options] output_url} ...

2. Common parameters:

-f fmt (input/output): force the input or output file format.

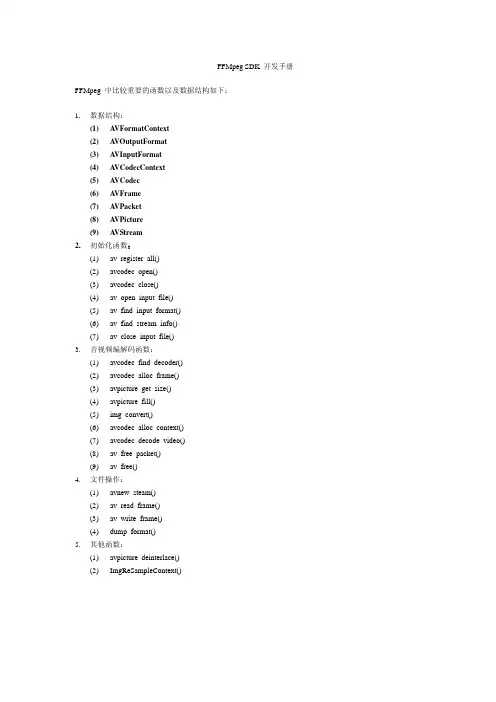

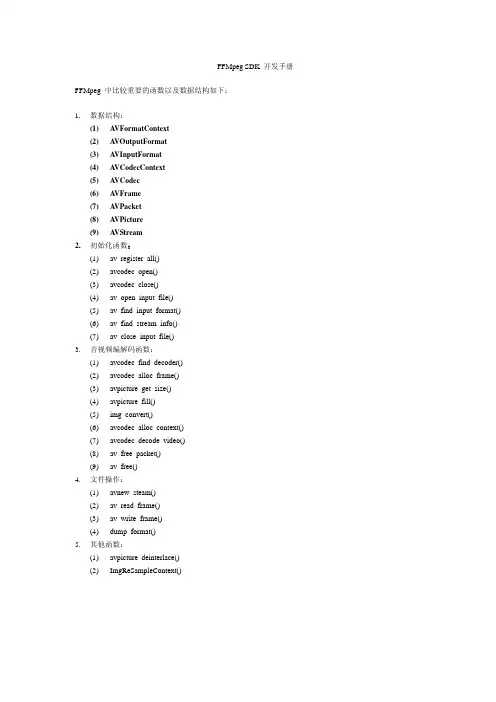

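FFMpeg SDK development manual

The more important functions and data structures in FFMpeg are listed below (these are APIs from early FFmpeg releases; most have since been renamed, deprecated or removed):

1. Data structures: AVFormatContext, AVOutputFormat, AVInputFormat, AVCodecContext, AVCodec, AVFrame, AVPacket, AVPicture, AVStream
2. Initialization functions: av_register_all(), avcodec_open(), avcodec_close(), av_open_input_file(), av_find_input_format(), av_find_stream_info(), av_close_input_file()
3. Audio/video encoding and decoding functions: avcodec_find_decoder(), avcodec_alloc_frame(), avpicture_get_size(), avpicture_fill(), img_convert(), avcodec_alloc_context(), avcodec_decode_video(), av_free_packet(), av_free()
4. File operations: av_new_stream(), av_read_frame(), av_write_frame(), dump_format()
5. Other functions: avpicture_deinterlace(), ImgReSampleContext()

The analysis below follows the order in which these data structures and functions appear in ffmpeg's test program output_example.c.

Before that, let us first discuss compiling ffmpeg.

Compiling on Linux is fairly simple, so it is not covered here.

For compiling on Windows, refer to the following page: /viewthread.php?tid=1897&extra=page%3D1. It is worth noting that when testing the compiled SDK (using output_example.c from the ffmpeg directory), you may run into the following two problems during compilation:

1. output_example.c uses the header file snprintf.h.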


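FFMPEG: compiling, usage and common problems

- I. Installing and testing FFMPEG on Linux
- II. Problems encountered when compiling FFMPEG and their solutions
- III. Introduction to FFMpeg and its command options
- IV. Compiling FFMPEG with x264

I. Installing and testing FFMPEG on Linux

a. First install the MP3 package for Linux, lame-3.97.tar.gz:

    tar -xvzf lame-3.97.tar.gz
    cd lame-3.97
    ./configure --enable-shared --prefix=/usr/
    make
    make install

b. Ogg Vorbis support: AS4 ships the corresponding RPM packages; install libvorbis, libvorbis-devel, libogg and libogg-devel (AS4 usually has them installed already).

c. Support for xvid and x264, currently the two most popular high-quality compression formats. Building and installing xvid:

    wget /downloads/xvidcore-1.1.0.tar.gz
    tar zvxf xvidcore-1.1.0.tar.gz
    cd xvidcore-1.1.0/build/generic
    ./configure --prefix=/usr --enable-shared
    make
    make install

Get x264 with git:

    git clone git:///x264.git
    cd x264
    ./configure --prefix=/usr --enable-shared
    make
    make install

d. AC3 and DTS support: AS4 appears to support AC3 already; just add the --enable-a52 --enable-gpl options when compiling. Building libdts:

    tar zxvf libdts-0.0.2.tar.gz
    cd libdts-0.0.2
    ./configure --prefix=/usr
    make
    make install

e. MPEG-4 and AAC support: since the server also serves mobile users, we need to support formats such as AAC and MPEG-4 ringtones as well.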

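I saw that someone posted "Embedded Linux Framebuffer Screenshot". A while ago I did some similar work, except that what I captured were frames from video files.

I published the article on my blog; now that I see someone has done similar work, I'll join in.

The article is a bit messy, and with limited energy I won't revise it.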

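2010/4/10. Speaking of A Certain Scientific Railgun: judging from my long career as an anime fan, it is not really a top-tier work.

But its OP (opening) is truly stirring and absolutely superb.

Amid the driving music of the opening, a classic black-and-white scene appears first, scanning Railgun-sis (Misaka Mikoto) from feet to head; it then freezes on her hand crackling with electric current, and the picture fades into color.

Those few seconds thrilled me beyond words.

After that, I was torn.

I loved those frames so much that I wanted to use them as my desktop wallpaper, screensaver and phone wallpaper.

But I could never find these images online, or the quality was too poor.

After agonizing for several months, a couple of days ago I suddenly remembered that I had analyzed ffmpeg's source code in detail before, so why not write a program myself to grab frames from the stream? So I got to work right away.

Desire is the real driving force of creation; my love for Railgun-sis filled me with enthusiasm.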

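Someone asked me: why not just press PrintScreen during playback? But can PrintScreen capture consecutive frames over a time range?

Development environment: Debian/Etch

Preparation:

1. svn checkout svn://svn.mplayerhq.hu/ffmpeg/trunk ffmpeg (download the latest version of ffmpeg)
2. cd ffmpeg
3. ./configure --enable-gpl --enable-shared --prefix=/usr (build shared libraries; to avoid editing .bash_profile, use /usr as the prefix instead of the default /usr/local)
4. make (compile; if you want ffplay, first run sudo apt-get install libsdl-dev, because ffplay.c uses the SDL library)
5. sudo make install (installs the libraries and header files; note that the headers are spread over several directories rather than all placed under /usr/include/ffmpeg as in earlier versions)

Note: before doing this preparation, it is best to remove all previously installed ffmpeg libraries and header files completely.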

    '***********************************************
    'Function name: SelPlay
    'Purpose:       play a video in a web page
    'Parameters:    strUrl ---- video URL
    '               strWidth -- display width of the video
    '               strHeight - display height of the video
    '***********************************************
    Sub SelPlay(strUrl, strWidth, strHeight)
        Dim Exts, isExt
        If strUrl <> "" Then
            isExt = LCase(Mid(strUrl, InStrRev(strUrl, ".") + 1))
        Else
            isExt = ""
        End If
        Exts = "avi,wmv,asf,mov,rm,ra,ram"
        ' Compare whole comma-separated tokens; a plain InStr(Exts, isExt) would also
        ' accept partial matches such as "vi", and an empty extension
        If isExt = "" Or InStr("," & Exts & ",", "," & isExt & ",") = 0 Then
            Response.Write "Invalid video file"
        Else
            Select Case isExt
            Case "avi", "wmv", "asf", "mov"
                Response.Write "<EMBED id=MediaPlayer src=" & strUrl & " width=" & strWidth & " height=" & strHeight & " loop=""false"" autostart=""true""></EMBED>"
            Case "rm", "ra", "ram" ' "mov" was also listed here, but the first Case already handles it
                Response.Write "<OBJECT height=" & strHeight & " width=" & strWidth & " classid=clsid:CFCDAA03-8BE4-11cf-B84B-0020AFBBCCFA>"
                Response.Write "<PARAM NAME=""_ExtentX"" VALUE=""12700"">"
                Response.Write "<PARAM NAME=""_ExtentY"" VALUE=""9525"">"
                Response.Write "<PARAM NAME=""AUTOSTART"" VALUE=""-1"">"
                Response.Write "<PARAM NAME=""SHUFFLE"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""PREFETCH"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""NOLABELS"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""SRC"" VALUE=""" & strUrl & """>"
                Response.Write "<PARAM NAME=""CONTROLS"" VALUE=""ImageWindow"">"
                Response.Write "<PARAM NAME=""CONSOLE"" VALUE=""Clip"">"
                Response.Write "<PARAM NAME=""LOOP"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""NUMLOOP"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""CENTER"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""MAINTAINASPECT"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""BACKGROUNDCOLOR"" VALUE=""#000000"">"
                Response.Write "</OBJECT>"
                Response.Write "<BR>"
                Response.Write "<OBJECT height=32 width=" & strWidth & " classid=clsid:CFCDAA03-8BE4-11cf-B84B-0020AFBBCCFA>"
                Response.Write "<PARAM NAME=""_ExtentX"" VALUE=""12700"">"
                Response.Write "<PARAM NAME=""_ExtentY"" VALUE=""847"">"
                Response.Write "<PARAM NAME=""AUTOSTART"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""SHUFFLE"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""PREFETCH"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""NOLABELS"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""CONTROLS"" VALUE=""ControlPanel,StatusBar"">"
                Response.Write "<PARAM NAME=""CONSOLE"" VALUE=""Clip"">"
                Response.Write "<PARAM NAME=""LOOP"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""NUMLOOP"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""CENTER"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""MAINTAINASPECT"" VALUE=""0"">"
                Response.Write "<PARAM NAME=""BACKGROUNDCOLOR"" VALUE=""#000000"">"
                Response.Write "</OBJECT>"
            End Select
        End If
    End Sub

Calling code:

    Call SelPlay(DvUrl, 280, 220)
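An extension check of the form InStr(Exts, isExt) = 0 treats the whole extension list as one string, so any substring passes: "vi" or "m" would be accepted, and because InStr returns 1 for an empty search string, an empty extension passes too. Wrapping both the list and the candidate in delimiters makes the test match whole tokens only. A C sketch of the idea (ext_allowed is a hypothetical name; it is case-sensitive, so lowercase the extension first, as SelPlay does with LCase):

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Return 1 if ext is exactly one of the comma-separated tokens in list, else 0. */
int ext_allowed(const char *list, const char *ext)
{
    char padded_list[256], needle[64];
    assert(list != NULL && ext != NULL);
    if (ext[0] == '\0')
        return 0;
    /* Wrap both strings in commas so that "vi" cannot match inside "avi". */
    if (snprintf(padded_list, sizeof padded_list, ",%s,", list) >= (int)sizeof padded_list)
        return 0; /* list too long for this sketch's fixed buffer */
    if (snprintf(needle, sizeof needle, ",%s,", ext) >= (int)sizeof needle)
        return 0;
    return strstr(padded_list, needle) != NULL;
}
```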

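VLC source code analysis

I. Introduction to VLC

VLC's full name is VideoLAN Client; it is an open-source, cross-platform video player.

VLC supports a large number of audio/video transport protocols, container formats and codecs. The complete feature list is available at /vlc/features.html; a brief, incomplete list follows:

- Operating systems: Windows, WinCE, Linux, Mac OS X, BeOS, BSD
- Access methods: file, DVD/VCD/CD, HTTP, FTP, MMS, TCP, UDP, RTP, IP multicast, IPv6, RTSP
- Formats: MPEG*, DivX, WMV, MOV, 3GP, FLV, H.263, H.264, FLAC
- Subtitles: DVD, DVB, Text, VobSub
- Video output: DirectX, X11, XVideo, SDL, FrameBuffer, ASCII
- Control interfaces: wxWidgets, Qt4, Web, Telnet, command line
- Browser plugins: ActiveX, Mozilla (Firefox)

To make things a little clearer, we can turn it around and list the common formats VLC does not support.

First is RealVideo (the Real audio part is supported), because Real's video decoder has copyright issues.

In fact VLC 0.9.0 already added RealVideo support, but it requires an additional decoder (similar to MPlayer).

In addition, VLC does not support AMR, the audio format used in 3GP.

VLC started as a project by a few French university students; they later turned it into an open-source project, which attracted many excellent programmers from around the world to write and maintain it together, and it gradually became what it is today.

As for why it is called VideoLAN Client: there used to be a separate VideoLAN Server project (VLS for short), and its functionality has since been merged into VLC. VLC is therefore not only a video player; it can also act as a small video server, and it can even transcode while playing and send the video stream out over the network.

VLC's most outstanding feature is network stream playback; for example, for playing and relaying MPEG-2 UDP TS streams it is almost irreplaceable.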

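Building an FFmpeg development environment

This article is the author's original work; please credit the source when reposting.

1. Overview of the relevant resources

This article describes how to build and install ffmpeg on Linux for the x86 (and x86-64) architecture.

Other embedded platforms require cross-compilation; the process is similar and is not described in detail.

The steps were verified on two platforms, openSUSE and Ubuntu.

Use the lsb_release -a command to check the distribution version. openSUSE version: openSUSE Leap 15.1.

Ubuntu version: Ubuntu 16.04.5 LTS.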

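1.1 ffmpeg

ffmpeg official website:

1.2 SDL

SDL (Simple DirectMedia Layer) is an open-source, cross-platform multimedia development library.

SDL provides functions for controlling graphics, sound and input/output; it wraps complex low-level audio/video operations and makes audio/video processing easier.

SDL is currently used mostly for games, emulators, media players and other multimedia applications.

SDL official website:

1.3 yasm/nasm

Older versions of ffmpeg and x264/x265 use the yasm assembler. Yasm is an assembler and disassembler for the Intel x86 architecture.

Yasm is a complete rewrite of the Netwide Assembler (NASM).

Yasm can usually be used interchangeably with NASM and supports both the x86 and x86-64 architectures.

It is licensed under the revised BSD license.

Here yasm is used to assemble some of the x86 assembly code in ffmpeg.

Note that yasm is an x86 assembler and does not need to be cross-compiled.

For ARM and other platforms, the cross-compilation toolchain includes the corresponding assembler, so cross-compiling ffmpeg requires the --disable-yasm option.

Yasm official website:

Newer versions of ffmpeg and x264/x265 use the nasm assembler instead. The Netwide Assembler (NASM) is an assembler and disassembler for the Intel x86 architecture.

NASM is considered one of the most popular assemblers on Linux.

Note that NASM is an x86 assembler and does not need to be cross-compiled.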

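Installing FFmpeg on CentOS 7.5

I. Introduction to FFmpeg

FFmpeg is free software that can record, convert and stream audio and video in many formats. It includes libavcodec, an audio and video codec library used by many projects, and libavformat, an audio and video container format conversion library. The "FF" in "FFmpeg" stands for "Fast Forward".

Some newcomers wrote to the FFmpeg project leader asking whether FF stood for "Fast Free" or "Fast Fourier", and the project leader replied: "Just for the record, the original meaning of "FF" in FFmpeg is "Fast Forward"..." FFmpeg is developed on Linux, but it can also be compiled and run on other operating systems, including Windows and Mac OS X.

The project was started by Fabrice Bellard and is now maintained by Michael Niedermayer.

Many FFmpeg developers are also members of the MPlayer project; within the MPlayer project, FFmpeg was developed as the server version.

Components

The project consists of several components:

- ffmpeg: a command-line tool for converting video file formats; it also supports real-time encoding from TV capture cards
- ffserver: an HTTP multimedia real-time broadcast streaming server that supports time shifting
- ffplay: a simple player based on SDL and the FFmpeg libraries
- libavcodec: contains all of FFmpeg's audio/video codecs
- libavformat: contains the demuxers and muxers
- libavutil: contains utility functions
- libpostproc: a library for video post-processing
- libswscale: a library for image scaling

Parameters

FFmpeg accepts a great many parameters, and they differ between ffmpeg versions; before using them, consult the documentation for the parameters and codecs.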

ffmpeg study 6: source analysis of avcodec_open2 (using an mp4 file as the example)

The source of avformat_open_input, although fairly complex, revolves mainly around creating and initializing data structures: avformat_open_input creates and initializes AVFormatContext, AVClass, AVOption, URLContext, URLProtocol, AVInputFormat, AVStream and other structures, which are related as follows (the arrows denote containment, not inheritance):

Since avcodec_open2 is also one of the "open" functions, we can guess that its job is likewise to build and initialize a set of data structures. Is that really the case? avcodec_open2 is declared in libavcodec/avcodec.h:

    /**
     * Initialize the AVCodecContext to use the given AVCodec. Prior to using this
     * function the context has to be allocated with avcodec_alloc_context3().
     *
     * The functions avcodec_find_decoder_by_name(), avcodec_find_encoder_by_name(),
     * avcodec_find_decoder() and avcodec_find_encoder() provide an easy way for
     * retrieving a codec.
     *
     * @warning This function is not thread safe!
     *
     * @note Always call this function before using decoding routines (such as
     * @ref avcodec_receive_frame()).
     *
     * @code
     * avcodec_register_all();
     * av_dict_set(&opts, "b", "2.5M", 0);
     * codec = avcodec_find_decoder(AV_CODEC_ID_H264);
     * if (!codec)
     *     exit(1);
     *
     * context = avcodec_alloc_context3(codec);
     *
     * if (avcodec_open2(context, codec, opts) < 0)
     *     exit(1);
     * @endcode
     *
     * @param avctx The context to initialize.
     * @param codec The codec to open this context for. If a non-NULL codec has been
     *              previously passed to avcodec_alloc_context3() or
     *              for this context, then this parameter MUST be either NULL or
     *              equal to the previously passed codec.
     * @param options A dictionary filled with AVCodecContext and codec-private options.
     *                On return this object will be filled with options that were not found.
     *
     * @return zero on success, a negative value on error
     * @see avcodec_alloc_context3(), avcodec_find_decoder(), avcodec_find_encoder(),
     *      av_dict_set(), av_opt_find().
     */
    int avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options);

Note that the @code example passes opts, while the prototype takes an AVDictionary **; if opts is an AVDictionary *, as the av_dict_set(&opts, ...) call implies, the argument should be &opts.

From its comment we can learn the following:

1. It initializes the given AVCodecContext to use the given AVCodec; the context itself must already have been allocated with avcodec_alloc_context3().