MySQL + LVS + Keepalived: replication and load-balancing configuration


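Building a high-availability cluster with LVS + Keepalived

Contents
I. Basic introduction
II. Setting up LVS-NAT mode
III. Setting up LVS-DR mode
IV. An alternative, script-based way to set up LVS-DR mode
V. Keepalived + LVS (DR mode) high availability
VI. The Keepalived configuration file in detail

I. Basic introduction

(1) Clusters fall into three classes by business goal:
- High Availability (HA)
- Load Balancing (LB)
- High Performance (HPC)

(2) Products that implement each class:
- HA: rhcs, heartbeat, keepalived
- LB: haproxy, lvs, nginx, f5, piranha
- HPC: /index/downfile/infor_id/42

(3) LVS load balancing has three modes:
- LVS-DR (direct routing): inbound traffic must pass through the director; replies leave directly from the real server.
- LVS-NAT (network address translation): both inbound and outbound traffic must pass through the director.
- LVS-TUN (IP tunneling): the real servers can be located anywhere in the country.

II. Setting up LVS-NAT mode

1. Server IP plan: add a second NIC, eth1, to the director (called the "DR server" below). One NIC carries the DIP and the other carries the VIP.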

Set the DIP and VIP addresses. The director's eth1 (DIP) is on the same subnet as all RIPs; the client (CIP) is on the same subnet as the director's eth0 (VIP).

    VIP   eth0  192.168.50.200
    DIP   eth1  192.168.58.4
    CIP         192.168.50.3      (client)
    RIP1        192.168.58.2      (real server 1)
    RIP2        192.168.58.3      (real server 2)

2. RealServer1 configuration:

    mount /dev/xvdd /media/
    vi /var/www/html/index.html        # write: this is realserver1

Start httpd, then edit the interface file to set the RIP. The gateway must be set to the DIP:

    vi /etc/sysconfig/network-scripts/ifcfg-eth0
    IPADDR=192.168.58.2
    NETMASK=255.255.255.0
    GATEWAY=192.168.58.4

3. RealServer2 configuration:

    vi /var/www/html/index.html        # write: this is realserver2

Start httpd, then set the RIP, again with the DIP as the gateway:

    vi /etc/sysconfig/network-scripts/ifcfg-eth0
    IPADDR=192.168.58.3
    NETMASK=255.255.255.0
    GATEWAY=192.168.58.4

4. On the director, enable IP packet forwarding. This step is essential:

    vi /etc/sysctl.conf
    net.ipv4.ip_forward = 1            # change 0 to 1

Apply the setting and check that it took effect:

    sysctl -p

5. Install the LVS management tool, ipvsadm:

    yum -y install ipvsadm
    lsmod | grep ip_vs

Turbolinux does not ship an rpm package, so build from source there. Without the symlink, compilation fails:

    # ln -s /usr/src/kernels/2.6.18-164.el5-x86_64/ /usr/src/linux
    # tar zxvf ipvsadm-1.24.tar.gz
    # cd ipvsadm-1.24
    # make && make install

Running ipvsadm once loads the ip_vs module into the kernel; lsmod | grep ip then shows it.

6. Configure the director and add the virtual service:

    ipvsadm -L -n                                              # show the current table
    ipvsadm -A -t 192.168.50.200:80 -s rr                      # add the virtual service; rr is the scheduling algorithm
    ipvsadm -a -t 192.168.50.200:80 -r 192.168.58.2 -m -w 1    # -m selects NAT mode, -w sets the weight
    ipvsadm -a -t 192.168.50.200:80 -r 192.168.58.3 -m -w 2
    ipvsadm -L -n                                              # the real servers are now listed
    service ipvsadm save                                       # save the configuration
    iptables -L                                                # list the firewall rules; stop the firewall or flush them (iptables -F)
    watch -n 1 'ipvsadm -L -n'                                 # watch the connection counters

The output looks like this:

      -> RemoteAddress:Port    Forward Weight ActiveConn InActConn
    TCP  192.168.50.200:80 rr
      -> 192.168.58.2:80       Masq    1      0          13
      -> 192.168.58.3:80       Masq    2      0          12

ActiveConn is the number of active connections, that is, TCP connections in the ESTABLISHED state. InActConn counts TCP connections in every other state.

7. Test: http://192.168.50.200

To change the scheduling algorithm afterwards:

    ipvsadm -E -t 192.168.50.200:80 -s wlc

To change the weights of the real servers:

    ipvsadm -e -t 192.168.50.200:80 -r 192.168.58.2 -m -w 1
    ipvsadm -e -t 192.168.50.200:80 -r 192.168.58.3 -m -w 5

III. Setting up LVS-DR mode

lo:1 is used to reply to clients; the IP on lo:1 belongs to the host rather than to a particular NIC.
arp_announce sets the restriction level for announcing the local source IP address in ARP requests sent on an interface.
arp_ignore sets the mode for replying to ARP requests whose target is a local IP address.

(1) IP plan for the three servers:

    Director  eth0:1  192.168.58.200/32  (VIP)
              eth0    192.168.58.3/24    (DIP)
    RIP1      lo:1    192.168.58.200/32  (VIP)
              eth0    192.168.58.4/24
    RIP2      lo:1    192.168.58.200/32  (VIP)
              eth0    192.168.58.5/24
    ...
    RIP n     lo:1    192.168.58.200/32  (VIP)
              eth0    192.168.58.N/24

(2) Apply the following four steps on every real server:

1. Configure the real server's IP address, httpd, and web page.

2. Stop the real server from answering or announcing ARP for the VIP:

    echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
    echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

3. Configure the VIP:

    ifconfig lo:1 192.168.58.200 netmask 255.255.255.255 broadcast 192.168.58.200 up

4. Add the host route:

    route add -host 192.168.58.200 dev lo:1

(3) Configuration on the director (in DR mode the director does not need IP forwarding enabled):

1. Add the VIP as a sub-interface of eth0:

    ifconfig eth0:1 192.168.58.200 broadcast 192.168.58.200 netmask 255.255.255.255 up

2. Add the host route:

    route add -host 192.168.58.200 dev eth0:1
    route -n

3. Install ipvsadm (see the method above):

    yum -y install ipvsadm
    lsmod | grep ip_vs

4. Configure the LVS cluster:

    ipvsadm -A -t 192.168.58.200:80 -s rr                      # add the virtual service; rr is the scheduling algorithm
    ipvsadm -a -t 192.168.58.200:80 -r 192.168.58.4 -g -w 1    # -g selects DR mode, -w sets the weight
    ipvsadm -a -t 192.168.58.200:80 -r 192.168.58.5 -g -w 2
    service ipvsadm save
    ipvsadm -L -n                                              # show the table

(4) Test: http://192.168.58.200

IV. An alternative, script-based way to set up LVS-DR mode

IP plan:

    DIP   eth0  192.168.58.139
    VIP         192.168.58.200
    RIP1        192.168.58.2
    RIP2        192.168.58.3

1. Install ipvsadm on the director:

    # yum -y install ipvsadm
    # lsmod | grep ip_vs        # no output means the module is not loaded
    # modprobe ip_vs            # load the module

2. Configure the director and LVS. A script keeps this convenient:

    vim /etc/init.d/lvsdr

    #!/bin/bash
    # lvs of DR
    VIP=192.168.58.200
    RIP1=192.168.58.2
    RIP2=192.168.58.3
    case "$1" in
    start)
        echo "start lvs of DR"
        /sbin/ifconfig eth0:0 $VIP broadcast $VIP netmask 255.255.255.0 up
        echo "1" > /proc/sys/net/ipv4/ip_forward
        /sbin/iptables -F
        /sbin/ipvsadm -A -t $VIP:80 -s rr
        /sbin/ipvsadm -a -t $VIP:80 -r $RIP1:80 -g
        /sbin/ipvsadm -a -t $VIP:80 -r $RIP2:80 -g
        /sbin/ipvsadm
        ;;
    stop)
        echo "stop lvs of DR"
        echo "0" > /proc/sys/net/ipv4/ip_forward
        /sbin/ipvsadm -C
        /sbin/ifconfig eth0:0 down
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0

Make the script executable and start it:

    # chmod +x /etc/init.d/lvsdr
    # service lvsdr start

3. Configure both real servers; this too can be written as a script. Start the httpd service on both real servers.
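The NAT and DR walkthroughs above differ mainly in the forwarding flag passed to ipvsadm (-m for NAT, -g for DR, -i for tunneling). As a rough sketch, the helper below only prints the commands for one virtual service so they can be reviewed before being run as root. The function name lvs_commands and its argument layout are illustrative assumptions, not part of the original document.

```shell
#!/bin/bash
# Hypothetical helper: print (do not execute) the ipvsadm commands for one
# virtual service. Usage: lvs_commands MODE VIP:PORT SCHEDULER RIP[:WEIGHT]...
lvs_commands() {
    local mode=$1 vip=$2 sched=$3 flag rs rip weight
    shift 3
    case "$mode" in
        nat) flag=-m ;;   # masquerading, LVS-NAT
        dr)  flag=-g ;;   # gatewaying, LVS-DR
        tun) flag=-i ;;   # IP-IP encapsulation, LVS-TUN
        *)   echo "unknown mode: $mode" >&2; return 1 ;;
    esac
    echo "ipvsadm -A -t $vip -s $sched"
    for rs in "$@"; do
        rip=${rs%%:*}            # part before the first colon
        weight=1                 # default weight when none is given
        if [ "$rs" != "$rip" ]; then
            weight=${rs#*:}      # part after the first colon
        fi
        echo "ipvsadm -a -t $vip -r $rip $flag -w $weight"
    done
}

# DR example using the VIP and RIPs from the IP plan in section III.
lvs_commands dr 192.168.58.200:80 rr 192.168.58.4:1 192.168.58.5:2
```

Piping the output to a shell on the director would apply it, but printing first makes it easy to check the flag against the mode.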

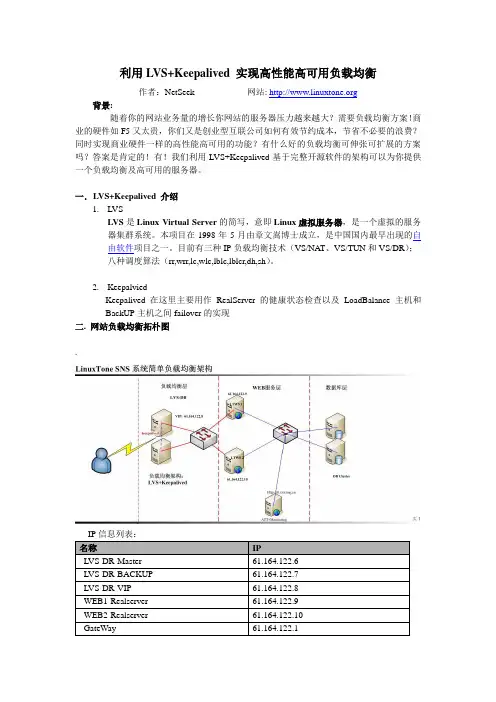

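High-performance, highly available load balancing with LVS + Keepalived

Author: NetSeek    Website:

Background: as your site's traffic grows, the load on its servers grows with it, and you need a load-balancing solution. Commercial hardware such as F5 is expensive, and a start-up internet company has to control costs and avoid unnecessary spending while still getting the performance and availability of commercial hardware. Is there a good load-balancing solution that scales? There is. An architecture built entirely on open-source software, LVS + Keepalived, gives you a load-balanced and highly available server front end.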

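I. Introduction to LVS + Keepalived

1. LVS

LVS stands for Linux Virtual Server. It is a virtual server cluster system.

The project was founded by Dr. Wensong Zhang in May 1998 and is one of the earliest free software projects in China.

It currently offers three IP load-balancing techniques (VS/NAT, VS/TUN, and VS/DR) and eight scheduling algorithms (rr, wrr, lc, wlc, lblc, lblcr, dh, sh).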
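The eight scheduler abbreviations are the values accepted by ipvsadm -s and by lb_algo in keepalived.conf. The lookup below is a small sketch that spells them out and rejects anything else; the function name lvs_scheduler_name is an illustrative assumption.

```shell
#!/bin/bash
# Hypothetical helper: expand an LVS scheduler abbreviation to its full name,
# returning non-zero for names outside the eight listed above.
lvs_scheduler_name() {
    case "$1" in
        rr)    echo "round robin" ;;
        wrr)   echo "weighted round robin" ;;
        lc)    echo "least connection" ;;
        wlc)   echo "weighted least connection" ;;
        lblc)  echo "locality-based least connection" ;;
        lblcr) echo "locality-based least connection with replication" ;;
        dh)    echo "destination hashing" ;;
        sh)    echo "source hashing" ;;
        *)     echo "unknown scheduler: $1" >&2; return 1 ;;
    esac
}

# Print the whole table.
for s in rr wrr lc wlc lblc lblcr dh sh; do
    printf '%-6s %s\n' "$s" "$(lvs_scheduler_name "$s")"
done
```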

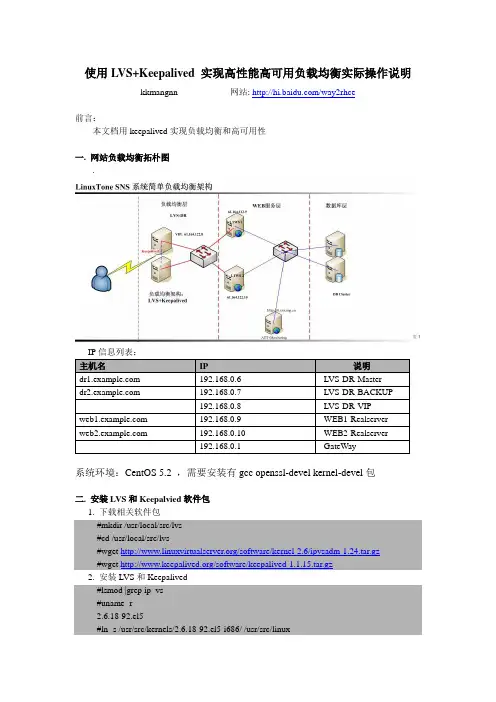

2. Keepalived

Here Keepalived is used mainly to health-check the real servers and to implement failover between the master and backup load balancers.

II. Site load-balancing topology

IP list:

    Name               IP
    LVS-DR-Master      61.164.122.6
    LVS-DR-BACKUP      61.164.122.7
    LVS-DR-VIP         61.164.122.8
    WEB1-Realserver    61.164.122.9
    WEB2-Realserver    61.164.122.10
    GateWay            61.164.122.1

III. Installing the LVS and Keepalived packages

1. Download the packages:

    # mkdir /usr/local/src/lvs
    # cd /usr/local/src/lvs
    # wget /software/kernel-2.6/ipvsadm-1.24.tar.gz
    # wget /software/keepalived-1.1.15.tar.gz

2. Install LVS and Keepalived:

    # lsmod | grep ip_vs
    # uname -r
    2.6.18-53.el5PAE
    # ln -s /usr/src/kernels/2.6.18-53.el5PAE-i686/ /usr/src/linux
    # tar zxvf ipvsadm-1.24.tar.gz
    # cd ipvsadm-1.24
    # make && make install
    # find / -name ipvsadm                 # locate ipvsadm
    # tar zxvf keepalived-1.1.15.tar.gz
    # cd keepalived-1.1.15
    # ./configure && make && make install
    # find / -name keepalived              # locate keepalived
    # cp /usr/local/etc/rc.d/init.d/keepalived /etc/rc.d/init.d/
    # cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/
    # mkdir /etc/keepalived
    # cp /usr/local/etc/keepalived/keepalived.conf /etc/keepalived/
    # cp /usr/local/sbin/keepalived /usr/sbin/
    # service keepalived start|stop        # now managed as a system service

IV. Configuring LVS for load balancing

1. LVS-DR: a script that sets up LVS on the director.

    # vi /usr/local/sbin/lvs-dr.sh

    #!/bin/bash
    # description: start LVS of DirectorServer
    # Written by: NetSeek
    GW=61.164.122.1
    # website director vip.
    SNS_VIP=61.164.122.8
    SNS_RIP1=61.164.122.9
    SNS_RIP2=61.164.122.10
    . /etc/rc.d/init.d/functions
    logger $0 called with $1
    case "$1" in
    start)
        # set squid vip
        /sbin/ipvsadm --set 30 5 60
        /sbin/ifconfig eth0:0 $SNS_VIP broadcast $SNS_VIP netmask 255.255.255.255 up
        /sbin/route add -host $SNS_VIP dev eth0:0
        /sbin/ipvsadm -A -t $SNS_VIP:80 -s wrr -p 3
        /sbin/ipvsadm -a -t $SNS_VIP:80 -r $SNS_RIP1:80 -g -w 1
        /sbin/ipvsadm -a -t $SNS_VIP:80 -r $SNS_RIP2:80 -g -w 1
        touch /var/lock/subsys/ipvsadm >/dev/null 2>&1
        ;;
    stop)
        /sbin/ipvsadm -C
        /sbin/ipvsadm -Z
        ifconfig eth0:0 down
        route del $SNS_VIP
        rm -rf /var/lock/subsys/ipvsadm >/dev/null 2>&1
        echo "ipvsadm stopped"
        ;;
    status)
        if [ ! -e /var/lock/subsys/ipvsadm ]; then
            echo "ipvsadm stopped"
            exit 1
        else
            echo "ipvsadm OK"
        fi
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
    esac
    exit 0

2. The real-server script.

    # vi /usr/local/sbin/realserver.sh

    #!/bin/bash
    # description: Config realserver lo and apply noarp
    # Written by: NetSeek
    SNS_VIP=61.164.122.8
    . /etc/rc.d/init.d/functions
    case "$1" in
    start)
        ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP
        /sbin/route add -host $SNS_VIP dev lo:0
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        ;;
    stop)
        ifconfig lo:0 down
        route del $SNS_VIP >/dev/null 2>&1
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stopped"
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0

Alternatively, configure the VIP as a secondary IP address:

    # vi /etc/sysctl.conf
    net.ipv4.conf.lo.arp_ignore = 1
    net.ipv4.conf.lo.arp_announce = 2
    net.ipv4.conf.all.arp_ignore = 1
    net.ipv4.conf.all.arp_announce = 2
    # sysctl -p
    # ip addr add 61.164.122.8/32 dev lo
    # ip addr list                          # check that the address is bound

3. Start the lvs-dr script on the director and the realserver script on each real server. The current LVS state can then be watched on the director:

    # watch ipvsadm -ln

V. Load balancing and high availability with Keepalived

1. Configure keepalived.conf on the master load balancer:

    # vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            cnseek@
            # failover@firewall.loc
            # sysadmin@firewall.loc
        }
        notification_email_from sns-lvs@
        smtp_server 127.0.0.1
        # smtp_connect_timeout 30
        router_id LVS_DEVEL
    }
    # 20081013 written by: netseek
    # VIP1
    vrrp_instance VI_1 {
        state MASTER              # on the backup server change MASTER to BACKUP
        interface eth0
        virtual_router_id 51
        priority 100              # on the backup server change 100 to 99
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            61.164.122.8
            # (for several VIPs, add one per line)
        }
    }
    virtual_server 61.164.122.8 80 {
        delay_loop 6              # poll real-server state every 6 seconds
        lb_algo wrr               # LVS scheduling algorithm
        lb_kind DR                # direct routing
        persistence_timeout 60    # connections from one IP go to the same real server for 60 seconds
        protocol TCP              # check real-server state over TCP
        real_server 61.164.122.9 80 {
            weight 3              # weight
            TCP_CHECK {
                connect_timeout 10    # time out after 10 seconds without a response
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 61.164.122.10 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

2. Configure the BACKUP server the same way: install LVS first, then Keepalived, then edit /etc/keepalived/keepalived.conf. Only the marked parts need to change (state and priority, as noted in the comments above).

3. Edit /etc/rc.local and comment out the lvs-dr.sh script:

    # vi /etc/rc.local
    #/usr/local/sbin/lvs-dr.sh
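Since the master and backup files differ only in state and priority, one way to keep the two in step is to generate the vrrp_instance block from a single template. The sketch below assumes the values used in this section (interface eth0, virtual_router_id 51, auth_pass 1111); the function name vrrp_instance_conf is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print a vrrp_instance block for keepalived.conf.
# Usage: vrrp_instance_conf STATE PRIORITY VIP
vrrp_instance_conf() {
    local state=$1 priority=$2 vip=$3
    cat <<EOF
vrrp_instance VI_1 {
    state $state
    interface eth0
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip
    }
}
EOF
}

# Master and backup blocks for the VIP used in this section.
vrrp_instance_conf MASTER 100 61.164.122.8
vrrp_instance_conf BACKUP 99 61.164.122.8
```

The virtual_router_id and authentication values must match on both nodes, which is why they are fixed in the template rather than passed in.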

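Deploying an LVS + Keepalived high-availability load-balancing cluster

1 LVS + Keepalived cluster overview

In today's highly computerized IT environment, an enterprise's production systems, business operations, sales and support, and day-to-day management depend more and more on computer information and services. Demand for high-availability (HA) technology keeps rising so that computer systems and network services can run continuously without interruption.

Keepalived is a high-availability solution for LVS services based on the VRRP protocol. It removes the single point of failure that static routing introduces.

1.1 The Keepalived tool

A health-checking tool designed specifically for LVS and HA:
• Supports automatic failover
• Supports node health checking
• Official website:

1.2 How it works

An LVS service cluster usually has two server roles, the master (MASTER) and the backup (BACKUP), but presents a single virtual IP to the outside. The master sends VRRP advertisements to the backup. When the backup stops receiving them, meaning the master has failed, the backup takes over the virtual IP and continues to provide service, which keeps the service highly available.

Keepalived uses the VRRP hot-standby protocol to provide multi-machine hot standby for Linux servers.

1.3 VRRP (Virtual Router Redundancy Protocol)

VRRP is a backup solution for routers:
• Several routers form a hot-standby group and serve clients through a shared virtual IP address.
• Within a group only one master router provides service at any time; the others remain in standby.
• If the active router fails, the other routers take over the virtual IP address according to their configured priorities and continue to provide service.

1.4 Failover mechanism

Failover between Keepalived high-availability nodes is implemented through VRRP.

While Keepalived is working normally, the master node keeps sending heartbeat messages to the backup node (by multicast) to tell it that the master is still alive. When the master fails, it can no longer send heartbeats, so the backup no longer detects them. The backup then runs its takeover procedure and takes over the master's IP resources and services.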
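The takeover rule described above (the highest-priority node that is still alive holds the virtual IP) can be sketched as a small selection function. This is a simplification for illustration, not how Keepalived is implemented: real VRRP also relies on advertisement timers, preemption settings, and an address tie-break. The name elect_master and the NAME:PRIORITY:STATE argument format are assumptions.

```shell
#!/bin/bash
# Hypothetical sketch: pick the live node with the highest priority.
# Usage: elect_master NAME:PRIORITY:STATE ...   (STATE is "up" or "down")
elect_master() {
    local node name prio state best_name="" best_prio=-1
    for node in "$@"; do
        IFS=: read -r name prio state <<<"$node"
        if [ "$state" = "up" ] && [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    # Print the winner; return non-zero when no node is up.
    [ -n "$best_name" ] && echo "$best_name"
}

elect_master lb-master:100:up   lb-backup:99:up    # master holds the VIP
elect_master lb-master:100:down lb-backup:99:up    # backup takes over
```

With both nodes up the first call selects lb-master; once it is marked down, the second call selects lb-backup, which mirrors the VIP moving to the backup.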

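Atlas + LVS + Keepalived + MySQL master-slave replication: load-balancing deployment guide
2015-8-31 (V1.0)

I. Deployment background

MySQL can be load balanced in many ways. HAProxy, covered in the previous post, is one; LVS is a more efficient option. This guide sets up LVS load balancing in front of Atlas on a three-node, multi-master Percona XtraDB Cluster. Atlas is not actually required here: it provides connection pooling and read/write splitting, and a multi-master architecture does not need read/write splitting (a setup based on MySQL replication would need Atlas for load balancing). It is included simply to test whether Atlas can be load balanced with LVS.

1. Node plan

1.1 MySQL data nodes: db169, db172, and db173. All three are XtraDB Cluster nodes.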

1.2 Keepalived nodes: db162 and db163. The virtual IP is 192.168.1.201.
HAProxy node (installed only to compare performance with LVS): db169, deployed on one of the XtraDB Cluster nodes.

1.3 Atlas nodes: deployed on the XtraDB Cluster nodes, so also three nodes.
Note: Atlas and MySQL must be deployed on the same node; otherwise LVS DR mode cannot be used for load balancing.

1.4 Client test node: db55.
IP addresses are 192.168.1.*; each node is named "db" plus the last octet of its IP address.

2. Install LVS and Keepalived (on db162 and db163)

2.1 Install the dependencies:

    yum -y install kernel-devel make gcc openssl-devel libnl*

Link the Linux kernel source tree. The version must match the running kernel (check with uname -a):

    [root@db163 ~]# ln -s /usr/src/kernels/2.6.32-358.el6.x86_64/ /usr/src/linux

Install keepalived and LVS:

    [root@db162 ~]# yum install ipvsadm
    [root@db162 ~]# yum install keepalived
    [root@db163 ~]# yum install ipvsadm
    [root@db163 ~]# yum install keepalived

2.2 Configure Keepalived. LVS needs no separate configuration; it is configured inside keepalived.conf.

    [root@db162 ~]# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id MySQL_LB1
    }
    vrrp_sync_group VSG {
        group {
            MySQL_Loadblancing
        }
    }
    vrrp_instance MySQL_Loadblancing {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 101
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.1.201
        }
    }
    virtual_server 192.168.1.201 1234 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        # nat_mask 255.255.255.0
        # persistence_timeout 50
        protocol TCP
        real_server 192.168.1.169 1234 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 1234
            }
        }
        real_server 192.168.1.172 1234 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 1234
            }
        }
        real_server 192.168.1.173 1234 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 1234
            }
        }
    }

Keepalived configuration on the backup. The virtual_server block is identical to the master's; only router_id, state, and priority differ:

    [root@db163 ~]# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id MySQL_LB2
    }
    vrrp_sync_group VSG {
        group {
            MySQL_Loadblancing
        }
    }
    vrrp_instance MySQL_Loadblancing {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.1.201
        }
    }
    virtual_server 192.168.1.201 1234 {
        (same as on db162)
    }

3. Configuration on the real servers (data nodes)

Install the following script on each of the three data nodes db169, db172, and db173:

    [root@db172 ~]# cat /etc/init.d/lvsdr.sh
    #!/bin/bash
    VIP=192.168.1.201
    . /etc/rc.d/init.d/functions
    case "$1" in
    start)
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/sysctl -p >/dev/null 2>&1
        /sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:0
        echo "LVS-DR real server starts successfully."
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        /sbin/route del $VIP >/dev/null 2>&1
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "LVS-DR real server stopped."
        ;;
    status)
        isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`
        isRoOn=`/bin/netstat -rn | grep "$VIP"`
        if [ "$isLoOn" == "" -a "$isRoOn" == "" ]; then
            echo "LVS-DR real server has to run yet."
        else
            echo "LVS-DR real server is running."
        fi
        exit 3
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
    esac
    exit 0

Make it executable:

    chmod +x /etc/init.d/lvsdr.sh

Start it at boot:

    echo "/etc/init.d/lvsdr.sh start" >> /etc/rc.local

4. Install Atlas on each of the three data nodes db169, db172, and db173

Download Atlas and install it with yum:

    yum install -y Atlas-2.1.el6.x86_64.rpm

Configure Atlas:

    [root@db172 ~]# cat /usr/local/mysql-proxy/conf/f
    [mysql-proxy]
    # Options prefixed with # are optional

    # User name for the admin interface
    admin-username = admin

    # Password for the admin interface
    admin-password = 123456

    # IP and port of the MySQL master behind Atlas; several entries may be given, separated by commas
    proxy-backend-addresses = 192.168.1.173:3306

    # IP and port of the MySQL slaves behind Atlas. The number after @ is the weight used for
    # load balancing (default 1 if omitted); several entries may be given, separated by commas
    proxy-read-only-backend-addresses = 192.168.1.169:3306@1,192.168.1.172:3306@1

    # User names and their encrypted MySQL passwords. Encrypt passwords with the encrypt program
    # in PREFIX/bin. Replace the sample users below with your own MySQL users and encrypted passwords.
    pwds = usr_test:/iZxz+0GRoA=, usr_test2:/iZxz+0GRoA= ,root:/iZxz+0GRoA=

    # Run mode: true runs Atlas as a daemon, false runs it in the foreground.
    # Usually false for development and debugging, true in production.
    daemon = true

    # When true, Atlas starts two processes, a monitor and a worker; the monitor restarts the
    # worker if it exits unexpectedly. When false there is only the worker.
    # Usually false for development and debugging, true in production.
    keepalive = true

    # Number of worker threads; strongly affects Atlas performance, tune as needed
    event-threads = 10

    # Log level: message, warning, critical, error, or debug
    log-level = message

    # Log directory
    log-path = /usr/local/mysql-proxy/log

    # SQL log switch: OFF, ON, or REALTIME. OFF records no SQL log, ON records it,
    # REALTIME records it and flushes to disk immediately. Default OFF.
    #sql-log = OFF

    # Instance name, used to tell apart several Atlas instances on one machine
    #instance = test

    # IP and port of the Atlas working interface
    proxy-address = 0.0.0.0:1234

    # IP and port of the Atlas admin interface
    admin-address = 0.0.0.0:2345

    # Table sharding. In this example person is the database, mt the table, id the sharding
    # column, and 3 the number of sub-tables. Several entries may be given, separated by commas.
    # Omit if tables are not sharded.
    #tables = person.mt.id.3

    # Default character set; once set, clients no longer need to run SET NAMES
    #charset = utf8

    # Client IPs allowed to connect to Atlas, as exact IPs or IP prefixes, separated by commas.
    # If unset, all IPs may connect; otherwise only the listed IPs may.
    #client-ips = 127.0.0.1, 192.168.1

    # IP of the physical NIC of the LVS in front of Atlas (not the virtual IP). Required if LVS
    # is used and client-ips is set; otherwise optional.
    #lvs-ips = 192.168.1.1

5. Start the data nodes (on each of db169, db172, and db173)

5.1 Start the MySQL database.
5.2 Start Atlas: /usr/local/mysql-proxy/bin/mysql-proxyd test start
5.3 Start the LVS script: /etc/init.d/lvsdr.sh start

6. Start Keepalived (on db162 and db163)

    /etc/init.d/keepalived start

7. Verification

After Keepalived starts, the master node is db162. Check that the VIP is up:

    [root@db162 ~]# ip ad
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:1d:7d:a8:40:d9 brd ff:ff:ff:ff:ff:ff
        inet 192.168.1.162/24 brd 192.168.1.255 scope global eth0
        inet 192.168.1.201/32 scope global eth0
        inet6 fe80::21d:7dff:fea8:40d9/64 scope link
           valid_lft forever preferred_lft forever

Confirm that nothing on this node listens on port 1234 (the command prints nothing):

    [root@db162 ~]# netstat -anp | grep 1234

From 192.168.1.55 (db55), connect to 192.168.1.201. Because nothing listens on port 1234 on the director, the connection is routed to one of the real data nodes:

    [root@db55 ~]# mysql -h 192.168.1.201 -P1234 -uroot -p123456
    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 1871354501
    Server version: 5.0.81-log Percona XtraDB Cluster binary (GPL) 5.6.19-25.6, Revision 824, wsrep_25.6.r4111
    Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.
    Oracle is a registered trademark of Oracle Corporation and/or its
    affiliates. Other names may be trademarks of their respective
    owners.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | dd                 |
    | mcldb              |
    | mysql              |
    | mysqlslap          |
    | performance_schema |
    | test               |
    +--------------------+
    7 rows in set (0.00 sec)

The data is correct.

8. Monitoring LVS

Run a sysbench stress test, then look at how the connections are distributed:

    [root@topdb soft]# sysbench --test=oltp --num-threads=100 --max-requests=100000 --oltp-table-size=1000000 --oltp-test-mode=nontrx --db-driver=mysql --mysql-db=dd --mysql-host=192.168.1.201 --mysql-port=1234 --mysql-user=root --mysql-password=123456 --oltp-nontrx-mode=select --oltp-read-only=on --db-ps-mode=disable run
    sysbench 0.4.12: multi-threaded system evaluation benchmark
    Running the test with following options:
    Number of threads: 100
    Doing OLTP test.
    Running non-transactional test
    Doing read-only test
    Using Special distribution (12 iterations, 1 pct of values are returned in 75 pct cases)
    Using "BEGIN" for starting transactions
    Using auto_inc on the id column
    Maximum number of requests for OLTP test is limited to 100000
    Threads started!
    Done.
    OLTP test statistics:
        queries performed:
            read:   100033
            write:  0
            other:  0
            total:  100033
        transactions:        100033 (13416.81 per sec.)
        deadlocks:           0      (0.00 per sec.)
        read/write requests: 100033 (13416.81 per sec.)
        other operations:    0      (0.00 per sec.)
    Test execution summary:
        total time:                          7.4558s
        total number of events:              100033
        total time taken by event execution: 744.5136
        per-request statistics:
            min:                   0.71ms
            avg:                   7.44ms
            max:                   407.23ms
            approx. 95 percentile: 28.56ms
    Threads fairness:
        events (avg/stddev):         1000.3300/831.91
        execution time (avg/stddev): 7.4451/0.00

    [root@db162 ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port     Forward Weight ActiveConn InActConn
    TCP  192.168.1.201:1234 rr
      -> 192.168.1.169:1234     Route   3      0          33
      -> 192.168.1.172:1234     Route   3      0          34
      -> 192.168.1.173:1234     Route   3      0          34

The load is spread evenly across the three nodes.
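To check the distribution without reading the table by eye, the real-server rows of ipvsadm -Ln can be filtered with awk. The sketch below runs against the sample output from this section, so it does not need root or a live director; the function name inactive_per_server is an illustrative assumption.

```shell
#!/bin/bash
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print
# "real_server inactive_connections" for each real-server row.
inactive_per_server() {
    # Real-server rows start with "->" followed by an address; the header
    # row also starts with "->" but its second field is not numeric.
    awk '$1 == "->" && $2 ~ /^[0-9]/ { print $2, $NF }'
}

# Sample taken from the verification output above.
sample='IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port     Forward Weight ActiveConn InActConn
TCP  192.168.1.201:1234 rr
  -> 192.168.1.169:1234     Route   3      0          33
  -> 192.168.1.172:1234     Route   3      0          34
  -> 192.168.1.173:1234     Route   3      0          34'

printf '%s\n' "$sample" | inactive_per_server
```

On the director itself the same filter would be used as ipvsadm -Ln | inactive_per_server.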

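Configuring and using replication in MySQL

Introduction: MySQL is an open-source relational database management system that is widely used in web applications.

Replication is one of its most powerful features. It lets you keep mirrored copies of data across several database instances and keep them synchronized.

This article describes how to configure and use replication in MySQL.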

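I. Basic concepts

MySQL replication copies the data of one database instance to one or more other instances, which provides data backup, high availability, and load balancing.

Replication usually involves two roles, the master and the slave.

The master is the source of the data; the slave copies the master's data and stays synchronized with it.

The basic principle is that the master ships a log of its data changes (insert, update, delete) to the slave, and the slave executes the same operations so that the two databases stay consistent.

This mechanism lets the slave keep up with updates on the master and supports high availability and load balancing.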

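II. Configuration steps

Configuring replication in MySQL involves the following steps:
1. Make sure the master and the slave run the same MySQL version, to avoid problems caused by version incompatibility.
2. Enable binary logging on the master by adding the "log-bin" option to the MySQL configuration file.
3. Create a replication user on the master and grant it the required privileges.
4. On the slave, set the master's connection information: host name, user name, password, and so on.
5. Start the replication threads on the slave so that it connects to the master and begins replicating.
6. Monitor replication so that errors and lag are found and fixed promptly.

These steps are the basic workflow; the exact commands depend on the MySQL version and the environment.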
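Steps 3 to 5 map onto a handful of SQL statements. The sketch below only prints them, using the MySQL 5.x syntax that appears elsewhere in this document (GRANT ... IDENTIFIED BY and CHANGE MASTER TO); newer MySQL releases use different statements for the same steps. The function names, the user name repl, and the host, password, and binlog coordinates are all placeholder assumptions.

```shell
#!/bin/bash
# Hypothetical helpers that print (not execute) the replication SQL.

# Statements to run on the master.
# Usage: master_sql REPL_USER REPL_PASSWORD SLAVE_HOST
master_sql() {
    cat <<EOF
GRANT REPLICATION SLAVE ON *.* TO '$1'@'$3' IDENTIFIED BY '$2';
FLUSH PRIVILEGES;
SHOW MASTER STATUS;
EOF
}

# Statements to run on the slave. LOG_FILE and LOG_POS come from the
# File and Position columns of SHOW MASTER STATUS on the master.
# Usage: slave_sql MASTER_HOST REPL_USER REPL_PASSWORD LOG_FILE LOG_POS
slave_sql() {
    cat <<EOF
CHANGE MASTER TO
  MASTER_HOST='$1',
  MASTER_USER='$2',
  MASTER_PASSWORD='$3',
  MASTER_LOG_FILE='$4',
  MASTER_LOG_POS=$5;
START SLAVE;
SHOW SLAVE STATUS\G
EOF
}

master_sql repl 123456 192.168.1.195
slave_sql 192.168.1.196 repl 123456 mysql-bin.000001 98
```

For step 6, the two fields to watch in the SHOW SLAVE STATUS output are Slave_IO_Running and Slave_SQL_Running, which should both be Yes.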

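III. Using replication

Once replication is configured, it can be put to use.

Some common uses follow.

1. Backup and recovery: replication copies the master's data to the slave in near real time, which serves as a backup.

If the master's data is lost or damaged, the data can be recovered from the slave, which keeps the service available.

2. Load balancing: configure several slaves and distribute read requests among them.

This improves performance and throughput and also reduces the load on the master.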
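As a minimal illustration of spreading reads over several slaves, the sketch below picks a slave by request number in round-robin order. In practice a proxy or LVS makes this choice; the function name pick_replica and the host names are assumptions made for the example.

```shell
#!/bin/bash
# Hypothetical sketch: choose a read replica in round-robin order.
# Usage: pick_replica REQUEST_NUMBER HOST...
pick_replica() {
    local n=$1
    shift
    [ $# -gt 0 ] || return 1          # no replicas configured
    local hosts=("$@")
    local idx=$(( n % ${#hosts[@]} )) # wrap around the list
    echo "${hosts[$idx]}"
}

# Four consecutive read requests over two slaves.
for request in 0 1 2 3; do
    pick_replica "$request" slave1 slave2
done
```

Writes would still go to the master; only the read path is distributed this way.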

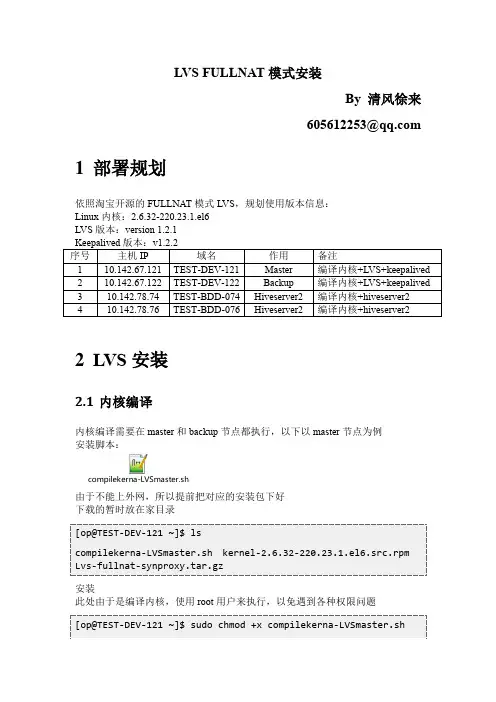

Installing LVS in FULLNAT mode
By 清风徐来 605612253@

1 Deployment plan

Following Taobao's open-source FULLNAT-mode LVS, the planned versions are:
    Linux kernel: 2.6.32-220.23.1.el6
    LVS: version 1.2.1
    Keepalived: v1.2.2

    No.  Host IP         Hostname       Role          Notes
    1    10.142.67.121   TEST-DEV-121   Master        compiled kernel + LVS + keepalived
    2    10.142.67.122   TEST-DEV-122   Backup        compiled kernel + LVS + keepalived
    3    10.142.78.74    TEST-BDD-074   Hiveserver2   compiled kernel + hiveserver2
    4    10.142.78.76    TEST-BDD-076   Hiveserver2   compiled kernel + hiveserver2

2 LVS installation

2.1 Kernel compilation

The kernel must be compiled on both the master and the backup node. The master node is used as the example below.

Install script: compilekerna-LVSmaster.sh

The machines have no internet access, so the required packages are downloaded in advance and placed in the home directory for now:

    [op@TEST-DEV-121 ~]$ ls
    compilekerna-LVSmaster.sh  kernel-2.6.32-220.23.1.el6.src.rpm  Lvs-fullnat-synproxy.tar.gz

Installation. Because this compiles a kernel, run it as root to avoid permission problems:

    [op@TEST-DEV-121 ~]$ sudo chmod +x compilekerna-LVSmaster.sh
    [op@TEST-DEV-121 ~]$ sudo su -
    [root@TEST-DEV-121 ~]# cd /home/op/
    [root@TEST-DEV-121 op]# uname -r
    2.6.32-431.el6.x86_64            # kernel version before compiling
    [root@TEST-DEV-121 op]# ./compilekerna-LVSmaster.sh

While installing dependencies, the default yum repository turns out not to have these packages:

    Error Downloading Packages:
      newt-devel-0.52.11-3.el6.x86_64: failure: Packages/newt-devel-0.52.11-3.el6.x86_64.rpm from base: [Errno 256] No more mirrors to try.
      slang-devel-2.2.1-1.el6.x86_64: failure: Packages/slang-devel-2.2.1-1.el6.x86_64.rpm from base: [Errno 256] No more mirrors to try.
      asciidoc-8.4.5-4.1.el6.noarch: failure: Packages/asciidoc-8.4.5-4.1.el6.noarch.rpm from base: [Errno 256] No more mirrors to try.

So download them manually:

    newt-devel-0.52.11-3.el6.x86_64.rpm
    slang-devel-2.2.1-1.el6.x86_64.rpm
    asciidoc-8.4.5-4.1.el6.noarch.rpm

    [root@TEST-DEV-121 op]# rpm -ivh slang-devel-2.2.1-1.el6.x86_64.rpm
    warning: slang-devel-2.2.1-1.el6.x86_64.rpm: Header V4 DSA/SHA1 Signature, key ID 192a7d7d: NOKEY
    Preparing...                ########################################### [100%]
       1:slang-devel            ########################################### [100%]
    [root@TEST-DEV-121 op]# rpm -ivh newt-devel-0.52.11-3.el6.x86_64.rpm
    warning: newt-devel-0.52.11-3.el6.x86_64.rpm: Header V3 RSA/SHA1 Signature, key ID c105b9de: NOKEY
    Preparing...                ########################################### [100%]
       1:newt-devel             ########################################### [100%]
    [root@TEST-DEV-121 op]# rpm -ivh asciidoc-8.4.5-4.1.el6.noarch.rpm
    warning: asciidoc-8.4.5-4.1.el6.noarch.rpm: Header V4 DSA/SHA1 Signature, key ID 192a7d7d: NOKEY
    Preparing...                ########################################### [100%]
       1:asciidoc               ########################################### [100%]

Then run the script again. When compilation finishes:

    DEPMOD 2.6.32
    sh /home/op/rpms/BUILD/kernel-2.6.32-220.23.1.el6/linux-2.6.32-220.23.1.el6.x86_64/arch/x86/boot/install.sh 2.6.32 arch/x86/boot/bzImage \
        System.map "/boot"
    ERROR: modinfo: could not find module xen_procfs
    ERROR: modinfo: could not find module xen_scsifront
    ERROR: modinfo: could not find module xen_hcall

These errors suggest that the virtual machine runs on Xen.

High-performance, highly available load balancing with LVS + Keepalived: hands-on notes
kkmangnn    Website: /way2rhce

Preface: this document uses Keepalived to implement load balancing and high availability.

I. Site load-balancing topology

System environment: CentOS 5.2, with the gcc, openssl-devel, and kernel-devel packages installed.

II. Installing the LVS and Keepalived packages

1. Download the packages:

    # mkdir /usr/local/src/lvs
    # cd /usr/local/src/lvs
    # wget /software/kernel-2.6/ipvsadm-1.24.tar.gz
    # wget /software/keepalived-1.1.15.tar.gz

2. Install LVS and Keepalived:

    # lsmod | grep ip_vs
    # uname -r
    2.6.18-92.el5
    # ln -s /usr/src/kernels/2.6.18-92.el5-i686/ /usr/src/linux
    # tar zxvf ipvsadm-1.24.tar.gz
    # cd ipvsadm-1.24
    # make && make install
    # tar zxvf keepalived-1.1.15.tar.gz
    # cd keepalived-1.1.15
    # ./configure && make && make install

Set Keepalived up as a system service for easier management:

    # cp /usr/local/etc/rc.d/init.d/keepalived /etc/rc.d/init.d/
    # cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/
    # mkdir /etc/keepalived
    # cp /usr/local/etc/keepalived/keepalived.conf /etc/keepalived/
    # cp /usr/local/sbin/keepalived /usr/sbin/
    # service keepalived start|stop

III. The web-server script

    # vi /usr/local/src/lvs/web.sh

    #!/bin/bash
    SNS_VIP=192.168.0.8
    case "$1" in
    start)
        ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP
        /sbin/route add -host $SNS_VIP dev lo:0
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        ;;
    stop)
        ifconfig lo:0 down
        route del $SNS_VIP >/dev/null 2>&1
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stopped"
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0

    # chmod +x /usr/local/src/lvs/web.sh        # make it executable
    # vi /etc/rc.local                          # run the script at boot
    /usr/local/src/lvs/web.sh start

Once one web server is configured, copy the configuration to the second server.
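After running the real-server script it is worth confirming that the four ARP parameters actually hold the expected values, since a real server that still answers ARP for the VIP breaks DR mode. The check below is a sketch; the function name check_arp_params and its optional root-directory argument (which exists only so the check can be exercised against a scratch directory) are assumptions.

```shell
#!/bin/bash
# Hypothetical helper: verify arp_ignore=1 and arp_announce=2 for the
# "lo" and "all" entries. Usage: check_arp_params [PROC_ROOT]
# PROC_ROOT defaults to /proc; returns non-zero if any value is wrong.
check_arp_params() {
    local root=${1:-/proc} iface dir status=0
    for iface in lo all; do
        dir=$root/sys/net/ipv4/conf/$iface
        if [ "$(cat "$dir/arp_ignore" 2>/dev/null)" != "1" ]; then
            echo "$iface: arp_ignore is not 1"
            status=1
        fi
        if [ "$(cat "$dir/arp_announce" 2>/dev/null)" != "2" ]; then
            echo "$iface: arp_announce is not 2"
            status=1
        fi
    done
    return $status
}
```

On a real server, calling check_arp_params with no argument after web.sh start should print nothing and return 0; after web.sh stop it should report all four values.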


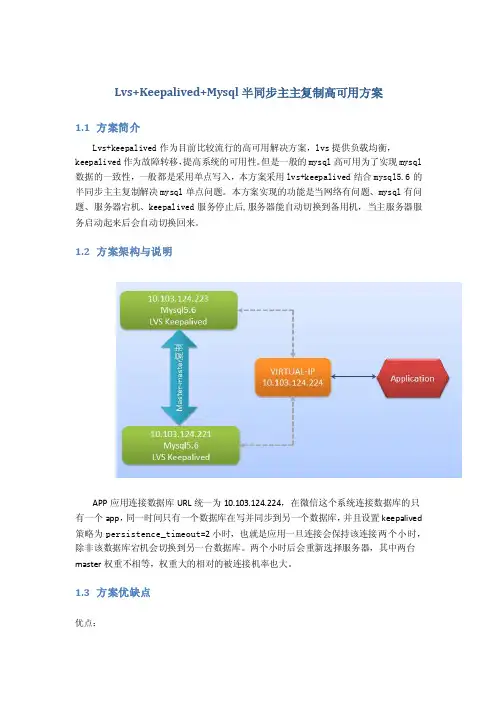

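LVS + Keepalived + MySQL semi-synchronous master-master replication: a high-availability solution

1.1 Overview

LVS + Keepalived is a popular high-availability stack: LVS provides load balancing and Keepalived provides failover, which together improve system availability.

Most MySQL high-availability setups keep data consistent by writing to a single node. This solution combines LVS + Keepalived with MySQL 5.6 semi-synchronous master-master replication to remove that MySQL single point of failure.

When the network fails, MySQL fails, a server goes down, or the Keepalived service stops, the service switches automatically to the standby machine. Once the primary server's services are back up, it switches back automatically.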

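1.2 Architecture and notes

The application connects to the database through a single address, 10.103.124.224. In the WeChat system only one application connects to the database, so at any moment only one database takes writes and replicates them to the other. The Keepalived policy sets persistence_timeout to 2 hours: once the application connects, it stays on the same database for two hours, unless that database goes down, in which case it switches to the other one.

After two hours a server is selected again. The two masters have unequal weights, and the one with the larger weight is more likely to be chosen.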
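The policy described above would correspond to a virtual_server block with persistence_timeout set to 7200 seconds and unequal real-server weights. The original configuration is not shown in this document, so the generator below is only a sketch: the weights 3 and 1, the wrr scheduler, DR forwarding, port 3306, and the TCP_CHECK values are all assumptions, and the function name mysql_virtual_server is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print a keepalived virtual_server block.
# Usage: mysql_virtual_server VIP PORT PERSISTENCE_SECONDS RIP:WEIGHT...
mysql_virtual_server() {
    local vip=$1 port=$2 persist=$3 rs
    shift 3
    echo "virtual_server $vip $port {"
    echo "    delay_loop 6"
    echo "    lb_algo wrr"                       # assumed scheduler
    echo "    lb_kind DR"                        # assumed forwarding mode
    echo "    persistence_timeout $persist"
    echo "    protocol TCP"
    for rs in "$@"; do
        echo "    real_server ${rs%%:*} $port {"
        echo "        weight ${rs#*:}"
        echo "        TCP_CHECK {"
        echo "            connect_timeout 3"
        echo "            connect_port $port"
        echo "        }"
        echo "    }"
    done
    echo "}"
}

# Two hours of persistence, expressed in seconds; weights are illustrative.
mysql_virtual_server 10.103.124.224 3306 $((2 * 60 * 60)) \
    10.103.124.223:3 10.103.124.221:1
```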

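1.3 Advantages and disadvantages

Advantages:
✓ Simple to install and configure, easy to implement, and effective at providing high availability; switching can be triggered by several aspects of service and system availability.

✓ The write VIP and the read VIP can be set separately, in preparation for read/write splitting.

✓ Additional slave servers can be added behind it and load balanced.

Disadvantages:
✓ When the database in use goes down and the service switches to the other database, the transaction in progress may be lost.

1.4 The solution in practice

1.4.1 Applicable scenarios

This solution suits setups with only two database servers that have not yet implemented read/write splitting; both reads and writes go through a VIP.

It makes it easy to manage, maintain, and switch over a single database.

For example, altering the structure of a large table or upgrading the database becomes very convenient.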

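1.4.2 Environment

1.4.3 Installing and configuring MySQL

Install on both master1 and master2. Remove the old version first, then install.

1.4.4 Configuring MySQL master-master replication

10.103.124.223, /etc/f configuration:

First comment out the five parameters above, then log in to MySQL:

    SET PASSWORD = PASSWORD('123456');
    INSTALL PLUGIN rpl_semi_sync_master SONAME 'semisync_master.so';

After connecting to the 10.103.124.223 database, run:

10.103.124.221, /etc/f configuration:

After connecting to the 10.103.124.221 database, run:

    service iptables status
    service iptables stop
    chkconfig iptables off

This turns off the Linux firewall so that other machines can connect to port 3306.

1.4.5 Installing and configuring LVS

Install on both master1 and master2:

1.4.6 Installing Keepalived

Install on both master1 and master2:

1.4.7 Configuring Keepalived

/etc/keepalived/keepalived.conf on master1:

/etc/keepalived/keepalived.conf on master2:

1.4.8 Testing the high-availability setup

Once the setup is in place, test its reliability thoroughly to see whether it behaves as expected. The rough test steps are:

✓ Stop MySQL on master1 and check whether the service switches to master2 automatically.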

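A two-node high-availability solution with Keepalived + LVS + MySQL

Compared with this approach, shared storage is undoubtedly a much better way to handle data synchronization for MySQL high availability. Still, while gradually learning more about Keepalived, I came across this MySQL data-synchronization solution and found it very interesting. The technical focus of the solution is naturally MySQL data synchronization. Enough preamble; on to the experiment.

Experiment setup: two Linux (CentOS 5.5) servers running as VMs, with Keepalived as the high-availability software.

Master node IP: 192.168.1.196. Backup node IP: 192.168.1.195. Configure a yum repository yourself; either a local mirror or an external repository works. The steps below install the required software on both servers. The packages needed vary with the system; the ones listed here are those I needed, and you can install others as prompted.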

Step 1: steps that are identical on both nodes

    [root@localhost ~]# yum -y install ipvsadm kernel-devel openssl openssl-devel
    [root@localhost ~]# ln -s /usr/src/kernels/2.6.18-194.el5-i686/ /usr/src/linux
    [root@localhost ~]# wget /software/keepalived-1.2.1.tar.gz
    [root@localhost ~]# ls keepalived-1.2.1.tar.gz
    keepalived-1.2.1.tar.gz
    [root@localhost ~]# tar zxvf keepalived-1.2.1.tar.gz
    [root@localhost ~]# cd keepalived-1.2.1
    [root@localhost keepalived-1.2.1]# ./configure

When configure ends with the following summary, the package is ready to compile and install:

    Keepalived configuration
    ------------------------
    Keepalived version       : 1.2.1
    Compiler                 : gcc
    Compiler flags           : -g -O2 -DETHERTYPE_IPV6=0x86dd
    Extra Lib                : -lpopt -lssl -lcrypto
    Use IPVS Framework       : Yes
    IPVS sync daemon support : Yes
    Use VRRP Framework       : Yes
    Use Debug flags          : No

    [root@localhost keepalived-1.2.1]# make && make install
    [root@localhost ~]# yum -y install mysql-server mysql

Step 2: MySQL settings on the primary node (MASTER)

The MySQL configuration comes first and is the critical part.

    [root@localhost ~]# /etc/init.d/mysqld start
    [root@localhost ~]# mysql
    Welcome to the MySQL monitor. Commands end with ; or \g.
    Your MySQL connection id is 4
    Server version: 5.0.77-log Source distribution
    Type 'help;' or '\h' for help. Type '\c' to clear the buffer.
    mysql> grant replication slave, file on *.* to 'repl1'@'192.168.1.195' identified by '123456';
    Query OK, 0 rows affected (0.02 sec)
    mysql> \q
    Bye
    [root@localhost ~]# /etc/init.d/mysqld stop
    [root@localhost ~]# vi /etc/f

    [mysqld]
    datadir=/var/lib/mysql
    socket=/var/lib/mysql/mysql.sock
    user=mysql
    log-bin=mysql-bin
    server-id=1
    binlog-do-db=test
    binlog-ignore-db=mysql
    replicate-do-db=test
    replicate-ignore-db=mysql
    log-slave-updates
    slave-skip-errors=all
    sync_binlog=1
    auto_increment_increment=2
    auto_increment_offset=1

    [root@localhost ~]# /etc/init.d/mysqld start
    [root@localhost ~]# mysql
    mysql> flush tables with read lock\G
    Query OK, 0 rows affected (0.00 sec)
    mysql> show master status\G
    *************************** 1. row ***************************
    File: mysql-bin.000001
    Position: 98
    Binlog_Do_DB: test
    Binlog_Ignore_DB: mysql
    1 row in set (0.00 sec)
    mysql> change master to master_host='192.168.1.195', master_user='repl2', master_password='123456', master_log_file='mysql-bin.000001', master_log_pos=98;
    mysql> slave start;
    Query OK, 0 rows affected, 1 warning (0.00 sec)
    mysql> unlock tables;
    Query OK, 0 rows affected (0.15 sec)
    mysql> show slave status\G

The key point of this step is that both of the following threads are running:

    Slave_IO_Running: Yes
    Slave_SQL_Running: Yes

    mysql> grant all privileges on *.* to 'root'@'%' identified by '123456';
    Query OK, 0 rows affected (0.00 sec)
    mysql> flush privileges;
    Query OK, 0 rows affected (0.00 sec)

Step 3: MySQL settings on the backup node

    [root@localhost ~]# /etc/init.d/mysqld start
    [root@localhost ~]# mysql
    mysql> grant replication slave, file on *.* to 'repl2'@'192.168.1.196' identified by '123456';
    Query OK, 0 rows affected (0.02 sec)
    mysql> \q
    Bye
    [root@localhost ~]# /etc/init.d/mysqld stop
    [root@localhost ~]# vi /etc/f

    [mysqld]
    datadir=/var/lib/mysql
    socket=/var/lib/mysql/mysql.sock
    user=mysql
    log-bin=mysql-bin
    server-id=2
    binlog-do-db=test
    binlog-ignore-db=mysql
    replicate-do-db=test
    replicate-ignore-db=mysql
    log-slave-updates
    slave-skip-errors=all
    sync_binlog=1
    auto_increment_increment=2
    auto_increment_offset=2

    [root@localhost ~]# /etc/init.d/mysqld start
    [root@localhost ~]# mysql
    mysql> flush tables with read lock\G
    Query OK, 0 rows affected (0.00 sec)
    mysql> show master status\G
    *************************** 1. row ***************************
    File: mysql-bin.000001
    Position: 98
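The two configuration files above differ only in server-id and auto_increment_offset. As a small worked example of why that matters in a dual-master setup, the sketch below lists the auto-increment values each node would hand out with auto_increment_increment=2 and offsets 1 and 2. The function name auto_increment_ids is made up for this illustration; it only reproduces the arithmetic and does not talk to MySQL.

```shell
#!/bin/bash
# Hypothetical helper: print the first COUNT auto-increment values for a node,
# given auto_increment_increment (STEP) and auto_increment_offset (OFFSET).
auto_increment_ids() {
    local step=$1 offset=$2 count=$3 ids=() i
    for ((i = 0; i < count; i++)); do
        ids+=("$((offset + i * step))")
    done
    echo "${ids[*]}"
}

auto_increment_ids 2 1 3   # primary node: prints "1 3 5"
auto_increment_ids 2 2 3   # backup node:  prints "2 4 6"
```

Because the two sequences never overlap, rows inserted on either node do not collide on an auto-increment key when they are replicated to the other node.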

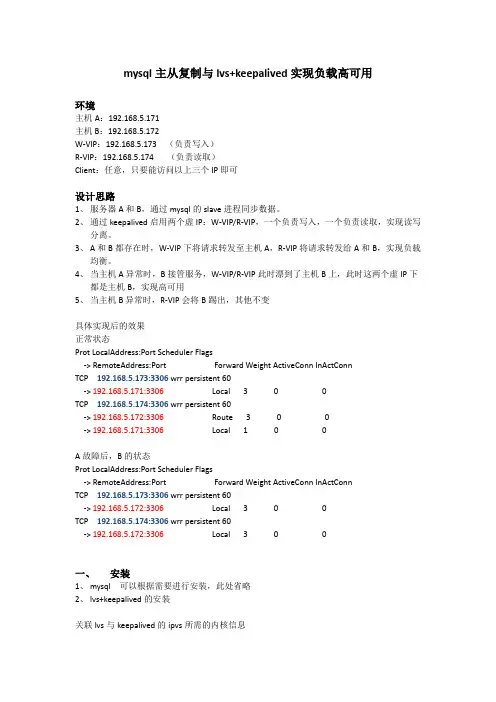

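MySQL master-slave replication with LVS + Keepalived for load balancing and high availability

Environment

Host A: 192.168.5.171
Host B: 192.168.5.172
W-VIP: 192.168.5.173 (handles writes)
R-VIP: 192.168.5.174 (handles reads)
Client: any machine that can reach the addresses above

Design

1. Servers A and B synchronize data through MySQL's slave replication threads.

2. Keepalived brings up two virtual IPs, W-VIP and R-VIP. One handles writes and the other handles reads, which gives read/write splitting.

3. While both A and B are up, W-VIP forwards requests to host A and R-VIP forwards requests to both A and B, which gives load balancing.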

4. When host A fails, B takes over. W-VIP and R-VIP both float to host B, so both virtual IPs are backed only by host B, which gives high availability.

5. When host B fails, R-VIP removes B from its pool; nothing else changes.

Result after setup

Normal state:

    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port Forward Weight ActiveConn InActConn
    TCP 192.168.5.173:3306 wrr persistent 60
      -> 192.168.5.171:3306 Local 3 0 0
    TCP 192.168.5.174:3306 wrr persistent 60
      -> 192.168.5.172:3306 Route 3 0 0
      -> 192.168.5.171:3306 Local 1 0 0

State on B after A has failed:

    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port Forward Weight ActiveConn InActConn
    TCP 192.168.5.173:3306 wrr persistent 60
      -> 192.168.5.172:3306 Local 3 0 0
    TCP 192.168.5.174:3306 wrr persistent 60
      -> 192.168.5.172:3306 Local 3 0 0

I. Installation

1. MySQL: install it as required; omitted here.

2. LVS + Keepalived

Link the kernel source tree that ipvsadm and Keepalived's IPVS support need:

    ln -s /usr/src/kernels/2.6.18-194.el5-x86_64/ /usr/src/linux

Install LVS (ipvsadm):

    tar -zxvf ipvsadm-1.24.tar.gz
    cd ipvsadm-1.24
    make
    make install

Verify:

    ipvsadm -v

If it returns "ipvsadm v1.24 2005/12/10 (compiled with popt and IPVS v1.2.1)", the installation succeeded.

Install Keepalived:

    tar -zxvf keepalived-1.1.20.tar.gz
    cd keepalived-1.1.20
    ./configure --prefix=/usr/local/keepalived/
    make
    make install
    ln -s /usr/local/keepalived/etc/keepalived /etc/
    ln -s /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/rc.d/init.d/
    ln -s /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
    ln -s /usr/local/keepalived/bin/genhash /bin/
    ln -s /usr/local/keepalived/sbin/keepalived /sbin/

During configure, check that "Use IPVS Framework", "IPVS sync daemon support" and "Use VRRP Framework" all report Yes; otherwise Keepalived is built without IPVS support.

II. Configuring MySQL master-slave replication

Master configuration

Edit the configuration file (vi /etc/f) and add:

    server-id = 1             ## master ID
    binlog-do-db = ppl        ## database to replicate
    binlog-ignore-db = mysql  ## database excluded from replication
    ## log-bin must also be enabled in this file, for example: log-bin = mysql-bin

Then run mysql -uroot -p and execute:

    mysql> grant replication slave on *.* to 'slave'@'%' identified by '123456';
    mysql> create database ppl;
    mysql> flush logs;
    mysql> show master status;
    mysql> use ppl;
    mysql> create table test(name char);

show master status returns a table. Note its File and Position values; the slave configuration below needs them.

Slave configuration

Edit the configuration file (vi /etc/f) and add:

    server-id = 2                  ## slave ID
    master-host = 192.168.5.171    ## address of the master
    master-user = slave            ## replication account
    master-password = 123456       ## replication password
    master-port = 3306             ## MySQL port on the master
    replicate-do-db = ppl          ## database to replicate
    replicate-ignore-db = mysql    ## database to ignore
    slave-skip-errors = 1062       ## errors to skip during replication, here error 1062
    #log-slave-updates             ## also write replicated changes to this server's own binlog; needed if another slave replicates from this machine
    #skip-slave-start              ## do not start the slave threads automatically at startup
    #read-only                     ## make the server read-only so it only receives changes from the master (avoids auto-increment key conflicts)

Then run mysql -uroot -p and execute:

    mysql> create database ppl;
    mysql> change master to master_log_file='mysql-bin.000007', master_log_pos=106;
    mysql> slave start;
    mysql> show slave status \G

In the output, replication is working if both Slave_IO_Running and Slave_SQL_Running are Yes.

III. Load balancing, hot standby and read/write splitting with LVS + Keepalived

Configuration on the Master

vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id MySQL-HA
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 90
        priority 100
        advert_int 1
        notify_master "/usr/local/mysql/bin/remove_slave.sh"
        nopreempt
        authentication {
            auth_type PASS
            auth_pass        # value missing in the source
        }
        virtual_ipaddress {
            192.168.5.173 label eth0:1
            192.168.5.174 label eth0:2
        }
    }
    virtual_server 192.168.5.173 3306 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.5.171 3306 {
            weight 3
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
    }
    virtual_server 192.168.5.174 3306 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.5.171 3306 {
            weight 1
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
        real_server 192.168.5.172 3306 {
            weight 3
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
    }

vi /usr/local/mysql/bin/remove_slave.sh

    #!/bin/bash
    user=root
    password=123456
    log=/root/mysqllog/remove_slave.log
    #--------------------------------------------------------------------------------------
    echo "`date`" >> $log
    /usr/bin/mysql -u$user -p$password -e "set global read_only=OFF;reset master;stop slave;change master to master_host='localhost';" >> $log
    /bin/sed -i 's#read-only#\#read-only#' /etc/f

    chmod 755 /usr/local/mysql/bin/remove_slave.sh

vi /usr/local/mysql/bin/mysql.sh

    #!/bin/bash
    /etc/init.d/keepalived stop

Configuration on the Slave

vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id MySQL-HA
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 90
        priority 99
        advert_int 1
        notify_master "/usr/local/mysql/bin/remove_slave.sh"
        authentication {
            auth_type PASS
            auth_pass        # value missing in the source
        }
        virtual_ipaddress {
            192.168.5.173 label eth0:1
            192.168.5.174 label eth0:2
        }
    }
    virtual_server 192.168.5.173 3306 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.5.172 3306 {
            weight 3
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
    }
    virtual_server 192.168.5.174 3306 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.5.172 3306 {
            weight 3
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
    #   real_server 192.168.5.172 3306 {
    #       weight 3
    #       TCP_CHECK {
    #           connect_timeout 10
    #           nb_get_retry 3
    #           delay_before_retry 3
    #           connect_port 3306
    #       }
    #   }
    }

vi /usr/local/mysql/bin/remove_slave.sh

    #!/bin/bash
    user=root
    password=123456
    log=/root/mysqllog/remove_slave.log
    #--------------------------------------------------------------------------------------
    echo "`date`" >> $log
    /usr/bin/mysql -u$user -p$password -e "set global read_only=OFF;reset master;stop slave;change master to master_host='localhost';" >> $log
    /bin/sed -i 's#read-only#\#read-only#' /etc/f

    chmod 755 /usr/local/mysql/bin/remove_slave.sh

vi /usr/local/mysql/bin/mysql.sh

    #!/bin/bash
    /etc/init.d/keepalived stop

vi /usr/local/keepalived/bin/lvs-rs.sh

    #!/bin/bash
    WEB_VIP=192.168.5.174
    . /etc/rc.d/init.d/functions
    case "$1" in
    start)
        ifconfig lo:0 $WEB_VIP netmask 255.255.255.255 broadcast $WEB_VIP
        /sbin/route add -host $WEB_VIP dev lo:0
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        ;;
    stop)
        ifconfig lo:0 down
        route del $WEB_VIP >/dev/null 2>&1
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stoped"
        ;;
    status)
        # Status of LVS-DR real server.
        islothere=`/sbin/ifconfig lo:0 | grep $WEB_VIP`
        isrothere=`netstat -rn | grep "lo:0" | grep $WEB_VIP`
        if [ ! "$islothere" -o ! "$isrothere" ]; then
            # Either the route or the lo:0 device
            # not found.
            echo "LVS-DR real server Stopped."
        else
            echo "LVS-DR Running."
        fi
        ;;
    *)
        # Invalid entry.
        echo "$0: Usage: $0 {start|status|stop}"
        exit 1
        ;;
    esac
    exit 0

    chmod 755 /usr/local/keepalived/bin/lvs-rs.sh
    echo "/usr/local/keepalived/bin/lvs-rs.sh start" >> /etc/rc.local

Edit the MySQL configuration file (vi /etc/f), remove the leading # from the following two options, and restart MySQL:

    #skip-slave-start
    #read-only

Log in to MySQL and start the slave threads by hand:

    mysql> slave start;

Start Keepalived on the master first; once it is running normally, start it on the slave.
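The replication check described above (both Slave_IO_Running and Slave_SQL_Running must be Yes) is easy to script. The following is a minimal sketch, not part of the original setup: slave_threads_ok is a hypothetical helper that reads the text of "show slave status \G" on standard input, so it can be tried without a running server.

```shell
#!/bin/bash
# Hypothetical helper: return 0 only if both replication threads report "Yes"
# in the "show slave status \G" text supplied on standard input.
slave_threads_ok() {
    local status io sql
    status=$(cat)
    io=$(printf '%s\n' "$status" | awk -F': *' '/^ *Slave_IO_Running:/ {print $2}')
    sql=$(printf '%s\n' "$status" | awk -F': *' '/^ *Slave_SQL_Running:/ {print $2}')
    [ "$io" = "Yes" ] && [ "$sql" = "Yes" ]
}

# Example with canned output; on a real slave the input would come from
#   mysql -uroot -p -e 'show slave status \G'
printf 'Slave_IO_Running: Yes\nSlave_SQL_Running: Yes\n' | slave_threads_ok \
    && echo "replication threads running"
```

Reading from standard input keeps the credentials and the mysql invocation outside the helper, so the same check can be reused from a cron job or from a Keepalived notify script.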

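Replication in MySQL and how to configure a master/standby pair

Introduction: MySQL is one of the most widely used relational databases, and replication is one of its main tools for data reliability and high availability.

This article describes how replication works in MySQL and how to configure a master and a standby server.

I. Replication in MySQL

1.1 What MySQL replication is

Replication is the process of synchronizing the data of one database server (the master) to other database servers (the standbys, or slaves).

The master and the standbys stay in sync by way of the binary log (binlog).

1.2 Advantages of replication

1.2.1 Backup and recovery: replication keeps a copy of the master's data on the standby, which can be used for backup and recovery.

1.2.2 Distributed reads: with a master and one or more standbys, reads can be separated from writes, which improves database throughput.

1.2.3 High availability: when the master fails, a standby can take over its role and keep the system available.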

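1.3 How replication works

MySQL replication involves three main parts: the master, the standby (slave), and the relay log.

1.3.1 Master: the master accepts write operations from clients and records them in its binary log.

1.3.2 Standby (slave): the standby connects to the master, requests the write operations recorded in the master's binary log, and executes them so that its data stays consistent with the master's.

1.3.3 Relay log: the events the standby fetches from the master are first written to a relay log on the standby; a separate thread on the standby then reads the relay log and applies the events.

The relay log is local to each standby. If further standbys are to replicate from this standby, it must also write the applied changes to its own binary log (the log-slave-updates option), and the downstream standbys read that binary log.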

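II. Configuring the master and the standby

2.1 Configuring the master

2.1.1 Enable the binary log

In the master's configuration file (f), add the `log-bin` option, for example `log-bin = mysql-bin`, to enable binary logging. The value is the base name of the log files rather than an on/off switch.

2.1.2 Set the server ID

In the master's configuration file, set a server ID that is unique among all servers in the replication setup, so that the master and the standbys can tell each other apart.

2.1.3 Create the replication user

On the master, create a user for the standby to connect with and grant it the appropriate privilege (REPLICATION SLAVE).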
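Steps 2.1.1 to 2.1.3 can be sketched as two small generators. This is an illustration under stated assumptions, not a definitive procedure: the function names, the account name repl and the password are made up, and the GRANT statement uses the older "identified by" form that MySQL 5.x accepts (newer releases create the user separately).

```shell
#!/bin/bash
# Hypothetical helper: print a minimal [mysqld] fragment for a replication master.
# server_id must be unique in the replication setup; log_base is the binlog base name.
master_cnf_fragment() {
    local server_id=$1 log_base=${2:-mysql-bin}
    cat <<EOF
[mysqld]
server-id = ${server_id}
log-bin = ${log_base}
EOF
}

# Hypothetical helper: print the statement that creates the replication account.
replication_grant_sql() {
    local user=$1 host=$2 password=$3
    printf "grant replication slave on *.* to '%s'@'%s' identified by '%s';\n" \
        "$user" "$host" "$password"
}

master_cnf_fragment 1
replication_grant_sql repl '192.168.1.%' 'change-me'
```

The helpers only print text; appending the fragment to the server's configuration file and running the statement through the mysql client are left as manual steps so that nothing is changed by accident.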

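MariaDB master-master + LVS + Keepalived: load balancing and high availability for a database service

I. Architecture

Keepalived is used to build a highly available MariaDB pair (MariaDB-HA). The two MariaDB servers keep their data consistent, Keepalived provides the virtual IP, and Keepalived's built-in service monitoring switches over automatically when a MariaDB server fails.

Keepalived was designed for building highly available LVS load-balancing clusters. It creates the virtual servers and manages the server pool itself (the work otherwise done by hand with ipvsadm), so it is not limited to two-node hot standby.

Building an LVS cluster with Keepalived is simpler. Its main advantages are:

1. Hot-standby failover for the LVS load balancer (director), which improves availability.

2. Health checks on the nodes in the server pool: failed nodes are removed automatically and added back once they recover.

An LVS cluster built on LVS + Keepalived contains at least two directors in hot standby and two or more node servers.

This example starts from an LVS cluster in DR mode, adds a second director, and uses Keepalived for director hot standby, giving an LVS cluster with both load balancing and high availability.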

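Keepalived provides high availability or hot standby and so removes single points of failure. Its core is the VRRP protocol, which was designed for redundancy at routers and layer-3 switches; Keepalived uses VRRP to implement failover.

In a cluster built with LVS + Keepalived, LVS balances client requests across the back-end MariaDB servers and Keepalived monitors their state. If a MariaDB server goes down or malfunctions, Keepalived detects it and removes that server from the pool; once the server works again, Keepalived adds it back. All of this happens automatically. The only manual work is repairing the failed MariaDB server.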
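The health check described above boils down to "can a TCP connection to the database port be opened in time". Keepalived does this internally with its own checkers; the sketch below only illustrates the idea in shell. The names tcp_check and check_pool are made up, and the sketch assumes bash (for /dev/tcp) and the coreutils timeout command.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT opens
# within TIMEOUT seconds (default 3).
tcp_check() {
    local host=$1 port=$2 timeout_s=${3:-3}
    timeout "$timeout_s" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

# Hypothetical helper: report each "host:port" argument as up or down,
# the way a director would decide which real servers stay in the pool.
check_pool() {
    local entry
    for entry in "$@"; do
        if tcp_check "${entry%:*}" "${entry#*:}" 1; then
            echo "$entry up"
        else
            echo "$entry down"
        fi
    done
}

# Port 1 on localhost is normally closed, so this entry is reported as down.
check_pool 127.0.0.1:1
```

A server reported as down would be taken out of the pool, and put back once the same check succeeds again.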

Keepalived for MySQL master-master replication and a high-availability cluster

I. Preparation

Stop iptables:

    service iptables stop; chkconfig iptables off

Disable SELinux:

    vi /etc/selinux/config

Check whether MySQL is already installed and remove it if so:

    rpm -qa | grep mysql
    yum remove mysql-libs-5.1.66-2.el6_3.x86_64

II. Installing the database

Install the required packages:

    yum install perl perl-devel libaio libaio-devel

Install MySQL-shared-compat to replace mysql-libs; without it, removing mysql-libs reports that postfix depends on mysql-libs:

    rpm -ivh MySQL-shared-compat-5.6.26-1.el6.x86_64.rpm

Install the server:

    rpm -ivh MySQL-server-5.6.26-1.el6.x86_64.rpm

Install the client:

    rpm -ivh MySQL-client-5.6.26-1.el6.x86_64.rpm

Enable start at boot: chkconfig mysql on
Start MySQL: service mysql start
Stop MySQL: service mysql stop
Get the initial root password: more /root/.mysql_secret

Change the root password and remove the anonymous accounts:

    /usr/bin/mysql_secure_installation --user=mysql

Change the host name:

    vi /etc/sysconfig/network
    vi /etc/hosts

Edit the configuration file on the master (see the attachment for the file):

    vi /usr/f

Edit the configuration file on the slave (see the attachment for the file).

Log in to MySQL:

    mysql -u root -p
    show master status
    show global variables like '%uuid%'

Create and authorize the replication account on both machines:

    grant replication slave on *.* to 'mysql'@'192.168.2.%' identified by 'mysql';
    flush privileges;    # reload privileges

Connect the slave to the master:

    change master to master_host='192.168.2.61', master_user='mysql', master_password='mysql', master_port=3306, master_auto_position=1;
    start slave;

Connect the master to the slave:

    change master to master_host='192.168.2.62', master_user='mysql', master_password='mysql', master_port=3306, master_auto_position=1;
    start slave;

III. Installing and configuring Keepalived (see the attachment for the configuration file)

    yum install keepalived
    vi /etc/keepalived/keepalived.conf

IV. Testing

    grant all privileges on *.* to 'root'@'%' identified by '123456';
    mysql -h "192.168.2.60" -u root -p
    show variables like "server_id";
    show processlist;
    show databases;
    show slave status\G
    show master status\G

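I. Environment

Master (host A): 192.168.1.1
Slave (host B): 192.168.1.2
W-VIP (writes): 192.168.1.3
R-VIP (reads): 192.168.1.4
Client (testing): 192.168.1.100
Operating system: CentOS release 6.4
MySQL version: 5.6.14
Keepalived version: 1.2.7
LVS version: 1.26
All machines are virtual machines.

II. Design

1. Servers A and B synchronize data through MySQL's slave replication threads.

2. Keepalived brings up two virtual IPs, W-VIP and R-VIP. One handles writes and the other handles reads, which gives read/write splitting.

3. While both A and B are up, W-VIP forwards requests to host A and R-VIP forwards requests to both A and B, which gives load balancing.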

4. When host A fails, B takes over. W-VIP and R-VIP both float to host B, so both virtual IPs are backed only by host B, which gives high availability.

5. When host B fails, R-VIP removes B from its pool; nothing else changes.

III. Architecture diagram

IV. Software installation

Install the following on both the master and the slave:

1. MySQL (omitted)

2. Keepalived

    yum install keepalived

3. LVS

    yum install ipvsadm

V. Configuration

1. Configure MySQL master-slave replication (omitted)

2. Configure Keepalived

Configuration on the Master

vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
        router_id MySQL-ha
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth1
        virtual_router_id 90
        priority 100
        advert_int 1
        notify_master "/usr/local/mysql/bin/remove_slave.sh"
        nopreempt
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.1.3 label eth1:1
            192.168.1.4 label eth1:2
        }
    }

    virtual_server 192.168.1.3 6603 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.1.1 6603 {
            weight 3
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 6603
            }
        }
    }

    virtual_server 192.168.1.4 6603 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.1.1 6603 {
            weight 1
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 6603
            }
        }
        real_server 192.168.1.2 6603 {
            weight 3
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 6603
            }
        }
    }

Register Keepalived as a service and enable it at boot:

    cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/rc.d/init.d/
    cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
    cp /usr/local/keepalived/sbin/keepalived /usr/sbin/
    chkconfig --add keepalived
    chkconfig --level 345 keepalived on

vi /usr/local/mysql/bin/remove_slave.sh

    #!/bin/bash
    user=u1
    password=12345
    log=/usr/local/mysql/log/remove_slave.log
    echo "`date`" >> $log
    /usr/local/mysql/bin/mysql -u$user -p$password -e "set global read_only=OFF;reset master;stop slave;change master to master_host='localhost';" >> $log
    /bin/sed -i 's#read-only#\#read-only#' /etc/f

vi /usr/local/mysql/bin/mysql.sh

    #!/bin/bash
    /etc/init.d/keepalived stop

Configuration on the Slave

vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
        router_id MySQL-ha
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth1
        virtual_router_id 90
        priority 99
        advert_int 1
        notify_master "/usr/local/mysql/bin/remove_slave.sh"
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.1.3 label eth1:1
            192.168.1.4 label eth1:2
        }
    }

    virtual_server 192.168.1.3 6603 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.1.2 6603 {
            weight 3
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 6603
            }
        }
    }

    virtual_server 192.168.1.4 6603 {
        delay_loop 2
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.1.2 6603 {
            weight 3
            notify_down /usr/local/mysql/bin/mysql.sh
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 6603
            }
        }
    }

On the Slave, the service registration commands and the remove_slave.sh and mysql.sh scripts are identical to those on the Master.

3. Configure LVS

The configuration is the same on the Master and the Slave:

vi /usr/local/bin/lvs_real.sh

    #!/bin/bash
    # description: Config realserver lo and apply noarp

    SNS_VIP=192.168.1.3
    SNS_VIP2=192.168.1.4
    source /etc/rc.d/init.d/functions
    case "$1" in

    start)
        ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP
        ifconfig lo:1 $SNS_VIP2 netmask 255.255.255.255 broadcast $SNS_VIP2
        /sbin/route add -host $SNS_VIP dev lo:0
        /sbin/route add -host $SNS_VIP2 dev lo:1
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        ;;

    stop)
        ifconfig lo:0 down
        ifconfig lo:1 down
        route del $SNS_VIP >/dev/null 2>&1
        route del $SNS_VIP2 >/dev/null 2>&1
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stoped"
        ;;

    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0

    chmod 755 /usr/local/bin/lvs_real.sh
    echo "/usr/local/bin/lvs_real.sh start" >> /etc/rc.local

VI. Starting the Master and the Slave

1. Start MySQL on the Master: service mysql start
2. Start MySQL on the Slave: service mysql start
3. Run the realserver script on the Master: /usr/local/bin/lvs_real.sh start
4. Run the realserver script on the Slave: /usr/local/bin/lvs_real.sh start
5. Start Keepalived on the Master: service keepalived start
6. Start Keepalived on the Slave: service keepalived start

VII. Testing

1. Check that LVS forwards and balances traffic. On both the Master and the Slave run:

    ipvsadm -ln

2. Verify connectivity from the Client:

    ping 192.168.1.3
    ping 192.168.1.4
    mysql -u u1 -p12345 -P 6603 -h 192.168.1.3 -e "show variables like 'server_id'"
    mysql -u u1 -p12345 -P 6603 -h 192.168.1.4 -e "show variables like 'server_id'"

3. Stop MySQL on the Master and check that the write VIP switches to the Slave automatically and that the read VIP drops the Master's MySQL. On both the Master and the Slave run:

    ipvsadm -ln

On the Client run:

    mysql -u u1 -p12345 -P 6603 -h 192.168.1.3 -e "show variables like 'server_id'"
    mysql -u u1 -p12345 -P 6603 -h 192.168.1.4 -e "show variables like 'server_id'"

4. Stop Keepalived on the Master and check that both the read VIP and the write VIP move to the Slave.
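When running the ipvsadm -ln checks above, it helps to reduce the table to "how many real servers sit behind each virtual service". The following is a minimal sketch that assumes the usual ipvsadm -ln layout (a TCP or UDP line per virtual service, followed by "->" lines for its real servers). count_real_servers is a made-up name, and the sample table is illustrative rather than captured from the machines in this setup.

```shell
#!/bin/bash
# Hypothetical helper: read "ipvsadm -ln" output on stdin and print
# "<virtual service> <number of real servers>" for each virtual service.
count_real_servers() {
    awk '
        $1 == "TCP" || $1 == "UDP" { vs = $2; order[++n] = vs; count[vs] = 0; next }
        $1 == "->" && $2 ~ /^[0-9]/ { count[vs]++ }   # skips the "-> RemoteAddress:Port" header
        END { for (i = 1; i <= n; i++) print order[i], count[order[i]] }
    '
}

# Illustrative input; on a director the input would come from: ipvsadm -ln
count_real_servers <<'EOF'
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP  192.168.1.3:6603 wrr persistent 60
  -> 192.168.1.1:6603 Local 3 0 0
TCP  192.168.1.4:6603 wrr persistent 60
  -> 192.168.1.2:6603 Route 3 0 0
  -> 192.168.1.1:6603 Local 1 0 0
EOF
```

With both hosts healthy, the write VIP should show one real server and the read VIP two; after the Master's MySQL is stopped, both counts should drop to the Slave alone.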

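LVS + Keepalived load balancing for MySQL reads

I. Terms

VIP: virtual IP
RIP: real server IP
DIRECTOR: the scheduler (the machine with ipvsadm installed)

II. The three modes: NAT, TUN, DR

DR: direct routing mode. Reply packets do not pass through the DIRECTOR. Because this mode only rewrites the destination MAC address, no router may sit between the real servers and the DIRECTOR; LVS and the real servers must be on the same network segment.

* DR mode works without IP forwarding enabled. For security, leave forwarding off unless it is needed.

III. The ten LVS scheduling algorithms

rr: plain round robin
wrr: weighted round robin
lc: least connections
wlc: weighted least connections
lblc: locality-based least connections; passes requests to the server with the lighter load
lblcr: locality-based least connections with replication
dh: destination hashing
sh: source hashing, which distinguishes requests by client address
sed: shortest expected delay
nq: never queue

IV. Installation and configuration

Environment:

RIP1: 192.168.2.61
RIP2: 192.168.2.62
LVS1: 192.168.2.60
LVS2: 192.168.2.63
VIP: 192.168.2.215

4.1 Add the VIP address (real servers)

In DR and TUN mode, a request that reaches a real server is answered directly to the client without passing back through the front-end director. Each real server therefore needs the VIP configured locally so that it can reply to the client with that address.

The echo lines in the script suppress ARP replies for the VIP.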

Add the following script on both real servers:

    [root@wch ~]# cat /etc/lvs.sh
    #!/bin/bash
    VIP=192.168.2.215
    . /etc/rc.d/init.d/functions
    case "$1" in
    start)
        /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
        echo "real server lvs start!"
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        echo "real server lvs stop!"
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0

LVS_MASTER

4.2 Install Keepalived (directors)

Several dependencies must be installed before compiling: gcc, openssl, openssl-devel, popt, popt-devel.

    [root@localhost ~]# tar zxvf keepalived-1.1.15.tar.gz

If the /usr/src/kernels/ directory contains no kernel files, install "kernel-devel".

    [root@localhost keepalived-1.1.15]# ./configure --sysconf=/etc --with-kernel-dir=/usr/src/kernels/2.6.32-358.11.1.el6.x86_64

--sysconf sets where the configuration file is installed (/etc/keepalived/keepalived.conf).
--with-kernel-dir points to the kernel source headers to use (the include directory).

    [root@localhost keepalived-1.1.15]# make && make install

[* Note] Run "ln -s /usr/local/sbin/keepalived /sbin/" so that /etc/init.d/keepalived can find the executable.

1. MySQL-VIP: 192.168.1.10
2. MySQL-master1: 192.168.1.4
3. MySQL-master2: 192.168.1.9
4. lvs_server master: 192.168.1.5
5. lvs_server backup: 192.168.1.6
6. Linux version: 2.6.18-164.el5
7. MySQL version: 5.0.56
8. Keepalived version: 1.1.17

Implementation steps:

① On the real servers, run the realserver script, which binds the VIP 192.168.1.10 to lo:0. Do this on both MySQL hosts, 192.168.1.4 and 192.168.1.9.

This step comes first because it does not change during the rest of the procedure.

vim /usr/local/bin/lvs_real.sh

    #!/bin/bash
    #description : start realserver
    VIP=192.168.1.10
    source /etc/rc.d/init.d/functions
    case "$1" in
    start)
        echo " start LVS of realserver"
        /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        echo " close LVS directorserver"
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac

When the script is in place, run it: . lvs_real.sh start (or stop)

What the script does, briefly:

1) VIP (virtual IP). In direct routing mode the VIP must be on the same network segment as the address on which the servers offer their service, and the LVS load balancer and every server providing the same service all use this VIP.

2) The VIP is bound to the loopback alias lo:0. Its broadcast address is the VIP itself and its netmask is 255.255.255.255.

This differs considerably from an ordinary network address configuration.

Using a full-length mask to carve out a segment that contains only one host address is done to avoid IP address conflicts.

3) The echo lines suppress ARP for the VIP.

Without ARP suppression, many machines would announce to everyone else: "Hi! I'm Obama, I'm over here!", and the network would descend into chaos.
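The echo lines in the script write to /proc and are lost at reboot. As a hedged aside, the same four settings can be expressed as sysctl.conf-style lines; the sketch below only prints them. arp_sysctl_lines is a made-up name, and appending the output to /etc/sysctl.conf is an assumption about how one might persist the values, not a step from the original procedure.

```shell
#!/bin/bash
# Hypothetical helper: print sysctl.conf-style lines for the ARP settings a
# DR real server needs. Defaults match the script: arp_ignore=1, arp_announce=2.
arp_sysctl_lines() {
    local ignore=${1:-1} announce=${2:-2} iface
    for iface in lo all; do
        echo "net.ipv4.conf.${iface}.arp_ignore = ${ignore}"
        echo "net.ipv4.conf.${iface}.arp_announce = ${announce}"
    done
}

arp_sysctl_lines        # the values used by "start"
arp_sysctl_lines 0 0    # the values restored by "stop"
```

With arp_ignore=1 a real server answers ARP requests only for addresses configured on the interface that received the request, so the VIP on lo is never advertised; with arp_announce=2 it avoids using the VIP as the source address in its own ARP requests.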

② Install LVS and Keepalived on both LVS hosts.

Installing LVS is mandatory because Keepalived runs on top of LVS, so LVS and Keepalived must be installed on the same system.

The procedure is:

    #mkdir /usr/local/src/lvs
    #cd /usr/local/src/lvs
    #wget /software/kernel-2.6/ipvsadm-1.24.tar.gz
    #ln -s /usr/src/kernels/2.6.18-53.el5PAE-i686/ /usr/src/linux
    #tar zxvf ipvsadm-1.24.tar.gz
    #cd ipvsadm-1.24
    #make
    #make install

Run ipvsadm once, then check the loaded kernel modules with lsmod | grep ip_vs:

    [root@lvs ipvsadm-1.24]# lsmod | grep ip_vs
    ip_vs 77569 0

③ Edit keepalived.conf and let Keepalived itself provide both load balancing and high availability.

a) Installing Keepalived

    #wget /software/keepalived-1.1.15.tar.gz
    #tar zxvf keepalived-1.1.15.tar.gz
    #cd keepalived-1.1.15
    #./configure

configure prints the following summary:

    Keepalived configuration
    ------------------------
    Keepalived version       : 1.1.17
    Compiler                 : gcc
    Compiler flags           : -g -O2
    Extra Lib                : -lpopt -lssl -lcrypto
    Use IPVS Framework       : Yes
    IPVS sync daemon support : Yes
    Use VRRP Framework       : Yes
    Use LinkWatch            : No
    Use Debug flags          : No

    #make
    #make install

Turn Keepalived into a service so that it is easier to manage:

    #cp /usr/local/etc/rc.d/init.d/keepalived /etc/rc.d/init.d/
    #cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/
    #mkdir /etc/keepalived
    #cp /usr/local/etc/keepalived/keepalived.conf /etc/keepalived/
    #cp /usr/local/sbin/keepalived /usr/sbin/
    #service keepalived start|stop

Configuration of the primary LVS:

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            yuhongchun027@
        }
        notification_email_from sns-lvs@
        smtp_server 127.0.0.1
        router_id LVS_DEVEL_1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.1.10
        }
    }
    virtual_server 192.168.1.10 3306 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.1.4 3306 {
            weight 3
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
        real_server 192.168.1.9 3306 {
            weight 3
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
    }

Configuration of the backup LVS:

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            yuhongchun027@
        }
        notification_email_from sns-lvs@
        smtp_server 127.0.0.1
        router_id LVS_DEVEL_2        # differs from the primary
    }
    vrrp_instance VI_1 {
        state BACKUP                 # differs from the primary
        interface eth0
        virtual_router_id 51
        priority 99                  # differs from the primary
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.1.10
        }
    }
    virtual_server 192.168.1.10 3306 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        persistence_timeout 60
        protocol TCP
        real_server 192.168.1.4 3306 {
            weight 3
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
        real_server 192.168.1.9 3306 {
            weight 3
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                delay_before_retry 3
                connect_port 3306
            }
        }
    }

④ Run service keepalived start on both LVS machines; this brings up the load-balanced, highly available cluster.

The contents of keepalived.conf are explained below.

● Global definition block

1. Email notification.