Behavior classification by eigendecomposition of periodic motions

- 格式:pdf

- 大小:617.74 KB

- 文档页数:11

基于深度学习的行为识别与异常行为检测算法研究随着科技的不断进步和深度学习领域的快速发展,人工智能在各个领域得到了广泛应用。

其中,基于深度学习的行为识别与异常行为检测算法成为了近年来备受关注的研究方向。

本文将从行为识别和异常行为检测两个方面介绍该领域的研究进展,并探讨其应用前景。

首先,行为识别是深度学习中的一个重要任务。

通过分析人类和其他生物的行为,可以更好地理解他们的意图和动机,并且这对于智能系统的交互和决策具有非常重要的意义。

在行为识别任务中,主要通过深度学习模型对输入数据进行特征提取和分类。

传统的行为识别技术通常采用手工设计的特征提取方法,但这种方法存在一定的局限性。

而深度学习可以通过端到端的训练方式,自动学习数据中的特征表示,并在一定程度上提高行为识别的性能。

目前,常用的深度学习模型包括卷积神经网络(Convolutional Neural Network,CNN)和循环神经网络(Recurrent Neural Network,RNN)等。

CNN主要用于处理图像和视频数据,对于行为识别中的图像序列或视频数据具有良好的表达能力。

而RNN则适用于处理时间序列数据,可以建模动态行为以及时间依赖关系。

另外,为了提高行为识别的性能,研究者们还结合了其他技术,如注意力机制、迁移学习和强化学习等。

通过引入注意力机制,可以使模型更关注重要的行为片段或特征,从而提高行为识别的准确性。

迁移学习可以利用已有的知识来加快新任务的学习速度,这对于行为识别中数据量较小的问题非常有用。

在强化学习中,可以通过与环境的交互,使模型能够自主学习并优化行为策略。

除了行为识别,异常行为检测也是基于深度学习的重要研究方向。

异常行为通常指的是与正常行为不一致或具有潜在风险的行为。

在许多实际应用中,如视频监控、工业安全等领域,对异常行为的检测具有重要意义。

相比于传统的异常检测方法,基于深度学习的异常行为检测算法具有更高的准确性和鲁棒性。

人工智能生态相关的专业名词解释下载温馨提示:该文档是我店铺精心编制而成,希望大家下载以后,能够帮助大家解决实际的问题。

文档下载后可定制随意修改,请根据实际需要进行相应的调整和使用,谢谢!并且,本店铺为大家提供各种各样类型的实用资料,如教育随笔、日记赏析、句子摘抄、古诗大全、经典美文、话题作文、工作总结、词语解析、文案摘录、其他资料等等,如想了解不同资料格式和写法,敬请关注!Download tips: This document is carefully compiled by the editor. I hope that after you download them, they can help yousolve practical problems. The document can be customized and modified after downloading, please adjust and use it according to actual needs, thank you!In addition, our shop provides you with various types of practical materials, such as educational essays, diary appreciation, sentence excerpts, ancient poems, classic articles, topic composition, work summary, word parsing, copy excerpts,other materials and so on, want to know different data formats and writing methods, please pay attention!人工智能技术的快速发展,推动着人工智能生态系统的形成和完善。

![基于微表情分析的抑郁症识别系统[发明专利]](https://uimg.taocdn.com/9eb0a911abea998fcc22bcd126fff705cc175cf1.webp)

(19)中华人民共和国国家知识产权局(12)发明专利申请(10)申请公布号 (43)申请公布日 (21)申请号 202011101287.9(22)申请日 2020.10.15(71)申请人 南京邮电大学地址 210003 江苏省南京市鼓楼区新模范马路66号(72)发明人 张晖 李可欣 赵海涛 孙雁飞 朱洪波 (74)专利代理机构 南京苏高专利商标事务所(普通合伙) 32204代理人 曹坤(51)Int.Cl.G06K 9/00(2006.01)G06K 9/62(2006.01)G06N 3/04(2006.01)G06T 7/269(2017.01)(54)发明名称基于微表情分析的抑郁症识别系统(57)摘要本发明公开了一种基于微表情分析的抑郁症识别系统。

属于计算机视觉领域;具体步骤:1、训练深度多任务识别网络;2、对人脸的重要的局部区域进行划分,剔除与微表情无关的区域;3、训练自适应的双流神经网络,对微表情运动的开始帧、Apex帧、结束帧进行定位;4、根据在不同背景下对微表情的分析判断该人是否患有抑郁症。

本发明以深度多任务神经网络为基础,对图像进行预处理,从而进行人脸重要局部区域划分,提高双流神经网络的识别速度,满足实时性的要求;并通基于注意力机制的BLSTM ‑CNN神经网络提取重要的帧图片特征以及自适应融合双流神经网络提取到的双流特征提高微表情运动帧的定位,进而提高微表情识别的速度和准确性。

权利要求书1页 说明书6页 附图2页CN 112232191 A 2021.01.15C N 112232191A1.基于微表情分析的抑郁症识别系统,其特征在于,具体步骤包括如下:步骤(1.1)、对视频信息预处理,通过训练深度多任务识别网络得到人脸状态;步骤(1.2)、根据得到的输出人脸状态结果,对人脸的局部区域进行划分,剔除与微表情无关的区域;步骤(1.3)、将划分的局部区域作为原始信息,对其进行提取光流,后将原始信息与提取的光流信息输入到自适应的双流神经网络中,对双流神经网络进行训练,进而对微表情运动的开始帧、峰值帧、结束帧进行定位;步骤(1.4)、通过输出的定位结果对微表情进行分析,根据在不同背景下对微表情的分析判断该人是否患有抑郁症。

classification名词解释Classification(分类)是一种在计算机科学中常用的数据挖掘主题,是学习者根据训练数据集中的某些特征,将潜在的数据分类、组织和区分到多个类别群体中的一种算法。

是在统计学、机器学习和模式识别领域,发展起来的学科,专门用来解决分类、聚类问题。

二、Classification(分类)的方法Classification(分类)的方法有很多,但最常用的方法之一就是朴素贝叶斯(Naive Bayes)分类,它是一种贝叶斯统计方法,可以把一组特征关联到多个类别的分类算法。

它基于概率论,依据给定的特征和类别,基于概率原理确定最有可能的类别。

朴素贝叶斯(Naive Bayes)分类属于监督学习,在给定训练集上可以有效地进行分类,能够良好地处理多维特征和较多的类别。

另外,还有 K邻算法(K-nearest neighbour,KNN),它是一种基于实例的学习方法,可以根据现有数据集中的模式去预测新的数据,不需要先构建模型。

KNN类器会搜索训练集中的数据点,并以它们为基础来确定新数据点的分类,它的好处是很容易实现,但是属于比较慢的算法,而且会产生大量的计算量。

决策树(Decision tree)也是一种常用的分类算法,它可以根据一定特征属性对目标变量进行划分,属于监督式学习,是典型的树形模型,实现的较为简单。

它的优点是在某些数据集上表现出较高的准确率,而且它可以很好地理解和可视化,在实际工作中使用较多。

最后还有神经网络(Neural Networks),它是一种模拟人类脑细胞的网络,由于它的“深度”,可以用来解决比较复杂的分类问题,而且可以自动从训练数据中学习分类规则,省去了人工定义特征的工作量。

三、Classification(分类)在实际应用中的作用Classification(分类)技术已经被用于许多实际应用,包括机器学习、数据挖掘、信息检索、网页排名、文本处理、自然语言处理、图像处理等场景中。

Gender discrimination is a pervasive issue that affects individuals of all genders,but it is particularly prevalent against women and nonbinary individuals.Addressing this issue requires a multifaceted approach that includes education,legislation,and societal change.Here are some strategies to combat gender discrimination:cation and Awareness:The first step in solving gender discrimination is to raise awareness about its existence and cational programs in schools and workplaces can help to challenge stereotypes and promote understanding of gender equality.2.Legislation and Policies:Governments and organizations should enact and enforce laws that prohibit gender discrimination.This includes equal pay for equal work, maternity and paternity leave,and protection against harassment and violence.3.Workplace Practices:Companies should implement policies that promote diversity and inclusion,such as blind recruitment processes,diversity training,and mentorship programs for underrepresented groups.4.Representation in Leadership:Encouraging and supporting women and nonbinary individuals in leadership positions can help to break down barriers and set a precedent for gender equality.5.Media Representation:The media plays a significant role in shaping societal attitudes. By promoting diverse and positive representations of all genders,the media can help to challenge and change harmful stereotypes.munity Engagement:Grassroots movements and community initiatives can be powerful tools for change.Encouraging dialogue and organizing events that bring attention to gender discrimination can help to mobilize support for change.7.Support Networks:Creating safe spaces and support networks for individuals who have experienced gender discrimination can provide a platform for sharing experiences and advocating for change.8.Men as Allies:Engaging men in the conversation and encouraging them to be allies in the fight against gender discrimination can help to shift societal norms and expectations.9.Intersectionality:Recognizing that gender discrimination often intersects with other forms of discrimination,such as race or sexual orientation,is crucial.Addressing these intersections holistically can lead to more comprehensive solutions.10.Technology and Innovation:Utilizing technology to create platforms for reporting and tracking gender discrimination can help to hold individuals and institutions accountable.11.International Cooperation:Gender discrimination is a global issue that requires international cooperation.Working with international organizations to set standards and share best practices can help to drive global progress.12.Cultural Shift:Ultimately,solving gender discrimination requires a fundamental shift in cultural attitudes.This involves challenging traditional gender roles and expectations, and promoting a culture of respect and equality.By implementing these strategies,societies can work towards a more equitable future where all individuals are valued and treated equally,regardless of their gender.。

基于堆叠降噪自编码器的神经–符号模型及在晶圆表面缺陷识

别

刘国梁;余建波

【期刊名称】《自动化学报》

【年(卷),期】2022(48)11

【摘要】深度神经网络是具有复杂结构和多个非线性处理单元的模型,通过模块化的方式分层从数据提取代表性特征,已经在晶圆缺陷识别领域得到了较为广泛的应用.但是,深度神经网络在应用过程中本身存在“黑箱”和过度依赖数据的问题,显著地影响深度神经网络在晶圆缺陷识别的工业可应用性.提出一种基于堆叠降噪自编码器的神经–符号模型.首先,根据堆叠降噪自编码器的网络特点采用了一套符号规则系统,规则形式和组成结构使其可与深度神经网络有效融合.其次,根据网络和符号规则之间的关联性提出完整的知识抽取与插入算法,实现了深度网络和规则之间的知识转换.在实际工业晶圆表面图像数据集WM-811K上的试验结果表明,基于堆叠降噪自编码器的神经–符号模型不仅取得了较好的缺陷探测与识别性能,而且可有效提取规则并通过规则有效描述深度神经网络内部计算逻辑,综合性能优于目前经典的深度神经网络.

【总页数】15页(P2688-2702)

【作者】刘国梁;余建波

【作者单位】同济大学机械与能源工程学院

【正文语种】中文

【中图分类】TP3

【相关文献】

1.基于混合模型与流形调节的晶圆表面缺陷识别

2.基于局部与非局部线性判别分析和高斯混合模型动态集成的晶圆表面缺陷探测与识别

3.晶圆表面缺陷模式识别的二维主成分分析卷积自编码器

4.基于稀疏堆叠降噪自编码器-深层神经网络的语音DOA估计算法

5.基于堆叠稀疏降噪自编码器的暂态稳定评估模型

因版权原因,仅展示原文概要,查看原文内容请购买。

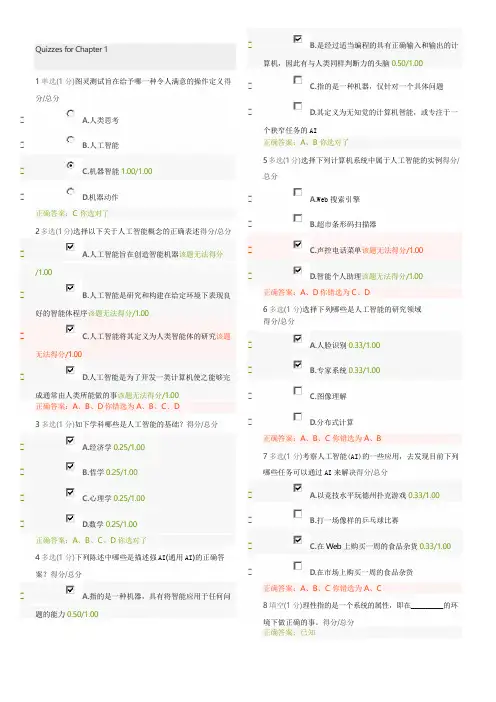

Quizzes for Chapter11单选(1分)图灵测试旨在给予哪一种令人满意的操作定义得分/总分A.人类思考B.人工智能C.机器智能1.00/1.00D.机器动作正确答案:C你选对了2多选(1分)选择以下关于人工智能概念的正确表述得分/总分 A.人工智能旨在创造智能机器该题无法得分/1.00B.人工智能是研究和构建在给定环境下表现良好的智能体程序该题无法得分/1.00C.人工智能将其定义为人类智能体的研究该题无法得分/1.00D.人工智能是为了开发一类计算机使之能够完成通常由人类所能做的事该题无法得分/1.00正确答案:A、B、D你错选为A、B、C、D3多选(1分)如下学科哪些是人工智能的基础?得分/总分A.经济学0.25/1.00B.哲学0.25/1.00C.心理学0.25/1.00D.数学0.25/1.00正确答案:A、B、C、D你选对了4多选(1分)下列陈述中哪些是描述强AI(通用AI)的正确答案?得分/总分A.指的是一种机器,具有将智能应用于任何问题的能力0.50/1.00 B.是经过适当编程的具有正确输入和输出的计算机,因此有与人类同样判断力的头脑0.50/1.00C.指的是一种机器,仅针对一个具体问题D.其定义为无知觉的计算机智能,或专注于一个狭窄任务的AI正确答案:A、B你选对了5多选(1分)选择下列计算机系统中属于人工智能的实例得分/总分A.Web搜索引擎B.超市条形码扫描器C.声控电话菜单该题无法得分/1.00D.智能个人助理该题无法得分/1.00正确答案:A、D你错选为C、D6多选(1分)选择下列哪些是人工智能的研究领域得分/总分A.人脸识别0.33/1.00B.专家系统0.33/1.00C.图像理解D.分布式计算正确答案:A、B、C你错选为A、B7多选(1分)考察人工智能(AI)的一些应用,去发现目前下列哪些任务可以通过AI来解决得分/总分A.以竞技水平玩德州扑克游戏0.33/1.00B.打一场像样的乒乓球比赛C.在Web上购买一周的食品杂货0.33/1.00D.在市场上购买一周的食品杂货正确答案:A、B、C你错选为A、C8填空(1分)理性指的是一个系统的属性,即在_________的环境下做正确的事。

创造力的影响因素分析徐树鹏(摘要:研究从三个方面总结了影响创造力的因素:紧张性刺激刺激,个体因素,和社会影响。

结果发现同一个因素对创造力的影响模式很少有统一的观点,不少研究甚至得出了截然相反的结论。

目前的研究试图采用元分析的技术和分析调节变量来解决这些冲突。

本文还简要阐述了当前的一些研究趋势,并提供了未来研究的一些建议。

关键词: 创造力;紧张性刺激;弱连带关系;元分析;调节变量0 前言目前学者们对于创造力的定义较为一致,一般将其定义为产生新颖的和合适的观点,问题解决方案或见解的能力(Runco, 2004)。

创造力的研究已有60年的历史,期间关于创造力影响因素的研究浩如烟海,且结论大多不统一。

如今越来越多地研究试图去弄清这些影响模式,且不再局限于简单的文献综述,而是采用更高级的研究方法,比如元分析技术。

Feist(1998)就用元分析技术分析了人格特质对科学和艺术领域创造力的影响;Kristin等人(2010)也使用元分析技术去研究紧张性刺激与创造力之间的复杂关系。

本研究总结了影响创造力的诸多因素,尤其关注了其中有冲突的观点,为今后进一步的元分析研究提供一些可供分析的领域。

此外本外还指出了目前研究的一些思路和方向,为后续的研究提供一些线索。

Zorana(2009)认为个体潜能和社会环境之间的交互作用将会决定创造力是否被表达以及如何表达。

本文根据该观点并结合已有文献内容从以下三个方面来分析创造力的影响因素:紧张性刺激刺激,个体因素,和社会影响。

1.紧张性刺激紧张性刺激指能够引起紧张或压力的刺激(Kristin,Shalini,& Deborah,2010),包括竞争,时间压力,表现评估等。

关于紧张性刺激和创造力的关系已做过大量的研究,但是研究结果却并不一致。

Little (引自Kristin,et al.2010)的研究表明与他人的竞争可以提高被试的创造力表现,但是也有研究表明竞争会导致创造力表现的降低(Gerrard, Poteat, & Ironsmith, 1996)。

llamaforsequenceclassification 分类Llama for Sequence Classification: A Step-by-Step GuideIntroduction:In recent years, sequence classification has gained significant attention in various fields such as natural language processing, speech recognition, and bioinformatics. One popular approach that has shown promising results is the Llama algorithm. In this article, we will provide a step-by-step guide on how to effectively use Llama for sequence classification tasks. From understanding the concept to implementing the algorithm, let's dive into the world of Llama.Step 1: Understanding LlamaLlama stands for Long Short-Term Memory (LSTM) and Attention architecture. It combines the power of recurrent neural networks (RNNs) with attention mechanisms to learn dependencies and patterns in sequential data. Llama has proven to be highly effective in capturing long-term dependencies and achievingstate-of-the-art results in various sequence classification tasks.Step 2: Data PreparationTo apply Llama for sequence classification, we need to prepare our data by encoding sequences into numerical representations. This can be done using techniques like one-hot encoding, tokenization, or word embeddings, depending on the nature of the data. It is also essential to split our data into training, validation, and test sets to evaluate the model's performance accurately.Step 3: Building the Llama ModelNow that our data is ready, we can proceed with building the Llama model. The architecture consists of multiple LSTM layers followed by an attention layer and a dense layer for classification. The LSTM layers help capture the sequential information, while the attention layer allows the model to focus on relevant parts of the sequence. The dense layer produces the final classification output.Step 4: Training the Llama ModelWith our model architecture defined, it's time to train the Llama model on our training data. During the training process, the model learns to optimize its parameters based on a given loss function (usually cross-entropy for classification tasks) and minimizes the difference between predicted and actual labels. Training proceeds through multiple epochs, and the model adjusts its weights toimprove performance.Step 5: Model EvaluationOnce the training is complete, it's crucial to evaluate the Llama model's performance on the validation set. Metrics such as accuracy, precision, recall, and F1-score can be used to assess the model's effectiveness. By monitoring these metrics, we can tune hyperparameters and optimize the model accordingly.Step 6: Fine-tuning and OptimizationIf the validation results are not satisfying, we may need to fine-tune our Llama model. This can involve adjusting hyperparameters (e.g., learning rate, number of LSTM layers) or applying techniques like dropout or batch normalization to improve generalization and prevent overfitting.Step 7: Testing the Llama ModelOnce we are confident with the Llama model's performance, we can finally test it on unseen data, or the test set, to assess itsreal-world effectiveness. By evaluating metrics on this set, we can determine the model's ability to generalize to new sequences and compare its performance with other algorithms.Step 8: Deployment and ApplicationAfter successfully training and testing our Llama model, we can deploy it for real-world applications. Whether it's sentiment analysis, speech recognition, or DNA sequence classification, Llama can be deployed in various domains where sequence classification is required. The model can be integrated into existing systems or used to build standalone applications, helping automate decision-making processes.Conclusion:Llama, the Long Short-Term Memory and Attention architecture, is an effective algorithm for sequence classification. By understanding its concept, preparing data, building the model, training, evaluating, and optimizing it, we can harness the power of Llama in solving real-world problems that involve sequences. With its ability to capture long-term dependencies and attention to relevant parts of the sequence, Llama provides a promising and highly accurate approach to sequence classification tasks.。

B light source B 光源B reaction B 反应B score B 分数B type B 型B type BMR B 型基础代谢率B type personality B 型人格B.P. 血压babble 咿呀语babbler 说话不清楚的人babbling stage 咿呀语期babe 婴儿Babinsky law 巴宾斯基定律Babinsky phenomenon 巴宾斯基现象Babinsky reflex 巴宾斯基反射Babinsky sign 巴宾斯基症Babinsky Nageotte syndrome 巴纳二氏综合症baboon 狒狒baby biography 婴儿传记baby boom 生育高峰期baby bust 生育低谷期baby farm 育婴院baby talk 儿语babyhood 婴儿期babyish 孩子气的bacchanal 狂饮作乐者bacchant 酗酒的bacchante 酗酒的女人bach 独身男人bachelor 未婚男子bachelor girl 独身女子bachelor mother 独身母亲bachelordom 独身bachelorette 未婚少女bacillophobia 恐细菌症back action 反向作用back breaking 劳累至极的back reaction 反向反应back up data 备查资料backache 背痛backer 支持者background 背景background characteristics 背景特征background checks 背景考核background condition 背景条件background information 背景信息background music 背景音乐background noise 背景噪声background of feeling 感觉的背景background of information 信息背景background reflectance 背景反差background science 基本科学backknee 膝反屈backlash 间隙backrest 靠背backscatter 反向散射backtrack 反退backward acceleration 后向加速度backward association 倒行联想backward classical conditioning 逆向古典条件反射backward conditioning 后向条件作用backward conditioning reflex 倒行条件反射backward curve 反向曲线backward elimination procedure 反向淘汰法backward inference 逆向推理backward learning curve 倒行学习曲线逆向学习曲 backward masking 倒行掩蔽backward moving 后向运动backward reading 反向阅读backward shifting 逆转backward transfer 逆向迁移Baconian 培根哲学的Baconian method 培根法bad 恶的bad blood 恨bad language 骂人的话bad me 劣我badger 纠缠badinage 开玩笑badly off 穷的bad weather flight 复杂天气飞行baffle 隔音板baffle 阻碍bagatelle 不重要的东西baggage 过时的观念bagged 喝醉的baggy 宽松下垂的Baillarge bands 贝亚尔惹带Baillarge layer 贝亚尔惹层Baillarge lines 贝亚尔惹线Baillarge sign 贝亚尔惹症Baillarge striae 贝亚尔惹纹Baillarge striation 贝亚尔惹纹Baillarge stripes 贝亚尔惹纹bait shyness 怯饵balance 平衡balance force 平衡力balance frequency 均衡频率balance hypothesis 平衡假说balance of control 控制平衡balance of nature 自然平衡balance of power 均势balance of power 势力平衡balance scale 平衡量表balance test 平衡检验balance theory 平衡论balance type 平衡型balanced bilingual 平衡型双重语言balanced error 对称误差balanced experimental design 平衡实验设计balanced sample 对称样本balanced sampling 对称抽样balanced structural change 平衡结构改变balanced type 平衡型balancing response 平衡反应balderdash 胡言乱语bale 灾祸Balint s syndrome 巴林特综合症Ball Adjustment Inventory 贝尔适应性调查表ball and field test 球与运动场测验Ball and Field Test 寻找失球测验Ballard phenomenon 巴拉德无意识记现象Ballard Williams phenomenon 巴拉德 威廉斯现象Ballet sign 巴雷症ballism 颤搐ballistic 颤搐的ballistic movement 冲击运动ballistics 弹道学ballistics 射击学ballistocardiogram 心冲击描记图ballistocardiograph 心冲击描记器ballistocardiography 心冲击描记术ballistophobia 飞弹恐怖症balmy 有香气的baloney 骗人的鬼话balsam 香油balsamic 香料香bamboozle 欺骗ban 禁令banal 平庸的banality 陈词滥调band 带band chart 带形图band curve chart 带形曲线图band movement 带形运动band wagon technique 运用流行思想的宣传术banding 能力分组Bandura s personality theory 班图拉个性理论bandwagon effect 潮流效应bandwagon effect 从众效应bandwagon technique 挟众宣传技术bandwidth 频带宽度bandwidth fidelity dilemma 宽度 精确度两难band pass filter 带通滤光器band pass sound pressure level 带通声压水平baneful influence 恶劣影响banish 消除bankrupt 破坏者bankruptcy 破产banter 开玩笑bantering 开玩笑的bar 巴bar 棒bar chart 条形图bar diagram 条形图bar diagram 柱形图bar graph 柱形统计图baragnosis 压觉缺失Barany Pointing Test 巴兰尼指向测验Barany Test 巴兰尼平衡觉测验Barany s sign 巴兰尼症Barany s symptom 巴兰尼症状barbaralalia 异国语言涩滞barbarian 不文明的barbarism 粗暴行为barbarism 非规范语言现象barbarity 残暴barbarize 变得野蛮barbital 巴比妥barbiturate 巴比妥酸盐Barclay Classroom Climate Inventory 巴克雷班级气氛测验baresthesia 压觉baresthesiometer 压觉计bargain for 指望bargaining 协商bargaining theory 谈判理论barium sulfate 硫酸钡Barnum effect 巴奴姆效应baroagnosis 压觉缺失baroelectroesthesiometer 压觉电测计barognosis 辨重能barometer 气压计baropacer 血压调节器barophilic 嗜压的barophobia 重量恐怖症baroreceptor 压力感受器barothermograph 气压温度计barracoon 奴隶集中场所Barret Lennard Relationship Inventory 巴伦二氏关系量表Barre Guillain syndrome 急性热病性多神经炎Barre Lieou syndrome 后颈交感神经综合症barrier 隔栅Barron Conformity Scale 贝伦从众量表Barron Welsh Art Scale 贝韦二氏美术量表barye 微巴baryencephalia 智力迟钝baryesthesia 压觉baryglossia 言语拙笨barylalia 言语不清baryphonia 语声涩滞basal 底的basal age 基础年龄basal age 基准年龄basal body temperature 基础体温basal ganglia 基底神经节basal ganglion 基底神经节basal metabolic rate 基础代谢率basal metabolic rate 新陈代谢基率basal metabolism 基础代谢basal reflex 基础反射basal year level 基础年龄base 基础base component 基础部分base configuration 基础构造base form 基础形式base frequency 基础频率base line 基线base material 基质base of skull 颅底base point 基点base rate 基础比率base rule 基础规则base structure 基础结构base year 基础年龄Basedow s disease 巴塞道病baseline activity 基线活动baseline group 基准组baseline time 基线时间basement effect 底层效应bashfulness 羞怯basiarachnitis 颅底蛛膜炎basiarachnoiditis 颅底蛛膜炎basic acts 基本动作Basic and Applied Social Psychology 基础与应用社会心理学basic anxiety 基本焦虑basic anxiety theory 基本焦虑理论basic body dimension drawings 基本人体尺寸图basic capacity 基本能力basic cells of thinking 思维的基本单位思维的基本单位basic concept 基本概念basic conflict 基本冲突basic contention 基本论点basic contradiction 基本矛盾basic element 基本元素basic energy level 基本能量水平basic fact 基本事实basic feature 基本特征basic form 基本形式basic formal pattern 基本形式模式basic frequency 基本频率basic function of commodities 商品的基本功能basic human right 基本人权basic law 基本法律basic line 基线basic logic 基础逻辑basic meaning 基本意义basic mistrust 基本怀疑basic modal logic 基本模态逻辑basic need 基本需要basic number 基数Basic Occupational Literacy Test 基本职业识字测验basic operation 基本运算basic personality 基本人格basic personality structure 基本人格构造basic personality type 基本人格类型basic principle 基本原理basic process 基本过程basic process of nervous activity 神经活动的基本过程basic representation 基本表示basic research 基本研究basic rule 基本规则basic sentence 基本句子basic skill 基本技能basic solution 基本解basic statement 基本陈述basic statistical method 基本统计方法basic statistics 基本统计basic structure 基本结构basic structure of a subject 学科的基本结构basic symbol 基本符号basic terminology 基本术语basic thought 基本思想basic trust 基本信任basic variable 基本变量basic vocabulary 基本语汇basilar membrane 基底膜basilemma 基膜basioccipital 枕骨底部basis 基础basis cerebri 大脑底basis of calculation 计算标准basis of ethics 伦理学基础basis reference 参考基准basiscopic 下侧的basket 蓝状细胞basket endings 蓝状末梢basograph 步态描记器basophobia 恐走动症basophobia 走动恐怖症basophobiac 步行恐怖者bastard 私生子Bastick s intuition theory 巴斯蒂克直觉论bathesthesia 深部感觉bathmic 生长力的bathmic force 进化控制力bathmotropic 变阈性bathmotropic action 变兴奋性作用bathomorphic 凹眼的bathophobia 望深恐怖症bathyanesthesia 深部感觉缺失bathyesthesia 深部觉bathyhyperesthesia 深部感觉过敏bathyhypesthesia 深部感觉迟钝bathypsychology 深蕴心理学BATNA 达成谈判协议的最佳选择方案batrachophobia 恐蛙症batrachophobia 蛙恐怖症bats 发疯的battarism 口吃battered child syndrome 被虐儿童综合症battery of tests 成套测验battle fatigue 战争神经症Battley s sedative 巴特利镇静剂bawdry 淫乱Bayesian analysis 贝叶斯分析Bayesian inference 贝叶斯推论Bayesian statistic 贝叶斯统计Bayes s estimator 贝叶斯估计量Bayes s theorem 贝叶斯定理Bayley Scales 贝氏量表Bayley Scales of Infant Development 贝氏婴儿发展量表Bayle s disease 贝尔病BBB 血脑屏障血脑屏障BBT 基础体温beamy 放光的bear with 忍受Beard s disease 神经衰弱bearing capacity 承载能力bearing down 下坠力bearish 粗鲁的beastliness 兽性beastly 残忍的beat 拍beat generation 美国“垮了的一代”美国“垮了的一代”beat of pulse 脉搏beaten 筋疲力尽的beating fantasy 毒打幻想beat frequency oscillator 节拍器beat tone 拍音beauty 美beauty of art 艺术美beauty of behavior 行为美beauty of defect 缺陷美beauty of environment 环境美beauty of human body 人体美beauty of human society 人类社会美beauty of language 语言美beauty of mind 心灵美beauty of nature 自然美beauty of neutrality 中和美beauty of silence 无言美beauty of staunchness 刚性美Bechterev technique 别赫切列夫法Bechterev s nucleus 别赫切列夫核Bechterev s nucleus 前庭神经上核Beck Depression Inventory 白氏抑郁症量表Becker Test 贝克尔试验Beckman thermometer 贝克曼氏温度计贝克曼氏温度计beckon 召唤bedevil 折磨bedevilment 着魔bedfast 卧床不起bedim 模糊不清bedlamism 疯狂状态bedlamite 精神病患者bedrid 卧床不起bedridden 卧床不起bed wetting 遗尿Beevor s sign 比佛症befit 适合before after design 事前事后设计前後设计法before after experiment 事前事后测验beginning of personality 人格起源beginning psychology 早期心理学behavior 行为behavior act 行为动作behavior adaptation 行为适应behavior adjustment 行为适应behavior analysis 行为分析Behavior and Philosophy 行为与哲学behavior assumption 行为假定behavior biology 行为生物学behavior case study 行为事例研究法behavior chain 行为连锁behavior chaining 行为连锁化behavior change 行为变化behavior characteristics 行为特征behavior check list 行为检核表behavior component 行为成分behavior contagion 行为感染behavior contract 行为契约behavior control 行为控制behavior control power 行为控制力behavior description 行为描述behavior determinant 行为定因behavior disorder 行为障碍behavior disposition 行为意向behavior dynamics 行为动力学behavior ecology 行为生态学behavior emergence 行为显现behavior engineering 行为工程学behavior environment 行为环境behavior feedback 行为反馈behavior field 行为场behavior genetics 行为遗传学behavior gradient 行为梯度behavior homology 行为同源behavior integration 行为整合behavior interaction 行为相互作用behavior level 行为水平behavior measure 行为测量behavior medicine 行为医学behavior method 行为研究法behavior modification 行为矫正behavior observation 行为观察behavior observation scales 行为评定量表Behavior Observation Survey 行为观察调查表behavior of men 人类行为behavior patter 行为模式behavior patter in old age 老年期的行为模式behavior potential 行为势能behavior problem 行为问题behavior psychology 行为心理学behavior rating 行为评定法Behavior Rating Instrument for Autistic and Other Atypical Children 孤独和反常儿童行为评定工具behavior rating schedule 行为评定表behavior reaction 行为反应behavior record 行为记录behavior reflex 行为反射Behavior Research and Therapy 行为研究与治疗behavior rigidity 行为僵化behavior sampling 行为抽样behavior segment 行为片段behavior setting 行为定势behavior shaping 行为塑造behavior socialization 行为社会化behavior space 行为空间behavior system 行为系统behavior theory 行为理论Behavior Therapy 行为疗法behavior therapy groups 行为治疗团体行为治疗团体behavior transfer 行为转移behavior type 行为类型behavior unit 行为单元behavioral 行为的behavioral analysis of society 社会的行为分析Behavioral Anchored Rating Scale 行为定位评价量表behavioral and learning principles 行为与学习原则behavioral competence 行为能力behavioral component 行为性成分behavioral concept 行为概念behavioral constraint model 行为受限模型behavioral contrast 行为反差behavioral counseling 行为咨询behavioral decision theory 行为决策理论behavioral dimorphism 行为二形性behavioral disposition 行为意向behavioral ecology 行为生态学behavioral engineering 行为工程学behavioral environment 行为环境behavioral equivalence 行为等值behavioral expression 行为表现behavioral facilitation 行为便利化behavioral genetics 行为遗传学behavioral indicator 行为指标behavioral intention 行为意向behavioral learning model 行为学习模式行为学习模式behavioral measure 行为测量behavioral medicine 行为医学behavioral model 行为模式behavioral motive 行为动机Behavioral Neuroscience 行为神经科学behavioral norms 行为规范behavioral objective 行为目标behavioral pattern 行为模式behavioral pattern theories 行为模式理论behavioral phase 行为阶段behavioral psychology 行为主义心理学行为主义心理学behavioral psychotherapy 行为心理治疗行为心理治疗法behavioral school 行为学派behavioral science 行为科学behavioral scientific decision model 行为科学的决策模式behavioral scientific decision rule 行为科学的决策规则behavioral scientist 行为科学家behavioral sink 行为的沉沦behavioral stimulus field 行为刺激场behavioral styles theory 行为类型理论行为类型理论behavioral taxonomy 行为分类学behavioral techniques 行为技术behavioral theories of leadership 领导的行为理论behavioral theories of learning 学习的行为理论behavioral theory 行为理论behavioral trait 行为特性behavioral unity 行为统一behavioral pattern theories of leadership 领导的行为模式理论behaviorism 行为主义behaviorist 行为主义者behavioristic 行为主义的behavioristic approach 行为主义方法behavioristic psychology 行为主义心理学behavioristic resistance 行为阻力behavioristics 行为学behavior mapping 行为区划behind conditioned reflex 错后条件反射错後制约反射Behn Rorschach Test 贝 罗测验beholder 观看者being 存在being and becoming 存在和生成being and freedom 存在和自由being and meaning 存在与意义being and not being 有与无being and time 存在与时间being cognition 存在认知being fond of learning 好学being love 存在之爱being motivation 存在动机being need 存在需求being value 存在价值beingless 不存在的beingless 无实在性being beyond the world 超现实存在being for itself 自为之有being for self 自为存在being imitated effect 受模仿效应being in itself 实在本身being in the world 现实中存在Bekesy audiometry 贝克赛测听法Bekhterev technique 别赫捷列夫法Bekhterev Test 别赫捷列夫试验Bekhterev s layer 别赫捷列夫层Bekhterev s nucleus 前庭神经上核Bekhterev s reflex 别赫捷列夫反射Bekhterev s symptom 面肌麻痹bel 贝尔bel esprit 才子belabor 痛打belief 信念belief and worship 信仰与崇拜belief systems 信仰体系belief value matrix 信念价值方阵believe 信任believing and necessity 信仰与需要belittle 贬低Bell Adjustment Inventory 贝尔适应量表belladonna 颠茄belle indifference 泰然淡漠Bellevue Scale 贝尔夫智力量表belligerence 好战性bellow 吼叫belly laugh 捧腹大笑Bell s law 贝尔定律Bell s mania 急性谵妄Bell s nerve 胸长神经Bell s palsy 贝尔麻痹Bell s phenomenon 贝尔现象Bell Magendic law 贝 马神经运动感知定律bell shaped 钟形bell shaped curve 钟形曲线bell shaped distribution 钟形分布bell shaped symmetrical curve 钟形对称曲线belonephobia 尖物恐怖症belonephobia 恐针症belonging 隶属belonging need 从众需要belonging need 归属需要belongingness 从众性belongingness 归属感belongingness and love need 归属与相爱需要belonoskiascopy 针形检影法bemoan 悲叹Benary effect 贝纳瑞效应bench height 工作台高度benchmark 基准benchmark data 基准数据benchmark program 基准程序benchmark system 基准系统Bender Gestalt Test 本德尔格式塔测验班达完形测验Bender Visual Motor Gestalt Test 本德尔视觉动作完形测验beneath contempt 卑鄙之极beneath notice 不值得注意的beneceptor 良性感受器Benedikt s syndrome 本尼迪克特综合症benefaction 善行benefit 利益benevolence 仁爱benevolent authoritative management 恩威式管理Benham top 贝汉圆板benign psychosis 良性精神病benignity 慈祥Bennett Mechanical Comprehension Test 贝纳特机械理解测验Bennett Test 贝纳特大学智力测验benny 安非他明片Benton Visual Retention Test 本顿视觉保持测验benumb 使僵化berate 训斥bereavement 丧亲之哀bereavement and mourning reaction 居丧反应Berger rhythm 贝格尔节律Berger rhythm 甲种脑电波Bergeron s chorea 贝尔热隆病Berger s paresthesia 贝格尔感觉异常柏格感觉 常Berger s sign 贝格尔症Berger s symptom 贝格尔症状Bergmann s cells 贝格曼细胞Bergmann s cords 第四脑室髓纹Bergmann s fibers 贝格曼纤维Bergsonian 柏格森派Bergsonism 柏格森主义Bergson s doctrine of duration 柏格森的绵延说Berkeleian 贝克莱派Berkeleian idealism 贝克莱派唯心主义贝克莱派唯心主义Berkeleianism 贝克莱主义Berkeley s theory of vision 贝克莱视觉说Berlin s disease 视网膜震荡Bernheimer s fibers 伯恩海默纤维Bernreuter Personality Inventory 本罗特人格量表berserk fury 暴怒berserker 狂暴的人beseech 恳求besetment 困扰beshrew 诅咒beside oneself with joy 欣喜若狂besmirch 沾污best allocation 最佳配置best estimate 最佳推算数best estimator 最佳估计量best fit 最佳配合best in quality 品质优良best linear unbiased estimator 最佳线性不偏估计量best test 最佳检验bestiality 兽恋症bestiality 兽性bestow 赠给best answer test 选答测验beta coefficient β系数beta distribution β分布Beta Examination β智力测验beta hypothesis β假说beta model β模式beta motor neuron β运动神经元beta movement β似动现象beta movement β运动beta particle β粒子beta ray β射线beta receptor β受体beta response β节律beta value β值beta wave β波beta wave 乙型波beta binomial models β二项式模式beta fiber β纤维beta receptor β 肾上腺受体betrayal 背叛行为bettered child 被虐儿童bettered child syndrome 被虐儿童综合症bettered wife 被虐妻子betterment 改善betterness 优等better off 境况较好between group design 组间设计between group variable 组间方差between word spacing 字间距betweenbrain 间脑betweenness 中间性between Ss design 组间法between subjects design 被试者间设计被试者间设计between subjects variance 被试者间方差Betz cell area 贝兹细胞区Betz cells 贝兹细胞bewail 悲叹beware 谨防bewilderment 迷惑状态bewitch 蛊惑bewitchment 被蛊惑bewray 泄露Bezold s color mixing effect 贝措尔德混色效应Bezold s ganglion 贝措尔德神经节Bezold s triad 贝措尔德三症Bezold Brucke effect 贝 勃效应Bezold Brucke phenomenon 贝 勃色觉现象Bezold Brucke shift 贝 勃转移bhang 印度大麻bi serial 双列Bianchi s syndrome 比昂基综合症bias 偏差bias 偏向biased choice 偏见选择biased error 偏误biased estimator 有偏估计量biased group 选择组biased result 有偏结果biased sample 偏性样本biased sampling 偏性抽样biased statistic 有偏统计量bibber 酒鬼bibliofilm 图书缩微胶片Bibliographic Guide to Psychology 心理学文献指南bibliography 文献目录bibliomania 藏书癖bibliophilism 爱书癖bibliophilism 藏书家bibliophobia 恐书症bibliotherapy 读书疗法bicameral 两腔的Bichat s canal 大脑大静脉Bichat s fissure 大脑横裂Bichat s foramen 蛛网膜孔bicker 争吵bicoordinate navigation 双坐标导航bicycle ergometer 自行车测力计Bidder s ganglion 比德尔神经节bidding 命令Biedl s disease 比德尔病Biedl s syndrome 比德尔综合症Bielschowsky s method 比尔肖夫斯基法比尔肖夫斯基法Bielschowsky Jansky disease 晚期婴儿型家族性黑蒙性痴呆Biernacki s sign 别尔纳茨基症bifactor theory of conditioning 条件反射二因素说bifuration 分支亲系bifurcate 两枝的bifurcation point 分歧点bifurcation problem 分歧问题bifurcation theory 分歧理论big business 大企业big cheese 粗鲁男子big mouth 多话的人bigamist 犯重婚罪者bigamy 重婚bigot 抱偏见的人bigotry 偏执bilateral 两侧的bilateral cooperative model of reading 阅读的双边协作模式bilateral descent 双亲遗传bilateral power relationship 双方分权关系bilateral summation 两侧积累bilateral symmetry 两侧对称bilateral transfer 两侧性迁移bilateral transfer 左右迁移bilateral type 两侧对称式bilateralism 两侧对称bilaterality 两侧对称bilaterally symmetrical 两侧对称的bile 胆液bilineurine 胆碱bilingual 双重语言的bilingual children 双语儿童bilingual distribution 双语分布bilingual education 双语教育bilingual interference 双语阻扰bilingual society 双语社会bilingualism 双语制bilingualism 双重语言bilious 暴躁的bilious 胆汁的bilious temperament 胆汁质bilis 胆汁bimanual synergia 双手协同运动bimodal 双峰的bimodal curve 双峰曲线bimodal distribution 双峰分配bimodality 双峰性binac 二进制自动计算机binary 二进制的binary arithmetic 二进制算术binary bit 二进位binary cell 二进制单位binary digits 二进位数binary information 二进制信息binary internal number base 内二进制数基binary number 二进制数binary signal 二进制信号binary word 二进制字binaural 双耳的binaural fusion 双耳融合binaural hearing 双耳听觉binaural interaction 双耳相互作用binaural phase difference 双耳相位差binaural ratio 双耳比例binding energy 结合能bindle stiff 流浪汉Binet Simon Intelligence ScaleBinet class 比内班Binet laboratory 比内实验室Binet Scale 比内量表Binet Test 比内测验Binetgram 比内量表图解Binet Simon classification 比内 西蒙分类Binet Simon Scale of Intelligence 比内 西蒙智力量表Binet Simon Test 比内 西蒙测验binocular 双眼的binocular accommodation 双眼调节binocular cell 双眼性细胞binocular color mixture 双眼混色binocular colormixture 双眼色混合binocular contrast 双眼对比binocular cues 双眼线索binocular depth perception 双眼深度知觉binocular diplopia 双眼复视binocular disparity 双眼像差binocular fixation 双眼注视binocular flicker 双眼集中binocular fusion 双眼视像融合binocular information 双眼信息binocular luster 双眼光泽现象binocular matching 双眼调节binocular parallax 双眼视差binocular perception 双眼知觉binocular perspective 双眼透视binocular rivalry 双眼竞争binocular stereopsis 双眼实体视觉binocular summation 双眼总和binocular synergy 双眼共同运动binocular vision 双眼视觉binomial 二项式binomial coefficient 二项系数binomial distribution 二项分布binomial group testing 二项分组测验法二项分组测验法binomial theorem 二项式定理binomial variable 二项变量binophthalmoscope 双目检眼镜binoscope 双目单视镜Binswanger dementia 宾斯万格痴呆Binswanger encephalitis 宾斯万格脑炎宾斯万格脑炎bioassay 生物鉴定biobalance 生物平衡biocenology 生物群落学biocenose 生物群落biocenosis 生物群落biochemical defect 生化缺陷biochemical energy 生化能biochemical engineering 生化工程biochemical evolution 生化进化biochemical genetics 生化遗传学biochemical mechanism 生化机制biochemist 生物化学家biochemistry 生物化学biochemorphology 形态生物化学bioclimatology 物候学biocoenology 生物群落学biocoenosis 生物群落biocybernetics 生物控制论biocycle 生活周期biodynamics 生物动力学bioecology 生物生态学bioelectric current 生物电流bioelectric potential 生物电位bioelectricity 生物电bioelectrogenesis 生物电发生bioelement 生物元素bioenergetics 生物能量学bioengineering 生物工程学bioethics 生物伦理学biofeedback 生物反馈biofeedback therapy 生物反馈疗法biofeedback training 生物反馈训练biogenesis 生源说biogenetic law 生物发生原则biogenic 生源的biogenic amine 生物胺biogenic amine hypothesis of depression 抑郁症的生物胺假说biogenic motive 生物发生动机biogenic succession 生物进化演替biogenous 生物发生的biogeochemistry 生物地球化学biogeography 生物地理学biograph 生物运动描记器biographer 传记作者biographical characteristics 传记特点biographical data 传记式资料biographical inventory 传记式量表Biographical Inventory for R & T Talent 科研人才甄选传记式量表biographical inventory tests 传记式问卷测验biographical method 传记法biographical study 传记研究biographical type case study 传记式个案研究biography 传记biokinetics 生物运动学biolinguistics 生物语言学biologic therapy 生理疗法biological aging 生物性老化biological anthropology 生物人类学biological balance 生态平衡biological clock 生物钟biological cycle 生物周期biological determinism 生物决定论biological drive 生物性驱力biological engineering 生物工程学biological gratification 生物满足biological heritage 生理承袭biological imperative 生理的必然性biological intelligence 生理智力biological memory 生物性记忆biological motivation 生物性动机biological motive 生物性动机biological nature of man 人的生物性biological need 生理需要biological rhythm 生物节律biological school in crimin 犯罪生物学派biological sex difference 生物性别差异生物性别差biological tendency 生物倾向biological theory 生物学理论biological theory of sex type 性别类型的生物学理论biologicalization 生物学化biologism 生物学主义biologist 生物学家biologos 生物活力biology 生物学biomathematics 生物数学biome 生物群落biomechanics 生物力学biomechanism 生物机械论biomedical engineering 生物医学工程学生物医学工程学biomedical model 生物医学模式biomedical therapy 生物医学疗法biomedicine 生物医学biometeorology 生物气象学biometric 生物计量的biometric procedure 生物统计法biometrics 生物计量学biometrics method 生理计量法biometry 生物统计学biomophic 生物形态的bionergy 生命力bionics 仿生学bionomic 生态的bionomic factor 生态因素bionomics 生态学bionomy 生命规律学bionomy 生态学bionuclenonics 生物核子学biophilia 生物自卫本能biophotometer 光度适应计biophysical intervention 生物物理干涉生物物理干涉biophysicist 生物物理学家biophysics 生物物理学bioplasm 原生质biopoiesis 生命自生bioprogram language 生物程序语言biopsychic 生物心理的biopsychology 生物心理学biopsychosocial medical model 生物心理社会医学模式biopsychosocial model 生物心理社会模式bioreactor 生物反应器bioreversible 生物可逆的biorgan 生理器官biorhythm 生物节律bios 生物活素类biosensor 生理传感器biosis 生命现象biosocial 生物社会的biosocial approach 生物社会性研究方法biosocial environment 生物社会环境biosocial psychology 生物社会心理学biosocial theory 生物社会论biosphere 生物圈biostatics 生物静力学biostatistician 生物统计学家biostatistics 生物统计学biotaxy 生物分类学biotechnology 生物技术学biotelemetry 生物遥测法biotic community 生物共同体biotic energy 生命力biotic environment 生物环境biotic experiment 生物实验biotic influence 生物影响biotics 生命学biotomy 生物解剖学biotype 生物类型biotypology 生物属型学bio acoustics 生物声学bio clock 生物钟bio ecology 生物生态学bio electricity 生物电bio electrochemistry 生物电化学bio energetics 生物能学bio engineering 生物工程bio information 生物信息bio medical model 生物医学模式biparental 双亲的biparental inheritance 双亲遗传biphasic 两相的biphasic symptom 两相症状bipolar 双极的bipolar adjective scale 双极形容词量表双极形容量表bipolar affective disorder 两极性情绪紊乱bipolar affective psychosis 两极情感性精神病bipolar cell 双极细胞bipolar cell layer 双极细胞层bipolar depression 两极型忧郁症bipolar disorder 两极型异常bipolar lead 双极导出bipolar nerve cell 双极神经细胞bipolar neuron 双极神经元bipolar rating scale 两极式评定量表bipolarity 两极性bipolarity of affective 情感两极性bipolarity of feeling 情感两极性bipotentiality 双潜能bipotentiality of the gonad 生殖腺两性潜能birth 分娩birth adjustment 出生顺应birth age specific 年龄别出生率birth age marital 年龄 婚龄别出生率birth cohort 出生组birth control 节制生育birth differential 级差的出生率birth injury 产伤birth intrinsic 固定人口出生率birth nuptial 婚姻出生率birth order 出生次序birth place population 出生的人口birth planned 计划出生率birth process 出生过程birth rate 出生率birth specific 类别出生率birth stable rate 稳定出生率birth standardized 标准化出生率birth statistics 出生统计birth symbolism 出生表象birth total 合计出生率birth trauma 产伤birth trauma 出生创伤birthright 生来就有的权利birth and death process 出生死亡过程出生死亡历程bisect 分开bisected brain 分裂脑bisection 平分bisector 平分线bisectrix 等分角线biserial 双数列的biserial coefficient 双数列系数biserial coefficient of correlation 双列相关系数biserial correlation 二列相关biserial correlation 双列相关biserial correlation coefficient 双列相关系数biserial ratio of correlation 双数列相关比bisexual libido 两性欲力bisexuality 两性俱有Bishop s sphygmoscope 毕晓普脉搏检视器bit 比特bit 二进制位bit combination 位组合bit of information 信息单位bit per second 每秒比特bit site 数位位置bit time 一位时间bitter 苦bitterness 苦味bivalent releaser 二价释放刺激bivariate 双变量的bivariate analysis 双变量分析bivariate correlation 双变量相关bivariate distribution 二元分布bivariate frequency table 双变量次数表双变项次数表bivariate normal distribution 双变量正态分布bizarre delusion 怪异妄想bizarre experience 奇异经验bizarre image 怪诞意象bi directional goal gradient 双向目标梯度bi directional movement 双向移动bi directional replication 二向复制bi modality 双通道blabber 喋喋不休的人black 黑black box 暗箱black box 黑匣子black box theory 黑箱理论black despair 绝望black in the face 脸色发紫black light 黑线black out 撤光black propaganda 暗宣传blackbox 暗箱blackbox theory 暗箱说blackdamp 窒息性空气blackguard 恶棍blackguardism 恶棍行为blackmail 敲榨blackout 黑视blackout 昏厥blackout threshold 失觉阈限Blacky Pictures 布莱克漫画测验Blacky Test 布莱克测验black box organization model 黑箱组织模型black box paradigm 黑箱范式bladder 膀胱bladder control 膀胱控制bladder training 排尿训练blah 废话Blake s disks 布莱克盘blame 责备blank experiment 插入实验blank experiment 空白实验blank field effect 空虚视野效应blank instruction 空白指令blank trial 空白练习blarney 奉承话blase 厌于享乐的blaspheme 辱骂blasphemer 辱骂者blast 胚叶blastocyte 胚细胞blastomere 裂球blastophyly 种族史blastoprolepsis 发育迅速blastula 囊胚blather 胡说bleaching 漂白blemish 污点blench 退缩blend 混合blepharism 脸痉挛blepharoplegia 脸瘫痪blight 使失望blind 盲点blind action 盲动blind alley 盲路blind alley job 盲目职业blind analysis 匿情分析blind child 盲童blind path 盲路blind positioning movement 盲目定位动作blind research procedure 保密研究法blind spot 盲点blinder 障眼物blinding glare 失明眩光blindism 盲人主义blindman 盲人blindman s buff 捉迷藏blindness 盲blink 瞬目blink rate 眨眼率blink reflex 眨眼反应blinker 闪光警戒标blinking 瞬目blinking coding 闪烁编码blinking light 闪光信号灯blinkpunkt 注视点blink eyed 习惯性眨眼blob 模糊的东西Bloch s law 布洛奇法则block 区block 阻断block access 字组存取block building 积木block chart 方块图block code 分组码block design 分组实验设计block design 区组设计block diagram 直方图block encoding 分块编码block sample 区域样本block sampling 区域抽样。

求解TSP问题的自适应邻域遗传算法汪金刚;罗辞勇【摘要】提出结合自适应邻域法与遗传算法来求解TSP问题.在自适应邻域法中,从某个城市出发,下一城市不一定是其最近城市,而是在比其最近城市稍远的邻域范围进行动态随机选取.在求解TSP时,采用自适应邻域法对种群初始化,然后采用选择、交叉、变异进行迭代.在选择中仅保留父代90%的样本,剩下的采用自适应邻域法产生新样本进行补充.仿真实验结果表明所提算法与其他算法相比具有竞争能力.【期刊名称】《计算机工程与应用》【年(卷),期】2010(046)027【总页数】5页(P20-24)【关键词】遗传算法;旅行商问题;最近邻法;自适应邻域法【作者】汪金刚;罗辞勇【作者单位】重庆大学输配电装备及系统安全与新技术国家重点实验室,重庆,400044;重庆大学输配电装备及系统安全与新技术国家重点实验室,重庆,400044【正文语种】中文【中图分类】TP3011 引言TSP是典型的组合优化问题,给定n个城市以及各城市之间的距离,要求找到一条遍历所有城市且每个城市只被访问一次的路线,并使得总路线距离最短,或表述为在有n个结点的完全图中找出最短的Hamilton回路。

TSP问题的求解主要有两类算法,一类是保证得到最优解的完全算法,典型例子如枚举法,但这种算法必须考虑(n-1)!个不同的路径,规模稍大的实例,在时间上是不可行的。

另一类是近似算法,如最近邻法[1]和智能算法(蚁群算法、遗传算法、模拟退火算法、禁忌搜索算法、Hopfield神经网络、粒子群优化算法、免疫算法等[2-5])。

最近邻法的优点是算法简单,直观可行,实现方便,但缺点是容易陷入局部最优解,而得不到全局最优解[1,5]。

智能算法虽然不能保证在有限时间内获得最优解,但选择充分的多个解验证后,错误概率可以降到可以接受的程度。

遗传算法(Genetic Algorithm)是模拟生物在自然环境中的遗传和进化过程而形成的一种自适应全局优化概率搜索算法。

专利名称:与基质芯片相关的方法和组合物

专利类型:发明专利

发明人:安德鲁·J·曼尼托斯,罗伯特·弗伯格,卡拉·瓦尼纳吉申请号:CN200480037140.0

申请日:20041013

公开号:CN101389753A

公开日:

20090318

专利内容由知识产权出版社提供

摘要:本发明涉及用于评估细胞间和细胞与基质材料间相互作用的设备和方法,其中由于这些相互作用形成的细胞分布模式是对细胞入侵潜能的指示物。

此外,这些设备和方法可以提供对入侵性细胞转移的优选位点的指示;对使用到这些细胞上的抗癌药物的功效的指示;以及对试剂促进或提高肿瘤生长或转移的潜能的指示。

申请人:伊利诺伊大学评仪会

地址:美国伊利诺伊州

国籍:US

代理机构:北京金信立方知识产权代理有限公司

代理人:黄威

更多信息请下载全文后查看。

生物学中功能解释的合理性研究喻莉姣【摘要】以亨普尔为代表的学者认为功能解释不具预见性,故应被排除在科学解释之外.另一部分学者尝试为功能解释的合理性进行辩护,其辩护途径之一是期望在生物学中找出一条普遍定律使得功能解释符合覆盖律模型;辩护途径之二认为功能解释不同于演绎律则解释,其合理性在于功能解释说明了某物对于维持某个状态的“价值”,这两种辩护途径各有缺陷.生物学中很难有普遍定律,不同的生物学科有不同类型的功能解释,不同的功能解释在生物学实践中有着不同的价值.【期刊名称】《重庆理工大学学报(社会科学版)》【年(卷),期】2017(031)010【总页数】7页(P13-19)【关键词】功能解释;生物学;科学解释;合理性【作者】喻莉姣【作者单位】湖北文理学院马克思主义学院,湖北襄阳441053【正文语种】中文【中图分类】B08;N02功能解释因其通常用结果来解释现象,故而被归于后果论。

这种解释最不令人满意的地方就是没有预见性,常被人称为“事后聪明”,因此也常常不被正统的科学所承认。

但是,在正统科学的生物学领域又常常使用功能解释,如问“人为什么有心脏”?答曰“心脏有泵血的功能”。

正统科学一方面不承认功能解释,一方面却又在使用功能解释。

生物学中的功能解释意义到底何在?它与正统科学中所倡导的科学解释有什么不同?一部分学者,如格鲁纳(Rolf Gruner)、维姆萨特(William C.Wimsatt)、亨普尔(Carl G.Hempel)等认为功能解释遵循演绎律则模型,而以格瑞斯蒂(Harold Greenstein)为代表的另一部分则认为功能解释与演绎律则解释模型不同。

亨普尔认为科学解释需满足两个基本要求——“解释相关要求和可检验性要求”[1]75,并且遵循覆盖律模型。

作为维护功能解释恰当性的一派,如格鲁纳和维姆萨特认为功能解释符合覆盖律模型,故而是合理的科学解释。

而亨普尔却认为功能解释不具有预见性,不能满足可检验性要求,并且功能解释也不满足覆盖律模型模型,故而应被排除在科学解释之外。

Pattern Recognition 38(2005)1033–1043/locate/patcogBehavior classification by eigendecomposition of periodic motionsRoman Goldenberg ∗,Ron Kimmel,Ehud Rivlin,Michael RudzskyComputer Science Department,Technion—Israel Institute of Technology,Technion City,Haifa 32000,IsraelReceived 23January 2004;received in revised form 25October 2004;accepted 8November 2004AbstractWe show how periodic motions can be represented by a small number of eigenshapes that capture the whole dynamic mech-anism of the motion.Spectral decomposition of a silhouette of a moving object serves as a basis for behavior classification by principle component analysis.The boundary contour of the walking dog,for example,is first computed efficiently and accurately.After normalization,the implicit representation of a sequence of silhouette contours given by their corresponding binary images,is used for generating eigenshapes for the given motion.Singular value decomposition produces these eigen-shapes that are then used to analyze the sequence.We show examples of object as well as behavior classification based on the eigendecomposition of the binary silhouette sequence.᭧2005Pattern Recognition Society.Published by Elsevier Ltd.All rights reserved.Keywords:Visual motion;Segmentation and tracking;Object recognition;Activity recognition;Non-rigid motion;Active contours;Periodicity analysis;Motion-based classification;Eigenshapes1.IntroductionFuturism is a movement in art,music,and literature that began in Italy at about 1909and marked especially by an effort to give formal expression to the dynamic energy and movement of mechanical processes.A typical example is the ‘Dynamism of a Dog on a Leash’by Giacomo Balla,who lived during the years 1871–1958in Italy,see Fig.1[1].In this painting one could see how the artist captures in one still image the periodic walking motion of a dog on a leash.Following a similar philosophy,we show how periodic mo-tions can be represented by a small number of eigenshapes that capture the whole dynamic mechanism of periodic mo-tions.Singular value decomposition of a silhouette of an ob-ject serves as a basis for behavior classification by principle∗Corresponding author.Tel.:+97248294940;fax:+97248293900.E-mail addresses:romang@cs.technion.ac.il (R.Goldenberg),ron@cs.technion.ac.il (R.Kimmel),ehudr@cs.technion.ac.il (E.Rivlin),rudzsky@cs.technion.ac.il (M.Rudzsky).0031-3203/$30.00᭧2005Pattern Recognition Society.Published by Elsevier Ltd.All rights reserved.doi:10.1016/j.patcog.2004.11.024component analysis.Fig.2present a running horse video sequence and its eigenshape decomposition.One can see the similarity between the first eigenshapes—Fig.2(c and d),and another futurism style painting “The Red Horseman”by Carlo Carra [1]—Fig.2(e).The boundary contour of the moving non-rigid object is computed efficiently and accu-rately by the fast geodesic active contours [2].After normal-ization,the implicit representation of a sequence of silhou-ette contours given by their corresponding binary images,is used for generating eigenshapes for the given motion.Sin-gular value decomposition produces the eigenshapes that are used to analyze the sequence.We show examples of object as well as behavior classification based on the eigendecom-position of the sequence.2.Related workMotion-based recognition received a lot of attention in the field of image analysis.The main reason is that direct usage of temporal data can improve our ability to solve a1034R.Goldenberg et al./Pattern Recognition 38(2005)1033–1043number of basic computer vision problems.Examples are image segmentation,tracking,object classification,etc.In general,when analyzing a moving object,one can use two main sources of information:changes of the moving object position (and orientation)in space,and the object’s deformations.Object position is an easy-to-get characteristic,applica-ble both for rigid and non-rigid bodies.It is provided by most of existing target detection and tracking systems.A number of techniques [3–6]were proposed for the detection of motion events and recognition of various types of mo-tions based on the analysis of the moving object trajectory and its derivatives.Detecting object orientation is a more challenging problem.It is usually solved by fitting a model that may vary from a simple ellipsoid [6]to a complex 3-D vehicle model [7]or a specific aircraft-class model adapted for noisy radar images as in Ref.[8].Fig.1.‘Dynamism of a Dog on a Leash’1912,by Giacomo Balla,Albright-Knox Art Gallery,Buffalo.Fig.2.(a)Running horse video sequence,(b)first 10eigenshapes,(c,d)first and second eigenshapes enlarged,(e)‘The Red Horseman’,1914,by Carlo Carra,Civico Museo d’Arte Contemporanea,Milan.While object orientation characteristic is more applicable for rigid objects,it is object deformation that contains the most essential information about the nature of the non-rigid body motion.This is especially true for natural non-rigid ob-jects in locomotion that exhibit substantial changes in their apparent view.In this case the motion itself is caused by these deformations,e.g.walking,running,hopping,crawl-ing,flying,etc.There exists a large number of papers dealing with the classification of moving non-rigid objects and their motions,based on their appearance.Lipton et al.describe a method for moving target classification based on their static appear-ance [9]and using their skeletons [10].Polana and Nelson [11]used local motion statistics computed for image grid cells to classify various types of activities.An approach using the temporal templates and motion history images (MHI)for action representation and classification was sug-gested by Davis and Bobick in Ref.[12].Cutler and Davis [13]describe a system for real-time moving object classifi-cation based on periodicity analysis.Describing the whole spectrum of papers published in this field is beyond the scope of this paper,and we refer the reader to the surveys [14–16].Related to our approach are the works of Yacoob and Black [17]and Murase and Sakai [18].In Ref.[17],human activities are recognized using a parameterized representa-tion of measurements collected during one motion period.The measurements are eight motion parameters tracked for five body parts (arm,torso,thigh,calf and foot).Murase and Sakai [18]describe a method for moving object recognition for gait analysis and lip reading.Their method is based on parametric eigenspace representation of the object silhouettes.Moving object segmentation is per-formed by using simple background subtraction.The eigen-basis is computed for one class of objects.Murase and Sakai used an optimization technique for finding the shift and scale parameters which is not trivial to implement.R.Goldenberg et al./Pattern Recognition38(2005)1033–10431035In this paper we concentrate on the analysis of defor-mations of moving non-rigid bodies.We thereby attempt to extract characteristics that allow us to distinguish be-tween different types of motions and different classes of objects.Essentially we build our method based on approaches suggested in Refs.[18,17]by revisiting and improving some of the components.Unlike previous related methods,we first introduce an advanced variational model for accurate segmentation and tracking.The proposed segmentation and tracking method allows extraction of moving object con-tours with high accuracy and,yet,is computationally effi-cient enough to be implemented in a real time system.The algorithm analyzing high-precision object contours achieves better classification results and can be easier adopted to var-ious object classes than the methods based on control points tracking[17]or background subtraction[18].We then compute eigenbasis for several classes of objects (e.g.humans and animals).Moreover,at thisfirst stage we select the right class by comparing the distance to the right feature space in each of the eigenbases.Next,we create a principle basis not only for static object view(single frames), but also for the whole period subsequences.For example,we create a principle basis for one human motion step and then project every period onto this basis.Unlike[18],we are not trying to build a separate basis for every motion type,but instead,build a common basis for the whole human motion class.Different types of human motions are then detected by looking at the projections of motion samples onto this basis.Apparently,this type of analysis can only be possible due to the high quality of segmentation and tracking results. We propose an explicit temporal alignment process for finding the shift and scale.Finally,we use a simple clas-sification approach to demonstrate the performances of our framework and test it on various classes of moving objects.3.Our approachOur basic assumption is that for any given class of moving objects,like humans,dogs,cats,and birds,the apparent object view in every phase of its motion can be encoded as a combination of several basic body views or configurations. Assuming that a living creature exhibits a pseudo-periodic motion,one motion period can be used as a comparable information unit.The basic views are then extracted from a large training set.An observed sequence of object views collected from one motion period is projected onto this basis. This forms a parameterized representation of the object’s motion that can be used for classification.Unlike[17]we do not assume an initial segmentation of the body into parts and do not explicitly measure the motion parameters.Instead,we work with the changing apparent view of deformable objects and use the parameterization induced by their form variability.In what follows we describe the main steps of the process that include,•Segmentation and tracking of the moving object that yield an accurate external object boundary in every frame.•Periodicity analysis,in which we estimate the frequency of the pseudo-periodic motion and split the video se-quence into single-period intervals.•Frame sequence alignment that brings the single-period sequences to a standardized form by compensating for temporal shift,speed variations,different object sizes and imaging conditions.•Parameterization by building an eigenshape basis from a training set of possible object views and projecting the apparent view of a moving body onto this basis.3.1.Segmentation and trackingOur approach is based on the analysis of deformations of the moving body.Therefore the accuracy of the segmentation and tracking algorithm infinding the target outline is crucial for the quality of thefinal result.This rules out a number of available or easy-to-build tracking systems that provide only a center of mass or a bounding box around the target and calls for more precise and usually more sophisticated solutions.Therefore,we decided to use the geodesic active contour approach[19]and specifically the‘fast geodesic active con-tour’method described in Ref.[2].The segmentation prob-lem is expressed as a geometric energy minimization.We search for a curve C that minimizes the functionalS[C]=L(C)g(C)d s,where d s is the Euclidean arclength,L(C)is the total Eu-clidean length of the curve,and g is a positive edge indi-cator function in a3-D hybrid spatial-temporal space that depends on the pair of consecutive frames I t−1(x,y)and I t(x,y),where I t(x,y): ⊂R2×[0,T]→R+.It gets small values along the spatial-temporal edges,i.e.moving object boundaries,and higher values elsewhere.In addition to the scheme described in Ref.[2],we also use the background information whenever a static back-ground assumption is valid and a background image B(x,y) is available.In the active contours framework this can be achieved either by modifying the g function to reflect the edges in the difference image D(x,y)=|B(x,y)−I t(x,y)|, or by introducing additional area integration terms to the functional S(C):S[C]=L(C)g(C)d s+ 1C|D(x,y)−c1|2d a + 2\ C|D(x,y)−c2|2d a,1036R.Goldenberg et al./Pattern Recognition 38(2005)1033–1043Fig.3.Non-rigid moving object segmentation and tracking.where C is the area inside the contour C , 1and 2are fixed parameters and c 1,c 2are given by c 1=average C [D(x,y)],c 2=average \ C [D(x,y)].The latter approach is inspired by the ‘active contours with-out edges’model proposed by Chan and Vese [20].It forces the curve C to close on a region whose interior and exte-rior have approximately uniform D(x,y)values.A different approach to utilize the region information by coupling be-tween the motion estimation and the tracking problem was suggested by Paragios and Deriche in Ref.[21].Fig.3shows some results of moving object segmentation and tracking using the proposed method.Contours can be represented in various ways.Here,in or-der to have a unified coordinate system and be able to apply a simple algebraic tool,we use the implicit representation of a simple closed curve as its binary image.That is,the contour is given by an image for which the exterior of the contour is black while its interior is white.3.2.Periodicity analysisHere we assume that the majority of non-rigid moving objects are self-propelled alive creatures,whose motion is almost periodic.Thus,one motion period,like a step of a walking man or a rabbit hop,can be used as a natural unit of motion.Extracted motion characteristics can by normalized by the period size.The problem of detection and characterization of periodic activities was addressed by several research groups and the prevailing technique for periodicity detection and measure-ments is the analysis of the changing 1-D intensity signals along spatio-temporal curves associated with a moving ob-ject or the curvature analysis of feature point trajectories [22–25].Here we address the problem using global charac-teristics of motion such as moving object contour deforma-tions and the trajectory of the center of mass.By running frequency analysis e.g.Refs.[13,26]on such 1-D contour metrics as the contour area,velocity of the cen-ter of mass,principal axes orientation,etc.we can detect the basic period of the motion.Figs.4and 5present global motion characteristics derived from segmented moving ob-jects in two sequences.One can clearly observe the common dominant frequency in all three graphs.The period can also be estimated in a straightforward manner by looking for the frame where the external object contour best matches the object contour in the current frame.Fig.6shows the deformations of a walking man contour during one motion period (step).Samples from two different steps are presented and each vertical pair of frames is phase synchronized.One can clearly see the similarity between the corresponding contours.An automated contour matching can be performed in a number of ways,e.g.by comparing contour signatures or by looking at the correlation between the object silhouettes in different frames as we chose to do in our work.Fig.7shows four graphs of inter-frame silhouette correlation values measured for four different starting frames taken within one motion period.It is clearly visible that all four graphs nearly coincide and the local maxima peaks are approximately evenly spaced.The period,therefore,was estimated as the average distance between the neighboring peaks.R.Goldenberg et al./Pattern Recognition 38(2005)1033–10431037020406080100120140-0.2 -0.100.10.2r a d020406080100120140600800100012001400s q . p i x e l s20406080100120140-4 -2024p i x e l s /f r a m eframe numberWalking man − global motion parametersPrincipal axis inclination angleContour areaCenter of mass − vertical velocityFig.4.Global motion characteristics measured for walking man sequence.02040608010012000.050.10.150.2r a d0204060801001207000750080008500s q . p i x e l s020406080100120-2-1012p i x e l s /f r a m eframe numberWalking cat − global motion parametersPrincipal axis inclination angleContour areaCenter of mass − vertical velocityFig.5.Global motion characteristics measured for walking cat sequence.1038R.Goldenberg et al./Pattern Recognition 38(2005)1033–1043Fig.6.Deformations of a walking man contour during one motion period (step).Two steps synchronized in phase are shown.One can see the similarity between contours in corresponding phases.1020304050600.550.60.650.70.750.80.850.90.951frame offseti n t e r −f r a m e c o r r e l a t i o n Fig.7.Inter-frame correlation between object silhouettes.Four graphs show the correlation measured for four initial frames.3.3.Frame sequence alignmentOne of the most desirable features of any classification system is the invariance to a set of possible input transfor-mations.As the input in our case is not a static image,but a sequence of images,the system should be robust to both spatial and temporal variations.In the section below we de-scribe the methods for spatial and temporal alignment of input video sequence bringing it to a canonical,comparable form allowing later classification.3.3.1.Spatial alignmentWe want to compare every object to a single pattern re-gardless of its size,viewing distance and other imaging con-ditions.This is achieved by cropping a square bounding box around the center ofmass of the tracked target silhouette and re-scaling it to a predefined size (see Fig.8).Note that this is not a scale invariant transformation unlike other reported normalization techniques e.g.Ref.[27].Fig.8.Scale alignment.A minimal square bounding box around the center of the segmented object silhouette (a)is cropped and re-scaled to form a 50×50binary image (b).One way to have orientation invariance is to keep a col-lection of motion samples for a wide range of possible motion directions and then look for the best match.This approach was used by Yacoob and Black in Ref.[17]to distinguish between different walking directions.Although here we experiment only with motions nearly parallel to the image plane,the system proved to be robust to small vari-ations in orientation.Since we do not want to keep models for both left-to-right and right-to-left motion directions,the right-to-left moving sequences are converted to left-to-right by horizontal mirror flip.3.3.2.Temporal alignmentA good estimate of the motion period allows us to com-pensate for motion speed variations by re-sampling each period subsequence to a predefined duration.This can be done by interpolation between the binary silhouette im-ages themselves or between their parameterized represen-tation as explained below.Fig.9presents an original and re-sampled one-period subsequence after scaling from 11to 10frames.Temporal shift is another issue that has to be addressed in order to align the phase of the observed one-cycle sample and the models stored in the training base.In Ref.[17]it was done by solving a minimization problem of finding theR.Goldenberg et al./Pattern Recognition38(2005)1033–10431039Fig.9.Temporal alignment.Top:original11frames of one period subsequence.Bottom:re-sampled10framessequence.Fig.10.Temporal shift alignment:(a)average starting frame of all the training set sequences,(b)temporally shifted single-cycle test sequence,(c)correlation between the reference starting frame and the test sequence frames.optimal parameters of temporal scaling and time shift trans-formations so that the observed sequence is best matched to the training samples.Polana and Nelson[11]handled this problem by matching the test one-period subsequence to reference template at all possible temporal translations. Murase and Sakai[18]used an optimization technique for finding the shift and scale parameters.Here we propose an explicit temporal alignment process.Let us assume that in the training set all the sequences are accurately aligned.Then wefind the temporal shift of a test sequence by looking for the starting frame that best matches the generalized(averaged)starting frame of the training samples,as they all look alike.Fig.10shows(a)the reference starting frame taken as an average over the tem-porally aligned training set,(b)a re-sampled single-period test sequence and,(c)the correlation between the reference starting frame and the test sequence frames.The maximal correlation is achieved at the seventh frame,therefore the test sequence is aligned by cyclically shifting it7frames to the left.3.4.ParameterizationIn order to reduce the dimensionality of the problem we first project the object image in every frame onto a low-dimensional base.The base is chosen to represent all pos-sible appearances of objects that belong to a certain class, like humans,four-leg animals,etc.Let n be number of frames in the training base of a certain class of objects and M be a training samples matrix,where each column corresponds to a spatially aligned image of a moving object written as a binary vector.In our experiments we use50×50normalized images,therefore,M is a2500×n matrix.The correlation matrix MM T is decomposed using Singular Value Decomposition as MM T=U V T,where U is an orthogonal matrix of principal directions and the is1040R.Goldenberg et al./Pattern Recognition38(2005)1033–1043Fig.11.The principal basis for the‘dogs and cats’training set formed of20first principal component vectors.a diagonal matrix of singular values.In practice,the decom-position is performed on M T M,which is computationally more efficient[28].The principal basis{U i,i=1,...,k}for the training set is then taken as k columns of U correspond-ing to the largest singular values in .Fig.11presents a principal basis for the training set formed of800sample im-ages collected from more than60sequences showing dogs and cats in motion.The basis is built by taking the k=20first principal component vectors.We build such representative bases for every class of objects.Then,we can distinguish between various object classes byfinding the basis that best represents a given ob-ject image in the distance to the feature space(DTFS)sense. Fig.12shows the distances from more than1000various im-ages of people,dogs and cats to the feature space of people and to that of dogs and cats.In all cases,images of people were closer to the people feature space than to the animals’feature space and vise a versa.This allows us to distinguish between these two classes.A similar approach was used in Ref.[29]for the detection of pedestrians in traffic scenes. If the object class is known(e.g.we know that the object is a dog),we can parameterize the moving object silhouette image I in every frame by projecting it onto the class basis. Let B be the basis matrix formed from the basis vectors {U i,i=1,...,k}.Then,the parameterized representation of the object image I is given by the vector p of length k as p=B T v I,where v I is the image I written as a vector. The idea of using a parameterized representation in motion-based recognition context is certainly not a new one.To name a few examples we mention again the works of Yacoob and Black[17],and of Murase and Sakai[18].0510******** 051015202530Distance to the "people" feature spaceDistancetothe"dogs&cats"featurespacedogs and catspeopleFig.12.Distances to the‘people’and‘dogs and cats’feature spaces from more than1000various images of people,dogs and cats.Cootes et al.[30]used similar technique for describing fea-ture point locations by a reduced parameter set.Baumberg and Hogg[31]used PCA to describe a set of admissible B-spline models for deformable object tracking.Chomat and Crowley[32]used PCA-based spatio-temporalfilter for human motion recognition.Fig.13shows several normalized moving object images from the original sequence and their reconstruction from a parameterized representation by back-projection to the im-age space.The numbers below are the norms of differences between the original and the back-projected images.These norms can be used as the DTFS estimation.Now,we can use these parameterized representations to distinguish between different types of motion.The refer-ence base for the activity recognition consists of temporally aligned one-period subsequences.The moving object sil-houette in every frame of these subsequences is represented by its projection to the principal basis.More formally,let {I f:f=1,...,T}be a one-period,temporally aligned set of normalized object images,and p f,f=1,...,T a pro-jection of the image I f onto the principal basis B of size k.Then,the vector P of length kT formed by concatenation of all the vectors p f,f=1,...,T,represent a one-period subsequence.By choosing a basis of size k=20and the nor-malized duration of one-period subsequence to be T=10 frames,every single-period subsequence is represented by a feature point in a200-dimensional feature space.In the following experiment we processed a number of sequences of dogs and cats in various types of locomotion. From these sequences we extracted33samples of walking dogs,9samples of running dogs,9samples with gallop-ing dogs and14samples of walking cats.Let S200×m beR.Goldenberg et al./Pattern Recognition 38(2005)1033–10431041Fig.13.Image sequence parameterization.Top:11normalized target images of the original sequence.Bottom:the same images after the parameterization using the principal basis and back-projecting to the image basis.The numbers are the norms of the differences between the original and the back-projected images.Fig.14.Feature points extracted from the sequences with walking,running and galloping dogs and walking cats and projected to the 3D space for visualization.the matrix of projected single-period subsequences,where m is the number of samples and the SVD of the correla-tion matrix is given by SS T =U S S V S .In Fig.14we de-pict the resulting feature points projected for visualization to the 3-D space using the three first principal directions {U S i :i =1,...,3},taken as the column vectors of U S cor-responding to the three largest eigen-values in S .One can easily observe four separable clusters corresponding to the four groups.Another experiment was done over the ‘people’class of images.Fig.15presents feature points corresponding to several sequences showing people walking and running parallel to the image plane and running at oblique angle to the camera.Again,all three groups lie in separable clusters.The classification can be performed,for example,using the k -nearest-neighbor algorithm.We conducted the ‘leave one out’test for the dogs set above,classifying every sample by taking them out from the training set one at a time.The three-nearest-neighbors strategy resulted in 100%successrate.Fig.15.Feature points extracted from the sequences showing peo-ple walking and running parallel to the image plane and at 45◦angle to the camera.Feature points are projected to the 3-D space for visualization.Another option is to further reduce the dimensionality of the feature space by projecting the 200-dimensional feature vectors in S to some principal basis.This can be done using a basis of any size,exactly as we did in 3-D for visualiza-tion.Fig.16presents learning curves for both animals and people data sets.The curves show how the classification er-ror rate achieved by one-nearest-neighbor classifier changes as a function of principal basis size.The curves are obtained by averaging over a hundred iterations when the data set is randomly split into training and testing sets.The principal basis is built on the training set and the testing is performed using the ‘leave one out’strategy on the testing set.4.Concluding remarksWe presented a new framework for motion-based clas-sification of moving non-rigid objects.The technique is based on the analysis of changing appearance of moving ob-jects and is heavily relying on high accuracy results of seg-mentation and tracking by using the fast geodesic contour。