A parallel adaptive tabu search approach

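Senior Three English: Academic Research Methods, Innovation, and Continuous Exploration. 30 Single-Choice Questions with Answers

1. In academic research, where is the best place to start looking for relevant literature?
A. The library
B. The internet
C. A professor's office
D. A friend's bookshelf
Answer: A.

In academic research, a library usually holds rich academic resources, including books and journals, so it is the best place to start looking for relevant literature.

Option B: information on the internet may be inaccurate or not authoritative.

Option C: a professor's office is not a primary place for finding literature.

Option D: the books on a friend's bookshelf may not be academic.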

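2. When searching for literature, which keyword should you avoid using?
A. Common words
B. Specific terms
C. Technical jargon
D. Academic phrases
Answer: A.

When searching for literature, avoid common words, because they return a large number of irrelevant results.

Option B (specific terms), option C (technical jargon), and option D (academic phrases) all help narrow the search.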

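3. Which of the following is NOT a reliable source of literature for academic research?
A. Scholarly journals
B. Popular magazines
C. Academic books
D. Research papers
Answer: B.

In academic research, reliable sources of literature include scholarly journals, academic books, and research papers.

Popular magazines are usually neither academic nor authoritative.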

4. If you can't find a particular piece of literature in your local library, what should you do?
A. Give up
B. Ask a friend
C. Try an online database
D. Wait for it to appear
Answer: C.

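Analysis of Passage D, 2023 National College Entrance Examination (Gaokao) English Paper A

I. Overview of the passage
Passage D of the reading comprehension section in the 2023 national Gaokao English Paper A is about the application of artificial intelligence (AI) in healthcare.

The passage mainly introduces the advantages of AI in diagnosing and treating diseases, as well as its wide application in healthcare.

II. Approach to the questions
1. Main idea: the passage mainly introduces the application of AI in healthcare, so the key to understanding its main idea is to grasp the important role AI plays in healthcare.

2. Detail comprehension: the passage covers many details about AI applications in healthcare, such as diagnostic accuracy and treatment efficiency, so read the passage carefully and locate the details relevant to each question.

3. Logical inference: reasoning logically from the information in the passage helps you answer the questions accurately.

For example, from the AI use cases mentioned in the passage, you can infer that AI has broad application prospects in healthcare.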

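III. Question analysis
1. Question: Passage D, question 1, "What is the main advantage of using artificial intelligence in healthcare?"
Answer: The passage states that the main advantage of AI in healthcare is that it improves the accuracy and efficiency of diagnosing and treating diseases and reduces doctors' workload.

The answer is therefore "it improves the accuracy and efficiency of diagnosing and treating diseases and reduces doctors' workload".

2. Question: Passage D, question 4, "How does artificial intelligence help doctors in the diagnosis of lung cancer?"
Answer: According to the passage, AI can analyze lung CT scan images to help doctors diagnose lung cancer more accurately.

AI can also provide relevant disease information to help doctors draw up more effective treatment plans.

The answer is therefore "AI can analyze lung CT scan images to help doctors diagnose lung cancer more accurately, and it provides relevant disease information to help doctors draw up more effective treatment plans".

IV. Summary of key points
1. Medical imaging diagnosis: the passage mainly discusses the application of AI in medical imaging diagnosis, including the analysis of imaging techniques such as CT scans and MRI.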

PRIDE: A Data Abstraction Layer for Large-Scale 2-tier Sensor Networks

Woochul Kang, University of Virginia, Email: wk5f@
Sang H. Son, University of Virginia, Email: son@
John A. Stankovic, University of Virginia, Email: stankovic@

Abstract: It is a challenging task to provide timely access to global data from sensors in large-scale sensor network applications. Current data storage architectures for sensor networks have to make trade-offs between timeliness and scalability. PRIDE is a data abstraction layer for 2-tier sensor networks, which enables timely access to global data from the sensor tier to all participating nodes in the upper storage tier. The design of PRIDE is heavily influenced by collaborative real-time applications such as search-and-rescue tasks for high-rise building fires, in which multiple devices have to collect and manage data streams from massive numbers of sensors in cooperation. PRIDE achieves scalability, timeliness, and flexibility simultaneously for such applications by combining a model-driven full replication scheme and an adaptive data quality control mechanism in the storage tier. We show the viability of the proposed solution by implementing and evaluating it on a large-scale 2-tier sensor network testbed.
The experiment results show that the model-driven replication provides the benefit of full replication in a scalable and controlled manner.

I. INTRODUCTION

Recent advances in sensor technology and wireless connectivity have paved the way for next-generation real-time applications that are highly data-driven, where data represent real-world status. For many of these applications, data streams from sensors are managed and processed by application-specific devices such as PDAs, base stations, and micro servers. Further, as sensors are deployed in increasing numbers, a single device cannot handle all sensor streams due to their scale and geographic distribution. Often, a group of such devices needs to collaborate to achieve a common goal. For instance, during a search-and-rescue task for a building fire, while PDAs carried by firefighters collect data from nearby sensors to check the dynamic status of the building, a team of such firefighters has to collaborate by sharing their locally collected real-time data with peer firefighters, since each individual firefighter has only limited information from nearby sensors [1]. The building-wide situation assessment requires fusing data from all (or most) of the firefighters.

As this scenario shows, many future real-time applications will interact with the physical world via large numbers of underlying sensors. The data from the sensors will be managed by distributed devices in cooperation. These devices can be either stationary (e.g., base stations) or mobile (e.g., PDAs and smartphones). Sharing data, and allowing timely access to global data for each participating entity, is mandatory for successful collaboration in such distributed real-time applications. Data replication [2] has been a key technique that enables each participating entity to share data and obtain an understanding of the global status without the need for a central server.
In particular, for distributed real-time applications, data replication is essential to avoid unpredictable communication delays [3][4].

PRIDE (Predictive Replication In Distributed Embedded systems) is a data abstraction layer for devices performing collaborative real-time tasks. It is linked to one or more applications at each device, and provides transparent and timely access to global data from underlying sensors via a scalable and robust replication mechanism. Each participating device can transparently access the global data from all underlying sensors without noticing whether it is from local sensors or from remote sensors, which are covered by peer devices. Since global data from all underlying sensors are available at each device, queries on global spatio-temporal data can be efficiently answered using local data access methods, e.g., B+ tree indexing, without further communication. Further, since all participating devices share the same set of data, any of them can be a primary device that manages a sensor. For example, when entities (either sensor nodes or devices) are mobile, any device that is close to a sensor node can be a primary storage node of the sensor node. This flexibility via decoupling the data source tier (sensors) from the storage tier is very important if we consider the highly dynamic nature of wireless sensor network applications.

Even with these advantages, the high overhead of replication limits its applicability [2]. Since potentially a vast number of sensor streams are involved, it is not generally possible to propagate every sensor measurement to all devices in the system. Moreover, the data arrival rate can be high and unpredictable. During critical situations, the data rates can significantly increase and exceed system capacity. If no corrective action is taken, queues will form and the latencies of queries will increase without bound. In the context of centralized systems, several intelligent resource allocation schemes have been proposed to dynamically control the
high and unpredictable rate of sensor streams [5][6][7]. However, no work has been done in the context of distributed and replicated systems.

In this paper, we focus on providing a scalable and robust replication mechanism. The contributions of this paper are:
1) a model-driven scalable replication mechanism, which significantly reduces the overall communication and computation overheads,
2) a global snapshot management scheme for efficient support of spatial queries on global data,
3) a control-theoretic quality-of-data management algorithm for robustness against unpredictable workload changes, and
4) the implementation and evaluation of the proposed approach on a real device with realistic workloads.

To make the replication scalable, PRIDE provides a model-driven replication scheme, in which the models of sensor streams are replicated to peer storage nodes, instead of the data themselves. Once a model for a sensor stream is replicated from a primary storage node of the sensor to peer nodes, the updates from the sensor are propagated to peer nodes only if the prediction from the current model is not accurate enough.
Our evaluation in Section 5 shows that this model-driven approach makes PRIDE highly scalable by significantly reducing the communication/computation overheads. Moreover, the Kalman filter-based modeling technique in PRIDE is lightweight and highly adaptable because it dynamically adjusts its model parameters at run-time without training.

Spatial queries on global data are efficiently supported by taking snapshots from the models periodically. The snapshot is an up-to-date reflection of the monitored situation. Given this fresh snapshot, PRIDE supports a rich set of local data organization mechanisms such as B+ tree indexing to efficiently process spatial queries.

In PRIDE, the robustness against unpredictable workloads is achieved by dynamically adjusting the precision bounds at each node to maintain a proper level of system load, CPU utilization in particular. The coordination is made among the nodes such that relatively under-loaded nodes synchronize their precision bound with a relatively overloaded node.
Using this coordination, we ensure that the congestion at the overloaded node is effectively resolved.

To show the viability of the proposed approach, we implemented a prototype of PRIDE on a large-scale testbed composed of Nokia N810 Internet tablets [8], a cluster computer, and a realistic sensor stream generator. We chose the Nokia N810 since it represents emerging ubiquitous computing platforms such as PDAs, smartphones, and mobile computers, which are expected to interact with ubiquitous sensors in the near future. Based on the prototype implementation, we investigated system performance attributes such as communication/computation loads, energy efficiency, and robustness. Our evaluation results demonstrate that PRIDE takes advantage of full replication in an efficient, highly robust, and scalable manner.

The rest of this paper is organized as follows. Section 2 presents the overview of PRIDE. Section 3 presents the details of the model-driven replication. Section 4 discusses our prototype implementation, and Section 5 presents our experimental results. We present related work in Section 6 and conclusions in Section 7.

II. OVERVIEW OF PRIDE

A. System Model

Fig. 1. A collaborative application on a 2-tier sensor network.
PRIDE envisions 2-tier sensor network systems with a sensor tier and a storage tier, as shown in Figure 1. The sensor tier consists of a large number of cheap and simple sensors, $S = \{s_1, s_2, \ldots, s_n\}$, where $s_i$ is a sensor. Sensors are assumed to be highly constrained in resources, and perform only primitive functions such as sensing and multi-hop communication without local storage. Sensors stream data or events to the nearest storage node. These sensors can be either stationary or mobile; e.g., sensors attached to a firefighter are mobile.

The storage tier consists of more powerful devices such as PDAs, smartphones, and base stations, $D = \{d_1, d_2, \ldots, d_m\}$, where $d_i$ is a storage node. These devices are relatively resource-rich compared with sensor nodes. However, these devices also have limited resources in terms of processor cycles, memory, power, and bandwidth. Each storage node provides in-network storage for underlying sensors, and stores data from sensors in its vicinity. Each node supports multiple radios: an 802.11 radio to connect to a wireless mesh network and an 802.15.4 radio to communicate with underlying sensors. Each node in this tier can be either stationary (e.g., base stations) or mobile (e.g., smartphones and PDAs).

The sensor tier and the storage tier are loosely coupled; the storage node to which a sensor belongs can be changed dynamically without coordination between the two tiers. This loose coupling is required in many sensor network applications if we consider the highly dynamic nature of such systems. For example, the mobility of sensors and storage nodes makes the system design very complex and inflexible if the two tiers are tightly coupled; a complex group management and hand-off procedure is required to handle the mobility of entities [9].
Applications at each storage node are linked to the PRIDE layer. Applications issue queries to the underlying PRIDE layer either autonomously or by simply forwarding queries from external users. In the search-and-rescue task example, each storage node serves as both an in-network data storage for nearby sensors and a device to run autonomous real-time applications for the mission; the applications collect data by issuing queries and analyzing the situation to report results to the firefighter. Hereafter, a node refers to a storage node unless explicitly stated otherwise.

Fig. 2. The architecture of PRIDE (gray boxes).

B. Usage Model

In PRIDE, all nodes in the storage tier are homogeneous in terms of their roles; no asymmetrical function is placed on a sub-group of the nodes. All or part of the nodes in the storage tier form a replication group $R$ to share the data from underlying sensors, where $R \subset D$. Once a node joins the replication group, updates from its local sensors are propagated to peer nodes; conversely, the node can receive updates from remote sensors via peer nodes. Any storage node that is receiving updates directly from a sensor becomes a primary node for the sensor, and it broadcasts the updates from the sensor to peer nodes. However, it should be noted that, as will be shown in Section 3, the PRIDE layer at each node performs model-driven replication, instead of replicating sensor data, to make the replication efficient and scalable.

PRIDE is characterized by the queries that it supports.
PRIDE supports both temporal queries on each individual sensor stream and spatial queries on current global data. Temporal queries on sensor $s_i$'s historical data can be answered using the model for $s_i$. An example of a temporal query is "What was the value of sensor $s_i$ 5 minutes ago?" For spatial queries, each storage node provides a snapshot of the entire set of underlying sensors (both local and remote sensors). The snapshot is similar to a view in database systems. Using the snapshot, PRIDE provides traditional data organization and access methods for efficient spatial query processing. The access methods can be applied to any attributes, e.g., sensor value, sensor ID, and location; therefore, value-based queries can be efficiently supported. Basic operations on the access methods such as insertion, deletion, retrieval, and iterating cursors are supported. Special operations such as join cursors for join operations are also supported by building indexes on multiple attributes, e.g., temperature and location attributes.
This join operation is required to efficiently support complex spatial queries such as "Return the current temperatures of sensors located at room #4."

III. PRIDE DATA ABSTRACTION LAYER

The architecture of PRIDE is shown in Figure 2. PRIDE consists of three key components: (i) the filter & prediction engine, which is responsible for sensor stream filtering, model update, and broadcasting of updates to peer nodes, (ii) the query processor, which handles queries on spatial and temporal data by using a snapshot and temporal models, respectively, and (iii) the feedback controller, which determines proper precision bounds of data for scalability and overload protection.

A. Filter & Prediction Engine

The goals of the filter & prediction engine are to filter out updates from local sensors using models, and to synchronize models at each storage node. The premise of using models is that the physical phenomena observed by sensors can be captured by models and a large amount of sensor data can be filtered out using the models. In PRIDE, when a sensor stream $s_i$ is covered by PRIDE replication group $R$, each storage node in $R$ maintains a model $m_i$ for $s_i$. Therefore, all storage nodes in $R$ maintain the same set of synchronized models, $M = \{m_1, m_2, \ldots, m_n\}$, for all sensor streams in the underlying sensor tier. Each model $m_i$ for sensor $s_i$ is synchronized at run-time by $s_i$'s current primary storage node (note that $s_i$'s primary node can change during run-time because of network topology changes at either the sensor tier or the storage tier).

Algorithm 1 (model synchronization at the primary node)
    Input: update $v$ from sensor $s_i$
    1  $\hat{v}$ = prediction from model for $s_i$;
    2  if $|\hat{v} - v| \geq \delta$ then
    3      broadcast to peer storage nodes;
    4      update data for $s_i$ in the snapshot;
    5      update model $m_i$ for $s_i$;
    6      store to cache for later temporal query processing;
    7  else
    8      discard $v$ (or store for logging);
    9  end

Algorithm 2: OnUpdateFromPeer.

Algorithms 1 and 2 show the basic framework for model synchronization at a primary node and peer nodes, respectively. In Algorithm 1, when an update $v$ is received from sensor $s_i$ at its primary storage node $d_j$, the model
$m_i$ is looked up, and a prediction is made using $m_i$. If the gap between the predicted value from the model, $\hat{v}$, and the sensor update $v$ is less than the precision bound $\delta$ (line 2), then the new data is discarded (or saved locally for logging). This implies that the current models (both at the primary node and the peer nodes) are precise enough to predict the sensor output with the given precision bound. However, if the gap is bigger than the precision bound, this implies that the model cannot capture the current behavior of the sensor output. In this case, $m_i$ at the primary node is updated and $v$ is broadcast to all peer nodes (line 3). In Algorithm 2, as a reaction to the broadcast from $d_j$, each peer node receives a new update $v$ and updates its own model $m_i$ with $v$. The value $v$ is stored in local caches at all nodes for later temporal query processing. As shown in the algorithms, the communication among nodes happens only when the model is not precise enough.

Models, Filtering, and Prediction: So far, we have not discussed a specific modeling technique in PRIDE. Several distinctive requirements guide the choice of modeling technique in PRIDE. First, the computation and communication costs for model maintenance should be low since PRIDE handles a large number of sensors (and corresponding models for each sensor) with collaboration of multiple nodes. The cost of model maintenance increases linearly with the number of sensors.
Second, the parameters of models should be obtained without an extensive learning process, because many collaborative real-time applications, e.g., a search-and-rescue task in a building fire, are short-term and deployed without previous monitoring history. A statistical model that needs extensive historical data for model training is less applicable, even if its filtering and prediction performance is highly efficient. Finally, the modeling should be general enough to be applied to a broad range of applications. Ad-hoc modeling techniques for a particular application cannot be generally used for other applications. Since PRIDE is a data abstraction layer for a wide range of collaborative applications, the generality of modeling is important. To this end, we choose to use the Kalman filter [10][6], which provides a systematic mechanism to estimate the past, current, and future state of a system from noisy measurements. A short summary of the Kalman filter follows.

Kalman Filter: The Kalman filter model assumes the true state at time $k$ is evolved from the state at $(k-1)$ according to

$$x_k = F_k x_{k-1} + w_k, \tag{1}$$

where $F_k$ is the state transition matrix relating $x_{k-1}$ to $x_k$, and $w_k$ is the process noise, which follows $N(0, Q_k)$.

At time $k$ an observation $z_k$ of the true state $x_k$ is made according to

$$z_k = H_k x_k + v_k, \tag{2}$$

where $H_k$ is the observation model, and $v_k$ is the measurement noise, which follows $N(0, R_k)$.

The Kalman filter is a recursive minimum mean-square error estimator. This means that only the estimated state from the previous time step and the current measurement are needed to compute the estimate for the current and future state.
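The filtering rule of Algorithm 1 and the recursive model of Equations 1 and 2 can be sketched together as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `SensorModel`, `peek`, `correct`, `on_update_from_sensor`, and `on_update_from_peer` (apart from the caption of Algorithm 2) are hypothetical, the noise variances `q` and `r` are arbitrary, the state is assumed to be a value and its rate of change with only the value observed, and each update is assumed to carry a timestamp. The snapshot and cache steps of Algorithm 1 are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class SensorModel:
    """Kalman model for one sensor stream; state = [value, rate of change]."""
    x: list = field(default_factory=lambda: [0.0, 0.0])  # state estimate
    P: list = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])  # covariance
    t: float = 0.0    # time of the last measurement update
    q: float = 1e-3   # process-noise variance on each state (assumed value)
    r: float = 0.25   # measurement-noise variance (assumed value)

    def peek(self, t):
        """Predicted value at time t; does not modify the model."""
        return self.x[0] + (t - self.t) * self.x[1]

    def correct(self, t, z):
        """Predict forward to time t, then fold in measurement z."""
        dt = t - self.t
        (p00, p01), (p10, p11) = self.P
        # Predict: x <- F x, P <- F P F^T + Q, with F = [[1, dt], [0, 1]].
        x0, x1 = self.x[0] + dt * self.x[1], self.x[1]
        p00, p01, p10, p11 = (p00 + dt * (p01 + p10) + dt * dt * p11 + self.q,
                              p01 + dt * p11, p10 + dt * p11, p11 + self.q)
        # Update with H = [1, 0]: only the value is observed (assumption).
        s = p00 + self.r               # innovation variance H P H^T + R
        k0, k1 = p00 / s, p10 / s      # Kalman gain K = P H^T / s
        innovation = z - x0
        self.x = [x0 + k0 * innovation, x1 + k1 * innovation]
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]  # (I - K H) P
        self.t = t


def on_update_from_sensor(model, t, v, delta, broadcast):
    """Primary node: propagate v only when the model misses by delta or more."""
    if abs(model.peek(t) - v) >= delta:
        broadcast(t, v)
        model.correct(t, v)
        return True
    return False  # the prediction is within the bound; v is dropped


def on_update_from_peer(model, t, v):
    """Peer node: apply the same correction so that replicas stay identical."""
    model.correct(t, v)


primary = SensorModel(x=[20.0, 0.5])
peer = SensorModel(x=[20.0, 0.5])
# A steady ramp of 0.5 per step, with a +5 jump from step 30 onward.
readings = [20.0 + 0.5 * k + (5.0 if k >= 30 else 0.0) for k in range(40)]
sent = sum(
    on_update_from_sensor(primary, float(k), v, 1.0,
                          lambda t, z: on_update_from_peer(peer, t, z))
    for k, v in enumerate(readings)
)
print(f"{sent} of {len(readings)} readings were broadcast")
```

Because the primary commits a model change only when it broadcasts, and the peer applies the identical correction, both replicas hold the same state without any extra synchronization traffic. While the ramp matches the model no reading is sent; the jump forces a broadcast.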
In contrast to batch estimation techniques, no history of observations is required. In what follows, the notation $\hat{x}_{n|m}$ represents the estimate of $x$ at time $n$ given observations up to, and including, time $m$. The state of a filter is defined by two variables:

$\hat{x}_{k|k}$: the estimate of the state at time $k$ given observations up to time $k$.
$P_{k|k}$: the error covariance matrix (a measure of the estimated accuracy of the state estimate).

The Kalman filter has two distinct phases: Predict and Update. The predict phase uses the state estimate from the previous timestep $k-1$ to produce an estimate of the state at the next timestep $k$. In the update phase, measurement information at the current timestep $k$ is used to refine this prediction to arrive at a new, more accurate state estimate, again for the current timestep $k$. When a new measurement $z_k$ is available from a sensor, the true state of the sensor is estimated using the previous prediction $\hat{x}_{k|k-1}$ and the weighted prediction error. The weight is called the Kalman gain $K_k$, and it is updated on each prediction/update cycle. The true state of the sensor is estimated as follows:

$$\hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k (z_k - H_k \hat{x}_{k|k-1}), \tag{3}$$

$$P_{k|k} = (I - K_k H_k) P_{k|k-1}. \tag{4}$$

The Kalman gain $K_k$ is updated as follows:

$$K_k = P_{k|k-1} H_k^T (H_k P_{k|k-1} H_k^T + R_k)^{-1}. \tag{5}$$

At each prediction step, the next state of the sensor is predicted by

$$\hat{x}_{k|k-1} = F_k \hat{x}_{k-1|k-1}. \tag{6}$$

Example: For instance, a temperature sensor can be described by the linear state space $x_k = \begin{bmatrix} x & \frac{dx}{dt} \end{bmatrix}^T$, where $x$ is the temperature and $\frac{dx}{dt}$ is the derivative of the temperature with respect to time. As a new (noisy) measurement $z_k$ arrives from the sensor (see footnote 1), the true state and model parameters are estimated by Equations 3-5. The future state of the sensor at the $(k+1)$th time step after $\Delta t$ can be predicted using Equation 6, where the state transition matrix is

$$F = \begin{bmatrix} 1 & \Delta t \\ 0 & 1 \end{bmatrix}. \tag{7}$$

It should be noted that the parameters for the Kalman filter, e.g., $K$ and $P$, do not have to be accurate in the beginning; they can be estimated at run-time and their accuracy improves gradually with more sensor measurements. We
do not need massive past data for modeling at deployment time. In addition, the update cycle of the Kalman filter (Equations 3-5) is performed at all storage nodes when a new measurement is broadcast, as shown in Algorithm 1 (line 5) and Algorithm 2 (line 2). No further communication is required to synchronize the parameters of the models. Finally, as will be shown in Section 5, the prediction/update cycle of the Kalman filter incurs insignificant overhead to the system.

Footnote 1: Note that the temperature component of $z_k$ is directly acquired from the sensor, and dx

B. Query Processor

The query processor of PRIDE supports both temporal queries and spatial queries, with a planned extension to support spatio-temporal queries.

Temporal Queries: Historical data for each sensor stream can be processed in any storage node by exploiting data at the local cache and a linear smoother [10]. Unlike the estimation of current and future states using one Kalman filter, the optimized estimation of historical data (sometimes called smoothing) requires two Kalman filters, a forward filter $\hat{x}$ and a backward filter $\hat{x}_b$. Smoothing is a non-real-time data processing scheme that uses all measurements between $0$ and $T$ to estimate the state of a system at a certain time $t$, where $0 \leq t \leq T$ (see Figure 3). The smoothed estimate $\hat{x}(t|T)$ can be obtained as a linear combination of the two filters as follows:

$$\hat{x}(t|T) = A\,\hat{x}(t) + A'\,\hat{x}_b(t), \tag{8}$$

where $A$ and $A'$ are weighting matrices. For a detailed discussion of smoothing techniques using Kalman filters, the reader is referred to [10].

Fig. 3. Smoothing for temporal query processing.

Spatial Queries: Each storage node maintains a snapshot for all underlying local and remote sensors to handle queries on global spatial data. Each element (or data object) of the snapshot is an up-to-date value from the corresponding sensor. The snapshot is dynamically updated either by new measurements from sensors or by models (see footnote 2). Algorithm 1 (line 4) and Algorithm 2 (line 1) show the snapshot updates when a new observation is pushed from a local sensor and a
peer node, respectively. As explained in the previous section, there is no communication among storage nodes when models represent the current observations from sensors well. When there is no update from peer nodes, the freshness of values in the snapshot deteriorates over time. To maintain the freshness of the snapshot even when there are no updates from peer nodes, each value in the snapshot is periodically updated by its local model. Each storage node can estimate the current state of sensor $s_i$ using Equation 6 without communication to the primary storage node of $s_i$. For example, a temperature after 30 seconds can be predicted by setting $\Delta t$ of the transition matrix in Equation 7 to 30 seconds.

The period of update of data object $i$ for sensor $s_i$ is determined such that the precision bound $\delta$ is observed. Intuitively, when a sensor value changes rapidly, the data object should be updated more frequently to keep the data object in the snapshot valid. In the example of Section 3.1.1, the period can be dynamically estimated as follows:

$$p[i] = \frac{1}{2} \cdot \frac{\delta}{\left|\frac{dx}{dt}\right|},$$

where $\delta / \left|\frac{dx}{dt}\right|$ is the absolute validity interval (avi) before the data object in the snapshot violates the precision bound, which is $\pm\delta$. The update period should be as short as half of the avi to keep the data object fresh [11]. Since each storage node has an up-to-date snapshot, spatial queries on global data from sensors can be efficiently handled using local data access methods (e.g., B+ tree) without incurring further communication delays.

Footnote 2: Note that the data structures for the snapshot, such as indexes, are also updated when each value of the snapshot is updated.

Fig. 4. Varying data precision: (a) $\delta = 5$ °C, (b) $\delta = 10$ °C.

Figure 4 shows how the value of one data object in the snapshot changes over time when we apply different precision bounds. As the precision bound gets bigger, the gap between the real state of the sensor (dashed lines) and the current value at the snapshot (solid lines) increases. In the solid lines, the discontinuities are where the model prediction and the real
measurement from the sensor differ by more than the precision bound, and subsequent communication is made among storage nodes for model synchronization. For applications and users, maintaining a smaller precision bound implies having a more accurate view of the monitored situation. However, the overhead also increases as the precision bound gets smaller.

Given the unpredictable data arrival rates and resource constraints, compromising the data quality for system survivability is unavoidable in many situations. In PRIDE, we consider processor cycles as the primary limited resource, and the resource allocation is performed to maintain the desired CPU utilization. Utilization control is used to enforce appropriate schedulable utilization bounds, so that application guarantees can be maintained despite significant uncertainties in system workloads [12][5]. In utilization control, it is assumed that any cycles that are recovered as a result of control in the PRIDE layer are used sensibly by the scheduler in the application layer to relieve the congestion, or to save power [12][5]. It can also enhance system survivability by providing overload protection against workload fluctuation.

Specification: At each node, the system specification $\langle U, \delta_{max} \rangle$ consists of a utilization specification $U$ and the precision specification $\delta_{max}$. The desired utilization $U \in [0..1]$ gives the required CPU utilization that does not overload the system while satisfying the target system performance, such as latency and energy consumption. The precision specification $\delta_{max}$ denotes the maximum tolerable precision bound. Note that there is no lower bound on the precision, as in general users require a precision bound as small as possible (if the system is not overloaded).

Local Feedback Control to Guarantee the System Specification: Feedback control has been shown to be very effective for a large class of computing systems that exhibit unpredictable workloads and model inaccuracies [13]. Therefore, to guarantee the system specification without a priori
knowledge of the workload or an accurate system model, we apply feedback control.

Fig. 5. The feedback control loop.

The overall feedback control loop at each storage node is shown in Figure 5. Let $T$ be the sampling period. The utilization $u(k)$ is measured at each sampling instant $0T, 1T, 2T, \ldots$ and the difference between the target utilization and $u(k)$ is fed into the controller. Using the difference, the controller computes a local precision bound $\delta(k)$ such that $u(k)$ converges to $U$.

The first step in local controller design is modeling the target system (storage node) by relating $\delta(k)$ to $u(k)$. We model the relationship between $\delta(k)$ and $u(k)$ by using profiling and statistical methods [13]. Since $\delta(k)$ has a higher impact on $u(k)$ as the size of the replication group increases, we need different models for different sizes of the group. We change the number of members of the replication group exponentially from 2 to 64 and have tuned a set of first-order models $G_n(z)$, where $n \in \{2, 4, 8, 16, 32, 64\}$. $G_n(z)$ is the z-transform transfer function of the first-order models, in which $n$ is the size of the replication group. After the modeling, we design a controller for the model. We have found that a proportional integral (PI) controller [13] is sufficient in terms of providing a zero steady-state error, i.e., a zero difference between $u(k)$ and the target utilization bound. Further, a gain scheduling technique [13] has been used to apply different controller gains for different sizes of replication groups. For instance, the gain for $G_{32}(z)$ is applied if the size of a replication group is bigger than 24 and less than or equal to 48.
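The local control loop described above might look roughly like the following sketch. It is illustrative only: the class name `PrecisionController`, the gain values, the sign convention of the error, and the integral clamping are assumptions, and the paper does not give its tuned gains. The range boundaries in `scheduled_model_size` generalize the single stated example (24 < size ≤ 48 selects $G_{32}$) to "the smallest $n$ with size ≤ 1.5n", which is also an assumption.

```python
GROUP_SIZES = (2, 4, 8, 16, 32, 64)  # sizes for which models G_n(z) were tuned


def scheduled_model_size(group_size):
    """Pick n for gain scheduling: smallest n with group_size <= 1.5 * n.

    This reproduces the stated case (24 < size <= 48 -> n = 32); the other
    ranges are extrapolated and therefore hypothetical.
    """
    for n in GROUP_SIZES:
        if group_size <= 1.5 * n:
            return n
    return GROUP_SIZES[-1]


class PrecisionController:
    """PI controller that turns CPU utilization error into a precision bound."""

    def __init__(self, target_u, delta_max, gains):
        self.target_u = target_u    # desired utilization U in [0, 1]
        self.delta_max = delta_max  # maximum tolerable precision bound
        self.gains = gains          # {n: (kp, ki)}, hypothetical values, ki > 0
        self.integral = 0.0

    def step(self, measured_u, group_size):
        """One sampling period: return the local precision bound delta(k)."""
        kp, ki = self.gains[scheduled_model_size(group_size)]
        error = measured_u - self.target_u  # positive when the node is overloaded
        # Clamp the integral so that it cannot wind up beyond the usable range.
        self.integral = min(max(self.integral + error, 0.0), self.delta_max / ki)
        delta = kp * error + ki * self.integral
        return min(max(delta, 0.0), self.delta_max)


controller = PrecisionController(
    target_u=0.7, delta_max=10.0,
    gains={n: (2.0, 1.0) for n in GROUP_SIZES},
)
first = controller.step(measured_u=0.9, group_size=8)
second = controller.step(measured_u=0.9, group_size=8)
print(first, second)  # the bound grows while the overload persists
```

A larger precision bound filters out more sensor updates, so raising $\delta(k)$ while utilization exceeds $U$ sheds load; when the node is under-loaded the bound falls back toward zero.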
Due to space limitations, we do not provide a full description of the design and tuning methods.

Coordination among Replication Group Members: If each node independently sets its own precision bound, the net precision bound of data becomes unpredictable. For example, at node $d_j$, the precision bounds for local sensor streams are determined by $d_j$ itself, while the precision bounds for remote sensor streams are determined by their own primary storage nodes.

PRIDE takes a conservative approach in coordinating storage nodes in the group. As Algorithm 3 shows, the global precision bound for the $k$th period is determined by taking the maximum from the precision bounds of all nodes in the replication group $R$.

Algorithm 3
    Input: myid: my storage id number
    1  /* Get local δ. */
    2  measure $u(k)$ from monitor;
    3  calculate $\delta_{myid}(k)$ from local controller;
    4  foreach peer node $d$ in $R - \{d_{myid}\}$ do
    5      /* Exchange local δs. */
    6      /* Use piggyback to save communication cost. */
    7      send $\delta_{myid}(k)$ to $d$;
    8      receive $\delta_i(k)$ from $d$;
    9  end
    10 /* Get the final global δ. */
    11 $\delta_{global}(k) = \max(\delta_i(k))$, where $i \in R$;
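The final step of Algorithm 3 can be illustrated with a short sketch. The function name `global_precision_bound` and the node identifiers are hypothetical, and the message exchange is replaced by a plain dictionary that every node is assumed to hold once the exchange completes.

```python
def global_precision_bound(local_bounds):
    """Return the group-wide bound: the largest local bound in the group.

    local_bounds maps a node id to that node's local bound for the current
    period, and must include the calling node's own entry.
    """
    return max(local_bounds.values())


# After the exchange, every node holds the same set of local bounds,
# so every node computes the same global bound.
bounds = {"d1": 0.5, "d2": 2.0, "d3": 1.0}
delta_global = global_precision_bound(bounds)
print(delta_global)
```

Taking the maximum means the most heavily loaded node dictates the bound for the whole group, which matches the conservative approach the text describes: under-loaded nodes relax their precision to relieve the overloaded one.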

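Senior Three English: 40 Single-Choice Questions on Computer Language (with Answers)

1. The computer's CPU is like the ______ of a car.
A. engine
B. wheel
C. seat
D. window
Answer: A.

The CPU (central processing unit) is the core component of a computer, just as the engine is for a car.

Option B, "wheel", option C, "seat", and option D, "window", do not match the function and role of a CPU.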

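2. The monitor is often called the ______ of a computer.
A. heart
B. eye
C. hand
D. foot
Answer: B.

The monitor is often called the eye of a computer because, like an eye, it presents information visually.

Option A, "heart", option C, "hand", and option D, "foot", do not fit the characteristics of a monitor.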

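3. The hard drive is like a computer's ______.
A. memory bank
B. speaker
C. keyboard
D. mouse
Answer: A.

The hard drive is like the computer's memory bank: it is used to store data.

Option B, "speaker", option C, "keyboard", and option D, "mouse", have nothing to do with storage.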

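4. The RAM of a computer is similar to the ______ of a building.
A. foundation
B. roof
C. wall
D. door
Answer: A.

A computer's random-access memory (RAM) is similar to a building's foundation: it provides temporary storage space that the computer needs in order to run.

Option B, "roof", option C, "wall", and option D, "door", do not match the role of RAM.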

5. The graphics card is mainly responsible for ______.
A. processing data
B. displaying images
C. storing files
D. typing words
Answer: B.

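Reading Passages from the December 2023 CET-4 Exam

In the December 2023 CET-4 exam, the reading comprehension section contained three passages. The original texts and the corresponding questions follow.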

Passage 1:
Title: A comparison of e-books and printed books

With the increasing popularity of e-books, there has been much discussion about their advantages and disadvantages compared to traditional printed books. While e-books offer convenient access and the ability to search and alter text, some argue that there is something missing in their reading experience.

Traditional printed books allow readers to flip through pages, annotate, and feel the weight and texture of the book, which some claim enhances the reading experience. On the other hand, e-books offer portability, ease of storage, and the ability to change font size and background color, which can be beneficial for individuals with vision problems.

A recent study found that while most people prefer the feel of a printed book, a significant minority prefers the convenience of e-books. This highlights the fact that personal preference plays a significant role in choosing between the two formats.

Questions:
1. What are some of the advantages of e-books?
Answer: E-books offer convenient access, the ability to search and alter text, and portability. They are also easy to store.
2. Why do some people prefer printed books to e-books?
Answer: Some people prefer printed books because they enjoy flipping through pages, annotating, and feeling the weight and texture of the book, which they claim enhances the reading experience.
3. How can individuals with vision problems benefit from e-books?
Answer: Individuals with vision problems can benefit from e-books by changing the font size and background color to improve readability.

Passage 2:
Title: The impact of social media on mental health

Social media has become a ubiquitous part of modern life, with nearly everyone using platforms such as Facebook, Twitter, and Instagram. While social media has brought many benefits, there is growing concern about its impact on mental health.

One of the main concerns is the pressure social media places on individuals to portray a perfect life that often does not exist.
This can lead to feelings ofinadequacy, anxiety, and depression among users as they compare their lives to others'.In addition, social media fosters a culture of narcissism, where people are constantly preoccupied with their own image and validation from others. This can lead to a lack of empathy and a focus on self-gratification rather than meaningful relationships.However, some argue that social media can also be a tool for connecting and supporting individuals facing mental health challenges. Platforms allow individuals to share their experiences, seek help, and build communities around shared interests or illnesses.问答题:1.What are some potential negative impacts of social media on mental health?⏹答案:Social media can lead to feelings of inadequacy, anxiety, depression,narcissism, a lack of empathy, and a focus on self-gratification rather than meaningful relationships.1.How can social media be a positive tool for individuals facing mental healthchallenges?⏹答案:Social media can allow individuals facing mental health challenges toconnect and seek support by sharing their experiences, seeking help, and building communities around shared interests or illnesses.文章三:Title: The importance of sleep for memory retentionSleep is essential for maintaining physical and mental well-being, and recent research has shown that it is particularly crucial for memory retention. Sleep deprivation can lead to memory impairment and negative effects on cognitive function.During sleep, the brain undergoes a process of consolidation where it reviews and strengthens memories, making them more accessible during wakeful hours. Sleep also plays a role in integrating new information into existing knowledge networks.Studies have shown that individuals who do not get enough sleep struggle with memory retention and cognitive tasks compared to those who sleep well. 
The quality of sleep is also important; disrupted or shallow sleep can interfere with memory consolidation.In conclusion, sleep is essential for optimal cognitive function and memory retention. To ensure healthy brain function, it is important to prioritize sleep and aim for a consistent sleep schedule.。

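Senior Two English: Technology-Themed Practice Questions (40 Questions) with Answers and Explanations

1. The new technology has greatly improved our lives. It is really _____.
A. advanced
B. outdated
C. primitive
D. obsolete
Answer and explanation: A. This question tests the ability to distinguish technology-related vocabulary. Option A, "advanced", fits the context of new technology improving our lives. Option B, "outdated", does not match the meaning of the sentence. Option C, "primitive", does not match the characteristics of new technology either. Option D, "obsolete" (no longer in use), is likewise inappropriate.

2. In the field of technology, innovation is crucial. We need to constantly develop _____ ideas.
A. old-fashioned
B. traditional
C. innovative
D. conservative
Answer and explanation: C. The phrase "innovation is crucial" shows that innovative ideas are needed, and option C, "innovative", means exactly that. Option A, "old-fashioned", is wrong. Option B, "traditional", does not fit either. Option D, "conservative", is unsuitable.

3. The latest smartphone has many _____ features.
A. advanced
B. basic
C. simple
D. primitive
Answer and explanation: A. The latest smartphone should have advanced features, so option A, "advanced", is correct. Option B, "basic", does not highlight what distinguishes the latest phone. Option C, "simple", is inaccurate. Option D, "primitive", is entirely wrong.

4. Technology has made our communication more _____.
A. difficult
B. easy
C. complicated
D. confusing
Answer and explanation: B.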

Gaokao English 2024, New Curriculum Standard Paper I

Part I: Listening (30 points)

Section A
1. Based on the conversations you hear, choose the correct answer.

- Question 1: What is the man going to do this weekend?
  - A. Visit his parents.
  - B. Go to a concert.
  - C. Work on a project.
- Question 2: Why does the woman suggest the man should take a break?
  - A. He has been working too hard.
  - B. He needs to prepare for an exam.
  - C. He is going to have a meeting.
...

Section B
1. Based on the passage you hear, choose the correct answer.

- Question 1: What is the main topic of the passage?
  - A. The importance of environmental protection.
  - B. The impact of technology on education.
  - C. The benefits of physical exercise.
- Question 2: What does the speaker think about the future of education?
  - A. It will be completely online.
  - B. It will be a combination of online and offline learning.
  - C. It will not change much.
...

Part II: Reading Comprehension (40 points)

Passage 1
A new study has found that regular exercise can significantly improve memory and cognitive function in older adults. The research, conducted by a team of scientists at the University of XYZ, involved a group of participants aged between 60 and 80. They were asked to engage in moderate physical activity for 30 minutes a day, five days a week, over a period of six months.

Questions:
1. What was the age range of the participants in the study?
2. How long did the participants engage in physical activity each day?
3. What was the duration of the study?

Passage 2
The article discusses the impact of social media on teenagers' mental health. It highlights the negative effects such as increased anxiety and depression, while also acknowledging the positive aspects like the ability to connect with others and share experiences.

Questions:
1. What is the main concern of the article?
2. What are some of the negative effects of social media mentioned?
3. How does the article view the positive aspects of social media?

Passage 3
...

Part III: Cloze (20 points)

Once upon a time, in a small village, there lived an old man named John. He was known for his wisdom and kindness. One day, a young boy approached him with a problem. The boy had lost his favorite toy, and he was very upset.

John listened to the boy's story and then said, "Life is full of ups and downs. It's important to learn from our losses and to keep moving forward."

The boy looked at John with confusion and asked, "But how can I find my toy?"

John smiled and said, "Sometimes, the things we lose are not meant to be found. Instead, we should focus on the lessons we learn from the experience."

The boy thought about John's words and slowly began to understand the importance of resilience and acceptance.

Part IV: Grammar Fill-in (10 points)

In recent years, the popularity of online shopping has grown rapidly. Many people prefer to buy products online because it is convenient and time-saving. However, there are also some disadvantages, such as the inability to try products before buying them.

1. The popularity of online shopping has grown rapidly because it is convenient and time-saving.
2. Despite its convenience, online shopping also has some disadvantages, such as the inability to try products before buying them.

Part V: Error Correction (10 points)

One day, a boy was walking along the street when he suddenly saw a wallet on the ground. He picked it up and found that it was full of money. He decided to hand it in to the police station. The police officer thanked him and asked for his name, but the boy refused to tell. He said that he did not want to receive any reward for his honesty.

Part VI: Writing (30 points)

Suppose you are Li Hua. Your American friend Tom is very interested in traditional Chinese festivals.

2025 National College English Test (CET-4): Review Questions with Reference Answers

I. Writing (15 points)

Part I Writing (30 points)

Directions: For this part, you are allowed 30 minutes to write a short essay on the topic "The Impact of Artificial Intelligence on Daily Life." You should start your essay with a brief introduction to the topic, then give specific examples to illustrate your point, and finally, provide a conclusion with your personal view. Your essay should be about 120 to 150 words but no less than 100 words.

Writing Sample:

The advent of artificial intelligence (AI) has revolutionized our daily lives in numerous ways. From smart homes to advanced medical diagnosis, AI has become an integral part of modern society.

In smart homes, AI systems like voice assistants and smart security cameras enhance our convenience and safety. These systems learn from our habits and preferences, making our homes more comfortable and efficient. Moreover, in the healthcare sector, AI algorithms are being used to analyze medical images and identify potential diseases at an early stage, which can significantly improve patient outcomes.

However, the rise of AI also brings challenges. For example, job displacement is a major concern, as AI can perform certain tasks more efficiently than humans.
Additionally, there are ethical questions about privacy, data security, and the potential misuse of AI technology.

In conclusion, while AI has brought substantial benefits to our daily lives, we must also address its challenges to ensure a balanced and ethical integration of AI into our society.

Writing Analysis:
- Introduction: The essay starts with a clear introduction to the topic of AI and its impact on daily life, providing a broad perspective.
- Body Paragraphs: The body of the essay presents two distinct impacts of AI:
  - The positive impact of AI in smart homes and healthcare.
  - The negative impacts of job displacement and ethical concerns.
- Conclusion: The essay concludes with a balanced view, acknowledging both the benefits and challenges of AI, and emphasizing the need for ethical considerations.
- Structure and Coherence: The essay has a clear structure and is well-organized, making the flow of ideas easy to follow.
- Length: The essay meets the required word count, with 120 words, demonstrating the writer's ability to convey the main points concisely.

II. Listening Comprehension: Short News Reports (multiple choice, 7 points)

First Question

News:
In recent years, global attention has been drawn to the rapid development of electric vehicles (EVs). According to a recent report by the International Energy Agency (IEA), the number of electric vehicles on the roads worldwide reached 13 million in 2021, up from just 2 million in 2015.
The report also indicates that by 2030, the number of electric vehicles is expected to surpass 145 million.

Question 1: What has the number of electric vehicles on the roads reached as of 2021 according to the recent report by the IEA?
A) 1 million
B) 13 million
C) 2 million
Answer: B

Question 2: How many years are covered between 2015 and 2021, as mentioned in the report?
A) 5 years
B) 6 years
C) 7 years
Answer: B

Question 3: What is the expected number of electric vehicles by 2030 according to the report?
A) 13 million
B) 2 million
C) 145 million
Answer: C

Second Question

News Item 1:
A new study reveals that the global use of electric scooters has increased significantly in recent years. These scooters are becoming a popular form of transportation in cities around the world. However, the study also highlights the environmental and safety concerns associated with the rapid growth in electric scooter usage.

Cities are faced with the challenge of managing the increased demand for parking spaces, as well as the potential risks of accidents involving these scooters. Improved infrastructure and regulations are being considered to address these issues.

Questions:
1. What is the primary topic of the news item?
A. The decline of traditional scooters
B. The environmental impact of electric scooters
C. The safety concerns of using electric scooters
D. The rise in global use of electric scooters

2. "These scooters are becoming a popular form of transportation in cities around the world." Which of the following is true regarding the use of electric scooters?
A. They are only popular in developed countries.
B. They have no environmental impact.
C. They are causing a decrease in car usage.
D. They have become a common mode of transportation globally.

3. "Improved infrastructure and regulations are being considered to address these issues." What is the implied issue that needs to be addressed?
A. The overuse of public transportation.
B. The need for more parking spaces for cars.
C. The decline in bicycle usage.
D. The potential safety risks and management challenges posed by electric scooters.

Answers:
1. D
2. D
3. D

III. Listening Comprehension: Long Conversations (multiple choice, 8 points)

First Question

Conversation
A: Hey, Sarah! Did you finish listening to the podcast this morning?
B: Yeah, I did. It was quite fascinating. Have you checked the transcript on their webpage?
A: Not yet. I plan to review what we heard today after work. By the way, I was thinking it would be nice to form a study circle this semester.
B: That sounds like a good idea. Could you host a meeting this weekend?
A: Sure, I can. I'll prepare some questions for us to discuss, and you can bring in your notes. It'll make our learning more productive.
B: Great! Should we stick to the topics in the podcast or choose something else?
A: Let's talk about the topics in the podcast first. That way, it'll help us understand the context better.
B: Sounds perfect. I have a couple of questions for you. How long have you been listening to podcasts?
A: For about a year now. I find it's a great way to learn English while doing something productive.
B: I agree. What's your favorite podcast?
A: Hmm, I really like "The Economist Briefing." It covers current events and history, which are topics I find interesting.
B: Nice choice. I'm a fan of "TED Talks Daily." It's a bit different from "The Economist Briefing" but still educational.
A: That's true. We can switch up the topics as we like. What are you studying?
B: I'm majoring in international relations. The podcast really helps me get more insights into what I'm studying.
A: That's awesome. What about your plans for the future?
B: I hope to travel around Europe for my study abroad program next year, so I'm trying to learn more European languages. It would be a great opportunity to practice my English as well.
A: That sounds exciting! This weekend, let's meet for an hour at my place, okay?
B: Sure, that works for me.

Q1. What is one reason Sarah likes listening to this podcast?
a) To practice her English.
b) To pass CET-4.
c) To prepare for a trip.
d) To learn her major subject.
Answer: a

Q2. How long has the speaker been listening to podcasts?
a) One year
b) Two years
c) Three years
d) Half a year
Answer: a

Q3. Who does the speaker admire for choosing "TED Talks Daily"?
a) Sarah
b) A friend
c) A professor
d) Another student
Answer: a

Q4. What will they do this weekend?
a) Meet for an hour at the speaker's place.
b) Join a club activity.
c) Go to a coffee shop.
d) Attend a lecture on English.
Answer: a

Question 2: Why does Liu feel a bit nervous about the exam?
A) He is preparing for it for too long.
B) He hasn't studied hard enough.
C) His friends are also enrolled in CET-4 course classes.
D) He needs to take a break soon.
Answer: A

Question 3: What advice does Amy give to Liu?
A) Enroll in a CET-4 course class.
B) Review the past papers.
C) Study every day.
D) Take a break.
Answer: B

Question 4: What can be inferred about Liu from the conversation?
A) He is confident about the exam.
B) He has been preparing for the exam for a long time.
C) He is ready for the upcoming exam.
D) He doesn't like studying hard.
Answer: B

IV. Listening Comprehension: Passages (multiple choice, 20 points)

First Question

Directions: In this section, you will hear a passage. Listen carefully and answer the questions that follow.

Passage:
In today's fast-paced digital world, it has become increasingly important for businesses to adopt technologies that improve their efficiency and customer satisfaction. The rise of artificial intelligence (AI) and machine learning (ML) has led to significant advancements in the field of business operations.
Companies are now exploring various ways to integrate these technologies to enhance their processes.

1. What aspect of business operations has seen significant advancements due to AI and ML integration?
A) Customer service
B) Logistics
C) Financial management
D) A

2. Why is the adoption of AI and ML technologies regarded as important for businesses?
A) To reduce operational costs
B) To improve customer satisfaction
C) To increase operational efficiency
D) C

3. Which of the following is NOT an example of how businesses can integrate AI and ML?
A) Enhancing predictive analytics
B) Automating routine tasks
C) Increasing manual data entry
D) C

Second Question

Passage 1
The globalization of the economy has brought about significant changes in the world, and one area that has been heavily affected is the sports industry. In this essay, we will explore how globalization has impacted the sports industry, focusing on the growth of international sports events and the role of sports in global culture.

1. Why is globalization having a profound impact on the sports industry?
A) Because it allows sports to be practiced anywhere in the world.
B) Because it has led to the growth of international sports events.
C) Because it has changed the way people culture around the world.
D) Because it has increased the salaries of professional athletes.

2. Which of the following is not mentioned as a change brought about by globalization in the sports industry?
A) The increase in cross-cultural interactions.
B) The decline in local sports teams.
C) The rise of regional sports leagues.
D) The increase in global fan bases for various sports.

3. What is the main argument made by the essay about the role of sports in global culture?
A) Sports have a single focus on winning and losing.
B) Sports help to foster national pride and identity.
C) Sports have become a way for countries to cooperatively compete.
D) Sports have lost their relevance due to increased commercialization.

Answer Key:
1. B
2. B

Third Question: Listening Comprehension - Listening Passage

Passage:
Welcome to our final research trip to India. We are in a small village in the state of Kerala, known for its rich cultural heritage and scenic beauty. The village, named Paravoor, has a population of approximately 15,000. Today, we focus on the local economy, which is largely dependent on farming, tourism, and small-scale industries. Currently, the village is facing several challenges, including water scarcity and lack of proper infrastructure. The government plans to implement a new irrigation project, which will provide a significant boost to the agricultural sector. In addition, the village is promoting eco-tourism to diversify its economic base. However, these initiatives require support and investment from both the government and the local community.

1. Which of the following is NOT a challenge facing Paravoor Village?
A. Water scarcity
B. Lack of proper infrastructure
C. Dependence on large-scale industries
D. C.

2. What is the villagers' plan to diversify their economic base?
A. Developing new industries
B. Promoting eco-tourism
C. Increasing agricultural production

3. Which of the following is a potential benefit of the new irrigation project?
A. It will help diversify the local economy.
B. It will improve the infrastructure.
C. It will provide water to the entire state.
D. C.

V. Reading Comprehension: Vocabulary (fill in the blanks, 5 points)

First Question

Reading Passage
Alice, receiving a ring, was extremely pleased. Her father promptly asked, "Have you made up your mind, my dear?" "Not quite," said Alice ominously, stepping out of her ring. "But I will do so directly," she declared.

With a faint shiver of delight, the father experienced her civil but firm decision and then together they went to bet {?1?} her little servant girl a seventeen-pound horse. While they were thus occupied, the children saw their disagreement.
The richest and keenest-uprisinguchepest, perfectly struck their fancy, and though their(Game) competitive position was, by no means, satisfactory, they had no objection to feel very sorry for the seller.

1. civil
A. very happy
B. polite; civilized
C. countless; endless
D. outstanding

2. competitive
A. involving rivalry; relating to contests
B. jealous; hostile
C. disgusting; annoying
D. incompetent; unqualified

3. keen
A. bitter; sharp-edged
B. sharp; perceptive
C. happy; pleasant
D. dull; boring

4. ominous
A. inauspicious; foreboding
B. mild; refined
C. cheerful; pleasant
D. excited; thrilled

5. shiver
A. to tremble; to shake
B. fresh water
C. a rapid rainfall
D. a soft animal

Answers:
1. B
2. A
3. B
4. A
5. A

Second Question

Directions: Read the following text and complete the sentences below. There is one word or phrase missing in each sentence. Choose the most appropriate word or phrase from the options given below each sentence.

Reading Passage:
The rapid growth of technology has profoundly transformed our social fabric. From the emergence of the internet to the advent of smartphones, our daily interactions and work routines have been fundamentally altered. These technological advancements have not only facilitated instant communication but also expanded our access to information. However, this shift comes with its own set of challenges. For instance, while the internet provides a vast array of resources, it also exposes us to misinformation, and the need for digital literacy is increasingly important.
Moreover, the reliance on technology in the workplace has raised concerns about job security, as automation and artificial intelligence continue to evolve and change the nature of work.

1. The word "fabric" (Line 1) most closely relates to the following word: ___
Options: a) fabric b) structure c) society d) clothing
Answer: c) society

2. The phrase "emergence of the internet" (Line 3) can be replaced with which of the following: ___
Options: a) the start of the internet b) the appearance of the internet c) the deployment of the internet d) the invention of the internet
Answer: b) the appearance of the internet

3. The word "instant" (Line 4) is synonymous with: ___
Options: a) immediate b) brief c) quick d) rapid
Answer: a) immediate

4. The challenge mentioned in the passage regarding the internet is: ___
Options: a) accessing information b) exposure to misinformation c) maintaining digital literacy d) balancing physical and digital interactions
Answer: b) exposure to misinformation

5. The phrase "nature of work" (Line 7) refers to: ___
Options: a) the quality of work b) the purpose of work c) the essence of work d) the value of work
Answer: c) the essence of work

VI. Reading Comprehension: Long Passage (multiple choice, 10 points)

First Question

Reading Passage One
It is widely accepted that education is of great importance to all people. However, there are many arguments on its necessity. While some people believe it is important to receive an education, others argue that education is not essential in one's life.

One of the main arguments for education is that it offers opportunities for personal development. With a good education, individuals can acquire the knowledge and skills needed to succeed in life. They can also improve their critical thinking abilities and make informed decisions. Furthermore, an education can help individuals become more adaptable and flexible, enabling them to thrive in a changing world.

Opponents of education argue that people can succeed without it.
They cite examples of successful individuals who dropped out of school, such as Steve Jobs and Bill Gates. They believe that talent and opportunities can compensate for a lack of formal education.

In the following passage, there are some statements about education. Choose the most suitable answer for each of the following questions.

Questions 1-5

1. Which of the following is the main issue discussed in the reading passage?
A. The benefits of education
B. The drawbacks of education
C. The importance of personal development
D. The relationship between education and success

2. What do the proponents of education believe about the role of education in personal development?
A. Education hinders personal growth.
B. Education does not contribute to skill acquisition.
C. Education improves critical thinking and decision-making skills.
D. Education makes individuals less adaptable.

3. What is the main argument against education mentioned in the passage?
A. Education limits personal development.
B. Successful individuals can compensate for a lack of education.
C. Education stifles creativity and innovation.
D. Education takes away opportunities for self-betterment.

4. Which of the following does the reading passage NOT mention as a reason for supporting education?
A. Increased opportunities for employment.
B. Enhanced critical thinking abilities.
C. Improved adaptability and flexibility.
D. Theernenment in international cooperation.

5. What is the author's attitude towards the debate on education?
A. The author believes that education is unnecessary.
B. The author supports the idea that education is essential for personal development.
C. The author prefers talent and opportunities over education.
D. The author is neutral on the issue of education.

Answer Key:
1. A
2. C
3. B
4. D
5. B

Second Question

Passage:
The concept of cloud computing has been discussed for decades, but it has only recently become a practical solution for businesses and individuals.
It all began with the idea of using the Internet as a transmission medium for data and applications. As technology advanced, the costs of storage and bandwidth became more affordable, making cloud computing a viable option. Today, cloud services range from simple file storage to complex application delivery, and they are accessible via web browsers or special software applications.

The benefits of cloud computing are numerous. First, there is no need for costly hardware or maintenance. Cloud providers handle all the backend operations, ensuring that the service runs smoothly without requiring any intervention from users. Second, cloud services are highly scalable, meaning they can handle sudden increases in demand without additional investment. Third, cloud computing encourages collaboration and mobility, as users can access data and applications from anywhere with an internet connection. Finally, cloud services often come with robust security features, which are continuously updated, minimizing the risk of data breaches.

However, cloud computing also comes with challenges. Security remains a significant concern, as data is stored remotely and vulnerable to cyberattacks. Additionally, there is the issue of data sovereignty, where data stored outside a country's borders may be subject to the laws of that country.
Furthermore, some companies may be hesitant to switch to cloud services due to the lack of control over their data, a common concern known as "control issues."

Questions:

1. What is the main idea of the passage?
a) The history of cloud computing.
b) The benefits and challenges of cloud computing.
c) The security concerns of cloud computing.
d) The scalability of cloud computing.

2. Why did cloud computing become practical recently?
a) Because of the decreased costs of storage and bandwidth.
b) Because of the widespread availability of the Internet.
c) Because of the advancement in technology.
d) Because of the decreasing demand for hardware.

3. What are the benefits of cloud computing mentioned in the passage?
a) No need for costly hardware, scalability, collaboration and mobility, and robust security features.
b) High scalability, easy maintenance, and data sovereignty.
c) Low costs, easy access, and increased data security.
d) Remote access, data availability, and decreased bandwidth requirements.

4. Which of the following is a challenge of cloud computing?
a) The lack of mobility.
b) The high costs of hardware.
c) The security risks associated with remote data storage.
d) The limited availability of web browsers.

5. What is the common concern known as "control issues" mentioned in the passage?
a) Users have no control over their data.
b) Users have control over their data, but it is stored remotely.
c) Data stored outside a country's borders may be subject to the laws of that country.
d) Users can choose to control their data through special software applications.

Answers:
1. b) The benefits and challenges of cloud computing.
2. a) Because of the decreased costs of storage and bandwidth.
3. a) No need for costly hardware, scalability, collaboration and mobility, and robust security features.
4. c) The security risks associated with remote data storage.
5. a) Users have no control over their data.

VII. Reading Comprehension: Careful Reading (multiple choice, 20 points)

First Reading Comprehension Part A

Reading Passage
The following is a passage about the importance of exercise for mental health and productivity. This passage is followed by some questions to which the answers can be found in the passage.

In today's fast-paced world, stress has become an integral part of our lives. It's essential to find ways to manage and reduce stress to maintain both our mental and physical health. One effective way to combat stress is through regular exercise. Research has consistently shown that physical activity can have a profound impact on our mental well-being and productivity.

1. Physical activity has been found to:
A) improve mental health
B) enhance productivity
C) both improve mental health and enhance productivity
D) have no effect on mental health

2. The passage primarily discusses:
A) the negative impact of stress on mental health
B) the benefits of exercise in reducing stress
C) the effectiveness of various stress management techniques
D) the effects of different types of stress on the body

3. It is mentioned that physical activity can have a "profound impact" on our:
A) attention span
B) mood
C) ability to sleep
D) All of the above

4. The word "integral" in the first paragraph most closely means:
A) essential
B) foundation
C) simple
D) occasional

5. According to the passage, what is one effective way to combat stress?
A) Avoiding situations that cause stress
B) Seeking professional help
C) Regular physical activity
D) Meditating for a few minutes daily

Answers:
1. C
2. B
3. D
4. A
5. C

Second Question

Read the following passage, then answer the questions.

What is the main purpose of the author's writing the passage?
A. To introduce a new technology.
B. To discuss the impact of social media.
C. To analyze the causes of climate change.
D. To promote a healthy lifestyle.

The underlined word "it" in Paragraph 3 refers to _____.
A. the book
B. the museum
C. the exhibition
D. the artwork

Which of the following statements is TRUE according to the text?
A. The new policy has been widely criticized.
B. The project will be completed next year.
C. The company has faced financial difficulties.
D. The research has led to significant breakthroughs.

The tone of the author in the last paragraph can be best described as _____.
A. sarcastic
B. optimistic
C. pessimistic
D. indifferent

The phrase "in the long run" in the passage means _____.
A. eventually
B. quickly
C. recently
D. suddenly

What is the author's attitude towards online education?
A. Supportive.
B. Skeptical.
C. Neutral.
D. Disapproving.

The main idea of the second paragraph is that _____.
A. exercise is essential for physical health
B. mental health is as important as physical health
C. stress can be easily managed
D. sleep patterns affect productivity

The word "ubiquitous" in the sentence "Smartphones have become ubiquitous in our daily lives" means _____.
A. rare
B. expensive
C. everywhere
D. outdated

Which of the following is NOT mentioned as a benefit of reading in the passage?
A. Improving vocabulary.
B. Reducing stress levels.
C. Enhancing creativity.
D. Increasing social activities.

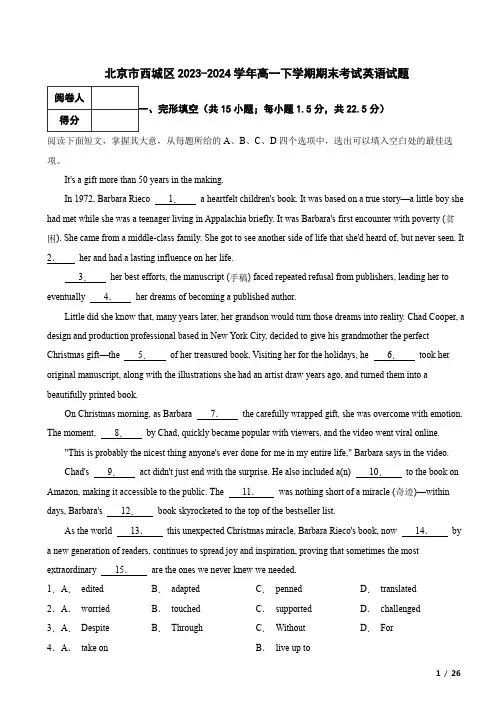

Xicheng District, Beijing: Senior One English Final Exam, Second Semester, 2023-2024 Academic Year

(15 questions; 1.5 points each, 22.5 points in total)
Read the passage below for its general meaning, then choose the best option from A, B, C and D to fill in each blank.

It's a gift more than 50 years in the making.

In 1972, Barbara Rieco __1__ a heartfelt children's book. It was based on a true story: a little boy she had met while she was a teenager living in Appalachia briefly. It was Barbara's first encounter with poverty. She came from a middle-class family. She got to see another side of life that she'd heard of, but never seen. It __2__ her and had a lasting influence on her life.

__3__ her best efforts, the manuscript faced repeated refusal from publishers, leading her to eventually __4__ her dreams of becoming a published author.

Little did she know that, many years later, her grandson would turn those dreams into reality. Chad Cooper, a design and production professional based in New York City, decided to give his grandmother the perfect Christmas gift: the __5__ of her treasured book. Visiting her for the holidays, he __6__ took her original manuscript, along with the illustrations she had an artist draw years ago, and turned them into a beautifully printed book.

On Christmas morning, as Barbara __7__ the carefully wrapped gift, she was overcome with emotion. The moment, __8__ by Chad, quickly became popular with viewers, and the video went viral online.

"This is probably the nicest thing anyone's ever done for me in my entire life," Barbara says in the video.

Chad's __9__ act didn't just end with the surprise. He also included a(n) __10__ to the book on Amazon, making it accessible to the public.
The __11__ was nothing short of a miracle: within days, Barbara's __12__ book skyrocketed to the top of the bestseller list.

As the world __13__ this unexpected Christmas miracle, Barbara Rieco's book, now __14__ by a new generation of readers, continues to spread joy and inspiration, proving that sometimes the most extraordinary __15__ are the ones we never knew we needed.

1. A. edited B. adapted C. penned D. translated
2. A. worried B. touched C. supported D. challenged
3. A. Despite B. Through C. Without D. For
4. A. take on B. live up to C. hold on to D. set aside
5. A. promotion B. revision C. publication D. recommendation
6. A. curiously B. secretly C. calmly D. shyly
7. A. bought B. held C. opened D. showed
8. A. photographed B. filmed C. written D. described
9. A. respectful B. faithful C. thankful D. thoughtful
10. A. link B. introduction C. approach D. guide
11. A. comment B. impression C. response D. prediction
12. A. once-forgotten B. badly-needed C. well-known D. fully-prepared
13. A. accepts B. presents C. ignores D. celebrates
14. A. sold B. donated C. rated D. loved
15. A. videos B. gifts C. belongings D. materials

Read the passage below and choose the best option from A, B, C and D for each question.

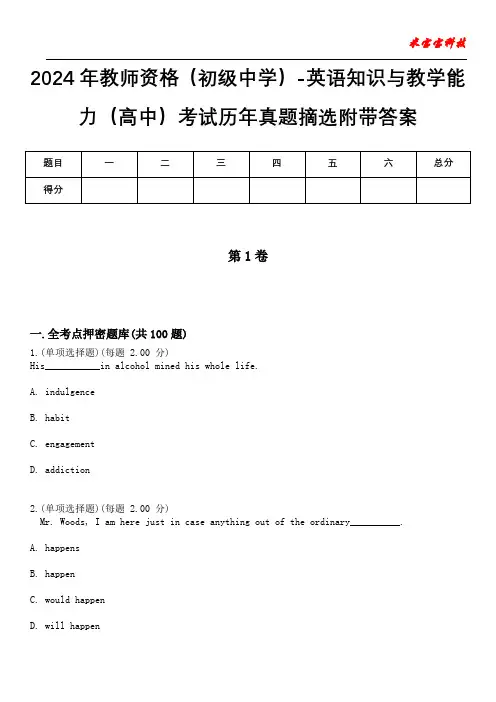

2024 Teacher Qualification (Junior Middle School), English Knowledge and Teaching Ability (Senior High School) Exam: Selected Past Questions with Answers, Volume 1

I. Full-coverage question bank (100 questions in total)

1. (Single choice) (2.00 points each) His ___________ in alcohol ruined his whole life.
A. indulgence
B. habit
C. engagement
D. addiction

2. (Single choice) (2.00 points each) Mr. Woods, I am here just in case anything out of the ordinary __________.
A. happens
B. happen
C. would happen
D. will happen

3. (Single choice) (2.00 points each) Which of the following activities is the most suitable for group work? ______.
A. guessing game
B. story telling
C. information-gap
D. drama performance

4. (Single choice) (2.00 points each) Which of the following activities can be used to get the main idea of a passage?
A. Reading the passage in detail.
B. Reading to sequence the events.
C. Reading to fill in the charts.
D. Reading the first and last sentences of the passage and the paragraphs.

5. (Single choice) (2.00 points each) To our surprise, the stranger ______ to be an old friend of my mother's.
A. turned out
B. turned up
C. set out
D. set up

6. (Single choice) (2.00 points each) ______ new buildings will be built in my hometown.
A. A great deals of
B. A lots of
C. A plenty of
D. A great number of

7. (Single choice) (2.00 points each) Read Passage 2 and answer the questions below.

Passage 2

We had been wanting to expand our children's horizons by taking them to a place that was unlike anything we'd been exposed to during our travels in Europe and the United States. In thinking about what was possible from Geneva, where we are based, we decided on a trip to Istanbul, a two-hour plane ride from Zurich. We envisioned the trip as a prelude to more exotic ones, perhaps to New Delhi or Bangkok later this year, but thought our 11- and 13-year-old needed a first step away from manicured boulevards and pristine monuments.

What we didn't foresee was the reaction of friends, who warned that we were putting our children "in danger", referring vaguely, and most incorrectly, to disease, terrorism or just the unknown. To help us get acquainted with the peculiarities of Istanbul and to give our children a chance to choose what they were particularly interested in seeing, we bought an excellent guidebook and read it thoroughly before leaving. Friendly warnings didn't change our planning, although we might have more prudently checked with the U.S. Department's list of trouble spots. We didn't see a lot of children among the foreign visitors during our six-day stay in Istanbul, but we found the tourist areas quite safe, very interesting and varied enough even to suit our son, whose oft-repeated request is that we not see "every single" church and museum in a given city.

Vaccinations weren't needed for the city, but we were concerned about adapting to the water for a short stay. So we used bottled water for drinking and brushing our teeth, a precaution that may seem excessive, but we all stayed healthy.

Taking the advice of a friend, we booked a hotel a 20-minute walk from most of Istanbul's major tourist sites. This not only got us some morning exercise, strolling over the Karakoy Bridge, but took us past a colorful assortment of fishermen, vendors and shoe shiners. From a teenager's and preteen's view, Istanbul street life is fascinating since almost everything can be bought outdoors. They were at a good age to spend time wandering the labyrinth of the Spice Bazaar, where shops display mounds of pungent herbs in sacks. Doing this with younger children would be harder simply because the streets are so packed with people; it would be easy to get lost.

For →our two←, whose buying experience consisted of department stores and shopping mall boutiques, it was amazing to discover that you could bargain over price and perhaps end up with two of something for the price of one. They also learned to figure out the relative value of the Turkish lira, not a small matter with its many zeros.

Being exposed to Islam was an important part of our trip. Visiting the mosques, especially the enormous Blue Mosque, was our first glimpse into how this major religion is practiced. Our children's curiosity already had been piqued by the five daily calls to prayer over loudspeakers in every corner of the city, and the scarves covering the heads of many women. Navigating meals can be troublesome with children, but a kebab, bought on the street or in restaurants, was unfailingly popular. Since we had decided this trip was not for gourmets, kebabs spared us the agony of trying to find a restaurant each day that would suit the adults' desire to try something new amid children's insistence that the food be served immediately. Gradually, we branched out to try some other Turkish specialties. Although our son had studied Islam briefly, it is impossible to be prepared for every awkward question that might come up, such as during our visits to the Topkapi Sarayi, the Ottoman Sultans' palace. No guides were available, so it was do-it-yourself, using our guidebook, which cheated us of a lot of interesting history and anecdotes that a professional guide could provide. Next time, we resolved to make such arrangements in advance.

On this trip, we wandered through the magnificent complex, with its imperial treasures, its courtyards and its harem. The last required a bit of explanation that we would have happily left to a learned third party.

Whom does "our two" in PARAGRAPH 5 refer to? ______.
A. the couple
B. the kids
C. the gourmets
D. local-style markets

8. (Single choice) (2.00 points each) Which of the following statements is NOT a way of presenting new vocabulary? ____.
A. defining
B. using real objects
C. writing a passage by using new words
D. giving explanations

9. (Single choice) (2.00 points each)

Exceptional children are different in some significant ways from others of the same age. For these children to develop to their full adult potential, their education must be adapted to those differences.

Although we focus on the needs of exceptional children, we find ourselves describing their environment as well. While the leading actor on the stage captures our attention, we are aware of the importance of the supporting players and the scenery of the play itself. Both the family and the society in which exceptional children live are often the key to their growth and development. And it is in the public schools that we find the full expression of society's understanding, the knowledge, hopes, and fears that are passed on to the next generation.

Education in any society is a mirror of that society. In that mirror we can see the strengths, the weaknesses, the hopes, the prejudices, and the central values of the culture itself. The great interest in exceptional children shown in public education over the past three decades indicates the strong feeling in our society that all citizens, whatever their special conditions, deserve the opportunity to fully develop their capabilities.

"All men are created equal." We've heard it many times, but it still has important meaning for education in a democratic society. Although the phrase was used by this country's founders to denote equality before the law, it has also been interpreted to mean equality of opportunity. That concept implies educational opportunity for all children—the right of each child to receive help in learning to the limits of his or her capacity, whether that capacity be small or great. Recent court decisions have confirmed the right of all children—disabled or not—to an appropriate education, and have ordered that public schools take the necessary steps to provide that education. In response, schools are modifying their programs, adapting instruction to children who are exceptional, to those who cannot profit substantially from regular programs.

From this passage we learn that the educational concern for exceptional children ______.
A. is now enjoying legal support
B. disagrees with the tradition of the country
C. was clearly stated by the country's founders
D. will exert great influence over court decisions

10. (Single choice) (2.00 points each) What stage can the following grammar activity be used at?
The teacher asked students to arrange the words of sentences into different columns marked subject, predicate, object, object complement, adverbial and so on.
A. Presentation.
B. Practice.
C. Production.
D. Preparation.

11. (Single choice) (2.00 points each) According to British linguist F. Palmer, there are no real synonyms. Though "cast" and "throw" are considered synonyms, they are different in __________.
A. style
B. collocation
C. emotive meaning
D. regions where they are used

12. (Single choice) (2.00 points each) What advantage do the new generation Latino writers have over Latin American writers according to the passage?
A. The former are able to write in two different languages.
B. The former can translate their works into different languages.
C. The former are able to express ideas from a bi-cultural perspective.
D. The former can travel freely across the border between two countries.

13. (Single choice) (2.00 points each) It is not so much the language ______ the cultural background that makes the book difficult to understand.
A. as
B. but
C. like
D. nor

14. (Single choice) (2.00 points each) Which inference in the brackets of the following sentences is a presupposition?
A. Ede caught a trout. (Ede caught a fish.)
B. Don't sit on Carol's bed. (Carol has a bed.)
C. This blimp is over the house. (The house is under the blimp.)
D. Coffee would keep me awake all night. (I don't want coffee.)

15. (Single choice) (2.00 points each) There are many different ways of presenting grammar in the classroom. Among them, three are most frequently used and discussed. Which one does not belong to them? ______.
A. deductive method
B. inductive method
C. guided discovery method
D. productive method

16. (Single choice) (2.00 points each) When a teacher teaches young learners English pronunciation, he should ______.
A. listen as much as possible
B. input regardless of students' ability
C. tolerate small errors in continuous speech
D. read more English materials

17. (Single choice) (2.00 points each) Which of the following activities is the best for training detailed reading?
A. drawing a diagram to show the text structure
B. giving the text an appropriate title
C. transforming information from the text to a diagram
D. finding out all the unfamiliar words

18. (Single choice) (2.00 points each) Which of the following teacher's instruction could serve the purpose of eliciting ideas?
A. Shall we move on?
B. Read after me, everyone
C. What can you see in this picture?
D. What does the word "quickly" mean?

19. (Single choice) (2.00 points each) Which of the following is suggested in the last paragraph?
A. The quality of writing is of primary importance.
B. Common humanity is central to news reporting.
C. Moral awareness matters in editing a newspaper.
D. Journalists need stricter industrial regulations.

20. (Single choice) (2.00 points each) What is the best title for this passage?
A. Creativity and Insights
B. Insights and Problem Solving
C. Where Do Insight Moments Come?
D. Where Do Creativity Moments Come?

21. (Single choice) (2.00 points each)
—Where did you find the wallet?
—It was at the stadium ______ I played football.
A. that
B. where
C. which
D. there

22. (Single choice) (2.00 points each)

Water problems in the future will become more intense and more complex. Our increasing population will tremendously increase urban wastes, primarily sewage. On the other hand, increasing demands for water will decrease substantially the amount of water available for diluting wastes. Rapidly expanding industries which involve more and more complex chemical processes will produce large volumes of liquid wastes, and many of these will contain chemicals which are poisonous. To feed our rapidly expanding population, agriculture will have to be intensified. This will involve ever-increasing quantities of agricultural chemicals. From this, it is apparent that drastic steps must be taken immediately to develop corrective measures for the pollution problem.

There are two ways by which this pollution problem can be lessened. The first relates to the treatment of wastes to decrease their pollution hazard. This involves the processing of solid wastes prior to disposal and the treatment of liquid wastes, or effluents, to permit the reuse of the water or best reduce pollution upon final disposal.

A second approach is to develop an economic use for all or a part of the wastes. Farm manure is spread in fields as a nutrient or organic supplement. Effluents from sewage disposal plants are used in some areas both for irrigation and for the nutrients contained. Effluents from other processing plants may also be used as a supplemental source of water. Many industries, such as meat and poultry processing plants, are currently converting former waste production into marketable byproducts. Other industries have potential economic uses for their waste products.

The phrase "prior to" (Para. 2) probably means ______.
A. after
B. during
C. before
D. beyond

23. (Single choice) (2.00 points each) Read Passage 2 and answer the questions below.

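[With answers and explanations] PEP Senior Three English: 30 practice questions on maintaining motivation to learn English

1. The passage mainly discusses the development of artificial intelligence in recent years. Which of the following statements best summarizes the main idea?
A. AI has made great progress in various fields
B. AI only benefits the technology industry
C. AI is too complex to be widely used
D. AI has no significant impact on our daily life

Answer: A.

Explanation: The passage focuses on the great progress artificial intelligence has made in various fields in recent years, and option A accurately summarizes this main idea. Option B is wrong to say that AI benefits only the technology industry; the passage mentions multiple fields. Option C, that AI is too complex to be widely used, contradicts the passage, which emphasizes its achievements. Option D, that AI has no significant impact on daily life, is also wrong; the passage shows its impact in many areas.

2. In a historical article about the Industrial Revolution, it mentions that many workers had to adapt to new working conditions. What was the main reason for this?
A. The invention of new machines
B. The change of weather
C. The demand of workers themselves
D. The government's new policy

Answer: A.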

Senior Three English: 30 single-choice questions on attention-grabbing developments at the frontiers of science

1. Scientists are researching on artificial intelligence. Which of the following is NOT related to artificial intelligence?
A. Machine learning
B. Big data
C. Genetic engineering
D. Natural language processing

Answer: C.

This question tests understanding of new technology terms related to artificial intelligence. Option A, "Machine learning," option B, "Big data," and option D, "Natural language processing," are all closely related to artificial intelligence. Option C, "Genetic engineering," belongs to the field of biology and is unrelated to artificial intelligence.

2. The development of 5G technology brings many changes. What is one of the characteristics of 5G?
A. Low speed
B. High latency
C. High bandwidth
D. Limited connectivity

Answer: C.

One characteristic of 5G technology is high bandwidth. Option A, "Low speed," is wrong; option B, "High latency," is wrong; option D, "Limited connectivity," is wrong. Only option C, "High bandwidth," matches the characteristics of 5G.

3. Which new technology is mainly used for virtual reality experiences?
A. Sensor technology
B. 3D printing
C. Blockchain
D. Cloud computing

Answer: A.

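Senior Three English: 50 single-choice questions on asking about technological innovation

1. Many tech companies are investing heavily in ______ to improve data security.
A. artificial intelligence
B. blockchain
C. virtual reality
D. augmented reality

Answer: B.

Explanation: This question tests understanding of emerging technology vocabulary. Option A, artificial intelligence, is mainly used to simulate human intelligence (for example, speech recognition and image recognition) and has little to do with data security.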