netty学习

- 格式:doc

- 大小:953.00 KB

- 文档页数:28

Netty⾯试题总结(含答案)Netty⾯试题及答案,每道都是认真筛选出的⾼频⾯试题,助⼒⼤家能找到满意的⼯作!下载链接:Netty是⼀个异步事件驱动的⽹络应⽤程序框架,⽤于快速开发可维护的⾼性能协议服务器和客户端。

Netty是基于nio的,它封装了jdk的nio,让我们使⽤起来更加⽅法灵活。

⼀个⾼性能、异步事件驱动的 NIO 框架,它提供了对 TCP、UDP 和⽂件传输的⽀持使⽤更⾼效的 socket 底层,对 epoll 空轮询引起的 cpu 占⽤飙升在内部进⾏了处理,避免了直接使⽤ NIO 的陷阱,简化了 NIO 的处理⽅式。

采⽤多种 decoder/encoder ⽀持,对 TCP 粘包/分包进⾏⾃动化处理可使⽤接受/处理线程池,提⾼连接效率,对重连、⼼跳检测的简单⽀持可配置 IO 线程数、TCP 参数, TCP 接收和发送缓冲区使⽤直接内存代替堆内存,通过内存池的⽅式循环利⽤ ByteBuf通过引⽤计数器及时申请释放不再引⽤的对象,降低了 GC 频率使⽤单线程串⾏化的⽅式,⾼效的 Reactor 线程模型⼤量使⽤了 volitale、使⽤了 CAS 和原⼦类、线程安全类的使⽤、读写锁的使⽤1.I/O线程模型:同步⾮阻塞,⽤最少的资源做更多的事2.内存零拷贝:尽量减少不必要的内存拷贝,实现了更⾼效率的传输。

3.内存池设计:申请的内存可以重⽤,主要指直接内存。

内部实现是⽤⼀颗⼆叉查找树管理内存分配情况。

4.串形化处理读写:避免使⽤锁带来的性能开销。

5.⾼性能序列化协议:⽀持protobuf等⾼性能序列化协议。

BIO:⼀个连接⼀个线程,客户端有连接请求时服务器端就需要启动⼀个线程进⾏处理。

线程开销⼤。

伪异步IO:将请求连接放⼊线程池,⼀对多,但线程还是很宝贵的资源。

NIO:⼀个请求⼀个线程,但客户端发送的连接请求都会注册到多路复⽤器上,多路复⽤器轮询到连接有I/O请求时才启动⼀个线程进⾏处理。

netty serverbootstrap 参数-概述说明以及解释1.引言1.1 概述Netty是一个开源的、高性能、异步事件驱动的网络应用框架,它提供了简单而强大的API,使得网络编程变得更加容易。

Netty提供了一种简单的方式来处理复杂的网络通信,包括TCP、UDP和HTTP等协议。

在Netty中,ServerBootstrap是用于配置服务器端的主要类之一,它提供了一系列参数来配置服务器端的各种属性,以便于用户根据自身需求进行定制。

本文将对Netty框架进行简要介绍,并重点讨论ServerBootstrap的参数配置,以及如何根据实际需求进行参数的调整和优化。

通过对ServerBootstrap参数的详细解释和示例,读者可以更好地了解Netty框架的使用和定制,从而在实际应用中更好地发挥其优势和性能。

1.2 文章结构本文将分为三个部分进行讨论:引言、正文和结论。

在引言部分,我们将对文章的背景和重要性进行概述,介绍本文的结构以及研究的目的。

在正文部分,我们将首先对Netty框架进行简要介绍,了解其在网络编程中的重要性和应用场景。

接着我们将深入探讨ServerBootstrap参数的作用和功能,详细解析各个参数的含义和配置方式,帮助读者更好地理解和应用Netty框架。

在结论部分,我们将对整篇文章进行总结,提出一些应用建议并展望未来Netty框架的发展方向,为读者提供更深入的思考和研究方向。

1.3 目的:在本文中,我们的目的是通过详细讲解Netty框架中ServerBootstrap参数的作用和配置方法,帮助读者更好地理解和掌握Netty框架,在实际项目开发中更加灵活地配置ServerBootstrap,提高网络编程的效率和性能。

通过对参数的深入理解和应用,读者可以优化自己的网络通信系统,满足不同的需求和场景,为项目的发展和稳定性提供技术支持。

同时,我们也希望通过本文的介绍,激发读者对网络编程的兴趣,提升其技术水平和实践能力,为未来的网络开发工作打下坚实基础。

Netty⼊门使⽤教程原创:转载需注明原创地址本⽂介绍Netty的使⽤, 结合我本⼈的⼀些理解和操作来快速的让初学者⼊门Netty, 理论知识会有, 但是不会太深⼊, 够⽤即可, 仅供⼊门! 需要想详细的知识可以移步Netty官⽹查看官⽅⽂档!理论知识 : Netty提供异步的、事件驱动的⽹络应⽤程序框架和⼯具,⽤以快速开发⾼性能、⾼可靠性的⽹络服务器和客户端程序当然, 我们这⾥主要是⽤Netty来发送消息, 接收消息, 测试⼀下demo, 更厉害的功能后⾯再慢慢发掘, 我们先看看这玩意怎么玩, 后⾯再深⼊需要⼯具和Java类: netty-4.1.43 netty服务器类 SayHelloServer.java netty服务端处理器类 SayHelloServerHandler.java netty客户端类 SayHelloClient.java netty客户端处理器类 SayHelloClientHandler.java 服务器main⽅法测试类 MainNettyServer.java 客户端main⽅法测试类 MainNettyClient.java⾸先先来⼀张演⽰图, 最下⾯也会放:我们看完以下部分就能实现这个东西了!话不多说, 先贴代码:package netty.server;import ty.bootstrap.ServerBootstrap;import ty.channel.ChannelFuture;import ty.channel.ChannelInitializer;import ty.channel.ChannelOption;import ty.channel.EventLoopGroup;import ty.channel.nio.NioEventLoopGroup;import ty.channel.socket.SocketChannel;import ty.channel.socket.nio.NioServerSocketChannel;import netty.handler.SayHelloServerHandler;/*** sayhello 服务器*/public class SayHelloServer {/*** 端⼝*/private int port ;public SayHelloServer(int port){this.port = port;}public void run() throws Exception{/*** Netty 负责装领导的事件处理线程池*/EventLoopGroup leader = new NioEventLoopGroup();/*** Netty 负责装码农的事件处理线程池*/EventLoopGroup coder = new NioEventLoopGroup();try {/*** 服务端启动引导器*/ServerBootstrap server = new ServerBootstrap();server.group(leader, coder)//把事件处理线程池添加进启动引导器.channel(NioServerSocketChannel.class)//设置通道的建⽴⽅式,这⾥采⽤Nio的通道⽅式来建⽴请求连接.childHandler(new ChannelInitializer<SocketChannel>() {//构造⼀个由通道处理器构成的通道管道流⽔线@Overrideprotected void initChannel(SocketChannel socketChannel) throws Exception {/*** 此处添加服务端的通道处理器*/socketChannel.pipeline().addLast(new SayHelloServerHandler());}})/*** ⽤来配置⼀些channel的参数,配置的参数会被ChannelConfig使⽤* BACKLOG⽤于构造服务端套接字ServerSocket对象,* 标识当服务器请求处理线程全满时,* ⽤于临时存放已完成三次握⼿的请求的队列的最⼤长度。

netty 通道关闭方法-回复Netty是一个用于快速开发可扩展的事件驱动网络应用程序的Java框架。

在Netty中,通道(Channel)是两个节点之间的打开连接,用于网络传输。

但是,在使用Netty时,我们也需要了解如何正确关闭通道,以确保资源的正确释放和程序的优雅退出。

本文将以"Netty通道关闭方法"为主题,逐步介绍如何关闭通道以及相关的注意事项。

第一步:正常关闭通道在使用Netty时,我们可以通过`ChannelHandlerContext`或者`Channel`对象来关闭通道。

具体的方法如下:1. 通过`ChannelHandlerContext`关闭通道:javaChannelHandlerContext ctx = ...;ctx.close();2. 通过`Channel`对象关闭通道:javaChannel channel = ...;channel.close();以上两种方法都可以正常关闭通道,释放相关资源。

需要注意的是,一旦调用了关闭通道的方法,通道将不再可用,即无法再发送或接收消息。

第二步:等待通道关闭在调用关闭通道的方法后,通道不会立即关闭,而是需要一定时间来完成关闭操作。

为了等待通道真正关闭,我们可以使用`ChannelFuture`类的`await`或`sync`方法。

这些方法将会阻塞当前线程,直到通道关闭。

具体的使用方法如下:javaChannel channel = ...;ChannelFuture future = channel.close();future.await();通道已关闭或者:javaChannelFuture future = ctx.close();future.sync();通道已关闭需要注意的是,如果在等待通道关闭时发生异常或超时,可以通过`isSuccess()`方法来判断关闭操作是否成功。

另外,`sync`方法会抛出异常,`await`方法会返回一个`boolean`值来表示操作是否成功。

Netty是一个基于Java NIO的网络应用框架,它能够快速简单地开发可维护的高性能的协议服务器和客户端。

在Netty中,AttributeKey 是一个用于存储和获取Channel、ChannelHandlerContext和Attribute的关联的键,它是Netty中非常重要的一个概念。

下面是对Netty AttributeKey的用法进行详细的介绍和讲解:一、AttributeKey的定义和作用在Netty中,AttributeKey是一个用于标识Attribute的键,它是一个带有类型参数的泛型类,在Netty的AttributeKey中常用的类型参数包括Channel、ChannelHandlerContext和Attribute。

通过AttributeKey,我们可以将属性绑定到Channel、ChannelHandlerContext上。

在Netty中,Channel表示一个socket连接,ChannelHandlerContext表示ChannelHandler和ChannelPipeline之间的关联,Attribute表示一个可以附加到Channel或ChannelHandlerContext上的对象,可以在Channel、ChannelHandlerContext的生命周期内存储、获取属性。

二、AttributeKey的创建和获取在Netty中,我们可以通过AttributeKey的newInstance方法创建一个AttributeKey对象,例如:```javaprivate static final AttributeKey<String> MY_ATTRIBUTE_KEY = AttributeKey.newInstance("myAttributeKey");```在上面的代码中,我们通过AttributeKey的newInstance方法创建了一个AttributeKey对象,它的类型参数为String类型,并且指定了属性的名称为"myAttributeKey"。

Netty是一个开源的基于NIO的客户端/服务器框架,用于快速开发可维护的高性能协议服务器和客户端。

而MQTT是一个轻量级的发布/订阅消息传输协议,特别适用于受限制的环境,比如网络带宽稀缺或者网络延迟较高的情况。

在实际的应用开发中,经常需要使用Netty对MQTT协议进行解析,以便于实现对MQTT协议的处理和消息的传输。

本文将针对Netty中的MQTTDecoder进行解析,介绍其原理和使用方法。

一、Netty的MQTTDecoder概述MQTTDecoder是Netty中实现MQTT协议解析的解码器,其主要作用是将原始的MQTT消息数据转换为Netty的消息对象,以便于Netty的后续处理。

在Netty中,有多种解码器用于不同类型的协议,如LineBasedFrameDecoder用于处理基于换行符的文本协议,DelimiterBasedFrameDecoder用于处理自定义分隔符的协议等。

而MQTTDecoder则专门用于处理MQTT协议。

通过MQTTDecoder,我们可以将MQTT协议的数据包解析成Netty的ByteBuf对象,方便后续的处理。

二、MQTTDecoder的实现原理MQTTDecoder的实现原理主要是通过Netty提供的ReplayingDecoder来实现的。

ReplayingDecoder是Netty提供的一种特殧的解码器,它可以在解码过程中动态地切换状态,并且可以在接受到不完整或者错误的数据时进行回滚,并重新解码。

这样就大大简化了解码器的实现过程。

在MQTTDecoder中,主要实现了对MQTT协议中各种类型消息的解析逻辑,包括固定头部的解析、可变头部的解析和有效载荷的解析。

通过ReplayingDecoder的机制,MQTTDecoder可以在接受到不完整或者错误的数据时进行回滚,并重新解码,以确保消息被正确地解析。

三、MQTTDecoder的使用方法使用MQTTDecoder非常简单,只需要将其添加到Netty的ChannelPipeline中即可。

一、介绍Java是一种广泛使用的编程语言,而Netty框架是一个高性能的网络通信框架,Mqtt是一种轻量级的消息传输协议。

本文将介绍如何在Java中基于Netty框架实现Mqtt协议的案例。

二、Mqtt协议简介Mqtt协议是一种基于发布/订阅模式的消息传输协议,它非常适合于物联网设备之间的消息传递。

Mqtt协议具有轻量级、低带宽消耗、易于实现和开放性等特点。

三、Netty框架简介Netty是一个基于NIO的高性能网络通信框架,它可以帮助开发者快速构建各种网络应用程序。

Netty框架提供了简单、抽象、可重用的代码,利用Netty框架可以有效地实现Mqtt协议的通信。

四、Mqtt协议的Java实现在Java中实现Mqtt协议可以使用Eclipse Paho项目提供的Mqtt客户端库。

该库提供了完整的Mqtt协议实现,包括连接、订阅、发布消息等功能。

通过使用Paho Mqtt客户端库,开发者可以轻松地在Java应用程序中实现Mqtt协议的通信。

五、Netty框架集成Mqtt协议Netty框架提供了丰富的API和组件,通过它可以轻松构建各种高性能的网络通信应用。

在Netty框架中集成Mqtt协议可以利用Netty框架的优势实现高效、稳定的Mqtt通信。

六、基于Netty框架的Mqtt案例以下是一个基于Netty框架实现Mqtt协议的简单案例:1. 定义消息处理器我们需要定义一个Mqtt消息处理器,用于处理Mqtt消息的接收和发送。

可以继承Netty提供的SimpleChannelInboundHandler类,实现其中的channelRead0()方法对接收到的Mqtt消息进行处理。

2. 配置Netty服务端接下来,需要配置一个Netty服务端,用于接收Mqtt客户端的连接并处理Mqtt消息。

通过设置不同的ChannelHandler,可以实现Mqtt协议的连接、订阅和消息发布功能。

3. 实现Mqtt客户端实现一个Mqtt客户端,连接到Netty服务端并进行消息的订阅和发布。

netty的bytebuf的内存动态扩容原理Netty的ByteBuf是一种高性能的数据容器,它用于在网络通信中存储和传输数据。

ByteBuf的内存动态扩容原理是Netty框架中的重要部分,它使得网络应用程序能够高效地处理大数据量和高并发的网络通信。

Netty的ByteBuf的内存动态扩容原理基于分段的缓冲区技术。

当我们往ByteBuf写入数据时,如果当前分配的内存空间已经被写满,Netty会通过内部的算法来判断是否需要扩容。

如果需要扩容,Netty将会创建一个新的更大的分段缓冲区,然后将老的数据复制到新的缓冲区中。

ByteBuf的内存动态扩容过程涉及到以下几个关键步骤:1. 扩容判断:Netty会实时监测当前ByteBuf的可写空间是否已满,通常通过比较写指针和容量来判断。

如果写指针已经达到了容量上限,说明需要进行内存扩容。

2. 分配新内存:一旦需要扩容,Netty会根据一定的策略(如指数增长)来计算新的内存大小,并分配一块更大的内存空间。

3. 数据迁移:接下来,Netty会将老的数据从旧的内存块复制到新的内存块中。

这个过程会涉及到内存的拷贝,所以是比较耗时的操作。

为了提高性能,Netty会尽可能地使用一些底层的零拷贝技术来加速数据迁移的过程。

4. 释放旧内存:数据迁移完成后,Netty会释放掉旧的内存块,以便回收内存资源。

通过这样的动态扩容机制,Netty的ByteBuf能够高效地处理动态的内存需求。

它能够根据实际情况灵活地分配和释放内存,避免了内存浪费和频繁的内存分配操作,提高了应用程序的性能和稳定性。

总之,Netty的ByteBuf的内存动态扩容原理基于分段的缓冲区技术,通过动态分配和释放内存来适应不同的数据存储和传输需求。

这个机制使得Netty成为了一个高效和可靠的网络应用框架。

(1)介绍Netty框架Netty是一个基于NIO的网络框架,提供了易于使用的API和高性能的网络编程能力。

它被广泛应用于各种网络通信场景,包括游戏服务器、聊天系统、金融交易系统等。

Netty的优点在于其高度的可定制性和灵活性,能够满足各种复杂的网络通信需求。

(2)介绍MQTT协议MQTT是一种基于发布/订阅模式的轻量级通信协议,最初是为传感器网络设计的,具有低带宽、低功耗等特点,能够在不可靠的网络环境下实现可靠的消息传递。

MQTT广泛应用于物联网、移动设备通信、实时数据传输等领域,受到了广泛的关注和应用。

(3)Java中使用Netty框架实现MQTT通过在Java中使用Netty框架实现MQTT协议,可以实现高性能、可靠的消息传递系统。

在实际项目中,可以基于Netty框架快速开发定制化的MQTT服务器或客户端,满足各种特定的通信需求。

下面我们将介绍一个基于Netty框架实现MQTT的案例。

(4)案例介绍我们以一个智能家居控制系统为例,介绍如何使用Java和Netty框架实现MQTT协议。

假设我们有多个智能设备(如灯、风扇、空调等)和一个中心控制系统,智能设备与中心控制系统通过MQTT协议进行通信。

我们将分为以下几个步骤来实现该案例:(5)搭建MQTT服务器我们需要搭建一个MQTT服务器,用于管理智能设备和接收他们发送的消息。

我们可以使用Netty框架开发一个基于MQTT协议的服务器,监听指定的端口,接收来自智能设备的连接和消息。

(6)实现MQTT客户端我们需要为每个智能设备实现一个MQTT客户端,用于与MQTT服务器进行通信。

我们可以使用Netty框架开发一个基于MQTT协议的客户端,连接到MQTT服务器并发送相应的消息。

(7)消息的发布和订阅在该案例中,我们将实现消息的发布和订阅功能。

智能设备可以向MQTT服务器发布状态信息,比如灯的开关状态、温度传感器的温度等;中心控制系统可以订阅这些信息,及时获取智能设备的状态并做出相应的控制。

一、概述Netty是一个基于NIO的网络应用框架,它可以快速地开发可维护的高性能协议服务器和客户端。

Channel是Netty的核心组件,它代表了一个网络连接,通过Channel可以进行读写操作。

在实际的应用中,为了提高性能和减少资源消耗,通常会使用Channelpool来管理Channel的复用。

二、Channelpool的作用Channelpool主要用于管理和复用Channel,可以通过Channelpool 来提高应用的性能和降低资源消耗。

当需要与多个远程服务进行通信时,如果为每个通信创建新的Channel,对资源的消耗会很大。

而通过Channelpool可以在需要通信时从池中获取可用的Channel,使用完毕后再归还给池,这样可以减少频繁创建和关闭Channel带来的性能开销。

三、Channelpool的用法1.初始化Channelpool当需要使用Channelpool时,需要首先初始化Channelpool。

可以通过ChannelPoolMap接口中的newPool方法来创建Channelpool,例如:```javaChannelPoolMap<InetSocketAddress, SimpleChannelPool> poolMap = new AbstractChannelPoolMap<InetSocketAddress,SimpleChannelPool>() {Overrideprotected SimpleChannelPool newPool(InetSocketAddress key) {Bootstrap bootstrap = new Bootstrap();bootstrap.group(new NioEventLoopGroup());bootstrap.channel(NioSocketChannel.class);return newFixedChannelPool(bootstrap.remoteAddress(key), new MyChannelPoolHandler(), 10);}};```在上述代码中,首先创建了一个ChannelPoolMap对象poolMap,然后通过newPool方法初始化了Channelpool。

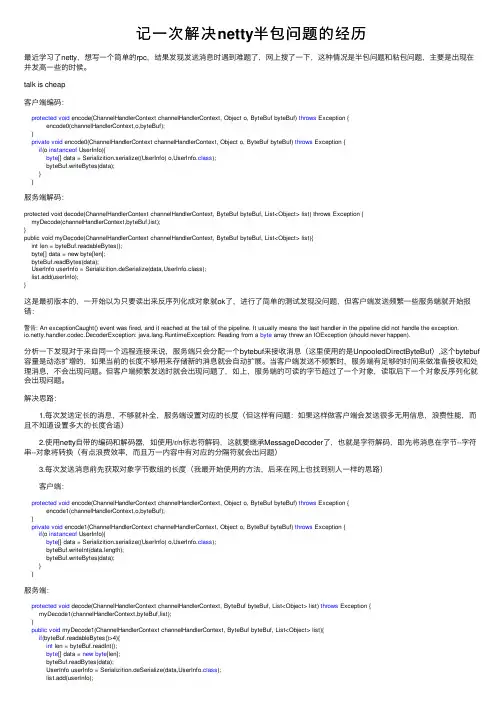

记⼀次解决netty半包问题的经历最近学习了netty,想写⼀个简单的rpc,结果发现发送消息时遇到难题了,⽹上搜了⼀下,这种情况是半包问题和粘包问题,主要是出现在并发⾼⼀些的时候。

talk is cheap客户端编码:protected void encode(ChannelHandlerContext channelHandlerContext, Object o, ByteBuf byteBuf) throws Exception {encode0(channelHandlerContext,o,byteBuf);}private void encode0(ChannelHandlerContext channelHandlerContext, Object o, ByteBuf byteBuf) throws Exception {if(o instanceof UserInfo){byte[] data = Serializition.serialize((UserInfo) o,UserInfo.class);byteBuf.writeBytes(data);}}服务端解码:protected void decode(ChannelHandlerContext channelHandlerContext, ByteBuf byteBuf, List<Object> list) throws Exception {myDecode(channelHandlerContext,byteBuf,list);}public void myDecode(ChannelHandlerContext channelHandlerContext, ByteBuf byteBuf, List<Object> list){int len = byteBuf.readableBytes();byte[] data = new byte[len];byteBuf.readBytes(data);UserInfo userInfo = Serializition.deSerialize(data,UserInfo.class);list.add(userInfo);}这是最初版本的,⼀开始以为只要读出来反序列化成对象就ok了,进⾏了简单的测试发现没问题,但客户端发送频繁⼀些服务端就开始报错:警告: An exceptionCaught() event was fired, and it reached at the tail of the pipeline. It usually means the last handler in the pipeline did not handle the exception.ty.handler.codec.DecoderException: ng.RuntimeException: Reading from a byte array threw an IOException (should never happen).分析⼀下发现对于来⾃同⼀个远程连接来说,服务端只会分配⼀个bytebuf来接收消息(这⾥使⽤的是UnpooledDirectByteBuf),这个bytebuf 容量是动态扩增的,如果当前的长度不够⽤来存储新的消息就会⾃动扩展。

The Netty Project 3.1 User Guide The Proven Approachto Rapid Network Application Development3.1.5.GA, r1772Preface (iii)1. The Problem (iii)2. The Solution (iii)1. Getting Started (1)1.1. Before Getting Started (1)1.2. Writing a Discard Server (1)1.3. Looking into the Received Data (3)1.4. Writing an Echo Server (4)1.5. Writing a Time Server (5)1.6. Writing a Time Client (7)1.7. Dealing with a Stream-based Transport (8)1.7.1. One Small Caveat of Socket Buffer (8)1.7.2. The First Solution (9)1.7.3. The Second Solution (11)1.8. Speaking in POJO instead of ChannelBuffer (12)1.9. Shutting Down Your Application (15)1.10. Summary (18)2. Architectural Overview (19)2.1. Rich Buffer Data Structure (19)2.2. Universal Asynchronous I/O API (19)2.3. Event Model based on the Interceptor Chain Pattern (20)2.4. Advanced Components for More Rapid Development (21)2.4.1. Codec framework (21)2.4.2. SSL / TLS Support (21)2.4.3. HTTP Implementation (22)2.4.4. Google Protocol Buffer Integration (22)2.5. Summary (22)PrefaceThis guide provides an introduction to Netty and what it is about.1. The ProblemNowadays we use general purpose applications or libraries to communicate with each other. For example, we often use an HTTP client library to retrieve information from a web server and to invoke a remote procedure call via web services.However, a general purpose protocol or its implementation sometimes does not scale very well. It is like we don't use a general purpose HTTP server to exchange huge files, e-mail messages, and near-realtime messages such as financial information and multiplayer game data. What's required is a highly optimized protocol implementation which is dedicated to a special purpose. For example, you might want to implement an HTTP server which is optimized for AJAX-based chat application, media streaming, or large file transfer. You could even want to design and implement a whole new protocol which is precisely tailored to your need.Another inevitable case is when you have to deal with a legacy proprietary protocol to ensure the interoperability with an old system. What matters in this case is how quickly we can implement that protocol while not sacrificing the stability and performance of the resulting application.2. The SolutionThe Netty project is an effort to provide an asynchronous event-driven network application framework and tooling for the rapid development of maintainable high-performance high-scalability protocol servers and clients.In other words, Netty is a NIO client server framework which enables quick and easy development of network applications such as protocol servers and clients. It greatly simplifies and streamlines network programming such as TCP and UDP socket server development.'Quick and easy' does not mean that a resulting application will suffer from a maintainability or a performance issue. Netty has been designed carefully with the experiences earned from the implementation of a lot of protocols such as FTP, SMTP, HTTP, and various binary and text-based legacy protocols. As a result, Netty has succeeded to find a way to achieve ease of development, performance, stability, and flexibility without a compromise.Some users might already have found other network application framework that claims to have the same advantage, and you might want to ask what makes Netty so different from them. The answer is the philosophy where it is built on. Netty is designed to give you the most comfortable experience both in terms of the API and the implementation from the day one. It is not something tangible but you will realize that this philosophy will make your life much easier as you read this guide and play with Netty.Chapter 1.Getting StartedThis chapter tours around the core constructs of Netty with simple examples to let you get started quickly. You will be able to write a client and a server on top of Netty right away when you are at the end of this chapter.If you prefer top-down approach in learning something, you might want to start from Chapter 2, Architectural Overview and get back here.1.1. Before Getting StartedThe minimum requirements to run the examples which are introduced in this chapter are only two; the latest version of Netty and JDK 1.5 or above. The latest version of Netty is available in the project download page. To download the right version of JDK, please refer to your preferred JDK vendor's web site.Is that all? To tell the truth, you should find these two are just enough to implement almost any type of protocols. Otherwise, please feel free to contact the Netty project community and let us know what's missing.At last but not least, please refer to the API reference whenever you want to know more about the classes introduced here. All class names in this document are linked to the online API reference for your convenience. Also, please don't hesitate to contact the Netty project community and let us know if there's any incorrect information, errors in grammar and typo, and if you have a good idea to improve the documentation.1.2. Writing a Discard ServerThe most simplistic protocol in the world is not 'Hello, World!' but DISCARD. It's a protocol which discards any received data without any response.To implement the DISCARD protocol, the only thing you need to do is to ignore all received data. Let us start straight from the handler implementation, which handles I/O events generated by Netty.Writing a Discard ServerChannelPipelineCoverage annotates a handler type to tell if the handler instance of the annotated type can be shared by more than one Channel (and its associated ChannelPipeline).DiscardServerHandler does not manage any stateful information, and therefore it is annotated with the value "all".DiscardServerHandler extends SimpleChannelHandler, which is an implementation of ChannelHandler. SimpleChannelHandler provides various event handler methods that you can override. For now, it is just enough to extend SimpleChannelHandler rather than to implement the handler interfaces by yourself.We override the messageReceived event handler method here. This method is called with a MessageEvent, which contains the received data, whenever new data is received from a client. In this example, we ignore the received data by doing nothing to implement the DISCARD protocol.exceptionCaught event handler method is called with an ExceptionEvent when an exception was raised by Netty due to I/O error or by a handler implementation due to the exception thrown while processing events. In most cases, the caught exception should be logged and its associated channel should be closed here, although the implementation of this method can be different depending on what you want to do to deal with an exceptional situation. For example, you might want to send a response message with an error code before closing the connection.So far so good. We have implemented the first half of the DISCARD server. What's left now is to write the main method which starts the server with the DiscardServerHandler.Looking into the Received DataChannelFactory is a factory which creates and manages Channel s and its related resources. It processes all I/O requests and performs I/O to generate ChannelEvent s. Netty provides various ChannelFactory implementations. We are implementing a server-side application in this example, and therefore NioServerSocketChannelFactory was used. Another thing to note is that it does not create I/O threads by itself. It is supposed to acquire threads from the thread pool you specified in the constructor, and it gives you more control over how threads should be managed in the environment where your application runs, such as an application server with a security manager.ServerBootstrap is a helper class that sets up a server. You can set up the server using a Channel directly. However, please note that this is a tedious process and you do not need to do that in most cases.Here, we add the DiscardServerHandler to the default ChannelPipeline. Whenevera new connection is accepted by the server, a new ChannelPipeline will be created fora newly accepted Channel and all the ChannelHandler s added here will be added tothe new ChannelPipeline. It's just like a shallow-copy operation; all Channel and their ChannelPipeline s will share the same DiscardServerHandler instance.You can also set the parameters which are specific to the Channel implementation. We are writing a TCP/IP server, so we are allowed to set the socket options such as tcpNoDelay and keepAlive.Please note that the "child." prefix was added to all options. It means the options will be applied to the accepted Channel s instead of the options of the ServerSocketChannel. You could do the following to set the options of the ServerSocketChannel:We are ready to go now. What's left is to bind to the port and to start the server. Here, we bind to the port 8080 of all NICs (network interface cards) in the machine. You can now call the bind method as many times as you want (with different bind addresses.)Congratulations! You've just finished your first server on top of Netty.1.3. Looking into the Received DataNow that we have written our first server, we need to test if it really works. The easiest way to test it is to use the telnet command. For example, you could enter "telnet localhost 8080" in the command line and type something.However, can we say that the server is working fine? We cannot really know that because it is a discard server. You will not get any response at all. To prove it is really working, let us modify the server to print what it has received.We already know that MessageEvent is generated whenever data is received and the messageReceived handler method will be invoked. Let us put some code into the messageReceived method of the DiscardServerHandler:It is safe to assume the message type in socket transports is always ChannelBuffer.ChannelBuffer is a fundamental data structure which stores a sequence of bytes in Netty. It's similar to NIO ByteBuffer, but it is easier to use and more flexible. For example, Netty allows you to create a composite ChannelBuffer which combines multiple ChannelBuffer s reducing the number of unnecessary memory copy.Although it resembles to NIO ByteBuffer a lot, it is highly recommended to refer to the API reference. Learning how to use ChannelBuffer correctly is a critical step in using Netty without difficulty.If you run the telnet command again, you will see the server prints what has received.The full source code of the discard server is located in the ty.example.discard package of the distribution.1.4. Writing an Echo ServerSo far, we have been consuming data without responding at all. A server, however, is usually supposed to respond to a request. Let us learn how to write a response message to a client by implementing the ECHO protocol, where any received data is sent back.The only difference from the discard server we have implemented in the previous sections is that it sends the received data back instead of printing the received data out to the console. Therefore, it is enough again to modify the messageReceived method:A ChannelEvent object has a reference to its associated Channel. Here, the returned Channelrepresents the connection which received the MessageEvent. We can get the Channel and call the write method to write something back to the remote peer.If you run the telnet command again, you will see the server sends back whatever you have sent to it. The full source code of the echo server is located in the ty.example.echo package of the distribution.1.5. Writing a Time ServerThe protocol to implement in this section is the TIME protocol. It is different from the previous examples in that it sends a message, which contains a 32-bit integer, without receiving any requests and loses the connection once the message is sent. In this example, you will learn how to construct and send a message, and to close the connection on completion.Because we are going to ignore any received data but to send a message as soon as a connection is established, we cannot use the messageReceived method this time. Instead, we should override the channelConnected method. The following is the implementation:As explained, channelConnected method will be invoked when a connection is established. Let us write the 32-bit integer that represents the current time in seconds here.To send a new message, we need to allocate a new buffer which will contain the message. We are going to write a 32-bit integer, and therefore we need a ChannelBuffer whose capacity is 4 bytes. The ChannelBuffers helper class is used to allocate a new buffer. Besides the buffer method, ChannelBuffers provides a lot of useful methods related to the ChannelBuffer. For more information, please refer to the API reference.On the other hand, it is a good idea to use static imports for ChannelBuffers:As usual, we write the constructed message.But wait, where's the flip? Didn't we used to call ByteBuffer.flip() before sending a message in NIO? ChannelBuffer does not have such a method because it has two pointers; one for read operations and the other for write operations. The writer index increases when you write something to a ChannelBuffer while the reader index does not change. The reader index and the writer index represents where the message starts and ends respectively.In contrast, NIO buffer does not provide a clean way to figure out where the message content starts and ends without calling the flip method. You will be in trouble when you forget to flip the buffer because nothing or incorrect data will be sent. Such an error does not happen in Netty because we have different pointer for different operation types. You will find it makes your life much easier as you get used to it -- a life without flipping out!Another point to note is that the write method returns a ChannelFuture. A ChannelFuture represents an I/O operation which has not yet occurred. It means, any requested operation might not have been performed yet because all operations are asynchronous in Netty. For example, the following code might close the connection even before a message is sent:Therefore, you need to call the close method after the ChannelFuture, which was returned by the write method, notifies you when the write operation has been done. Please note that, close might not close the connection immediately, and it returns a ChannelFuture.How do we get notified when the write request is finished then? This is as simple as addinga ChannelFutureListener to the returned ChannelFuture. Here, we created a newanonymous ChannelFutureListener which closes the Channel when the operation is done.Alternatively, you could simplify the code using a pre-defined listener:1.6. Writing a Time ClientUnlike DISCARD and ECHO servers, we need a client for the TIME protocol because a human cannot translate a 32-bit binary data into a date on a calendar. In this section, we discuss how to make sure the server works correctly and learn how to write a client with Netty.The biggest and only difference between a server and a client in Netty is that different Bootstrap and ChannelFactory are required. Please take a look at the following code:NioClientSocketChannelFactory, instead of NioServerSocketChannelFactory was used to create a client-side Channel.Dealing with a Stream-based TransportClientBootstrap is a client-side counterpart of ServerBootstrap.Please note that there's no "child." prefix. A client-side SocketChannel does not have a parent.We should call the connect method instead of the bind method.As you can see, it is not really different from the server side startup. What about the ChannelHandler implementation? It should receive a 32-bit integer from the server, translate it into a human readable format, print the translated time, and close the connection:It looks very simple and does not look any different from the server side example. However, this handler sometimes will refuse to work raising an IndexOutOfBoundsException. We discuss why this happens in the next section.1.7. Dealing with a Stream-based Transport1.7.1. One Small Caveat of Socket BufferIn a stream-based transport such as TCP/IP, received data is stored into a socket receive buffer. Unfortunately, the buffer of a stream-based transport is not a queue of packets but a queue of bytes. It means, even if you sent two messages as two independent packets, an operating system will not treat them as two messages but as just a bunch of bytes. Therefore, there is no guarantee that what you read is exactly what your remote peer wrote. For example, let us assume that the TCP/IP stack of an operating system has received three packets:Because of this general property of a stream-based protocol, there's high chance of reading them in the following fragmented form in your application:Therefore, a receiving part, regardless it is server-side or client-side, should defrag the received data into one or more meaningful frames that could be easily understood by the application logic. In case of the example above, the received data should be framed like the following:1.7.2. The First SolutionNow let us get back to the TIME client example. We have the same problem here. A 32-bit integer is a very small amount of data, and it is not likely to be fragmented often. However, the problem is that it can be fragmented, and the possibility of fragmentation will increase as the traffic increases.The simplistic solution is to create an internal cumulative buffer and wait until all 4 bytes are received into the internal buffer. The following is the modified TimeClientHandler implementation that fixes the problem:This time, "one"was used as the value of the ChannelPipelineCoverage annotation.It's because the new TimeClientHandler has to maintain the internal buffer and therefore cannot serve multiple Channel s. If an instance of TimeClientHandler is shared by multiple Channel s (and consequently multiple ChannelPipeline s), the content of the buf will be corrupted.A dynamic buffer is a ChannelBuffer which increases its capacity on demand. It's very usefulwhen you don't know the length of the message.First, all received data should be cumulated into buf.And then, the handler must check if buf has enough data, 4 bytes in this example, and proceed to the actual business logic. Otherwise, Netty will call the messageReceived method again when more data arrives, and eventually all 4 bytes will be cumulated.There's another place that needs a fix. Do you remember that we added a TimeClientHandler instance to the default ChannelPipeline of the ClientBootstrap? It means one same TimeClientHandler instance is going to handle multiple Channel s and consequently the data will be corrupted. To create a new TimeClientHandler instance per Channel, we have to implement a ChannelPipelineFactory:Now let us replace the following lines of TimeClient:with the following:It might look somewhat complicated at the first glance, and it is true that we don't need to introduce TimeClientPipelineFactory in this particular case because TimeClient creates only one connection.However, as your application gets more and more complex, you will almost always end up with writing a ChannelPipelineFactory, which yields much more flexibility to the pipeline configuration.1.7.3. The Second SolutionAlthough the first solution has resolved the problem with the TIME client, the modified handler does not look that clean. Imagine a more complicated protocol which is composed of multiple fields such as a variable length field. Your ChannelHandler implementation will become unmaintainable very quickly.As you may have noticed, you can add more than one ChannelHandler to a ChannelPipeline, and therefore, you can split one monolithic ChannelHandler into multiple modular ones to reduce the complexity of your application. For example, you could split TimeClientHandler into two handlers:•TimeDecoder which deals with the fragmentation issue, and•the initial simple version of TimeClientHandler.Fortunately, Netty provides an extensible class which helps you write the first one out of the box:There's no ChannelPipelineCoverage annotation this time because FrameDecoder is already annotated with "one".FrameDecoder calls decode method with an internally maintained cumulative buffer whenever new data is received.If null is returned, it means there's not enough data yet. FrameDecoder will call again when there is a sufficient amount of data.If non-null is returned, it means the decode method has decoded a message successfully.FrameDecoder will discard the read part of its internal cumulative buffer. Please remember that you don't need to decode multiple messages. FrameDecoder will keep calling the decoder method until it returns null.If you are an adventurous person, you might want to try the ReplayingDecoder which simplifies the decoder even more. You will need to consult the API reference for more information though.Additionally, Netty provides out-of-the-box decoders which enables you to implement most protocols very easily and helps you avoid from ending up with a monolithic unmaintainable handler implementation. Please refer to the following packages for more detailed examples:•ty.example.factorial for a binary protocol, and•ty.example.telnet for a text line-based protocol.1.8. Speaking in POJO instead of ChannelBufferAll the examples we have reviewed so far used a ChannelBuffer as a primary data structure of a protocol message. In this section, we will improve the TIME protocol client and server example to use a POJO instead of a ChannelBuffer.The advantage of using a POJO in your ChannelHandler is obvious; your handler becomes more maintainable and reusable by separating the code which extracts information from ChannelBuffer out from the handler. In the TIME client and server examples, we read only one 32-bit integer and it is not a major issue to use ChannelBuffer directly. However, you will find it is necessary to make the separation as you implement a real world protocol.First, let us define a new type called UnixTime.We can now revise the TimeDecoder to return a UnixTime instead of a ChannelBuffer.FrameDecoder and ReplayingDecoder allow you to return an object of any type. If they were restricted to return only a ChannelBuffer, we would have to insert another ChannelHandler which transforms a ChannelBuffer into a UnixTime.With the updated decoder, the TimeClientHandler does not use ChannelBuffer anymore:Much simpler and elegant, right? The same technique can be applied on the server side. Let us update the TimeServerHandler first this time:Now, the only missing piece is the ChannelHandler which translates a UnixTime back into a ChannelBuffer. It's much simpler than writing a decoder because there's no need to deal with packet fragmentation and assembly when encoding a message.The ChannelPipelineCoverage value of an encoder is usually "all" because this encoder is stateless. Actually, most encoders are stateless.An encoder overrides the writeRequested method to intercept a write request. Please note that the MessageEvent parameter here is the same type which was specified in messageReceived but they are interpreted differently. A ChannelEvent can be either an upstream or downstreamevent depending on the direction where the event flows. For instance, a MessageEvent can be an upstream event when called for messageReceived or a downstream event when called for writeRequested. Please refer to the API reference to learn more about the difference between a upstream event and a downstream event.Once done with transforming a POJO into a ChannelBuffer, you should forward the new buffer to the previous ChannelDownstreamHandler in the ChannelPipeline. Channels provides various helper methods which generates and sends a ChannelEvent. In this example, Channels.write(...)method creates a new MessageEvent and sends it to the previous ChannelDownstreamHandler in the ChannelPipeline.On the other hand, it is a good idea to use static imports for Channels:The last task left is to insert a TimeEncoder into the ChannelPipeline on the server side, and it is left as a trivial exercise.1.9. Shutting Down Your ApplicationIf you ran the TimeClient, you must have noticed that the application doesn't exit but just keep running doing nothing. Looking from the full stack trace, you will also find a couple I/O threads are running. To shut down the I/O threads and let the application exit gracefully, you need to release the resources allocated by ChannelFactory.The shutdown process of a typical network application is composed of the following three steps:1.Close all server sockets if there are any,2.Close all non-server sockets (i.e. client sockets and accepted sockets) if there are any, and3.Release all resources used by ChannelFactory.To apply the three steps above to the TimeClient, TimeClient.main()could shut itself down gracefully by closing the only one client connection and releasing all resources used by ChannelFactory:The connect method of ClientBootstrap returns a ChannelFuture which notifies whena connection attempt succeeds or fails. It also has a reference to the Channel which is associatedwith the connection attempt.Wait for the returned ChannelFuture to determine if the connection attempt was successful or not.If failed, we print the cause of the failure to know why it failed. the getCause()method of ChannelFuture will return the cause of the failure if the connection attempt was neither successful nor cancelled.Now that the connection attempt is over, we need to wait until the connection is closed by waiting for the closeFuture of the Channel. Every Channel has its own closeFuture so that you are notified and can perform a certain action on closure.Even if the connection attempt has failed the closeFuture will be notified because the Channel will be closed automatically when the connection attempt fails.All connections have been closed at this point. The only task left is to release the resources being used by ChannelFactory. It is as simple as calling its releaseExternalResources() method.All resources including the NIO Selector s and thread pools will be shut down and terminated automatically.Shutting down a client was pretty easy, but how about shutting down a server? You need to unbind from the port and close all open accepted connections. To do this, you need a data structure that keeps track of the list of active connections, and it's not a trivial task. Fortunately, there is a solution, ChannelGroup. ChannelGroup is a special extension of Java collections API which represents a set of open Channel s. If a Channel is added to a ChannelGroup and the added Channel is closed, the closed Channel is removed from its ChannelGroup automatically. You can also perform an operation on all Channel s in the same group. For instance, you can close all Channel s in a ChannelGroup when you shut down your server.To keep track of open sockets, you need to modify the TimeServerHandler to add a new open Channel to the global ChannelGroup, TimeServer.allChannels:。

Netty学习——Netty和Protobuf的整合(⼀)Netty学习——Netty和Protobuf的整合Protobuf作为序列化的⼯具,将序列化后的数据,通过Netty来进⾏在⽹络上的传输1.将proto⽂件⾥的java包的位置修改⼀下,然后再执⾏⼀下protoc异常捕获:启动服务器端正常,在启动客户端的时候,发送消息,报错警告: An exceptionCaught() event was fired, and it reached at the tail of the pipeline. It usually means the last handler in the pipeline did not handle the exception.ty.handler.codec.DecoderException: com.google.protobuf.InvalidProtocolBufferException: Protocol message contained an invalid tag (zero).at ty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:98)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)at ty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352)at ty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:326)at ty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:313)at ty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:427)at ty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:281)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)at ty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352)at ty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1422)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)at ty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:931)at ty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:163)at ty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:700)at ty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:635)at ty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:552)at ty.channel.nio.NioEventLoop.run(NioEventLoop.java:514)at ty.util.concurrent.SingleThreadEventExecutor$6.run(SingleThreadEventExecutor.java:1050)at ty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)at ty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)at ng.Thread.run(Thread.java:748)Caused by: com.google.protobuf.InvalidProtocolBufferException: Protocol message contained an invalid tag (zero).at com.google.protobuf.InvalidProtocolBufferException.invalidTag(InvalidProtocolBufferException.java:102)at com.google.protobuf.CodedInputStream$ArrayDecoder.readTag(CodedInputStream.java:627)at ty.sixthexample.MyDataInfo$Person.<init>(MyDataInfo.java:109)at ty.sixthexample.MyDataInfo$Person.<init>(MyDataInfo.java:69)at ty.sixthexample.MyDataInfo$Person$1.parsePartialFrom(MyDataInfo.java:881)at ty.sixthexample.MyDataInfo$Person$1.parsePartialFrom(MyDataInfo.java:875)at com.google.protobuf.AbstractParser.parsePartialFrom(AbstractParser.java:158)at com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:191)at com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:197)at com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:48)at ty.handler.codec.protobuf.ProtobufDecoder.decode(ProtobufDecoder.java:121)at ty.handler.codec.protobuf.ProtobufDecoder.decode(ProtobufDecoder.java:65)at ty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:88)... 23 more⼗⼆⽉ 03, 2019 11:01:51 上午 ty.channel.DefaultChannelPipeline onUnhandledInboundException警告: An exceptionCaught() event was fired, and it reached at the tail of the pipeline. It usually means the last handler in the pipeline did not handle the exception.ty.handler.codec.DecoderException: com.google.protobuf.InvalidProtocolBufferException: While parsing a protocol message, the input ended unexpectedly in the middle of a field. This could mean either that the input has been truncated at ty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:98)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)at ty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352)at ty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:326)at ty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:300)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)at ty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:352)at ty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1422)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:374)at ty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:360)at ty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:931)at ty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:163)at ty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:700)at ty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:635)at ty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:552)at ty.channel.nio.NioEventLoop.run(NioEventLoop.java:514)at ty.util.concurrent.SingleThreadEventExecutor$6.run(SingleThreadEventExecutor.java:1050)at ty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)at ty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)at ng.Thread.run(Thread.java:748)Caused by: com.google.protobuf.InvalidProtocolBufferException: While parsing a protocol message, the input ended unexpectedly in the middle of a field. This could mean either that the input has been truncated or that an embedded message at com.google.protobuf.InvalidProtocolBufferException.truncatedMessage(InvalidProtocolBufferException.java:84)at com.google.protobuf.CodedInputStream$ArrayDecoder.readRawByte(CodedInputStream.java:1238)at com.google.protobuf.CodedInputStream$ArrayDecoder.readRawVarint64SlowPath(CodedInputStream.java:1126)at com.google.protobuf.CodedInputStream$ArrayDecoder.readRawVarint32(CodedInputStream.java:1020)at com.google.protobuf.CodedInputStream$ArrayDecoder.readTag(CodedInputStream.java:623)at ty.sixthexample.MyDataInfo$Person.<init>(MyDataInfo.java:109)at ty.sixthexample.MyDataInfo$Person.<init>(MyDataInfo.java:69)at ty.sixthexample.MyDataInfo$Person$1.parsePartialFrom(MyDataInfo.java:881)at ty.sixthexample.MyDataInfo$Person$1.parsePartialFrom(MyDataInfo.java:875)at com.google.protobuf.AbstractParser.parsePartialFrom(AbstractParser.java:158)at com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:191)at com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:197)at com.google.protobuf.AbstractParser.parseFrom(AbstractParser.java:48)at ty.handler.codec.protobuf.ProtobufDecoder.decode(ProtobufDecoder.java:121)at ty.handler.codec.protobuf.ProtobufDecoder.decode(ProtobufDecoder.java:65)at ty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:88)... 21 more开始找问题:ty.handler.codec.DecoderException: 在解码过程中出错,可能是服务器端出的错2.Caused by: com.google.protobuf.InvalidProtocolBufferException: Protocol message contained an invalid tag (zero).3.While parsing a protocol message, the input ended unexpectedly in the middle of a field. This could mean either that the input has been truncated or that an embeddedmessage misreported its own length.检查了⼀下代码:猜测了⼀下:成了,效果图如下可怕,原因竟然出现在编码器添加的顺序不同导致的:正确的顺序是这样的:@Overrideprotected void initChannel(SocketChannel ch) throws Exception {ChannelPipeline pipeline = ch.pipeline();//这⾥和之前的不⼀样了,但也就是处理器的不⼀样//编解码处理器,protobuf提供的专门的编解码器.4个处理器pipeline.addLast(new ProtobufVarint32FrameDecoder());//Decoder是重点,解码器,将字节码转换成想要的数据类型//参数 messageLite,外层的要转换的类的实例pipeline.addLast(new ProtobufDecoder(MyDataInfo.Person.getDefaultInstance()));pipeline.addLast(new ProtobufVarint32LengthFieldPrepender());pipeline.addLast(new ProtobufEncoder());pipeline.addLast(new TestServerHandler());}接下来存放⼀下完整的客户端和服务器端的代码proto⽂件yntax ="proto2";package com.dawa.protobuf;option optimize_for = SPEED;option java_package ="ty.sixthexample";option java_outer_classname = "MyDataInfo";message Person{required string name = 1;optional int32 age = 2;optional string address = 3;}message Person2{required string name = 1;optional int32 age = 2;optional string address = 3;}服务器端代码:package ty.sixthexample;import ty.bootstrap.ServerBootstrap;import ty.channel.ChannelFuture;import ty.channel.EventLoopGroup;import ty.channel.nio.NioEventLoopGroup;import ty.channel.socket.nio.NioServerSocketChannel;import ty.handler.logging.LogLevel;import ty.handler.logging.LoggingHandler;/*** @Title: TestServer* @Author: ⼤娃* @Date: 2019/12/3 10:01* @Description:*/public class TestServer {public static void main(String[] args) throws Exception {EventLoopGroup bossGroup = new NioEventLoopGroup();EventLoopGroup workerGroup = new NioEventLoopGroup();try {ServerBootstrap serverBootstrap = new ServerBootstrap();serverBootstrap.group(bossGroup, workerGroup).channel(NioServerSocketChannel.class).handler(new LoggingHandler()).childHandler(new TestServerInitializer());ChannelFuture channelFuture = serverBootstrap.bind(8899).sync();channelFuture.channel().closeFuture().sync();} finally {bossGroup.shutdownGracefully();workerGroup.shutdownGracefully();}}}package ty.sixthexample;import ty.channel.ChannelHandlerContext;import ty.channel.SimpleChannelInboundHandler;/*** @Title: TestServerHandler* @Author: ⼤娃* @Date: 2019/12/3 10:09* @Description: handler本⾝是个泛型,这⾥的泛型就是取要处理的类型*/public class TestServerHandler extends SimpleChannelInboundHandler<MyDataInfo.Person> {@Overrideprotected void channelRead0(ChannelHandlerContext ctx, MyDataInfo.Person msg) throws Exception { //对接受到的消息进⾏处理:System.out.println(msg.getName());System.out.println(msg.getAge());System.out.println(msg.getAddress());}}package ty.sixthexample;import ty.channel.ChannelInitializer;import ty.channel.ChannelPipeline;import ty.channel.socket.SocketChannel;import ty.handler.codec.protobuf.ProtobufDecoder;import ty.handler.codec.protobuf.ProtobufEncoder;import ty.handler.codec.protobuf.ProtobufVarint32FrameDecoder;import ty.handler.codec.protobuf.ProtobufVarint32LengthFieldPrepender;/*** @Title: TestServerInitializer* @Author: ⼤娃* @Date: 2019/12/3 10:05* @Description:*/public class TestServerInitializer extends ChannelInitializer<SocketChannel> {@Overrideprotected void initChannel(SocketChannel ch) throws Exception {ChannelPipeline pipeline = ch.pipeline();//这⾥和之前的不⼀样了,但也就是处理器的不⼀样//编解码处理器,protobuf提供的专门的编解码器.4个处理器pipeline.addLast(new ProtobufVarint32FrameDecoder());//Decoder是重点,解码器,将字节码转换成想要的数据类型//参数 messageLite,外层的要转换的类的实例pipeline.addLast(new ProtobufDecoder(MyDataInfo.Person.getDefaultInstance()));pipeline.addLast(new ProtobufVarint32LengthFieldPrepender());pipeline.addLast(new ProtobufEncoder());pipeline.addLast(new TestServerHandler());}}客户端代码package ty.sixthexample;import ty.bootstrap.Bootstrap;import ty.channel.ChannelFuture;import ty.channel.EventLoopGroup;import ty.channel.nio.NioEventLoopGroup;import ty.channel.socket.nio.NioSocketChannel;/*** @Title: TestClient* @Author: ⼤娃* @Date: 2019/12/3 10:15* @Description: 客户端内容*/public class TestClient {public static void main(String[] args) throws Exception {EventLoopGroup eventLoopGroup = new NioEventLoopGroup();try {Bootstrap bootstrap = new Bootstrap();bootstrap.group(eventLoopGroup).channel(NioSocketChannel.class).handler(new TestClientInitializer());//注意此处,使⽤的是connect,不是使⽤的bindChannelFuture channelFuture = bootstrap.connect("localhost", 8899).sync();channelFuture.channel().closeFuture().sync();} finally {eventLoopGroup.shutdownGracefully();}}}package ty.sixthexample;import ty.channel.ChannelInitializer;import ty.channel.ChannelPipeline;import ty.channel.socket.SocketChannel;import ty.handler.codec.protobuf.ProtobufDecoder;import ty.handler.codec.protobuf.ProtobufEncoder;import ty.handler.codec.protobuf.ProtobufVarint32FrameDecoder;import ty.handler.codec.protobuf.ProtobufVarint32LengthFieldPrepender;/*** @Title: TestClientInitializer* @Author: ⼤娃* @Date: 2019/12/3 10:43* @Description:*/public class TestClientInitializer extends ChannelInitializer<SocketChannel> {@Overrideprotected void initChannel(SocketChannel ch) throws Exception {ChannelPipeline pipeline = ch.pipeline();//这⾥和之前的不⼀样了,但也就是处理器的不⼀样//编解码处理器,protobuf提供的专门的编解码器.4个处理器pipeline.addLast(new ProtobufVarint32FrameDecoder());//Decoder是重点,解码器,将字节码转换成想要的数据类型//参数 messageLite,外层的要转换的类的实例pipeline.addLast(new ProtobufDecoder(MyDataInfo.Person.getDefaultInstance()));pipeline.addLast(new ProtobufVarint32LengthFieldPrepender());pipeline.addLast(new ProtobufEncoder());//⾃⼰的处理器pipeline.addLast(new TestClientHandler());}}package ty.sixthexample;import ty.channel.ChannelHandlerContext;import ty.channel.SimpleChannelInboundHandler;/*** @Title: TestClientHandler* @Author: ⼤娃* @Date: 2019/12/3 10:44* @Description:*/public class TestClientHandler extends SimpleChannelInboundHandler<MyDataInfo.Person> {@Overrideprotected void channelRead0(ChannelHandlerContext ctx, MyDataInfo.Person msg) throws Exception {}@Overridepublic void channelActive(ChannelHandlerContext ctx) throws Exception {//处于活动状态MyDataInfo.Person person = MyDataInfo.Person.newBuilder().setName("⼤娃").setAge(22).setAddress("北京").build(); ctx.channel().writeAndFlush(person);}}这程序是有瑕疵的,解码器那⾥不通⽤,耦合性太强,有两个很明显的问题,但是要怎么解决呢?会专门写个帖⼦进⾏分析,Netty学习——Netty和Protobuf的整合(⼆)。

一、介绍NettyNetty是一个基于Java NIO的网络应用框架,它可以帮助开发者快速、高效地编写网络应用程序。

通过使用Netty,开发者可以轻松地创建各种类型的网络应用,包括但不限于服务器和客户端。

二、Netty的ChannelOption参数概述在Netty中,ChannelOption是一个枚举类型,它代表了一些用于配置Channel的选项。

通过设置ChannelOption参数,开发者可以对网络连接进行各种配置,以满足特定的需求。

在Netty中,常见的ChannelOption参数包括SO_KEEPALIVE、TCP_NODELAY、SO_RCVBUF、SO_SNDBUF等。

三、各种ChannelOption参数的作用1. SO_KEEPALIVESO_KEEPALIVE参数是用于设置保持连接。

当设置为true时,操作系统会主动检测连接是否还存活,如果不存活则断开连接。

在一些长连接应用场景中,可以通过设置SO_KEEPALIVE参数来确保连接的可靠性。

2. TCP_NODELAYTCP_NODELAY参数用于控制是否启用Nagle算法。

Nagle算法通过将小的数据包合并成更大的数据包来减少网络传输的次数,从而提高网络效率。

但是在一些实时性要求较高的应用中,可能需要禁用Nagle算法,以减少数据传输的延迟。

通过设置TCP_NODELAY参数为true,可以禁用Nagle算法。

3. SO_RCVBUF和SO_SNDBUFSO_RCVBUF和SO_SNDBUF参数分别用于设置接收缓冲区和发送缓冲区的大小。

在网络传输过程中,数据会先被写入到发送缓冲区,然后再通过网络传输到接收端的接收缓冲区。

通过调整这两个缓冲区的大小,可以对网络传输的性能产生一定的影响。

四、如何设置ChannelOption参数在Netty中,可以通过调用ChannelOption的set方法来设置对应的参数值。

可以通过以下方式来设置SO_KEEPALIVE参数:```channel.config().setOption(ChannelOption.SO_KEEPALIVE, true); ```通过调用setOption方法,开发者可以灵活地配置各种ChannelOption参数,以满足不同的网络应用需求。

8086汇编语⾔学习(⼀)8086汇编介绍1. 学习汇编的⼼路历程 进⾏8086汇编的介绍之前,想先分享⼀下我学习汇编的⼼路历程。

rocketmq的学习 其实我并没有想到这么快的就需要进⼀步学习汇编语⾔,因为汇编对于我的当前的⼯作内容来说太过底层。

但在⼏个⽉前,当时我正尝试着阅读rocketmq的源码。

和许多流⾏的java中间件、框架⼀样,rocketmq底层的⽹络通信也是通过netty实现的。

但由于我对netty并不熟悉,在⼯作中使⽤spring-cloud-gateway的时候甚⾄写出了⼀些导致netty内存泄漏的代码,却不太明⽩个中原理。

出于我个⼈的习惯,在学习源码时,抛开整体的程序架构不论,希望⾄少能对其中涉及到的底层内容有⼀个⼤致的掌握,能让我像⿊盒⼦⼀样去看待它们。

趁热打铁,我决定先学习netty,这样既能在⼯作时更好的定位、解决netty相关的问题,⼜能在研究依赖netty的开源项⽬时更加得⼼应⼿。

netty的学习 随着对netty学习的深⼊,除了感叹netty统⼀规整的api接⼝设计,内部交互灵活可配置、同时⼜提供了⾜够丰富的开箱即⽤组件外;更进⼀步的,netty或者说java nio涉及到了许多更底层的东西,例如:io多路复⽤,零拷贝,事件驱动等等。

⽽这些底层技术在redis,nginx,node-js等以⾼效率io著称的应⽤中被⼴泛使⽤。

扪⼼⾃问,⾃⼰在多⼤程度上理解这些技术?为什么io多路复⽤在io密集型的应⽤中,效率能够⽐之传统的同步阻塞io显著提⾼?⼀次⽹络或磁盘的io传输内部到底发⽣了什么,零拷贝到底快在了哪⾥? 如果没有很好的弄明⽩这些问题,那么我的netty学习将是不完整的。

我有限的知识告诉我,答案就在操作系统中。

操作系统作为软硬件的⼤管家,对上提供应⽤程序接⼝(程序员们通常使⽤⾼级语⾔提供的api间接调⽤);对下控制硬件(cpu、内存、磁盘⽹卡等外设);依赖硬件提供控制并发的系统原语;其牵涉的许多模块内容都已经独⽴发展了(多系统进程间通信->计算机⽹络、⽂件系统->数据库)。

netty addlistener用法Netty是一个高性能、异步事件驱动的网络编程框架,常用于构建可伸缩和可维护的服务器和客户端应用程序。

在Netty中,有一个非常重要的概念称为"addListener",它用于向ChannelFuture对象添加一个ChannelFutureListener,以便在操作完成时接收通知和执行相应的逻辑。

本文将以"Netty addListener用法"为主题,详细介绍Netty中addListener方法的用法和原理,并通过实例来说明其在实际开发中的应用。

一、Netty中的addListener方法简介在Netty中,每个IO操作都会返回一个ChannelFuture对象,用于表示操作的异步结果。

通过为ChannelFuture对象添加ChannelFutureListener,我们可以在I/O操作成功或失败时做出相应的响应。

addListener方法即用于向ChannelFuture对象添加一个或多个ChannelFutureListener。

二、addListener方法的使用步骤下面我们逐步介绍如何使用addListener方法,并通过一个实例来说明具体用法。

步骤1:创建一个Netty项目并引入Netty库首先,我们需要创建一个基于Netty的Java项目,并在项目中引入Netty 的相关库。

你可以选择使用Maven、Gradle等构建工具来管理依赖。

步骤2:初始化和配置Netty服务器在创建Netty服务器之前,我们需要进行相关的初始化和配置操作。

具体而言,我们需要创建一个EventLoopGroup、ServerBootstrap以及ChannelInitializer来设置服务器的选项和处理事件的逻辑。

步骤3:添加addListener方法在Netty服务器初始化和启动阶段,我们需要用addListener方法来注册一个ChannelFutureListener,以便在服务器启动成功或失败时做出响应。

Netty获取连接时的参数1. 引言Netty是一个高性能、异步事件驱动的网络编程框架,它提供了简单而强大的API,用于快速开发可扩展的网络应用程序。

在使用Netty构建网络应用程序时,获取连接时的参数是非常重要的一环。

本文将详细介绍Netty获取连接时的参数及其相关知识。

2. 连接参数在Netty中,连接参数是指在客户端与服务器建立连接时需要设置的一些参数。

这些参数包括:2.1 连接超时时间连接超时时间指定了客户端在尝试与服务器建立连接时最长等待时间。

如果超过这个时间仍然无法建立连接,则会抛出ConnectTimeoutException异常。

可以通过以下方式设置连接超时时间:Bootstrap b = new Bootstrap();b.option(ChannelOption.CONNECT_TIMEOUT_MILLIS, timeout);2.2 连接重试次数连接重试次数指定了客户端在尝试与服务器建立连接失败后的重试次数。

可以通过以下方式设置连接重试次数:Bootstrap b = new Bootstrap();b.option(ChannelOption.MAX_CONNECTION_ATTEMPTS, maxAttempts);2.3 TCP_NODELAY选项TCP_NODELAY选项决定了是否启用Nagle算法。

Nagle算法通过延迟发送小数据包来优化网络传输效率,但会增加延迟。

在某些场景下,禁用Nagle算法可以提高网络传输性能。

可以通过以下方式设置TCP_NODELAY选项:Bootstrap b = new Bootstrap();b.option(ChannelOption.TCP_NODELAY, true);2.4 SO_KEEPALIVE选项SO_KEEPALIVE选项决定了是否启用TCP心跳机制。

TCP心跳机制可以检测连接是否存活,并在连接断开时及时关闭资源。

可以通过以下方式设置SO_KEEPALIVE选项:Bootstrap b = new Bootstrap();b.option(ChannelOption.SO_KEEPALIVE, true);2.5 连接缓冲区大小连接缓冲区大小指定了客户端与服务器之间传输数据时的缓冲区大小。

Netty学习记录ty框架是基于事件机制的,简单说,就是发生什么事,就找相关处理方法。

ty的优点:Netty是一个NIO client-server(客户端服务器)框架,使用Netty可以快速开发网络应用,例如服务器和客户端协议。

Netty提供了一种新的方式来使开发网络应用程序,这种新的方式使得它很容易使用和有很强的扩展性。

Netty的内部实现时很复杂的,但是Netty提供了简单易用的api从网络处理代码中解耦业务逻辑。

Netty是完全基于NIO实现的,所以整个Netty都是异步的。

Netty提供了高层次的抽象来简化TCP和UDP服务器的编程,但是你仍然可以使用底层地API。

Netty为Java NIO中的bug和限制也提供了解决方案3.启动服务器步骤:a)启动服务器应先创建一个ServerBootstrap对象,因为使用NIO,所以指定NioEventLoopGroup来接受和处理新连接,指定通道类型为NioServerSocketChannel,设置InetSocketAddress让服务器监听某个端口已等待客户端连接。

b)调用childHandler放来指定连接后调用的ChannelHandler,这个方法传ChannelInitializer类型的参数,ChannelInitializer是个抽象类,所以需要实现initChannel方法,这个方法就是用来设置ChannelHandler。

c)最后绑定服务器等待直到绑定完成,调用sync()方法会阻塞直到服务器完成绑定,然后服务器等待通道关闭,因为使用sync(),所以关闭操作也会被阻塞。

现在你可以关闭EventLoopGroup和释放所有资源,包括创建的线程。

4.启动客户端步骤:a)创建Bootstrap对象用来引导启动客户端b)创建EventLoopGroup对象并设置到Bootstrap中,EventLoopGroup可以理解为是一个线程池,这个线程池用来处理连接、接受数据、发送数据c)创建InetSocketAddress并设置到Bootstrap中,InetSocketAddress是指定连接的服务器地址d)添加一个ChannelHandler,客户端成功连接服务器后就会被执行e)调用Bootstrap.connect()来连接服务器f)最后关闭EventLoopGroup来释放资源ty核心概念看netty in action 第三章Netty提供了ChannelInitializer类用来配置Handlers。

ChannelInitializer是通过ChannelPipeline来添加ChannelHandler的,如发送和接收消息,这些Handlers将确定发的是什么消息。

所有的Netty程序都是基于ChannelPipeline。

ChannelPipeline和EventLoop和EventLoopGroup密切相关,因为它们三个都和事件处理相关,所以这就是为什么它们处理IO的工作由EventLoop管理的原因。

Netty中所有的IO操作都是异步执行的,例如你连接一个主机默认是异步完成的;写入/发送消息也是同样是异步。

也就是说操作不会直接执行,而是会等一会执行,因为你不知道返回的操作结果是成功还是失败,但是需要有检查是否成功的方法或者是注册监听来通知;Netty使用Futures和ChannelFutures来达到这种目的。

Future注册一个监听,当操作成功或失败时会通知。

ChannelFuture封装的是一个操作的相关信息,操作被执行时会立刻返回ChannelFuture。

5.2 Channels,Events and Input/Output(IO)netty是一个非阻塞、事件驱动的网络框架。

Netty实际上是使用多线程处理IO事件,因为同步会影响程序的性能,Netty的设计保证程序处理事件不会有同步.Netty中的EventLoopGroup包含一个或多个EventLoop,而EventLoop就是一个Channel执行实际工作的线程。

EventLoop总是绑定一个单一的线程,在其生命周期内不会改变。

当注册一个Channel后,Netty将这个Channel绑定到一个EventLoop,在Channel的生命周期内总是被绑定到一个EventLoop。

在Netty IO操作中,你的程序不需要同步,因为一个指定通道的所有IO始终由同一个线程来执行。

5.3 什么是Bootstrap两种bootsstraps之间有一些相似之处,其实他们有很多相似之处,也有一些不同。

Bootstrap和ServerBootstrap之间的差异:Bootstrap用来连接远程主机,有1个EventLoopGroupServerBootstrap用来绑定本地端口,有2个EventLoopGroup第一个差异很明显:“ServerBootstrap”监听在服务器监听一个端口轮询客户端的“Bootstrap”或DatagramChannel是否连接服务器。

通常需要调用“Bootstrap”类的connect()方法,但是也可以先调用bind()再调用connect()进行连接,之后使用的Channel包含在bind()返回的ChannelFuture中。

第二个差别也许是最重要的:客户端bootstraps/applications使用一个单例EventLoopGroup,而ServerBootstrap使用2个EventLoopGroup ,一个ServerBootstrap可以认为有2个channels组,第一组包含一个单例ServerChannel,代表持有一个绑定了本地端口的socket;第二组包含所有的Channel,代表服务器已接受了的连接。

EventLoopGroup A唯一的目的就是接受连接然后交给EventLoopGroup B。

Netty可以使用两个不同的Group,因为服务器程序需要接受很多客户端连接的情况下,一个EventLoopGroup将是程序性能的瓶颈,因为事件循环忙于处理连接请求,没有多余的资源和空闲来处理业务逻辑,最后的结果会是很多连接请求超时。

若有两EventLoops,即使在高负载下,所有的连接也都会被接受,因为EventLoops接受连接不会和哪些已经连接了的处理共享资源。

EventLoopGroup和EventLoop是什么关系:EventLoopGroup可以包含很多个EventLoop,每个Channel绑定一个EventLoop不会被改变,因为EventLoopGroup包含少量的EventLoop的Channels,很多Channel会共享同一个EventLoop。

这意味着在一个Channel 保持EventLoop繁忙会禁止其他Channel绑定到相同的EventLoop。

我们可以理解为EventLoop是一个事件循环线程,而EventLoopGroup是一个事件循环集合。

5.4 Channel Handlers and Data FlowNetty中有两个方向的数据流,入站(ChannelInboundHandler)和出站(ChannelOutboundHandler)之间有一个明显的区别:若数据是从用户应用程序到远程主机则是“出站(outbound)”,相反若数据时从远程主机到用户应用程序则是“入站(inbound)”。

为了使数据从一端到达另一端,一个或多个ChannelHandler将以某种方式操作数据。

这些ChannelHandler会在程序的“引导”阶段被添加ChannelPipeline 中,并且被添加的顺序将决定处理数据的顺序。

ChannelPipeline的作用我们可以理解为用来管理ChannelHandler的一个容器,每个ChannelHandler处理各自的数据(例如入站数据只能由ChannelInboundHandler处理),处理完成后将转换的数据放到ChannelPipeline中交给下一个ChannelHandler继续处理,直到最后一个ChannelHandler处理完成。

在ChannelPipeline中,如果消息被读取或有任何其他的入站事件,消息将从ChannelPipeline的头部开始传递给第一个ChannelInboundHandler,这个ChannelInboundHandler可以处理该消息或将消息传递到下一个ChannelInboundHandler中,一旦在ChannelPipeline中没有剩余的ChannelInboundHandler后,ChannelPipeline就知道消息已被所有的饿Handler 处理完成了。

反过来也是如此,任何出站事件或写入将从ChannelPipeline的尾部开始,并传递到最后一个ChannelOutboundHandler。

ChannelOutboundHandler的作用和ChannelInboundHandler相同,它可以传递事件消息到下一个Handler或者自己处理消息。

不同的是ChannelOutboundHandler是从ChannelPipeline的尾部开始,而ChannelInboundHandler是从ChannelPipeline的头部开始,当处理完第一个ChannelOutboundHandler处理完成后会出发一些操作,比如一个写操作。

出站和入站的操作不同,能放在同一个ChannelPipeline工作?Netty的设计是很巧妙的,入站和出站Handler有不同的实现,Netty能跳过一个不能处理的操作,所以在出站事件的情况下,ChannelInboundHandler将被跳过,Netty 知道每个handler都必须实现ChannelInboundHandler或ChannelOutboundHandler。

也就是说Netty中发送消息有两种方法:直接写入通道或写入ChannelHandlerContext对象。

这两种方法的主要区别如下:直接写入通道导致处理消息从ChannelPipeline的尾部开始写入ChannelHandlerContext对象导致处理消息从ChannelPipeline的下一个handler开始5.5 业务逻辑在处理消息时有一点需要注意的,在Netty中事件处理IO一般有很多线程,程序中尽量不要阻塞IO线程,因为阻塞会降低程序的性能。

必须不阻塞IO线程意味着在ChannelHandler中使用阻塞操作会有问题。

幸运的是Netty提供了解决方案,我们可以在添加ChannelHandler到ChannelPipeline中时指定一个EventExecutorGroup,EventExecutorGroup会获得一个EventExecutor,EventExecutor将执行ChannelHandler的所有方法。