Effective file data-block placement for different types of page cache on hybrid main memory

- 格式:pdf

- 大小:643.03 KB

- 文档页数:22

[检索词汇] altium designer 中英文技术词汇对照PCB AD常用术语翻译对译protel 单词Accept 接受Accuracy 精确度准确度Activate 激活活动启动Add 添加Address 地址Advance 高级Aide 助手辅助Align 排列对齐Alpha 开端Analog 模拟的Analyzer 分析器测定仪Angle 角度观点Annotate 注解Aperture 孔径光圈Applocation 应用程序Approximation 接近近似值Arc 圆弧弧度Architecture 结构体构造Array 阵列数组Ascend 登高上升Assembly 集合装配Associate 关联的辅助的Asynchronous 异步的Automatical 自动的Access 存取通道接近Action 行动作用Active 积极的活泼的Adder 加法器Administration 管理员管理器Aggressor 干扰源入侵者Alias 别名化名混淆Allow 允许Always 总是永远Analysis 分析研究Animation 动画Any 任意的Applicable 可应用的适用的Apply 应用Arbiter 仲裁器Architect 设计者制造者Area 面积范围Arrange 安排排列调整Arrow 箭形Assembler 装配器汇编Assign 分配分派指定Astable 非稳态的多谐振荡的Attempt 尝试Available 有效的有用的Backup 备用Bar 标签Base 基极基础基地Batch 批处理批量Begin 开始创建Behavior 行为举止态度Bell 铃钟Between 两者之间Bidir 允许双向Bidirectional 双向性Bill 清单Binary 二进制二元的Bistable 双稳Bit 位Bitmap 位图Black 黑色黑色的Blind 盲孔Blip 标志信号Block 框栏隔阻Board 板子牌子委员会Body 物体主干主体Boolean 布尔值Border 边线Bottom 底部Bounce 反弹抖动Breakpoint 中断点断点Broken 破裂的损坏的Browse 浏览Buffer 缓冲器Build 构建Bullet 锥形体Bury 埋藏Bus 总线Butterfly 蝶形Button 按钮Bypass 省略Byte 字节Cable 电缆Calculation 计算估计CAM(computer aided manufacturing)计算机辅助制造Cancel 作废删除Capacitor 电容Caption 标题Capture 捕获收集记录Case 实情案例Category 类目范畴部属Cathode 阴极Center 中心Centimeter 厘米Chain 链Change 改变Channel 通道Charge 充电指责指示Check 检测Chart 制成图表Child 子女Chip 集成芯片Circuit 电路Circular 圆环弧形Class 阶层等级Cleanup 清扫工作Clear 清除清零Clearance 清除余地间隙Click 单击点击Clipboard 剪切板Clock 时钟Close 关闭结束Closure 关闭闭幕Code 编码代码Collector 集电极Color 彩色着色Colour 颜色Column 圆柱纵列栏目Combination 组合Comma 逗号Command 命令Comparator 比较器Community 社区群落Compilation 编辑物Compatible 兼容的和谐的Component 元器件组成成分Compile 编辑收集汇编Computer 计算机Composite 合成的复合的综合的Condition 条件Concurrent 并发事件同行Configure 配置Confidence 置信度自信信赖Conflict 冲突Confirm 确认证实Connector 连接端Connectivity 连线Constraint 约束限制因素Console 主控台表盘托架Consumer 用户使用者Construction 构造Continue 继续延伸Contract 缩短Control 控制Convert 转化转变Coordinate 坐标Copper 铜Copy 拷贝复制Core 核Cord 绳线索Corner 角落拐角Corporation 公司企业法人Counter 计数器Courtyard 天井庭院Create 创建Cross 十字符号混合Crosspoint 插入测试点Crosstalk 串扰CRTCrystal 晶体Current 电流当前的流行的Cursor 光标游标指示器Custom 惯例Customer 用户客户Cutout 切出划出挖空Cycle 周期Comment 注释发表评论说明书Dashed 下划线Data 数字数据Databse 数据库资料库Date 日期Daughtrer 子系子插件派生Debug 排错调试Dead 死的Decimal 十进制小数Decade 十进制十年Default 默认值缺省值弃权Decoder 译码器Definition 定义式限定分辨率Define 定义下定义Delay 延时Degree 度等级Demo 演绎演示版Delete 删除Demote 降级降低Deny 否认Description 描述Designator 标识指示者Designer 设计师Destination 目标目的Detail 细节零件Device 装置设备图样器件Diagram 示意图Dialog 对话Diagonal 对角线Difference 差异查分差额Diamond 菱形钻石Digital 数字的Different 不同的Dimension 尺度Dim 朦胧暗淡Direct 指示指令Diode 二极管Disable 无能无效无用Director 指南指导咨询Disk 圆盘Discharge 放点排出释放Distribution 分配分布分发Display 显示Dock 停放连接接驳Divider 分配分割Dot 小点虚线点缀Donut 环形Download 下载Down 下降Draw 绘制描写冲压成型Drag 拖拽Drop 下拉滴落遗漏Drill 钻Duplicate 复制副本Dual 双数的两倍Duty 占空比Edge 边缘Edit 编辑Efficient 高效的有能力的Electrical 电气的电学的电力的Ellipse 椭圆Embed 潜入插入Emitter 射极Emulate 效法Enable 使能激活有效Encoder 编码器End 结束Engineer 设计建造工程师Enter 进入参加Entity 实体Entry 入口Enum 列举型别Error 错误Evaluation 评价评估鉴定Example 例子Excel 胜过优秀突出Execute 执行实施签署Exist 存在的现有的Expand 扩大推广展开Expansion 扩大扩展扩张Expiry 终止期满Explode 爆炸分解Exponent 指数Export 输出出品Explore 探究查询Extra 额外的附加的External 外部的外面的外形Extrude 压制突出Extract 提取摘录Fabrication 制造Frequency 频率Failure 无效毁坏的Function 函数功能False 虚假的伪造的Fail 失败不足Fanout 扇出Fall 下降降落落差Favorite 最爱的(sth sb)Famale 凹的阴的Figure 图形Fatal 致命的Fill 填充Field 现场域范围Filter 过滤器File 文件Find 建立发现Film 胶片First 第一首先Finally 最终的Flash 闪光闪烁曝光Finish 完成结束Flatten 弄平弄直Fit 适合相配Flip-flop 触发器Flat 平的平坦的Floorplan 层平面图Flip 倒转Focus 集中聚焦Float 发行Footprint 封装Flow 流动源自Format 格式Folder 文件夹折叠Formula 公式Force 强制FPGAForum 讨论论坛会Frame 框图塑造Framework 架构结构Form 从····起由于Gate 门Generate 产生导致造成Graphic 图形green 绿色Grid 栅格Ground 地面基础Group 组类集聚Guide 引导指南手册Hard 坚硬的困难的Hardware 硬件设备五金Harmonic 谐波谐振Harness 束Hatch 策划画影线舱口Hazard 冒险Hazy 模糊的混浊的Height 高度Help 帮助Hexadecimal 十六进制Hide 隐藏Hierarchy 体系分层系列High 高的高级的高尚的Hint 暗示History 历史记录Horizontal 水平横向Hug 拥抱紧靠Ideal 理想的Identical 相同的相等的恒等的Identifier 识别符Identify 识别标记IDF(integrated data file) 综合资料文件IEEE 电气电子工程师协会Impedance 阻抗Ignore 忽略Import 输入导入Imperial 英制的Incident 事变入射Index 索引Increment 增量Inductor 电感Indication 指示表示Information 信息Industry 工业企业产业行业Innovation 创新Initial 最初的开始的Insert 插入嵌入添Input 输入Insight 洞察顿悟Inside 内部的里面的Install 安装Inspector 检查视察Instrument 仪器Instance 实例Integrate 积分的集成使完成使结合Integer 整数Interactive 互动的交互的Integrity 完整的Interface 接口界面Interconnect 互联器Internet 因特网Internal 内部的IPC(industry process control)工业过程控制Interrupt 打断中断Isolate 隔离Island 孤岛岛屿Item 项目条款ISP(In-system programmable)在线编程JTAG(joint test action group) 联合测试行为组Job 工作职业Joint 联合的Jump 跳跃Junctions 结点接点Keep 保持Key 关键钥匙Kind 种类Knowledge 知识Label 标签商标Lattice 晶格点阵Landscape 横向Layer 层Latch 锁存器锁扣LCD(liquid crystal display) 液晶显示器Launch 发射投掷出版LED(light emitting diode) 发光二级管Layout 布置布局Legacy 老化遗留下Learn 学习学会认知Length 长度Left 左边向左Less 较少的Legend 图例图注Library 库图书馆Lens 镜Line 线条线路Level 水平Link 链接连接License 注册Linear 线性的直线的一次的Liquid 液体流体不稳的Live 激活Localize 本地局部本地化定位Locate 把···设置在位置场所Lock 锁定Log 记录Logarithmic 对数的Logic 逻辑逻辑学Logical 逻辑的合理的Login 登录Lookup 查找查阅Loop 循环环状物Low 低的矮的Lot 地段许多Laboratory 实验室LPM(library of parameter modules) 参数化模块库Language 语言用语术语Machine 机器机械Multivibrator 多谐振荡器Maker 制造者Magnitude 幅值强度量值Manage 管理操纵Male 阳的Manual 手册手动的Manager 管理者经理主任Map 图Manufacture 制造制作加工Match 匹配比赛Mask 表面掩膜屏蔽Matrix 矩阵混合物Material 材料物质Maximum 最大值最大量Maximize 增加扩大Measure 测量Meal 粉状物膳食Medium 中间的媒介Mechanical 机械的Membership 会员资格Mega 许多非常强大Memory 存储器内存Menu 菜单Merge 合并Message 信息Meter 米(长度单位)Metric 公制的Microsoft 微软Millimeter 毫米Minimum 最小值Miscellaneous 混合杂项多样Miser 钻探机Miss 损失差错遗漏Miter 斜接斜角Mix 混合结合杂交Mode 形状方式风格Model 模型模特型号Modify 更改修饰Module 模块组件单元Moire 网纹纹波龟纹Mold 模块模型Monitor 监控Monostable 单稳状态Moor 固定系住Mount 安装Move 移动Mult 多种多元多路Magnify 放大Name 名称Nano 十亿分之一纳Navigator 领航导航Neck 领口瓶颈Negative 负的Net 网络Netlable 网络标号Netlist 网络表New 新的Next 下一步再Nexus 关系Node 结点波峰Noise 噪音None 忽略一个也没Normal 正常的常规的Number 数字号码数量Object 物体目标Octagon 八边形Octal 八进制Octave 八行八度八位ODBC(ovject database connectivity) 开放数据库互联Ohm 欧姆(电阻单位)OLE DB(object lin king and embedding databse) 目标链接及嵌入式数据库Online 在线Opcode 运算码Openbus 开放总线公共总线Open 开放的公开的Operate 工作运转营业Operand 操作数运算域Optimizer 优化程序优化器Operator 运算符Orange 橙子桔子Option 选择选项Orientation 取向Order 顺序Original 原本的最初的Origin 起源原点Outline 外形略述概括Orthogonal 直角的正交的Outside 外部的外观Output 输出Overlay 覆盖层Overall 全部的Owner 物主所有者Overshoot 过冲Package 封装包装Pad 焊盘基座垫料Page 页码翻阅Pair 成对成双Palette 调色盘选盘控制板Panel 面板画板嵌镶板Parallel 并行并联Parameter 参数系数因数Parent 父系Parsing 部析Part 部件Passive 无源的被动的Paste 助焊贴敷铜Path 路径轨迹PCBPeak 峰值Peripheral 周边的外围的Permission 同意Persistent 坚持不懈固执的Physical 物理的Pickbox 点选框取景框Pickup 拾取收集Pin 引脚管脚Place 放置Placement 布局Plane 平板平面飞机Plugin 插件栓Point 点Plus 十字记号加号Polygon 多边形多角形Pole 极极地Popup 弹出Polyline 折线Portable 可移植的移动的Port 端口Position 位置Portrait 竖向纵向Postpone 延时搁置Positive 正的Power 电源功率Pour 倾泻倒灌Preference 偏好优先Predefined 预先定义Preliminary 预备的初步语言的Prefix 前缀字首Preserve 保护保藏维护Prepreg 预浸料半固化品Preview 预览预习排练Preset 置数Primary 主要的Previous 以前的早先的Print 打印Primitive 原始的纯朴的Priority 优先级优先权Printout 打印输出Process 进程步骤Probe 探测调查Profile 外形轮廓部面Processor 处理器加工者Program 程序设计Programmable 可编程的Project 工程项目Proper 适当的恰当的Promote 促进创办Provider 提供者供应商Property 性质特性Pull 拉牵拖PSD(programmable system device) 可编程的系统部件Pulse 脉冲跳动Pullback 障碍阻扰拉回Push 推挤推进拓展Quality 质量特性Query 疑问质问问号Quiet 静态的安静的Radix 根基数RAMRange 级别排行类别Raster 光栅屏面Ray 射线光线闪现Rebuild 重建重构Recent 最近的Record 记录经历Rectangle 矩形长方形Rectangular 矩形的成直角的Red 红色的Reference 参考基准Reflect 反射Region 区域地带Register 寄存器Remove 删除移除Repeat 重复Report 报告Require 需要需求Reserve 储备保存Reset 复位置零Reshape 改造矫形变形Resistance 电阻值Resistor 店主Resource 资源Restore 恢复还原返回Restrict 限制约束Result 结果导致Retrieve 取回恢复Revision 修订本校正版Right 右的右方Ring 铃声环形物Rise 上升起立增强ROMRoom 房间空间位置Root 根根源本质Rotation 旋转自转Round圆的Routing 走线布线Row 成形排列Rule 规则Run 运转流行的趋势Server 服务服务器Same 相同的同样的Sans 没有无Save 保存Scalar 标量数量Scale 刻度调节Schematic 图表示意图Scope 范围域Score 成绩计分Script 脚本Scroll 卷动Search 搜索Secondary 次要的从属的Section 部分片断Seed 原因种子Select 挑选选拔Separate 分离区别标识Sequential 有顺序的相继的Serial 串行的系列的序列的Serif 细体字Series 串联连贯成套Session 学期期间Service 服务检修劳务Shader 材质Set 设置置位Shadow 阴影着色Shape 造型形状Share 共享Sheet 图纸方块Shelve 搁置暂缓考虑Shift 移动变换Shortcut 快捷方式Show 显示展示Signal 信号Sign 签署有符号的征兆标志Silent 沉默的Silkscreen 丝印层Similar 相似的类似的Simple 简单的朴实的Simulation 仿真Sitemap 网站地图网站导航Situs 地点位置Silce 切片薄片Slider 滑块滑动Slot 槽Small 小的细的微的Smart 智慧灵气Snap 跳转突然折断Snippet 片断摘录Software 软件Solder 焊接Solid 实心的固体的坚固的Sort 分类Source 电源根源Space 间隔间隙Speaker 扬声器Special 特殊的Specification 说明书明细表Specify 具体指定详细指明列入清单Speed 速度Split 分离Spreadsheet 电子表格试算表Square 方形正直的Stack 堆积层叠Standalone 单板机Standard 标准规范Standoff 支架平淡Start 起始Starve 不足饥饿State 状态形势州Static 静止的Station 平台地位Status 地位资格身份Step 步进踏步步骤Stimulus 激励促进刺激Stop 停止Storage 储存器Strategy 策略方案战略String 字符串串条弦Structure 构成结构组织Style 风格文体作风Subversion 颠覆Summary 摘要概括总结Support 支持Suppress 压制抑制阻止Surface 表面Suspend 暂停挂起终止Swap 交换Sweep 扫描环视Switch 开关Symbol 符号Synchronous 同步的Syntax 语法Synthesis 综合合成System 系统Table 表格True 真实Target 目标对象指标Tail 末尾尾部Technology 技术工艺Teardrop 泪滴Template 样板Temperature 温度气温Terminal 极限的末端的端子Tenting 掩盖遮掩Terminator 终端负载Terminate 终结终止Text 文本正本Test 测试Themselves 他们自己TFT 触摸屏显示器Thruhole 通孔Thermal 热的热量的Time 时间Tile 铺排Timer 定时器Timebase 时基Toggle 切换开关双稳Timing 时序定时Tool 工具Tolerance 公差容限容差Top 顶部Toolbar 工具栏工具条Total 总体的合计为Topic 题目Track 轨迹Touchscreen 触摸屏Train 培训Trail 拖Transfer 传递Trance 恍惚Transistor 三极管晶体管Transient 暂态瞬态Transparency 透明度Translate 转变翻译Triangle 三角Transport 传输Trigonometry 三角法Trigger 触发器启动引起Tube 真空管电子管试管Tune 调谐曲调协调Tutorial 指导导师Type 类型Unassign 未定义Uncouple 解耦松开Undershoot 下冲负尖峰Undo 取消还原Uniform 相同的一致的单调的Unique 独特的唯一的Unit 单位Universal 普遍的全体的宇宙的Unspecified 不规定不确定Up 向上Update 更新Usage 用法习惯处理Utility 实用的通用的Use 实用Valid 有效的确实的合法的Validate 使生效Validation 确认验证Value 数值价值价格评价Variant 变化派生Vector 矢量向量Vendor 供应商卖主Version 版本翻译Vertical 垂直纵向Vertex 顶点制高点极点Very 很非常甚至VHDLVia 经由过孔Victim 被干扰受害者Video 视频录像View 查看Violation 违犯冲突Virtual 实质上的虚拟的Visible 可视的V oltage 电压Wait 等待延缓Walkaround 环绕步行栈桥Warn 警告提醒预告Wave 波动起伏挥动Waveform 波形图Where 地点在哪里Wide 宽的广泛的Window 窗口Width 宽度幅度带宽Wizard 向导精灵Wire 导线Workspace 工作区工作空间Worksheet 工作表单WOSA(windows open services architecture) 开放服务结构Worst 最差的最坏的Zero 零Zone 地段区域Zoom 缩放陡升。

故障树与可靠性框图故障树分析(FTA)的历史故障树分析(FTA)是可靠性和安全分析的另外一种技术。

1962年美国贝尔实验室为美国空军在民兵导弹发射控制系统而发展了该理论,以后被Boeing公司引进并扩展。

故障树分析是许多建立在运筹学和系统可靠性之中的符号"逻辑分析方法"的其中一种方法。

其他方法包括可靠性框图( RBDs)。

?什么是故障树图(FTD)?故障树图( 或者负分析树)是一种逻辑因果关系图,它根据元部件状态(基本事件)来显示系统的状态(顶事件)。

就像可靠性框图(RBDs),故障树图也是一种图形化设计方法,并且作为可靠性框图的一种可替代的方法。

一个故障树图是从上到下逐级建树并且根据事件而联系,它用图形化"模型"路径的方法,使一个系统能导致一个可预知的,不可预知的故障事件(失效),路径的交叉处的事件和状态,用标准的逻辑符号(与,或等等)表示。

在故障树图中最基础的构造单元为门和事件,这些事件与在可靠性框图中有相同的意义并且门是条件。

故障树和可靠性框图FTDs 和RBDs最基本的区别在于RBD 工作在"成功的空间",从而系统看上去是成功的集合,然而,故障树图工作在"故障空间"并且系统看起来是故障的集合。

传统上,故障树已经习惯使用固定概率(也就是,组成树的每一个事件都有一个发生的固定概率) 然而可靠性框图对于成功(可靠度公式)来说可以包括以时间而变化的分布,并且其他特点。

画故障树:门和事件故障树是由门和事件(块)建立,通常在故障树中运用最多的两个门与门和或门。

例如,由2个事件(或块or blocks)组成一个顶事件(或一个系统)。

如果任何一个事件的发生都引起顶事件发生,那么这些事件(块)用一个或门连接。

再者,如果两个事件同时发生才能引起顶事件的发生,那么它们用与门连接。

用一个可视化的例子,假设由组件A和B组成系统的一个简单案例,任何一个组件发生故障都会导致系统故障。

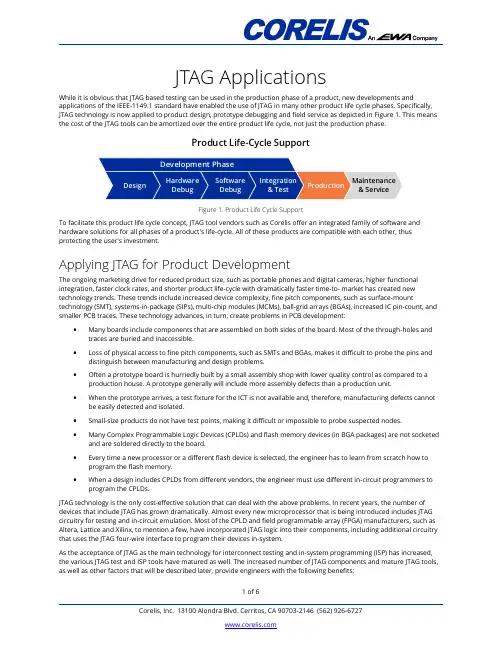

JTAG ApplicationsWhile it is obvious that JTAG based testing can be used in the production phase of a product, new developments and applications of the IEEE-1149.1 standard have enabled the use of JTAG in many other product life cycle phases. Specifically, JTAG technology is now applied to product design, prototype debugging and field service as depicted in Figure 1. This means the cost of the JTAG tools can be amortized over the entire product life cycle, not just the production phase.Product Life-Cycle SupportTo facilitate this product life cycle concept, JTAG tool vendors such as Corelis offer an integrated family of software and hardware solutions for all phases of a product's life-cycle. All of these products are compatible with each other, thus protecting the user's investment.Applying JTAG for Product DevelopmentThe ongoing marketing drive for reduced product size, such as portable phones and digital cameras, higher functional integration, faster clock rates, and shorter product life-cycle with dramatically faster time-to- market has created new technology trends. These trends include increased device complexity, fine pitch components, such as surface-mount technology (SMT), systems-in-package (SIPs), multi-chip modules (MCMs), ball-grid arrays (BGAs), increased IC pin-count, and smaller PCB traces. These technology advances, in turn, create problems in PCB development:∙Many boards include components that are assembled on both sides of the board. Most of the through-holes and traces are buried and inaccessible.∙Loss of physical access to fine pitch components, such as SMTs and BGAs, makes it difficult to probe the pins and distinguish between manufacturing and design problems.∙Often a prototype board is hurriedly built by a small assembly shop with lower quality control as compared to a production house. A prototype generally will include more assembly defects than a production unit.∙When the prototype arrives, a test fixture for the ICT is not available and, therefore, manufacturing defects cannot be easily detected and isolated.∙Small-size products do not have test points, making it difficult or impossible to probe suspected nodes.∙Many Complex Programmable Logic Devices (CPLDs) and flash memory devices (in BGA packages) are not socketed and are soldered directly to the board.∙Every time a new processor or a different flash device is selected, the engineer has to learn from scratch how to program the flash memory.∙When a design includes CPLDs from different vendors, the engineer must use different in-circuit programmers to program the CPLDs.JTAG technology is the only cost-effective solution that can deal with the above problems. In recent years, the number of devices that include JTAG has grown dramatically. Almost every new microprocessor that is being introduced includes JTAG circuitry for testing and in-circuit emulation. Most of the CPLD and field programmable array (FPGA) manufacturers, such as Altera, Lattice and Xilinx, to mention a few, have incorporated JTAG logic into their components, including additional circuitry that uses the JTAG four-wire interface to program their devices in-system.As the acceptance of JTAG as the main technology for interconnect testing and in-system programming (ISP) has increased, the various JTAG test and ISP tools have matured as well. The increased number of JTAG components and mature JTAG tools, as well as other factors that will be described later, provide engineers with the following benefits:∙ Easy to implement Design-For- Testability (DFT) rules. A list of basic DFT rules is provided later in this article. ∙ Design analysis prior to PCB layout to improve testability. ∙ Packaging problems are found prior to PCB layout. ∙ Little need for test points. ∙ No need for test fixtures.∙ More control over the test process.∙ Quick diagnosis (with high resolution) of interconnection problems without writing any functional test code. ∙ Program code in flash devices.∙ Design configuration data placement into CPLDs. ∙JTAG emulation and source-level debugging.What JTAG Tools are needed?In the previous section, we listed many of the benefits that a designer enjoys when incorporating boundary-scan in his product development. In this section we describe the tools and design data needed to develop JTAG test procedures and patterns for ISP, followed by a description of how to test and program a board. We use a typical board as an illustration for the various JTAG test functions needed. A block diagram of such a board is depicted in . A typical digital board with JTAG devices includes the following main components:∙ Various JTAG components such as CPLDs, FPGAs, Processors, etc., chained together via the boundary-scan path. ∙ Non-JTAG components (clusters). ∙ Various types of memory devices. ∙ Flash Memory components. ∙Transparent components such as series resistors or buffers.Most of the boundary-scan test systems are comprised of two basic elements: Test Program Generation and Test Execution. Generally, a Test Program Generator (TPG) requires the netlist of the Unit Under Test (UUT) and the BSDL files of the JTAG components. The TPG automatically generates test patterns that allow fault detection and isolation for all JTAG testable nets of the PCB. A good TPG can be used to create a thorough test pattern for a wide range of designs. For example, ScanExpress TPG typically achieves net coverage of more than 60%, even though the majority of the PCB designs are not optimized for boundary-scan testing. The TPG also creates test vectors to detect faults on the pins of non-scannable components, such as clusters and memories that are surrounded by scannable devices.Some TPGs also generate a test coverage report that allows the user to focus on the non-testable nets and determine whatadditional means are needed to increase the test coverage.I/O I/OFigure 2. Typical Board with JTAG ComponentsTest programs are generated in seconds. For example, when Corelis ScanExpress TPG™ was used, it took a 3.0 GHz Pentium 4 PC 23 seconds to generate aninterconnect test for a UUT with 5,638 nets (with 19,910 pins). This generation time includes netlist and all other input files processing as well as test pattern file generation.Test execution tools from various vendors provide means for executing JTAG tests and performing in-system programming in a pre-planned specific order, called a test plan. Test vectors files, which have been generated using the TPG, are automatically applied to the UUT and the results are compared to the expected values. In case of a detected fault, the systemdiagnoses the fault and lists the failures as depicted in Figure 3. Figure 3 shows the main window of the Corelis test execution tool, ScanExpress Runner™. ScanExpress Runner gives the user an overview of all test steps and the results of executed tests. Theseresults are displayed both for individual tests as well as for the total test runs executed. ScanExpress Runner provides the ability to add or delete various test steps from a test plan, or re-arrange the order of the test steps in a plan. Tests can also be enabled or disabledand the test execution can be stopped upon the failure of any particular test.Different test plans may be constructed for different UUTs. Tests within a test plan may be re-ordered, enabled or disabled, and unlimited different tests can be combined into a test plan. ScanExpress Runner can be used to develop a test sequence or test plan from various independent sub-tests. These sub-tests can then be executed sequentially as many times asspecified or continuously if desired. A sub-test can also program CPLDs and flash memories. For ISP, other formats, such as SVF, JAM, and STAPL, are also supported.To test the board depicted in Figure 2, the user must execute a test plan that consists of various test steps as shown in Figure 3.The first and most important test is the scan chain infrastructure integrity test. The scan chain must work correctly prior to proceeding to other tests and ISP. Following a successful test of the scan chain, the user can proceed to testing all the interconnectionsbetween the JTAG components. If the interconnect test fails, ScanExpress Runner displays a diagnostic screen that identifies the type of failure (such as stuck-at, Bridge, Open) and lists the failing nets and pins as shown in Figure 4. Once the interconnect test passes, including the testing of transparent components, it makes sense to continue testing the clusters and the memory devices. At this stage, the system is ready for in-system programming, which typically takes more time as compared to testing.Figure 3. ScanExpress Runner Main WindowFigure 4. ScanExpress Runner Diagnostics WindowDuring the design phase of a product, some JTAG vendors will provide design assistance in selecting JTAG-compliant components, work with the developers to ensure that the proper BSDL files are used, and provide advice in designing the product for testability.Applying JTAG for Production TestProduction testing, utilizing traditional In-Circuit Testers that do not have JTAG features installed, experience similar problems that the product developer had and more:∙Loss of physical access to fine pitch components, such as SMTs and BGAs, reduces bed-of-nails ICT fault isolation.∙Development of test fixtures for ICTs becomes longer and more expensive.∙Development of test procedures for ICTs becomes longer and more expensive due to more complex ICs.∙Designers are forced to bring out a large number of test points, which is in direct conflict with the goal to miniaturize the design.∙In-system programming is inherently slow, inefficient, and expensive if done with an ICT.∙Assembling boards with BGAs is difficult and subject to numerous defects, such as solder smearing. JTAG Embedded Functional TestRecently, a test methodology has been developed which combines the ease-of-use and low cost of boundary-scan with the coverage and security of traditional functional testing. This new technique, called JTAG Emulation Test (JET), lets engineers automatically develop PCB functional test that can be run at full speed., If the PCB has an on-board processor with a JTAG port (common, even if the processor doesn't support boundary-scan), JET and boundary-scan tests can be executed as part of the same test plan to provide extended fault coverage to further complement or replace ICT testing.Corelis ScanExpress JET™ provides JTAG embedded test for a wide range of processors. For more information about this technology and product, visit the ScanExpress JET product page.Production Test FlowFigure 5 shows different production flow configurations. The diagram shows two typical ways that JTAG is deployed:∙As a stand-alone application at a separate test station or testbench to test all theinterconnects and perform ISPof on-board flash and othermemories. JTAG embeddedfunctional test (JET) may beintegrated with boundary-scan.∙Integrated into the ICT system,where the JTAG controlhardware is embedded in theICT system and the boundary-scan (and possibly JET) softwareis a module called from the ICTsoftware system.In the first two cases, the test flow is sometimes augmented with a separate ICT stage after the JTAG-based testing is completed, although it is becoming more common for ICT to be skipped altogether or at least to be limited to analog or special purpose functional testing.Figure 5. Typical Production FlowsThe following are major benefits in using JTAG test and in-system programming in production:∙No need for test fixtures.∙Integrates product development, production test, and device programming in one tool/system.∙Engineering test and programming data is reused in Production.∙Fast test procedure development.∙Preproduction testing can start the next day when prototype is released to production.∙Dramatically reduces inventory management – no pre-programmed parts eliminates device handling and ESD damage.∙Eliminates or reduces ICT usage time – programming and screening.Production test is an obvious area in which the use of boundary-scan yields tremendous returns. Automatic test program generation and fault diagnostics using JTAG software products and the lack of expensive fixturing requirements can make the entire test process very economical. For products that contain edge connectors and digital interfaces that are not visible from the boundary-scan chain, JTAG vendors offer a family of boundary-scan controllable I/Os that provide a low cost alternative to expensive digital pin electronics.Field Service and InstallationThe role of JTAG does not end when a product ships. Periodic software and hardware updates can be performed remotely using the boundary-scan chain as a non-intrusive access mechanism. This allows flash updates and reprogramming of programmable logic, for example. Service centers that normally would not want to invest in special equipment to support a product now have an option of using a standard PC or laptop for JTAG testing. A simple PC-based JTAG controller can be used for all of the above tasks and also double as a fault diagnostic system, using the same test vectors that were developed during the design and production phase. This concept can be taken one step further by allowing an embedded processor access to the boundary-scan chain. This allows diagnostics and fault isolation to be performed by the embedded processor. The same diagnostic routines can be run as part of a power-on self-test procedure.JTAG Design-for-Test Basic ConsiderationsAs mentioned earlier in this article, the design for JTAG test guidelines are simple to understand and follow compared to other traditional test requirements. It is important to remember that JTAG testing is most successful when the design and test engineering teams work together to ensure that testability is "designed in" from the start. The boundary-scan chain is the most critical part of JTAG implementations. When that is properly implemented, improved testability inevitably follows. Below is a list of basic guidelines to observe when designing a JTAG-testable board:∙If there are programmable components in a chain, such as FPGAs, CPLDs, etc., group them together in the chain order and place the group at either end of the chain. It is recommended that you provide access to Test Data In(TDI) and Test Data Out (TDO) signals where the programmable group connects to the non-programmable devices.∙All parts in the boundary-scan chain should have 1149.1-compliant test access ports (TAPs).∙Use simple buffering for the Test Clock (TCK) and Test Mode Select (TMS) signals to simplify test considerations for the boundary-scan TAP. The TAP signals should be buffered to prevent clocking and drive problems.∙Group similar device families and have a single level converter interface between them, TCK, TMS, TDI, TDO, and system pins.∙TCK should be properly routed to prevent skew and noise problems.∙Use the standard JTAG connector on your board as depicted in Corelis documentation.∙Ensure that BSDL files are available for each JTAG component that is used on your board and that the files are validated.Design for interconnect testing requires board-level system understanding to ensure higher test coverage and elimination of signal level conflicts.∙Determine which JTAG components are on the board. Change as many non-JTAG components to IEEE 1149.1-compliant devices as possible in order to maximize test coverage.∙Check non-JTAG devices on the board and design disabling methods for the outputs of those devices in order to prevent signal level conflicts. Connect the enable pins of the conflicting devices to JTAG controllable outputs. Corelis tools will keep the enable/disable outputs at a fixed disabling value during the entire test.∙Ensure that your memory devices are surrounded by JTAG components. This will allow you to use a test program generator, such as ScanExpress TPG, to test the interconnects of the memory devices.∙Check the access to the non-boundary-scan clusters. Make sure that the clusters are surrounded by JTAG components. By surrounding the non-boundary-scan clusters with JTAG devices, the clusters can then be testedusing a JTAG test tool.∙If your design includes transparent components, such as series resistors or non-inverting buffers, your test coverage can be increased by testing through these components using ScanExpress TPG.∙Connect all I/Os to JTAG controllable devices. This will enable the use of JTAG, digital I/O module, such as the ScanIO-300LV, to test all your I/O pins, thus increasing test coverage.。

专业英语--电力电子方面AbsorberCircuit汲取电路AC/ACFrequencyConverter交交变频电路ACpowercontrol交流电力操纵ACPowerController交流调功电路ACPowerElectronicSwitch交流电力电子开关AcV oltageController交流调压电路AsynchronousModulation异步调制BakerClampingCircuit贝克箝位电路Bi-directionalTriodeThyristor双向晶闸管BipolarJunctionTransistor--BJT双极结型晶体管Boost-BuckChopper升落压斩波电路BoostChopper升压斩波电路BoostConverter升压变换器BridgeReversibleChopper桥式可逆斩波电路BuckChopper落压斩波电路BuckConverter落压变换器Commutation换流ConductionAngle导通角ConstantV oltageConstantFrequency--CVCF恒压恒频ContinuousConduction--CCM〔电流〕连续模式ControlCircuit操纵电路CukCircuitCUK斩波电路CurrentReversibleChopper电流可逆斩波电路CurrentSourceTypeInverter--CSTI电流〔源〕型逆变电路Cycloconvertor周波变流器DC-AC-DCConverter直交直电路DCChopping直流斩波DCChoppingCircuit直流斩波电路DC-DCConverter直流-直流变换器DeviceCommutation器件换流DirectCurrentControl直截了当电流操纵DiscontinuousConductionmode〔电流〕断续模式displacementfactor位移因数printedcircuit印制电路printedwiring印制线路printedboard印制板printedcircuitboard印制板电路printedwiringboard印制线路板printedcomponent印制元件printedcontact印制接点printedboardassembly印制板装配board板rigidprintedboard刚性印制板flexibleprintedcircuit挠性印制电路flexibleprintedwiring挠性印制线路flushprintedboard齐平印制板metalcoreprintedboard金属芯印制板metalbaseprintedboard金属基印制板mulit-wiringprintedboard多重布线印制板moldedcircuitboard模塑电路板discretewiringboard散线印制板microwireboard微线印制板buile-upprintedboard积层印制板surfacelaminarcircuit外表层合电路板B2itprintedboard埋进凸块连印制板chiponboard载芯片板buriedresistanceboard埋电阻板motherboard母板daughterboard子板backplane背板bareboard裸板copper-invar-copperboard键盘板夹心板dynamicflexboard动态挠性板staticflexboard静态挠性板break-awayplanel可断拼板cable电缆flexibleflatcable(FFC)挠性扁平电缆membraneswitch薄膜开关hybridcircuit混合电路thickfilm厚膜thickfilmcircuit厚膜电路thinfilm薄膜thinfilmhybridcircuit薄膜混合电路interconnection互连conductortraceline导线flushconductor齐平导线transmissionline传输线crossover跨交edge-boardcontact板边插头stiffener增强板substrate基底realestate基板面conductorside导线面componentside元件面solderside焊接面printing印制grid网格pattern图形conductivepattern导电图形non-conductivepattern非导电图形legend字符mark标志basematerial基材laminate层压板metal-cladbadematerial覆金属箔基材copper-cladlaminate(CCL)覆铜箔层压板compositelaminate复合层压板thinlaminate薄层压板basismaterial基体材料prepreg预浸材料bondingsheet粘结片preimpregnatedbondingsheer预浸粘结片epoxyglasssubstrate环氧玻璃基板masslaminationpanel预制内层覆箔板corematerial内层芯板bondinglayer粘结层filmadhesive粘结膜unsupportedadhesivefilm无支撑胶粘剂膜coverlayer(coverlay)覆盖层stiffenermaterial增强板材copper-cladsurface铜箔面foilremovalsurface往铜箔面uncladlaminatesurface层压板面basefilmsurface基膜面adhesivefaec胶粘剂面platefinish原始光洁面mattfinish粗面lengthwisedirection纵向crosswisedirection模向cuttosizepanel剪切板ultrathinlaminate超薄型层压板A-stageresinA阶树脂B-stageresinB阶树脂C-stageresinC阶树脂epoxyresin环氧树脂phenolicresin酚醛树脂polyesterresin聚酯树脂polyimideresin聚酰亚胺树脂bismaleimide-triazineresin双马来酰亚胺三嗪树脂acrylicresin丙烯酸树脂melamineformaldehyderesin三聚氰胺甲醛树脂polyfunctionalepoxyresin多官能环氧树脂brominatedepoxyresin溴化环氧树脂epoxynovolac环氧酚醛fluroresin氟树脂siliconeresin硅树脂silane硅烷polymer聚合物amorphouspolymer无定形聚合物crystallinepolamer结晶现象dimorphism双晶现象copolymer共聚物synthetic合成树脂thermosettingresin热固性树脂thermoplasticresin热塑性树脂photosensitiveresin感光性树脂epoxyvalue环氧值dicyandiamide双氰胺binder粘结剂adesive胶粘剂curingagent固化剂flameretardant阻燃剂opaquer遮光剂plasticizers增塑剂unsatuiatedpolyester不饱和聚酯polyester聚酯薄膜polyimidefilm(PI)聚酰亚胺薄膜polytetrafluoetylene(PTFE)聚四氟乙烯reinforcingmaterial增强材料glassfiber玻璃纤维E-glassfibreE玻璃纤维D-glassfibreD玻璃纤维S-glassfibreS玻璃纤维glassfabric玻璃布non-wovenfabric非织布glassmats玻璃纤维垫yarn纱线filament单丝strand绞股weftyarn纬纱warpyarn经纱denier但尼尔warp-wise经向threadcount织物经纬密度weavestructure织物组织plainstructure平纹组织greyfabric坏布wovenscrim稀松织物bowofweave弓纬endmissing断经mis-picks缺纬bias纬歪crease折痕waviness云织fisheye鱼眼featherlength毛圈长mark厚薄段split裂缝twistofyarn捻度sizecontent浸润剂含量sizeresidue浸润剂残留量finishlevel处理剂含量size浸润剂couplintagent偶联剂finishedfabric处理织物polyarmidefiber聚酰胺纤维aromaticpolyamidepaper聚芳酰胺纤维纸breakinglength断裂长heightofcapillaryrise吸水高度wetstrengthretention湿强度保持率whitenness白度ceramics陶瓷conductivefoil导电箔copperfoil铜箔rolledcopperfoil压延铜箔annealedcopperfoil退火铜箔thincopperfoil薄铜箔adhesivecoatedfoil涂胶铜箔resincoatedcopperfoil涂胶脂铜箔compositemetallicmaterial复合金属箔carrierfoil载体箔invar殷瓦foilprofile箔〔剖面〕轮廓shinyside光面matteside粗糙面treatedside处理面stainproofing防锈处理doubletreatedfoil双面处理铜箔shematicdiagram原理图logicdiagram逻辑图printedwirelayout印制线路布设masterdrawing布设总图computeraideddrawing计算机辅助制图computercontrolleddisplay计算机操纵显示placement布局routing布线layout布图设计rerouting重布simulation模拟logicsimulation逻辑模拟circitsimulation电路模拟timingsimulation时序模拟modularization模块化layouteffeciency布线完成率MDFdatabse机器描述格式数据库designdatabase设计数据库designorigin设计原点optimization(design)优化〔设计〕predominantaxis供设计优化坐标轴tableorigin表格原点mirroring镜像drivefile驱动文件intermediatefile中间文件manufacturingdocumentation制造文件queuesupportdatabase队列支撑数据库componentpositioning元件安置graphicsdispaly图形显示scalingfactor比例因子scanfilling扫描填充rectanglefilling矩形填充regionfilling填充域physicaldesign实体设计logicdesign逻辑设计logiccircuit逻辑电路hierarchicaldesign层次设计top-downdesign自顶向下设计bottom-updesign自底向上设计net线网digitzing数字化designrulechecking设计规那么检查router(CAD)走〔布〕线器netlist网络表subnet子线网objectivefunction目标函数postdesignprocessing(PDP)设计后处理interactivedrawingdesign交互式制图设计costmetrix费用矩阵engineeringdrawing工程图blockdiagram方块框图moze迷宫componentdensity元件密度travelingsalesmanproblem回售货员咨询题degreesfreedom自由度outgoingdegree进度incomingdegree出度manhattondistance曼哈顿距离euclideandistance欧几里德距离network网络array阵列segment段logic逻辑logicdesignautomation逻辑设计自动化separatedtime分线separatedlayer分层definitesequence定顺序conduction(track)导线〔通道〕conductorwidth导线〔体〕宽度conductorspacing导线距离conductorlayer导线层conductorline/space导线宽度/间距conductorlayerNo.1第一导线层roundpad圆形盘squarepad方形盘diamondpad菱形盘oblongpad长方形焊盘bulletpad子弹形盘teardroppad泪滴盘snowmanpad雪人盘V-shapedpadV形盘annularpad环形盘non-circularpad非圆形盘isolationpad隔离盘monfunctionalpad非功能连接盘offsetland偏置连接盘back-bardland腹〔背〕裸盘anchoringspaur盘址landpattern连接盘图形landgridarray连接盘网格阵列annularring孔环componenthole元件孔mountinghole安装孔supportedhole支撑孔unsupportedhole非支撑孔via导通孔platedthroughhole(PTH)镀通孔accesshole余隙孔blindvia(hole)盲孔buriedviahole埋孔buriedblindvia埋,盲孔anylayerinnerviahole任意层内部导通孔alldrilledhole全部钻孔toalinghole定位孔landlesshole无连接盘孔interstitialhole中间孔landlessviahole无连接盘导通孔pilothole引导孔terminalclearomeehole端接全隙孔dimensionedhole准尺寸孔via-in-pad在连接盘中导通孔holelocation孔位holedensity孔密度holepattern孔图drilldrawing钻孔图assemblydrawing装配图datumreferan参考基准1〕元件设备三绕组变压器:three-columntransformerThrClnTrans双绕组变压器:double-columntransformerDblClmnTrans 电容器:Capacitor并联电容器:shuntcapacitor电抗器:Reactor母线:Busbar输电线:TransmissionLine发电厂:powerplant断路器:Breaker刀闸(隔离开关):Isolator分接头:tap电动机:motor〔2〕状态参数有功:activepower无功:reactivepower电流:current容量:capacity电压:voltage档位:tapposition有功损耗:reactiveloss无功损耗:activeloss功率因数:power-factor功率:power功角:power-angle电压等级:voltagegrade空载损耗:no-loadloss铁损:ironloss铜损:copperloss空载电流:no-loadcurrent阻抗:impedance正序阻抗:positivesequenceimpedance负序阻抗:negativesequenceimpedance零序阻抗:zerosequenceimpedance电阻:resistor电抗:reactance电导:conductance电纳:susceptance无功负载:reactiveload或者QLoad有功负载:activeloadPLoad远测:YC(telemetering)远信:YX励磁电流(转子电流):magnetizingcurrent定子:stator功角:power-angle上限:upperlimit下限:lowerlimit并列的:apposable高压:highvoltage低压:lowvoltage中压:middlevoltage电力系统powersystem发电机generator励磁excitation励磁器excitor电压voltage电流current母线bus变压器transformer升压变压器step-uptransformer高压侧highside输电系统powertransmissionsystem输电线transmissionline固定串联电容补偿fixedseriescapacitorcompensation 稳定stability电压稳定voltagestability功角稳定anglestability暂态稳定transientstability电厂powerplant能量输送powertransfer交流AC装机容量installedcapacity电网powersystem落点droppoint开关站switchstation双回同杆并架double-circuitlinesonthesametower 变电站transformersubstation补偿度degreeofcompensation高抗highvoltageshuntreactor无功补偿reactivepowercompensation故障fault调节regulation裕度magin三相故障threephasefault故障切除时刻faultclearingtime极限切除时刻criticalclearingtime切机generatortriping高顶值highlimitedvalue强行励磁reinforcedexcitation线路补偿器LDC(linedropcompensation)机端generatorterminal静态static(state)动态dynamic(state)单机无穷大系统onemachine-infinitybussystem 机端电压操纵A VR电抗reactance电阻resistance功角powerangle有功〔功率〕activepower无功〔功率〕reactivepower功率因数powerfactor无功电流reactivecurrent下落特性droopcharacteristics歪率slope额定rating变比ratio参考值referencevalue电压互感器PT分接头tap下落率drooprate仿真分析simulationanalysis传递函数transferfunction框图blockdiagram受端receive-side裕度margin同步synchronization失往同步lossofsynchronization阻尼damping摇摆swing保卫断路器circuitbreaker电阻:resistance电抗:reactance阻抗:impedance电导:conductance电纳:susceptance导纳:admittance电感:inductance电容:capacitance金属化聚丙烯膜电容\metallizationpolypropylenefilmcapacitor\插件磁芯电感\magneticcoreinductance\涤纶电容\terylenecapacity\接地片\groundlug\碳膜电阻\carbonfilmresistor\瓷片电容\ceramicdisccapacitor\莲花插座\lotussocket\贴片磁珠\coatedmageticbead贴片三极管\coateddynatron话题:专业词汇1backplane背板2Bandgapvoltagereference带隙电压参考3benchtopsupply工作台电源4BlockDiagram方块图5BodePlot波特图6Bootstrap自举7BottomFETBottomFET8bucketcapcitor桶形电容9chassis机架10Combi-senseCombi-sense11constantcurrentsource恒流源12CoreSataration铁芯饱和13crossoverfrequency交叉频率14currentripple纹波电流15CyclebyCycle逐周期16cycleskipping周期跳步17DeadTime死区时刻18DIETemperature核心温度19Disable非使能,无效,禁用,关断20dominantpole主极点21Enable使能,有效,启用22ESDRatingESD额定值23EvaluationBoard评估板24Exceedingthespecificationsbelowmayresultinpermanentdamagetothedevice,ordevicemalfunctio n.OperationoutsideoftheparametersspecifiedintheElectricalCharacteristicssectionisnotimplied.超过下面的规格使用可能引起永久的设备损害或设备故障。

专利名称:PERFORMANCE-AWARE AND RELIABILITY-AWARE DATA PLACEMENT FOR N-LEVELHETEROGENEOUS MEMORY SYSTEMS发明人:Manish Gupta,David A. Roberts,Mitesh R.Meswani,Vilas Sridharan,StevenRaasch,Daniel I. Lowell申请号:US15331270申请日:20161021公开号:US20170277441A1公开日:20170928专利内容由知识产权出版社提供专利附图:摘要:Techniques for selecting one of a plurality of heterogeneous memory units for placement of blocks of data (e.g., memory pages), based on both reliability and performance, are disclosed. A “cost” for each data block/memory unit combination is determined, based on the frequency of access of the data block, the latency of the memory unit, and, optionally, an architectural vulnerability factor (which represents the level of exposure of a particular memory data value to memory faults such as bit flips). A memory unit is selected for the data block for which the determined cost is the lowest, out of all memory units considered, and the data block is placed into that memory unit.申请人:Advanced Micro Devices, Inc.地址:Sunnyvale CA US国籍:US更多信息请下载全文后查看。

[检索词汇] altium designer 中英文技术词汇对照PCB AD常用术语翻译对译protel 单词Accept 接受Accuracy 精确度准确度Activate 激活活动启动Add 添加Address 地址Advance 高级Aide 助手辅助Align 排列对齐Alpha 开端Analog 模拟的Analyzer 分析器测定仪Angle 角度观点Annotate 注解Aperture 孔径光圈Applocation 应用程序Approximation 接近近似值Arc 圆弧弧度Architectur结构体构造Array 阵列数组Ascend 登高上升Assembly 集合装配Associate关联的辅助的Asynchronous 异步的Automatical 自动的Access 存取通道接近Action 行动作用Active 积极的活泼的Adder 加法器Administration 管理员管理器Aggressor 干扰源入侵者Alias 别名化名混淆Allow 允许Always 总是永远Analysis 分析研究Animation 动画Any 任意的Applicable 可应用的适用的Apply 应用Arbiter 仲裁器Architect 设计者制造者Area 面积范围Arrange安排排列调整Arrow 箭形Assembler 装配器汇编Assign 分配分派指定Astable 非稳态的多谐振荡的Attempt 尝试Available 有效的有用的Backup 备用Bar 标签Base 基极基础基地Batch 批处理批量Begin 开始创建Behavior 行为举止态度Bell 铃钟Between 两者之间Bidir 允许双向Bidirectional 双向性Bill 清单Binary 二进制二元的Bistable 双稳Bit 位Bitmap 位图Black 黑色黑色的Blind 盲孔Blip 标志信号Block 框栏隔阻Board 板子牌子委员会Body 物体主干主体Boolean 布尔值Border 边线Bottom 底部Bounce 反弹抖动Breakpoint 中断点断点Broken 破裂的损坏的Browse 浏览Buffer 缓冲器Build 构建Bullet 锥形体Bury 埋藏Bus 总线Butterfly 蝶形Button 按钮Bypass 省略Byte 字节Cable 电缆Calculation 计算估计CAM(computer aided manufacturing)计算机辅助制造Cancel 作废删除Capacitor 电容Caption 标题Capture 捕获收集记录Case 实情案例Category 类目范畴部属Cathode 阴极Center 中心Centimeter 厘米Chain 链Change 改变Channel 通道Charge 充电指责指示Check 检测Chart 制成图表Child 子女Chip 集成芯片Circuit 电路Circular 圆环弧形Class 阶层等级Cleanup 清扫工作Clear 清除清零Clearance 清除余地间隙Click 单击点击Clipboard 剪切板Clock 时钟Close 关闭结束Closure 关闭闭幕Code 编码代码Collector 集电极Color 彩色着色Colour 颜色Column 圆柱纵列栏目Combination 组合Comma 逗号Command 命令Comparator 比较器Community 社区群落Compilation 编辑物Compatible 兼容的和谐的Component 元器件组成成分Compile 编辑收集汇编Computer 计算机Composite 合成的复合的综合的Condition 条件Concurrent 并发事件同行Configure 配置Confidence 置信度自信信赖Conflict 冲突Confirm 确认证实Connector 连接端Connectivity 连线Constraint 约束限制因素Console 主控台表盘托架Consumer 用户使用者Construction 构造Continue 继续延伸Contract 缩短Control 控制Convert 转化转变Coordinate 坐标Copper 铜Copy 拷贝复制Core 核Cord 绳线索Corner 角落拐角Corporation 公司企业法人Counter 计数器Courtyard 天井庭院Create 创建Cross 十字符号混合Crosspoint 插入测试点Crosstalk 串扰CRTCrystal 晶体Current 电流当前的流行的Cursor 光标游标指示器Custom 惯例Customer 用户客户Cutout 切出划出挖空Cycle 周期Comment 注释发表评论说明书Dashed 下划线Data 数字数据Databse 数据库资料库Date 日期Daughtrer 子系子插件派生Debug 排错调试Dead 死的Decimal 十进制小数Decade 十进制十年Default 默认值缺省值弃权Decoder 译码器Definition 定义式限定分辨率Define 定义下定义Delay 延时Degree 度等级Demo 演绎演示版Delete 删除Demote 降级降低Deny 否认Description 描述Designator 标识指示者Designer 设计师Destination 目标目的Detail 细节零件Device 装置设备图样器件Diagram 示意图Dialog 对话Diagonal 对角线Difference 差异查分差额Diamond 菱形钻石Digital 数字的Different 不同的Dimension 尺度Dim 朦胧暗淡Direct 指示指令Diode 二极管Disable 无能无效无用Director 指南指导咨询Disk 圆盘Discharge 放点排出释放Distribution 分配分布分发Display 显示Dock 停放连接接驳Divider 分配分割Dot 小点虚线点缀Donut 环形Download 下载Down 下降Draw 绘制描写冲压成型Drag 拖拽Drop 下拉滴落遗漏Drill 钻Duplicate 复制副本Dual 双数的两倍Duty 占空比Edge 边缘Edit 编辑Efficient 高效的有能力的Electrical 电气的电学的电力的Ellipse 椭圆Embed 潜入插入Emitter 射极Emulate 效法Enable 使能激活有效Encoder 编码器End 结束Engineer 设计建造工程师Enter 进入参加Entity 实体Entry 入口Enum 列举型别Error 错误Evaluation 评价评估鉴定Example 例子Excel 胜过优秀突出Execute 执行实施签署Exist 存在的现有的Expand 扩大推广展开Expansion 扩大扩展扩张Expiry 终止期满Explode 爆炸分解Exponent 指数Export 输出出品Explore 探究查询Extra 额外的附加的External 外部的外面的外形Extrude 压制突出Extract 提取摘录Fabrication 制造Frequency 频率Failure 无效毁坏的Function 函数功能False 虚假的伪造的Fail 失败不足Fanout 扇出Fall 下降降落落差Favorite 最爱的(sthsb)Famale 凹的阴的Figure 图形Fatal 致命的Fill 填充Field 现场域范围Filter 过滤器File 文件Find 建立发现Film 胶片First 第一首先Finally 最终的Flash 闪光闪烁曝光Finish 完成结束Flatten 弄平弄直Fit 适合相配Flip-flop 触发器Flat 平的平坦的Floorplan 层平面图Flip 倒转Focus 集中聚焦Float 发行Footprint 封装Flow 流动源自Format 格式Folder 文件夹折叠Formula 公式Force 强制FPGAForum 讨论论坛会Frame 框图塑造Free 自由Framework 架构结构Form 从··起由于Gate 门Generate 产生导致造成Graphic 图形green 绿色Grid 栅格Ground 地面基础Group 组类集聚Guide 引导指南手册Hard 坚硬的困难的Hardware 硬件设备五金Harmonic 谐波谐振Harness 束Hatch 策划画影线舱口Hazard 冒险Hazy 模糊的混浊的Height 高度Help 帮助Hexadecimal 十六进制Hide 隐藏Hierarchy 体系分层系列High 高的高级的高尚的Hint 暗示History 历史记录Horizontal 水平横向Hug 拥抱紧靠Ideal 理想的Identical 相同的相等的恒等的Identifier 识别符Identify 识别标记IDF(integrated data file) 综合资料文件IEEE 电气电子工程师协会Impedance 阻抗Ignore 忽略Import 输入导入Imperial 英制的Include 包含Incident 事变入射Index 索引Increment 增量Inductor 电感Indication 指示表示Information 信息Industry 工业企业产业行业Innovation 创新Initial 最初的开始的Insert 插入嵌入添Input 输入Insight 洞察顿悟Inside 内部的里面的Install 安装Inspector 检查视察Instrument 仪器Instance 实例Integrate 积分的集成使完成使结合Integer 整数Interactive 互动的交互的Integrity 完整的Interface 接口界面Interconnect 互联器Internet 因特网Internal 内部的IPC(industry processcontrol)工业过程控制Interrupt 打断中断Isolate 隔离Island 孤岛岛屿Item 项目条款ISP(In-systemprogrammable)在线编程JTAG(joint test actiongroup) 联合测试行为组Job 工作职业Joint 联合的Jump 跳跃Junctions 结点接点Keep 保持Key 关键钥匙Kind 种类Knowledge 知识Label 标签商标Lattice 晶格点阵Landscape 横向Layer 层Latch 锁存器锁扣LCD(liquid crystaldisplay) 液晶显示器Launch 发射投掷出版LED(light emitting diode)发光二级管Layout 布置布局Legacy 老化遗留下Learn 学习学会认知Length 长度Left 左边向左Less 较少的Legend 图例图注Library 库图书馆Lens 镜Line 线条线路Level 水平Link 链接连接License 注册Linear 线性的直线的一次的Liquid 液体流体不稳的Live 激活Localize 本地局部本地化定位Locate 把··设置在位置场所Lock 锁定Log 记录Logarithmic 对数的Logic 逻辑逻辑学Logical 逻辑的合理的Login 登录Lookup 查找查阅Loop 循环环状物Low 低的矮的Lot 地段许多Laboratory 实验室LPM(library of parameter modules) 参数化模块库Language 语言用语术语Machine 机器机械Multivibrator 多谐振荡器Maker 制造者Magnitude 幅值强度量值Manage 管理操纵Male 阳的Manual 手册手动的Manager 管理者经理主任Map 图Manufacture 制造制作加工Match 匹配比赛Mask 表面掩膜屏蔽Matrix 矩阵混合物Material 材料物质Maximum 最大值最大量Maximize 增加扩大Measure 测量Meal 粉状物膳食Medium 中间的媒介Mechanical 机械的Membership 会员资格Mega 许多非常强大Memory 存储器内存Menu 菜单Merge 合并Message 信息Meter 米(长度单位)Metric 公制的Microsoft 微软Millimeter 毫米Minimum 最小值Miscellaneous 混合杂项多样Miser 钻探机Miss 损失差错遗漏Miter 斜接斜角Mix 混合结合杂交Mode 形状方式风格Model 模型模特型号Modify 更改修饰Module 模块组件单元Moire 网纹纹波龟纹Mold 模块模型Monitor 监控Monostable 单稳状态Moor 固定系住Mount 安装Move 移动Mult 多种多元多路Magnify 放大Name 名称Nano 十亿分之一纳Navigator 领航导航Neck 领口瓶颈Negative 负的Net 网络Netlable 网络标号Netlist 网络表New 新的Next 下一步再Nexus 关系Node 结点波峰Noise 噪音None 忽略一个也没Normal 正常的常规的Number 数字号码数量Object 物体目标Octagon 八边形Octal 八进制Octave 八行八度八位ODBC(ovject database connectivity) 开放数据库互联Ohm 欧姆(电阻单位)OLE DB(object lin king and embedding databse) 目标链接及嵌入式数据库Online 在线Opcode 运算码Openbus 开放总线公共总线Open 开放的公开的Operate 工作运转营业Operand 操作数运算域Optimizer 优化程序优化器Operator 运算符Orange 橙子桔子Option 选择选项Orientation 取向Order 顺序Original 原本的最初的Origin 起源原点Outline 外形略述概括Orthogonal 直角的正交的Outside 外部的外观Output 输出Overlay 覆盖层Overall 全部的Owner 物主所有者Overshoot 过冲Package 封装包装Pad 焊盘基座垫料Page 页码翻阅Pair 成对成双Palette 调色盘选盘控制板Panel 面板画板嵌镶板Parallel 并行并联Parameter 参数系数因数Parent 父系Parsing 部析Part 部件Passive 无源的被动的Paste 助焊贴敷铜Path 路径轨迹PCBPeak 峰值Peripheral 周边的外围的Permission 同意Persistent 坚持不懈固执的Physical 物理的Pickbox 点选框取景框Pickup 拾取收集Pin 引脚管脚Place 放置Placement 布局Plane 平板平面飞机Plugin 插件栓Point 点Plus 十字记号加号Polygon 多边形多角形Pole 极极地Popup 弹出Polyline 折线Portable 可移植的移动的Port 端口Position 位置Portrait 竖向纵向Postpone 延时搁置Positive 正的Power 电源功率Pour 倾泻倒灌Preference 偏好优先Predefined 预先定义Preliminary 预备的初步语言的Prefix 前缀字首Preserve 保护保藏维护Prepreg 预浸料半固化品Preview 预览预习排练Preset 置数Primary 主要的Previous 以前的早先的Print 打印Primitive 原始的纯朴的Priority 优先级优先权Printout 打印输出Process 进程步骤Probe 探测调查Profile 外形轮廓部面Processor 处理器加工者Program 程序设计Programmable 可编程的Project 工程项目Proper 适当的恰当的Promote 促进创办Provider 提供者供应商Property 性质特性Pull 拉牵拖PSD(programmable system device) 可编程的系统部件Pulse 脉冲跳动Pullback 障碍阻扰拉回Push 推挤推进拓展Quality 质量特性Query 疑问质问问号Quiet 静态的安静的Radix 根基数RAMRange 级别排行类别Raster 光栅屏面Ray 射线光线闪现Rebuild 重建重构Recent 最近的Record 记录经历Rectangle 矩形长方形Rectangular 矩形的成直角的Red 红色的Reference 参考基准Reflect 反射Region 区域地带Register 寄存器Remove 删除移除Repeat 重复Report 报告Require 需要需求Reserve 储备保存Reset 复位置零Reshape 改造矫形变形Resistance 电阻值Resistor 店主Resource 资源Restore 恢复还原返回Restrict 限制约束Result 结果导致Retrieve 取回恢复Revision 修订本校正版Right 右的右方Ring 铃声环形物Rise 上升起立增强ROMRoom 房间空间位置Root 根根源本质Rotation 旋转自转Round圆的Routing 走线布线Row 成形排列Rule 规则Run 运转流行的趋势Server 服务服务器Same 相同的同样的Sans 没有无Save 保存Scalar 标量数量Scale 刻度调节Schematic 图表示意图Scope 范围域Score 成绩计分Script 脚本Scroll 卷动Search 搜索Secondary 次要的从属的Section 部分片断Seed 原因种子Select 挑选选拔Separate 分离区别标识Sequential 有顺序的相继的Serial 串行的系列的序列的Serif 细体字Series 串联连贯成套Session 学期期间Service 服务检修劳务Shader 材质Set 设置置位Shadow 阴影着色Shape 造型形状Share 共享Sheet 图纸方块Shelve 搁置暂缓考虑Shift 移动变换Shortcut 快捷方式Show 显示展示Signal 信号Sign 签署有符号的征兆标志Silent 沉默的Silkscreen 丝印层Similar 相似的类似的Simple 简单的朴实的Simulation 仿真Sitemap 网站地图网站导航Situs 地点位置Silce 切片薄片Slider 滑块滑动Slot 槽Small 小的细的微的Smart 智慧灵气Snap 跳转突然折断Snippet 片断摘录Software 软件Solder 焊接Solid 实心的固体的坚固的Sort 分类Source 电源根源Space 间隔间隙Speaker 扬声器Special 特殊的Specification 说明书明细表Specify 具体指定详细指明列入清单Speed 速度Split 分离Spreadsheet 电子表格试算表Square 方形正直的Stack 堆积层叠Standalone 单板机Standard 标准规范Standoff 支架平淡Start 起始Starve 不足饥饿State 状态形势州Static 静止的Station 平台地位Status 地位资格身份Step 步进踏步步骤Stimulus 激励促进刺激Stop 停止Storage 储存器Strategy 策略方案战略String 字符串串条弦Structure 构成结构组织Style 风格文体作风Subversion 颠覆Summary 摘要概括总结Support 支持Suppress 压制抑制阻止Surface 表面Suspend 暂停挂起终止Swap 交换Sweep 扫描环视Switch 开关Symbol 符号Synchronous 同步的Syntax 语法Synthesis 综合合成System 系统Table 表格True 真实Target 目标对象指标Tail 末尾尾部Technology 技术工艺Teardrop 泪滴Template 样板Temperature 温度气温Terminal 极限的末端的端子Tenting 掩盖遮掩Terminator 终端负载Terminate 终结终止Text 文本正本Test 测试Themselves 他们自己TFT 触摸屏显示器Thruhole 通孔Thermal 热的热量的Time 时间Tile 铺排Timer 定时器Timebase 时基Toggle 切换开关双稳Timing 时序定时Tool 工具Tolerance 公差容限容差Top 顶部Toolbar 工具栏工具条Total 总体的合计为Topic 题目Track 轨迹Touchscreen 触摸屏Train 培训Trail 拖Transfer 传递Trance 恍惚Transistor 三极管晶体管Transient 暂态瞬态Transparency 透明度Translate 转变翻译Triangle 三角Transport 传输Trigonometry 三角法Trigger 触发器启动引起Tube 真空管电子管试管Tune 调谐曲调协调Tutorial 指导导师Type 类型Unassign 未定义Uncouple 解耦松开Undershoot 下冲负尖峰Undo 取消还原Uniform 相同的一致的单调的Unique 独特的唯一的Unit 单位Universal 普遍的全体的宇宙的Unspecified 不规定不确定Up 向上Update 更新Usage 用法习惯处理Utility 实用的通用的Use 实用Valid 有效的确实的合法的Validate 使生效Validation 确认验证Value 数值价值价格评价Variant 变化派生Vector 矢量向量Vendor 供应商卖主Version 版本翻译Vertical 垂直纵向Vertex 顶点制高点极点Very 很非常甚至VHDLVia 经由过孔Victim 被干扰受害者Video 视频录像View 查看Violation 违犯冲突Virtual 实质上的虚拟的Visible 可视的Voltage 电压Wait 等待延缓Walkaround 环绕步行栈桥Warn 警告提醒预告Wave 波动起伏挥动Waveform 波形图Where 地点在哪里Wide 宽的广泛的Window 窗口Width 宽度幅度带宽Wizard 向导精灵Wire 导线Workspace 工作区工作空间Worksheet 工作表单WOSA(windows open services architecture) 开放服务结构Worst 最差的最坏的Zero 零Zone 地段区域Zoom 缩放陡升。

TRIOS Software OverviewTRIOS is TA Instruments’ state-of-the-art software package that uses cutting-edge technology for instrument control, data collection, and data analysis for thermal analysis and rheology instruments. The intuitive user interface allows you to simply and effectively program experiments and move easily between processing experiments and viewing and analyzing data. TRIOS software delivers a whole new experiment experience.•Easy organization and data fi le management •A unique fi le-naming system allows for effortless organization of data fi les •The History View and File Manager offer simple data fi le location •Compatibility with the latest Windows Operating System platforms •M aximum fl exibility •Instrument control and data analysis via any networked computer •Confi gurable for multiple monitors •Ability to control multiple instruments at once •Remote data analysis•Seamless integration between instrument control and data analysis•Easy data export in a variety of output formats, including XML, Word, Excel, and PDF •Simple graph formatting using The Ribbon •Customization of the displayThe TRIOS software is supported by a full range of services, including onsite training, customer service that is only a phone call away, and easy-to-use, easy-to-understand online help. All of these items refl ect TA Instruments’ commitment to providing thermal analysis and rheology products and related services that deliver maximum value for your investment.TA InstrumentsWhat’s New in TRIOS SoftwareWhat’s New in TRIOS Software V3.1TRIOS software is now better than ever with increased stabilization and key fi xes and enhancements, including User Interface changes that will make your TRIOS experience exceptional. The next generation of instrument control and data collection and analysis, TRIOS V3.1 is more effi cient and intuitive, allowing you to work faster and easier.General TRIOS EnhancementsLimited-Bandwidth Download OptionYou can now download TRIOS faster than ever by installing a version of TRIOS that does not include TRIOS Online Help.Ribbon ChangesThe Ribbon has been simplifi ed with the Home and View tabs consolidated into a single Experiment tab, removing redundancy and unused functionality.Previous Home tab:The File Manager button no longer exists. The ability to close the File Manager was removed, and overlay and analysis document creation is now only available from the File M anager right-click menu. New analysis is available from the Analysis tab. The Geometry information is now available from the Experiment tab. When a geometry is attached to the instrument, you can select the installed geometry from the list of geometry fi les previously created on the system. If your geometry does not appear in the list, clicking Add New Geometry will launch the New Geometry wizard. Editing a geometry can now only be done from the File Manager’s Geometries pane or on the geometry Experiment node.Previous View tab:Document Views functionality (creating a new spreadsheet and graph) as well as Layout functionality (saving and loading fi les) is now located solely in the File Manager. Switch Documents and Views functionality was removed. Access to the Properties panel now only exists from the right-click menu.New Legend User InterfaceUse the Legend menu to make your Legend customizations in one place. With one click of the mouse, you can select the desired Entry Type, choose what items you want to appear in the legend, modify text color and title justifi cation, and turn on/off the Legend title.Additionally, when editing the Legend directly from the graph, use the Quick Format option for editing text that automatically displays when you select an item in the Legend. From the Quick Format box, you canchange the font face, size, and style.Previous Home tabPrevious View tabNew Legend User InterfaceQuick Format optionQuick Format optionNew Curves User InterfaceFormatting curves on a graph is now easier and more intuitive with the introduction of the Curves Format dialog box.•Choose to format your curves Automatically or Manually. Use the Automatic Formatting option to apply your customizations based on the fi le, step, and/or variable, or use the Manual Formatting option to apply your customizations per curve. •Use the palette to specify the order of the colors, symbols, line styles, and extra symbols used on your curves. •Additional formatting options include setting the line thickness, symbol size, and symbol density, and adding extra symbols to the curve •Set the style selection with the use of Quick Styles so that you can easily and quickly apply previously defi ned formatting to your curvesRHEOLOGYARES-G2 EnhancementsOrthogonal Superposition (OSP) FeatureThe Orthogonal Superposition (OSP) feature was added for the ARES-G2. In the OSP mode, the normal force transducer operates as an actuator applying a small sinusoidal linear deformation to the sample while recording the force at the same time. Instead of holding the transducer shaft at a fi xed position, the shaft can now be periodically oscillated in the vertical direction at small amplitudes.The major applications of the OSP mode include: •The superposition of a small strain oscillatory deformation normal to the direction of steady shear fl ow (Orthogonal Superposition) •Oscillation testing in the two orthogonal directions at the same frequency (2D-SAOS)A new group of test modes has been created for Orthogonal oscillation. These test modes are only available for the Orthogonal double wall concentric cylinder, Parallel plate, and Orthogonal torsion fi xture. This feature requires an ARES-G2 with Serial Number 4010-0383 or higher or an earlier version that has been upgraded together with appropriate geometries.DMA ModeThe ARES-G2 DM A feature is designed to allow geometries such as tension/compression and bending to be used with the instrument. DM A testing uses the standard oscillation test modes; when one of these geometries (Three point bending, Mixed bending, Clamped bending, or Linear tension) is selected, the mode of deformation is changed from shear to linear, with the appropriate set of variables. This feature is limited to oscillation tests only since that is the only motor mode available.This feature requires an ARES-G2 with Serial Number 4010-0383 or higher or an earlier version that has been upgraded together withappropriate geometries.Curves User InterfaceOrthogonal Superposition (OSP) FeatureProportional Axial Force ControlAxial force control on an ARES-G2 now has the ability to adjust the commanded force level to follow changes in sample stiffness. Typically this is used to decrease the axial force on a sample as it softens to avoid issues with samples being squashed or stretched too much as they soften. This is done by taking a reference value for the sample stiffness at the start of the test, and using the ratio of the reference stiffness and the current sample stiffness to adjust the commanded axial force.This option is enabled by selecting Compensate for stiffness changes. The compensation scaling factor is a value between 0.0 and 1.0 which controls the relationship between the changes in stiffness and the axial force changes. A value of 0.0 results in a constant force, and a value of 1.0 result in the axial force being a linear function of the stiffness ratio.Motor Control PanelThe ARES-G2 motor control panel and Real time variable signal list were updated to allow for better control of sample displacement and strain by using relative rather than absolute positioning.There are now separate signals for motor position (angular offset relative to encoder home position), and displacement (a running change in displacement since a tare in the DSP). The reported strain signal is now based on the geometry strain constant and the measured displacement.Button changes:•Zerodisplacement: Used to zero the displacement signal •Go to home position: Goes to the encoder zero position•Move to orientation angle: M oves to the geometry alignment angle. Enabled only if the active geometry has an alignment angle (i.e. ARES-G2 DMA fi xture)NOTE: The Move to orientation angle has been added to allow thecorrect positioning of the bending and the tension fi xtures for DM A testing after installing the geometry. The orientation angle is calibrated for every geometry and stored with the geometry parameters.ARES-G2 Phase CalibrationThe phase angle calibration code has been updated to use a hermite spline rather than a polynomial fi t to the phase error. This new phase angle correction provides a better fi t at lower frequencies than the polynomial fi t.When the analysis code is invoked, it checks the instrument fi rmware to see if the hermite spline correction is supported. If supported, it uses the new analysis. If this correction is not supported, it reverts back to the older polynomial implementation.The new form shows the user-adjustable number of terms used in the spline, as well as the correction coeffi cients between the measured data and the corrected data for both the phase and amplitude corrections. It also shows the agreement between the calibration data and the spline coeffi cients that are currently loaded in the instrument. An overlay can be created to show this graphically, if desired, by looking at the “Source data” and “Interpolated data” zones.Motor Control PanelARES-G2 Phase Calibration Compensate for stiffness changesARES-G2 Procedure ChangeIn order to repeat the same step in a procedure, the step has to be entered multiple times. With the new repeat function, a single step can be repeated multiple times. In addition some key test parameters can be changed during the repeat runs; for example the temperature can be incremented by a certain value at each repeat run.ARES-G2 Motor BoostTRIOS V3.1 now supports a second power amplifi er to double the motor power output. T his feature allows applying larger strains on high viscosity materials such as rubber compounds. No user interface changes are associated with this feature. TRIOS automatically recognizes when a second power amplifi er is connected and confi gures itself.Electro Rheology Conditioning BlockThe electro rheology conditioning block was updated to allow for voltage ramps and disconnection of the power amplifi er for a better “zero voltage” point.Three different types of voltage ramps are available: •A simple ramp from an initial voltage from a fi nal voltage •A ramp and hold profi le where the voltage is ramped from an initial value to a fi nal value, and the fi nal value is maintained for specifi ed period of time •A double ramp where the voltage is ramped from an initial value to a fi nal value, then back to the initial value The zero voltage mode sets what happens when a zero voltage level is commanded: Command zero volts uses the function generator to commanded a zero volt level into the power amplifi er, Disable amplifi er uses the ARES-G2 enable relay to open the HV circuit.ARES-G2 and RSA-G2 Enhancements Geometry CalibrationsARES-G2/RSA-G2 geometry calibrations were updated to match the method used by DHR/AR rheometers. Previously, geometry-specifi c calibrations where performed by using the Calibration pane in the TRIOS File M anager, and then applying the resulting calibration parameters to the active geometry. The update includes a Calibrations tab that was added to the geometry document, which shows the current calibration value and the date the calibration was performed, as well as hosts a control panel that can be used to run the geometry calibration in place.The Calibration panels displayed depend on the specifi cs of thegeometry.ARES-G2 Procedure ChangeGeometry CalibrationsARES-G2 Procedure ChangeARES-G2 and DHR EnhancementsTribo-Rheometry Accessory for ARES-G2 and DHRThe Tribo-rheometry option for the ARES-G2 and DHR is now available. Tribology test procedures are used to measure the friction coeffi cient, CoF, as a function of the sliding speed under dry and lubricated conditions between any two substrates in contact. Applications range from biological, personal products like creams and lotions, to automotive components and lubrication in machinery design.Tribology tests can only be used with the special Tribo-rheometry geometries. The tribo-rheometry geometry is supported in the following test modes:•Flow Sweep, which is converted to a “Tribology Sweep” when executed.•Transient fl ow steps (Step Rate, Flow Rate, Flow Temperature ramp), which are converted to Tribology Steps when executing.Procedure templates for common Tribology tests can be loaded from the template folder.The modular Tribo-Rheometry Accessory can be confi gured with 4 different geometries (Ring on Plate, Ball on Three Plates, Three Balls on Plate, and Ball on Three Balls), offering a range of contact profi les that are compatible with ARES-G2 FCO and APS or the DHR ETC and Stepped Disposable Peltier Plate.DHR/AR Enhancements•Support for DHR Optics Plate Accessory, Building M aterial Cell, and Bayonet Peltier Plate•DHR Pressure Cell•Support for vane and starch rotor added•Calibration page correctly refl ects mapping status•Resetting of geometry gap fi xed•M ap only applied during test to avoid over speed error when magnetic coupling not engaged•DHR Gap Compensation Calibration: Now allows calibrations to be performed from high to low temperatures•DHR zero gap: Deceleration added to existing standard and axial force modes•DHR Flow Sweep: Scaled time average option fi xed•Concentric cylinder•The end effect fi eld can be changed in a results fi le which will force a recalculation of the data•Rheology Advantage fi les now load with the end effect set to 1•Rheology Advantage fi les measured with ver.1 Double Gap load with the correct immersed heightRheology Analysis•Spriggs and Oldroyd models for oscillation data that were previously available in Rheology Advantage are now available in TRIOSV3.1•Carreau-Yasuda model for fl ow data added•Arrhenius model improved with better starting conditionsTemplate folderNew geometryRheology AnalysisProcedure templateTHERMAL ANALYSISDiscovery TGA EnhancementsModulated TGA (MTGA) SupportM odulated TGA (M TGA) is now supported by TRIOS software V3.1. This option, used with the Discovery TGA, is used to study the same decomposition or volatilization transitions as conventional TGA, plus provides new information that permits unique insights into the behavior of the weight loss reaction — specifi cally, obtaining kinetic information about one or more weight losses, in a shorter period of time than the multiple heating rate approach. M TGA also provides continuous measured values for activation energy throughout the weight loss reaction, not just at specifi c reaction levels.M odulated TGA experiments can be run as either Standard or High Resolution procedures.Discovery DSC Enhancements•To ensure the safety of the user, the Gas 1 selection for the Discovery DSC can now be used for Nitrogen only.INSTALLING TRIOS SOFTW AREFor instructions on installing TRIOS software, refer to the Installing TRIOS Software instructions.ADDITIONAL RESOURCESA number of additional resources are available to you. For assistance with the TRIOS software, fi rst consult the Online Help.For immediate assistance contact the TA Instruments Hotline at +1 302-427-4000 from 8:00 am to 4:30 pm EST.For email support, please send your question to one of the following:t*********************************************************************************************************PREVIOUS WHAT’S NEW DOCUMENTSFor Previous What’s New in TRIOS Software documents, click here.TA INSTRUMENTS OFFICESFor information on our latest products, contact information, and more, see our web site at:TA Instruments — Waters LLCCorporate Headquarters159 Lukens DriveNew Castle, DE 19720USATelephone: 302-427-4000Fax: 302-427-4001Email: **********************。