Data Mining: Translated Foreign-Literature References


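Dalian University of Technology
Undergraduate Foreign-Literature Translation

Data Mining for Customer Service Support

School (Department): School of Software
Major: Software Engineering
Student name: XXX
Student ID: xxx
Supervisor: XXX
Date completed: 2010-3-20

Abstract

In the traditional customer service support of a manufacturing environment, a customer service database usually holds two kinds of service information: (1) unstructured customer service reports that record machine faults and the repairs applied to them;

(2) structured data on sales, employees, and customers, generated for day-to-day management operations.

This study investigates how data mining techniques can be applied to extract useful knowledge from the database in support of two customer service activities: decision support and machine fault diagnosis.

A data mining process based on the data mining tool DBMiner was investigated as a way to provide structured management data for decision support.

In addition, a data mining technique that combines neural networks, case-based reasoning, and rule-based reasoning is proposed.

It searches the unstructured customer service records to support machine fault diagnosis.

The proposed technique has been implemented to support advanced fault analysis over the World Wide Web.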

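Keywords: data mining, knowledge discovery in databases, customer service support, decision support, machine fault diagnosis

1 Introduction

Customer service support is becoming an integral part of most domestic and international manufacturing companies that produce expensive machines and electronic equipment.

Many companies have a service department that provides installation, inspection, and repair services to customers worldwide.

Although most companies have engineers who handle routine maintenance and small-scale faults, expert advice from the manufacturer is often needed for more complex maintenance and repair work.

To keep customers satisfied, their requests must be answered promptly.

A hotline service center is therefore set up to help answer the common problems that customers encounter.

The service center receives reports of faulty machines and enquiries from customers by telephone.

When a problem arises, a service engineer uses the hotline advisory system to suggest a series of checkpoints to the customer, based on past experience.

These suggestions are drawn from the customer service database, which contains service records that are identical or similar to the current problem.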

Original Foreign-Language Text and Translation

Original Text

DATABASE

A database may be defined as a collection of interrelated data stored together with as little redundancy as possible to serve one or more applications in an optimal fashion. The data are stored so that they are independent of the programs that use them. A common and controlled approach is used in adding new data and in modifying and retrieving existing data within the database. One system is said to contain a collection of databases if they are entirely separate in structure. A database may be designed for batch processing, real-time processing, or in-line processing. A database system involves application programs, a DBMS, and a database.

THE INTRODUCTION TO DATABASE MANAGEMENT SYSTEMS

The term database is often used to describe a collection of related files that is organized into an integrated structure that provides different people varied access to the same data. In many cases this resource is located in different files in different departments throughout the organization, often known only to the individuals who work with their specific portion of the total information. In these cases, the potential value of the information goes unrealized because a person in another department who may need it does not know that it exists or cannot access it efficiently. In an attempt to organize their information resources and provide for timely and efficient access, many companies have implemented databases.

A database is a collection of related data. By data, we mean known facts that can be recorded and that have implicit meaning. For example, consider the names, telephone numbers, and addresses of all the people you know. You may have recorded this data in an indexed address book, or you may have stored it on a diskette using a personal computer and software such as DBASE Ⅲ or Lotus 1-2-3. This is a collection of related data with an implicit meaning and hence is a database.

The above definition of database is quite general.
For example, we may consider the collection of words that make up this page of text to be related data and hence a database. However, the common use of the term database is usually more restricted. A database has the following implicit properties:

● A database is a logically coherent collection of data with some inherent meaning. A random assortment of data cannot be referred to as a database.
● A database is designed, built, and populated with data for a specific purpose. It has an intended group of users and some preconceived applications in which these users are interested.
● A database represents some aspect of the real world, sometimes called the miniworld. Changes to the miniworld are reflected in the database.

In other words, a database has some source from which data are derived, some degree of interaction with events in the real world, and an audience that is actively interested in the contents of the database.

A database management system (DBMS) is composed of three major parts: (1) a storage subsystem that stores and retrieves data in files; (2) a modeling and manipulation subsystem that provides the means with which to organize the data and to add, delete, maintain, and update the data; and (3) an interface between the DBMS and its users. Several major trends are emerging that enhance the value and usefulness of database management systems:

● Managers who require more up-to-date information to make effective decisions.
● Customers who demand increasingly sophisticated information services and more current information about the status of their orders, invoices, and accounts.
● Users who find that they can develop custom applications with database systems in a fraction of the time it takes to use traditional programming languages.
● Organizations that discover information has a strategic value; they utilize their database systems to gain an edge over their competitors.

A DBMS can organize, process, and present selected data elements from the database.
This capability enables decision makers to search, probe, and query database contents in order to extract answers to nonrecurring and unplanned questions that aren’t available in regular reports. These questions might initially be vague and/or poorly defined, but people can “browse” through the database until they have the needed information. In short, the DBMS will “manage” the stored data items and assemble the needed items from the common database in response to the queries of those who aren’t programmers. In a file-oriented system, users needing special information may communicate their needs to a programmer, who, when time permits, will write one or more programs to extract the data and prepare the information. The availability of a DBMS, however, offers users a much faster alternative communications path.

DATABASE QUERY

If the DBMS provides a way to interactively enter and update the database, as well as interrogate it, this capability allows for managing personal databases. However, it does not automatically leave an audit trail of actions and does not provide the kinds of controls necessary in a multi-user organization. These controls are only available when a set of application programs is customized for each data entry and updating function.

Software for personal computers that performs some of the DBMS functions has been very popular. Personal computers were intended for use by individuals for personal information storage and processing. Small enterprises and professionals such as doctors, architects, engineers, and lawyers have also used these machines extensively. By the nature of their intended usage, database systems on these machines are exempt from several of the requirements of full-fledged database systems.
Since data sharing is not intended, and concurrent operations even less so, the software can be less complex. Security and integrity maintenance are de-emphasized or absent. As data volumes will be small, performance efficiency is also less important. In fact, the only aspect of a database system that is important is data independence. Data independence, as stated earlier, means that application programs and user queries need not recognize the physical organization of data on secondary storage. The importance of this aspect, particularly for the personal computer user, is that it greatly simplifies database usage. The user can store, access, and manipulate data at a high level (close to the application) and be totally shielded from the low-level (close to the machine) details of data organization.

DBMS STRUCTURING TECHNIQUES

Spatial data management has been an active area of research in the database field for two decades, with much of the research being focused on developing data structures for storing and indexing spatial data. However, no commercial database system provides facilities for directly defining and storing spatial data and for formulating queries based on search conditions on spatial data.

There are two components to temporal data management: history data management and version management. Both have been the subjects of research for over a decade. The troublesome aspect of temporal data management is that the boundary between applications and database systems has not been clearly drawn. Specifically, it is not clear how much of the typical semantics and facilities of temporal data management can and should be directly incorporated in a database system, and how much should be left to applications and users.
In this section, we will provide a list of short-term research issues that should be examined to shed light on this fundamental question.

The focus of research into history data management has been on defining the semantics of time and time interval, and on issues related to understanding the semantics of queries and updates against history data stored in an attribute of a record. Typically, in the context of relational databases, a temporal attribute is defined to hold a sequence of history data for the attribute. A history data item consists of a data item and a time interval for which the data item is valid. A query may then be issued to retrieve history data for a specified time interval for the temporal attribute. The mechanism for supporting temporal attributes is similar to that for supporting set-valued attributes in a database system such as UniSQL.

In the absence of support for temporal attributes, application developers who need to model history data have simply simulated temporal attributes by creating an attribute for the time interval alongside the “temporal” attribute. This of course may result in duplication of records in a table and in more complicated search predicates in queries. The one necessary topic of research in history data management is to quantitatively establish the performance (and even productivity) differences between using a database system that directly supports temporal attributes and using a conventional database system that supports neither set-valued attributes nor temporal attributes.

Data security, integrity, and independence

Data security prevents unauthorized users from viewing or updating the database. Using passwords, users are allowed access to the entire database or to subsets of the database, called subschemas.
For example, an employee database can contain all the data about an individual employee, but one group of users may be authorized to view only payroll data, while others are allowed access to only work history and medical data.

Data integrity refers to the accuracy, correctness, or validity of the data in the database. In a database system, data integrity means safeguarding the data against invalid alteration or destruction. In large on-line database systems, data integrity becomes a more severe problem and two additional complications arise. The first has to do with many users accessing the database concurrently. For example, if thousands of travel agents book the same seat on the same flight, the first agent’s booking will be lost. In such cases the technique of locking the record or field provides the means for preventing one user from accessing a record while another user is updating the same record.

The second complication relates to hardware, software, or human error during the course of processing and involves the database transaction, which is a group of database modifications treated as a single unit. For example, an agent booking an airline reservation performs several database updates (i.e., adding the passenger’s name and address and updating the seats-available field), which comprise a single transaction. The database transaction is not considered to be completed until all updates have been completed; otherwise, none of the updates will be allowed to take place.

An important point about database systems is that the database should exist independently of any of the specific applications. Traditional data processing applications are data dependent.

When a DBMS is used, the detailed knowledge of the physical organization of the data does not have to be built into every application program. The application program asks the DBMS for data by field name; for example, a coded representation of “give me customer name and balance due” would be sent to the DBMS.
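The request by field name described above can be sketched with Python's built-in `sqlite3` module standing in for the DBMS. This is only an illustration under assumed names: the `customer` table, its columns, and the `customer_balance` helper are hypothetical and do not come from the original text.

```python
import sqlite3


def customer_balance(conn, customer_id):
    """Ask the DBMS for two fields by name; the record layout stays hidden."""
    row = conn.execute(
        "SELECT name, balance_due FROM customer WHERE id = ?", (customer_id,)
    ).fetchone()
    return row["name"], row["balance_due"]


conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # lets the application read columns by name
conn.execute(
    "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, balance_due REAL)"
)
conn.execute("INSERT INTO customer VALUES (1, 'Ada', 120.5)")  # hypothetical data
before = customer_balance(conn, 1)

# The stored record structure changes, but the application code does not.
conn.execute("ALTER TABLE customer ADD COLUMN phone TEXT")
after = customer_balance(conn, 1)
print(before, after)
```

The helper returns the same result before and after the column is added, which is the sense in which the application is independent of the record structure.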
Without a DBMS the programmer must reserve space for the full structure of the record in the program. Any change in data structure requires changes in all the application programs.

Data Base Management System (DBMS)

The system software package that handles the difficult tasks associated with creating, accessing, and maintaining database records is called a database management system (DBMS). A DBMS will usually be handling multiple data calls concurrently. It must organize its system buffers so that different data operations can be in process together. It provides a data definition language to specify the conceptual schema and, most likely, some of the details regarding the implementation of the conceptual schema by the physical schema. The data definition language is a high-level language, enabling one to describe the conceptual schema in terms of a “data model”. At the present time, there are four underlying structures for database management systems. They are:

● List structures.
● Relational structures.
● Hierarchical (tree) structures.
● Network structures.

Management Information System (MIS)

An MIS can be defined as a network of computer-based data processing procedures developed in an organization and integrated as necessary with manual and other procedures for the purpose of providing timely and effective information to support decision making and other necessary management functions.

One of the most difficult tasks of the MIS designer is to develop the information flow needed to support decision making. Generally speaking, much of the information needed by managers who occupy different levels and have different responsibilities is obtained from a collection of existing information systems (or subsystems).

Structured Query Language (SQL)

SQL is a database processing language endorsed by the American National Standards Institute.
It is rapidly becoming the standard query language for accessing data in relational databases. With its simple, powerful syntax, SQL represents great progress in database access for all levels of management and computing professionals.

SQL comes in two forms: interactive SQL and embedded SQL. Embedded SQL usage is close to traditional programming in third-generation languages. It is the interactive use of SQL that makes it most applicable for the rapid answering of ad hoc queries. With an interactive SQL query you just type in a few lines of SQL and you get the database response immediately on the screen.

Translation

DATABASE

A database may be defined as a collection of interrelated stored data.
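To make the SQL discussion in the original text more concrete, the sketch below runs the airline booking from the data integrity section as a single all-or-nothing transaction and then issues two short ad hoc queries. It uses Python's built-in `sqlite3` module; the table names, the flight code `XY100`, and the `book_seat` helper are assumptions made for illustration, not part of the source.

```python
import sqlite3


def book_seat(conn, flight, passenger, address):
    """Add the passenger and decrement seats_available as one transaction."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "INSERT INTO passenger (flight, name, address) VALUES (?, ?, ?)",
                (flight, passenger, address),
            )
            updated = conn.execute(
                "UPDATE flight SET seats_available = seats_available - 1 "
                "WHERE code = ? AND seats_available > 0",
                (flight,),
            ).rowcount
            if updated == 0:
                # No seat left: abort so the INSERT above is undone as well.
                raise RuntimeError("no seats left")
        return True
    except RuntimeError:
        return False


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE flight (code TEXT PRIMARY KEY, seats_available INTEGER)")
conn.execute("CREATE TABLE passenger (flight TEXT, name TEXT, address TEXT)")
conn.execute("INSERT INTO flight VALUES ('XY100', 1)")  # hypothetical flight
conn.commit()

first = book_seat(conn, "XY100", "Ada", "1 Main St")
second = book_seat(conn, "XY100", "Bob", "2 Side St")

# Ad hoc queries: a few lines of SQL, answered immediately.
passengers = [r[0] for r in conn.execute("SELECT name FROM passenger ORDER BY name")]
seats = conn.execute(
    "SELECT seats_available FROM flight WHERE code = 'XY100'"
).fetchone()[0]
print(first, second, passengers, seats)
```

The second booking fails because no seat is left, and its passenger row is rolled back together with the failed update, so the two tables never disagree.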

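Data Mining Paper: English Teaching Paper

Abstract: Data mining and expert systems are currently among the more popular computer application technologies.

To address the difficulties of college English teaching, this paper designs and implements an English expert system and applies data mining techniques to process the data within it, which makes English teachers' work far more efficient and has achieved good results.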

Keywords: data mining; expert system; English teaching

Application of Data Mining and Expert System in English Teaching

Xia Tao, An Jing
(College of Information Science & Technology, Beijing University of Chemical Technology, Beijing 100029, China)

Abstract: Data mining technology and expert systems are popular computer applications today. This thesis designs and implements an English expert system that applies data mining technology to process the system's data, which makes teachers’ work more effective and achieves excellent results.

I. Introduction

In recent years, the rapid spread of computer information technology, typified by the Internet, has greatly changed people's living conditions and social environment.

Large numbers of databases are widely used in every area of social life, and this trend will continue.

The information explosion has brought convenience to people's lives, but it has also brought many inconveniences.

Cloud Computing: Translated Foreign-Literature References (the document contains the English original and its Chinese translation)

Original text:

Technical Issues of Forensic Investigations in Cloud Computing Environments

Dominik Birk
Ruhr-University Bochum
Horst Goertz Institute for IT Security
Bochum, Germany

Ruhr-University Bochum
Horst Goertz Institute for IT Security
Bochum, Germany

Abstract: Cloud Computing is arguably one of the most discussed information technologies today. It presents many promising technological and economical opportunities. However, many customers remain reluctant to move their business IT infrastructure completely to the cloud. One of their main concerns is Cloud Security and the threat of the unknown. Cloud Service Providers (CSP) encourage this perception by not letting their customers see what is behind their virtual curtain. A seldom discussed, but in this regard highly relevant, open issue is the ability to perform digital investigations. This continues to fuel insecurity on the sides of both providers and customers. Cloud Forensics constitutes a new and disruptive challenge for investigators. Due to the decentralized nature of data processing in the cloud, traditional approaches to evidence collection and recovery are no longer practical. This paper focuses on the technical aspects of digital forensics in distributed cloud environments. We contribute by assessing whether it is possible for the customer of cloud computing services to perform a traditional digital investigation from a technical point of view. Furthermore, we discuss possible solutions and possible new methodologies helping customers to perform such investigations.

I. INTRODUCTION

Although the cloud might appear attractive to small as well as to large companies, it does not come without its own unique problems. Outsourcing sensitive corporate data into the cloud raises concerns regarding the privacy and security of data. Security policies, companies' main pillar concerning security, cannot be easily deployed into distributed, virtualized cloud environments.
This situation is further complicated by the unknown physical location of the company’s assets. Normally, if a security incident occurs, the corporate security team wants to be able to perform its own investigation without depending on third parties. In the cloud, this is no longer possible: the CSP obtains all the power over the environment and thus controls the sources of evidence. In the best case, a trusted third party acts as a trustee and vouches for the trustworthiness of the CSP. Furthermore, the implementation of the technical architecture and the circumstances within cloud computing environments bias the way an investigation may be processed. In detail, evidence data has to be interpreted by an investigator in a proper manner, which is hardly possible due to the lack of circumstantial information.

We would like to thank the reviewers for the helpful comments and Dennis Heinson (Center for Advanced Security Research Darmstadt - CASED) for the profound discussions regarding the legal aspects of cloud forensics.

For auditors, this situation does not change: questions about who accessed specific data and information cannot be answered by the customers if no corresponding logs are available. With the increasing demand for using the power of the cloud to process sensitive information and data as well, enterprises face the issue of Data and Process Provenance in the cloud [10]. Digital provenance, meaning meta-data that describes the ancestry or history of a digital object, is a crucial feature for forensic investigations. In combination with a suitable authentication scheme, it provides information about who created and who modified what kind of data in the cloud. These are crucial aspects for digital investigations in distributed environments such as the cloud. Unfortunately, the aspects of forensic investigations in distributed environments have so far been mostly neglected by the research community.
Current discussion centers mostly around security, privacy and data protection issues [35], [9], [12]. The impact of forensic investigations on cloud environments was little noticed, albeit mentioned by the authors of [1] in 2009: “[...] to our knowledge, no research has been published on how cloud computing environments affect digital artifacts, and on acquisition logistics and legal issues related to cloud computing environments.” This statement is also confirmed by other authors [34], [36], [40], who stress that further research on incident handling, evidence tracking and accountability in cloud environments has to be done. At the same time, massive investments are being made in cloud technology. Combined with the fact that information technology increasingly pervades people’s private and professional lives, thus mirroring more and more of people’s actions, it becomes apparent that evidence gathered from cloud environments will be of high significance to litigation or criminal proceedings in the future. Within this work, we focus on the notion of cloud forensics by addressing the technical issues of forensics in all three major cloud service models and consider cross-disciplinary aspects. Moreover, we address the usability of various sources of evidence for investigative purposes and propose potential solutions to the issues from a practical standpoint. This work should be considered as a surveying discussion of an almost unexplored research area. The paper is organized as follows: We discuss the related work and the fundamental technical background information of digital forensics, cloud computing and the fault model in sections II and III. In section IV, we focus on the technical issues of cloud forensics and discuss the potential sources and nature of digital evidence as well as investigations in XaaS environments, including the cross-disciplinary aspects. We conclude in section V.

II. RELATED WORK

Various works have been published in the field of cloud security and privacy [9], [35], [30], focusing on aspects of protecting data in multi-tenant, virtualized environments. Desired security characteristics for current cloud infrastructures mainly revolve around isolation of multi-tenant platforms [12], security of hypervisors in order to protect virtualized guest systems, and secure network infrastructures [32]. Although digital provenance, describing the ancestry of digital objects, still remains a challenging issue for cloud environments, several works have already been published in this field [8], [10], contributing to the issues of cloud forensics. Within this context, cryptographic proofs for verifying data integrity, mainly in cloud storage offers, have been proposed, yet practical implementations are lacking [24], [37], [23]. Traditional computer forensics already has well-researched methods for various fields of application [4], [5], [6], [11], [13]. The aspects of forensics in virtual systems have also been addressed by several works [2], [3], [20], including the notion of virtual introspection [25]. In addition, the NIST has already addressed Web Service Forensics [22], which has a huge impact on investigation processes in cloud computing environments. In contrast, the aspects of forensic investigations in cloud environments have mostly been neglected by both the industry and the research community. One of the first papers focusing on this topic was published by Wolthusen [40] after Bebee et al. had already introduced problems within cloud environments [1]. Wolthusen stressed that there is an inherent strong need for interdisciplinary work linking the requirements and concepts of evidence arising from the legal field to what can be feasibly reconstructed and inferred algorithmically or in an exploratory manner.
In 2010, Grobauer et al. [36] published a paper discussing the issues of incident response in cloud environments. Unfortunately, it proposed no specific issues and solutions of cloud forensics, which will be done within this work.

III. TECHNICAL BACKGROUND

A. Traditional Digital Forensics

The notion of Digital Forensics is widely known as the practice of identifying, extracting and considering evidence from digital media. Unfortunately, digital evidence is both fragile and volatile and therefore requires the attention of special personnel and methods in order to ensure that evidence data can be properly isolated and evaluated. Normally, the process of a digital investigation can be separated into three different steps, each having its own specific purpose:

1) In the Securing Phase, the major intention is the preservation of evidence for analysis. The data has to be collected in a manner that maximizes its integrity. This is normally done by a bitwise copy of the original media. As can be imagined, this represents a huge problem in the field of cloud computing, where you never know exactly where your data is and additionally do not have access to any physical hardware. However, the snapshot technology, discussed in section IV-B3, provides a powerful tool to freeze system states and thus makes digital investigations, at least in IaaS scenarios, theoretically possible.

2) We refer to the Analyzing Phase as the stage in which the data is sifted and combined. It is in this phase that the data from multiple systems or sources is pulled together to create as complete a picture and event reconstruction as possible. Especially in distributed system infrastructures, this means that bits and pieces of data are pulled together for deciphering the real story of what happened and for providing a deeper look into the data.

3) Finally, at the end of the examination and analysis of the data, the results of the previous phases will be reprocessed in the Presentation Phase.
The report created in this phase is a compilation of all the documentation and evidence from the analysis stage. The main intention of such a report is that it contains all results and is complete and clear to understand.

Evidently, the success of these three steps strongly depends on the first stage. If it is not possible to secure the complete set of evidence data, no exhaustive analysis will be possible. However, in real-world scenarios often only a subset of the evidence data can be secured by the investigator. In addition, an important definition in the general context of forensics is the notion of a Chain of Custody. This chain clarifies how and where evidence is stored and who takes possession of it. Especially for cases which are brought to court, it is crucial that the chain of custody is preserved.

B. Cloud Computing

According to the NIST [16], cloud computing is a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications and services) that can be rapidly provisioned and released with minimal CSP interaction. The new raw definition of cloud computing brought several new characteristics such as multi-tenancy, elasticity, pay-as-you-go and reliability. Within this work, the following three models are used: In the Infrastructure as a Service (IaaS) model, the customer uses the virtual machine provided by the CSP to install his own system on it. The system can be used like any other physical computer, with a few limitations. However, the additional power of the customer over the system comes with additional security obligations. Platform as a Service (PaaS) offerings provide the capability to deploy application packages created using the virtual development environment supported by the CSP. This service model can be a driving force for the efficiency of the software development process.
In the Software as a Service (SaaS) model, the customer makes use of a service run by the CSP on a cloud infrastructure. In most cases this service can be accessed through an API for a thin client interface such as a web browser. Closed-source public SaaS offers such as Amazon S3 and GoogleMail can only be used in the public deployment model, leading to further issues concerning security, privacy and the gathering of suitable evidence. Furthermore, two main deployment models, private and public cloud, have to be distinguished. Common public clouds are made available to the general public. The corresponding infrastructure is owned by one organization acting as a CSP and offering services to its customers. In contrast, the private cloud is exclusively operated for an organization but may not provide the scalability and agility of public offers. The additional notions of community and hybrid cloud are not exclusively covered within this work. However, independently of the specific model used, the movement of applications and data to the cloud comes with limited control for the customer over the application itself, the data pushed into the applications and also the underlying technical infrastructure.

C. Fault Model

Be it an account for a SaaS application, a development environment (PaaS) or a virtual image of an IaaS environment, systems in the cloud can be affected by inconsistencies. Hence, for both customer and CSP it is crucial to have the ability to assign faults to the causing party, even in the presence of Byzantine behavior [33]. Generally, inconsistencies can be caused by the following two reasons:

1) Maliciously Intended Faults

Internal or external adversaries with specific malicious intentions can cause faults on cloud instances or applications. Economic rivals as well as former employees can be the reason for these faults and pose a constant threat to customers and CSP.
This model also includes a malicious CSP, although such a CSP is assumed to be rare in real-world scenarios. Additionally, from the technical point of view, the movement of computing power to a virtualized, multi-tenant environment can pose further threats and risks to the systems. One reason for this is that if a single system or service in the cloud is compromised, all other guest systems and even the host system are at risk. Hence, besides the need for further security measures, precautions for potential forensic investigations have to be taken into consideration.

2) Unintentional Faults

Inconsistencies in technical systems or processes in the cloud do not necessarily have to be caused by malicious intent. Internal communication errors or human failures can lead to issues in the services offered to the customer (e.g., loss or modification of data). Although these failures are not caused intentionally, both the CSP and the customer have a strong interest in discovering the reasons and deploying corresponding fixes.

IV. TECHNICAL ISSUES

Digital investigations are about control of forensic evidence data. From the technical standpoint, this data can be available in three different states: at rest, in motion or in execution. Data at rest is represented by allocated disk space. Whether the data is stored in a database or in a specific file format, it allocates disk space. Furthermore, if a file is deleted, the disk space is de-allocated for the operating system but the data is still accessible, since the disk space has not been re-allocated and overwritten. This fact is often exploited by investigators who explore this de-allocated disk space on hard disks. When data is in motion, it is transferred from one entity to another; e.g., a typical file transfer over a network can be seen as a data-in-motion scenario. Several encapsulated protocols contain the data, each leaving specific traces on systems and network devices which can in turn be used by investigators.
Data can be loaded into memory and executed as a process. In this case, the data is neither at rest nor in motion but in execution. On the executing system, process information, machine instructions and allocated/de-allocated data can be analyzed by creating a snapshot of the current system state. In the following sections, we point out the potential sources of evidential data in cloud environments, discuss the technical issues of digital investigations in XaaS environments, and suggest several solutions to these problems.

A. Sources and Nature of Evidence

Concerning the technical aspects of forensic investigations, the amount of potential evidence available to the investigator strongly diverges between the different cloud service and deployment models. The virtual machine (VM), which in most cases hosts the server application, provides several pieces of information that could be used by investigators. On the network level, network components can provide information about possible communication channels between the different parties involved. The browser on the client, often acting as the user agent for communicating with the cloud, also contains a lot of information that could be used as evidence in a forensic investigation. Independently of the model used, the following three components could act as sources for potential evidential data.

1) Virtual Cloud Instance: The VM within the cloud, where, e.g., data is stored or processes are handled, contains potential evidence [2], [3]. In most cases, it is the place where an incident happened and hence provides a good starting point for a forensic investigation. The VM instance can be accessed by both the CSP and the customer who is running the instance. Furthermore, virtual introspection techniques [25] provide access to the runtime state of the VM via the hypervisor, and snapshot technology supplies a powerful technique for the customer to freeze specific states of the VM.
Therefore, virtual instances can be still running during analysis which leads to the case of live investigations [41] or can be turned off leading to static image analysis. In SaaS and PaaS scenarios, the ability to access the virtual instance for gathering evidential information is highly limited or simply not possible.2) Network Layer: Traditional network forensics is knownas the analysis of network traffic logs for tracing events that have occurred in the past. Since the different ISO/OSI network layers provide several information on protocols and communication between instances within as well as with instances outside the cloud [4], [5], [6], network forensics is theoretically also feasible in cloud environments. However in practice, ordinary CSP currently do not provide any log data from the network components used by the customer’s instances or applications. For instance, in case of a malware infection of an IaaS VM, it will be difficult for the investigator to get any form of routing information and network log datain general which is crucial for further investigative steps. This situation gets even more complicated in case of PaaS or SaaS. So again, the situation of gathering forensic evidence is strongly affected by the support the investigator receives from the customer and the CSP.3) Client System: On the system layer of the client, it completely depends on the used model (IaaS, PaaS, SaaS) if and where potential evidence could beextracted. In most of the scenarios, the user agent (e.g. the web browser) on the client system is the only application that communicates with the service in the cloud. This especially holds for SaaS applications which are used and controlled by the web browser. But also in IaaS scenarios, the administration interface is often controlled via the browser. 
Hence, in an exhaustive forensic investigation, the evidence data gathered from the browser environment [7] should not be omitted.a) Browser Forensics: Generally, the circumstances leading to an investigation have to be differentiated: In ordinary scenarios, the main goal of an investigation of the web browser is to determine if a user has been victim of a crime. In complex SaaS scenarios with high client-server interaction, this constitutes a difficult task. Additionally, customers strongly make use of third-party extensions [17] which can be abused for malicious purposes. Hence, the investigator might want to look for malicious extensions, searches performed, websites visited, files downloaded, information entered in forms or stored in local HTML5 stores, web-based email contents and persistent browser cookies for gathering potential evidence data. Within this context, it is inevitable to investigate the appearance of malicious JavaScript [18] leading to e.g. unintended AJAX requests and hence modified usage of administration interfaces. Generally, the web browser contains a lot of electronic evidence data that could be used to give an answer to both of the above questions - even if the private mode is switched on [19].B. Investigations in XaaS EnvironmentsTraditional digital forensic methodologies permit investigators to seize equipment and perform detailed analysis on the media and data recovered [11]. In a distributed infrastructure organization like the cloud computing environment, investigators are confronted with an entirely different situation. They have no longer the option of seizing physical data storage. Data and processes of the customer are dispensed over an undisclosed amount of virtual instances, applications and network elements. Hence, it is in question whether preliminary findings of the computer forensic community in the field of digital forensics apparently have to be revised and adapted to the new environment. 
Within this section, specific issues of investigations in SaaS, PaaS and IaaS environments will be discussed. In addition, cross-disciplinary issues which affect several environments uniformly will be taken into consideration. We also suggest potential solutions to the mentioned problems.

1) SaaS Environments: Especially in the SaaS model, the customer does not obtain any control of the underlying operating infrastructure such as the network, servers, operating systems or the application that is used. This means that no deeper view into the system and its underlying infrastructure is provided to the customer. Only limited user-specific application configuration settings can be controlled, contributing to the evidence which can be extracted from the client (see section IV-A3). In a lot of cases this forces the investigator to rely on high-level logs which may be provided by the CSP. If the CSP does not run any logging application, the customer has no opportunity to create any useful evidence through the installation of any toolkit or logging tool. These circumstances do not allow a valid forensic investigation and lead to the assumption that customers of SaaS offers do not have any chance to analyze potential incidents.

a) Data Provenance: Digital provenance is meta-data that describes the ancestry or history of digital objects. Secure provenance that records ownership and process history of data objects is vital to the success of data forensics in cloud environments, yet it is still a challenging issue today [8]. Although data provenance is also highly significant for IaaS and PaaS, it poses a huge problem specifically for SaaS-based applications: current globally acting public SaaS CSPs offer Single Sign-On (SSO) access control to the set of their services. Unfortunately, in case of an account compromise, most CSPs do not offer the customer any way to figure out which data and information has been accessed by the adversary. For the victim, this situation can have tremendous impact: if sensitive data has been compromised, it is unclear which data has been leaked and which has not been accessed by the adversary. Additionally, data could be modified or deleted by an external adversary or even by the CSP, e.g. due to storage reasons. The customer has no ability to prove otherwise. Secure provenance mechanisms for distributed environments can improve this situation but have not been practically implemented by CSPs [10].

Suggested Solution: In private SaaS scenarios this situation is improved by the fact that the customer and the CSP are probably under the same authority. Hence, logging and provenance mechanisms could be implemented which contribute to potential investigations. Additionally, the exact location of the servers and the data is known at any time. Public SaaS CSPs should offer their customers additional interfaces for the purpose of compliance, forensics, operations and security matters. Through an API, the customers should be able to receive specific information such as access, error and event logs that could improve their situation in case of an investigation. Furthermore, due to the limited ability to receive forensic information from the server and to prove the integrity of stored data in SaaS scenarios, the client has to contribute to this process. This could be achieved by implementing Proofs of Retrievability (POR), in which a verifier (client) is enabled to determine that a prover (server) possesses a file or data object and that it can be retrieved unmodified [24]. Provable Data Possession (PDP) techniques [37] could be used to verify that an untrusted server possesses the original data without the need for the client to retrieve it. Although these cryptographic proofs have not been implemented by any CSP, the authors of [23] introduced a new data integrity verification mechanism for SaaS scenarios which could also be used for forensic purposes.

2) PaaS Environments: One of the main advantages of the PaaS model is that the developed software application is under the control of the customer and, except for some CSPs, the source code of the application does not have to leave the local development environment. Given these circumstances, the customer theoretically obtains the power to dictate how the application interacts with other dependencies such as databases, storage entities etc. CSPs normally claim this transfer is encrypted, but this statement can hardly be verified by the customer. Since the customer has the ability to interact with the platform over a prepared API, system states and specific application logs can be extracted. However, potential adversaries who compromise the application during runtime should not be able to alter these log files afterwards.

Suggested Solution: Depending on the runtime environment, logging mechanisms could be implemented which automatically sign and encrypt the log information before its transfer to a central logging server under the control of the customer. Additional signing and encrypting could prevent potential eavesdroppers from being able to view and alter log data on the way to the logging server. Runtime compromise of a PaaS application by adversaries could be monitored by push-only mechanisms for log data, presupposing that the information needed to detect such an attack is logged. Increasingly, CSPs offering PaaS solutions give developers the ability to collect and store a variety of diagnostics data in a highly configurable way with the help of runtime feature sets [38].

3) IaaS Environments: As expected, even virtual instances in the cloud get compromised by adversaries. Hence, the ability to determine how defenses in the virtual environment failed and to what extent the affected systems have been compromised is crucial, and not only for recovering from an incident. Forensic investigations also gain leverage from such information and contribute to resilience against future attacks on the systems. From the forensic point of view, IaaS instances provide much more evidence data usable for potential forensics than PaaS and SaaS models do. This is due to the customer's ability to install and set up the image for forensic purposes before an incident occurs. Hence, as proposed for PaaS environments, log data and other forensic evidence could be signed and encrypted before it is transferred to third-party hosts, mitigating the chance that a maliciously motivated shutdown process destroys the volatile data. Although IaaS environments provide plenty of potential evidence, it has to be emphasized that the customer VM is in the end still under the control of the CSP. The CSP controls the hypervisor, which is e.g. responsible for enforcing hardware boundaries and routing hardware requests among different VMs. Hence, besides the security responsibilities of the hypervisor, the CSP exerts tremendous control over how the customer's VMs communicate with the hardware and can theoretically intervene in processes executed on the hosted virtual instance through virtual introspection [25]. This could also affect encryption or signing processes executed on the VM and thereby lead to the leakage of the secret key. Although this risk can be disregarded in most cases, the impact on the security of high-security environments is tremendous.

a) Snapshot Analysis: Traditional forensics expects target machines to be powered down to collect an image (dead virtual instance). This situation changed completely with the advent of snapshot technology, which is supported by all popular hypervisors such as Xen, VMware ESX and Hyper-V. A snapshot, also referred to as the forensic image of a VM, provides a powerful tool with which a virtual instance can be cloned with one click, including the running system's memory. Due to the invention of snapshot technology, systems hosting crucial business processes do not have to be powered down for forensic investigation purposes. The investigator simply creates and loads a snapshot of the target VM for analysis (live virtual instance). This behavior is especially important for scenarios in which downtime of a system is not feasible or practical due to existing SLAs. However, the information whether the machine is running or has been properly powered down is crucial [3] for the investigation. Live investigations of running virtual instances are becoming more common, providing evidence data that
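The suggestion made above for PaaS and IaaS environments, signing log data before it leaves the instance so that later tampering can be detected, can be illustrated with a minimal sketch. It is not the paper's mechanism: it assumes a shared secret key and uses an HMAC chain from the Python standard library, it covers signing only (no encryption), and the names `sign_entry` and `verify_chain` are hypothetical. As the paper itself notes, a key held on the VM may still be exposed to whoever controls the hypervisor.

```python
import hashlib
import hmac
import json


def _payload(prev_tag: str, message: str) -> bytes:
    # Canonical byte encoding of one log entry (assumed format).
    return json.dumps({"prev": prev_tag, "msg": message}, sort_keys=True).encode()


def sign_entry(key: bytes, prev_tag: str, message: str) -> dict:
    """Create a log entry whose tag also covers the previous entry's tag."""
    tag = hmac.new(key, _payload(prev_tag, message), hashlib.sha256).hexdigest()
    return {"prev": prev_tag, "msg": message, "tag": tag}


def verify_chain(key: bytes, entries: list) -> bool:
    """Return True only if every entry is authentic and the chain is unbroken."""
    prev_tag = ""
    for entry in entries:
        if entry["prev"] != prev_tag:
            return False
        expected = hmac.new(
            key, _payload(entry["prev"], entry["msg"]), hashlib.sha256
        ).hexdigest()
        if not hmac.compare_digest(expected, entry["tag"]):
            return False
        prev_tag = entry["tag"]
    return True
```

Because each tag depends on the previous one, altering or removing an entry in the middle of the log breaks verification of the chain on the logging server.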

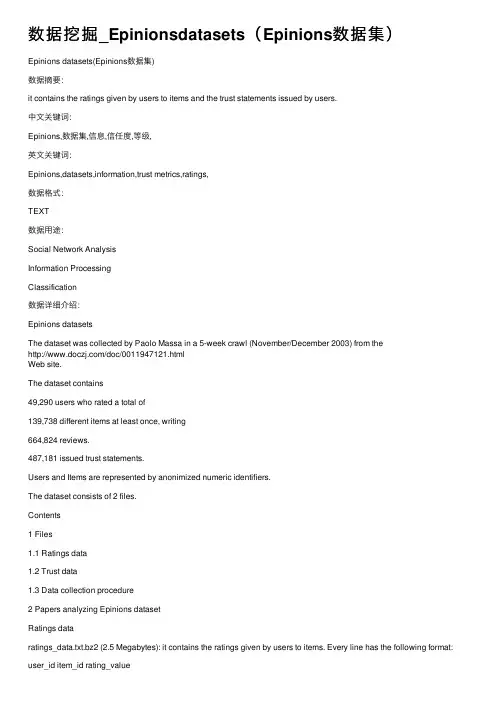

Data mining: Epinions datasets

Summary: The dataset contains the ratings given by users to items and the trust statements issued by users.

Keywords: Epinions, datasets, information, trust metrics, ratings
Data format: TEXT
Data uses: Social Network Analysis, Information Processing, Classification

Detailed description: Epinions datasets

The dataset was collected by Paolo Massa in a 5-week crawl (November/December 2003) from the Epinions Web site.

The dataset contains 49,290 users who rated a total of 139,738 different items at least once, writing 664,824 reviews and issuing 487,181 trust statements. Users and items are represented by anonymized numeric identifiers. The dataset consists of 2 files.

Ratings data

ratings_data.txt.bz2 (2.5 Megabytes) contains the ratings given by users to items. Every line has the following format:

user_id item_id rating_value

For example,

23 387 5

represents the fact "user 23 has rated item 387 as 5".

Ranges:
user_id is in [1,49290]
item_id is in [1,139738]
rating_value is in [1,5]

Trust data

trust_data.txt.bz2 (1.7 Megabytes) contains the trust statements issued by users. Every line has the following format:

source_user_id target_user_id trust_statement_value

For example, the line

22605 18420 1

represents the fact "user 22605 has expressed a positive trust statement on user 18420".

Ranges:
source_user_id and target_user_id are in [1,49290]
trust_statement_value is always 1 (since the dataset contains only positive trust statements and no negative ones (distrust)).

Note: there are no distrust statements in the dataset (block list) but only trust statements (web of trust), because the block list is kept private and not shown on the site.

Data collection procedure

The data were collected using a crawler written in Perl. It was the first program I (Paolo Massa) ever wrote in Perl (and an excuse for learning Perl), so the code is probably very ugly. Anyway, I release the code under the GNU General Public License (GPL) so that other people can use the code if they so wish.

epinionsRobot_pl.txt is the version I used; this version parses the HTML and saves minimal information as Perl objects. Later on, I saw this was not a wise choice (for example, I didn't save demographic information about users, which might have been useful for testing, for example, whether users trusted by user A come from the same city or region). So later on I created a version that saves the original HTML pages (epinionsRobot_downloadHtml_pl.txt), but I didn't test it. Feel free to let me know if it works. Both Perl files are released under the GNU General Public License (GPL); see the first lines of the files. --PaoloMassa

Be aware that the script was working in 2003. I didn't check, but it is very likely that the format of the HTML pages has changed significantly in the meantime, so the script might need some adjustments. Luckily, the code is released as open source so you can modify it. --Paolo Massa 11:34, 16 July 2010 (UTC)

Papers analyzing Epinions dataset

Trust-aware Recommender Systems
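The two line formats and value ranges described above can be checked with a short parser. This is only a sketch: the type and function names (`Rating`, `Trust`, `parse_rating`, `parse_trust`) are hypothetical, and it assumes whitespace-separated integer fields exactly as in the examples.

```python
from typing import NamedTuple

MAX_USER_ID = 49290    # user_id range stated in the dataset description
MAX_ITEM_ID = 139738   # item_id range stated in the dataset description


class Rating(NamedTuple):
    user_id: int
    item_id: int
    value: int


class Trust(NamedTuple):
    source_user_id: int
    target_user_id: int
    value: int


def parse_rating(line: str) -> Rating:
    """Parse one 'user_id item_id rating_value' line and check the ranges."""
    user_id, item_id, value = (int(tok) for tok in line.split())
    if not (1 <= user_id <= MAX_USER_ID
            and 1 <= item_id <= MAX_ITEM_ID
            and 1 <= value <= 5):
        raise ValueError(f"rating out of range: {line!r}")
    return Rating(user_id, item_id, value)


def parse_trust(line: str) -> Trust:
    """Parse one 'source_user_id target_user_id trust_statement_value' line."""
    source, target, value = (int(tok) for tok in line.split())
    if not (1 <= source <= MAX_USER_ID and 1 <= target <= MAX_USER_ID):
        raise ValueError(f"user id out of range: {line!r}")
    if value != 1:  # the dataset holds positive trust statements only
        raise ValueError(f"unexpected trust value: {line!r}")
    return Trust(source, target, value)
```

Applied to the two example lines, `parse_rating("23 387 5")` yields user 23, item 387, rating 5, and `parse_trust("22605 18420 1")` yields a trust edge from user 22605 to user 18420.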

Research on the Application of XML in Web Data Mining (XML Web Application Studies In Data Mining)

NIU Yan-cheng1, BAO Ying2
(1. Lanzhou Jiaotong University, Lanzhou 730030, China; 2. Northwest Normal University, Lanzhou 730070, China)

Abstract: The spread of the Internet rests on access to information, and as HTML technology has developed, the volume of data has grown day by day. Faced with this mass of information, obtaining the information that is wanted and useful requires mining Web information. HTML data, however, is poorly structured, so Web data mining can hardly meet the needs of search. The emergence of XML has greatly changed this situation. Because XML has good structure and hierarchy, using it to organize Web page information is more conducive to data mining. By introducing XML, this paper proposes an XML-based Web Miner model and examines the application of XML in Web data mining.

Key words: HTML; XML; e-commerce; Web data mining

With the rapid development and spread of the Internet, we have entered an age of data and information.

Excavator Translation: English Translation of Foreign Literature

Abstract: The translation of foreign literature plays a significant role in acquiring knowledge and broadening horizons. In this article, we will explore the translation of foreign literature related to excavators. By analyzing various examples, we aim to provide an insightful understanding of the translation process and the techniques involved in conveying the essence of foreign texts in the target language.

1. Introduction

The field of construction machinery presents numerous challenges when it comes to translation due to the technical nature of the content. Translating related literature helps engineers and professionals gain access to international best practices, safety guidelines, and advancements. One of the key areas within construction machinery is excavators, which serve as the focus of this article's English translation of foreign literature.

2. Historical Background and Evolution

Before delving into translations of foreign literature, it is essential to understand the historical background and evolution of excavators. The first excavators can be traced back to ancient civilizations such as the Egyptians and Greeks. These ancient excavation methods have gradually evolved into the sophisticated machinery used today.

3. Translation Techniques for Excavator-Related Terminology

Translating technical terms accurately is crucial in preserving the integrity and clarity of the original text. When it comes to excavators, some terms might not have a direct equivalent in the target language. In such cases, the translator must employ techniques such as borrowing, calque, explanation, or the closest possible translation. Balancing accuracy and readability is a crucial aspect of this translation process.

4. Examples of Translated Excavator Literature

To demonstrate the translation techniques mentioned earlier, several examples will be provided in this section. These examples range from user manuals and safety guidelines to technical specifications and advancements. By examining these examples, readers will gain insight into the specific challenges faced during translation and the strategies used to overcome them.

5. Cultural Considerations in Excavator Translations

In addition to technical accuracy, cultural considerations play a vital role in translating excavator literature. Different cultures may have varying perceptions and terminologies related to construction machinery. Translators need to be aware of cultural nuances to ensure that the translated materials are not only accurate but also culturally appropriate for the target audience.

6. Conclusion

In conclusion, the translation of foreign literature on excavators is of great importance in the field of construction machinery. By accurately conveying the technical details, safety guidelines, and advancements from foreign texts, engineers and professionals can broaden their knowledge and stay updated with international practices. The translation process involves employing various techniques and considering cultural aspects. As a result, it is crucial for translators to possess both technical expertise and cultural sensitivity when undertaking such translations.

Through this article, we have explored the significance of excavator translation, the challenges faced, and the techniques employed. Translation is the bridge that overcomes language barriers, facilitates learning, and fosters advancements in the field.

References for data mining papers

The following is a sample list of references for papers on data mining.

[1] 刘莹. 基于数据挖掘的商品销售预测分析[J]. 科技通报. 2019(07)
[2] 姜晓娟, 郭一娜. 基于改进聚类的电信客户流失预测分析[J]. 太原理工大学学报. 2019(04)
[3] 李欣海. 随机森林模型在分类与回归分析中的应用[J]. 应用昆虫学报. 2019(04)
[4] 朱志勇, 徐长梅, 刘志兵, 胡晨刚. 基于贝叶斯网络的客户流失分析研究[J]. 计算机工程与科学. 2019(03)
[5] 翟健宏, 李伟, 葛瑞海, 杨茹. 基于聚类与贝叶斯分类器的网络节点分组算法及评价模型[J]. 电信科学. 2019(02)
[6] 王曼, 施念, 花琳琳, 杨永利. 成组删除法和多重填补法对随机缺失的二分类变量资料处理效果的比较[J]. 郑州大学学报(医学版). 2019(05)
[7] 黄杰晟, 曹永锋. 挖掘类改进决策树[J]. 现代计算机(专业版). 2019(01)
[8] 李净, 张范, 张智江. 数据挖掘技术与电信客户分析[J]. 信息通信技术. 2019(05)
[9] 武晓岩, 李康. 基因表达数据判别分析的随机森林方法[J]. 中国卫生统计. 2019(06)
[10] 张璐. 论信息与企业竞争力[J]. 现代情报. 2019(01)
[11] 杨毅超. 基于Web数据挖掘的作物商务平台分析与研究[D]. 湖南农业大学 2019
[12] 徐进华. 基于灰色系统理论的数据挖掘及其模型研究[D]. 北京交通大学 2019
[13] 俞驰. 基于网络数据挖掘的客户获取系统研究[D]. 西安电子科技大学 2019
[14] 冯军. 数据挖掘在自动外呼系统中的应用[D]. 北京邮电大学 2019
[15] 于宝华. 基于数据挖掘的高考数据分析[D]. 天津大学 2019
[16] 王仁彦. 数据挖掘与网站运营管理[D]. 华东师范大学 2019
[17] 彭智军. 数据挖掘的若干新方法及其在我国证券市场中应用[D]. 重庆大学 2019
[18] 涂继亮. 基于数据挖掘的智能客户关系管理系统研究[D]. 哈尔滨理工大学 2019
[19] 贾治国. 数据挖掘在高考填报志愿上的应用[D]. 内蒙古大学 2019
[20] 马飞. 基于数据挖掘的航运市场预测系统设计及研究[D]. 大连海事大学 2019
[21] 周霞. 基于云计算的太阳风大数据挖掘分类算法的研究[D]. 成都理工大学 2019
[22] 阮伟玲. 面向生鲜农产品溯源的基层数据库建设[D]. 成都理工大学 2019
[23] 明慧. 复合材料加工工艺数据库构建及数据集成[D]. 大连理工大学 2019
[24] 陈鹏程. 齿轮数控加工工艺数据库开发与数据挖掘研究[D]. 合肥工业大学 2019
[25] 岳雪. 基于海量数据挖掘关联测度工具的设计[D]. 西安财经学院 2019
[26] 丁翔飞. 基于组合变量与重叠区域的SVM-RFE方法研究[D]. 大连理工大学 2019
[27] 刘士佳. 基于MapReduce框架的频繁项集挖掘算法研究[D]. 哈尔滨理工大学 2019
[28] 张晓东. 全序模块模式下范式分解问题研究[D]. 哈尔滨理工大学 2019
[29] 尚丹丹. 基于虚拟机的Hadoop分布式聚类挖掘方法研究与应用[D]. 哈尔滨理工大学 2019
[30] 王化楠. 一种新的混合遗传的基因聚类方法[D]. 大连理工大学 2019

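A Network Security Situation Awareness Framework Based on Knowledge Discovery

Abstract: Network security situation awareness provides a unique high-level view of security based on past security alert events. However, the complexity and diversity of network security alert data make such analysis extremely difficult. In this paper, we analyze the problems of existing network security situation awareness systems and propose a network security situation awareness framework based on knowledge discovery. The framework consists of two parts: modeling of the network security situation and generation of the network security situation. The purpose of modeling is to construct a formal model of network security situation measurement based on D-S theory, and to support the general process of fusing and analyzing the security alert events collected from security situation sensors. Situation generation extracts frequent patterns and sequential patterns from the network security situation dataset using knowledge discovery methods, transforms these patterns into correlation rules of the network security situation, and finally generates the network security situation graph automatically. Application of the integrated network security situation awareness system (Net-SSA) shows that the framework supports accurate modeling and effective generation of the network security situation.

Keywords: network security; situation awareness; data mining; knowledge discovery

I. Introduction

Traditional network security devices such as intrusion detection systems (IDS), firewalls and security scanners operate independently of one another, with almost no knowledge of the network resources they are meant to protect. Because of this lack of information, they often give ambiguous answers when interpreting security alerts and deciding how to respond to the corresponding situation. Network systems face a variety of security threats, including network worms and large-scale network attacks, and network security situation awareness is an effective way to address these problems. The general process of network security situation awareness is to perceive the network security events occurring within a given time period and network environment, process the security data in an integrated way, analyze the attacks the system has suffered, provide a global view of network security, assess the overall security situation and predict future network security trends.

Several difficulties arise in implementing network security situation awareness:

(1) The number of alert events generated by the various security sensors is huge, and the false positive rate is too high.
(2) The fragmentary security alerts produced by large-scale network attacks (e.g. DDoS) are very complex, and the relationships among them are hard to determine.
(3) Security sensors produce a huge number of alert event data types, yet alert handlers do not receive enough information when processing alert events, and obtaining this information automatically is quite difficult.

In this paper, we summarize the research on network security situation awareness, propose a network security situation awareness framework based on knowledge discovery, and apply it to our network security situation awareness system (Net-SSA).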
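The abstract above names D-S theory as the basis for fusing alert events from different sensors but gives no formulas in this excerpt. As a hedged illustration only, the sketch below implements the standard Dempster rule of combination for two mass functions. The hypotheses ("attack", "normal"), the sensor masses and the function name `combine` are assumptions made for the example, not values or interfaces from the paper.

```python
from itertools import product


def combine(m1: dict, m2: dict) -> dict:
    """Dempster's rule: fuse two mass functions keyed by frozenset hypotheses."""
    fused = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        common = a & b
        if common:
            fused[common] = fused.get(common, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # mass assigned to contradictory pairs
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; rule is undefined")
    # Normalize by the non-conflicting mass.
    return {h: m / (1.0 - conflict) for h, m in fused.items()}


# Hypothetical example: two sensors report belief in an attack.
ATTACK = frozenset({"attack"})
FRAME = frozenset({"attack", "normal"})  # full frame = "don't know"

ids_alert = {ATTACK: 0.6, FRAME: 0.4}
firewall_alert = {ATTACK: 0.7, FRAME: 0.3}
fused = combine(ids_alert, firewall_alert)
```

With these made-up masses, the fused belief in an attack rises to 0.88, which shows how two moderately confident sources reinforce each other when they do not conflict.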

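Data Mining: Concepts and Techniques (数据挖掘概念与技术)

Original English title: Data Mining: Concepts and Techniques
Authors: Jiawei Han, Micheline Kamber (Canada)
Translators: 范明, 孟小峰 et al.
ISBN: 7-111-09048-9
Publisher: 机械工业出版社 (China Machine Press)
Publication date: 2001-8-1
Pages: 374
Price: ¥39.00

"Data mining" is a new business information processing technology. Its main characteristic is that it extracts, transforms, analyzes and otherwise models the large volumes of business data held in commercial databases in order to extract key data that support business decisions.

In recent years, data mining has attracted great attention from the information industry, mainly because the widespread use of enterprise databases has produced large amounts of data, and there is an urgent need to obtain useful information and knowledge from these data.

The information and knowledge obtained have a wide range of applications, for example business management, production management, market control, market analysis, engineering design and scientific exploration.

More and more IT companies have seen this attractive market and joined the development of data mining tools, earning rich returns.

For example, Microsoft added advanced data mining functions to its then latest relational database system, SQL Server 2000, beat Oracle in the NT-based database software market, and became the vendor of the best-selling product.

Likewise, IBM released a new standards-based data mining technology, IBM DB2 Intelligent Miner Scoring Service, which can help enterprises easily develop personalized solutions for their customers and suppliers.

All signs indicate that the development of data mining as a research field is full of opportunities and challenges.

Data Mining: Concepts and Techniques introduces, from the perspective of database professionals, the principles of data mining and the methods of knowledge discovery in large enterprise databases in a comprehensive and in-depth way.

The book first introduces, in plain language, the concept of data mining, the basic architecture of data mining systems and the classification of data mining systems, gradually leading the reader into the field; this is done very well.

The authors then give a comprehensive and detailed introduction to data mining techniques, including the latest developments at the time.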

Enron Email Dataset

Summary: This dataset was collected and prepared by the CALO Project (A Cognitive Assistant that Learns and Organizes). It contains data from about 150 users, mostly senior management of Enron, organized into folders. The corpus contains a total of about 0.5M messages. This data was originally made public, and posted to the web, by the Federal Energy Regulatory Commission during its investigation.

Keywords: data mining, Enron, email, 150 users, CALO Project
Data format: TEXT
Data use: The data can be used for data mining and analysis.

Detailed description

The email dataset was later purchased by Leslie Kaelbling at MIT, and turned out to have a number of integrity problems. A number of folks at SRI, notably Melinda Gervasio, worked hard to correct these problems, and it is thanks to them (not me) that the dataset is available. The dataset here does not include attachments, and some messages have been deleted "as part of a redaction effort due to requests from affected employees". Invalid email addresses were converted to something of the form user@ whenever possible (i.e., recipient is specified in some parse-able format like "Doe, John" or "Mary K. Smith") and to no_address@ when no recipient was specified.

I get a number of questions about this corpus each week, which I am unable to answer, mostly because they deal with preparation issues and such that I just don't know about. If you ask me a question and I don't answer, please don't feel slighted.

I am distributing this dataset as a resource for researchers who are interested in improving current email tools, or understanding how email is currently used. This data is valuable; to my knowledge it is the only substantial collection of "real" email that is public. The reason other datasets are not public is because of privacy concerns. In using this dataset, please be sensitive to the privacy of the people involved (and remember that many of these people were certainly not involved in any of the actions which precipitated the investigation).

∙ March 2, 2004 Version of dataset (about 400Mb, tarred and gzipped) is no longer being distributed. If you are using this dataset for your work, you are requested to replace it with the newer version of the dataset below, or make the corresponding change to your local copy.
∙ August 21, 2009 Version of dataset (about 400Mb, tarred and gzipped). The difference between this dataset and the March 2, 2004 version is removal of one message (due to a request from the original sender). The removed message is maildir/skilling-j/1584.

There are also at least two on-line databases that allow you to search the data, at and UCB

Research uses of the dataset

This is a partial and poorly maintained list. If I've left your work out, don't take it personally, and feel free to send me a pointer and/or description.

∙ A paper describing the Enron data was presented at the 2004 CEAS conference.
∙ Some experiments associated with this data are described on Ron Bekkerman's home page.
∙ A social-network analysis of the data, including "useful mappings between the MD5 digest of the email bodies and such things as authors, recipients, etc", is available from Andres Corrada-Emmanuel.
∙ A group from SIMS, UC Berkeley provides search, visualization, and some email that has been labeled with topic and sentiment labels.
∙ Jitesh Shetty has put up a database of link-analysis results.
∙ A version of the dataset with all attachments is available from EDRM.

William W. Cohen, MLD, CMU
Last modified: Fri Aug 21 11:53:35 EDT 2009

Data preview:

Message-ID: <20264067.1075842025121.JavaMail.evans@thyme>
Date: Wed, 6 Feb 2002 13:12:40 -0800 (PST)
From: w..white@
To: john.zufferli@, tim.belden@, m..presto@, dan.davis@
Subject: Simulation Curves
Cc: john.postlethwaite@, casey.evans@, kathy.reeves@, wayne.vinson@
Mime-Version: 1.0
Content-Type: text/plain; charset=us-ascii
Content-Transfer-Encoding: 7bit
Bcc: john.postlethwaite@, casey.evans@, kathy.reeves@, wayne.vinson@
X-From: White, Stacey W. </O=ENRON/OU=NA/CN=RECIPIENTS/CN=SWHITE>
X-To: Zufferli, John </O=ENRON/OU=NA/CN=RECIPIENTS/CN=Jzuffer>, Belden, Tim </O=ENRON/OU=NA/CN=RECIPIENTS/CN=Tbelden>, Presto, Kevin M. </O=ENRON/OU=NA/CN=RECIPIENTS/CN=Kpresto>, Davis, Dan </O=ENRON/OU=EU/cn=Recipients/cn=ddavis2>
X-cc: Postlethwaite, John </O=ENRON/OU=NA/CN=RECIPIENTS/CN=JPOSTLE>, Evans, Casey </O=ENRON/OU=NA/CN=RECIPIENTS/CN=Cevans>, Reeves, Kathy </O=ENRON/OU=NA/CN=RECIPIENTS/CN=Kreeve1>, Vinson, Donald Wayne </O=ENRON/OU=NA/CN=RECIPIENTS/CN=Dvinson>
X-bcc:
X-Folder: \ExMerge - Zufferli, John\Inbox
X-Origin: ZUFFERLI-J
X-FileName: john zufferli 6-26-02.PST

Make sure that all curves are downloaded by the end of simulation, 11:00 CST, tomorrow. Ideally, the 2/7/02 curves should be marked in the morning prior to simulation. We have been instructed to kick the calculation off as close to 11:00 CST as possible. This is to ensure that ALL downstream systems are able to run through the simulation.

Please forward to all applicable traders.

Thanks,
Stacey
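Messages in the corpus follow the usual header-then-body mail layout shown in the preview above, so they can be read with Python's standard `email` package. The sketch below is only an illustration: the function name `summarize` is hypothetical, and `SAMPLE` is a shortened copy of the preview that keeps only headers whose values are intact in this document.

```python
from email import message_from_string
from email.utils import parsedate_to_datetime

# Shortened copy of the preview message; address headers are left out
# because their domains are missing in this copy of the data.
SAMPLE = """Message-ID: <20264067.1075842025121.JavaMail.evans@thyme>
Date: Wed, 6 Feb 2002 13:12:40 -0800 (PST)
Subject: Simulation Curves
Mime-Version: 1.0
Content-Type: text/plain; charset=us-ascii
X-Folder: \\ExMerge - Zufferli, John\\Inbox
X-Origin: ZUFFERLI-J

Please forward to all applicable traders.
"""


def summarize(raw: str) -> dict:
    """Extract a few header fields and the body from one raw message."""
    msg = message_from_string(raw)
    return {
        "subject": msg["Subject"],
        "origin": msg["X-Origin"],
        "folder": msg["X-Folder"],
        "date": parsedate_to_datetime(msg["Date"]),  # timezone-aware datetime
        "body": msg.get_payload(),
    }


info = summarize(SAMPLE)
```

The same function could be mapped over the files under a user's maildir folder to build a table of subjects, dates and folders for later mining.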

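Appendix 1: Survey report. Applying data mining in CRM

(1) Acquiring new customers through data mining.

In CRM, potential customers must first be identified and then converted into customers.

Big Bank and Credit Card (BB&CC) runs 25 direct-mail campaigns a year, each offering one million people the chance to apply for a credit card. BB&CC accepts applicants with good credit as customers, and in the end only about 1% of the people mailed become cardholders.

The challenge facing BB&CC is how to make its mail campaigns more effective.

First, BB&CC drew a sample of 50,000 people and ran a test mailing.

Based on an analysis of the test results, it built two models: one to predict who will fill in an application form (using a decision tree), the other a credit scoring model (using a neural network).

From the remaining 950,000 people, a further 700,000 were then selected, using the models to find those who would both respond to the campaign and have good credit.

The result was as follows: including the 50,000 used to build the models, 750,000 people were mailed in total, and 9,000 applicants were accepted, so the acceptance rate rose from 1% to 1.2%.

Although data mining cannot identify exactly which 10,000 applicants will eventually become customers, it can make the marketing campaign more effective.

(2) Increasing the value of existing customers through cross-selling with data mining.

Guns and Rouses (G&R) sells outdoor planters that imitate mortars and cannons and indoor planters that imitate large-caliber pistols and rifles.

Its product catalog is sent to 12,000,000 households.

When a customer phones to order a product, G&R actively promotes other products, i.e. cross-selling.

However, G&R found that only 1/3 of customers allowed it to make suggestions, that the final cross-selling rate was below 1%, and that the practice drew many complaints.

G&R therefore wanted to determine which customers actually need other products when they order a product.

G&R built two data mining models: one to predict whether a customer will be offended by a suggestion, the other to predict which suggestions will be well received.

The models use the information held in the customer database together with new customer information to tell sales representatives which customers are suitable for cross-selling and which products to suggest.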
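The arithmetic behind the BB&CC mailing result in part (1) can be made explicit with a few lines. The figures are the ones quoted in the case; the function name `acceptance_rate` and the variable names are only illustrative.

```python
def acceptance_rate(accepted: int, mailed: int) -> float:
    """Fraction of mailed people who end up as accepted customers."""
    return accepted / mailed


# Untargeted campaign: 1,000,000 mailed, about 1% (10,000) accepted.
baseline = acceptance_rate(10_000, 1_000_000)

# Model-guided campaign: 50,000 test sample + 700,000 selected = 750,000 mailed.
targeted = acceptance_rate(9_000, 50_000 + 700_000)

lift = targeted / baseline                 # improvement in acceptance rate
mail_saved = 1_000_000 - 750_000           # mailings avoided
customers_kept = 9_000 / 10_000            # share of customers still reached
```

In other words, the models raise the acceptance rate from 1% to 1.2% and reach 90% of the customers while sending 250,000 fewer letters, which is where the efficiency gain comes from.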

The Kansas Event Data System

Abstract: KEDS, the Kansas Event Data System, uses automated coding of English-language news reports to generate political event data focusing on the Middle East, the Balkans, and West Africa. These data are used in statistical early warning models to predict political change. The ten-year project is based in the Department of Political Science at the University of Kansas; it has been funded primarily by the U.S. National Science Foundation.

Keywords: early warning models, The Kansas Event Data System, news reports, political events

Data format: TEXT

Data use: The data can be used for Networks & Communications.

Data description:

Directed multirelational temporal network with 174 vertices and 57131 arcs. From 'leads' Gulf event data; granularity is 1 day.
Directed multirelational temporal network with 174 vertices and 57131 arcs. From 'leads' Gulf event data; granularity is 1 month.
Directed multirelational temporal network with 174 vertices and 57131 arcs. From 'leads' Gulf event data; grouped into day-of-the-week classes.
Directed multirelational temporal network with 202 vertices and 304401 arcs. From Gulf event data; granularity is 1 day.
Directed multirelational temporal network with 202 vertices and 304401 arcs. From Gulf event data; granularity is 1 month.
Directed multirelational temporal network with 485 vertices and 196364 arcs. From Levant event data; granularity is 1 day.
Directed multirelational temporal network with 485 vertices and 196364 arcs. From Levant event data; granularity is 1 month.
Directed multirelational temporal network with 325 vertices and 78667 arcs. From Balkan event data; granularity is 1 day.
Directed multirelational temporal network with 325 vertices and 78667 arcs. From Balkan event data; granularity is 1 month.

Gulf data set: This data set covers the states of the Gulf region and the Arabian peninsula for the period 15 April 1979 to 31 March 1999. The source texts prior to 10 June 1997 were located using a NEXIS search command specifically designed to return relevant data. There are two versions of the data: a set coded from the lead sentences only (57,000 events), and a set coded from full stories (304,000 events).

There are some errors in the GulfAll data. Events 118196 and 118197 have REUT-0 in place of the date, and in event 173526 the first actor is missing. In Gulf99All.dat the wrong dates are replaced with 890319, and the incomplete event is skipped.

Levant data set: Folder containing WEIS-coded events (N = 196,337) for dyadic interactions within the following set of countries: Egypt, Israel, Jordan, Lebanon, Palestinians, Syria, USA, and USSR/Russia. Coverage is April 1979 to June 2004. TABARI coding dictionaries are also included.

There are 333 errors in the data set: the relation codes 012], O24, O53, and 213] are replaced with 012, 024, 053, and 213 in Levant.dat. Some events do not have description codes; they are marked with *** in the relation labels in the *.net files.

Balkans data set, 1989-2003: Folder containing WEIS-coded events (N = 78,667) for the major actors (including ethnic groups) involved in the conflicts in the former Yugoslavia. Coverage is April 1989 through July 2003. TABARI coding dictionaries are included in the folder.

There are 197 errors in the data set: the relation codes ---], O24, and O53 are replaced with 000, 024, and 053 in Balkan.dat. Some events do not have description codes; they are marked with *** in the relation labels in the *.net files.

The original data sets are Mac files. They should be saved as PC files before processing. The detailed information is in the file "readme.txt".

Reference: StuffIt, a decompression program for SIT files.
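The relation-code corrections described above (a stray trailing "]", the letter O typed in place of a zero, and "---" standing for 000) can be sketched as a small cleanup function. This is a minimal illustration only, not the procedure actually used to produce Levant.dat or Balkan.dat: the function name normalize_relation_code is hypothetical, and the final check that a cleaned code is exactly three digits is an assumption based on the examples listed in the text.

```python
import re


def normalize_relation_code(code):
    """Clean one relation code using the corrections listed in the text.

    Hypothetical helper: the name and the three-digit check are assumptions.
    """
    cleaned = code.strip().rstrip("]")    # "012]" -> "012", "---]" -> "---"
    cleaned = cleaned.replace("O", "0")   # letter O typed for zero: "O24" -> "024"
    if cleaned == "---":                  # placeholder code mapped to 000
        cleaned = "000"
    # Assumption: every valid code in these files is a three-digit string.
    if not re.fullmatch(r"\d{3}", cleaned):
        raise ValueError(f"unrecognized relation code: {code!r}")
    return cleaned


# The malformed codes named in the data set description.
raw_codes = ["012]", "O24", "O53", "213]", "---]"]
fixed_codes = [normalize_relation_code(c) for c in raw_codes]
print(fixed_codes)
```

Raising an error on anything that still does not look like a three-digit code keeps unexpected damage visible instead of silently passing it through.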

Dolphin social network

Abstract: Lusseau et al. observed the behavior of 62 bottlenose dolphins in New Zealand over a long period. They found that the associations among these dolphins follow specific patterns, and they constructed a social network containing 62 nodes. An edge joins two nodes in the network when the corresponding two dolphins were frequently seen together.

Keywords: dolphin network, complex network, data mining, test, community structure

Data format: TEXT

Data use: Testing the accuracy of community detection algorithms.

Data format: each edge is a pair of node IDs. The pairs below are grouped by first node ID.

8 3
9 5   9 6
10 0   10 2
13 5   13 6   13 9
14 0   14 3
15 0
16 14
17 1   17 6   17 13
18 15
19 1   19 7
20 8   20 16   20 18
21 18
22 17
24 14   24 15   24 18
25 17
26 1   26 25
27 1   27 7   27 17   27 25   27 26
28 1   28 8   28 20
29 18   29 21   29 24
30 7   30 19   30 28
31 17
32 9   32 13
13 33 12 14 33 16
33 21
34 14   34 33
35 29
36 1   36 20   36 23
37 8   37 14   37 16   37 21   37 34   37 36
38 14   38 16   38 20   38 33
39 36
40 0   40 7   40 14   40 15   40 33   40 36   40 37
41 1   41 9   41 13
42 0   42 2   42 10   42 30
43 14   43 29   43 37   43 38
44 2   44 20   44 34   44 38
45 8   45 15   45 18   45 21   45 23   45 24   45 29   45 37
46 43
47 0   47 10   47 20   47 28   47 30   47 42
49 34   49 46
50 16   50 20   50 33   50 42   50 45
51 4   51 11   51 18   51 21   51 23   51 24   51 29   51 45   51 50
52 14   52 29   52 38   52 40
53 43
54 1   54 6   54 7   54 13   54 41
55 15   55 51
56 5   56 6
57 5   57 6   57 9   57 13   57 17   57 39   57 41   57 48   57 54
58 38
59 3   59 8   59 15   59 36   59 45
60 32
61 2   61 37

The data are also provided in GML form, which begins:

Creator "Mark Newman on Wed Jul 26 15:04:20 2006"
graph
[
  directed 0
  node
  [
    id 0
    label "Beak"
  ]
  node
  [
    id 1
    label "Beescratch"
  ]

Data information: Dolphin social network: an undirected social network of frequent associations between 62 dolphins in a community living off Doubtful Sound, New Zealand. Please cite D. Lusseau, K. Schneider, O. J. Boisseau, P. Haase, E. Slooten, and S. M. Dawson, Behavioral Ecology and Sociobiology 54, 396-405 (2003). Thanks to David Lusseau for permission to post these data on this web site.

Data size: 62 nodes, 159 edges.
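As a rough illustration of how an edge list in the format above could be loaded, the sketch below reads "node node" pairs into a set of undirected edges and counts each node's degree. It assumes one pair per line in the input; the function names parse_edge_list and degrees are hypothetical, and the sample uses only the first five pairs of the listing rather than the full data set.

```python
from collections import defaultdict


def parse_edge_list(text):
    """Parse lines of the form 'u v' into a set of undirected edges.

    Hypothetical helper; assumes one whitespace-separated pair per line.
    """
    edges = set()
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        u, v = int(parts[0]), int(parts[1])
        # Store each edge with the smaller ID first so (u, v) == (v, u).
        edges.add((min(u, v), max(u, v)))
    return edges


def degrees(edges):
    """Count how many edges touch each node."""
    deg = defaultdict(int)
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return dict(deg)


# First five pairs from the listing, one per line.
sample = """8 3
9 5
9 6
10 0
10 2
"""

edges = parse_edge_list(sample)
deg = degrees(edges)
print(len(edges), "edges,", len(deg), "nodes")
```

Storing each edge with the smaller ID first reflects the fact that the network is undirected, so a pair listed in either order counts once. Run on the complete file, the node and edge counts can be checked against the stated size of 62 nodes and 159 edges.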