THEORY OF REPRODUCING KERNELS


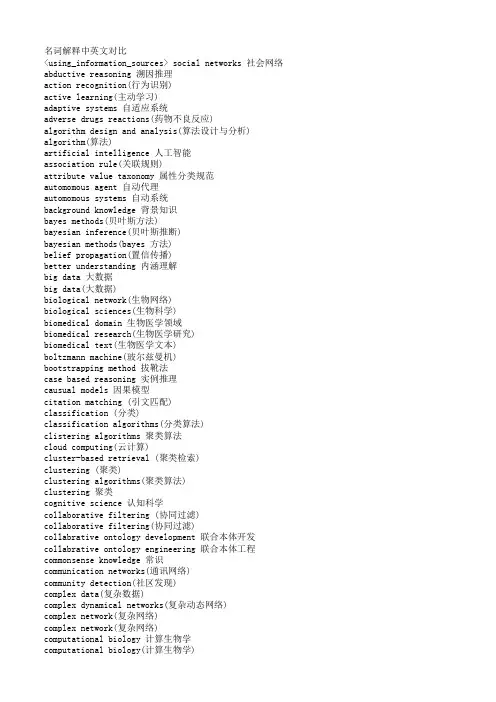


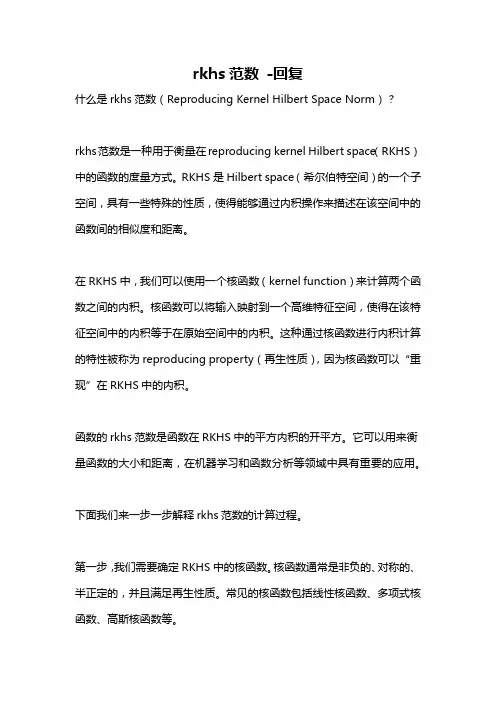

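RKHS norm: a reply

What is the RKHS norm (reproducing kernel Hilbert space norm)? The RKHS norm measures the size of a function that belongs to a reproducing kernel Hilbert space (RKHS).

An RKHS is a Hilbert space of functions on some input set with one special property: evaluating a function at a point is a continuous linear functional. This lets us describe similarity and distance between functions in the space through inner products.

Every RKHS comes with a kernel function $k$, and the inner product of two functions written as kernel expansions can be computed from kernel evaluations alone.

The kernel can be viewed as mapping each input into a (typically high-dimensional) feature space, such that the inner product of two mapped inputs in the feature space equals the kernel value $k(x, x')$ computed on the original inputs.

The link between kernel and inner product is called the reproducing property: for every $f$ in the RKHS and every point $x$, $f(x) = \langle f, k(\cdot, x) \rangle$, so taking the inner product with $k(\cdot, x)$ "reproduces" the value of $f$ at $x$.

The RKHS norm of a function is the square root of the inner product of the function with itself, $\|f\| = \sqrt{\langle f, f \rangle}$.

It measures the size of a function and, through $\|f - g\|$, the distance between two functions. It is widely used in machine learning and functional analysis.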

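The steps below walk through how the RKHS norm is computed.

Step 1: choose the kernel function of the RKHS.

A kernel must be symmetric and positive semidefinite, meaning that every Gram matrix it generates is positive semidefinite. It does not have to take only nonnegative values. The reproducing property then holds in the RKHS that the kernel generates.

Common kernels include the linear kernel, the polynomial kernel and the Gaussian kernel.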
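As a minimal sketch of the three kernels just named, the plain-Python code below defines them and spot-checks that their Gram matrices have nonnegative quadratic forms. The function names, the default parameters (`degree`, `c`, `sigma`), the sample points and the randomized check are all illustrative assumptions, not part of the original text. The check can only find counterexamples; it does not prove positive semidefiniteness.

```python
import math
import random


def linear_kernel(x, y):
    """k(x, y) = <x, y>."""
    return sum(a * b for a, b in zip(x, y))


def polynomial_kernel(x, y, degree=3, c=1.0):
    """k(x, y) = (<x, y> + c)^degree with c >= 0 (illustrative defaults)."""
    return (linear_kernel(x, y) + c) ** degree


def gaussian_kernel(x, y, sigma=1.0):
    """k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def gram_matrix(kernel, points):
    """Matrix of all pairwise kernel values K[i][j] = kernel(points[i], points[j])."""
    return [[kernel(p, q) for q in points] for p in points]


def quadratic_form(K, c):
    """sum_{i,j} c[i] * c[j] * K[i][j]."""
    n = len(c)
    return sum(c[i] * c[j] * K[i][j] for i in range(n) for j in range(n))


def passes_psd_spot_check(K, trials=200, tol=1e-9, seed=0):
    """Return False as soon as a random vector gives a clearly negative quadratic form."""
    rng = random.Random(seed)
    for _ in range(trials):
        c = [rng.uniform(-1.0, 1.0) for _ in range(len(K))]
        if quadratic_form(K, c) < -tol:
            return False
    return True


points = [(0.0, 0.0), (1.0, 0.5), (-0.5, 1.0), (0.3, -0.7)]
for kernel in (linear_kernel, polynomial_kernel, gaussian_kernel):
    K = gram_matrix(kernel, points)
    print(kernel.__name__, passes_psd_spot_check(K))
```

A matrix such as `[[0.0, -1.0], [-1.0, 0.0]]` is symmetric but fails the same check, which illustrates that symmetry alone is not enough.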

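Step 2: given a function $f$, express it as a linear combination of kernel functions in the RKHS.

Not every function in an RKHS is a finite combination of this kind, but such combinations are dense in the space, so they are the natural objects to compute with.

Specifically, we write $f$ as

$$f(x) = \sum_{i=1}^{n} \alpha_i k(x_i, x),$$

where $k(x_i, x)$ is the kernel value between the data point $x_i$ and $x$, and the $\alpha_i$ are coefficients.

The kernel together with the coefficients then represents $f$ in the RKHS.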
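A kernel expansion of this form is straightforward to evaluate. The sketch below assumes a Gaussian kernel and uses the hypothetical helper name `kernel_expansion`; the centers and coefficients are made-up illustrative values.

```python
import math


def gaussian_kernel(x, y, sigma=1.0):
    """k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def kernel_expansion(alphas, centers, kernel):
    """Return the function f(x) = sum_i alphas[i] * kernel(centers[i], x)."""
    if len(alphas) != len(centers):
        raise ValueError("need exactly one coefficient per center")

    def f(x):
        return sum(a * kernel(c, x) for a, c in zip(alphas, centers))

    return f


# Illustrative one-dimensional example: three centers, three coefficients.
centers = [(0.0,), (1.0,), (2.0,)]
alphas = [0.5, -1.0, 2.0]
f = kernel_expansion(alphas, centers, gaussian_kernel)
print(f((1.5,)))
```

Returning a closure keeps the coefficients and centers together with the function they define, which mirrors the statement that the pair (kernel, coefficients) represents $f$.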

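Step 3: use the bilinearity of the inner product to compute the squared norm of $f$.

For $f(x) = \sum_{i=1}^{n} \alpha_i k(x_i, x)$ and a second function $g(x) = \sum_{j=1}^{m} \beta_j k(x'_j, x)$, the inner product is

$$\langle f, g \rangle_{\text{rkhs}} = \sum_{i=1}^{n} \sum_{j=1}^{m} \alpha_i \beta_j k(x_i, x'_j).$$

Setting $g = f$ gives the RKHS norm of $f$:

$$\|f\|_{\text{rkhs}} = \sqrt{\langle f, f \rangle_{\text{rkhs}}} = \sqrt{\sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j k(x_i, x_j)}.$$

Finally, this definition lets us compute the size of $f$ in the RKHS and, applied to a difference $f - g$, the distance between two functions.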
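The double sums for the inner product and the norm translate directly into code. The following is a minimal sketch, assuming a Gaussian kernel and functions given as finite kernel expansions; the names `rkhs_inner`, `rkhs_norm` and `rkhs_distance` are hypothetical helpers introduced for illustration.

```python
import math


def gaussian_kernel(x, y, sigma=1.0):
    """k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def rkhs_inner(alphas, xs, betas, ys, kernel):
    """<f, g> for f = sum_i alphas[i] k(xs[i], .) and g = sum_j betas[j] k(ys[j], .)."""
    return sum(a * b * kernel(x, y)
               for a, x in zip(alphas, xs)
               for b, y in zip(betas, ys))


def rkhs_norm(alphas, xs, kernel):
    """||f|| = sqrt(<f, f>); clamp at zero to absorb tiny negative rounding errors."""
    return math.sqrt(max(rkhs_inner(alphas, xs, alphas, xs, kernel), 0.0))


def rkhs_distance(alphas, xs, betas, ys, kernel):
    """||f - g||, using the fact that f - g is itself a kernel expansion."""
    coeffs = list(alphas) + [-b for b in betas]
    points = list(xs) + list(ys)
    return rkhs_norm(coeffs, points, kernel)


xs = [(0.0,), (1.0,)]
alphas = [1.0, 1.0]
print(rkhs_norm(alphas, xs, gaussian_kernel))
print(rkhs_distance(alphas, xs, [1.0], [(0.0,)], gaussian_kernel))
```

The cost is quadratic in the number of expansion terms, since every pair of centers contributes one kernel evaluation.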

arXiv:math/0701907v3 [math.ST] 1 Jul 2008

The Annals of Statistics 2008, Vol. 36, No. 3, 1171–1220
DOI: 10.1214/009053607000000677
© Institute of Mathematical Statistics, 2008

KERNEL METHODS IN MACHINE LEARNING

By Thomas Hofmann, Bernhard Schölkopf and Alexander J. Smola

Darmstadt University of Technology, Max Planck Institute for Biological Cybernetics and National ICT Australia

We review machine learning methods employing positive definite kernels. These methods formulate learning and estimation problems in a reproducing kernel Hilbert space (RKHS) of functions defined on the data domain, expanded in terms of a kernel. Working in linear spaces of functions has the benefit of facilitating the construction and analysis of learning algorithms while at the same time allowing large classes of functions. The latter include nonlinear functions as well as functions defined on nonvectorial data. We cover a wide range of methods, ranging from binary classifiers to sophisticated methods for estimation with structured data.

1. Introduction. Over the last ten years estimation and learning methods utilizing positive definite kernels have become rather popular, particularly in machine learning. Since these methods have a stronger mathematical slant than earlier machine learning methods (e.g., neural networks), there is also significant interest in the statistics and mathematics community for these methods. The present review aims to summarize the state of the art on a conceptual level. In doing so, we build on various sources, including Burges [25], Cristianini and Shawe-Taylor [37], Herbrich [64] and Vapnik [141] and, in particular, Schölkopf and Smola [118], but we also add a fair amount of more recent material which helps unify the exposition. We have not had space to include proofs; they can be found either in the long version of the present paper (see Hofmann et al. [69]), in the references given or in the above books.

The main idea of all the described methods can be summarized in one
paragraph. Traditionally, theory and algorithms of machine learning and statistics have been very well developed for the linear case. Real world data analysis problems, on the other hand, often require nonlinear methods to detect the kind of dependencies that allow successful prediction of properties of interest. By using a positive definite kernel, one can sometimes have the best of both worlds. The kernel corresponds to a dot product in a (usually high-dimensional) feature space. In this space, our estimation methods are linear, but as long as we can formulate everything in terms of kernel evaluations, we never explicitly have to compute in the high-dimensional feature space.

The paper has three main sections: Section 2 deals with fundamental properties of kernels, with special emphasis on (conditionally) positive definite kernels and their characterization. We give concrete examples for such kernels and discuss kernels and reproducing kernel Hilbert spaces in the context of regularization. Section 3 presents various approaches for estimating dependencies and analyzing data that make use of kernels. We provide an overview of the problem formulations as well as their solution using convex programming techniques. Finally, Section 4 examines the use of reproducing kernel Hilbert spaces as a means to define statistical models, the focus being on structured, multidimensional responses. We also show how such techniques can be combined with Markov networks as a suitable framework to model dependencies between response variables.

2. Kernels.

2.1. An introductory example. Suppose we are given empirical data

$$(x_1, y_1), \ldots, (x_n, y_n) \in \mathcal{X} \times \mathcal{Y}. \tag{1}$$

Here, the domain $\mathcal{X}$ is some nonempty set that the inputs (the predictor variables) $x_i$ are taken from; the $y_i \in \mathcal{Y}$ are called targets (the response variable). Here and below, $i, j \in [n]$, where we use the notation $[n] := \{1, \ldots, n\}$.
Note that we have not made any assumptions on the domain $\mathcal{X}$ other than it being a set. In order to study the problem of learning, we need additional structure. In learning, we want to be able to generalize to unseen data points. In the case of binary pattern recognition, given some new input $x \in \mathcal{X}$, we want to predict the corresponding $y \in \{\pm 1\}$ (more complex output domains $\mathcal{Y}$ will be treated below). Loosely speaking, we want to choose $y$ such that $(x, y)$ is in some sense similar to the training examples. To this end, we need similarity measures in $\mathcal{X}$ and in $\{\pm 1\}$. The latter is easier, as two target values can only be identical or different. For the former, we require a function

$$k : \mathcal{X} \times \mathcal{X} \to \mathbb{R}, \qquad (x, x') \mapsto k(x, x') \tag{2}$$

satisfying, for all $x, x' \in \mathcal{X}$,

$$k(x, x') = \langle \Phi(x), \Phi(x') \rangle, \tag{3}$$

where $\Phi$ maps into some dot product space $\mathcal{H}$, sometimes called the feature space. The similarity measure $k$ is usually called a kernel, and $\Phi$ is called its feature map.

Fig. 1. A simple geometric classification algorithm: given two classes of points (depicted by "o" and "+"), compute their means $c_+$, $c_-$ and assign a test input $x$ to the one whose mean is closer. This can be done by looking at the dot product between $x - c$ [where $c = (c_+ + c_-)/2$] and $w := c_+ - c_-$, which changes sign as the enclosed angle passes through $\pi/2$. Note that the corresponding decision boundary is a hyperplane (the dotted line) orthogonal to $w$ (from Schölkopf and Smola [118]).

The advantage of using such a kernel as a similarity measure is that it allows us to construct algorithms in dot product spaces. For instance, consider the following simple classification algorithm, described in Figure 1, where $\mathcal{Y} = \{\pm 1\}$. The idea is to compute the means of the two classes in the feature space,

$$c_+ = \frac{1}{n_+} \sum_{\{i : y_i = +1\}} \Phi(x_i) \quad \text{and} \quad c_- = \frac{1}{n_-} \sum_{\{i : y_i = -1\}} \Phi(x_i),$$

where $n_+$ and $n_-$ are the number of examples with positive and negative target values, respectively. We then assign a new point $\Phi(x)$ to the class whose mean is closer to it. This leads to the prediction rule

$$y = \operatorname{sgn}\bigl(\langle \Phi(x), c_+ \rangle - \langle \Phi(x), c_- \rangle + b\bigr) \tag{4}$$

with $b = \frac{1}{2}\bigl(\|c_-\|^2 - \|c_+\|^2\bigr)$. Substituting the expressions for $c_\pm$ yields

$$y = \operatorname{sgn}\Biggl(\frac{1}{n_+} \sum_{\{i : y_i = +1\}} \underbrace{\langle \Phi(x), \Phi(x_i) \rangle}_{k(x, x_i)} - \frac{1}{n_-} \sum_{\{i : y_i = -1\}} \underbrace{\langle \Phi(x), \Phi(x_i) \rangle}_{k(x, x_i)} + b\Biggr), \tag{5}$$

where

$$b = \frac{1}{2}\Biggl(\frac{1}{n_-^2} \sum_{\{(i,j) : y_i = y_j = -1\}} k(x_i, x_j) - \frac{1}{n_+^2} \sum_{\{(i,j) : y_i = y_j = +1\}} k(x_i, x_j)\Biggr).$$

Let us consider one well-known special case of this type of classifier. Assume that the class means have the same distance to the origin (hence, $b = 0$), and that $k(\cdot, x)$ is a density for all $x \in \mathcal{X}$. If the two classes are equally likely and were generated from two probability distributions that are estimated

$$p_+(x) := \frac{1}{n_+} \sum_{\{i : y_i = +1\}} k(x, x_i), \qquad p_-(x) := \frac{1}{n_-} \sum_{\{i : y_i = -1\}} k(x, x_i), \tag{6}$$

then (5) is the estimated Bayes decision rule, plugging in the estimates $p_+$ and $p_-$ for the true densities.

The classifier (5) is closely related to the Support Vector Machine (SVM) that we will discuss below. It is linear in the feature space (4), while in the input domain, it is represented by a kernel expansion (5). In both cases, the decision boundary is a hyperplane in the feature space; however, the normal vectors [for (4), $w = c_+ - c_-$] are usually rather different.

The normal vector not only characterizes the alignment of the hyperplane, its length can also be used to construct tests for the equality of the two class-generating distributions (Borgwardt et al. [22]).

As an aside, note that if we normalize the targets such that $\hat{y}_i = y_i / |\{j : y_j = y_i\}|$, in which case the $\hat{y}_i$ sum to zero, then $\|w\|^2 = \langle K, \hat{y}\hat{y}^\top \rangle_F$, where $\langle \cdot, \cdot \rangle_F$ is the Frobenius dot product. If the two classes have equal size, then up to a scaling factor involving $\|K\|_2$ and $n$, this equals the kernel-target alignment defined by Cristianini et al. [38].

2.2. Positive definite kernels. We have required that a kernel satisfy (3), that is, correspond to a dot product in some dot product space. In the present section we show that the class of kernels that can be written in the form (3) coincides with the class of positive definite kernels. This has far-reaching consequences. There are examples of positive definite kernels which can be evaluated efficiently even though they correspond to dot products in infinite dimensional dot product spaces. In such cases, substituting $k(x, x')$ for $\langle \Phi(x), \Phi(x') \rangle$, as we have done in (5), is crucial. In the machine learning community, this
substitution is called the kernel trick.

Definition 1 (Gram matrix). Given a kernel $k$ and inputs $x_1, \ldots, x_n \in \mathcal{X}$, the $n \times n$ matrix

$$K := (k(x_i, x_j))_{ij} \tag{7}$$

is called the Gram matrix (or kernel matrix) of $k$ with respect to $x_1, \ldots, x_n$.

Definition 2 (Positive definite matrix). A real $n \times n$ symmetric matrix $K_{ij}$ satisfying

$$\sum_{i,j} c_i c_j K_{ij} \geq 0 \tag{8}$$

for all $c_i \in \mathbb{R}$ is called positive definite. If equality in (8) only occurs for $c_1 = \cdots = c_n = 0$, then we shall call the matrix strictly positive definite.

Definition 3 (Positive definite kernel). Let $\mathcal{X}$ be a nonempty set. A function $k : \mathcal{X} \times \mathcal{X} \to \mathbb{R}$ which for all $n \in \mathbb{N}$, $x_i \in \mathcal{X}$, $i \in [n]$ gives rise to a positive definite Gram matrix is called a positive definite kernel. A function $k : \mathcal{X} \times \mathcal{X} \to \mathbb{R}$ which for all $n \in \mathbb{N}$ and distinct $x_i \in \mathcal{X}$ gives rise to a strictly positive definite Gram matrix is called a strictly positive definite kernel.

Occasionally, we shall refer to positive definite kernels simply as kernels. Note that, for simplicity, we have restricted ourselves to the case of real valued kernels. However, with small changes, the below will also hold for the complex valued case.

Since

$$\sum_{i,j} c_i c_j \langle \Phi(x_i), \Phi(x_j) \rangle = \Bigl\langle \sum_i c_i \Phi(x_i), \sum_j c_j \Phi(x_j) \Bigr\rangle \geq 0,$$

kernels of the form (3) are positive definite for any choice of $\Phi$. In particular, if $\mathcal{X}$ is already a dot product space, we may choose $\Phi$ to be the identity. Kernels can thus be regarded as generalized dot products. While they are not generally bilinear, they share important properties with dot products, such as the Cauchy–Schwarz inequality: If $k$ is a positive definite kernel, and $x_1, x_2 \in \mathcal{X}$, then

$$k(x_1, x_2)^2 \leq k(x_1, x_1) \cdot k(x_2, x_2). \tag{9}$$

2.2.1. Construction of the reproducing kernel Hilbert space. We now define a map from $\mathcal{X}$ into the space of functions mapping $\mathcal{X}$ into $\mathbb{R}$, denoted as $\mathbb{R}^{\mathcal{X}}$, via

$$\Phi : \mathcal{X} \to \mathbb{R}^{\mathcal{X}} \quad \text{where } x \mapsto k(\cdot, x). \tag{10}$$

Here, $\Phi(x) = k(\cdot, x)$ denotes the function that assigns the value $k(x', x)$ to $x' \in \mathcal{X}$.

We next construct a dot product space containing the images of the inputs under $\Phi$. To this end, we first turn it into a vector
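space by forming linear combinations

$$f(\cdot) = \sum_{i=1}^{n} \alpha_i k(\cdot, x_i). \tag{11}$$

Here, $n \in \mathbb{N}$, $\alpha_i \in \mathbb{R}$ and $x_i \in \mathcal{X}$ are arbitrary.

Next, we define a dot product between $f$ and another function $g(\cdot) = \sum_{j=1}^{n'} \beta_j k(\cdot, x'_j)$ (with $n' \in \mathbb{N}$, $\beta_j \in \mathbb{R}$ and $x'_j \in \mathcal{X}$) as

$$\langle f, g \rangle := \sum_{i=1}^{n} \sum_{j=1}^{n'} \alpha_i \beta_j k(x_i, x'_j). \tag{12}$$

To see that this is well defined although it contains the expansion coefficients and points, note that $\langle f, g \rangle = \sum_{j=1}^{n'} \beta_j f(x'_j)$. The latter, however, does not depend on the particular expansion of $f$. Similarly, for $g$, note that $\langle f, g \rangle = \sum_{i=1}^{n} \alpha_i g(x_i)$. This also shows that $\langle \cdot, \cdot \rangle$ is bilinear. It is symmetric, as $\langle f, g \rangle = \langle g, f \rangle$. Moreover, it is positive definite, since positive definiteness of $k$ implies that, for any function $f$, written as (11), we have

$$\langle f, f \rangle = \sum_{i,j=1}^{n} \alpha_i \alpha_j k(x_i, x_j) \geq 0. \tag{13}$$

Next, note that given functions $f_1, \ldots, f_p$, and coefficients $\gamma_1, \ldots, \gamma_p \in \mathbb{R}$, we have

$$\sum_{i,j=1}^{p} \gamma_i \gamma_j \langle f_i, f_j \rangle = \Bigl\langle \sum_{i=1}^{p} \gamma_i f_i, \sum_{j=1}^{p} \gamma_j f_j \Bigr\rangle \geq 0. \tag{14}$$

Here, the equality follows from the bilinearity of $\langle \cdot, \cdot \rangle$, and the right-hand inequality from (13).

By (14), $\langle \cdot, \cdot \rangle$ is a positive definite kernel, defined on our vector space of functions. For the last step in proving that it even is a dot product, we note that, by (12), for all functions (11),

$$\langle k(\cdot, x), f \rangle = f(x) \quad \text{and, in particular,} \quad \langle k(\cdot, x), k(\cdot, x') \rangle = k(x, x'). \tag{15}$$

By virtue of these properties, $k$ is called a reproducing kernel (Aronszajn [7]).

Due to (15) and (9), we have

$$|f(x)|^2 = |\langle k(\cdot, x), f \rangle|^2 \leq k(x, x) \cdot \langle f, f \rangle. \tag{16}$$

By this inequality, $\langle f, f \rangle = 0$ implies $f = 0$, which is the last property that was left to prove in order to establish that $\langle \cdot, \cdot \rangle$ is a dot product.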
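The reproducing property (15) and the bound (16) can be checked numerically for a concrete expansion. The sketch below assumes a Gaussian kernel; the helper names `evaluate` and `inner`, the centers, the coefficients and the test point are illustrative choices rather than anything taken from the paper.

```python
import math


def gaussian_kernel(x, y, sigma=1.0):
    """k(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def evaluate(alphas, xs, kernel, x):
    """f(x) for the expansion f = sum_i alphas[i] k(., xs[i]), as in (11)."""
    return sum(a * kernel(xi, x) for a, xi in zip(alphas, xs))


def inner(alphas, xs, betas, ys, kernel):
    """Dot product (12) between two kernel expansions."""
    return sum(a * b * kernel(x, y)
               for a, x in zip(alphas, xs)
               for b, y in zip(betas, ys))


xs = [(0.0,), (1.0,), (2.5,)]
alphas = [1.0, -2.0, 0.5]
x = (0.7,)

# (15): k(., x) is the expansion with a single coefficient 1 at the point x,
# so its dot product with f should reproduce the point value f(x).
reproduced = inner([1.0], [x], alphas, xs, gaussian_kernel)
f_x = evaluate(alphas, xs, gaussian_kernel, x)

# (16): |f(x)|^2 is bounded by k(x, x) * <f, f>.
bound = gaussian_kernel(x, x) * inner(alphas, xs, alphas, xs, gaussian_kernel)

print(abs(reproduced - f_x) < 1e-12)
print(f_x ** 2 <= bound)
```

Both checks rely only on kernel evaluations, which is the practical content of the construction: the dot product on the function space never requires an explicit feature map.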
Skipping some details, we add that one can complete the space of functions (11) in the norm corresponding to the dot product, and thus gets a Hilbert space $\mathcal{H}$, called a reproducing kernel Hilbert space (RKHS).

One can define an RKHS as a Hilbert space $\mathcal{H}$ of functions on a set $\mathcal{X}$ with the property that, for all $x \in \mathcal{X}$ and $f \in \mathcal{H}$, the point evaluations $f \mapsto f(x)$ are continuous linear functionals [in particular, all point values $f(x)$ are well defined, which already distinguishes RKHSs from many $L_2$ Hilbert spaces]. From the point evaluation functional, one can then construct the reproducing kernel using the Riesz representation theorem. The Moore–Aronszajn theorem (Aronszajn [7]) states that, for every positive definite kernel on $\mathcal{X} \times \mathcal{X}$, there exists a unique RKHS and vice versa.

There is an analogue of the kernel trick for distances rather than dot products, that is, dissimilarities rather than similarities. This leads to the larger class of conditionally positive definite kernels. Those kernels are defined just like positive definite ones, with the one difference being that their Gram matrices need to satisfy (8) only subject to

$$\sum_{i=1}^{n} c_i = 0. \tag{17}$$

Interestingly, it turns out that many kernel algorithms, including SVMs and kernel PCA (see Section 3), can be applied also with this larger class of kernels, due to their being translation invariant in feature space (Hein et al. [63] and Schölkopf and Smola [118]).

We conclude this section with a note on terminology. In the early years of kernel machine learning research, it was not the notion of positive definite kernels that was being used. Instead, researchers considered kernels satisfying the conditions of Mercer's theorem (Mercer [99], see, e.g., Cristianini and Shawe-Taylor [37] and Vapnik [141]). However, while all such kernels do satisfy (3), the converse is not true. Since (3) is what we are interested in, positive definite kernels are thus the right class of kernels to consider.

2.2.2. Properties of positive definite kernels. We begin with some closure
properties of the set of positive definite kernels.

Proposition 4. Below, $k_1, k_2, \ldots$ are arbitrary positive definite kernels on $\mathcal{X} \times \mathcal{X}$, where $\mathcal{X}$ is a nonempty set:

(i) The set of positive definite kernels is a closed convex cone, that is, (a) if $\alpha_1, \alpha_2 \geq 0$, then $\alpha_1 k_1 + \alpha_2 k_2$ is positive definite; and (b) if $k(x, x') := \lim_{n \to \infty} k_n(x, x')$ exists for all $x, x'$, then $k$ is positive definite.

(ii) The pointwise product $k_1 k_2$ is positive definite.

(iii) Assume that for $i = 1, 2$, $k_i$ is a positive definite kernel on $\mathcal{X}_i \times \mathcal{X}_i$, where $\mathcal{X}_i$ is a nonempty set. Then the tensor product $k_1 \otimes k_2$ and the direct sum $k_1 \oplus k_2$ are positive definite kernels on $(\mathcal{X}_1 \times \mathcal{X}_2) \times (\mathcal{X}_1 \times \mathcal{X}_2)$.

The proofs can be found in Berg et al. [18].

It is reassuring that sums and products of positive definite kernels are positive definite. We will now explain that, loosely speaking, there are no other operations that preserve positive definiteness. To this end, let $C$ denote the set of all functions $\psi : \mathbb{R} \to \mathbb{R}$ that map positive definite kernels to (conditionally) positive definite kernels (readers who are not interested in the case of conditionally positive definite kernels may ignore the term in parentheses). We define

$$C := \{\psi \mid k \text{ is a p.d. kernel} \Rightarrow \psi(k) \text{ is a (conditionally) p.d. kernel}\},$$

$$C' = \{\psi \mid \text{for any Hilbert space } \mathcal{F},\ \psi(\langle x, x' \rangle_{\mathcal{F}}) \text{ is (conditionally) positive definite}\},$$

$$C'' = \{\psi \mid \text{for all } n \in \mathbb{N}: K \text{ is a p.d. } n \times n \text{ matrix} \Rightarrow \psi(K) \text{ is (conditionally) p.d.}\},$$

where $\psi(K)$ is the $n \times n$ matrix with elements $\psi(K_{ij})$.

Proposition 5. $C = C' = C''$.

The following proposition follows from a result of FitzGerald et al. [50] for (conditionally) positive definite matrices; by Proposition 5, it also applies for (conditionally) positive definite kernels, and for functions of dot products.
We state the latter case.

Proposition 6. Let $\psi : \mathbb{R} \to \mathbb{R}$. Then $\psi(\langle x, x' \rangle_{\mathcal{F}})$ is positive definite for any Hilbert space $\mathcal{F}$ if and only if $\psi$ is real entire of the form

$$\psi(t) = \sum_{n=0}^{\infty} a_n t^n \tag{18}$$

with $a_n \geq 0$ for $n \geq 0$. Moreover, $\psi(\langle x, x' \rangle_{\mathcal{F}})$ is conditionally positive definite for any Hilbert space $\mathcal{F}$ if and only if $\psi$ is real entire of the form (18) with $a_n \geq 0$ for $n \geq 1$.

There are further properties of $k$ that can be read off the coefficients $a_n$:

• Steinwart [128] showed that if all $a_n$ are strictly positive, then the kernel of Proposition 6 is universal on every compact subset $S$ of $\mathbb{R}^d$ in the sense that its RKHS is dense in the space of continuous functions on $S$ in the $\|\cdot\|_\infty$ norm. For support vector machines using universal kernels, he then shows (universal) consistency (Steinwart [129]). Examples of universal kernels are (19) and (20) below.

• In Lemma 11 we will show that the $a_0$ term does not affect an SVM. Hence, we infer that it is actually sufficient for consistency to have $a_n > 0$ for $n \geq 1$.

We conclude the section with an example of a kernel which is positive definite by Proposition 6. To this end, let $\mathcal{X}$ be a dot product space. The power series expansion of $\psi(x) = e^x$ then tells us that

$$k(x, x') = e^{\langle x, x' \rangle / \sigma^2} \tag{19}$$

is positive definite (Haussler [62]). If we further multiply $k$ with the positive definite kernel $f(x) f(x')$, where $f(x) = e^{-\|x\|^2 / (2\sigma^2)}$ and $\sigma > 0$, this leads to the positive definiteness of the Gaussian kernel

$$k'(x, x') = k(x, x') f(x) f(x') = e^{-\|x - x'\|^2 / (2\sigma^2)}. \tag{20}$$

2.2.3. Properties of positive definite functions. We now let $\mathcal{X} = \mathbb{R}^d$ and consider positive definite kernels of the form

$$k(x, x') = h(x - x'), \tag{21}$$

in which case $h$ is called a positive definite function. The following characterization is due to Bochner [21]. We state it in the form given by Wendland [152].

Theorem 7. A continuous function $h$ on $\mathbb{R}^d$ is positive definite if and only if there exists a finite nonnegative Borel measure $\mu$ on $\mathbb{R}^d$ such that

$$h(x) = \int_{\mathbb{R}^d} e^{-i \langle x, \omega \rangle} \, d\mu(\omega). \tag{22}$$

While normally formulated for complex valued functions, the theorem also holds true for real
functions. Note, however, that if we start with an arbitrary nonnegative Borel measure, its Fourier transform may not be real. Real-valued positive definite functions are distinguished by the fact that the corresponding measures $\mu$ are symmetric.

We may normalize $h$ such that $h(0) = 1$ [hence, by (9), $|h(x)| \leq 1$], in which case $\mu$ is a probability measure and $h$ is its characteristic function. For instance, if $\mu$ is a normal distribution of the form $(2\pi/\sigma^2)^{-d/2} e^{-\sigma^2 \|\omega\|^2 / 2} \, d\omega$, then the corresponding positive definite function is the Gaussian $e^{-\|x\|^2 / (2\sigma^2)}$; see (20).

Bochner's theorem allows us to interpret the similarity measure $k(x, x') = h(x - x')$ in the frequency domain. The choice of the measure $\mu$ determines which frequency components occur in the kernel. Since the solutions of kernel algorithms will turn out to be finite kernel expansions, the measure $\mu$ will thus determine which frequencies occur in the estimates, that is, it will determine their regularization properties; more on that in Section 2.3.2 below.

Bochner's theorem generalizes earlier work of Mathias, and has itself been generalized in various ways, for example, by Schoenberg [115]. An important generalization considers Abelian semigroups (Berg et al. [18]). In that case, the theorem provides an integral representation of positive definite functions in terms of the semigroup's semicharacters. Further generalizations were given by Krein, for the cases of positive definite kernels and functions with a limited number of negative squares. See Stewart [130] for further details and references.

As above, there are conditions that ensure that the positive definiteness becomes strict.

Proposition 8 (Wendland [152]). A positive definite function is strictly positive definite if the carrier of the measure in its representation (22) contains an open subset.

This implies that the Gaussian kernel is strictly positive definite.

An important special case of positive definite functions, which includes the Gaussian, are radial basis functions. These are
functions that can be written as $h(x) = g(\|x\|^2)$ for some function $g : [0, \infty[ \to \mathbb{R}$. They have the property of being invariant under the Euclidean group.

2.2.4. Examples of kernels. We have already seen several instances of positive definite kernels, and now intend to complete our selection with a few more examples. In particular, we discuss polynomial kernels, convolution kernels, ANOVA expansions and kernels on documents.

Polynomial kernels. From Proposition 4 it is clear that homogeneous polynomial kernels $k(x, x') = \langle x, x' \rangle^p$ are positive definite for $p \in \mathbb{N}$ and $x, x' \in \mathbb{R}^d$. By direct calculation, we can derive the corresponding feature map (Poggio [108]):

(23) $\langle x, x' \rangle^p = \Big( \sum_{j=1}^{d} [x]_j [x']_j \Big)^p = \sum_{j \in [d]^p} [x]_{j_1} \cdots [x]_{j_p} \cdot [x']_{j_1} \cdots [x']_{j_p} = \langle C_p(x), C_p(x') \rangle$,

where $C_p$ maps $x \in \mathbb{R}^d$ to the vector $C_p(x)$ whose entries are all possible $p$th degree ordered products of the entries of $x$ (note that $[d]$ is used as a shorthand for $\{1, \dots, d\}$). The polynomial kernel of degree $p$ thus computes a dot product in the space spanned by all monomials of degree $p$ in the input coordinates. Other useful kernels include the inhomogeneous polynomial,

(24) $k(x, x') = (\langle x, x' \rangle + c)^p$ where $p \in \mathbb{N}$ and $c \ge 0$,

which computes all monomials up to degree $p$.

Spline kernels. It is possible to obtain spline functions as a result of kernel expansions (Vapnik et al. [144]) simply by noting that convolution of an even number of indicator functions yields a positive kernel function. Denote by $I_X$ the indicator (or characteristic) function on the set $X$, and denote by $\otimes$ the convolution operation, $(f \otimes g)(x) := \int_{\mathbb{R}^d} f(x') g(x' - x) \, dx'$. Then the B-spline kernels are given by

(25) $k(x, x') = B_{2p+1}(x - x')$ where $p \in \mathbb{N}$ with $B_{i+1} := B_i \otimes B_0$.

Here $B_0$ is the characteristic function on the unit ball in $\mathbb{R}^d$. From the definition of (25), it is obvious that, for odd $m$, we may write $B_m$ as the inner product between functions $B_{m/2}$. Moreover, note that, for even $m$, $B_m$ is not a kernel.

Convolutions and structures. Let us now move to kernels defined on structured objects (Haussler [62] and
Watkins [151]). Suppose the object $x \in X$ is composed of $x_p \in X_p$, where $p \in [P]$ (note that the sets $X_p$ need not be equal). For instance, consider the string $x = ATG$ and $P = 2$. It is composed of the parts $x_1 = AT$ and $x_2 = G$, or alternatively, of $x_1 = A$ and $x_2 = TG$. Mathematically speaking, the set of “allowed” decompositions can be thought of as a relation $R(x_1, \dots, x_P, x)$, to be read as “$x_1, \dots, x_P$ constitute the composite object $x$.”

Haussler [62] investigated how to define a kernel between composite objects by building on similarity measures that assess their respective parts; in other words, kernels $k_p$ defined on $X_p \times X_p$. Define the R-convolution of $k_1, \dots, k_P$ as

(26) $[k_1 \star \cdots \star k_P](x, x') := \sum_{\bar{x} \in R(x), \bar{x}' \in R(x')} \prod_{p=1}^{P} k_p(\bar{x}_p, \bar{x}'_p)$,

where the sum runs over all possible ways $R(x)$ and $R(x')$ in which we can decompose $x$ into $\bar{x}_1, \dots, \bar{x}_P$ and $x'$ analogously [here we used the convention that an empty sum equals zero, hence, if either $x$ or $x'$ cannot be decomposed, then $(k_1 \star \cdots \star k_P)(x, x') = 0$]. If there is only a finite number of ways, the relation $R$ is called finite. In this case, it can be shown that the R-convolution is a valid kernel (Haussler [62]).

ANOVA kernels. Specific examples of convolution kernels are Gaussians and ANOVA kernels (Vapnik [141] and Wahba [148]). To construct an ANOVA kernel, we consider $X = S^N$ for some set $S$, and kernels $k^{(i)}$ on $S \times S$, where $i = 1, \dots, N$. For $P = 1, \dots, N$, the ANOVA kernel of order $P$ is defined as

(27) $k_P(x, x') := \sum_{1 \le i_1 < \cdots < i_P \le N} \prod_{p=1}^{P} k^{(i_p)}(x_{i_p}, x'_{i_p})$.

Note that if $P = N$, the sum consists only of the term for which $(i_1, \dots, i_P) = (1, \dots, N)$, and $k$ equals the tensor product $k^{(1)} \otimes \cdots \otimes k^{(N)}$. At the other extreme, if $P = 1$, then the products collapse to one factor each, and $k$ equals the direct sum $k^{(1)} \oplus \cdots \oplus k^{(N)}$. For intermediate values of $P$, we get kernels that lie in between tensor products and direct sums.

ANOVA kernels typically use some moderate value of $P$, which specifies the order of the interactions between attributes $x_{i_p}$ that we are interested in. The sum then runs over the numerous terms that take into account interactions
of order $P$; fortunately, the computational cost can be reduced to $O(Pd)$ by utilizing recurrent procedures for the kernel evaluation. ANOVA kernels have been shown to work rather well in multi-dimensional SV regression problems (Stitson et al. [131]).

Bag of words. One way in which SVMs have been used for text categorization (Joachims [77]) is the bag-of-words representation. This maps a given text to a sparse vector, where each component corresponds to a word, and a component is set to one (or some other number) whenever the related word occurs in the text. Using an efficient sparse representation, the dot product between two such vectors can be computed quickly. Furthermore, this dot product is by construction a valid kernel, referred to as a sparse vector kernel. One of its shortcomings, however, is that it does not take into account the word ordering of a document. Other sparse vector kernels are also conceivable, such as one that maps a text to the set of pairs of words that are in the same sentence (Joachims [77] and Watkins [151]).

n-grams and suffix trees. A more sophisticated way of dealing with string data was proposed by Haussler [62] and Watkins [151]. The basic idea is as described above for general structured objects (26): Compare the strings by means of the substrings they contain. The more substrings two strings have in common, the more similar they are. The substrings need not always be contiguous; that said, the further apart the first and last element of a substring are, the less weight should be given to the similarity. Depending on the specific choice of a similarity measure, it is possible to define more or less efficient kernels which compute the dot product in the feature space spanned by all substrings of documents.

Consider a finite alphabet $\Sigma$, the set of all strings of length $n$, $\Sigma^n$, and the set of all finite strings, $\Sigma^* := \bigcup_{n=0}^{\infty} \Sigma^n$. The length of a string $s \in \Sigma^*$ is denoted by $|s|$, and its elements by $s(1) \dots s(|s|)$; the concatenation of $s$ and $t \in \Sigma^*$ is written
$st$. Denote by

$k(x, x') = \sum_{s} \#(x, s) \, \#(x', s) \, c_s$

a string kernel computed from exact matches. Here $\#(x, s)$ is the number of occurrences of $s$ in $x$ and $c_s \ge 0$.

Vishwanathan and Smola [146] provide an algorithm using suffix trees, which allows one to compute for arbitrary $c_s$ the value of the kernel $k(x, x')$ in $O(|x| + |x'|)$ time and memory. Moreover, also $f(x) = \langle w, \Phi(x) \rangle$ can be computed in $O(|x|)$ time if preprocessing linear in the size of the support vectors is carried out. These kernels are then applied to function prediction (according to the gene ontology) of proteins using only their sequence information. Another prominent application of string kernels is in the field of splice form prediction and gene finding (Rätsch et al. [112]).

For inexact matches of a limited degree, typically up to $\epsilon = 3$, and strings of bounded length, a similar data structure can be built by explicitly generating a dictionary of strings and their neighborhood in terms of a Hamming distance (Leslie et al. [92]). These kernels are defined by replacing $\#(x, s)$ by a mismatch function $\#(x, s, \epsilon)$ which reports the number of approximate occurrences of $s$ in $x$. By trading off computational complexity with storage (hence, the restriction to small numbers of mismatches), essentially linear-time algorithms can be designed. Whether a general purpose algorithm exists which allows for efficient comparisons of strings with mismatches in linear time is still an open question.

Mismatch kernels. In the general case it is only possible to find algorithms whose complexity is linear in the lengths of the documents being compared, and the length of the substrings, that is, $O(|x| \cdot |x'|)$ or worse. We now describe such a kernel with a specific choice of weights (Cristianini and Shawe-Taylor [37] and Watkins [151]).

Let us now form subsequences $u$ of strings. Given an index sequence $i := (i_1, \dots, i_{|u|})$ with $1 \le i_1 < \cdots < i_{|u|} \le |s|$, we define $u := s(i) := s(i_1) \dots s(i_{|u|})$.
We call $l(i) := i_{|u|} - i_1 + 1$ the length of the subsequence in $s$. Note that if $i$ is not contiguous, then $l(i) > |u|$.

The feature space built from strings of length $n$ is defined to be $H_n := \mathbb{R}^{(\Sigma^n)}$. This notation means that the space has one dimension (or coordinate) for each element of $\Sigma^n$, labeled by that element (equivalently, we can think of it as the space of all real-valued functions on $\Sigma^n$). We can thus describe the feature map coordinate-wise for each $u \in \Sigma^n$ via

(28) $[\Phi_n(s)]_u := \sum_{i : s(i) = u} \lambda^{l(i)}$.

Here, $0 < \lambda \le 1$ is a decay parameter: The larger the length of the subsequence in $s$, the smaller the respective contribution to $[\Phi_n(s)]_u$. The sum runs over all subsequences of $s$ which equal $u$.

For instance, consider a dimension of $H_3$ spanned (i.e., labeled) by the string asd. In this case we have $[\Phi_3(\text{Nasdaq})]_{\text{asd}} = \lambda^3$, while $[\Phi_3(\text{lass das})]_{\text{asd}} = 2\lambda^5$. In the first string, asd is a contiguous substring. In the second string, it appears twice as a noncontiguous substring of length 5 in lass das; the two occurrences differ only in which of the two adjacent letters s is used (index sequences (2, 3, 6) and (2, 4, 6)).
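The feature map (28) can be checked on small inputs with a brute-force sketch. The function name `subsequence_feature`, the value of the decay parameter and the two test strings are illustrative assumptions, not part of the source; the enumeration is exponential in the subsequence length and is meant only to make the definition concrete, not to replace the dynamic-programming algorithms the text refers to.

```python
from itertools import combinations


def subsequence_feature(s: str, u: str, lam: float) -> float:
    """Coordinate [Phi_n(s)]_u of the gap-weighted feature map (28).

    Hypothetical helper for illustration: sums lam ** l(i) over every
    increasing index sequence i with s(i) == u.
    """
    if not u:
        raise ValueError("u must be a non-empty string")
    total = 0.0
    # Enumerate all increasing index tuples of length |u| (0-based here).
    for idx in combinations(range(len(s)), len(u)):
        if all(s[i] == c for i, c in zip(idx, u)):
            span = idx[-1] - idx[0] + 1  # l(i): stretch of s covered by the match
            total += lam ** span
    return total


lam = 0.5  # assumed decay parameter, 0 < lam <= 1
contiguous = subsequence_feature("Nasdaq", "asd", lam)   # one match of length 3
gapped = subsequence_feature("lass das", "asd", lam)     # two matches of length 5
print(contiguous, gapped)
```

With these inputs the contiguous match contributes a single term of length 3 and the gapped string contributes two terms of length 5, so longer gaps are penalised by higher powers of the decay parameter.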

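RKHS norm: what is the RKHS norm?

The RKHS (reproducing kernel Hilbert space) norm is a way of measuring the size of a function.

In machine learning and kernel methods, the RKHS norm is widely used in algorithms such as support vector machines and kernel ridge regression.

The RKHS norm not only quantifies the complexity of a function but also provides a way to assess its properties.

To understand the RKHS norm better, we explain its meaning and the related concepts step by step.

Step 1: Understand the reproducing kernel Hilbert space (RKHS). An RKHS is a special kind of Hilbert space of functions, one that is associated with a kernel function.

A kernel function is typically nonlinear in its inputs and corresponds to a map from the low-dimensional input space into a high-dimensional feature space.

In the feature space, the inner product of two vectors can be computed through the kernel function, without computing the mapping explicitly.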
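The point about computing feature-space inner products through the kernel can be illustrated with a small sketch. It assumes a homogeneous polynomial kernel of degree 2, chosen here only because its feature map is easy to write down; the function names and sample vectors are hypothetical.

```python
from itertools import product


def poly_kernel(x, y, p=2):
    """Homogeneous polynomial kernel <x, y> ** p, evaluated in input space."""
    return sum(a * b for a, b in zip(x, y)) ** p


def explicit_features(x, p=2):
    """All ordered products of p coordinates of x (d ** p features)."""
    feats = []
    for idx in product(range(len(x)), repeat=p):
        value = 1.0
        for j in idx:
            value *= x[j]
        feats.append(value)
    return feats


x = [1.0, 2.0, 3.0]
y = [0.5, -1.0, 2.0]

implicit = poly_kernel(x, y)  # never builds the feature vectors
explicit = sum(a * b for a, b in zip(explicit_features(x), explicit_features(y)))
print(implicit, explicit)
```

Both numbers agree, but the kernel evaluation touches only the 3 input coordinates, while the explicit route builds 9 features per vector; the gap widens quickly with dimension and degree.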

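Step 2: Why use the RKHS norm? The RKHS norm measures the size of a function within the RKHS.

The norm defined on the RKHS provides a way to assess the properties of a function.

Specifically, the RKHS norm quantifies features such as how smooth a function is and how rapidly it oscillates.

Through the RKHS norm we obtain a quantitative sense of a function's complexity.

Step 3: Definition and computation of the RKHS norm. The definition of the RKHS norm depends on the specific kernel function and on the structure of the RKHS.

Common kernel functions include the linear kernel, the polynomial kernel and the Gaussian kernel.

Taking the Gaussian kernel as an example, for a function given by a finite kernel expansion $f = \sum_i \alpha_i K(x_i, \cdot)$ the RKHS norm is $\|f\|_H^2 = \sum_i \sum_j \alpha_i \alpha_j K(x_i, x_j)$, where $f$ is a function in the RKHS, $K(x, x')$ is the Gaussian kernel, $H$ denotes the RKHS, and $\|f\|_H$ denotes the norm of $f$ in the RKHS.

Computing the RKHS norm usually relies on properties of the kernel function and on the special structure of the RKHS.

Because kernels and computational methods differ, the way the RKHS norm is computed varies as well.

For some common kernel functions, effective methods and algorithms for computing the RKHS norm are already available.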
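As one concrete case, a function written as a finite kernel expansion $f = \sum_i \alpha_i K(x_i, \cdot)$ has squared RKHS norm $\sum_i \sum_j \alpha_i \alpha_j K(x_i, x_j)$, which follows from the reproducing property. The sketch below evaluates this for a Gaussian kernel; the function names, the bandwidth and the sample points and coefficients are assumptions made for illustration.

```python
import math


def gaussian_kernel(x, y, sigma=1.0):
    """Gaussian kernel exp(-||x - y||^2 / (2 sigma^2)) on tuples of floats."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def rkhs_norm_squared(alphas, points, kernel):
    """Squared RKHS norm of f = sum_i alphas[i] * kernel(points[i], .)."""
    return sum(
        a_i * a_j * kernel(x_i, x_j)
        for a_i, x_i in zip(alphas, points)
        for a_j, x_j in zip(alphas, points)
    )


points = [(0.0,), (1.0,), (3.0,)]   # hypothetical expansion centres
alphas = [1.0, -0.5, 2.0]           # hypothetical coefficients

norm_sq = rkhs_norm_squared(alphas, points, gaussian_kernel)
single = rkhs_norm_squared([2.0], [(0.0,)], gaussian_kernel)
print(norm_sq, single)
```

For a single centre the result reduces to the squared coefficient times $K(x, x)$, which equals the squared coefficient for the Gaussian kernel; positive definiteness of the kernel guarantees that the quadratic form is never negative.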

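Step 4: Applications of the RKHS norm. The RKHS norm is widely used in machine learning and kernel methods.

One of the best-known applications is the support vector machine (SVM).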

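School of Physics, Central China Normal University

Specialized English for Physics

For internal study and reference only! 2014

I. Course tasks and teaching objectives

By studying Specialized English for Physics, students will master the specialized vocabulary and expressions used most frequently in physics, and thereby acquire a basic ability to read and understand the specialized physics literature.

By analyzing the model texts in the course textbook, students will also come to understand, through English, the research content and main ideas of the various branches of physics, improving both their specialized English and their ability to follow the frontiers of physics research.

The course develops the ability to read specialized English, introduces the characteristics of scientific and technical English, and improves the quality and speed of reading in the specialized foreign language. Students acquire a working English vocabulary for the field, become essentially able to read general foreign-language material in the field independently, and reach a certain level in written translation.

Translations are required to be fluent, accurate and professional.

II. Course content

The course covers the following chapters: physics, classical mechanics, thermodynamics, electromagnetism, optics, atomic physics, statistical mechanics, quantum mechanics and special relativity.

III. Basic requirements

1. Make full use of class time to ensure a sufficient amount of reading (about 1200 to 1500 words per class hour), with correct understanding of the original text.

2. Read a suitable amount of related English material outside class, with a basic understanding of its main content.

3. Master the basic specialized vocabulary (no fewer than 200 words).

4. Be able to read, translate and appreciate specialized English literature fluently, and to do simple writing.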

IV. Reference books

Contents

1 Physics 物理学
    Introduction to physics
    Classical and modern physics
    Research fields
    Vocabulary
2 Classical mechanics 经典力学
    Introduction
    Description of classical mechanics
    Momentum and collisions
    Angular momentum
    Vocabulary
3 Thermodynamics 热力学
    Introduction
    Laws of thermodynamics
    System models
    Thermodynamic processes
    Scope of thermodynamics
    Vocabulary
4 Electromagnetism 电磁学
    Introduction
    Electrostatics
    Magnetostatics
    Electromagnetic induction
    Vocabulary
5 Optics 光学
    Introduction
    Geometrical optics
    Physical optics
    Polarization
    Vocabulary
6 Atomic physics 原子物理
    Introduction
    Electronic configuration
    Excitation and ionization
    Vocabulary
7 Statistical mechanics 统计力学
    Overview
    Fundamentals
    Statistical ensembles
    Vocabulary
8 Quantum mechanics 量子力学
    Introduction
    Mathematical formulations
    Quantization
    Wave-particle duality
    Quantum entanglement
    Vocabulary
9 Special relativity 狭义相对论
    Introduction
    Relativity of simultaneity
    Lorentz transformations
    Time dilation and length contraction
    Mass-energy equivalence
    Relativistic energy-momentum relation
    Vocabulary

Notes on text markup:
Blue Arial font (e.g. energy): technical terms already known
Blue Arial font, underlined (e.g. electromagnetism): newly learned technical terms
Black Times New Roman font, underlined (e.g. postulate): newly learned general words

1 Physics 物理学

Introduction to physics

Physics is a part of natural philosophy and a natural science that involves the study of matter and its motion through space and time, along with related concepts such as energy and force. More broadly, it is the general analysis of nature, conducted in order to understand how the universe behaves.

Physics is one of the oldest academic disciplines, perhaps the oldest through its inclusion of astronomy.
Over the last two millennia, physics was a part of natural philosophy along with chemistry, certain branches of mathematics, and biology, but during the Scientific Revolution in the 17th century, the natural sciences emerged as unique research programs in their own right. Physics intersects with many interdisciplinary areas of research, such as biophysics and quantum chemistry, and the boundaries of physics are not rigidly defined. New ideas in physics often explain the fundamental mechanisms of other sciences, while opening new avenues of research in areas such as mathematics and philosophy.

Physics also makes significant contributions through advances in new technologies that arise from theoretical breakthroughs. For example, advances in the understanding of electromagnetism or nuclear physics led directly to the development of new products which have dramatically transformed modern-day society, such as television, computers, domestic appliances, and nuclear weapons; advances in thermodynamics led to the development of industrialization; and advances in mechanics inspired the development of calculus.

Core theories

Though physics deals with a wide variety of systems, certain theories are used by all physicists. Each of these theories was experimentally tested numerous times and found correct as an approximation of nature (within a certain domain of validity).

For instance, the theory of classical mechanics accurately describes the motion of objects, provided they are much larger than atoms and moving at much less than the speed of light.
These theories continue to be areas of active research, and a remarkable aspect of classical mechanics known as chaos was discovered in the 20th century, three centuries after the original formulation of classical mechanics by Isaac Newton (1642–1727) 【艾萨克·牛顿】.

These central theories are important tools for research into more specialized topics, and any physicist, regardless of his or her specialization, is expected to be literate in them. These include classical mechanics, quantum mechanics, thermodynamics and statistical mechanics, electromagnetism, and special relativity.

Classical and modern physics

Classical mechanics

Classical physics includes the traditional branches and topics that were recognized and well-developed before the beginning of the 20th century: classical mechanics, acoustics, optics, thermodynamics, and electromagnetism.

Classical mechanics is concerned with bodies acted on by forces and bodies in motion and may be divided into statics (study of the forces on a body or bodies at rest), kinematics (study of motion without regard to its causes), and dynamics (study of motion and the forces that affect it); mechanics may also be divided into solid mechanics and fluid mechanics (known together as continuum mechanics), the latter including such branches as hydrostatics, hydrodynamics, aerodynamics, and pneumatics.

Acoustics is the study of how sound is produced, controlled, transmitted and received.
Important modern branches of acoustics include ultrasonics, the study of sound waves of very high frequency beyond the range of human hearing; bioacoustics, the physics of animal calls and hearing; and electroacoustics, the manipulation of audible sound waves using electronics.

Optics, the study of light, is concerned not only with visible light but also with infrared and ultraviolet radiation, which exhibit all of the phenomena of visible light except visibility, e.g., reflection, refraction, interference, diffraction, dispersion, and polarization of light.

Heat is a form of energy, the internal energy possessed by the particles of which a substance is composed; thermodynamics deals with the relationships between heat and other forms of energy.

Electricity and magnetism have been studied as a single branch of physics since the intimate connection between them was discovered in the early 19th century; an electric current gives rise to a magnetic field and a changing magnetic field induces an electric current. Electrostatics deals with electric charges at rest, electrodynamics with moving charges, and magnetostatics with magnetic poles at rest.

Modern Physics

Classical physics is generally concerned with matter and energy on the normal scale of observation, while much of modern physics is concerned with the behavior of matter and energy under extreme conditions or on the very large or very small scale.

For example, atomic and nuclear physics studies matter on the smallest scale at which chemical elements can be identified.

The physics of elementary particles is on an even smaller scale, as it is concerned with the most basic units of matter; this branch of physics is also known as high-energy physics because of the extremely high energies necessary to produce many types of particles in large particle accelerators.
On this scale, ordinary, commonsense notions of space, time, matter, and energy are no longer valid.

The two chief theories of modern physics present a different picture of the concepts of space, time, and matter from that presented by classical physics.

Quantum theory is concerned with the discrete, rather than continuous, nature of many phenomena at the atomic and subatomic level, and with the complementary aspects of particles and waves in the description of such phenomena.

The theory of relativity is concerned with the description of phenomena that take place in a frame of reference that is in motion with respect to an observer; the special theory of relativity is concerned with relative uniform motion in a straight line and the general theory of relativity with accelerated motion and its connection with gravitation.

Both quantum theory and the theory of relativity find applications in all areas of modern physics.

Difference between classical and modern physics

While physics aims to discover universal laws, its theories lie in explicit domains of applicability. Loosely speaking, the laws of classical physics accurately describe systems whose important length scales are greater than the atomic scale and whose motions are much slower than the speed of light.
Outside of this domain, observations do not match their predictions.

Albert Einstein【阿尔伯特·爱因斯坦】 contributed the framework of special relativity, which replaced notions of absolute time and space with space-time and allowed an accurate description of systems whose components have speeds approaching the speed of light.

Max Planck【普朗克】, Erwin Schrödinger【薛定谔】, and others introduced quantum mechanics, a probabilistic notion of particles and interactions that allowed an accurate description of atomic and subatomic scales.

Later, quantum field theory unified quantum mechanics and special relativity.

General relativity allowed for a dynamical, curved space-time, with which highly massive systems and the large-scale structure of the universe can be well-described. General relativity has not yet been unified with the other fundamental descriptions; several candidate theories of quantum gravity are being developed.

Research fields

Contemporary research in physics can be broadly divided into condensed matter physics; atomic, molecular, and optical physics; particle physics; astrophysics; geophysics and biophysics. Some physics departments also support research in physics education.

Since the 20th century, the individual fields of physics have become increasingly specialized, and today most physicists work in a single field for their entire careers. "Universalists" such as Albert Einstein (1879–1955) and Lev Landau (1908–1968)【列夫·朗道】, who worked in multiple fields of physics, are now very rare.

Condensed matter physics

Condensed matter physics is the field of physics that deals with the macroscopic physical properties of matter. In particular, it is concerned with the "condensed" phases that appear whenever the number of particles in a system is extremely large and the interactions between them are strong.

The most familiar examples of condensed phases are solids and liquids, which arise from the bonding by way of the electromagnetic force between atoms.
More exotic condensed phases include the super-fluid and the Bose–Einstein condensate found in certain atomic systems at very low temperature, the superconducting phase exhibited by conduction electrons in certain materials, and the ferromagnetic and antiferromagnetic phases of spins on atomic lattices.

Condensed matter physics is by far the largest field of contemporary physics.

Historically, condensed matter physics grew out of solid-state physics, which is now considered one of its main subfields. The term condensed matter physics was apparently coined by Philip Anderson when he renamed his research group (previously solid-state theory) in 1967. In 1978, the Division of Solid State Physics of the American Physical Society was renamed as the Division of Condensed Matter Physics.

Condensed matter physics has a large overlap with chemistry, materials science, nanotechnology and engineering.

Atomic, molecular and optical physics

Atomic, molecular, and optical physics (AMO) is the study of matter–matter and light–matter interactions on the scale of single atoms and molecules.

The three areas are grouped together because of their interrelationships, the similarity of methods used, and the commonality of the energy scales that are relevant. All three areas include classical, semi-classical and quantum treatments; they can treat their subject from a microscopic view (in contrast to a macroscopic view).

Atomic physics studies the electron shells of atoms. Current research focuses on activities in quantum control, cooling and trapping of atoms and ions, low-temperature collision dynamics and the effects of electron correlation on structure and dynamics.
Atomic physics is influenced by the nucleus (see, e.g., hyperfine splitting), but intra-nuclear phenomena such as fission and fusion are considered part of high-energy physics.

Molecular physics focuses on multi-atomic structures and their internal and external interactions with matter and light.

Optical physics is distinct from optics in that it tends to focus not on the control of classical light fields by macroscopic objects, but on the fundamental properties of optical fields and their interactions with matter in the microscopic realm.

High-energy physics (particle physics) and nuclear physics

Particle physics is the study of the elementary constituents of matter and energy, and the interactions between them.

In addition, particle physicists design and develop the high energy accelerators, detectors, and computer programs necessary for this research. The field is also called "high-energy physics" because many elementary particles do not occur naturally, but are created only during high-energy collisions of other particles.

Currently, the interactions of elementary particles and fields are described by the Standard Model.

● The model accounts for the 12 known particles of matter (quarks and leptons) that interact via the strong, weak, and electromagnetic fundamental forces.
● Dynamics are described in terms of matter particles exchanging gauge bosons (gluons, W and Z bosons, and photons, respectively).
● The Standard Model also predicts a particle known as the Higgs boson. In July 2012 CERN, the European laboratory for particle physics, announced the detection of a particle consistent with the Higgs boson.

Nuclear Physics is the field of physics that studies the constituents and interactions of atomic nuclei.
The most commonly known applications of nuclear physics are nuclear power generation and nuclear weapons technology, but the research has provided application in many fields, including those in nuclear medicine and magnetic resonance imaging, ion implantation in materials engineering, and radiocarbon dating in geology and archaeology.

Astrophysics and Physical Cosmology

Astrophysics and astronomy are the application of the theories and methods of physics to the study of stellar structure, stellar evolution, the origin of the solar system, and related problems of cosmology. Because astrophysics is a broad subject, astrophysicists typically apply many disciplines of physics, including mechanics, electromagnetism, statistical mechanics, thermodynamics, quantum mechanics, relativity, nuclear and particle physics, and atomic and molecular physics.

The discovery by Karl Jansky in 1931 that radio signals were emitted by celestial bodies initiated the science of radio astronomy. Most recently, the frontiers of astronomy have been expanded by space exploration. Perturbations and interference from the earth's atmosphere make space-based observations necessary for infrared, ultraviolet, gamma-ray, and X-ray astronomy.

Physical cosmology is the study of the formation and evolution of the universe on its largest scales. Albert Einstein's theory of relativity plays a central role in all modern cosmological theories. In the early 20th century, Hubble's discovery that the universe was expanding, as shown by the Hubble diagram, prompted rival explanations known as the steady state universe and the Big Bang.

The Big Bang was confirmed by the success of Big Bang nucleo-synthesis and the discovery of the cosmic microwave background in 1964. The Big Bang model rests on two theoretical pillars: Albert Einstein's general relativity and the cosmological principle (on a sufficiently large scale, the properties of the Universe are the same for all observers).
Cosmologists have recently established the ΛCDM model (the standard model of Big Bang cosmology) of the evolution of the universe, which includes cosmic inflation, dark energy and dark matter.

Current research frontiers

In condensed matter physics, an important unsolved theoretical problem is that of high-temperature superconductivity. Many condensed matter experiments are aiming to fabricate workable spintronics and quantum computers.

In particle physics, the first pieces of experimental evidence for physics beyond the Standard Model have begun to appear. Foremost among these are indications that neutrinos have non-zero mass. These experimental results appear to have solved the long-standing solar neutrino problem, and the physics of massive neutrinos remains an area of active theoretical and experimental research. Particle accelerators have begun probing energy scales in the TeV range, in which experimentalists are hoping to find evidence for the super-symmetric particles, after discovery of the Higgs boson.

Theoretical attempts to unify quantum mechanics and general relativity into a single theory of quantum gravity, a program ongoing for over half a century, have not yet been decisively resolved. The current leading candidates are M-theory, superstring theory and loop quantum gravity.

Many astronomical and cosmological phenomena have yet to be satisfactorily explained, including the existence of ultra-high energy cosmic rays, the baryon asymmetry, the acceleration of the universe and the anomalous rotation rates of galaxies.

Although much progress has been made in high-energy, quantum, and astronomical physics, many everyday phenomena involving complexity, chaos, or turbulence are still poorly understood.
Complex problems that seem like they could be solved by a clever application of dynamics and mechanics remain unsolved; examples include the formation of sand-piles, nodes in trickling water, the shape of water droplets, mechanisms of surface tension catastrophes, and self-sorting in shaken heterogeneous collections.

These complex phenomena have received growing attention since the 1970s for several reasons, including the availability of modern mathematical methods and computers, which enabled complex systems to be modeled in new ways. Complex physics has become part of increasingly interdisciplinary research, as exemplified by the study of turbulence in aerodynamics and the observation of pattern formation in biological systems.

Vocabulary

★natural science 自然科学
academic disciplines 学科
astronomy 天文学
in their own right 凭他们本身的实力
intersects 相交,交叉
interdisciplinary 交叉学科的,跨学科的
★quantum 量子的
theoretical breakthroughs 理论突破
★electromagnetism 电磁学
dramatically 显著地
★thermodynamics 热力学
★calculus 微积分
validity
★classical mechanics 经典力学
chaos 混沌
literate 学者
★quantum mechanics 量子力学
★thermodynamics and statistical mechanics 热力学与统计物理
★special relativity 狭义相对论
is concerned with 关注,讨论,考虑
acoustics 声学
★optics 光学
statics 静力学
at rest 静息
kinematics 运动学
★dynamics 动力学
ultrasonics 超声学
manipulation 操作,处理,使用
infrared 红外
ultraviolet 紫外
radiation 辐射
reflection 反射
refraction 折射
★interference 干涉
★diffraction 衍射
dispersion 散射
★polarization 极化,偏振
internal energy 内能
Electricity 电性
Magnetism 磁性
intimate 亲密的
induces 诱导,感应
scale 尺度
★elementary particles 基本粒子
★high-energy physics 高能物理
particle accelerators 粒子加速器
valid 有效的,正当的
★discrete 离散的
continuous 连续的
complementary 互补的
★frame of reference 参照系
★the special theory of relativity 狭义相对论
★general theory of relativity 广义相对论
gravitation 重力,万有引力
explicit 详细的,清楚的
★quantum field theory 量子场论
★condensed matter physics 凝聚态物理
astrophysics 天体物理
geophysics 地球物理
Universalist 博学多才者
★Macroscopic 宏观
Exotic 奇异的
★Superconducting 超导
Ferromagnetic 铁磁质
Antiferromagnetic 反铁磁质
★Spin 自旋
Lattice 晶格,点阵,网格
★Society 社会,学会
★microscopic 微观的
hyperfine splitting 超精细分裂
fission 分裂,裂变
fusion 熔合,聚变
constituents 成分,组分
accelerators 加速器
detectors 检测器
★quarks 夸克
lepton 轻子
gauge bosons 规范玻色子
gluons 胶子
★Higgs boson 希格斯玻色子
CERN 欧洲核子研究中心
★Magnetic Resonance Imaging 磁共振成像,核磁共振
ion implantation 离子注入
radiocarbon dating 放射性碳年代测定法
geology 地质学
archaeology 考古学
stellar 恒星
cosmology 宇宙论
celestial bodies 天体
Hubble diagram 哈勃图
Rival 竞争的
★Big Bang 大爆炸
nucleo-synthesis 核聚合,核合成
pillar 支柱
cosmological principle 宇宙学原理
ΛCDM model Λ-冷暗物质模型
cosmic inflation 宇宙膨胀
fabricate 制造,建造
spintronics 自旋电子元件,自旋电子学
★neutrinos 中微子
superstring 超弦
baryon 重子
turbulence 湍流,扰动,骚动
catastrophes 突变,灾变,灾难
heterogeneous collections 异质性集合
pattern formation 模式形成

2 Classical mechanics 经典力学

Introduction

In physics, classical mechanics is one of the two major sub-fields of mechanics, which is concerned with the set of physical laws describing the motion of bodies under the action of a system of forces. The study of the motion of bodies is an ancient one, making classical mechanics one of the oldest and largest subjects in science, engineering and technology.

Classical mechanics describes the motion of macroscopic objects, from projectiles to parts of machinery, as well as astronomical objects, such as spacecraft, planets, stars, and galaxies. Besides this, many specializations within the subject deal with gases, liquids, solids, and other specific sub-topics.

Classical mechanics provides extremely accurate results as long as the domain of study is restricted to large objects and the speeds involved do not approach the speed of light. When the objects being dealt with become sufficiently small, it becomes necessary to introduce the other major sub-field of mechanics, quantum mechanics, which reconciles the macroscopic laws of physics with the atomic nature of matter and handles the wave–particle duality of atoms and molecules. In the case of high velocity objects approaching the speed of light, classical mechanics is enhanced by special relativity.
General relativity unifies special relativity with Newton's law of universal gravitation, allowing physicists to handle gravitation at a deeper level.

The initial stage in the development of classical mechanics is often referred to as Newtonian mechanics, and is associated with the physical concepts employed by and the mathematical methods invented by Newton himself, in parallel with Leibniz【莱布尼兹】, and others.

Later, more abstract and general methods were developed, leading to reformulations of classical mechanics known as Lagrangian mechanics and Hamiltonian mechanics. These advances were largely made in the 18th and 19th centuries, and they extend substantially beyond Newton's work, particularly through their use of analytical mechanics. Ultimately, the mathematics developed for these were central to the creation of quantum mechanics.

Description of classical mechanics

The following introduces the basic concepts of classical mechanics. For simplicity, it often models real-world objects as point particles, objects with negligible size. The motion of a point particle is characterized by a small number of parameters: its position, mass, and the forces applied to it.

In reality, the kind of objects that classical mechanics can describe always have a non-zero size. (The physics of very small particles, such as the electron, is more accurately described by quantum mechanics.) Objects with non-zero size have more complicated behavior than hypothetical point particles, because of the additional degrees of freedom; for example, a baseball can spin while it is moving. However, the results for point particles can be used to study such objects by treating them as composite objects, made up of a large number of interacting point particles. The center of mass of a composite object behaves like a point particle.

Classical mechanics uses common-sense notions of how matter and forces exist and interact.
It assumes that matter and energy have definite, knowable attributes such as where an object is in space and its speed. It also assumes that objects may be directly influenced only by their immediate surroundings, known as the principle of locality.

In quantum mechanics objects may have unknowable position or velocity, or instantaneously interact with other objects at a distance.

Position and its derivatives

The position of a point particle is defined with respect to an arbitrary fixed reference point, O, in space, usually accompanied by a coordinate system, with the reference point located at the origin of the coordinate system. It is defined as the vector r from O to the particle.

In general, the point particle need not be stationary relative to O, so r is a function of t, the time elapsed since an arbitrary initial time.

In pre-Einstein relativity (known as Galilean relativity), time is considered an absolute, i.e., the time interval between any given pair of events is the same for all observers. In addition to relying on absolute time, classical mechanics assumes Euclidean geometry for the structure of space.

Velocity and speed

The velocity, or the rate of change of position with time, is defined as the derivative of the position with respect to time. In classical mechanics, velocities are directly additive and subtractive as vector quantities; they must be dealt with using vector analysis.

When both objects are moving in the same direction, the difference can be given in terms of speed only by ignoring direction.

Acceleration

The acceleration, or rate of change of velocity, is the derivative of the velocity with respect to time (the second derivative of the position with respect to time).

Acceleration can arise from a change with time of the magnitude of the velocity or of the direction of the velocity or both.
If only the magnitude v of the velocity decreases, this is sometimes referred to as deceleration, but generally any change in the velocity with time, including deceleration, is simply referred to as acceleration.

Inertial frames of reference

While the position and velocity and acceleration of a particle can be referred to any observer in any state of motion, classical mechanics assumes the existence of a special family of reference frames in terms of which the mechanical laws of nature take a comparatively simple form. These special reference frames are called inertial frames.

An inertial frame is such that when an object without any force interactions (an idealized situation) is viewed from it, it appears either to be at rest or in a state of uniform motion in a straight line. This is the fundamental definition of an inertial frame. They are characterized by the requirement that all forces entering the observer's physical laws originate in identifiable sources (charges, gravitational bodies, and so forth).

A non-inertial reference frame is one accelerating with respect to an inertial one, and in such a non-inertial frame a particle is subject to acceleration by fictitious forces that enter the equations of motion solely as a result of its accelerated motion, and do not originate in identifiable sources. These fictitious forces are in addition to the real forces recognized in an inertial frame.

A key concept of inertial frames is the method for identifying them. For practical purposes, reference frames that are un-accelerated with respect to the distant stars are regarded as good approximations to inertial frames.

Forces; Newton's second law

Newton was the first to mathematically express the relationship between force and momentum. Some physicists interpret Newton's second law of motion as a definition of force and mass, while others consider it a fundamental postulate, a law of nature.
Either interpretation has the same mathematical consequences, historically known as "Newton's Second Law":

$$\mathbf{F} = \frac{\mathrm{d}\mathbf{p}}{\mathrm{d}t} = \frac{\mathrm{d}(m\mathbf{v})}{\mathrm{d}t} = m\mathbf{a}$$

The quantity mv is called the (canonical) momentum. The net force on a particle is thus equal to the rate of change of momentum of the particle with time.

So long as the force acting on a particle is known, Newton's second law is sufficient to…
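
As a rough numerical illustration of the relation between force and momentum stated above, the following Python sketch estimates F = d(mv)/dt by finite differences. The function name `net_force`, the 2 kg mass, and the constant-acceleration trajectory are hypothetical choices made for this example only; the mass is assumed constant.

```python
import numpy as np

def net_force(mass, velocity, times):
    """Estimate F = d(m v)/dt by finite differences, assuming constant mass."""
    momentum = mass * np.asarray(velocity, dtype=float)
    # Differentiate each momentum component with respect to time.
    return np.gradient(momentum, times, axis=0)

# Hypothetical data: a 2 kg particle with constant acceleration (0, -9.8) m/s^2.
mass = 2.0
times = np.linspace(0.0, 1.0, 11)
accel = np.array([0.0, -9.8])
velocity = np.array([3.0, 4.0]) + times[:, None] * accel

force = net_force(mass, velocity, times)  # one force vector per time sample
```

Because the velocity here is linear in time, the finite-difference estimate agrees with m·a at every sample; for a general trajectory it is only an approximation whose accuracy depends on the time step.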

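RKHS norm: reply

What is the RKHS norm?

"RKHS norm" is short for the norm of a reproducing kernel Hilbert space (RKHS).

In machine learning, the RKHS norm is the measure used to quantify the "length" or "size" of a function in an RKHS.

This article answers the question step by step and discusses the properties and applications of the norm in detail.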

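Step 1: Introducing the RKHS

A reproducing kernel Hilbert space (RKHS) is an important concept in functional analysis: a space of functions equipped with an inner product and the norm that the inner product induces.

A Hilbert space H of functions on a set X is an RKHS if it has a kernel function K on X × X satisfying certain conditions: K is symmetric and positive semi-definite, K(x, ·) belongs to H for every x, and K reproduces function values in the sense stated below.

Specifically, every f in H can be evaluated through an inner product: f(x) = ⟨f, Φ(x)⟩, where Φ(x) = K(x, ·) is the feature map and ⟨·, ·⟩ is the inner product of H.

An important property of an RKHS is the reproducing property: for any given x and any f in H, the kernel section K(x, ·) satisfies ⟨f, K(x, ·)⟩ = f(x).

Step 2: Defining the RKHS norm

The RKHS norm is the measure defined to quantify the "length" or "size" of a function in the RKHS.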

For a function f in the RKHS, the norm ∥f∥ is defined by ∥f∥^2 = ⟨f, f⟩, where ⟨·, ·⟩ is the inner product of the RKHS.
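
For a function written as a finite kernel expansion, f = Σ_i α_i K(x_i, ·), the reproducing property gives ⟨f, f⟩ = Σ_i Σ_j α_i α_j K(x_i, x_j), so the norm can be computed from a Gram matrix. The Python sketch below illustrates this under stated assumptions: the Gaussian kernel, the function names, and the sample centers and coefficients are all hypothetical choices, not taken from the text.

```python
import numpy as np

def gaussian_kernel(a, b, bandwidth=1.0):
    """Gram matrix K[i, j] = exp(-(a_i - b_j)^2 / (2 * bandwidth^2)) for 1-D inputs."""
    diff = np.asarray(a, dtype=float)[:, None] - np.asarray(b, dtype=float)[None, :]
    return np.exp(-diff**2 / (2.0 * bandwidth**2))

def rkhs_norm(alpha, centers):
    """Norm of f = sum_i alpha_i K(x_i, .), computed as sqrt(alpha^T K alpha)."""
    gram = gaussian_kernel(centers, centers)
    return float(np.sqrt(alpha @ gram @ alpha))

def evaluate(alpha, centers, x):
    """f(x) = sum_i alpha_i K(x_i, x); by the reproducing property this is <f, K(x, .)>."""
    return gaussian_kernel(np.atleast_1d(x), centers) @ alpha

# Hypothetical expansion with three centers.
centers = np.array([-1.0, 0.0, 2.0])
alpha = np.array([0.5, -1.0, 2.0])

norm_f = rkhs_norm(alpha, centers)
value_at_point = evaluate(alpha, centers, 0.3)[0]
```

One consequence worth noting: since K(x, x) = 1 for this kernel, the reproducing property and the Cauchy-Schwarz inequality together give |f(x)| ≤ ∥f∥ at every point x, so a small RKHS norm bounds the function values.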

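Step 3: Properties of the RKHS norm

1. Non-negativity: the RKHS norm is non-negative, ∥f∥ ≥ 0, and equals zero only when f = 0.

2. Homogeneity: for any scalar c, ∥cf∥ = |c| ∥f∥.

3. Triangle inequality: for any functions f and g, ∥f+g∥ ≤ ∥f∥ + ∥g∥.

4. Norm equivalence: a second norm ∥·∥′ on the same space is said to be equivalent to the RKHS norm if there exist positive constants γ1 and γ2, independent of f, such that γ1∥f∥ ≤ ∥f∥′ ≤ γ2∥f∥ for every f.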
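
Properties 1 to 3 can be spot-checked numerically for functions given as kernel expansions over a shared set of centers. This is only a sanity check on random coefficients, not a proof; the Gaussian kernel, the centers, and all names below are assumptions made for the illustration.

```python
import numpy as np

def gram_matrix(points):
    """Gaussian Gram matrix with unit bandwidth for 1-D points."""
    diff = points[:, None] - points[None, :]
    return np.exp(-0.5 * diff**2)

def norm(coeffs, K):
    """RKHS norm of sum_i coeffs[i] K(x_i, .); clips tiny negative round-off."""
    return float(np.sqrt(max(coeffs @ K @ coeffs, 0.0)))

rng = np.random.default_rng(0)
points = np.linspace(-2.0, 2.0, 6)
K = gram_matrix(points)

# Coefficient vectors of two hypothetical functions f and g on the same centers.
f = rng.normal(size=6)
g = rng.normal(size=6)
c = -3.0

nonnegative = norm(f, K) >= 0.0
homogeneous = np.isclose(norm(c * f, K), abs(c) * norm(f, K))
triangle = norm(f + g, K) <= norm(f, K) + norm(g, K) + 1e-12
```

The negative scalar c is chosen deliberately: it shows that the absolute value |c| is needed in the homogeneity property.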

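The space normed in this way has further properties, such as completeness (every RKHS is a Hilbert space) and, under suitable conditions on the kernel and its domain, separability. These properties make the RKHS norm an important tool for working in an RKHS.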

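RKHS norm: reply

The RKHS norm measures the size of vectors (that is, functions) in a reproducing kernel Hilbert space (RKHS).

An RKHS is a special type of function space that comes with a particular function, called the reproducing kernel, which describes the inner products between functions in the space.

On an RKHS we can define a norm that measures the size of the vectors in the space.

This article introduces, step by step, the concept of the RKHS norm, its properties, and its applications to practical problems.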

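First, we need to understand what an RKHS is.

An RKHS is a function space with a special inner-product structure, in which inner products between functions can be computed through the reproducing kernel.

The reproducing kernel can be viewed as a special function attached to the RKHS with particular properties, namely symmetry and positive (semi-)definiteness; linearity is a property of the inner product rather than of the kernel itself.

Based on the reproducing kernel, we can define the inner product between functions in the RKHS, which gives the RKHS the structure of an inner-product space.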

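In an RKHS we can define a norm to measure the size of a vector.

The RKHS norm is a particular norm, defined through the properties of the reproducing kernel.

Specifically, the RKHS norm of a vector f is the square root of its inner product with itself: ∥f∥ = sqrt(⟨f, f⟩).

An important consequence of this definition is that the RKHS norm satisfies the three standard properties of a norm: non-negativity, homogeneity, and the triangle inequality.

The RKHS norm also satisfies the Cauchy-Schwarz inequality: for any two vectors f and g, the absolute value of their inner product is at most the product of their norms, |⟨f, g⟩| ≤ ∥f∥ ∥g∥.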
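
The Cauchy-Schwarz inequality can be illustrated numerically for two kernel expansions on shared centers, where the inner product reduces to a quadratic form in the Gram matrix. The Gaussian kernel, the centers, and the random coefficients below are hypothetical choices for this sketch.

```python
import numpy as np

# Gaussian Gram matrix with unit bandwidth on six hypothetical centers.
points = np.linspace(-2.0, 2.0, 6)
K = np.exp(-0.5 * (points[:, None] - points[None, :]) ** 2)

rng = np.random.default_rng(1)
a = rng.normal(size=6)  # coefficients of f = sum_i a_i K(x_i, .)
b = rng.normal(size=6)  # coefficients of g = sum_i b_i K(x_i, .)

inner_fg = float(a @ K @ b)                    # <f, g>
norm_f = float(np.sqrt(max(a @ K @ a, 0.0)))   # ||f||
norm_g = float(np.sqrt(max(b @ K @ b, 0.0)))   # ||g||

cauchy_schwarz_holds = abs(inner_fg) <= norm_f * norm_g + 1e-12
```

The small tolerance only guards against floating-point round-off; the inequality itself is exact.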

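The RKHS norm has a wide range of applications in practical problems.

One important application is function approximation and interpolation.

In some situations we want to approximate an unknown function from known data points.

Using the reproducing kernel of the RKHS, we can construct an interpolant that approximates the unknown function by matching its values at the known data points.

Computing the RKHS norm of the interpolant then gives a way to assess the fit: the norm measures how complex the interpolant is, and the kernel interpolant has the smallest norm among all functions in the RKHS that match the data.

This approach is widely used in signal processing, image processing, and machine learning.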
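
A minimal sketch of kernel interpolation follows, assuming a Gaussian kernel and a handful of samples of sin(x) standing in for the unknown function. The coefficients α solve Kα = y, the interpolant is s(x) = Σ_i α_i K(x_i, x), and its squared RKHS norm is αᵀKα = yᵀα. The kernel, bandwidth, data, and names are all assumptions made for illustration.

```python
import numpy as np

def kernel(a, b, bandwidth=0.5):
    """Gaussian kernel matrix between two sets of 1-D points."""
    diff = np.asarray(a, dtype=float)[:, None] - np.asarray(b, dtype=float)[None, :]
    return np.exp(-diff**2 / (2.0 * bandwidth**2))

# Hypothetical data: samples of a function treated as unknown.
x_train = np.array([0.0, 0.5, 1.0, 1.5, 2.0])
y_train = np.sin(x_train)

# Solve K alpha = y for the expansion coefficients of the interpolant.
K = kernel(x_train, x_train)
alpha = np.linalg.solve(K, y_train)

def interpolant(x):
    """s(x) = sum_i alpha_i K(x_i, x)."""
    return kernel(np.atleast_1d(x), x_train) @ alpha

fitted = interpolant(x_train)        # reproduces the data values
norm_sq = float(y_train @ alpha)     # squared RKHS norm, equal to alpha^T K alpha
```

Solving the linear system directly is reasonable for a few well-separated points; for many or closely spaced points the Gram matrix becomes ill-conditioned, and a regularized variant is usually preferred.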

Beyond function approximation and interpolation, the RKHS norm can also be used to solve optimization problems.