Tuning the Multivariate Poisson Mixture Model for Clustering Supermarket Shoppers

- Format: PDF

- Size: 321.37 KB

- Pages: 9

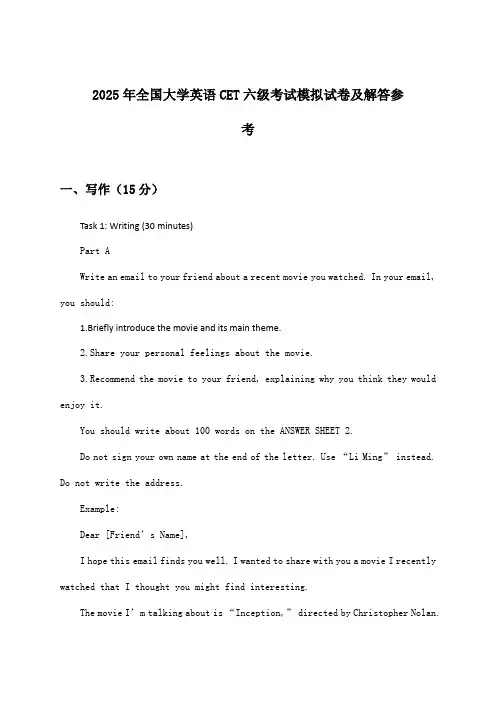

2025 National College English Test Band 6 (CET-6) Mock Exam with Reference Answers

I. Writing (15 points)

Task 1: Writing (30 minutes)

Part A

Write an email to your friend about a recent movie you watched. In your email, you should:
1. Briefly introduce the movie and its main theme.
2. Share your personal feelings about the movie.
3. Recommend the movie to your friend, explaining why you think they would enjoy it.

You should write about 100 words on ANSWER SHEET 2. Do not sign your own name at the end of the letter. Use “Li Ming” instead. Do not write the address.

Example:

Dear [Friend’s Name],

I hope this email finds you well. I wanted to share with you a movie I recently watched that I thought you might find interesting.

The movie I’m talking about is “Inception,” directed by Christopher Nolan. It revolves around the concept of dream manipulation and the layers of reality. The story follows Dom Cobb, a skilled thief who specializes in extracting secrets from within the subconscious during the dream state.

I was deeply impressed by the movie’s intricate plot and the exceptional performances of the cast. The visual effects were breathtaking, and the soundtrack was perfectly matched to the action sequences. The movie made me think a lot about the nature of reality and the power of dreams.

I highly recommend “Inception” to you. I believe it will be a captivating experience, especially if you enjoy films that challenge your perceptions and make you think.

Looking forward to your thoughts on this movie.

Best regards,
Li Ming

Analysis:

This example follows the structure required for Part A of the writing task. It starts with a friendly greeting and a brief introduction to the subject of the email, which is the movie “Inception.” The writer then shares their personal feelings about the movie, highlighting the plot, the cast’s performances, the visual effects, and the soundtrack. This personal touch helps to engage the reader and provide a more authentic recommendation.

Finally, the writer makes a clear recommendation, explaining that they believe the movie would be enjoyable for their friend based on its thought-provoking nature and entertainment value. The email concludes with a friendly sign-off, maintaining a warm and inviting tone.

II. Listening Comprehension: Long Conversations (multiple choice, 8 points)

Question 1

Transcript:

M: Hi, Lisa. How was your trip to Beijing last weekend?
W: Oh, it was amazing! I’ve always wanted to visit the Forbidden City. The architecture was so impressive.
M: I’m glad you enjoyed it. By the way, did you manage to visit the Great Wall?
W: Yes, I did. It was a long journey, but it was worth it. The Wall was even more magnificent in person.
M: Did you have any problems with transportation?
W: Well, the subway system was very convenient, but some of the bus routes were confusing. I ended up getting lost a couple of times.
M: That’s a common problem. It’s always a good idea to download a map or use a GPS app.
W: Definitely. I also found the people in Beijing to be very friendly and helpful. They spoke English well, too.
M: That’s great to hear. I’m thinking of visiting Beijing next month. Are there any other places you would recommend?
W: Oh, definitely! I would suggest visiting the Summer Palace and the Temple of Heaven. They are both beautiful and culturally significant.
M: Thanks for the tips, Lisa. I can’t wait to see these places myself.
W: You’re welcome. Have a great trip!

Multiple-choice questions:

1. Why did Lisa visit Beijing?
A. To visit the Great Wall.
B. To see her friends.
C. To experience the local culture.
D. To study Chinese history.

2. How did Lisa feel about the Forbidden City?
A. It was boring.
B. It was too crowded.
C. It was impressive.
D. It was not as beautiful as she expected.

3. What was the biggest challenge Lisa faced during her trip?
A. Finding accommodation.
B. Getting lost.
C. Eating healthy food.
D. Visiting all the tourist spots.

4. What other places does Lisa recommend visiting in Beijing?
A. The Summer Palace and the Temple of Heaven.
B. The Great Wall and the Forbidden City.
C. The National Museum and the CCTV Tower.
D. The Wangfujing Street and the Silk Market.

Answers: 1. C  2. C  3. B  4. A

Question 2

Part Two: Listening Comprehension

Section C: Long Conversations

In this section, you will hear one long conversation. At the end of the conversation, you will hear some questions. Both the conversation and the questions will be spoken only once. After you hear a question, you must choose the best answer from the four choices marked A), B), C), and D).

1. What is the main topic of the conversation?
A) The importance of cultural exchange.
B) The challenges of teaching English abroad.
C) The experiences of a language teacher in China.
D) The impact of language barriers on communication.

2. Why does the speaker mention studying Chinese?
A) To show his respect for Chinese culture.
B) To express his gratitude for the Chinese students’ hospitality.
C) To emphasize the importance of language learning.
D) To explain his reasons for choosing to teach English in China.

3. According to the speaker, what is one of the difficulties he faced in teaching English?
A) The students’ lack of motivation.
B) The limited resources available.
C) The cultural differences between Chinese and Western students.
D) The high expectations from the school administration.

4. How does the speaker plan to overcome the language barrier in his future work?
A) By learning more Chinese.
B) By using visual aids and non-verbal communication.
C) By collaborating with local language experts.
D) By relying on his previous teaching experience.

Answers: 1. C  2. C  3. C  4. B

III. Listening Comprehension: Passages (multiple choice, 7 points)

Question 1

Passage:

A new study has found that the way we speak can affect our relationships and even our physical health. Researchers at the University of California, Los Angeles, have been investigating the connection between language and well-being for several years.
They have discovered that positive language can lead to better health outcomes, while negative language can have the opposite effect.

The study involved 300 participants who were monitored for a period of one year. The participants were asked to keep a daily diary of their interactions with others, including both positive and negative comments. The researchers found that those who used more positive language reported fewer physical symptoms and a greater sense of well-being.

Dr. Emily Thompson, the lead researcher, explained, “We were surprised to see the impact that language can have on our health. It’s not just about what we say, but also how we say it. A gentle tone and supportive language can make a significant difference.”

Here are some examples of positive and negative language:
Positive Language: “I appreciate your help with the project.”
Negative Language: “You always mess up the project.”

The researchers also looked at the effects of language on relationships. They found that couples who used more positive language were more likely to report a satisfying relationship, while those who used negative language were more likely to experience relationship stress.

Questions:

1. What is the main focus of the study conducted by the University of California, Los Angeles?
A) The impact of diet on physical health.
B) The connection between language and well-being.
C) The effects of exercise on mental health.
D) The role of social media in relationships.

2. Which of the following is a positive example of language from the passage?
A) “You always mess up the project.”
B) “I can’t believe you did that again.”
C) “I appreciate your help with the project.”
D) “This is a waste of time.”

3. According to the study, what is the likely outcome for couples who use negative language in their relationships?
A) They will have a more satisfying relationship.
B) They will experience fewer physical symptoms.
C) They will report a greater sense of well-being.
D) They will likely experience relationship stress.

Answers: 1. B  2. C  3. D

Question 2

Passage One

In the United States, there is a long-standing debate over the best way to educate children. One of the most controversial issues is the debate between traditional public schools and charter schools.

Traditional public schools are operated by the government and are funded by tax dollars. They are subject to strict regulations and are required to follow a standardized curriculum. Teachers in traditional public schools are typically unionized and receive benefits and pensions.

On the other hand, charter schools are publicly funded but operate independently of local school districts. They are free to set their own curriculum and teaching methods. Charter schools often have a longer school day and a more rigorous academic program. They are also subject to performance-based evaluations, which can lead to their closure if they do not meet certain standards.

Proponents of charter schools argue that they provide more choices for parents and that they can offer a more personalized education for students. They also claim that charter schools are more accountable because they are subject to more direct oversight and can be closed if they fail to meet their goals.

Opponents of charter schools argue that they take resources away from traditional public schools and that they do not provide a level playing field for all students.
They also claim that charter schools can be more selective in their admissions process, which may lead to a lack of diversity in the student body.

Questions:

1. What is a key difference between traditional public schools and charter schools?
A) Funding source
B) Curriculum
C) Teacher unions
D) Academic rigor

2. According to the passage, what is a potential advantage of charter schools?
A) They are subject to fewer regulations.
B) They offer more choices for parents.
C) They are more likely to receive government funding.
D) They typically have a shorter school day.

3. What is a common concern expressed by opponents of charter schools?
A) They are less accountable for their performance.
B) They may lead to a lack of diversity in the student body.
C) They are more expensive for local taxpayers.
D) They do not follow a standardized curriculum.

Answers:
1. B) Curriculum
2. B) They offer more choices for parents.
3. B) They may lead to a lack of diversity in the student body.

IV. Listening Comprehension: News Reports (multiple choice, 20 points)

Question 1

News Report

A: Good morning, everyone. Welcome to today’s news broadcast. Here is the latest news.

News Anchor: This morning, the World Health Organization (WHO) announced that the number of confirmed cases of a new strain of the H1N1 flu virus has reached 10,000 worldwide. The WHO has declared the outbreak a public health emergency of international concern.
Health officials are urging countries to take immediate measures to contain the spread of the virus.

Q1: What is the main topic of the news report?
A) The announcement of a new strain of the H1N1 flu virus.
B) The declaration of a public health emergency.
C) The measures taken to contain the spread of the virus.
D) The number of confirmed cases of the new strain.
Answer: B

Q2: According to the news report, who declared the outbreak a public health emergency?
A) The World Health Organization (WHO)
B) The Centers for Disease Control and Prevention (CDC)
C) The European Union (EU)
D) The United Nations (UN)
Answer: A

Q3: What is the main purpose of the health officials’ urging?
A) To increase awareness about the flu virus.
B) To encourage people to get vaccinated.
C) To take immediate measures to contain the spread of the virus.
D) To provide financial assistance to affected countries.
Answer: C

Question 2

News Report 1:

[Background music fades in]

Narrator: “This morning’s top news includes a major announcement from the Ministry of Education regarding the upcoming changes to the College English Test Band Six (CET-6). Here’s our correspondent, Li Hua, with more details.”

Li Hua: “Good morning, everyone. The Ministry of Education has just announced that starting from next year, the CET-6 will undergo significant modifications. The most notable change is the inclusion of a new speaking section, which will be mandatory for all test-takers. This decision comes in response to the increasing demand for English proficiency in various fields.
Let’s go to the Education Department for more information.”

[Background music fades out]

Questions:

1. What is the main topic of this news report?
A) The cancellation of the CET-6 exam.
B) The addition of a new speaking section to the CET-6.
C) The difficulty level of the CET-6 increasing.
D) The results of the CET-6 exam.

2. Why has the Ministry of Education decided to include a new speaking section in the CET-6?
A) To reduce the number of test-takers.
B) To make the exam more difficult.
C) To meet the demand for English proficiency.
D) To replace the written test with an oral test.

3. What will be the impact of this change on students preparing for the CET-6?
A) They will need to focus more on writing skills.
B) They will have to learn a new type of test format.
C) They will no longer need to take the exam.
D) They will be able to choose between written and oral tests.

Answers: 1. B  2. C  3. B

Question 3

You will hear a news report. For each question, choose the best answer from the four choices given.

Listen to the news report and answer the following questions:

1.
A) The number of tourists visiting the city has doubled.
B) The city’s tourism revenue has increased significantly.
C) The new airport has attracted many international tourists.
D) The city’s infrastructure is not ready for the influx of tourists.

2.
A) The government plans to invest heavily in transportation.
B) Local businesses are benefiting from the tourism boom.
C) The city is experiencing traffic congestion and overcrowding.
D) The city is working on expanding its hotel capacity.

3.
A) The city’s mayor has expressed concern about the impact on local culture.
B) The tourism industry is collaborating with local communities to preserve traditions.
C) There are concerns about the negative environmental effects of tourism.
D) The city is implementing strict regulations to control tourist behavior.

Answers:
1. B) The city’s tourism revenue has increased significantly.
2. C) The city is experiencing traffic congestion and overcrowding.
3. B) The tourism industry is collaborating with local communities to preserve traditions.

V. Reading Comprehension: Vocabulary (fill in the blanks, 5 points)

Question 1

Read the following passage and then complete the sentences by choosing the most suitable words or phrases from the list below. Each word or phrase may be used once, more than once, or not at all.

Passage:

In the past few decades, the internet has revolutionized the way we communicate and access information. With just a few clicks, we can now connect with people from all over the world, share our thoughts and experiences, and even conduct business transactions. This rapid advancement in technology has not only brought convenience to our lives but has also raised several challenges and concerns.

1. _________ (1) the internet has made it easier for us to stay connected with friends and family, it has also led to a decrease in face-to-face interactions.
2. The increasing reliance on digital devices has raised concerns about the impact on our physical and mental health.
3. Despite the many benefits, there are also significant _________ (2) associated with the internet, such as privacy breaches and cybersecurity threats.
4. To mitigate these risks, it is crucial for individuals and organizations to adopt robust security measures.
5. In the future, we need to strike a balance between embracing technological advancements and maintaining a healthy lifestyle.

List of Words and Phrases:
a) convenience
b) challenges
c) privacy breaches
d) physical
e) significant
f) mental
g) privacy
h) embrace
i) reliance
j) face-to-face

1. _________ (1)
2. _________ (2)

Question 2

Reading Passages

Passage One

Many people believe that a person’s personality is established at birth and remains unchanged throughout life. This view is supported by the idea that personality is determined by genetic factors. However, recent studies have shown that personality can be influenced by a variety of environmental factors as well.

The word “personality” can be defined as the unique set of characteristics that distinguish one individual from another.
It includes traits such as extroversion, neuroticism, and agreeableness. These traits are often measured using psychological tests.

According to the passage, what is the main idea about personality?
A. Personality is solely determined by genetic factors.
B. Personality remains unchanged throughout life.
C. Personality is influenced by both genetic and environmental factors.
D. Personality is determined by a combination of psychological tests.

Vocabulary Understanding

1. The unique set of characteristics that distinguish one individual from another is referred to as ________.
A. personality
B. genetic factors
C. environmental factors
D. psychological tests

2. The view that personality is established at birth and remains unchanged throughout life is ________.
A. supported
B. challenged
C. irrelevant
D. misunderstood

3. According to the passage, traits such as extroversion, neuroticism, and agreeableness are part of ________.
A. genetic factors
B. environmental factors
C. personality
D. psychological tests

4. The passage suggests that personality can be influenced by ________.
A. genetic factors
B. environmental factors
C. both genetic and environmental factors
D. neither genetic nor environmental factors

5. The word “personality” is best defined as ________.
A. the unique set of characteristics that distinguish one individual from another
B. the genetic factors that determine personality
C. the environmental factors that influence personality
D. the psychological tests used to measure personality

Answers: 1. A  2. A  3. C  4. C  5. A

VI. Reading Comprehension: Long Passages (multiple choice, 10 points)

First Question

Passage:

In the digital age, technology has transformed almost every aspect of our lives, including education. One significant impact technology has had on learning is through online platforms that offer a wide variety of courses and educational materials to anyone with internet access. This democratization of knowledge means that individuals no longer need to rely solely on traditional educational institutions for learning.
However, while online learning provides unprecedented access to information, it also poses challenges such as ensuring the quality of the content and maintaining student engagement without the structure of a classroom setting. As educators continue to adapt to these changes, it’s clear that technology will play an increasingly important role in shaping the future of education.

1. According to the passage, what is one major advantage of online learning?
A) It guarantees higher academic achievements.
B) It makes educational resources more accessible.
C) It eliminates the need for traditional learning methods entirely.
D) It ensures that all students remain engaged with the material.

2. What challenge does online learning present according to the text?
A) It makes it difficult to assess the quality of educational content.
B) It increases the reliance on traditional educational institutions.
C) It decreases the amount of available educational material.
D) It simplifies the process of student engagement.

3. The term “democratization of knowledge” in this context refers to:
A) The ability of people to vote on educational policies.
B) The equal distribution of printed books among citizens.
C) The process by which governments control online information.
D) The widespread availability of educational resources via the internet.

4. How do educators respond to the changes brought about by technology in education?
A) By rejecting technological advancements in favor of conventional methods.
B) By adapting their teaching practices to incorporate new technologies.
C) By insisting that online learning should replace traditional classrooms.
D) By ignoring the potential benefits of online learning platforms.

5. Based on the passage, which statement best reflects the future outlook for education?
A) Traditional educational institutions will become obsolete.
B) Technology will have a diminishing role in the education sector.
C) Online learning will complement but not completely replace traditional education.
D) Students will no longer require any form of structured learning environment.

Answers: 1. B  2. A  3. D  4. B  5. C

This is a fictional example designed for illustrative purposes. In actual CET exams, the passages and questions would vary widely in topic and complexity.

Question 2

Reading Passages

Passage One

Global warming is one of the most pressing environmental issues facing the world today. It refers to the long-term increase in Earth’s average surface temperature, primarily due to human activities, particularly the emission of greenhouse gases. The consequences of global warming are far-reaching, affecting ecosystems, weather patterns, sea levels, and human health.

The Intergovernmental Panel on Climate Change (IPCC) has warned that if global warming continues at its current rate, we can expect more extreme weather events, such as hurricanes, droughts, and floods. Additionally, rising sea levels could displace millions of people, leading to social and economic instability.

Several measures have been proposed to mitigate the effects of global warming. These include reducing greenhouse gas emissions, transitioning to renewable energy sources, and implementing sustainable agricultural practices.
However, despite the urgency of the situation, progress has been slow, and many countries have failed to meet their commitments under the Paris Agreement.

Questions:

1. What is the primary cause of global warming according to the passage?
A. Natural climate changes
B. Human activities
C. Ecosystem changes
D. Increased carbon dioxide levels in the atmosphere

2. Which of the following is NOT mentioned as a consequence of global warming?
A. Extreme weather events
B. Rising sea levels
C. Improved crop yields
D. Increased global biodiversity

3. What is the IPCC’s main concern regarding the current rate of global warming?
A. It is causing a decrease in Earth’s average surface temperature.
B. It is leading to more extreme weather events.
C. It is causing the Earth’s magnetic field to weaken.
D. It is causing the ozone layer to thin.

4. What are some of the proposed measures to mitigate the effects of global warming?
A. Reducing greenhouse gas emissions, transitioning to renewable energy sources, and implementing sustainable agricultural practices.
B. Building more coal-fired power plants and expanding deforestation.
C. Increasing the use of fossil fuels and reducing the number of trees.
D. Ignoring the issue and hoping it will resolve itself.

5. Why has progress in addressing global warming been slow, according to the passage?
A. Because it is a complex issue that requires international cooperation.
B. Because people are not concerned about the consequences of global warming.
C. Because scientists do not have enough information about the issue.
D. Because the Paris Agreement has not been effective.

Answers: 1. B  2. C  3. B  4. A  5. A

VII. Reading Comprehension: Careful Reading (multiple choice, 20 points)

First Question

Passage:

In the age of rapid technological advancement, the role of universities has shifted beyond traditional academic pursuits to include fostering innovation and entrepreneurship among students. One such initiative taken by many institutions is the integration of technology incubators on campus.
These incubators serve as platforms where students can turn their innovative ideas into tangible products, thereby bridging the gap between theory and practice. Moreover, universities are increasingly collaborating with industry leaders to provide practical training opportunities that prepare students for the challenges of the modern workforce. Critics argue, however, that this shift might come at the cost of undermining the foundational academic disciplines that have historically formed the core of higher education.

Questions:

1. What is one key purpose of integrating technology incubators in universities according to the passage?
A) To reduce the cost of university education.
B) To bridge the gap between theory and practice.
C) To compete with other universities.
D) To focus solely on theoretical knowledge.
Answer: B) To bridge the gap between theory and practice.

2. According to the text, how are universities preparing students for the modern workforce?
A) By isolating them from industry professionals.
B) By providing practical training through collaboration with industry leaders.
C) By discouraging entrepreneurship.
D) By focusing only on historical academic disciplines.
Answer: B) By providing practical training through collaboration with industry leaders.

3. What concern do critics raise about the new initiatives in universities?
A) They believe it will enhance foundational academic disciplines.
B) They fear it could undermine the core of higher education.
C) They think it will make universities less competitive.
D) They are worried about the overemphasis on practical skills.
Answer: B) They fear it could undermine the core of higher education.

4. Which of the following best describes the role of universities in the current era as depicted in the passage?
A) Institutions that strictly adhere to traditional teaching methods.
B) Centers that foster innovation and entrepreneurship among students.
C) Organizations that discourage partnerships with industries.
D) Places that prevent students from engaging with real-world challenges.
Answer: B) Centers that foster innovation and entrepreneurship among students.

5. How does the passage suggest that technology incubators benefit students?
A) By ensuring they only focus on theoretical studies.
B) By giving them a platform to turn ideas into products.
C) By limiting their exposure to practical experiences.
D) By encouraging them to avoid modern workforce challenges.
Answer: B) By giving them a platform to turn ideas into products.

This set of questions aims to test comprehension skills including inference, detail recognition, and understanding the main idea of the given passage. Remember, this is a mock example and should be used for illustrative purposes only.

Second Question

Reading Passage:

The Future of Renewable Energy Sources

In recent years, there has been a growing interest in renewable energy sources due to their potential to reduce dependency on fossil fuels and mitigate the effects of climate change. Solar power, wind energy, and hydropower have all seen significant advancements in technology and cost-efficiency. However, challenges remain in terms of storage and distribution of these energy sources. For solar energy to become a viable primary energy source worldwide, it must overcome the limitations posed by weather conditions and geographical location. Wind energy faces similar challenges, particularly in areas with low wind speeds. Hydropower, while more consistent than both solar and wind energies, is limited

Primary School English, Volume 1, Unit 2 Test Paper

Time allowed: 90 minutes (total score: 100). Paper B

I. Comprehensive questions (100 questions, 100 points)

1. Multiple choice: What is the capital of Russia? A. Moscow B. St. Petersburg C. Kiev D. Minsk
2. Multiple choice: What do you call a large body of saltwater? A. River B. Ocean C. Pond D. Lake. Answer: B
3. Listening: The dog is _______ (barking) loudly.
4. Fill in the blank: The ____ (Ottoman) Empire lasted for over six centuries.
5. Fill in the blank: ____ (Rainforests) have many trees and receive a lot of rain.
6. Listening: A ____ has soft fur and likes to cuddle.
7. Fill in the blank: I want to create a ________ to celebrate love.
8. Multiple choice: What do you call the process of growing plants? A. Farming B. Gardening C. Agriculture D. All of the above. Answer: D
9. Fill in the blank: I like to take ________ (photos) of nature.
10. Listening: Chemical reactions can produce heat, light, or ______.
11. Listening: The bear catches _______ in the river.
12. Multiple choice: What do we call the frozen form of water? A. Ice B. Steam C. Liquid D. Vapor
13. Listening: A _______ can help provide food for your family.
14. Multiple choice: What is the capital of Singapore? A. Singapore B. Kuala Lumpur C. Jakarta D. Manila
15. Listening: The Pacific Ocean is __________ than the Atlantic Ocean.
16. Fill in the blank: The ________ (improvement of agricultural practices) ensures sustainability.
17. Listening: The stars are _______ (twinkling) in the sky.
18. Fill in the blank: The __________ is a large lake located in East Africa. (Lake Victoria)
19. Fill in the blank: I love to smell the _____ (roses).
20. Fill in the blank: I feel _______ when I sing.
21. Listening: Friction happens when two surfaces ______ (rub) against each other.
22. Fill in the blank: My dad is a great __________ (supporter) of my dreams.
23. Multiple choice: What is the term for the area of space where tiny particles collide and create cosmic rays? A. Cosmic Ray Zone B. Particle Accelerator C. High-Energy Zone D. Collision Zone
24. Listening: The process of separating mixtures is called ______.
25. Fill in the blank: I love to _______ (experience) new adventures.
26. Fill in the blank: A plant’s _____ (life cycle) includes germination, growth, and reproduction.
27. Multiple choice: What is the largest ocean on Earth? a. Atlantic Ocean b. Indian Ocean c. Arctic Ocean d. Pacific Ocean. Answer: d
28. Multiple choice: What is the opposite of 'thin'? A. Small B. Fat C. Narrow D. Slim
29. Multiple choice: What do you call a young female horse? A. Colt B. Filly C. Foal D. Mare. Answer: B
30. Listening: The __________ is the main pathway for trade and travel across Europe.
31. Fill in the blank: The Great Wall is located in ________ (China).
32. Listening: A _______ can help to demonstrate the principles of thermodynamics.
33. Listening: Dissolving sugar in water is a _______ change.
34. Multiple choice: What do you call the process of making food? A. Cooking B. Eating C. Cleaning D. Serving
35. Multiple choice: What is the name of the famous river in Peru? A. Amazon B. Ucayali C. Marañón D. All of the above
36. Multiple choice: What is the capital of Croatia? A. Zagreb B. Belgrade C. Ljubljana D. Sarajevo. Answer: A. Zagreb
37. Listening: She is _____ (talking) to her friend.
38. Listening: The chemical formula for phthalic acid is ______.
39. Fill in the blank: My ________ (toy name) can float on water.
40. Fill in the blank: The _____ (herb) in my garden is used for cooking.
41. Fill in the blank: _____ (Plant observation) teaches us about ecology.
42. Fill in the blank: The _______ (whale) communicates with others through songs.
43. Multiple choice: What do we call the time when you celebrate your birth? A. Anniversary B. Birthday C. Holiday D. Festival
44. Listening: The Earth's surface is shaped by both internal and ______ processes.
45. Listening: The __________ can indicate areas at risk of environmental degradation.
46. Multiple choice: What do you call a young kangaroo? A. Joey B. Pup C. Cub D. Kid. Answer: A
47. Multiple choice: What is the color of a ripe tomato? A. Green B. Yellow C. Red D. Orange. Answer: C
48. Listening: The flower is _______ (beautiful).
49. Multiple choice: What do bees make? A. Milk B. Honey C. Bread D. Jam. Answer: B
50. Listening: My dad works as a _____ (engineer/teacher).
51. Fill in the blank: The weather is _______ (very good).
52. Listening: The capital of the Maldives is _______.
53. Fill in the blank: I enjoy playing ______ (volleyball) at the beach during summer vacations.
54. Fill in the blank: My cousin loves to __________ (write) poetry.
55. Fill in the blank: The _______ (The French Revolution) overthrew the monarchy in France.
56. Fill in the blank: We saw a ________ in the park.
57. Fill in the blank: The country known for its pyramids is ________.
58. Fill in the blank: A _____ (urban greening) initiative can improve living conditions.
59. Fill in the blank: The ____ (peninsula) is a landform surrounded by water on three sides.
60. Multiple choice: How many legs does an octopus have? A. Six B. Eight C. Four D. Ten
61. Fill in the blank: The ________ is a small animal that hops around.
62. Listening: The __________ is a region known for its traditional crafts.
63. Which planet is closest to the Sun? A. Earth B. Venus C. Mercury D. Mars
64. Multiple choice: What is the name of the ocean on the east coast of the USA? A. Atlantic Ocean B. Pacific Ocean C. Indian Ocean D. Arctic Ocean. Answer: A
65. Fill in the blank: The scientist studies _____ (organisms) in the lab.
66. Fill in the blank: A __________ (gas chromatograph) separates and analyzes compounds in the gas phase.
67. Multiple choice: What do you call a person who plays the piano? A. Pianist B. Guitarist C. Drummer D. Violinist
68. Listening: The _______ can be very fragrant.
69. Multiple choice: What is the term for the amount of space an object occupies? A. Volume B. Area C. Mass D. Density. Answer: A
70. Multiple choice: What do we call a person who writes poetry? A. Novelist B. Poet C. Writer D. Dramatist. Answer: B
71. __________ reactions can produce heat or light.
72. Fill in the blank: My sister is __________ (understanding).
73. Fill in the blank: My niece is very ________.
74. Fill in the blank: The ________ (ecological interaction) is intricate.
75. Multiple choice: What do we breathe in? A. Water B. Carbon Dioxide C. Oxygen D. Helium. Answer: C
76. Fill in the blank: I enjoy ______ (together with friends) discussing books.
77. Multiple choice: Which of these is a large body of water? A. Lake B. River C. Ocean D. All of the above. Answer: D
78. Fill in the blank: The __________ (rich historical heritage) inform practices.
79. Listening: The boy has a new ________.
80. Fill in the blank: The filmmaker creates _____ (films) that tell stories.
81. Fill in the blank: The kids are _______ in the playground.
82. Listening fill in the blank: I think everyone should learn how to __________.
83. The ant builds its home in the _________. (soil)
84. Fill in the blank: I found a ________ (mysterious box) hidden in my attic. I wonder what’s inside!
85. Listening: I have a _______ (friend) named Tom.
86. Fill in the blank: I enjoy baking ______ (cookies) to share with my friends.
87. Fill in the blank: My mom is a great __________ (influencer).
88. Listening: A _______ is a tool that helps us measure time.
89. Listening: The __________ is known for its unique cultural practices.
90. Listening: My friend enjoys playing ____ (guitar) at home.
91. Listening: A __________ is a flat area of land that is higher than the surrounding land.
92. Fill in the blank: The ____ (oasis) is a fertile area in a desert.
93. Listening: The Earth's magnetic field protects it from solar ______.
94. Multiple choice: What is the largest mammal in the world? A. Elephant B. Whale C. Giraffe D. Hippopotamus. Answer: B
95. Multiple choice: What do you call a person who helps people? A. Teacher B. Doctor C. Chef D. Scientist
96. Fill in the blank: In the garden, we have many ________ (trees) and flowers that attract ________ (bees).
97. Fill in the blank: I often draw pictures of ________.
98. Fill in the blank: My friend is a great __________ (photographer) with a creative eye.
99. Multiple choice: What do you call a baby dog? A. Puppy B. Kitten C. Calf D. Foal. Answer: A
100. Listening: Different types of atoms are represented by different _____ on the periodic table.

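Finance PhD Reading List

Reading List for PhD Students in Economics and Finance (A: Mathematical Analysis, Differential Equations, Matrix Algebra)

Micro-level finance covers the study of financial markets and financial institutions, investments, financial engineering, financial economics, corporate finance, and financial management. Macro-level finance covers monetary economics, money and banking, and international finance. Empirical and quantitative methods cover mathematical finance and financial econometrics. The list below emphasizes mathematical foundations, economic theory, and mathematical finance.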

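◎ Functions and Analysis
What Is Mathematics? (《什么是数学》), Oxford series
● Set Theory
Paul R. Halmos, Naive Set Theory (Chinese edition: 朴素集合论) (a good book; accessible, but too terse)
Thomas Jech, Set Theory (English edition) (in depth)
Moschovakis, Notes on Set Theory
Enderton, Elements of Set Theory (集合论基础, English edition), Turing Original Mathematics and Statistics Series
● Mathematical Analysis
○ Calculus
Tom M. Apostol, Calculus vol. Ⅰ & Ⅱ (a classic advanced calculus textbook and reference written by a mathematician; rigorous in style; not reissued in 40 years; aims at deeper understanding and removes the gap between calculus and mathematical analysis; leads into the study of analysis, differential equations, linear algebra, differential geometry, and probability; a prelude to real analysis; the multivariable calculus book that makes the best use of linear algebra; excellent exercises; hard for beginners to read and understand, but it has strengths no other textbook offers.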

Stewart's book covers the same ground and is somewhat easier.

)Carol and Robert Ash,The Calculus Tutoring Book(不错的微积分辅导教材)R. Courant, F. John, Introduction to Calculus and Analysis vol Ⅰ&Ⅱ(适合工科,物理和应用多)Morris Kline,Calculus, an intuitive approachRon LarsonCalculus (With Analytic Geometry(微积分入门教材,难得的清晰简化,与Stewart同为流行教材)《高等微积分》Lynn H.Loomis / Shlomo StermbergMorris Kline,Calculus: An Intuitive and Physical Approach (解释清晰的辅导教材)Richard Silverman,Modern Calculus with Analytic GeometryMichael,Spivak,Calculus(有趣味,适合数学系,读完它或者Stewart的就可以读Rudin 的Principles of Mathematical Analysis 或者Marsden的Elementary Classical Analysis,然后读Royden的Real Analysis学勒贝格积分和测度论或者Rudin的Functional Analysis 学习巴拿赫和希尔伯特空间上的算子和谱理论)James Stewart,Calculus(流行教材,适合理科及数学系,可以用Larson书补充,但解释比它略好,如果觉得难就用Larson的吧)Earl W. Swokowski,Cengage Advantage Books: Calculus: The Classic Edition(适合工科)Silvanus P. Thompson,Calculus Made Easy(适合微积分初学者,易读易懂)○实分析(数学本科实变分析水平)(比较静态分析)Understanding Analysis,Stephen Abbott,(实分析入门好书,虽然不面面俱到但清晰简明,Rudin, Bartle, Browder等人毕竟不擅于写入门书,多维讲得少)T. M. Apostol, Mathematical AnalysisProblems in Real Analysis 实分析习题集(美)阿里普兰斯,(美)伯金肖《数学分析》方企勤,北大胡适耕,实变函数《分析学》Elliott H. Lieb / Michael LossH. L. Royden, Real AnalysisW. Rudin, Principles of Mathematical AnalysisElias M.Stein,Rami Shakarchi, Real Analysis:MeasureTheory,Integration and Hilbert Spaces,实分析(英文版) 《数学分析八讲》辛钦《数学分析新讲》张筑生,北大社周民强,实变函数论,北大周民强《数学分析》上海科技社○测度论(与实变分析有重叠)概率与测度论(英文版)(美)阿什(Ash.R.B.),(美)多朗-戴德(Doleans-Dade,C.A.)?Halmos,Measure Theory,测度论(英文版)(德)霍尔姆斯○傅里叶分析(实变分析和小波分析各有一半)小波分析导论(美)崔锦泰H. Davis, Fourier Series and Orthogonal FunctionsFolland,Real Analysis:Modern Techniques and Their ApplicationsFolland,Fourier Analysis and its Applications,数学物理方程:傅里叶分析及其应用(英文版)——时代教育.国外高校优秀教材精选(美)傅兰德傅里叶分析(英文版)——时代教育·国外高校优秀教材精选(美)格拉法科斯B. B. Hubbard, The World According to Wavelets: The Story of a Mathematical Technique in the MakingKatanelson,An Introduction to Harmonic AnalysisR. T. Seeley, An Introduction to Fourier Series and IntegralsStein,Shakarchi,Fourier Analysis:An Introduction○复分析(数学本科复变函数水平)L. V. 
Ahlfors, Complex Analysis ,复分析——华章数学译丛,(美)阿尔福斯(Ahlfors,L.V.)Brown,Churchill,Complex Variables and Applications Convey, Functions of One Complex Variable Ⅰ&Ⅱ《简明复分析》龚升, 北大社Greene,Krantz,Function Theory of One Complex VariableMarsden,Hoffman,Basic Complex AnalysisPalka,An Introduction to Complex Function TheoryW. Rudin, Real and Complex Analysis 《实分析与复分析》鲁丁(公认标准教材,最好有测度论基础)Siegels,Complex VariablesStein,Shakarchi,Complex Analysis 《复变函数》庄坼泰●泛函分析(资产组合的价值)○基础泛函分析(实变函数、算子理论和小波分析)实变函数与泛函分析基础,程其衰,高教社Friedman,Foundations of Modern Analysis《实变与泛函》胡适耕《泛函分析引论及其应用》克里兹格泛函分析习题集(印)克里希南Problems and methods in analysis,Krysicki夏道行,泛函分析第二教程,高教社夏道行,实变函数与泛函分析《数学分析习题集》谢惠民,高教社泛函分析·第6版(英文版) K.Yosida《泛函分析讲义》张恭庆,北大社○高级泛函分析(算子理论)J.B.Conway, A Course in Functional Analysis,泛函分析教程(英文版)Lax,Functional AnalysisRudin,Functional Analysis,泛函分析(英文版)[美]鲁丁(分布和傅立叶变换经典,要有拓扑基础)Zimmer,Essential Results of Functional Analysis○小波分析Daubeches,Ten Lectures on WaveletsFrazier,An Introduction to Wavelets Throughout Linear Algebra Hernandez,《时间序列的小波方法》PercivalPinsky,Introduction to Fourier Analysis and WaveletsWeiss,A First Course on WaveletsWojtaszczyk,An Mathematical Introduction to Wavelets Analysis●微分方程(期权定价、动态分析)○常微分方程和偏微分方程(微分方程稳定性,最优消费组合)V. I. Arnold, Ordinary Differential Equations,常微分方程(英文版)(现代化,较难)W. F. Boyce, R. C. Diprima, Elementary Differential Equations and Boundary Value Problems《数学物理方程》陈恕行,复旦E. A. Coddington, Theory of ordinary differential equationsA. A. Dezin, Partial differential equationsL. C. Evans, Partial Differential Equations丁同仁《常微分方程教程》高教《常微分方程习题集》菲利波夫,上海科技社G. B. Folland, Introduction to Partial Differential EquationsFritz John, Partial Differential Equations《常微分方程》李勇The Laplace Transform: Theory and Applications,Joel L. Schiff(适合自学)G. 
Simmons, Differntial Equations With Applications and Historecal Notes索托梅约尔《微分方程定义的曲线》《常微分方程》王高雄,中山大学社《微分方程与边界值问题》Zill○偏微分方程的有限差分方法(期权定价)福西斯,偏微分方程的有限差分方法Kwok,Mathematical Models of Financial Derivatives(有限差分方法美式期权定价)?Wilmott,Dewynne,Howison,The Mathematics of Financial Derivatives (有限差分方法美式期权定价)○统计模拟方法、蒙特卡洛方法Monte Carlo method in finance (美式期权定价)D. Dacunha-Castelle, M. Duflo,Probabilités et Statistiques IIFisherman,Monte Carlo Glasserman,Monte Carlo Mathods in Financial Engineering (金融蒙特卡洛方法的经典书,汇集了各类金融产品)Peter Jaeckel,Monte Carlo Methods in Finance(金融数学好,没Glasserman的好)?D. P. Heyman and M. J. Sobel, editors,Stochastic Models, volume 2 of Handbooks in O. R. and M. S., pages 331-434. Elsevier Science Publishers B.V. (North Holland) Jouini,Option Pricing,Interest Rates and Risk ManagementD. Lamberton, B. Lapeyre, Introduction to Stochastic Calculus Applied to Finance (连续时间)N. Newton,Variance reduction methods for diffusion process :H. Niederreiter,Random Number Generation and Quasi-Monte Carlo Methods. CBMS-NSF Regional Conference Series in Appl. Math. SIAMW.H. Press and al.,Numerical recepies.B.D. Ripley. Stochastic SimulationL.C.G. Rogers et D. Talay, editors,Numerical Methods in Finance. Publicationsof the Newton Institute.D.V. Stroock, S.R.S. Varadhan,Multidimensional diffusion processesD. Talay,Simulation and numerical analysis of stochastic differential systems, a review. In P. Krée and W. Wedig, editors,Probabilistic Methods in Applied Physics, volume 451 of Lecture Notes in Physics, chapter 3, pages 54-96.P.Wilmott and al.,Option Pricing (Mathematical models and computation). Benninga,Czaczkes,Financial Modeling ○数值方法、数值实现方法Numerical Linear Algebra and Its Applications,科学社K. E. Atkinson, An Introduction to Numerical AnalysisR. Burden, J. Faires, Numerical Methods《逼近论教程》CheneyP. Ciarlet, Introduction to Numerical Linear Algebra and Optimisation, Cambridge Texts in Applied MathematicsA. 
Iserles, A First Course in the Numerical Analysis of Differential Equations, Cambridge Texts in Applied Mathematics 《数值逼近》蒋尔雄《数值分析》李庆杨,清华《数值计算方法》林成森J. Stoer, R. Bulirsch, An Introduction to Numerical AnalysisJ. C. Strikwerda, Finite Difference Schemes and Partial Differential Equations L. Trefethen, D. Bau, Numerical Linear Algebra《数值线性代数》徐树芳,北大其他(不必)《数学建模》Giordano《离散数学及其应用》Rosen《组合数学教程》Van Lint◎几何学和拓扑学(凸集、凹集)●拓扑学○点集拓扑学Munkres,Topology:A First Course《拓扑学》James R.MunkresSpivak,Calculus on Manifolds◎代数学(深于数学系高等代数)(静态均衡分析)○线性代数、矩阵论(资产组合的价值)M. Artin,AlgebraAxler, Linear Algebra Done RightCurtis,Linear Algeria:An Introductory ApproachW. Fleming, Functions of Several VariablesFriedberg, Linear Algebra Hoffman & Kunz, Linear AlgebraP.R. Halmos,Finite-Dimensional Vector Spaces(经典教材,数学专业的线性代数,注意它讲抽象代数结构而不是矩阵计算,难读)J. Hubbard, B. Hubbard, Vector Calculus, Linear Algebra, and Differential Forms: A Unified ApproachN. Jacobson,Basic Algebra Ⅰ&ⅡJain《线性代数》Lang,Undergraduate AlgeriaPeter D. Lax,Linear Algebra and Its Applications(适合数学系)G. Strang, Linear Algebra and its Applications(适合理工科,线性代数最清晰教材,应用讲得很多,他的网上讲座很重要)●经济最优化Dixit,Optimization in Economic Theory●一般均衡Debreu,Theory of Value●分离定理Hildenbrand,Kirman,Equilibrium Analysis(均衡问题一般处理)Magill,Quinzii,Theory of Incomplete Markets(非完备市场的均衡)Mas-Dollel,Whinston,Microeconomic Theory(均衡问题一般处理)Stokey,Lucas,Recursive Methods in Economic Dynamics (一般宏观均衡)经济学、金融学博士书目(B:概率论、数理统计、随机)◎概率统计●概率论(金融产品收益估计、不确定条件下的决策、期权定价)○基础概率理论(数学系概率论水平)《概率论》(三册)复旦Davidson,Stochastic Limit TheoryDurrett,The Essential of Probability,概率论第3版(英文版)W. Feller,An Introduction to Probability Theory and its Applications概率论及其应用(第3版)——图灵数学·统计学丛书《概率论基础》李贤平,高教G. R. Grimmett, D. R. Stirzaker, Probability and Random ProcessesRoss,S. A first couse in probability,中国统计影印版;概率论基础教程(第7版)——图灵数学·统计学丛书(例子多)《概率论》汪仁官,北大王寿仁,概率论基础和随机过程,科学社《概率论》杨振明,南开,科学社○基于测度论的概率论测度论与概率论基础,程式宏,北大D. L. 
Cohn, Measure TheoryDudley,Real Analysis and ProbabilityDurrett,Probability:Theory and ExamplesJacod,Protter,Probability Essentials Resnick,A Probability PathShirayev,Probability严加安,测度论讲义,科学社钟开莱,A Course in Probability Theory○随机过程微积分Introduction of diffusion processes (期权定价)K. L. Chung, Elementary Probability Theory with Stochastic ProcessesCox,Miller,The Theory of StochasticR. Durrett, Stochastic calculus黄志远,随机分析入门黄志远《随机分析学基础》科学社姜礼尚,期权定价的数学模型和方法,高教社《随机过程导论》KaoKarlin,Taylor,A First Course in Stochastic Prosses(适合硕士生)Karlin,Taylor,A Second Course in Stochastic Prosses(适合硕士生)随机过程,劳斯,中国统计J. R. Norris,Markov Chains(需要一定基础)Bernt Oksendal, Stochastic differential equations(绝佳随机微分方程入门书,专注于布朗运动,比Karatsas和Shreve的书简短好读,最好有概率论基础,看完该书能看懂金融学术文献,金融部分没有Shreve的好)Protter,Stochastic Integration and Differential Equations (文笔优美)D. Revuz, M. Yor, Continuous martingales and Brownian motion(连续鞅)Ross,Introduction to probability model(适合入门)Steel,Stochastic Calculus and Financial Application(与Oksendal的水平相当,侧重金融,叙述有趣味而削弱了学术性,随机微分、鞅)《随机过程通论》王梓坤,北师大○概率论、随机微积分应用(连续时间金融)Arnold,Stochastic Differential Equations《概率论及其在投资、保险、工程中的应用》BeanDamien Lamberton,Bernard Lapeyre. Introduction to stochastic calculus applied t o finance.David Freedman.Browian motion and diffusion.Dykin E. B. Markov Processes.Gihman I.I., Skorohod A. V.The theory of Stochastic processes 基赫曼,随机过程论,科学Lipster R. ,Shiryaev A.N. Statistics of random processes.Malliaris,Brock,Stochastic Methods in Economics and FinanceMerton,Continuous-time FinanceSalih N. Neftci,Introduction to the Mathematics of Financial DerivativesSteven E. Shreve ,Stochastic Calculus for Finance I: The Binomial Asset Pric ing Model;II: Continuous-Time Models(最佳的随机微积分金融(定价理论)入门书,易读的金融工程书,没有测度论基础最初几章会难些,离散时间模型,比Naftci的清晰,S hreve的网上教程也很优秀)Sheryayev A. N. Ottimal stopping rules.Wilmott p., J.Dewynne,S. Howison. Option Pricing: Mathematical Models and Compu tations.Stokey,Lucas,Recursive Methods in Economic Dynamics Wentzell A. D. 
A Course in the Theory of Stochastic Processes.Ziemba,Vickson,Stochastic Optimization Models in Finance○概率论、随机微积分应用(高级)Nielsen,Pricing and Hedging of Derivative SecuritiesRoss,《数理金融初步》An Introduction to Mathematical Finance:Options and othe r TopicsShimko,Finance in Continuous Time:A Primer○概率论、鞅论P. Billingsley,Probability and MeasureK. L. Chung & R. J. Williams,Introduction to Stochastic IntegrationDoob,Stochastic Processes严加安,随机分析选讲,科学○概率论、鞅论Stochastic processes and derivative products (高级)J. Cox et M. Rubinstein : Options MarketIoannis Karatzas and Steven E. Shreve,Brownian Motion and Stochastic Calculu s(难读的重要的高级随机过程教材,若没有相当数学功底,还是先读Oksendal的吧,结合Rogers & Williams的书读会好些,期权定价,鞅)M. Musiela - M. Rutkowski : (1998) Martingales Methods in Financial Modelling ?Rogers & Williams,Diffusions, Markov Processes, and Martingales: Volume 1, F oundations;Volume 2, Ito Calculus (深入浅出,要会实复分析、马尔可夫链、拉普拉斯转换,特别要读第1卷)David Williams,Probability with Martingales(易读,测度论的鞅论方法入门书,概率论高级教材)○鞅论、随机过程应用Duffie,Rahi,Financial Market Innovation and Security Design:An Introduction,Journal of Economic Theory Kallianpur,Karandikar,Introduction to Option Pricing TheoryDothan,Prices in Financial Markets (离散时间模型)Hunt,Kennedy,Financial Derivatives in Theory and Practice何声武,汪家冈,严加安,半鞅与随机分析,科学社Ingersoll,Theory of Financial Decision MakingElliott Kopp,Mathematics of Financial Markets(连续时间)Marek Musiela,Rutkowski,Martingale Methods in Financial Modeling(资产定价的鞅论方法最佳入门书,读完Hull书后的首选,先读Rogers & Williams、Karatzas and Sh reve以及Bjork打好基础)○弱收敛与随机过程收敛Billingsley,Convergence of Probability MeasureDavidson,Stochastic Limit TheoremEthier,Kurtz,Markov Process:Characterization and Convergence Hall,Marting ale Limit TheoremsJocod,Shereve,Limited Theorems for Stochastic Process Van der Vart,Weller,Weak Convergence and Empirical Process◎运筹学●最优化、博弈论、数学规划○随机控制、最优控制(资产组合构建)Borkar,Optimal control of diffusion processesBensoussan,Lions,Controle Impulsionnel et Inequations Variationnelles Chiang,Elements of Dynamic Optimization 
Dixit,Pindyck,Investment under UncertaintyFleming,Rishel,Deterministic and Stochastic Optimal ControlHarrison,Brownian Motion and Stochastic Flow SystemsKamien,Schwartz,Dynamic OptimizationKrylov,Controlled diffusion processes○控制论(最优化问题)●数理统计(资产组合决策、风险管理)○基础数理统计(非基于测度论)R. L. Berger, Cassell, Statistical InferenceBickel,Dokosum,Mathematical Stasistics:Basic Ideas andSelected TopicsBirrens,Introdution to the Mathematical and Statistical Foundation of Econom etrics数理统计学讲义,陈家鼎,高教Gallant,An Introduction to Econometric TheoryR. Larsen, M. Mars, An Introduction to Mathematical Statistics《概率论及数理统计》李贤平,复旦社Papoulis,Probability,random vaiables,and stochastic processStone,《概率统计》《概率论及数理统计》中山大学统计系,高教社○基于测度论的数理统计(计量理论研究)Berger,Statistical Decision Theory and Bayesian Analysis陈希儒,高等数理统计Shao Jun,Mathematical StatisticsLehmann,Casella,Theory of Piont EstimationLehmann,Romano,Testing Statistical Hypotheses《数理统计与数据分析》Rice○渐近统计Van der Vart,Asymptotic Statistics○现代统计理论、参数估计方法、非参数统计方法参数计量经济学、半参数计量经济学、自助法计量经济学、经验似然经济学、金融学博士书目(C:计量经济学、数理金融)统计学基础部分1、《统计学》《探索性数据分析》 David Freedman等,中国统计(统计思想讲得好)2、Mind on statistics 机械工业(只需高中数学水平)3、Mathematical Statistics and Data Analysis 机械工业(这本书理念很好,讲了很多新东西)4、Business Statistics a decision making approach 中国统计(实用)5、Understanding Statistics in the behavioral science 中国统计回归部分1、《应用线性回归》中国统计(蓝皮书系列,有一定的深度,非常精彩)2、Regression Analysis by example,(吸引人,推导少)3、《Logistics回归模型——方法与应用》王济川郭志刚高教(不多的国内经典统计教材)多元1、《应用多元分析》王学民上海财大(国内很好的多元统计教材)2、Analyzing Multivariate Data,Lattin等机械工业(直观,对数学要求不高)3、Applied Multivariate Statistical Analysis,Johnson & Wichem,中国统计(评价很高)《应用回归分析和其他多元方法》Kleinbaum《多元数据分析》Lattin时间序列1、《商务和经济预测中的时间序列模型》弗朗西斯著(侧重应用,经典)2、Forecasting and Time Series an applied approach,Bowerman & Connell(主讲Box-Jenkins(ARIMA)方法,附上了SAS和Minitab程序)3、《时间序列分析:预测与控制》 Box,Jenkins 中国统计《预测与时间序列》Bowerman抽样1、《抽样技术》科克伦著(该领域权威,经典的书。

IEEE Journal of Lightwave Technology, May 2001, Volume 19, Number 5, JLTEDG (ISSN 0733-8724)

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by sending a blank email message to pubs-permissions@. By choosing to view this document, you agree to all provisions of the copyright laws protecting it.

Copyright © 2001 IEEE. Reprinted from Journal of Lightwave Technology, vol. 19, no. 5, pp. 700-707, May 2001.

Multimode Interference Couplers with Tunable Power Splitting Ratios

Juerg Leuthold, Member, IEEE, and Charles H. Joyner

Abstract—New, compact multimode interference couplers with tunable power splitting ratios have been realized. Experiments show large tuning ranges. Such couplers are needed to optimize ON–OFF ratios in interferometric devices and may find applications as extremely compact switches.

Index Terms—Integrated optics, multimode interference (MMI), optical coupler, optoelectronic devices, semiconductor devices.

I. INTRODUCTION

Tunable mode couplers are of high interest in integrated optics. They can be used to increase design and fabrication tolerances of devices that need accurately defined coupler splitting ratios [1]–[3]. For instance, interferometric-type electrooptic and all-optical devices need well-defined coupler relations in order to provide high extinction ratios [3]. Recently, a fully integrated 100-Gb/s wavelength converter has been demonstrated [4]. The device has two interferometer arms of different length that consequently have different fabrication-related waveguide losses. Nevertheless, extinction ratios exceeding 14 dB were achieved with this interferometer-based switch. Such a result
can only be reproducibly achieved by use of integrable, tunable power splitters that allow adaptation of the splitting ratio to the actual propagation losses. Thus, tunable power splitters are key elements for performance improvement of integrated optic devices and may lead to new integrated optics circuits and eventually to ultracompact integrable switches.

Standard couplers that allow for tuning of the splitting ratios include digital-optical switches like […]

Fig. 1. Tuning the output power from one output into the other in a 2×2 MMI by biasing the refractive index by as little as 0.15% (or Δn = −0.005) in the black shaded areas within the MMI. The figures show the result of a BPM simulation for (a) an edge-biased, (b) a nonbiased, and (c) a center-biased MMI.

[…] Consequently, the output images can be changed by modifying the refractive index around some selected spots within one interval of the MMI where such self-images occur. This will lead to new phase relations between the self-images at the next interval and with that to a modified output image [8], [10], [11], [15].

The simplest approach to build MMIs with tunable splitting ratios is to modify the material properties around spots within the MMI where the most dominant self-images occur. Usually, self-image pattern formation is most dominant and simple halfway along the propagation direction of an MMI. For instance, Fig. 1(b) visualizes these self-images, found around the center, for a 2×2 MMI. In our simulation, they appear as spots with higher field intensities (darker color) and are marked with black and white circles. In the case of the 2×2 […] MMIs can be designed in the geometry of the general-MMI type as well as in the geometry of the overlap-MMI type [10].
Overlap MMIs are a special case of the general MMIs, where the input and output ports have been chosen at a very specific location within the MMI input side. The special choice of the input and output positions leads to very simple interference patterns for wider MMIs that would normally have much more complicated patterns. Although these two types have almost the same performance, their geometry is quite different. For example, for a general 2×2 MMI of the same length, the overlap-type MMI (with its input ports at 1/3 of the MMI width) can be designed with a […] MMI. This is important, since one is usually interested in designing the MMIs as wide and as short as possible. The reason for this is that short MMIs provide larger optical wavelength bandwidths while wider MMI widths lead to relaxed fabrication tolerances. (The relative error for a given technological width deviation is less severe for a wide MMI in comparison to an MMI with small width.) In addition, in our case, we would like to modify the refractive index around certain spots within an MMI. This, of course, is much simpler the larger the spots around which we may modify the refractive index. For this reason, we subsequently restrict our discussion to tunable MMI splitting ratios of the overlap type. Tuning of the splitting ratios of the general MMIs can be performed with the same technique and in most cases even by tuning the refractive index at the same spots as described for the overlap MMIs.

III. THEORY OF MMIS WITH TUNABLE SPLITTING RATIOS

This section describes a theory that gives a correct description of all overlap-type MMIs that tune the splitting ratios by varying the refractive index at certain spots halfway in the light propagation direction of the MMI. The theory can easily be extended to other overlap MMIs where the material parameter has to be tuned at other spots. As mentioned above, we can restrict the discussion to overlap-type MMIs that have wider geometrical widths and therefore are simpler to tune than the thinner general-type MMIs.

Fig. 2. Generic overlap MMI with N − 1 possible access waveguides. The positions of the waveguides are determined by the MMI width W and the integer number N. The tuning section comprises several shaded areas that indicate the locations where self-images appear. The output splitting ratios are tuned by biasing the refractive index in one or simultaneously in several of these areas.

The geometry of a generic overlap-type MMI with at most […]

[…] (1)

where […] wavelength in vacuum.

To describe the tunable MMI from Fig. 2, we split the MMI into three sections. The first section describes the mapping of […] and […] matrices. […] describes the mapping of the fields from the input to the center of the MMI and again from the center to the output guides. The matrix […] has been given by Heaton and Jenkins [18], which we would like to use in a slightly modified form to express the elements […] with

[…] (3)

and […]. The diagonal matrix […] the phase shift induced by the change of the effective refractive index […] along the tunable section of length […]. Refractive-index changes in a material are associated with absorption changes as implied by the Kramers–Kronig relations. The intensity absorption term […] considers these refractive-index change losses. These losses may be negligible or stronger or even might be turned into gain, depending on the underlying process that has been chosen to change the refractive index.

IV. COMPARISON OF THEORY WITH EXPERIMENT, TUNABLE 2×2 […]

[…] MMIs with symmetric splitting ratios.

A. Device Geometry and Structure

The geometry of the 2 […] and has five possible input and output guides, each displaced by […] and […] 2 splitter [8]. The […] µm quaternary layer is 260 nm thick and completely surrounded by InP. The top InP cladding layer is 1.5 µm […] for the length and 11.3 […]

Fig. 3. (a) Geometry of a symmetric 2×2 MMI with locations of the pads where we induce a negative refractive index change in order to tune the splitting ratios. The inset shows the structure of the waveguide. (b) Simultaneously biasing the edge pads decreases the bar intensity P, whereas biasing the center pad leads to the opposite effect. Both the theoretical model (solid and dashed lines) used in this paper as well as a BPM simulation (box and cross symbols) lead to the same results. (c) Experimental verification of the tuning characteristics by applying a forward current at the edge pads and alternatively at the center pad.

B. Method for Tuning the Refractive Index

In order to tune the refractive index within the different tuning sections of the MMI, we applied gold contacts on top of the waveguides where tuning was desired. This allowed us to individually current bias the different sections, exploiting the carrier-related plasma effect. The plasma effect leads to a negative refractive index change. Good metal contacts are advantageous in order to avoid heating of the MMI sections, which otherwise would lead to a temperature-related refractive index change with an opposite sign. In the simulation, the dimensions of the gold contacts, where the refractive indices were tuned, were 2 µm […] 50 […] for the center pad. Yet, in the experiment, we have used MMIs with shorter MMI pads. The pad length was kept as small as 25 […] with […] losses in units of cm⁻¹; […] experimentally determined loss constant. Comparison of simulation with experiment also allowed us to approximate the refractive index change as a function of the applied current. We found […] with […] is the constant relating the applied current with the refractive index change. The constant […] may vary depending on the device implementation. Both in experiment and in our model, we need and measure the effective refractive index changes and effective absorption changes rather than the real changes in the guiding layer. However, these are approximately interrelated by the confinement factor […] via […] and […] 2×2 MMI of Fig. 3(a). The results are shown in Fig. 3(b). The effects of edge biasing the device are shown toward the left side of the figure, whereas the
right-hand side shows the effect from a center-biased negative refractive-index change. It can be seen that edge biasing changes the power in favor of the cross port […] and 18 […] and […] and […]. However, the axis in the figure is always given in terms of the effective refractive index changes.

The measured splitting ratio changes as a function of the applied current bias are shown in Fig. 3(c) for both the edge- and center-biased case of the 2×2 […]

[…] 2×2 MMIS WITH ASYMMETRIC SPLITTING RATIOS

A variety of 2 […] or […] and […]. All possible input guides are lying […] placed by […]

Fig. 5. (a) Geometry of a 2×2 MMI with a 15:85 splitting ratio when not biased. (b) Small negative refractive index biasing of the edge pads increases the intensity in the cross ports, whereas center biasing favors the intensity in the bar output port.

[…] the output that guides less light is hardly affected by this asymmetry.

B. Tunable 15:85 Splitter

[…] 2 […] with […] and […]

[…] 1 MMI. It maps all the light from either of the two input guides into the corresponding cross port at the output. Due to its simplicity, we can increase the dimension of the pads considerably. A BPM simulation has shown that for a device of length […] µm. Thus we choose the pad length twice as large as for the previous devices. In comparison with the device introduced in [14], our device has the same width but only half the length. It works with only one metallization pad instead of four pads. Tuning of the refractive index at either pad will decrease the power coupled into the […]

[…] 3 splitter is shown in Fig. 7(a). The device has the same geometry as the 2 […] discussed in Fig. 5. However, in contrast to the device in Fig. 5, we have chosen the central input port to introduce the signal. The device behaves as follows. Without any biasing at the edge pads, all the light from the central input guide will be mapped onto the central output guide […] 1 MMI [21]. When tuning the edge pads, light will
be redirected to the two outer output guides with increasing refractive index change. The light coupled out into the two outer ports might be used for detection purposes or any other operation. The MMI might be used instead of the device shown in Fig. 6. The advantage of this tunable MMI over the one proposed in Fig. 6 is its completely symmetric geometry, which makes it more tolerant of fabrication inhomogeneities. It also has a large dynamic range when used as a power equalizer.

Fig. 7. The concept of tuning the output powers in the output guides of MMIs is more generally applicable. For example, in (a), we show the power distribution for a 1×3 MMI when left or right biasing one of the pads. (b) shows the output-power tuning of a butterfly shaped MMI under edge biasing.

MMIs that do not have a rectangular shape can be tuned as well. Recently, parabolically tapered MMIs have been introduced in order to reduce the MMI size [22]. Earlier, butterfly shaped MMIs have been proposed [22] and applied [3] to build MMIs with asymmetric fixed splitting ratios. Our tuning concept can be applied to all of these MMIs. In Fig. 7(b), we demonstrate how the splitting ratio of a butterfly MMI with a wider width at the center is tuned under a negative refractive index change applied at the two pads at the edge. Such MMIs might indeed be very interesting to optimize extinction ratios of electrooptic switches. The butterfly shape provides a small asymmetry, which, by edge biasing, can be easily tuned toward the ideal 50:50 splitting ratio. In the device of Fig. 7(b), only the edge bias will be needed to make the MMI symmetric. In addition, by using the MMI of Fig. 7(b), we can exploit the edge-biasing technique, which in our experiment was more efficient than center biasing, and moreover benefit from a wider MMI section allowing for larger pads.

VII. CONCLUSION

We have successfully demonstrated new tunable MMI splitters by changing the refractive index around some determined spots
within the MMI. Tunability around 20% was obtained for the splitting ratios of a symmetric 2 […]

[12] […]i, M. Bachmann, W. Hunziker, P. A. Besse, and H. Melchior, “Arbitrary ratio power splitters using angled silica on silicon multimode interference couplers,” Electron. Lett., vol. 32, no. 17, pp. 1576–1577, Aug. 1996.
[13] G. A. Fish, L. A. Coldren, and S. P. DenBaars, “Compact InGaAsP/InP 1×2 optical switch based on carrier induced suppression of modal interference,” Electron. Lett., vol. 33, no. 22, pp. 1898–1900, Oct. 1997.
[14] S. Nagai, G. Morishima, M. Yagi, and K. Utaka, “InGaAsP/InP multimode interference photonic switches for monolithic photonic integrated circuits,” Jpn. J. Appl. Phys., vol. 38, no. 2B, pp. 1269–1272, Feb. 1999.
[15] M. Yagi, S. Nagai, H. Inayoshi, and K. Utaka, “Versatile multimode interference photonic switches with partial index-modulation regions,” Electron. Lett., vol. 36, no. 6, pp. 533–534, Mar. 2000.
[16] R. M. Jenkins, R. W. J. Devereux, and J. M. Heaton, “Waveguide beam splitters and recombiners based on multimode propagation phenomena,” Opt. Lett., vol. 17, no. 14, pp. 991–993, July 1992.
[17] M. Bachmann, P. A. Besse, and H. Melchior, “General self-imaging properties in N×N multimode interference couplers including phase relations,” Appl. Opt., vol. 33, pp. 3905–3911, July 1994.
[18] J. M. Heaton and R. M. Jenkins, “General matrix theory of self-imaging in multimode interference (MMI) couplers,” IEEE Photon. Technol. Lett., vol. 11, pp. 212–214, Feb. 1999.
[19] J. Leuthold, C. H. Joyner, B. Mikkelsen, G. Raybon, J. L. Pleumeekers, […]ler, K. Dreyer, and C. A. Burrus, “Compact and fully packaged wavelength converter with integrated delay loop for 40 Gbit/s RZ signals,” in Proc. Optical Fiber Communication Conf. (OFC’2000), Baltimore, MD, Mar. 2000, postdeadline paper 17.
[20] M. Bachmann, P. A. Besse, and H. Melchior, “Overlapping-image multimode interference couplers with reduced number of self-images for uniform and nonuniform power splitting,” Appl. Opt., vol. 34, no. 30,
pp. 6898–6910, Oct. 1995.
[21] J. M. Heaton, R. M. Jenkins, D. R. Wight, J. T. Parker, J. C. H. Birbeck, and K. P. Hilton, “Novel 1-to-N way integrated optical beam splitters using symmetric mode mixing in GaAs/AlGaAs multimode waveguides,” Appl. Phys. Lett., vol. 61, pp. 1754–1756, Oct. 1992.
[22] D. S. Levy, K. H. Park, R. Scarmozzino, R. M. Osgood, C. Dries, P. Studenkov, and St. Forrest, “Fabrication of ultracompact 3-dB 2×2 MMI power splitters,” IEEE Photon. Technol. Lett., vol. 11, pp. 1009–1011, Aug. 1999.

Juerg Leuthold (S’95–A’98–M’99) was born in St. Gallen, Switzerland, on July 11, 1966. He received the Diploma degree in physics and the Ph.D. degree from the Swiss Federal Institute of Technology (ETH), Zurich, Switzerland, in 1991 and 1998, respectively.

In 1992, he joined the Institute of Quantum Electronics of the Swiss Federal Institute of Technology, where he worked in the field of integrated optics. His doctoral work was in the field of all-optical switching and other integrated optoelectronic components. In 1999, he first was with the Integrated Photonics Laboratory, Tokyo University, before joining Bell Labs, Lucent Technologies, Holmdel, NJ.

Charles H. Joyner was born in Decatur, GA, in 1953. He received the bachelor’s degree in chemistry from Furman University, Greenville, SC, in 1975 and the master’s and Ph.D. degrees in physical chemical engineering from Harvard University, Cambridge, MA, in 1978 and 1981, respectively.

In 1981, he joined AT&T Bell Laboratories as a Member of Technical Staff. He currently works on design and fabrication of InP-based integrated photonic devices.