Content Based Image Retrieval using Color and Texture

- 格式:pdf

- 大小:948.79 KB

- 文档页数:19

20204/298随着CT 、MRI 、PET 、SPECT 、超声等成像技术的迅猛发展,医学图像作为一种信息丰富、表现直观的视觉信息资源在现代医疗诊断中发挥着越来越重要的作用。

医学图像归档与传输系统PACS 作为现代数字诊断的重要基础已被众多医疗机构重视和使用。

面对海量的医学图像资源,如何对它们有效的组织与管理,并快速准确地找到用户需要的图像数据已成为一个迫切需要解决的重要课题。

1图像检索的历史发展图像检索最早以人工文本标注为基础。

此时的图像检索本质上是标注文本的关键词匹配,依据文本匹配程度将相关图像呈现给用户。

该方法简单易实现,但缺点也显而易见:首先,人工标注图像,标注内容完全取决于标注者对图像的理解,主观性强。

其次,随着持续增加的图像数量,人工标注图像需要耗费大量的人力劳动。

面对海量的图像数据,这已越来越成为一个无法完成的任务。

20世纪90年代,研究者们开始寻求从图像自身寻找线索来检索图像,提出基于内容的图像检索(Content Based Image Retrieval ,CBIR )。

不同于人工文本标注,基于内容的图像检索直接从图像提取颜色、纹理、形状等特征信息,在特征空间中匹配检索需求,具有客观,自动化程度高等优点。

系统检索时需要用户提供匹配检索需求的图像样例,系统从图像样例中提取检索特征,在特征空间中进行近似匹配,按相关度由大到小排列,呈现给用户。

经过近二十年该领域的研究,人们发现如果图像检索仅仅依赖底层特征,获得的检索结果无法匹配抽象的高层语义,在很多情况下无法满足用户的需要,这极大地限制了检索系统的应用。

为此,研究人员开始考虑基于高级语义的图像检索(Semantic Based ImageRetrieval ,SBIR )问题。

基于语义的图像检索是基于内容图像检索的进一步发展,也是未来图像检索的发展方向。

在医学影像检索应用中,其要求结合从医学视觉信息中提取的特征语义和领域知识,跨越图像底层特征与高级语义之间存在的“语义鸿沟”,将医学图像检索由特征空间拓展到更广阔的高摘要随着现代医学影像技术的迅猛发展,医学图像数量呈现了爆炸性增长,高效的医学图像检索研究显得尤为迫切。

A Review of Benchmarking Content Based Image RetrievalGareth Loy∗and Jan-Olof EklundhRoyal Institute of Technology(KTH)Stockholm,Swedentel:+4687906353fax:+4687230302e-mail:gareth@nada.kth.seAbstractBenchmarking Content Based Image Retrieval(CBIR)systems allows researchers and de-velopers to compare the strengths of different approaches,and is an essential step towardsestablishing the credibility of CBIR systems for commercial applications.Here we intro-duce the problem of developing a benchmark,discuss some of the issues involved,andprovide a review of current and recent benchmarking efforts.We also propose a solutionto one of the key stumbling blocks hampering benchmarking campaigns to date:the avail-ability of large royalty free test databases.1IntroductionA benchmark is a basis on which to compare performance.In the case of Content Based Image Retrieval(CBIR)systems[29]it is typically a common set of tasks,such as“detect all faces”, together with a set of test images with accompanying ground truth,and some metrics for mea-suring performance.The aim of a benchmark is not just to evaluate the performance of an algorithm,but also to do so in a way that is directly comparable between different algorithms. Whilst this premise is simple enough,effectively implementing a benchmark is no simple task.To be successful a benchmark must be embraced by the researchers and developers design-ing the algorithms.This requires that the benchmarking task,or tasks,be highly relevant to the intended tasks promoted for the CBIR systems,and it must be clear that improved perfor-mance on the benchmark correlates strongly with improved performance in the real world,i.e., improved end-user satisfaction.Standardised performance metrics are also essential.Consider the face detection example above,the performance could be measured in many ways,e.g.via a simple binary response for each image:Does this image contain a face?;a numerical response:How many faces are present?;or a complex numerical response:What are the locations of all faces in the image?∗This work was carried out during the tenure of a MUSCLE internal fellowship.What are the locations of the eyes and mouth of all faces present?Should confidence values be associated with each response,and if so how should these be incorporated into thefinal performance measure?In addition to broadly accepted and clearly understood tasks and performance metrics there is the question of test data.The purpose of CBIR systems is to efficiently search huge amounts of widely varying images for some specified content.It is therefore essential to benchmark the performance of these systems on large amounts of data,both in order to evaluate the per-formance in as realistic a manner as possible and obtain a statistically significant result.The problem then arises:where is this data obtained and how is the ground truth generated?It is desirable to distribute the test data freely,which introduces copyright issues,whilst the ground truth labelling of the data has the potential to be an extremely arduous task.Difficulties aside,it is widely agreed that a well-designed benchmark is central to the ad-vancement of thefield.In1893Lord Kelvin[31]summarised the importance of benchmarking: When you can measure what you are speaking about,and express it in numbers,youknow something about it;but when you cannot measure it,when you cannot expressit in numbers,your knowledge is of a meager and unsatisfactory kind:it may bethe beginning of knowledge,but you have scarcely,in your thoughts,advanced tothe state of science.Providing a clear and broadly understood performance measure both allows researchers to more fully understand the strengths and limitations of their systems and compare their results with other systems on a level playingfield.It also provides industry,potential commercial partners, and potential buyers of CBIR systems with a universal measure of how good the systems really are,which is central to establishing the credibility of CBIR systems in the marketplace.Benchmarking CBIR systems,and computer vision algorithms in general,has long been considered an important task[6]and has led to a number of extensive benchmarking efforts, e.g.[7,8,19,17,11,10,14,15].This paper reviews several of these,and then moves on to tackle one of the key issues hampering benchmarking at present,the availability of royalty free images and ground truth data.A proposed method is outlined for generating extensive benchmarking databases from the growing population of online images available from sites such as Flickr and licenced under the new Creative Commons copyright.2Benchmarking ProjectsThis section gives a brief overview of a number of current and recent benchmarking projects relevant to CBIR systems.2.1VIPERThe VIPER1(Visual Information Processing for Enhanced Retrieval)network based at the Uni-versity of Geneva has been associated with several benchmarking efforts.These include,a web-1http://viper.unige.ch/research/benchmarking/based benchmark for Query By Example(QBE)-based CBIR systems,image browser bench-marking[20],and the Benchathlon2event.The group is also behind the development of the Multimedia Retrieval MarkUp Language3(MRML)that aims to provide a unified interface for multimedia retrieval and management software.The web-accessible benchmark for QBE-based CBIR systems was designed to allow devel-opers of image retrieval systems the opportunity to benchmark their systems online at any time. MRML was used to access the retrieval system,and queries were made based on image URLs and the results transmitted as URLs.The Benchathlon began with BIRDS-I4(Benchmark for Image Retrieval using Distributed Systems over the Internet)and was an initial step towards a standardized benchmark for CBIR systems.BIRDS-I was an image retrieval benchmark that was presented as a contest during EI2001.Participants were required to implement an image retrieval server to be tested against a ground-truth via a set of defined metrics.The Benchathlon aimed to develop a networked system benchmark for CBIR,along the lines of existing benchmarks for text retrieval and rela-tional database management.Muller et al.[17]reported that while the Benchathlon initiated discussion amongst participants no systematic comparison between systems was started.2.2IAPRThe International Association for Pattern Recognition’s(IAPR)Technical Committee12(TC-12)has worked towards a standardised benchmark for the comparison of multimedia retrieval systems[8,23].The aim was to identify and evaluate the relative strengths and weaknesses of different approaches by providing standard sets of data,queries,and performance metrics.Leung and Ip[8]made several initial recommendations concerning the development of a CBIR benchmark.They proposed that an extensible suite of benchmarks should be developed to cater for the disparate requirements of different applications,and that benchmarking image collections must be made freely available to researchers and must be free from any conditions or restrictions of use.It was recommended that initially1,000images be used for a CBIR benchmark,and this number be increased over time.All images should be in JPEG format and should contain multiple objects,with diverse relationships between them.Twenty evaluation queries covering a representative cross-section of contents should be designed against these images.Queries should be based entirely on the visual contents of the images(meta-data must not be used)and each query should be correctly“answered”by a known ground truth set of images.The number of answer images per query should be less than a specified amount,as should the number of images the algorithm returns at any one time.The proposed measures for evaluating performance included:recall,precision,average number of stages for retrieving the relevant images,average rank of the relevant images,effectiveness of query language and model,and effectiveness of relevance feedback.These specifications formed the basis of the IAPR TC-21Benchmark[23].The bench-mark consisted of,a set of still natural images,a representative set or queries,ground truths 2/4/associated with these queries,and a set of recommended performance metrics.The benchmark concentrated on the retrieval of semantic content(e.g.image contains car,person,etc.).2.3Computer Vision BenchmarksComputer vision algorithms offer a means for CBIR systems to extract semantic content from images.Two key areas of growing relevance for CBIR systems are segmentation and object recognition.Both of these have recently been the subjects of major benchmarking campaigns.2.3.1The Berkeley Segmentation Dataset and BenchmarkThe Berkeley Segmentation Dataset and Benchmark5consist of12,000hand-labeled segmen-tations of1,000images from30human subjects[11].The original images are from the Corel database.Half of the segmentations were done on colour images,and half on grayscale.The initial public release of this data consisted of all colour and grayscale segmentations for300 images,and was divided into200training images and100test images.A method was also pre-sented for measuring how well an automatically generated segmentation matched a ground-truth segmentation,and Matlab code was provided for running the benchmark.The motivation for the benchmark was to provide a basis for comparing different algorithms,and to track progress towards human-level performance.The performance metrics developed for boundary detection[12,13]are relevant for any boundary dataset–not just the hand-labelled segmentations in the Berkley benchmark.Over-laying all human segmented boundaries for a given image generated the ground truth.The benchmark then measured the performance of a given soft segmentation6against the ground truth.A threshold was applied to the soft boundary map at many different levels(e.g.30).At each level a precision-recall curve was generated,where precision was the probability that a hypothesised boundary pixel was a true boundary pixel,and recall was the probability that a true boundary pixel was detected.Therefore,the curve showed the trade-off between misses and false positives as the detector threshold changes.A summary statistic was also produced to provide a single number summarising the algo-rithm’s performance.In the case where the precision-recall curves do not intersect,the curve furthest from the origin dominates.The harmonic mean of the precision and recall was cal-culated at each point on this curve and the maximum value over the curve was taken as the summary statistic encapsulating the algorithm’s performance.2.3.2Evaluation of Feature Detectors and DescriptorsThe pastfive years has seen a drastic increase in the success of feature-based methods in computer vision.These approaches have been used for CBIR on large databases[25,30] 5/projects/vision/grouping/segbench/6a soft segmentation is not binary,instead it gives a continuous measure for each pixel describing the confidence that that pixel is a boundary.as well as numerous other applications including,visual data mining[28],object retrieval in video[27,26],model based recognition[5,9,21,24],and object categorisation[2,3,4,22].These approaches involve three steps.Given a collection of images between which tofind correspondences:detect a set of feature points in each image,construct a feature vector provid-ing a local description of each feature point(typically invariant to orientation and scale),and match these feature vectors tofind potential matching features over the collection of images. The success of these methods relies on detecting and describing feature points in a manner that is robust to some variations in lighting,viewpoint,scale and orientation,while providing feature vectors that are distinctive enough for good matching performance.Given two images contain-ing the same object,these methods aim to extract a sufficient number of similar feature points from each image of the object to allow the objects to be matched between the two images.Recently a concerted effort was made within the EU-funded project VIBES7to present a cohesive performance comparison of methods for detecting and describing local features8.This campaign focused on affine invariant methods that provide the most generic and useful means for feature-based recognition of objects or scenes.The treatment was presented in two papers, one describing methods for detecting local feature points[15]and the other describing methods for constructing feature vectors describing these points[14],software for executing the different methods and running the benchmark was also made available9.Thefirst paper[15]reviews affine-invariant region detectors and considers performance under:blur,JPEG artifacts,light change,and scale change.Detectors considered are:Harris affine detector,Hessian affine detector,maximally stable extremal region detector,edge-based region detector,intensity extrema-based region detector,and entropy-based region detector.The second paper[14]compares the performance of local descriptors.Descriptors consid-ered are shape context,steerablefilters,PCA-SIFT,differential invariants,spin images,SIFT, complexfilters,moment invariants and cross-correlation.2.4ImageCLEFImageCLEF is the image retrieval track of the Cross Language Evaluation Forum(CLEF)[1, 18].It is not strictly a CBIR benchmarking event as it allows the use of meta-data,i.e.,text that appears around the image or in the image title.The primary purpose of the CLEF campaign is to promote the multi-lingual searching of such data,however,a secondary goal is to investigate combining text and CBIR.ImageCLEF offers two main image retrieval tasks,one over collections of photographic images and one over collections of medical images.Since its inception in2003,ImageCLEF has drawn interest from both academics and commercial research organisations from the areas of CBIR,Cross-Language Information Retrieval,and user interaction.7http://www.nada.kth.se/sullivan/VIBES/8/˜vgg/research/affine/9/˜vgg/research/affine/2.5Neural Networks Council Standards CommitteeIEEE Neural Networks Council Standards Committee has a working group on pattern recog-nition benchmarks10with the goal of providing“easy access to data sets and algorithms which may be used for benchmarking and comparison purposes via the[internet]”.There is a webpage with lists of datasets,algorithm code,and publications.2.6MUSCLEThe EU-sponsored Network of Excellence MUSCLE11(Multimedia Understanding through Semantics,Computation and Learning)is developing systems and methodologies for automat-ically extracting semantic information from multimedia data.The MUSCLE benchmarking workpackage12has been established to develop tools for evaluating and comparing these algo-rithms,and to promote the use of these tools amongst the MUSCLE members.To achieve this large test databases are being assembled and regular evaluation projects are planned.In France the Million image CLIC(CEAList Image Collection)database[16]has been as-sembled by the Laboratoire d’ingnerie de la connaissance multimdia multilingue(LIC2M/CEA-LIST).The database contains15,900images that have undergone69different transformations. The group have also organised the ImagEV AL13competition for automatic recognition of pic-tures.The competition consists of several tasks including retrieval of transformed images(about 30,000images generated from2000using various image transformations),combined text and image retrieval,text detection and recognition in images,object recognition,and attribute ex-traction(e.g.indoor,outdoor,people present,etc.).The Paris School of Mines has provided another image database containing images under different ground truth illumination conditions.In Austria the massive CIS-Benchmark database of coin images,containing over100,000 ground-truthed images and1,500object classes,has been assembled as part of Project Dagobert at the ARC Seibersdorf Research Centre.In addition,the Partial Planar Objects Database has been compiled at TU Graz and consists of20different objects seen from varying angles;ground truth includes both the object name and viewing angle.Network members have also collect numerous databases containing video with ground truth labelling.The majority of databases compiled are available for public(if not always commer-cial)use.2.7TRECVIDA recent success story in an area closely related to CBIR benchmarking is TRECVID14that started in2001as a video retrieval track of the Text REtrieval Conference(TREC).In2003 TRECVID grew into an independent evaluation forum for research in automatic segmentation, 10/nnc/index1.html1112http://muscle.prip.tuwien.ac.at/index.php14/projects/trecvid/indexing,and content-based retrieval of digital video.Its aim is to promote progress in content-based retrieval from digital video via open,metrics-based evaluation15.The evaluation is an annual event and provides a large test collection,uniform scoring procedures,and a two-day workshop for organizations interested in comparing the results of their video retrieval systems.For2005the tasks considered for the TRECVID evaluation were:shot boundary detection, classification of types of camera motion,high-level feature extraction,a high-level search task which including query-based retrieval and browsing,and exploration of raw unedited BBC footage(rushes).High-level features were considered to be labels that were clear to humans, such as people walking/running:segment contains video of more than one person walking or running,or USflag:segment contains video of a USflag.A request to establish a CBIR track at TREC was rejected on the grounds that there were no databases large enough that could be freely distributed[18].2.8PEIPAThe principal aim of the Pilot European Image Processing Archive16(PEIPA)is to provide in-formation,datasets and software to measure and compare the effectiveness of image processing and computer vision algorithms.The archive is supported by the EU-funded project Perfor-mance Characterization in Computer Vision(PCCV)for Benchmarking Vision Systems17,the University of Essex,and the British Machine Vision Association.It offers a comprehensive online resource covering many aspects of benchmarking and performance characterisation in computer vision,including tutorials on benchmarking and links to databases.PEIPA aims to distribute test datasets to researchers so they can quantify and compare the performance of their algorithms.A test harness called HATE has been prepared to automate much of this process, and results can optionally be uploaded to the PEIPA website and made available to other re-searchers interested in making comparisons.3The Challenge of Assembling Test DataOne of the greatest challenges facing the CBIR benchmarking community is the availability of test databases[18].These databases not only need to contain thousands of images with associated ground truths,but they must be royalty-free so they can be freely distributed.This leads to two key problems:obtaining royalty free images,and generating ground truth.Many databases do exist,but these have typically been limited to contain material that has been donated or specially captured by the creators of the database.This has restricted the devel-opment of very large databases and made database generation a labour intensive process.While some creative solutions have been found,such as collaboration with Project Dagobert to form the CIS-benchmark,and using image transformations to extend database size[16],it remains to find a general solution to generating diverse,realistic databases for CBIR benchmarking.15Guidelines for the TRECVID2005Evaluation.16/index.html17/benchmark/A solution to the copyright issue is offered by Creative Commons18,a non-profit organi-sation that offers artists and authors an alternative to full copyright.Breaking away from the traditional“all rights reserved”copyright Creative Commons offers a“some rights reserved”copyright,allowing copyright holders to licence their material freely or with constraints such as Noncommercial,No Derivative Works or Share Alike.Importantly Creative Commons has been embraced by many online organisations including Flickr19—one of the most popular image sharing site on the internet—which is the home of millions images from users across the globe.Flickr’s Creative Commons pool20provides a searchable database of publicly licensed pho-tos.There are currently more than4million images available under Creative Commons licenses at Flickr and this number is steadily increasing.Many of these images also have“tags”describ-ing their content and comments from users who have viewed the image.Automatically building databases from publicly licensed images from sites like Flickr(or OpenPhoto21)simply requires writing a web-crawler to autonomously browse the site,down-loading images and their associated tags,comments,and author and copyright information,and compiling this into a database.Tags and comments can provide an initial basis for generating ground truth,and author and copyright information can be used to ensure that copyright restric-tions are adhered.As Flickr allows uses to“tag”and comment on images on an ongoing basis, it is possible to have the web-crawler automatically update the tags and comments associated with each image as additional information is posted.Thousands of users add images,tags and comments to Flickr on a daily basis.This is an invaluable opportunity to generate test data from the very environment that CBIR systems are built for,and to spur development towards the day when CBIR systems are helping the users of such sitesfind the content they require.4ConclusionToday effective benchmarking of CBIR is within reach.Frameworks have been established and effective methodologies outlined.The challenge remains to tune performance measures to the needs of developers,potential users and buyers of CBIR systems,and assemble large realistic test databases.With the ever-increasing growing amount of image content available online under the Creative Commonsflexible copyright,material for databases can be compiled rapidly and efficiently without traditional copyright bining this with forums like Flickr that allow multi-user posting,labelling and commenting of images,and it is becoming feasible to establish the realistic test databases required for a general“TREC-style”CBIR benchmark. References[1]Paul Clough,Mark Sanderson,and Henning M¨u ller.The clef cross language image retrieval track(imageclef)2004.In CIVR,pages243–251,2004.518/19fl20http://www.fl/creativecommons/21/[2]G.Csurka,C.Dance,C.Bray,and L.Fan.Visual categorization with bags of keypoints.InProceedings Workshop on Statistical Learning in Computer Vision,2004.5[3]Gy.Dork´o and C.Schmid.Selection of scale-invariant parts for object class recognition.In ICCV,pages634–640,2003.5[4]R.Fergus,P.Perona,and A.Zisserman.Object class recognition by unsupervised scale-invariantlearning.In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, volume2,pages264–271,Madison,Wisconsin,June2003.5[5]Vittorio Ferrari,Tinne Tuytelaars,and Luc J.Van Gool.Simultaneous object recognition andsegmentation by image exploration.In ECCV(1),pages40–54,2004.5[6]O.Firschein,M.Fischler,and Takeo Kanade.Creating benchmarking problems in machine vision:Scientific challenge problems.In Proceedings of the1993DARPA Image Understanding Workshop, April1993.2[7]N.Gunther and G.Beretta.Benchmark for image retrieval using distributed systems over theinternet:Birds.Technical report,HP Labs,2000.2[8]Clement H.C.Leung and Horace Ho-Shing Ip.Benchmarking for content-based visual informationsearch.In VISUAL’00:Proceedings of the4th International Conference on Advances in Visual Information Systems,pages442–456,London,UK,2000.Springer-Verlag.2,3[9]David G.Lowe.Object recognition from local scale-invariant features.In ICCV’99:Proceedingsof the International Conference on Computer Vision-Volume2,page1150,Washington,DC,USA, 1999.IEEE Computer Society.5[10]St´e phane Marchand-Maillet.Construction of a formal multimedia benchmark.In Proceedings ofthe European Signal Processing Conference(EUSIPCO2002),Toulouse,France,September2002.(Invited paper).2[11]D.Martin,C.Fowlkes,and J.Malik.A database of human segmented natural images and its ap-plication to evaluating segmentation algorithms and measuring ecological statistics.International Conference on Computer Vision,July2001.2,4[12]David R.Martin,Charless Fowlkes,and Jitendra Malik.Learning to detect natural image bound-aries using brightness and texture.In NIPS,pages1255–1262,2002.4[13]David R.Martin,Charless Fowlkes,and Jitendra Malik.Learning to detect natural image bound-aries using local brightness,color,and texture cues.IEEE Trans.Pattern Anal.Mach.Intell., 26(5):530–549,2004.4[14]K.Mikolajczyk and C.Schmid.A performance evaluation of local descriptors.IEEE Trans PatternRecognition and Machine Intelligence,pages1615–1630,October2005.2,5[15]K.Mikolajczyk,T.Tuytelaars,C.Schmid,A.Zisserman,J.Matas,F.Schaffalitzky,T.Kadir,andL.Van Gool.A comparison of affine region detectors.Accepted in International Journal of Com-puter Vision,2005.2,5[16]P-A.Mo¨e llic,P.H`e de,G.Grefenstette,and let.Evaluating content based image retrievaltechniques with the one million images clic testbed.In Proc World Enformatika Congress,pages 171–174,Istanbul,Turkey,February25-272005.6,7[17]H.M¨u ller,P.Clough,A.Geissbuhler,and W.Hersh.Imageclef2004-2005:results,experiencesand new ideas for image retrieval evaluation.In Proceedings of the Fourth International Workshop on Content-Based Multimedia Indexing(CBMI2005),to appear,Riga,Latvia,2005.2,3[18]H.M¨u ller,A.Geissbuhler,S.Marchand-Maillet,and P.Clough.Benchmarking image retrievalapplications.In Visual Information Systems,2004.5,7[19]Henning M¨u ller,Wolfgang M¨u ller,St´e phane Marchand-Maillet,David McG.Squire,and ThierryPun.A framework for benchmarking in visual information retrieval,2003.2[20]Wolfgang M¨u ller,St´e phane Marchand-Maillet,Henning M¨u ller,and Thierry Pun.Towards a fairbenchmark for image browsers.In SPIE Photonics East,Voice,Video,and Data Communications, Boston,MA,USA,5–82000.3[21]Step´a n Obdrz´a lek and Jiri Matas.Object recognition using local affine frames on distinguishedregions.In BMVC,2002.5[22]Andreas Opelt,Michael Fussenegger,Axel Pinz,and Peter Auer.Weak hypotheses and boostingfor generic object detection and recognition.In ECCV(2),pages71–84,2004.5[23]Paul Over,Clement H.C.Leung,Horace Ho-Shing Ip,and Micheal Grubinger.Multimedia re-trieval benchmarks.IEEE Multimedia,11(2):80–84,April-June2004.3[24]F.Rothganger,zebnik,C.Schmid,and J.Ponce.3d object modeling and recognition usingaffine-invariant patches and multi-view spatial constraints.In CVPR(2),pages272–280,2003.5 [25]Cordelia Schmid and Roger Mohr.Local grayvalue invariants for image retrieval.IEEE Trans.Pattern Anal.Mach.Intell.,19(5):530–535,1997.4[26]Josef Sivic,Frederik Schaffalitzky,and Andrew Zisserman.Object level grouping for video shots.In ECCV(2),pages85–98,2004.5[27]Josef Sivic and Andrew Zisserman.Video google:A text retrieval approach to object matching invideos.In ICCV,pages1470–1477,2003.5[28]Josef Sivic and Andrew Zisserman.Video data mining using configurations of viewpoint invariantregions.In CVPR(1),pages488–495,2004.5[29]Arnold W.M.Smeulders,Marcel Worring,Simone Santini,Amarnath Gupta,and Ramesh Jain.Content-based image retrieval at the end of the early years.IEEE Trans.Pattern Anal.Mach.Intell.,22(12):1349–1380,2000.1[30]Tinne Tuytelaars and Luc J.Van Gool.Content-based image retrieval based on local affinely in-variant regions.In VISUAL’99:Proceedings of the Third International Conference on Visual Information and Information Systems,pages493–500,London,UK,1999.Springer-Verlag.4 [31]Kelvin William Thompson.Popular lectures and addresses[1891-1894].In Bartlett’s FamiliarQuotations,Fourteenth Edition,page723a.1968.2。

Urban, J. and Jose, J.M. and van Rijsbergen, C.J. (2006) An adaptive technique for content-based image retrieval. Multimedia Tools and Applications 31(1):pp. 1-28./3586/An Adaptive Technique for Content-Based Image Retrieval Jana Urban,Joemon M.Jose and Cornelis J.van Rijsbergen({jana,jj,keith}@)Department of Computing Science,University of Glasgow,Glasgow G128RZ,UK Abstract.We discuss an adaptive approach towards Content-Based Image Re-trieval.It is based on the Ostensive Model of developing information needs—a special kind of relevance feedback model that learns from implicit user feedback and addsa temporal notion to relevance.The ostensive approach supports content-assisted browsing through visualising the interaction by adding user-selected images to a browsing path,which ends with a set of system recommendations.The suggestionsare based on an adaptive query learning scheme,in which the query is learnt from previously selected images.Our approach is an adaptation of the original Ostensive Model based on textual features only,to include content-based features to char-acterise images.In the proposed scheme textual and colour features are combined using the Dempster-Shafer theory of evidence combination.Results from a user-centred,work-task oriented evaluation show that the osten-sive interface is preferred over a traditional interface with manual query facilities. This is due to its ability to adapt to the user’s need,its intuitiveness and thefluidway in which it operates.Studying and comparing the nature of the underlying information need,it emerges that our approach elicits changes in the user’s need based on the interaction,and is successful in adapting the retrieval to match the changes.In addition,a preliminary study of the retrieval performance of the osten-sive relevance feedback scheme shows that it can outperform a standard relevance feedback strategy in terms of image recall in category search.Keywords:content-based image retrieval,adaptive retrieval,ostensive relevance, relevance feedback,user evaluationAbbreviations:CBIR–Content-based Image Retrieval;RF–Relevance Feedback;OM–Ostensive Model1.IntroductionThe semantic gap has become a buzzword in Content-based Image Retrieval(CBIR)research.It refers to the gap between low-level im-age features and high-level semantic concepts.Despite considerable research effort in thisfield over the last decade,there has not been any significant success for generic applications.Today,the research community has accepted the fact that it will probably be impossible to retrieve images by semantic content for several years.Instead,people have started to exploit relevance feedback techniques.Relevance feed-back is regarded as an invaluable tool to improve CBIR effectiveness,c 2004Kluwer Academic Publishers.Printed in the Netherlands.2Urban et alnot only because it provides a way to embrace the individuality of users, but it is indispensable in bridging the semantic gap.The semantic gap has further implications on the query formulation process.Since low-level features do not directly reflect the user’s high-level perception of the image content,the query formulation process is even more difficult than in text retrieval systems.Moreover,the underlying search need is dynamic and evolving in the course of a search session.Most often,image searching is explorative in nature, where searchers initiate a session and learn as they interact with the system.However,current CBIR systems fail to deal with the dynamic nature of search needs.In this work,we introduce an adaptive retrieval system,which places particular emphasis on the“human in the loop”.In the proposed system the retrieval process is iterative,updating the system’s knowledge of the user’s information need based on the user’s implicit feedback.To this end,it incorporates an adaptive image retrieval technique based on the Ostensive Model(OM)of developing information needs[3].In the underlying interaction model the user builds up a browsing tree of interesting images by choosing one image from a recommended set to be appended to the browsing path in each iteration.The system’s recommendations are based on a query constructed from the current path of images.For the query,each image in the path is considered relevant,but the degree of relevance is dependent on age:it decreases over time when new images are appended.In this way,the OM is a special kind of relevance feedback model,in which a query is refined by the user implicitly selecting images for feedback.It recognises and addresses the issue of dynamic nature of information needs,and has the advantage of allowing for an intuitive and user-centred search process.In order to evaluate the effectiveness of the ostensive relevance ap-proach,we built three systems:a baseline system with manual query facilities and two variances based on the OM.Results of a user-centred, work task-oriented evaluation show that the ostensive browsing inter-faces are generally preferred over the comparative system.Its strengths are considered to lie in its ability to adapt to the user’s need,and its very intuitive andfluid way of operation.In addition,the retrieval performance of the underlying technique is evaluated in a simulated environment assuming a user conducting cate-gory search.In comparison to a traditional relevance feedback technique as baseline,it shows that the query learning scheme based on the OM can outperform the baseline strategy in terms of the total number of images belonging to a specific category found.The main contributions of this paper are three-fold.First,we show how the OM can be adapted to both textual and content-based featuresAn Adaptive Technique for CBIR3 with the proposed query learning scheme.Second,we provide results from an extensive user evaluation of CBIR st but not least, we highlight the merits of the ostensive relevance feedback strategy in terms of retrieval performance and point out future directions for a continuative evaluation.The remainder of the paper is organised as follows.Section2pro-vides an account of the motivations for our approach:the intrinsic difficulties of the query formulation process and a review of current approaches to relevance feedback.In particular,these are compared to the notion of ostensive relevance.In Sections3&4we introduce the systems we used for the evaluation and describe the proposed adaptive query learning scheme.The experimental methodology is detailed in Section5,followed by a review of our experimental results.The main findings of the user study are summarised and discussed in Section7, while Section8adds results of a quantitative evaluation of the under-lying query learning scheme.Finally,we conclude and provide a brief outlook to the future.2.Content-based Image RetrievalEvery information seeking process is necessarily initiated by an infor-mation need on the user’s side.Therefore,the success of a retrieval system depends largely on its ability to allow the user to communicate this need.For the user,the query formulation often poses a signifi-cant hurdle due to manifold reasons,which will be discussed briefly in this section.A popular means to overcome these problems is relevance feedback.This section therefore also covers the idea behind relevance feedback and discusses some examples in the CBIR literature.2.1.Query Formulation ProblemOne of the major issues in information searching is the problems asso-ciated with initiating a good query.However,it has been well accepted that searchersfind it hard to generate a query due to the following reasons[21].Firstly,searchers do not know how the documents are rep-resented.This is especially true for CBIR systems due to the low-level representation of content-based features.It is substantially difficult to formulate a query in terms of the system’s internal representation of the documents,which is often composed of a collection of low-level features in the pixel domain.Secondly,the underlying information need itself is typically vague (“I don’t know what I’m looking for,but I’ll know when Ifind it”[28]).4Urban et alDue to the uncertainty about what information is available or about the actual need itself,a search process usually starts with an explorative phase,in which the user tries the system and its options,and tests what kind of information is returned.Through exposure to new objects, the user’s context is changing and their knowledge state is developing, triggering changes in the user’s need[10,8].This often leads the user to reformulate the initial query either to make it more precise after having gained some knowledge about the collection make-up,or to steer it in different directions after having seen other interesting documents,or a combination of both.2.2.Relevance FeedbackIn order to alleviate the query formulation problem,a popular approach is to incorporate relevance feedback into the retrieval system.Relevance feedback is a commonly accepted means to improve retrieval effective-ness and has been studied extensively(e.g.[19,21]).In IR systems incorporating relevance feedback,the search process is initiated with a user-supplied query,returning a small number of documents to the user.The user is then given the possibility of indicating which of the returned documents are actually useful(relevant).Based upon those user relevance judgments the original query is automatically reformu-lated.The new query is again matched against the documents in the collection,returning an improved set of documents.This process can continue until the user’s information need is satisfied.As a consequence, a retrieval system based on relevance feedback is inherently interactive.As mentioned earlier,relevance feedback is a tool to kill two birds with one stone.It is used to bridge the semantic gap by avoiding or helping the query formulation process,while at the same time it natu-rally provides a way to embrace the individuality of users.The user’s judgement of relevance is naturally based on their current context, their preferences,and also their way of judging the semantic content of the images(e.g.[23,7]).Initially,the system uses the low-level image features as a quick way to‘estimate’the relevance values of the images. By prompting the user for relevance feedback,this rough estimation can be improved to steer the results in the direction the user has in mind.A comprehensive study of existing relevance feedback techniques in image retrieval can be found in[35].2.2.1.AssumptionsDifferent methods have been adopted on the basis of often diverging assumptions.One major variance is what actually is fed back to the sys-tem.Often,binary feedback for positive and negative examples is usedAn Adaptive Technique for CBIR5(e.g.,[29]),some additionally associate a‘degree of(ir)relevance’(e.g.,[18]),and others interpret the feedback only as a‘comparative judg-ment’(e.g.,[6]).Depending on the assumptions taken in this respect, the resulting systems can be distinguished further:While positive feed-back has been used for feature selection(e.g.,[17])or feature relevance weighting(e.g.,[18,11]),using both positive and negative feedback gives rise to treating the retrieval process as a classification or learning problem.Many systems now strike the latter path,transferring meth-ods previously employed mainly in thefield of artificial intelligence (e.g.,[30,34,29]).However,they are hindered by one major obstacle, namely the small sample issue[35].The user feedback in each iteration only gives a tiny number of training samples relative to the high dimen-sion of the feature space and the possible number of classes for general multimedia data.Consequently the results are unreliable on their own, requiring a lot of extra effort,e.g.an off-line training phase following the on-line search as employed in[34]to arrive at meaningful results.This is often undesirable,since it militates against the real-time requirement of relevance feedback.The main advantage of relevance feedback,namely that it allows real-time learning from user interaction to improve the system’s performance during one search session,is thus undermined.A further characteristic of existing systems is how they gain infor-mation about the user’s judgment of relevance.One can distinguish between two distinct approaches:explicit and implicit relevance feed-back.Explicit relevance feedback,which is assumed in most current systems(e.g.,[6,18,30]),asks the user to explicitly state whether a returned document is relevant or not.Therefore,the user interface has to provide for facilities to input this judgment by the user.This (additional)task is often considered as a burden to the user,since it is difficult for most users to assess the degree of relevance of one document in terms of a numeric value[33],which presumes consid-erable knowledge of the retrieval environment.Even though it might be much easier to determine whether an image is actually relevant to the user compared to formulating a good query,it still requires often considerable cognitive effort from the user to communicate this relevance assessment to the system.For this reason,a less-distracting possibility to gain relevance feedback is implicitly from the users,simply by observing their interaction with the system[32].Another assumption underlying nearly all current relevance feedback techniques is that a user’s information need is static and there is no provision for updating user’s judgements.Especially those techniques that attempt to classify or separate the document space into relevant and non-relevant,explicitly rely on the assumption that—within one search session—all documents are either relevant or not regarding the6Urban et aluser’s information need.In other words all documents are assumed of having constant relevance values.However,this is a rather simplifying view of the real-world.Not only are the user’s actions time-dependent—resulting in giving inconsistent feedback,but even more importantly, the user’s goals are also time-dependent and might change either grad-ually or quite abruptly.The trigger for such changes is most often a result of having come across something interesting that they have not even considered at the beginning of the search.For this reason the system proposed here is based on the Ostensive Model,which captures “the intentionality of an information need that is assumed to be devel-oping during the searching session”[2].The details of the model will be described in Section2.4.In order to avoid asking the user for explicit relevance feedback, the approach taken here is the one of interpreting a user’s selection of one document over others as an indication that this document is more relevant.Due to this‘ostensive’approach,and in fact the Ostensive Model underlying this system,only positive examples are there to work with.The positive feedback is used for query learning,in which the query itself is learnt and subject to adaptation.On the basis of the documents selected,the system creates a new query consisting of a combination of these documents’features.This query adapts in every iteration of the retrieval process.2.3.Query Learning ApproachesTo relieve the user from the query formulation problem,a method that is able to“guess”or“learn”the user’s desires purely from a set of exam-ples is believed to be very advantageous.Algorithms for CBIR that rely on query refinement as a way of incorporating relevance feedback have attracted a lot of interest(e.g.,[11,18,20]).They are based on the early work on query shifting in the text retrieval domain[19].Query shifting is the prevalent technique of adapting an initial query,which aims at moving the query toward the region of the feature space containing the set of relevant documents and away from the region of the set of non-relevant documents[11,18,20].The underlying assumption is that the user has an ideal query in mind,and the system’s task is tofind this ideal query.Often query refinement methods are used in combination with feature re-weighting,which is based on a weighted similarity mea-sure where relevance feedback is used to update the weights associated with each feature in order to model the user’s need[11,18,20].The approach proposed in this paper is a form of dynamic query learning,combining both query shifting and feature re-weighting tech-niques.Unlike other query learning methods,which lack the ability toAn Adaptive Technique for CBIR7 adjust the degree of relevance over time,the emphasis in our approach lies on dynamically adapting relevance values.The temporal dimen-sion to the notion of relevance introduced in the Ostensive Model is exploited to achieve this different view of adaptive query.The details of the model will be described in the following.2.4.Ostensive RelevanceThe Ostensive Model(OM)of developing information needs was ini-tially proposed by Campbell and van Rijsbergen[4].It combines the two complementary approaches to information seeking:query-based and browse-based.It supports a query-less interface,in which the user’s indication of interest in an object—by pointing at it—is interpreted as evidence for it being relevant to their current information need.There-fore,it allows direct searching without the need of formally describing the information need.The query is automatically evolved from a path of documents selected in the course of one search session.By accepting that the user’s need is dynamically changing during a search session,the OM adds a temporal dimension to the notion of relevance.A recently selected object is regarded more indicative to the current information need than a previously selected one.So,in this sense,the degree to which a document is considered relevant is continuously updated to reflect the changing context.The definition of Ostensive Relevance summarises the main points[3]:The Ostensive Relevance of an information object is the degree to which evidence from the object is representative/indicative of the current information need.The interaction with an Ostensive Browser follows an intuitive scheme. Here the user starts with one example document as the query,and as a result is presented with a new set of candidate documents(top ranking documents according to the similarity measure used).As a next step,the user—through selecting one of the returned documents—updates the query,which now consists of the original document and the selected document of the set of returned candidates.After a couple of iterations,the query is based on a path of documents.Similarly to the Path Model described in[5]for activity-centred information access, emphasis is set on the user’s activity and the context,rather than the predefined internal representation of the data.A path represents the user’s motion through information,and taken as a whole is used to build up a representation of the instantaneous information need.The weight of how much each document along the path contributes to the next query can be chosen with different objectives in mind. The weighting schemes are referred to as ostensive profiles,and reflect8Urban et alFigure1.The ostensive pathhow relevance(or uncertainty)changes with age(age being interpreted as the order of selection or the position along the path).With the previously elaborated considerations in mind,the most plausible profile supports uncertainty increasing with age.The further back in time one document has been selected during the retrieval process,the more uncertainty is associated with it that it actually reflects the user’s infor-mation need,or in other words,the less relevant it is considered for the query.This profile is also the one favoured by the original definition of Ostensive Relevance.For a comparative evaluation of different profiles and their interpretations please refer to[2].Since the whole path is visible to the users,they can jump back to a previous object along the path if they get the feeling that they are stuck or moving in the wrong direction.From there a new path can be explored,starting from the original object(the root)and the newly selected object.The resulting paths form a tree-like structure, originating from one root and branching at various objects(see Fig.1).The OM thus captures the developing information need of the user during a search process,and incorporates the uncertainty,which nec-essarily exists due to the imprecise awareness of one’s own information need and the difficulties of expressing it.In its original conception the OM was integrated with the Binary Probabilistic Model(BPM)of IR to create an operational retrieval model[3].This was possible,since the images that were used in the implementation of the OM were represented by a set of index terms. However,if one takes into account content-based features to index images,the interpretation of the BPM becomes rather difficult.In the BPM,relevance scores are based on estimating or calculating the prob-abilities that,if the document is relevant(or non-relevant respectively),An Adaptive Technique for CBIR9 a particular feature will be observed.In other words,the probability is assessed depending on whether some chosen feature is either present or absent.This interpretation was developed in the text retrieval domain, where a document can be represented by a set of index terms only. CBIR systems rely on more complex indexing features,in which it is hard to tell whether a particular feature can be observed.It is ques-tionable whether or not content-based image features can be treated in a binary fashion,e.g.is it sensible to say the image contains the index term“green”if the colour histogram contains non-zero values for the bins referring to green?What makes matters even more complicated is the fact that most CBIR systems rely on multiple representations of image content.It becomes apparent that the interpretation of the binary probabilistic model in terms of content-based image features is rather inappropriate.For this reason,we introduce the use of adaptive queries within an operational retrieval system based on the OM.3.The SystemsTo test our ideas about adaptive query learning strategies,three proto-type system have been implemented and evaluated.In this section we will describe these systems.3.1.Features&SimilaritiesThe systems use two distinct features:text annotations and visual features.The text feature is extracted from the keyword annotations of the images,and the visual feature is based on colour histograms representing an image’s global colour distribution represented in the HSV colour space.An image is represented by a two-dimensional feature vector,which is a term vector(text feature)and a histogram bin vector(colour feature)respectively.The term vector is weighted by the tf×id f (term frequency,inverse document frequency)weighting scheme.The similarity between documents is calculated as the combined score of the two similarity values for each feature using the Dempster-Shafer combination(see Section4.1).In the case of text similarity,the cosine measure[22]is used:sim(D,Q)=T V D·T V Q ||T V D||||T V Q||while the visual similarity is determined by histogram intersection[27]:sim(D,Q)= lHi=1min(H D[i],H Q[i]) min(||H D||,||H Q||)where T V stands for a document’s term vector,H for its colour his-togram vector,and l H for the histogram vector length.Both similar-ity measures are widely used in combination with the chosen feature representation.3.2.The Interfaces3.2.1.The Ostensive BrowsersTwo versions of the ostensive browsing approach have been imple-mented:one with a pure ostensive browsing scheme(Fig.2(b))and the other allowing explicit feedback within ostensive browsing(Fig.2(c)). In both systems the user starts with an image in the browse panel (in Fig.2(c)-2).The initial image is obtained in a pre-keyword search from which the user is given the opportunity to choose an image to explore further.When selecting an image,the system returns a set of most similar images as candidate images.We chose to present six images as new candidates.Of those candidates,the user clicks on the most appropriate one.At this stage,the system computes a new set of similar images based on an adapted query and presents it to the user. As in Figure2(b)&(c),this process creates a path of images,which is represented in the interface.At any point the user can go back to previously selected images in the path and also branch off,by selecting a different candidate.The complete search session can continue to iterate between keyword search followed by browsing sessions,as long as the user is not satisfied with the retrieved images.Since the screen space is very limited the different paths are often overlapped resulting in a large degree of clutter,afish-eye view as alternative(see Fig.2(b))is provided.The user can switch between these views during the search.To view details of the image,there is the possibility of viewinga single selected image in full-size in a separate panel(in Fig.2(c)-3).It also contains some meta-data about the document,such as the photographer,title,date,and description.In between the full-size view and the thumbnails,a quick view is shown as a popup when the user moves the mouse over a thumbnail in the browse panel.Both browsers(Fig.2(b-c))attempt to adapt the query based on the user’s implicit feedback,which will be described in Section4.We provided two slightly different versions of the Ostensive Browser to allow for different levels of control.The Pure Ostensive Browser (POB)(Fig.2(b))does not allow for any control of feature terms or(a)MQS(b)POB(c)COBFigure2.The interfaces.weighting between the features.The system automatically adapts the query and also the feature weights.The learning of the feature weights is achieved in a similar fashion to[18],and they will be used as trust values in Dempster-Shafer’s evidence combination(see Section4.1)to combine the similarity scores.In addition,the interface for the Controlled Ostensive Browser (COB)provides options for selecting the features and their associated weights(in Fig.2(c)-1).It displays the search terms the system used to obtain the currently shown candidates.The automatically selected terms(the strategy of the selection is described in Section4),can bechanged by the user and thus the current candidates are exchanged for the ones resulting from the updated query.Another aspect of control is the adjustment of the feature weights.The user can control the weights between the two features by means of a slider.How to start the search?The problem with the ostensive search is the question of how to initiate the search,i.e.how to choose thefirst image that starts the path.As mentioned earlier,the current solution is to ask the user to formulate a keyword query,which returns a set of relevant images based on the textual feature.One of the returned images can then be chosen as the starting point.However,this is a rather ad-hoc approach,which again requires the user to formulate a query.We are currently investigating different solutions to this problem. One approach could be to pre-cluster the whole collection,and let the user browse through these clusters to choose a starting point.3.2.2.The Manual Query SystemAs baseline system,we used the Manual Query System(MQS)(Fig.2(a)) resembling a‘traditional’image retrieval system,which returns a set of relevant images in response to a user-given query.A query can be formulated by a set of keywords,and/or one or more images as‘visual examples’.The user can also set the weighting between the two features. If not satisfied with the results returned by the system,the user has to alter their query and so forth.4.Query Adaptation TechniquesIn the course of a search session,a user creates and moves along a path of images.During each iteration,the path changes and the query needs to be adapted accordingly.The selected documents are treated as evidence of the user’s information need,with a changing degree of uncertainty associated to each document:the older the evidence,the more uncertain we are that it is still indicative of the current infor-mation need.The degree of uncertainty is represented by an ostensive relevance profile[2],used to weigh the contribution of each path doc-ument.A separate query is constructed for each feature as a weighted combination of the documents’features.Text Query:A new text query vector is created by updating the term’s intrinsic weights(e.g.inverse document frequency(idf))with the ostensive relevance weights resulting from the ostensive profile.The query vector then consists of the union of the set of terms that appear in。

计算机工程应用技术本栏目责任编辑:梁书阿里云平台快速接入企业数据带来的益处戴俊梅1,陈龙2(1.南京大学金陵学院,江苏南京210000;2.南京烽火星空通信发展有限公司,江苏南京210000)摘要:据IDC 报告显示我国已进入大数据时代。

众厂商各类服务系统中传统的数据处理逐渐演变成独立的计算业务,从而为社会各界提供服务。

但企业自行建造大数据平台门要求高,诸如资金、场地、人员、技术等。

有幸,阿里云平台提供了一个开放、兼容的大数据生态平台体系,为中、小、微企业对大数据计算的需求提供了坚实后盾。

关键词:大数据;中小微企业;开放的计算平台;集群;在线计算;兼容;优势中图分类号:G642文献标识码:A文章编号:1009-3044(2020)34-0217-02开放科学(资源服务)标识码(OSID ):The Benefit of Enterprise Using Alibaba Cloud Platform DAI Jun-mei 1,CHEN Long 2(1.Nanjing University Jinling College,Nanjing 210000,China;2.Nanjing Fiberhome Starrysky Co.,Ltd,Nanjing 210000,China)Abstract:According to the IDC report,China has entered the era of big data.The traditional data processing in various service sys⁃tems which made by various manufacturers has gradually evolved into an independent business which supplys calculation.Howev⁃er,companies will be encounter with huge difficulties when building own big data platform.luckly,Alibaba Cloud platform can support computing capability of big data compatibly and sharing.these services can satisfy the desire of SME enterprises on big da⁃ta processing.Keywords :big data ;SME enterprises ;opening cloud platform ;cluster ;OLTP;compatibility ;advantage1大数据基本背景2018年IDC 发布的数字研究报告(Digital Universe )显示,我们所产生的数据量将超过40ZB (泽字节)。

专利名称:CONTENT BASED IMAGE RETRIEVAL 发明人:PÉREZ DE LA COBA, Sira申请号:EP2014/067056申请日:20140808公开号:WO2015/032585A1公开日:20150312专利内容由知识产权出版社提供专利附图:摘要:A method and non-transitory computer readable medium for content based image retrieval. The method includes selecting a query image, segmenting the selected query image by applying a segmentation technique, extracting features from the segmented query image by determining at least two feature descriptors, including colorfeature descriptors and texture feature descriptors, and determining a similarity of the query image to a plurality of images included in a database using the determined at least two feature descriptors of the segmented query image, features being extracted from each of the plurality of images included in the database by determining the at least two feature descriptors, the color feature descriptors and the texture feature descriptors including a simultaneous combination of different color spaces, and global and local statistical measurements being carried out on the simultaneous combination of the different color spaces.申请人:SHOT & SHOP地址:Avda. de los Prunos, 15, PE, 1ºB E-28042 Madrid ES国籍:ES代理人:DE CARLOS HERNANDO, Borja更多信息请下载全文后查看。

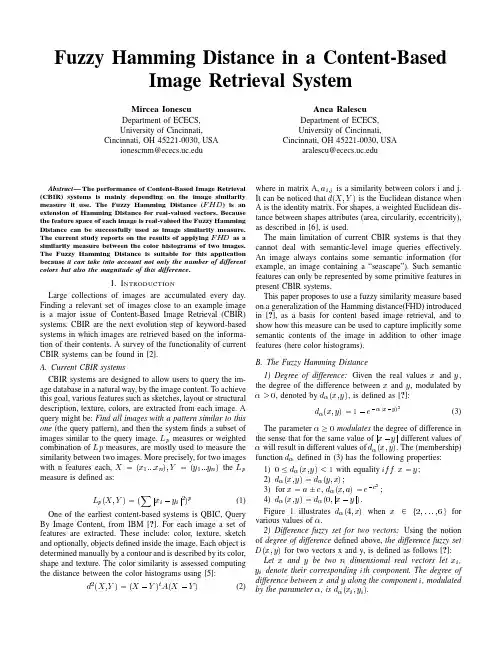

Fuzzy Hamming Distance in a Content-Based Image Retrieval SystemMircea IonescuDepartment of ECECS,University of Cincinnati, Cincinnati,OH45221-0030,USA ionescmm@Anca RalescuDepartment of ECECS,University of Cincinnati, Cincinnati,OH45221-0030,USA aralescu@Abstract—The performance of Content-Based Image Retrieval (CBIR)systems is mainly depending on the image similarity measure it use.The Fuzzy Hamming Distance()is an extension of Hamming Distance for real-valued vectors.Because the feature space of each image is real-valued the Fuzzy Hamming Distance can be successfully used as image similarity measure. The current study reports on the results of applying as a similarity measure between the color histograms of two images. The Fuzzy Hamming Distance is suitable for this application because it can take into account not only the number of different colors but also the magnitude of this difference.I.I NTRODUCTIONLarge collections of images are accumulated every day. Finding a relevant set of images close to an example image is a major issue of Content-Based Image Retrieval(CBIR) systems.CBIR are the next evolution step of keyword-based systems in which images are retrieved based on the informa-tion of their contents.A survey of the functionality of current CBIR systems can be found in[2].A.Current CBIR systemsCBIR systems are designed to allow users to query the im-age database in a natural way,by the image content.To achieve this goal,various features such as sketches,layout or structural description,texture,colors,are extracted from each image.A query might be:Find all images with a pattern similar to this one(the query pattern),and then the systemfinds a subset of images similar to the query image.measures or weighted combination of measures,are mostly used to measure the similarity between two images.More precisely,for two images with n features each,the measure is defined as:(1) One of the earliest content-based systems is QBIC,Query By Image Content,from IBM[?].For each image a set of features are extracted.These include:color,texture,sketch and optionally,objects defined inside the image.Each object is determined manually by a contour and is described by its color, shape and texture.The color similarity is assessed computing the distance between the color histograms using[5]:(2)where in matrix A,is a similarity between colors i and j. It can be noticed that is the Euclidean distance when A is the identity matrix.For shapes,a weighted Euclidean dis-tance between shapes attributes(area,circularity,eccentricity), as described in[6],is used.The main limitation of current CBIR systems is that they cannot deal with semantic-level image queries effectively. An image always contains some semantic information(for example,an image containing a“seascape”).Such semantic features can only be represented by some primitive features in present CBIR systems.This paper proposes to use a fuzzy similarity measure based on a generalization of the Hamming distance(FHD)introduced in[?],as a basis for content based image retrieval,and to show how this measure can be used to capture implicitly some semantic contents of the image in addition to other image features(here color histograms).B.The Fuzzy Hamming Distance1)Degree of difference:Given the real values and, the degree of the difference between and,modulated by ,denoted by,is defined as[?]:(3) The parameter modulates the degree of difference in the sense that for the same value of different values of will result in different values of.The(membership)function defined in(3)has the following properties:1)with equality;2)3)for,;4).Figure1illustrates when for various values of.2)Difference fuzzy set for two vectors:Using the notion of degree of difference defined above,the difference fuzzy set for two vectors x and y,is defined as follows[?]: Let and be two dimensional real vectors let, denote their corresponding th component.The degree of difference between and along the component,modulated by the parameter,is.2 2.53 3.54 4.55 5.560.10.20.30.40.50.60.70.80.91alpha=0.1alpha=0.6alpha=1alpha=20Fig.1.The membership function withand varying from 0.1to 20(some graphs for intermediate values of are removed forclarity).[?]The difference fuzzy set corresponding toiswith membership function given by:(4)In words,is the degree to which the vectors and are different along their th component.The properties of are determined from the properties of .3)The fuzzy Hamming distance as cardinality of a fuzzy set:Since the Hamming distance is the number of components along which two bit vectors are different (which is also in fact,the square of the Euclidean distance between two bit vectors)a fuzzy extension of this concept must necessarily be based on the concept of cardinality of a (discrete)fuzzy set.This concept has been studied by several authors including [?],[?],[?],[?],etc.Here the definition put forward in [?]and further developed in [?]is used.Accordingly,the cardinality of the fuzzy setis the fuzzy setwhere(5)In (5)denotes the th largest value of ;the valuesand are introduced for convenience,and denotes the operation.By analogy with the definition of the classical Hamming distance,and using the cardinality of a fuzzy set [?],[?],[?],the Fuzzy Hamming Distance ()is defined as follows [?]:Definition 1:Given two real dimensional vectors,and ,for which the difference fuzzy set ,with member-ship function defined in (4),the fuzzy Hammingdistance between and ,denoted byis the fuzzy cardinality of the difference fuzzy set,.denotes the member-ship function forcorresponding to the parameter .More precisely,(6)forwhere .In words,(6)means that for a given value ,is the degree to which the vectors andare different on exactly components (with the modulation constant ).Example 1:Considering two vectors and with,their is the same as that betweenthe vector,and(by property (4)of ).Therefore,their is the cardinality of the fuzzy setobtained according to (5).Forthis is obtained to be In words,the meaning of is as follows:the degree to which and differ along exactly components (that is,do not differ)is ,along exactly one component is ,along exactly two components is ,along exactly threecomponents is,and so on.4)Defuzzification of the Fuzzy Hamming Distance:As it is often the case with many fuzzy concepts,a non-fuzzy (crisp)approximation for them is useful.The non-fuzzy cardinality,of a fuzzy set is defined in [?]where it is alsoshown that this approximation of the fuzzy cardinality is equalto the cardinality (in classical sense)ofthe -level set of with .More precisely,denotes the closure of .Since the fuzzy Hamming distance is the (fuzzy)cardinality of the difference fuzzy set ,its defuzzification leads to a non fuzzy approximation for it.It can be easily verified[?],that withthe defuzzification of by taking its 0.5level set is the standard Hamming distance.In other words the defuzzification of ,is the number ofelements ofwith membership greater or equal to 0.5.In the remainder of the paper will denote the crispquantity obtained according to (7)whenis the fuzzy set defined in (6).That is,for the real vectors and ,(8)II.A DAPTINGTHEF UZZY H AMMING D ISTANCEIn this section a closer look is devoted to the modulator parameter .In particular,it is shown that can be tuned,to include a scaling factor which controls the sensitivity to the extent of variation.Tuning of the parameter can be doneso as to include context(other components of the two vectors) or to capture only local(current component)information. One way to set the value for is to impose a lower bound on the membership function subject to constraints on the difference between vector components,such as described in (9)and(10).(9) subject to(10) for a generic component pair,a desired lower bound on the membership value to(as defined in(6)), and some positive constant.In particular,can be set as where and denotes the maximum value in the column domain,which leads tofrom which it follows that(11) This leads to the the following formula for defining a global value for the parameter:M e m b e r s h i p d e g r e eFig.4.Membership function for the distance 2.Fig.5.Distance distribution overA.The Preprocessing ModuleThe images of interest (data based and query image)are preprocessed according to the following steps:1)Segmentation:Each image is segmented in amatrix of regions.For the experiments reported here twovalues,and are used;2)Compute color histograms:The color histogram for each region is computed.Because the RGB color space can have 16M colors,quantization to 4096colors,giving a color histogram of 4096bins,is used.The number of pixels from each region that have the color correspond-ing to each of these bins is computed.The output of the preprocessing module is the collection ofcolor histograms.B.The Similarity Assessment ModuleThe collection of histograms corresponding to the image data base,and the color histogram of the query image,are the input to this module.Each image is represented as a matrix of histograms,more precisely,image is represented as.The query image is represented as .The similarity function,,mapstwo images into a matrix whose entries are the FHD between corresponding regions of the argument images.More precisely,for two images,and ,(13)where thedenotes the Fuzzy Hamming Distance parameterized by (introduced in equation (12)),the per-centage of difference in two colors (relative to the possible range)needed in order to consider these colors different.Forthe current experiments,the value,that is of the maximum range,is used.It is important to note here,that segmentation of the image into regions and evaluation of the similarity between two images as a function of the similarities of their corresponding regions,assures that position information is also considered,along with color information.This way two images which have the same color histogram but different semantic content (e.g.an image and the one obtained by rotation)will not necessarily be identical.C.The Ranking ModuleThis module computes a score from the output of the similarity module and ranks images in order of the values of this score.To achieve this,in the current implementation ranking is done in two steps:1)Defuzzification:The fuzzy sets returned by the simi-larity module are defuzzified.For comparison purpose three defuzzification methods were used:the center of gravity(COG),extracting the crisp version of the Fuzzy Hamming Distance,nFHD (defined in section I-B),and the area under the curve (AUC).2)Aggregation and Ranking:The results of the defuzzi-fication step are aggregated into a final score using a weighted aggregation.Scores are ranked in nondecreas-ing order (images closest to the query have smallest number of different different colors).The first images,where is a user-defined value,are returned as relevant to the query image.IV.R ESULTSThe approach described in the preceding sections is applied to 850images in JPEG format from the Washington database [?](which contains 1000images,the remaining 150are inGIF).Images are represented asregions for both .For comparison purpose,a non-fuzzy similarity measure,based on the Euclidean distance,is also used.For the similarity method using FHD,each of the three defuzzification methods are applied for each query.In the rank-ing module two weighting schemes are used for aggregation: (1)equal weights,and(2)regions in the top portion of an image were assigned higher weights.The latter is important to enforce additional positional requirements(such as“make sure the retrieved image has a sky like the one in the query image”)for the retrieval procedure.Figure6shows the topfive results returned for a query.The first three columns represent,in order,the results obtained using FHD,COG,nFHD,AUC,for similarity assessment, with equal aggregation weights.The last column represents the topfive results returned by the Euclidean distance.The query image appears in thefirst row.To better analyze the results returned by the four methods,it is helpful to notice that the (semantic)category for the query image could be described as “seascape”(the sea in the bottom part of the image,an island across the middle of the image,and the sky in the top part of the image).Above each image is its score.Because of the different defuzzification methods these scores appear in different units. Only the score for the second column(nFHD)has a direct meaning,namely,the number of colors by which the image and the query image differ.As it can be seen fromfigure6each of the four methods re-trieves the query image as the best candidate and indeed,their results also coincide for the second and third image returned as well.From the fourth image on,results differ somewhat. Among the FHD based methods,COG gives the best result (this is quite reasonable since the fuzzy set for which it is applied is convex),followed by nFHD and by AUC.Relative difference between scores decreases when moving down each column.The last image in second column,which also appears as the fourth image in the third column is interesting.This image has a building with glass walls behind what appears to beflowering trees.The walls appear blue due to the reflection of the sky(unseen in the image),therefore,from color point of view and,in fact,from the spatial distribution of the color,this image is similar to the query image.However,this image is in a totally different semantic category than the query image. It appears that the Euclidean distance does essentially as well as the COG as it preserves to almost the same extent the semantic category of the query image.A difference emerges in row four:the image returned as the third candidate(which is the same as the fourth candidate returned by COG)contains some artifacts in the lower part of the image which puts it in a slightly different semantic category(e.g.“seascape with a small bridge or pier”).Therefore,one can conclude that COG preserves the best the image content both as color and semantic category.Indeed this can be even better seen when the next five candidates for the same query are considered as shown in figure7.All the images retrieved by the COG method preserve the semantic category.This method is followed in order by the Euclidean Distance,nFHD andAUC.0.0000000.0000009.0000000.00000029.9023585.00000019.5322960.36321936.53977011.00000023.9182100.54558945.02331615.00000024.6744760.62229145.94798616.00000025.1566320.674405Fig.6.Topfive query result-set using equal weights for each image region. Images have been divided into regions.First three columns correspond to COG,nFHD,AUC respectively;the last column uses Euclidean distance.0.0000000.0000009.0000000.00000029.902358 5.00000019.5322960.36321936.53977011.00000023.9182100.54558945.02331615.00000024.6744760.62229145.94798616.00000025.1566320.67440546.45811617.00000026.9373580.78304147.03379017.00000027.3264560.78609148.08201518.00000027.6411240.80994349.01931918.00000027.8863150.82537450.93927220.00000027.9538530.866814 Fig.7.Nextfive query result-set using equal weight for each image region. Images have been divided into regions.First three columns correspond to COG,nFHD,AUC respectively;the last column uses Euclidean distance.V.C ONCLUSIONS AND FUTURE WORKThe relevance of the result-set of an CBIR system is hard to assess.It is based on what is relevant for a user,given the query image in a particular context.That is,relevance is both subjective and for the same user,it may be context dependent. This study presented initial results on a new approach to measure similarity between images using the notion of Fuzzy Hamming Distance and its use to content based image retrieval.Even with the remark at the beginning of this section, results seem promising:The main advantage of the FHD is that the extent to which two different images are considered indeed different can be tuned to become more context dependent and to capture(implicit)semantic image information.Several mechanisms can be used to rank and aggregate the similarity measures in addition to the simple point-valued mechanisms illustrated here.These would include mechanisms that rank and aggregate directly fuzzy numbers without a(too early)defuzzification.Developing such mechanisms,experi-ments with other image features(HSV histograms,textures), and further experiments with the proposed methods belong to the future work in this direction.A CKNOWLEDGMENTMircea Ionescu’s work for this study was supported by a Graduate Fellowship from the Ohio Board of Regents. Anca Ralescu’s work for this study was partially supported by a JSPS Senior Research Fellowship at the Brain Science Institute,RIKEN,Japan,and grant N000140310706from the Department of the Navy.R EFERENCES[1] A.G.Barto,R.S.Sutton,and C.W.Anderson,“Neuronlike adap-tive elements that can solve difficult learning control problems,”IEEE Trans.Systems,Man,and Cybernetics,vol.SMC-13,pp.834–846, Sept./Oct.1983.[2] A.N.Michel and ler,Qualitative Analysis of Large ScaleDynamical Systems,New York:Academic Press,1977.[3]P.J.Werbos,“Neural networks&the human mind:New mathematicsfits humanistic insight,”Proceedings of the1992IEEE Trans.Systems, Man,and Cybernetics,where,1992,vol.1,pp.78–83.。

几何矩不变量在基于内容医学图像检索中的应用金丰华 秦磊 汪蕙 罗立民(东南大学生物医学工程系,南京210096)摘要 基于图像内容的检索(Content based image retrieval,CBIR),是当前比较热门也是比较难的研究课题。

针对基于内容的医学图象检索,我们提出几个对于图像旋转、伸缩、位移不变的几何矩不变量集,对于图像数据库的初检效果较好。

关键词 基于图像内容检索 几何矩 矩不变量 医学图像数据库中图分类号 R318 文献标识码 A 文章编号 1006 4915(2002)02 0007 04The Application of Geometric Moment Invariants inField of Content-Based Medical Image RetrievalJin Fenghua Qin Lei Wang hui Luo Liming(Dep ar tment of Biomedical Engineer ing,Southeast University,N anj ing210096)Abstract Content-based image retrieval(CBIR)is now a pop but diffcult field.In this paper,we use some moment invariants for content-based medical image retrieval.T hey r emain constant w hen images rotate、transfer and scale,so they are fit for the first step of retr ieval.Key words Co ntent-based image retrieval(CBI R) Geometric mo ment M oment invariant medical image database1 引言多媒体和互联网技术的发展使得图像的来源日益扩大,特别在医学领域,由于近年来CT、MRI等医学设备的发展和普及,使得医学图像数据量剧增,更好地管理和利用这些资源将有利于医学工作者及的科研人员的工作。

目录摘要 (I)ABSTRACT (II)绪论 (1)1 自动图像标注概述 (3)1.1 研究目的和意义 (3)1.2 现有图像标注算法分类 (3)2 用于图像标注的特征提取 (7)2.1 颜色特征提取 (7)2.2 纹理特征提取 (8)3 支持向量机模型 (12)3.1 SVM模型原理及核函数 (12)3.2 参数设置和训练算法 (16)3.2.1 参数的设置 (16)3.3.2 SVM的训练算法 (17)3.3 LIBSVM软件包 (19)4 SVM技术用于自动图像标注 (23)4.1 特征提取模块 (23)4.2 SVM分类模块 (23)4.3 实验结果及分析 (24)结束语 (26)致谢 (27)参考文献 (28)附录MATLAB程序源代码 (29)摘要近年来,自动图像标注(Automatic Image Annotation,AIA)技术已经成为图像语义理解研究领域的热点。

随着机器学习理论的不断发展,包括相关模型、分类器模型等不同的学习模型已经被广泛地应用于自动图像标注研究领域。

自动图像标注就是让计算机自动地给无标注的图像加上能够反映图像内容的语义关键词。

自动图像标注在图像检索研究领域中非常具有挑战性,是实现图像语义检索的关键。

现有的自动图像标注算法可以大致分为基于分类的标注算法、基于概率关联模型的标注算法以及基于图学习的标注算法等三大类。

本文重点研究了另外一种自动图像标注算法——基于SVM技术的标注算法,研究了SVM原理,构造SVM 分类器,应用matlab对图像进行纹理、颜色特征的提取,通过分类器,实现图像自动标注。