Multi-Channel Parallel Adaptation Theory for Rule Discovery

- 格式:pdf

- 大小:176.76 KB

- 文档页数:21

巴克码多普勒容限带宽的扩展杨文华 宋力平 王其扬 (航天工业总公司八院804所 上海200082)文 摘 介绍和分析了多普勒容限带宽扩展的几种方法,提出用设计包络约束(EC)滤波器的方法设计巴克码的多普勒频移补偿滤波器,可以同时进行旁瓣抑制和多普勒容限带宽的扩展,并且使信噪比损失较小。

经计算机仿真证明有较好的结果。

主题词 巴克码 多普勒容限带宽 包络约束滤波器 多普勒频移补偿滤波器收稿日期:1997 05 29系上海市宇航学会会员。

0 引言相位编码信号有许多优点,但它有多普勒频移敏感性。

当雷达信号回波存在多普勒频移时,脉压后主瓣加宽,旁瓣增大,甚至起不到脉冲压缩的作用,这就限制了它的应用,因此多普勒容限带宽的扩展日益受到重视。

一方面,人们通过寻找新的码型来解决这个问题,如NLFM 码(Non Linear FM Sig nals)就是通过取样和量化非线性调频信号而来的,它具有低旁瓣特性和多普勒频移的不敏感性;另一方面,人们在雷达系统和旁瓣抑制滤波器上进行了研究。

文献[2]提出了采用非相干处理结构来降低多普勒频移的敏感性,文献[3]在非相干处理结构的基础上,在某一多普勒频率上采用不同的最佳准则设计多普勒频移补偿滤波器,得到了比较好的效果。

在实际应用中,也采用了多路匹配滤波器等各种各样的处理方法。

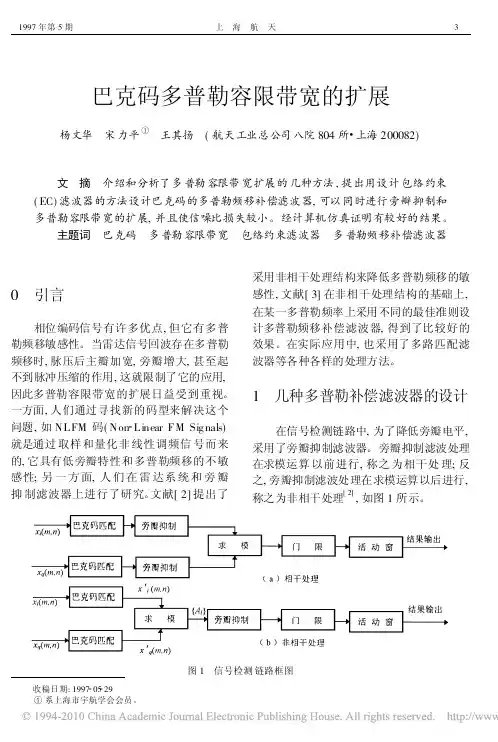

1 几种多普勒补偿滤波器的设计在信号检测链路中,为了降低旁瓣电平,采用了旁瓣抑制滤波器。

旁瓣抑制滤波处理在求模运算以前进行,称之为相干处理;反之,旁瓣抑制滤波处理在求模运算以后进行,称之为非相干处理[2],如图1所示。

图1 信号检测链路框图分析表明:采用非相干处理结构,可以降低多普勒频移的敏感性和扩展多普勒容限带宽[3]。

但是这样扩展是有限的:当目标高速飞行或旁瓣抑制滤波器应用于多普勒(PD)雷达时,非相干处理的多普勒容限带宽扩展已不能满足需要。

由于非相干处理结构的旁瓣抑制滤波器所考虑的只是多普勒频率的绝对值,其性能是关于零多普勒频率对称的,所以,就能以某一多普勒频率为中心频率,设计非相干处理结构的旁瓣抑制滤波器,得到较大的多普勒容限带宽。

多通道高采样率任意波形发生器的设计杨兴;王厚军;刘科【摘要】Arbitrary waveform generator (AWG) is a instrument which can generate arbitrary excitation signal. Output bandwidth, the number of channels and synchronous accuracy between different channels are the key specifications of AWG. In order to achieve high bandwidth, a multi-memories parallel structure is proposed to break through operation speed limitation of phase accumulator and look-up table. However, this structure introduces more complex synchronous error than traditional structures. The synchronization requirements of AWG with multi-memories parallel is analyzed emphatically in this article. The random initial phase of data clock and random trigger position are eliminated by deliberate distributing clock and trigger signal. The design of embedded phase calibration module is used to eliminate the random phase difference. Finally, the specifications of AWG are verified.%任意波形发生器是一种可以产生任意激励信号的测量仪器.输出波形带宽、通道数目以及通道间同步精度是任意波形发生器的关键指标.为了实现高带宽、多存储器并行的结构,并行存储技术被用来突破相位累加器和波形查找表工作速度的限制,然而该结构却存在比传统结构更加复杂的同步误差.针对此问题,该文分析了采用多存储器并行结构的任意波形发生器的同步需求,通过时钟、触发信号的精密分配以及内嵌相位校准模块设计,消除了数据时钟的随机初始相位、随机触发位置以及分频造成的随机相位差.最后对设计的任意波形发生器的相应指标进行测试验证.【期刊名称】《电子科技大学学报》【年(卷),期】2018(047)001【总页数】9页(P51-59)【关键词】任意波形发生器;高带宽;多存储器并行;同步【作者】杨兴;王厚军;刘科【作者单位】电子科技大学自动化工程学院成都 611731;电子科技大学自动化工程学院成都 611731;电子科技大学自动化工程学院成都 611731【正文语种】中文【中图分类】TM935任意波形发生器(AWG)可以产生频率稳定、分辨力高、频率切换速度快、相位噪声低的信号。

专利名称:LINK ADAPTATION SYSTEM AND METHOD FOR MULTICARRIER TRANSMISSIONSYSTEMS发明人:MAEDA, Junichi申请号:EP07738618.3申请日:20070307公开号:EP2115986A1公开日:20091111专利内容由知识产权出版社提供摘要:A communication device includes: a reception unit receiving communication signals having different frequencies, where the communication signals include logic channels, from another communication device; a measurement unit measuring line states of the received communication signals; a communication signal allocation unit allocating one or more communication signals to each of the logic channels; a margin setting unit setting a margin value for each logic channel and deciding the margin value set for the corresponding logic channel as a margin value of the communication signal; a communication speed decision unit deciding a communication speed of each of the communication signals such that an error rate of each of the communication signals is less than a prescribed value in line states degraded by the margin value of the communication signal from the measured line states; and a transmission unit notifying the other communication device of an allocation result of the communication signal and the decided communication speed.申请人:Sumitomo Electric Networks, Inc.地址:3-20-14 Higashi-gotanda Shinagawa-ku Tokyo 141-0022 JP国籍:JP代理机构:Cross, Rupert Edward Blount 更多信息请下载全文后查看。

一种基于切换步长的加权多模盲均衡算法牛聪;高勇【期刊名称】《现代电子技术》【年(卷),期】2013(000)019【摘要】研究了常数模算法、多模算法、加权多模算法,并分析了各个算法的优缺点。

针对加权多模盲均衡算法稳态误差小,但收敛速度慢的缺点,提出了一种基于切换步长的加权多模算法,最后对各算法在复信道环境下进行了仿真。

结果表明,改进算法不受相位偏移的影响,而且在稳态误差基本不变的情况下,加快收敛速度。

%The constant modulus algorithm (CMA),multi-mode algorithm (MMA) and weighted multimodulus algorithm (WMMA)are studied,and the merits and demerits of each algorithm are also analyzed. Though the steady-state error of weighted multimodulus algorithm is small,the rate of convergence is slow,for the reason,an improved weighted multimodulus algorithm based on switching step is proposed. Each of the algorithms is simulated in multi-channel environments. The results show that the improved algorithm is not influenced by the phase shift,and in the case of the steady-state error is essentially the same,the convergence speed can greatly accelerate.【总页数】4页(P64-66,69)【作者】牛聪;高勇【作者单位】四川大学电子信息学院,四川成都 610065;四川大学电子信息学院,四川成都 610065【正文语种】中文【中图分类】TN911.7-34【相关文献】1.一种基于MCMA的双模切换变步长的盲均衡算法 [J], 朱行涛;刘郁林;敖卫东2.基于多模误差切换的变步长盲均衡算法 [J], 李江;熊箭;归琳;余松煜3.一种新的基于多模误差切换的盲均衡算法 [J], 刘琚;代凌云4.基于加权因子非线性变化的改进加权多模盲均衡算法 [J], 张艳萍;崔伟轩5.基于自适应步长布谷鸟搜索算法优化的小波加权多模盲均衡算法 [J], 郑亚强因版权原因,仅展示原文概要,查看原文内容请购买。

水声通信多通道最大似然联合均衡译码方法

胡承昊;王海斌;台玉朋;汪俊

【期刊名称】《声学技术》

【年(卷),期】2022(41)4

【摘要】水平阵是水声通信中常见的阵列接收形式,但垂直阵也有重要的应用场合。

文章提出了一种适用于垂直阵的水声通信多通道最大似然联合均衡译码方法,相比

现有仅在信道均衡中叠加各通道信号的多通道判决反馈均衡方法(Multichannel Decision Feedback Equalizer,M-DFE),该方法利用最大似然准则,在一体化联合均衡译码过程中融合空间多通道接收信息,将空间增益直接用于抵抗水声信道多途衰

落和译码纠错,有效提升了水声通信的性能。

仿真结果表明,在误码率同样达到

10^(-3)的条件下,该方法所需信噪比相比M-DFE方法可下降2 dB。

海上实验结果显示,在多种信道条件下,该方法实现正确译码的通信速率是M-DFE方法的1.25倍以上。

【总页数】8页(P518-525)

【作者】胡承昊;王海斌;台玉朋;汪俊

【作者单位】中国科学院声学研究所;中国科学院大学

【正文语种】中文

【中图分类】TN929.3

【相关文献】

1.用于水声相干通信系统的联合迭代均衡和译码算法

2.一种用于水声通信的喷泉码最大似然译码方法

3.采用多通道信道均衡的调频水声语音通信

4.水声通信信源信道联合均衡译码方法

5.基于Polar码的水声通信信源信道联合译码方法

因版权原因,仅展示原文概要,查看原文内容请购买。

多频带自适应脉冲设计郭黎利;赵冰【摘要】In order to solve the problem of interference between an ultra-wide band system and existing communication systems, a multiple adaptive pulse based on finite prolate spheroidal wave functions was proposed. The system first sensed the spectrum environment, estimated the interference temperature, and searched the available band. Then it divided the available band into multiple sub-bands and generated an adaptive emission mask using the idea of OFDM for reference. It also system-designed the sub-band pulse using finite prolate spheroidal wave functions. Finally sub-band pulses were weighted and combined in a time domain to produce a new multiple adaptive pulse that could be seamlessly modified. This pulse can achieve more than 85% of spectrum utilization efficiency under the FCC emission mask and has strong flexibility and anti-jamming capabilities, as well as being able to achieve the seamless modification of launch waveform to adapt to the wireless environment.%针对UWB信号易受现有通信系统干扰的问题,提出基于有限项扁长椭球波函数的多频带自适应脉冲.利用认知无线电估计射频环境干扰温度并检测频谱空洞,然后借鉴OFDM的思想,将频带划分成若干个子带,生成自适应辐射掩蔽,利用有限项扁长椭球波函数设计各子带脉冲,最后将各子带脉冲在时域上进行加权组合,生成一种可进行无缝修正的多频带自适应脉冲.此脉冲在满足FCC辐射掩蔽要求的情况下,可达到85%以上的频谱利用率,同时具有很强的灵活性和抗干扰能力,可实现对发射波形的无缝修正以适应无线环境.【期刊名称】《哈尔滨工程大学学报》【年(卷),期】2011(032)006【总页数】5页(P825-829)【关键词】认知超宽带;多频带;自适应脉冲;扁长椭球波函数【作者】郭黎利;赵冰【作者单位】哈尔滨工程大学信息与通信工程学院,黑龙江哈尔滨150001;哈尔滨工程大学信息与通信工程学院,黑龙江哈尔滨150001【正文语种】中文【中图分类】TN914.2随着无线通信技术飞速发展,频谱资源日趋紧张,认知无线电(cognitive radio,CR)和超宽带无线通信(ultra wide band,UWB)作为解决频谱紧张的两大技术成为无线通信领域新的研究热点.认知无线电技术是一种智能的频谱共享技术,它能够主动感知频谱环境,通过一定的方法寻找未使用的频带,调整通信终端的参数设置,实现空闲的频谱资源的介入,从而达到提高频谱利用率、缓解频谱资源紧张的目的[1].超宽带无线通信技术基于共用频带的思想,与现有传统窄带无线技术共享频带,但是由于其所占的频谱范围很宽,且在设计过程中与外界环境没有交互,频谱共享缺乏灵活性,限制了频谱利用率的提高.将认知无线电技术与超宽带无线通信技术相结合[2-3],设计出一种新的智能无线系统——认知超宽带无线通信系统(cognitive ultra wide band,CUWB).在UWB系统中,脉冲形成器中脉冲波形的选择是至关重要的,此外脉冲的功率谱密度必须满足美国联邦通讯委员会(FCC)辐射掩蔽的限制,脉冲的特性制约了整个系统的性能、复杂度和频谱利用情况.常用的UWB脉冲主要有高斯脉冲及其导数组合、Rayleigh脉冲、Morlet小波脉冲以及厄密特脉冲等.高斯脉冲及其导数的组合脉冲能够很好的满足辐射掩蔽要求,频谱利用率较高,但是计算量较大,加权系数精度难以掌握[4];Rayleigh脉冲本质上与高斯脉冲类似,不易于窄带系统共存;Morlet小波脉冲的时域特性和频域特性均不错,能够满足FCC的辐射限制,频谱利用率高,但表达式中有频移项,适用于基于频移脉冲方式的UWB系统[5];厄密特各阶脉冲间互相正交,可以降低误码率和用户间干扰,但其能量集中于低频,各阶波形频谱相差大,需借助载波搬移频谱满足FCC要求[6].针对上述脉冲设计方法中存在的问题,本文提出一种结合认知无线电技术的无载波多频带自适应脉冲设计方法,该脉冲具有计算量小,参数易于控制,不需要载波搬移频谱,频谱利用率较高,可实时的修正波形等特点,与现有窄带通信系统有很好的兼容性.1 CUWB自适应脉冲1.1 扁长椭球波函数集为了实现高速率传输和低符号间干扰,脉冲的的持续时间要尽量短,即脉冲为时限信号.同时脉冲的频谱要限定在频谱空洞内,不能对其他用户产生干扰,即脉冲为带限信号.由奈奎斯特定律可知:频域上集中的信号必定在时域上分散,反之亦然.因此如何找到这样一组脉冲是目前研究的热点.D.Slepian等发现在椭球坐标系下求解赫姆霍兹方程时获得的椭球波函数集可有效地解决这一问题[7].扁长椭球波函数(PSWF)是带限[-ω,ω]和时限[-T/2,T/2]空间上的完备正交基[8-9]:扁长椭球波函数满足下列积分方程:式中:φk(x)为第k阶扁长椭球波函数,λ为对应的特征值,1>λ0>λ1>…>λk >…,式中:λk表示第k阶PSWF的能量集中度.由式(3)可以看出,φ(x)经过截止频率ω的低通滤波器之后,输出的是经过特征值λ加权后的函数本身φ(x).将上式进行变换得到由式(4)可以看出,时限扁长椭球波函数φ(x)经过截止频率[ωl,ωh]的带通滤波器h(t)之后,输出仍是它本身.φ(x)的频谱具有相同的特性:式中:Φn(ω)为φ(x)的频谱,Hn(ω)为h(t)的傅里叶变换.1.2 自适应脉冲设计认知无线电首先对接收到的射频信号进行干扰温度估计并检测频谱空洞,然后将可用频带按优先级排列、标识,并制定完备的退让和替代策略.当授权用户重新接入时,可选择跳转到备用频带或降低功率级数,以避免对授权用户产生干扰.对选定的通信频带构建动态自适应辐射掩蔽SCUWB(f).根据自适应辐射掩蔽规定的功率谱形状和覆盖范围,利用PSWF设计脉冲波形.PSWF具有计算量小,各阶脉冲相互正交,生成简单及接入灵活等优点,但单频带PSWF的频谱利用率较低.因此,本文借鉴OFDM的思想,将频带划分成若干个子带,并根据子带信道特性生成相应的子带辐射掩蔽.根据各子带的约束条件,利用PSWF产生相应的子带波形,并在时域上将子带脉冲进行加权累加.在所有满足条件的波形中,选择具有最大能量集中度的φ0作为子带脉冲pi(t):式中:M为子带个数.自适应脉冲波形为式中:bi为对应子带的加权系数.根据傅里叶变换的线性性质,自适应脉冲的频谱等于子带脉冲频谱的线性叠加:图1为多频带自适应脉冲的原理图,可用频带被划分成多个子带,根据子带自适应辐射掩蔽生成的子带脉冲在时域上被叠加.当系统检测到窄带干扰时,自适应辐射掩蔽发生变化,在干扰信号所在的频带内产生凹槽,该频带内的子带脉冲被撤销,利用剩余频带继续通信.在出现少量窄带干扰的情况下,系统不需要跳转到备用频带,从而实现无缝的修正发射波形以适应无线环境.图2对上述多频带自适应脉冲进行了仿真.从图2(a)中可以看出脉冲的带外特性很好,衰减速率很快,频谱利用率也较高.从图2(b)可看出在窄带干扰处,脉冲可产生深度达到40dB以上的凹槽,这些凹槽可以使CUWB系统避免与这一频带上的窄带系统产生干扰,从而实现共存.图1 多频带自适应脉冲Fig.1 Multi band adaptive pulse waveform图2 自适应脉冲功率谱密度Fig.2 PSD of adaptive pulse waveform2 脉冲频谱利用率计算脉冲频谱利用率是衡量CUWB脉冲优劣的一种方式[10],定义脉冲的带通为Bp,则频谱利用率为式中:Tf为脉冲重复周期.为了更好地利用频谱资源,提高频谱利用率,在划分各子带时并不是完全独立的,子带间有部分频带是相互重叠的,定义频带重叠率ξ为式中:Bi为子带频谱宽度,Bμ为重叠部分宽度.自适应脉冲频谱宽度Bp为频谱利用率η主要受频带重叠率ζ和子带宽度βi的影响.在子带宽度确定的情况下,ζ的增大会引起子带个数M的增加,导致计算量加大,系统复杂度增高.图3为在子带宽度不变的情况下,频谱利用率与频带重叠率之间的关系.从图3可以看出,随着频带重叠率的增加,脉冲的频谱利用率也逐渐增大.当频带重叠率达到最大时,频谱利用率达到85%以上.当存在窄带干扰时,自适应脉冲仍可以保持较高的频谱利用率.图3 频谱利用率Fig.3 Spectrum utilization efficiency表1为目前文献中各种UWB脉冲波形的频谱利用率情况.从表中可以看出,传统的高斯、Rayleigh、Hermite、小波脉冲直接做UWB脉冲时,频谱利用率都很低,经过组合累加后的脉冲频谱利用率得到了很大的提高.本文提出的多频带自适应PSWF脉冲不但避免了窄带干扰,而且频谱利用率优于19个组合Chirp压缩脉冲,仅次于33个组合高斯脉冲.此外,多频带自适应PSWF脉冲的计算量小于组合高斯脉冲和组合Chirp压缩脉冲,利用该方法设计的脉冲在提高频谱利用率的同时,降低了系统的计算量.表1 UWB脉冲频谱利用率Table 1 Spectrum utilization efficiency of UWB pulse脉冲波形频谱利用率50.90 33个组合高斯脉冲 92.16优化的Rayleigh4/6阶导脉冲 51.94文献[9]修正的Hermite l阶导脉冲 46.23 Morlet正交小波脉冲 30.70 19个组合Chirp压缩脉冲 83.33避免同频干扰的17个组合Chirp压缩脉冲 73.30单频带PSWF脉冲 39.00 10个子带的自适应PSWF脉冲/%修正的高斯5/7阶导脉冲85.83本文避免窄带干扰的10个子带自适应PSWF脉冲81.80 3 多频带自适应脉冲性能分析3.1 系统模型发射UWB信号最常用和最传统的方式是利用信息数据符号对脉冲进行调制,除此之外,为了形成所产生信号的频谱,还要用伪随机序列对数据符号进行编码.本文采用二进制正交PPM-TH-UWB,发射端输出信号为式中:cjTc定义了脉冲的相对于Ts整数倍时刻的抖动,ajε是由PPM调制引起的位移.在二进制正交PPM-TH-UWB中,发射波形可以表示为式中:τ0=jTs+cjTc,p0(t)和p1(t)表示信息比特“0”和“1”是每个脉冲携带的能量.信号经过AWGN信道后,接收端接收到的信号可以表示为式中:信道增益α和信道延迟τ都取决于发射机和接收机之间的传播距离.本文假设接收机与发射机已经同步,令τ=0.在接收端采用单相关器方案,接收信号送入相关器乘以相关掩模m(t)后,经积分器送入判决器进行判决[11]:相关器输出的干扰信号功率谱为式中:M(f)为m(t)的模,GN(f)为干扰信号的功率谱.3.2 自适应脉冲性能分析在传统的通信系统中,最常用的消除干扰的方法是设计滤波器.但是由于UWB信号带宽很宽,频带内的干扰源可能会很多并且数量和频率不确定.因此,单纯通过预先设计滤波器的方法很难消除干扰.可以通过系统参数和脉冲波形的选择来有效地消除干扰信号的影响,或与传统窄带系统实现共存[12].设频带内设窄带干扰带宽为BJ,中心频率为fJ,干扰功率谱为GN(f).则相关器输出的干扰功率谱为积分器输出干扰功率可表示为此时,可调整脉冲波形参数,使P(f)在频带[fJBJ/2,fJ+BJ/2]内产生凹陷,则窄带干扰对超宽带系统的影响降到最低.图4 窄带干扰下系统误码率Fig.4 BER with narrowband interference图4为窄带干扰下的误码率曲线,假设背景噪声为高斯白噪声,信号与窄带干扰的信干比为-15 dB.从图4中可以看出,窄带干扰对系统性能造成了很大影响,信噪比的提高对系统误码率没有明显的改善.在系统自适应调整脉冲波形参数,撤销干扰频带内的子带脉冲之后,系统躲避干扰频带继续通信,此时,系统误码率与没有窄带干扰时的误码率相近,很好的抑制了窄带干扰对系统性能的影响.4 结束语在认知超宽带无线通信系统能够正确感知频谱环境并动态使用可用频谱空洞的前提下,无线电脉冲波形要解决两大问题:1)如何提高频谱利用率; 2)实现与现有通信系统的兼容.本文借鉴OFDM的思想,提出了一种能够动态地对频谱环境做出快速反应的自适应脉冲,减小了系统的计算量,提高了反应速度,实现了与现有窄带通信系统的共存.为了进一步提高频谱利用率,本文提出了频带重叠率的概念,对多频带自适应脉冲进行了修正,虽然增加了设计的复杂度,但可以将频谱利用率提高到85%以上.最后,对脉冲的抗干扰性能进行了分析,多频带自适应脉冲可以很好的抑制窄带干扰对系统性能的影响.在本文提出的生成自适应脉冲方法中,并没有考虑子带脉冲相位对累加结果的影响,应在后续的学习工作中进一步深入研究.参考文献:【相关文献】[1]MITOLA J,MAGUIRE G Q.Cognitive radio:making software radios more personal [J].IEEE Personal Communications,1999,6(4):13-18.[2]MOY C,BISIAUX A,PAQUELET S.An ultra-wide band umbilical cord for cognitive radio systems[C]//Proceedings of IEEE International Symposium on Personal,Indoor and Mobile Radio Communications.Berlin,Germany,2005: 775-779.[3]GRANELLI F,ZHANG Honggang.Cognitive ultra wide band radio:a research vision and its open challenges[C]//Proceedings of IEEE 2nd International Workshop Networking with Ultra Wide Band Workshop on Ultra Wide Band for Sensor Networks.Rome,Italy,2005:55-59.[4]贝尼迪特,吉安卡拉.超宽带无线电基础[M].葛利嘉,朱林,袁晓芸,等译.北京:电子工业出版社,2006:131-167.[5]梁朝晖,周正.基于小波的超宽带脉冲波形设计[J].北京邮电大学学报,2005,28(3):43-45.LIANG Zhaohui,ZHOU Zheng.Wavelet-based pulse design for ultra-wideband system [J].Journal of Beijing University of Posts and Telecommunications,2005,28(3):43-45. [6]GHAVAMI M,MICHAEL L B,HARUYAMA S,et al.A novel UWB pulse shape modulation system[J].Wireless Personal Communications,2002,23(1):105-120. [7]SLEPIAN D,POLLACK H O.Prolate spheroidal wave functions,Fourier analysis,and uncertainty[J].Bell System Tech Journal,1961,40(1):43-64.[8]JITSUMATSU Y,KOHDA T.Prolate spheroidal wave functions induce Gaussian chip waveforms[C]//Proceedings of IEEE International Symposium on Information Theory Conference.Toronto,Canada,2008:6-11.[9]贺鹏飞.超宽带无线通信关键技术研究[D].北京:北京邮电大学,2007:28-43.HE Pengfei.Working out the key technologies of ultra wide band wireless communications [D].Beijing:Beijing University of Posts and Telecommunications,2007:28-43.[10]WU Xianren,TIAN Zhi,DAVIDSON T N.Optimal waveform design for UWB radio [J].IEEE Transactions on Signal Processing,2006,54(61):2009-2012.[11]朱刚.超宽带(UWB)原理与干扰[M].北京:清华大学出版社,2009:92-115.[12]岳光荣,葛利嘉.超宽带无线电抗干扰性能研究[J].电子与系统学报,2002,24(11):1544-1550.YU Guangrong,GE Lijia.System performance research of ultra-wide bandwidth radio in jamming environment[J].Journal of Electronics and Information Technology,2002,24(11):1544-1550.。

可变随机域宽度的多用户机会频谱接入方案

赵浩;罗涛;乐光新

【期刊名称】《北京邮电大学学报》

【年(卷),期】2010(33)1

【摘要】在分布式多用户频谱接入(OSA)环境下,从用户间信道选择同步问题成为干扰系统全网吞吐量的重要因素.为解决该问题,提出一种基于可变随机域宽度的多用户频谱接入方案,在充分利用随机化接入方式避免用户间同步的同时,通过改变可供随机接入选择的信道数(即随机域宽度),达到最优信道选择与同步避免的折中.仿真结果表明,相对于传统的全频段随机化接入同步避免方式,本方案可有效提升多用户机会频谱接入系统的全网吞吐量.

【总页数】4页(P52-55)

【关键词】机会频谱接入;分布式;用户间同步;可变随机域

【作者】赵浩;罗涛;乐光新

【作者单位】北京邮电大学信息与通信工程学院

【正文语种】中文

【中图分类】TN915

【相关文献】

1.Ad Hoc网络中基于距离的机会频谱接入方案 [J], 孙君;朱洪波

2.基于频谱聚合技术的多用户短波机会频谱接入 [J], 徐承龙;程云鹏;董文斌;孙浩

3.“碰撞触发随机化”的分布式多用户机会频谱接入 [J], 王雨蒙;沈良;徐承龙;张

海涛;孙浩

4.认知无线电中基于POMDP的机会频谱接入方案 [J], 李晓娅;张有光;吴华森

5.以频谱聚合技术为基础的多用户短波机会频谱接入 [J], 沈静;刘聪

因版权原因,仅展示原文概要,查看原文内容请购买。

文学术语对照表(英法德部分)A《A map of misreading》《误读的地图》Ableben der kunst 艺术的终结Abrams,Meyer Howard 阿勃拉姆斯Abstractism 抽象论Absurd 荒诞Act 行为Actant 行为体Acte de discours 话语行为Action 动作Adaptation 改编Adaquate konkretisation 恰当的具体化Adorno, Theodot Weisengrund 阿多尔诺Affective fallacy 感受谬见Affinity 类同Alexandrin 亚历山大体Alienation 异化Allegory 寓言Allegorical symbol 寓言式象征Alliteration 头韵Allusion 引喻Althusser, Louis 阿尔杜塞Ambiguity 含混American school 美国学派Analogy 类比Analyse de contenu 内容分析Analyse de texte 文本分析Analyse litteraire 文学分析Analyse semantique 语义分析Analyse structurale 结构分析Analytical/non-analytical 分解性符号链/非分解性signchain 符号链Anaphora 首语重复法Anapest 抑抑扬格《Anatomy of Criticism》《批评的剖析》Anchoring 锚定Animus und anima 男性潜倾和女性潜倾Anthology 选集Anthroponym 人素Anti-climax 反高潮Antiheros 反英雄Anti-intellectualism 反智性Anti-kunst 反艺术Antinarratve 反叙述Antinaturalism 反自然主义Antiquarianism 复古主义Anxiety of influence 影响焦虑Apllonian spirit 日神精神Apocalyptic 启示性Apocrypha 集外拾遗Appellstruktur 召唤结构Applikation 运用Appropriation 据为己有Arcadianism 阿卡迪亚情调Archaism 仿古Archetyp 原型Aristoteles 亚里士多德Arnold, Matthew 阿诺德,马修《Art poetica》《诗艺》Art as device 作为技巧的艺术Artistic beauty 艺术美《Art poetique》《诗的艺术》Art pour art 为艺术而艺术Aside 旁白Association 联想Assonance 半谐韵Assonance 不完全韵《Asthetik》《美学》Asthetische bildung 审美陶冶Asthetische distanz 美感距离Asthetische erfahrung 审美经验《Asthetische Erfahrung uadLiterarische Hermeneutik》《审美经验与文学阐释学》《Asthetische Theorie》《美学理论》Augustinus, Aurelius 奥古斯丁Ausdruck 表达Authentity 本真性Autobiography 自传Autobiographical novel 自传体小说Automoner komplex 自主情结Autonomie der kunst 艺术的自律性Avant-garde 先锋派Avant-texte 前文本BBacon, Francis 培根,费兰西斯Ballad 歌谣Barthes, Roland 巴尔特,罗朗Baudelaire, Charles 波德莱尔Beauty 美Begining rhyme 起韵Behavourist begining 行为主义式开场法Belles-lettres 美文学Benjamin, Walter 本雅明Biographical criticism 传记批评Black humour 黑色幽默《Black Qrpheus》《黑肤的奥尔甫斯》Blank verse 素体诗《Blindness and Insight》《盲目与悟解》Bloch, Ernst 布洛赫Bloom, Harold 布鲁姆Boileau, Nicolas 布瓦洛Booth, Wayne 布思Borrowing 借用Bound motif 束缚母题Brecht, Bertolt 布莱希特Brooks,Cleanth 布鲁克斯Brunetiere, Ferdinand 布吕纳介Bucolic 田园风味Burke,Kenneth 勃克Burlesque 谐谑模仿CCaesure 停顿Cartesianism 笛卡尔主义Cassirer, Ernst 卡西尔Catalog 排列Catalyser 催化单元Catastrophe 结局Catharsis 宣泄Causal sequence 因果序Chain verse 连环诗Channel 渠道Chanson de geste 武功歌Chapelain, Jean 夏泼兰Chiasmus 交叉排比《Chinese character as medium for poetry》《作为诗歌手段的中国文字》Chinoiserie 中国风Chronical play 编年史剧Chrononym 时素Climax 高潮Cloak and dagger 阴谋小说Cloak and sword 剑侠传奇Closet drama 书案剧Co-textual context 共存文本语境Code 信码Coincidence 巧合Coleridge, Samuel Taylor 柯勒律治,萨缪尔·泰勒Collage 拼贴Collective foculization 集体视角式Comedy of manners 风俗喜剧Comic relief 喜剧穿插Commentary 评论Comparative literature 比较文学Comparative poetics 比较诗学Compound narrator 复合叙述者Computational stylistics 数理风格学Conative 意动性Conceit 曲喻Context 语境Contextualism 语境论Contrapuntal 对位式Contrast 对比Convention 常规Conventionality 规约性Cousin,Victor 库赞Crane, R.S. 克莱恩,R.S.Crenologie 渊源学Critical distance 批评距离《Criticism and Ideology》《批评与意识形态》Croce, Benedetto 克罗齐Crown of sonnets 皇冠十四行诗Cubic poetry 立体诗Cult value 崇拜价值DDactyl 扬抑抑格Dante, Alighieri 但丁Das asthetische objekt 审美对象Das asthetische erlebnis 审美体验Dasein 此在《Das Literarische Kunst-werk》《文学艺术作品》《Das Prinzip der Hoffnung》《希望的原则》Das selbst 自性De Man, Paul 德曼Dead space 空档Decentering of subjectivity 主体分化Decoding 解码Decorum 合体Deep-structure 深层结构Demystification 非神话化Denudation 剥离Der funkionale realismus 功能现实主义Der hermeneutische zirkel 阐释的循环Der implizite leser 隐在的读者《Der Implizite Leser》《隐在的读者》Derrida, Jacques 德里达Description 描写Desemantization 消义化Diachronic 历时性Diachronische analyse 历时性分析Diary fiction 日记体小说Dichotomy 对立式《Dichter und Phantasie》《诗人与幻想》“Dictionary” interpretation“词典”式释义Diderot, Denis 狄德罗《Die Beziehung Zwischen Dichter undTagtraum》《诗人与白昼梦的关系》《Die Eigenart des Asthetischen》《审美特性》Die frankfurter schule 法兰克福学派《Die Geburt der Tragodie》《悲剧的诞生》《Die welt als wille und vorstellung》《作为意志和表象的世界》Differentia of literature 文学特异性Dilthey, wilhelm 狄尔泰Dime novel 一角钱小说Dimeter 双音步诗行Dionysian spirit 酒神精神Direct free form 直接自由式Direct quoted form 直接引语式Directory 指点Disclosure 去蔽Discourse 讲述《Discours surl'art Paetique》《关于诗歌艺术的演讲》《Discours surle style》《论风格》Disengagement 脱身Dissociation of sensibility 感觉解体Distortion of duration 时长变形Doggerel 打油诗Doxologie 流传学Dramatism 戏剧化《Du Sublime》《论崇高》Dynamic motif 动力性母题EEagleton,Terry 伊格尔顿《Eckermann:Gesprach mit Goethe》《和爱克曼的谈话录》Ecole de geneve 日内瓦学派《Economic and philosophic Manuscripts of 1844》《1844年经济学哲学手稿》Ecriture automatique 自动写作Ego 自我Einfuhlung;Empathy 移情Elegy 挽诗Eliot,T.S. 艾略特Ellipsis 省略Emotive 情绪性Empson,William 燕卜荪Encoding 编码Encomium 赞诗“Encyclopedia”interpretation“百科式”释义Endogene bilder 内生图象End-rhyme 尾韵Engels, Fridrich 恩格斯Enjambement 跨行Erlebnis 体验Enonce 表述Enonciation 表述行为英Enonciation/utterance法表述/被表述Enonciation/enonce Entkunstung 非艺术化Envelope structure 封套结构Epigram 警句Epiphany 灵悟Epistolary fiction 书信体小说Epithalamium,epithalamion 婚后诗Epoch 时代Erwartungshorizont 期待视野Euphuism 游浮体Evaluative commentary 评价性评论Exhibition Value 展览价值Expectation 期待Expectation of meaning 意义期待Expectation of non-reference 非指称化期待Expectation of rhythm 节律化期待Expectation of totality 整体化期待Explanation/understanding 说明/理解Explanatory commentary 解释性评论Explication de texte 文本解释Explicit ellipsis 明省略Explicit narrator 现身叙述者Extra-textual elements 超文本成分Eye-rhyme 目韵Eye-rhyme 视韵FFable 寓言Fallacy of communication 传达谬见Fairy-tale 童话Fait social 社会事实Farce 笑剧Feminine rhyme 阴韵Feminist criticism 女权主义批评Fest 节日Fields of semiotics 符号学领域分科Fish, Stanley Eugene 费什Flashback 倒述Flashforward 预述Flat character 扁平人物“Fly-on-the-wall” technique“墙上苍蝇”式叙述法Focalization 叙述角度Focus character 视角人物Folklore 民间传说Folktale 民间故事Followability 可追踪性Foregrounding 前推Foreshadowing 伏笔Form 形式Formalism 形式主义Fortune 际遇Foucault,Michel 福柯,米歇尔Fourteener 七音步十四音节Frame story 框架故事Framework-story 连环故事France,Anatole 法朗士Free motif 自由母题Free verse 自由诗French school 法国学派French poststructuralist 法国后结构主义符号学派Freud,Sigmund 弗洛伊德Freytag pyramid 弗雷塔格金字塔Function 功能Function 功能体GGadamer,Hans Georg 迦达默尔General literature 总体文学Generation 代Genetic fallacy 发生谬见Genette,Gerard 惹奈特,杰Genre 文类Genology 文类学Gesellschaftlichkeit der Kunst 艺术的社会性Gesellschaftliche rezeption 社会接受Gesellschaftliche vermittelung 社会中介Gestalt 格式塔Gestaltgesetze 格式塔规律Gestaltqualitat 格式塔质Gide,Andre 纪德Gnomic verse 格言诗Goldmann,Lucien 戈德曼Gongorism 贡古拉文风Gothic novel 哥特小说Grotesque 怪诞Greimas,Algidas Julien 格雷马斯HHabermas,Jurgen 哈贝马斯Half-rhyme 半韵Half-rhyme 腰韵Heiddeger,Martin 海德格尔Heresy of paraphrase 意释误说Hermeneutik 阐释学Heroic couplet 英雄双韵诗Hexameter 六音步诗行High comedy 高雅喜剧Higher narrative level 高叙述层次Hirsch,Eric Donald Jr 赫什Histoire litteraire 文学史Historical narration 历史叙述/文学叙述Holland,Norman N. 霍兰德Horizont 视界Horizont verschmelzung 视界融合Hulme,T.E. 休姆Humanism 人文主义Humanistic criticism 人文主义批评Humor 幽默Hymn 赞美诗IIamb 抑扬格Iconicity 象似性Id 本我Identif ikationsmuster 认同类型Ideologie kritik 意识形态批评Image 意象Imagination 想象Imitation 模仿Impersonality 非个性化Implicit ellipsis 暗省略Implicit lower narrative level 隐式低叙述层次Implicit narrator 隐身叙述者Implied author 隐指作者Implied metaphor 潜喻Implied reader 隐指读者Impure poetry 不纯诗Impressionistic criticism 印象式批评Index 指示体Indexical context 标示语境Indexicality 标示性Indice 标记体Indirect free form 间接自由式Indirect quoted form 间接引语式Individuation 个性化Influence 影响Influence study 影响研究Informant 信息体Ingarden, Roman 英加登Institution litteraire 文学建制Intertextuality 互文性Intentional context 意图语境Intentional fallacy 意图谬见Intentionaler gegenstand 意向对象Intentionalitat 意向性Interdisciplinary study 跨学科研究Interior monologue 内心独白Internal rhyme 内韵Interpretation 阐释《Intuition creatrice en art et enpoesie》《艺术和诗的创造性直觉》Irony 反讽Ironic commentary 反讽性评论Ironic narrative 反讽叙述《Is there a text in this class?》《这门课有无文本?》Isomorphie 异质同构JJames,Henry 詹姆斯,亨利Jameson,Fredric 詹姆逊Johnson,Samuel 约翰逊,萨缪尔Journal intime 内心日记Jung,Carl Gustav 荣格Juxtaposition 意象并置Juxtaposition 并置KKeats,John 济慈,约翰Kermode,J.Frank 科默德Kollektives unbewaβtes集体无意识Komplex 情结Konkrete Poesie 具象诗Konkretisation 具体化Krieger,Murray 克里格Kristeva,Julia 克里斯特娃,朱《Kritik der Urteilskraft》《判断力批判》Kulturindustrie 文化工业LLa nouvelle critique 法国新批评La psychocritique 精神批评《La phylosophie de l'art》《艺术哲学》Lacan,Jacques 拉康Lament 哀诗Language as gesture 姿势语论Langue/parole 语言/言语Lanson,Gustave 朗松,G.Leavis,F.R 李维斯Lebenstrieb/eros 生本能/性本能Leitmotiv 主导母题Leser 读者Libido 力必多Lichtung 澄明Life expression 生命表达Link sonnet 连韵十四行诗Lisibilite 可阅读性Lipps,Theodor 李普斯Literalism 字面论Local colour 地方色彩Low comedy 低俗喜剧Lower narrative level 低叙述层次Lukacs,Georg 卢卡契Lyric 抒情诗MMacherey,Pierre 马舍雷,彼Macro-semiotic system 宏观符号系统Macro-sign 宏观符号Macro-text 宏观文本Main plot 主情节Manipulative narrative 操纵式叙述Mannerism 矫饰主义Manuscript 手写本Marcuse,Hebert 马尔库塞Marx, Karl 马克思Masculin rhyme 阳韵Medium 中介Medievalism 中世纪精神Melodrama 情节剧Memoires fiction 回忆录小说Mesologie 媒介学Message 信息Meta- 元Metacriticism 元批评《Metahistory》《元历史》Metalanguage 元语言Metalingual 元语言性Metaphysische qualitat 形而上质量Metaphor 隐喻Meter 音步Metonymy 转喻Miller,J. Hillis 米勒Mime 哑剧Miracle play 奇迹剧Mise en scene 导演Misreading 误读Mock-heroic 戏仿英雄体Monodrama 独角戏Montage 蒙太奇Montivation 促动因素Moral criticism 道德批评Motif 母题Motivation 根据性Movement 运动Mukarovsky,Jan 慕卡洛夫斯基Multiple plot 复合情节Musical comedy 音乐喜剧Myth 神话NNaive dichtang 素朴诗Narratee 叙述接收者Narrative 叙述Narratorial intrusion 叙述干预Narrative poem 叙事诗Narrative perspective 叙述方位Narrative setup 叙述格局Narrative stratification 叙述分层Narrativity 叙述性Narratology 叙述学Narrator 叙述者Narrativity 叙述性Narratorial mediation 叙述加工National literature 民族文学Naturalization 自然化Natural beauty 自然美Negative influence 负影响Negativitat der kunst 艺术的否定性Neologism 新词风格New criticism 新批评派Nietzsche, Friedrich Wilhelm 尼采Nokan,Charles 诺康Non-referential pseudostatement非指称性伪陈述Non-significant system 无意义组合Northrop Frye 诺思洛普·弗莱Novel 长篇小说Novella 中篇小说Nucleus 核心单元OObjective criticism 客观批评Octave rima 八行诗Ode 颂诗Odipuscomplex 俄底浦斯情结Omniscient narrative 全知式叙述《On Modern Novel》《现代小说》《On Dramatic Art》《论戏剧艺术》Onomatopoeia 拟声Ontological criticism 本体式批评Open-ended 开放结尾式Opera 歌剧Organism 有机论Ostranenie 陌生化Overcoding 附加解码Overstatement 夸大陈述PPalindrome 回文Panegyric 颂文Pantheism 泛神论Paradigm 纵聚合系Paradox 悖论Paralipses 假省笔法Parallel study 平行研究Paraphrasability 可意释性Paratextual elements 类文本成分Parole 言语Parody 戏仿Passion play 耶稣受难剧Pastiche 仿作Pastoral 牧歌Pater,Walter 佩特,沃尔特Pathetic fallacy 感情误置Pathos 哀婉感受Pause 停顿Periphrasis 迂回Period 时期Persona 人格面具Personliches unbewates 个人无意识Perspective of incongruity 不相容透视Phatic 交际性《Phenomenologie de l'experienceesthetique》《审美经验现象学》Phrase rhythm 词组节奏Picaresque novel 流浪汉小说Pirated edition 盗版Platon 柏拉图Platonism 柏拉图主义Plot 情节Plotinus 普洛丁Plot typology 情节类型学Poetic licence 诗歌特权Poetic drama 诗剧Poetic truth 诗歌真理《Poetics》《诗学》Poetique de lespace 空间诗学Poetry 诗Poetry of inclusion 包容诗Poetry of exclusion 排它诗Point of view 视点Poulet, Georges 布莱Polyphone harmonie 复调和谐Pope,Alexander 蒲柏,亚历山大Pornography 色情文学Possible worlds 可能世界Post-structuralism 后结构主义Pound, Ezra 庞德Pragmatics 符用学Prague school 布拉格学派Preciosite 高雅Pre-text 前文本Prinzip der aquivalerz 等值原则Prinzip der entropie 均衡原则Private symbol 私设象征Problem novel 问题小说Problem play 问题剧Production of art 艺术生产Projektion 投射propagandism 宣传Prosody 诗律Prothalamion 婚前诗Proverb 格言Proverb 谚语Provincialism 外省风格Proust, Marcel 普鲁斯特Psychische energie 心理能Psychische funktion 心理功能Psychische valenz 心理值Psychische zustand 心态Psychological context 心理语境Psychological fiction 心理小说Psychological realism 心理现实主义Psychologische und 心理型和幻想型illusionarer typPsychologische rezeptionsforschung 接受心理分析Psychologische typen 心理类型Psychologism 心理主义Public symbol 公共象征Pulp magazine fiction 廉价杂志小说Pun 双关语Pure poetry 纯诗QQualisign/sinsign/legisign 形符/单符/义符Qualitative knowledge 质的知识Qualitative progression 质性进展Querelle des anciens ermodernes古今之争RRationalism 理性主义Rational and perceptual 理性与感性Raymond Williams 维廉斯·雷蒙德Ransom, John Crowe 兰色姆Reader-response criticism 读者反应批评Realismo magico 魔幻现实主义Referential 指称性Reflection/nachdenken 反思Reification 物化Repetition 重复Repetition 复述Replica 复制Reported speech 转述语Reproduction 复制Resemantization 再义化Revenge tragedy 复仇悲剧Rezeptionsasthetik 接受美学Rezeptionsvorgabe 接受前提Rhapsody 狂诗Rhyme 韵Rhythm 节奏Richards.I.A. 瑞恰慈Riviere,Jacques 里维埃,J.Rohstoff 素材Romanaclef 影射小说Roman noir/Gothic novel 黑色小说/哥特小说Romantic irony 浪漫式反讽Round character 圆形人物Rousseau,Jean-Jacques 卢梭,让-雅Ruskin John 拉斯金·约翰SSage 英雄传奇Said,Edward W. 赛义德Saint Thomas Aquinas 托马斯·阿奎那Sainte-Beuve,Charles Augustin 圣伯夫Sapphics 萨福体Sartre,Jean-Paul 萨特Saussure,Ferdinand de 索绪尔,费德Scene 场景Schatten 阴影Schematisierte ansichten 轮廓化图象Schichtenaufbau 层次构造Schleiermacher, Friedrich Ernst Daniel施莱尔马赫Schoppenhauer,Arthur 叔本华Science fiction 科幻小说Scientific criticism 科学化批评Scriptible/lisibe 可写式/可读式Secondary system 二度体系Semanalysis 符号分析学Semantics 符义学Semantique poetique 诗歌语义学Semantique structurale 结构语义学Semiosis 符指过程Semiotics 符号学Sensibility 感性Sentimentalische dichtung 感伤诗Sentimentalism 感伤主义Sequence deformation 时序变形《Seven Types of Ambiguity》《含混的七种类型》Shelley,Percy Bysshe 雪莱Shock 震惊Short story 短篇小说Sign 符号Signifier/signified 能指/所指Simile 明喻Situational context 场合语境Skaldic verse 行吟宫廷诗Sociology of literature 文学社会学Soliloquy 独白Sonnet 十四行诗Sontag, Susan 桑塔格,苏珊Source 渊源Source study 渊源研究Southern critics 南方学派Soviet semiotics 苏联符号学派Spatial form 空间形式Spatial sequence 空间序Spiel 游戏Stadialism 阶段平行论Stael, Madame de 斯塔尔夫人Stanzaic form 诗节形式Stanzaic form 段式Starobinski, Jean 斯塔罗宾斯基Static motif 静力性母题Stereotype 模式Stoffgeschichte 主题学Stream of consciousness 意识流Stretch 延长Structural realism 结构现实主义Structuralism 结构主义Structuralist/post-structuralist semiotics 结构主义与后结构主义符号学《Structuralist Poeties》《结构主义诗学》Structure 结构Structure/texture 构架/肌质论Stylistics 风格学Stylization 风格模仿Sub-narrative 次叙述Sub-plot 次情结Sublimation 升华Sublime 崇高《Summa Theologiae》《神学大全》Summary 缩写Superego 超我Super-narrative 超叙述Superposition 意象迭加Super-sign 超符号Supplementary commentary 补充性批评Surface structure 表层结构Suspense 悬疑Symbol 象征Symbolic action 象征行动Symptomatic reading 症候式阅读Synaesthesia 通感Synaesthesis 综感Synchronic/diachronic 共时性/历时性Synchronische anayse 共时性分析Synecdoche 提喻Syntactics 符形学Syntagm 横组合段Syntagmatic/paradigmatic 横组合/纵聚合TTaboo 塔布Tagtraum 白日梦Taine, Hippolyte 泰纳Tate, Allen 退特Tecriture 文体Temporal deformation 时间变形Temporal sequence 时间序Tendenz 倾向性Tenioha 天尔运波Tenor/vihicle 喻指/喻体Tension 张力Tercet 三行体诗节Terza rima 三行连环韵诗Tetralogy 四部曲Tetrameter 四音步诗行Text/discourse 文本/讲述Textual criticism 文本批评Texture 肌质Theatre of cruelty 残酷剧The epic theatre 叙事剧The new criticism 新批评The new humanism 新人文主义《Theory of Literature》《文学理论》The past/the present 叙述现在/被叙述现在《The Political Unconsciousn》《政治无意识》《The Sacred Wood》《圣林》The Second self 第二自我《The Well-Wrought Urn》《精致的瓮》The Verbal Icon 《语象》The yale critics 耶鲁学派Thibaudet, Albert 蒂博代Three unities 三一律Todestrieb /thanatos-trieb 死本能Todorov, Tzvetan 托多罗夫Toponym 地素Totality 总体性Totem 图腾Tradition 传统Tragicomedy 悲喜剧Translation 翻译Transpassing of stratification 跨层Trilogy 三部曲Trilling, Lionel 特里林Trimeter 三音步诗行Type 类型Typicality 典型性Typical character 典型人物Typology 类型学UUgliness 丑Unbestimmtheit 不确定性《Uber die Beziehungen der AnalytischenPsychologie zur Dichtung》《分析心理学与诗的关系》Undercoding 不足解码Understatement 克制陈述Unite narrative 叙述单元Unite significative 意义单元Unites romanesques 小说单元Unreliable narrative 不可靠叙述Urtumliche bilder 原始意象Utilitarianism 功利主义Utopian literature 乌托邦文学V《Validity in interpretation》《解释的正确性》Valery, Paul 瓦雷里,P. Variorum edition 集注本Verbal icon 语象Verfremdung 陌生化Verfremdungseffekt 陌生化效果Verstehen 理解Verisimilitude 逼真性Vico, Giovanni Battista 维柯V orurteil 成见W《Wahreit und Methode》《真理与方法》Wellek, Rene 韦莱克Well-made play 巧构剧Werkimmanente kritik 文本批评Wilde, Osear 王尔德Wilson, Edmund 威尔逊,艾德蒙Wimsatt, William K.Jr 维姆萨特Wirkungsgeschichte 作用史Wittgenstein,Rudwig 维特根斯坦World/earth 世界/大地World literature 世界文学ZZero-sign 零符号Zola,Emile 左拉Zwei-pole-theorie 两极理论。

基于IEEE802.11p的可变SCH时隙MAC机制刘灵雅;王聪;袁晨曦;马文峰【摘要】IEEE802.11p 突破了传统协议的限制,采取多信道协调的机制,但是控制信道和服务信道的长度是固定的,造成信道资源大量浪费。

针对传统IEEE802.11p 中信道利用率低的问题,提出了一种非饱和情况下可变的服务信道(Service Channel,SCH)时隙媒质接入控制(Medium Access Control,MAC)机制。

在此机制下,可以通过调整控制信道(Control Channel,CCH)和SCH 的长度,达到吞吐量最大化的效果。

该机制使用三维Markov 链模型来计算WAVE 服务广播信息(Wave Service Advertisement,WSA)帧的时延,并得出了一个非饱和状态下适用的可变的SCH 时隙MAC 计算模型。

仿真实验表明,该机制不仅提高了信道利用率,增加了吞吐量,同时也降低了信道的时延。

%The emergence of IEEE802.11p protocol effectively breaks through the limitations of traditional protocol, and the multi-channel coordination mechanism is usually adopted. However, recent research indicates that the fixed length ratio of between CCH and SCHs in IEEE1609.4 protocol would bring about huge waste of channel. This paper proposes a variable SCH interval multichannel medium access control (MAC) scheme under thenon-saturated channel. With this scheme, three dimensional Markov chain is used to calculate the delay of WSA frame, control the length of CCH interval and SCH interval and achieve the max throughput. The simulation results indicate that the proposed scheme can help VANETs remarkably naise the unsaturated throughput of SCHs and lower the transmission delay of data frames.【期刊名称】《通信技术》【年(卷),期】2017(050)001【总页数】6页(P56-61)【关键词】IEEE802.11p;多信道;吞吐量;时延;非饱和【作者】刘灵雅;王聪;袁晨曦;马文峰【作者单位】解放军理工大学通信工程学院,江苏南京210007;解放军理工大学通信工程学院,江苏南京210007;解放军理工大学通信工程学院,江苏南京210007;解放军理工大学通信工程学院,江苏南京210007【正文语种】中文【中图分类】TN929.5车联网(Vehicular Ad hoc Networks,VANETs)指的是车辆之间以及车辆与路边固定接入点之间的通信而构成的无线网络,是交通范畴内一种比较特殊的无线自组网(Mobile Ad-hoc Networks, MANETS)。

多通道甚高频超外差式接收机的设计作者:钱梦园杨国斌张援农姜春华来源:《现代电子技术》2019年第09期摘 ;要:针对甚高频天线阵信号的同时接收中存在信噪比差异过大以及信号质量不好等问题,设计一种通道差异小、噪声低、灵敏度高、动态范围大的多通道甚高频超外差式接收机。

该设计采用高灵敏度和大动态范围的超外差式接收机结构,前级放大器采用低噪声、高增益的放大器来降低整个接收机的噪声系数,并选择合理的预选滤波器和中频滤波器抑制镜像频率的干扰,链路中还采用匹配网络调节通道增益,使各个通道间的增益差异在合理的范围内,中频放大器选择合理的1 dB压缩点放大器以保证接收机的动态范围足够大。

该设计在经过测试后,各项接收指标均满足要求,可广泛应用于雷达、通信领域。

关键词:超外差接收机; 甚高频天线; 滤波器选择; 前级放大器; 通道增益调节; 匹配网络中图分类号: TN851.4⁃34 ; ; ; ; ; ; ; ; ; ; ; ; 文献标识码: A ; ; ; ; ; ; ; ; ; ; ; ;文章编号:1004⁃373X(2019)09⁃0001⁃04Design of multi⁃channel VHF superheterodyne receiverQIAN Mengyuan, YANG Guobin, ZHANG Yuannong, JIANG Chunhua(School of Electronic Information, Wuhan University, Wuhan 430072, China)Abstract: Since the simultaneous reception of VHF antenna array signals has the problems of unsuitable signal⁃to⁃noise ratio and poor signal quality, a multi⁃channel VHF superheterodyne receiver with small channel difference, low noise, high sensitivity and wide dynamic range is designed. The superheterodyne receiver structure with high sensitivity and wide dynamic range is adopted in the design. The low⁃noise high⁃gain amplifier is used as the preamplifier to reduce the noise coefficient of the entire receiver, and the reasonable preselection filter and intermediate frequency (IF) filter are selected to suppress the interference of the image frequency. The matching network is used in the link to adjust the channel gain to make the gain difference among all the channels within the reasonable range. A reasonable 1 dB compression point amplifier is selected as the IF amplifier to ensure the enough dynamic range of the receiver. The test results show that the receiving indicators of the receiver can meet the requirements, and the receiver can be widely used in radar and communication fields.Keywords: superheterodyne receiver; VHF antenna; filter selection; preamplifier; channel gain adjustment; matching network0 ;引 ;言接收機是雷达、通信系统中的“传感器”,是提取外界有用的信息并传输给系统的数据处理模块。

Multi-adversarial domain adaptation是一种解决多领域迁移问题的方法。

在多领域中,存在多个领域容易被杂糅在一起,或者领域映射发生错误的问题。

为了解决这个问题,该方法使用多个判别器来负责相应领域的判断。

具体地,先将每个数据点的领域概率分别计算出来,然后按概率赋到领域对应的判别器进行判断。

有K个领域就对应K个判别器,每个判别器负责一个领域。

由于每个点被按概率赋到相关领域判别器判断,所以能够减少领域对齐出错的现象。

以上内容仅供参考,建议查阅关于Multi-adversarial domain adaptation的文献资料,获取更准确的信息。

应用心理学Ch i nese Journa l of2010年第16卷第4期,362-368Appli ed P sycho l ogy2010.Vol 16.N o 4,362-368拥挤研究进展 概念、理论与影响因素戴 琨1,2 游旭群*1 晏碧华1(1.陕西师范大学心理学院,西安710062;2.陕西师范大学外国语学院,西安710062)摘 要 拥挤是环境心理学的重要研究领域,其内涵是对密度和空间限制的主观体验。

拥挤影响因素主要包括物理、社会、个人因素,拥挤可导致过度生理唤醒、负面社会行为和 失动机 。

现有拥挤理论从环境刺激和个体行为反应等多个角度对拥挤进行了阐释。

文章最后提出了中国文化背景下拥挤的未来研究方向。

关键词:拥挤 拥挤理论 拥挤压力源 环境心理学中图分类号:B845 6文献标识码:A 文章编号:1006-6020(2010)-00-0362-001 拥挤 和 密度在环境心理学中,对拥挤概念的认识过程就是对 拥挤 和 密度 两个概念的辨别过程。

越来越多的研究者已认识到高密度物理状态并不完全等同于拥挤心理体验,并针对 拥挤 、 密度 纷纷提出了自己的观点。

对 密度 的概念界定观点较为集中。

St oko ls(1972)和Kopec(2006)认为:密度是指一种涉及空间限制的物理状态和在给定空间中对人口数的数学测量。

N apo li等人(1996)则强调, 密度 是指一种涉及个人现有物理空间数量的表达方式。

密度 可被划分为 社会密度 、 空间密度 两种类型。

前者可通过改变固定空间中的人数来操纵,后者则可通过在保持人数不变的情况下改变现有空间来操纵,重点强调某特定环境中人均拥有空间大小。

有关拥挤概念则多种多样,研究者从不同角度对拥挤进行定义。

St okols(1972)认为, 拥挤 是指当个体的空间需求超过实际空间供给时的一种心理压力不适状态,是有限空间中的个体的一种个人主观体验。

其他研究者的观点包括: 拥挤 是指一种行为限制和行为干扰状态(Kopec, 2006); 拥挤 是指一种个人无法获得想要的隐私水平、无法充分控制同他人之间社会互动的状态(Napo l,i K il bri de和T ebbs,*通讯作者:游旭君,教授,博士生导师,Em ai:l youxuqun@s 。

现代电子技术Modern Electronics Technique2021年12月15日第44卷第24期Dec.2021Vol.44No.240引言光传送网(Optical Transport Network ,OTN )作为新一代光传输技术,从试用到现在,经历了长期的现网验证,其凭借诸多技术优势已经成为干线传输网的主要技术。

随着通信行业日新月异的变化,各类通信产品从无到有,从简单到复杂,数据通信业务不断增大,对网络通信容量的需求也急剧增长,这对网络功能提出了更大的挑战。

目前干线传输网的传输速率主要为100Gb/s ,400Gb/s 的传输速率也已出现。

在应对不断增大的数据传输要求时,几种传输速率并存的情况会持续一段时间[1]。

现有的传输网络设备成熟、价格稳定,若能继续使用现有设备实现几种速率的混传是经济、最有效的解决方法。

以100Gb/s 传输速率为例,IEEE 802.3ba 标准中规定了物理层接口规范,100Gb/s 信号可以在4条或10条物理通路上并行承载传输,即可以在每个波道为25Gb/s DOI :10.16652/j.issn.1004⁃373x.2021.24.005引用格式:顾艳丽,孙科学,肖建.光通信中信号的多通路适配研究[J].现代电子技术,2021,44(24):21⁃24.光通信中信号的多通路适配研究顾艳丽,孙科学,肖建(南京邮电大学电子与光学工程学院、微电子学院,江苏南京210023)摘要:光传送网(OTN )发展迅速,用较低的部署成本实现较高的传输速率是开发人员的研究焦点,因此文中开展了对光传输中信号适配功能的研究。

以光通路传送单元4(OTU4)信号为研究对象,设计了多通路并行接口,将OTU4帧分发到20条逻辑通道上,之后进行比特交织,最终复用到光模块的物理通道上。

在多通道并行接口设计部分,重点研究了发送方向循环分布的多通道分发方式和在帧中加入逻辑通道标识(LLM )的方法;阐述了接收方向帧对齐、通道标识恢复和多通道对齐的具体实现方法、过程和实现状态机;并将整个设计在可编程门阵列(FPGA )上进行实现。

a r X i v :c s /0105022v 1 [c s .A I ] 11 M a y 2001Multi-Channel Parallel Adaptation Theory for Rule Discovery Li Min Fu Department of CISE University of Florida Corresponding Author:Li Min Fu Department of Computer and Information Sciences,301CSE P.O.Box 116120University of Florida Gainesville,Florida 32611Phone:(352)392-1485e-mail:fu@cise.ufl.eduMulti-Channel Parallel Adaptation Theoryfor Rule DiscoveryLi Min FuAbstractIn this paper,we introduce a new machine learning theory based on multi-channel par-allel adaptation for rule discovery.This theory is distinguished from the familiar parallel-distributed adaptation theory of neural networks in terms of channel-based convergence tothe target rules.We show how to realize this theory in a learning system named CFRule.CFRule is a parallel weight-based model,but it departs from traditional neural computingin that its internal knowledge is comprehensible.Furthermore,when the model convergesupon training,each channel converges to a target rule.The model adaptation rule is de-rived by multi-level parallel weight optimization based on gradient descent.Since,however,gradient descent only guarantees local optimization,a multi-channel regression-based op-timization strategy is developed to effectively deal with this problem.Formally,we provethat the CFRule model can explicitly and precisely encode any given rule set.Also,weprove a property related to asynchronous parallel convergence,which is a critical elementof the multi-channel parallel adaptation theory for rule learning.Thanks to the quanti-zability nature of the CFRule model,rules can be extracted completely and soundly viaa threshold-based mechanism.Finally,the practical application of the theory is demon-strated in DNA promoter recognition and hepatitis prognosis prediction.Keywords:rule discovery,adaptation,optimization,regression,certainty factor,neural net-work,machine learning,uncertainty management,artificial intelligence.1IntroductionRules express general knowledge about actions or conclusions in given circumstances and also principles in given domains.In the if-then format,rules are an easy way to represent cognitive processes in psychology and a useful means to encode expert knowledge.In another perspective,rules are important because they can help scientists understand problems and engineers solve problems.These observations would account for the fact that rule learning or discovery has become a major topic in both machine learning and data mining research.The former discipline concerns the construction of computer programs which learn knowledge or skill while the latter is about the discovery of patterns or rules hidden in the data.The fundamental concepts of rule learning are discussed in[16].Methods for learning sets of rules include symbolic heuristic search[3,5],decision trees[17-18],inductive logic programming [13],neural networks[2,7,20],and genetic algorithms[10].A methodology comparison can be found in our previous work[9].Despite the differences in their computational frameworks, these methods perform a certain kind of search in the rule space(i.e.,the space of possible rules)in conjunction with some optimization plete search is difficult unless the domain is small,and a computer scientist is not interested in exhaustive search due to its exponential computational complexity.It is clear that significant issues have limited the effectiveness of all the approaches described.In particular,we should point out that all thealgorithms except exhaustive search guarantee only local but not global optimization.For example,a sequential covering algorithm such as CN2[5]performs a greedy search for a single rule at each sequential stage without backtracking and could make a suboptimal choice at any stage;a simultaneous covering algorithm such as ID3[18]learns the entire set of rules simultaneously but it searches incompletely through the hypothesis space because of attribute ordering;a neural network algorithm which adopts gradient-descent search is prone to local minima.In this paper,we introduce a new machine learning theory based on multi-channel parallel adaptation that shows great promise in learning the target rules from data by parallel global convergence.This theory is distinct from the familiar parallel-distributed adaptation theory of neural networks in terms of channel-based convergence to the target rules.We describe a system named CFRule which implements this theory.CFRule bases its computational characteristics on the certain factor(CF)model[4,22]it adopts.The CF model is a calculus of uncertainty mangement and has been used to approximate standard probability theory[1] in artificial intelligence.It has been found that certainty factors associated with rules can be revised by a neural network[6,12,15].Our research has further indicated that the CF model used as the neuron activation function(for combining inputs)can improve the neural-network performance[8].The rest of the paper is organized as follows.Section2describes the multi-channel rule learning model.Section3examines the formal properties of rule encoding.Section4derives the model parameter adaptation rule,presents a novel optimization strategy to deal with the local minimum problem due to gradient descent,and proves a property related to asynchronous parallel convergence,which is a critical element of the main theory.Section5formulates a rule extraction algorithm.Section6demonstrates practical applications.Then we draw conclusions in thefinal section.2The Multi-Channel Rule Learning ModelCFRule is a rule-learning system based on multi-level parameter optimization.The kernel of CFRule is a multi-channel rule learning model.CFRule can be embodied as an artificial neural network,but the neural network structure is not essential.We start with formal definitions about the model.Definition2.1The multi-channel rule learning model M is defined by k(k≥1)channels (Ch’s),an input vector(M in),and an output(M out)as follows:M≡(Ch1,Ch2,...,Ch k,M in,M out)(1)where−1≤M out≤1andM in≡(x1,x2,...,x d)(2) such that d is the input dimensionality and−1≤x i≤1for all i.The model has only a single output because here we assume the problem is a single-class, multi-rule learning problem.The framework can be easily extended to the multi-class case.Definition2.2Each channel(Ch j)is defined by an output weight(u j),a set of input weights (w ji’s),activation(φj),and influence(ψj)as follows:Ch j≡(u j,w j0,w j1,w j2,...,w jd,φj,ψj)(3)where w j0is the bias,0≤u j≤1,and−1≤w ji≤1for all i.The input weight vector (w j1,...,w jd)defines the channel’s pattern.Definition2.3Each channel’s activation is defined byφj=f cf(w j0,w j1x1,w j2x2,...,w jd x d)(4) where f cf is the CF-combining function[4,22],as defined below.Definition2.4The CF-combining function is given byf cf(x1,x2,...,y1,y2,...)=f+cf(x1,x2,...)+f−cf(y1,y2,...)(5)wheref+cf(x1,x2,...)=1− i(1−x i)(6)f−cf(y1,y2,...)=−1+ j(1+y j)(7) x i’s are nonnegative numbers and y j’s are negative numbers.As we will see,the CF-combining function contributes to several important computational properties instrumental to rule discovery.Definition2.5Each channel’s influence on the output is defined byψj=u jφj(8) Definition2.6The model output M out is defined byM out=f cf(ψ1,ψ2,...,ψk)(9)We call the class whose rules to be learned the target class,and define rules inferring(or explaining)that class to be the target rules.For instance,if the disease diabetes is the target class,then the diagnostic rules for diabetes would be the target rules.Each target rule defines a condition under which the given class can be inferred.Note that we do not consider rules which deny the target class,though such rules can be defined by reversing the class concept. The task of rule learning is to learn or discover a set of target rules from given instances called training instances(data).It is important that rules learned should be generally applicable to the entire domain,not just the training data.How well the target rules learned from the training data can be applied to unseen data determines the generalization performance.Instances which belong to the target class are called positive instances,else,called negative instances.Ideally,a positive training instance should match at least one target rule learned and vice versa,whereas a negative training instance should match none.So,if there is only a single target rule learned,then it must be matched by all(or most)positive training instances.But if multiple target rules are learned,then each rule is matched by some(rather than all)positive training instances.Since the number of possible rule sets is far greater than the number of possible rules,the problem of learning multiple rules is naturally much more complex than that of learning single rules.In the multi-channel rule learning theory,the model learns to sort out instances so that instances belonging to different rulesflow through different channels,and at the same time, channels are adapted to accommodate their pertinent instances and learn corresponding rules. Notice that this is a mutual process and it cannot occur all at once.In the beginning,the rules are not learned and the channels are not properly shaped,both informationflow and adaptation are more or less random,but through self-adaptation,the CFRule model will gradually converge to the correct rules,each encoded by a channel.The essence of this paper is to prove this property.In the model design,a legitimate question is what the optimal number of channels is.This is just like the question raised for a neural network of how many hidden(internal computing) units should be used.It is true that too many hidden units cause data overfitting and make generalization worse[7].Thus,a general principle is to use a minimal number of hidden units. The same principle can be equally well applied to the CFRule model.However,there is a difference.In ordinary neural networks,the number of hidden units is determined by the sample size,while in the CFRule model,the number of channels should match the number of rules embedded in the data.Since,however,we do not know how many rules are present in the data,our strategy is to use a minimal number of channels that admits convergence on the training data.The model’s behavior is characterized by three aspects:•Information processing:Compute the model output for a given input vector.•Learning or training:Adjust channels’parameters(output and input weights)so thatthe input vector is mapped into the output for every instance in the training data.•Rule extraction:Extract rules from a trained model.Thefirst aspect has been described already.3Model Representation of RulesThe IF-THEN rule(i.e.,If the premise,then the action)is a major knowledge representation paradigm in artificial intelligence.Here we make analysis of how such rules can be represented with proper semantics in the CFRule model.Definition3.1CFRule learns rules in the form ofIF A+1,...,A+i,,...,¬A−1,...,¬A−j,...,THEN the target class with a certainty factor.where A+i is a positive antecedent(in the positive form),A−j a negated antecedent(in the negative form),and¬reads“not.”Each antecedent can be a discrete or discretized attribute (feature),variable,or a logic proposition.The IF part must not be empty.The attached certainty factor in the THEN part,called the rule CF,is a positive real≤1.The rule’s premise is restricted to a conjunction,and no disjunction is allowed.The collection of rules for a certain class can be formulated as a DNF(disjunctive normal form)logic expression, namely,the disjunction of conjunctions,which implies the class.However,rules defined here are not traditional logic rules because of the attached rule CFs meant to capture uncertainty. We interpret a rule by saying when its premise holds(that is,all positive antecedents mentioned are true and all negated antecedents mentioned are false),the target concept holds at the given confidence level.CFRule can also learn rules with weighted antecedents(a kind of fuzzy rules), but we will not consider this case here.There is increasing evidence to indicate that good rule encoding capability actually fa-cilitates rule discovery in the data.In the theorems that follow,we show how the CFRule model can explicitly and precisely encode any given rule set.We note that the ordinary sigmoid-function neural network can only implicitly and approximately does this.Also,we note although the threshold function of the perceptron model enables it to learn conjunc-tions or disjunctions,the non-differentiability of this function prohibits the use of an adaptive procedure in a multilayer construct.Theorem3.1For any rule represented by Definition3.1,there exists a channel in the CFRule model to encode the rule so that if an instance matches the rule,the channel’s activation is1, else0.(Proof):This can be proven by construction.Suppose we implement channel j by setting the bias weight to1,the input weights associated with all positive attributes in the rule’s premiseto1,the input weights associated with all negated attributes in the rule’s premise to−1,the rest of the input weights to0,andfinally the output weight to the rule CF.Assume that each instance is encoded by a bipolar vector in which for each attribute,1means true and−1false. When an instance matches the rule,the following conditions hold:x i=1if x i is part of the rule’s premise,x i=−1if¬x i is part of the rule’s premise,and otherwise x i can be of any value.For such an instance,given the above construction,it is true that w ji x i=1or0for all i.Thus,the channel’s activation(by Definition2.3),φj=f cf(w j0=1,w j1x1,w j2x2,...,w jd x d)(10)must be1according to f cf.On the other hand,if an instance does not match the rule,then there exists i such that w ji x i=−1.Since w j0(the bias weight)=1,the channel’s activation is0due to f cf.2Theorem3.2Assume that rule CF’s>θ(0≤θ≤1).For any set of rules represented by Definition3.1,there exists a CFRule model to encode the rule set so that if an instance matches any of the given rules,the model output is>θ,else0.(Proof):Suppose there are k rules in the set.As suggested in the proof of Theorem3.1,we construct k channels,each encoding a different rule in the given rule set so that if an instance matches,say rule j,then the activation(φj)of channel j is1.In this case,since the channel’s influenceψj is given by u jφj(where u j is set to the rule CF)and the rule CF>θ,it follows thatψj>θ.It is then clear that the model output must be>θsince it combines influences from all channels that≥0but at least one>θ.On the other hand,if an instance fails to match any of the rules,all the channels’activations are zero,so is the model output.24Model Adaptation and ConvergenceIn neural computing,the backpropagation algorithm[19]can be viewed as a multilayer,par-allel optimization strategy that enables the network to converge to a local optimum solution. The black-box nature of the neural network solution is reflected by the fact that the pattern (the input weight vector)learned by each neuron does not bear meaningful knowledge.The CFRule model departs from traditional neural computing in that its internal knowledge is comprehensible.Furthermore,when the model converges upon training,each channel con-verges to a target rule.How to achieve this objective and what is the mathematical theory are the main issues to be addressed.4.1Model Training Based on Gradient DescentThe CFRule model learns to map a set of input vectors(e.g.,extracted features)into a set of outputs(e.g.,class information)by training.An input vector along with its target outputconstitute a training instance.The input vector is encoded as a1/−1bipolar vector.The target output is1for a positive instance and0for a negative instance.Starting with a random or estimated weight setting,the model is trained to adapt itself to the characteristics of the training instances by changing weights(both output and input weights)for every channel in the model.Typically,instances are presented to the model one at a time.When all instances are examined(called an epoch),the network will start over with thefirst instance and repeat.Iterations continue until the system performance has reached a satisfactory level.The learning rule of the CFRule model is derived in the same way as the backpropagation algorithm[19].The training objective is to minimize the sum of squared errors in the data. In each learning cycle,a training instance is given and the weights of channel j(for all j)are updated byu j(t+1)=u j(t)+∆u j(11)w ji(t+1)=w ji(t)+∆w ji(12)where u j:the output weight,w ji:an input weight,the argument t denotes iteration t,and∆the adjustment.The weight adjustment on the current instance is based on gradient descent. Consider channel j.For the output weight(u j),∆u j=−η(∂E/∂u j)(13) (η:the learning rate)where1E=Sinceφj is not directly related to E,thefirst partial derivative on the right hand side of the above equation is expanded by the chain rule again to obtain∂E/∂φj=(∂E/∂M out)(∂M out/∂φj)=−D(∂M out/∂φj)Substituting these results into Eq.(15)leads to the following definition.Definition4.2The learning rule for input weight w ji of channel j is given by∆w ji=ηd j(∂φj/∂w ji)(16)whered j=D(∂M out/∂φj)Assume thatφj=f+cf(w j1x1,w j2x2,...,w jd′x d′)+f−cf(w jd′+1x d′+1,...,w jd x d)(17)Suppose d′>1and d−d′>1.The partial derivative∂φj∂w ji =(l=i,l≤d′(1−w jl x l))x i(18)Case(b)If w ji x i<0,∂φj∂w ji=x i.4.2Multi-Channel Regression-Based OptimizationIt is known that gradient descent can onlyfind a local-minimum.When the error surface isflat or very convoluted,such an algorithm often ends up with a bad local minimum.Moreover,the learning performance is measured by the error on unseen data independent of the training set. Such error is referred to as generalization error.We note that minimization of the training error by the backpropagation algorithm does not guarantee simultaneous minimization of generalization error.What is worse,generalization error may instead rise after some point along the training curve due to an undesired phenomenon known as overfitting[7].Thus, global optimization techniques for network training(e.g.,[21])do not necessarily offer help as far as generalization is concerned.To address this issue,CFRule uses a novel optimization strategy called multi-channel regression-based optimization(MCRO).In Definition2.4,f+cf and f−cf can also be expressed asf+cf(x1,x2,...)= i x i− i j x i x j+ i jkx i x j x k− (20)f−cf(y1,y2,...)= i y i+ i j y i y j+ i jky i y j y k+ (21)When the arguments(x i’s and y i’s)are small,the CF function behaves somewhat like a linear function.It can be seen that if the magnitude of every argument is<0.1,thefirst order approximation of the CF function is within an error of10%or so.Since when learning starts,all the weights take on small values,this analysis has motivated the MCRO strategy for improving the gradient descent solution.The basic idea behind MCRO is to choose a starting point based on the linear regression analysis,in contrast to gradient descent which uses a random starting point.If we can use regression analysis to estimate the initial influence of each input variable on the model output,how can we know how to distribute this estimate over multiple channels? In fact,this is the most intricate part of the whole idea since each channel’s structure and parameters are yet to be learned.The answer will soon be clear.In CFRule,each channel’s activation is defined byφj=f cf(w j0,w j1x1,w j2x2,...)(22) Suppose we separate the linear component from the nonlinear component(R)inφj to obtainφj=(di=0w ji x i)+R j(23) We apply the same treatment to the model output(Definition2.6)M out=f cf(u1φ1,u2φ2,...)(24)so thatM out=(kj=1u jφj)+R out(25) Then we substitute Eq.(23)into Eq.(25)to obtainM out=(kj=1di=0u j w ji x i)+R acc(26)in which the right hand side is equivalent to[di=0(kj=1u j w ji)x i]+R accNote thatR acc=(kj=1u j R j)+R outSuppose linear regression analysis produces the following estimation equation for the model output:M′out=b0+b1x1+...(all the input variables and the output transformed to the range from0to1).Table1:The target rules in the simulation experiment.rule1:IF x1and¬x2and x7THEN the target conceptrule2:IF x1and¬x4and x5THEN the target conceptrule3:IF x6and x11THEN the target conceptTable2:Comparison of the MCRO strategy with random start for the convergence to the target rules.The results were validated by the statistical t test with the level of significance <0.01and<0.025(degrees of freedom=48)for the training and test error rates upon convergence,respectively.Random Start Level of Significance0.010 2.470.012 2.344.3Asynchronous Parallel ConvergenceIn the multi-channel rule learning theory,there are two possible modes of parallel convergence. In the synchronous mode,all channels converge to their respective target patterns at the same time,whereas in the asynchronous mode,each channel converges at a different time.In a self-adaptation or self-organization model without a global clock,the synchronous mode is not a plausible scenario of convergence.On the other hand,the asynchronous mode may not arrive at global convergence(i.e.,every channel converging to its target pattern)unless there is a mechanism to protect a target pattern once it is converged upon.Here we examine a formal property of CFRule on this new learning issue.Theorem4.1Suppose at time t,channel j of the CFRule model has learned an exact pattern (w j1,w j2,...,w jd)(d≥1)such that w j0(the bias)=1and w ji=1or−1or0for1≤i≤d.At time t+1when the model is trained on a given instance with the input vector(x0,x1,x2,...,x d) (x0=1and x i=1or−1for all1≤i≤d),the pattern is unchanged unless there is a single mismatched weight(weight w ji is mismatched if and only if w ji x i=−1).Let∆w ji(t+1)be the weight adjustment for w ji.Then(a)If there is no mismatch,then∆w ji(t+1)=0for all i.(b)If there are more than one mismatched weight then∆w ji(t+1)=0for all i.(Proof):In case(a),there is no mismatch,so w ji x i=1or0for all i.There exists l such that w jl x l=1and l=i,for example,w j0x0=1as given.From Eq.(18),∂φj)=0∂w jiIn case(b),the proof for matched weights is the same as that in case(a).Consider only mismatched weights w ji’s such that w ji x i=−1.Since there are at least two mismatched weights,there exists l such that w jl x l=−1and l=i.From Eq.(19),∂φj)=0∂w jiIn the case of a single mismatched weight,∂φjwhich is not zero,so the weight adjustment∆w ji(t+1)may or may not be zero,depending on the error d j.2Since model training starts with small weight values,the initial pattern associated with each channel cannot be exact.When training ends,the channel’s pattern may still be inexact because of possible noise,inconsistency,and uncertainty in the data.However,from the proof of the above theorem,we see that when the nonzero weights in the channel’s pattern grow larger,the error derivative(d jφjTable3:Asynchronous parallel convergence to the target rules in the CFRule model.Channels 1,2,3converge to target rules1,2,3,respectively.w j,i denotes the input weight associated with the input x i in channel j.An epoch consists of a presentation of all training instances.epoch151015202530essarily happen in practical circumstances involving data noise,inconsistency,uncertainty, and inadequate sample sizes.However,in whatever circumstances,it turns out that a simple thresholding mechanism suffices to distinguish important from unimportant weights in the CFRule model.Since the weight absolute values range from0to1,it is reasonable to use 0.5as the threshold,but this value does not always guarantee optimal performance.How to search for a good threshold in a continuous range is difficult.Fortunately,thanks to the quantizability nature of the system adopting the CF model[9],only a handful of values need to be considered.Our research has narrowed it down to four candidate values:0.35,0.5,0.65, and0.8.A larger threshold makes extracted rules more general,whereas a smaller threshold more specific.In order to lessen data overfitting,our heuristic is to choose a higher value as long as the training error is ing an independent cross-validation data set is a good idea if enough data is available.The rule extraction algorithm is formulated below.The threshold-based algorithm described here is fundamentally different from the search-based algorithm in neural network rule extraction[7,9,20].The main advantage with the threshold-based approach is its linear computational complexity with the total number of weights,in contrast to polynomial or even exponential complexity incurred by the search-based approach.Furthermore,the former approach obviates the need of a special training,pruning,Table4:The promoter(of prokaryotes)consensus sequences.Region@-36=T@-35=T@-34=G@-33=AMinus-10@-9=A@-8=Tor approximation procedure commonly used in the latter approach for complexity reduction. As a result,the threshold-based,direct approach should produce better and more reliable rules.Notice that this approach is not applicable to the ordinary sigmoid-function neural network where knowledge is entangled.The admissibility of the threshold-based algorithm for rule extraction in CFRule can be ascribed to the CF-combining function.6ApplicationsTwo benchmark data sets were selected to demonstrate the value of CFRule on practical domains.The promoter data set is characterized by high dimensionality relative to the sample size,while the hepatitis data has a lot of missing values.Thus,both pose a challenging problem.The decision-tree-based rule generator system C4.5[18]was taken as a control since it(and with its later version)is the currently most representative(or most often used)rule learning system,and also the performance of C4.5is optimized in a statistical sense.6.1Promoter Recognition in DNAIn the promoter data set[23],there are106instances with each consisting of a DNA nucleotide string of four base types:A(adenine),G(guanine),C(cytosine),and T(thymine).Each instance string is comprised of57sequential nucleotides,includingfifty nucleotides before (minus)and six following(plus)the transcription site.An instance is a positive instance if the promoter region is present in the sequence,else it is a negative instance.There are53positive instances and53negative instances,respectively.Each position of an instance sequence is encoded by four bits with each bit designating a base type.So an instance is encoded by a vector of228bits along with a label indicating a positive or negative instance.In the literature of molecular biology,promoter(of prokaryotes)sequences have average constitutions of-TTGACA-and-TATAAT-,respectively,located at so-called minus-35and minus-10regions[14],as shown in Table4.The CFRule model in this study had3channels,which were the minimal number of channels to bring the training error under0.02upon convergence.Still,the model is relativelyTable5:The average two-fold cross-validation error rates of the rules learned by C4.5and CFRule,respectively.Domain CFRulePromoters(withoutprior knowledge)12.8%7.1%Table6:The promoter prediction rules learned from106instances by CFRule and C4.5, respectively.¬:not.@:at.DNA Sequence PatternCFRule@-34=G@-33=¬G@-12=¬G@-36=T@-35=T@-31=¬C@-12=¬G@-45=A@-36=T@-35=T#1#2#3Rule#1C4.5MALE and NO STEROID and ALBUMIN<3.7databases.6.2Hepatitis Prognosis PredictionIn the data set concerning hepatitis prognosis1,there are155instances,each described by19 attributes.Continuous attributes were discretized,then the data set was randomly partitioned into two halves(78and77cases),and then cross-validation was carried out.CFRule and C4.5 used exactly the same data to ensure fair comparison.The CFRule model for this problem consisted of2channels.Again,CFRule was superior to C4.5based on the cross-validation performance(see Table5).However,both systems learned the same single rule from the whole 155instances,as displayed in Table7.To learn the same rule by two fundamentally different systems is quite a coincidence,but it suggests the rule is true in a global sense.7ConclusionsIf global optimization is a main issue for automated rule discovery from data,then current machine learning theories do not seem adequate.For instance,the decision-tree and neural-network based algorithms,which dodge the complexity of exhaustive search,guarantee only local but not global optimization.In this paper,we introduce a new machine learning theory。